Abstract

Background

Orthogonal confirmation of variants identified by next-generation sequencing (NGS) is routinely performed in many clinical laboratories to improve assay specificity. However, confirmatory testing of all clinically significant variants increases both turnaround time and operating costs for laboratories. Improvements to early NGS methods and bioinformatics algorithms have dramatically improved variant calling accuracy, particularly for single nucleotide variants (SNVs), thus calling into question the necessity of confirmatory testing for all variant types. The purpose of this study is to develop a new machine learning approach to capture false positive heterozygous SNVs from whole exome sequencing (WES) data.

Results

WES variant calls from Genome in a Bottle (GIAB) cell lines and their associated quality features were used to train five different machine learning models to predict whether a variant was a true positive or false positive based on quality metrics. Logistic regression and random forest models exhibited the highest false positive capture rates among the selected models, but GradientBoosting achieved the best balance between false positive capture rates and true positive flag rates. Further assessment using simulated false positive events as well as different combinations of quality features showed that model performance can be refined. Integration of the highest-performing models into a custom two-tiered confirmation bypass pipeline with additional guardrail metrics achieved 99.9% precision and 98% specificity in the identification of true positive heterozygous SNVs within the GIAB benchmark regions. Furthermore, testing on an independent set of heterozygous SNVs (n = 93) detected by exome sequencing of patient samples and cell lines demonstrated 100% accuracy.

Conclusions

Machine-learning models can be trained to classify SNVs into high or low-confidence categories with high precision, thus reducing the level of confirmatory testing required. Laboratories interested in deploying such models should consider incorporating additional quality criteria and thresholds to serve as guardrails in the assessment process.

Supplementary Information

The online version contains supplementary material available at 10.1186/s12864-025-11889-z.

Keywords: Next generation sequencing, Sanger confirmation, Machine learning, Clinical decision-support tool

Background

Sanger sequencing is a first-generation DNA sequencing method that has long been considered the gold standard for the accurate detection of small sequence variants [1], but its use as a primary approach for variant detection has been largely supplanted by NGS in clinical laboratories. Many laboratories continue to employ Sanger sequencing as an orthogonal method to confirm variants identified by NGS; however, the sensitivity and accuracy of NGS methods and bioinformatic tools have significantly improved since the inception of NGS [2–4]. Numerous studies examining the necessity of Sanger sequencing report concordance rates of > 99% between NGS and Sanger sequencing results for single nucleotide variants (SNVs) and insertion-deletion variants (indels) in high-complexity regions [1, 5–9], whereas low-complexity regions comprised of repetitive elements, homologous regions, and high-GC content, as well as technical artifacts, are more likely to be enriched for false positive variants with relatively poor quality metrics.

Machine learning is a type of artificial intelligence that has the capability to make decisions or render predictions based on inferred relationships between features (a.k.a parameters) of a dataset without explicit programming. Diagnostic applications of machine learning frequently rely on supervised learning models that require training on labeled data to learn which features are significant for a given class, while unsupervised approaches attempt to establish relationships between features without a priori knowledge of truth. Within the field of medical genetics, supervised machine-learning models have been reported to significantly reduce the confirmation rate of NGS variant calls using sequencing parameters such as read depth, allele frequency, sequencing quality, and mapping quality as variables to train models [10–12]. The success of these models can be attributed to quantitative and qualitative differences that separate high and low-confidence variant calls. While these studies demonstrate proof-of-concept for implementing machine learning models for triaging variants in confirmatory testing, pipeline-specific differences in quality features necessitate de novo model building and clinical validation before integrating these models into a clinical genetic workflow.

In this study, we aim to employ supervised machine learning models to differentiate between two types of heterozygous SNVs: high-confidence variants which do not require orthogonal confirmation, and low-confidence variants which require additional review and confirmatory testing. Random forest (RF), logistic regression (LR), AdaBoost, Gradient Boosting (GB), and Easy Ensemble methods were selected for comparison in this study. Multiple iterations of supervised machine learning were performed to identify which features and statistical methods yielded optimal results, and a two-tiered model with guardrails for allele frequency and sequence context was developed to achieve the optimal balance between sensitivity and specificity. Testing of the final model suggested that our approach significantly reduces the number of true positive variants requiring confirmation while mitigating the risk of reporting false positives.

Methods

Cell lines and specimens

Genomic DNA isolated from genome-in-a-bottle (GIAB) reference specimens NA12878, NA24385, NA24149, NA24143, NA24631, NA24694, and NA24695 was purchased from the Coriell Institute for Medical Research (Camden, NJ) (Additional file 1: Table S1). Additional lymphoblast cell line DNA (Coriell) and de-identified patient specimens were used in a separate validation of the final model. Informed consent for the clinical testing was obtained by referring physicians prior to sample submission and residual DNA was de-identified prior to use in this study. Per the United States Code of Federal Regulations for the Protection of Human Subjects, institutional review board exemption is applicable due to de-identification of the patient data presented herein (45 CFR part 46.101(b)(4)).

Data downloads and source materials

GIAB benchmark files (version v4.2.1 for GRCh37) containing high-confidence variant calls were downloaded from the National Center for Biotechnology Information (NCBI) ftp site for use as truth sets for supervised learning and assessment of model performance. Genomic regions ineligible for Sanger bypass were compiled by downloading the following bed files from the UCSC genome browser: ENCODE blacklist, NCBI NGS high stringency, NCBI NGS low stringency, NCBI NGS dead zone, and segmental duplication tracks. These data were supplemented with additional regions of low mappability identified by an internal assessment.
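
As an illustration of how several exclusion tracks might be combined into one lookup, the following minimal sketch merges overlapping BED intervals and tests whether a position falls inside any of them. The function names and the example coordinates used to exercise it are hypothetical; the tooling actually used in the study is not described, so this is only one plausible approach.

```python
from bisect import bisect_right

def merge_intervals(intervals):
    """Merge overlapping or adjacent half-open (chrom, start, end) BED intervals."""
    merged = []
    for chrom, start, end in sorted(intervals):
        if merged and merged[-1][0] == chrom and start <= merged[-1][2]:
            # Extend the previous interval when the new one overlaps or touches it.
            merged[-1][2] = max(merged[-1][2], end)
        else:
            merged.append([chrom, start, end])
    return [tuple(m) for m in merged]

def in_excluded_region(merged, chrom, pos0):
    """Return True if the 0-based position pos0 lies inside a merged interval."""
    # Locate the last interval that starts at or before pos0 on this chromosome.
    idx = bisect_right(merged, (chrom, pos0, float("inf"))) - 1
    if idx < 0:
        return False
    c, start, end = merged[idx]
    return c == chrom and start <= pos0 < end
```

Merging first keeps the lookup to a single binary search per variant, which matters when several hundred thousand calls are screened against multiple tracks.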

NGS library preparation and data processing

Whole exome libraries for GIAB cell lines were sequenced twice on two separate flow cells. Library preparation and target enrichment were carried out using an internally developed automation workflow on the Hamilton NGS Star workstation (Hamilton Company, Reno, NV). Briefly, libraries were prepared from 250 ng of genomic DNA using Kapa HyperPlus reagents (Kapa Biosystems, Inc./Roche, Wilmington, MA) for enzymatic fragmentation, end-repair, A-tailing and adaptor ligation. Each library was indexed with unique dual barcodes (IDT, Coralville, IA) to eliminate the possibility of index hopping between samples. For target enrichment, twelve normalized libraries were pooled together, and a custom panel of biotinylated, double-stranded DNA probes (Twist Biosciences, South San Francisco, CA) was used to capture exome sequences as well as other regions of interest (~ 41.4 Mb total). The hybridized libraries were further purified using streptavidin beads, and the library pools quantified via the Kapa qPCR Library Quantification kit (Kapa Biosystems Inc./Roche, Wilmington, MA) on a QuantStudio®7 (ThermoScientific, Waltham, MA). After normalization, the library pools were combined, and ~ 1–2% PhiX library control spiked into the final pool to monitor sequencing quality in real-time. Up to 192 libraries were sequenced (paired-end, 2 × 150 cycles) per S4 flowcell on the NovaSeq 6000 sequencer (Illumina, San Diego, CA). Sequencing run quality metrics were tracked in real-time using the Illumina Sequencing Analysis Viewer v.2.4.7 software for percent of clusters passing filter, fraction of bases at > Q30, sequencing yield and flowcell occupancy. Additional metrics related to the PhiX control such as alignment, error rate and pre-phasing/phasing were used for troubleshooting. 
Sequencing data were demultiplexed with the bcl2fastq2 v.2.20 or BCLConvert v.3.8.2 software (Illumina, San Diego, CA), and the fastq files were processed through a customized data analysis pipeline that consisted of the CLCBio Genomics CLS WebService v.21.0.5 and Workbench v.21.0.5 (Qiagen Bioinformatics, Redwood City, CA) software and plugins thereof as well as internally developed algorithms. Reads were trimmed to remove adaptor sequences and low-quality bases (< Q20) and then aligned to the GRCh37/hg19 NCBI reference genome, followed by duplicate read removal, local re-alignment and variant detection. Data quality was assessed based on metrics such as mean target coverage, fraction of bases at minimum coverage, coverage uniformity expressed as Fold 80 base penalty, on-target rate and insert size, all of which were calculated using Picard v.2.3.0 tools in the Genome Analysis Toolkit (GATK; Broad Institute, Cambridge, MA). Sequence data were analyzed with the CLCBio Clinical Lab Service to generate annotated TR.xml files with quality features (Table S2) used for training and testing various machine-learning algorithms. Variant calling parameters included minimum read length (20 bases), minimum coverage (n = 8), and minimum frequency (20%) with base quality and variant quality filters on. Broken read pairs were discarded as a quality control measure. All heterozygous SNVs called from the internal pipeline were intersected with the GIAB benchmark bed files, and variants in the high-confidence regions were annotated as 0 if present in the truth set (i.e. true positive (TP)) or 1 if absent (i.e. false positive (FP)).
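
The labeling rule described above can be sketched as follows. This is a minimal illustration, not the pipeline's implementation: the variant key `(chrom, pos, ref, alt)`, the in-memory data structures, and the function names are assumptions, with 1-based variant positions and 0-based half-open BED regions.

```python
def in_regions(regions, chrom, pos):
    """True if 1-based position pos lies in any 0-based half-open BED region."""
    return any(start < pos <= end for start, end in regions.get(chrom, []))

def label_variants(calls, truth, regions):
    """Label calls inside benchmark regions: 0 = in truth set (TP), 1 = absent (FP)."""
    labels = {}
    for variant in calls:
        chrom, pos, _ref, _alt = variant
        if not in_regions(regions, chrom, pos):
            continue  # outside the benchmark regions: truth unknown, so no label
        labels[variant] = 0 if variant in truth else 1
    return labels
```

Calls outside the benchmark regions are left unlabeled rather than treated as false positives, which mirrors the restriction of training data to variants with known truth.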

Sanger sequencing

Confirmation of select variants was performed by Sanger sequencing. Primers flanking the test variants were designed online using Primer3Plus software and primer specificity was verified using the UCSC genome browser (University of California, Santa Cruz, CA) in silico PCR tool. Sanger sequencing was performed by capillary electrophoresis on the Applied Biosystems 3730xl genetic analyzer (Thermo Fisher Scientific, Waltham, MA). GeneStudio™ Pro (Informer Technologies Inc., New York City, NY) and UGENE (Unipro, Novosibirsk, Russia) software were used for alignment and analysis of Sanger sequencing traces.

Model training and testing strategies

Predictive modeling of high-confidence variants detected in the GIAB specimens was performed using logistic regression, random forest, gradient boosting, EasyEnsemble, and AdaBoost. The features used for model training included allele frequency, read count metrics, coverage, quality, read position probability, read direction probability, homopolymer presence, and overlap with low-complexity sequence (i.e. complex regions) (Table S2). All annotated variants from the two flow cells were evenly split into two subsets with truth stratification to ensure that the proportions of FPs and TPs were similar. The first half of the data was used for leave-one-sample-out cross validation (LOOCV), in which each GIAB sample was left out once and used as the testing set while the other six samples were used as the training set. The second half of the data was reserved to test the models trained on the first dataset. In the cross validation (CV) experiment, the machine-learning models were trained using all high-confidence variants with known truth (imbalanced raw data) and all available features. Hyperparameters were tuned and selected in this phase. A second phase of training was performed using the first half of the data as the training set and the second half as the testing set to evaluate the importance of quality features, assess the impact of imbalanced data, and pick the best model combination. Feature coefficients were estimated on both raw and scaled data (minmaxscale module in Python scikit-learn). Because false positive variants are the primary target and were labeled as 1, features with positive coefficients were more likely to be associated with false positive variants, whereas features with negative coefficients were more likely to be associated with true positive variants. High-impact features were selected based on the estimates in this step.
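
The leave-one-sample-out scheme can be sketched with a small fold generator. This is an illustrative, dependency-free version with a hypothetical function name; scikit-learn's `LeaveOneGroupOut` splitter provides equivalent behavior when the sample identifier is passed as the group, although the study does not state which implementation was used.

```python
def leave_one_sample_out(sample_ids):
    """Yield (held_out_sample, train_indices, test_indices), one fold per sample."""
    for held_out in sorted(set(sample_ids)):
        # All variants from the held-out sample form the test set for this fold.
        test_idx = [i for i, s in enumerate(sample_ids) if s == held_out]
        # Variants from every other sample form the training set.
        train_idx = [i for i, s in enumerate(sample_ids) if s != held_out]
        yield held_out, train_idx, test_idx
```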
Since our data are imbalanced, with most variants representing true positive calls, comparisons among balanced and imbalanced datasets were performed in the final testing. Methods selected to achieve balanced datasets for evaluation included simple oversampling (SOS), which randomly duplicates data points from the minority class, and synthetic minority oversampling (SMOTE), which generates synthetic data points according to a k-nearest neighbor analysis of minority data point clustering. Common metrics for ML classification methods, including accuracy, the confusion matrix, area under the curve (AUC) and/or area under the receiver operating characteristic curve (AUROC), and F1-score, were examined to assess the performance of the models. AUC summarizes the trade-off between the true-positive rate (TPR) or sensitivity and the false positive rate (FPR), which equals one minus the true-negative rate (TNR) or specificity, whereas the F1-score assesses precision and recall in highly imbalanced data. For both AUC and F1-score, a greater value reflects better model performance. The confusion matrix describes the complete model performance; accuracy is derived from it by summing the true-positive and true-negative counts and dividing by the total number of samples [13]. In the context of this study, since the primary target is false positive variants, which are labeled as 1 in the model training, TPR and false positive capture rate are used interchangeably. True positive flag rate is defined as the rate at which models incorrectly tag true positive variants as low-confidence variants.
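
Simple oversampling as described above can be sketched in a few lines. This is a minimal illustration with a hypothetical function name and a fixed seed chosen for reproducibility; it assumes the minority class is the false positive class labeled 1. SMOTE is not reimplemented here, since it requires a nearest-neighbor search; the imbalanced-learn package offers both techniques.

```python
import random

def simple_oversample(X, y, minority_label=1, seed=0):
    """Duplicate random minority rows until both classes have equal counts."""
    rng = random.Random(seed)
    minority = [i for i, label in enumerate(y) if label == minority_label]
    majority = [i for i, label in enumerate(y) if label != minority_label]
    n_extra = max(len(majority) - len(minority), 0)
    # Sample with replacement from the minority class only.
    extra = [rng.choice(minority) for _ in range(n_extra)] if minority else []
    idx = list(range(len(y))) + extra
    return [X[i] for i in idx], [y[i] for i in idx]
```

Oversampling would normally be applied to the training split only, so that duplicated rows never appear in the data used to evaluate the model.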

External validation

External validation of the model was performed using a new variant set comprised of 93 heterozygous SNVs identified in 83 de-identified specimens or cell lines. Criteria for the selection of test variants were based on (1) location within a genomic region validated in any of our NGS assays and (2) the variant’s clinical significance. For the purpose of this validation, clinical significance refers to the minimum variant classification required to be considered reportable for a given panel (e.g. only likely pathogenic or pathogenic variants in a carrier screening gene; pathogenic, likely pathogenic and variants of uncertain significance (VUS) in genes overlapping a cardiogenetic or hereditary cancer panel).

Results

Dataset preparation

Seven GIAB cell lines were sequenced twice on two flow cells resulting in 282,076 heterozygous SNVs. Intersection of these variants with the GIAB benchmark high-confidence regions resulted in a total of 222,489 heterozygous SNVs, of which 212,397 variants were annotated as TP and 10,092 labeled as FP (Table 1). Labeled variants in each sample were then split into two halves such that the counts and percentages for TPs and FPs were evenly divided between the two subsets (Table 2).
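
A stratified split of this kind can be sketched as below. The record layout `(flow_cell, sample, label)`, the function name, and the seed are assumptions made for illustration; when a stratum has an odd count, this sketch places the extra variant in the second half, which may differ from how the study assigned it.

```python
import random
from collections import defaultdict

def stratified_halves(records, seed=0):
    """Split record indices into two halves within each (flow_cell, sample, label) stratum."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for i, record in enumerate(records):
        strata[record].append(i)
    first, second = [], []
    for key in sorted(strata):
        idx = strata[key]
        rng.shuffle(idx)  # randomize membership within the stratum
        half = len(idx) // 2
        first.extend(idx[:half])
        second.extend(idx[half:])
    return first, second
```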

Table 1.

Detailed counts by sample along the workflow for heterozygous single-nucleotide variants (SNV)

| Flow cell | NIST ID | Raw | In GIAB benchmark regions | Present in truth set (TP) | Absent in truth set (FP) |
|---|---|---|---|---|---|
| Flow Cell 1 | HG001 | 20,612 | 15,933 | 15,109 | 824 |
| Flow Cell 1 | HG002 | 20,625 | 16,600 | 15,829 | 771 |
| Flow Cell 1 | HG003 | 20,540 | 16,322 | 15,583 | 739 |
| Flow Cell 1 | HG004 | 21,090 | 16,630 | 15,929 | 701 |
| Flow Cell 1 | HG005 | 19,844 | 15,627 | 14,808 | 819 |
| Flow Cell 1 | HG006 | 18,938 | 14,970 | 14,223 | 747 |
| Flow Cell 1 | HG007 | 19,618 | 15,315 | 14,663 | 652 |
| Flow Cell 2 | HG001 | 20,645 | 15,880 | 15,120 | 760 |
| Flow Cell 2 | HG002 | 20,620 | 16,553 | 15,879 | 674 |
| Flow Cell 2 | HG003 | 20,511 | 16,330 | 15,598 | 732 |
| Flow Cell 2 | HG004 | 20,990 | 16,600 | 15,943 | 657 |
| Flow Cell 2 | HG005 | 19,504 | 15,439 | 14,807 | 632 |
| Flow Cell 2 | HG006 | 18,890 | 14,976 | 14,220 | 756 |
| Flow Cell 2 | HG007 | 19,649 | 15,314 | 14,686 | 628 |
| Total | - | 282,076 | 222,489 | 212,397 | 10,092 |

Seven cell lines were sequenced twice in two separate flow cells as technical replicates. Truth was retrieved from GIAB benchmark regions. TP True positive is a variant present in the truth set, FP false positive is a variant absent in the truth set

Table 2.

Detailed counts by sample along the workflow for heterozygous single-nucleotide variants (SNV)

| Flow cell | NIST ID | First half for CV: Present (TP) | First half for CV: Absent (FP) | Second half for final training: Present (TP) | Second half for final training: Absent (FP) | Total |
|---|---|---|---|---|---|---|
| Flow Cell 1 | HG001 | 7,554 | 412 | 7,555 | 412 | 15,933 |
| Flow Cell 1 | HG002 | 7,914 | 386 | 7,915 | 385 | 16,600 |
| Flow Cell 1 | HG003 | 7,791 | 370 | 7,792 | 369 | 16,322 |
| Flow Cell 1 | HG004 | 7,964 | 351 | 7,965 | 350 | 16,630 |
| Flow Cell 1 | HG005 | 7,404 | 409 | 7,404 | 410 | 15,627 |
| Flow Cell 1 | HG006 | 7,111 | 374 | 7,112 | 373 | 14,970 |
| Flow Cell 1 | HG007 | 7,331 | 326 | 7,332 | 326 | 15,315 |
| Flow Cell 2 | HG001 | 7,560 | 380 | 7,560 | 380 | 15,880 |
| Flow Cell 2 | HG002 | 7,939 | 337 | 7,940 | 337 | 16,553 |
| Flow Cell 2 | HG003 | 7,799 | 366 | 7,799 | 366 | 16,330 |
| Flow Cell 2 | HG004 | 7,971 | 329 | 7,972 | 328 | 16,600 |
| Flow Cell 2 | HG005 | 7,403 | 316 | 7,404 | 316 | 15,439 |
| Flow Cell 2 | HG006 | 7,110 | 378 | 7,110 | 378 | 14,976 |
| Flow Cell 2 | HG007 | 7,343 | 314 | 7,343 | 314 | 15,314 |
| Total | - | 106,194 | 5,048 | 106,203 | 5,044 | 222,489 |

For each cell line in each flow cell, both TP and FP variants were split evenly into two groups. The first group was used for cross-validation; both groups were used in the second and final testing phases

Cross-validation

LOOCV was performed using the first subset for both training and testing logistic regression, random forest, Gradient Boosting, AdaBoost, and EasyEnsemble models. In this first phase of model training, all 13 quality features were used and target false positive capture rates (recall) of 95% and 99% were tested, corresponding to 5 in 100 and 1 in 100 false positive calls being missed, respectively. Logistic regression and random forest models exhibited the best performance with respect to false positive capture rates, while gradient boosting achieved the best all-around performance with a high FP capture rate and low TP flag rate (Table 3). Low standard deviations were observed for all models across different genetic backgrounds, indicating consistent and robust model performance.
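
One way to derive a probability cut-off for a target recall, and then read off the resulting true positive flag rate, is sketched below. The function names are hypothetical and the procedure is an assumption about how such thresholds can be obtained, not a description of the study's code. Scores are the predicted probabilities of the false positive class (label 1).

```python
import math

def threshold_for_recall(scores, labels, target_recall):
    """Highest score cut-off at which recall on label 1 is at least target_recall."""
    pos_scores = sorted((s for s, y in zip(scores, labels) if y == 1), reverse=True)
    # Number of label-1 variants that must score at or above the cut-off.
    needed = max(math.ceil(target_recall * len(pos_scores)), 1)
    return pos_scores[needed - 1]

def rates_at_threshold(scores, labels, threshold):
    """Return (FP capture rate, TP flag rate) when flagging scores >= threshold."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    captured = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    flagged = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return captured / n_pos, flagged / n_neg
```

When the threshold is chosen on the training folds and applied to a held-out sample, the observed capture rate can fall below the target, which is one reason a cross-validated capture rate may differ from the nominal recall.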

Table 3.

Summary of cross-validation experiments for five models on heterozygous SNVs

| Variant type | Model | CV FP capture rate (TPR %), recall 0.95 | CV TP flag rate (FPR %), recall 0.95 | CV FP capture rate (TPR %), recall 0.99 | CV TP flag rate (FPR %), recall 0.99 | CV ROC AUC (%) |
|---|---|---|---|---|---|---|
| SNV-heterozygous | GradientBoosting | 91.34 ± 2.32 | 19.25 ± 3.72 | 96.56 ± 0.66 | 54.33 ± 4.26 | 94.77 ± 0.81 |
| SNV-heterozygous | LogisticRegression | 94.88 ± 1.52 | 41.81 ± 6.89 | 99.00 ± 0.45 | 89.43 ± 3.54 | 94.52 ± 0.71 |
| SNV-heterozygous | EasyEnsemble | 93.81 ± 1.63 | 34.46 ± 5.17 | 98.50 ± 0.76 | 75.12 ± 5.66 | 94.34 ± 0.88 |
| SNV-heterozygous | AdaBoost | 88.19 ± 2.88 | 12.75 ± 2.47 | 91.90 ± 2.27 | 29.62 ± 4.25 | 93.83 ± 1.04 |
| SNV-heterozygous | RandomForest | 94.22 ± 1.65 | 50.68 ± 8.22 | 99.07 ± 0.64 | 82.30 ± 4.86 | 92.79 ± 1.05 |

Mean and standard deviation for TPR true positive rate, FPR false positive rate, and AUC ROC area under the receiver operating characteristics curve in CV cross-validation under 0.95 and 0.99 recall rates. Models are sorted by AUC values.

Model evaluation and selection

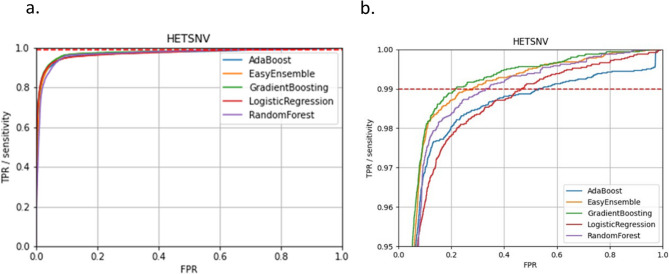

In the second phase of testing, all five models were trained using the first dataset for training and the second dataset for testing. The results of phase two were comparable to phase one with gradient boosting exhibiting the most balanced performance and random forest and logistic regression models exceeding EasyEnsemble and AdaBoost in false positive capture rates (Table 4 and Fig. 1). Refinement of model performance was then explored by comparing model performance with the full set of sequence features versus select high-impact features.

Table 4.

Summary of second-phase training experiments for five models on heterozygous SNVs

| Variant type | Model | FP capture rate (TPR %) | TP flag rate (FPR %) | TP capture rate (TNR %) | FP flag rate (FNR %) | ROC AUC (%) | Threshold (%) |
|---|---|---|---|---|---|---|---|
| SNV-het | GradientBoosting | 98.12 | 10.74 | 89.26 | 1.88 | 98.67 | 0.42 |
| SNV-het | EasyEnsemble | 98.67 | 19.41 | 80.59 | 1.33 | 98.3 | 48.64 |
| SNV-het | LogisticRegression | 98.68 | 36.18 | 63.82 | 1.32 | 98.07 | 0.29 |
| SNV-het | AdaBoost | 94.94 | 6.47 | 93.53 | 5.06 | 98.02 | 49.44 |
| SNV-het | RandomForest | 99.13 | 37 | 63 | 0.87 | 97.85 | 10.27 |

TPR True positive rate, FPR false positive rate, AUC ROC area under the receiver operating characteristics curve and threshold drawn under 0.99 recall rate in the second phase. Models are sorted by AUC values

Fig. 1.

ROC curves for all models showing performances in the second training-testing phase. a overall ROC curves; b zoomed-in to show TPR over 0.95

Feature coefficients (applicable to LR) and importance (applicable to RF and GB) were estimated using both raw and scaled data to determine the relative contribution of each feature to the associated true positive or false positive label, and to eliminate redundant features. This assessment indicated that scaling of LR coefficients yielded inconsistent patterns for several features (Table 5); however, eight features (frequency, read count, coverage, forward count, reverse count, forward/reverse ratio, read position probability, and overlap with complex regions) exhibited consistent trends between raw and scaled data. The features with the highest contributions were selected as key features for subsequent model training and comparisons. Density plots for these key features showing the difference between true positives and false positives can be reviewed in Additional file 1: Figure S3. The effects of imbalanced data were also investigated using two statistical methods for balancing skewed datasets: SOS and SMOTE. In total, eighteen combinations with variable feature sets and relative balance were evaluated across RF, LR and GB models to determine the optimal conditions for training (Table S3). The top-performing model configurations according to the F1 scores are: GB trained with all quality features and raw imbalanced data, LR trained with selected key features and SOS balanced data, and RF trained with all quality features and raw imbalanced data (Table 6; the full list of statistics for all combinations can be found in Additional file 1: Table S4).
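
Min-max scaling, the transformation applied before re-estimating the coefficients, maps each feature to the range 0 to 1. The sketch below is a plain-Python illustration with a hypothetical function name and made-up coverage values, not the scikit-learn routine used in the study. Because a scaled feature spans one unit instead of its raw range, the magnitude of a linear coefficient generally changes after scaling, so raw and scaled coefficients are not directly comparable in size.

```python
def min_max_scale(column):
    """Rescale a list of numbers linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(column), max(column)
    if hi == lo:
        # A constant feature carries no information; map every value to 0.
        return [0.0 for _ in column]
    return [(value - lo) / (hi - lo) for value in column]

# Hypothetical coverage values for three variants.
scaled_coverage = min_max_scale([8, 58, 108])
```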

Table 5.

Assessment of feature importance/coefficients for RF, LR and GB using both raw and scaled data

| HET SNV | frequency | read count | coverage | forward count | reverse count | forward/reverse ratio | average quality |
|---|---|---|---|---|---|---|---|
| LR_coefficients | −0.15 | −0.23 | 0 | 0.2 | 0.21 | −7.54 | 0.1 |
| LR_coefficients_scaled | −11.44 | −1.68 | 2.18 | 15.63 | 16.59 | −1.79 | −0.4 |
| RF_importance | 0.22 | 0.01 | 0.03 | 0.04 | 0.03 | 0 | 0 |
| GB_importance | 0.24 | 0.03 | 0.11 | 0.07 | 0.05 | 0.01 | 0 |
| HET SNV | probability | read position probability | read direction probability | homopolymer | homopolymer length | in complex region | |
| LR_coefficients | 0.47 | −1.43 | −0.58 | −0.02 | 0.07 | 2.65 | |
| LR_coefficients_scaled | −0.63 | −3.16 | 0.61 | −0.15 | 0.39 | 2.51 | |
| RF_importance | 0 | 0.23 | 0.05 | 0 | 0 | 0.38 | |
| GB_importance | 0 | 0.36 | 0.02 | 0 | 0 | 0.11 | |

Coefficient evaluation for LR logistic regression and feature importance evaluation for RF random forest and GB gradient boosting for both raw and scaled data using all thirteen initially available NGS next-generation-sequencing features

Table 6.

Final models selection of the top performing combinations of features and datasets for heterozygous SNVs

| Features | Data status | Configuration | Model | TPR | FPR | TNR | FNR | ROC | Threshold (%) | Ss | F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| all | imbalanced | imb_all | GB | 98.12 | 10.74 | 89.26 | 1.88 | 98.67 | 0.42 | 0.90 | 0.90 |
| key | SOS balanced | sos_key | LR | 98.69 | 28.66 | 71.34 | 1.31 | 98.30 | 4.91 | 0.97 | 0.82 |
| all | imbalanced | imb_all | RF | 99.13 | 37.00 | 63.00 | 0.87 | 97.85 | 10.27 | 1.00 | 0.77 |

Best models picked for the Sanger Bypass pathway are GB gradient boosting trained with all 13 features and imb_all imbalanced original data, LR logistic regression trained with key features and SOS simple-oversampling balanced data (sos_key), and RF random forest trained with all 13 features and imb_all imbalanced original data. Ss: scaled capture rate score. Models are sorted by F1 values

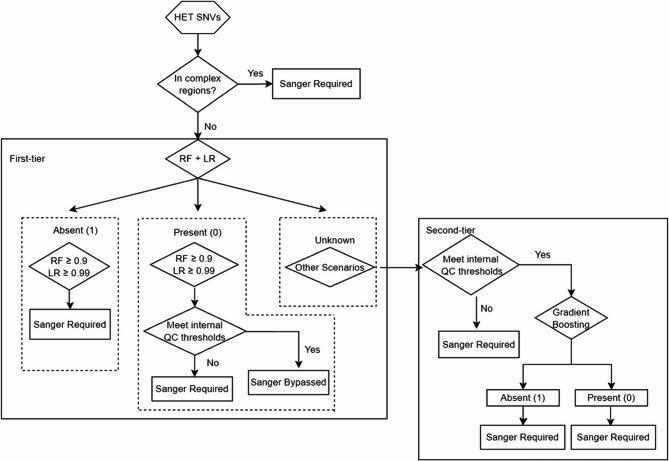

Because each optimized model has distinct advantages, we decided to combine all three models and utilize the thresholds harvested from the 0.99 recall rate in the training set (0.9 for Random Forest, 0.99 for Logistic Regression and 0.99 for Gradient Boosting) to form a two-tiered (2T) workflow for the evaluation of heterozygous SNVs (Fig. 2). Logistic regression and random forest models demonstrated the highest sensitivity in identifying false positives. Therefore, we integrated these two models as the initial classifier to detect prominent low-confidence calls. Variants with a false positive probability exceeding 0.9 in the random forest model and 0.99 in the logistic regression model are categorized as “Absent.” Conversely, variants with a true positive probability surpassing 0.9 in random forest and 0.99 in logistic regression are classified as “Present.” Any variants that fail to meet these thresholds are labeled as “Unknown” and subsequently undergo further analysis using gradient boosting classification. Given the superior robustness of the gradient boosting model, we implemented it as a secondary layer to classify the uncertain cases that emerged from the combined random forest and logistic regression analysis. Unknown variants from the first tier are classified as “Present” if the gradient boosting true positive probability is higher than 0.99, and as “Absent” otherwise. Additional criteria were also integrated into the pipeline, including allele frequency ranges, minimum coverage, and genomic location, to prevent bypass of variants with atypical features.
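
The decision logic of the two tiers can be sketched as follows. This is an interpretation of the description above rather than the authors' code: function and parameter names are hypothetical, each model is assumed to output a false positive probability (so the true positive probability is one minus that value), and the guardrail limits are left as required arguments because their values are not given in the text.

```python
def tier_one(p_fp_rf, p_fp_lr, rf_cut=0.9, lr_cut=0.99):
    """Combine random forest and logistic regression false positive probabilities."""
    if p_fp_rf > rf_cut and p_fp_lr > lr_cut:
        return "Absent"
    if (1 - p_fp_rf) > rf_cut and (1 - p_fp_lr) > lr_cut:
        return "Present"
    return "Unknown"

def two_tier(p_fp_rf, p_fp_lr, p_fp_gb, gb_cut=0.99):
    """Resolve tier-one 'Unknown' calls with the gradient boosting probability."""
    call = tier_one(p_fp_rf, p_fp_lr)
    if call == "Unknown":
        call = "Present" if (1 - p_fp_gb) > gb_cut else "Absent"
    return call

def bypass_eligible(call, allele_freq, coverage, in_excluded_region,
                    af_range, min_coverage):
    """Apply guardrails: only 'Present' calls with typical features may bypass."""
    af_low, af_high = af_range
    return (call == "Present"
            and af_low <= allele_freq <= af_high
            and coverage >= min_coverage
            and not in_excluded_region)
```

Keeping the guardrails in a separate function means a variant that every model scores as high-confidence is still sent for confirmation when it lies in an excluded region or has atypical allele frequency or coverage.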

Fig. 2.

Two-tiered workflow using machine learning models to detect high-confidence heterozygous SNVs

Final model evaluation

The final 2T workflow was then tested on the other half of the GIAB variants, which served as the testing set (a total of 106,203 known TPs and 5,044 FPs, Table 2). Tier one (RF + LR) returned variant predictions for 24,683 variants (22,320 high-confidence + 2,363 low-confidence) (Table 7). However, approximately 77.8% (86,564/111,247) of variants with known truth could not be predicted as present or absent at the selected thresholds (“Unknown” by RF + LR: 13,972 + 72,592), thus processing by the GB machine learning model as a second tier of the confirmatory bypass workflow was required. GB correctly classified 86.7% (72,503/83,628) of the remaining true positive variants and 97.0% (2,847/2,936) of the remaining variants absent from the truth set (true negatives). Taken together, the 2T predictions were concordant with GIAB truth for 89.7% (99,766/111,247) of the total variants. According to the established workflow, 89.3% ((22,314 + 72,503)/106,203) of GIAB true positives were labeled as high-confidence by the workflow and would be eligible to bypass orthogonal confirmation, while 16,335 (2,363 by RF + LR and 13,972 by GB) variants predicted to be low-confidence, including 11,386 (261 by RF + LR and 11,125 by GB) true positive variants, would require reflex to Sanger sequencing (Table 7). Additionally, 95 (6 by RF + LR and 89 by GB) false positive variants were incorrectly predicted to be true positive by the combined models, resulting in a false positive rate of 1.88%. Overall, the 2T model delivers a 99.9% (94,817/(94,817 + 95)) PPV/precision, 89.3% (94,817/(94,817 + 11,386)) sensitivity, and 98.1% (4,949/(4,949 + 95)) specificity (Table 8), where a positive call denotes a variant predicted to be present in the truth set.
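
The summary statistics follow directly from the four cells of the confusion matrix in Table 8. The short sketch below, with a hypothetical function name, recomputes them using the standard definitions, taking "present in the truth set" as the positive class.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Standard metrics from confusion matrix counts (positive = variant present)."""
    return {
        "ppv": tp / (tp + fp),                 # precision
        "sensitivity": tp / (tp + fn),         # recall on true variants
        "specificity": tn / (tn + fp),         # correctly flagged false calls
        "false_positive_rate": fp / (fp + tn),
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
    }

# Counts taken from Table 8 (2T prediction versus GIAB truth).
metrics = confusion_metrics(tp=94_817, fp=95, fn=11_386, tn=4_949)
percentages = {name: round(100 * value, 2) for name, value in metrics.items()}
```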

Table 7.

Performance of three models combined on the GIAB testing set

| Random Forest + Logistic Regression prediction | Gradient Boosting prediction | GIAB truth: Present (Positive) | GIAB truth: Absent (Negative) | Totals |
|---|---|---|---|---|
| High-confidence (Positive) | Low-confidence (Negative) | 0 | 0 | 0 |
| High-confidence (Positive) | High-confidence (Positive) | 22,314 | 6 | 22,320 |
| Low-confidence (Negative) | Low-confidence (Negative) | 261 | 2,102 | 2,363 |
| Low-confidence (Negative) | High-confidence (Positive) | 0 | 0 | 0 |
| Unknown | Low-confidence (Negative) | 11,125 | 2,847 | 13,972 |
| Unknown | High-confidence (Positive) | 72,503 | 89 | 72,592 |
| Totals | - | 106,203 | 5,044 | 111,247 |

Table 8.

Confusion matrix of 2T model on the GIAB testing set

| 2T prediction | Truth: Present (Positive) | Truth: Absent (Negative) |
|---|---|---|
| High-confidence (Positive) | 94,817 | 95 |
| Low-confidence (Negative) | 11,386 | 4,949 |

The first step in the pipeline combines the random forest and logistic regression models to classify variants; gradient boosting then serves as the third model to further predict the status of variants. High-confidence variants predicted by the 2T model qualify for Sanger bypass, whereas low-confidence variants require Sanger confirmation

External validation

Validation of the models was performed on a subset of new heterozygous SNVs (n = 93) identified through end-to-end testing of samples on the exome panel to assess overfitting of the final models to the training dataset. The accuracy of the predictions for this new dataset was determined using Sanger sequencing data as a source of truth. The concordance rate between the machine-learning predictions and Sanger sequencing results was 100% in this validation study (Additional file 1: Table S5), suggesting that overfitting did not contribute to the high sensitivity rates observed in the GIAB testing dataset.

Discussion

The development of artificial intelligence for decision support in healthcare is rapidly gaining acceptance among the medical and scientific communities. In recent years, several publications have described machine learning tools that can distinguish between true positive and false positive NGS calls based on sequence metrics and variant characteristics. In this study, we built upon the framework developed by Holt et al. [12] by using a combination of continuous and binary models to establish a workflow for bypassing confirmation of heterozygous SNVs detected by WES. Developing a model based on WES data was initially selected for proof-of-concept studies as exome sequencing of GIAB cell lines would generate a sufficiently large number of variants with available truth data for model training. Additionally, sequence data from our clinical laboratory’s exome validation was available for external validation of the machine learning model. This approach can be adapted to other sequencing platforms, including whole genome sequencing, provided that the training dataset includes a sufficient number of true positive and false positive variants. Notably, we chose to include technical replicates within our training and testing datasets. Although this approach creates redundancy with respect to variant calls, none of the duplicated variant calls shared identical sequencing features, thus each true positive or false positive event presented a unique set of quality metrics for the model to ingest. Consequently, we believe the redundancy effectively increased the diversity of our dataset, which can potentially improve real-world performance, although additional studies would be needed to confirm the benefits of including technical replicates.

The decision to limit the scope of our development efforts to heterozygous variants was based on our observation that the overwhelming majority of clinically significant variants detected by NGS in our laboratory are heterozygous sequence changes. Consequently, developing models that can accurately classify heterozygous variants as true or false positives provides the greatest benefit in terms of financial savings and improved turnaround time. Of note, heterozygous structural variants (deletions, insertions, and indels) and homozygous SNVs in the GIAB dataset were included in our early assessment of different models. Our preliminary data suggested poor performance on structural variants and good performance on homozygous SNVs (Additional file 2). Though previous studies have reported success in applying models to indel prediction [11, 12, 14–16], our discordant outcomes can be reasonably attributed to differences between methodologies and bioinformatics pipelines. Further refinement of homozygous SNV predictions was not pursued because of their low observed frequency in our laboratory and our historical preference for confirming all homozygous variants detected by NGS.

The decision to adopt any strategy to bypass confirmatory testing in a clinical setting, regardless of whether machine learning is involved, should be taken only after a thorough risk assessment. While several studies describe models with impressive performance, the majority of approaches failed to achieve a false positive capture rate of 100%. Training and testing of various models in our laboratory also failed to capture all false positives in the GIAB datasets. Thus, to reduce the risk of reporting false positives, additional criteria should be considered when designing confirmatory bypass workflows, such as thresholds for allele frequency range, minimum coverage, and genomic regions ineligible for bypass. Additionally, it may be advisable to limit the use of predictive models for medically actionable conditions to avoid the immediate and irreversible harm stemming from the reporting of potential false positives.
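The additional criteria listed above can be layered on top of a model prediction as simple rule checks. The sketch below is illustrative only: the thresholds, the ineligible region, and all names are hypothetical placeholders, not the values or the implementation used in this study.

```python
from dataclasses import dataclass

# Hypothetical guardrail settings; each laboratory would choose its own.
MIN_DEPTH = 30
ALLELE_FRACTION_RANGE = (0.30, 0.70)
INELIGIBLE_REGIONS = {"chr1": [(1_000, 2_000)]}  # 1-based, inclusive


@dataclass(frozen=True)
class VariantCall:
    chrom: str
    pos: int                       # 1-based position
    depth: int                     # read depth at the site
    allele_fraction: float         # variant allele fraction
    predicted_true_positive: bool  # output of the upstream ML model


def in_ineligible_region(call: VariantCall) -> bool:
    """True if the call falls inside a region excluded from bypass."""
    regions = INELIGIBLE_REGIONS.get(call.chrom, [])
    return any(start <= call.pos <= end for start, end in regions)


def guardrail_failures(call: VariantCall) -> list[str]:
    """List every reason the call must still go to orthogonal confirmation."""
    failures = []
    if not call.predicted_true_positive:
        failures.append("model_low_confidence")
    if call.depth < MIN_DEPTH:
        failures.append("low_coverage")
    low, high = ALLELE_FRACTION_RANGE
    if not low <= call.allele_fraction <= high:
        failures.append("allele_fraction_out_of_range")
    if in_ineligible_region(call):
        failures.append("ineligible_region")
    return failures


def can_bypass_confirmation(call: VariantCall) -> bool:
    """A call bypasses confirmation only if no guardrail fails."""
    return not guardrail_failures(call)
```

Returning the list of failed checks, rather than a single boolean, keeps a record of why a given variant was routed to confirmation, which may be useful when auditing the workflow.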

Although we believe the machine learning models and the proposed workflow described here are conservative and robust, we recognize that our approach also has limitations. Notably, training was performed exclusively on variant calls in the GIAB benchmark regions. The use of large datasets with known truth is ideal for training robust models; however, the final models may not perform well on variants beyond those high-confidence regions if those variants have different characteristics. Laboratories may wish to circumvent this limitation by restricting the training data to regions of interest that correspond to the target capture regions of their specific panels. This approach would yield fewer variants for training and testing, but may result in more customized models with better performance due to reduced complexity of the input variants. Additionally, training models on the GIAB dataset is predicated on the assumption that GIAB benchmark files do not contain errors. Using verified datasets for model training is clearly preferable, but it is not feasible for any laboratory to perform confirmatory tests for all GIAB benchmark variants. Internal datasets might be a viable alternative for some laboratories, provided that these datasets contain a sufficient number of false positives for a model to learn the distinguishing characteristics of the minority class.
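Restricting training variants to a panel's target capture regions amounts to an interval lookup. The following minimal sketch assumes targets are supplied as BED-style rows (0-based start, exclusive end) and variant positions are 1-based, as in VCF; the function names and example coordinates are hypothetical.

```python
from bisect import bisect_right


def build_target_index(bed_rows):
    """Merge overlapping intervals per chromosome and return
    {chrom: (sorted_starts, matching_ends)} for fast lookup.

    `bed_rows` is an iterable of (chrom, start, end) with 0-based start
    and exclusive end, following the BED convention.
    """
    by_chrom = {}
    for chrom, start, end in bed_rows:
        by_chrom.setdefault(chrom, []).append((start, end))

    index = {}
    for chrom, intervals in by_chrom.items():
        intervals.sort()
        merged = []
        for start, end in intervals:
            if merged and start <= merged[-1][1]:
                # Overlapping or adjacent: extend the previous interval.
                merged[-1] = (merged[-1][0], max(merged[-1][1], end))
            else:
                merged.append((start, end))
        index[chrom] = ([s for s, _ in merged], [e for _, e in merged])
    return index


def in_targets(index, chrom, pos_1based):
    """True if a 1-based variant position lies inside any target interval."""
    if chrom not in index:
        return False
    starts, ends = index[chrom]
    pos0 = pos_1based - 1               # convert to 0-based coordinate
    i = bisect_right(starts, pos0) - 1  # last interval starting at or before pos0
    return i >= 0 and pos0 < ends[i]


if __name__ == "__main__":
    targets = build_target_index([("chr1", 100, 200), ("chr1", 150, 300)])
    variants = [("chr1", 101), ("chr1", 301), ("chr2", 50)]
    print([v for v in variants if in_targets(targets, *v)])
```

Merging intervals first keeps the lookup to a single binary search per variant, which matters when filtering the large numbers of calls produced by exome sequencing of benchmark cell lines.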

Conclusions

To summarize, our study suggests that the general approach of using GIAB benchmark data along with variant quality features to train machine learning models can significantly improve clinical NGS workflows by easing the burden of orthogonal confirmations on labor, cost, and turnaround time. Although these models were developed on whole exome data using our internal NGS pipeline, customized models can also be developed according to different pipelines and NGS libraries.

Supplementary Information

Acknowledgements

Not Applicable.

Authors’ information

MY-Sr. Bioinformatics Scientist; JR-Clinical laboratory director, American Board of Medical Genetics and Genomics certification in Cytogenetics and Molecular Genetics, FACMG; NL-Sr. Laboratory Director, American Board of Medical Genetics and Genomics certification in Cytogenetics and Molecular Genetics, FACMG; ZZ-Research and Development Scientist III; PO-Technical Director II; QZ-Principal Bioinformatics Scientist; AK-Director of Information Technology; SL-Executive Director, Scientific Projects, Diagnostics & Precision Medicine.

Abbreviations

- NGS: Next-generation sequencing
- SNV: Single nucleotide variant
- WES: Whole exome sequencing
- GIAB: Genome in a Bottle
- TPR: True positive rate
- RF: Random Forest model
- LR: Logistic Regression model
- GB: Gradient Boosting model
- NCBI: National Center for Biotechnology Information
- LOOCV: Leave-one-sample-out cross validation
- CV: Cross validation
- SOS: Simple over sampling
- SMOTE: Synthetic minority oversampling
- AUC: Area under the curve
- AUROC: Area under receiver operating characteristics
- TNR: True negative rate
- FPR: False positive rate
- VUS: Variants with uncertain significance
- 2T: Two-tiered
- PPV: Positive predictive value

Authors’ contributions

MY-Conceptualization, Methodology, Software, Data Curation, Writing-Original Draft, Review & Editing, Visualization; JR-Conceptualization, Methodology, Clinical Analysis, Investigation, Writing-Original Draft, Review & Editing. MY and JR were major contributors to preparing the manuscript. ZZ performed Sanger sequencing confirmation, including amplicon design, the Sanger sequencing assay, and data analysis. PO supervised the overall wet lab process. AK, QZ, SL and NL-Review & Editing, Supervision.

Funding

Funding for this study was solely provided by Labcorp Genetics.

Data availability

The raw sequencing data (fastq files) for the GIAB cell lines generated in this study have been deposited in the NCBI Sequence Read Archive (SRA) under BioProject accession number PRJNA1257936. Heterozygous SNVs and their associated features used for CV and final model training are provided as Additional file 3. Sequence datasets generated from patient specimens will not be made available for distribution as an additional measure to protect patient privacy. The software described in this article is proprietary and subject to company regulations. However, inquiries regarding access or usage may be directed to the corresponding author.

Declarations

Ethics approval and consent to participate

Informed consent for the use of data from human participants is not applicable to this study due to de-identification of all clinical specimens (see the US Federal Policy for the Protection of Human Subjects, 45 CFR part 46.101(b)(4)). All work presented herein complied with international standards on ethical research established by the Helsinki Declaration of 1975, as revised in 2024. This study was not reviewed by a formal Institutional Review Board or Research Ethics Committee, as these entities are not present within this organization. The contents of this manuscript have been reviewed for compliance by the Labcorp Legal department and the Department of Science and Technology.

Consent for publication

Not applicable.

Competing interests

MY, ZZ, QZ, AK, PO, SL, NL, and JR are current employees of Labcorp, a commercial laboratory that receives compensation for clinical testing. A provisional patent application for the machine learning model presented herein has been submitted with MY, QZ, AK, SL and JR listed as inventors.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Muqing Yan, Email: yanm2@labcorp.com.

Jennifer Reiner, Email: reinerj@labcorp.com.

References

- 1. Mu W, Lu H-M, Chen J, Li S, Elliott AM. Sanger confirmation is required to achieve optimal sensitivity and specificity in next-generation sequencing panel testing. J Mol Diagn. 2016;18(6):923–32.

- 2. McCourt CM, McArt DG, Mills K, Catherwood MA, Maxwell P, Waugh DJ, Hamilton P, O’Sullivan JM, Salto-Tellez M. Validation of next generation sequencing technologies in comparison to current diagnostic gold standards for BRAF, EGFR and KRAS mutational analysis. PLoS One. 2013;8(7):e69604.

- 3. Lincoln SE, Truty R, Lin C-F, Zook JM, Paul J, Ramey VH, Salit M, Rehm HL, Nussbaum RL, Lebo MS. A rigorous interlaboratory examination of the need to confirm next-generation sequencing–detected variants with an orthogonal method in clinical genetic testing. J Mol Diagn. 2019;21(2):318–29.

- 4. Alharbi WS, Rashid M. A review of deep learning applications in human genomics using next-generation sequencing data. Hum Genomics. 2022;16:26. doi:10.1186/s40246-022-00396-x.

- 5. Arteche-López A, Ávila-Fernández A, Romero R, et al. Sanger sequencing is no longer always necessary based on a single-center validation of 1109 NGS variants in 825 clinical exomes. Sci Rep. 2021;11:5697. doi:10.1038/s41598-021-85182-w.

- 6. Baudhuin LM, Lagerstedt SA, Klee EW, Fadra N, Oglesbee D, Ferber MJ. Confirming variants in next-generation sequencing panel testing by Sanger sequencing. J Mol Diagn. 2015;17(4):456–61.

- 7. Beck TF, Mullikin JC, NISC Comparative Sequencing Program, Biesecker LG. Systematic evaluation of Sanger validation of next-generation sequencing variants. Clin Chem. 2016;62(4):647–54.

- 8. De Cario RKA, Suraci S, Magi A, Volta A, Marcucci R, Gori AM, Pepe G, Giusti B, Sticchi E. Sanger validation of high-throughput sequencing in genetic diagnosis: still the best practice? Front Genet. 2020;11:592588.

- 9. Pellegrino E, Jacques C, Beaufils N, et al. Machine learning random forest for predicting oncosomatic variant NGS analysis. Sci Rep. 2021;11:21820. doi:10.1038/s41598-021-01253-y.

- 10. Marceddu G, Guerri TDG, Zulian A, Marinelli C, Bertelli M. Analysis of machine learning algorithms as integrative tools for validation of next generation sequencing data. Eur Rev Med Pharmacol Sci. 2019;23:8139–47.

- 11. van den Akker J, Mishne G, Zimmer AD, et al. A machine learning model to determine the accuracy of variant calls in capture-based next generation sequencing. BMC Genomics. 2018;19:263. doi:10.1186/s12864-018-4659-0.

- 12. Holt JM, Kelly M, Sundlof B, Nakouzi G, Bick D, Lyon E. Reducing Sanger confirmation testing through false positive prediction algorithms. Genet Med. 2021;23(7):1255–62.

- 13. Handelman GS, Kok HK, Chandra RV, Razavi AH, Huang S, Brooks M, Lee MJ, Asadi H. Peering into the black box of artificial intelligence: evaluation metrics of machine learning methods. AJR Am J Roentgenol. 2019;212(1):38–43.

- 14. Huang Y-S, Hsu C, Chune Y-C, Liao IC, Wang H, Lin Y-L, Hwu W-L, Lee N-C, Lai F. Diagnosis of a single-nucleotide variant in whole-exome sequencing data for patients with inherited diseases: machine learning study using artificial intelligence variant prioritization. JMIR Bioinf Biotechnol. 2022;3(1):e37701.

- 15. Li J, Jew B, Zhan L, Hwang S, Coppola G, Freimer NB, Sul JH. ForestQC: quality control on genetic variants from next-generation sequencing data using random forest. PLoS Comput Biol. 2019;15(12):e1007556.

- 16. Talukder A, Barham C, Li X, Hu H. Interpretation of deep learning in genomics and epigenomics. Brief Bioinform. 2021;22(3):bbaa177.
