Abstract

Objectives: In the wake of the Institute of Medicine report, To Err Is Human: Building a Safer Health System (LT Kohn, JM Corrigan, MS Donaldson, eds; Washington, DC: National Academy Press, 1999), numerous advisory panels are advocating widespread implementation of physician order entry as a means to reduce errors and improve patient safety. Successful implementation of an order entry system requires that attention be given to the user interface. The authors assessed physician satisfaction with the user interface of two different order entry systems—a commercially available product, and the Department of Veterans Affairs Computerized Patient Record System (CPRS).

Design and Measurement: A standardized instrument for measuring user satisfaction with physician order entry systems was mailed to internal medicine and medicine-pediatrics house staff physicians. The subjects answered questions on each system using a 0 to 9 scale.

Results: The survey response rates were 63 and 64 percent for the two order entry systems. Overall, house staff were dissatisfied with the commercial system, giving it an overall mean score of 3.67 (95 percent confidence interval [95% CI], 3.37–3.97). In contrast, the CPRS had a mean score of 7.21 (95% CI, 7.00–7.43), indicating that house staff were satisfied with the system. Overall satisfaction was most strongly correlated with the ability to perform tasks in a “straightforward” manner.

Conclusions: User satisfaction differed significantly between the two order entry systems, suggesting that not all order entry systems are equally usable. Given the national usage of the two order entry systems studied, further studies are needed to assess physician satisfaction with use of these same systems at other institutions.

Computerized physician order entry (POE) offers numerous advantages over traditional paper-based systems. Through rapid information retrieval and efficient data management, POE systems have the potential to improve the quality of patient care. Four specific areas in which benefits are seen with POE are process improvements, resource utilization, clinical decision support, and guideline implementation.1

First, POE improves the process of order writing by generating legible orders that require less clarification from nurses and pharmacists.2 The process of ordering medications is streamlined by eliminating needless transcription steps.3,4 Furthermore, the convenience of being able to access a patient's chart and order medications from any computer terminal reduces the time spent searching for charts.2

Second, POE, when it displays laboratory and cost information, changes provider prescribing habits so that drug choice is more cost effective.5 Physician order entry results in the use of more formulary drugs, which lowers costs.6 Many studies have shown reductions in hospital and patient costs after the implementation of an order entry system that alerted physicians to drug and test prices, warned of potential test redundancy, and gave antibiotic recommendations.7–12

Perhaps the most exciting advantage of POE over written orders is the ability to provide clinical decision support at the time of ordering. Decision support can include the display of patient laboratory data, allergy information, and drug–drug interactions.13 This information retrieval addresses a frequent system failure identified by Leape et al.14—the lack of appropriate knowledge at the time of medication ordering. Through these support features, POE has been shown to improve patient outcomes by reducing medication errors and adverse drug events.15–19

The fourth advantage of POE systems is the ability to incorporate clinical guidelines into the system.20 Overhage et al.21 embedded guideline-based reminders concerning corollary orders for certain tests and drugs. For example, if a practitioner ordered an angiotensin-converting enzyme inhibitor, the computer would suggest an appropriate monitoring test, such as a creatinine or potassium test. They were able to demonstrate that physicians who received the computerized suggestion were twice as likely to order the appropriate follow-up test.

The potential benefits of POE systems are enormous in improved quality of care, decreased hospital costs, and increased efficiency at all levels of health care utilization. However, despite growing evidence to support the many advantages of POE systems, these systems are still not widely used. In 1998, 32.1 percent of hospitals surveyed had POE either completely or partially available.22 However, only 4.9 percent of hospitals with POE required its use.

In response to these studies and to the urgent demands of patients, providers, and government to improve the quality of health care,23 several national organizations have made recommendations for the widespread implementation of POE. These organizations include the Institute of Medicine,24 the American Society of Health-System Pharmacists,3 the National Patient Safety Partnership,24 and the Leapfrog Group,25 an industry-based consortium that includes General Electric and General Motors. With only a minority of hospitals using POE, a rapid roll-out of these systems can be expected. Therefore, it is important to ensure that health care institutions implementing POE acquire only high-quality, clinically usable systems.26

A wide choice of POE systems is available.1 However, few studies have evaluated user satisfaction with commercially available order entry systems. Studies have shown that the assessment and incorporation of user feedback concerning information systems is important to ensure proper system utilization.27–30 This feedback is especially important in light of prior negative experiences with the implementation of POE systems. In one such instance, house staff initiated a work action to prevent the implementation.31,32 User satisfaction is an important predictor of a system's success.33

The medicine house staff physicians in our training program use two different POE systems at two different institutions. To take advantage of this unique situation, we compared house staff physician satisfaction between two different POE systems—a commercially available product and the Department of Veterans Affairs Computerized Patient Record System (CPRS).

Methods

Description of the Commercially Available System and Setting

We studied a commercially available product for POE in use at the Mount Sinai Hospital in New York City, New York. The Mount Sinai Hospital is a 1,171-bed tertiary-care teaching hospital. Medicine house staff physicians spend two to four months each year at Mount Sinai on inpatient rotations.

The commercially available POE system was first implemented in October 1997 in one of several patient centers. By September 1999, the commercially available product system had been implemented and was mandatory in all but one of the general medicine care centers through which house staff physicians rotate. Although the volume of orders entered can vary depending on hospital occupancy rates, house staff consistently enter the majority of orders. In June 2000, an average of 6,300 orders were entered each day, with 4,800 of these orders entered by house staff physicians.

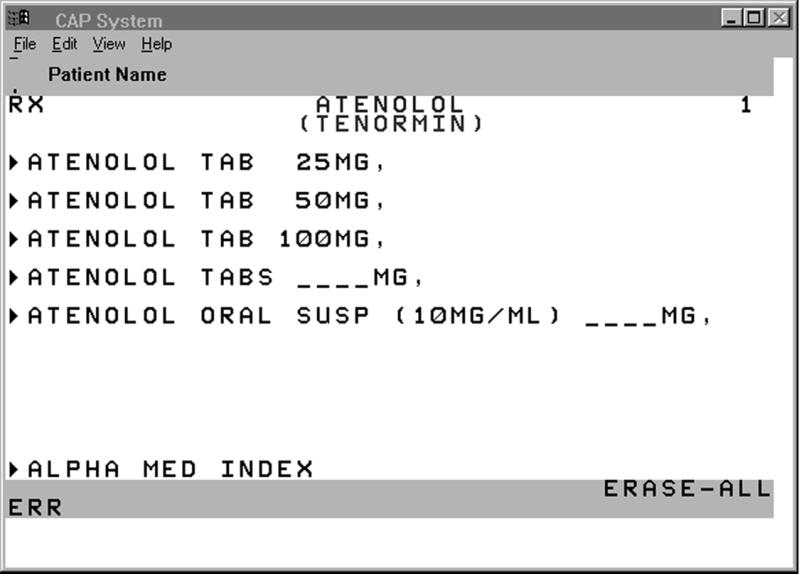

The commercially available product has order entry functions and data retrieval capabilities. The system enables a provider to locate patients; construct patient lists; order medications, procedures, laboratory and radiology studies; and develop personal order sets. The product is menu-driven, with orders entered by mouse. It is a proprietary system with a character-based interface. The screens have a white background with text in three colors. Characters and fonts of similar styles are used in the majority of screens. Free text can be entered to clarify specific nursing interventions or to give clinical information in the ordering of procedures. An example of the screen layout is shown in Figure 1▶.

Figure 1.

Screen from the commercially available product, showing the process of ordering atenolol.

House staff physicians are required to attend a 60-minute training session. An automated telephone and support personnel are available to provide answers to questions about the system. During system implementation, a support crew was present on the medical floors to assist users.

Description of the Veterans Affairs Computerized Patient Record System

We also evaluated the Veterans Affairs CPRS. The Bronx Veterans Affairs Hospital is a 331-bed hospital affiliated with Mount Sinai Medical Center. Mount Sinai medicine house staff spend 1 to 2 months a year at the Bronx Veterans Affairs Hospital on inpatient rotations.

The CPRS was active on all medical and surgical floors by July 1999. As with the commercially available product system, use of the CPRS is mandatory and house staff physicians enter the majority of the approximately 3,000 daily inpatient orders.

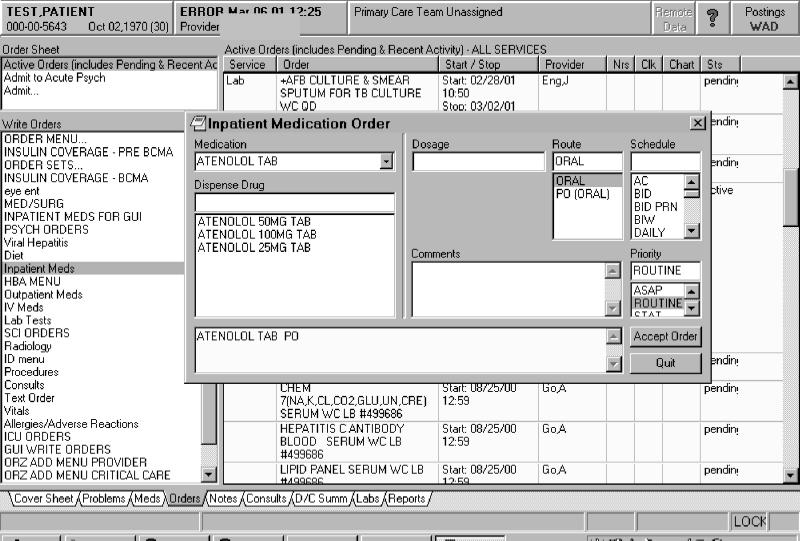

The CPRS and the commercially available product have similar capabilities, such as order entry and data retrieval. The CPRS permits all progress notes, admission notes, and discharge notes to be entered directly. It has a graphical user interface with a Microsoft Windows-style event-driven clinical user interface, and it allows the user to move between different data sections by using the tab feature. Pick-lists for data retrieval are also used. The user can input data through either the keyboard or the mouse. An example of the screen layout is shown in Figure 2▶.

Figure 2.

Screen from the Veterans Affairs Computerized Patient Medical Record, showing the process of ordering atenolol.

A 40-minute training session is arranged for all house staff physicians using the system. Support personnel are available by pager for assistance 24 hours a day. During CPRS implementation, support personnel were present on the inpatient wards to assist users.

Study Subjects

All 144 internal medicine and medicine-pediatrics house staff physicians (medicine house staff) were initially eligible for survey. For the CPRS, 12 of the 144 participants were excluded because they had no prior experience with the system. Therefore, 132 house staff physicians were surveyed about the CPRS. The survey responses were anonymous. This study was reviewed by The Mount Sinai School of Medicine Grants and Contracts Office and was approved by the Institutional Review Board.

Survey Administration

The questionnaire packets contained the Questionnaire for User Interface Satisfaction (QUIS), a cover letter, and a self-addressed envelope. Respondents were asked to consider the following system functions as they completed the QUIS—clinical result review, laboratory and radiology data retrieval, order entry, and patient lists.

Demographic information and usage pattern questions were asked in the cover letter. A comment section was included, and all comments were listed and then categorized by the frequency of response.

The first questionnaire packet was placed in the departmental mail boxes of house staff physicians in February 2000. This questionnaire asked participants to assess the commercially available product. One month after packet distribution, reminder announcements were made during educational conferences, and survey packets were redistributed for initial non-responders.

The second questionnaire packet, assessing the CPRS, was distributed in April 2000 in the house staff mailboxes. As with the first survey, reminder announcements were made 1 month later, and survey packets were redistributed to non-responders. Survey collection ended for the first system in April 2000 and for the second system in June 2000.

The Questionnaire for User Interface Satisfaction (QUIS)

Chin et al.34 at the University of Maryland Human–Computer Interaction Laboratory developed the QUIS to assess user satisfaction with the human–computer interface. The QUIS was developed on a broad range of software as a general software-assessment tool and has been used to evaluate physician satisfaction with electronic medical records.35 We used QUIS version 5.5 (short form) for our measurements.

The QUIS is a 27-item instrument. The questions are subdivided into five categories—overall reaction to the software (six questions), screen design and layout (four questions), terminology and systems information (six questions), learning (six questions), and system capabilities (five questions).

Analytic Approach

Two-tailed unpaired t-tests were used to compare the individual question and category scores between the two systems. In our study design, we believed the respondents needed to be completely anonymous to ensure accurate reporting. Therefore, we were unable to pair the survey responses. Although a loss of power can result from using paired data with an unpaired test statistic,36 we believed, on the basis of our estimated number of respondents and hypothesized effect size, that this study design would not reduce our ability to detect a difference between the two systems.
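As a minimal sketch of the unpaired comparison described above, the following computes a Student's t statistic with pooled variance for two independent groups of ratings. The ratings are hypothetical values on the 0 to 9 scale, not study data, and the pooled-variance form is an assumption, since the text does not say which variant of the unpaired test was used. The function name `pooled_t_statistic` is illustrative only.

```python
from math import sqrt
from statistics import mean, variance


def pooled_t_statistic(a, b):
    """Return (t, degrees of freedom) for an unpaired, pooled-variance t-test."""
    na, nb = len(a), len(b)
    # Pool the two sample variances, weighting each by its degrees of freedom.
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    standard_error = sqrt(pooled_var * (1 / na + 1 / nb))
    return (mean(a) - mean(b)) / standard_error, na + nb - 2


# Hypothetical ratings (assumption: illustrative numbers only).
commercial = [3, 4, 2, 5, 4, 3]
cprs = [7, 8, 6, 7, 8, 7]

t_stat, dof = pooled_t_statistic(commercial, cprs)
print(f"t = {t_stat:.3f}, df = {dof}")
```

The two-tailed P value would then be read from a t distribution with `dof` degrees of freedom; that step is omitted here because it needs a statistics library or table.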

Since the probability of a Type I error is increased with multiple comparisons, we used the Bonferroni inequality formula to adjust our alpha level. We divided an initial Type I error rate of 0.05 by 27 (the number of independent comparisons per group) and set our level of statistical significance at 0.002. Because this adjustment can result in an over-conservative estimate of alpha levels, we also performed the Student-Newman-Keuls test to control for the multiple comparisons.37
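The Bonferroni adjustment described above is simple arithmetic and can be checked in a few lines. This is only an illustration of the calculation stated in the text; the function name `bonferroni_alpha` is hypothetical.

```python
def bonferroni_alpha(family_alpha, n_comparisons):
    """Per-comparison significance level under the Bonferroni inequality."""
    return family_alpha / n_comparisons


# Values taken from the text: initial alpha of 0.05, 27 comparisons.
alpha = bonferroni_alpha(0.05, 27)
print(f"unrounded: {alpha:.5f}")
print(f"rounded to three decimals: {round(alpha, 3)}")
```

The unrounded threshold is slightly below 0.002, so a P value such as 0.0001 falls under it either way.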

The respondents to each system survey were dichotomized and compared on the basis of usage characteristics. Mean scores per category were compared between the two groups by means of the Wilcoxon rank-order sum test with an alpha level of 0.01. We compared inexperienced users (subjects who had used the system 4 months or less) with experienced users (those who had used the system 9 months or more). We compared light users (subjects who spent 30 minutes or less a day on the system) with heavy users (those who spent more than 60 minutes a day on the system). And we compared recent users (subjects who had used the system within the last 2 weeks) with distant users (those who had last used the system 8 or more weeks before). We used an ANOVA to compare QUIS scores by postgraduate year level.
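The grouping rules in this paragraph can be written out as a small sketch. The thresholds come from the text; the function names, and the choice to return `None` for respondents who fall between the two thresholds (that is, to leave them out of that comparison), are assumptions made for illustration.

```python
def experience_group(months_used):
    """Inexperienced: 4 months or less. Experienced: 9 months or more."""
    if months_used <= 4:
        return "inexperienced"
    if months_used >= 9:
        return "experienced"
    return None  # assumption: in-between respondents are not compared


def intensity_group(minutes_per_day):
    """Light: 30 minutes or less a day. Heavy: more than 60 minutes a day."""
    if minutes_per_day <= 30:
        return "light"
    if minutes_per_day > 60:
        return "heavy"
    return None


def recency_group(weeks_since_last_use):
    """Recent: within the last 2 weeks. Distant: 8 or more weeks ago."""
    if weeks_since_last_use <= 2:
        return "recent"
    if weeks_since_last_use >= 8:
        return "distant"
    return None


# Hypothetical respondent: 6 months of use, 75 minutes a day, last used 1 week ago.
print(experience_group(6), intensity_group(75), recency_group(1))
```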

Because of house staff physician scheduling, the sample of users experienced with the CPRS was too small, so we dichotomized this group into those with less than 1 week of experience and those with 1 week or more of experience. Subgroup analyses were performed using the Wilcoxon rank-order sum test.

We used the Spearman correlation coefficient to determine which individual questions had a significant relationship with overall satisfaction with the system. Overall satisfaction was determined by averaging the six responses in the “Overall Reactions to the Software” category, giving a mean score for overall satisfaction. The remaining 21 items were then correlated to this mean score for overall satisfaction.
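The procedure above (average the six overall-reaction items per respondent, then rank-correlate that mean with another item) can be sketched as follows. The responses are hypothetical, not study data, and the helper names are illustrative. Spearman's coefficient is computed here as the Pearson correlation of average ranks, which handles ties.

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: five respondents, six overall-reaction items each,
# plus each respondent's score on one other QUIS item.
overall_items = [
    [3, 4, 2, 3, 4, 3],
    [6, 7, 6, 5, 7, 6],
    [1, 2, 1, 2, 2, 1],
    [8, 7, 8, 9, 8, 8],
    [5, 5, 4, 5, 6, 5],
]
straightforward_item = [2, 6, 1, 8, 7]

overall_satisfaction = [mean(row) for row in overall_items]
rho = spearman(overall_satisfaction, straightforward_item)
print(f"rho = {rho:.2f}")
```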

We performed univariate and multivariate linear regression analyses to assess whether the demographic characteristics collected had any influence on overall satisfaction scores. Multivariate analysis showed that no demographic factors had any clinically significant associations in either system.

SAS 6.12 software was used for all statistical analysis.38

Results

Response to Survey

One hundred and forty-four house staff physicians were eligible for the commercially available product survey, and 132 residents were eligible for the CPRS survey. After two rounds of survey distribution, 94 of the 144 commercially available product questionnaires were returned. Four were excluded because of respondents' lack of prior exposure to the system or because of the presence of more than five unanswered questions on the survey. The remaining 90 questionnaires represented a response rate of 63 percent.

Eighty-four CPRS questionnaires were returned, representing a response rate of 64 percent. All CPRS questionnaires were used.
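The reported response rates follow directly from the counts given above. This short check uses only numbers stated in the text; the function name `response_rate` is illustrative.

```python
def response_rate(usable, eligible):
    """Usable questionnaires as a percentage of eligible house staff."""
    return 100 * usable / eligible


commercial_usable = 94 - 4  # returned minus excluded questionnaires
commercial_rate = response_rate(commercial_usable, 144)
cprs_rate = response_rate(84, 132)

print(f"commercial: {commercial_rate:.1f}%")
print(f"CPRS: {cprs_rate:.1f}%")
```

The commercial-system figure sits exactly on a half percent, so it matches the reported 63 percent only when halves are rounded up.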

Of the questionnaire respondents, 52.5 percent of the commercially available product group and 50 percent of the CPRS group were female. In the commercially available product group, the most frequent respondents were first-year residents (42.2 percent), whereas in the CPRS group, the most frequent respondents were second-year residents (33.3 percent). The majority of respondents in both groups spent more than 60 minutes a day on the system (75 percent in the commercially available product group and 69 percent in the CPRS group).

An overwhelming majority of respondents in the CPRS group (96.4 percent) had four months or less experience with the system, compared with only 41.6 percent of the commercially available product group. Overall, respondents in the commercially available product group were more likely than those in the CPRS group to have used the system within 2 weeks of the survey (47.8 vs. 21.7 percent). Individual characteristics of the respondents are listed in Table 1▶.

Table 1 ▪.

Characteristics of Survey Respondents

| Characteristics | Commercial System | Veterans Affairs CPRS* |
|---|---|---|
| Response rate (%) | 90 (63%) | 84 (64%) |
| Mean age, yr. | 28.5 | 28.9 |
| Female (%) | 47 (52.5%) | 42 (50%) |
| Postgraduate year: | | |
| I | 38 (42.2%) | 26 (31%) |
| II | 25 (27.8%) | 28 (33.3%) |
| III | 22 (24.4%) | 25 (29.8%) |
| IV | 5 (5.6%) | 5 (6.0%) |
| Minutes a day using the system: | | |
| Less than 30 (%) | 3 (3.4%) | 7 (8.4%) |
| 30 to 60 (%) | 19 (21.6%) | 19 (22.6%) |
| 61 to 90 (%) | 34 (38.6%) | 30 (35.7%) |
| Greater than 90 (%) | 32 (36.4%) | 28 (33.4%) |
| Months' use of the system: | | |
| 4 or less (%) | 37 (41.6%) | 81 (96.4%) |
| 5 to 8 (%) | 23 (25.8%) | 2 (2.4%) |
| 9 or greater (%) | 29 (32%) | 1 (1.2%) |
| Weeks since last use of the system: | | |
| 2 or less (%) | 43 (47.8%) | 18 (21.7%) |
| 3 to 8 (%) | 29 (32.2%) | 10 (12%) |
| 9 or greater (%) | 18 (20%) | 55 (66.3%) |

Abbreviation: CPRS indicates Computerized Patient Record System.

Overall Level of Satisfaction

We first compared the overall mean user response for all questions between the two surveys. The overall mean score was 3.67 (95 percent confidence interval [95% CI], 3.37–3.97) for the commercially available product and 7.21 (95% CI, 7.00–7.43) for the CPRS. This difference was statistically significant, with a P value of 0.0001. There were no significant differences with either system in overall satisfaction scores between postgraduate year levels.

Overall mean user responses for the individual QUIS categories were compared between the two systems and are shown in Table 2▶. Satisfaction with the CPRS was significantly higher in all individual categories (P=0.0001). These results indicate that the respondents feel that the screen layout and system capabilities of the CPRS are better than those of the commercially available product. Also, the responses indicate that they found the CPRS easier to learn to use. The largest absolute difference between category mean scores was in “learning” (absolute mean scores difference, 3.92). In addition, the Learning category scored lower than all other categories in the commercially available product group (Table 2▶).

Table 2 ▪.

Results of Category Mean Scores

| Category | Commercial System (95% CI) | Veterans Affairs CPRS (95% CI) |
|---|---|---|
| Overall reaction to the software | 3.27 (2.92, 3.62) | 7.08 (6.80, 7.36) |
| Screen design and layout | 4.41 (4.01, 4.81) | 7.68 (7.46, 7.90) |
| Terminology and systems information | 3.88 (3.53, 4.22) | 7.22 (6.97, 7.47) |
| Learning | 3.21 (2.87, 3.56) | 7.13 (6.89, 7.37) |
| System capabilities | 3.89 (3.56, 4.22) | 7.08 (6.80, 7.36) |
| Overall mean score | 3.67 (3.37, 3.97) | 7.21 (7.00, 7.43) |

Abbreviations: CPRS indicates Computerized Patient Record System; CI, confidence interval.

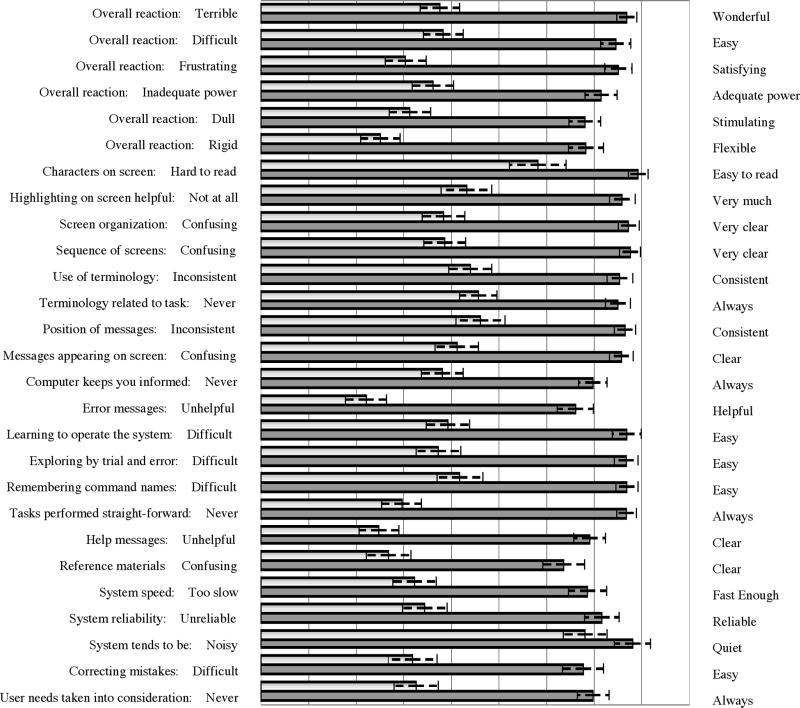

The individual question mean responses are shown in Figure 3▶. The respondents scored the CPRS significantly higher than the commercially available product on each question, even after adjusting for multiple comparisons with the Bonferroni inequality formula (alpha, 0.002) and the Student-Newman-Keuls test. On examination of the individual items of the QUIS, the greatest absolute difference between the two systems was seen in the question “tasks can be performed in a straightforward manner” (absolute mean score difference, 4.72). The smallest absolute differences were seen in the questions concerning legibility of characters on the screens (absolute mean score difference, 2.11) and system noise (absolute mean score difference, 1.0).

Figure 3.

Comparison of individual question mean scores (scale, 0 to 9). Light indicates commercially available product; dark gray, Computerized Patient Record System; staggered bars, 95% confidence intervals.

QUIS Scores for Individual Systems

Only 4 of 27 questions (15 percent) concerning the commercially available product scored higher than our QUIS mid value of 4.5. These questions were “legibility of the characters on the screen” (mean score, 5.82; 95% CI, 5.23–6.41), “computer terminology related to the task” (mean score, 4.57; 95% CI, 4.18–4.97), “position of messages on the screen” (mean score, 4.61; 95% CI, 4.10–5.13), and “system noise” (mean score, 6.81; 95% CI, 6.35–7.28). The lowest-scoring individual questions included “error messages” (mean score, 2.21; 95% CI, 1.78–2.64), “help message” (mean score, 2.48; 95% CI, 2.06–2.91), and “system flexibility” (mean score, 2.51; 95% CI, 2.10–2.92).

No question concerning the CPRS had a mean score below the midpoint of the QUIS. The questions with the highest scores were “legibility of characters on the screen” (mean score, 7.93; 95% CI, 7.72–8.13), “system noise” (mean score, 7.81; 95% CI, 7.43–8.18), and “sequence of screens” (mean score, 7.76; 95% CI, 7.54–7.98). The CPRS scores were lowest on the questions regarding “supplemental reference materials” (mean score, 6.36; 95% CI, 5.92–6.81) and “error messages” (mean score, 6.61; 95% CI, 6.23–6.99).

Correlations of Overall Satisfaction

Many individual QUIS questions were strongly correlated to overall satisfaction levels with the two systems (Table 3▶). On the analysis of the commercially available product, overall satisfaction was significantly correlated with questions associated with learning the system, including “tasks can be performed in a straightforward manner,” “exploring by trial and error,” and “remembering names and commands” (P=0.0001). Overall satisfaction with the CPRS was significantly correlated with the questions “[is] the terminology related to the task you are doing,” “the position of messages,” and “terminology consistency” (P=0.0001). All these questions relate to the screen design and layout of the CPRS.

Table 3 ▪.

Correlation of Overall Satisfaction and Individual Questions with the Commercially Available System and The Veterans Affairs CPRS

| Questions | Correlation Coefficient*, CPRS | Correlation Coefficient*, Commercial |
|---|---|---|
| Characters on the computer screen | 0.23 | 0.60 |
| Highlighting simplifies tasks | 0.55 | 0.56 |
| Organization of information on the screen | 0.49 | 0.59 |
| Sequence of screens | 0.61 | 0.67 |
| Terminology consistency | 0.60 | 0.71 |
| Terminology related to the task you are doing | 0.57 | 0.72 |
| Position of messages | 0.48 | 0.69 |
| Messages prompt user for input | 0.49 | 0.68 |
| Computer keeps you informed | 0.50 | 0.47 |
| Error messages | 0.27† | 0.44 |
| Learning to operate the system | 0.56 | 0.57 |
| Exploring by trial and error | 0.67 | 0.51 |
| Remembering names of commands | 0.67 | 0.63 |
| Task performed in a straightforward manner | 0.71 | 0.71 |
| Help messages | 0.46 | 0.63 |
| Supplemental reference materials | 0.44 | 0.50 |
| System speed | 0.43 | 0.57 |
| System reliability | 0.45 | 0.57 |
| System noise | 0.08 (NS) | 0.50 |
| Correcting your mistakes | 0.56 | 0.53 |
| Experienced and inexperienced users' needs are taken into consideration | 0.68 | 0.52 |

Abbreviations: CPRS indicates Computerized Patient Record System; NS, not significant

*All P values are less than 0.001 unless otherwise indicated.

† P <0.05

Overall satisfaction was strongly correlated in both groups (commercially available product and CPRS) with the learning category questions “tasks performed in a straightforward manner” (r=0.71, r=0.71) and “remembering names and commands” (r=0.67, r=0.63). Overall satisfaction was also strongly correlated with “terminology consistency” (r=0.60, r=0.71). Correlation to the question about system noise was not significant in the commercially available product group. This is important, since this individual question had the highest score (6.81) in the commercially available product group. System noise is the sound the system makes when performing tasks.

Usage Patterns and Satisfaction

We compared mean satisfaction scores between experienced and inexperienced users. No category had a statistically significant difference between experienced and inexperienced users in either system. There were also no statistically significant differences in the category scores in either the commercially available product group or the CPRS group between light and heavy users.

We compared recent to distant users in the two systems. There was no statistically significant difference between the distant and recent users with the commercially available product. In comparing recent and distant users of the CPRS, recent users rated the system higher in all categories. Three categories achieved statistical significance: “terminology and systems information” (P=0.003), “learning” (P=0.01), and “system capabilities” (P=0.007).

Comments Section

The most frequent comment concerning the commercially available product was about routine tasks being both cumbersome and taking longer to perform. Nine respondents commented that patient care was compromised by the commercially available system. There were no comments that patient care was improved by the system. The most frequently cited benefit of the commercially available product was that it provided the ability to write orders from any location in the hospital.

In contrast, the most frequent comment on the evaluation of the CPRS was that the system was easy to use. Also frequently mentioned was the advantage of having all the data related to the patient in one place. Several respondents mentioned that the CPRS improved patient care, and no one responded that patient care was compromised. The most frequent negative comment had to do with system response time.

Discussion

Our results show that medicine house staff physicians favored the POE system of the CPRS over the commercially available POE system. The large difference in user satisfaction between these two POE systems should invite closer examination of current POE system usability, particularly since there is little literature detailing these systems. Not all order entry systems may be equally usable, and caution should be exercised in the choice of an order entry system, since the inability to use a system properly could result in user errors26 as well as inefficient use of time.

We found significant differences between mean scores in questions associated with task performance and completion. The largest absolute difference was associated with the question “tasks can be performed in a straightforward manner.” This suggests that house staff physicians had difficulty using the commercially available product to order tests and drugs because they were unsure of how to perform these tasks. The written comments revealed several complaints from users of the commercially available system about the ordering process, which was felt to be cumbersome and not intuitive. Comments reflected that common orders were not located in places that made “sense” to the physician. For instance, to find and order a glucose finger stick, the user had to first enter Nursing Orders and then navigate a series of screens; this option was described as “buried” within the system.

Some respondents complained that many routinely ordered tests were difficult to find and required multiple steps to access. Placing frequently used orders in nested menus is inefficient and not intuitive to users, and thus will affect the usability of a system.

Another potential source of inefficiency in task completion with the commercially available product resulted from the way in which ordering options are displayed. Comments indicated that too many options that were “irrelevant” to physicians were listed on one screen, implying that users sorted through numerous relatively less useful options to locate the necessary one. It has been suggested that the offer of too much non-essential information may be “disturbing” to the user,39 and this could have resulted in lower user interface satisfaction scores with the commercially available product system.

The importance of the user interface in task performance and completion was further demonstrated in our correlation analyses. In both systems, overall satisfaction was highly correlated with “tasks being performed in a straightforward manner,” the ability to “remember commands” and “consistent terminology.” These findings concur with the findings of Sittig et al.,35 who used the QUIS to assess user satisfaction with the BICS system at the Brigham and Women's Hospital. Like them, we found that user satisfaction correlated best with the ability of the physician to use the system to perform the assigned tasks efficiently.

Other studies have found correlations between user satisfaction with an order entry system and efficiency in its use. Lee et al.,40 who evaluated user satisfaction with an institutionally developed POE system, found that overall satisfaction was highly correlated with perceived efficiency. In a recent study from Johns Hopkins University,41 a customized off-the-shelf POE system was assessed. As in the study by Lee et al., overall satisfaction was correlated with the ability to perform daily work efficiently using the system.

Our results show that overall satisfaction with the user interfaces of the two order entry systems is strongly related to the efficiency with which physicians successfully perform tasks when using the systems. It is important that the user interface of an order entry system be designed with the physician's needs in mind. House staff physicians are seldom able to attend long training sessions for new systems; therefore, physicians must be able to rapidly learn how to use a system on the job.

To maximize efficiency, tasks must be performed in a “straightforward” manner. This is facilitated when users can either easily develop a mental model of how they expect an application to function or apply a previously developed mental model to the software.42 If they cannot develop a mental model based on expectations or prior experience, they must invest significantly more time in learning to use the system properly.

The CPRS interface is organized like a standard patient chart, with a section devoted to orders, a section for progress notes, and a section for laboratory findings. Possibly this format, which is familiar to the physician users, capitalizes on what is already intuitive knowledge for the physician. Thus, navigating the electronic chart is similar to navigating a paper chart.

Although we did not assess experience with computers, the commercially available product interface is also less likely to be one with which house staff would be familiar. The user interface of the commercially available product is a proprietary interface, which is character-based, whereas the CPRS interface has a Microsoft Windows style. It is likely that users' prior computer experience in general would be with systems that resemble the CPRS.

Many studies have demonstrated benefits of POE, but these benefits may be mitigated by the inability of physicians to use an order entry system. Clinical software systems may introduce new errors when physicians have difficulty using them.26 It is crucial to continuously collect and evaluate physician user feedback about information systems, or errors attributable to the user interface will not be detected. Thus, any institution wanting to implement POE must have a process for collecting and incorporating user feedback.26,29

Our study may have been affected by the amount of rotation time that house staff physicians spend in each hospital and, thus, with each POE system. House staff spent less time at the Veterans Affairs hospital and had longer periods of time between system usage, which could have led to recall bias. A larger percentage of respondents for the CPRS were distant users, and in our study the distant users tended to rate the system lower. This could have lowered the overall satisfaction with the CPRS.

Another limitation of our study was that it was not designed to assess the effects of external factors, such as implementation, customization, and institutional readiness, on user satisfaction. System implementation can play a major role in how house staff physicians view a system.31,32

An institutional factor that could have affected our results is the difference in patient case-mix at the two hospitals. The nature of the orders written by house staff could have varied in complexity between the institutions, and this was not investigated. Thus, if house staff repeatedly had to enter more complex orders for their patients while at the Mount Sinai Hospital than at the Bronx Veterans Affairs Hospital, this could have had the effect of lowering satisfaction scores regardless of the system used.

Another limitation was the timing of survey administration. When an information system is newly introduced, satisfaction scores may reflect the impressions of users who are still learning the system. With time and experience, users may form impressions that differ from their initial ones. The systems were implemented at different times, which could have affected the QUIS scores. Since the commercially available product was implemented earlier than the CPRS, a larger proportion of respondents had more than 9 months' experience with the commercially available product than with the CPRS (32 vs. 1 percent). However, this would be expected to raise mean scores for the commercially available product, since residents had more experience with this system.

Conclusions

The satisfaction of house staff physicians with the two POE systems was very different. They were satisfied with one system, the CPRS, and dissatisfied with the other, a commercially available product. Satisfaction with these systems was related to user-perceived efficiency of each system in the performance of necessary tasks.

User satisfaction is an important marker for the usability of any system, and our results indicate that not all order entry systems are equally usable. It is important to ensure not only that physicians are satisfied with an order entry system but also that user feedback is collected and used at institutions with order entry systems. With the impetus for widespread utilization of POE, it is important to ensure that only well-received, high-quality order entry systems are implemented. Only by taking these precautions can we realize the full potential of physician order entry.

The two physician order entry systems included in our study are used at several sites nationally. Further studies are needed to assess whether our user satisfaction results will be similar at other sites that use these two order entry systems.

Acknowledgments

The authors thank L. Suzanne Shelton, MD, for her contribution to the editing of this manuscript.

References

- 1. Sittig DF, Stead WW. Computer-based physician order entry: the state of the art. J Am Med Inform Assoc. 1994;1(2):108–23.

- 2. Teich JM, Hurley JF, Beckley RF, Aranow M. Design of an easy-to-use physician order entry system with support for nursing and ancillary departments. Proc Annu Symp Comput Appl Med Care. 1992:99–103.

- 3. Top-priority actions for preventing adverse drug events in hospitals: recommendations of an expert panel. Am J Health Syst Pharm. 1996;53(7):747–51.

- 4. Teich JM, Spurr CD, Flammini SJ, et al. Response to a trial of physician-based inpatient order entry. Proc Annu Symp Comput Appl Med Care. 1993:316–20.

- 5. Teich JM, Merchia PR, Schmiz JL, Kuperman GJ, Spurr CD, Bates DW. Effects of computerized physician order entry on prescribing practices. Arch Intern Med. 2000;160(18):2741–7.

- 6. Schroeder CG, Pierpaoli PG. Direct order entry by physicians in a computerized hospital information system. Am J Hosp Pharm. 1986;43(2):355–9.

- 7. Bates DW, Kuperman GJ, Rittenberg E, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med. 1999;106(2):144–50.

- 8. Evans RS, Pestotnik SL, Classen DC, et al. A computer-assisted management program for antibiotics and other antiinfective agents. N Engl J Med. 1998;338(4):232–8.

- 9. Tierney WM, Miller ME, Overhage JM, McDonald CJ. Physician inpatient order writing on microcomputer workstations: effects on resource utilization. JAMA. 1993;269(3):379–83.

- 10. Pestotnik SL, Classen DC, Evans RS, Burke JP. Implementing antibiotic practice guides through computer-assisted decision support: clinical and financial outcomes. Ann Intern Med. 1996;124(10):884–90.

- 11. Tierney WM, McDonald CJ, Martin DK, Rogers MP. Computerized display of past test results: effect on outpatient testing. Ann Intern Med. 1987;107(4):569–74.

- 12. Tierney WM, Miller ME, McDonald CJ. The effect on test ordering of informing physicians of the charges for outpatient diagnostic tests. N Engl J Med. 1990;322(21):1499–504.

- 13. Bates DW, Boyle DL, Teich JM. Impact of computerized physician order entry on physician time. Proc Annu Symp Comput Appl Med Care. 1994:996.

- 14. Leape LL, Bates DW, Cullen DJ, et al. Systems analysis of adverse drug events. ADE Prevention Study Group. JAMA. 1995;274(1):35–43.

- 15. Bates DW, Leape LL, Cullen DJ, et al. Effect of computerized physician order entry and a team intervention on prevention of serious medication errors. JAMA. 1998;280(15):1311–6.

- 16. Bates DW, Teich JM, Lee J, Seger D, Kuperman GJ, Ma'Luf N, et al. The impact of computerized physician order entry on medication error prevention. J Am Med Inform Assoc. 1999;6(4):313–21.

- 17. Classen DC, Pestotnik SL, Evans RS, Burke JP. Computerized surveillance of adverse drug events in hospital patients. JAMA. 1991;266(20):2847–51.

- 18. Evans RS, Pestotnik SL, Classen DC, Horn SD, Bass SB, Burke JP. Preventing adverse drug events in hospitalized patients. Ann Pharmacother. 1994;28(4):523–7.

- 19. Raschke RA, Gollihare B, Wunderlich TA, et al. A computer alert system to prevent injury from adverse drug events: development and evaluation in a community teaching hospital. JAMA. 1998;280(15):1317–20.

- 20. Chin HL, Wallace P. Embedding guides into direct physician order entry: simple methods, powerful results. Proc AMIA Annu Symp. 1999:221–5.

- 21. Overhage JM, Tierney WM, Zhou XH, McDonald CJ. A randomized trial of “corollary orders” to prevent errors of omission. J Am Med Inform Assoc. 1997;4(5):364–75.

- 22. Ash JS, Gorman PN, Hersh WR. Physician order entry in U.S. hospitals. Proc AMIA Annu Symp. 1998:235–9.

- 23. Chassin MR, Galvin RW. The urgent need to improve health care quality. Institute of Medicine National Roundtable on Health Care Quality. JAMA. 1998;280(11):1000–5.

- 24. Kohn LT, Corrigan JM, Donaldson MS (eds). To Err is Human: Building a Safer Health System. Washington, DC: Institute of Medicine/National Academy Press, 2000.

- 25. Booth B. IOM report spurs momentum for patient safety movement. Am Med News. 2000.

- 26. Miller RA, Gardner RM. Recommendations for responsible monitoring and regulation of clinical software systems. American Medical Informatics Association, Computer-based Patient Record Institute, Medical Library Association, Association of Academic Health Science Libraries, American Health Information Management Association, American Nurses Association. J Am Med Inform Assoc. 1997;4(6):442–57.

- 27. Gardner RM, Lundsgaarde HP. Evaluation of user acceptance of a clinical expert system. J Am Med Inform Assoc. 1994;1(6):428–38.

- 28. Mazzoleni MC, Baiardi P, Giorgi I, Franchi G, Marconi R, Cortesi M. Assessing users' satisfaction through perception of usefulness and ease of use in the daily interaction with a hospital information system. Proc AMIA Annu Fall Symp. 1996:752–6.

- 29. Abookire SA, Teich JM, Bates DW. An institution-based process to ensure clinical software quality. Proc AMIA Annu Symp. 1999:461–5.

- 30. Zviran M. Evaluating user satisfaction in a hospital environment: an exploratory study. Health Care Manage Rev. 1992;17(3):51–62.

- 31. Massaro TA. Introducing physician order entry at a major academic medical center, part II: impact on medical education. Acad Med. 1993;68(1):25–30.

- 32. Massaro TA. Introducing physician order entry at a major academic medical center, part I: impact on organizational culture and behavior. Acad Med. 1993;68(1):20–5.

- 33. Bailey JE. Development of an instrument for the management of computer user attitudes in hospitals. Methods Inf Med. 1990;29(1):51–6.

- 34. Chin JP, Diehl VA, Norman KL. Development of an instrument measuring user satisfaction of the human–computer interface. Proc CHI. 1988:213–21.

- 35. Sittig DF, Kuperman GJ, Fiskio J. Evaluating physician satisfaction regarding user interactions with an electronic medical record system. Proc AMIA Annu Symp. 1999:400–4.

- 36. Snedecor GW, Cochran WG. Statistical Methods. 6th ed. Ames, Iowa: Iowa State University Press, 1967.

- 37. Maxwell SE, Delaney HD. Designing Experiments and Analyzing Data. Belmont, Calif.: Wadsworth, 1990.

- 38. SAS for Windows, v. 6.12. Cary, NC: SAS Institute, 1994.

- 39. van Bemmel JH, Musen MA (eds). Handbook of Medical Informatics. Amsterdam, The Netherlands: Springer, 1997.

- 40. Lee F, Teich JM, Spurr CD, Bates DW. Implementation of physician order entry: user satisfaction and self-reported usage patterns. J Am Med Inform Assoc. 1996;3(1):42–55.

- 41. Weiner M, Gress T, Thiemann DR, et al. Contrasting views of physicians and nurses about an inpatient computer-based provider order entry system. J Am Med Inform Assoc. 1999;6(3):234–44.

- 42. Norman D. The Design of Everyday Things. New York: Doubleday, 1988.