Abstract

Motivated by neuropsychological investigations of category-specific impairments, many functional brain imaging studies have found distinct patterns of neural activity associated with different object categories. However, the extent to which these category-related activation patterns reflect differences in conceptual representation remains controversial. To investigate this issue, functional magnetic resonance imaging (fMRI) was used to record changes in neural activity while subjects interpreted animated vignettes composed of simple geometric shapes in motion. Vignettes interpreted as conveying social interactions elicited a distinct and distributed pattern of neural activity, relative to vignettes interpreted as mechanical actions. This neural system included regions in posterior temporal cortex associated with identifying human faces and other biological objects. In contrast, vignettes interpreted as conveying mechanical actions resulted in activity in posterior temporal lobe sites associated with identifying manipulable objects such as tools. Moreover, social, but not mechanical, interpretations elicited activity in regions implicated in the perception and modulation of emotion (right amygdala and ventromedial prefrontal cortex). Perceiving and understanding social and mechanical concepts depends, in part, on activity in distinct neural networks. Within the social domain, the network includes regions involved in processing and storing information about the form and motion of biological objects, and in perceiving, expressing, and regulating affective responses.

INTRODUCTION

Reports of category-specific impairments following brain injury or disease have motivated an ever-increasing number of functional imaging studies on category representation in the normal human brain. Many of these investigations have shown that object categories are represented by neural activity distributed across widespread regions of cortex. It has also been established that many of these activations are found in processing streams associated with perception of specific object features such as form and colour (ventral occipitotemporal cortex), motion (posterior lateral temporal cortex; specifically, the middle temporal gyrus and superior temporal sulcus [STS]), and with grasping and manipulating objects (left intraparietal sulcus and ventral premotor cortices). Moreover, different object categories evoke distinct patterns of activity in these regions (for recent reviews, see Josephs, 2001; Martin, 2001; Martin & Chao, 2001; Thompson-Schill, 2002). For example, animate objects, as represented by pictures of human faces (e.g., Haxby, Ungerleider, Clark, Shouten, Hoffman, & Martin, 1999; Ishai, Ungerleider, Martin, Shouten, & Haxby, 1999; Kanwisher, McDermott, & Chun, 1997), human figures (Beauchamp, Lee, Haxby, & Martin, 2002), and animals (e.g., Chao, Haxby, & Martin, 1999), show heightened activity in the lateral region of the fusiform gyrus and in STS, relative to a number of other object categories (houses, tools, chairs). In contrast, manipulable objects such as tools and utensils show heightened activity relative to animate objects in the medial portion of the fusiform gyrus (e.g., Chao et al., 1999), posterior region of the left middle temporal gyrus (e.g., Chao et al., 1999; Moore & Price, 1999), and in left intraparietal and ventral premotor cortices (e.g., Chao & Martin, 2000).

Although these object category-related patterns of activity have most often been found with tasks using object pictures, they have also been found when objects are represented by their written names (see above-cited reviews). These and related findings have led us to suggest that information about different object attributes and features is stored in these regions of cortex. Moreover, we have suggested that these regions are involved in both perceiving and knowing about features and properties critical for object identification (e.g., Martin, 1998).

For example, activity in posterolateral left temporal cortex, just anterior to primary motion processing area MT, has been reported in multiple studies of object semantics. Naming pictures of tools, answering questions about tools, and generating action words in response to object pictures or their written names show enhanced activity in the left middle temporal gyrus. In contrast, naming pictures of animals and viewing human faces show enhanced activity in a more dorsal location centred on the STS, usually stronger in the right than the left hemisphere (see above-cited reviews). This region (STS) is of particular interest because it is involved in perceiving biological motion in both humans (e.g., Puce, Allison, Bentin, Gore, & McCarthy, 1998) and monkeys (e.g., Oram & Perrett, 1994). Moreover, we have recently shown that these regions are preferentially responsive to different types of object motion. Activity in the middle temporal gyrus was strongly and selectively enhanced by video clips of moving tools, while STS was selectively responsive to human movement (Beauchamp et al., 2002).

To explain these patterns of results, we suggested that, as a result of repeated experience with objects, regions in lateral temporal cortex become tuned to different properties of object-associated motion. For example, middle temporal gyrus may be tuned to unarticulated patterns of motion commonly associated with tools, whereas STS may be tuned to articulated patterns of motion commonly associated with biological objects (see Beauchamp et al., 2002, for details). In a similar fashion, ventral occipitotemporal cortex may become tuned to different properties of object form. Importantly, it is also proposed that, once these object-feature networks are established, they are active whenever an object concept is retrieved, regardless of the physical format of the stimuli used to elicit the concept (picture, word, moving shapes, mental imagery, etc.). Thus, activity in these regions can be driven bottom-up by features and properties, and top-down, as part of the representation of the concept.

The goal of the present experiment was to evaluate this idea by using the same stimuli to evoke different categories of conceptual representation. Specifically, we used functional magnetic resonance imaging to record changes in brain activity while subjects interpreted the motion of simple geometric forms (circles, rectangles, triangles) designed to elicit either social or mechanical concepts.

Behavioural studies, beginning with the seminal work of Heider and Simmel (1944), have documented that higher-order cognitive concepts such as causality and agency can be elicited from observing the motion of simple geometric stimuli (for review, see Scholl & Tremoulet, 2000). An interesting and important aspect of these demonstrations is that the moving shapes can elicit the concept of living beings (agents performing actions) who, in turn, are perceived as having goals and possessing intentional states such as beliefs and desires. If this is so, then it would be expected that the neural substrate associated with understanding the social world would include the same substrate that becomes active when perceiving animate objects, in tandem with other regions. Indeed, using positron emission tomography (PET), Castelli and colleagues (Castelli, Happé, Frith, & Frith, 2000) reported evidence consistent with this hypothesis. Specifically, viewing vignettes modelled after Heider and Simmel’s original investigation, and designed to evoke theory of mind states (ToM; intention to deceive, surprise, etc.), elicited activity in several regions, including the fusiform gyrus and STS, relative to a random motion condition. The same findings held, although to a weaker extent, when the ToM vignettes were contrasted to animations depicting only simple, goal-directed activity (dancing, chasing, etc.; see also, Castelli, Frith, Happé, & Frith, 2002). One limitation of this study was that both of the meaningful conditions (ToM and simple, goal-directed animations) concerned the same conceptual domain (human interaction). Greater activity was elicited for the more complex or higher-level concepts, relative to the simpler ones. Thus, it is possible that the activations reported by Castelli and colleagues reflected only general, problem-solving processes involved in interpreting moving visual forms, rather than being specific to ToM or social interaction.

In the current study we designed vignettes to elicit two different types of conceptual representations: social interaction and mechanical action. Further, both vignette types were preceded by the same, ambiguous cue (“What is it?” cf. Castelli et al., 2000). Based on the above discussion, we predicted that animations interpreted as social would engage regions previously found to be associated with animate objects (humans and animals), whereas mechanical interpretations would be associated with sites previously linked to perceiving and knowing about tools. Specifically, within posterior regions, vignettes interpreted as social would be associated with bilateral activity in the lateral fusiform gyrus and STS, relative to vignettes interpreted as mechanical. In contrast, vignettes interpreted as mechanical would show enhanced bilateral activity in the medial fusiform, and the left middle temporal gyri. In addition, based on investigations of social cognition (see Adolphs, 2001, for review), we also expected to find activity in the amygdala, medial prefrontal cortex, and related areas for the social vignettes.

METHODS

Subjects

Twelve strongly right-handed individuals participated in the fMRI study (six female, mean age = 27.5 years, range 23–34, mean verbal intelligence quotient (VIQ) as estimated by the National Adult Reading Test = 121, range 112–128). Informed consent was obtained in writing under an approved NIMH protocol. Prior to the imaging study, responses to the vignettes were collected from a different group of subjects (N = 12, seven female). These subjects were instructed to generate a label describing the action depicted in each vignette. These labels and their associated response times, measured from the onset of the animation, were collected.

Stimuli and design

The stimuli consisted of animations created using ElectricImage software and converted to Quicktime for subject presentation. An initial set of 24 vignettes, each lasting 21 s, was constructed using simple geometric shapes (circles, squares, triangles, etc.) to depict either social or mechanical action (12 social, 12 mechanical). Based on pilot testing to determine consistency of interpretation, this set of meaningful animations was reduced to 16 vignettes used in the fMRI experiment (8 of each type). Human activities depicted in the social vignettes included interactions set in the context of a baseball game, dancing, fishing, sharing, scaring, playing on a seesaw, playing on a slide, and swimming. Mechanical depictions included actions designed to represent the movement of inanimate objects in the context of billiards, bowling, pinball, a cannon, a crane, a steam shovel, a conveyor belt, and a paper shredder. In addition, two control conditions were created in which the same stimuli used in the meaningful vignettes were presented either in random motion (four blocks; two each using objects from the social or the mechanical vignettes), or as static images. For the still condition, a different static configuration of the geometric forms was presented every 4.2 s to maintain subjects’ attention.

During each of eight fMRI runs, subjects viewed 10 stimulus blocks of 30 s. Each block began with a cue screen (3 s), followed by either a motion or still condition (21 s), and ended with a response screen (6 s). Subjects were cued with the written question “What is it?” prior to each of the meaningful vignettes, and with “Stare” prior to the random motion and still conditions. The use of the “Stare” cue was necessary because behavioural pilot testing indicated that when the random motion animations were preceded by “What is it?,” subjects commonly applied meaningful interpretations to them, and often chose a meaningful verbal label to describe the action even when the response choices included “nonsense.” Thus subjects were cued as to which animations were meaningless so as to prevent them from engaging in the interpretive stance that was the focus of this study.

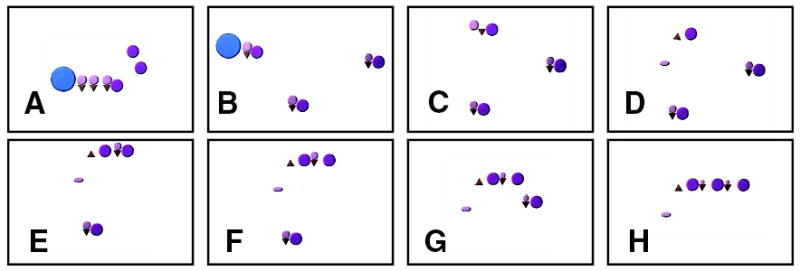

The response screens for the meaningful vignettes contained four written choices arranged in the same spatial configuration as the buttons on the response key held in the subject’s left hand. Subjects were instructed to determine what action was being depicted and indicate their choice when the response screen appeared. The four choices included one likely and two less likely interpretations, along with the correct answer. For example, for the vignette illustrated in Figure 1, the choices were “playing tag,” “playing volley ball,” “picking apples,” and “sharing ice cream.” For the random motion and still conditions subjects were instructed to press a single button as indicated by the spatial location of the word “press” on the response screen. The location was randomly varied for each presentation of this condition. Prior to scanning, subjects performed a practice run with sample animations that were not included in the experiment.

Figure 1.

Social vignette. Sample frames from a social vignette that elicited the concept of sharing. When viewing this animation, subjects interpreted the small purple circles as children receiving ice cream cones from a parent or adult figure (large blue circle) (panels A and B). In C and D, the first child drops her ice cream. In E and F, another child shares her ice cream with the first child. In G and H, the third child joins the other two and shares her ice cream, as well.

Four of the 10 blocks consisted of meaningful vignettes (two social, two mechanical). The remaining blocks consisted of the random motion and still control conditions. Each of the eight runs began and ended with a still condition. Motion conditions were presented in pseudorandom order, with alternating blocks of the meaningful and random motion control conditions. All vignettes were presented during the first 4 runs and repeated during runs 5–8 in order to ensure adequate statistical power.
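The block timing described above can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the original analysis: it assumes that blocks ran back to back with no discarded lead-in volumes, and it borrows the 3 s repetition time from the imaging parameters. All variable names are illustrative.

```python
# Timing constants taken from the Methods text.
TR_S = 3                       # repetition time (s), from "Imaging parameters"
BLOCK_PHASES = [("cue", 3), ("stimulus", 21), ("response", 6)]  # durations in s
BLOCKS_PER_RUN = 10
RUNS = 8
MEANINGFUL_BLOCKS_PER_RUN = 4  # two social + two mechanical

# Assumption: blocks are contiguous and every volume is retained.
block_s = sum(duration for _, duration in BLOCK_PHASES)
volumes_per_phase = {name: duration // TR_S for name, duration in BLOCK_PHASES}
volumes_per_block = block_s // TR_S
volumes_per_run = BLOCKS_PER_RUN * volumes_per_block
run_minutes = BLOCKS_PER_RUN * block_s / 60

# Runs 1-4 show every vignette once; runs 5-8 repeat them.
vignettes_shown_in_first_half = MEANINGFUL_BLOCKS_PER_RUN * (RUNS // 2)

print(block_s, volumes_per_phase, volumes_per_run, run_minutes)
print(vignettes_shown_in_first_half)
```

Under these assumptions each 30 s block spans 10 volumes (1 cue, 7 stimulus, 2 response), each run lasts 5 min (100 volumes), and the four meaningful blocks in each of the first four runs account for all 16 vignettes.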

Imaging parameters

High-resolution spoiled gradient recall (SPGR) anatomical images (124 sagittal slices 1.2 mm thick, field of view [FOV] = 24 cm, acquisition matrix = 256 × 256) and functional data (gradient-echo echo-planar imaging sequence, repetition time [TR] = 3 s, echo time [TE] = 40 ms, flip angle = 90°, 22 contiguous 5-mm axial slices, FOV = 24 cm, acquisition matrix = 64 × 64) were acquired on a 1.5 Tesla GE scanner.
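The nominal voxel dimensions implied by these parameters follow from dividing the field of view by the acquisition matrix. The sketch below assumes a square field of view and a square matrix, which the text implies but does not state outright; the function name is illustrative.

```python
def voxel_size_mm(fov_cm, matrix, slice_thickness_mm):
    """Return (in-plane x, in-plane y, through-plane) voxel size in mm."""
    # cm -> mm; assumes a square FOV sampled by a square matrix.
    in_plane = fov_cm * 10.0 / matrix
    return (in_plane, in_plane, slice_thickness_mm)

# Functional EPI: FOV = 24 cm, 64 x 64 matrix, 5-mm slices.
functional = voxel_size_mm(fov_cm=24, matrix=64, slice_thickness_mm=5.0)
# Anatomical SPGR: FOV = 24 cm, 256 x 256 matrix, 1.2-mm slices.
anatomical = voxel_size_mm(fov_cm=24, matrix=256, slice_thickness_mm=1.2)
# 22 contiguous axial slices of 5 mm each.
functional_coverage_mm = 22 * 5.0

print(functional, anatomical, functional_coverage_mm)
```

On these assumptions the functional voxels measure 3.75 × 3.75 × 5 mm, with 110 mm of coverage along the slice axis, and the anatomical voxels measure roughly 0.94 × 0.94 × 1.2 mm.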

Image analysis

Functional images were motion corrected and a 1.2-voxel smoothing filter was applied to each scan. Regressors of interest for each of five stimulus types were convolved with a Gaussian estimate of the haemodynamic response and multiple regression was performed on each voxel’s time series using AFNI v.2.40e (Cox, 1996). Individual subject Z-maps were normalised to the standardised space of Talairach and Tournoux (1988). Functional regions of interest (ROI) were determined in an unbiased manner by the group activation maps thresholded at Z > 4.90 (p < 10⁻⁶) for the contrast of meaningful versus random motion conditions, and Z > 3.09 (p < .001) for the contrast of social versus mechanical vignettes. Regions discussed in this report include all those identified by this analysis except for regions in lateral prefrontal and medial parietal cortices, for which we had no a priori hypotheses. These ROIs served as a guide to extract MR time series and local maxima from each region in each subject. Time series data were obtained from voxels that exceeded a threshold of Z > 3.09 (p < .001) for meaningful versus random motion conditions, and Z > 1.96 (p < .05) for the contrast of social versus mechanical vignettes. The first three and last two time-points from each block (which represent the cue plus two TRs, and the response screen, respectively) were dropped from the time-series analysis. Beta weights resulting from a multiple regression analysis derived from the individual subject time series were submitted to a mixed effects analysis of variance, treating subjects as a random factor, to test for differences between conditions (social, mechanical, social control, mechanical control, stills).
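Two pieces of arithmetic in this section can be made concrete: the tail probabilities that correspond to the Z thresholds, and the time-points that survive trimming. The sketch below is illustrative only and is not the AFNI procedure. The text does not state whether the quoted p values are one- or two-tailed, so both are computed, and the function names are hypothetical.

```python
import math

def upper_tail_p(z):
    """One-tailed probability P(Z > z) for a standard normal variate."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def two_tailed_p(z):
    """Two-tailed probability P(|Z| > z) for a standard normal variate."""
    return math.erfc(z / math.sqrt(2.0))

def retained_timepoints(volumes_per_block, drop_first, drop_last):
    """0-based indices of the volumes kept after trimming one block."""
    return list(range(drop_first, volumes_per_block - drop_last))

# Thresholds quoted in the text.
for z in (4.90, 3.09, 1.96):
    print(f"Z > {z:.2f}: one-tailed p = {upper_tail_p(z):.2e}, "
          f"two-tailed p = {two_tailed_p(z):.2e}")

# A 30 s block at TR = 3 s gives 10 volumes; drop the first three and last two.
kept = retained_timepoints(10, drop_first=3, drop_last=2)
print(kept)
```

With 10 volumes per block, trimming leaves five volumes (indices 3 to 7), which cover 9 to 24 s after block onset, that is, the final 15 s of each 21 s stimulus period.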

RESULTS

Behavioural data

Subjects who viewed the vignettes outside the magnet were slower to spontaneously generate correct verbal labels for the social compared to the mechanical vignettes (means = 9.6 s and 6.6 s, respectively, p < .001). They were also slightly less accurate for the social vignettes, although statistical comparison of the accuracy data was not valid because of ceiling effects (the majority of the animations, four social and six mechanical, were correctly labeled spontaneously by all 12 subjects). During scanning, subjects’ response choices were highly accurate (> 97% for both social and mechanical vignettes).

Imaging data

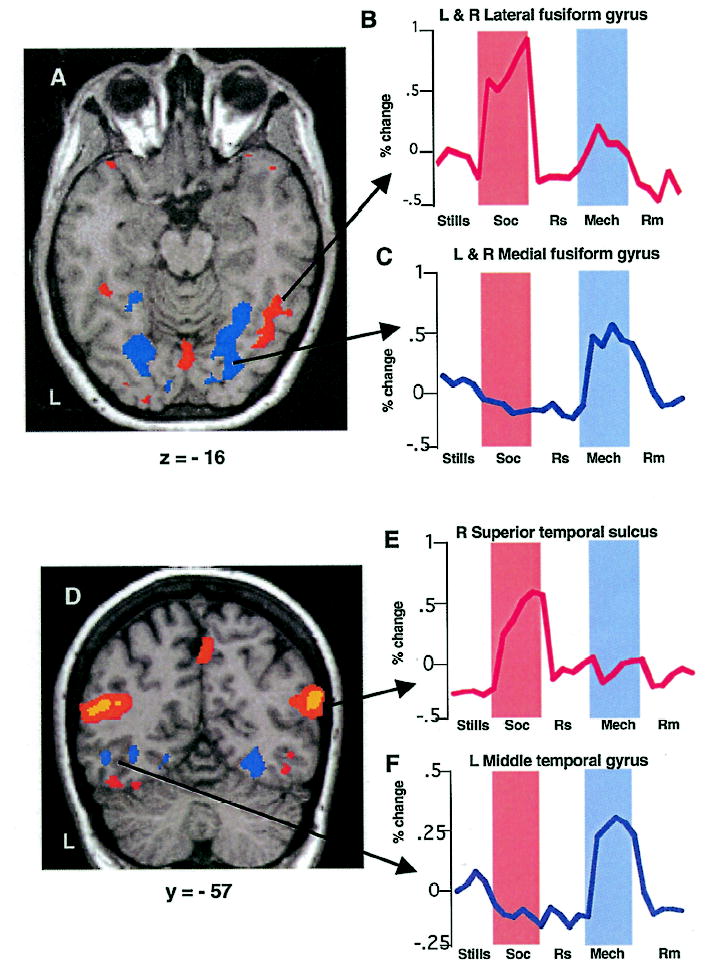

Direct comparison of the social and mechanical vignettes revealed different and highly selective patterns of activity in a number of regions (Table 1). These differences were confirmed by the mixed-effects ANOVA of the time-series data, which also revealed differences between other conditions. In ventral temporal cortex, vignettes interpreted as social elicited greater bilateral activity in the lateral portion of the fusiform gyrus, relative to mechanical vignettes, F = 6.37, p < .05 (Figure 2A). Moreover, in this region, activity associated with interpreting the mechanical animations was no greater than when subjects viewed these same objects, but were aware that their motion was random and meaningless, F = 1.79, p > .10 (Figure 2B). In contrast, vignettes interpreted as mechanical showed greater bilateral activity in the medial portion of the fusiform gyrus, relative to social animations, F = 19.58, p < .001 (Figure 2A). Here, activity associated with interpreting social animations was no greater than when viewing the random motion control condition composed of the same elements, F < 1.0 (Figure 2C). Both lateral and medial fusiform activations were bilateral, and, for the lateral fusiform, larger in the right than in the left hemisphere, t(11) = 3.23, p < .01.

Table 1.

Local maxima and Z values for each region based on the group data

| Region | Z value | x | y | z |
|---|---|---|---|---|
| Social > Mechanical | | | | |
| L fusiform gyrus (lateral) | 3.95 | −44 | −57 | −23 |
| | 3.57 | −40 | −38 | −20 |
| R fusiform gyrus (lateral) | 4.49 | 41 | −52 | −15 |
| | 3.37 | 40 | −33 | −21 |
| R superior temporal sulcus | >7.0 | 56 | −58 | 19 |
| L superior temporal sulcus | >7.0 | −49 | −57 | 17 |
| R anterior STS | >7.0 | 53 | −20 | −4 |
| L anterior STS | 5.14 | −56 | −21 | 0 |
| R amygdala | 5.14 | 19 | −2 | −10 |
| R ventromedial prefrontal cortex | 3.76 | 3 | 52 | −11 |
| | 5.12 | 1 | 43 | −13 |
| Mechanical > Social | | | | |
| L fusiform gyrus (medial) | 4.22 | −25 | −44 | −18 |
| R fusiform gyrus (medial) | 6.95 | 27 | −57 | −16 |
| L middle temporal gyrus | 3.05 | −49 | −56 | −9 |
| | 3.16 | −34 | −58 | −7 |
| Social = Mechanical | | | | |
| Meaningful > Random | | | | |
| L polar frontal cortex | >7.0 | −28 | 40 | 37 |
| R polar frontal cortex | >7.0 | 35 | 50 | 27 |
| R anterior temporal cortex | >7.0 | 42 | −10 | 26 |

Figure 2.

Activations in posterior occipitotemporal cortex. Group averaged activity superimposed on a brain slice from an individual subject. Shown in colour are regions that responded more to meaningful than to random motion conditions. Regions in red were more active for social than mechanical vignettes, regions in blue were more active for mechanical than social vignettes. (A) Axial view at the Talairach and Tournoux coordinate of z = −16 showing differential activity in ventral occipitotemporal regions. Haemodynamic responses of voxels within lateral (B) and medial (C) fusiform gyrus that also showed enhanced activity to moving versus still geometric forms. These time series, as well as all others included in the figures, were averaged across subjects. (D) Coronal section at y = −57 showing activated regions in lateral temporal cortex. Haemodynamic response from voxels in the right STS (E) and left middle temporal gyrus/inferior temporal sulcus (F). Soc = social vignettes, Mech = mechanical vignettes, Rs and Rm = random control conditions for social and mechanical vignettes, respectively.

Vignettes interpreted as social or mechanical also produced differential activity along the lateral surface of posterior temporal cortex (Table 1). Relative to the mechanical vignettes, activity associated with interpreting the social vignettes was centred on the posterior region of STS, bilaterally: F = 113.49, p < .0001, and F = 64.35, p < .001 in the left and right STS, respectively (Figure 2D). The activity was bilateral but larger in the right than in the left hemisphere, t(11) = 7.24, p < .0001. Activity was particularly selective in right STS, where the haemodynamic responses associated with the mechanical animations and their random motion control condition did not differ, F = 1.52, p > .10 (Figure 2E). In contrast, relative to the social animations, mechanical animations elicited greater activity in the left middle temporal gyrus, extending into the inferior temporal sulcus, F = 24.89, p < .0001. In this region, interpreting the social animations produced no more activity than their random motion control, F < 1.0 (Figure 2F).

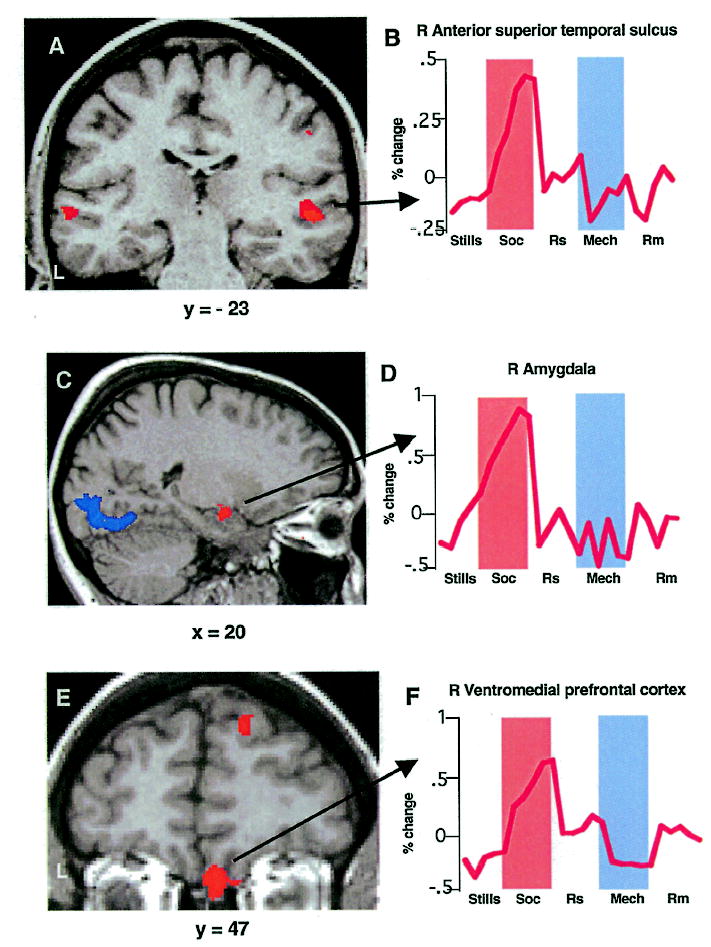

Viewing and interpreting the social vignettes were also associated with activity in several other regions. These included a more anterior region of STS (bilaterally), the right amygdala, and right ventromedial prefrontal cortex (Table 1). Each of these areas showed an enhanced response when interpreting social relative to mechanical animations: left anterior STS, F = 15.16, p < .001; right anterior STS, F = 48.85, p < .0001; right amygdala, F = 14.83, p < .001; right ventromedial prefrontal cortex, F = 8.71, p < .01. In all regions, activity for the mechanical vignettes did not exceed that for their control condition (Figure 3).

Figure 3.

Activations in right anterior STS, amygdala, and ventral prefrontal cortices. As in Figure 2: Coronal section (A) at y = −23 and haemodynamic response in region of anterior STS (B) responding more to social than mechanical vignettes. Sagittal section (C) at x = 20 and haemodynamic response (D) of right amygdala region responding more to social than mechanical vignettes. Coronal section (E) at y = 47 and haemodynamic response (F) in right ventromedial prefrontal region responding more to social than mechanical vignettes.

Finally, two other regions were identified where activity was strongly enhanced when subjects interpreted the meaningful vignettes, but failed to show differential activity for one type of meaningful vignette versus the other. These activations were located bilaterally in polar prefrontal cortex and in the anterior region of the right temporal lobe (Table 1).

DISCUSSION

As predicted, social and mechanical vignettes showed differential activity in both the ventral and lateral regions of posterior temporal cortex. In addition, these regions showed highly specific patterns of response. In regions showing enhanced activity to social versus mechanical vignettes, the activity associated with interpreting the mechanical vignettes did not differ from the activity associated with viewing objects in random motion. Similarly, in mechanical-responsive regions, activity associated with interpreting the social vignettes did not differ from its random motion control condition.

Moreover, the regions showing differential activity were highly similar to those previously linked to viewing faces, human figures, and naming animals (lateral region of the fusiform gyrus, STS) and those linked to naming tools (medial fusiform gyrus, middle temporal gyrus). There is considerable evidence from studies of human and nonhuman primates that ventral and lateral occipitotemporal regions subserve different functions in object identification. Whereas lateral temporal cortex is primarily involved in processing object motion, ventral temporal cortex is primarily concerned with form-related features like shape and colour (for review, see Desimone & Ungerleider, 1989). However, in the present study the objects used for both the social and mechanical vignettes differed in neither shape nor colour. Thus, the central determinant of the location of these activations must have been the semantic interpretation given to these moving objects, not their physical characteristics (shape and colour).

The social, compared to the mechanical, vignettes also elicited activity in a more anterior region of STS, the amygdala, and ventromedial prefrontal cortex. As with regions identified in ventral and lateral aspects of posterior cortex, the responses were highly selective. Converging evidence from animal, human neuropsychological, and functional imaging studies of normal individuals has established that these regions form a network involved in perceiving and regulating emotion, social communication, and social decision making. Anterior STS has been implicated in the perception of complex mouth and hand movements and gestures (for review, see Allison, Puce, & McCarthy, 2000). Amygdala activity has been repeatedly shown to be modulated by attributes of human faces related to expression, especially emotions such as fear (for review, see Adolphs, 2002), but also by other emotional expressions and attributions (e.g., happiness, Breiter et al., 1996; trustworthiness, Winston, Strange, O’Doherty, & Dolan, 2002). It has also been shown that damage to the amygdala can impair identification of facial affect (e.g., Adolphs, Tranel, Damasio, & Damasio, 1994), and that the amygdala may be especially important for recognising “social” emotions such as guilt and arrogance relative to more basic emotions such as happiness and sadness (Adolphs, Baron-Cohen, & Tranel, 2002; see also Adolphs, Tranel, & Damasio, 1998). Affectively loaded verbal material can also activate the amygdala, highlighting that the response of this region is not limited to faces or other nonverbal, pictorial stimuli (Isenberg et al., 1999). In the current study, the activity was located in the dorsal portion of the amygdala, consistent with previous studies using positively valenced stimuli (for review, see Davis & Whalen, 2001). 
Activation of the dorsal portion of the amygdala in response to the social vignettes is also consistent with the proposal that this region plays a role in resolving ambiguity for biologically relevant stimuli (Whalen, 1998). Ventromedial prefrontal cortex, a region strongly connected with the amygdala (e.g., Stefanacci & Amaral, 2002), was also selectively active during interpretation of social, relative to mechanical, vignettes. Damage to ventromedial prefrontal cortex has been associated with impaired social functioning and deficits in properly interpreting stimulus reward value as a guide to behavioural control (Bechara, Damasio, Tranel, & Damasio, 1997).

The present findings extend these previous reports by showing that the amygdala, ventromedial prefrontal cortex, and the anterior region of STS can be activated by stimuli that, in and of themselves, have neither affective valence nor social significance.¹ They are also highly consistent with the previous study by Castelli and colleagues (2000). In their study, the ToM animations elicited bilateral activity in the posterior fusiform gyrus, STS, and the amygdala region, stronger in the right than in the left hemisphere. Medial prefrontal activity was also observed, although in a more dorsal region compared to the present investigation. The close correspondence between our findings and those of Castelli and colleagues, using a different set of animations, instils confidence that these regions are part of a network associated with, if not directly mediating, social knowledge. Further, these studies suggest that activity in this network is associated with conceptual representations, not simply the physical characteristics of the stimuli evoking the concept.

The fact that the moving stimuli used in the social and mechanical vignettes did not differ in either their physical form or affective valence suggests that the activations discussed here are likely to result from top-down activity originating elsewhere. Candidate regions identified in this study that were equally active for both social and mechanical animations included the anterior region of the right temporal lobe and bilateral regions of polar prefrontal cortex. Previous studies of brain-injured patients and fMRI studies of normal individuals have implicated these regions in the representation and retrieval of nonverbal information (Simons, Graham, Galton, Patterson, & Hodges, 2001) as well as in reasoning and problem solving (Koechlin, Basso, Pietrini, Panzer, & Grafman, 1999).

These findings provide compelling evidence that reasoning about social events requires activation of a distributed system that includes regions involved in perceiving and knowing about specific objects (animate beings) and emotional states. Importantly, this system is separate and distinct from at least one other cognitive domain—mechanical knowledge. Our findings suggest that the major components of this social processing system are the ventral (lateral fusiform) and lateral (STS) regions of posterior temporal cortex, the amygdala, and the ventromedial region of prefrontal cortex. These findings are broadly consistent with the regions identified by studies of nonhuman primates as candidate areas for a network mediating social understanding (Brothers, 1990). They are also highly consistent with a growing body of evidence on the neural substrate of social cognition in humans (e.g., Adolphs, 2001).

In one view, this network may, in total or in part, form a “core system” for understanding social interaction (Brothers, 1990; Frith & Frith, 1999, 2001) and animate concepts (Caramazza & Shelton, 1998). This core system may have evolved as a direct result of evolutionary pressure for a system specialised for perceiving and knowing about animacy, causality, deception, and the like. Alternatively, this system may have developed from a more general learning capacity interacting with predetermined mechanisms for perceiving and learning about object features (how they look, how they move), and their affective valence (Martin, 1998).

Thus, within this view, higher-order concepts emerge from the interaction of more elemental processing capacities (U. Frith & Frith, 2001; Martin, 1998), rather than as a manifestation of innately determined conceptual domains (Caramazza & Shelton, 1998). For example, in the context of the current study the concept “animate” may be represented by information about human form (stored in the lateral portion of the fusiform gyrus), biological motion (stored in STS), and affect (mediated by the amygdala and ventromedial prefrontal cortex). Combined activity in these regions (and perhaps others) is the neural correlate of the concept “animate”. Similarly, the concept “mechanical” may be represented, at least in part, by information about the form of machine-like objects (stored in the medial fusiform gyrus), and information about their characteristic motion (stored in the left middle temporal gyrus).²

It is possible, however, that these activations are simply epiphenomenal, rather than causal. For example, they may have occurred as a by-product of visual imagery processes associated with interpreting the moving geometric forms. In fact, the lateral fusiform gyrus can be activated by asking subjects to imagine human faces (Ishai, Ungerleider, & Haxby, 2000; O’Craven & Kanwisher, 2000). However, studies of patients suggest that normal function of at least some of these regions may be necessary for understanding of social events. For example, impaired performance on ToM tasks has been demonstrated in patients with damage to the amygdala (Fine, Lumsden, & Blair, 2001; Stone, Baron-Cohen, Calder, Keane, & Young, 2003), and to right ventromedial prefrontal cortex (Stuss, Gallup, & Alexander, 2001). As with the neuroimaging data, the patient data suggest a stronger role for the right than the left hemisphere in mediating understanding of social concepts (Happé, Brownell, & Winner, 1999). It has also been shown that recognition and retrieval of information about tools can be disrupted by a lesion of the posterior middle temporal gyrus (Tranel, Damasio, & Damasio, 1997). Whether these patients also have a more general impairment in understanding mechanical concepts requires further investigation.

Finally, it must be noted that the true nature of the processing characteristics and type of information stored in the different brain regions discussed in this report remains to be determined. For example, the existing evidence strongly indicates that ventral and lateral regions of posterior cortex subserve processing and storage of object form and motion information, respectively. The data also strongly suggest that neither of these regions is homogeneous, but rather that each has an intrinsic organisation. Moreover, it appears that each region may be organised by conceptual category. It remains to be determined whether this category-like organisation can be further reduced to an organisation by properties of form (for ventral cortex) and motion (for lateral cortex) shared by category members, or whether this organisation is dictated by innately determined conceptual domains (Caramazza & Shelton, 1998). A model incorporating both types of organisational schemes (by properties and domains) would be an attractive alternative.

CONCLUSION

Interpreting the movement of simple geometric shapes was associated with distinct neural systems depending on whether they were interpreted as depicting social or mechanical events. The active regions included those previously associated with animate objects (for the social vignettes) and tools (for the mechanical vignettes). Understanding social interactions was also associated with activity in regions linked to the perception and regulation of affect. It was suggested that these findings reveal putative “core systems” for social and mechanical understanding that are divisible into constituent parts or elements with distinct processing and storage capabilities. These elements may be viewed as semantic primitives that, in turn, form the foundation or scaffolding for realising a variety of complex mental constructs.

Acknowledgments

We thank Susan Johnson of the Dartmouth College Media Laboratory for creating the animations, and Jay Schulkin and Cheri Wiggs for valuable discussions.

Footnotes

1. Although the stimuli were neutral, several of the social vignettes were perceived as having an affective component and, in particular, as being humorous. Thus, the extent to which right amygdala and ventromedial prefrontal activity were related to this affective response was unclear. To examine this issue we compared activity for the four social vignettes judged to be most affectively loaded relative to the remaining four social animations (as determined by five independent raters). This analysis revealed that activity in ventromedial prefrontal cortex, but not in the amygdala, was influenced by perceived affect. Interestingly, the region identified by this analysis (1, 53, −12) was nearly identical to the region previously shown to be correlated with humour ratings of aurally presented jokes (3, 48, −12; Goel & Dolan, 2001). Whether ventromedial prefrontal cortex is activated by any humorous or rewarding stimulus, or only those of a social nature, remains to be determined.

2. Naming tools also activates the left intraparietal sulcus and ventral premotor cortex (e.g., Chao & Martin, 2000). These regions are associated with grasping and object manipulation, suggesting that information about motor movements associated with the use of objects is stored here (Martin, 2001). In the current study, these areas were not differentially activated by the mechanical animations, nor were they expected to be. The mechanical vignettes did not depict small manipulable tool-like objects. Rather they evoked more machine-like actions (e.g., conveyor belt, paper shredder, cannon).

References

- Adolphs R. The neurobiology of social cognition. Current Opinion in Neurobiology. 2001;11:231–239. doi: 10.1016/s0959-4388(00)00202-6.

- Adolphs R. Recognizing emotion from facial expressions: Psychological and neurological mechanisms. Behavioral and Cognitive Neuroscience Reviews. 2002;1:21–62. doi: 10.1177/1534582302001001003.

- Adolphs R, Baron-Cohen S, Tranel D. Impaired recognition of social emotions following amygdala damage. Journal of Cognitive Neuroscience. 2002;14:1264–1274. doi: 10.1162/089892902760807258.

- Adolphs R, Tranel D, Damasio AR. The human amygdala in social judgment. Nature. 1998;393:470–474. doi: 10.1038/30982.

- Adolphs R, Tranel D, Damasio H, Damasio AR. Impaired recognition of emotion in facial expressions following damage to the human amygdala. Nature. 1994;372:669–672. doi: 10.1038/372669a0.

- Allison T, Puce A, McCarthy G. Social perception from visual cues: Role of the STS region. Trends in Cognitive Sciences. 2000;4:267–278. doi: 10.1016/s1364-6613(00)01501-1.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 2002;34:149–159. doi: 10.1016/s0896-6273(02)00642-6.

- Bechara A, Damasio H, Tranel D, Damasio AR. Deciding advantageously before knowing the advantageous strategy. Science. 1997;275:1293–1295. doi: 10.1126/science.275.5304.1293.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17:875–887. doi: 10.1016/s0896-6273(00)80219-6.

- Brothers L. The social brain: A project for integrating primate behavior and neurophysiology in a new domain. Concepts in Neuroscience. 1990;1:27–51.

- Caramazza A, Shelton JR. Domain-specific knowledge systems in the brain: The animate-inanimate distinction. Journal of Cognitive Neuroscience. 1998;10:1–34. doi: 10.1162/089892998563752.

- Castelli F, Frith C, Happé F, Frith U. Autism, Asperger syndrome and brain mechanisms for the attribution of mental states to animated shapes. Brain. 2002;125:1839–1849. doi: 10.1093/brain/awf189.

- Castelli F, Happé F, Frith U, Frith C. Movement and mind: A functional imaging study of perception and interpretation of complex intentional movement patterns. NeuroImage. 2000;12:314–325. doi: 10.1006/nimg.2000.0612.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. NeuroImage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nature Neuroscience. 1999;2:913–919. doi: 10.1038/13217.

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Davis M, Whalen PJ. The amygdala: Vigilance and emotion. Molecular Psychiatry. 2001;6:13–34. doi: 10.1038/sj.mp.4000812.

- Desimone, R., & Ungerleider, L. G. (1989). Neural mechanisms of visual processing in monkeys. In F. Boller & J. Grafman (Eds.), Handbook of neuropsychology, Vol. 2 (pp. 267–299). Amsterdam: Elsevier.

- Fine C, Lumsden J, Blair RJR. Dissociation between “theory of mind” and executive functions in a patient with early left amygdala damage. Brain. 2001;124:287–298. doi: 10.1093/brain/124.2.287.

- Frith CD, Frith U. Interacting minds: A biological basis. Science. 1999;286:1692–1695. doi: 10.1126/science.286.5445.1692.

- Frith U, Frith C. The biological basis of social interaction. Current Directions in Psychological Science. 2001;10:151–155.

- Goel V, Dolan RJ. The functional neuroanatomy of humor: Segregating cognitive and affective components. Nature Neuroscience. 2001;4:237–238. doi: 10.1038/85076.

- Happé F, Brownell H, Winner E. Acquired “theory of mind” impairments following stroke. Cognition. 1999;70:211–240. doi: 10.1016/s0010-0277(99)00005-0.

- Haxby JV, Ungerleider LG, Clark VP, Shouten JL, Hoffman EA, Martin A. The effect of face inversion on activity in human neural systems for face and object perception. Neuron. 1999;22:189–199. doi: 10.1016/s0896-6273(00)80690-x.

- Heider F, Simmel M. An experimental study of apparent behavior. American Journal of Psychology. 1944;57:243–259.

- Isenberg N, Silbersweig D, Engelien A, Emmerich S, Malavade K, Beattie B, Leon AC. Linguistic threat activates human amygdala. Proceedings of the National Academy of Sciences, USA. 1999;96:10456–10459. doi: 10.1073/pnas.96.18.10456.

- Ishai A, Ungerleider LG, Haxby JV. Distributed neural systems for the generation of visual images. Neuron. 2000;28:979–990. doi: 10.1016/s0896-6273(00)00168-9.

- Ishai A, Ungerleider LG, Martin A, Shouten JL, Haxby JV. Distributed representation of objects in the ventral visual pathway. Proceedings of the National Academy of Sciences, USA. 1999;96:9379–9384. doi: 10.1073/pnas.96.16.9379.

- Josephs JE. Functional neuroimaging studies of category specificity in object recognition: A critical review and meta-analysis. Cognitive, Affective, and Behavioral Neuroscience. 2001;1:119–136. doi: 10.3758/cabn.1.2.119.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for the perception of faces. Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Koechlin E, Basso G, Pietrini P, Panzer S, Grafman J. The role of the anterior prefrontal cortex in human cognition. Nature. 1999;399:148–151. doi: 10.1038/20178.

- Martin, A. (1998). The organization of semantic knowledge and the origin of words in the brain. In N. G. Jablonski & L. C. Aiello (Eds.), The origins and diversification of language (pp. 69–88). San Francisco, CA: California Academy of Sciences.

- Martin, A. (2001). Functional neuroimaging of semantic memory. In R. Cabeza & A. Kingstone (Eds.), Handbook of functional neuroimaging of cognition (pp. 153–186). Cambridge, MA: MIT Press.

- Martin A, Chao LL. Semantic memory and the brain: Structure and processes. Current Opinion in Neurobiology. 2001;11:194–201. doi: 10.1016/s0959-4388(00)00196-3.

- Moore CJ, Price CJ. A functional neuroimaging study of the variables that generate category-specific object processing differences. Brain. 1999;122:943–962. doi: 10.1093/brain/122.5.943.

- O’Craven KM, Kanwisher N. Mental imagery of faces and places activates corresponding stimulus-specific brain regions. Journal of Cognitive Neuroscience. 2000;12:1013–1023. doi: 10.1162/08989290051137549.

- Oram MW, Perrett DI. Responses of anterior superior temporal polysensory (STPa) neurons to “biological motion” stimuli. Journal of Cognitive Neuroscience. 1994;6:99–116. doi: 10.1162/jocn.1994.6.2.99.

- Puce A, Allison T, Bentin S, Gore JC, McCarthy G. Temporal cortex activation in humans viewing eye and mouth movements. Journal of Neuroscience. 1998;18:2188–2199. doi: 10.1523/JNEUROSCI.18-06-02188.1998.

- Scholl BJ, Tremoulet PD. Perceptual causality and animacy. Trends in Cognitive Sciences. 2000;4:299–309. doi: 10.1016/s1364-6613(00)01506-0.

- Simons JS, Graham KS, Galton CJ, Patterson K, Hodges JR. Semantic knowledge and episodic memory for faces in semantic dementia. Neuropsychology. 2001;15:101–114.

- Stefanacci L, Amaral DG. Some observations on cortical inputs to the macaque monkey amygdala: An anterograde tracing study. Journal of Comparative Neurology. 2002;451:301–323. doi: 10.1002/cne.10339.

- Stone VE, Baron-Cohen S, Calder A, Keane J, Young A. Acquired theory of mind impairments in individuals with bilateral amygdala lesions. Neuropsychologia. 2003;41:209–220. doi: 10.1016/s0028-3932(02)00151-3.

- Stuss DT, Gallup GG, Alexander MP. The frontal lobes are necessary for “theory of mind”. Brain. 2001;124:279–286. doi: 10.1093/brain/124.2.279.

- Talairach, J., & Tournoux, P. (1988). Co-planar stereotaxic atlas of the human brain. New York: Thieme.

- Thompson-Schill SL. Neuroimaging studies of semantic memory: Inferring “how” from “where”. Neuropsychologia. 2002;41:280–292. doi: 10.1016/s0028-3932(02)00161-6.

- Tranel D, Damasio H, Damasio AR. A neural basis for the retrieval of conceptual knowledge. Neuropsychologia. 1997;35:1319–1328. doi: 10.1016/s0028-3932(97)00085-7.

- Whalen PJ. Fear, vigilance, and ambiguity: Initial neuroimaging of the human amygdala. Current Directions in Psychological Science. 1998;7:177–188.

- Winston JS, Strange BA, O’Doherty J, Dolan RJ. Automatic and intentional brain responses during evaluation of trustworthiness of faces. Nature Neuroscience. 2002;5:277–283. doi: 10.1038/nn816.