Abstract

There is scant published experience with implementing complex, multistep computerized practice guidelines for the long-term management of chronic diseases. We have implemented a system for creating, maintaining, and navigating computer-based clinical algorithms integrated with our electronic medical record. This article describes our progress and reports on lessons learned that might guide future work in this field. We discuss issues and obstacles related to choosing and adapting a guideline for electronic implementation, representing and executing the guideline as a computerized algorithm, and integrating it into the clinical workflow of outpatient care. Although obstacles were encountered at each of these steps, the most difficult were related to workflow integration.

Background

Practice Guidelines

Over the past 20 years, there has been an explosion in the availability of practice guidelines. Currently, the National Guideline Clearinghouse (http://www.guideline.gov) alone has almost 1000 publicly accessible guidelines. There are innumerable unpublished private and institutional guidelines as well. Ironically, even though many guidelines have been developed, most receive little use for a variety of reasons. Some of the barriers to use arise at the time of content development. First, there are gaps and inconsistencies in the medical literature supporting one practice versus another. There are also differences in the biases and perspectives of guideline authors, who may be specialists or generalists, payers or providers, marketers, or public health officials. The result is guidelines of variable quality and conflicting recommendations.1

Once the content has been decided, the next set of barriers involves acceptance of the guideline by both clinicians and patients. Physician disagreement,2,3 the inertia associated with traditional practice behaviors,4 and the lack of incentives (or even disincentives) to change5 can cause a guideline to be ignored. Patient-specific and community-wide factors can also impact adherence; this so-called “patient noncompliance” may be related to lack of patient education, misinformation, or the cost or side effects of proposed treatments.

Even when acceptable to both providers and patients, guideline content must be easily accessible at precisely the right time—while delivering care. If a relevant guideline is not frankly overlooked during a patient’s already short and intervention-laden visit, tracking it down and looking up its recommendations may be too time-consuming. Even if the guideline were accessed later, the opportunity to act has been lost once the patient has left the office.

Automated Guidelines

Notwithstanding the above obstacles, the promise of guidelines, especially automated ones, to reduce practice variability and improve outcomes is great.6 Previous work has shown that computer-generated, patient-specific reminders can positively influence practice.7,8 As computers become standard tools of clinical practice, computer-based guidelines increasingly can be integrated into routine workflow, delivering “just-in-time” information pertinent to the current clinical situation.9 Links to related resources, such as patient handouts and references to the medical literature, can promote patient and physician acceptance, respectively. Furthermore, as electronic medical records (EMRs) become more prevalent and robust, there is more potential to specifically tailor a guideline’s recommendations to individual patients by taking into account their medications, symptoms, and comorbid conditions.

Four years ago, one of the authors (RDZ) described the state of the art for electronic guidelines and tried to anticipate advancements in the field.10 One difficulty noted was the lack of a definition of “computerized.” At a first level, this term signifies access to a digital but still narrative-text version of a printed document. Such access now can be made widely available to an entire practice or institution via an intranet or more globally on the Internet. An example is the National Guideline Clearinghouse, which is conveniently indexed and searchable. However, simply displaying guidelines on a computer monitor does not necessarily increase adherence.11

The next level of automation occurs when the computer is able to make use of the patient’s clinical data, follow its own algorithm internally, and present only the information relevant to the current state. An obstacle to achieving this goal is the ambiguous language with which most text-based guidelines are composed. Eligibility criteria and severity of disease or symptoms are often not explicitly defined. When they are, the definitions may not map to computable data within an EMR. The process of translating ambiguous guideline statements into equivalent ones that use available coded data is not only arduous12 but also carries the risk of distorting the intent and spirit of the original guideline.13

Models and tools for extracting and organizing knowledge, representation models for publishing and sharing guidelines, and computational models for implementing guidelines have been developed to help overcome these problems (Arden,14 GEM,15 Protégé,16 GLIF,17 EON,18 Prodigy19). However, few guidelines have been successfully translated using these systems and implemented in real clinical settings.20 Instead, most working implementations have been relatively simple “if–then” rules triggered off EMR data. The resultant messages can be synchronous and interactive, such as alerts linked to computer-based physician charting21–24 or order entry,25,26 or asynchronous, such as alphanumeric pages,27 phone calls,28,29 electronic or paper mail,30 or printed documents.31,32 The messages are usually reminders or recommendations, but they may also be performance reviews33 or feedback.34 They may be directed at nurses,35 pharmacists, clerical staff, or patients36 in addition to physicians. In general, the beneficial effect of these systems has been on the order of 10–20% absolute improvement in process measures, and most studies have not evaluated patient outcomes.37–40

There is little published experience with automating EMR-integrated complex multistep algorithmic guidelines for the management of chronic diseases over extended periods.20,41,42 At Brigham and Women’s Hospital, where we have an extensive history of implementing single-step guidelines and reminders, we began in 1996 to work on more complicated types of alerts and decision support. This article examines the progress made toward this goal at our institution and attempts to distill lessons that may guide future work in this field.

Setting

The project took place at Brigham and Women’s Hospital (BWH), a 700-bed tertiary care academic medical center in Boston. The BWH environment has several features that can support the implementation of computer-based guidelines.43 Primary care physicians at BWH use an EMR that, in addition to coded laboratory and visit data, contains physician-maintained allergy, medication, and coded problem lists.44 BWH also has an inpatient physician order entry application with built-in drug-dosing calculators and synchronous, interactive alerts and reminders about drug-allergy and drug-drug interactions.45 In addition, an event monitor,46 coupled with an active provider coverage database,47 can automatically notify clinicians asynchronously based on data added to the clinical data repository. Finally, an ambulatory reminders application can compute short messages using if–then rules and print them on the bottom of encounter sheets produced for every scheduled outpatient visit.48 These elements (coded electronic data, event monitoring, synchronous and asynchronous messaging capability, and order entry) form a robust platform on which to implement complex automated practice guidelines.

Design Objectives

There were three major objectives of the project. First was the development of a knowledge model that incorporated data input, logic and processing, and notification and effector mechanisms necessary to implement automated practice guidelines in both inpatient and outpatient settings. Second was the construction of editing tools to allow nonprogrammer analysts and/or medical domain experts to implement guideline specifications using this knowledge model; these high-level specifications would then be automatically translated into working computer code. Third was the implementation and evaluation of EMR-based automated guidelines within real clinical workflow environments.

System Description

Choice of Guideline

For our first attempt at automating a multistep practice guideline, we chose the National Cholesterol Education Program (NCEP)49 guideline for the management of hypercholesterolemia, specifically the portion dealing with secondary prevention. The NCEP guideline has several features conducive to successful automation.50,51 First, it addresses a common and clinically important problem52 and is supported by strong scientific evidence, particularly for secondary prevention.53–55 Second, the guideline is straightforward and uses data frequently found in a coded form in an EMR. Third, despite familiarity and acceptance by clinicians, compliance is unacceptably low.56,57 At our institution in particular, 69% of patients with atherosclerotic vascular disease fail to meet the NCEP goals by not having a recently checked lipid level, not being on a statin drug when indicated, or not having the dose of statin properly adjusted to meet target LDLs.58 There also is significant overuse of statins as well as inappropriate monitoring.59 These factors suggested that a computer-based version of the NCEP guidelines for secondary prevention consisting of simple well-timed reminders could substantially improve adherence.

Guideline Content

Following Lobach’s model of adapting clinical guidelines for electronic implementation,60 we met iteratively with relevant specialists (a cardiologist and an endocrinologist) to forge agreement about the relatively small portions of the printed guidelines that were vague or controversial. For instance, we needed to determine whether to include diabetes as an eligibility criterion for secondary prevention rather than just a risk factor for primary prevention; and how frequently and which lipid levels to monitor. Once this small group reached consensus, it was then possible to get sign-off from their respective clinical department chiefs.

Guideline Representation

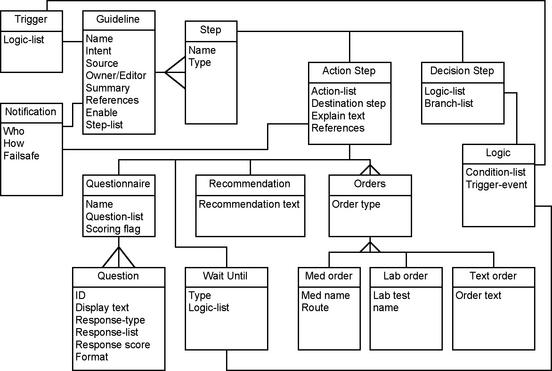

Concurrently, we worked on the knowledge model for representing and executing guidelines. In an attempt to use existing "standards" whenever possible, we decided to start with GLIF2,17 which models guidelines as directed graphs of decision and action steps. Of course, the model had to be extended to make the guideline executable within our EMR. First, the Decision Step object was extended to render the logic computable with data from the EMR. Second, the Action Step object was substantially extended to provide hooks to various reporting and ordering programs (such as order entry) as well as to allow time and event-dependent actions (such as a Wait Step). An Eligibility Step specified whether a guideline was appropriate for a given patient and designated who, if anyone, must approve enrollment onto the guideline. A new Notification object was added to specify parameters for various types of messaging, including e-mail, text paging, and online messaging. Finally, Questionnaire Steps were implemented to allow the clinician to provide data not obtainable from the EMR itself. Figure 1▶ presents an abbreviated object model of the resulting knowledge model for our guideline system.

Figure 1.

Knowledge Model for PCAPE algorithms (Rumbaugh notation). Some details are omitted for clarity.
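To make the shape of such a model concrete, the following is a minimal Python sketch of a guideline as a directed graph of decision, action, and wait steps with an eligibility test. It is an illustration only, not the PCAPE object model: every class, field, and step name here is hypothetical, and the Notification and Questionnaire objects are omitted.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical stand-in for coded EMR data: field name -> value.
Patient = Dict[str, object]


@dataclass
class Step:
    step_id: str


@dataclass
class DecisionStep(Step):
    test: Callable[[Patient], bool]   # logic computable from EMR data
    if_true: Optional[str]            # step to follow when the test passes
    if_false: Optional[str]           # step to follow otherwise


@dataclass
class ActionStep(Step):
    message: str                      # recommendation handed to a notifier
    next_step: Optional[str] = None


@dataclass
class WaitStep(Step):
    days: int                         # time-dependent action: pause, then go on
    next_step: Optional[str] = None


@dataclass
class Guideline:
    name: str
    eligibility: Callable[[Patient], bool]
    start: str
    steps: Dict[str, Step] = field(default_factory=dict)

    def add(self, step: Step) -> "Guideline":
        self.steps[step.step_id] = step
        return self

    def successors(self, step_id: str) -> List[str]:
        """Outgoing edges of one step in the directed graph."""
        step = self.steps[step_id]
        if isinstance(step, DecisionStep):
            targets = [step.if_true, step.if_false]
        else:
            targets = [getattr(step, "next_step", None)]
        return [t for t in targets if t is not None]

    def dangling_edges(self) -> List[str]:
        """Edges that point at step ids not defined in the graph."""
        missing = []
        for step_id in self.steps:
            for target in self.successors(step_id):
                if target not in self.steps:
                    missing.append(f"{step_id}->{target}")
        return missing


# A three-step toy graph (hypothetical content, not the NCEP algorithm).
g = Guideline("demo", eligibility=lambda p: bool(p.get("vascular_disease")), start="d1")
g.add(DecisionStep("d1", test=lambda p: p.get("ldl") is None, if_true="a1", if_false="w1"))
g.add(ActionStep("a1", message="Check LDL", next_step="w1"))
g.add(WaitStep("w1", days=90, next_step="d1"))
```

A check such as `dangling_edges` is one inexpensive way to catch transcription errors when a flowchart is re-entered by hand into an editor.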

Guideline Authoring

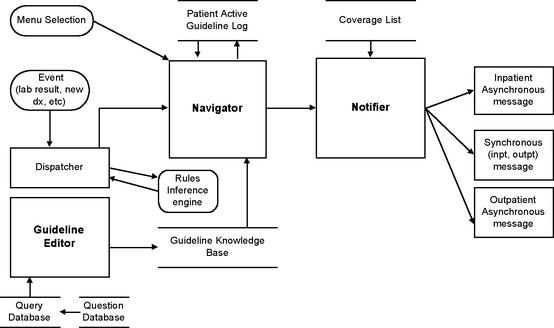

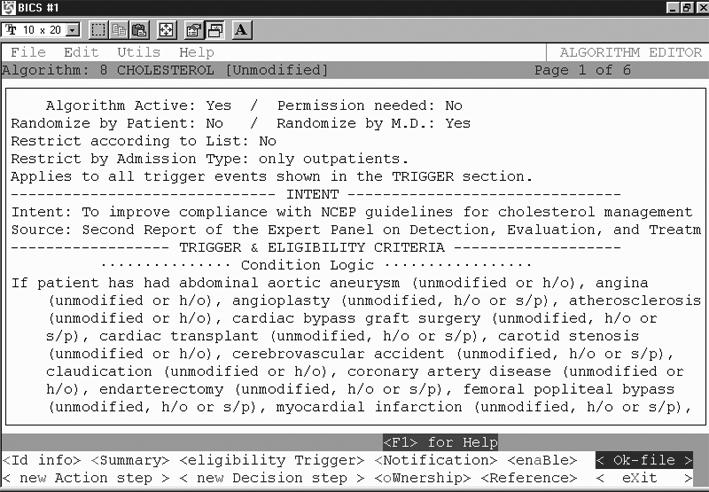

Based on the above knowledge model for representing practice guidelines, we next developed an application for authoring them called Partners Computerized Algorithm Processor and Editor (PCAPE).61 PCAPE (Figure 2▶) is intended to be used by a trained analyst or domain expert (such as a physician or nurse) to enter the parameters of an algorithm from a high-level flowchart specification (as in Figure 3). These parameters consist of triggers and eligibility criteria, instructions for obtaining permission to enroll a patient onto a guideline, provider notification rules, and action and decision steps and their relationships (Figure 4▶).

Figure 2.

PCAPE Architecture. The Editor is used to define a guideline in the knowledge base. The Navigator traverses the steps of the guideline, calling on the Notifier whenever it encounters an action step specifying a message or recommendation.

Figure 4.

PCAPE Guideline Editor screenshot, showing one screen of the cholesterol algorithm within the Editor. Here one can enter all the parameters of a guideline as specified in the guideline object model (see Figure 1▶).

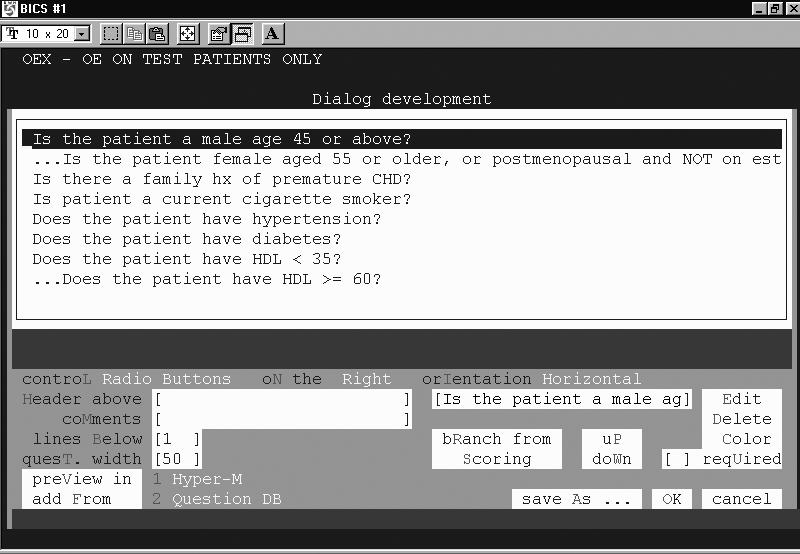

Data and logic-building templates facilitate design of an algorithm without programming. For example, PCAPE includes a dialog editor to construct questionnaires. The dialog editor calls on a database of reusable and modifiable questions, and assembles them into an online survey instrument. Multiple question/response types are supported (text box/line; radio buttons; multi- or single select check boxes, drop down lists, or list box), as are response validation and branching logic (Figure 5▶). On execution, the survey populates an array of user-defined variables with values that are numerical functions of the scored responses. The variables can be used in decision steps just like any coded element of the EMR, such as lab results, allergies, or medications.

Figure 5.

PCAPE Dialog Editor screenshot, showing the construction of an interactive questionnaire that queries a user about a patient’s cardiac risk factors.
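The idea of a questionnaire whose validated, scored responses feed a user-defined variable can be sketched as follows. This is a hedged Python illustration rather than the dialog editor's actual data structures; the question keys, prompts, scores, and the `risk_factor_count` variable are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Question:
    key: str
    prompt: str
    scores: Dict[str, int]  # allowed response -> score (also acts as validation)


def score_responses(questions: List[Question], responses: Dict[str, str]) -> Dict[str, int]:
    """Validate each response and convert it to its numeric score."""
    scored = {}
    for q in questions:
        answer = responses.get(q.key)
        if answer not in q.scores:
            raise ValueError(f"invalid response for {q.key!r}: {answer!r}")
        scored[q.key] = q.scores[answer]
    return scored


def risk_factor_count(scored: Dict[str, int]) -> int:
    """A user-defined variable computed as a numerical function of the scores."""
    return sum(scored.values())


# Hypothetical cardiac risk factor questions (radio-button style: yes/no).
QUESTIONS = [
    Question("smoker", "Does the patient currently smoke?", {"yes": 1, "no": 0}),
    Question("family_hx", "Family history of premature CHD?", {"yes": 1, "no": 0}),
    Question("hypertension", "Does the patient have hypertension?", {"yes": 1, "no": 0}),
]

scored = score_responses(QUESTIONS, {"smoker": "yes", "family_hx": "no", "hypertension": "yes"})
count = risk_factor_count(scored)  # usable in a decision step like any coded EMR value
```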

Guideline Execution

PCAPE automatically compiles the entered parameters into MUMPS code and data structures. These, in turn, are used by the Navigator and Notifier (see Figure 2▶), which are modified components of the event-monitoring system that powers the BWH alerting system.46 The Navigator processes the steps of the guideline and logs all transactions. Events that can initiate transitions from one state of the algorithm to another include new lab results, medication orders, admissions, procedures, clinician log-on, or passage of a prespecified amount of time. Actions may take the form of messages presented to the user, perhaps requiring a response, or triggers that call the event engine to activate rules governing further actions. The Notifier sends messages to a patient’s covering clinician, seeking data or presenting recommendations and order sets that can be processed by the system. The notification of a message’s presence may be via synchronous (interactive) on-screen alerts or asynchronously via e-mail, alphanumeric page, or printed notices.
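The event-driven behavior described above can be pictured with a small state machine. The sketch below is written in Python for readability; it is not the MUMPS code that PCAPE generates, and the state names, event names, and messages are hypothetical. It shows only the general pattern: an event moves a patient's enrollment from one state to another, the transition is logged, and any message is handed to a notifier.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Enrollment:
    patient_id: str
    state: str
    log: List[Tuple[str, str, str]] = field(default_factory=list)


# (current state, event type) -> (next state, message or None); all hypothetical.
TRANSITIONS: Dict[Tuple[str, str], Tuple[str, Optional[str]]] = {
    ("awaiting_ldl", "lab_result"): ("review_ldl", "New LDL available; review against target."),
    ("awaiting_ldl", "time_elapsed"): ("awaiting_ldl", "LDL not yet checked; consider a lipid panel."),
    ("review_ldl", "medication_order"): ("awaiting_ldl", None),
}


class Navigator:
    def __init__(self, transitions, notify: Callable[[str, str], None]):
        self.transitions = transitions
        self.notify = notify

    def handle(self, enrollment: Enrollment, event_type: str) -> bool:
        """Apply one event; return False if it is irrelevant in the current state."""
        key = (enrollment.state, event_type)
        if key not in self.transitions:
            return False
        next_state, message = self.transitions[key]
        enrollment.log.append((enrollment.state, event_type, next_state))
        enrollment.state = next_state
        if message is not None:
            self.notify(enrollment.patient_id, message)
        return True


# A stand-in notifier that just collects messages; a real one would choose
# between on-screen alerts, e-mail, pages, or printed notices.
outbox: List[Tuple[str, str]] = []
navigator = Navigator(TRANSITIONS, notify=lambda pid, msg: outbox.append((pid, msg)))

enrollment = Enrollment(patient_id="demo-1", state="awaiting_ldl")
navigator.handle(enrollment, "time_elapsed")      # stays in state, sends a reminder
navigator.handle(enrollment, "lab_result")        # moves to review_ldl
navigator.handle(enrollment, "admission")         # ignored in this state
navigator.handle(enrollment, "medication_order")  # back to awaiting_ldl, no message
```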

Workflow

We considered a number of ways to notify clinicians of the current recommendations and data needs at any given step of the guideline. Alphanumeric pages were abandoned because they were felt to be inappropriately interruptive for the level of urgency required for cholesterol management. E-mail was also rejected by clinicians because such notification was not actionable unless received and read during a patient’s visit.

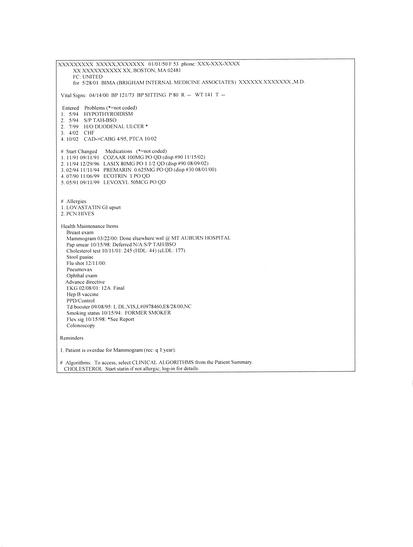

The method that was most widely accepted is an extension of the current system of printing reminders at the bottom of an encounter sheet. The encounter sheet is printed before every non-urgent patient visit, and lists the patient’s medications, allergies, and active problems (Figure 6▶). Information about the current guideline step is printed on the encounter sheet, which is routinely reviewed by most clinicians at the actual time of the patient visit. Unfortunately, because of space constraints on the printed page, guideline messages are limited to two lines of text.

Figure 6.

Sample outpatient encounter summary printed for the clinician to review during a patient’s visit. Guideline messages appear at the bottom. Data do not correspond to any real patient.

Although the encounter sheet gives good static information, it does not allow physicians to enter information interactively through dialogs. Therefore, to allow more extensive messaging and data collection, the ability to interact synchronously with the guideline was incorporated into the navigation engine as well. For example, a patient’s enrollment on a clinical guideline is indicated on the main screen of the outpatient EMR. Much as one would look up a lab result or radiology report, the clinician can access the guideline, exchange information via online dialog boxes, traverse decision nodes of the algorithm, receive computed alerts and messages, and (at least on the inpatient side) initiate order sessions. For the outpatient setting, where the NCEP guideline presumably is most frequently utilized, such order sessions really are secondary dialog screens intended to capture acknowledgement and intent of the clinician; they do not generate actual orders because outpatient order entry has not yet been implemented. Finally, throughout the interaction described above, the clinician can follow links to relevant citations, supplementary resources, and patient handouts.

Status Report

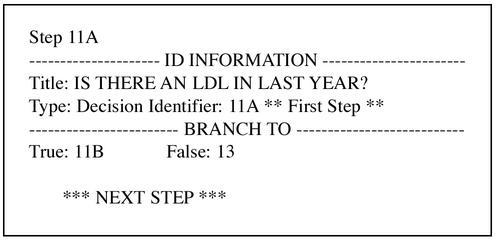

The final automated NCEP secondary prevention guideline has 9 decision steps and 9 action steps. We used Visio for the intermediary flowchart specification of the guideline (see Figure 3—online data supplement), from which the PCAPE specification was entered. The full PCAPE specification, although human-readable, is in comparison cryptic and lengthy (9 pages). For example, compare Steps 11a and 14 in Figure 3 with their corresponding PCAPE representations in Figures 7▶ and 8▶.

Figure 7.

PCAPE representation of a Decision step, the summary description within PCAPE of Decision step 11A of the cholesterol algorithm (see Figure 3).

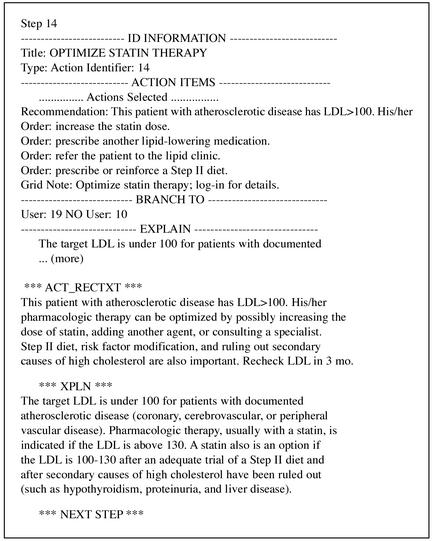

Figure 8.

PCAPE representation of an Action step, the summary description within PCAPE of Action step 14 from the cholesterol algorithm (see Figure 3).

To evaluate the impact of the computer-based guideline on compliance with the NCEP recommendations for secondary prevention patients, we are carrying out a prospective randomized controlled trial. One-half of the primary care physicians at BWH have been randomized to receive the reminders at the bottom of their patients’ encounter sheets. In the first year of the evaluation, 2,258 reminders were printed for 690 patients. Reminders were generated for 65% of the visits by secondary prevention patients of intervention group physicians. Reminders generated thus far have been to check LDL (979), start or consider a statin (554), or optimize therapy (725). Proportional numbers and types of reminders were generated but not displayed for control patients. Notably, only 20 times (0.8% of 2610 visit opportunities) has a clinician opted to interact directly with the guideline using the computer. The final evaluation will assess the impact of the reminders on overall compliance with the NCEP goals as well as on the frequency of executed recommendations.
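As a quick consistency check on the first-year figures quoted above, the short Python snippet below recomputes the total number of reminders, the share of each reminder type, and the rate of direct interaction. It uses only the counts stated in the text; the variable names are ours.

```python
# Counts taken directly from the first-year figures reported in the text.
reminders = {
    "check LDL": 979,
    "start or consider a statin": 554,
    "optimize therapy": 725,
}
patients = 690
interactions = 20
visit_opportunities = 2610

total = sum(reminders.values())  # matches the 2,258 reminders reported
shares = {kind: round(100 * n / total, 1) for kind, n in reminders.items()}
reminders_per_patient = round(total / patients, 1)
interaction_rate = round(100 * interactions / visit_opportunities, 1)  # percent

print(total, shares, reminders_per_patient, interaction_rate)
```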

Discussion

Our experience with this project has confirmed our belief that implementing automated guidelines is still extremely difficult—despite having started with a state-of-the-art clinical information system, garnering significant institutional commitment from the outset, employing a powerful underlying knowledge model, and starting with as ideal a guideline as possible. A number of lessons have been learned at each step of the process.

Choice of Guideline

We limited the scope of the guideline to secondary prevention to minimize complexity and to maximize consensus. First, this subset of the NCEP guidelines enjoyed significant backing by scientific evidence as well as wide acceptance by clinicians and addressed an important clinical problem. Second, data required to compute and navigate the guideline were all contained in the EMR (cholesterol levels, problem lists, and medications); in other words, interactive dialogs with the clinician to collect these data were not required. Third, the secondary prevention portion of the guideline was relatively easy to translate because the decision logic and recommendations were explicit and measurable (check cholesterol level, start drug therapy, or adjust drug therapy). In comparison, the primary prevention portion of the NCEP guidelines had less scientific support, less acceptance by clinicians, and vague logic and recommendations.
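Because the secondary prevention logic reduces to three explicit recommendations, it can be pictured as a handful of rules over coded data. The Python sketch below is a simplification and not the implemented algorithm, which has 9 decision steps. The LDL target of 100 mg/dl and the one-year recency window are assumptions made for illustration, as are all names.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

LDL_TARGET_MG_DL = 100.0            # assumption for illustration
LDL_MAX_AGE = timedelta(days=365)   # assumption: "recent" means within one year


@dataclass
class PatientSummary:
    has_vascular_disease: bool       # from the coded problem list
    last_ldl: Optional[float]        # mg/dl, from coded lab results
    last_ldl_date: Optional[date]
    on_statin: bool                  # from the medication list


def reminder(p: PatientSummary, today: date) -> Optional[str]:
    """Return one of the three recommendation types, or None if none applies."""
    if not p.has_vascular_disease:
        return None                  # not eligible for secondary prevention
    if p.last_ldl is None or p.last_ldl_date is None:
        return "check LDL"
    if today - p.last_ldl_date > LDL_MAX_AGE:
        return "check LDL"
    if p.last_ldl <= LDL_TARGET_MG_DL:
        return None                  # at goal; nothing to recommend
    return "optimize therapy" if p.on_statin else "start or consider a statin"


today = date(2002, 6, 1)
no_lab = PatientSummary(True, None, None, False)
untreated = PatientSummary(True, 135.0, date(2002, 3, 1), False)
treated = PatientSummary(True, 135.0, date(2002, 3, 1), True)
at_goal = PatientSummary(True, 92.0, date(2002, 3, 1), True)
```

Every input here comes from coded EMR fields, which is the property that made this portion of the guideline tractable without interactive dialogs.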

Guideline Content

It became clear that even simple and relatively straightforward guidelines can be interpreted in different ways, depending on one’s perspective or specialty. Much effort was spent trying to achieve agreement among our experts about details of the guideline. Although initial efforts tried to put too much corrective action into the algorithm’s recommendations, the experts ultimately focused on a more pragmatic goal. This goal was simply to ensure that the basic and most important recommendations of the NCEP guidelines were being followed, not to pre-specify every medical decision related to the management of hypercholesterolemia or to replace the clinician or substitute for his or her medical education. For example, rather than recommend one particular drug (or drug class) over another (which entails factoring in highly nuanced patient-specific data that is not stored in or easily accessible from the EMR), we decided to implement the more general reminder that the patient simply qualified for pharmacologic treatment. Then, by linking to background reference information about the mechanism, effectiveness, costs, and side effects of various lipid-lowering medications, the autonomy of the clinician to make the best decision for the patient was preserved.

Guideline Representation

We were pleasantly surprised to learn that our knowledge model was not the project’s limiting step. Indeed, GLIF was easily extended, even to deal with execution modalities that were not anticipated at the start of the process, notably the ability to support different notifications and actions from the same step, depending on whether the user was currently interacting with the guideline. Others have also successfully extended GLIF in similar ways.62

One noticeable but surmountable obstacle that had as much to do with the original guideline as with the knowledge model used to encode it was conflicting or borderline data. For example, the NCEP guideline does not specify what to do if more than a single recent LDL is available. For any specific patient, a human can quickly integrate the levels over time and judge whether it is reasonable to use the lowest, highest, most recent, median, or mean value. The computer is limited to an analyst’s best a priori guess, which must then be applied to every subsequent patient.
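The effect of that a priori choice is easy to demonstrate. In the Python sketch below, the same three hypothetical LDL values (in chronological order, not drawn from any patient) lead to different conclusions depending on which summary policy the analyst fixes in advance. The 100 mg/dl target is an assumption for illustration.

```python
from statistics import mean, median
from typing import Callable, Dict, List

# Candidate policies for reducing several recent LDL values to one number.
# Values are assumed to be in chronological order (oldest first).
POLICIES: Dict[str, Callable[[List[float]], float]] = {
    "lowest": min,
    "highest": max,
    "most_recent": lambda values: values[-1],
    "median": median,
    "mean": mean,
}


def above_target(ldl_values: List[float], policy: str, target: float = 100.0) -> bool:
    """True if the summarized LDL exceeds the target under the given policy."""
    return POLICIES[policy](ldl_values) > target


ldl_history = [128.0, 104.0, 96.0]  # hypothetical, mg/dl
verdicts = {name: above_target(ldl_history, name) for name in POLICIES}
```

With these values, the "lowest" and "most_recent" policies place the patient at goal, whereas "highest", "median", and "mean" do not, so the reminder a clinician sees depends entirely on the policy chosen.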

Although our guideline model allows different recommendations for different test results, it does not flexibly handle borderline labs, such as an LDL of 102 mg/dl. The NCEP guideline itself is precise enough about this point, but clinicians in practice might violate the strict guidelines for such a close result, rightly or wrongly.

Guideline Authoring

We used Visio to represent the sequence of decisions and actions at a highly conceptual level, as a flowchart. This version was passed back and forth among the experts and "debugged" by hand. Because PCAPE cannot read Visio data, the flowchart representation had to be re-entered step by step into the editor, which, though powerful, was not particularly user-friendly. A simple change in the Visio flowchart, such as the insertion of a new decision step, could mean a 15-minute interaction with PCAPE.

Guideline Execution

Others have developed integrated tools that link graphically based authoring and editing of guidelines with execution engines of one kind or another.63–65 Such tools that directly translate the flowchart specification of a guideline into executable code not only would speed development of computer-based guidelines but also would help ensure the fidelity of the translations. Without such a tool, the PCAPE version had to be debugged independently of the expert-verified flowchart. Even after extensive testing in a "live" test environment and then again in a real-world pilot clinic, some important bugs slipped by our scrutiny. These were most commonly related to issues with modeling the passage of time or with supporting synchronous interaction between clinician and computer.

Workflow Integration

Clinicians who use our EMR are quite familiar with encounter sheet-based reminders. Other encounter sheet reminders at our institution are followed 5–60% of the time (the wide variation is due to differences among the reminders that we have implemented).48 Based on how physicians interact with our EMR in the inpatient arena, we hypothesized that direct synchronous interaction with an electronic guideline would have added value in the outpatient setting as well. However, despite incentives to do so, such as access to more detailed recommendations and background information, citations of supporting references, links to patient handouts, and facilitated documentation, clinicians almost never opted to interact in real time with the guideline. Instead, they relied only on the brief reminders printed at the bottom of patient encounter sheets. This finding is consistent with the observation of McDonald et al. that physicians do not take advantage of ancillary features that require extra time and effort.66

Whether the lack of online interaction with the cholesterol algorithm reflected obstacles in using the guideline application itself or the EMR in general or whether it was a characteristic of the problem domain is not clear. The end result was that the guideline’s ability to collect data and to disseminate in-depth recommendations was limited. Indeed, without synchronous or interactive forms of messaging, it is difficult to determine whether a recommendation has been read, let alone accepted or rejected, except by using proxies such as new LDL results or changes in the medication list (which do, in fairness, reflect the intended goal).

Our implementation of automated guidelines also may have been more effective if used in conjunction with an outpatient physician order entry system. Unlike inpatient alerts and warnings, which have been so successful at our institution,46,67,68 there was no way to facilitate the actual implementation of recommended outpatient actions, such as ordering a lipid level or prescribing a statin, because we did not have outpatient order entry. Outpatient order entry with rule-based decision support (as opposed to multistep and persistent algorithms such as ours) has been successfully implemented at other centers.60,70 Of course, order entry does not guarantee compliance with guidelines. For example, a recent study by Dexter et al. documented that one user interface model in an order entry system did not increase compliance with guidelines, whereas another user interface model did.25

We also envision additional data that can be included to make the guideline’s recommendation more meaningful. For instance, knowing details of the context of the visit (urgent, general check-up, health maintenance) can help determine the most appropriate mode of messaging. Also, additional data elements not commonly found in EMRs, such as information about modifiable risk factors (e.g., diet and exercise), may allow finer tuning of decisions and recommendations. This information could be captured with user dialogs, but, as noted above, getting physicians to provide such data is difficult. Interestingly, in our inpatient order entry system, there are many situations in which physicians enter supplemental data reliably and frequently. It may be that because entering orders is a necessary and regular part of clinical workflow in the hospital, greater interaction and user data entry have become acceptable. On the other hand, investigating a clinical algorithm—especially when the basic answer is already revealed—may be perceived as peripheral to the clinical workflow in the office, making extra interaction unnecessary and/or unacceptable.

Conclusions

Even with a robust EMR, an advanced event-monitoring system, and a rich set of messaging options, the successful implementation of complex computer-based clinical practice guidelines remains a difficult task. First, guideline development is always arduous because it demands making choices about what will and will not be automated based on degree of national and local expert consensus and the sophistication of available computer resources.

The next obstacle is guideline representation. Although this obstacle has occupied the bulk of the theoretical and published discussion on this topic, it fortunately was not the limiting factor in our effort. Nevertheless, more sophisticated development tools to translate high-level guideline specifications directly into executable code would be welcome.

Instead, the biggest obstacle to implementing complex automated guidelines that we encountered was with presentation and integration into the clinical workflow. Clinicians rarely interacted with the online version of the guideline. Other methods to integrate into the workflow are required. Until these methods are developed, including outpatient order entry and more sophisticated messaging modalities, such as synchronous methods acceptable to physicians for use during a patient’s visit, the marginal benefit of automating complicated algorithmic guidelines over simple rule-based reminders generated on demand is small.

References

- 1.Sudlow M, Thomson R. Clinical guidelines: Quantity without quality. Qual Health Care 1997; 6:60–61. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Lomas J, Anderson GM, Domnick-Pierre K, et al. Do practice guidelines guide practice? The effect of a consensus statement on the practice of physicians. N Engl J Med 1989; 321:1306–1311. [DOI] [PubMed] [Google Scholar]

- 3.Tunis SR, Hayward RSA, Wilson MC, et al. Internists’ attitudes about clincial practice guidelines. Ann Intern Med 1994; 120:956–963. [DOI] [PubMed] [Google Scholar]

- 4.Davis DA, Taylor-Vaisey A. Translating guidelines into practice: A systematic review of theoretic concepts, practical experience and research evidence in the adoption of clinical practice guidelines. Can Med Assoc J 1997; 157:408–416. [PMC free article] [PubMed] [Google Scholar]

- 5.Cabana MD, Rand CS, Powe NR, et al. Why don’t physicians follow clinical practice guidelines? A framework for improvement. AMA 1999; 282:1458–1465. [DOI] [PubMed] [Google Scholar]

- 6.Institute of Medicine. Guidelines for clinical practice: from development to use. Washington, DC, National Academy Press, 1992.

- 7.Johnston ME, Langton KB, Haynes RB, Mathieu A. Effects of computer-based clinical decision support systems on clinical performance and patient outcome. A critical appraisal of research. Ann Intern Med 1994; 120:135–142.

- 8.Shiffman RN, Liaw Y, Brandt CA, Corb GJ. Computer-based guideline implementation systems: a systematic review of functionality and effectiveness. J Am Med Inform Assoc 1999; 6:104–114.

- 9.Chueh H, Barnett GO. "Just-in-time" clinical information. Acad Med 1997; 72:512–517.

- 10.Zielstorff RD. Online practice guidelines: issues, obstacles, and future prospects. J Am Med Inform Assoc 1998; 5:227–236.

- 11.Stolte JJ, Ash J, Chin H. The dissemination of clinical practice guidelines over an intranet: An evaluation. Proc AMIA Symp 1999:960–964.

- 12.Tierney WM, Overhage JM, McDonald CJ, et al. Computerizing guidelines to improve care and patient outcomes: The example of heart failure. J Am Med Inform Assoc 1995; 2:316–322.

- 13.Sun Y, van Wingerde FJ, Homer CJ, et al. The challenges of automating a real-time clinical practice guideline. Clin Perform Qual Health Care 1999; 7:28–35.

- 14.Peleg M, Ogunyemi O, Shortliffe EH, et al. Using features of Arden Syntax with object-oriented medical data models for guideline modeling. Proc AMIA Symp 2001:523–527.

- 15.Shiffman RN, Karras BT, Nath S, et al. GEM: A proposal for a more comprehensive guideline document model using XML. J Am Med Inform Assoc 2000; 7:488–498.

- 16.Musen MA, Gennari JH, Eriksson H, Tu SW, Puerta AR. PROTEGE-II: Computer support for development of intelligent systems from libraries of components. Medinfo 1995;8(Pt 1):766–770.

- 17.Ohno-Machado L, Gennari JH, Barnett GO, et al. The guideline interchange format: A model for representing guidelines. J Am Med Inform Assoc 1998; 5:357–372.

- 18.Tu SW, Musen MA. The EON model of intervention protocols and guidelines. Proc AMIA Annu Fall Symp 1996:587–591.

- 19.Rogers J, Jain NL, Hayes GM. Evaluation of an implementation of PRODIGY phase two. Proc AMIA Symp 1999: 604–608.

- 20.Advani A, Tu S, Musen M, et al. Integrating a modern knowledge-based system architecture with a legacy VA database: The ATHENA and EON projects at Stanford. Proc AMIA Symp 1999:653–657.

- 21.Safran C, Rind DM, Cotton DJ, et al. Guidelines for management of HIV infection with computer-based patient’s record. Lancet 1995; 346:341–346.

- 22.Day F, Hoang LP, Ouk S, Nagda S, Schriger DL. The impact of a guideline-driven computer charting system on the emergency care of patients with acute low back pain. Proc Annu Symp Comput Appl Med Care 1995:576–580.

- 23.Cannon DS, Allen SN. A comparison of the effects of computer and manual reminders on compliance with a mental health clinical practice guideline. J Am Med Inform Assoc 2000; 7:196–203.

- 24.Schriger DL, Baraff LJ, Rogers WH, Cretin S. Implementation of clinical guidelines using a computer charting system. Effect on the initial care of health care workers exposed to body fluids. JAMA 1997; 278:1585–1590.

- 25.Dexter PR, Perkins S, McDonald CJ, et al. A computerized reminder system to increase the use of preventive care for hospitalized patients. N Engl J Med 2001; 345:965–970.

- 26.Evans RS, Pestotnik SL, Classen DC, et al. A computer-assisted management program for antibiotics and other antiinfective agents. N Engl J Med 1998;338:232–238.

- 27.Milch RA, Ziv L, Evans V, Hillebrand M. The effect of an alphanumeric paging system on patient compliance with medicinal regimens. Am J Hosp Palliat Care 1996;13:46–48.

- 28.Linkins RW, Dini EF, Watson G, Patriarca PA. A randomized trial of the effectiveness of computer-generated telephone messages in increasing immunization visits among preschool children. Arch Pediatr Adolesc Med 1994;148(9):908–914.

- 29.Casebeer L, Roesener GH. Patient informatics: using a computerized system to monitor patient compliance in the treatment of hypertension. Medinfo 1995;8(Pt 2):1500–1502.

- 30.Murphy DJ, Gross R, Buchanan J. Computerized reminders for five preventive screening tests: Generation of patient-specific letters incorporating physician preferences. Proc AMIA Symp 2000:600–604.

- 31.Lobach DF, Hammond WE. Computerized decision support based on a clinical practice guideline improves compliance with care standards. Am J Med 1997; 102:89–98.

- 32.Nilasena DS, Lincoln MJ. A computer-generated reminder system improves physician compliance with diabetes preventive care guidelines. Proc Annu Symp Comput Appl Med Care 1995:640–645.

- 33.Winickoff RN, Coltin KL, Fleishman SJ, Barnett GO. Semiautomated reminder system for improving syphilis management. J Gen Intern Med 1986;1:78–84.

- 34.Lobach DF. Electronically distributed, computer-generated, individualized feedback enhances the use of a computerized practice guideline. Proc AMIA Annu Fall Symp 1996: 493–497.

- 35.Quaglini S, Grandi M, Melino S, et al. A computerized guideline for pressure ulcer prevention. Int J Med Inform 2000;58-59:207–217.

- 36.Wagner TH. The effectiveness of mailed patient reminders on mammography screening: A meta-analysis. Am J Prev Med 1998;14:64–70.

- 37.Shiffman RN, Liaw Y, Brandt CA, Corb GJ. Computer-based guideline implementation systems: a systematic review of functionality and effectiveness. J Am Med Inform Assoc 1999; 6:104–114.

- 38.Morgan MM, Goodson J, Barnett GO. Long-term changes in compliance with clinical guidelines through computer-based reminders. Proc AMIA Symp 1998:493–497.

- 39.Shea S, DuMouchel W, Bahamonde L. A meta-analysis of 16 randomized controlled trials to evaluate computer-based clinical reminder systems for preventive care in the ambulatory setting. J Am Med Inform Assoc 1996; 3:399–409.

- 40.Balas EA, Weingarten S, Brown GD, et al. Improving preventive care by prompting physicians. Arch Intern Med 2000;160:301–308.

- 41.Dubey AK, Chueh H. Using the extensible markup language (XML) in automated practice guidelines. Proc AMIA Symp 1998: 735–739.

- 42.Margolis A, Bray BE, Gilbert EM, Warner HR. Computerized practice guidelines for heart failure management: The HeartMan system. Proc Annu Symp Comput Appl Med Care 1995:228–232.

- 43.Teich JM, Glaser JP, Spurr CD, et al. The Brigham integrated computing system (BICS): Advanced clinical systems in an academic hospital environment. Int J Med Inform 1999; 54:197–208.

- 44.Spurr CD, Wang SJ, Bates DW, et al. Confirming and Delivering the Benefits of an Ambulatory Electronic Medical Record for an Integrated Delivery System. TEPR, Boston, 2001.

- 45.Teich JM, Hurley JF, Beckley RF, Aranow M. Design of an easy-to-use physician order entry system with support for nursing and ancillary departments. Proc Symp Comp Appl Med Care 1992; 16:109–113.

- 46.Kuperman GJ, Teich JM, Bates DW, et al. Detecting alerts, notifying the physician, and offering action items: a comprehensive alerting system. J Am Med Inform Assoc 1996; 3(suppl):704–708.

- 47.Hiltz FL, Teich JM. Coverage List: A provider-patient database supporting advanced hospital information services. In Ozbolt JG, ed. Proceedings of 18th SCAMC. Philadelphia, Hanley & Belfus, Inc. 1994; 809–813.

- 48.Karson AS, Kuperman GJ, Bates DW, et al. Patient-specific computerized outpatient reminders to improve physician compliance with clinical guidelines. Proc AMIA Symp 2000; 1043.

- 49.Summary of the Second Report of the National Cholesterol Education Program (NCEP) Expert Panel on Detection, Evaluation, and Treatment of High Blood Cholesterol in Adults (Adult Treatment Panel II). JAMA 1993; 269:3015–3023.

- 50.Tierney WM, Overhage JM, McDonald CJ. Computerizing guidelines: Factors for success. Proc AMIA Annu Fall Symp 1996; 459–462.

- 51.Tierney WM. Improving clinical decisions and outcomes with information: A review. Int J Med Inform 2001; 62:1–9.

- 52.Lipid Research Clinics Program. The Lipid Research Clinics Coronary Primary Prevention Trial results. II. The relationship of reduction in incidence of coronary heart disease to cholesterol lowering. JAMA 1984; 251:365–374.

- 53.Scandinavian Simvastatin Survival Study Group. Randomised trial of cholesterol lowering in 4444 patients with coronary heart disease: The Scandinavian Simvastatin Survival Study (4S). Lancet 1994; 344:1383–1389.

- 54.Sacks FM, Pfeffer MA, Moye LA, et al. The effect of pravastatin on coronary events after myocardial infarction in patients with average cholesterol levels. N Engl J Med 1996; 335:1001–1009.

- 55.The Post Coronary Artery Bypass Graft Trial Investigators. The effect of aggressive lowering of low-density lipoprotein cholesterol levels and low-dose anticoagulation on obstructive changes in saphenous-vein coronary-artery bypass grafts. N Engl J Med 1997; 336:153–162.

- 56.McBride P, Schrott HG, Plane MB, Underbakke G, Brown RL. Primary care practice adherence to National Cholesterol Education Program guidelines for patients with coronary heart disease. Arch Intern Med 1998;158:1238–1244.

- 57.Marcelino JJ, Feingold KR. Inadequate treatment with HMG-CoA reductase inhibitors by health care providers. Am J Med 1996; 100:605–610.

- 58.Maviglia SM, Teich JM, Fiskio J, Bates DW. Using an electronic medical record to identify opportunities to improve compliance with cholesterol guidelines. J Gen Intern Med 2001;16:531–537.

- 59.Abookire SA, Karson AS, Fiskio J, Bates DW. Use and monitoring of "statin" lipid-lowering drugs compared with guidelines. Arch Intern Med 2001;161:53–58.

- 60.Lobach DF. A model for adapting clinical guidelines for electronic implementation in primary care. Proc Annu Symp Comput Appl Med Care 1995:581–585.

- 61.Zielstorff RD, Teich JM, Fox RL, et al. P-CAPE: A high-level tool for entering and processing clinical practice guidelines. Partners Computerized Algorithm and Editor. Proc AMIA Symp 1998; 478–482.

- 62.Dubey AK, Chueh HC. An XML-based format for guideline interchange and execution. Proc AMIA Symp 2000: 205–209.

- 63.Greenes RA, Boxwala A, Sloan WN, Ohno-Machado L, Deibel SRA. A framework and tools for authoring, editing, documenting, sharing, searching, navigating, and executing computer-based clinical guidelines. Proc AMIA Symp 1999: 261–265.

- 64.Boxwala AA, Greenes RA, Deibel SR. Architecture for a multipurpose guideline execution engine. Proc AMIA Symp 1999:701–705.

- 65.Fox J, Johns N, Wilson P, et al. PROforma: A general technology for clinical decision support systems. Comput Methods Programs Biomed 1997; 54(1-2):59–67.

- 66.McDonald CJ, Wilson GA, McCabe GP Jr. Physician response to computer reminders. JAMA 1980; 244(14):1579–1581.

- 67.Kuperman G, Teich JM, Gandhi TK, Bates DW. Patient safety and computerized medication ordering at Brigham and Women’s Hospital. Jt Comm J Qual Improv 2001; 27:509–521.

- 68.Teich JM, Merchia PR, Bates DW, et al. Effects of computerized physician order entry on prescribing practices. Arch Intern Med 2000; 160(18):2741–2747.

- 69.Chin HL, Wallace P. Embedding guidelines into direct physician order entry: Simple methods, powerful results. Proc AMIA Symp 1999:221–225.

- 70.Overhage JM, Mamlin B, Warvel J, et al. A tool for provider interaction during patient care: G-CARE. In Gardner RE (ed): Proceedings of 19th SCAMC. Philadelphia, Hanley & Belfus, 1995:178–182.
