Abstract

Gene expression has a stochastic component because of the single-molecule nature of the gene and the small number of copies of individual DNA-binding proteins in the cell. We show how the statistics of such systems can be mapped onto quantum many-body problems. The dynamics of a single gene switch resembles the spin-boson model of a two-site polaron or an electron transfer reaction. Networks of switches can be approximately described as quantum spin systems by using an appropriate variational principle. In this way, the concept of frustration for magnetic systems can be taken over into gene networks. The landscape of stable attractors depends on the degree and style of frustration, much as for neural networks. We show the number of attractors, which may represent cell types, is much smaller for appropriately designed weakly frustrated stochastic networks than for randomly connected networks.

The complexity of a cell's genome is expressed through the interactions of many genes with a large variety of proteins. Understanding gene expression, therefore, is a many-body problem. But what kind of many-body problem? Should we think of gene expression using the metaphors and techniques of deterministic many-body problems like those developed for the “clockwork Universe” of 19th century celestial mechanics? Or is it appropriate to use statistical ideas like those that form the language of condensed matter physics and physical chemistry (1)?

The deterministic view has much to recommend it. Miracles of development require intricacy and precision (2). Cell cycles, a prominent dynamic sign of life not found in inanimate matter, are often described as clocks. With the great information content of the genome now so apparent in the “postgenomic era,” it is hard to resist making analogies between cells and those man-made information processors, electronic computers, which grind through their programs with a determination that Laplace would have found thrilling. The stochastic view is not without merit, however. Because a gene is a molecule, the statistical fluctuations of atomism cannot be avoided, as Delbrück realized so long ago (3). The technological capabilities of modern experimental biophysics have also made the presence of stochastic behavior in cells undeniable as an experimental fact (4). Under some circumstances, the game theoretic advantage of unpredictable behavior in predator–prey relations among single-cell organisms will be a clear incentive for stochasticity to have evolved adaptively. Furthermore, even when modern cells have well orchestrated patterns of gene expression, we need to know how this elegant patterning can have been achieved in the light of there being both specific and nonspecific interactions of DNA-binding proteins with the myriad possible similar but nevertheless incorrect sites along the genome, many of which remain silent.

The main purpose of this paper is to begin the exploration of stochastic gene expression by developing an analogy to quantum many-body problems. Theoretical work on stochastic models of gene expression has been dominated by simulation approaches (5–8). Because of the intricate connectivity of real gene networks, computer simulations are doubtless necessary. Yet by themselves, they do not provide an easy route to visualizing the basic emergent principles at work. Analytical approaches to stochastic gene systems have focused on single switches using methods that are not easy to generalize to the description of a complete network (9, 10). In this paper, we will see how the discrete nature of the binding sites on the DNA and the finite small numbers of transcription factor proteins in a cell can be easily accommodated by using a master equation containing operators like those encountered in describing quantum many-particle systems. The dynamics of the DNA-binding sites (genes) will be described by quantum spin operators, whereas the fluctuations in protein concentrations in the cell will be described by using creation/annihilation operators analogous to those for bosonic harmonic oscillators. The analogy between discrete number fluctuations in chemical kinetics and quantum mechanics has been uncovered many times (11–13) and is reviewed by Mattis and Glasser (14). For gene expression problems in particular, this analogy provides an immediate connection to well-studied many-body problems. A single genetic switch becomes equivalent to the spin-boson problem that features in the theory of polarons in solid-state physics (15) and electron transfer in chemistry (16). Bare switches are dressed by a “proteomic atmosphere,” much as electrons in insulating solids are accompanied by a cloud of phonons. In the quantum analogy, switches interact through the virtual emission and reabsorption of protein fluctuations. 
Using this formalism, a multiswitch network can be described using the language of magnets and spin models of neural networks. Finally, through this analogy, the steady states of a stochastic genetic network can be, in some approximations at least, described in landscape terms using precise mathematics rather than in the metaphorical way that has already achieved a certain popularity.

Beyond its linguistic advantages, this analogy allows the well-developed approximation methods used for quantum many-body problems to be brought to bear on the gene expression problem. These methods can be based on path integrals (17), resummed diagrammatic perturbation theory (18), and variational schemes (19). In using these tools, however, one significant difference from ordinary quantum many-body problems must be noted: the effective Hamiltonian for the master equation is not Hermitian. This reflects the far-from-equilibrium nature of these systems. Because of non-Hermiticity, the mathematical formulation of the traditional approximations must be reexamined. In practice, the quality of approximations well established in quantum many-body science will also have to be reevaluated. In addition, for gene expression, a wider range of phenomena occurs than comes up in traditional solid-state physics, e.g., steadily oscillating states. Yet much insight from quantum many-body theory can be brought over intact.

We illustrate the utility of this approach by providing a fresh perspective on a central problem of cell biology, the stability of cell types. Multicellular, eukaryotic organisms contain a relatively modest number of cell types, by which we mean groups of cells that have a sensibly common pattern of which proteins are actually expressed. The small number of different cell types is puzzling. Just as in the famed Levinthal paradox of protein folding, if each gene switch, of which there must be hundreds, can be in two states, either “on” or “off,” why are there not of order $2^{100}$ cell types? This huge number would be possible if the switches were deterministic and noninteracting. This issue has been raised for interacting deterministic switches by using the Kauffman N-K model (20). For this model, an unusual fine tuning of parameters seems to be needed to obtain only a moderate number of expression patterns (21), although this may be avoided by invoking a scale-free topology of the network (22). Another possibility is that the number of potential cell types actually realized reflects the determinism of the developmental program found in the embryo. Of course, this determinism raises further issues of the stochastic stability of a sequence of events rather than of a steady state. Here we explore a third possibility. We exhibit a family of models of stochastic gene expression for which the cell-type-number paradox can be resolved by using the concept of frustration for the multispin system corresponding to the gene network. As for the folding problem (23), the capacity to address a central paradox of gene expression in quantitative terms promises to be a starting point for practical problems of characterizing the class of genetic networks that actually describe real cells.

First, we describe the many-body analogy for a single switch with a proteomic atmosphere, emphasizing the connections with the spin-boson problem. We also describe switch interactions in this framework. Following an approach of Eyink (24), we then formulate a variational approximation for the non-Hermitian many-body problem of a single switch and discuss an analogy to the Hartree approximation for many interacting switches. A landscape description arises naturally in this approximation. Then we explore the phase diagram of a single switch. We propose a very simple network topology where the Hartree approximation should be valid and characterize the phase diagram highlighting the range of parameters where the cell-type paradox is resolved. Finally, we discuss the prospects for using these ideas to provide a general landscape picture for gene expression, for quantifying the response and fluctuation of such networks, and for computing their long-term stability.

Spin-Boson Formalism for Stochastic Switches

A variety of mechanisms for individual gene switches have been elucidated by molecular biologists. These involve the DNA-directed synthesis of proteins that themselves bind to the DNA, thereby turning up or down the synthesis rate. Protein synthesis itself is not simple because of the intrinsic time delays of serial synthesis and the intervening step of synthesizing messenger RNA. Because our goal here is to illustrate the mathematical tools, we will ignore these doubtless important complications. They can be included simply by introducing more species. We will describe only the simplest switches here and concentrate on a switch architecture with symmetry properties that make our later discussion of networks more transparent. This exposition should enable the reader to see how to write the equations for any known biochemical model for a single switch or network.

The most complete description of a simple stochastic gene switch would be a path probability describing the joint probabilities at various times of the DNA operator sites being occupied by ligand proteins and, for those same times, the numbers of the different ligand molecules in the cell. We assume the binding proteins are well-mixed in the cell. We can extend the formalism to a completely field theoretical description of protein concentrations at different points in the cell, if needed to account for incomplete mixing. If we ignore time delays, the Markovian nature of the process ensures that the path probability can be obtained from an operator description of the master equation that describes at any one time the joint probability of the DNA occupation and the protein numbers. The two-valuedness of the DNA occupation at a site makes it convenient to describe this probability as a spinor quantum state. For concreteness, consider first a single binding-site gene switch. The DNA site has two states: S = 1, active, without a bound repressor, and S = 0, inactive, with repressor bound. The two-component vector P(n, t) = (P1(n, t), P0(n, t)) expresses the joint probability of the DNA-binding state and the number n of proteins in the cell. The rate of protein synthesis gS (g1 or g0) depends on the DNA state S, and the degradation rate k is independent of S. When S is fixed, the master equation describes a simple birth–death process (for each value of S) and can be formulated as a difference equation. Consider the case that the product of the gene is a repressor protein that binds to the operator site to change its own activity. Then binding of protein occurs with a rate dependent on n, h(n), whereas unbinding has a rate f. These latter processes transfer probability between the two components of the state vector. The master equation can be written as

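$$
\begin{aligned}
\frac{\partial P_1(n,t)}{\partial t} &= g_1\,[P_1(n-1,t) - P_1(n,t)] + k\,[(n+1)P_1(n+1,t) - nP_1(n,t)] - h(n)P_1(n,t) + fP_0(n,t),\\
\frac{\partial P_0(n,t)}{\partial t} &= g_0\,[P_0(n-1,t) - P_0(n,t)] + k\,[(n+1)P_0(n+1,t) - nP_0(n,t)] + h(n)P_1(n,t) - fP_0(n,t).
\end{aligned}
\tag{1}
$$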
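A master equation of this kind can be explored numerically by truncating the protein number at some maximum. The following is a minimal sketch, assuming a linear binding rate h(n) and illustrative rate values that are not taken from the paper; the function names `switch_generator` and `stationary` are hypothetical. It builds the rate matrix for the joint state (S, n), so that probability conservation shows up as columns summing to zero, and then solves for the stationary distribution.

```python
import numpy as np

def switch_generator(g1, g0, k, f, h, n_max):
    """Rate matrix W for the joint state (S, n), n = 0..n_max, with dP/dt = W @ P.

    Index layout: the S=1 block comes first (indices 0..n_max), then the S=0 block.
    h is a function n -> binding rate. Synthesis is switched off at n_max
    (a reflecting truncation) so that total probability stays conserved.
    """
    m = n_max + 1
    W = np.zeros((2 * m, 2 * m))
    for g, offset in ((g1, 0), (g0, m)):
        for n in range(m):
            i = offset + n
            if n < n_max:              # synthesis n -> n+1 at rate g_S
                W[i + 1, i] += g
                W[i, i] -= g
            if n > 0:                  # degradation n -> n-1 at rate k*n
                W[i - 1, i] += k * n
                W[i, i] -= k * n
    for n in range(m):
        on, off = n, m + n             # S=1 (repressor unbound), S=0 (bound)
        W[off, on] += h(n)             # repressor binds: S=1 -> S=0
        W[on, on] -= h(n)
        W[on, off] += f                # repressor unbinds: S=0 -> S=1
        W[off, off] -= f
    return W

def stationary(W):
    """Solve W p = 0 with sum(p) = 1 by replacing one redundant balance equation."""
    A = W.copy()
    A[-1, :] = 1.0
    b = np.zeros(W.shape[0])
    b[-1] = 1.0
    return np.linalg.solve(A, b)

# Illustrative parameters (assumptions, not values from the paper).
W = switch_generator(g1=20.0, g0=2.0, k=1.0, f=0.5, h=lambda n: 0.01 * n, n_max=80)
p = stationary(W)
prob_active = p[:81].sum()             # total weight of the S=1 block
```

The truncation level `n_max` should be chosen well above g1/k so that the neglected tail carries negligible probability.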

The analogy to a quantum system is most apparent when we express the difference operations using operators for the creation and annihilation of protein molecules. Such a notation was introduced by Doi (11) and used extensively by Zel'dovich et al. (12, 13) to describe the small-number asymptotics of diffusion-limited reactions. We follow the notation and conventions outlined in ref. 14. These differ somewhat from those ordinarily used in quantum mechanics. For each protein concentration, a creation and an annihilation operator are introduced, such that a†|n 〉 = |n + 1〉 and a|n〉 = n| n − 1〉. These satisfy [a, a†] = 1. For a process involving only a single protein particle number, the state vector is ψ = ∑n P(n, t)|n 〉, where P(n, t) is the probability of having precisely n particles. The master equation (Eq. 1) is written ∂ψ/∂t = Ωψ by using a spinor Hamiltonian for the dynamics of the DNA coupled to the proteins. Ω is a non-Hermitian “Hamiltonian” operator. Ω for this simple gene switch is

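$$
\Omega = (\bar g + \delta g\,\sigma_z)(a^\dagger - 1) + k\,(a - a^\dagger a) - \mu_+(1 - \sigma_x) - \mu_-(\sigma_z + \mathrm{i}\sigma_y),
\tag{2}
$$

where $\sigma_x$, $\sigma_y$, $\sigma_z$ are the Pauli matrices acting on the two-component DNA state (S = 1, S = 0), $\mathrm{i}$ is the imaginary unit, and ḡ = (g1 + g0)/2, δg = (g1 − g0)/2, μ+ = (h(a†a) + f)/2 and μ− = (h(a†a) − f)/2. In this operator formalism, averages are obtained by taking the scalar product with the bra $\langle 0|e^{a}$.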

Ω is a spin-boson Hamiltonian, albeit an explicitly non-Hermitian one. Probability is conserved because of the unusual scalar product. We see that in this representation, the “spin” state of the DNA polarizes a “proteomic” atmosphere, just as in conventional electron transfer, charge motion of an acceptor–donor pair distorts the surrounding lattice by coherently creating phonons. This distortion of the proteomic atmosphere acts to stabilize the two distinct states of the switch, allowing the switch states to be much more stable and change much more slowly than the chemical off-rate alone might suggest. As in electron transfer, the change from one switch state to another can be viewed either in an adiabatic or nonadiabatic representation, depending on whether the DNA occupation variable can always follow the protein number variable. Generally, the adiabatic limit is thought to apply, but this may not be the universal rule. In the study of charge transfer, different approximations that turn out to be exact in one limit are still useful to describe the kinematics in the other limit.
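The operator conventions used here differ from the usual quantum-mechanical normalization, so it may help to check them on a truncated number basis. The sketch below is an illustration under assumptions: the cutoff `n_max`, the Poisson mean `X`, and the helper names `doi_operators` and `exp_nilpotent` are hypothetical. It verifies that [a, a†] = 1 away from the truncation edge, that the bra 〈0|exp(a) has all components equal to one (so that projecting onto it sums probabilities), and that 〈0|exp(a) a†a |ψ〉 returns the mean copy number.

```python
import math
import numpy as np

def doi_operators(n_max):
    """Truncated matrices for a and a† with a|n> = n|n-1> and a†|n> = |n+1>."""
    m = n_max + 1
    a = np.zeros((m, m))
    adag = np.zeros((m, m))
    for n in range(1, m):
        a[n - 1, n] = n          # a|n> = n |n-1>
        adag[n, n - 1] = 1.0     # a†|n-1> = |n>
    return a, adag

def exp_nilpotent(a):
    """exp(a) as a finite power series; exact because the truncated a is nilpotent."""
    out = np.eye(len(a))
    term = np.eye(len(a))
    for j in range(1, len(a)):
        term = term @ a / j
        out += term
    return out

n_max = 30
a, adag = doi_operators(n_max)
comm = a @ adag - adag @ a       # identity, except at the truncated corner
bra = exp_nilpotent(a)[0]        # components <0|exp(a)|n>

X = 3.0                          # illustrative Poisson mean (assumption)
poisson = np.array([math.exp(-X) * X ** n / math.factorial(n)
                    for n in range(n_max + 1)])
norm = bra @ poisson                   # total probability
mean = bra @ (adag @ a) @ poisson      # average copy number <n>
```

The only deviation from [a, a†] = 1 sits in the last basis state, which is an artifact of the finite cutoff rather than of the formalism.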

The gene network is made up of elements containing binding sites that control protein production. The state space of the entire network is the direct product of the state spaces for each element, and the non-Hermitian Hamiltonian operator Ω is the sum of terms Ωi describing each such element Ω = ∑i Ωi. Explicitly, we have

|

3 |

|

with μ = (hi(a

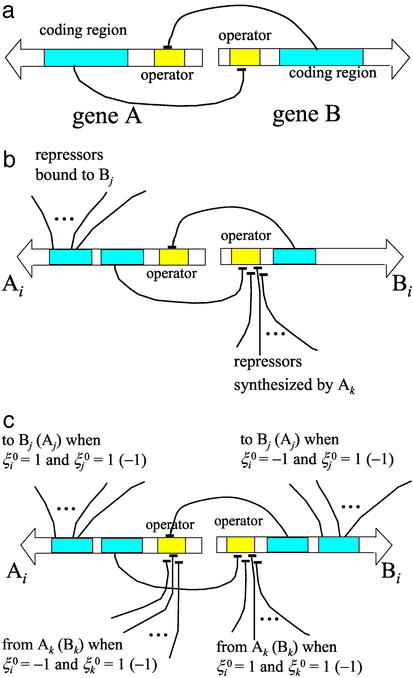

= (hi(a aj) ± fi)/2. δgi in Eq. 3 is positive when the transcription factor that binds to the ith gene is a repressor and negative when it is an activator. In a network of many gene elements, not only does each element interact with its directly generated proteomic atmosphere as in the polaron, but also interactions between gene elements occur through the exchange of proteins, the quanta of the proteomic fields. It is useful to visualize this in the representation where the DNA state changes slowly. In this case, we generate indirect spin–spin interactions in the non-Hermitian Hamiltonian, just as in the theory of condensed phase magnets. These can be considered ferromagnetic or antiferromagnetic, depending on whether the exchanged protein is a repressor or an activator. A nicely symmetric two-element switch model is illustrated in Fig. 1a. Three or more interacting components give rise to the possibility of frustrated or unfrustrated interactions in the sense of whether the corresponding spin–spin interactions lead to coherent or incoherent activation patterns. This can be decided easily by taking the product of the induced spin–spin interactions around a closed loop, a positive product being unfrustrated, a negative product tending to give multiple states or cycles.

aj) ± fi)/2. δgi in Eq. 3 is positive when the transcription factor that binds to the ith gene is a repressor and negative when it is an activator. In a network of many gene elements, not only does each element interact with its directly generated proteomic atmosphere as in the polaron, but also interactions between gene elements occur through the exchange of proteins, the quanta of the proteomic fields. It is useful to visualize this in the representation where the DNA state changes slowly. In this case, we generate indirect spin–spin interactions in the non-Hermitian Hamiltonian, just as in the theory of condensed phase magnets. These can be considered ferromagnetic or antiferromagnetic, depending on whether the exchanged protein is a repressor or an activator. A nicely symmetric two-element switch model is illustrated in Fig. 1a. Three or more interacting components give rise to the possibility of frustrated or unfrustrated interactions in the sense of whether the corresponding spin–spin interactions lead to coherent or incoherent activation patterns. This can be decided easily by taking the product of the induced spin–spin interactions around a closed loop, a positive product being unfrustrated, a negative product tending to give multiple states or cycles.
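The loop criterion just described is easy to state in code. This is a minimal sketch, assuming each induced spin–spin interaction has already been reduced to a sign (+1 for ferromagnetic, −1 for antiferromagnetic); the function name `loop_is_frustrated` is hypothetical.

```python
def loop_is_frustrated(signs):
    """Return True when the product of coupling signs around a closed loop is negative.

    signs: iterable of +1 / -1 values, one per induced spin-spin interaction
    encountered while walking once around the loop.
    """
    product = 1
    for s in signs:
        if s not in (1, -1):
            raise ValueError("each coupling sign must be +1 or -1")
        product *= s
    return product < 0

all_ferro = loop_is_frustrated([+1, +1, +1])     # positive product
all_antiferro = loop_is_frustrated([-1, -1, -1]) # negative product
two_antiferro = loop_is_frustrated([-1, -1, +1]) # positive product
```

A triangle of three mutually antiferromagnetic couplings is the smallest frustrated loop, whereas an even number of antiferromagnetic links leaves the loop unfrustrated.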

Figure 1.

(a) The circuit of a switch composed of two symmetrical genes, each of which produces the repressor that binds to the other. (b) An example of the network element with “ferromagnetic” interactions. (c) An example of the network element with interactions of the Mattis type: interactions depend on the target pattern of each switch, ξ = 1 (Ai active) or −1 (Bi active).

Many gene switches involve multimers of individual proteins or several gene products. In this case, the on-rates simply depend on higher polynomials of the relevant protein number operators: when a monomer of the product of the jth gene binds to the ith gene site, $h_i(n_j) = h_{ij}\,a_j^\dagger a_j$, and when a dimer of the jth product binds to the ith site, $h_i(n_j) = h_{ij}\,(a_j^\dagger a_j)^2$.

Variational Approach in Spin-Boson Formalism

A variational method developed by Eyink (24) in a different context provides a particularly lucid set of approximations. The master equation is equivalent to the functional variation δΓ/δψL = 0 of an effective “action” Γ = ∫ dt 〈ψL|(∂t − Ω)|ψR〉 with ψ = |ψR〉. This functional variation can be reduced to a set of finite dimensional equations by representing ψL with parameters, $\alpha_L = (\alpha_L^1, \alpha_L^2, \ldots, \alpha_L^M)$, as ψL(αL) and ψR as ψR(αR). Here, ψL(αL = 0) is set to be consistent with the probabilistic interpretation 〈ψL(αL = 0)|ψR(αR)〉 = 1. In the spin-boson formalism, this constraint implies 〈ψL(αL = 0)| = 〈0|exp(∑i ai). The condition that this physically sensible ψL is an extremum of the action is

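$$
\left\langle \frac{\partial \psi_L}{\partial \alpha_L^{m}} \right| \left( \partial_t - \Omega \right) \left| \psi_R \right\rangle_{\alpha_L = 0} = 0 \quad \text{for each parameter } \alpha_L^{m}.
\tag{4}
$$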

To apply Eq. 4, explicit functional forms of ψL(αL) and ψR(αR) have to be given. In the simple birth–death problem, for example, the probability distribution P(n, t) to find n particles should be Poisson at large t, $P(n) = (X^n/n!)\exp(-X)$ with a mean X, so that the state vector ψ = ∑n P(n, t)|n〉 approaches a “coherent state,” ψ = exp(X(a† − 1))|0〉. To analyze the relaxation toward this stationary state, one may choose the functional form ψR = exp(X(t)(a† − 1))|0〉, with αR = X(t). The corresponding ψL is chosen to make the variation equations simple: a reasonable choice is ψL = 〈0|exp(a)exp(λa) with αL = λ. This “coherent-state Ansatz” for ψR and ψL can be taken further to describe more complex processes using, for example, “squeezed states.”
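As a worked check of the coherent-state Ansatz, the sketch below carries the variational condition through for the pure birth–death process. It assumes a constant synthesis rate g and degradation rate k, so that in the conventions above the generator would be Ω = g(a† − 1) + k(a − a†a); this form is inferred from those conventions rather than quoted from the text.

$$
\begin{aligned}
a\,|\psi_R\rangle &= X\,|\psi_R\rangle, \qquad \partial_t |\psi_R\rangle = \dot X\,(a^\dagger - 1)\,|\psi_R\rangle,\\
\Omega\,|\psi_R\rangle &= g\,(a^\dagger - 1)|\psi_R\rangle + k\,(X - X a^\dagger)|\psi_R\rangle = (g - kX)(a^\dagger - 1)\,|\psi_R\rangle,\\
(\partial_t - \Omega)\,|\psi_R\rangle &= (\dot X - g + kX)\,(a^\dagger - 1)\,|\psi_R\rangle,\\
\left.\frac{\partial \langle\psi_L|}{\partial\lambda}\right|_{\lambda = 0} &= \langle 0|e^{a}a, \qquad \langle 0|e^{a}\,a\,(a^\dagger - 1)\,|\psi_R\rangle = \langle 0|e^{a}|\psi_R\rangle = 1,\\
&\Longrightarrow\quad \dot X = g - kX, \qquad X(t) \to g/k \ \text{as } t \to \infty .
\end{aligned}
$$

The fourth line uses [a, a†] = 1 together with 〈0|exp(a) a† = 〈0|exp(a). For this linear problem the Ansatz is exact, since a Poisson distribution stays Poisson under birth–death dynamics; for the gene switch it is only an approximation.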

With the coherent-state Ansatz for the single gene problem, the state vector has two components corresponding to S = 1 and 0.

[Eq. 5]

where C1 and C0 are the probabilities of the two DNA-binding states S = 1 and 0, respectively. With this Ansatz, the coupled dynamics of the DNA-binding state and the protein distribution is described as the motion of wavepackets with amplitudes C1(t) and C0(t) and means at X1(t) and X0(t).
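Because a coherent state corresponds to a Poisson distribution, the two-wavepacket picture implies a protein number distribution that is a mixture of two Poissons weighted by C1 and C0. The following sketch illustrates this under assumptions: the numerical values of C1, X1, and X0 are arbitrary, C0 is taken as 1 − C1, and the function name `ansatz_distribution` is hypothetical.

```python
import math

def ansatz_distribution(c1, x1, x0, n_max):
    """P(n) = C1 * Poisson(n; X1) + C0 * Poisson(n; X0), with C0 = 1 - C1."""
    def poisson(n, x):
        return math.exp(-x) * x ** n / math.factorial(n)
    c0 = 1.0 - c1
    return [c1 * poisson(n, x1) + c0 * poisson(n, x0) for n in range(n_max + 1)]

# Illustrative values (assumptions, not taken from the paper).
p = ansatz_distribution(c1=0.6, x1=20.0, x0=2.0, n_max=100)
mean = sum(n * pn for n, pn in enumerate(p))
var = sum((n - mean) ** 2 * pn for n, pn in enumerate(p))
fano = var / mean    # equals 1 for a single Poisson, exceeds 1 for a mixture
```

A Fano factor above one is the signature that DNA-state switching adds noise on top of the Poissonian birth–death fluctuations within each state.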

A straightforward choice of the trial state vector for a gene network is a Hartree-type product of single spin-boson vectors:

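$$
|\psi_R\rangle = \prod_i |\psi_R(i)\rangle, \qquad \langle\psi_L| = \prod_i \langle\psi_L(i)|,
\tag{6}
$$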

where |ψR(i)〉 and 〈ψL(i)| are vectors of the ith element. With the coherent-state Ansatz, |ψR(i)〉 and 〈ψL(i)| have the same form as in Eq. 5 with replacement of a† with $a_i^\dagger$ and C1 with C1(i), and so on. A time-dependent Hartree approximation of the network is obtained by putting Eqs. 3 and 6 into Eq. 4. In the Hartree approximation, the ith and jth elements interact through terms like $h_j(a_i^\dagger a_i)$ in 〈ψL(i)ψL(j)|Ω|ψR(i)ψR(j)〉. In this way, genes couple through the proteomic atmosphere, which is here represented by field operators.

By introducing an effective “potential energy,” the term $\langle \partial\psi_L/\partial\alpha_L^{m}|\Omega|\psi_R\rangle_{\alpha_L = 0}$ in the Hartree equation can be expressed as a sum of derivatives of a potential energy for each switch and a residual term. Regarding the residual term as a noise, it is natural to use energy landscape language to describe behaviors of the network. When interactions among gene elements are unfrustrated, one may expect that the landscape of the effective potential energy is dominated by a small number of distinct valleys. When the network involves a sufficient number of frustrated loops, on the other hand, the landscape should be rugged, and the time-dependent Hartree trajectory would be trapped into one of many local minima or would never settle into a stationary state because of the lack of detailed balance.

Phase Diagram of a Single Switch

The gene circuit shown in Fig. 1a is composed of two interacting genes. The product of gene A is a repressor that binds to gene B, and the product of gene B is a repressor that binds to gene A. This circuit was experimentally implemented in Escherichia coli plasmids and shown to work as a toggle switch (25). In one state, gene A is more active than gene B, and in the other state, gene B is more active. An inducer that changes the activity of a repressor can toggle between two states. Stochastic fluctuations in switching were numerically simulated in the adiabatic regime (26). Here we apply the Hartree approximation to this circuit. The derived phase diagram illustrates how the adiabaticity affects the switching behavior.

The two genes are assumed to be symmetrical with the same production rates, ḡ(A) = ḡ(B) = ḡ and δg(A) = δg(B) = δg, and the same unbinding rates, fA = fB = f. The degradation rates of the two proteins are also assumed to be the same, kA = kB = k. We consider the case that repressors bind to DNA in a dimer form with the binding rates $h_A = h\,(a_B^\dagger a_B)^2$ and $h_B = h\,(a_A^\dagger a_A)^2$. Using scaled parameters makes the description of the results more transparent: ω = f/k, Xeq = f/h, Xad = ḡ/k, and δX = δg/k. ω is an “adiabaticity” parameter representing the speed of the DNA-state alterations relative to the rate of the protein number fluctuations. Xeq measures the tendency of proteins to be unbound from DNA.
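The symmetric toggle switch defined by these scaled parameters can also be sampled directly with a stochastic simulation, which is a useful cross-check on any mean-field treatment. The sketch below uses the Gillespie algorithm rather than the Hartree approximation discussed in the text. It assumes that repressor binding does not consume protein copies and that the binding propensity is h·n², following the dimer rates above; the function name `simulate_toggle` and all numerical values are hypothetical.

```python
import random

def simulate_toggle(omega, x_eq, x_ad, dx, t_end, k=1.0, seed=0):
    """Gillespie simulation of the symmetric two-gene toggle switch.

    Returns the time average of S_A - S_B (a stochastic stand-in for the
    order parameter Delta C) and the final protein copy numbers.
    """
    rng = random.Random(seed)
    f = omega * k                       # unbinding rate, from omega = f/k
    h = f / x_eq                        # binding constant, from X_eq = f/h
    g = {1: k * (x_ad + dx),            # synthesis rate with the operator free
         0: k * (x_ad - dx)}            # synthesis rate with repressor bound
    other = {"A": "B", "B": "A"}
    s = {"A": 1, "B": 1}                # DNA states, 1 = active
    n = {"A": 0, "B": 0}                # protein copy numbers
    t, integral = 0.0, 0.0
    while True:
        events = []
        for x in ("A", "B"):
            events.append((g[s[x]], "make", x))
            events.append((k * n[x], "decay", x))
            if s[x] == 1:               # the other gene's product binds as a dimer
                events.append((h * n[other[x]] ** 2, "bind", x))
            else:
                events.append((f, "unbind", x))
        events = [e for e in events if e[0] > 0.0]
        total = sum(e[0] for e in events)
        dt = rng.expovariate(total)     # waiting time to the next reaction
        if t + dt >= t_end:
            integral += (t_end - t) * (s["A"] - s["B"])
            break
        integral += dt * (s["A"] - s["B"])
        t += dt
        r = rng.random() * total        # pick a reaction in proportion to its rate
        for rate, kind, x in events:
            r -= rate
            if r < 0.0:
                break
        if kind == "make":
            n[x] += 1
        elif kind == "decay":
            n[x] -= 1
        elif kind == "bind":
            s[x] = 0
        else:
            s[x] = 1
    return integral / t_end, n["A"], n["B"]

# Illustrative parameter values (assumptions, not those of the figures).
delta_c, n_a, n_b = simulate_toggle(omega=10.0, x_eq=100.0, x_ad=10.0,
                                    dx=10.0, t_end=50.0)
```

Over a single finite run the time-averaged difference can be nonzero even where the long-time average vanishes by symmetry, so many seeds or long runs would be needed before comparing with a phase diagram.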

Using Eqs. 3, 4, and 6, the Hartree equations are derived. In the nonadiabatic limit of small ω, where the DNA state changes slowly compared with the protein numbers, they have a stationary solution with X1(A) = X1(B) = Xad + δX and X0(A) = X0(B) = Xad − δX. The order parameter of the switching ability, ΔC = C1(A) − C1(B), is ΔC = 0 in this case. Effects of the small protein number become more evident when Xad is smaller. In the limit of small Xad, fluctuations are large, which also leads to ΔC = 0.

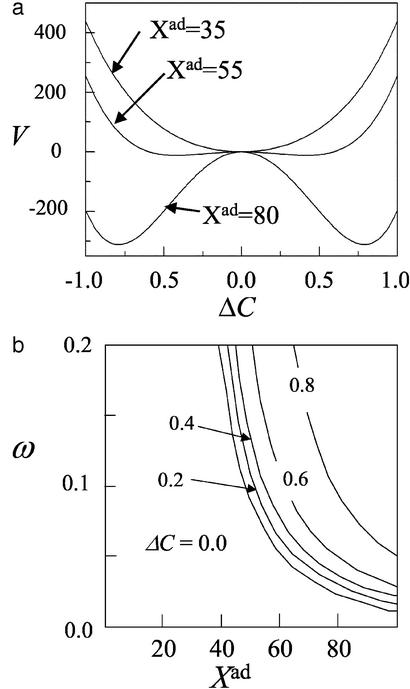

The Hartree equation for ΔC can be written as (Xeq/ω)(dΔC/dt) = −∂V/∂ΔC + (residual terms). The shape of the effective potential energy V is shown in Fig. 2a as a function of ΔC. The stationary solution of the Hartree equations corresponds to a minimum of this potential energy. The potential energy has a single minimum in the regime of small ω or Xad but has double minima when ω and Xad are large. By numerically solving the stationary Hartree equations, |ΔC| is plotted on the ω − Xad plane in Fig. 2b. When ω or Xad is small, the circuit fluctuates with equal probability between two states and does not act as a stable switch. At the phase boundary of Fig. 2b, the ΔC = 0 state becomes unstable and bifurcates into two equivalent states with positive and negative ΔC. In the nonzero ΔC phase, the circuit takes either of two states and works as a toggle switch between them.

Figure 2.

(a) The effective “potential energy” V for dynamics of the gene circuit of Fig. 1a is shown as a function of the difference ΔC in activities of two identical genes. ω = 0.2. (b) The phase diagram of the circuit of Fig. 1a. A contour map of |ΔC| is plotted on the ω − Xad plane. When ω or Xad is small, the circuit shows no switching ability with ΔC = 0. For larger ω and Xad, the solution of ΔC = 0 becomes unstable and bifurcates into symmetry-breaking states of positive and negative ΔC. The circuit works as a toggle switch between those two states. δX = Xad and Xeq = 1,000 for both a and b.

Switch Interaction, Network Topology, and the Attractor Landscape

With the Hartree approximation, it is possible to analyze a large-scale network composed of many genes. Here, for simplicity, we consider networks whose elements are the toggle switches discussed in the last section. When there are N uncoupled switches, the network potentially has $2^N$ states. This is a huge number, even for a moderate N. We will see this huge number may be much reduced when the network of switches interacts in only a weakly frustrated manner.

The simplest design for the network is shown in Fig. 1b. The ith switch has two operons, Ai and Bi. Ai produces both the intra- and interswitch repressors, and Bi produces only the intraswitch repressor. Their production rates are controlled by the binding state of the operator site of each operon. The binding rates of repressors hAi and hBi at the operator sites Ai and Bi are

[Eq. 7]

respectively. In hBi, the binding rate of the interswitch protein is scaled as h′/N, because each interswitch repressor diffuses over N switches. The Hartree equations for this network have a stationary ferromagnetic solution with ΔC = C1(Ai) − C1(Bi) > 0 for all i when ω and Xad are large enough. The phase diagram on the ω − Xad plane shows that the region of the ferromagnetic phase with ΔC > 0 is wider than the switching region ΔC ≠ 0 of the single switch in Fig. 2b. Such ferromagnetic networks can also be designed by using activator proteins.

Denoting the switch state by ξi = sgn(C1(Ai) − C1(Bi)), every switch in the network of Fig. 1b is homogeneous, ξi = 1, in the ferromagnetic phase. Heterogeneous switching states with any designed pattern, ξ = ±1, are also possible when interactions are transformed from the all-ferromagnetic set of Fig. 1b into another set of unfrustrated interactions of Fig. 1c. This is analogous to the so-called Mattis ferromagnet (27). Here, repressors bind to Ai and Bi with the binding rates hAi and hBi,

[Eq. 8]

This target-dependent transformation of interactions from Eqs. 7 to 8 is analogous to a gauge transformation in the spin system. By making this transformation, an unfrustrated set of interactions will have a frozen state with a different arrangement of bound and unbound sites. The Hartree equations for this Mattis network have a stationary solution of ξi = ξ in the same parameter region where the ferromagnetic solution exists.
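The gauge argument can be illustrated with the textbook Mattis model of spin systems. The sketch below is not the paper's binding-rate construction: it assumes the standard spin-model couplings J_ij = ξ_i ξ_j for a hypothetical target pattern `xi`, and checks that every triangle is unfrustrated and that the gauge transformation ξ_i J_ij ξ_j maps all couplings back to the ferromagnetic value +1. An all-antiferromagnetic network is included for contrast.

```python
import itertools

def mattis_couplings(xi):
    """Textbook Mattis couplings J_ij = xi_i * xi_j for a +1/-1 target pattern."""
    n = len(xi)
    return [[xi[i] * xi[j] for j in range(n)] for i in range(n)]

def count_frustrated_triangles(J):
    """Number of triangles (i, j, k) whose coupling product is negative."""
    n = len(J)
    return sum(1 for i, j, k in itertools.combinations(range(n), 3)
               if J[i][j] * J[j][k] * J[k][i] < 0)

def gauge_transform(J, xi):
    """Apply J_ij -> xi_i * J_ij * xi_j."""
    n = len(J)
    return [[xi[i] * J[i][j] * xi[j] for j in range(n)] for i in range(n)]

xi = [1, -1, -1, 1, 1, -1, 1, -1]            # hypothetical target pattern
J_mattis = mattis_couplings(xi)
J_gauged = gauge_transform(J_mattis, xi)      # every entry becomes +1
J_antiferro = [[-1] * len(xi) for _ in xi]    # every coupling antiferromagnetic

n_mattis = count_frustrated_triangles(J_mattis)
n_antiferro = count_frustrated_triangles(J_antiferro)
```

For the Mattis couplings each triangle product is a product of squares and therefore positive, whereas in the all-antiferromagnetic case every one of the 56 triangles among 8 elements is frustrated.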

When each operon produces multiple kinds of interswitch repressors or activators, then the proteomic atmosphere is a superposition of many kinds of these transcription factors. By having interactions that are sums of different Mattis patterns, such gene networks can exhibit multiple expression patterns analogous to the memories of a Hopfield neural network (28). Superposition of multiple interactions, however, yields both unfrustrated and frustrated interactions. In such networks, both solutions that retrieve a given binding pattern, and solutions that are irrelevant to that binding pattern may coexist.

To describe the phase diagram, we consider a network composed of repressors only. The network may be designed to have superimposed interactions of p binding patterns $\xi_i^{l}$ with l = 1, …, p. We assume binding of the intraswitch and interswitch repressors at the operator site has the same effect on the protein production rate of each operon. The degradation rate k and the unbinding rate f are assumed to be the same for all proteins. Then, the relevant scaled parameters for the phase diagram are ω, Xeq, Xad, and δX of the last section and Λeq = h′/h. The equation for Si = C1(Ai) − C1(Bi) is approximately derived from the Hartree equations as

[Eq. 9]

where $J_{ij} = (1/N)\sum_{l=1}^{p} \xi_i^{l}\xi_j^{l}$, $K = \Lambda_{eq}\bar C\,\delta X$, $u = \delta X^2\{1 - (\bar C/(\omega + \bar C))^2\}$, $r_0 = 4u\bar C^2 - (X_- + X_-^2 + X_{eq} + p\Lambda_{eq}(X_-/2 + \delta X\,\bar C))$, and $X_- = X_{ad} - \delta X$. We see that Eq. 9 represents gradient descent in an energy landscape. $\bar C = (C_1(A_i) + C_1(B_i))/2$ is self-consistently derived from the Hartree equations.

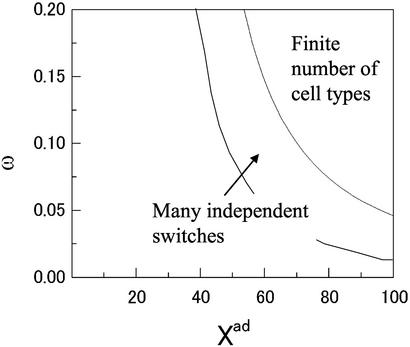

For small ω, Eq. 9 has only a solution with Si = 0, in which elements fluctuate independently of each other. For larger ω, an eigenvector of the Jij matrix appears that can satisfy the linearized stationary equation for Si. This yields the static pattern of the switch states. Such a pattern is unrelated to the stored binding patterns $\xi_i^l$ and should be regarded as a spin–glass solution. We expect a large number of such solutions. For general multiplicity of interactions, these are exponentially many as in the Potts glass (29). Because of the lack of detailed balance, however, the residual noise that is neglected in Eq. 9 might destabilize this solution and prevent the time-dependent Hartree trajectory from being trapped in the spin–glass pattern. The ability of the network to produce a designed pattern can be examined by introducing order parameters $m_l = (1/N)\sum_i \xi_i^l S_i$. Generally, either the $m_l$ are all small or one of the $m_l$ is O(1) and the others are $O(1/\sqrt{N})$. The parameter region that allows such a dominant solution is obtained by approximately solving the self-consistent equations of order parameters (30). These results are summarized in the phase diagram of Fig. 3.
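The role of the order parameters can be illustrated with a short numerical sketch. It computes the overlap of a switch configuration S with each stored pattern, assuming for illustration that the patterns are random ±1 vectors and that S coincides exactly with the first pattern. The helper name `overlaps` and the seed are hypothetical; N = 200 and p = 15 follow the Fig. 3 caption. This is not a solution of Eq. 9, only a check of the expected scaling of the overlaps.

```python
import random


def overlaps(patterns, state):
    """Return the list m[l] = (1/N) * sum_i xi[l][i] * state[i]."""
    n = len(state)
    return [sum(x * s for x, s in zip(xi, state)) / n for xi in patterns]


rng = random.Random(1)  # fixed seed so the sketch is reproducible
N, P = 200, 15          # sizes quoted in the Fig. 3 caption

# Assumption: random +/-1 entries stand in for the designed binding patterns.
patterns = [[rng.choice((-1, 1)) for _ in range(N)] for _ in range(P)]

# Take the switch configuration to be exactly the first stored pattern.
m = overlaps(patterns, patterns[0])
largest_other = max(abs(value) for value in m[1:])

# m[0] is the dominant O(1) overlap; the rest are of order 1/sqrt(N).
print(m[0], largest_other, N ** -0.5)
```

In this idealized case the first overlap equals 1, while the remaining overlaps are random sums of N terms divided by N and so are typically comparable to 1/√N ≈ 0.07 for N = 200.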

Figure 3.

The phase diagram of a Hopfield-type network composed of N = 200 elementary gene switches. The network stores p = 15 binding patterns of repressors. For small ω or Xad, each element shows no switching ability. For intermediate ω and Xad, all elements work as switches but fluctuate independently of each other. For larger ω and Xad, the network can produce any one of the p designed binding patterns, i.e., the number of cell types is p. In this phase with a finite number of cell types, spin–glass solutions with random switch states coexist with the designed patterns. Λeq = 0.5, δX = Xad, and Xeq = 1,000.

Discussion

The quantum many-body analogy, when combined with a variational scheme, allows us to understand gene networks in landscape terms. A single switch has two attractors, where the wavepacket Hartree solutions correspond to an active or inactive gene. As in any mean-field theory, these attractors are only approximate; improbable fluctuations can take the system from one basin to the other (31, 32). These transitions occur on a much longer time scale than the time needed to settle into one steady state. This activated fluctuation process is analogous to electron transfer kinetics and can be treated by instantons by using a path integral version of the present variational approximation.

Although the N-switch problem might have been expected to have $2^N$ attractors, the present variational formulation suggests this number will be strongly reduced if the magnetic spin problem corresponding to the network is only weakly frustrated. In this case, only a small number of patterns will stably emerge on the time scale of the rapid fluctuations of a single gene switch. Again, transitions between the basins of attraction found by the variational treatment can occur, but on much longer time scales. It will be very interesting to see whether real gene networks have the weak frustration described here or are more nearly random (21).

Several issues in gene expression require going beyond consideration of steady attractors alone. One such issue is the escape from stable attractors already mentioned. In addition, we must account for the periodic attractors that are involved in the cell cycle. Many experiments probe the response to externally provided signals that are not constant in time. Other experiments may probe endogenous fluctuations about individual attractors. Finally, the most central issue is not just that of steady states but the possibility of developmental programs in which epigenetic states must follow each other in specific sequences. In all these dynamical situations, it should be possible to use a time-dependent variational formalism analogous to the one used here, at least to test the robustness of these temporal patterns to stochastic fluctuations.

In summary, we believe the quantum many-body analogy will complement detailed stochastic modeling by providing a set of powerful mathematical tools and concepts to visualize gene expression.

Acknowledgments

National Science Foundation Grants PHY0225630 and PHY0216576 to the Center for Theoretical Biological Physics are much appreciated. M.S. was supported by the ACT-JST Program of Japan Science and Technology Corporation and by grants from the Japan Society for the Promotion of Science.

References

- 1. Pines D. The Many Body Problem. London: Benjamin; 1961.

- 2. Davidson E H, Rast J P, Oliveri P, Ransick A, Calestani C, Yuh C H, Minokawa T, Amore G, Hinman V, Arenas-Mena C, et al. Science. 2002;295:1669–1678. doi: 10.1126/science.1069883.

- 3. Delbrück M. J Chem Phys. 1940;8:120–124.

- 4. Elowitz M B, Levine A J, Siggia E D, Swain P S. Science. 2002;297:1183–1186. doi: 10.1126/science.1070919.

- 5. McAdams H H, Arkin A. Proc Natl Acad Sci USA. 1997;94:814–819. doi: 10.1073/pnas.94.3.814.

- 6. Arkin A, Ross J, McAdams H H. Genetics. 1998;149:1633–1648. doi: 10.1093/genetics/149.4.1633.

- 7. Cook D L, Gerber A N, Tapscott S J. Proc Natl Acad Sci USA. 1998;95:15641–15646. doi: 10.1073/pnas.95.26.15641.

- 8. Paulsson J, Berg O G, Ehrenberg M. Proc Natl Acad Sci USA. 2000;97:7148–7153. doi: 10.1073/pnas.110057697.

- 9. Thattai M, van Oudenaarden A. Proc Natl Acad Sci USA. 2001;98:8614–8619. doi: 10.1073/pnas.151588598.

- 10. Swain P S, Elowitz M B, Siggia E D. Proc Natl Acad Sci USA. 2002;99:12795–12800. doi: 10.1073/pnas.162041399.

- 11. Doi M. J Phys A. 1976;9:1465–1477.

- 12. Zel'dovich Ya B, Ovchinnikov A A. Sov Phys JETP. 1978;47:829–834.

- 13. Mikhailov A S. Phys Lett A. 1981;85:214–216.

- 14. Mattis D C, Glasser M L. Rev Mod Phys. 1998;70:979–1001.

- 15. Fröhlich H. Adv Phys. 1954;3:325–361.

- 16. Chandler D, Wolynes P G. J Chem Phys. 1981;74:4078–4095.

- 17. Feynman R P. Phys Rev. 1955;97:660–665.

- 18. Abrikosov A A, Gor'kov L P, Dzyaloshinski I E. Methods of Quantum Field Theory in Statistical Physics. Englewood Cliffs, NJ: Prentice-Hall; 1963.

- 19. Lee T D, Low F, Pines D. Phys Rev. 1953;90:297–302.

- 20. Kauffman S A. J Theor Biol. 1969;22:437–467. doi: 10.1016/0022-5193(69)90015-0.

- 21. Kauffman S A. The Origins of Order. London: Oxford Univ. Press; 1993.

- 22. Aldana M. 2002. http://xxx.lanl.gov/cond-mat/0209571.

- 23. Wolynes P G. Proc Am Phil Soc. 2001;145:555–563.

- 24. Eyink G L. Phys Rev E. 1996;54:3419–3435. doi: 10.1103/physreve.54.3419.

- 25. Gardner T S, Cantor C R, Collins J J. Nature. 2000;403:339–342. doi: 10.1038/35002131.

- 26. Kepler T B, Elston T C. Biophys J. 2001;81:3116–3136. doi: 10.1016/S0006-3495(01)75949-8.

- 27. Mattis D C. Phys Lett A. 1976;56:421–422.

- 28. Hopfield J J. Proc Natl Acad Sci USA. 1982;79:2554–2558. doi: 10.1073/pnas.79.8.2554.

- 29. Kirkpatrick T R, Wolynes P G. Phys Rev B. 1987;36:8552–8564. doi: 10.1103/physrevb.36.8552.

- 30. Shiino M, Fukai T. Phys Rev E. 1993;48:867–897. doi: 10.1103/physreve.48.867.

- 31. Aurell E, Sneppen K. Phys Rev Lett. 2002;88:048101. doi: 10.1103/PhysRevLett.88.048101.

- 32. Metzler R, Wolynes P G. Chem Phys. 2002;284:469–479.