Abstract

Simulations of biological evolution, in which computers are used to evolve systems toward a goal, often require many generations to achieve even simple goals. It is therefore of interest to look for generic ways, compatible with natural conditions, in which evolution in simulations can be speeded. Here, we study the impact of temporally varying goals on the speed of evolution, defined as the number of generations needed for an initially random population to achieve a given goal. Using computer simulations, we find that evolution toward goals that change over time can, in certain cases, dramatically speed up evolution compared with evolution toward a fixed goal. The highest speedup is found under modularly varying goals, in which goals change over time such that each new goal shares some of the subproblems with the previous goal. The speedup increases with the complexity of the goal: the harder the problem, the larger the speedup. Modularly varying goals seem to push populations away from local fitness maxima, and guide them toward evolvable and modular solutions. This study suggests that varying environments might significantly contribute to the speed of natural evolution. In addition, it suggests a way to accelerate optimization algorithms and improve evolutionary approaches in engineering.

Keywords: biological physics, modularity, optimization, systems biology

A central question is how evolution can explain the speed at which the present complexity of life arose (1–17). Current computer simulations of evolution are well known to have difficulty in scaling to high complexity. Such studies use computers to evolve artificial systems, which serve as an analogy to biological systems, toward a given goal (6, 9, 18). The simulations mimic natural evolution by incorporating replication, variation (e.g., mutation and recombination), and selection. Typically, a logarithmic slowdown in evolution is observed: longer and longer periods are required for successive improvements in fitness (6, 9, 18) [similar slowdown is observed in adaptation experiments on bacteria in constant environments (19, 20)]. Simulations can take many thousands of generations to reach even relatively simple goals, such as Boolean functions of several variables (9, 18). Thus, to understand the speed of natural evolution, it is of interest to find generic ways, compatible with natural conditions, in which evolution in simulations can be speeded.

To address this, we consider here the fact that the environment of organisms in nature changes over time. Previous studies have indicated that temporally varying environments can affect several properties of evolved systems such as their structure (6), robustness (21), evolvability (22, 23), and genotype-phenotype mapping (10, 24). In particular, goals that change over time in a modular fashion (18), such that each new goal shares some of the subproblems with the previous goal, were found to spontaneously generate systems with modular structure (18).

Here, we study the effect of temporally varying environments on the speed of evolution. We tested the speed of in silico evolution when the goal changes from time to time, a situation which might be thought to make evolution more difficult. We considered a variety of scenarios of temporally varying environments. The speed of evolution is defined as the number of generations needed, for an initially random population, to achieve a given goal. We find that temporally varying goals can substantially speed evolution compared with evolution under a fixed goal (in which the same goal is applied continuously). Not all scenarios of varying goals show speedup. Large speedup is consistently observed under modularly varying goals (MVG), and, in some conditions, with randomly varying goals (RVG). A central aim was to find how the speedup scales with the difficulty of the goal. We find that the more complex the goal, the greater the speedup afforded by temporally varying goals. This suggests that varying environments may contribute to speeding up natural evolution.

Results

We compared evolution under a fixed goal to evolution under four different scenarios of temporally varying goals: MVG and three different scenarios of RVG.

In MVG, we considered goals that can be decomposed into several subgoals (18). The goal changes from time to time, such that each new goal shares some of the subgoals with the previous goal. For example, the two subgoals described by the functions f(x, y) and h(w, z) can be combined by a third function g to form a modular goal with four inputs: G = g(f(x, y), h(w, z)). Modular variations of such a goal were generated by changing one of the functions g, f, or h. For example, consider the subgoals made of the exclusive-OR (XOR) function: f = x XOR y and h = w XOR z. One goal can be formed by combining these, using an OR function, G1 = g(f, h) = f OR h = (x XOR y) OR (w XOR z). A modular variation of this goal is G2 = g′(f, h) = f AND h = (x XOR y) AND (w XOR z), generated by changing the function g from g = OR to g′ = AND. A different modular variation is G3 = g(f′, h) = (x EQ y) OR (w XOR z), generated by changing the function f from f = XOR to f′ = EQ (equals). In this way, a large number of modular goals can be generated.
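The construction above can be made concrete with a short sketch. The helper names (`make_goal`, `truth_table`, `n_differing_rows`) are illustrative and not taken from the paper; the goals G1, G2, and G3 follow the definitions in the text.

```python
from itertools import product

def xor(a, b):
    return a != b

def eq(a, b):
    return a == b

def logical_or(a, b):
    return a or b

def logical_and(a, b):
    return a and b

def make_goal(g, f, h):
    """Build G(x, y, w, z) = g(f(x, y), h(w, z)) from three two-input functions."""
    return lambda x, y, w, z: g(f(x, y), h(w, z))

def truth_table(goal, n_inputs=4):
    """Evaluate a goal on every Boolean input combination."""
    return [bool(goal(*bits)) for bits in product([False, True], repeat=n_inputs)]

def n_differing_rows(goal_a, goal_b):
    """Count the input combinations on which two goals disagree."""
    return sum(a != b for a, b in zip(truth_table(goal_a), truth_table(goal_b)))

G1 = make_goal(logical_or, xor, xor)    # (x XOR y) OR  (w XOR z)
G2 = make_goal(logical_and, xor, xor)   # (x XOR y) AND (w XOR z): g changed
G3 = make_goal(logical_or, eq, xor)     # (x EQ y)  OR  (w XOR z): f changed

print(n_differing_rows(G1, G2), n_differing_rows(G1, G3))
```

Each variant reuses two of the three component functions of G1, which is the sense in which the goals share subproblems.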

In addition to MVG, we also examined periodic changes between a given goal G1 and a randomly selected goal R (6), and then back to G1 and so on. We considered two different scenarios of such RVG: in the first scenario, the goals change periodically between G1 and the same random goal R. This is called RVGc, where c stands for constant random goal. In the second scenario, a new random goal is chosen every time the goal changes. This is called RVGv, where v stands for varying random goal.

Finally, we examined a scenario where the goal switches periodically from a given goal G1 to a situation with no fitness selection and only neutral evolution. This scenario is called VG0.
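The four scenarios differ only in which goal is in force during each epoch, which the following sketch tries to capture. The function `goal_schedule`, its arguments, and the rule that MVG jumps to a randomly chosen different variant are assumptions made for illustration; the actual MVG schedules are described in the SI Appendix. A goal of `None` stands for an epoch with no fitness selection.

```python
import random

SCENARIOS = ("FG", "MVG", "RVGc", "RVGv", "VG0")

def goal_schedule(scenario, g1, n_generations, epoch=20,
                  variants=(), random_goal=None, seed=0):
    """Return the goal in force at each generation (None = no selection)."""
    if scenario not in SCENARIOS:
        raise ValueError(f"unknown scenario: {scenario}")
    rng = random.Random(seed)
    # RVGc reuses one random goal R for the whole run.
    constant_r = random_goal(rng) if scenario == "RVGc" else None
    schedule, current = [], g1
    for generation in range(n_generations):
        if generation > 0 and generation % epoch == 0 and scenario != "FG":
            back_to_g1 = (generation // epoch) % 2 == 0
            if scenario == "MVG":
                # Assumption: switch to a different modular variant at random.
                current = rng.choice([v for v in variants if v != current])
            elif back_to_g1:
                current = g1
            elif scenario == "RVGc":
                current = constant_r
            elif scenario == "RVGv":
                current = random_goal(rng)  # fresh random goal at every switch
            else:
                current = None              # VG0: neutral evolution
        schedule.append(current)
    return schedule

def random_truth_table(rng, n_inputs=4):
    """A random Boolean function, represented by its truth table."""
    return tuple(rng.randint(0, 1) for _ in range(2 ** n_inputs))

# Goals are plain labels here; any hashable goal representation would do.
print(goal_schedule("MVG", "G1", 60, variants=("G1", "G2"))[::20])
print(goal_schedule("VG0", "G1", 60)[::20])
```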

To compare the speed of evolution under a fixed goal with evolution under varying goals, we used standard genetic algorithms (25–27) to evolve networks that are able to make computations. The simulations start with a population of random networks. Each network in the population is represented by a genome that represents the nodes and connections in the network. Evolution proceeds toward a defined goal: to compute a specific output based on inputs. Each network is evaluated to compute its fitness, defined as the fraction of all possible input values for which the network gives the desired output. Networks with higher fitness are given a higher probability to replicate. Standard genetic operations [mutations and crossovers (recombination)] are applied to alter the genomes. As generations proceed, the fitness of the networks in the population increases, until a perfect solution to the goal is found [more details in Methods and supporting information (SI) Appendix]. For generality, we used four different types of well studied network models, including Boolean logic circuits, integrate-and-fire neural networks, and networks of continuous functions (Table 1). In addition to the computational models, we also considered a well studied structural model of RNA (28–32). In this model, we used genetic algorithms to evolve RNA molecules toward a specific secondary structure.
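As a minimal sketch of the fitness definition for the Boolean network models, the function below scores a candidate by the fraction of input combinations on which it matches the goal. Both the network and the goal are represented as plain Python callables, which is a simplification for illustration; in the simulations the network is decoded from a genome.

```python
from itertools import product

def fitness(network, goal, n_inputs):
    """Fraction of all Boolean input combinations with the desired output."""
    inputs = list(product([0, 1], repeat=n_inputs))
    correct = sum(network(*bits) == goal(*bits) for bits in inputs)
    return correct / len(inputs)

def goal_g1(x, y, w, z):
    return (x ^ y) | (w ^ z)      # (x XOR y) OR (w XOR z)

def always_one(x, y, w, z):
    return 1                      # a trivial candidate network

print(fitness(always_one, goal_g1, 4))  # 0.75: G1 is true on 12 of 16 inputs
```

A trivial network already scores 0.75 on this goal, which illustrates why high fitness values can be far from a perfect solution.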

Table 1.

Evolution under varying environments: Speedup comparison summary

Fold-speedup for the hardest goals (Smax), mean ± SE, under each scenario:

| Model | Building blocks | MVG | RVGv | VG0 | RVGc |
|---|---|---|---|---|---|
| 1 Logic circuits | NAND gates | **95 ± 45** | **45 ± 20** | 2.5 ± 2 | <1 |
| 2 Feed-forward logic circuits | NAND, OR, AND | **265 ± 150** | **160 ± 80** | **190 ± 90** | 1.3 ± 0.3 |
| 3 Feed-forward neural networks | Integrate-and-fire neurons | **700 ± 450** | **10 ± 5** | 1.5 ± 1 | <1 |
| 4 Feed-forward circuits | Continuous functions | **60 ± 10** | 3 ± 1 | 3 ± 2 | <1 |
| 5 RNA secondary structure | Nucleotides A, U, G, and C | **25 ± 5** | <1 | <1 | <1 |

Speedup results of evolutionary simulations of four different network models and a structural model of RNA. Smax is defined as the speedup of the hardest goals (all goals with TFG > Gmax/2). Smax (mean ± SE) under the four varying-goal scenarios is shown for each of the models. Bold, mean (Smax) > 3.

We begin with a detailed description of one case, and then summarize the results for all models. We start with combinatorial logic circuits made of NOT AND (NAND) gates. Circuits of NAND gates are universal in the sense that they can compute any logical function. They serve as the basic building blocks of current digital electronics. We evolved circuits built of NAND gates toward a fixed goal G1 made of three XOR operations and three AND operations acting on six inputs, G1 = [(x XOR y) AND (w XOR z)] AND [(w XOR z) AND (p XOR q)] (see SI Appendix, section 1.1). Starting from random circuits, the median time to evolve circuits that perfectly achieve this goal was TFG = 8 × 10^4 ± 2 × 10^4 generations (the subscript FG corresponds to fixed goal).

Next, we applied MVG, in which goals were changed every E = 20 generations. In MVG, during the simulation, each new goal was similar to G1 except that one of the three AND operations was replaced with an OR or vice versa (see SI Appendix, section 1.1). Evolution under these changing goals rapidly yielded networks that adapt to perfect solutions for each new goal within a few generations. The median time to find perfect solutions for G1, from an initial random population, was TMVG = 8 × 10^3 ± 1.5 × 10^3 generations. This time was much shorter than in the case of a fixed goal, reflecting a speedup of evolution by a factor of ≈10, S = TFG/TMVG ≈ 10 (see Fig. 1a, arrow). Although the goals changed every 20 generations, a perfect solution for goal G1 was found faster than in the case where the goal G1 was applied continuously.
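The speedup is a ratio of median solution times, and its uncertainty can be estimated by resampling runs. The sketch below is one plausible way to do this; the resampling scheme is an assumption (the caption of Fig. 1 only states that the bootstrap method was used), and the run times are made-up numbers for illustration, not data from the simulations.

```python
import random
from statistics import median, stdev

def speedup(t_fixed, t_varying):
    """S = T_FG / T_MVG, using the median time over independent runs."""
    return median(t_fixed) / median(t_varying)

def bootstrap_se(t_fixed, t_varying, n_resamples=1000, seed=0):
    """Standard error of S, estimated by resampling runs with replacement."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(n_resamples):
        fixed_sample = rng.choices(t_fixed, k=len(t_fixed))
        varying_sample = rng.choices(t_varying, k=len(t_varying))
        estimates.append(speedup(fixed_sample, varying_sample))
    return stdev(estimates)

# Hypothetical times to a perfect solution, in generations.
t_fg = [60_000, 80_000, 100_000]
t_mvg = [7_000, 8_000, 9_500]

print(speedup(t_fg, t_mvg))       # 10.0
print(bootstrap_se(t_fg, t_mvg))  # spread of S across resamples
```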

Fig. 1.

Evolution speedup under varying goals. The five panels show the speedup of different model systems. Each graph describes the speedup of evolution under MVG compared with fixed goal, versus the median time to evolve under a fixed goal (TFG). Each point represents the speedup, S = TFG/TMVG, for a given goal. (a) Model 1: general logic circuits. (b) Model 2: feed-forward logic circuits. (c) Model 3: feed-forward neural networks. (d) Model 4: continuous function circuits. (e) Model 5: RNA secondary structure. Speedup scales approximately as a power law with exponents in the range α = 0.7 to α = 1.0. T0 is the minimal TFG value to yield S > 1 (based on regression). SEs were computed by using the bootstrap method.

We repeated this for different modular goals made of combinations of XOR, EQ, AND, and OR functions. The different goals each had a different median time to reach a solution under fixed goal evolution, ranging from TFG = 2 × 10^2 to TFG = 3 × 10^6 generations. Thus, the difficulty of the goals, defined as the time needed to solve them “from scratch,” spanned about four decades. We find that the more difficult the fixed goal, the larger the speedup afforded by MVG (Fig. 1a). A speedup of ≈100-fold was observed for the hardest goals. The speedup S appeared to increase as a power law with the goal complexity, S ∼ (TFG)^α with α = 0.7 ± 0.1.
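A power-law relation of this kind is commonly estimated by a straight-line fit in log-log coordinates. The sketch below assumes ordinary least squares on (log TFG, log S); the regression actually used for Fig. 1 is not specified here. The input points are synthetic, generated from S = (TFG/500)^0.7 purely to check that the fit recovers its inputs.

```python
import math

def fit_power_law(t_fixed, speedups):
    """Least-squares fit of log S = alpha * log T_FG + log a; returns (alpha, a)."""
    xs = [math.log(t) for t in t_fixed]
    ys = [math.log(s) for s in speedups]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    alpha = sxy / sxx
    prefactor = math.exp(mean_y - alpha * mean_x)
    return alpha, prefactor

def t_zero(alpha, prefactor):
    """Smallest T_FG for which the fitted line gives S > 1: a * T0**alpha = 1."""
    return prefactor ** (-1.0 / alpha)

# Synthetic points on an exact power law (not simulation data).
t_values = [1e3, 1e4, 1e5, 1e6]
s_values = [(t / 500.0) ** 0.7 for t in t_values]

alpha, a = fit_power_law(t_values, s_values)
print(round(alpha, 3), round(t_zero(alpha, a), 1))
```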

Next, we studied the other three scenarios of temporally varying goals on each one of the different goals. Again, the goals changed every 20 generations. The random goals were random Boolean functions. We found that under RVGv, there was also a significant speedup, and under VG0 and RVGc there was a slowdown for most goals (Table 1, SI Appendix, section 3).

The second model we studied was feed-forward combinatorial circuits made of three logic gate types: AND, OR, and NAND gates (Model 2). We evolved circuits toward the goals mentioned for model 1, and used similar MVG scenarios. We found that MVG speeded up evolution by a factor of up to 10^3. Again, the more difficult the goal, the larger the speedup. The speedup scaled as S ∼ (TFG)^α with α = 0.7 ± 0.1 (Fig. 1b). RVGv and VG0 also showed speedup, but smaller than under MVG; RVGc showed a slowdown for all goals (Table 1).

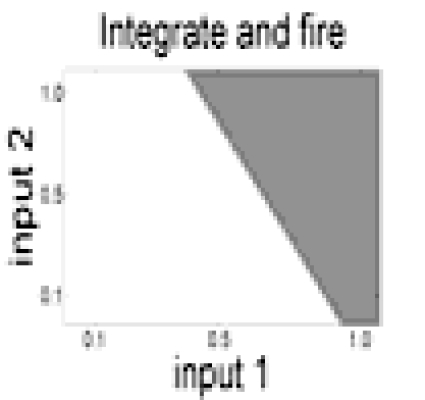

In the two network models described above, the nodes compute logic functions. We also evolved networks in which the nodes compute real (non-Boolean) continuous functions (Models 3 and 4), and found similar conclusions. Model 3 used neural networks of integrate-and-fire nodes. In these networks, edges between nodes have weights. Each node sums the inputs multiplied by their weights, and outputs a one (fires) if the sum exceeds a threshold. The goals were to identify input patterns (SI Appendix, section 1.3). We find that MVG showed the highest speedup, and scaled with goal difficulty with exponent α = 0.8 ± 0.1 (Fig. 1c and Table 1).
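The node rule of Model 3 can be sketched in a few lines. The function name and the example weights and thresholds are illustrative choices, not parameters from the simulations.

```python
def neuron_fires(inputs, weights, threshold):
    """Integrate-and-fire node: output 1 if the weighted input sum exceeds the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0

# With unit weights, the threshold alone selects the computed function.
for a in (0, 1):
    for b in (0, 1):
        and_like = neuron_fires((a, b), (1.0, 1.0), threshold=1.5)
        or_like = neuron_fires((a, b), (1.0, 1.0), threshold=0.5)
        print(a, b, and_like, or_like)
```

On binary inputs, a threshold of 1.5 gives AND-like behavior and a threshold of 0.5 gives OR-like behavior, so small changes in weights or thresholds can change the function a node computes.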

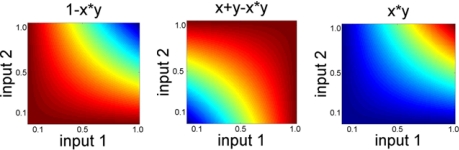

In the last network model (Model 4), each node computes a continuous function of two inputs x and y: xy, 1 − xy and x + y − xy (see SI Appendix, section 1.4). The goals were polynomials of six variables. The variables were continuous in the interval between 0 and 1. We found that MVG showed the highest speedup, whereas the other scenarios showed virtually no speedup (Table 1). The speedup afforded by MVG increased with goal complexity (exponent α = 0.7 ± 0.1, Fig. 1d).

In the RNA secondary structure model, genomes are RNA nucleotide sequences, and the goal was a given secondary structure. We used standard folding algorithms (33) to determine the secondary structure of each sequence. The fitness of each molecule was defined as 1 − d/B, where d is the structural distance to the goal and B is the sequence length (see Methods). The goals were RNA secondary structure such as a tRNA structure; MVG were generated by modifications of hairpins in the original structure (see SI Appendix, section 1.5). Under MVG, the fitness increased significantly faster than under a fixed goal (SI Appendix, section 1.5), whereas all other scenarios showed slowdown (Table 1). The speedup afforded by MVG increased with goal complexity, with an exponent of α = 1.0 ± 0.2 (Fig. 1e).
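The fitness 1 − d/B can be illustrated on dot-bracket structure strings. Note the substitution: the simulations used the tree edit distance from the Vienna RNA tools, whereas this sketch uses the simpler base-pair distance (the number of base pairs present in one structure but not the other) as a stand-in, and it compares structures directly rather than folding sequences. All function names are illustrative.

```python
def base_pairs(structure):
    """Set of (i, j) index pairs encoded by a dot-bracket string."""
    stack, pairs = [], set()
    for i, ch in enumerate(structure):
        if ch == "(":
            stack.append(i)
        elif ch == ")":
            pairs.add((stack.pop(), i))
    return pairs

def base_pair_distance(a, b):
    """Stand-in structural distance: size of the symmetric difference of pair sets."""
    return len(base_pairs(a) ^ base_pairs(b))

def rna_fitness(structure, goal):
    """Fitness 1 - d/B, with B the sequence length."""
    if len(structure) != len(goal):
        raise ValueError("structures must have equal length")
    return 1 - base_pair_distance(structure, goal) / len(goal)

goal = "((((....))))"        # a single hairpin, B = 12
candidate = "(((......)))"   # same hairpin with one base pair missing

print(base_pair_distance(candidate, goal), rna_fitness(candidate, goal))
```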

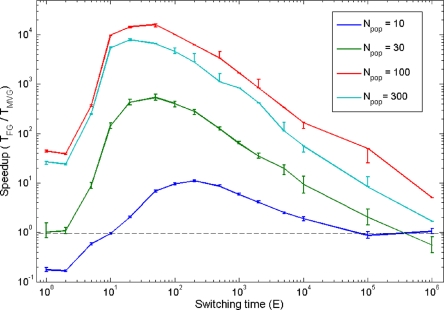

We next examined the effects of the simulation parameters on the speedup. We find that speedup under MVG occurs for a wide range of switching times (the number of generations between goal switches). For efficient speedup, the switching time of the goals should be larger than the minimal time it takes to rewire the networks to achieve each new goal and shorter than the time it takes to solve a fixed goal. In the present examples, the former is usually on the order of a few generations, and the latter is usually 10^3 generations or larger. We thus find that the range of switching times with significant speedup typically spans several orders of magnitude (Fig. 2). Furthermore, speedup occurred for a wide range of population sizes, mutation and recombination rates, and selection strategies (see SI Appendix, section 6). We find a similar speedup also by using a hill-climbing algorithm (34) (see SI Appendix, section 7) instead of genetic algorithms. The fact that speedup is found in two very different algorithms suggests that it is a feature of the varying fitness landscape rather than the precise algorithm used.

Fig. 2.

Effect of frequency of goal switches on speedup. Speedup (±SE) is shown under different frequencies of goal switches and with various population sizes (Npop). Results are for goal G1 = (x XOR y) OR (w XOR z), using a small version of model 2, with 4-input and 1-output goals (see Methods). In the MVG scenario, the goal switched between G1 and G2 = (x XOR y) AND (w XOR z) every E generations. The dashed line represents a speedup of S = 1.

Speedup is observed given that the problem is difficult enough, and that solutions for the different modular goals differ by only a few genetic changes in the context of the genotype used (otherwise the population cannot switch between solutions in a reasonable time). We found empirically that almost all modular goals of the form discussed above that require more than a few thousand generations to solve showed speedup under MVG.

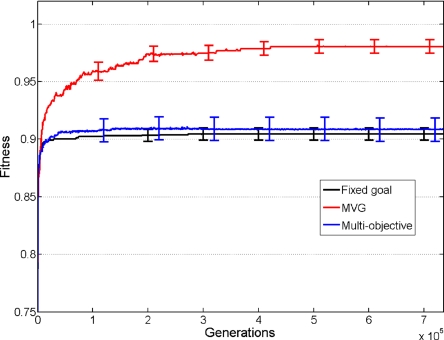

Next, we asked whether the observed speedup under MVG is due to goal modularity, goal variation, or both. For this purpose, we considered multiobjective optimization (35) in which the different variants of the modularly varying goals were represented as simultaneous (nonvarying) objectives. We compared the speed of evolution under MVG with two multiobjective schemes: weighted multiobjective optimization and Pareto multiobjective optimization (26) (SI Appendix, section 5). We find that the multiobjective scenarios showed virtually no speedup, whereas the equivalent MVG scenario showed speedup (Fig. 3 and SI Appendix, section 5). Thus, multiple modular goals by themselves, with no temporal variation, are not sufficient for speedup to occur.

Fig. 3.

Fitness as a function of time in MVG, fixed goal and multiobjective scenarios. Maximal fitness in the population (mean ± SE) as a function of generations for a 4-input version of model 1 for the goal G1 = (x XOR y) AND (w XOR z). For the MVG and multiobjective case, the second goal was G2 = (x XOR y) OR (w XOR z). For MVG, data are for epochs where the goal was G1. For multiobjective evolution, in which two outputs from the network were evaluated for G1 and G2, fitness of the G1 output is shown. Data are from 40 simulations in each case.

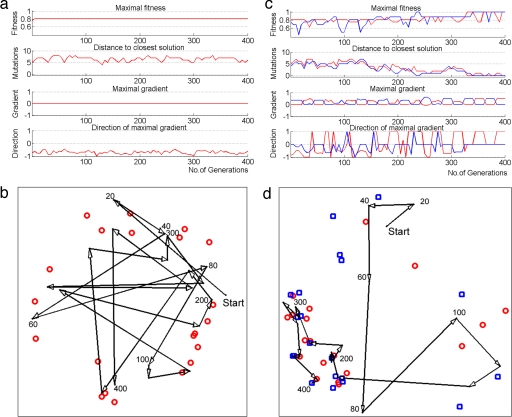

Finally, we aimed to discern the reason for the observed speedup in evolution. To address this, we fully mapped the fitness landscape of a version of model 2 with 4 inputs and 1 output by evaluating all 3 × 10^11 possible genomes (see Methods). Such full enumeration allowed us to track the evolving networks and their distance to the closest solution. We find that during evolution toward a fixed goal, the population became stuck at fitness plateaus (11) for long times. These plateaus are typically many mutations away from the closest solution for the goal. The maximal fitness gradient, defined as the maximal change in fitness upon a mutation, is zero in the plateau. Moreover, the gradient typically points away from nearby solutions (Fig. 4 a and b). This explains why it takes a long time to find solutions under a fixed goal. RVGv seems to help by pushing the population in a random direction, thereby rescuing it from fitness plateaus or local maxima. We find that MVG has an additional beneficial effect: each time that a goal changes, a positive local gradient for the new goal is generated (Fig. 4c). Strikingly, we find that this gradient often points in the direction of a solution for the new goal. Thus, the population rapidly reaches an area in the fitness space where solutions for the two goals exist in close proximity (Fig. 4 c and d). In this area, when the goal switches, the networks rapidly find a solution for the new goal just a few mutations away (Fig. 4d).
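The maximal fitness gradient can be computed by scanning all single mutations of a genome. The sketch below assumes that a mutation is a single bit flip on a binary genome; the toy landscape is a hypothetical example with one peak on a flat plateau and is not one of the circuit landscapes studied here.

```python
def max_fitness_gradient(genome, fitness):
    """Largest change in fitness over all single-bit mutations of `genome`."""
    base = fitness(genome)
    deltas = []
    for i in range(len(genome)):
        mutant = genome[:i] + (1 - genome[i],) + genome[i + 1:]
        deltas.append(fitness(mutant) - base)
    return max(deltas)

def plateau_fitness(genome):
    """Toy landscape: a single peak at all-ones surrounded by a flat plateau."""
    return 1.0 if all(genome) else 0.5

print(max_fitness_gradient((0, 0, 0, 0), plateau_fitness))  # 0.0 on the plateau
print(max_fitness_gradient((1, 1, 1, 0), plateau_fitness))  # 0.5 next to the peak
```

On the plateau no single mutation improves fitness, so selection gives no directional information, which is the situation described for fixed-goal evolution.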

Fig. 4.

Trajectories and fitness landscapes in fixed goal and MVG evolution. (a) A typical evolution simulation in a small version of model 2, toward the fixed goal G1 = (x XOR y) OR (w XOR z). Shown are properties of the best network in the population at each generation during simulation: fitness; distance to closest solution (the required number of mutations to reach the closest solution); maximal fitness gradient; and average direction of the maximal gradient. −1, away from the closest solution; +1, toward the closest solution. (b) The trajectory of the fittest network, shown every 20 generations (arrowheads). The trajectory was mapped to two dimensions by means of multidimensional scaling (44), a technique that arranges points in a low-dimensional space while best preserving their original distances in a high-dimensional space (the 38-dimensional genome space where each axis corresponds to one bit in the genome). Red circles describe the closest solutions. Numbers represent generations. (c) Same as in a but for evolution under MVG, switching between G1 and G2 = (x XOR y) AND (w XOR z) every 20 generations. Properties of best circuit under goal G1 and G2 are in red and blue, respectively. (d) Trajectory of evolution under MVG. Red circles describe the closest G1 solutions, and blue squares describe the closest G2 solutions.

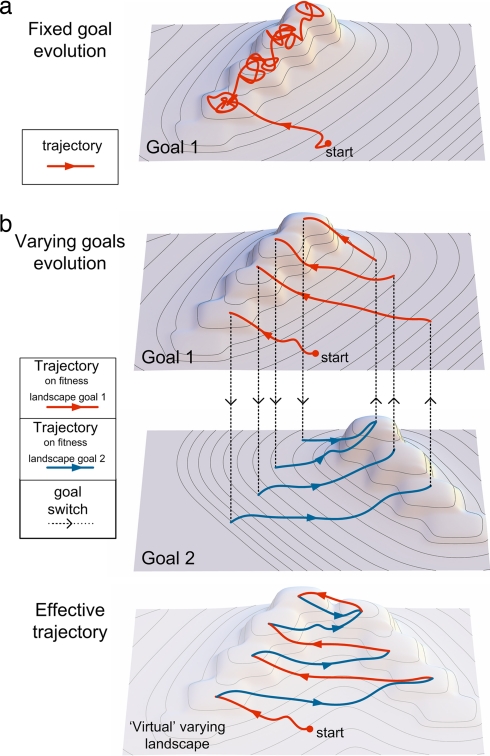

These findings lead to a schematic picture of how speedup might be generated (Fig. 5). Under a fixed goal, the population spends most of the time diffusing on plateaus or stuck at local maxima (Fig. 5a). Under MVG, local maxima or plateaus in one goal correspond to areas with a positive fitness gradient for the second goal (Fig. 5b). Over many goal switches, a “ramp” is formed in the combined landscape made of the two fitness landscapes, that pushes the population toward an area where peaks for the two goals exist in close proximity (Fig. 5b). It seems that this effect of MVG is different from a purely randomizing force [such as temperature in the language of Monte Carlo and simulated-annealing optimization algorithms (36)]. The effect may be related to the modular structure of the solutions found with MVG, with modules that correspond to each of the shared subgoals (18).

Fig. 5.

A schematic view of fitness landscapes and evolution under fixed goal and MVG. (a) A typical trajectory under fixed goal evolution. The population tends to spend long periods on local maxima or plateaus. (b) A typical trajectory under MVG. Dashed arrows represent goal switches. An effectively continuous positive gradient on the alternating fitness landscapes leads to an area where global maxima exist in close proximity for both goals.

Discussion

Our simulations demonstrate that varying goals can speed up evolution. MVG seemed to speed evolution in all models, and showed the highest speedup factor. We find that speedup increases strongly with the complexity of the goal. Not all types of temporally varying goals show speedup. RVGv sometimes shows speedup, but this depends on the model and the fitness landscape. In contrast, RVGc and VG0 showed no speedup in most cases. The results thus highlight the ability of MVG to speed up evolution, based on variations between goals that share subgoals rather than between goals that do not.

Natural evolution usually occurs in temporally and spatially changing environments. These changes are often modular in the sense that similar biological subgoals are encountered in each new condition. On the level of the organism, for example, the same subgoals, such as feeding, mating, and moving, must be fulfilled in each new environment but with different nuances and combinations. On the level of cells, the same subgoals such as adhesion and signaling must be fulfilled in each tissue type but with different input and output signals. On the level of proteins, the same subgoals, such as enzymatic activity, binding to other proteins, regulatory input domains, etc., are shared by many proteins but with different combinations in each case. On all of these levels, goals seem to have shared subgoals that vary from condition to condition (niches, tissues, etc.).

The present study demonstrates the impact that varying environments might have on the speed of evolution. In all cases studied, the more complex the problem at hand, the more dramatic the speedup afforded by temporal variations. Although the present study aimed at understanding the speed of biological evolution, it may also apply to evolutionary approaches in engineering and to optimization algorithms (26, 36–39).

Methods

Genetic Algorithms Description.

We used standard genetic algorithms (26, 27) to evolve four network models and a structural model of RNA. The settings of the simulations were as follows. A population of Npop individuals was initialized to random binary genomes of length B bits (random nucleotide sequences of length B bases in the case of RNA). In each generation, the L individuals with highest fitness (the elite) passed unchanged to the next generation [elite strategy, see SI Appendix, section 1]. The L least fit individuals were replaced by a new copy of the elite individuals. Pairs of genomes from the nonelite individuals were recombined (using crossover probability of Pc), and then each genome was randomly mutated (mutation probability Pm per genome). The present conclusions are generally valid also in the absence of recombination (Pc = 0). Each simulation was run until fitness = 1 was achieved for the goal (or for all goals in case of MVG). If the fitness was not achieved in Gmax generations, T was set at Gmax. We note that speedup occurred under a wide range of parameters (see SI Appendix, sections 1 and 6). The simulations did not include sexual selection (40), developmental programs (41), exploratory behavior (1, 4), evolutionary capacitance (42), or learning (43). For example, organisms seem to have mechanisms for facilitated phenotypic variation (1) that may further speed evolution. The impact of these effects on evolution under varying environments can in principle be studied by extending the present approach. For a detailed description of the algorithm, models, goals, and MVG schedules, see SI Appendix, section 1. Simulations were run on a 60-CPU computer grid.
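One generation of the scheme described above might look like the following sketch. Several details are assumptions made for illustration and may differ from the algorithm in the SI Appendix: nonelite genomes are paired at random, crossover is one-point, a mutation flips a single random bit, and the copies of the elite that replace the least fit individuals are treated as nonelite. The parameters `n_elite`, `p_cross`, and `p_mut` correspond to L, Pc, and Pm.

```python
import random

def next_generation(population, fitness, n_elite, p_cross, p_mut, rng):
    """One generation of an elitist genetic algorithm on binary genomes (lists of 0/1)."""
    ranked = sorted(population, key=fitness, reverse=True)
    elite = [list(g) for g in ranked[:n_elite]]
    # Drop the n_elite least fit individuals and replace them with elite copies.
    middle = ranked[n_elite:len(ranked) - n_elite]
    rest = [list(g) for g in middle] + [list(g) for g in elite]
    rng.shuffle(rest)
    # Recombine consecutive pairs with probability p_cross (one-point crossover).
    for i in range(0, len(rest) - 1, 2):
        if rng.random() < p_cross:
            cut = rng.randrange(1, len(rest[i]))
            a, b = rest[i], rest[i + 1]
            rest[i], rest[i + 1] = a[:cut] + b[cut:], b[:cut] + a[cut:]
    # Mutate each nonelite genome with probability p_mut (single bit flip).
    for genome in rest:
        if rng.random() < p_mut:
            j = rng.randrange(len(genome))
            genome[j] = 1 - genome[j]
    return elite + rest

def fraction_of_ones(genome):
    """Hypothetical toy fitness, used only to exercise the loop."""
    return sum(genome) / len(genome)

rng = random.Random(1)
population = [[rng.randint(0, 1) for _ in range(16)] for _ in range(20)]
for _ in range(30):
    population = next_generation(population, fraction_of_ones, 2, 0.5, 0.7, rng)
print(max(fraction_of_ones(g) for g in population))
```

Because the elite pass unchanged, the best fitness in the population never decreases from one generation to the next while the goal stays fixed.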

Combinatorial Logic Circuits Composed of NAND Gates (Model 1).

Circuits were composed of up to 26 2-input NAND gates. Feedbacks were allowed. Goals were 6-input, 1- or 2-output Boolean functions composed from XOR, EQ, AND, and OR operations. The goal was of the form G = F(M1, M2, M3), where M1, M2, and M3 were two-input XOR or EQ functions. F was composed of AND and OR functions. The varying goal changed in a probabilistic manner every 20 generations by applying changes to F.

Feed-Forward Combinatorial Logic Circuits Composed of Several Gate Types (Model 2).

Circuits were composed of four layers of 8,4,2,1 gates (for the 1-output goals) or three layers of 8,4,2 gates (for the 2-output goals); the outputs were defined to be the outputs of the gates at the last layer. Each logic gate could be AND, OR, or NAND. Only feed-forward connections were allowed. Goals and MVG scenarios were similar to model 1.

Model 2, Small Version.

Circuits were composed of three layers of 4,2,1 gates; the output was that of the single gate at the third layer. Goals were 4-input, 1-output Boolean functions.
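To make the small model concrete, the sketch below evaluates a three-layer 4,2,1 circuit. The representation (each gate as a tuple of gate type and two indices into the previous layer) is a hypothetical encoding chosen for readability, not the 38-bit genome used in the simulations. The two hand-built circuits solve G1 = (x XOR y) OR (w XOR z) and G2 = (x XOR y) AND (w XOR z).

```python
from itertools import product

GATES = {
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
    "NAND": lambda a, b: 1 - (a & b),
}

def evaluate(circuit, inputs):
    """Propagate signals through the layers; each gate reads two previous-layer signals."""
    signals = list(inputs)
    for layer in circuit:
        signals = [GATES[kind](signals[i], signals[j]) for kind, i, j in layer]
    return signals[0]

# Inputs are ordered (x, y, w, z). XOR is built as (a OR b) AND (a NAND b).
layer1 = [("OR", 0, 1), ("NAND", 0, 1), ("OR", 2, 3), ("NAND", 2, 3)]
layer2 = [("AND", 0, 1), ("AND", 2, 3)]          # x XOR y, w XOR z
circuit_g1 = [layer1, layer2, [("OR", 0, 1)]]    # solves G1
circuit_g2 = [layer1, layer2, [("AND", 0, 1)]]   # solves G2

for bits in product([0, 1], repeat=4):
    print(bits, evaluate(circuit_g1, bits), evaluate(circuit_g2, bits))
```

The two solutions differ only in the type of the output gate, an example of solutions for modular goals lying a small number of changes apart.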

Neural Network Model (Model 3).

Networks were composed of seven neurons arranged in three layers in a feed-forward manner. Each neuron had two inputs, summed its weighted inputs, and fired if the sum exceeded a threshold. Inputs to the network had three levels: low, medium, and high. The goal was to identify input patterns. MVG scenario: The goal was a combination R of two 2-input subgoals (S1, S2). R switched between AND and OR every 20 generations. In total we evaluated 15 goals composed of different combinations of eight different 2-input subgoals.

Continuous Function Circuits (Model 4).

Circuits were composed of four layers of 8,4,2,1 gates (for the 1-output goals) or three layers of 8,4,2 gates (for the 2-output goals). The outputs were those of the nodes at the last layer. Connections were allowed only to the next layer. Three types of 2-input continuous functions implementing: xy, 1 − xy, x + y − xy were used. Inputs had values between 0 and 1. A goal was defined by a multivariate polynomial (of six variables). MVG scenario: The goals were composed of three subgoals (T1, T2, and T3) combined by a function (U) on their output. Ti were bilinear functions of one of the forms: x + y − 2xy or 1 − x − y + 2xy. The varying goal switched in a probabilistic manner every 20 generations by applying changes on U.
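The continuous functions above are extensions of familiar Boolean functions, which can be checked by evaluating them at the corners of the unit square. The function names in this sketch are illustrative.

```python
from itertools import product

def gate_product(x, y):
    return x * y                  # equals AND on {0, 1}

def gate_one_minus_product(x, y):
    return 1 - x * y              # equals NAND on {0, 1}

def gate_prob_sum(x, y):
    return x + y - x * y          # equals OR on {0, 1}

def subgoal_xor_like(x, y):
    return x + y - 2 * x * y      # equals XOR on {0, 1}

def subgoal_eq_like(x, y):
    return 1 - x - y + 2 * x * y  # equals EQ on {0, 1}

def corner_values(fn):
    """Values of a two-input function at (0,0), (0,1), (1,0), (1,1)."""
    return [fn(x, y) for x, y in product([0, 1], repeat=2)]

for fn in (gate_product, gate_one_minus_product, gate_prob_sum,
           subgoal_xor_like, subgoal_eq_like):
    print(fn.__name__, corner_values(fn))
```

On Boolean inputs, the three gate types reduce to AND, NAND, and OR, and the two bilinear subgoal forms reduce to XOR and EQ, so the goals of Model 4 parallel those of the logic circuit models while taking values across the whole interval between 0 and 1.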

RNA Secondary Structure (Model 5).

We used standard tools for structure prediction (33) available at www.tbi.univie.ac.at/RNA/, and the “tree edit” structural distance (33). The goals were the secondary structure of the Saccharomyces cerevisiae phenylalanine tRNA and a synthetic structure composed of three hairpins. For MVG, three modular variations of each of the two goals were constructed by changing each of the three hairpins in the secondary structure into an open loop. Goals changed every 20 generations.

Acknowledgments

We thank A. E. Mayo, M. Parter, T. Kalisky, G. Shinar, and all the members of our laboratory for discussions; and R. Kishony, H. Lipson, M. Kirschner, N. Gov, T. Tlusty, R. Milo, N. Barkai, and S. Teichmann for comments. This work was supported by the Hanford Surplus Faculty Program, the National Institutes of Health, and the Kahn Family Foundation.

Abbreviations

- Gn: goal n
- MVG: modularly varying goals
- NAND: NOT AND
- RVG: randomly varying goals
- XOR: exclusive-OR

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/0611630104/DC1.

References

- 1. Kirschner M, Gerhart JC. The Plausibility of Life: Resolving Darwin's Dilemma. London: Yale Univ Press; 2005.

- 2. Eldredge N, Gould SJ. Science. 1997;276:338–341. doi: 10.1126/science.276.5311.337c.

- 3. Elena SF, Cooper VS, Lenski RE. Science. 1996;272:1802–1804. doi: 10.1126/science.272.5269.1802.

- 4. Gerhart J, Kirschner M. Cells, Embryos, and Evolution: Toward a Cellular and Developmental Understanding of Phenotypic Variation and Evolutionary Adaptability. Oxford: Blackwell; 1997.

- 5. Springer MS, Murphy WJ, Eizirik E, O'Brien SJ. Proc Natl Acad Sci USA. 2003;100:1056–1061. doi: 10.1073/pnas.0334222100.

- 6. Lipson H, Pollack JB, Suh NP. Evolution (Lawrence, Kans) 2002;56:1549–1556. doi: 10.1111/j.0014-3820.2002.tb01466.x.

- 7. Nilsson DE, Pelger S. Proc Biol Sci. 1994;256:53–58. doi: 10.1098/rspb.1994.0048.

- 8. Goldsmith TH. Q Rev Biol. 1990;65:281–322. doi: 10.1086/416840.

- 9. Lenski RE, Ofria C, Pennock RT, Adami C. Nature. 2003;423:139–144. doi: 10.1038/nature01568.

- 10. Wagner GP, Altenberg L. Evolution (Lawrence, Kans) 1996;50:967–976. doi: 10.1111/j.1558-5646.1996.tb02339.x.

- 11. Kauffman S, Levin S. J Theor Biol. 1987;128:11–45. doi: 10.1016/s0022-5193(87)80029-2.

- 12. Hegreness M, Shoresh N, Hartl D, Kishony R. Science. 2006;311:1615–1617. doi: 10.1126/science.1122469.

- 13. Fontana W, Schuster P. Science. 1998;280:1451–1455. doi: 10.1126/science.280.5368.1451.

- 14. Wagner A. FEBS Lett. 2005;579:1772–1778. doi: 10.1016/j.febslet.2005.01.063.

- 15. van Nimwegen E, Crutchfield JP, Huynen M. Proc Natl Acad Sci USA. 1999;96:9716–9720. doi: 10.1073/pnas.96.17.9716.

- 16. Peisajovich SG, Rockah L, Tawfik DS. Nat Genet. 2006;38:168–174. doi: 10.1038/ng1717.

- 17. Orr HA. Nat Rev Genet. 2005;6:119–127. doi: 10.1038/nrg1523.

- 18. Kashtan N, Alon U. Proc Natl Acad Sci USA. 2005;102:13773–13778. doi: 10.1073/pnas.0503610102.

- 19. Elena SF, Lenski RE. Nat Rev Genet. 2003;4:457–469. doi: 10.1038/nrg1088.

- 20. Dekel E, Alon U. Nature. 2005;436:588–592. doi: 10.1038/nature03842.

- 21. Thompson A, Layzell P. ICES 2000. 2000:218–228.

- 22. Earl DJ, Deem MW. Proc Natl Acad Sci USA. 2004;101:11531–11536. doi: 10.1073/pnas.0404656101.

- 23. Reisinger J, Miikkulainen R. Proceedings of the 8th Annual Conference on Genetic and Evolutionary Computation; New York: ACM; 2006. pp. 1297–1304.

- 24. Altenberg L. In: Evolution and Biocomputation: Computational Models of Evolution (Lecture Notes in Computer Science) Banzhaf W, Eeckman F, editors. Vol 899. New York: Springer; 1995. pp. 205–259.

- 25. Holland J. Adaptation in Natural and Artificial Systems. Ann Arbor, MI: Univ of Michigan Press; 1975.

- 26. Goldberg D. Genetic Algorithms in Search, Optimization, and Machine Learning. Reading, MA: Addison–Wesley; 1989.

- 27. Mitchell M. An Introduction to Genetic Algorithms. Cambridge, MA: MIT Press; 1996.

- 28. Schuster P. Biol Chem. 2001;382:1301–1314. doi: 10.1515/BC.2001.162.

- 29. Schuster P, Fontana W, Stadler PF, Hofacker IL. Proc Royal Soc London Biol Sci. 1994;255:279–284. doi: 10.1098/rspb.1994.0040.

- 30. Zuker M, Mathews DH, Turner DH. In: A Practical Guide in RNA Biochemistry and Biotechnology. Barciszewski JCB, editor. Dordrecht, The Netherlands: Kluwer Academic; 1999. pp. 11–44.

- 31. Ancel LW, Fontana W. J Exp Zool. 2000;288:242–283. doi: 10.1002/1097-010x(20001015)288:3<242::aid-jez5>3.0.co;2-o.

- 32. Zuker M, Stiegler P. Nucleic Acids Res. 1981;9:133–148. doi: 10.1093/nar/9.1.133.

- 33. Hofacker IL, Fontana W, Stadler PF, Bonhoeffer LS, Tacker M, Schuster P. Monatsh Chem. 1994;125:167–188.

- 34. Johnson AW, Jacobson SH. Discrete Applied Mathematics. 2002;119:37–57.

- 35. Fonseca CM, Fleming PJ. Evolutionary Computation. 1995;3:1–16.

- 36. Newman MEJ, Barkema GT. Monte Carlo Methods in Statistical Physics. Oxford: Oxford Univ Press; 1999.

- 37. Gen M, Cheng R. Genetic Algorithms and Engineering Design. New York: Wiley Interscience; 1997.

- 38. Lipson H, Pollack JB. Nature. 2000;406:974–978. doi: 10.1038/35023115.

- 39. Schmidt MD, Lipson H. In: Genetic Programming Theory and Practice IV. Goldberg DE, Koza JR, editors. New York: Springer; 2007. pp. 113–130.

- 40. Keightley PD, Otto SP. Nature. 2006;443:89–92. doi: 10.1038/nature05049.

- 41. Wilkins A. The Evolution of Developmental Pathways. Sunderland, MA: Sinauer Associates; 2002.

- 42. Queitsch C, Sangster TA, Lindquist S. Nature. 2002;417:618–624. doi: 10.1038/nature749.

- 43. Hinton GE, Nowlan SJ. Complex Systems. 1987;1:495–502.

- 44. Kruskal JB, Wish M. Multidimensional Scaling. Beverly Hills, CA: Sage; 1977.
