Abstract

Performance on most sensory tasks improves with practice. When making particularly challenging sensory judgments, perceptual improvements in performance are tightly coupled to the trained task and stimulus configuration. The form of this specificity is believed to provide a strong indication of which neurons are solving the task or encoding the learned stimulus. Here we systematically decouple task- and stimulus-mediated components of trained improvements in perceptual performance and show that neither provides an adequate description of the learning process. Twenty-four human subjects trained on a unique combination of task (three-element alignment or bisection) and stimulus configuration (vertical or horizontal orientation). Before and after training, we measured subjects' performance on all four task-configuration combinations. What we demonstrate for the first time is that learning does actually transfer across both task and configuration provided there is a common spatial axis to the judgment. The critical factor underlying the transfer of learning effects is not the task or stimulus arrangements themselves, but rather the recruitment of common sets of neurons most informative for making each perceptual judgment.

Introduction

Practice improves our ability to detect and distinguish sensory stimuli. Perception of most, if not all, sensory attributes improves with practice [1]–[9] and the perceptual benefits can be realized over many years [10]. The extent to which “perceptual learning” generalizes to novel, untrained stimulus attributes is very much dependent upon the task demands of training [11]. Learning of relatively coarse stimulus discriminations that require little perceptual effort, for example, tends to generalize to untrained stimuli. Yet, when making challenging sensory judgments that demand focused attention, practice-induced improvements in perceptual performance are tightly coupled to the particular task and stimulus arrangement used during the initial training period [11].

The nature of specificity and generalization in perceptual learning is generally regarded to signify which population of neurons is solving the task and encoding the learned stimulus [4], [12]–[15]. For example, the specificity of perceptual learning for low-level visual characteristics, such as orientation and retinal position, has led to the view that tuning of individual cortical neurons to the ‘learned’ stimulus or weighting of synaptic connections between neurons in early visual cortex must be malleable or ‘plastic’ [15]–[23]. Precisely how and at what level(s) of visual cortex this experience-dependent plasticity is implemented remains controversial. According to one popular theoretical account of visual perceptual learning [12], the learning process begins at the top of the cortical visual hierarchy and works backwards searching for the most informative neurons to solve the prevailing sensory objective. Coarse, or ‘easy’, visual discriminations are learned by neurons with large receptive fields and broad stimulus tuning at advanced stages of cortical visual processing. Challenging, fine discriminations, on the other hand, are learned by neurons at earlier stages of cortical visual processing, where receptive fields are tightly tuned for retinal position and visual stimulation. It is the narrow tuning of these neurons which is thought to limit the transfer of learning effects to a restricted range of stimulus attributes [12].

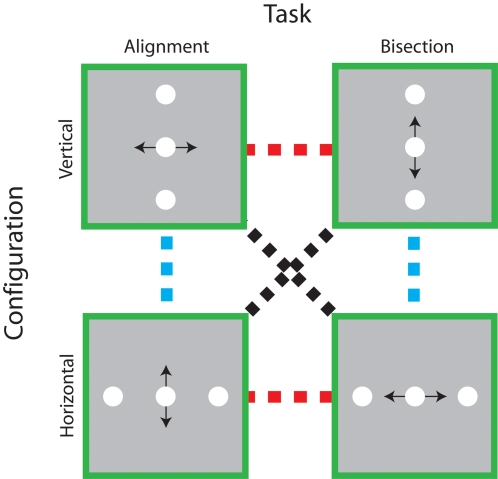

Trained improvements on fine visual discriminations are often specific for stimulus orientation, direction of motion, retinal location and trained eye [24]–[31], and do not transfer between very similar tasks [32]–[34], even when the stimulus elements share a common retinal location [34]. Here we systematically decouple task- and stimulus-mediated components of trained improvements in perceptual performance and show that neither alone provides an adequate description of the learning process. The two-dimensional paradigm (shown in Fig 1A) enabled us to dissect task- and stimulus-coupled improvements in judgments of spatial position, a visual task that is particularly amenable to perceptual learning [4], [13], [18], [26], [31], [32], [34]–[37]. If perceptual learning is tightly coupled to task performance with little regard for the spatial pattern of retinal stimulation, improvements on a positional alignment or bisection task should transfer between different orientations (dashed blue line), but not between tasks (dashed red line). On the other hand, if training improves subjects' sensitivity to particular stimulus configurations regardless of the current task demands, improvements with vertically or horizontally oriented stimuli should transfer between different tasks (dashed red line). An intriguing alternative to these two potential outcomes is that learning selectively transfers along the spatial axis of the positional judgment itself, independently of either task or stimulus configuration (dashed black line).

Figure 1. Decoupling the task and stimulus specificity of positional learning.

Schematic illustrations of each of the task and stimulus configurations used in the experiment are shown in the green boxes. In each case, the black arrow indicates the spatial axis of the required positional judgement. To characterise the specificity of perceptual learning, subjects' performance was measured on all four task-configuration combinations before and after they received training on one of them. In principle, trained improvements in performance on particular task-configuration combinations could be tightly coupled to the task (dashed blue lines), the stimulus configuration (dashed red line) or the spatial axis of the judgement (dashed black lines).
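The logic of the 2×2 design can be made explicit with a minimal Python sketch. It assumes the judgement axes described in the text: vertical alignment and horizontal bisection both call for a left/right judgement, whereas horizontal alignment and vertical bisection call for an up/down judgement. The names `JUDGEMENT_AXIS` and `relation` are hypothetical and chosen only for illustration.

```python
# Spatial axis of the positional judgement for each (task, configuration)
# pair, following the descriptions in the text. Names are illustrative.
JUDGEMENT_AXIS = {
    ("alignment", "vertical"): "horizontal",    # left/right offset
    ("bisection", "horizontal"): "horizontal",  # closer to left/right
    ("alignment", "horizontal"): "vertical",    # above/below offset
    ("bisection", "vertical"): "vertical",      # closer to upper/lower
}


def relation(trained, tested):
    """Classify how a tested condition relates to the trained condition."""
    if trained == tested:
        return "trained condition"
    if JUDGEMENT_AXIS[trained] == JUDGEMENT_AXIS[tested]:
        return "same judgement axis"   # task and configuration both differ
    if trained[0] == tested[0]:
        return "same task"             # only the configuration differs
    return "same configuration"        # only the task differs


trained = ("bisection", "horizontal")
for tested in JUDGEMENT_AXIS:
    print(tested, "->", relation(trained, tested))
```

In this design, the only untrained condition that shares a judgement axis with the trained one is the condition in which both task and configuration have changed, which is what allows the three candidate forms of transfer to be separated.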

Results

Our first objective was to determine the extent to which practice enhanced visual position estimates within each training condition. Subjects were assigned to one of four groups, each of which trained for eight days on a unique combination of task (three-element alignment or bisection) and configuration (vertical or horizontal orientation), schematically represented in Figure 1. We obtained daily estimates of their performance (positional thresholds) at each of the separations between the central and reference elements. We expressed the data collected in an individual session as the proportion of trials on which the central element was judged to be rightward (Vertical alignment or Horizontal Bisection) or upward (Horizontal alignment or Vertical Bisection) of the reference elements. From logistic fits to these data, we estimated positional thresholds for each separation.

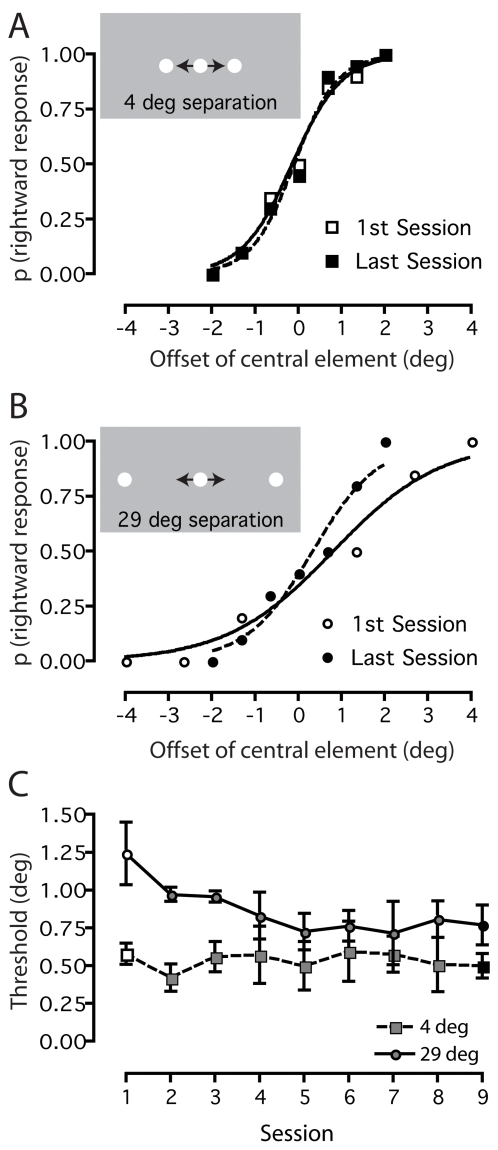

Figure 2 illustrates how we derived positional thresholds at two separations for an individual subject who trained on the horizontal bisection task. In Figure 2A, there is very little difference between the slopes of the psychometric functions (a measure of positional threshold) obtained during the first (open squares) and last (black squares) training sessions, indicating that training did not improve this subject's bisection thresholds at the smallest (4 deg) separation. The same analysis at the largest (29 deg) separation reveals a different picture (Fig 2B): the slope of the function measured during the last training session (black circles) is substantially steeper than that measured during the first session (open circles), showing that perceptual training reduced bisection thresholds at large separations. Figure 2C shows the bisection threshold estimates obtained at both separations during all training sessions. This plot shows clearly that perceptual training systematically reduces bisection thresholds at a large separation (circles), but has negligible effects on them at the smallest separation (squares).

Figure 2. Quantifying positional learning.

Example individual results are shown for a typical subject trained on the horizontal bisection task. a) A comparison of psychometric functions obtained on the first and last day reveals negligible learning at the smallest stimulus separation (4 deg). b) In contrast, a noticeable steepening of the psychometric function is evident at the largest stimulus separation (29 deg), indicating a learned improvement in task performance. c) Positional thresholds derived from the individual's psychometric functions, plotted as a function of session for the two extreme stimulus separations.

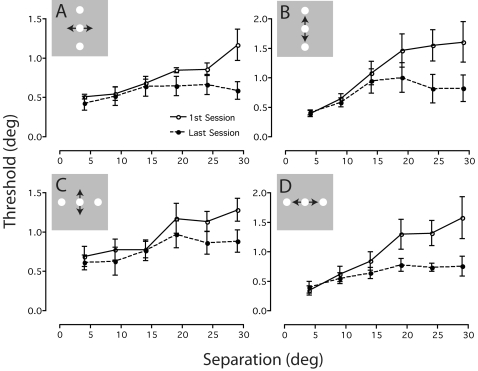

To see if this pattern of learning held for each training condition, we calculated group averaged positional thresholds (±SEM) in the first and last session for each task-configuration combination. Figure 3 shows the results of this analysis plotted as a function of separation. For the alignment task, thresholds obtained during the first session at small separations (≤14 deg) are almost independent of separation, but for larger separations increase in proportion to separation (open circles; Fig 3A–B). For the bisection task, thresholds obtained during the first session increase roughly in proportion to separation at all values of separation (open circles; Fig 3C–D). Yet the effects of training on both tasks are manifested in the last session as a marked reduction in thresholds only at large (>14 deg) separations between the central and reference elements (black circles; Fig 3A–D). This result sits comfortably with the notion that positional sensitivity at small and large separations is governed by different neural processes [38], [39].

Figure 3. Positional learning for each task and stimulus configuration.

Mean positional thresholds obtained on the first and last days for groups trained on a) vertical alignment, b) vertical bisection, c) horizontal alignment and d) horizontal bisection. Learned improvements in task performance are consistently seen for large, but not small, stimulus separations. Error bars are SEM.

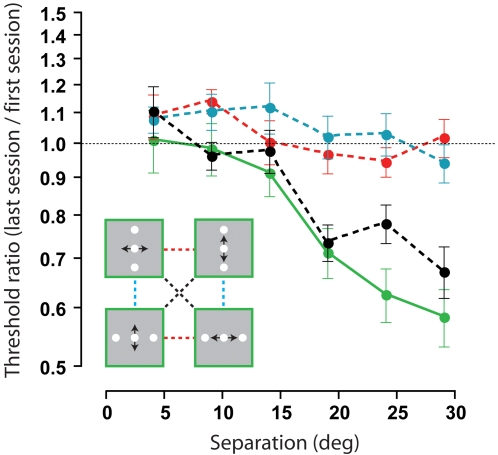

Figure 4 shows the magnitude of perceptual learning expressed as the ratio of subjects' performance on the last and first day as a function of separation between the central and reference elements. The solid green line confirms how training within task (collapsed across all task-configuration combinations) only reduced positional thresholds when there were large (>14 degree) separations between the central and reference elements. In agreement with previous work [13], [26], [34], perceptual training did not improve positional sensitivity independently of the stimulus orientation (dashed blue line) or task demands (dashed red line). Both results are consistent with the view that trained improvements in challenging sensory discriminations do not transfer between different tasks or stimulus configurations [11], [12]. What we demonstrate for the first time is that learning does actually transfer across both task and configuration provided there is a common spatial axis to the judgment (dashed black line). This pattern of transfer suggests that for relative position estimates, neurons which encode the spatial axis of the judgment rather than the stimulus orientation or task per se are most informative for the learning process.

Figure 4. Transfer of learned improvements between task and stimulus configurations.

Ratio of mean positional thresholds obtained on the first and last days, collapsed across conditions and plotted as a function of stimulus separation. Trained improvements in performance did not transfer to different configurations (dashed blue line) or tasks (dashed red line), but did transfer between task-configuration combinations when there was a common spatial axis to the judgement (dashed black line). For comparison, the solid green line shows averaged learned improvements obtained within each task-configuration combination. Error bars are SEM.
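The learning measure plotted in Figure 4 can be sketched in a few lines of Python. This is an illustrative sketch only: it assumes the ratio is taken as last-day threshold divided by first-day threshold, so that values below 1 indicate improvement, and the threshold values are made up for the example; they are not data from the study. The function name `learning_ratios` is hypothetical.

```python
def learning_ratios(first_day, last_day):
    """Return last-day / first-day threshold ratios keyed by separation (deg).

    Assumes both dictionaries share the same separations as keys.
    A ratio below 1 means the threshold fell with training.
    """
    return {sep: last_day[sep] / first_day[sep] for sep in sorted(first_day)}


# Hypothetical thresholds in arbitrary units, NOT results from the paper.
first = {4: 0.50, 14: 0.60, 29: 2.00}
last = {4: 0.50, 14: 0.57, 29: 1.00}

ratios = learning_ratios(first, last)
improved = [sep for sep, r in ratios.items() if r < 1.0]
print(ratios)
print("Separations with reduced thresholds:", improved)
```

The same function can be applied to untrained conditions (same task, same configuration, or same judgement axis) to compare transfer against the within-condition learning ratio.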

Discussion

The hallmark of visual perceptual learning is its specificity for simple visual attributes, frequently being tightly coupled to the trained retinal position [28], [40]–[43], orientation [24], [28], [42], spatial frequency [24] and size of a visual pattern [24], [40], [44]. It is widely believed that stimulus-coupled learning reflects some form of experience-dependent plasticity at the cortical level which encodes the stimulus itself [15]–[23], [42]. For example, experience-dependent plasticity can arise as early as primary visual cortex [16], [19], [21]–[23], where neurons encode simple stimulus attributes such as orientation [45], [46] and spatial frequency [47], [48].

In agreement with previous work [13], [26], [34], we have shown that perceptual training does not improve positional sensitivity independently of the stimulus configuration and task. However, our findings demonstrate that characterizing the specificity of learning in terms of the arrangement of stimuli and/or the type of perceptual judgment is potentially misleading. Specifically, we demonstrate that learned improvements in positional sensitivity transfer to conditions in which both these factors have been altered, provided that the spatial axis of the judgment remains constant. These findings most likely reflect the recruitment of common sets of neurons that are informative for making each judgment. Indeed, recent physiological evidence suggests that learning-induced changes in the neural population response are specific to neurons that provide the most information for solving the learned task [49]. Even though stimulus configuration and the type of task performed undoubtedly play a role in dictating which neurons are most useful for making a given perceptual decision, neither factor alone is a perfect predictor of the specificity of perceptual learning.

Visual representations that mediate fine discriminations of stimulus position reside at early levels of cortical visual processing, where neuronal receptive fields are small and retinotopically organized. Feasibly, learned improvements in making positional judgments along a spatial axis might reflect plastic changes at this early stage of visual processing or, alternatively, at later cortical stages where positional information is combined. A strong indication of how these neural plastic changes might arise is provided by our observation that the magnitude of learning increases with the separation between the central and reference elements. It is well established that the accuracy of positional estimates is inversely proportional to stimulus separation [38], [50], [51]. There are at least two putative neural mechanisms that could explain this separation-dependent degradation of positional acuity. The first is that positional acuity is mediated by independent mechanisms at small and large separations [52]–[54]. For example, in an alignment task, observers could utilize the output of neurons that encode neighboring stimulus elements when they are in close proximity [55], [56] and the retinal position or ‘local sign’ of each element when they are widely separated [57], [58]. Although this scheme could explain why we observed separation-dependent learning, it does not explain the specific transfer of learning across tasks involving judgments along a common spatial axis. In addition, it is difficult to reconcile with previous studies that have reported substantial positional learning effects at small stimulus separations [e.g. 13], [18], [20], [26], [31], [32], [34], [36], [37].

The second putative mechanism is that there is a gradual shift to coarser spatial scales of analysis with increasing separation [38], [50]. That is, increasingly larger receptive fields have to be recruited to encode the stimulus elements as separation (and eccentricity) increases. Within this framework, positional learning would cause a shift to increasingly smaller spatial scales of analysis at large separations. The magnitude of learning increases with stimulus separation because there is much more scope to move to a finer spatial scale of analysis at larger separations, whereas at small separations the receptive fields are already operating at the optimal spatial scale of analysis [38]. To explain the observed patterns of transfer, this scheme requires that learning-induced changes in spatial scale are tightly coupled to the spatial axis of the judgment but not the retinal position of the stimulus elements. Work is underway in our laboratory to test this possibility.

This putative mechanism also enables us to reconcile our results with previous work demonstrating large learning effects at small and medium stimulus separations. To the best of our knowledge, all of the previous studies that have demonstrated substantial positional learning effects at small separations have either used broadband stimuli (e.g. dots, lines, bars) or medium to high frequency sinusoidal gratings [13], [18], [20], [26], [31], [32], [34], [36], [37], [59]–[62]. Because of the frequency content of these stimuli, there is much more scope to recruit receptive fields with finer spatial scales to improve positional thresholds. With the Gaussian luminance patches that we have used here, performance at small and medium stimulus separations is largely independent of separation and limited by the low spatial frequency content of the stimulus envelope [38]. This mechanism prevents the recruitment of fine spatial scale receptive fields and ultimately places an upper limit on the magnitude of positional learning at small separations. It therefore explains the small learning effects that we observed at small and medium separations.

In principle, the transfer of positional learning could be explained by training induced enhancements in “absolute” positional localization of the central element. In the experimental arrangement, we did not set out to limit this possibility because previous research has highlighted the marked retinal specificity of visual learning [28], [40]–[43]. Therefore, if we had jittered the position of our stimulus configurations on a trial-by-trial basis and not observed any learning, it would have been impossible to distinguish whether this was due to a genuine lack of perceptual improvement or simply a consequence of jittering the retinal position of the stimulus. It seems unlikely that absolute position cues substantially contributed to the transfer of learning because absolute positional thresholds at a given eccentricity can be up to four times worse than the best relative position thresholds [58], [63]. Moreover, both our learning and transfer effects are separation-dependent, arguing against the notion that subjects used the absolute position of the central element or the edges of the monitor to make their judgments.

Our results necessitate a new interpretation of the concept of stimulus specificity so often shown in perceptual learning studies. The critical factor in the transfer of learning effects between tasks is not the task or stimulus arrangement, but rather the recruitment of common sets of neurons most informative for making each perceptual judgment. Although we have used a two-dimensional position design to make this distinction, it is unlikely that this principle is peculiar to the visuo-spatial domain.

Materials and Methods

Subjects

Twenty-four subjects (21–33 years; 13 males, 11 females) with normal or corrected-to-normal visual acuity participated. All were naïve to the specific purposes of the experiment. We obtained written consent from all subjects, who were free to withdraw from the study at any time.

Stimuli

Stimuli were generated on an Apple Macintosh G5 using custom software written in Python [64] and displayed on a gamma corrected LaCie Electron 22 Blue IV monitor with a resolution of 1920×1440 pixels, viewing distance of 23.8 cm and update rate of 100 Hz. The stimulus elements consisted of three circular patches with Gaussian luminance profiles (standard deviation 0.33 degrees) on a uniform background (luminance ∼50 cd/m2), one positioned centrally at fixation and flanked by two others at a range of separations. The separation between the reference elements and central element ranged between 4–29 degrees in 5 degree steps. On each trial, stimuli were presented for 0.2 sec and separated by a 0.5 sec interval containing a blank screen of mean luminance.
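The stimulus geometry described above can be sketched in plain Python. This is a minimal illustration, not the authors' stimulus code: the Gaussian profile is normalized to a peak of 1 because the patch contrast is not stated, and the function names `gaussian_profile` and `eccentricity_to_cm` are hypothetical. Converting centimetres to pixels would additionally require the physical size of the display, which is not given here.

```python
import math

# Separations between central and reference elements: 4 to 29 deg in 5 deg steps.
SEPARATIONS_DEG = list(range(4, 30, 5))

SIGMA_DEG = 0.33             # standard deviation of each Gaussian patch (from the text)
VIEWING_DISTANCE_CM = 23.8   # viewing distance (from the text)


def gaussian_profile(r_deg, sigma_deg=SIGMA_DEG):
    """Normalized Gaussian luminance increment at radial distance r_deg.

    The peak value of 1.0 is an assumption; the actual contrast is not stated.
    """
    return math.exp(-(r_deg ** 2) / (2.0 * sigma_deg ** 2))


def eccentricity_to_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """On-screen distance from fixation for a given eccentricity on a flat display."""
    return distance_cm * math.tan(math.radians(angle_deg))


for sep in SEPARATIONS_DEG:
    print(f"{sep:2d} deg -> {eccentricity_to_cm(sep):5.2f} cm from fixation")
```

The tangent formula is used rather than a small-angle approximation because, at a viewing distance this short, the largest separations subtend angles where the approximation becomes noticeably inaccurate.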

Procedure

The experimental protocol was approved by the local ethics committee at the School of Psychology, University of Nottingham. Subjects viewed the display with their head held in a fixed position with a chin and forehead rest. Prior to the start of the experiment, we obtained an estimate of subjects' alignment and bisection thresholds at each separation using an adaptive staircase procedure, with a total of 50 presentations. This estimate determined the step size and range of values in the Method of Constant Stimuli, used throughout the remainder of the experiment to estimate positional thresholds. For each condition, positional thresholds at six separations were estimated in a single run of 420 randomly interleaved trials (20 trials per point on the psychometric function). Subjects did not receive any feedback on their performance.

On the first day of the experiment, we measured subjects' thresholds on all four combinations of task (three-element alignment and bisection) and configuration (vertical and horizontal orientation) in a random order. In the alignment task, subjects had to indicate whether the central element was offset to the left or right of vertically separated reference elements (vertical alignment) or above or below horizontally separated reference elements (horizontal alignment). In the bisection task, they had to judge whether the central element was closer to the upper or lower reference element (vertical bisection) or closer to the right or left reference element (horizontal bisection).

Subjects were pseudo-randomly assigned to one of four groups, each of which trained for eight days on one combination of task and configuration. During the training period, we obtained daily estimates of their positional thresholds at each separation using the methods described above. On the last day of the experiment, we re-measured subjects' thresholds on all four combinations of task and configuration in a random order.

Data analysis

We expressed the data as the proportion of trials on which subjects judged the central element to be rightward (Vertical alignment or Horizontal Bisection) or upward (Horizontal alignment or Vertical Bisection). To estimate positional thresholds, we fitted a logistic equation to these data of the form:

$$y = \frac{1}{1 + e^{-(x - \mu)/\alpha}} \tag{1}$$

where x is the positional offset of the central element, y is the proportion of rightward or upward positional judgements, μ is the point of subjective equality (the 50% point on the psychometric function), and α is an estimate of alignment or bisection threshold.
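As an illustration of the threshold estimation step, the following Python sketch fits a two-parameter logistic function to proportion data. It assumes the common form y = 1 / (1 + exp(−(x − μ)/α)), where x is the offset of the central element, and it uses a simple coarse-to-fine least-squares grid search; the fitting procedure actually used in the study is not specified, so this is a stand-in. The function names and the synthetic data are hypothetical.

```python
import math


def logistic(x, mu, alpha):
    """Numerically stable logistic: proportion of 'rightward'/'upward' responses."""
    z = (x - mu) / alpha
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic(offsets, proportions, n_grid=21, n_iter=8):
    """Least-squares estimate of (mu, alpha) by coarse-to-fine grid search."""
    span = max(offsets) - min(offsets)
    min_alpha = span / 1000.0
    mu_lo, mu_hi = min(offsets), max(offsets)
    a_lo, a_hi = min_alpha, span

    def sse(params):
        mu, alpha = params
        return sum((logistic(x, mu, alpha) - p) ** 2
                   for x, p in zip(offsets, proportions))

    best = (0.5 * (mu_lo + mu_hi), 0.5 * (a_lo + a_hi))
    for _ in range(n_iter):
        grid = [(mu_lo + i * (mu_hi - mu_lo) / (n_grid - 1),
                 a_lo + j * (a_hi - a_lo) / (n_grid - 1))
                for i in range(n_grid) for j in range(n_grid)]
        best = min(grid, key=sse)
        # Shrink the search window around the current best estimate.
        mu_w = (mu_hi - mu_lo) / 10.0
        a_w = (a_hi - a_lo) / 10.0
        mu_lo, mu_hi = best[0] - mu_w, best[0] + mu_w
        a_lo, a_hi = max(best[1] - a_w, min_alpha), best[1] + a_w
    return best


# Synthetic, noise-free example data (not from the study).
offsets = [-6.0, -4.0, -2.0, 0.0, 2.0, 4.0, 6.0]
proportions = [logistic(x, 0.5, 2.0) for x in offsets]
mu_hat, alpha_hat = fit_logistic(offsets, proportions)
print(f"PSE = {mu_hat:.3f}, threshold = {alpha_hat:.3f}")
```

With this parameterisation, α corresponds to the change in offset needed to move from the 50% point to roughly the 73% point of the function, so a steeper psychometric function yields a smaller α. With real, noisy data a maximum-likelihood fit would normally be preferred over least squares.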

Footnotes

Competing Interests: The authors have declared that no competing interests exist.

Funding: The work was funded by the Leverhulme Trust. BSW is a Leverhulme Trust Research Fellow and PVM is a Wellcome Trust Research Fellow.

References

- 1. Fine I, Jacobs RH. Comparing perceptual learning across tasks: A review. Journal of Vision. 2002;2:190–203. doi: 10.1167/2.2.5.

- 2. Goldstone RL. Perceptual learning. Annual Review of Psychology. 1998;49:585–612. doi: 10.1146/annurev.psych.49.1.585.

- 3. Fahle M, Poggio T. Perceptual learning. Cambridge, Massachusetts: MIT Press; 2002.

- 4. Fahle M. Perceptual learning: specificity versus generalization. Current Opinion in Neurobiology. 2005;15:154–160. doi: 10.1016/j.conb.2005.03.010.

- 5. Tsodyks M, Gilbert C. Neural networks and perceptual learning. Nature. 2004;431:775–781. doi: 10.1038/nature03013.

- 6. Wilson DA, Fletcher ML, Sullivan RM. Acetylcholine and olfactory perceptual learning. Learning and Memory. 2004;11:28–34. doi: 10.1101/lm.66404.

- 7. Gilbert CD, Sigman M, Crist RE. The neural basis of perceptual learning. Neuron. 2001;31:681–697. doi: 10.1016/s0896-6273(01)00424-x.

- 8. Gibson EJ. Perceptual learning. Annual Review of Psychology. 1963;14:29–56. doi: 10.1146/annurev.ps.14.020163.000333.

- 9. Wright BA, Zhang Y. A review of learning with normal and altered sound-localization cues in human adults. International Journal of Audiology. 2006;45(Supplement 1):S92–98. doi: 10.1080/14992020600783004.

- 10. Karni A, Sagi D. The time course of learning a visual skill. Nature. 1993;365:250–252. doi: 10.1038/365250a0.

- 11. Ahissar M, Hochstein S. Task difficulty and the specificity of perceptual learning. Nature. 1997;387:401–406. doi: 10.1038/387401a0.

- 12. Ahissar M, Hochstein S. The reverse hierarchy theory of visual perceptual learning. Trends in Cognitive Sciences. 2004;8:457–464. doi: 10.1016/j.tics.2004.08.011.

- 13. Fahle M. Perceptual learning: A case for early selection. Journal of Vision. 2004;4:879–890. doi: 10.1167/4.10.4.

- 14. Hochstein S, Ahissar M. View from the top: hierarchies and reverse hierarchies in the visual system. Neuron. 2002;36:791–804. doi: 10.1016/s0896-6273(02)01091-7.

- 15. Fiorentini A, Berardi N. Visual perceptual learning: a sign of neural plasticity at early stages of visual processing. Archives of Italian Biology. 1997;135:157–167.

- 16. Crist RE, Li W, Gilbert CD. Learning to see: experience and attention in primary visual cortex. Nature Neuroscience. 2001;4:519–525. doi: 10.1038/87470.

- 17. Dosher BA, Lu ZL. Perceptual learning reflects external noise filtering and internal noise reduction through channel reweighting. Proc Natl Acad Sci U S A. 1998;95:13988–13993. doi: 10.1073/pnas.95.23.13988.

- 18. Saarinen J, Levi DM. Perceptual learning in vernier acuity: what is learned? Vision Research. 1995;35:519–527. doi: 10.1016/0042-6989(94)00141-8.

- 19. Schwartz S, Maquet P, Frith C. Neural correlates of perceptual learning: a functional MRI study of visual texture discrimination. Proc Natl Acad Sci U S A. 2002;99:17137–17142. doi: 10.1073/pnas.242414599.

- 20. Folta K. Neural fine tuning during Vernier acuity training? Vision Research. 2003;43:1177–1185. doi: 10.1016/s0042-6989(03)00041-5.

- 21. Furmanski CS, Schluppeck D, Engel SA. Learning strengthens the response of primary visual cortex to simple patterns. Current Biology. 2004;14:573–578. doi: 10.1016/j.cub.2004.03.032.

- 22. Schoups A, Vogels R, Qian N, Orban G. Practising orientation identification improves orientation coding in V1 neurons. Nature. 2001;412:549–553. doi: 10.1038/35087601.

- 23. Gilbert CD. Early perceptual learning. Proc Natl Acad Sci U S A. 1994;91:1195–1197. doi: 10.1073/pnas.91.4.1195.

- 24. Fiorentini A, Berardi N. Perceptual learning specific for orientation and spatial frequency. Nature. 1980;287:43–44. doi: 10.1038/287043a0.

- 25. Ahissar M, Hochstein S. Attentional control of early perceptual learning. Proc Natl Acad Sci U S A. 1993;90:5718–5722. doi: 10.1073/pnas.90.12.5718.

- 26. Poggio T, Fahle M, Edelman S. Fast perceptual learning in visual hyperacuity. Science. 1992;256:1018–1021. doi: 10.1126/science.1589770.

- 27. O'Toole AJ, Kersten DJ. Learning to see random-dot stereograms. Perception. 1992;21:227–243. doi: 10.1068/p210227.

- 28. Shiu LP, Pashler H. Improvement in line orientation discrimination is retinally local but dependent on cognitive set. Perception and Psychophysics. 1992;52:582–588. doi: 10.3758/bf03206720.

- 29. Ball K, Sekuler R. Direction-specific improvement in motion discrimination. Vision Research. 1987;27:953–965. doi: 10.1016/0042-6989(87)90011-3.

- 30. Ramachandran VS, Braddick O. Orientation-specific learning in stereopsis. Perception. 1973;2:371–376. doi: 10.1068/p020371.

- 31. Fahle M, Edelman S. Long-term learning in vernier acuity: effects of stimulus orientation, range and of feedback. Vision Research. 1993;33:397–412. doi: 10.1016/0042-6989(93)90094-d.

- 32. Fahle M. Specificity of learning curvature, orientation, and vernier discriminations. Vision Research. 1997;37:1885–1895. doi: 10.1016/s0042-6989(96)00308-2.

- 33. Saffell T, Matthews N. Task-specific perceptual learning on speed and direction discrimination. Vision Research. 2003;43:1365–1374. doi: 10.1016/s0042-6989(03)00137-8.

- 34. Fahle M, Morgan M. No transfer of perceptual learning between similar stimuli in the same retinal position. Current Biology. 1996;6:292–297. doi: 10.1016/s0960-9822(02)00479-7.

- 35. Fahle M, Edelman S, Poggio T. Fast perceptual learning in hyperacuity. Vision Research. 1995;35:3003–3013. doi: 10.1016/0042-6989(95)00044-z.

- 36. Li RW, Levi DM, Klein SA. Perceptual learning improves efficiency by re-tuning the decision ‘template’ for position discrimination. Nature Neuroscience. 2004;7:178–183. doi: 10.1038/nn1183.

- 37. Beard BL, Levi DM, Reich LN. Perceptual learning in parafoveal vision. Vision Research. 1995;35:1679–1690. doi: 10.1016/0042-6989(94)00267-p.

- 38. Whitaker D, Bradley A, Barrett BT, McGraw PV. Isolation of stimulus characteristics contributing to Weber's law for position. Vision Research. 2002;42:1137–1148. doi: 10.1016/s0042-6989(02)00030-5.

- 39. Burbeck CA, Yap YL. Two mechanisms for localization? Evidence for separation-dependent and separation-independent processing of position information. Vision Research. 1990;30:739–750. doi: 10.1016/0042-6989(90)90099-7.

- 40. Ahissar M, Hochstein S. Learning pop-out detection: specificities to stimulus characteristics. Vision Research. 1996;36:3487–3500. doi: 10.1016/0042-6989(96)00036-3.

- 41. Berardi N, Fiorentini A. Interhemispheric transfer of visual information in humans: spatial characteristics. Journal of Physiology. 1987;384:633–647. doi: 10.1113/jphysiol.1987.sp016474.

- 42. Karni A, Sagi D. Where practice makes perfect in texture discrimination: evidence for primary visual cortex plasticity. Proc Natl Acad Sci U S A. 1991;88:4966–4970. doi: 10.1073/pnas.88.11.4966.

- 43. Schoups AA, Vogels R, Orban GA. Human perceptual learning in identifying the oblique orientation: retinotopy, orientation specificity and monocularity. Journal of Physiology. 1995;483:797–810. doi: 10.1113/jphysiol.1995.sp020623.

- 44. Lu ZL, Dosher BA. Perceptual learning retunes the perceptual template in foveal orientation identification. Journal of Vision. 2004;4:44–56. doi: 10.1167/4.1.5.

- 45. Hubel DH, Wiesel TN. Receptive fields, binocular interaction and functional architecture in cat's visual cortex. Journal of Physiology. 1962;160:106–154. doi: 10.1113/jphysiol.1962.sp006837.

- 46. Hubel DH, Wiesel TN. Receptive fields and functional architecture of monkey striate cortex. Journal of Physiology. 1968;195:215–243. doi: 10.1113/jphysiol.1968.sp008455.

- 47. Foster KH, Gaska JP, Nagler M, Pollen DA. Spatial and temporal frequency selectivity of neurons in visual cortical areas V1 and V2 of the macaque monkey. Journal of Physiology. 1985;365:331–363. doi: 10.1113/jphysiol.1985.sp015776.

- 48. De Valois RL, Albrecht DG, Thorell LG. Spatial frequency selectivity of cells in macaque visual cortex. Vision Research. 1982;22:545–559. doi: 10.1016/0042-6989(82)90113-4.

- 49. Raiguel S, Vogels R, Mysore SG, Orban GA. Learning to see the difference specifically alters the most informative V4 neurons. Journal of Neuroscience. 2006;26:6589–6602. doi: 10.1523/JNEUROSCI.0457-06.2006.

- 50. Hess RF, Hayes A. Neural recruitment explains “Weber's law” of spatial position. Vision Research. 1993;33:1673–1684. doi: 10.1016/0042-6989(93)90033-s.

- 51. Levi DM, Klein SA, Yap YL. “Weber's law” for position: unconfounding the role of separation and eccentricity. Vision Research. 1988;28:597–603. doi: 10.1016/0042-6989(88)90109-5.

- 52. Morgan MJ. Hyperacuity. In: Regan M, editor. Spatial Vision. London: Macmillan; 1991. pp. 87–113.

- 53. Waugh SJ. Visibility and vernier acuity for separated targets. Vision Research. 1993;33:539–552. doi: 10.1016/0042-6989(93)90257-w.

- 54. Levi DM, Klein SA. Vernier acuity, crowding and amblyopia. Vision Research. 1985;25:979–991. doi: 10.1016/0042-6989(85)90208-1.

- 55. Sullivan GD, Oatley K, Sutherland NS. Vernier acuity is affected by target length and separation. Perception and Psychophysics. 1972;12:438–444.

- 56. Watt RJ. Towards a general theory of the visual acuities for shape and spatial arrangement. Vision Research. 1984;24:1377–1386. doi: 10.1016/0042-6989(84)90193-7.

- 57. Morgan MJ, Ward RM, Hole GJ. Evidence for positional coding in hyperacuity. Journal of the Optical Society of America A. 1990;7:297–304. doi: 10.1364/josaa.7.000297.

- 58. Klein SA, Levi DM. Position sense of the peripheral retina. Journal of the Optical Society of America A. 1987;4:1543–1553. doi: 10.1364/josaa.4.001543.

- 59. Crist RE, Kapadia MK, Westheimer G, Gilbert CD. Perceptual learning of spatial localization: specificity for orientation, position, and context. Journal of Neurophysiology. 1997;78:2889–2894. doi: 10.1152/jn.1997.78.6.2889.

- 60. Herzog MH, Fahle M. The role of feedback in learning a vernier discrimination task. Vision Research. 1997;37:2133–2141. doi: 10.1016/s0042-6989(97)00043-6.

- 61. Herzog MH, Fahle M. Effects of biased feedback on learning and deciding in a vernier discrimination task. Vision Research. 1999;39:4232–4243. doi: 10.1016/s0042-6989(99)00138-8.

- 62. Fahle M, Henke-Fahle S. Interobserver variance in perceptual performance and learning. Investigative Ophthalmology and Visual Science. 1996;37:869–877.

- 63. White JM, Levi DM, Aitsebaomo AP. Spatial localization without visual references. Vision Research. 1992;32:513–526. doi: 10.1016/0042-6989(92)90243-c.

- 64. Peirce JW. Psychopy–Psychophysics software in Python. Journal of Neuroscience Methods. 2007;162:8–13. doi: 10.1016/j.jneumeth.2006.11.017.