Abstract

The GRADE system can be used to grade the quality of evidence and strength of recommendations for diagnostic tests or strategies. This article explains how patient-important outcomes are taken into account in this process.

Summary points

As for other interventions, the GRADE approach to grading the quality of evidence and strength of recommendations for diagnostic tests or strategies provides a comprehensive and transparent approach for developing recommendations

Cross sectional or cohort studies can provide high quality evidence of test accuracy

However, test accuracy is a surrogate for patient-important outcomes, so such studies often provide low quality evidence for recommendations about diagnostic tests, even when the studies do not have serious limitations

Inferring from data on accuracy that a diagnostic test or strategy improves patient-important outcomes will require the availability of effective treatment, reduction of test related adverse effects or anxiety, or improvement of patients’ wellbeing from prognostic information

Judgments are thus needed to assess the directness of test results in relation to consequences of diagnostic recommendations that are important to patients

In this fourth article of the five part series, we describe how guideline developers are using GRADE to rate the quality of evidence and move from evidence to a recommendation for diagnostic tests and strategies. Although recommendations on diagnostic testing share the fundamental logic of recommendations on treatment, they present unique challenges. We will describe why guideline panels should be cautious when they use evidence of the accuracy of tests (“test accuracy”) as the basis for recommendations and why evidence of test accuracy often provides low quality evidence for making recommendations.

Testing makes a variety of contributions to patient care

Clinicians use tests that are usually referred to as “diagnostic”—including signs and symptoms, imaging, biochemistry, pathology, and psychological testing—for various purposes.1 These purposes include identifying physiological derangements, establishing prognosis, monitoring illness and response to treatment, and diagnosis. This article focuses on diagnosis: the use of tests to establish the presence or absence of a disease (such as tuberculosis), target condition (such as iron deficiency), or syndrome (such as Cushing’s syndrome).

Whereas some tests naturally report positive and negative results (for example, pregnancy tests), other tests report their results as a categorical (for example, imaging) or continuous variable (for example, metabolic measures), with the likelihood of disease increasing as the test results become more extreme. For simplicity, in this discussion we assume a diagnostic approach that ultimately categorises test results as positive or negative.
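The following example shows what this simplification involves. A continuous result is dichotomised at a cut-off, and moving the cut-off trades sensitivity against specificity. This is a minimal sketch under stated assumptions: the marker values, the cut-offs, and the function name `accuracy_at_cutoff` are hypothetical and chosen only for illustration, and a result at or above the cut-off is treated as positive.

```python
def accuracy_at_cutoff(diseased, non_diseased, cutoff):
    """Return (sensitivity, specificity) when results >= cutoff count as positive."""
    # True positives: diseased patients whose result reaches the cut-off.
    true_pos = sum(1 for x in diseased if x >= cutoff)
    # True negatives: non-diseased patients whose result stays below the cut-off.
    true_neg = sum(1 for x in non_diseased if x < cutoff)
    return true_pos / len(diseased), true_neg / len(non_diseased)

# Hypothetical values of a continuous marker; higher values suggest disease.
diseased = [4.1, 5.0, 5.6, 6.2, 7.3, 8.0, 8.4, 9.1]
non_diseased = [2.0, 2.7, 3.1, 3.9, 4.4, 5.2, 5.8, 6.5]

for cutoff in (4.0, 5.5, 7.0):
    sens, spec = accuracy_at_cutoff(diseased, non_diseased, cutoff)
    print(f"cut-off {cutoff}: sensitivity {sens:.2f}, specificity {spec:.2f}")
# cut-off 4.0: sensitivity 1.00, specificity 0.50
# cut-off 5.5: sensitivity 0.75, specificity 0.75
# cut-off 7.0: sensitivity 0.50, specificity 1.00
```

A low cut-off misses few cases but labels more people without disease as positive. A high cut-off does the reverse.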

Guideline panels considering a diagnostic test should begin by clarifying its purpose. The purpose of a test under consideration may be for triage (to minimise use of an invasive or expensive test), replacement (of tests with greater burden, invasiveness, or cost), or add-on (to enhance diagnosis beyond existing tests).2 The panel should identify the limitations for which alternative tests offer a putative remedy; for example, eliminating a high proportion of false positive or false negative results, enhancing availability, decreasing invasiveness, or decreasing cost. This process will lead to identification of sensible clinical questions that, as with other management problems, have four components: patients, diagnostic intervention (strategy), comparison, and outcomes of interest.3 4 The box shows an example of a question for a replacement test.

Example question for replacement test

In patients in whom coronary artery disease is suspected, does multislice spiral computed tomography of coronary arteries as a replacement for conventional invasive coronary angiography reduce complications with acceptable rates of false negatives associated with coronary events and false positives leading to unnecessary treatment and complications?5 6

Clinicians often use diagnostic tests as a package or strategy. For example, in managing patients with apparently operable lung cancer on computed tomography, clinicians may proceed directly to thoracotomy or may apply a strategy of imaging the brain, bone, liver, and adrenal glands, with subsequent management depending on the results. Furthermore, a testing sequence may use an initial sensitive but non-specific test, which, if positive, is followed by a more specific test (for example, faecal occult blood followed by colonoscopy). Thus, one can often think of evaluating or recommending not a single test but a diagnostic strategy.
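Consider a two-step sequence of this kind. Only first-test positives go on to the second test, and the strategy is called positive only when both tests are positive. Its combined accuracy can be approximated as shown below. This is a minimal sketch that assumes the two tests err independently given disease status (conditional independence), an assumption that often fails in practice. The accuracy figures are hypothetical and are not estimates for faecal occult blood testing or colonoscopy.

```python
def serial_positive_if_both(sens1, spec1, sens2, spec2):
    """Accuracy of a 'positive only if both tests are positive' sequence.

    Assumes conditional independence of the two tests given disease status.
    """
    # A diseased patient is detected only if both tests are positive.
    sensitivity = sens1 * sens2
    # A non-diseased patient ends up falsely positive only if both tests are falsely positive.
    specificity = 1 - (1 - spec1) * (1 - spec2)
    return sensitivity, specificity

# Hypothetical accuracy: a sensitive, non-specific first test and a more specific second test.
sens, spec = serial_positive_if_both(sens1=0.90, spec1=0.70, sens2=0.95, spec2=0.98)
print(f"sensitivity {sens:.3f}, specificity {spec:.3f}")
```

Under these assumptions, the sequence gains specificity at the cost of some sensitivity. This is why the first test in such a sequence is usually chosen for its sensitivity.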

Test accuracy is a surrogate for patient-important outcomes

The main contribution of this article is that it presents a framework for thinking about diagnostic tests in terms of their impact on outcomes important to patients (“patient-important outcomes”). Usually, when clinicians think about diagnostic tests they focus on test accuracy (that is, how well the test classifies patients correctly as having or not having a disease). The underlying assumption is, however, that obtaining a better idea of whether a target condition is present or absent will result in superior management of patients and improved outcome. In the example of imaging for metastatic disease in patients presenting with apparently operable lung cancer, the presumption is that positive additional tests will spare patients the morbidity and early mortality associated with futile thoracotomy.

The example of computed tomography for coronary artery disease described in the box illustrates another common rationale for a new test: replacement of another test (coronary computed tomography instead of conventional angiography) to avoid complications associated with a more invasive and expensive alternative.2 In this paradigmatic situation, the new test would need only to replicate the sensitivity and specificity (accuracy) of the reference standard to show superiority.

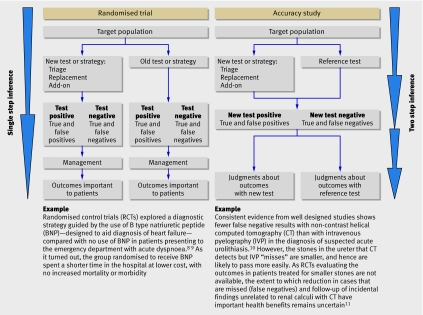

However, if a test fails to improve important outcomes, no reason exists for its use, whatever its accuracy. Thus, the best way to assess a diagnostic strategy is a controlled trial in which investigators randomise patients to experimental or control diagnostic approaches and measure mortality, morbidity, symptoms, and quality of life.7 Figure 1 illustrates two generic study structures that investigators can use to evaluate the impact of testing.

Fig 1 Two generic ways in which a test or diagnostic strategy can be evaluated. On the left, patients are randomised to a new test or strategy or to an old test or strategy. Those with a positive test result (cases detected) are randomised (or were previously randomised) to receive the best available management (second step of randomisation for management not shown). Investigators evaluate and compare patient-important outcomes in all patients in both groups.2 On the right, patients receive both a new test and a reference test (old or comparator test or strategy). Investigators can then calculate the accuracy of the test compared with the reference test (first step). To make judgments about importance to patients of this information, patients with a positive test (or strategy) in either group are (or have been in previous studies) submitted to treatment or no treatment; investigators then evaluate and compare patient-important outcomes in all patients in both groups (second step)

When diagnostic intervention studies—ideally randomised controlled trials but also observational studies—comparing alternative diagnostic strategies with assessment of direct patient-important outcomes are available (fig 1, left), guideline panels can use the GRADE approach described for other interventions in previous articles in this series.12 13 If studies measuring the impact of testing on patient-important outcomes are not available, guideline panels must focus on studies of test accuracy and make inferences about the likely impact on patient-important outcomes (fig 1, right).14 In the second situation, diagnostic accuracy is a surrogate outcome for benefits and harms to patients.1

The key questions are whether the number of false negatives (cases missed) or false positives will be reduced, with corresponding increases in true positives and true negatives; how accurately similar or different patients are classified by the alternative testing strategies; and what outcomes occur in both patients labelled as cases and those labelled as not having disease. Table 1 presents examples that illustrate these questions. We discuss these questions in subsequent sections of this article, all of which will focus on using studies of diagnostic accuracy to develop recommendations.

Table 1.

Examples and implications of different testing scenarios focusing on accuracy

| Example of new test and reference test or strategy | Putative benefit of new test | Sensitivity | Specificity | Patients’ outcomes and expected impact on management | True positives | True negatives | False positives | False negatives | Balance between presumed outcomes, test complications, and cost |
|---|---|---|---|---|---|---|---|---|---|
| Shorter version of dementia test compared with original mini mental state exam for diagnosis of dementia | Simpler test, less time | Equal | Equal | Presumed influence on patient-important outcomes | Uncertain benefit from earlier diagnosis and treatment | Almost certain benefit from reassurance | Likely anxiety and possible morbidity from additional testing and treatment | Possible detriment from delayed diagnosis | Evidence of shorter time and similar test accuracy (and thus patients’ outcomes) would generally support new test’s usefulness |
| | | | | Directness of evidence (test results) for outcomes important to patients | Some uncertainty | No uncertainty | Some uncertainty | Major uncertainty | |
| Helical computed tomography for renal calculus compared with intravenous pyelogram (IVP) | Detection of more (but smaller) calculi | Greater | Equal | Presumed influence on patient-important outcomes | Certain benefit for larger stones; less clear benefit for smaller stones, and unnecessary treatment can result | Almost certain benefit from avoiding unnecessary tests | Likely detriment from unnecessary additional invasive tests | Likely detriment for large stones; less certain for small stones, but possible detriment from unnecessary additional invasive tests for other potential causes of complaints | Fewer complications and downsides compared with IVP would support new test’s usefulness, but balance between desirable and undesirable effects is not clear in view of uncertain consequences of identifying smaller stones |
| | | | | Directness of evidence (test results) for patient-important outcomes | Some uncertainty | No uncertainty | No uncertainty | Major uncertainty | |
| Computed tomography for coronary artery disease compared with coronary angiography | Less invasive testing, but misses some cases | Slightly less | Less | Presumed influence on patient-important outcomes | Benefit from treatment and fewer complications | Benefit from reassurance and fewer complications | Harm from unnecessary treatment | Detriment from delayed diagnosis or myocardial insult | Undesirable consequences of more false positives and false negatives with computed tomography are not acceptable despite higher rate of rare complications (infarction and death) and higher cost of angiography |
| | | | | Directness of evidence (test results) for patient-important outcomes | No uncertainty | No uncertainty | No uncertainty | Some uncertainty | |

See text for explanations of terms.

Using indirect evidence to make inferences about impact on patient-important outcomes

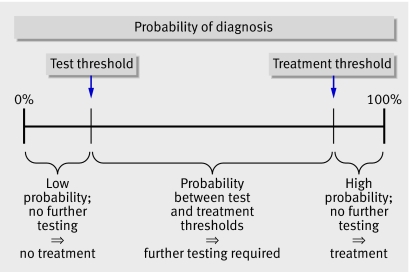

A recommendation associated with a diagnostic question depends on the balance between the desirable and undesirable consequences of the diagnostic test or strategy and should be based on a systematic review that focuses on the clinical question. We will use a simplified approach that classifies test results into those yielding true positives (patients correctly classified above the treatment threshold—table 1 and fig 2), false positives (patients incorrectly classified above the treatment threshold), true negatives (patients correctly classified below the testing threshold), and false negatives (patients incorrectly classified below the testing threshold).

Fig 2 Test and treatment thresholds. What clinicians expect of a good test is that results change the probability sufficiently to confirm or exclude a diagnosis. Tests, however, only alter the probability of a disease of interest being present. If a test result moves the probability of the condition of interest to below the test threshold, this indicates that the condition is very unlikely, the downsides associated with any further testing and treatment for this condition outweigh any anticipated benefit, and no further testing or treatment for that condition should follow. If the test result increases the probability of disease to above the treatment threshold, this indicates that the condition is very likely, confirmatory testing that raises the probability of the condition further is unnecessary, and the anticipated benefits of treatment outweigh potential harms. If the pre-test probability is above the treatment threshold, further confirmatory testing that raises the probability further would not be helpful. If the pre-test probability is below the test threshold, further exclusionary testing would not be useful. When the probability is between the test and treatment thresholds, testing will be useful. Test results are of greatest value when they shift the probability across either threshold
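The threshold logic in fig 2 can be sketched with the odds form of Bayes’ theorem, in which post-test odds equal pre-test odds multiplied by the likelihood ratio of the result. This is a sketch under stated assumptions. The threshold values (5% and 60%) and the function names are hypothetical, because real thresholds depend on the harms and benefits of testing and treatment for the condition in question. The likelihood ratios are those reported in table 3 for coronary computed tomography, and the 20% pre-test probability is the one used in tables 3 and 4. They are used here only as an illustration.

```python
def post_test_probability(pre_test_prob, likelihood_ratio):
    """Convert a pre-test probability to a post-test probability via odds."""
    pre_odds = pre_test_prob / (1 - pre_test_prob)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)

def action(prob, test_threshold, treatment_threshold):
    """Map a probability to the zone of fig 2 in which it falls."""
    if prob < test_threshold:
        return "below test threshold: no further testing or treatment"
    if prob > treatment_threshold:
        return "above treatment threshold: treat"
    return "between thresholds: further testing useful"

# Hypothetical thresholds, for illustration only.
TEST_THRESHOLD, TREATMENT_THRESHOLD = 0.05, 0.60
pre = 0.20  # pre-test probability, as in tables 3 and 4
for label, lr in (("positive", 5.4), ("negative", 0.05)):
    post = post_test_probability(pre, lr)
    print(f"{label} result: {post:.3f} -> {action(post, TEST_THRESHOLD, TREATMENT_THRESHOLD)}")
# positive result: 0.574 -> between thresholds: further testing useful
# negative result: 0.012 -> below test threshold: no further testing or treatment
```

With these assumed thresholds, a negative result falls below the test threshold. A positive result leaves the probability between the thresholds, so further testing (for example, angiography) would still be needed.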

However, inferring from data on accuracy that a diagnostic test or strategy improves patient-important outcomes requires the availability of effective treatment.1 Alternatively, even without an effective treatment, an accurate test may be beneficial if it reduces test related adverse effects or reduces anxiety by excluding an ominous diagnosis, or if confirming a diagnosis improves patients’ wellbeing through the prognostic information it imparts.

For instance, the results of genetic testing for Huntington’s chorea, an untreatable condition, may provide either welcome reassurance that a patient will not have the condition or the ability to plan for his or her future knowing that he or she will develop the condition. In this case, the ability to plan is analogous to an effective treatment and the benefits of planning need to be balanced against the downsides of receiving an early diagnosis.15 16 17 We will now describe the factors that influence judgments about the balance of desirable and undesirable effects, focusing on the quality of evidence.

Judgments about quality of underlying evidence

Study design

GRADE’s four categories of quality of evidence imply a gradient of confidence in estimates of the effect of a diagnostic test strategy on patient-important outcomes.13 High quality evidence comes from randomised controlled trials directly comparing the impact of alternative diagnostic strategies on patient-important outcomes (for example, trials of B type natriuretic peptide for heart failure as described in figure 1) without limitations in study design and conduct, imprecision (that is, powered to detect differences in patient-important outcomes), inconsistency, indirectness, and reporting bias.13 18 20

Although valid studies of accuracy also start as high quality in the diagnostic framework, such studies are vulnerable to limitations and often provide low quality evidence for recommendations, particularly as a result of the indirect evidence they usually offer on the impact of subsequent management on patient-important outcomes. Table 2 describes how GRADE deals with the particular challenges of judging the quality of evidence on desirable and undesirable consequences of alternative diagnostic strategies.

Table 2.

Factors that decrease quality of evidence for studies of diagnostic accuracy and how they differ from evidence for other interventions

| Factors that determine and can decrease quality of evidence | Explanations and differences from quality of evidence for other interventions |
|---|---|
| Study design | Different criteria for accuracy studies—Cross sectional or cohort studies in patients with diagnostic uncertainty and direct comparison of test results with an appropriate reference standard are considered high quality and can move to moderate, low, or very low depending on other factors |
| Limitations (risk of bias) | Different criteria for accuracy studies—Consecutive patients should be recruited as a single cohort and not classified by disease state, and selection as well as referral process should be clearly described.7 Tests should be done in all patients in the same patient population for new test and well described reference standard; evaluators should be blind to results of alternative test and reference standard |
| Indirectness: | |
| Outcomes | Similar criteria—Panels assessing diagnostic tests often face an absence of direct evidence about impact on patient-important outcomes. They must make deductions from studies of diagnostic tests about the balance between the presumed influences on patient-important outcomes of any differences in true and false positives and true and false negatives in relation to complications and costs of the test. Therefore, accuracy studies typically provide low quality evidence for making recommendations owing to indirectness of the outcomes, similar to surrogate outcomes for treatments |
| Patient populations, diagnostic test, comparison test, and indirect comparisons | Similar criteria—Quality of evidence can be reduced if important differences exist between populations studied and those for whom recommendation is intended (in previous testing, spectrum of disease or comorbidity); if important differences exist in tests studied and diagnostic expertise of people applying them in studies compared with settings for which recommendations are intended; or if tests being compared are each compared with a reference (gold) standard in different studies and not directly compared in same studies |
| Important inconsistency in study results | Similar criteria—For accuracy studies, unexplained inconsistency in sensitivity, specificity, or likelihood ratios (rather than relative risk or mean differences) can reduce quality of evidence |
| Imprecise evidence | Similar criteria—For accuracy studies, wide confidence intervals for estimates of test accuracy or true and false positive and negative rates can reduce quality of evidence |
| High probability of publication bias | Similar criteria—High risk of publication bias (for example, evidence from small studies for new intervention or test, or asymmetry in funnel plot) can lower quality of evidence |

Study limitations (risk of bias)

Valid studies of diagnostic test accuracy include representative and consecutive patients in whom legitimate diagnostic uncertainty exists—that is, the sort of patients to whom clinicians would apply the test in the course of regular clinical practice. If studies fail this criterion—and, for example, enrol severe cases and healthy controls—the apparent accuracy of a test is likely to be misleadingly high.21 22

Valid studies of diagnostic tests involve a comparison between the test or tests under consideration and an appropriate reference (sometimes called “gold”) standard. Investigators’ failure to make such a comparison in all patients increases the risk of bias. The risk of bias is further increased if the people who carry out or interpret the test are aware of the results of the reference or gold standard test or vice versa. Guideline panels can use existing instruments to assess the risk of bias in studies evaluating accuracy of diagnostic tests, and the results may lead to downgrading of the quality of evidence if serious limitations exist.23 24 25

Directness

We described considerations about directness for other interventions in a previous article.13 Judging directness presents additional, perhaps greater, challenges for guideline panels making recommendations about diagnostic tests. If a new test reduces false positives and false negatives, to what extent will that reduction lead to improvement in patient-important outcomes? Alternatively, a new test may be simpler to do, with lower risk and cost, but may produce more false positives and false negatives. Consider the consequences of replacing invasive angiography with coronary computed tomography scanning for the diagnosis of coronary artery disease (tables 3 and 4). True positive results will lead to the administration of treatments of known effectiveness (drugs, angioplasty and stents, bypass surgery); true negative results will spare patients the possible adverse effects of the reference standard test; false positive results will result in adverse effects (unnecessary drugs and interventions, including the possibility of follow-up angioplasty) without apparent benefit; and false negatives will result in patients not receiving the benefits of available interventions that help to reduce the subsequent risk of coronary events.

Table 3.

Key findings of diagnostic accuracy studies—should multislice spiral computed tomography rather than conventional coronary angiography* be used to diagnose coronary artery disease in a population with a low (20%) pre-test probability?6

| Measure | Test findings (95% CI) |
|---|---|
| Pooled sensitivity | 0.96 (0.94 to 0.98) |
| Pooled specificity | 0.74 (0.65 to 0.84) |
| Positive likelihood ratio† | 5.4 (3.4 to 8.3) |
| Negative likelihood ratio† | 0.05 (0.03 to 0.09) |

*Assuming that the reference standard, angiography, does not yield false positives or false negatives.

†Average likelihood ratios from Hamon et al.6
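Likelihood ratios follow from sensitivity and specificity, and they update the pre-test odds of disease. As a worked check, the equations below apply the standard definitions to the pooled estimates in table 3. Table 3 reports likelihood ratios averaged across studies (see the footnote above), so values derived from the pooled sensitivity and specificity need not match them.

```latex
\begin{aligned}
\mathrm{LR}^{+} &= \frac{\text{sensitivity}}{1-\text{specificity}} = \frac{0.96}{1-0.74} \approx 3.7,\\
\mathrm{LR}^{-} &= \frac{1-\text{sensitivity}}{\text{specificity}} = \frac{1-0.96}{0.74} \approx 0.054,\\
\text{post-test odds} &= \text{pre-test odds} \times \mathrm{LR}, \qquad \text{pre-test odds} = \frac{0.20}{1-0.20} = 0.25.
\end{aligned}
```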

Table 4.

Consequences of key findings of diagnostic accuracy studies—should multislice spiral computed tomography rather than conventional coronary angiography* be used to diagnose coronary artery disease in a population with a low (20%) pre-test probability?6

| Consequences | No per 1000 patients | Importance† |
|---|---|---|
| True positive results‡ | 192 | 8 |
| True negative results§ | 592 | 8 |
| False positive results¶ | 208 | 7 |
| False negative results** | 8 | 9 |
| Inconclusive results††§§ | – | 5 |
| Complications‡‡§§ | – | 5 |
| Cost§§ | – | 5 |

All results given per 1000 patients tested, for a prevalence of 20% and the pooled sensitivity and specificity shown in table 3.

*Assuming that the reference standard, angiography, does not yield false positives or false negatives.

†On a 9 point scale, GRADE recommends classifying these outcomes as not important (score 1-3), important (4-6), and critical (7-9) to a decision.13 18 19

‡Important because mandates drugs, angioplasty and stents, bypass surgery.

§Important because spares patients unnecessary interventions associated with adverse effects.

¶Important because patients are exposed to unnecessary potential adverse effects from drugs and invasive procedures.

**Important because they increase risk of coronary events as a result of patients not receiving efficacious treatment.

††Uninterpretable, indeterminate, or intermediate test results; important because they generate anxiety, uncertainty as to how to proceed, further testing, and possible negative consequences of either treating or not treating.

‡‡Not reliably reported; important because although rare, they can be serious.

§§Although the data for these consequences are not reported for simplicity or because they are not exactly known on the basis of the available data, they are important.
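The 9 point importance scale in the footnote to table 4 amounts to a simple banding rule. The sketch below applies that rule to the importance scores listed in table 4. The function name `grade_importance` is hypothetical.

```python
def grade_importance(score):
    """Band a 1-9 importance rating into GRADE's three categories."""
    if not 1 <= score <= 9:
        raise ValueError("importance scores run from 1 to 9")
    if score <= 3:
        return "not important"
    if score <= 6:
        return "important"
    return "critical"

# Importance ratings as listed in table 4.
ratings = {
    "true positives": 8, "true negatives": 8, "false positives": 7,
    "false negatives": 9, "inconclusive results": 5, "complications": 5, "cost": 5,
}
for consequence, score in ratings.items():
    print(f"{consequence}: {score} ({grade_importance(score)})")
```

On this banding, the four accuracy consequences are critical and the other three are important. This matches the critical outcomes summarised in table 5.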

Thus, inferences that minimising false positives and false negatives will benefit patients, and that increasing them will have a negative impact on patient-important outcomes, are relatively strong. As for outcomes in other intervention studies, the degree of importance of these consequences for patients varies and should be considered by guideline panels when balancing desirable and undesirable consequences; for example, patients will place a greater value on preventing myocardial infarctions than on preventing a mild episode of angina. The impact of inconclusive test results is less clear; they are nonetheless undesirable, in that they are likely to induce anxiety and may lead to unnecessary interventions, induce further testing, or delay the application of effective treatment. The complications of invasive angiography (infarction and death), although rare, are important.

Because our knowledge of the consequences of the rates of false positives, false negatives, inconclusive results, and complications with the alternative diagnostic strategies is fairly secure, and those outcomes are important, we can make strong inferences about the relative impact of computed tomography scanning and conventional angiography on patient-important outcomes. In this example with a relatively low pre-test probability of coronary artery disease, computed tomography scanning results in a large number of false positives (208 per 1000 patients tested), leading to unnecessary anxiety and further testing, including coronary angiography, after time and resources have been spent on computed tomography scanning (table 4). It also misses coronary artery disease in about 1% of patients tested (8 false negatives per 1000).
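The per 1000 figures in table 4 follow from the 20% prevalence and the pooled sensitivity (0.96) and specificity (0.74) in table 3. Angiography is treated as a perfect reference standard, as the table footnote assumes. The sketch below reproduces the arithmetic. The function name `consequences_per_1000` is illustrative and not taken from the source.

```python
def consequences_per_1000(prevalence, sensitivity, specificity, n=1000):
    """Expected true/false positives and negatives among n patients tested."""
    diseased = n * prevalence
    non_diseased = n - diseased
    return {
        "true positives": round(diseased * sensitivity),
        "false negatives": round(diseased * (1 - sensitivity)),
        "true negatives": round(non_diseased * specificity),
        "false positives": round(non_diseased * (1 - specificity)),
    }

counts = consequences_per_1000(prevalence=0.20, sensitivity=0.96, specificity=0.74)
print(counts)
# {'true positives': 192, 'false negatives': 8, 'true negatives': 592, 'false positives': 208}
```

The 8 false negatives are 0.8% of all patients tested. They are 4% (8 of 200) of the patients who actually have coronary artery disease.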

Uncertainty about the consequences of the false positive and false negative results will weaken inferences about the balance between desirable and undesirable consequences. Consider the consequences of false positive and false negative results of diagnostic imaging for patients in whom acute sinusitis is suspected. As the primary benefit of treatment is shortening the duration of illness and symptoms, the balance of the consequences important to patients is less clear. Patients with false negative results are deprived of antibiotics and will have a longer duration of symptoms and an increased risk of complications from the infection, but they have no side effects from antibiotics. Patients with false positive results receive antibiotics when they should not, but may feel relieved that they have received care and treatment. Furthermore, guideline panels will have to consider the societal consequences (such as antibiotic resistance) of administering antibiotics to false positive cases.3

Consider once again the use of B type natriuretic peptide for heart failure (fig 1). The test may be accurate, but if clinicians are already making the diagnosis with near perfect accuracy, and instituting appropriate treatment, the test will not improve outcomes for patients. Even if clinicians are initially inaccurate but correct their mistakes as the clinical picture unfolds (for example, by withdrawing initial unnecessary diuretic treatment or subsequently recognising the need for diuretic treatment), patients’ outcomes may be unaffected. The link between test result and outcome here is sufficiently weak that, other considerations aside, the diagnostic accuracy information alone would provide only low quality evidence. In this case, however, two randomised controlled trials showed that (at least in their setting) B type natriuretic peptide testing reduced hospital admissions and length of hospital stay without apparent adverse consequences.

Guideline panels considering questions of diagnosis also face the same sort of challenges regarding indirectness as do panels making recommendations for other interventions. Test accuracy may vary across populations of patients: panels therefore need to consider how well the populations included in studies of diagnosis correspond to the populations that are the subject of the recommendations. Similarly, panels need to consider how comparable new tests and reference tests are to the tests used in the settings for which the recommendations are made. Finally, when evaluating two or more alternative new tests or strategies, panels need to consider whether these diagnostic strategies were compared directly (in one study) or indirectly (in separate studies) with a common (reference) standard.26 27 28

Arriving at a bottom line for study quality

Table 5 shows the evidence profile and the quality assessment for all critical outcomes of computed tomography angiography in comparison with invasive angiography. The original accuracy studies were well planned and executed, the results are precise, and we do not suspect important publication bias. Little or no uncertainty exists about the directness of the evidence (for test results) for patient-important outcomes for true positives, false positives, and true negatives (tables 1 and 5). However, some uncertainty about the extent to which limitations in test accuracy will have deleterious consequences on patient-important outcomes for false negatives led to downgrading of the quality of evidence from high to moderate (that is, we believe the evidence is indirect for false negatives because we are uncertain that delayed diagnoses of coronary artery disease lead to worse outcomes).

Table 5.

Quality assessment of diagnostic accuracy studies—example: should multislice spiral computed tomography be used instead of conventional coronary angiography for diagnosis of coronary artery disease?*

| Outcome | No of studies (patients) | Design | Limitations | Indirectness | Inconsistency | Imprecise data | Publication bias | Quality |
|---|---|---|---|---|---|---|---|---|
| True positives (patients with coronary artery disease) | 21 studies (1570 patients) | Cross sectional studies† | No serious limitations | Little or no uncertainty | Serious inconsistency§ | No serious imprecision | Unlikely¶ | ⊕⊕⊕◯ Moderate |
| True negatives (patients without coronary artery disease) | 21 studies (1570 patients) | Cross sectional studies† | No serious limitations | Little or no uncertainty | Serious inconsistency§ | No serious imprecision | Unlikely¶ | ⊕⊕⊕◯ Moderate |
| False positives (patients incorrectly classified as having coronary artery disease) | 21 studies (1570 patients) | Cross sectional studies† | No serious limitations | Little or no uncertainty | Serious inconsistency§ | No serious imprecision | Unlikely¶ | ⊕⊕⊕◯ Moderate |
| False negatives (patients incorrectly classified as not having coronary artery disease) | 21 studies (1570 patients) | Cross sectional studies† | No serious limitations | Some uncertainty‡ | Serious inconsistency§ | No serious imprecision | Unlikely¶ | ⊕⊕◯◯ Low |

*Full quality assessment would include a row for outcomes important to patients associated with each possible test result (true positive, true negative, false positive, false negative, and inconclusive) as well as complications and costs of test (see table 4); simplified summary of quality of evidence for critical outcomes presented here.

†All patients were selected to have conventional coronary angiography and were, therefore, generally presenting with high probability of coronary artery disease (median prevalence in included studies 63.5%, range 6.6-100%).

‡Some uncertainty about directness for false negatives related to detrimental effects from delayed diagnosis or myocardial insult, reducing quality of evidence for consequences of false negative test results from high to moderate.

§Statistically significant, unexplained heterogeneity of results for sensitivity (proportion of patients with positive coronary angiography with positive computed tomography scan), specificity (proportion of patients with negative coronary angiography with negative computed tomography scan), likelihood ratios, and diagnostic odds ratios, reducing quality of evidence for consequences of true positive, true negative, and false positive results from high to moderate and of false negative results from moderate to low.13

¶Possibility of publication bias not excluded but not considered sufficient to downgrade quality of evidence.
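The accuracy measures named in footnote § can all be derived from a single study's 2×2 table of index test results against the reference standard. The sketch below assumes one study with no zero cells and uses hypothetical counts; the class `TwoByTwo` and its property names are illustrative, not taken from the meta-analysis.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TwoByTwo:
    """Hypothetical counts: index test (e.g. CT) against reference standard (e.g. angiography)."""
    tp: int  # reference positive, index test positive
    fp: int  # reference negative, index test positive
    fn: int  # reference positive, index test negative
    tn: int  # reference negative, index test negative

    @property
    def sensitivity(self) -> float:
        # Proportion of reference-positive patients with a positive index test
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        # Proportion of reference-negative patients with a negative index test
        return self.tn / (self.tn + self.fp)

    @property
    def positive_lr(self) -> float:
        # Factor by which a positive result multiplies the odds of disease
        return self.sensitivity / (1 - self.specificity)

    @property
    def negative_lr(self) -> float:
        # Factor by which a negative result multiplies the odds of disease
        return (1 - self.sensitivity) / self.specificity

    @property
    def diagnostic_odds_ratio(self) -> float:
        # Equals positive_lr / negative_lr; undefined when fp or fn is zero
        return (self.tp * self.tn) / (self.fp * self.fn)


study = TwoByTwo(tp=80, fp=30, fn=20, tn=70)  # hypothetical study
print(f"sens {study.sensitivity:.2f}, spec {study.specificity:.2f}, "
      f"LR+ {study.positive_lr:.2f}, LR- {study.negative_lr:.2f}, DOR {study.diagnostic_odds_ratio:.2f}")
```

Because the diagnostic odds ratio collapses sensitivity and specificity into one number, heterogeneity in it can coexist with quite different trade-offs between false positives and false negatives across studies.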

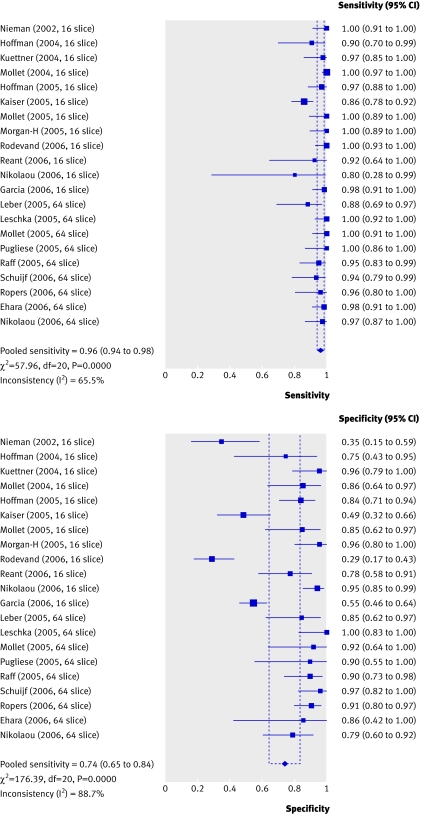

Problems with inconsistency also exist. Reviewers considering the relative merits of computed tomography and invasive angiography for the diagnosis of coronary disease found important heterogeneity, which they could not explain, in the proportion of angiography positive patients with a positive computed tomography result (sensitivity) and in the proportion of angiography negative patients with a negative computed tomography result (specificity) (fig 3). This heterogeneity was also present for other measures of diagnostic test performance (positive and negative likelihood ratios and diagnostic odds ratios). Unexplained heterogeneity in results across studies further reduced the quality of evidence for all outcomes. Major uncertainty about the impact of false negative results on patient-important outcomes would have led to downgrading of the quality of evidence from high to low for the other examples in table 1.

Fig 3 Example of heterogeneity in diagnostic test results. Sensitivity and specificity of multislice coronary computed tomography compared with coronary angiography (from Hamon et al6). This heterogeneity also existed for likelihood ratios and diagnostic odds ratios.
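Heterogeneity of the kind shown in fig 3 is commonly summarised with Cochran's Q and the I² statistic. The sketch below computes both for per-study sensitivities on the logit scale, using inverse-variance weights and a 0.5 continuity correction for studies with zero or all events. The function name and the example counts are hypothetical, and this univariate check is not necessarily the method Hamon et al used; diagnostic meta-analyses often model sensitivity and specificity jointly.

```python
import math


def logit_heterogeneity(events: list[int], totals: list[int]) -> tuple[float, float]:
    """Return (Cochran's Q, I^2) for per-study proportions on the logit scale.

    events[i]: e.g. true positives in study i; totals[i]: reference-positive patients in study i.
    """
    logits, weights = [], []
    for e, n in zip(events, totals):
        a, b = float(e), float(n - e)
        if a == 0 or b == 0:  # continuity correction so the logit is defined
            a, b = a + 0.5, b + 0.5
        logits.append(math.log(a / b))             # logit of the proportion
        weights.append(1.0 / (1.0 / a + 1.0 / b))  # inverse of the logit variance
    pooled = sum(w * y for w, y in zip(weights, logits)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, logits))
    df = len(logits) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0  # share of variation beyond chance
    return q, i2


# Three hypothetical studies with sensitivities of 0.95, 0.60 and 0.30
q, i2 = logit_heterogeneity([95, 60, 30], [100, 100, 100])
print(f"Q = {q:.1f} on 2 df, I^2 = {i2:.0%}")
```

Q is compared against a chi-squared distribution with k - 1 degrees of freedom; a large I² signals variation that chance alone is unlikely to explain, which is what prompts the search for (and, failing that, downgrading for) unexplained inconsistency.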

Arriving at a recommendation

The balance between the presumed patient-important outcomes of true positive, false positive, true negative, and false negative results and the complications of testing determines whether a guideline panel makes a recommendation for or against applying a test.12 Other factors influencing the strength of a recommendation include the quality of the evidence, the uncertainty about values and preferences associated with the tests and the presumed patient-important outcomes, and cost.
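One way to make this balance concrete is to translate accuracy into the number of patients per 1000 tested who fall into each result category, since each category carries its own downstream consequences. The sketch below uses the median prevalence reported in footnote † (63.5%) together with sensitivity and specificity values that are hypothetical and chosen only for illustration; `consequences_per_1000` is an illustrative name.

```python
def consequences_per_1000(prevalence: float, sensitivity: float, specificity: float,
                          n: int = 1000) -> dict[str, float]:
    """Expected number of patients in each result category among n tested."""
    diseased = n * prevalence
    healthy = n - diseased
    return {
        "true_positive": diseased * sensitivity,         # correctly identified, can be treated
        "false_negative": diseased * (1 - sensitivity),  # disease missed, treatment delayed
        "false_positive": healthy * (1 - specificity),   # unnecessary further testing or treatment
        "true_negative": healthy * specificity,          # correctly reassured
    }


# Median prevalence from footnote †; sensitivity and specificity are hypothetical
counts = consequences_per_1000(prevalence=0.635, sensitivity=0.96, specificity=0.74)
print({category: round(value) for category, value in counts.items()})
```

A panel would then weigh, for example, the harm of each missed diagnosis against the harm of each unnecessary referral, rather than the sensitivity and specificity figures themselves.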

Coronary computed tomography scanning avoids the adverse consequences of invasive angiography, which can include myocardial infarction and death. These consequences are, however, very rare. As a result, a guideline panel evaluating multislice spiral computed tomography as a replacement for coronary angiography could, despite its lower cost, make a weak recommendation against its use in place of invasive coronary angiography. This recommendation follows from the large number of false positives and the risk of missing patients with the disease who could be treated effectively (false negatives). It also reflects the fact that the evidence for the new test is of only low quality, and the consideration of values and preferences. Despite the general preference for less invasive tests with lower risks of complications, most patients would probably favour the more invasive approach (angiography). This reasoning rests on the assumption that patients would place a higher value on reassurance about the presence or absence of coronary disease, and on the institution of risk reducing strategies, than on avoiding the complications of angiography. Guideline panels considering the use of coronary computed tomography compared with no direct imaging of the coronary arteries (for instance, in settings with inadequate access to angiography, where computed tomography is not a replacement for angiography but a triage tool) may find the evidence to be of higher quality and make a strong recommendation for its use in identifying patients who could be referred for angiography and further treatment.

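An alternative way to conceptualise the formulation of strong and weak recommendations relates to figure 2. Test strategies that move patients' probability of disease below the test threshold or above the treatment threshold (given that effective treatment exists) will often lead to strong recommendations, because such results settle management: below the test threshold no further testing or treatment is warranted, and above the treatment threshold treatment is warranted.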
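Whether a result moves a patient across a threshold can be checked with Bayes' theorem in odds form: post-test odds equal pre-test odds multiplied by the likelihood ratio of the result. The sketch below assumes a pre-test probability of 30%, a test threshold of 5%, a treatment threshold of 60%, and likelihood ratios of 9 and 0.11; all of these values, and both function names, are hypothetical.

```python
def post_test_probability(pre_test: float, likelihood_ratio: float) -> float:
    """Bayes' theorem in odds form: post-test odds = pre-test odds x likelihood ratio."""
    if not 0 < pre_test < 1:
        raise ValueError("pre-test probability must be strictly between 0 and 1")
    pre_odds = pre_test / (1 - pre_test)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


def management_zone(probability: float, test_threshold: float, treatment_threshold: float) -> str:
    """Place a probability of disease relative to the test and treatment thresholds."""
    if not 0 <= test_threshold < treatment_threshold <= 1:
        raise ValueError("need 0 <= test_threshold < treatment_threshold <= 1")
    if probability < test_threshold:
        return "below test threshold: no further testing or treatment"
    if probability > treatment_threshold:
        return "above treatment threshold: treat"
    return "between thresholds: further testing warranted"


# Hypothetical thresholds and likelihood ratios, for illustration only
TEST_THRESHOLD, TREATMENT_THRESHOLD = 0.05, 0.60
for lr in (9.0, 0.11):
    p = post_test_probability(0.30, lr)
    print(f"LR {lr}: post-test probability {p:.2f} -> "
          f"{management_zone(p, TEST_THRESHOLD, TREATMENT_THRESHOLD)}")
```

Under these assumptions both a positive and a negative result move the patient out of the zone between the thresholds, which is the situation the paragraph above associates with strong recommendations.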

In addition, users of recommendations on diagnostic tests should check whether the pre-test probability range to which a recommendation applies matches their own patients. The likelihood of disease (prevalence or pre-test probability) in the patient in front of them will often influence the probability that a test result in that patient is a true positive or a false positive. Recommendations for populations with different baseline risks or likelihood of disease may therefore be appropriate. In particular, recommendations for screening (low risk populations) will almost always differ from recommendations for using a test for diagnosis in patients in whom a disease is suspected.
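The effect of pre-test probability can be seen by holding a test's accuracy fixed and varying only the prevalence. The sketch below assumes a hypothetical test with sensitivity and specificity of 0.90 and compares a low risk screening population (1%) with the median prevalence from footnote † (63.5%); `predictive_values` is an illustrative name.

```python
def predictive_values(prevalence: float, sensitivity: float,
                      specificity: float) -> tuple[float, float]:
    """Return (positive predictive value, negative predictive value) at a given prevalence."""
    tp = prevalence * sensitivity              # share of tested patients who are true positives
    fp = (1 - prevalence) * (1 - specificity)  # share who are false positives
    fn = prevalence * (1 - sensitivity)        # share who are false negatives
    tn = (1 - prevalence) * specificity        # share who are true negatives
    return tp / (tp + fp), tn / (tn + fn)


# Same hypothetical test (sensitivity = specificity = 0.90) in two settings
for label, prevalence in (("screening, low risk", 0.01), ("suspected disease", 0.635)):
    ppv, npv = predictive_values(prevalence, 0.90, 0.90)
    print(f"{label}: PPV {ppv:.2f}, NPV {npv:.2f}")
```

In the screening setting most positive results are false positives (a positive predictive value of roughly 0.08), whereas in the high prevalence setting most are true positives; this is why one recommendation rarely fits both populations.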

Finally, individual clinicians and their patients will establish treatment and test thresholds on the basis of the individual patient's values and preferences. For example, a patient averse to the risks of coronary angiography might choose computed tomography over angiography, whereas most patients who are averse to the risk of false positive and false negative results, who place a high value on reassurance and knowledge about coronary disease, and who are willing to accept the risk of angiography will choose angiography instead of computed tomography. As for other recommendations, the exploration and integration of patients' values and preferences are critical to the development and implementation of recommendations for diagnostic tests.
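How a patient's values shift a threshold can be illustrated with a standard expected-value argument, which is not spelled out in this article: if treating a patient with the disease yields a benefit B and treating a patient without it causes a harm H (both on the same scale), treatment is preferred when p × B > (1 − p) × H, that is when p exceeds H / (H + B). The sketch below is a minimal illustration under that assumption; the function name and the benefit and harm values are hypothetical.

```python
def treatment_threshold(benefit_if_diseased: float, harm_if_not_diseased: float) -> float:
    """Probability of disease above which treating is preferred to not treating.

    Assumes a simple expected-value model: treat when
    p * benefit > (1 - p) * harm, i.e. p > harm / (harm + benefit).
    Both inputs are hypothetical utilities on a common scale and must be positive.
    """
    if benefit_if_diseased <= 0 or harm_if_not_diseased <= 0:
        raise ValueError("benefit and harm must both be positive")
    return harm_if_not_diseased / (harm_if_not_diseased + benefit_if_diseased)


# Two hypothetical patients who value the same benefit and harm differently
print(f"values benefit highly: threshold {treatment_threshold(9.0, 1.0):.2f}")
print(f"more averse to harm:   threshold {treatment_threshold(3.0, 1.0):.2f}")
```

The more weight a patient places on the harms of acting on a false positive relative to the benefits of acting on a true positive, the higher the probability of disease needed before treatment, or a more invasive test, becomes worthwhile.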

Conclusion

The GRADE approach to grading the quality of evidence and strength of recommendations for diagnostic tests provides a comprehensive and transparent approach for developing these recommendations. We have presented an overview of the approach, based on the recognition that test results are surrogate markers for benefits to patients. The application of the approach requires a shift in clinicians’ thinking to clearly recognise that, whatever their accuracy, diagnostic tests are of value only if they result in improved outcomes for patients.

We thank the many people and organisations that have contributed to the progress of the GRADE approach through funding of meetings and feedback on the work described in this article.

The members of the GRADE Working Group who participated in this work were Phil Alderson, Pablo Alonso-Coello, Jeff Andrews, David Atkins, Hilda Bastian, Hans de Beer, Jan Brozek, Francoise Cluzeau, Jonathan Craig, Ben Djulbegovic, Yngve Falck-Ytter, Beatrice Fervers, Signe Flottorp, Paul Glasziou, Gordon H Guyatt, Robin Harbour, Margaret Haugh, Mark Helfand, Sue Hill, Roman Jaeschke, Katharine Jones, Ilkka Kunnamo, Regina Kunz, Alessandro Liberati, Merce Marzo, James Mason, Jacek Mrukowics, Andrew D Oxman, Susan Norris, Vivian Robinson, Holger J Schünemann, Tessa Tan Torres, David Tovey, Peter Tugwell, Mariska Tuut, Helena Varonen, Gunn E Vist, Craig Wittington, John Williams, and James Woodcock.

Contributors: All listed authors, and other members of the GRADE working group, contributed to the development of the ideas in the manuscript, and read and approved the manuscript. HJS wrote the first draft and collated comments from authors and reviewers for subsequent iterations. All other listed authors contributed ideas about structure and content and provided feedback. HJS is the guarantor.

Funding: This work was partially funded by the European Commission grant “The human factor, mobility and Marie Curie Actions Scientist Reintegration” (IGR 42192-“GRADE”) to HJS.

Competing interests: The authors are members of the GRADE Working Group. The work with this group probably advanced the careers of some or all of the authors and group members. Authors listed in the byline have received travel reimbursement and honorariums for presentations that included a review of GRADE’s approach to grading the quality of evidence and strength of recommendations. GHG acts as a consultant to UpToDate; his work includes helping UpToDate in their use of GRADE. HJS is documents editor and methodologist for the American Thoracic Society; he supports the implementation of GRADE by this and other organisations worldwide. VMM supports the implementation of GRADE in several North American not for profit professional organisations.

Provenance and peer review: Not commissioned; externally peer reviewed.

This is the fourth in a series of five articles that explain the GRADE system for grading the quality of evidence and strength of recommendations.

References

- 1. Deeks JJ. Systematic reviews in health care: systematic reviews of evaluations of diagnostic and screening tests. BMJ 2001;323:157-62.

- 2. Bossuyt PM, Irwig L, Craig J, Glasziou P. Comparative accuracy: assessing new tests against existing diagnostic pathways. BMJ 2006;332:1089-92.

- 3. Oxman AD, Guyatt GH. Guidelines for reading literature reviews. CMAJ 1988;138:697-703.

- 4. Mulrow C, Linn WD, Gaul MK, Pugh JA. Assessing quality of a diagnostic test evaluation. J Gen Intern Med 1989;4:288-95.

- 5. Guyatt G, Montori V, Devereaux PJ, Schünemann H, Bhandari M. Patients at the center: in our practice, and in our use of language. ACP J Club 2004;140(1):A11-2.

- 6. Hamon M, Biondi-Zoccai GG, Malagutti P, Agostoni P, Morello R, Valgimigli M, et al. Diagnostic performance of multislice spiral computed tomography of coronary arteries as compared with conventional invasive coronary angiography: a meta-analysis. J Am Coll Cardiol 2006;48:1896-910.

- 7. Bossuyt PM, Lijmer JG, Mol BW. Randomised comparisons of medical tests: sometimes invalid, not always efficient. Lancet 2000;356:1844-7.

- 8. Mueller C, Scholer A, Laule-Kilian K, Martina B, Schindler C, Buser P, et al. Use of B-type natriuretic peptide in the evaluation and management of acute dyspnea. N Engl J Med 2004;350:647-54.

- 9. Moe G, Howlett J, Januzzi JL, Zowall H, Canadian multicenter improved management of patients with congestive heart failure (IMPROVE-CHF) Study Investigators. N-terminal pro-B-type natriuretic peptide testing improves the management of patients with suspected acute heart failure: primary results of the Canadian prospective randomized multicenter IMPROVE-CHF study. Circulation 2007;115:3103-10.

- 10. Worster A, Preyra I, Weaver B, Haines T. The accuracy of noncontrast helical computed tomography versus intravenous pyelography in the diagnosis of suspected acute urolithiasis: a meta-analysis. Ann Emerg Med 2002;40:280-6.

- 11. Worster A, Haines T. Does replacing intravenous pyelography with noncontrast helical computed tomography benefit patients with suspected acute urolithiasis? Can Assoc Radiol J 2002;53:144-8.

- 12. Guyatt GH, Oxman AD, Kunz R, Falck-Ytter Y, Vist GE, Liberati A, Schünemann HJ. Going from evidence to recommendations. BMJ 2008, doi: 10.1136/bmj.39493.646875.AE.

- 13. Guyatt GH, Oxman AD, Kunz R, Vist GE, Falck-Ytter Y, Schünemann HJ. What is “quality of evidence” and why is it important to clinicians? BMJ 2008, doi: 10.1136/bmj.39490.551019.BE.

- 14. Lord SJ, Irwig L, Simes RJ. When is measuring sensitivity and specificity sufficient to evaluate a diagnostic test, and when do we need randomized trials? Ann Intern Med 2006;144:850-5.

- 15. Maat-Kievit A, Vegter-van der Vlis M, Zoeteweij M, Losekoot M, van Haeringen A, Roos R. Paradox of a better test for Huntington’s disease. J Neurol Neurosurg Psychiatry 2000;69:579-83.

- 16. Walker FO. Huntington’s disease. Semin Neurol 2007;27:143-50.

- 17. Almqvist EW, Brinkman RR, Wiggins S, Hayden MR. Psychological consequences and predictors of adverse events in the first 5 years after predictive testing for Huntington’s disease. Clin Genet 2003;64:300-9.

- 18. Atkins D, Best D, Briss PA, Eccles M, Falck-Ytter Y, Flottorp S, et al. Grading quality of evidence and strength of recommendations. BMJ 2004;328:1490.

- 19. Schünemann HJ, Jaeschke R, Cook DJ, Bria WF, El-Solh AA, Ernst A, et al. An official ATS statement: grading the quality of evidence and strength of recommendations in ATS guidelines and recommendations. Am J Respir Crit Care Med 2006;174:605-14.

- 20. Deeks J. Assessing outcomes following tests. In: Price CP, Christenson RH, eds. Evidence-based laboratory medicine: principles, practice and outcomes. 2nd ed. Washington: AACC Press, 2007:95-111.

- 21. Rutjes AW, Reitsma JB, Di Nisio M, Smidt N, van Rijn JC, Bossuyt PM. Evidence of bias and variation in diagnostic accuracy studies. CMAJ 2006;174:469-76.

- 22. Lijmer JG, Mol BW, Heisterkamp S, Bonsel GJ, Prins MH, van der Meulen JH, et al. Empirical evidence of design-related bias in studies of diagnostic tests. JAMA 1999;282:1061-6.

- 23. Bossuyt PM, Reitsma JB, Bruns DE, Gatsonis CA, Glasziou PP, Irwig LM, et al. Towards complete and accurate reporting of studies of diagnostic accuracy: the STARD initiative. Ann Intern Med 2003;138:40-4.

- 24. Whiting P, Rutjes AW, Reitsma JB, Bossuyt PM, Kleijnen J. The development of QUADAS: a tool for the quality assessment of studies of diagnostic accuracy included in systematic reviews. BMC Med Res Methodol 2003;3:25.

- 25. Whiting PF, Weswood ME, Rutjes AW, Reitsma JB, Bossuyt PN, Kleijnen J. Evaluation of QUADAS, a tool for the quality assessment of diagnostic accuracy studies. BMC Med Res Methodol 2006;6:9.

- 26. Fletcher RH. Carcinoembryonic antigen. Ann Intern Med 1986;104:66-73.

- 27. Hlatky MA, Pryor DB, Harrell FE Jr, Califf RM, Mark DB, Rosati RA. Factors affecting sensitivity and specificity of exercise electrocardiography: multivariable analysis. Am J Med 1984;77:64-71.

- 28. Levy D, Labib SB, Anderson KM, Christiansen JC, Kannel WB, Castelli WP. Determinants of sensitivity and specificity of electrocardiographic criteria for left ventricular hypertrophy. Circulation 1990;81:815-20.