Abstract

Developmental differences in brain activation of 9- to 15-year-old children were examined during an auditory rhyme decision task to spoken words using functional magnetic resonance imaging (fMRI). As a group, children showed activation in left superior/middle temporal gyri (BA 22, 21), right middle temporal gyrus (BA 21), dorsal (BA 45, pars opercularis) and ventral (BA 46, pars triangularis) aspects of left inferior frontal gyrus, and left fusiform gyrus (BA 37). There was a developmental increase in activation in left middle temporal gyrus (BA 22) across all lexical conditions, suggesting that automatic semantic processing increases with age regardless of task demands. Activation in left dorsal inferior frontal gyrus also showed developmental increases for the conflicting (e.g. PINT-MINT) compared to the non-conflicting (e.g. PRESS-LIST) non-rhyming conditions, indicating that this area becomes increasingly involved in strategic phonological processing in the face of conflicting orthographic and phonological representations. Left inferior temporal/fusiform gyrus (BA 37) activation was also greater for the conflicting (e.g. PINT-MINT) condition, and a developmental increase was found in the positive relationship between individuals' reaction time and activation in left lingual/fusiform gyrus (BA 18) in this condition, indicating an age-related increase in the association between longer reaction times and greater visual-orthographic processing in this conflicting condition. These results suggest that orthographic processing is automatically engaged by children in a task that does not require access to orthographic information for correct performance, especially when orthographic and phonological representations conflict, and especially for longer response latencies in older children.

Introduction

Interaction of orthographic and phonological information is essential for acquiring fluent reading ability, which in turn is essential for functioning in a literate society. Phonological processing is defined as processing information encoded in the sound structure of spoken language (Campbell, 1992; Foorman, 1994; Wagner and Torgeson, 1987), whereas orthographic processing refers to processing information encoded in the spelling structure of written language (Foorman, Francis, Fletcher, and Lynn, 1996; Juel, 1983; Perfetti, 1984). Before learning to read, children process phonological information independently of orthographic information. However, reading acquisition requires making connections between our existing oral language system and written language. Therefore, phonological and orthographic representations become closely linked during reading acquisition. Behavioral and computational modeling research has shown that phonological and orthographic processes are more interactive in skilled versus less skilled readers (Booth, et al. 1999; Booth, et al. 2000; Plaut and Booth 2000). However, the neural mechanisms that underlie interactions between phonological and orthographic processes are not clearly understood. In the present study, we employed functional magnetic resonance imaging (fMRI) to elucidate the interaction between phonological and orthographic processing in children by examining the neural network activated during an auditorily-presented rhyme decision task in a group of typically-achieving 9- to 15-year-old children.

There is a long history of behavioral research with adults illustrating the influence of orthographic information on the speed of spoken word recognition during a variety of auditory language tasks employing rhyme judgment (Donnenwerth-Nolan, et al. 1981; Seidenberg and Tanenhaus 1979), phoneme monitoring (Dijkstra, et al. 1995), priming (Chereau, et al. 2007; Jakimik, et al. 1985), and lexical decision (Ziegler and Ferrand 1998). All of these behavioral studies demonstrated that reaction times differed based on the orthographic properties of stimulus words, suggesting that the processing of spoken word forms is influenced by orthographic representations.

Neuroimaging studies of auditory rhyme decision tasks in adults have helped elucidate the neural correlates of phonological and orthographic processes, as well as interactions between the two, during spoken language processing. These studies have provided a relatively consistent picture, showing activation in left inferior frontal gyrus and bilateral superior temporal gyrus (Booth, et al. 2002; Burton, et al. 2003; Burton, et al. 2005; Rudner, et al. 2005). Right superior temporal gyrus activation has been linked to perception of pitch variation in linguistic and non-linguistic stimuli (Johnsrude, et al. 2000; Scott, et al. 2000). Left superior temporal gyrus, on the other hand, has been implicated in access to phonological representations (Binder, et al. 1994; Scott, et al. 2000), and greater activation in left superior temporal gyrus was found to be correlated with higher accuracy and faster reaction times for auditory rhyme decisions in adults (Booth, et al. 2003a). Activation in left inferior frontal gyrus may reflect reliance on phonological segmentation processes (Hagoort, et al. 1999), increasing activation of motor programs involved in planning articulations (Rizzolatti and Craighero 2004) and/or greater top-down modulation of posterior regions associated with phonological processing (Bitan, et al. 2005). Some studies using an auditory rhyme decision task have additionally shown activation in right inferior frontal gyrus (Burton, et al. 2003; Rudner, et al. 2005), left fusiform gyrus (Booth, et al. 2002; Burton, et al. 2003; Burton, et al. 2005), and either bilateral inferior parietal lobules (Rudner, et al. 2005) or left angular gyrus (Burton, et al. 2003). Studies of visual word processing have implicated left fusiform gyrus in orthographic processing (Cohen, et al. 2004; Dehaene, et al. 2004; McCandliss, et al. 2003).
Activation of left fusiform gyrus during an auditory lexical task suggests that orthographic representations may be automatically accessed even when access to these representations is not required for correct performance. Previous neuroimaging studies have implicated inferior parietal cortex in mapping between orthographic and phonological representations (Booth, et al. 2002; Booth, et al. 2003a), and studies of developmental and acquired dyslexia have identified inferior parietal cortex as a common site for underactivation or lesions associated with these disorders (see Pugh, et al. 2000). Thus, activation in the inferior parietal cortex during auditory rhyme decision tasks may reflect the process of mapping between phonological representations in superior temporal gyrus and orthographic representations in fusiform cortex, a process essential to reading.

Neuroimaging research employing auditorily-presented rhyme decision tasks in children is more limited (Coch, et al. 2002; Corina, et al. 2001; Raizada, et al. in press). Raizada et al (in press) examined relationships among brain activation, behavior (including standardized test scores and rhyme task performance), and environmental variables (including socioeconomic status), in fourteen 5-year-old children. The degree of left-greater-than-right asymmetry of inferior frontal gyrus activation positively correlated with socioeconomic status, but no significant correlations emerged between activation and behavioral measures. Corina et al (2001) compared rhyme task activation in dyslexic versus typically-achieving 10- to 13-year-old boys (n = 8 in each group). The authors did not report main effects of the rhyme task within either group, so the effects of this task in typically-achieving children cannot be assessed. Coch et al (2002) examined developmental differences in auditory rhyme decisions using event-related potentials (ERP) in participants ranging from seven years of age into adulthood. Accuracy and reaction times improved with age, and some age-related differences in ERP responses were observed for the task as a whole. This study also divided stimuli into two conditions: rhyming (e.g., NAIL – MALE) and non-rhyming (e.g., PAID – MEET). However, there were no age-related ERP differences associated with the comparison of rhyming versus non-rhyming conditions, and in neither condition did the prime and target rimes have similar spellings.

Previous research conducted by Booth and colleagues (Booth, et al. 2003b; Booth, et al. 2004; Booth, et al. 2001) employed an auditory rhyme decision task, among other tasks, to compare language processing in adults versus children. One of these studies showed that both adults and children activated left inferior frontal gyrus, bilateral superior/middle temporal gyri, and left fusiform gyrus (Booth, et al. 2004). This study also reported that adults showed greater activation than children in left inferior frontal gyrus and left superior temporal gyrus, suggesting that adults engage these nodes of the language network to a greater degree than do children for phonological processing involved in rhyme decisions. Other neuroimaging studies using different lexical tasks have also shown developmental increases in activation of left inferior frontal gyrus (Gaillard, et al. 2003; Holland, et al. 2001; Shaywitz, et al. 2002; Turkeltaub, et al. 2003) and learning related increases in adults' activation of left superior temporal gyrus (Callan, et al. 2003; Raboyeau, et al. 2004; Wang, et al. 2003). Although neither adults nor children showed significant activation relative to a control task in left angular gyrus in a rhyming task (Booth, et al. 2004), adults showed significantly greater activation than children in this region when directly comparing the two age groups. Because left inferior parietal cortex has been implicated in mapping between orthographic and phonological representations (Booth, et al. 2002; Booth, et al. 2003a), greater activation in this region by adults may indicate more automatic mapping to orthographic representations during spoken word processing by adults than by children.

The Booth et al (2004) study did not establish differences between adults and children in fusiform gyrus, but behavioral research has shown developmental increases in the effect of orthographic information in auditorily-presented phonological tasks across a collective age range of 5 to 11 years (Bruck 1992; Tunmer and Nesdale 1982; Zecker 1991). For example, Zecker (1991) employed an auditory rhyme decision task in children ranging from 7 to 11.5 years of age. Younger children (7- to 8.5-year-olds) showed smaller orthographic effects (as measured by a smaller difference between reaction times for orthographically similar compared to dissimilar rhyming words) than older (8.5- to 11.5-year-old) children. These developmental increases in orthographic effects are presumably due to greater interaction of orthographic and phonological representations as children gain more experience with written language.

The purpose of the present study was to examine developmental changes in the neural network involved in phonological processing during rhyme judgment to words presented in the auditory modality. In order to more directly explore the developmental process, we examined children ranging from 9 to 15 years old rather than examining differences between adults and children (Booth, et al. 2004). A large number of child participants also allowed us to examine age effects while controlling for accuracy differences, as well as accuracy effects while controlling for age differences, thereby identifying the unique variance explained by each variable. In addition, we used an event-related, rather than a block design (Booth, et al. 2003b; Booth, et al. 2004; Booth, et al. 2001), so that we could manipulate the difficulty of the rhyming task by comparing word pairs with conflicting (e.g. PINT-MINT, JAZZ-HAS) versus non-conflicting (e.g. GATE-HATE, PRESS-LIST) orthographic and phonological information. Research shows that spelling and rhyming judgments are generally more difficult for conflicting than for non-conflicting pairs (Johnston and McDermott 1986; Kramer and Donchin 1987; Levinthal and Hornung 1992; Polich, et al. 1983; Rugg and Barrett 1987). Based on previous neuroimaging research (Booth, et al. 2004), we expected to see developmental increases in brain activation in left inferior frontal gyrus, left superior temporal gyrus and left inferior parietal cortex, because these regions have been implicated in phonological processes and may be more effectively recruited for the phonologically-based rhyme decision task with increasing age. We further expected that this developmental increase might be especially pronounced for the more difficult conflicting word pairs because the conflict between orthographic and phonological information in these conditions may place an extra burden on phonological processing and therefore require greater recruitment of these regions. 
Although previous neuroimaging research has not established developmental correlations in left fusiform gyrus during spoken word processing, developmental increases in brain activation in this region may be expected because it has been implicated in orthographic processing, and behavioral research indicates that orthographic and phonological processes become more interactive with development (Bruck 1992; Tunmer and Nesdale 1982; Zecker 1991), perhaps as a result of increasingly automatic access to orthographic representations during spoken word processing as children gain more exposure to print.

Materials and methods

Participants

Forty healthy children (mean age = 11.9 years, SD = 2.15; range 9-15 years; 18 boys) participated in the fMRI study. Children were recruited from the Chicago metropolitan area. Parents of children were given an informal interview to ensure that the children met the following inclusionary criteria: (1) native English speakers, (2) right-handedness, (3) normal hearing and normal or corrected-to-normal vision, (4) free of neurological disease or psychiatric disorders, (5) not taking medication affecting the central nervous system, (6) no history of intelligence, reading, or oral-language deficits, and (7) no learning disability or Attention Deficit Hyperactivity Disorder (ADHD). After the administration of the informal interview, informed consent was obtained. The informed consent procedures were approved by the Institutional Review Board at Northwestern University and Evanston Northwestern Healthcare Research Institute. Standardized intelligence testing was then administered, using the Wechsler Abbreviated Scale of Intelligence (WASI) (Wechsler 1999) with two verbal subtests (vocabulary, similarity) and two performance subtests (block design, matrix reasoning). Participants' standard scores (mean ± SD) were 116 ± 15 on the verbal scale and 110 ± 15 on the performance scale. The correlation between age and the verbal scale was not significant (r(40) = -.247, p = .125).

Functional activation tasks

During scanning, participants performed a rhyme judgment task to word pairs interspersed with perceptual control trials and null event trials.

Lexical rhyme condition

A black fixation-cross appeared throughout the trial while two auditory words were presented binaurally through earphones in sequence. The duration of each word was between 500 and 800 milliseconds (ms) followed by a brief period of silence, with the second word beginning 1000 ms after the onset of the first. A red fixation-cross appeared on the screen 1000 ms after the onset of the second word, indicating the need to make a rhyme decision response during the subsequent 2400 ms interval. In the rhyming task, twenty-four word pairs were presented in each of four lexical conditions that independently manipulated the orthographic and phonological similarity between words (see Table 1). In the two non-conflicting conditions, the two words were either similar in both their orthographic and phonological endings (O+P+, e.g. GATE-HATE), or different in both their orthographic and phonological endings (O-P-, e.g. PRESS-LIST). In the two conflicting conditions, the two words had either similar orthographic but different phonological endings (O+P-, e.g. PINT-MINT), or different orthographic but similar phonological endings (O-P+, e.g. JAZZ-HAS). The participants were instructed to quickly and accurately press the yes button to the rhyming pairs and the no button to the non-rhyming pairs.

Table 1.

Lexical rhyme conditions.

| | Similar Orthography | Dissimilar Orthography |
|---|---|---|
| Similar Phonology | O+P+ gate-hate | O-P+ *jazz-has |
| Dissimilar Phonology | O+P- *pint-mint | O-P- press-list |

*Conflicting conditions, in which phonological information (whether the two words rhyme) conflicts with orthographic information (whether the two words are spelled the same from the first vowel on).

All words for the rhyme decision task were recorded in a soundproof booth using a digital recorder and a high quality stereo microphone. A native English female speaker read each word in isolation so that there would be no contextual effects. All words longer than 800 ms were shortened to this duration (less than 1% of the words). All words were then normalized so that they were of equal amplitude. All words were monosyllabic, and were matched across conditions for written word frequency in adults and children (“The educator's word frequency guide”, 1996) and for adult word frequency for written and spoken language (Baayen, et al. 1995). One-way ANOVAs of the measures for word frequency did not reveal significant differences across conditions. Although we attempted to match the lexical conditions for word consistency, the limited number of available words and the specific structure of the conditions precluded this possibility. Two measures of word consistency were calculated: phonological and orthographic (Bolger, et al. in press). Consistency was computed as the ratio of friends to the sum of friends and enemies (i.e. friends/(friends + enemies)), based on the 2,998 monosyllabic words (Plaut, et al. 1996). Phonological enemies were defined as the number of words with similar spelling but different pronunciation of the rime, and orthographic enemies were defined as the number of words with similar pronunciation but different spelling of the rime. Friends were defined as words with the same rime spelling and same rime pronunciation. Words that have a ratio approaching 1.0 have very few or no enemies (consistent), while words with a ratio approaching 0.0 have few or no friends (inconsistent). GLM analyses of phonological or orthographic consistency as dependent variables and lexical condition as the independent variable showed a significant effect of condition (F(3) = 35.4, 10.9, p < .001) for phonological and orthographic consistency respectively.
The highest phonological inconsistency was found in the O+P- condition (.91, .71, .49, and .89 for O+P+, O-P+, O+P- and O-P-, respectively), with post-hoc analyses revealing significant differences between all pairs of conditions (p ≤ .001) except the O+P+ and O-P- conditions (p = .98). The highest orthographic inconsistency was found in the O-P+ condition (.66, .48, .60, and .64 for O+P+, O-P+, O+P- and O-P-, respectively), with post-hoc analyses revealing significant differences between the O-P+ condition and all other conditions (p < .001), and no significant differences among the other three conditions (p > .75).
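The consistency ratio defined above can be illustrated with a minimal sketch. The function name and the friend and enemy counts below are hypothetical and are not taken from the study's word lists; whether a word counts as its own friend is not stated in the text and is left to the caller.

```python
def consistency(friends: int, enemies: int) -> float:
    """Ratio of friends to the sum of friends and enemies.

    Values near 1.0 indicate a consistent word (few or no enemies);
    values near 0.0 indicate an inconsistent word (few or no friends).
    """
    total = friends + enemies
    if total == 0:
        raise ValueError("a word needs at least one friend or enemy")
    return friends / total


# Hypothetical counts, for illustration only.
mostly_consistent = consistency(friends=9, enemies=1)
mostly_inconsistent = consistency(friends=2, enemies=8)
print(mostly_consistent, mostly_inconsistent)
```

The same function serves for both measures: phonological consistency uses phonological enemies, and orthographic consistency uses orthographic enemies.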

Control conditions

Three kinds of control conditions were included in the experiment. The simple perceptual control had 24 pairs of single pure tones, ranging from 325 to 875 Hz. The tones were 600 ms in duration and contained a 100 ms linear fade in and a 100 ms linear fade out. The complex perceptual control had 24 pairs of three-tone stimuli, where all the component tones were within the aforementioned frequency range. Each tone was 200 ms with a 50 ms fade in and out. An equal number of tone sequences were ascending, descending, low frequency peak in middle, and high frequency peak in middle. Differences between successive frequencies were at least 75 Hz. For both the simple and complex perceptual controls, participants determined whether the stimuli were identical or not by pressing a yes or no button. Half of the stimuli in each control condition were identical, and half were non-matching. For non-matches, half of the stimuli had the same contour and half had a different contour. The tones were equal in maximum amplitude to the words, and the procedures for presenting stimuli were the same as in the rhyme judgment task. Of the two perceptual control conditions included in the experiment, only data from the simple perceptual control condition are presented in this paper. The simple condition was chosen over the complex condition because it was more similar to the lexical task both in task performance and in the relationship of performance with age. Accuracy did not differ significantly between the lexical and the simple perceptual conditions (t(39) = .33, p = .745; see Table 2 for means and standard deviations), but accuracy in the complex perceptual condition was significantly lower than in the lexical condition (M = .78, SD = .02; t(39) = 6.29, p < .001).
In addition, accuracy in the complex perceptual condition was significantly correlated with age (r(40) = .40, p = .010), whereas there was no significant correlation between age and accuracy in either the simple perceptual (r(40) = .21, p = .205) or the lexical conditions (r(40) = .24, p = .131). The third control task involved 72 null events. The participant was instructed to press a button when a black fixation-cross at the center of the visual field turned red. The null event had essentially the same visual stimuli and motor response characteristics as the lexical task and the perceptual controls, with sequential presentation of black fixation cross followed by a red fixation cross indicating the need to press the yes button on the response box. Yes responses were thus scored as correct, and no responses or failures to respond were scored as incorrect.
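As an illustration of the simple perceptual stimuli described above, the following sketch synthesizes a 600 ms pure tone with 100 ms linear fade in and fade out. The sample rate and the function name are assumptions made for this example; the text does not report how the tones were generated.

```python
import math


def pure_tone(freq_hz, duration_ms=600, fade_ms=100, sample_rate=44100):
    """Return samples of a sine tone with linear onset and offset ramps.

    sample_rate is an assumed value, not one reported in the text.
    """
    n = int(sample_rate * duration_ms / 1000)
    n_fade = int(sample_rate * fade_ms / 1000)
    samples = []
    for i in range(n):
        # Gain rises linearly from 0 at the start, holds at 1, and
        # falls linearly back to 0 at the final sample.
        gain = min(1.0, i / n_fade, (n - 1 - i) / n_fade)
        samples.append(gain * math.sin(2 * math.pi * freq_hz * i / sample_rate))
    return samples


# A tone at the lower edge of the 325 to 875 Hz range used in the study.
tone = pure_tone(325.0)
print(len(tone))
```

A three-tone complex stimulus could be approximated by concatenating three such tones generated with `duration_ms=200` and `fade_ms=50`.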

Table 2.

Mean accuracy (%), reaction time (ms) and their standard deviations (in parentheses) for the average lexical, each lexical condition, perceptual and null events.

| | Average Lexical | O+P- (conflicting) | O-P+ (conflicting) | O+P+ (non-conflicting) | O-P- (non-conflicting) | Perceptual | Null |
|---|---|---|---|---|---|---|---|
| Accuracy | 92.0 (6.6) | 85.8 (12.7) | 93.4 (8.8) | 94.1 (5.9) | 93.4 (8.4) | 91.0 (10.5) | 97.0 (7.1) |
| Reaction Time | 1360 (281) | 1402 (306) | 1349 (298) | 1339 (280) | 1348 (295) | 1210 (260) | 1292 (239) |

Experimental procedure

After informed consent was obtained and the standardized intelligence test was administered, participants were invited for a practice session, in which they were trained in minimizing head movement in front of a computer screen using an infrared tracking device. In addition, they performed one run of the rhyming task in a simulator scanner, in order to make sure they understood the tasks and to acclimatize themselves to the scanner environment. Different stimuli were used in the practice and in the scanning sessions. Scanning took place within a week after the practice session.

MRI data acquisition

Participants lay in the scanner with their head position secured with a specially designed vacuum pillow (Bionix, Toledo, OH). An optical response box (Current Designs, Philadelphia, PA) was placed in the participants' right hand. Participants viewed visual stimuli that were projected onto a screen via a mirror attached to the inside of the head coil. Participants wore headphones to hear auditory stimuli (Resonance Technology, Northridge, CA). The rhyming task was administered in two 108-trial runs (8 minutes each), in which the order of lexical, perceptual and fixation trials was optimized for event-related design (see Burock, et al. 1998) using OptSeq (http://surfer.nmr.mgh.harvard.edu/optseq/). The order of stimuli was fixed for all subjects.

All images were acquired using a 1.5 Tesla GE (General Electric) scanner. A susceptibility weighted single-shot EPI (echo planar imaging) method with BOLD (blood oxygenation level-dependent) was used, and functional images were interleaved from bottom to top in a whole brain EPI acquisition. The following scan parameters were used: TE = 35 ms, flip angle = 90°, matrix size = 64 × 64, field of view = 24 cm, slice thickness = 5 mm, number of slices = 24; TR = 2000 ms. Each functional run had 240 repetitions. In addition, a high resolution, T1 weighted 3D image was acquired (SPGR, TR = 21 ms, TE = 8 ms, flip angle = 20°, matrix size = 256 × 256, field of view = 22 cm, slice thickness = 1 mm, number of slices = 124), using an identical orientation as the functional images.
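The design figures reported above can be cross-checked with simple arithmetic. The sketch below assumes 24 word pairs in each of the four lexical conditions, which is the reading under which the trial counts add up; all other numbers are taken directly from the text, and the variable names are illustrative.

```python
# Trial counts (the per-condition lexical count is an assumed reading).
LEXICAL_CONDITIONS = 4
PAIRS_PER_LEXICAL_CONDITION = 24
SIMPLE_PERCEPTUAL_TRIALS = 24
COMPLEX_PERCEPTUAL_TRIALS = 24
NULL_EVENTS = 72

# Run structure and acquisition timing.
RUNS = 2
TRIALS_PER_RUN = 108
VOLUMES_PER_RUN = 240
TR_SECONDS = 2.0

total_trials = (
    LEXICAL_CONDITIONS * PAIRS_PER_LEXICAL_CONDITION
    + SIMPLE_PERCEPTUAL_TRIALS
    + COMPLEX_PERCEPTUAL_TRIALS
    + NULL_EVENTS
)
run_minutes = VOLUMES_PER_RUN * TR_SECONDS / 60

print(total_trials, RUNS * TRIALS_PER_RUN)  # 216 216
print(run_minutes)                          # 8.0
```

Under this reading, the 216 trials fill the two 108-trial runs exactly, and 240 volumes at a TR of 2000 ms give the stated 8 minutes per run.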

Image analysis

Data analysis was performed using SPM2 (Statistical Parametric Mapping) (http://www.fil.ion.ucl.ac.uk/spm). The images were spatially realigned to the first volume to correct for head movements. No individual runs had more than 4 mm maximum displacement in the x, y or z dimension. Sinc interpolation was used to minimize timing-errors between slices. The functional images were co-registered with the anatomical image, and normalized to the standard T1 Montreal Neurological Institute (MNI) template volume. The data was then smoothed with a 10 mm isotropic Gaussian kernel. Statistical analyses at the first level were calculated using an event-related design with 4 lexical conditions, 2 perceptual conditions, and the null events as conditions of interest. A high pass filter with a cutoff period of 128 seconds was applied. Word pairs were treated as individual events for analysis and modeled using a canonical hemodynamic response function (HRF). Group results were obtained using random-effects analyses by combining subject-specific summary statistics across the group as implemented in SPM2.
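To make the event-related modeling step concrete, the sketch below builds one predicted-response regressor by convolving event onsets with a double-gamma hemodynamic response function sampled at the TR. This is not SPM2 code. The HRF parameters (response peaking near 6 s, undershoot near 16 s, ratio of 1/6) are commonly used default values and are an assumption here, as are the onset times and function names.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, zero for t <= 0."""
    if t <= 0:
        return 0.0
    log_density = (shape - 1) * math.log(t / scale) - t / scale - math.lgamma(shape)
    return math.exp(log_density) / scale


def canonical_hrf(t):
    """Double-gamma HRF: a response gamma minus a scaled undershoot gamma."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def build_regressor(onsets_s, n_volumes, tr=2.0, hrf_length_s=32.0):
    """Convolve unit events at the given onsets (seconds) with the HRF."""
    kernel = [canonical_hrf(k * tr) for k in range(int(hrf_length_s / tr) + 1)]
    regressor = [0.0] * n_volumes
    for onset in onsets_s:
        start = int(round(onset / tr))  # nearest volume to the event onset
        for k, h in enumerate(kernel):
            if 0 <= start + k < n_volumes:
                regressor[start + k] += h
    return regressor


# Hypothetical onsets for one condition in a 240-volume run.
regressor = build_regressor([10.0, 42.0, 96.0], n_volumes=240)
print(len(regressor))
```

In a full analysis, one such regressor per condition would enter a general linear model together with the high-pass filter described above.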

In order to examine both main effects and correlation effects, we first created a mask that was inclusive for average lexical condition versus null (p = .01 uncorrected) and exclusive for the simple perceptual condition versus null (p = .05 FDR corrected), in order to examine effects that are more specific to linguistic processing. This approach was chosen over a direct contrast between the lexical and perceptual conditions in part because correlation effects revealed by a direct contrast of the lexical minus perceptual conditions could be due to correlations with the perceptual condition rather than to correlations with the lexical condition. The masked approach was also preferable to a direct contrast approach because a mask can be applied to all imaging analyses, including those directly comparing conflicting to nonconflicting lexical conditions and those examining correlation effects, in order to limit our exploration of effects to more lexically-specific regions in all analyses. We used a liberal threshold for the inclusive lexical versus null mask and a stringent threshold for the exclusive perceptual versus null mask because we wanted to increase our search space for regions that may be associated with age. The mask was exclusive for simple perceptual activation rather than complex perceptual activation because performance on the simple perceptual condition was comparable to performance on the lexical condition and its relationship with age (see Control conditions section above), indicating a greater comparability in terms of task demands between the lexical and simple perceptual conditions. Thus the mask was applied to the analysis of all main effects and correlational analyses. 
We calculated the following t-tests to examine main effects: (1) average lexical condition versus null, (2) the O+P- versus the O-P- conditions (conflicting versus non-conflicting non-rhyming conditions), and (3) the O-P+ versus the O+P+ conditions (conflicting versus non-conflicting rhyming conditions).
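The masking logic can be sketched as a voxel-wise boolean rule: a voxel is kept if it passes the liberal lexical versus null threshold and does not pass the stringent perceptual versus null threshold. The function name and the per-voxel values below are hypothetical, and the FDR-adjusted values for the perceptual contrast are assumed to have been computed beforehand.

```python
def build_mask(p_lexical, q_perceptual, p_inclusive=0.01, q_exclusive=0.05):
    """Return one boolean per voxel.

    p_lexical:    uncorrected p-values, lexical versus null.
    q_perceptual: FDR-adjusted p-values, simple perceptual versus null.
    """
    return [
        (p_lex < p_inclusive) and not (q_perc < q_exclusive)
        for p_lex, q_perc in zip(p_lexical, q_perceptual)
    ]


# Four hypothetical voxels.
p_lexical = [0.001, 0.004, 0.200, 0.009]
q_perceptual = [0.50, 0.01, 0.50, 0.30]
mask = build_mask(p_lexical, q_perceptual)
print(mask)  # [True, False, False, True]
```

The second voxel is dropped because it is also active for the perceptual condition, and the third because it does not reach the inclusive lexical threshold.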

In addition, we calculated the correlations of both age (in months) and lexical accuracy (percent correct) with activation in each of the above contrasts. Accuracy measures used in the performance correlations were taken from the appropriate lexical conditions: (1) average accuracy of the four lexical conditions for the lexical versus null contrast, (2) O+P- accuracy for the O+P- versus O-P- contrast, and (3) O-P+ accuracy for the O-P+ versus O+P+ contrast. Accuracy was used as a covariate when calculating partial correlations with age, and age was used as a covariate when calculating partial correlations with accuracy, in order to examine the unique variance explained by each variable. All significant brain-behavior correlations from the latter two contrasts were further examined in order to determine whether they were in fact due to stronger correlations in the conflicting condition, or rather due to stronger correlations in the non-conflicting condition. Only true conflict effects, due to stronger correlations in the conflicting condition, are reported.
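The idea of isolating the unique variance explained by age while controlling for accuracy (and vice versa) can be illustrated with a first-order partial correlation. This is a minimal sketch of the statistic, not the SPM2 procedure used in the study; the data values and function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, z):
    """Correlation between x and y with the covariate z partialled out."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical values for four participants.
age_months = [108, 132, 156, 180]
accuracy = [90, 80, 80, 90]
activation = [0.01 * a + 0.02 * c for a, c in zip(age_months, accuracy)]

r_age = partial_corr(age_months, activation, accuracy)       # age, controlling accuracy
r_accuracy = partial_corr(accuracy, activation, age_months)  # accuracy, controlling age
print(round(r_age, 3), round(r_accuracy, 3))
```

In this constructed example activation is an exact linear combination of age and accuracy, so each partial correlation is 1 up to rounding even though the simple correlations are smaller.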

Furthermore, age-related changes in activation as a function of reaction time were examined by entering reaction time (ms) as a continuous regressor to investigate within-subject activation changes as a function of reaction time. We then calculated correlations of age (in months) with the resulting within-subject activation associated with reaction time. Areas of cortical activation reported for all analyses (see Tables 3 and 4) were significant using p < 0.001 uncorrected at the voxel level, containing a cluster size greater than 15 voxels.

Table 3.

Regions of activation for 1) the lexical conditions versus null (masked for inclusive lexical activation, p < .01 uncorrected, and exclusive perceptual activation, p < .05 FDR corrected); 2) the perceptual condition versus null; 3) the lexical versus perceptual conditions; and 4) the O+P- vs. O-P- (conflicting versus non-conflicting non-rhyming) conditions (also masked for inclusive lexical activation, p < .01 uncorrected, and exclusive perceptual activation, p < .05 FDR corrected).

| Contrast | Region | H | BA | Voxels | x | y | z | T-value |
|---|---|---|---|---|---|---|---|---|
| Lexical – Null* | Dorsal Inferior Frontal G (opercularis) | L | 45 | 1129 | -45 | 12 | 21 | 9.37 |
| | Ventral Inferior Frontal G (triangularis) | L | 46 | | -36 | 27 | 0 | 7.45 |
| | Superior/Middle Temporal G | L | 21/22 | 305 | -66 | -24 | -3 | 12.01 |
| | Medial Frontal G | L/R | 6 | 239 | -3 | 15 | 48 | 9.08 |
| | Fusiform G | L | 37 | 129 | -45 | -54 | -18 | 8.48 |
| | Middle Temporal G | R | 21 | 105 | 57 | -33 | -6 | 7.6 |
| | Middle Temporal G | R | 21 | | 60 | -24 | -9 | 6.7 |
| | Lingual G | R | 30 | 816 | 24 | -54 | 0 | 6.62 |
| | Lingual G | L | 19 | | -18 | -63 | -6 | 5.8 |
| | Insula | R | 13 | 85 | 36 | 27 | -3 | 6.36 |
| | Postcentral G | L | 2 | 15 | -48 | -30 | 60 | 4.31 |
| Perceptual – Null** | Superior Temporal G | R | 42 | 1521 | 66 | -18 | 6 | 19.76 |
| | Superior Temporal G | R | 21/38 | | 54 | 6 | -9 | 14 |
| | Superior Temporal G | L | 41 | 1607 | -51 | -24 | 9 | 15.99 |
| | Superior Temporal G | L | 22 | | -54 | 0 | -6 | 11.63 |
| | Dorsal Inferior Frontal G (opercularis) | L | 44 | | -60 | 9 | 18 | 3.5 |
| | Medial Frontal G | L/R | 6 | 39 | 3 | 3 | 60 | 4.28 |
| | Precentral G | R | 6 | 15 | 57 | -6 | 45 | 3.92 |
| | Cuneus (Calcarine G) | R | 17 | 27 | 15 | -72 | 6 | 3.64 |
| | Lingual G | R | 19 | 16 | 21 | -54 | -6 | 3.54 |
| Lexical – Perceptual* | Dorsal Inferior Frontal G (opercularis) | L | 44/45 | 1001 | -45 | 12 | 18 | 6.62 |
| | Ventral Inferior Frontal G (orbitalis) | L | 47 | | -42 | 27 | -6 | 6.33 |
| | Superior Temporal G | L | 22 | 213 | 66 | -15 | -3 | 7.42 |
| | Medial Frontal G | L/R | 8 | 324 | -3 | 24 | 51 | 7.13 |
| | Inferior Temporal/Fusiform G | L | 37 | 1024 | -45 | -51 | -15 | 9.85 |
| | Superior/Middle Temporal G | L | 21/22 | | -63 | -24 | 0 | 9.23 |
| | Superior/Middle Temporal G | L | 21/22 | | -51 | -42 | 6 | 6.46 |
| | Fusiform | R | 36 | 120 | 36 | -33 | -24 | 5.84 |
| O+P- vs. O-P- | Dorsal Inferior Frontal G (opercularis) | L | 46 | 260 | -42 | 12 | 27 | 5.29 |
| | Medial Frontal G | L | 8 | 129 | -6 | 18 | 48 | 4.55 |
| | Inferior Temporal G | L | 37 | 19 | -54 | -51 | -15 | 3.86 |

Note. H = hemisphere, L = left, R = right, BA = Brodmann Area, G = gyrus; Inf = Infinite. Activation is presented with * p < 0.001 uncorrected for the Lexical-Null and Lexical-Perceptual contrasts, and ** p < .05 FDR-corrected for the Perceptual-Null contrast. x, y, z: Montreal Neurological Institute (MNI) coordinates listed only for cortical clusters with volume greater than 15 voxels. All coordinates listed correspond to global maxima (voxels and t-value listed) or local maxima (voxels not listed) at least 25 mm from the global maxima.

Table 4.

Positive (+) and negative (-) age and accuracy correlations for the lexical conditions versus null and the O+P- (conflicting non-rhyming) versus O-P- (non-conflicting non-rhyming) conditions, excluding overlap of activation with the perceptual versus null contrast. RT+ Age+ rows correspond to activation positively associated with reaction time within subjects (RT+) that increases as a function of age (Age +) between subjects.

| Contrast / Correlation | Region | H | BA | Voxels | x | y | z | T-value |
|---|---|---|---|---|---|---|---|---|
| Lexical-Null | | | | | | | | |
| Age + | Middle Temporal G | L | 22 | 40 | -60 | -45 | 3 | 4.25 |
| Accuracy + | Ventral Inferior Frontal G (triangularis) | L | 46 | 66 | -42 | 27 | 12 | 4.95 |
| | Medial Frontal G | L/R | 6 | 42 | -6 | 30 | 39 | 4.94 |
| Age - | None | – | – | – | – | – | – | – |
| Accuracy - | None | – | – | – | – | – | – | – |
| O+P- vs. O-P- | | | | | | | | |
| Age + | Dorsal Inferior Frontal G (triangularis)/Precentral G | L | 9 | 25 | -54 | 9 | 33 | 4.54 |
| Accuracy + | None | – | – | – | – | – | – | – |
| Age - | None | – | – | – | – | – | – | – |
| Accuracy - | None | – | – | – | – | – | – | – |
| O+P- | | | | | | | | |
| RT+ Age+ | Lingual G | L | 18 | 24 | -21 | -69 | -6 | 4.18 |
| | Cuneus | R/L | 18 | 90 | 9 | -81 | 15 | 4.13 |
| RT+ Age- | None | – | – | – | – | – | – | – |

Note. H = hemisphere, L = left, R = right, BA = Brodmann Area, G = gyrus. Activation is presented with p < 0.001 uncorrected. x, y, z: Montreal Neurological Institute (MNI) coordinates listed only for clusters with volume greater than 15 voxels. All coordinates listed correspond to effect maxima.

Results

Behavioral results

Mean accuracy on all conditions was greater than 85% (see Table 2), with no individual scoring below an average of 72% across the lexical conditions. Accuracy did not differ significantly between the lexical and the simple perceptual conditions (t(39) = .33, p = .745; see Table 2 for means and standard deviations), whereas accuracy in the null control condition was significantly higher than in the lexical condition (M = .97, SD = .01; t(39) = 5.216, p < .001). Age was not significantly correlated (at p < 0.05) with accuracy in the average lexical (r(40) = 0.24, p = 0.131), the simple perceptual (r(40) = 0.21, p = 0.205), or the null conditions (r(40) = 0.10, p = 0.554). Accuracy in the individual lexical conditions also did not correlate significantly with age (criterion of p < .0125 after Bonferroni correction for multiple comparisons; O+P+: r(40) = 0.18, p = 0.268; O-P+: r(40) = 0.070, p = 0.669; O+P-: r(40) = 0.189, p = 0.242; O-P-: r(40) = 0.276, p = .084). Correlations between age and reaction time also failed to reach significance (at p < 0.05) in the average lexical condition (r(40) = -0.261, p = 0.104), the simple perceptual condition (r(40) = -0.177, p = 0.274), the null condition (r(40) = -0.205, p = 0.205), or any of the individual lexical conditions (criterion of p < .0125 after Bonferroni correction; O+P+: r(40) = -0.184, p = 0.256; O-P+: r(40) = -0.288, p = 0.071; O+P-: r(40) = -0.184, p = 0.257; O-P-: r(40) = 0.337, p = .034).
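The Bonferroni criterion used for the four individual lexical conditions can be illustrated with a short sketch. The p-values below are copied from the age by reaction time correlations reported above; the function name `bonferroni_threshold` is a hypothetical helper introduced only for illustration and is not part of the analysis software used in the study.

```python
# Reported p-values for the age x reaction-time correlations in the four
# individual lexical conditions (copied from the paragraph above).
reported_p = {"O+P+": 0.256, "O-P+": 0.071, "O+P-": 0.257, "O-P-": 0.034}

FAMILY_ALPHA = 0.05  # uncorrected criterion


def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Per-test criterion that keeps the family-wise error rate at `alpha`."""
    return alpha / n_tests


# Four tests, so the per-test criterion is 0.05 / 4 = 0.0125.
threshold = bonferroni_threshold(FAMILY_ALPHA, len(reported_p))
significant = {cond: p < threshold for cond, p in reported_p.items()}

print(f"per-test threshold = {threshold:.4f}")
for cond, is_sig in significant.items():
    print(f"{cond}: p = {reported_p[cond]:.3f} -> significant: {is_sig}")
```

Under this criterion the O-P- correlation (p = .034) would pass an uncorrected .05 threshold but does not survive correction, which is why none of the individual-condition correlations is reported as significant.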

Because conditions in which the correct response is the same (“yes” or “no” rhyme decision) are more comparable in terms of response characteristics, we examined the effect of conflict between orthographic and phonological information by calculating paired t-tests comparing the conflicting to the non-conflicting condition for the rhyming and for the non-rhyming conditions separately, with a conflict effect defined as higher reaction times or error rates in the conflicting than the non-conflicting condition. There was no significant conflict effect within the rhyming conditions (O-P+ vs. O+P+) for accuracy (t(39) = 0.53, p = .602) or reaction time (t(39) = 0.56, p = .579). A significant conflict effect was found, however, within the non-rhyming conditions (O+P- vs. O-P-) for both accuracy (t(39) = -4.53, p < .001) and reaction time (t(39) = 2.47, p = .018). An ANOVA with the four lexical conditions as a repeated measure showed a significant main effect of condition for accuracy (F(3, 37) = 6.86, p = .001), indicating that task performance was not uniform across all four lexical conditions, but the main effect for reaction time did not reach significance (F(3, 37) = 2.46, p = .078). Follow-up t-tests between the lexical conditions, with Bonferroni correction for multiple comparisons, revealed that O+P- had significantly lower accuracy compared to the other conditions (O+P+: t(39) = 4.08, p < .001; O-P+: t(39) = 3.57, p = .001; O-P-: t(39) = -4.53, p < .001; see Table 2 for means), but that the other conditions were not significantly different from one another.
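As a minimal sketch of the paired comparison described above, the statistic can be computed from per-child difference scores (conflicting minus non-conflicting). The reaction times below are hypothetical values invented for illustration, not data from the study, and `paired_t` is an assumed helper name.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired-samples t statistic for a - b; returns (t, degrees_of_freedom)."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [x - y for x, y in zip(a, b)]
    # Standard error of the mean difference (sample SD with n - 1 denominator).
    se = stdev(diffs) / math.sqrt(len(diffs))
    return mean(diffs) / se, len(diffs) - 1


# Hypothetical per-child mean reaction times in ms (NOT data from the study).
rt_conflicting = [1510.0, 1432.0, 1605.0, 1388.0, 1490.0, 1551.0]      # O+P-
rt_non_conflicting = [1462.0, 1440.0, 1530.0, 1365.0, 1455.0, 1498.0]  # O-P-

t_stat, df = paired_t(rt_conflicting, rt_non_conflicting)
print(f"t({df}) = {t_stat:.2f}")
```

With this sign convention a positive t for reaction time and a negative t for accuracy both indicate a conflict effect, matching the direction of the values reported for the non-rhyming conditions.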

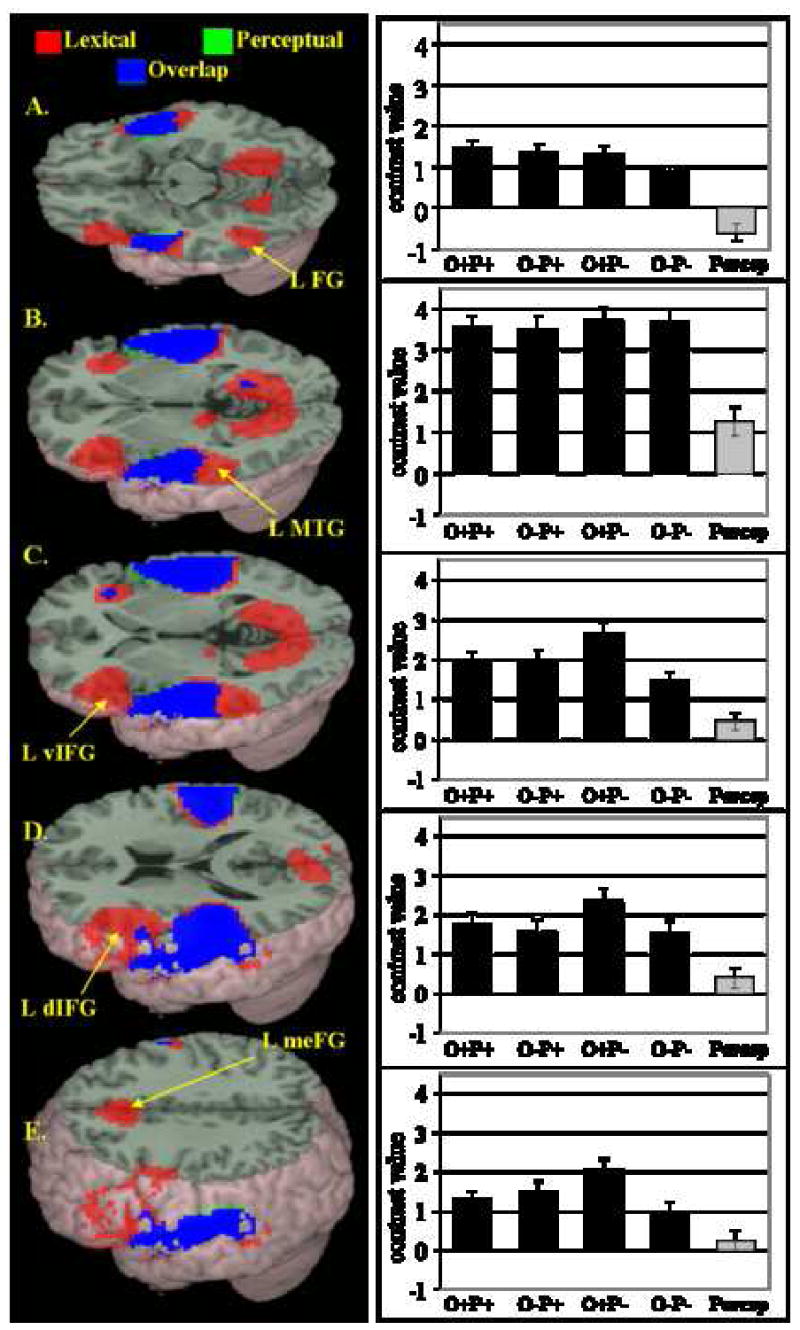

Brain activation

Figure 1 illustrates the comparison of lexical versus null conditions (shown in red) and the perceptual versus null conditions (shown in green), and their overlap (shown in blue). Table 3 shows areas of activation included in the contrast of lexical versus null conditions (p < 0.001 uncorrected) exclusively masked for the contrast of perceptual versus null conditions (p < 0.05 FDR-corrected). Activation included in this exclusive mask is also shown in Table 3, along with activation resulting from the direct contrast of lexical versus perceptual conditions. As shown in Figure 1 and Table 3, the comparison of lexical conditions versus null (masked for inclusive lexical versus null and exclusive perceptual versus null activation) revealed activation in left fusiform gyrus (BA 37), left superior/middle temporal gyri (BA 22, 21), ventral (BA 46, pars triangularis) and dorsal (BA 45, pars opercularis) aspects of left inferior frontal gyrus, and medial frontal gyrus (BA 6). In order to illustrate the relative contribution of each lexical condition to the overall lexical effects, beta values for individual subjects were extracted from activation in each of the above regions for each lexical condition, and the average group beta value for each condition is plotted next to the map of the corresponding region in Figure 1. Significant activation was found in each of the above regions for all of the lexical conditions. Overall lexical versus null activation (masked to exclude regions significantly activated by the perceptual condition) was additionally found in right middle temporal gyrus (BA 21), bilateral lingual gyri (BA 30, 19), right insula (BA 13), and left postcentral gyrus (BA 2). 
Perceptual activation excluded by use of the mask (visible as green and blue in Figure 1) included extensive aspects of superior temporal gyrus in the right (BA 42 and 21, 38) and left (BA 41, 22) hemispheres, and small areas of left dorsal inferior frontal gyrus (BA 44), medial frontal gyrus (BA 6), right precentral gyrus (BA 6), right cuneus/calcarine gyrus (BA 17), and right lingual gyrus (BA 19). The direct contrast of lexical versus perceptual conditions revealed a pattern very similar to that revealed by the contrast of lexical versus null conditions when masked for inclusive lexical versus null and exclusive perceptual versus null activation.
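The combination of inclusive and exclusive masks described above can be thought of as set logic over voxels. The following is only a toy sketch under that assumption: the voxel labels and p-values are invented, `apply_masks` is a hypothetical helper, and the code does not reproduce the masking implementation of the imaging software used in the study.

```python
def apply_masks(contrast_p, inclusive_p, exclusive_voxels,
                contrast_alpha=0.001, inclusive_alpha=0.01):
    """Return voxels that are significant in the contrast of interest,
    fall inside the inclusive mask, and fall outside the exclusive mask."""
    return {
        voxel
        for voxel, p in contrast_p.items()
        if p < contrast_alpha
        and inclusive_p.get(voxel, 1.0) < inclusive_alpha
        and voxel not in exclusive_voxels
    }


# Toy p-values keyed by hypothetical voxel labels (NOT study data).
conflict_p = {"v1": 0.0002, "v2": 0.0004, "v3": 0.0005, "v4": 0.02}
lexical_p = {"v1": 0.003, "v2": 0.0001, "v3": 0.2, "v4": 0.0001}
# Voxels assumed to survive FDR correction in the perceptual-versus-null contrast.
perceptual_significant = {"v2"}

surviving = apply_masks(conflict_p, lexical_p, perceptual_significant)
print(sorted(surviving))
```

In this toy case only `v1` survives: `v2` is removed by the exclusive perceptual mask, `v3` falls outside the inclusive lexical mask, and `v4` does not reach the contrast threshold.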

Figure 1.

Main effects for the lexical conditions versus null (in red; p < .001 uncorrected and masked for inclusive lexical activation, p < .01 uncorrected, and exclusive perceptual activation, p < .05 FDR corrected), the perceptual condition versus null (in green; p < .05 FDR-corrected), and overlap (in blue) (see Table 3 for coordinates). (A) Left fusiform/inferior temporal gyrus (L FG). (B) Left middle/superior temporal gyrus (L S+MTG). (C) Left ventral inferior frontal gyrus (L vIFG). (D) Left dorsal inferior frontal gyrus (L dIFG). (E) Left medial frontal gyrus (L meFG). Bar graphs illustrate significant contributions to each of the above activation clusters from each lexical condition (in black) and corresponding beta value for the perceptual condition (in gray).

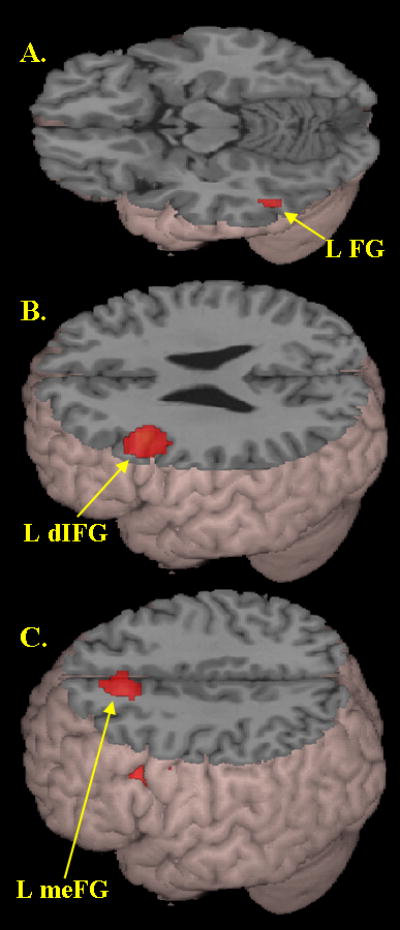

The comparison of the O-P+ versus the O+P+ conditions revealed no significant activation. However, as shown in Figure 2 and Table 3, conflict effects were revealed by the comparison of the O+P- versus the O-P- conditions in left inferior temporal gyrus (BA 37) extending into fusiform cortex, left dorsal inferior frontal gyrus (BA 46, pars opercularis), and medial frontal gyrus (BA 8). The significant conflict effect in each of these regions was proximal to the main effect of average lexical activation in these same regions, with no greater than 10 mm Euclidean distance between maxima representing main effects of all lexical conditions and main effects of conflict.
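The proximity statement above can be checked directly from the peak coordinates listed in Table 3. The sketch below pairs each Lexical – Null maximum with the O+P- vs. O-P- maximum in the corresponding region and computes the Euclidean distance in millimeters; the pairing of rows and the dictionary labels are assumptions made for illustration.

```python
import math

# Peak MNI coordinates (x, y, z) in mm, copied from Table 3.
lexical_peaks = {
    "dorsal inferior frontal": (-45, 12, 21),
    "medial frontal": (-3, 15, 48),
    "fusiform/inferior temporal": (-45, -54, -18),
}
conflict_peaks = {
    "dorsal inferior frontal": (-42, 12, 27),
    "medial frontal": (-6, 18, 48),
    "fusiform/inferior temporal": (-54, -51, -15),
}

# Euclidean distance between the two maxima in each region.
distances = {
    region: math.dist(lexical_peaks[region], conflict_peaks[region])
    for region in lexical_peaks
}

for region, mm in distances.items():
    print(f"{region}: {mm:.2f} mm")
# dorsal inferior frontal: 6.71 mm
# medial frontal: 4.24 mm
# fusiform/inferior temporal: 9.95 mm
```

All three distances fall at or below 10 mm, consistent with the description in the text.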

Figure 2.

Main effects of conflict, as measured by the contrast of the hardest conflicting (O+P-) versus non-conflicting (O-P-) non-rhyming conditions (see Table 3 for coordinates). Greater activation in the conflicting (O+P-) condition in A) left fusiform/inferior temporal gyrus (L FG), B) left dorsal inferior frontal gyrus (L dIFG), and C) left medial frontal gyrus (L meFG).

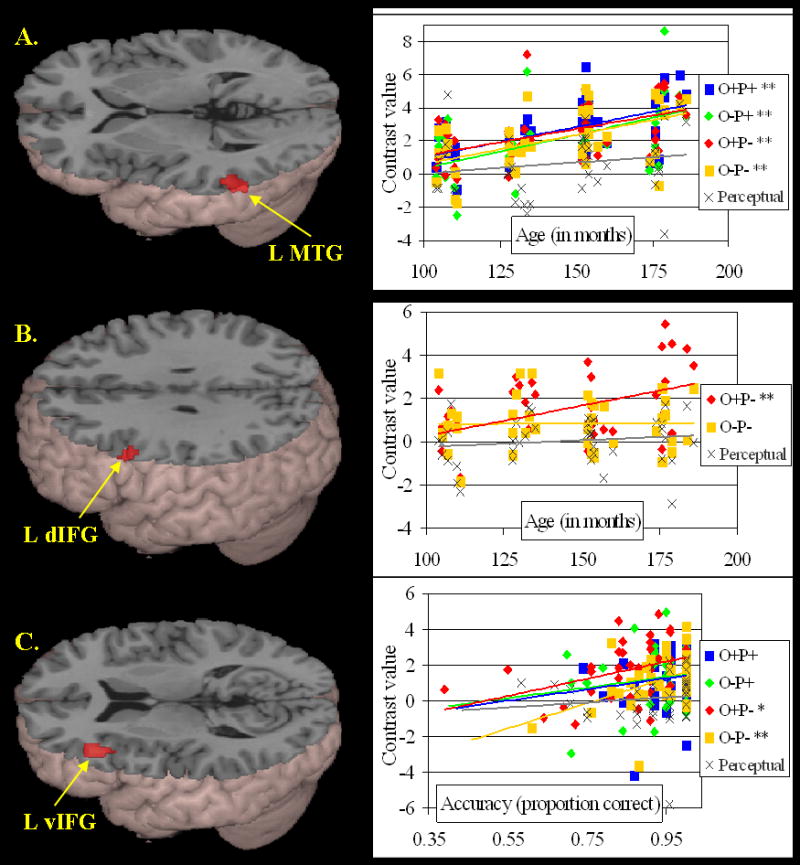

Table 4 presents the positive and negative correlations of activation with age and accuracy (partialed for one another). As shown in Figure 3A, increasing age was correlated with greater activation in left middle temporal gyrus (BA 22) for the average lexical condition versus null. As shown in the scatterplot of Figure 3A, each lexical condition contributed significantly to this overall lexical correlation (O+P+: r(37) = .57, p < .001; O-P+: r(37) = .52, p = .001; O+P-: r(37) = .47, p = .002; O-P-: r(37) = .51, p = .001). No significant correlations were found for either age or accuracy for the contrast of O-P+ versus O+P+. However, as shown in Figure 3B, greater activation in left dorsal inferior frontal gyrus (BA 9) was correlated with increasing age for the O+P- versus O-P- contrast. The scatterplot in Figure 3B illustrates a significant positive correlation between age and activation in this region in the conflicting O+P- condition (r(37) = .45, p = .004), but not in the non-conflicting O-P- condition (r(37) = .00, p = .984). As shown in Figure 3C, increasing average lexical accuracy was correlated with greater activation in left ventral inferior frontal cortex (BA 46; pars triangularis) for the average lexical condition versus null. The scatterplot in Figure 3C shows that the contributions to this effect from the O+P- and the O-P- (non-rhyming) conditions were significant (r(37) = .37, p = .021; and r(37) = .45, p = .004, respectively), but the contributions from the O+P+ and the O-P+ (rhyming) conditions did not reach significance (r(37) = .11, p = .506; and r(37) = .15, p = .359, respectively). Increasing average lexical accuracy was also correlated with greater activation in medial frontal gyri (BA 6; not shown in Figure 3), but this correlation was driven almost entirely by the O-P- condition (r(37) = .52, p = .001; O+P+: r(37) = .04, p = .001; O-P+: r(37) = .25, p = .119; O+P-: r(37) = .14, p = .401).
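The phrase "partialed for one another" means that each correlation with activation is computed after removing the linear contribution of the other variable. A minimal sketch of a first-order partial correlation is given below, using the standard formula based on pairwise Pearson correlations. The data are hypothetical, `pearson` and `partial_corr` are assumed helper names, and the study itself presumably estimated these effects within its imaging regression model rather than with this code.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, z):
    """Correlation of x and y after removing the linear effect of z from both."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical values for six children (NOT data from the study).
age_months = [110, 125, 140, 150, 165, 178]
accuracy = [0.82, 0.90, 0.85, 0.93, 0.88, 0.95]
activation = [0.4, 0.7, 0.9, 1.0, 1.3, 1.5]  # e.g., a beta value per child

r_age = partial_corr(age_months, activation, accuracy)   # age, controlling accuracy
r_acc = partial_corr(accuracy, activation, age_months)   # accuracy, controlling age
print(f"age | accuracy: {r_age:.2f}; accuracy | age: {r_acc:.2f}")
```

Partialing out one covariate removes one additional degree of freedom, which is consistent with the r(37) values reported for the 40 participants.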

Figure 3.

Age and accuracy correlations (see Table 4 for coordinates). (A) Positive correlation between age (in months) and lexical activation in left middle temporal gyrus (L MTG), with significant contributions from all conditions. (B) Significantly greater positive correlation between age and activation in the conflicting non-rhyming (O+P-) condition than in the non-conflicting non-rhyming (O-P-) condition in left dorsal inferior frontal gyrus (L dIFG, BA 9). (C) Positive correlation between average lexical accuracy and lexical activation in left ventral inferior frontal gyrus (L vIFG, BA 46), with significant contributions from non-rhyming conditions (O+P- and O-P-). * = p < .05; ** = p < .005. Scatterplots show correlations with individual lexical conditions (in color) and the perceptual condition (in gray).

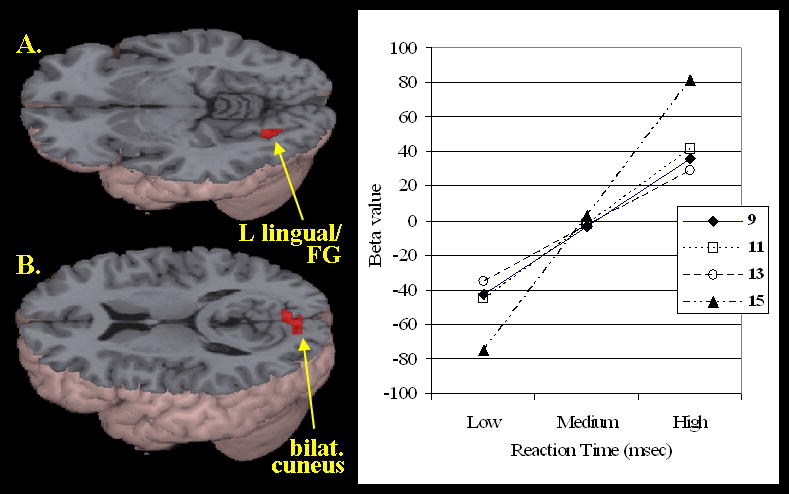

Figure 4 shows age differences in activation as a function of reaction time. No significant correlations were found for the O+P+, O-P+ or O-P- conditions. In the O+P- condition, however, the positive relationship between individuals' reaction time (in ms) and activation increased with age in left lingual gyrus (BA 18), extending into left fusiform gyrus (BA 37), and in bilateral cuneus (BA 18) (see Table 4). In order to determine the relationship of activation with reaction time in each of the four age groups (9, 11, 13, and 15 years; ± 6 months for each individual in the group), beta values were extracted for each individual's O+P- activation (with reaction time as a continuous within-subject regressor), and averages were calculated for each age group. The graph in Figure 4 shows that the positive relationship between individual subjects' reaction time and activation in left lingual/fusiform gyrus is strongest for the 15-year-olds relative to the younger children.
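The group-averaging step described above can be sketched as follows. Each child is assigned to the age group whose center lies within 6 months of the child's age, and the extracted beta values are then averaged per group. All ages and beta values below are hypothetical, and `age_group` is an assumed helper introduced only for this illustration.

```python
from collections import defaultdict
from statistics import mean

AGE_GROUPS_YEARS = (9, 11, 13, 15)


def age_group(age_months):
    """Return the group (in years) whose center is within 6 months, else None."""
    for years in AGE_GROUPS_YEARS:
        if abs(age_months - years * 12) <= 6:
            return years
    return None


# Hypothetical (age in months, reaction-time beta) pairs; NOT data from the study.
children = [(106, 0.10), (111, 0.05), (130, 0.12), (135, 0.20),
            (155, 0.18), (160, 0.25), (178, 0.55), (183, 0.61)]

betas_by_group = defaultdict(list)
for months, beta in children:
    group = age_group(months)
    if group is not None:
        betas_by_group[group].append(beta)

# Average beta per age group, ordered from youngest to oldest.
group_means = {g: mean(b) for g, b in sorted(betas_by_group.items())}
for group, value in group_means.items():
    print(f"{group}-year-olds: mean beta = {value:.3f}")
```

A larger mean beta in a group indicates a stronger positive relationship between trial-wise reaction time and activation for children of that age.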

Figure 4.

Age differences in activation as a function of reaction time (see Table 4 for coordinates). The positive within-subject relationship between reaction time (in milliseconds) and activation increases with age across subjects in A) the left lingual/fusiform gyrus (BA 18) and B) the bilateral cuneus (BA 18), in the O+P- condition. The graph to the right shows increasing activation in the left lingual/fusiform gyrus as a function of reaction time across four age groups (9, 11, 13, and 15) in the O+P- condition, with the strongest correlation in the 15-year-old group.

Discussion

Lexical and Conflict Effects

This study examined the neural correlates of phonological processing during a rhyme judgment task in the auditory modality in a group of 9- to 15-year-old children. When comparing the lexical to the null conditions (masked to exclude perceptual activation), the rhyming task produced activation in left superior and middle temporal gyri (BA 22, 21) and left inferior frontal gyrus (BA 45, 46), supporting the roles of these brain regions in phonological processing (Binder, et al. 1994; Poldrack, et al. 1999). Lexical activation included both dorsal (BA 45; pars opercularis) and ventral (BA 46; pars triangularis) aspects of the inferior frontal cortex. While left inferior frontal gyrus activation has been widely implicated in phonological processing (Burton 2001; Zatorre, et al. 1996), findings from some studies support the notion that dorsal and ventral aspects of left inferior frontal gyrus show preferential activation for phonological and semantic processing, respectively (Bokde, et al. 2001; Devlin, et al. 2003; Poldrack, et al. 1999). A rhyme decision task necessarily involves phonological processing for accurate performance, but semantic representations may be automatically activated during this task. The lexical activation in the dorsal region was very close to activation identified by Devlin, Matthews, and Rushworth (2003) as stronger during phonological as compared to semantic judgments of the same words, suggesting that the dorsal activation of left inferior frontal gyrus in the current study is associated with phonological processing. The lexical activation in the ventral region, on the other hand, is very close to activation identified by Poldrack et al. (1999) as stronger during semantic than phonological decisions, suggesting that this region in the current study was associated with automatic activation of semantic processes during the rhyme decision task.
However, this ventral activation was also very close to a region that showed positive correlations with phonological awareness in a group of subjects ranging from 6 to 22 years of age (Turkeltaub, et al. 2003). Therefore, involvement of this ventral region of left inferior frontal cortex may have been associated with both semantic and phonological processes during the auditory rhyme decision task.

The comparison of lexical conditions versus null also produced activation in left fusiform gyrus (BA 37). This cluster is quite close to an area thought to be involved in supramodal word processing (x = -44, y = -60, z = -8) (Cohen, et al. 2004), suggesting that orthographic information in children is automatically activated to spoken words even during phonological tasks. The increased errors and reaction times for the O+P- relative to the O-P- conditions also demonstrate that orthographic information influences performance in an auditory task that does not require access to orthographic word representations, and that this information can in fact interfere with the phonologically-based rhyme decision.

Activation in superior/posterior regions of left superior temporal and left inferior parietal cortex was not found in our comparison of lexical conditions versus null. We expected that we might observe activation, as well as potential developmental increases, in these regions because they have been implicated in phonological processes (Binder, et al. 1994) and mapping between orthographic and phonological representations (Booth, et al. 2002; Booth, et al. 2003a), respectively. The lack of such effects in these regions may result from the relatively low demands on phonological processing imposed by our rhyming task, or may arise because the task relied on auditory processing regions shared by the perceptual control (which was used as an exclusive mask).

The effect of conflict between orthographic and phonological representations was investigated by determining regions of greater activation in conflicting than non-conflicting conditions, separately for rhyming (“yes” response) and non-rhyming (“no” response) conditions. No regions were significantly more active for the O-P+ (e.g., JAZZ-HAS) than the O+P+ (e.g. GATE-HATE) condition; however, several conflict effects emerged through the comparison of the O+P- (e.g. PINT-MINT) versus the O-P- (e.g., PRESS-LIST) conditions. The O+P- (e.g. PINT-MINT) condition has consistently been found to be more difficult than the O-P+ condition in the context of a rhyme decision task, demonstrated by both longer reaction times and higher error rates relative to other conditions in both children and adults (Kramer and Donchin 1987; McPherson, et al. 1997; Polich, et al. 1983; Rugg and Barrett 1987; Weber-Fox, et al. 2003). One potential reason for this difference is that it is likely more difficult to appropriately reject a non-rhyme (i.e., make a correct “no” decision) than to appropriately accept a rhyme (i.e., make a correct “yes” decision) (Ratcliff 1985). Additionally, when the words are presented exclusively in the auditory modality, as was the case in the current experiment, orthographic processing may be less likely to encroach upon the rhyme decision when the answer is “yes,” in which case a rhyme is easily detected, than when the answer is “no,” leaving more room for interference from orthographic information. Such an interpretation is consistent with the finding of longer reaction times in the O+P- than the O-P+ condition (see Table 2). Within the non-rhyming conditions, the comparison of the conflicting O+P- to the non-conflicting O-P- condition revealed activation in left dorsal inferior frontal gyrus (BA 46; pars opercularis), left inferior temporal/fusiform cortex (BA 37), and medial frontal cortex (BA 8). 
As noted above, the dorsal inferior frontal gyrus has been implicated in phonological processing (Binder, et al. 1994). Because fusiform gyrus has been implicated in orthographic processing (Cohen, et al. 2004), greater activation in this region during conflicting conditions may represent greater interference from task-irrelevant orthographic information. Our finding of greater activation in medial frontal gyrus (BA 8) for the conflicting O+P- compared to the non-conflicting O-P- condition is generally consistent with studies that have implicated the medial frontal gyrus in response selection and error monitoring (Braver, et al. 2001).

Developmental and performance effects

When comparing lexical to null conditions, we showed developmental increases in brain activation in left middle temporal cortex (BA 22). This may reflect greater automatic access to semantic representations with increasing age. Studies examining semantic judgments to visually and auditorily presented word pairs in children have shown age-related increases in a region close (less than 1 cm) to the peak activation cluster reported here and have also demonstrated that greater activation in this region is associated with lower association strength between the two words in the pair (Chou, et al. 2006a; Chou, et al. 2006b). Developmental increases in activation were also demonstrated in left dorsal inferior frontal gyrus (BA 9; pars opercularis) when comparing the conflicting O+P- to the non-conflicting O-P- condition. The fact that we did not find such age correlations in the dorsal aspect of inferior frontal gyrus when comparing all lexical to null conditions indicates that these age effects emerge only in the most difficult conflicting condition, when the orthographically similar representations interfere with the identification of a non-rhyme. This suggests that the age-related increase in activation of dorsal inferior frontal gyrus represents a developmental change in the ability to access this region when needed, and not merely a change in excitability to any lexical stimulus. The recruitment of dorsal inferior frontal gyrus may enhance task-relevant phonological processing in the face of conflict from the orthographic domain. This interpretation is consistent with the finding from an effective connectivity study (Bitan, et al. 2005) of greater top-down modulation of relevant posterior representations in adults versus children. Our finding is also consistent with other studies showing age-related increases in left inferior frontal gyrus in a variety of lexical tasks (Gaillard, et al. 2003; Holland, et al. 2001; Shaywitz, et al. 2002; Turkeltaub, et al. 2003).
Previous findings implicating the left dorsal inferior frontal gyrus in phonological processing (Bokde, et al. 2001; Devlin, et al. 2003; Poldrack, et al. 1999) further support the interpretation of this effect as reflecting age-related improvements in the ability to access or manipulate phonological representations.

We also showed that higher accuracy in the lexical versus null conditions was correlated with greater activation in left ventral inferior frontal gyrus (BA 46; pars triangularis). Activation in left ventral inferior frontal gyrus has been shown to be preferentially active for semantic processing (Bokde, et al. 2001; Devlin, et al. 2003; Poldrack, et al. 1999). However, despite findings indicating preferential roles of the dorsal and ventral regions of inferior frontal gyrus for phonological and semantic processing, respectively (Bokde, et al. 2001; Devlin, et al. 2003; Poldrack, et al. 1999), various studies have defined the two regions differently. As an example, the region of left inferior frontal gyrus that showed a significant correlation with task accuracy in the current study (-42, 27, 12) is proximal to regions in two studies which offer disparate interpretations of the effect. On one hand, it is close (14 mm) to a region shown by Poldrack et al. (1999) to be preferentially active for a semantic decision task as compared to a phonological task with pseudowords. On the other hand, our effect is also quite close (8 mm) to a region identified by Bokde et al. (2001) as having stronger connections, relative to a more inferior region, with posterior temporal and occipital regions for processing words, pseudowords, and consonant letter strings, indicating a preferential role in phonological processing, as compared to the inferior region, which showed stronger connections with occipital regions for words only, indicating a preferential role in semantic processing. It may be the case that this region is preferentially involved in semantic processing relative to phonological processing (Poldrack, et al. 1999) but also involved more in phonological processing relative to even more inferior regions of the prefrontal cortex (Bokde, et al. 2001).
Despite evidence for preferential roles of dorsal and ventral regions of the left inferior frontal gyrus in phonological and semantic processing, it is likely that both regions contribute significantly to both phonological and semantic processing to different extents (Devlin, et al. 2003).

As shown in Figure 4, age differences in activation as a function of reaction time were found in left lingual/fusiform gyrus and bilateral cuneus in the O+P- condition, with the strongest correlation between individual subjects' reaction time and activation in left lingual/fusiform gyrus for the 15-year-olds. Our behavioral results showed the conflicting O+P- condition to be the most difficult condition, with the longest reaction times and lowest accuracy, and as shown in Figure 2A, left fusiform/inferior temporal gyrus showed greater activation in the O+P- condition than in the non-conflicting O-P- condition. The association of longer reaction times in this condition with greater activation in left fusiform gyrus may indicate that, on items with longer reaction times, the orthographic representations of the words were more strongly activated because there was more time on those trials for the orthographic representations to become activated. The stronger correlation between reaction time and activation in left fusiform gyrus for the older children shows that there was a more systematic relationship between these variables with increasing age. This stronger correlation for older children is consistent with behavioral research which suggests greater interactivity with orthographic representations during spoken language processing (Bruck 1992; Tunmer and Nesdale 1982; Zecker 1991) and with neuroimaging research which shows learning-related increases in connectivity with inferior temporal cortex (Hashimoto and Sakai 2004).

In conclusion, this study showed developmental increases in activation of left dorsal inferior frontal gyrus and left middle temporal gyrus. The developmental increase in inferior frontal gyrus activation emerged as a conflict effect, indicating that, as age increases, children are better able to recruit this region for phonological processes related to the rhyme decision task in the face of conflicting orthographic information. Because the developmental increases in middle temporal gyrus were evident across all conditions, it seems that this effect represents an age-related increase in the automatic access of semantic representations during a phonological task. Performance-related increases in the left ventral inferior frontal gyrus may reflect either greater selection/retrieval of semantic representations, which could aid the phonological processing required by the rhyming task through interactivity of representational systems, or a more direct involvement in phonological processing. Despite the exclusively auditory presentation of stimuli, several interesting effects emerged regarding left fusiform gyrus. This region was activated for the group as a whole across all conditions, indicating that the children automatically activated orthographic representations of the auditorily-presented words regardless of condition. However, activation in this region was stronger for the most difficult conflicting condition (O+P-) relative to the non-conflicting condition (O-P-), suggesting that greater orthographic activation is necessary to overcome the conflicting orthographic and phonological information. Furthermore, an age-related increase in the correlation of within-subject reaction time and activation in left fusiform gyrus in the O+P- condition suggests that connectivity between orthographic and phonological representations becomes stronger over development.

Acknowledgments

This research was supported by grants from the National Institute of Child Health and Human Development (HD042049) and the National Institute on Deafness and Other Communication Disorders (DC06149) to James R. Booth. We thank Caroline Na for her assistance in the analysis of the fMRI data.

References

- The Educator's Word Frequency Guide. Brewster, NY: Touchstone Applied Science Associates, Inc.; 1996.

- Baayen RH, Piepenbrock R, Gulikers L. The CELEX Lexical Database [CD-ROM]. Version Release 2. Philadelphia, PA: Linguistic Data Consortium, University of Pennsylvania; 1995.

- Binder JR, Rao SM, Hammeke TA, Yetkin FZ, Jesmanowicz A, Bandettini PA, Wong EC, Estkowski LD, Goldstein MD, Haughton VM, et al. Functional magnetic resonance imaging of human auditory cortex. Annals of Neurology. 1994;35:662–672. doi: 10.1002/ana.410350606.

- Bitan T, Booth JR, Choy J, Burman DD, Gitelman DR, Mesulam MM. Shifts of effective connectivity within a language network during rhyming and spelling. Journal of Neuroscience. 2005;25:5397–5403. doi: 10.1523/JNEUROSCI.0864-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bokde AL, Tagamets MA, Friedman RB, Horwitz B. Functional interactions of the inferior frontal cortex during the processing of words and word-like stimuli. Neuron. 2001;30(2):609–17. doi: 10.1016/s0896-6273(01)00288-4. [DOI] [PubMed] [Google Scholar]

- Bolger DJ, Hornickel J, Cone NE, Burman DD, Booth JR. Neural correlates of orthographic and phonological consistency effects in children. Human Brain Mapping. doi: 10.1002/hbm.20476. in press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Booth JR, Burman DD, Meyer JR, Gitelman DR, Parrish TR, Mesulam MM. Functional anatomy of intra- and cross-modal lexical tasks. NeuroImage. 2002;16:7–22. doi: 10.1006/nimg.2002.1081. [DOI] [PubMed] [Google Scholar]

- Booth JR, Burman DD, Meyer JR, Gitelman DR, Parrish TR, Mesulam MM. The relation between brain activation and lexical performance. Human Brain Mapping. 2003a;19:155–169. doi: 10.1002/hbm.10111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Booth JR, Burman DD, Meyer JR, Zhang L, Choy J, Gitelman DR, Parrish TR, Mesulam MM. Modality-specific and -independent developmental differences in the neural substrate for lexical processing. Journal of Neurolinguistics. 2003b;16:383–405. [Google Scholar]

- Booth JR, Burman DD, Meyer JR, Zhang L, Gitelman DR, Parrish TR, Mesulam MM. Development of brain mechanisms for processing orthographic and phonological representations. Journal of Cognitive Neuroscience. 2004;16:1234–1249. doi: 10.1162/0898929041920496. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Booth JR, Burman DD, Van Santen FW, Harasaki Y, Gitelman DR, Parrish TR, Mesulam MM. The development of specialized brain systems in reading and oral-language. Child Neuropsychology. 2001;7(3):119–141. doi: 10.1076/chin.7.3.119.8740.

- Booth JR, Perfetti CA, MacWhinney B. Quick, automatic, and general activation of orthographic and phonological representations in young readers. Developmental Psychology. 1999;35:3–19. doi: 10.1037/0012-1649.35.1.3.

- Booth JR, Perfetti CA, MacWhinney B, Hunt SB. The association of rapid temporal perception with orthographic and phonological processing in reading impaired children and adults. Scientific Studies of Reading. 2000;4:101–132.

- Braver TS, Barch DM, Gray JR, Molfese DL, Snyder A. Anterior cingulate cortex and response conflict: Effects of frequency, inhibition and errors. Cerebral Cortex. 2001;11(9):825–836. doi: 10.1093/cercor/11.9.825.

- Bruck M. Persistence of dyslexics' phonological awareness deficits. Developmental Psychology. 1992;28:874–886.

- Burock MA, Buckner RL, Woldorff MG, Rosen BR, Dale AM. Randomized event-related experimental designs allow for extremely rapid presentation rates using functional MRI. NeuroReport. 1998;9:3735–3739. doi: 10.1097/00001756-199811160-00030.

- Burton H, Diamond JB, McDermott KB. Dissociating cortical regions activated by semantic and phonological tasks: a FMRI study in blind and sighted people. Journal of Neurophysiology. 2003;90(3):1965–82. doi: 10.1152/jn.00279.2003.

- Burton MW. The role of the inferior frontal cortex in phonological processing. Cognitive Science. 2001;25:695–709.

- Burton MW, Locasto PC, Krebs-Noble D, Gullapalli RP. A systematic investigation of the functional neuroanatomy of auditory and visual phonological processing. NeuroImage. 2005;26(3):647–61. doi: 10.1016/j.neuroimage.2005.02.024.

- Callan DE, Tajima K, Callan AM, Kubo R, Masaki S, Akahane-Yamada R. Learning-induced neural plasticity associated with improved identification performance after training of a difficult second-language phonetic contrast. NeuroImage. 2003;19(1):113–24. doi: 10.1016/s1053-8119(03)00020-x.

- Campbell R. Speech in the head? Rhyme skill, reading, and immediate memory in the deaf. In: Reisberg D, editor. Auditory Imagery. Hillsdale: Lawrence Erlbaum Associates Inc.; 1992. pp. 73–94.

- Chereau C, Gaskell MG, Dumay N. Reading spoken words: Orthographic effects in auditory priming. Cognition. 2007;102:341–360. doi: 10.1016/j.cognition.2006.01.001.

- Chou TL, Booth JR, Bitan T, Burman DD, Bigio JD, Cone NE, Dong L, Cao F. Developmental and skill effects on the neural correlates of semantic processing to visually presented words. Human Brain Mapping. 2006a;27:915–924. doi: 10.1002/hbm.20231.

- Chou TL, Booth JR, Burman DD, Bitan T, Bigio JD, Dong L, Cone NE. Developmental changes in the neural correlates of semantic processing. NeuroImage. 2006b;29:1141–1149. doi: 10.1016/j.neuroimage.2005.09.064.

- Coch D, Grossi G, Coffey-Corina S, Holcomb PJ, Neville HJ. A developmental investigation of ERP auditory rhyming effects. Developmental Science. 2002;5(4):467–489.

- Cohen L, Jobert A, Le Bihan D, Dehaene S. Distinct unimodal and multimodal regions for word processing in the left temporal cortex. NeuroImage. 2004;23(4):1256–70. doi: 10.1016/j.neuroimage.2004.07.052.

- Corina D, Richards T, Serafini S, Richards A, Steury K, Abbott R, et al. fMRI auditory language differences between dyslexic and able reading children. Neuroreport: For Rapid Communication of Neuroscience Research. 2001;12(6):1195–1201. doi: 10.1097/00001756-200105080-00029.

- Dehaene S, Jobert A, Naccache L, Ciuciu P, Poline JB, Le Bihan D, Cohen L. Letter binding and invariant recognition of masked words: behavioral and neuroimaging evidence. Psychological Science. 2004;15(5):307–13. doi: 10.1111/j.0956-7976.2004.00674.x.

- Devlin JT, Matthews PM, Rushworth MF. Semantic processing in the left inferior prefrontal cortex: a combined functional magnetic resonance imaging and transcranial magnetic stimulation study. Journal of Cognitive Neuroscience. 2003;15(1):71–84. doi: 10.1162/089892903321107837.

- Dijkstra T, Roelofs A, Fieuws S. Orthographic effects on phoneme monitoring. Canadian Journal of Experimental Psychology. 1995;49(2):264–271. doi: 10.1037/1196-1961.49.2.264.

- Donnenwerth-Nolan S, Tanenhaus MK, Seidenberg MS. Multiple code activation in word recognition: Evidence from rhyme monitoring. Journal of Experimental Psychology: Human Learning & Memory. 1981;7(3):170–180.

- Foorman BR, Francis DJ, Fletcher JM, Lynn A. Relation of phonological and orthographic processing to early reading: comparing two approaches to regression-based, reading-level-match designs. Journal of Educational Psychology. 1996;88(4):639–652.

- Foorman BR. Phonological and orthographic processing: separate but equal? In: The varieties of orthographic knowledge I: Theoretical and developmental issues. Dordrecht, The Netherlands: Kluwer Academic Publishers; 1994. pp. 321–357.

- Gaillard WD, Sachs BC, Whitnah JR, Ahmad Z, Balsamo LM, Petrella JR, Braniecki SH, McKinney CM, Hunter K, Xu B, et al. Developmental aspects of language processing: fMRI of verbal fluency in children and adults. Human Brain Mapping. 2003;18:176–185. doi: 10.1002/hbm.10091.

- Hagoort P, Indefrey P, Brown C, Herzog H, Steinmetz H, Seitz RJ. The neural circuitry involved in the reading of German words and pseudowords: A PET study. Journal of Cognitive Neuroscience. 1999;11(4):383–398. doi: 10.1162/089892999563490.

- Hashimoto R, Sakai KL. Learning letters in adulthood: direct visualization of cortical plasticity for forming a new link between orthography and phonology. Neuron. 2004;42(2):311–22. doi: 10.1016/s0896-6273(04)00196-5.

- Holland SK, Plante E, Byars AW, Strawsburg RH, Schmithorst VJ, Ball WS. Normal fMRI brain activation patterns in children performing a verb generation task. NeuroImage. 2001;14:837–843. doi: 10.1006/nimg.2001.0875.

- Jakimik J, Cole RA, Rudnicky AI. Sound and spelling in spoken word recognition. Journal of Memory & Language. 1985;24(2):165–178.

- Johnston RS, McDermott EA. Suppression effects in rhyme judgement tasks. Quarterly Journal of Experimental Psychology A. 1986;38(1A)

- Juel C. The development and use of mediated word identification. Reading Research Quarterly. 1983;18(3):306–327.

- Kramer AF, Donchin E. Brain potentials as indices of orthographic and phonological interaction during word matching. Journal of Experimental Psychology: Learning, Memory, & Cognition. 1987;13(1):76–86. doi: 10.1037//0278-7393.13.1.76.

- Levinthal CF, Hornung M. Orthographic and phonological coding during visual word matching as related to reading and spelling abilities in college students. Reading & Writing. 1992;4(3):1–20.

- McCandliss BD, Cohen L, Dehaene S. The visual word form area: Expertise for reading in the fusiform gyrus. Trends in Cognitive Sciences. 2003;7:293–299. doi: 10.1016/s1364-6613(03)00134-7.

- McPherson BW, Ackerman PT, Dykman RA. Auditory and visual rhyme judgements reveal differences and similarities between normal and disabled adolescent readers. Dyslexia. 1997;3:63–77.

- Perfetti CA. Reading acquisition and beyond: decoding includes cognition. American Journal of Education. 1984;93(1):40–60.

- Plaut DC, Booth JR. Individual and developmental differences in semantic priming: Empirical findings and computational support for a single-mechanism account of lexical processing. Psychological Review. 2000;107(4):786–823. doi: 10.1037/0033-295x.107.4.786.

- Plaut DC, McClelland JL, Seidenberg MS, Patterson K. Understanding normal and impaired word reading: Computational principles in quasi regular domains. Psychological Review. 1996;103:56–115. doi: 10.1037/0033-295x.103.1.56.

- Poldrack RA, Wagner AD, Prull MW, Desmond JE, Glover GH, Gabrieli JD. Functional specialization for semantic and phonological processing in the left inferior prefrontal cortex. NeuroImage. 1999;10(1):15–35. doi: 10.1006/nimg.1999.0441.

- Polich J, McCarthy G, Wang WS, Donchin E. When words collide: orthographic and phonological interference during word processing. Biological Psychology. 1983;16(3–4):155–80. doi: 10.1016/0301-0511(83)90022-4.

- Pugh K, Mencl W, Jenner A, Katz L, Frost S, Lee J, et al. Functional neuroimaging studies of reading and reading disability (developmental dyslexia). Mental Retardation & Developmental Disabilities Research Reviews. 2000;6(3):207–213. doi: 10.1002/1098-2779(2000)6:3<207::AID-MRDD8>3.0.CO;2-P.

- Raboyeau G, Marie N, Balduyck S, Gros H, Demonet JF, Cardebat D. Lexical learning of the English language: a PET study in healthy French subjects. NeuroImage. 2004;22(4):1808–18. doi: 10.1016/j.neuroimage.2004.05.011.

- Raizada R, Richards T, Meltzoff A, Kuhl P. Socioeconomic status predicts hemispheric specialisation of the left inferior frontal gyrus in young children. NeuroImage. 2008. doi: 10.1016/j.neuroimage.2008.01.021. in press.

- Ratcliff R. Theoretical interpretations of the speed and accuracy of positive and negative processes. Psychological Review. 1985;92:212–225.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annual Review of Neuroscience. 2004;27:169–92. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rudner M, Ronnberg J, Hugdahl K. Reversing spoken items--mind twisting not tongue twisting. Brain & Language. 2005;92(1):78–90. doi: 10.1016/j.bandl.2004.05.010.

- Rugg MD, Barrett SE. Event-related potentials and the interaction between orthographic and phonological information in a rhyme-judgment task. Brain and Language. 1987;32:336–61. doi: 10.1016/0093-934x(87)90132-5.

- Seidenberg MS, Tanenhaus MK. Orthographic effects on rhyme monitoring. Journal of Experimental Psychology: Human Learning and Memory. 1979;5(6):546–554.

- Shaywitz B, Shaywitz S, Pugh K, Mencl E, Fulbright R, Skudlarski P, Constable T, Marchione K, Fletcher J, Lyon R, et al. Disruption of posterior brain systems for reading in children with developmental dyslexia. Biological Psychiatry. 2002:101–110. doi: 10.1016/s0006-3223(02)01365-3.

- Tunmer WE, Nesdale AR. The effects of digraphs and pseudowords on phonemic segmentation in young children. Applied Psycholinguistics. 1982;3(4):299–311.

- Turkeltaub PE, Gareau L, Flowers DL, Zeffiro TA, Eden G. Development of the neural mechanisms for reading. Nature Neuroscience. 2003;6(6):767–773. doi: 10.1038/nn1065.

- Wagner RK, Torgesen JK. The nature of phonological processing and its causal role in the acquisition of reading skills. Psychological Bulletin. 1987;101(2):192–212.

- Wang Y, Sereno JA, Jongman A, Hirsch J. fMRI Evidence for Cortical Modification during Learning of Mandarin Lexical Tone. Journal of Cognitive Neuroscience. 2003;15(7):1019–1027. doi: 10.1162/089892903770007407.

- Weber-Fox C, Spencer R, Cuadrado E, Smith AB. Development of neural processes mediating rhyme judgments: Phonological and orthographic interactions. Developmental Psychobiology. 2003;43:128–145. doi: 10.1002/dev.10128.

- Wechsler D. Wechsler Abbreviated Scale of Intelligence. The Psychological Corporation, a Harcourt Brace & Company; 1999.

- Zatorre RJ, Meyer E, Gjedde A, Evans AC. PET studies of phonetic processing of speech: Review, replication, and reanalysis. Cerebral Cortex. 1996;6(1):21–30. doi: 10.1093/cercor/6.1.21.

- Zecker S. The orthographic code: Developmental trends in reading-disabled and normally-achieving children. Annals of Dyslexia. 1991;41:179–192. doi: 10.1007/BF02648085.

- Ziegler JC, Ferrand L. Orthography shapes the perception of speech: The consistency effect in auditory word recognition. Psychonomic Bulletin & Review. 1998;5(4):683–689.