Abstract

National stakeholders are becoming increasingly concerned about the inability of college graduates to think critically. Research shows that, while both faculty and students deem critical thinking essential, only a small fraction of graduates can demonstrate the thinking skills necessary for academic and professional success. Many faculty are considering nontraditional teaching methods that incorporate undergraduate research because they more closely align with the process of doing investigative science. This study compared a research-focused teaching method called community-based inquiry (CBI) with traditional lecture/laboratory in general education biology to discover which method would elicit greater gains in critical thinking. Results showed significant critical-thinking gains in the CBI group but decreases in a traditional group and a mixed CBI/traditional group. Prior critical-thinking skill, instructor, and ethnicity also significantly influenced critical-thinking gains, with nearly all ethnicities in the CBI group outperforming peers in both the mixed and traditional groups. Females, who showed decreased critical thinking in traditional courses relative to males, outperformed their male counterparts in CBI courses. Through the results of this study, it is hoped that faculty who value both research and critical thinking will consider using the CBI method.

INTRODUCTION

A National Crisis in Critical Thinking

Not since the time of Sputnik has the focus on national science, technology, engineering, and mathematics (STEM) reform been so strong. It is becoming abundantly clear that the United States must revise STEM teaching practices to maintain its international competitiveness. Increasing the number of STEM majors in the pipeline is necessary (National Research Council [NRC], 2003; National Academy of Sciences et al., 2005) but insufficient. We must also improve STEM general education to promote an informed electorate, produce more competitive college graduates, and capitalize on this generation's potential.

Recent national reports indicate that U.S. college graduates are becoming less competitive in the global marketplace. Research shows that a large majority of U.S. college graduates lack essential critical-thinking and problem-solving skills, abilities that directly contribute to academic and professional success. A recent report reveals that 93% of college faculty consider analytical and critical thinking to be among the most essential skills students can develop, and while a majority of students believe college experiences prepare them to think, only 6% of graduates can actually demonstrate these essential skills (Association of American Colleges and Universities, 2005). The questions being asked by many higher education faculty are: (1) What is causing the disconnect between critical-thinking perception and reality? and (2) What tools can be used to rectify the problem?

In order for students to better compete on the international stage, higher education faculty need to make practical instructional changes. National recommendations are clear: Science should be learned and taught as science is done in the real world (American Association for the Advancement of Science [AAAS], 1989; NRC, 1996, 2000). Specifically, students must learn how to solve real-world problems and apply knowledge in creative and innovative ways (Council on Competitiveness, 2005; PKAL, 2006). In order for students to learn, their preconceptions of science must be engaged, they must “do” science as science is done by professionals, and they must become aware of how they think, not just what they think (Bransford and Donovan, 2005).

Despite the accumulating evidence for the efficacy of nontraditional methods like inquiry-based instruction, many faculty continue to resist pressures to modify their teaching. The lack of studies that show clear connections between critical thinking and STEM teaching methods (Tsui, 1998, 2002) may contribute to faculty reticence. Ultimately, convincing data and practical methods are necessary to motivate faculty to make the necessary instructional reforms.

One approach that may prove to be palatable for content faculty is greater integration of research into the classroom. In the laboratory and field, faculty intentionally set up investigative experiences that require students to structure and create their own knowledge and skills under the guidance of a content expert. Many such discovery-based teaching methods are used successfully (Porta, 2000; DebBurman, 2002; Howard and Miskowski, 2005), but small-scale implementation in the classroom limits long-term meaningful instructional reform (Building Engineering and Science Talent, 2003). With regard to national recommendations, teaching methods that focus more on integrating research experiences may help students build core thinking skills, which in turn will increase their academic and professional success in ways that contribute to worker productivity and national competitiveness (National Academy of Sciences et al., 2005; Bybee and Fuchs, 2006; National Science Board, 2007). However, it is not always feasible to use research-focused teaching methods in STEM courses, particularly those with large sections. What is needed is a practical instructional approach that addresses faculty concerns and considers professional values, improves critical-thinking skills, and connects teaching and learning to solving real-world problems. Considering that fewer students are choosing STEM disciplines in college, it is also imperative that undergraduates be provided with authentic research experiences that increase engagement and relevance and promote entry into STEM majors (Smith et al., 2005; Russell et al., 2007).

The Centrality of Critical Thinking

The importance of critical thinking has been established since the time of Socrates. Yet, despite the requirement of critical thinking in many scientific endeavors, it is shocking how few undergraduate students can demonstrate these skills (Association of American Colleges and Universities, 2005), and how little emphasis is placed on explicitly teaching these skills in an organized and systematic way in STEM courses (Miri et al., 2007). Critical thinking and the elements that constitute it are described in detail elsewhere (Ennis, 1985; Facione and American Philosophical Association, 1990; Jones et al., 1995; Facione, 2007), but briefly, critical thinking is defined as a “process of purposeful self-regulatory judgment that drives problem-solving and decision-making,” or the “engine” that drives how we decide what to do or believe in a given context. Critical thinking comprises behavioral tendencies (e.g., curiosity, open-mindedness) and cognitive skills (e.g., analysis, inference, evaluation; Ennis, 1985). Behavioral tendencies toward critical thinking appear not to change, at least not over the short term (Giancarlo and Facione, 2001; Ernst and Monroe, 2006). However, significant gains in critical-thinking skill can occur in as little as 9 weeks (Quitadamo and Kurtz, 2007). The academic and personal benefits of critical thinking are fairly obvious; students tend to get better grades, use better personal reasoning (United States Department of Education, 1990), and are preferentially employed (Carnevale and American Society for Training and Development, 1990; Holmes and Clizbe, 1997; National Academy of Sciences et al., 2005). It is also clear from the literature that the majority of university faculty value critical thinking as an essential student learning outcome (Association of American Colleges and Universities, 2005).

The Utility of Undergraduate Research

There exists a sizable body of knowledge on the benefits of undergraduate research (Kardash, 2000; Hathaway et al., 2002; Lopatto, 2004; Seymour et al., 2004; Hunter et al., 2007; Russell et al., 2007), but few studies have explored the relationship between undergraduate research and critical thinking explicitly. Ten years ago, the Boyer Commission advocated research as a way to improve undergraduate science education (Boyer, 1998). However, providing independent research opportunities for each and every STEM undergraduate is not a reasonable goal for most colleges and universities. Furthermore, undergraduate research has rarely been applied to general education courses, which host students who arguably have the greatest need for these experiences.

Most studies of the effects of undergraduate research rely on anecdotal evidence including academic performance (e.g., GPA), undergraduate and alumni surveys of student and faculty attitudes and perception, entry into STEM programs, and postgraduation marketability and employment rates (Kinkel and Henke, 2006; Russell et al., 2007). A number of studies have found that undergraduate research experiences help students to develop their ability to conceptualize a scientific problem, design experiments, collect and analyze data, draw conclusions in a contextual manner, and in general to “think and act like a scientist” (Kardash, 2000; Hathaway et al., 2002; Lopatto, 2004; Seymour et al., 2004; Hunter et al., 2007). Undergraduate research experiences seem to lower barriers to primary literature and improve perception of gains in content knowledge (DebBurman, 2002; Russell et al., 2007) regardless of institution type (Lopatto, 2004). However, there is some indication that females do not benefit to the same extent as males (Kardash, 2000). Furthermore, while students seem to connect more strongly to particular fields of study as a result of research experiences (Lopatto et al., 2004; Hunter et al., 2007), they remain largely unable to frame research questions or creatively design experiments to investigate their questions, and they lack an understanding of how scientific knowledge is constructed over time (Hunter et al., 2007).

These studies provide important insight into the utility of undergraduate research experiences and imply a correlation between undergraduate research and greater research skill, deeper content knowledge, entry into STEM majors, and future success. While many studies have looked at stand-alone undergraduate research programs, few studies have investigated the effects of research experiences as an integral part of STEM general education courses, and even fewer have investigated their specific effects on critical thinking. If the majority of faculty believe that critical thinking is a core outcome of higher education (Association of American Colleges and Universities, 2005) and undergraduate research is a key method for improving student learning (Kardash, 2000; Hathaway et al., 2002; Lopatto, 2004; Seymour et al., 2004; Russell et al., 2007), then it stands to reason that more students should be able to experience these benefits, not just a select few.

Using Community-based Inquiry to Integrate Research and Critical Thinking

Instructional methods that incorporate elements of undergraduate research are called by different names. The terms project-based learning, experiential learning, and community-based research generally refer to teaching methods that promote student inquiry and discovery in an authentic context (Kolb, 1984; Blumenfeld et al., 1991; Sclove, 1995). Inquiry is recommended as a core teaching strategy in STEM courses (American Association for the Advancement of Science, 1993; National Research Council, 1996), ranges from teacher-guided to student-directed (Colburn, 2000), and can provide a focus for laboratory activities or serve as a framework for an entire course. Some colleges and universities have successfully used such community-based inquiry (CBI) methods (Huard, 2001) to address critical-thinking outcomes in STEM courses (Magnussen et al., 2000; Arwood, 2004; Ernst and Monroe, 2006); but results supporting a relationship between critical thinking and inquiry-based instruction are somewhat mixed. Some studies indicate that inquiry-based instruction in STEM courses improves critical-thinking skill (Arwood, 2004; Ernst and Monroe, 2006; Quitadamo and Kurtz, 2007) but not critical-thinking disposition in the same time frame (Ernst and Monroe, 2006). Other studies show inquiry-based instruction has no overall effect on critical thinking (Magnussen et al., 2000). 
Based on existing literature, it is difficult to generate a clear picture of the effect of inquiry-based teaching on critical thinking because most studies do not focus on critical thinking explicitly (Kardash, 2000; Hathaway et al., 2002; DebBurman, 2002; Lopatto, 2004; Kinkel and Henke, 2006; Russell et al., 2007) or suffer from issues like regression toward the mean (Magnussen et al., 2000), the lack of a valid and reliable measure to assess critical thinking (DiPasquale et al., 2003; Arwood, 2004), nonmatched pretest and posttest scores, or lack of suitable comparison groups that account for covariable effects (Kardash, 2000; Lopatto, 2004; Kinkel and Henke, 2006).

Purpose of the Study

This study was designed to discover whether CBI could improve student critical thinking in general education biology courses compared with traditionally taught sections of the same course using the valid and reliable California Critical Thinking Skills Test (CCTST). The research questions that framed this investigation were:

Can CBI produce greater critical-thinking gains than traditional methods in general education biology?

Do students experiencing CBI develop greater analysis, inference, and evaluation skills than those who experience traditional instruction?

Do critical-thinking gains vary by gender, ethnicity, prior thinking skill, or instructor?

METHODS

Study Context

This study took place at a regional comprehensive university in the Pacific Northwest. Eight sections of a fundamentals of biology nonmajors course over two successive years were included in the study (n = 337). Two sections of conventional lecture/laboratory were assigned as a traditional group (n = 82). Two sections that partially implemented CBI but retained aspects of traditional instruction were assigned to a mixed group (n = 80). Four sections that fully implemented CBI were assigned as the treatment group (n = 175). Only students who completed both the critical-thinking pretest and posttest were included in the statistical analysis.

All course sections were taught in similarly appointed modern lecture classrooms and common laboratory facilities. Each lecture section included a maximum of 48 students and was taught for 50 min 4 d/wk. Laboratory included 24 students per section and met 1 d/wk for 2 h. Laboratory for all sections took place in the same two rooms except when field work was required. Two different instructors taught four CBI sections, one instructor taught both mixed sections, and two instructors taught the traditional course sections over successive fall terms. Lecture instructors materially participated in laboratory with support from one graduate assistant per lab section. The same nonmajors textbook was used across all sections. None of the instructors from the CBI, mixed, or traditional groups had implemented CBI previously.

CBI, mixed, and traditional groups differed primarily by the instructional method used and the extent to which it was implemented. The traditional group used a standard lecture format and a conventional laboratory manual with drill and skill-based exercises that covered common themes of biology, including the scientific method, ecology, evolution and natural selection, genetics and cell biology, macromolecules, and basic biochemistry. These same topics were covered in both the CBI and mixed groups using more nontraditional methods. Little emphasis was placed on student-driven scientific inquiry in the traditional group, and probably the only activities explicitly addressing critical-thinking skills were the discussion of and laboratory exercise on the scientific method. This method did include small collaborative group work, but interactions were typically limited to completing the laboratory exercises.

The mixed group emphasized critical thinking somewhat superficially and included an inquiry laboratory component, but did not include the case studies that were used in the CBI group to explicitly connect critical thinking and scientific process. This group included a standard lecture that did address some aspects of critical thinking. The lab was inquiry based, but it was somewhat conceptually disconnected from the other course work and was taught primarily by the teaching assistants. The mixed group included collaborative writing assignments for the group research proposal and poster (see below). A comparison of methods used in CBI, mixed, and traditional groups is described in Table 1.

Table 1.

Methods used in CBI, mixed, and traditional groups

| Method | CBI | Mixed | Traditional |
|---|---|---|---|
| Lecture | + | + | + |
| Small group work | + | + | + |
| Collaborative writing | + | + | − |
| Student-driven inquiry | + | +/− | − |
| Critical-thinking framework | + | +/− | − |
| Case studies | + | − | − |

+, +/−, and − symbols refer to full, partial, or no use of method, respectively.

CBI Overview and Planning

The CBI and mixed group laboratory implemented undergraduate research using quarter-long experiments organized around student-generated research questions. Naturalistic field observations during initial lab meetings led to drafting of preliminary research proposals based on student interest. Each proposal was connected in some way to addressing a pressing community need (e.g., water quality, amphibian decline). Proposals included a research question, null and alternative hypotheses, predictions, anticipated materials and methods, and a description of the experimental design. Students worked with faculty and teaching assistants to revise proposals, to collect necessary materials, and conduct their experiments over a period of several weeks. When students needed to analyze their data, they were taught mathematics and statistics using a just-in-time approach (Novak et al., 1999). Students orally presented and defended their research at a poster session during the final week of laboratory. Some examples of student research projects included frog capture-recapture, habitat selection, isopod tracking and migration, chemical effects on macroinvertebrate frequency and diversity, microbial contamination, water quality, environmental chemistry, and decomposition studies.

Initial CBI planning meetings took place just before the beginning of the academic term and included graduate assistant and instructor training to help them learn to consistently evaluate student work (i.e., proposals) using a rubric. A range of samples from poor to high quality was used to calibrate scoring and ensure consistency between evaluators from different sections within the CBI and mixed groups.

Description of the CBI Method

The CBI instructional model consisted of four main elements that worked in concert to foster gains in critical thinking. These elements included: (1) authentic inquiry related to community need, (2) case study exercises aligned to major course themes, (3) peer evaluation and individual accountability, and (4) lecture/content discussion. All four of these elements were integrated and used as a framework (Sundberg, 2003; Pukkila, 2004) focused on promoting the development of critical thinking through application of the scientific method (Miri et al., 2007).

On the first day of lecture, CBI instructors informed their students that, in addition to traditional exams and quizzes, their course performance would be evaluated using a combination of case study exercises, peer evaluations, and a research poster presentation. Students were grouped into teams of three to four individuals during the first laboratory session. The criteria for completing CBI assignments were further explained at that time.

Authentic science inquiry formed the cornerstone experience of the CBI model that took place during laboratory. Student learning outcomes were assessed using initial and final research proposals, a laboratory journal, and a formal research poster. Teams were introduced to the scientific method and data collection during the first few laboratory periods by examining some basic biological phenomena such as the dynamics of frog populations or the growth of bacteria. Each team developed a written research proposal built around their own research question with clearly defined independent and dependent variables, explicit null and alternative hypotheses, a detailed experimental design with anticipated materials and methods, and predictions for experimental results.

Initial drafts of research proposals were composed in Microsoft Word and submitted to course instructors using the campus Blackboard system. Each team's research proposal (typically six per lab section) was evaluated for clarity, effective research question, hypotheses, predictions, research design, and practicality. An initial grade worth 25% of the total proposal grade was assigned based on the proposal rubric (see Supplemental Material A). Evaluators electronically inserted comments and suggestions (largely in the form of Socratic questions [Faust and Paulson, 1998; Elder and Paul, 2004]) using Microsoft Word “track changes” tools. Each student team received their evaluated proposals electronically along with a completed proposal rubric. Teams addressed comments and suggestions and submitted a final draft worth 75% of the total proposal grade.

Once proposals were approved, students spent the remainder of laboratory time executing their research projects, collecting and analyzing data, and producing a formal research poster. Research posters included all components normally associated with a scientific manuscript, including an introduction, materials and methods, results, discussion, conclusions, and a literature cited section. CBI instructors provided students with a research poster rubric (see Supplemental Material B) ahead of time to clarify performance expectations and to help students gauge their own efforts. During a final poster session, students used the research poster rubric to peer evaluate other team posters. A compilation of all peer poster evaluations was incorporated into each team's final poster grade.

CBI students were required to keep a personal research journal summarizing all laboratory work. As with research proposal and poster rubrics, journal evaluation criteria were given to the students on the first day of laboratory (see Supplemental Material C). Collectively, CBI research proposals, journals, and posters accounted for approximately 23% of the students' final course grade.

Case study exercises were used during lecture to increase student understanding of the scientific method. Approximately eight to 10 lecture periods were devoted to case study work during the term. Each case study exercise was designed around a major theme in biology (e.g., the scientific method, molecules and cells, molecular genetics, evolution, and ecology) and intended to mimic and reinforce the scientific process used for CBI in the laboratory. Case study exercises followed a slightly modified version of the interrupted case method (Herreid, 1994, 2004, 2005a) where students worked in the same collaborative teams as for laboratory and submitted all answers in writing. Each exercise consisted of multiple parts (usually four) that were completed sequentially. The decision to use collaborative teams to support CBI was partly based on existing literature (Collier, 1980; Bruffee, 1984; Jones and Carter, 1998; Springer et al., 1999) and prior research that showed writing in small groups helps to measurably improve undergraduate critical-thinking skills (Quitadamo and Kurtz, 2007).

In the case study exercises, students were required to work through the scientific method, identify important questions and variables, state hypotheses, integrate important content information (supported by lecture), propose experiments, analyze data, and draw reasoned conclusions based on real examples from the scientific literature (see Herreid [2005b] for one example of a case study used in this part of the CBI model). Each research team submitted their answers using Microsoft Word as described above; submissions were evaluated using a case study rubric (see Supplemental Material D) and assigned an initial grade (33% of the total case study assignment grade). Instructors or graduate assistants who evaluated each team's case study submission posed additional Socratic questions (Faust and Paulson, 1998; Elder and Paul, 2004) aimed at clarifying the initial questions and/or answers. Teams reflected on initial proposal rubric scores and instructor comments, and were then allowed to revise and resubmit their work. This reflection and revision strategy was used in an attempt to develop student critical thinking (Dewey, 1933; Brookfield, 1987) and metacognitive awareness (Bransford and Donovan, 2005). Answers were reevaluated using a final proposal rubric (66% of the final case study assignment grade). Altogether, the case study exercises accounted for approximately 20% of the final course grade.

Peer evaluations were another element of the CBI model that provided individual accountability within each research team. This was done to help students reflect on and evaluate their own performance, maximize individual contributions to the group, and make sure students received credit proportional to their contributions. A peer evaluation rubric was used to assess team members based on their contributions, quality of work, effort, attitude, focus on tasks, work with others in the group, problem solving, and group efficacy (see Supplemental Material E). The average peer evaluation score for each student was included as approximately 5% of the final course grade.

The final element in the CBI instructional model was the lecture. As with traditional lecture sections these were largely content driven, but were also modified to focus on supporting both the CBI and the case study exercises within a framework of critical thinking. As with the CBI laboratory and case studies, the scientific method, inquiry as a process, and Socratic questioning (Faust and Paulson, 1998; Elder and Paul, 2004) were emphasized. Traditional exams and quizzes accounted for approximately 53% of a student's course grade.
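The grade components described above can be combined as in the following minimal sketch. The percentages come from the text; the function names and example scores are hypothetical. Because the stated component weights are approximate and sum to 101%, the sketch normalizes by the total weight, which is an assumption and not a documented part of the CBI grading procedure. For brevity, the research component is represented by the proposal score alone.

```python
# Approximate component weights (percent of final course grade) as stated in the text.
# They sum to 101, so the sketch divides by the total weight (an assumption).
WEIGHTS = {
    "research": 23,         # proposals, journals, and posters
    "case_studies": 20,
    "peer_evaluation": 5,
    "exams_quizzes": 53,
}


def two_stage_grade(initial, final, initial_share):
    """Combine initial and final draft scores (0-100) given the initial draft's share."""
    return initial_share * initial + (1 - initial_share) * final


def course_grade(scores):
    """Weighted mean of component scores (0-100), normalized by the total weight."""
    total_weight = sum(WEIGHTS.values())
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS) / total_weight


# Hypothetical team: proposal drafts scored 60, then 90 (25% / 75% split from the text).
proposal = two_stage_grade(60, 90, initial_share=0.25)

# Hypothetical component scores for one student.
example = course_grade({
    "research": proposal,
    "case_studies": 80,
    "peer_evaluation": 100,
    "exams_quizzes": 70,
})
```

The two-stage weighting rewards revision: most of the proposal grade depends on the final draft, so feedback on the first draft can still raise the score substantially.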

Research Design and Statistical Methods

A quasi-experimental pretest/posttest control group design was used to determine critical-thinking gains in CBI, mixed, and traditional groups. This design was chosen because intact groups were used and it was not feasible to randomly assign students between course sections. In the absence of a true experimental design, this design was the most useful because it minimizes threats to internal and external validity (Campbell and Stanley, 1963). Additional threats were managed by administering the CCTST 9 wk apart, and by including multiple covariables in the statistical analysis. Pretest sensitivity and selection bias were potential concerns, but were minimized via the use of a valid and reliable critical-thinking assessment evaluated for sensitivity (Facione, 1990) and by statistically accounting for prior critical thinking in critical-thinking gains.

Student critical-thinking skills were assessed using an online version of the CCTST (Facione, 1990; Facione et al., 1992, 2004). Critical-thinking gains between CBI, mixed, and traditional groups were evaluated using an analysis of covariance (ANCOVA) test, which was used to increase statistical accuracy and precision. Covariables used in the ANCOVA included prior critical-thinking skill (CCTST pretest scores), gender, ethnicity, age, class standing, time of day, instructor, course section, and academic year. Paired-samples t tests were used to compare CCTST pretest to posttest scores for the CBI, mixed, and traditional groups to determine whether gains or declines were significant. Mean, SE, and effect size were also compared between the CBI, mixed, and traditional groups.
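As an illustration of the paired-samples comparison, the following sketch computes gain scores and the paired t statistic for matched pretest and posttest scores. The scores are invented for the example and are not study data; the function name is hypothetical. The sketch stops at the t statistic and degrees of freedom, since obtaining a p value requires a t distribution that the Python standard library does not provide.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t statistic, degrees of freedom) for matched pre/post scores."""
    if len(pre) != len(post):
        # Only students with both a pretest and a posttest can be paired.
        raise ValueError("pre and post must contain matched scores")
    gains = [after - before for before, after in zip(pre, post)]
    n = len(gains)
    standard_error = stdev(gains) / sqrt(n)  # sample SD of the gains over sqrt(n)
    return mean(gains) / standard_error, n - 1


# Invented CCTST-like raw scores for five students (illustration only).
pre_scores = [14, 16, 15, 18, 17]
post_scores = [16, 17, 15, 20, 19]
t_stat, df = paired_t(pre_scores, post_scores)
```

Pairing each student's two scores removes between-student variability from the comparison, which is why only students who completed both tests were analyzed.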

RESULTS

Participant Demographics

A distribution of age, gender, and ethnicity was constructed to provide context for experimental results (see Table 2). In general, demographics were consistent across CBI, mixed, and traditional groups. CBI and mixed groups had more females than the traditional, which had a near-even gender split. Most participants were Caucasian, with Asian-American, Latino/Hispanic, African-American, and Native American students constituting the remainder with decreasing frequency.

Table 2.

Participant demographics

| Sample | No. | Age <17 (%) | Age 18–19 (%) | Age 20–21 (%) | Age 22–23 (%) | Age 24–25 (%) | Age 26+ (%) | Male (%) | Female (%) |
|---|---|---|---|---|---|---|---|---|---|
| CBI | 175 | 3.9 | 52.6 | 27.9 | 7.4 | 2.2 | 6.0 | 35.4 | 64.6 |
| Mixed | 80 | 1.3 | 52.4 | 36.3 | 5.0 | 1.3 | 3.7 | 33.7 | 66.3 |
| Traditional | 82 | 2.4 | 48.8 | 36.6 | 9.7 | 1.3 | 1.2 | 54.9 | 45.1 |
| Overall | 337 | 2.5 | 51.3 | 33.6 | 7.4 | 1.6 | 3.6 | 39.8 | 60.2 |

Ethnic distribution (%)

| Sample | No. | Caucasian | Asian | Hispanic | African-American | Native American | Other* |
|---|---|---|---|---|---|---|---|
| CBI | 175 | 78.9 | 5.7 | 7.4 | 2.9 | 1.1 | 4.0 |
| Mixed | 80 | 77.5 | 8.8 | 6.3 | 2.5 | 0.0 | 4.9 |
| Traditional | 82 | 76.8 | 9.8 | 3.7 | 2.4 | 1.2 | 6.1 |
| Overall | 337 | 78.0 | 7.4 | 6.2 | 2.7 | 0.9 | 4.8 |

*Other includes the “choose not to answer” response.

Statistical Assumptions

ANCOVA tests were used to parse out effects of a number of variables on critical-thinking gains, and to more accurately and precisely analyze critical-thinking differences between CBI, mixed, and traditional groups. Error variance across groups (homogeneity of covariances) and normal distribution (normality) assumptions were evaluated using Levene's test and a frequency histogram of CCTST gain scores, respectively. Levene's test results, F(2, 334) = 0.995, p = 0.371, indicated error variance did not differ significantly across CBI, mixed, and traditional groups. A frequency distribution of critical-thinking gains (pretest/posttest difference) showed the sample approximated a standard normal curve (data not shown).
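For readers unfamiliar with Levene's test, the sketch below computes the mean-centered form of the statistic on invented data. It is an illustration of the technique only: the study's own analysis was presumably run in a statistics package, the function name is hypothetical, and the sketch does not compute a p value. The degrees of freedom for three groups totaling 337 students work out to (2, 334), matching the reported F(2, 334).

```python
from statistics import mean


def levene_w(groups):
    """Return (W, df1, df2) using absolute deviations from each group's mean."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # z_ij = |y_ij - mean of group i|
    z = [[abs(y - mean(g)) for y in g] for g in groups]
    z_means = [mean(zi) for zi in z]
    z_grand = sum(sum(zi) for zi in z) / n_total
    between = sum(len(zi) * (zm - z_grand) ** 2 for zi, zm in zip(z, z_means))
    within = sum((v - zm) ** 2 for zi, zm in zip(z, z_means) for v in zi)
    df1, df2 = k - 1, n_total - k
    return (between / df1) / (within / df2), df1, df2


# Invented gain scores: the second group is twice as spread out as the first.
w, df1, df2 = levene_w([[1, 2, 3], [2, 4, 6]])

# Degrees of freedom implied by the study's group sizes (CBI, mixed, traditional).
sizes = (175, 80, 82)
study_df = (len(sizes) - 1, sum(sizes) - len(sizes))
```

A small W relative to the F distribution with these degrees of freedom indicates that the spread of scores is similar across groups, which is the condition the analysis relies on.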

Prior critical thinking (indicated with pretest scores) was compared across CBI, mixed, and traditional groups to establish baseline thinking performance. Pretest scores were also used to determine whether students with low prior thinking skill showed greater gains than students with high prior thinking skill (regression toward the mean).

Effect of CBI on Critical-Thinking Gains

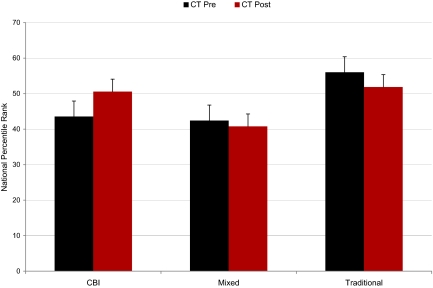

Significant pretest/posttest critical-thinking gains were observed for the CBI group (p = 0.0001), but not for mixed (p = 0.298) or traditional (p = 0.111) groups. Critical-thinking gains differed significantly between the CBI and traditional (p = 0.13) groups but not the mixed group (p = 0.076; see Figure 1). Critical-thinking gains in the CBI group were more than 2.5 times greater than those in the mixed group and nearly 3 times greater than those in the traditional group (see Table 3). National percentile equivalent scores for critical thinking indicated the CBI group gained 7.01 (44th to 51st percentile), whereas the mixed and traditional groups decreased by 1.64 (42nd to 40th percentile) and 4.17 (56th to 52nd percentile), respectively.

Figure 1.

Comparison of national percentile critical-thinking gains between CBI, mixed, and traditional groups. National percentile ranking was computed using CCTST raw scores, an equivalency scale from Insight Assessment, and a linear conversion script in SPSS. Values above columns represent net gains in percentile rank. Error bars represent standard error of the mean.

Table 3.

CBI effect on total critical-thinking gains

| Treatment | No. | CCTST pretest mean raw score | Pretest SEM | CCTST posttest mean raw score | Posttest SEM | Raw CT gain |
|---|---|---|---|---|---|---|
| CBI | 175 | 15.64 | 0.34 | 16.95 | 0.37 | 1.31* |
| Mixed | 80 | 15.49 | 0.49 | 15.09 | 0.52 | −0.40 |
| Traditional | 82 | 17.77 | 0.52 | 17.12 | 0.58 | −0.65 |
| Overall | 337 | 16.12 | 0.25 | 16.55 | 0.27 | 0.43 |

SEM indicates standard error of the mean. *Significance tested at 0.05 level.
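The arithmetic behind Table 3 can be checked with a short script. The sketch below recomputes each raw gain as posttest minus pretest and rebuilds the Overall row as a sample-size-weighted mean of the three groups; the variable names are illustrative, and the values are copied from the table.

```python
# (n, pretest mean, posttest mean) copied from Table 3.
table3 = {
    "CBI": (175, 15.64, 16.95),
    "Mixed": (80, 15.49, 15.09),
    "Traditional": (82, 17.77, 17.12),
}

# Raw gain for each group: posttest mean minus pretest mean.
gains = {group: round(post - pre, 2) for group, (_, pre, post) in table3.items()}

# Overall means weight each group mean by its sample size.
n_total = sum(n for n, _, _ in table3.values())
overall_pre = round(sum(n * pre for n, pre, _ in table3.values()) / n_total, 2)
overall_post = round(sum(n * post for n, _, post in table3.values()) / n_total, 2)
overall_gain = round(overall_post - overall_pre, 2)

print(gains)
print(n_total, overall_pre, overall_post, overall_gain)
```

The recomputed values agree with the gains and the Overall row as reported.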

Results indicated that gender, age, class standing, and academic term did not significantly influence critical-thinking outcomes. However, prior critical-thinking skill, instructor, and ethnicity significantly affected critical-thinking gains (see Table 4). Prior critical-thinking skill had the largest effect on critical-thinking gains of any variable tested, with more than 4 times the effect of instructor and over 5 times the effect of ethnicity.

Table 4.

Factors significantly affecting critical-thinking gains

| Factor | F | df | p | Power | Effect size |
|---|---|---|---|---|---|
| CBI | 3.171 | 325 | 0.043* | 0.605 | 0.019 |
| Prior critical thinking | 27.665 | 325 | 0.000* | 0.999 | 0.078 |
| Instructor | 5.594 | 325 | 0.019* | 0.655 | 0.017 |
| Ethnicity | 4.983 | 325 | 0.026* | 0.605 | 0.015 |

Prior critical-thinking skill indicated by CCTST pretest. *Significance tested at 0.05 level. Effect size represented in standard units.
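The source does not state which effect-size statistic Table 4 reports. As a hedged consistency check, the sketch below computes partial eta squared from F and the degrees of freedom, assuming an error df of 325 (the df column), 2 numerator df for the three-level treatment factor, and 1 numerator df for each other factor. These numerator df values are assumptions, not figures given in the article. Under them, the computed values round to the reported effect sizes, and the ratios of prior critical thinking to instructor and ethnicity come out at roughly 4.6 and 5.2.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its df."""
    return f_value * df_effect / (f_value * df_effect + df_error)


DF_ERROR = 325  # df column of Table 4

# factor: (F, ASSUMED numerator df, reported effect size)
table4 = {
    "CBI": (3.171, 2, 0.019),
    "Prior critical thinking": (27.665, 1, 0.078),
    "Instructor": (5.594, 1, 0.017),
    "Ethnicity": (4.983, 1, 0.015),
}

computed = {
    name: round(partial_eta_squared(f, df1, DF_ERROR), 3)
    for name, (f, df1, _) in table4.items()
}

# Relative size of the prior-skill effect, using the reported values.
prior = table4["Prior critical thinking"][2]
ratio_instructor = round(prior / table4["Instructor"][2], 1)
ratio_ethnicity = round(prior / table4["Ethnicity"][2], 1)

print(computed)
print(ratio_instructor, ratio_ethnicity)
```

Agreement under these assumptions is suggestive only; it does not confirm how the original analysis defined effect size.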

CBI, mixed, and traditional groups were subsequently divided into gender and ethnic subgroups to determine which group benefited most from each instructional method (see Tables 5 and 6). In the CBI group, female critical-thinking gains in national percentile rank were 1.3 times greater than male gains, but 5.4 times less than male gains in the mixed group and nearly 2 times less than male gains in the traditional group. The greatest critical-thinking gains for nearly all ethnicities occurred in the CBI group, with Asian/Pacific Islander, Caucasian, Other, Hispanic, and African-American students in decreasing order of performance. In the mixed and traditional groups, nearly all students showed nonsignificant decreases in critical-thinking skill, with the exception of Caucasian students, who showed very slight gains in the mixed group, and Hispanic students, who showed gains in the traditional group.

Table 5.

Critical-thinking gains by gender

| Treatment | Female No. | Female %tile | Female SEM | Male No. | Male %tile | Male SEM |
|---|---|---|---|---|---|---|
| CBI | 113 | 7.630 | 2.305 | 62 | 5.883 | 2.159 |
| Mixed | 53 | −3.477 | 2.567 | 27 | 1.959 | 4.133 |
| Traditional | 37 | −5.704 | 3.937 | 45 | −2.909 | 2.857 |
| Total | 203 | 2.300 | 1.526 | 134 | 2.140 | 1.638 |

Gains based on CCTST national percentile.

SEM refers to standard error of the mean.
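The female and male gains in Table 5 can be compared with a few lines of arithmetic. The sketch below reports the female-minus-male difference in percentile points for each group, and a female-to-male ratio only where both gains have the same sign, since a ratio of a loss to a gain is hard to interpret. The helper name `compare` is illustrative, and the script makes no claim about how the comparisons in the text were derived.

```python
# National percentile gains copied from Table 5.
table5 = {
    "CBI": {"female": 7.630, "male": 5.883},
    "Mixed": {"female": -3.477, "male": 1.959},
    "Traditional": {"female": -5.704, "male": -2.909},
}


def compare(female, male):
    """Return (female - male difference, female/male ratio or None)."""
    diff = round(female - male, 3)
    # A ratio is reported only when both gains share a sign.
    ratio = round(female / male, 2) if female * male > 0 else None
    return diff, ratio


comparison = {
    group: compare(v["female"], v["male"]) for group, v in table5.items()
}

for group, (diff, ratio) in comparison.items():
    print(group, diff, ratio)
```

In the CBI group the ratio is about 1.3 in favor of females, and in the traditional group the female decline is close to twice the male decline.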

Table 6.

Critical-thinking gains by ethnicity

| Treatment | Caucasian No. | Caucasian %tile | Caucasian SEM | Asian, Pacific Islander No. | Asian, Pacific Islander %tile | Asian, Pacific Islander SEM | Hispanic, Latino No. | Hispanic, Latino %tile | Hispanic, Latino SEM | African-American No. | African-American %tile | African-American SEM | Native American No. | Native American %tile | Native American SEM | Other* No. | Other* %tile | Other* SEM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CBI | 138 | 7.45 | 1.67 | 10 | 11.79 | 5.74 | 13 | 2.85 | 4.27 | 5 | 1.55 | 11.47 | 2 | −4.91 | 9.57 | 7 | 7.32 | 17.28 |
| Mixed | 62 | 0.11 | 2.62 | 7 | −5.19 | 6.22 | 5 | −5.65 | 4.07 | 2 | −18.41 | 16.46 | 0 | 0.00 | 0.00 | 4 | −9.18 | 8.44 |
| Traditional | 63 | −1.54 | 2.63 | 8 | −19.93 | 8.41 | 3 | 9.25 | 13.42 | 2 | −16.51 | 4.18 | 1 | −34.11 | 0.00 | 5 | −9.85 | 9.88 |

Gains based on CCTST national percentile.

SEM refers to standard error of the mean.
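Two simple checks on Table 6 are sketched below: the subgroup counts should sum to each treatment group's sample size from Table 3, and ranking the CBI subgroups by percentile gain should give the order of performance described in the text. The data are copied from the table; the dictionary layout and variable names are illustrative.

```python
# (n, national percentile gain) copied from Table 6.
table6 = {
    "CBI": {
        "Caucasian": (138, 7.45), "Asian, Pacific Islander": (10, 11.79),
        "Hispanic, Latino": (13, 2.85), "African-American": (5, 1.55),
        "Native American": (2, -4.91), "Other": (7, 7.32),
    },
    "Mixed": {
        "Caucasian": (62, 0.11), "Asian, Pacific Islander": (7, -5.19),
        "Hispanic, Latino": (5, -5.65), "African-American": (2, -18.41),
        "Native American": (0, 0.00), "Other": (4, -9.18),
    },
    "Traditional": {
        "Caucasian": (63, -1.54), "Asian, Pacific Islander": (8, -19.93),
        "Hispanic, Latino": (3, 9.25), "African-American": (2, -16.51),
        "Native American": (1, -34.11), "Other": (5, -9.85),
    },
}

# Subgroup counts per treatment group.
totals = {
    group: sum(n for n, _ in rows.values()) for group, rows in table6.items()
}

# CBI subgroups ranked from largest to smallest percentile gain.
cbi_order = sorted(table6["CBI"], key=lambda e: table6["CBI"][e][1], reverse=True)

print(totals)
print(cbi_order)
```

Several subgroups contain very few students (for example, two or fewer Native American students per group), so these rankings should be read with the reported standard errors in mind.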

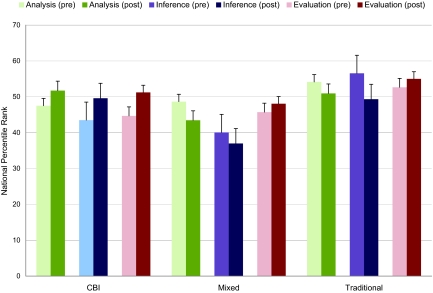

The effects of CBI on gains in the component critical-thinking skills of analysis, inference, and evaluation were also investigated. Students in the CBI group showed significant gains in inference and evaluation skill, whereas students in the traditional group showed significant decreases in inference skill (see Figure 2). Mixed group students showed no significant change in any component skill. Students from the CBI group showed 9.4 times greater analysis, 9.2 times greater inference, and 4.2 times greater evaluation skill gains than the mixed group. The CBI group also showed 7.4 times greater analysis, 13.4 times greater inference, and 4.2 times greater evaluation skill gains than the traditional group.

Figure 2.

Comparison of analysis, inference, and evaluation skills for CBI, mixed, and traditional groups. Gain scores indicated by national percentile ranking. *Significance tested at 0.05 level. Error bars based on standard error of the mean.

DISCUSSION

The purpose of this study was to discover whether CBI could elicit greater gains in critical thinking than traditional lecture/laboratory instruction in general education biology courses. The CBI group showed the only significant change in overall critical thinking, with large gains in national percentile rank compared with mixed and traditional groups. The CBI group also showed the only significant gains in inference and evaluation skills, whereas mixed and traditional groups showed either no change or significant declines in inference skill. Small evaluation skill gains were observed for mixed and traditional groups, although CBI evaluation gains were more than 4 times greater. Females, who showed larger critical-thinking decreases than males in traditionally taught courses, outperformed males when CBI was used. CBI also produced greater gains in critical thinking than traditional methods for nearly all ethnic groups. Collectively, these results indicate that CBI students outperform traditionally taught students and show greater gains in overall critical thinking and analysis, inference, and evaluation skill.

Students in the traditional group showed the largest critical-thinking declines even though they began the term with the highest prior critical-thinking skill. Although not significant in this study, this negative trend has been observed in nearly every traditionally taught biology course we have investigated to date. Although it is not entirely clear why critical-thinking skills decrease, it is reasonable to suggest that traditional methods are less effective because they are not aligned with how students learn science most effectively (Bransford and Donovan, 2005). Traditional methods typically do not build from students' prior knowledge, are disconnected from how science is done in the real world, and generally do not promote student awareness of how they learn (Bransford and Donovan, 2005). In contrast, nontraditional methods like CBI may be more effective because they build from what students already know, allow them to experience authentic scientific research, and require them to reflect and improve in ways that increase self-awareness and metacognition. The mental constructs produced from experiential methods like CBI are more likely to promote critical thinking (Wesson, 2002; Miri et al., 2007).

In addition to CBI, prior critical-thinking skill and instructor significantly affected critical-thinking performance. Although some overlap between method and instructor was expected, instructor was included as a standalone variable in an attempt to more specifically isolate CBI effects on critical-thinking gains when multiple faculty used the same method. Prior critical-thinking skill and instructor have significantly affected critical-thinking gains in other studies as well (Quitadamo and Kurtz, 2007), with the former being a major determinant of future critical-thinking gains. These results further underscore the need for critical-thinking skills to be explicitly taught (Miri et al., 2007), not only in higher education, but throughout the K–20 continuum, so that students are provided with equitable opportunity and necessary tools for learning success. Teaching these skills during formative years will serve to decrease gaps in critical-thinking performance as students continue their education.

It is interesting to note that nearly all ethnicities experienced greater critical-thinking gains in the CBI group compared with the mixed and traditional groups. Presumably, this benefit comes from using an instructional method that promotes student-driven questions and research. By precluding an instructional top-down model of what is and is not important to individuals, students are encouraged to choose their own area of research. By engaging them in a rigorous scientific research process, these students learn the values and beliefs of science without a particular viewpoint being imposed on them. This in turn may encourage greater openness to learning, which ultimately manifests in greater thinking skill. However, this interpretation is speculative, and further research is necessary to uncover cause and effect relationships between CBI, ethnicity, and critical thinking.

It was also interesting to find that CBI helped females to erase critical-thinking deficits seen in traditionally taught course sections. Perhaps the collaborative nature of the CBI model and increased connection to community issues encouraged women to take a more active role in STEM learning, and thereby gave them opportunity to practice critical-thinking skill to a greater extent than traditional drill and skill instruction. Small group collaboration, which was used to some extent in CBI, mixed, and traditional groups, is known to improve attitude and promote student achievement in STEM courses (Springer et al., 1999) but less is known about its effects on critical thinking. Despite the use of small groups in both the mixed and traditional groups, critical-thinking skill gains did not occur. One interesting project would be to consider male only, female only, and mixed collaboration group effects on critical-thinking gains. More research is required to tease out the particular elements of CBI that caused gender differences.

Although content knowledge was not assessed using a valid and reliable instrument, it is important to note that CBI instructors “covered” the same amount of lecture content as they had in previous, traditionally taught courses. The perceived lack of content knowledge is a common criticism of teaching methods that focus on thinking process, although recent studies have shown no content knowledge penalties manifest (Sundberg, 2003). Future iterations of this research will include a standardized content knowledge assessment to further clarify the relationship between content knowledge and thinking-skills development.

Faculty considering whether to use CBI may wonder about their specific role, and if time and energy spent implementing the method will produce better scientists. Aside from engaging in meaningful research and learning to think critically, it is also important that students learn to appreciate the values and beliefs inherent in the scientific enterprise. Faculty played a pivotal role in CBI, modeling a range of good scientific behaviors from how to problem solve and create an effective research design, to drawing conclusions based on evidence. A clear emphasis was placed on the value of discovery, not just rote facts. Faculty trying CBI for the first time may also find students tend to become increasingly frustrated over the first 3–4 wk of the term because they are asked to do more than memorize. Whereas others have found students may retain skeptical attitudes of new instructional methods for some time (Sundberg and Moncada, 1994), our results indicate CBI students rapidly become acclimated after approximately 4 wk as research projects begin and beneficial relationships between investigative science and critical thinking become more clear.

Learning how to use critical thinking as the course framework (Pukkila, 2004) and clearly connecting this to the scientific method (Miri et al., 2007) appears to be a major determinant in the success of the CBI model. This may explain why students in the mixed (and traditional) groups did not show critical-thinking gains. Major differences between the CBI and mixed groups were: (1) the time that course instructors spent in lab modeling knowledge, skills, and behaviors of investigative science, (2) the ability of faculty and teaching assistants to ask Socratic questions, and (3) the extent to which instructors integrated the fundamental elements of CBI, including undergraduate research, case studies, and lecture content discussion. In and of themselves, adding an inquiry laboratory component and talking about critical thinking without building it into the course are unlikely to produce meaningful critical-thinking gains. Although not tested explicitly, it is possible that the tighter integration of lecture and laboratory (Sundberg, 2003) in the CBI group could have accounted for some of the critical-thinking gains.

Some practical considerations for adopting the CBI model include the requirements of greater collaboration with colleagues, clearly defining for students what kinds of critical-thinking behaviors and skills are expected, and developing explicit examples of how critical thinking relates to the scientific process (Miri et al., 2007). Time spent evaluating and providing meaningful feedback on research proposals, journals, and posters is another potential concern. In this study, implementation of CBI did not take more time and effort per se; rather, it required faculty to reconceptualize how they spent their instructional time and adopt different primary objectives. For example, students were informed ahead of time that each member of their research group would receive the lowest common grade for their proposal or poster. As a result, they tended to self-regulate group behavior and productivity and became less tolerant of noncontributors. Rubric evaluations, which would be time-consuming if faculty completed one for every student, were provided for each research group. Group members then discussed strengths and weaknesses of their submission and worked collaboratively to address them. Faculty and teaching assistants must understand how the CBI model is different, why it is being used, and what they can expect from students (Sundberg et al., 2000). Training on CBI objectives, how to evaluate student work using a rubric, and reinforcement of the Socratic and just-in-time teaching methods of CBI are required for successful implementation. It is also important that support staff be aware that students will be making a variety of requests for equipment and materials in the coming weeks and that these requests are somewhat unpredictable.

Future Directions

The CBI model has worked repeatedly for faculty included in this study. CBI has created a buzz among students, which has prompted faculty at our and other institutions to ask to be taught the model and corresponding techniques so they can use it in their own courses. At this point, all faculty teaching nonmajors biology at our institution use the CBI model, partly because students better learn how to think, and partly because it is more fun to teach courses that involve discovery and research. However, it is not clear how extensible this model is to other disciplines. Further study would involve implementing CBI in nonmajors STEM courses such as chemistry or physics. Two of the authors have implemented CBI in majors cell biology, genetics, and microbiology courses, with similar benefits.

CONCLUSIONS

The results of this study are encouraging for faculty who seek better alternatives than traditional lecture and laboratory methods for promoting critical thinking in STEM general education courses. Based on previous literature and the results presented here, we conclude that CBI helps improve critical-thinking skill in general education biology, and should be considered by faculty searching for better ways to improve STEM teaching and learning. It remains to be seen whether this model is extensible to other science disciplines, and further study is necessary to evaluate the widescale suitability of the CBI model. As a research-based instructional method, CBI has the potential to improve essential learning outcomes like critical thinking, and through this process enhance the cognitive performance and competitiveness of the general population. As we all search for better ways to improve STEM teaching and learning, faculty should consider CBI.

ACKNOWLEDGMENTS

The authors acknowledge the generous financial support provided by the Central Washington University Office of the Provost and the Office of the Associate Vice President for Undergraduate Studies.

REFERENCES

- American Association for the Advancement of Science (AAAS) Science for All Americans. Washington DC: 1989. A Project 2061 Report on Literacy Goals in Science, Mathematics, and Technology.

- AAAS. Benchmarks For Science Literacy. New York: Oxford University Press; 1993.

- Arwood L. Teaching cell biology to nonscience majors through forensics, or how to design a killer course. Cell Biol. Educ. 2004;3:131–138. doi: 10.1187/cbe.03-12-0023.

- Association of American Colleges and Universities. Liberal Education Outcomes: A Preliminary Report on Student Achievement in College. Washington, DC: 2005.

- Blumenfeld P. C., Soloway E., Marx R. W., Krajcik J. S., Guzdial M., Palincsar A. Motivating project-based learning: sustaining the doing, supporting the learning. Educ. Psychol. 1991;26:369.

- Boyer E. L. The Boyer Commission on Educating Undergraduates in the Research University, Reinventing Undergraduate Education: A Blueprint for America's Research Universities. New York: SUNY, Stony Brook; 1998.

- Bransford J., Donovan S., editors. How Students Learn: Science in the Classroom. Washington, DC: National Academies Press; 2005.

- Brookfield S. D. Developing Critical Thinkers: Challenging Adults to Explore Alternative Ways of Thinking and Acting. San Francisco: Jossey-Bass; 1987.

- Bruffee K. A. Collaborative learning and the “Conversation of mankind.” Coll. Engl. 1984;46:635–653.

- Building Engineering and Science Talent. The talent imperative: meeting America's challenge in science and engineering ASAP. [accessed 20 June 2007];2003 http://www.bestworkforce.org/PDFdocs/BESTTalentlmperativeFINAL.pdf.

- Bybee R. W., Fuchs B. Preparing the twenty-first century workforce: a new reform in science and technology education. J. Res. Sci. Teach. 2006;43:349–352.

- Campbell D. T., Stanley J. C. Experimental and Quasi-experimental Designs for Research. Boston, MA: Houghton Mifflin Company; 1963.

- Carnevale A. P. American Society for Training and Development. Workplace Basics: The Essential Skills Employers Want. San Francisco: Jossey-Bass; 1990.

- Colburn A. An inquiry primer. Sci. Scope. 2000;23:42–44.

- Collier K. G. Peer-group learning in higher education: the development of higher-order skills. Stud. High. Educ. 1980;5:55–61.

- Council on Competitiveness. Innovate America: thriving in a world of challenge and change. [accessed 20 July 2007];2005 http://innovateamerica.org/webscr/report.asp.

- DebBurman S. K. Learning how scientists work: experiential research projects to promote cell biology learning and scientific process skills. Cell Biol. Educ. 2002;1:154–172. doi: 10.1187/cbe.02-07-0024.

- Dewey J. How We Think: A Restatement of the Relation of Reflective Thinking to the Educational Process. Lexington, MA: Heath; 1933.

- DiPasquale D. M., Mason C. L., Kolkhorst F. W. Exercise in inquiry: critical thinking in an inquiry-based exercise physiology laboratory course. J. Coll. Sci. Teach. 2003;32:388–393.

- Elder L., Paul R. The Miniature Guide on the Art of Asking Essential Questions for Students and Teachers. Dillon Beach, CA: Foundation for Critical Thinking; 2004.

- Ennis R. H. A logical basis for measuring critical thinking skills. Educ. Leader. 1985;43:44–48.

- Ernst J., Monroe M. The effects of environment-based education on student's critical thinking skills and disposition toward critical thinking. Environ. Educ. Res. 2006;12:429–443.

- Facione P. A. Experimental Validation and Content Validity. Millbrae, CA: Insight Assessment; 1990. The California Critical Thinking Skills Test: College level. Technical report #1.

- Facione P. A. American Philosophical Association. Research Findings and Recommendations. Millbrae, CA: Insight Assessment; 1990. Critical Thinking: A Statement of Expert Consensus for Purposes of Educational Assessment and Instruction.

- Facione P. A., Facione N. C., Giancarlo C. A. Test Manual: The California Critical Thinking Disposition Inventory. Millbrae, CA: Insight Assessment; 1992.

- Facione P. A., Facione N. C. Insight Assessment. [accessed 30 June 2004];Test of everyday reasoning. 2004 www.insightassessment.com/test-ter.html.

- Facione P. A. Critical Thinking: What It Is and Why It Counts: 2007 Update. Millbrae, CA: Insight Assessment; 2007.

- Faust J. L., Paulson D. R. Active learning in the college classroom. J. Excel. Coll. Teach. 1998;9:3–24.

- Giancarlo C. A., Facione P. A. A look across four years at the disposition toward critical thinking among undergraduate students. J. Gen. Educ. 2001;50:29–55.

- Hathaway R. S., Nagda B. A., Gregerman S. R. The relationship of undergraduate research participation to graduate and professional education pursuit: an empirical study. J. Coll. Student. Dev. 2002;43:614–631.

- Herreid C. F. Case studies in science - a novel method of science education. J. Coll. Sci. Teach. 1994;23(4):221–229.

- Herreid C. F. Can case studies be used to teach critical thinking? J. Coll. Sci. Teach. 2004;33(6):12–14.

- Herreid C. F. The interrupted case method. J. Coll. Sci. Teach. 2005a;35(2):4–5.

- Herreid C. F. Mom always liked you best: examining the hypothesis of parental favoritism. J. Coll. Sci. Teach. 2005b;35(2):10–14.

- Holmes J., Clizbe E. Facing the twenty-first century. Bus. Educ. Forum. 1997;52:33–35.

- Howard D. R., Miskowski J. A. Using a module-based laboratory to incorporate inquiry into a large cell biology course. Cell Biol. Educ. 2005;4:249–260. doi: 10.1187/cbe.04-09-0052.

- Huard M. Service-learning in a rural watershed, Unity College and Lake Winnecook: a community perspective. J. Contemp. Water. Res. 2001;119:60–71.

- Hunter A.-B., Laursen S. L., Seymour E. Becoming a scientist: the role of undergraduate research in students' cognitive, personal, and professional development. Sci. Educ. 2007;91:36–74.

- Jones E. A., Hoffman S., Moore L. M., Ratcliff G., Tibbets S., Click B., III. Final project report. University Park, PA: U.S. Department of Education, Office of Educational Research and Improvement; 1995. National assessment of college student learning: identifying college graduates' essential skills in writing, speech and listening, and critical thinking. Report nr NCES-95–001.

- Jones G. M., Carter G. Small groups and shared constructions. In: Mintzes J. J., Wandersee J. H., Novak J. D., editors. Teaching Science for Understanding: A Human Constructivist View. San Diego: Academic Press; 1998. pp. 261–279.

- Kardash C. M. Evaluation of an undergraduate research experience: perceptions of undergraduate interns and their faculty mentors. J. Educ. Psychol. 2000;92:191–201.

- Kinkel D. H., Henke S. E. Impact of undergraduate research on academic performance, educational planning, and career development. J. Nat. Res. Life Sci. Educ. 2006;35:194–201.

- Kolb D. A. Experiential Learning: Experience as the Source of Learning and Development. Upper Saddle River, NJ: Prentice Hall; 1984.

- Lopatto D. Survey of undergraduate research experiences (SURE): first findings. Cell Biol. Educ. 2004;3:270–277. doi: 10.1187/cbe.04-07-0045.

- Magnussen L., Ishida D., Itano J. The impact of the use of inquiry-based learning as a teaching methodology on the development of critical thinking. J. Nurs. Educ. 2000;39:360–364. doi: 10.3928/0148-4834-20001101-07.

- Miri B., Ben-Chaim D., Zoller U. Purposely teaching for the promotion of higher-order thinking skills: a case of critical thinking. Res. Sci. Educ. 2007;37:353–369.

- National Academy of Sciences, National Academy of Engineering, and Institute of Medicine. Rising Above the Gathering Storm: Energizing and Employing America for a Brighter Economic Future. Washington, DC: National Academies Press; 2005.

- National Research Council (NRC) National Science Education Standards. Washington, DC: National Academies Press; 1996.

- NRC. How People Learn: Brain, Mind, Experience, and School. Washington, DC: National Academies Press; 2000.

- NRC. Bio 2010, Transforming Undergraduate Education for Future Research Biologists. Washington, DC: National Academies Press; 2003.

- National Science Board. Science, Technology, Engineering, and Mathematics Education System. Washington, DC: National Science Foundation; 2007. A National Action Plan for Addressing the Critical Needs of the U.S.

- Novak G., Patterson E., Gavrin A., Christian W. Just-in-Time Teaching: Blending Active Learning with Web Technology. Upper Saddle River, NJ: Prentice Hall; 1999.

- Porta A. R. Making a cell physiology teaching laboratory more like a research laboratory. Am. Biol. Teach. 2000;62:341–344.

- Project Kaleidoscope. Transforming America's Scientific and Technological Infrastructure: Recommendations for Urgent Action. Washington, DC: National Science Foundation; 2006.

- Pukkila P. J. Introducing student inquiry in large introductory genetics classes. Genetics. 2004;166:11–18. doi: 10.1534/genetics.166.1.11.

- Quitadamo I. J., Kurtz M. J. Learning to improve: using writing to increase critical thinking performance in general education biology. CBE Life Sci. Educ. 2007;6:140–152. doi: 10.1187/cbe.06-11-0203.

- Russell S. H., Hancock M. P., McCullough J. Benefits of undergraduate research experiences. Science. 2007;316:548–549. doi: 10.1126/science.1140384.

- Sclove R. E. Putting science to work in communities. Chron. High. Educ. 1995;41:B1–B3.

- Seymour E., Hunter A.-B., Laursen S. L., DeAntoni T. Establishing the benefits of research experiences for undergraduates in the sciences: first findings from a three-year study. Sci. Educ. 2004;88:493–534.

- Smith A. C., Stewart R., Shields P., Hayes-Klosteridis J., Robinson P., Yuan R. Introductory biology courses: a framework to support active learning in large-enrollment introductory science courses. Cell Biol. Educ. 2005;4:143–156. doi: 10.1187/cbe.04-08-0048.

- Springer L., Donovan S. S., Stanne M. E. Effects of small-group learning on undergraduates in science, mathematics, engineering, and technology: A meta-analysis. Rev. Educ. Res. 1999;69:21–51.

- Sundberg M. D. Reports of the National Center for Science Education. Emporia, KS: National Center for Science Education; 2003. Strategies to help students change naive alternative conceptions about evolution and natural selection.

- Sundberg M. D., Armstrong J. E., Dini M. L., Wischusen E. W. Some practical tips for instituting investigative biology laboratories. J. Coll. Sci. Teach. 2000;29:353–359.

- Sundberg M. D., Moncada G. J. Creating effective investigative laboratories for undergraduates. Bioscience. 1994;44:698–704.

- Tsui L. A review of research on critical thinking. Association for the Study of Higher Education annual meeting paper; November 5–8; Miami, FL. 1998.

- Tsui L. Fostering critical thinking through effective pedagogy: evidence from four institutional case studies. J. High. Educ. 2002;73:740–763.

- United States Department of Education. National Goals for Education. Washington, DC: 1990.

- Wesson K. A. [accessed 30 June 2007];Memory and the brain: how teaching leads to learning. 2002 www.nais.org/publications/ismagazinearticle.cfm?ltemnumber=144290&sn.ltemNumber=145956&tn.ltemNumber=145958.
