Abstract

Many tasks have been used to probe human directional knowledge, but relatively little is known about the comparative merits of different means of indicating target azimuth. Few studies have compared action-based versus non-action-based judgments for targets encircling the observer. This comparison promises to illuminate not only the perception of azimuths in the front and rear hemispaces, but also the frames of reference underlying various azimuth judgments, and ultimately their neural underpinnings. We compared a response in which participants aimed a pointer at a nearby target, with verbal azimuth estimates. Target locations were distributed between 20 and 340 deg. Non-visual pointing responses exhibited large constant errors (up to −32 deg) that tended to increase with target eccentricity. Pointing with eyes open also showed large errors (up to −21 deg). In striking contrast, verbal reports were highly accurate, with constant errors rarely exceeding +/− 5 deg. Under our testing conditions, these results are not likely to stem from differences in perception-based vs. action-based responses, but instead reflect the frames of reference underlying the pointing and verbal responses. When participants used the pointer to match the egocentric target azimuth rather than the exocentric target azimuth relative to the pointer, errors were reduced.

Keywords: open loop pointing, spatial cognition, perception/action, perceived direction

Tasks that probe observers’ knowledge of the directional relationships between locations in the environment have been used to address a wide range of perceptual and cognitive phenomena. These tasks have been used to study visual space perception (e.g., Foley 1977; Foley et al 2004; Fukusima et al 1997; Kelly et al 2004; Koenderink et al 2003), self-motion sensing (e.g., Israël et al 1996; Philbeck et al 2006), spatial memory (Holmes and Sholl 2005; Mou et al 2004; Waller and Hodgson 2006; Wang and Spelke 2000), spatial updating (e.g., Farrell and Robertson 2000; Loomis et al 1998; Presson and Montello 1994; Rieser et al 1986), and other aspects of spatial cognition (e.g., Sadalla and Montello 1989; Shelton and McNamara 2004a; Woodin and Allport 1998). The wide range of research paradigms involving directional judgments reflects the crucial importance of directional knowledge as a component of both short-term and longer-term spatial representations. These representations, in turn, are crucial for effectively controlling action and navigation in the environment. A variety of behavioral measures has been used to assess perception or memory of a target’s direction. Some examples, drawn from the literature above, include aiming the eyes, head, or hands at a target; turning the body to face a target; manipulation of a dial, pointer, or joystick; drawing an angle or a map; and giving verbal estimates (Haber et al 1993; Montello et al 1999).

The choice of response measure is an important consideration, because, under some circumstances, one may obtain a very different pattern of results depending upon which response type is used. A variety of studies have focused on comparing action-based responses (those that involve some motor activity on the part of the observer) with responses that do not require action (e.g., Brenner and Smeets 1996; Bridgeman et al 1981; Franz et al 2001; Haffenden and Goodale 1998; Haun et al 2005; Wraga 2003). As we will discuss shortly, a particularly active area of recent inquiry has concerned the extent to which behavioral evidence may be found of anatomically segregated neural pathways in the neocortex. When action-based and non-action-based responses are found to differ in these studies, it is not unusual for the action-based response to reflect more accurately the physical stimulus arrangement (Creem et al 2001). For example, certain action-based responses have been found to be less susceptible to visual size illusions than non-action-based responses (e.g., Haffenden and Goodale 1998). In the context of measuring directional knowledge, however, the utility of action-based responses is less clear. On the basis of the superior accuracy of action-based responses in some situations, one might expect motoric responses to be more accurate than non-motoric ones when measuring directional knowledge as well. Testing this prediction is a pressing concern, because action-based responses are commonly used as measures of directional knowledge. If they are found to provide a poor estimate of perceived or remembered target azimuths, this could call for a re-examination of previous work.
Non-motoric responses have been shown to result in lower errors than action-based measures in at least some situations (e.g., when an imagined heading differs from one’s physical heading; de Vega and Rodrigo 2001; May 2004; Wang 2004; Wraga 2003), but the conditions under which this holds true remain poorly understood. In addition, action-based and non-action-based measures do not necessarily share the same underlying frame of reference, and therefore they could be responsive to distinctly different kinds of spatial knowledge even though they might initially seem to measure the same psychological construct.

There are many possible action-based and non-action-based responses, and even for specific response types, the motoric versus non-motoric distinction must be considered a composite of differences along multiple dimensions. Examining all possible response types and all potentially relevant features of the motoric / non-motoric distinction is clearly beyond the scope of this article. Instead, to address the aforementioned issues, we take the approach of comparing a non-action-based method that is especially straightforward (verbal report of target direction), with an action-based method that is especially common in the spatial cognition literature, namely, manipulation of a pointing device so that it points at the target¹. This approach provides a basis for choosing between these two particular measures in subsequent work, for interpreting the results of past work using these measures, and for investigating other action-based and non-action-based indications of target direction in the future.

Few studies have directly compared these two methods in the domain of judging egocentric target azimuth (i.e., target direction in a horizontal plane, relative to an observer’s facing direction). One of the most extensive studies of this kind is that of Haber et al. (1993), who compared 9 methods for indicating the azimuth of auditory sources in a population of blind individuals. The possible target locations were arrayed in the front hemispace and the targets emitted sound while participants generated each type of response. Under these conditions, the authors found that body-based measures (e.g., pointing with the nose or a finger) resulted in the lowest absolute (unsigned) error, with measures such as drawing and verbal report showing the highest error. Error for manual pointing with a dial was intermediate. More recently, Montello et al. (1999) compared manual pointing with a dial versus rotating the body to face the target in sighted observers. This study showed that both of these body-based measures resulted in approximately the same amount of absolute error; however, manual pointing exhibited more constant (signed) error, particularly when the responses were made without vision. For our purposes, this study is notable because it suggests that manual pointing is not necessarily accurate in an azimuth perception task, but instead exhibits some systematic bias. Haun, Allen and Wedell (2005) compared verbal reports of target azimuth with manual judgments, in which participants aimed a long wooden dowel at a target placed at azimuths of 0 to 90 deg relative to straight ahead. Both verbal and pointing judgments exhibited some constant error within the tested azimuth range; although neither response type resulted in mean errors larger than 10 deg, biases in verbal reports were somewhat larger than those in pointing judgments. The authors attributed the biases to categorization processes in spatial memory.

Manual pointing and verbal reports clearly differ along more dimensions than simply the involvement of the motor system. One potentially relevant dimension concerns the neural pathways that might control each response. At least in some situations, responses requiring a motor action have been found to be more accurate than non-action-based responses (e.g., Bridgeman 1999; Haffenden and Goodale 1998; Proffitt et al 2001). These differences in action-based and non-action-based responses have been interpreted by some as behavioral evidence of separate neural pathways underlying the two response types, with one neural stream being specialized for the visual control of actions and another stream being specialized for visual object recognition and presumably other forms of conscious perception (Creem and Proffitt 2001; Milner and Goodale 1995). Thus, in some situations, verbal / pointing differences may arise due to differences in the underlying spatial representations controlling each response type. However, when action-based indications of target location are performed without on-line visual guidance after brief delays, there is evidence that these responses are primarily controlled by the neural stream specialized for conscious perception, rather than the visuomotor control stream (Goodale et al 1994; see also Creem and Proffitt 1998). This means that if participants point without vision, or even if they can see the pointer but not the target, verbal / pointing differences are not likely to be due to differential engagement of the two visual streams. Importantly, both of these conditions are common in spatial cognition studies investigating spatial memory (e.g., Haun et al 2005; Holmes and Sholl 2005; Philbeck et al 2001; Shelton and McNamara 2004b). We use non-visual pointing in Experiments 1a, 1b, and 3, below, and visually-guided pointing in Experiment 2. To some extent, these issues overlap with ideas expressed by Huttenlocher et al. (1991), who described a category-adjustment model of spatial memory. In this model, spatial locations are encoded in either a short-lived, precise representation or a more enduring, categorical representation. Location judgments are increasingly influenced by the categorical representation as the short-lived, precise representation becomes more uncertain. Thus, depending on the specific methodologies used in a given experiment, motoric and non-motoric responses could vary along a fine-grained vs. categorical representation dimension as well. Responding without vision after a short delay could tend to favor control by the categorical representation, for both verbal and manual judgments (but see Haun et al., 2005).

Another potentially relevant aspect of the action-based vs. non-action-based distinction between pointing and verbal responses is that aiming a pointer requires a complex set of transformations to translate the visually perceived location (or the remembered location) into a motoric response (Soechting and Flanders 1992). Physical properties of the torso, arm and hand introduce mechanical constraints that might bias manual pointing responses without the participant’s knowledge. Verbal responses are not subject to these mechanical constraints.

One more difference is that pointing is a more direct response, while verbal reports are more abstract. Pointing uses a spatial medium to indicate a spatial property of the physical world, and this spatial medium is embedded in, and aligned with, the physical world (Allen and Haun 2004). By contrast, assigning a verbal label to the perceived target azimuth arguably requires somewhat more abstraction, and this may introduce additional noise and/or bias into verbal responses.

Yet another difference between manual pointing and verbal indications of azimuth is that verbal responses must be produced in discrete units (e.g., degrees), whereas manual pointing allows for a more continuous range of responses, limited only by the precision of the measurement device. Thus, a possible drawback of verbal reports is their potential to underestimate an observer’s true sensitivity to stimulus changes due to the relative coarseness of the verbal units that observers might select.

Perhaps the most important difference between manual pointing and verbal responses concerns the frames of reference underlying the two response types. Verbal estimates are typically referenced to the observer’s body-centered (or “egocentric”) heading. By contrast, aiming a pointer at an object is a more exocentric task, in the sense that both the pointer and the object are displaced from the observer’s body. Thus, there is a difference between the object direction relative to the pointing device and the egocentric direction relative to the observer. This directional difference, called parallax, becomes increasingly pronounced as targets are brought nearer to the observer. Although instructions to “point to the target” are straightforward and may initially seem sufficient, they may leave observers unsure about which frame of reference to use, and this could result in systematic errors, particularly for relatively near targets. Observers may also have trouble adopting a frame of reference that is not centered on their own viewpoint. Most striking, however, is research by Cuijpers and colleagues (Cuijpers et al 2000, 2002), who have found large biases when observers attempt to visually align a rod with nearby targets via remote control. The researchers attribute these errors to biases in the visual perception of object alignment. Aiming a pointer at a target, then, may be treated by participants as an exocentric alignment task, and therefore may be affected by these large biases in visually perceived alignment.

In the studies below, we addressed these issues by extensively comparing manual pointing and verbal report as means of indicating target azimuth. Experiment 1a compares these two response types for a wide range of target azimuths; Experiment 1b concentrates on a smaller range of closely-spaced target azimuths to assess the ability of observers to respond to small changes in target direction using each method. In both experiments, we presented observers with a single target in a natural indoor environment, and responses were made after relatively brief delays (approximately 3 – 5 sec). This provided a multitude of cues for perception of target azimuth and minimized memory-related errors. We aimed to create conditions in which the represented target azimuth underlying each response would be accurate with respect to the physical azimuth, or at least nearly so, so that we could examine the properties of the two responses under near-optimal naturalistic conditions. We found large, systematic errors in the pointing responses, but much smaller errors in the verbal responses. In Experiment 2, we obtained manual pointing responses under full vision of the target, testing environment, and pointing apparatus. This allowed us to evaluate the impact of the brief delays before pointing and the lack of visual feedback during pointing. Providing vision during the response resulted in decreased errors, but sizeable biases remained. In Experiment 3, observers pointed without vision, but used the pointer to indicate the egocentric target azimuth, rather than performing the exocentric task of aiming the pointer at the target. Pointing errors were reduced in this situation, particularly for targets in the rear hemispace. 
Taken together, the experiments support the view that the large differences in verbal and pointing responses in Experiments 1a and 2 are in fact due primarily to differing frames of reference underlying the two response types, rather than to differences in perception-based vs. action-based responses.

EXPERIMENT 1a

Our approach in this study was to compare verbal estimates of target azimuth with non-visual, manual pointing using a dial mounted in front of the participant. The manual responses were performed without vision and were executed after a brief delay following the occlusion of vision (3 – 5 s; see Methods, below). Although errors may be increased somewhat under these conditions, these features are thought to yield responses that are predominantly controlled by conscious perceptual representations rather than by largely unconscious, on-line visuomotor representations (Bridgeman 1999; Milner and Goodale 1995). Verbal estimates of azimuth are likewise assumed to be controlled primarily by conscious perception. This manipulation, then, is crucial for equating as much as possible the cortical spatial representation that forms the basis of the two response types.

We will compare verbal reports and manual pointing in terms of three types of error: (1) Constant error, which we calculated as the response value minus the physical target azimuth (see below); this provides an indication of an overall tendency to overshoot or undershoot a target value. This type of error is sometimes called “bias”, and we will use the latter term synonymously with “constant error”. (2) We will also compare absolute (unsigned) error. Because constant error retains the sign of the error term, positive and negative errors can cancel out, thereby potentially underestimating the total amount of error. To the extent that constant errors are predominantly positive or negative, absolute and constant errors will tend to yield similar results. (3) Finally, we will also report within-subject variable error, as measured by the within-subject standard deviations across multiple repetitions per condition. This provides a measure of response consistency, with high error indicating low consistency and vice-versa.
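To make the three measures concrete, the following Python sketch computes them for the repeated responses in a single condition. It is an illustration only: the function name is hypothetical, and the use of the sample (n − 1) standard deviation is an assumption, since the text does not specify which form of the standard deviation was used.

```python
import statistics

def error_measures(responses, target_azimuth):
    """Return (constant, absolute, variable) error in deg for one condition.

    responses: repeated responses (deg) to the same target.
    target_azimuth: physical target azimuth (deg).
    """
    # Signed error per trial: response minus physical azimuth
    signed = [r - target_azimuth for r in responses]
    constant = statistics.mean(signed)                   # bias; signs can cancel
    absolute = statistics.mean([abs(e) for e in signed])  # unsigned error
    # Assumption: sample SD (n - 1 denominator) across repetitions
    variable = statistics.stdev(responses)
    return constant, absolute, variable
```

For responses of 80 and 100 deg to a 90 deg target, the signed errors cancel (constant error of 0) while the absolute error is 10 deg, which illustrates why both measures are reported.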

On each trial, participants viewed a small target at some eccentricity relative to straight ahead and then covered their eyes and indicated the target azimuth using one of three methods. One method involved attempting to manually rotate a pointer without vision so that it lined up with the target; the second and third methods involved giving a verbal estimate of the target azimuth, either in clock-face directions or in degrees. Introspectively, we felt that verbal / degree estimates in the 90 – 360 range might be relatively unfamiliar and difficult to apply. If true, unfamiliarity with the numerical scale might introduce additional biases and/or variability in verbal degree estimates for certain target azimuths. To explore this issue, approximately half of the participants in Verbal / Degree trials were instructed to respond using a 0 – 360 deg range. The remaining participants gave Verbal / Degree estimates using a 0 – 90 deg range, coupled with an indication of the target’s quadrant location (e.g., “right front”, “back left”, etc.).

Method

Participants

Twenty-one people (16 females, 5 males) participated in this study in exchange for course credit. Their mean age was 18.7 years (range: 17 – 21). All reported having normal vision in both eyes and three reported being left-handed. We did not attempt to equally balance the number of female and male participants, as previous work has found no reliable sex-related differences in a very similar task, involving manual azimuth judgments (Montello et al 1999).

Design

The target (a small black cylinder, 1 cm diameter × 0.7 cm tall) could appear at 20, 40, 60, 80, 100, 110, 120, 130, 140, 150, 160 or 170 deg to the left or right of the participants’ initial facing direction, or at 180 deg. The target appeared somewhat below eye level on a table that encircled the participant, at a distance of approximately 1 m. Each possible azimuth was sampled twice for each of the three response types (Pointing, Verbal / Degrees, Verbal / Clock-Face). The number of repetitions per condition was relatively small, and this might have the effect of providing a poor estimate of the within-subject variability, but, as we will see, this design provides a clear characterization of the response patterns across a wide range of azimuths. The presentation order was fully randomized for each participant, for a total of 150 trials. Four males and 7 females gave Verbal / Degree estimates using a 0 – 360 deg range, while the rest of the participants responded using the 0 – 90 deg “quadrant” format in Verbal / Degree trials. The design for these two groups was otherwise identical. We did not analyze for gender differences.
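The trial count follows from the design: 12 azimuths on each side plus the 180 deg target gives 25 locations, each sampled twice for each of three response types. A minimal Python sketch of such a trial list is given below; the function name, the tuple layout, and the `seed` parameter are hypothetical conveniences, not a description of how the original trial orders were generated.

```python
import random
from itertools import product

AZIMUTHS = [20, 40, 60, 80, 100, 110, 120, 130, 140, 150, 160, 170]
RESPONSE_TYPES = ["Pointing", "Verbal / Degrees", "Verbal / Clock-Face"]
REPETITIONS = 2

def build_trials(seed=None):
    """Return a shuffled list of (side, azimuth, response_type) trials."""
    # 12 azimuths on each side, plus the single target directly behind
    targets = [(side, az) for side in ("left", "right") for az in AZIMUTHS]
    targets.append(("rear", 180))
    trials = [
        (side, az, resp)
        for (side, az), resp, _ in product(targets, RESPONSE_TYPES, range(REPETITIONS))
    ]
    random.Random(seed).shuffle(trials)  # fully randomized order
    return trials
```

Calling `build_trials()` yields 150 trials, 50 for each response type.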

Apparatus

The experiment took place in a well-lit 6 m × 6 m room. This provided participants with multiple visual cues for localizing the target, as well as contextual information about how the testing location was situated in the room. Participants sat in a chair placed in the center of a table, 1.52 m square and 0.76 m high, which had a circular hole (0.76 m diameter) cut in the center (see Figure 1). The table was situated in one corner of the room and oriented so that its sides were parallel to the room walls. During testing, the participant faced one of the nearby walls. A 1.52 m diameter circle was inscribed on the table top, graduated in degrees and labeled in 5 deg increments. These markings were used to position the target. Between trials, the markings were covered by an annulus made of white medium density fiberboard; while the participant was blindfolded, experimenters lifted the white boards to find the correct location for the stimulus, then covered the markings. A pointing device was mounted on the chair immediately in front of the participant; when the participant was seated, the device occupied about the same position relative to the body as a dinner plate during a meal. The pointer was a thin rod extending 16 cm from its rotation axis, which was itself situated in the participant’s midline plane and allowed the pointer to pivot around a vertical axis. A polar scale, graduated in degrees, was mounted beneath the pointer. The scale was covered while participants viewed the target.

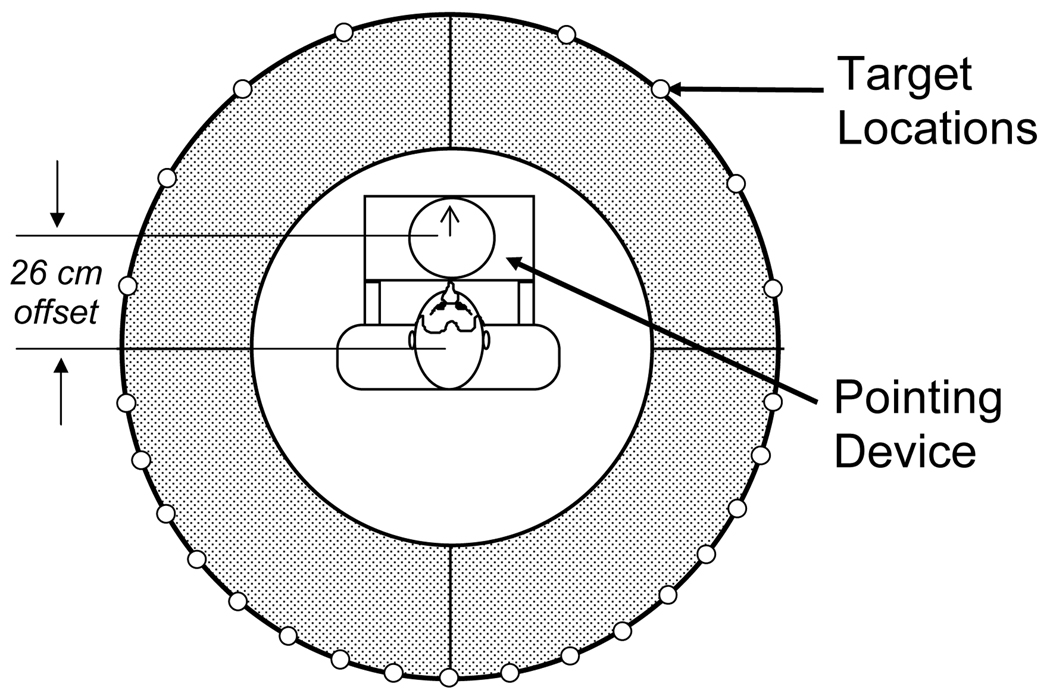

Figure 1.

Schematic overhead view of the experimental apparatus. The observer sat in a chair surrounded by a table; access to the chair was gained via a removable panel in the table top. Targets were placed at different azimuths (relative to the center of the table) on a large circle inscribed on the table. A pointing device was mounted on the chair in front of the participant. The center of the pointing device was offset 26 cm in a horizontal plane from the center of the chair.

Procedure

Prior to testing, participants were alerted to the fact that the rotation axis of the pointer was offset from the center of the chair. They were instructed to set the pointer in pointing trials such that a straight line would extend along the shaft of the pointer and connect exactly with the target². This was demonstrated by placing a target 90 deg to the participant’s right (relative to the chair center) and showing that an accurate pointing response would entail setting the pointer to more than 90 deg, relative to the pointer axis. Because the right and left hands might introduce different biases in pointing due to differing mechanical constraints, we asked all participants to set the pointer with their right hand. Left-handed participants were required to point with their non-preferred hand, but we felt that any error or bias that this might contribute would be small relative to the potential effects related to the differing mechanical constraints between hands. (Upon completion of the study, the mean constant errors of the three left-handed individuals fell between −1.13 and 0.61 standard deviation units of the mean errors of the right-handed participants, suggesting that pointing with the non-dominant hand is not likely to exert a strong influence on pointing errors.)

In Verbal / Clock-Face trials, participants were instructed to respond as if they were at the center of a horizontally-oriented clock, with straight ahead being “twelve o’clock”. Participants were encouraged to respond using increments of 10 min (i.e., one-sixth of an hour) as often as possible; in Verbal / Degree trials, participants were encouraged to use increments of 10 deg as often as possible. Although other alignment schemes are possible, we instructed participants in the 0–90 deg “quadrant” group that zero degrees for the two front quadrants should be aligned with straight ahead, and zero degrees for the two back quadrants should be aligned with the left-right axis. Thus, for example, a correct response for a target located at 100 deg would be “10 deg back right”; a target at 355 deg would be “5 deg left front”. For all verbal trials, we kept a running tally of the number of times participants used relatively small units (e.g., 5 deg or 10 min) versus larger units (e.g., 30 deg or 1 hour). If participants appeared to be using primarily larger units in the first third of the experiment, we encouraged them to increase their usage of small units in the remainder of trials.
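The two verbal formats map onto a common 0 – 360 deg scale in a simple way, sketched below in Python. The function names are hypothetical. The "back right" and "left front" rules follow the examples in the text; the "back left" rule is inferred by symmetry and should be read as an assumption.

```python
def clock_to_degrees(hour, minute=0):
    """Convert a clock-face direction to deg (12 o'clock = 0 = straight ahead).

    Each hour spans 30 deg, so 10 min (one-sixth of an hour) is 5 deg.
    """
    return ((hour % 12) * 30 + minute * 0.5) % 360

def quadrant_to_degrees(deg, quadrant):
    """Convert a 0-90 deg estimate plus quadrant label to a 0-360 deg azimuth.

    Front quadrants are measured from straight ahead; back quadrants are
    measured from the left-right axis.
    """
    rules = {
        "right front": lambda d: d,
        "left front": lambda d: 360 - d,
        "back right": lambda d: 90 + d,
        "back left": lambda d: 270 - d,  # assumption: mirror of "back right"
    }
    return rules[quadrant](deg) % 360
```

With these rules, "10 deg back right" maps to 100 deg, "5 deg left front" maps to 355 deg, and "9 o'clock" maps to 270 deg.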

Participants were tested individually. They began each trial wearing a blindfold. A piece of paper covered the numbers on the pointing dial, and the pointer itself was oriented straight ahead (zero degrees) from the participant’s facing direction. After placing the target, an experimenter tapped the participant on the shoulder as a signal to raise the blindfold, and announced the response type to be used on that particular trial (“pointing”, “degrees”, “clock”). The participant raised the blindfold, viewed the target for several seconds (typically fewer than 5), then donned the blindfold again. An experimenter removed the paper covering the pointer dial and prompted the participant to make the specified response. A delay of 3 – 5 s elapsed between the occlusion of vision and the beginning of the response. The participant’s response was recorded, and the target was positioned for the next trial. No error feedback was given³. The pointer was repositioned toward zero degrees and re-covered, if necessary.

Data Analyses

Because the pointing device was mounted immediately in front of the participant, the rotation axis of the pointer was offset by approximately 26 cm from the center of the chair. The target azimuths, however, were defined relative to the center of the chair. Given our apparatus, this meant that an accurate pointing response to a target at 90 deg relative to the chair center would be 106 deg relative to the pointer axis. In this example, both 90 deg and 106 deg accurately describe the azimuth of the target, but from two different reference points. To facilitate comparison between these two reference frames (and between response types), we adopted the convention of expressing target azimuths and pointer responses in the same, chair-centered coordinate system. This required a transformation of the pointing data to reflect the shifted frame of reference, but did not affect the error data.
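The relationship between the two reference points can be sketched geometrically. The Python functions below are an illustration, not the analysis code used in the study: the names are hypothetical, the 26 cm offset is taken from the text, and the target distance from the chair center is left as a parameter because the text gives it only approximately. The sketch is therefore not expected to reproduce the 106 deg figure exactly.

```python
import math

POINTER_OFFSET_M = 0.26  # pointer axis lies ~26 cm ahead of the chair center

def chair_to_pointer(azimuth_deg, target_dist_m, offset_m=POINTER_OFFSET_M):
    """Target direction as seen from the pointer axis (deg, clockwise from ahead).

    azimuth_deg is chair-centered; target_dist_m is the target's distance
    from the chair center (an assumed value supplied by the caller).
    """
    a = math.radians(azimuth_deg)
    x = target_dist_m * math.sin(a)             # rightward component
    y = target_dist_m * math.cos(a) - offset_m  # forward component from the pointer
    return math.degrees(math.atan2(x, y)) % 360

def pointer_to_chair(setting_deg, target_dist_m, offset_m=POINTER_OFFSET_M):
    """Chair-centered azimuth of the point where the pointer's line meets the
    circle of radius target_dist_m around the chair center."""
    t = math.radians(setting_deg)
    # Distance along the pointer's line from the pointer axis to that circle
    reach = -offset_m * math.cos(t) + math.sqrt(
        target_dist_m ** 2 - (offset_m * math.sin(t)) ** 2
    )
    x = reach * math.sin(t)
    y = offset_m + reach * math.cos(t)
    return math.degrees(math.atan2(x, y)) % 360
```

A target at 90 deg (chair-centered) yields a pointer-centered direction greater than 90 deg, in line with the demonstration given to participants; the exact value depends on the distance assumed. Applying `pointer_to_chair` to a pointer setting expresses it in the chair-centered system used for the target azimuths.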

In preliminary analyses, we used circular statistics to characterize central tendencies and angular dispersions in the data (Batschelet 1981), but these measures were virtually identical to the arithmetic means and standard deviations because the distribution of responses in each condition tended to be relatively restricted, so we did not proceed further with circular analyses. Because only two measurements were collected per condition, we could not identify within-subject outliers. Nevertheless, it was clear in some cases that individual responses fell far outside the range of others generated by the participant in similar trials, perhaps due to experimenter error in placing the target or to forgetting on the part of the participant. To identify these responses, we took the absolute value of the difference between the two repetitions for each condition. If two responses in the same condition differed by more than 50 deg, we omitted the response that was farthest from the nominal value. This resulted in the removal of less than 0.50 % of the data.
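The screening rule for discrepant repetitions can be expressed compactly. The Python sketch below is a hedged illustration with a hypothetical function name; it uses ordinary (non-circular) differences, which assumes that the two responses and the nominal value do not straddle the 0 / 360 deg boundary.

```python
def screen_pair(r1, r2, nominal, max_gap=50.0):
    """Apply the 50 deg screening rule to the two repetitions of a condition.

    If the responses differ by more than max_gap deg, drop the one farther
    from the nominal target value; otherwise keep both.
    """
    if abs(r1 - r2) <= max_gap:
        return [r1, r2]
    # Keep only the response closer to the nominal azimuth
    return [min((r1, r2), key=lambda r: abs(r - nominal))]
```

For example, responses of 30 and 95 deg to a 90 deg target differ by 65 deg, so only the 95 deg response is retained.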

We performed separate analyses of variance (ANOVA) on the constant, absolute, and within-subject variable errors. Clock-face judgments and verbal degree (quadrant) estimates were first converted to degrees, ranging from 0 to 360 deg. Then, all responses to targets on the left were subtracted from 360 so that they could be directly compared with responses to targets on the right (e.g., responses of “9 o’clock” and “270 deg” became 90 deg). We calculated constant error as the response value minus the physical target azimuth. This means that all negative constant errors denote responses that underestimated the target azimuth by being too close to 0 deg (straight ahead), with positive errors denoting responses that fell on the other side of the nominal target azimuth. Targets placed at 180 deg azimuth cannot be characterized as right or left, so responses to these stimuli were analyzed separately. We calculated absolute error by taking the absolute value of the constant errors. We calculated variable error as the within-subject standard deviation (SD) across the two measurements per condition for each participant. Because of the small number of repetitions, this measure is relatively coarse. We averaged the SDs for each participant over azimuth to obtain a more stable estimate of the overall variable error. Finally, before analysis, the constant and absolute error data were averaged over the two repetitions per condition within subjects. Due to the relatively large number of comparisons, we adopted an alpha level of 0.01 and used the Greenhouse-Geisser degree of freedom correction where appropriate. When analyzing the data, we first examined the Verbal / Degree trials by themselves, with “response range” (0–360 vs. 0 – 90) included as a between-group factor and the rest of the variables manipulated within-subjects. We then collapsed over the response range factor in separate analyses comparing Pointing, Verbal / Degree, and Verbal / Clock-Face responses.
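The left / right folding step and the resulting sign convention can be sketched as follows. This Python example is illustrative only: the function names are hypothetical, and it assumes that both the response and the target are expressed on the 0 – 360 deg scale before folding. Targets at 180 deg, which were analyzed separately, are outside its scope.

```python
def fold_left_to_right(value_deg, side):
    """Mirror a 0-360 deg value for a left-side target onto the right side."""
    if side == "left":
        return (360 - value_deg) % 360
    return value_deg % 360

def constant_error(response_deg, target_deg, side):
    """Signed error after folding: negative means too close to straight ahead.

    Assumption: the response stays on the same side of the midline as the
    target; responses that cross the midline are not handled here.
    """
    return fold_left_to_right(response_deg, side) - fold_left_to_right(target_deg, side)
```

A response of 280 deg to a target at 270 deg (90 deg to the left) folds to 80 deg versus 90 deg, giving a constant error of −10 deg, the same value as a response of 80 deg to a target 90 deg to the right.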

Results

Constant Error (Bias)

Verbal / Degree Trials Only

An ANOVA on these trials, comparing responses using the 0–360 deg format versus the 0–90 deg quadrant format, showed no main effects of response format, side or azimuth, and no interactions between these variables (all p’s > 0.01).

All Response Types

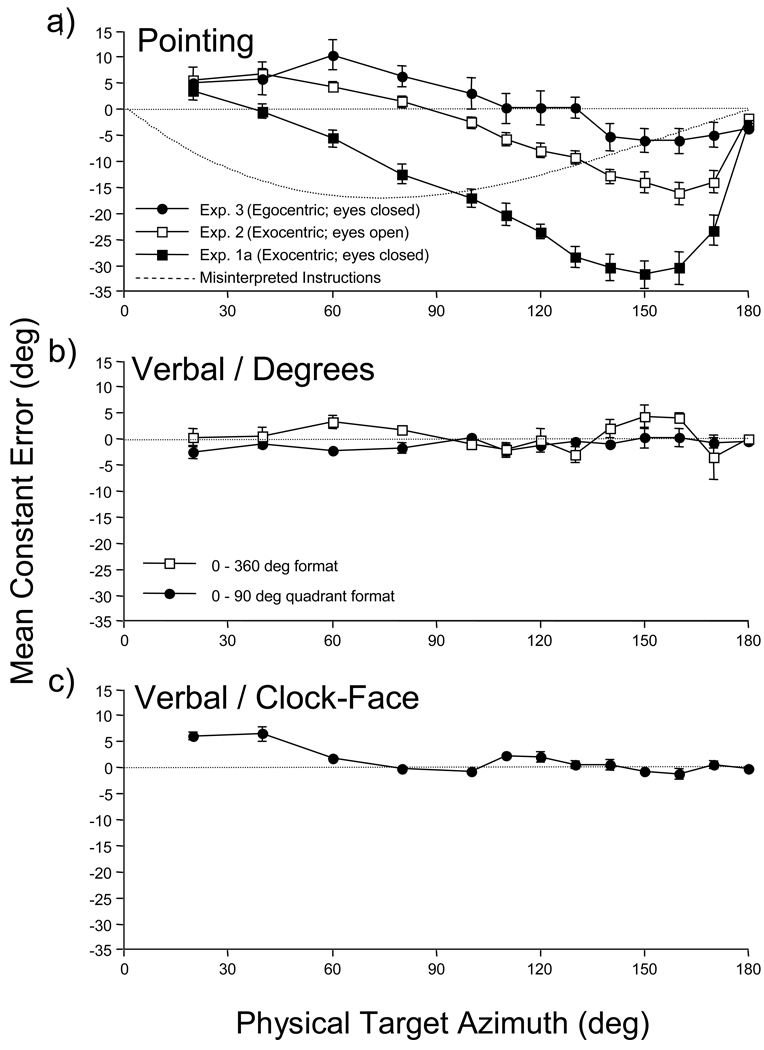

An ANOVA on the constant errors involving the entire data set (this time, ignoring the distinction between verbal degree formats) showed that there was a strong effect of response type (F[2, 40] = 124.4, MSe = 60,702, p < .001); pairwise planned contrasts (alpha = .01) showed that verbal degree and clock-face judgments did not differ reliably, but both differed reliably from the pointing responses (see Figure 2). The main effect of response type was further qualified by a response × azimuth interaction (F[22, 440] = 32.8, MSe = 1940.7, p < .001). Figure 2 shows that pointing responses generally undershot the physical target location, and that the magnitude of the undershooting steadily increased with target azimuth, peaking at about −32 deg for targets placed at 150 deg. By stark contrast, verbal degree and clock-face estimates erred by no more than 2.5 and 6.6 deg on average, respectively. The mean constant errors across all target azimuths for pointing, verbal degree, and verbal clock-face estimates were −18.29, −0.14, and 1.47 deg, respectively. The corresponding values of mean absolute (unsigned) errors were 20.29, 4.61, and 4.50 deg. Not surprisingly, the large pointing errors contributed to a main effect of azimuth (F[11, 220] = 50.3, MSe = 2696.8, p < .001).

Figure 2.

Mean constant errors (bias) for the various response measures in Experiments 1a, 2 and 3. Responses are collapsed over side (left vs. right) for Experiments 1a and 2. The dotted horizontal line on each panel denotes accurate performance. Negative errors are associated with responses that underestimated the target azimuth, i.e., were too close to the origin. Error bars show +/− one standard error of the mean. (a) Manual pointing responses for Experiments 1a, 2 and 3. Pointing in Experiments 1a and 3 was conducted without vision; Experiment 2 was conducted with full vision of the laboratory, target, and pointing apparatus. The dashed line shows the pattern of errors that would be expected if participants ignored the exocentric instruction to aim the pointer at the target, and instead set the pointer to reproduce the target azimuth defined relative to the center of the chair. (b) Verbal / Degree estimates in Experiment 1a. Responses using the 0 – 360 deg format and the 0 – 90 deg quadrant format are shown. (c) Verbal Clock-Face estimates in Experiment 1a.

There was a main effect of side (F[1, 20] = 14.7, MSe = 1146.3, p < .01), which was qualified by a significant side × response type interaction (F[2, 40] = 28.3, MSe = 4806.4, p < .001). Planned contrasts (alpha = 0.01) showed that only the pointing responses differed according to side; pointing to right-sided targets undershot the physical target azimuth by 23 deg, on average, while pointing to left-sided targets undershot by an average of 14 deg (see Table 1). No other interactions were reliable.

Table 1.

Mean constant errors and standard errors in Experiments 1a and 2

Verbal Responses

| Targ Az (deg) | 0 – 360 Deg (Exp. 1a), Left | 0 – 360 Deg (Exp. 1a), Right | 0 – 90 Deg (Exp. 1a), Left | 0 – 90 Deg (Exp. 1a), Right | Clock (Exp. 1a), Left | Clock (Exp. 1a), Right |
|---|---|---|---|---|---|---|
| 20 | −1 (2.5) | 1 (1.2) | −4 (1.2) | −1 (1.8) | 4 (0.9) | 8 (1.3) |
| 40 | 2 (3.5) | −1 (1.1) | 0 (1.1) | −2 (1.4) | 4 (2.0) | 9 (1.6) |
| 60 | 3 (2.0) | 3 (1.2) | −5 (1.7) | 1 (1.6) | 0 (0.7) | 4 (1.3) |
| 80 | 2 (1.1) | 2 (0.6) | −4 (1.5) | 0 (0.9) | −2 (1.0) | 1 (0.8) |
| 100 | −2 (0.6) | 0 (1.0) | 0 (1.0) | 1 (0.8) | −1 (0.7) | 0 (1.0) |
| 110 | −3 (1.1) | −1 (2.2) | −3 (1.9) | −1 (1.1) | 3 (1.2) | 1 (0.7) |
| 120 | 2 (3.6) | −2 (1.8) | 0 (1.3) | −2 (1.7) | 2 (1.5) | 2 (1.2) |
| 130 | −4 (2.6) | −2 (3.3) | 0 (0.9) | −1 (1.1) | −1 (1.9) | 2 (1.3) |
| 140 | 4 (3.1) | 0 (2.7) | −2 (0.7) | 0 (0.9) | −3 (1.2) | 4 (1.7) |
| 150 | 5 (2.4) | 4 (4.0) | −2 (2.0) | 3 (2.5) | −2 (1.1) | 1 (0.8) |
| 160 | 4 (1.7) | 4 (1.4) | −2 (2.0) | 3 (1.8) | −4 (1.4) | 1 (0.9) |
| 170 | 1 (0.9) | −8 (8.4) | −2 (1.1) | 1 (1.1) | −1 (1.1) | 2 (0.6) |

Pointing Responses

| Targ Az (deg) | Eyes Closed (Exp. 1a), Left | Eyes Closed (Exp. 1a), Right | Eyes Open (Exp. 2), Left | Eyes Open (Exp. 2), Right |
|---|---|---|---|---|
| 20 | 6 (1.4) | 1 (1.1) | 8 (0.9) | 3 (0.7) |
| 40 | 3 (2.0) | −3 (1.3) | 9 (1.2) | 4 (0.9) |
| 60 | 1 (2.1) | −12 (1.7) | 5 (1.0) | 3 (1.2) |
| 80 | −7 (2.5) | −18 (1.7) | 4 (1.3) | −1 (0.9) |
| 100 | −11 (1.8) | −23 (2.2) | −1 (1.3) | −4 (1.2) |
| 110 | −14 (2.3) | −26 (2.3) | −5 (1.9) | −7 (1.2) |
| 120 | −19 (1.9) | −29 (2.2) | −7 (1.5) | −9 (1.2) |
| 130 | −23 (2.2) | −34 (2.5) | −7 (1.6) | −12 (1.0) |
| 140 | −26 (2.8) | −35 (2.5) | −12 (2.0) | −13 (1.5) |
| 150 | −27 (2.7) | −36 (3.7) | −10 (2.2) | −18 (1.9) |
| 160 | −28 (3.2) | −33 (3.5) | −14 (2.6) | −19 (2.1) |
| 170 | −23 (2.4) | −23 (4.1) | −13 (2.5) | −15 (2.1) |

Entries show the mean constant error (bias) in degrees, with the between-subject standard error in degrees given in parentheses; negative numbers denote biases toward zero deg (straight ahead). Targ Az: target azimuth (deg). (0–360: N = 11; 0–90: N = 10; Clock: N = 21; Eyes Closed Pointing: N = 21; Eyes Open Pointing: N = 16)
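As an arithmetic check, the side means reported in the text can be approximately recovered from the eyes-closed pointing columns of Table 1. The sketch below simply averages the tabled values; because those entries are rounded to whole degrees, agreement with the reported means (about −14 deg on the left, −23 deg on the right, and −18.29 deg overall) is only approximate. The variable names are illustrative.

```python
from statistics import mean

# Mean constant errors (deg) for eyes-closed pointing, copied from Table 1,
# ordered by target azimuth: 20, 40, 60, 80, 100, 110, ..., 170 deg.
left = [6, 3, 1, -7, -11, -14, -19, -23, -26, -27, -28, -23]
right = [1, -3, -12, -18, -23, -26, -29, -34, -35, -36, -33, -23]

left_mean = mean(left)           # average bias for left-sided targets
right_mean = mean(right)         # average bias for right-sided targets
grand_mean = mean(left + right)  # average bias over both sides

print(round(left_mean, 2), round(right_mean, 2), round(grand_mean, 2))
```

Negative values indicate undershooting, that is, responses biased toward straight ahead.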

To further characterize the pattern of verbal responses, we tallied the number of times that participants gave responses based on several different units. In this analysis, we categorized responses according to the largest number (10, 5, or 1 deg) that could be divided into the response value with no remainder. In Verbal / Degree trials, participants on average used units based on 10, 5 and 1 deg in 69%, 25%, and 5% of the trials, respectively. Note that this analysis is not directly informative about the smallest degree increment that individual observers tended to use. If an observer only used increments of 30 deg, or even 90 deg, these responses would be counted as 10 deg units in this analysis, and response increments of 45 deg would get counted as 5 deg units. Nevertheless, the analysis gives a sense of the relative overall frequency of the units observers used. In Verbal / Clock-Face trials, observers gave responses based on whole hour, 15 min (including 30 min), and less-than-15-min units in 34%, 40%, and 26% of the trials, respectively.
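The unit classification described above can be sketched as follows. This is a minimal illustration, not the analysis script used in the study; the function names and the sample responses are hypothetical.

```python
from collections import Counter

def response_unit(value_deg: int) -> int:
    """Return the largest of 10, 5, or 1 deg that divides the response evenly."""
    for unit in (10, 5):
        if value_deg % unit == 0:
            return unit
    return 1

def tally_units(responses):
    """Proportion of responses falling into each unit category."""
    counts = Counter(response_unit(r) for r in responses)
    total = len(responses)
    return {unit: counts.get(unit, 0) / total for unit in (10, 5, 1)}

# Hypothetical verbal degree responses from one participant.
sample = [30, 90, 45, 25, 37, 120, 10, 85, 60, 132]
proportions = tally_units(sample)
print(proportions)
```

As the text notes, a response of 30 or 90 deg is counted as a 10 deg unit and a response of 45 deg as a 5 deg unit, so the tally reflects divisibility rather than the increment an observer actually had in mind.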

180 deg Azimuth Trials

All responses were very near 180 deg for these targets, with no reliable differences between response types (p > .01).

Absolute Error

Verbal / Degree Trials Only

The absolute errors of verbal responses obtained using the 0–360 deg format were slightly larger than those obtained using the 0–90 deg “quadrant” format (averaging 5.6 deg versus 3.5 deg, respectively; F[1, 19] = 8.63; MSe = 534.3; p = .008).

All Response Types

An ANOVA including all response types showed main effects of response type (F[2, 40] = 125.0; MSe = 41,596; p = .0001), target side (F[1, 20] = 18.84; MSe = 1,795; p = .0003), and target azimuth (F[11, 220] = 26.92; MSe = 1,326; p = .0001). There were also significant interactions of response × side, response × azimuth, side × azimuth, and response × side × azimuth (all F’s > 2.47; all p’s < .007). The grand mean absolute errors for pointing, verbal degree and verbal clock-face responses were 20.29, 4.61, and 4.50 deg, respectively. Although we did not examine these interactions in detail, there were clear similarities to the constant error analyses: absolute errors tended to increase strongly with target azimuth, but only for pointing responses; only pointing showed appreciable differences according to target side, and these differences were primarily apparent for targets in the rear hemispace.

180 deg Azimuth Trials

An ANOVA indicated that there was a main effect of response mode in the absolute errors (F[2, 40] = 10.78; MSe = 85.82; p = .003). The mean errors for the pointing, degree and clock-face responses were 3.68, 0.24, and 0.12 deg, respectively. Pairwise planned contrasts (alpha = .01) showed that the pointing responses differed reliably from the two verbal responses, but the verbal responses did not differ from each other.

Within-Subject Variable Error

Variable error was estimated by calculating the within-subject standard deviation (SD) across two measurements per condition, then averaging these values across the various target azimuths on each side. This yielded two SD values per participant, one for each side.
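The variable error computation can be sketched as below. This is an illustration under stated assumptions: the text does not say whether the sample (n − 1) or population form of the SD was used, so the sample form (`statistics.stdev`) is assumed here, and the data layout and values are hypothetical.

```python
from statistics import mean, stdev

def variable_error(trials):
    """Average within-subject SD per side for one participant.

    trials maps (side, azimuth_deg) to the repeated responses (deg)
    collected in that condition (two per condition in this design).
    """
    sds_by_side = {}
    for (side, _azimuth), responses in trials.items():
        # Sample SD across the repeated measurements (assumed n - 1 form).
        sds_by_side.setdefault(side, []).append(stdev(responses))
    # Average the per-azimuth SDs within each side.
    return {side: mean(sds) for side, sds in sds_by_side.items()}

# Hypothetical responses for one participant at two azimuths per side.
example = {
    ("left", 20): [22, 18],
    ("left", 40): [41, 45],
    ("right", 20): [20, 20],
    ("right", 40): [35, 43],
}
sds = variable_error(example)
print(sds)
```

With only two measurements per condition, the sample SD reduces to the absolute difference between the two responses divided by the square root of 2.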

Verbal / Degree Trials Only

An ANOVA on the variable errors showed only a main effect of response format (F[1, 19] = 33.41, MSe = 115.45, p < .001). Verbal degree responses using the 0–90 deg quadrant format showed less variable error (i.e., more response consistency) than responses using the 0 – 360 deg format, with the mean SDs being 2.8 deg vs. 5.2 deg, respectively. There were no other main effects or interactions (all other p’s > .01).

All Response Types

An ANOVA on the within-subject SDs of the entire data set showed only a main effect of response type (F[2, 40] = 15.44, MSe = 64.11, p < .001; all other p’s > .01). The mean SDs for the pointing, verbal degree and verbal clock-face judgments were 6.15, 3.84, and 4.24 deg, respectively. Pairwise planned contrasts (alpha = .01) showed that the pointing responses differed reliably from the two verbal responses, but the verbal responses did not differ from each other.

180 deg Azimuth Trials

There was a main effect of response type in an ANOVA performed on the variable errors for targets placed at 180 deg (F[2, 40] = 14.4, MSe = 60.2, p < .001). There was very little variability in the two verbal response types, with virtually all responses corresponding to 180 deg, whereas pointing responses showed somewhat more variability (mean SD = 3 deg).

Discussion

Non-visual, manual pointing responses exhibited large constant errors; these errors generally increased with target azimuth, peaking at an astonishing −32 deg mean error for targets at 150 deg azimuth. By contrast, verbal responses (using either degree or clock-face formats) were dramatically more accurate, with mean constant errors rarely exceeding +/− 5 deg. We will investigate some possible reasons for the apparent discrepancy between pointing and verbal responses in Experiments 2 and 3, below. Verbal responses also exhibited more within-subject consistency (lower variable error) than pointing responses.

Although we carefully instructed participants to align the pointer directly with the target, is it possible that participants did not comply? Might they instead have set the pointer to match the target azimuth defined relative to the center of the chair (e.g., setting the pointer at 90 deg for a target at 90 deg relative to the chair center, instead of setting the pointer at 106 deg, which is the correct azimuth relative to the pointer axis)? This kind of pointing strategy could be the result of verbal mediation, although generation of an explicit numerical estimate is not required. In any case, the data argue against the idea that participants did not follow the instructions. If participants misinterpreted the instructions in this way (or were unable to comply because of cognitive difficulties, e.g., in adopting an imagined perspective), one would expect the pattern of errors shown in Figure 2a (dashed curve). As can be seen in the figure, the observed pattern of errors peaks at a different azimuth and is larger in magnitude than the errors predicted by a failure to follow the instructions. In addition, previous research has shown that observers are typically able to perform small amounts of imagined translation in perspective easily and without incurring large systematic errors (Easton and Sholl 1995; Presson and Montello 1994; Rieser 1989).
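The geometry behind the 90 deg versus 106 deg example can be sketched as follows. The sketch assumes that the pointer axis lies on the straight-ahead (0 deg) line in front of the chair center and that target distance is constant across azimuths; neither the actual pointer offset nor the target distance is restated here, so the offset-to-distance ratio is back-calculated from the 90 deg / 106 deg example and should be treated as hypothetical. The function names are illustrative.

```python
import math

# Hypothetical ratio of pointer offset to target distance, chosen so that a
# target at 90 deg from the chair center lies at 106 deg from the pointer axis.
OFFSET_RATIO = math.tan(math.radians(16))

def pointer_azimuth(ego_az_deg, offset_ratio=OFFSET_RATIO):
    """Target azimuth about the pointer axis (deg), given its azimuth about
    the chair center. Target distance is normalized to 1."""
    theta = math.radians(ego_az_deg)
    rightward = math.sin(theta)
    forward = math.cos(theta) - offset_ratio  # pointer sits ahead of the chair center
    return math.degrees(math.atan2(rightward, forward))

def predicted_error_if_egocentric(ego_az_deg):
    """Constant error expected if a participant sets the pointer to the
    chair-centered azimuth instead of aiming it at the target."""
    return ego_az_deg - pointer_azimuth(ego_az_deg)

for az in (20, 60, 90, 150, 180):
    print(az, round(predicted_error_if_egocentric(az), 1))
```

Under these assumptions the predicted error is negative (undershooting) and vanishes for a target at 180 deg; the exact shape of the curve depends on the true offset and target distances.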

Pointing judgments in a recent study by Haun et al. (2005) were made without vision after a short delay, similar to the conditions used in our Experiment 1a. In some ways, our results might seem to contrast with theirs. In our study, verbal estimates were generally accurate and pointing judgments exhibited large errors; in Haun et al., however, both response types yielded relatively small constant errors and verbal responses were slightly less accurate overall. It is important to note that the large pointing biases in our study were manifested at azimuths not tested by Haun et al. (namely, 90 – 180 deg); in the 0 – 90 deg range, the discrepancies between our results and theirs are relatively small. Nevertheless, even in the 0 – 90 deg range, our data do not show the error patterns described by Haun et al., which the authors attributed to categorical biases in spatial memory. This may be because our target azimuths were generally not placed at locations that were optimized to find evidence of such biases. Another methodological difference is that in our instructions to participants, we repeatedly stressed the importance of being as precise and accurate as possible when giving verbal estimates, and this may have improved performance in verbal trials relative to that reported by Haun et al. (2005). Finally, our targets were closer to the observer and were seen against a more detailed background than those in Haun et al. The full import of these and other methodological details is unclear, but some combination of these factors no doubt accounts for the relatively small discrepancies between our results and those of Haun et al. (2005).

In this study, we instructed participants to use small verbal units, and, in general, they complied. On average, the units that participants adopted allowed them to respond to changes in target azimuth accurately and precisely. We investigate this issue more closely in Experiment 1b.

EXPERIMENT 1b

One possible reason for preferring action-based responses over verbal reports is the potential for verbal reports to underestimate an observer’s true sensitivity to stimulus changes due to the relative coarseness of the verbal units that observers might select. For example, if observers do not use units smaller than 10 deg when stimuli are spaced at 5 deg increments, this will necessarily add response variability even if the observer is perfectly sensitive to the stimulus changes. Pointing responses are not subject to this kind of quantization error. The potential for quantization errors might seem to be a fatal flaw of verbal reports and make manual pointing a clearly preferable response, but the precision of pointing responses is itself limited, namely, by noise in efferent and afferent sensory signals. Also, observers have some control over the verbal units they use and they may well be able to use relatively small verbal units if encouraged to do so. Finally, the ability of verbal responses to accurately track small changes in target azimuth can be enhanced if observers consciously or unconsciously vary the way stimuli map onto response categories across trials involving the same target. Thus, reports of “20 deg” and “30 deg” in two separate trials for a target at 25 deg azimuth would yield accurate mean responding, at the expense of increased within-subject variability. The practical import of these sources of error (quantization vs. sensory noise) for indications of target azimuth is currently unknown.
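The trade-off in the 25 deg example can be made concrete with a short calculation. The helper `quantize` is a hypothetical illustration of an observer who rounds down on one trial and up on another; the sample (n − 1) SD is assumed.

```python
from statistics import mean, stdev

def quantize(azimuth_deg, unit_deg, round_up):
    """Report the azimuth in multiples of unit_deg, rounding down or up."""
    lower = (azimuth_deg // unit_deg) * unit_deg
    if round_up and azimuth_deg % unit_deg != 0:
        return lower + unit_deg
    return lower

target = 25
# Two trials with the same target: the mapping onto 10 deg units varies.
responses = [quantize(target, 10, round_up=False),
             quantize(target, 10, round_up=True)]

constant_error = mean(responses) - target  # bias of the mean response
within_sd = stdev(responses)               # within-subject variability

print(responses, constant_error, round(within_sd, 2))
```

The mean response matches the target exactly, while the two reports differ by a full 10 deg unit, which is the increase in within-subject variability described above.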

In Experiment 1a, the possible target locations were spaced in increments of 10 or 20 deg. It may be that pointing responses would begin to show an advantage over verbal estimates, at least in terms of variable error, for more closely spaced target azimuths. We tested this idea in Experiment 1b. In Experiment 1a, we instructed participants to use units of 10 deg or smaller in Verbal / Degree trials and 10 min (= 5 deg) or smaller in Verbal / Clock-Face trials. In Experiment 1b, we repeated these instructions, but used a target spacing (3 deg increments) that was less than the smallest verbal unit that we encouraged participants to use. We were particularly interested in whether the variable error of verbal estimates would exceed that of manual pointing for these smaller increments in azimuth.
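The equivalence between clock-face and degree units (10 min = 5 deg) follows from treating the clock face as a 360 deg dial with 12 hours. A minimal conversion sketch, assuming that 12 o'clock corresponds to straight ahead (0 deg) and that azimuth increases clockwise; the function name is illustrative:

```python
def clock_to_degrees(hour, minute=0):
    """Convert a clock-face direction to an azimuth in degrees.

    Each hour spans 30 deg and each minute 0.5 deg, so 10 min = 5 deg.
    Assumes 12 o'clock is straight ahead (0 deg), increasing clockwise.
    """
    return (hour % 12) * 30 + minute * 0.5

print(clock_to_degrees(1, 10))  # one hour plus ten minutes
print(clock_to_degrees(3))      # directly to the right
print(clock_to_degrees(6))      # directly behind
```

On this mapping, the 3 deg target spacing of Experiment 1b corresponds to 6 min on the clock face, which is smaller than the 10 min unit participants were encouraged to use.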

Method

Participants

Fourteen participants (11 females, 3 males) took part in this experiment in exchange for course credit. Their mean age was 18.4 years (range: 17 – 20). All reported having normal vision in both eyes, and two reported being left-handed.

Design

Targets in this study appeared in a much narrower range of azimuths than in Experiment 1a, and with a smaller spacing. Possible target locations were: 25, 28, 31, 34, and 37 deg. There were three possible response types: pointing, verbal / degrees, and verbal / clock-face. Each combination of target location and response type was measured four times in random order, for a total of 60 trials.
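The design can be sketched as a randomized trial list. The text specifies only that the 60 trials were presented in random order, so the unconstrained shuffle, the seed, and the labels below are assumptions made for illustration.

```python
import random
from collections import Counter
from itertools import product

TARGETS_DEG = [25, 28, 31, 34, 37]
RESPONSE_TYPES = ["pointing", "verbal_degrees", "verbal_clock_face"]
REPETITIONS = 4

def make_trials(seed=0):
    """Build every target x response-type combination four times, then shuffle."""
    trials = [combo
              for combo in product(TARGETS_DEG, RESPONSE_TYPES)
              for _ in range(REPETITIONS)]
    random.Random(seed).shuffle(trials)  # unconstrained random order (assumed)
    return trials

trials = make_trials()
counts = Counter(trials)
print(len(trials), len(counts))
```

Five target locations crossed with three response types and four repetitions gives the 60 trials stated in the text.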

Apparatus and Procedure

This study used the same apparatus and testing room as Experiment 1a. The same procedures and instructions were used, as well.

Results

Constant Error (Bias)

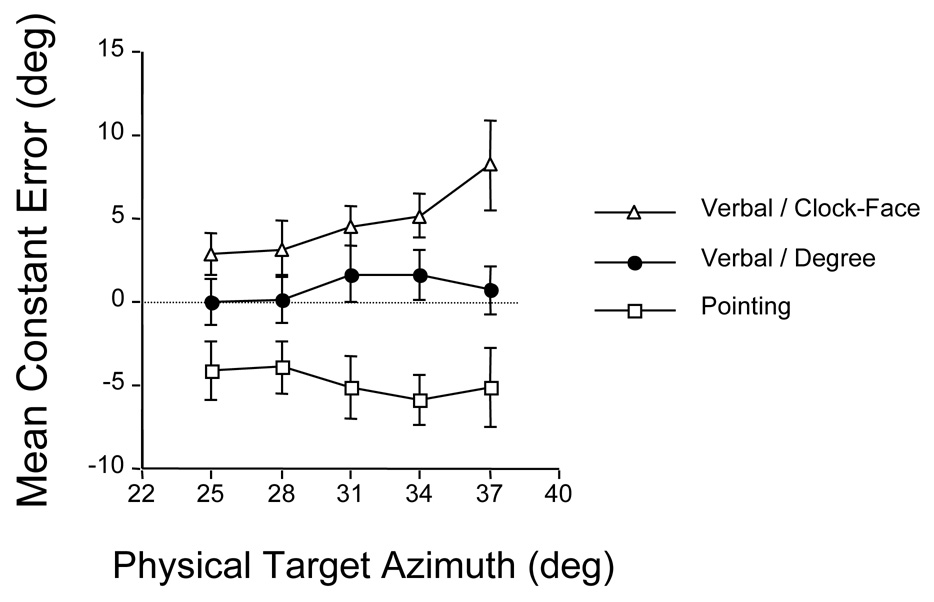

Constant error data for Experiment 1b are shown in Figure 3. An ANOVA involving the mean constant errors showed a main effect of response type (F[2, 26] = 9.3, MSe = 1640.7, p < .001). Pairwise planned contrasts showed that only the pointing and verbal clock-face judgments differed reliably (p < .01). The response type main effect was qualified by a significant response type × azimuth interaction (F[8, 104] = 3.87, MSe = 255.3, p < .001). Pointing responses tended to undershoot the physical target location by approximately 5 deg, while verbal degree estimates were more accurate (mean constant error of + 0.8 deg); bias did not vary much for either of these response types across this range of target azimuths. Verbal clock-face responses tended to overestimate the target azimuth, with bias increasing from approximately + 3 deg to + 8 deg over this range of azimuths. There was no overall effect of azimuth, however (p > .01).

Figure 3.

Mean constant errors (bias) for the various response measures in Experiment 1b. The dotted horizontal line denotes accurate performance. Negative errors are associated with responses that underestimated the target azimuth, i.e., were too close to the origin. Error bars show +/− one standard error of the mean.

As in Experiment 1a, we tallied the number of times that participants gave responses based on several different units. In Verbal / Degree trials, participants on average used units based on 10, 5 and 1 deg in 69%, 28%, and 3% of the trials, respectively. In Verbal / Clock-Face trials, they gave responses based on whole hour, 15 min (including 30 min), and less-than-15-min units in 31%, 19%, and 50% of the trials, respectively.

Absolute Error

An ANOVA of the absolute errors showed only a main effect of azimuth (F[4, 52] = 6.2, MSe = 44.9, p < .001), with error increasing from 5.7 to 8.2 deg across this range of target azimuths, averaging across response types.

Within-Subject Variable Error

Analysis of the within-subject SD’s by ANOVA showed no main effect of response type (F[2, 28] = 0.33; MSe = 0.85; p > .01). The mean SDs for the pointing, verbal degree and verbal clock-face judgments were 4.36, 3.89, and 4.26 deg, respectively.

Discussion

At this range of target azimuths, verbal degree estimates were most accurate, showing very little systematic bias. Pointing responses tended to undershoot the target azimuth by about 5 deg, while clock-face judgments overestimated it by 5.5 deg, on average. Despite the small increments in target azimuth (3 deg), verbal degree estimates accurately reflected these increments, and in fact showed less bias than pointing responses. The fact that clock-face judgments showed increasing bias across these small increments in target azimuths could be due to increased response quantization in that condition. As in Experiment 1a, we again instructed participants to use small verbal units (down to at least 10 deg for verbal degree estimates and at least 10 minutes [= 5 deg] for clock-face estimates). They again complied, using increments of 5 deg and 10 minutes with remarkable frequency. Thus, although some response quantization occurred, the errors due to this quantization tended to average out across trials, allowing participants to differentiate relatively small changes in target azimuth. Quantization undoubtedly contributed to the variable errors for verbal estimates, but even so, these errors were no higher than the variable errors for pointing, on average.

EXPERIMENT 2

In Experiment 1, we collected verbal and manual pointing estimates of target azimuth under conditions which are common in spatial cognition studies. Most notably, vision of the target was prevented during the responses, and the pointing device was placed immediately in front of the observer. Although these conditions are common, they are not ideal for identifying the source of data discrepancies involving the two response types. The responses differ along a variety of potentially relevant dimensions. One difference is that the pointing responses were executed after a delay of several seconds, whereas the value of the verbal estimates could have been generated during the visual preview and then verbalized after the delay. Thus, the pointing biases (and presumably at least some of the variable error) could have been due to degradation in the represented target azimuth which was not present in the verbal task. Another difference is that verbal responses do not require monitoring self-motion signals, whereas the manual pointing responses do. In this view, executing pointing responses without vision may introduce constant and variable errors due to errors in sensing the position of the arm and hand, while verbal reports are not subject to this kind of error. Finally, it is possible that the pointing responses in Experiment 1 were not based directly on the spatial memory of the target azimuth, but instead were verbally-mediated. In this view, participants may have generated a verbal estimate during the preview, then, after the delay, either verbalized that estimate (if required) or generated a pointing response to match that verbal estimate. If one assumes that errors are always introduced when transforming a verbal estimate into an overt pointing response, this account would predict larger errors for pointing responses, because presumably verbal estimates would not be subject to such output transformation errors. At any rate, verbal mediation might muddy the waters by confounding the cognitive processes recruited by the two response types.

In Experiment 2, we sought to address some of these issues by allowing participants to point to the targets with full vision of the target, testing environment, and pointing device. This makes the pointing response more comparable to the verbal responses in Experiment 1 by eliminating the response delay. By providing visual guidance, we were also able to maximize the precision and accuracy with which participants could monitor the position of their arm and hand while setting the pointer. We did not collect verbal responses in Experiment 2. By not interspersing verbal and pointing responses, we hoped to discourage participants from using verbal strategies while setting the pointer. After testing, we administered a questionnaire designed to assess the possible use of verbally-mediated strategies.

Method

Participants

Sixteen participants (8 females, 8 males) took part in this experiment in exchange for course credit. Their mean age was 18.7 years (range: 18 – 21). All reported having normal vision in both eyes, and all reported being right-handed.

Design

This study used the same possible target locations as in Experiment 1. In the present study, manual pointing was the only response mode.

Apparatus and Procedure

This study used the same apparatus and testing room as Experiments 1a and b. The procedures were very nearly identical, with the notable exception that pointing responses were made with unrestricted vision in a well-lit environment. Thus, when executing the pointing responses, participants could see the target, their hand, the pointing apparatus, and the testing environment. After testing, participants completed a questionnaire designed to assess how they approached the task of aiming the pointer (see Appendix A). For example, response options were designed to detect intentional usage of verbally-mediated strategies versus more visually-based strategies.

Results

Constant Error (Bias)

This analysis showed main effects of side (F[1, 15] = 20.27; MSe = 1343.4; p < .001) and azimuth (F[11, 165] = 58.26; MSe = 2197.9; p < .001). Figure 2 shows the Experiment 2 constant error data averaged over side, along with the pointing data from Experiments 1a and 3; Table 1 shows the Experiment 2 errors broken down by side and azimuth. In general, the errors mirrored those seen in Experiment 1a, albeit on a somewhat smaller scale. When pointing with eyes open, participants tended to undershoot slightly more for right- than left-sided targets (averaging −7.2 deg versus −3.5 deg, respectively). There was some overshooting for targets in the 20 – 80 deg range, peaking at +6.8 deg error for the 40 deg target; targets in the 100 – 170 deg range were generally undershot, peaking at −16 deg for the 160 deg target. The mean constant error for targets at 180 deg azimuth was −1.8 deg (standard error of the mean: 0.71 deg). The grand mean for the other azimuths was −5.37 deg.

Absolute Error

The absolute error of pointing responses did not show any overall differences according to side of the target (F[1, 15] = 0.49; MSe = 19.1; p > .01), but there was a main effect of azimuth (F[11, 165] = 17.8; MSe = 640.7; p < .001) and a side × azimuth interaction (F[11, 165] = 8.2; MSe = 93.9; p < .001). The mean absolute error for targets at 180 deg azimuth was 2.89 deg (standard error of the mean: 0.77 deg). The grand mean for the other azimuths was 9.24 deg.

Within-Subject Variable Error

We performed a two-tailed t-test, comparing the within-subject SD’s (calculated across two replications per condition) for responses to left- versus right-sided targets. As in Experiments 1a and b, before analysis, we averaged across azimuth within subjects to increase the stability of the variable error estimate for each participant. This analysis showed no effect of target side (t[15] = 0.53; p > .01). The SD’s averaged 2.66 deg.

Questionnaire Data

Fourteen of the sixteen participants (87.5%) indicated that they used a visually-based strategy (“Visualize a straight line connecting the pointer to the target, then move the pointer to line up with that line”); the two others indicated that they used verbally-based strategies (estimating the target direction in degrees or clock-face directions, then moving the pointer to match that estimate). The constant errors for these two individuals fell −0.7 and 0.08 standard deviation units (averaging across azimuth) from the means for the rest of the participants; this suggests that their pointing responses did not differ radically from those of the rest of the group despite using a verbally-mediated strategy.

Discussion

Constant, absolute and variable errors for the pointing responses were all lower in Experiment 2 than in Experiment 1a. The lowered errors presumably reflect a combination of eliminating errors in remembering the target azimuth and improving the accuracy and precision with which participants could monitor their arm and hand position when setting the pointer. Most striking, however, is the fact that sizeable pointing biases remained (up to −16 deg), even though the responses were conducted with unrestricted vision of the target, pointer, and laboratory environment. This strongly suggests that the differences between verbal and manual pointing judgments we observed in Experiment 1 were not due to mechanical constraints imposed by physical properties of the hand, because here, participants could use visual feedback to overcome these constraints when pointing. Most participants indicated that they did not use a verbally-mediated strategy when aiming the pointer. Although it remains possible that the pointing judgments may have been verbally-mediated to some degree in Experiments 1a and 1b, Experiment 2 shows that substantial pointing biases remain even when verbal mediation is minimal. In addition, because pointing with eyes open eliminates the need to rely upon enduring spatial representations, Experiment 2 shows that pointing biases are not restricted to situations in which targets are encoded in the relatively coarse, categorical representation espoused by Huttenlocher et al. (1991). In the framework of the category-adjustment model, targets in Experiment 2 presumably would have been encoded in the fine-grained spatial representation, yet sizeable pointing biases remained.

The eyes-open pointing task is largely one of adjusting the pointer so that it appears visually to be aligned with the target. In principle, this could be accomplished by the participant via remote control (e.g., by directing an experimenter to adjust the pointer), without any direct motor involvement. In this view, the biases are not due to any necessary difference between verbal estimates and manual pointing, but are instead related to the task of perceiving the exocentric alignment of two nearby points. Experiment 3 tests this idea.

EXPERIMENT 3

One possible explanation of the pointing biases seen in Experiments 1 and 2 is that they are due to the exocentric nature of the task (i.e., alignment of the pointer with the target), rather than the action-based nature of the task. If this is true, an egocentric action-based response should not show the same biases, perhaps even approaching the accuracy seen in the (egocentric) verbal reports of Experiment 1a. A candidate for such an egocentric action-based response is aiming the index finger at the target. This type of response has been investigated in several studies (e. g., Wang 2004; Wang and Spelke 2000). A potential disadvantage of this technique is that the arm undergoes dynamic variations in mechanical constraints when pointing to locations all around the body; the extent to which these constraints might introduce additional biases into the pointing responses is currently poorly understood. In addition, the distance from the pointing hand to the target can change dramatically for targets that are relatively close to the body. Another possible egocentric pointing response is to place a pointer on the participant’s head, but again, we were concerned about mechanical constraints involved in reaching up to the head to manipulate a pointer. An alternative approach that avoids some of these pitfalls is to use a pointing apparatus placed just in front of the observer (as in Experiments 1 and 2), but rather than asking observers to perform the exocentric task of aiming the pointer at the target, ask them to adjust the pointer to reproduce the target direction defined relative to their body. Thus, for example, an accurate pointing response for a target placed 90 deg to the participant’s right would be 90 deg. Because it is no longer an aiming response, this type of pointing task is somewhat more abstract than pointing directly at the target, but it may be taken as a reflection of the remembered egocentric target azimuth. 
If the large pointing biases in Experiments 1 and 2 were due to distortions in exocentric relations in visually perceived space when assessing the alignment of two objects, this egocentric pointing task should not be sensitive to this distortion. Also, if the biases were due to difficulties in understanding or following the instructions to aim the pointer at the target, this pointing task should minimize those biases because there is no requirement to take into account the offset of the pointer axis from the chair center.

Method

Participants

Fourteen participants (7 females, 7 males) took part in this experiment in exchange for course credit. Their mean age was 20 years (range: 18 – 28). All reported having normal vision in both eyes, and all but 2 reported being right-handed.

Design

This study used the same possible target locations as in Experiment 1a, except that here, only target positions on the right side were used. Also, non-visual pointing was the only response mode.

Apparatus and Procedure

This study used the same testing room, materials, and many of the same procedures as Experiments 1 and 2. The chief difference in the pointing responses of this study concerned the instructions. Participants were instructed to adjust the pointer so that it reproduced the direction of the target relative to their body. After testing, participants completed a questionnaire designed to assess what explicit strategies they may have used when generating responses (see Appendix B). We were specifically interested in whether participants first covertly generated a verbal estimate and then adjusted the pointer to match the verbal estimate.

Results

Constant Error, Absolute Error, and Within-Subject Variable Error

In Experiment 3, the only factor was target azimuth. Separate ANOVAs analyzing the constant, absolute and variable error scores all showed a main effect of target azimuth (F[12,156] = 5.47, 2.06, and 2.28; MSe = 413.8, 80.1, and 84.6; p = 0.0001, 0.023, and 0.011, respectively). In the constant error analysis, target azimuths between 20 and 100 deg tended to be overestimated (by approximately 3 – 10 deg), while responses to targets between 110 and 130 deg were fairly accurate; target azimuths between 140 and 180 deg tended to be underestimated (by approximately 5.5 deg). In the absolute error analysis, mean errors ranged from approximately 8 to 14 deg for targets between 20 and 170 deg, while the mean error for the target at 180 deg was about 4 deg. The mean absolute error across azimuths was 9.66 deg. In Experiments 1a and 2, constant and absolute errors were somewhat greater for targets on the right side; in Experiment 3, targets were presented only on the right, so errors here may be slightly inflated relative to what we would have observed had we included targets on both sides.

In the variable error analysis, within-subject standard deviations (SDs) across two repetitions ranged from approximately 4 to 11 deg for targets between 20 and 170 deg; the mean SD for the target at 180 deg was 1.6 deg.

Questionnaire Data

Most participants reported using some kind of non-verbal egocentric strategy (using the pointer to reproduce the egocentric target azimuth: 8 out of 14 participants; pointing the head at the target and then reproducing the head angle with the pointer: 1 of 14; visualizing the egocentric target azimuth as seen from above: 1 of 14. This last response may not be wholly egocentric in nature). One person indicated that he or she used a verbally-mediated strategy (using a number or compass direction). Another used an exocentric strategy (moving the pointer so that it lined up with the target), while still another used an exocentric strategy to initially aim the pointer at the target, then adjust that pointer setting to take into account the pointer offset.

Discussion

In Experiment 3, the constant errors in pointing were generally smaller than in either Experiment 1a or Experiment 2, even though pointing in Experiment 2 was conducted under unrestricted vision and eyes were closed during pointing here. In particular, the large pointing biases in the exocentric pointing tasks of Experiments 1a and 2 for targets in the rear hemispace were greatly diminished in the egocentric pointing task of Experiment 3. Interestingly, there were somewhat greater biases in the front hemispace in Experiment 3. Despite instructions to use the pointer to reproduce the egocentric target azimuth, the questionnaire data suggest that 2 of the 14 participants (14%) did not comply with these instructions. Our data do not speak directly to the issue of which pointing task (egocentric or exocentric) participants might find to be more natural, but it is possible that participants found this egocentric pointing task to be abstract and/or artificial and that this contributed to the remaining pointing errors. More research is required to resolve this issue.

General Discussion

Although there were some small biases in verbal estimates of egocentric target azimuth, verbal reports were surprisingly accurate overall. By contrast, when observers attempted to aim a pointer at the targets, there were large, systematic biases. Previous studies using manual pointing as a measure of target azimuth have also shown some constant error (e. g., Haun et al 2005; Montello et al 1999). Our study extends previous work by showing that this error depends strongly on target azimuth and that it can be quite large, even when pointing is performed with full vision of the target and pointing device. Taken together, our results suggest that a significant portion of the large differences between verbal and manual pointing judgments of target azimuth is due to the frame of reference underlying the two response types. Aiming a pointer at a nearby target, both of which are displaced from the body, is an exocentric task, and this task is associated with large constant errors, most notably undershooting for relatively eccentric target azimuths. When the same pointer is manipulated to reproduce the egocentric target azimuth, errors for eccentric targets are greatly diminished and performance approaches the level of accuracy observed in verbal estimates of egocentric target azimuth. The verbal estimates, however, remain most accurate overall. Although superficially our results might appear to demonstrate an unusual perception / action dissociation in which the action-based response is uncharacteristically less accurate than the perception-based response, the response differences are more properly interpreted as reflecting the frame of reference associated with each response type rather than any fundamental perception / action distinction. We argued in the Introduction that, from the perspective of the Two Visual Streams framework (Milner and Goodale 1995), both responses in our tested conditions are likely controlled by the ventral visual stream.

A full explanation for why such large pointing errors occur in exocentric pointing tasks is beyond the scope of this article, but we will discuss a few possibilities. The highly accurate verbal estimates of target azimuth strongly suggest that there were no large errors in target localization. The fact that sizeable pointing errors still occurred under visual guidance (Experiment 2) suggests that constraints imposed by mechanical properties of the hand are not to blame. This experiment also argued against memory errors and verbal mediation strategies as primary factors. Informal testing among the authors has suggested that some pointing bias remains even when both the pointer and the target are located in the front hemispace; this is supported by more formal work (though differing in certain methodological details) by Doumen, Kappers and Koenderink (2005; 2006). These results indicate that the bias is not fundamentally associated with targets in the rear hemispace or aiming the pointer near the abdomen. To some extent, our pointing errors are reminiscent, in both direction and magnitude, of biases observed by Koenderink and colleagues under multi-cue, free viewing conditions (Doumen et al 2005; Doumen et al 2006; Koenderink et al 2003). Although those researchers have not reported tests under conditions that duplicate our configuration of observer, pointing device and target locations, it is possible that a common explanation underlies both our results and theirs. Koenderink et al. attribute the biases to non-Euclidean properties of visually perceived space, which apparently change in complex ways depending on the viewing conditions and behavioral task. According to Foley (1972; Foley et al 2004), one aspect of these non-Euclidean properties is that the angular separation between locations in a horizontal plane tends to be over-perceived. If observers over-perceive the angular separation between a pointer and a target, they should perceive veridical pointing angles as too large, and therefore should under-shoot when attempting to aim the pointer at the target. This prediction is borne out in our Experiments 1a, 1b, and 2. If the amount of over-perception increases as the pointer / target angular separation increases, this would also account for the tendency for pointing errors in our studies to increase with azimuth.
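The logic of this prediction can be made concrete with a toy calculation. The sketch below is not a model proposed or fitted in this article: it simply assumes that the perceived angle equals the physical angle multiplied by a gain greater than 1 (optionally growing linearly with the angle), and that the observer stops adjusting the pointer when the perceived angle matches the required one. The function names and the parameters gain and gain_slope are hypothetical.

```python
import math

def pointer_setting(required_deg, gain=1.1, gain_slope=0.0):
    """Physical angle at which the perceived angle equals required_deg.

    Toy assumption: perceived = (gain + gain_slope * physical) * physical,
    with gain >= 1 and gain_slope >= 0 (over-perception of angles).
    """
    if gain_slope == 0.0:
        return required_deg / gain
    # Positive root of gain_slope * x**2 + gain * x - required_deg = 0
    disc = gain * gain + 4.0 * gain_slope * required_deg
    return (-gain + math.sqrt(disc)) / (2.0 * gain_slope)

def pointing_error(required_deg, gain=1.1, gain_slope=0.0):
    """Signed error (setting - required); negative values mean undershoot."""
    return pointer_setting(required_deg, gain, gain_slope) - required_deg

# Hypothetical parameter values, chosen only for illustration.
for angle in (30.0, 90.0, 150.0):
    print(angle, round(pointing_error(angle, gain=1.0, gain_slope=0.001), 2))
```

Under these assumptions, any gain above 1 yields undershoot, and a gain that grows with angular separation makes the undershoot grow more than proportionally with the required angle, which is the qualitative pattern described above.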

One implication of the notion that non-Euclidean properties of visual space may be responsible for the large errors we observed in exocentric pointing is that this pointing task is responsive to conscious visual perception, rather than to a more special-purpose visuomotor spatial representation. It is possible that individual targets are localized relatively accurately (a fact that accords well with the accurate verbal reports of azimuth we found in Experiment 1), even though the perceived exocentric azimuths are distorted relative to the physically accurate values due to non-Euclidean properties within visual space (Foley 1972; Koenderink et al 2002). It is also possible that the large constant errors in pointing we observed in Experiments 1a and 2 reflect a Euclidean visual space coupled with distortions introduced in the mapping from the perceived egocentric azimuth onto the pointer direction. Ascertaining whether visual space is Euclidean or non-Euclidean under our viewing conditions is beyond the scope of this paper, but Experiment 3 showed that the magnitude of the constant errors is reduced (though not eliminated) in a task that allows observers to ignore the offset between the egocentric axis and the pointer axis. We are continuing to investigate this complex issue.
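The offset between the egocentric axis and the pointer axis can be illustrated geometrically. The following sketch assumes a top-down coordinate frame with the observer at the origin facing the +y direction, azimuth measured clockwise from straight ahead, and a hypothetical pointer position 0.3 m in front of the body; only the 1 m target distance is taken from the article, and the function name is illustrative.

```python
import math

def exocentric_azimuth(ego_azimuth_deg, target_dist_m=1.0, pointer_xy=(0.0, 0.3)):
    """Direction from the pointer to the target, in degrees clockwise from straight ahead.

    pointer_xy is a hypothetical pointer location (x to the right, y ahead),
    not a value reported in the article.
    """
    a = math.radians(ego_azimuth_deg)
    target_x = target_dist_m * math.sin(a)
    target_y = target_dist_m * math.cos(a)
    dx = target_x - pointer_xy[0]
    dy = target_y - pointer_xy[1]
    return math.degrees(math.atan2(dx, dy)) % 360.0

# A target at an egocentric azimuth of 90 deg lies at a larger angle
# when its direction is measured from the displaced pointer.
print(round(exocentric_azimuth(90.0), 1))
```

With these assumed values, physically correct aiming at a target directly to the right requires a pointer angle noticeably larger than 90 deg, whereas reproducing the egocentric azimuth requires exactly 90 deg. The two tasks therefore demand different settings for the same target whenever the pointer is displaced from the body axis.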

Methodological Implications

The fact that observers’ verbal estimates are highly accurate on average suggests that reliance upon manual pointing responses, at least under the common conditions tested here, can dramatically underestimate participants’ knowledge of target azimuth. If pointing responses are used, care should be taken to instruct participants to indicate the egocentric target azimuth, rather than to aim the pointer at the target. Our results do not speak directly to the possibility that biases in the exocentric pointing task are the result of difficulties in understanding the task or in performing an imagined translation to bring the vertical body and pointer axes into register. However, cognitive limitations such as these are not likely to be the primary culprit given the aforementioned work of Koenderink, Foley, and others.

There were only small differences in constant error between the various verbal formats we tested; verbal estimates using degree units in “quadrant” format resulted in the least within-subject variable error. Thus, although some response quantization is inevitable when using verbal reports of azimuth, by no means should verbal reports be ruled out as a response measure on this basis. Indeed, given the precision and accuracy of verbal reports we found in Experiment 1a, they may be advantageous in many experimental paradigms.

In Experiment 2, in which participants pointed with eyes open, systematic pointing errors were somewhat reduced relative to those in Experiment 1. It is not clear to what extent this reduction was due to the decreased retention interval or to the availability of visual guidance during the pointing response. Montello et al. (1999) found that when the pointer is visible but not the target, manual pointing responses are quite similar to responses performed while blindfolded, at least when participants must remember multiple object locations at once. This suggests that vision of the hand and pointer is not crucial. Also, White, Sparks and Stanford (1994) found that saccadic eye movements were less accurate when made to remembered target locations (as opposed to visible targets) but concluded that memory degradation was not the main source of these errors. More research is required to determine more precisely what contributed to the increased pointing accuracy in Experiment 2.

In any case, longer retention intervals are common in other research paradigms, and these longer delays may well introduce additional sources of inaccuracy into directional judgments. For example, there are pointing biases near certain salient regional boundaries (e.g., front versus right side; Huttenlocher et al 1991; Montello and Frank 1996), and some researchers have suggested that items in front of the observer are afforded a special status in memory compared with items to the rear (Sholl 1987). If the biases we have described for short retention intervals persist at longer intervals, it is possible that these biases could be mistaken for systematic errors in remembering target locations (e.g., poorer memory for targets located behind the observer than those in front). It is clear from our pointing data that there is little evidence of categorical biases of the type described by Huttenlocher et al. (1991) (see Figure 2). Thus, a central finding of our work is that one should not expect all manual pointing judgments to be subject to categorical biases; the duration of the retention interval and other factors are likely to influence the presence and magnitude of pointing biases.

Another implication of this work is that many kinds of directional judgments involving targets in the nearby environment could be subject to biases, even if they do not involve the motor system (e.g., estimating whether a nearby moving object might intercept a second object, or judging what another person is looking at). We are continuing to investigate the source and boundary conditions of the pointing errors. For instance, it is not known whether the pointing biases are manifested when observers point to targets after adopting an imagined perspective or after undergoing a whole-body rotation without vision. If the biases stem from non-Euclidean properties of visual space, the pointing errors may well be diminished or disappear entirely under these conditions. Similarly, if these non-Euclidean properties appear only in visual space, the pointing biases may disappear when targets are presented by audition. Haber et al. (1993) found that manual pointing responses to auditory targets were more accurate than verbal reports of target azimuth in their blind participants, so a difference in the spatial metrics underlying visual and auditory space may well explain the apparent discrepancy between our results and theirs.

From a practical standpoint, manual pointing biases, whatever their source, may be at least partially factored out without measuring them directly if one assumes the same biases are present in two conditions that are being compared. This assumption may or may not be warranted; motor calibration is known to be sensitive to contextual factors to some extent (DiZio and Lackner 2002). Ideally, this assumption should be directly tested whenever possible.

All of our targets were presented at roughly the same egocentric distance from the observer (i.e., approximately 1 m from the observer’s eyes, with some individual variations due to differences in eye height and unconstrained head movements). Would one expect to see similar results for targets at different distances? Contextual cues may bias target localization in some cases, but otherwise, azimuthal cues will remain virtually constant across changes in egocentric target distance. This being the case, the pattern of verbal estimates would likely remain very nearly the same. Whether or not the pattern of pointing responses might change will likely depend on the source of the pointing errors. If the errors are due to non-Euclidean properties of visual space revealed in exocentric pointing tasks, the magnitude of pointing errors should vary with target distance. The same holds true for situations in which the pointing device is placed in different locations relative to the observer (Kelly et al 2004; Koenderink et al 2003). Additional work is required to answer this question.

Acknowledgements

This work was supported in part by NIH Grant RO1 NS052137 to JP. Correspondence concerning this article should be addressed to John Philbeck at: Department of Psychology, The George Washington University, 2125 G. Street, N.W., Washington, DC, 20052 (e-mail: philbeck@gwu.edu).

Appendix A: Experiment 2 Questionnaire

When you were pointing to the target, which method did you use most often? (CHOOSE ONLY ONE)

_____ Estimate the target direction in terms of degrees, then move the pointer to match the degree estimate.

_____ Estimate the target direction in clock-face directions (for example, “five o’clock”), then move the pointer to match that estimate.