Abstract

Five experiments investigated whether observer locomotion provides specialized information that facilitates novel-view scene recognition. Participants detected a position change after briefly viewing a desktop scene when the table stayed stationary or was rotated and when the observer stayed stationary or locomoted. The results showed that novel-view scene recognition at 49° was more accurate when the novel view was caused by observer locomotion than when it was caused by table rotation. However, this superiority of observer locomotion disappeared when the to-be-tested viewpoint was indicated during the study phase, when the study viewing direction was indicated during the test phase, and when the novel test view was 98°. It was even reversed when the study viewing direction was indicated during the test phase in the table rotation condition but not in the observer locomotion condition. These results suggest that scene recognition relies on identifying the spatial reference directions of the scene and that accurately indicating the spatial reference direction can facilitate scene recognition. Locomotion is facilitative because the spatial reference direction of the scene is tracked during locomotion and is therefore identified more accurately at test.

As people move in their environments, they need to update their mental representations of spatial relations between themselves and objects in the environment to remain oriented (Rieser, 1989). Several models of these perceptual and cognitive processes have been proposed (e.g., Burgess, 2008; Byrne, Becker, & Burgess, 2007; Holmes & Sholl, 2005; Mou, McNamara, Rump, & Xiao, 2006; Mou, McNamara, Valiquette, & Rump, 2004; Sholl, 2001; Waller & Hodgson, 2006; Wang & Spelke, 2002). All of these models contain an egocentric system that computes and represents self-to-object spatial relations needed to guide locomotion in the nearby environment. Self-to-object spatial relations are continuously and efficiently updated in the egocentric system as a navigator locomotes through an environment. Among other things, this updating process allows a navigator to walk around and between objects, and through apertures. These models also contain an environmental, or allocentric, system that represents locations of objects and layouts of environments in an enduring manner. This system supports wayfinding, the ability to use mental representations of environments and the perception of objects in those environments to locate unseen goals and to orient toward unobservable landmarks.1

A key feature that distinguishes these models is the role of spatial reference directions in spatial updating. According to Mou et al. (2004), the environmental system uses an orientation-dependent spatial reference system with a small number (one or two) of dominant reference directions. During locomotion, navigators update their orientation with respect to the dominant reference directions used to represent the spatial structure of the environment. None of the other models contains such a process; instead, they typically update self-to-object or self-to-object-array spatial relations during locomotion (e.g., Wang & Spelke, 2002).

Recent research has provided empirical evidence that spatial updating processes facilitate recognition of scenes at novel viewpoints (e.g., Burgess, Spiers, & Paleologou, 2004; Simons & Wang, 1998; Wang & Simons, 1999). Simons and Wang (1998) had participants briefly view an array of five objects on a desktop and then detect a change in the position of one object. Participants were tested either from the learning perspective or from a new perspective, and either when the table was stationary or when it was rotated. The results showed that detection of a position change at a novel view was less impaired when the novel view was caused by locomotion of the observer than when it was caused by table rotation. In a follow-up study, Wang and Simons (1999) reported that for stationary participants, a visual cue to the magnitude of table rotation, provided by a rod attached to the table, did not facilitate detection of the position change in a novel test view. Nor did a motoric cue to the magnitude of table rotation, provided by having participants turn the table themselves.

On the basis of these findings, Simons and Wang (1998) and Wang and Simons (1999; see also Wang & Spelke, 2002) argued that scene recognition relies on a mechanism that updates egocentric spatial representations of object arrays during the observer's locomotion and that this mechanism is not available when the observer is stationary. Furthermore, they argued that the difference in performance between table rotation and observer locomotion is not due to a lack of visual or motion information about the magnitude of the view change. Instead, the updating process might be specialized, readily incorporating information about changes in viewer position but not other information indicating a view change.

In this project, we propose and test an alternative hypothesis for the facilitative effect of locomotion on position change detection at a novel view. As discussed previously, Mou, McNamara, and their colleagues proposed that people establish spatial reference directions (one axis or two orthogonal axes) within a scene when representing the locations of objects in it, and that recovering these reference directions is important for retrieving spatial relations (Mou & McNamara, 2002; Mou, Zhao, & McNamara, 2007; Mou, Xiao, & McNamara, 2008). Following this proposal, we hypothesize that locomotion is superior to table rotation because the spatial reference direction of the test scene is identified more accurately when the novel view is caused by observer locomotion than when it is caused by table rotation. To detect a position change, people need to align the spatial reference direction identified in the test scene with the spatial reference direction represented in memory and then compare the locations of objects in the test scene with those in memory (Mou, Fan, McNamara, & Owen, 2008). We further propose that the more accurately people can identify the spatial reference direction in the test scene, the more accurately they will be able to detect the position change of an object (Mou, Xiao, & McNamara, 2008).

People can identify the spatial reference direction in the test scene using different sources of information. Several studies indicate that people represent interobject spatial relations with respect to a spatial reference direction in the scene (e.g., Mou & McNamara, 2002; Mou, Xiao, & McNamara, 2008; Werner & Schmidt, 1999). Hence people may rely solely on interobject spatial relations to identify the spatial reference direction. Studies of spatial memory also indicate that, during locomotion, people update their orientation with respect to the same spatial reference direction that is used to represent interobject spatial relations (Mou, McNamara, Valiquette, & Rump, 2004). People may therefore also rely on updated spatial relations between themselves and the spatial reference direction to identify that direction. Our conjecture is that in the table stationary/observer locomotion condition, participants can identify the spatial reference direction using the updated spatial relations between themselves and the spatial reference direction, whereas in the table rotation/observer stationary condition, they can identify it only from interobject spatial relations.

To explain Simons and Wang's (1998) findings, we speculate that, at least in their paradigm, the spatial reference direction in the test scene can be identified more accurately with updated self-to-reference-direction spatial relations than with interobject spatial relations alone. Simons and Wang claimed that spatial updating invoked by locomotion provides unique information that facilitates novel-view scene recognition. By contrast, the hypothesis of this project is that any information allowing the spatial reference direction to be identified more accurately than is possible from the interobject spatial relations in the test scene alone can facilitate position change detection at a novel viewpoint. Spatial updating during locomotion is just one such source of information. This claim is supported indirectly by an investigation of shape recognition (Christou, Tjan, & Bülthoff, 2003). Christou et al. found that novel-view shape recognition by a stationary observer in a virtual environment was facilitated when the to-be-tested viewpoint was indicated explicitly during the learning phase and when the study viewpoint was indicated explicitly during the test phase. We assume that either cue (the to-be-tested viewpoint indicated during learning or the study viewpoint indicated during test) can provide information about the spatial reference direction of the shape. The hypothesis of this project therefore predicts that recognition of a novel view caused by table rotation could be as accurate as, or even more accurate than, recognition of a novel view caused by observer locomotion if the spatial reference direction is identified equally or more accurately in the former condition. Six experiments in this project directly tested this prediction.

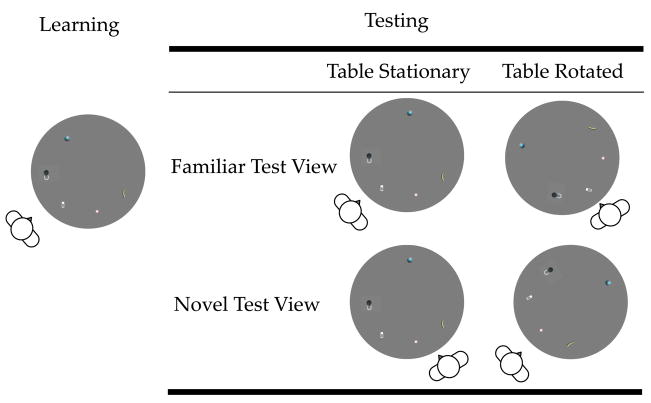

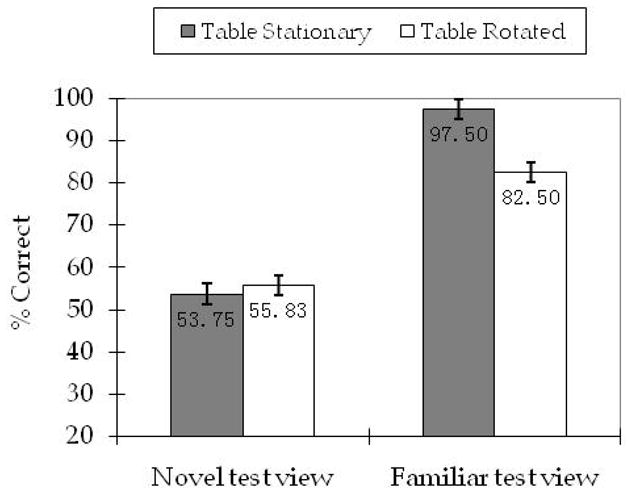

Experiments 1 to 4 demonstrated that novel-view scene recognition could be just as accurate when the novel view was caused by table rotation as when it was caused by observer locomotion. The general experimental paradigm was similar to that used by Simons and Wang (1998). Participants detected a position change after briefly viewing a desktop scene when they stayed stationary or locomoted and when the table stayed stationary or was rotated (Figure 1). However, we analyzed the data in the way introduced by Burgess, Spiers, and Paleologou (2004; see also Mou et al., 2004). Burgess et al. interpreted the findings of Simons and Wang in terms of two independent effects. The first is the viewpoint effect: better recognition of the study view than of a novel view (Familiar test view vs. Novel test view in Figure 1). The second is the spatial updating effect: better recognition of a view expected from the updated spatial representation (when the table is stationary) than of a view unexpected from it (when the table is rotated) (Table stationary vs. Table rotated in Figure 1). The locomotion information can be used to anticipate the self-to-reference-direction spatial relations in the table stationary condition but not in the table rotated condition.

Figure 1.

The experiment design in Experiments 1 to 4.
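The two-effect framework can be made concrete with a short Python sketch. It assumes that each effect is estimated as a difference of cell means in the 2 × 2 design of Figure 1: the spatial updating effect is table stationary minus table rotated (at each test view), and the viewpoint effect is familiar minus novel test view (at each table condition). The names `CellMeans` and `decompose` are hypothetical, and the inputs would be observed cell means, not values reported here.

```python
from dataclasses import dataclass


@dataclass
class CellMeans:
    """Mean proportion correct in each cell of the 2 x 2 design (hypothetical inputs)."""
    familiar_stationary: float  # observer stays, table stays: same view as study
    familiar_rotated: float     # observer walks, table rotated with the observer
    novel_stationary: float     # observer walks, table stays: novel view
    novel_rotated: float        # observer stays, table rotated: novel view


def decompose(m: CellMeans) -> dict[str, float]:
    """Express the four cell means as viewpoint and spatial updating effects."""
    # Spatial updating effect: table stationary minus table rotated, per test view.
    updating_familiar = m.familiar_stationary - m.familiar_rotated
    updating_novel = m.novel_stationary - m.novel_rotated
    # Viewpoint effect: familiar minus novel test view, per table condition.
    viewpoint_stationary = m.familiar_stationary - m.novel_stationary
    viewpoint_rotated = m.familiar_rotated - m.novel_rotated
    return {
        "updating_familiar": updating_familiar,
        "updating_novel": updating_novel,
        "updating_main": (updating_familiar + updating_novel) / 2,
        "viewpoint_main": (viewpoint_stationary + viewpoint_rotated) / 2,
        # A nonzero value means the updating effect differs between test views.
        "interaction": updating_familiar - updating_novel,
    }
```

In this framing, the "facilitative effect of locomotion" is `updating_novel` and the "interfering effect of locomotion" is `updating_familiar`; both feed the main effect of table movement.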

The comparison between the table stationary novel view condition and the table rotated familiar view condition was conducted by Wang and Simons (1999) and Burgess et al. (2004). As illustrated in Figure 1, this comparison reflects the competition between the viewpoint effect and the spatial updating effect as it contrasts conditions that differ on both independent variables. The table stationary novel view condition is superior in terms of the spatial updating effect whereas the table rotated familiar view condition is superior in terms of the viewpoint effect. For example, performance in the table stationary novel view condition could be better than in the table rotated familiar view condition if the spatial updating effect is larger than the viewpoint effect; on the other hand, relative performance in these two conditions could be reversed if the relative strengths of the spatial updating effect and the viewpoint effect were reversed. Extant experimental results and theories do not allow one to predict in advance the relative strengths of these effects. Both might be influenced by various factors including the complexity of layout, the visibility of the objects, the distance between the layout and the participants, and the walking distance during locomotion. Because of this ambiguity, we did not conduct the comparison between the table stationary novel view condition and the table rotated familiar view condition.
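The ambiguity of this diagonal comparison can be made explicit with a simple additive model. The model is an illustrative assumption introduced here and ignores any interaction between the two effects. Let μ be accuracy in the novel test view/table rotated cell, V the viewpoint effect, and U the spatial updating effect.

```latex
\begin{aligned}
P_{\text{familiar, stationary}} &= \mu + V + U, &\quad P_{\text{familiar, rotated}} &= \mu + V,\\
P_{\text{novel, stationary}} &= \mu + U, &\quad P_{\text{novel, rotated}} &= \mu,\\
P_{\text{novel, stationary}} - P_{\text{familiar, rotated}} &= (\mu + U) - (\mu + V) = U - V.
\end{aligned}
```

Under this model, the diagonal contrast estimates only U − V, so its sign reflects which effect is larger and cannot by itself separate the two.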

Experiment 1 replicated the experiment of Simons and Wang using a novel viewpoint 49° different from the learning view. Both viewpoint and spatial updating effects were observed. In particular, the spatial updating effect included a facilitative effect of locomotion on the novel-view scene recognition (table stationary better than table rotated for the novel test view in Figure 1) and an interfering effect of locomotion on the familiar-view scene recognition (table rotated worse than table stationary for the familiar test view in Figure 1).

In Experiment 2, during the learning phase, a chopstick was placed at the center of the table pointing to the to-be-tested viewpoint, and the chopstick was removed at test. The accuracy of novel-view scene recognition in the table rotation condition increased and did not differ from that in the table stationary condition, demonstrating that indicating the to-be-tested viewpoint to a stationary observer during the study phase had the same facilitative effect on position change detection at the novel 49° view as did the self-motion of a locomoting observer.

In Experiment 3, during the test phase (but not the study phase), a chopstick was placed at the center of the table pointing to the study viewpoint. The accuracy of novel-view scene recognition in the table rotation condition increased and did not differ from that in the table stationary condition, demonstrating that indicating the study viewpoint to a stationary observer during the test phase had the same facilitative effect on position change detection at the novel 49° view as did the self-motion of a locomoting observer.

In Experiment 4, the novel test view was 98° from the study view. The accuracy of novel-view scene recognition in the table stationary condition decreased and did not differ from that in the table rotation condition. This finding indicated that the facilitative effect of locomotion disappears when the error in identifying the spatial reference direction from self-motion information grows to be comparable to the error in identifying it from the interobject spatial relations in the test scene.

Experiment 5 demonstrated that performance at the novel 98° view was better in the table rotation condition than in the observer locomotion condition when the study viewpoint was indicated in the test scene in the former condition but not in the latter. Performance in detecting a position change at the novel 98° view was compared across three conditions: (a) the to-be-tested viewpoint was indicated during the study phase (but not the test phase) to a stationary participant; (b) the study viewpoint was indicated during the test phase (but not the study phase) to a stationary participant; and (c) neither the to-be-tested viewpoint nor the study viewpoint was indicated to a locomoting participant.

Experiment 6 tested the hypothesis that the facilitation produced by using a chopstick to indicate the to-be-tested or study viewpoint occurred because the chopstick was used as a reference object at test. The results showed that the facilitative effect of locomotion recurred if the chopstick was replaced by a spherical object.

Experiment 1

In Experiment 1, we replicated the experiments of Simons and Wang (1998). After viewing an array of five objects for three seconds, participants, while blindfolded, either walked to a new viewing position 49° from the learning position or stayed at the learning position. One object was moved to a new location after participants were blindfolded. Ten seconds after putting on the blindfold, participants were asked to remove it and indicate which object had been moved.

Method

Participants

Twenty-four university students (12 men and 12 women) participated in the study in return for monetary compensation.

Materials and Design

The experiment was conducted in a room (4.0 × 2.8 m) whose walls were covered with black curtains. The room contained a circular table (80 cm in diameter, 69 cm above the floor) covered by a grey mat, two chairs (seat height 42 cm), and five common objects, each approximately 5 cm in size and coated with phosphorescent paint (Figure 1). The objects were placed on five of nine possible positions in an irregular array on the circular table. The distance between any two adjacent positions varied from 18 to 29 cm. The irregularity of the array ensured that no more than two objects were aligned with the observer throughout the experiment. Each chair was 90 cm from the center of the table, and the viewing angle between the chairs was 49°. Participants wore a blindfold and a wireless earphone connected to a computer outside the curtain. The lights were always off during the experiment, and the experimenter used a flashlight when arranging the layout. Throughout the experiment, participants could see only the locations of the five objects. The earphone was used to present white noise and instructions (e.g., to remove the blindfold and view the layout, or to put the blindfold on).
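The chair geometry determines how far participants walked. The Python sketch below computes that distance, assuming both chairs lie on a circle of radius 90 cm centered on the table; whether participants walked a straight line (chord) or circled the table (arc) is not reported, so both are shown. The function names are hypothetical.

```python
import math


def chord_cm(radius_cm: float, angle_deg: float) -> float:
    """Straight-line distance between two chairs on a circle around the table."""
    return 2 * radius_cm * math.sin(math.radians(angle_deg) / 2)


def arc_cm(radius_cm: float, angle_deg: float) -> float:
    """Path length if the walker instead circled the table at a constant distance."""
    return radius_cm * math.radians(angle_deg)


if __name__ == "__main__":
    for angle in (49, 98):  # Experiments 1-3 and Experiment 4, respectively
        print(f"{angle} deg: chord {chord_cm(90, angle):.1f} cm, "
              f"arc {arc_cm(90, angle):.1f} cm")
```

With a 90 cm radius, the straight-line walk is roughly 75 cm for 49° and roughly 136 cm for 98°, so doubling the viewing angle somewhat less than doubles the straight-line distance.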

Forty irregular configurations of object locations were created. In each configuration, one of the five occupied locations was selected randomly as the location of the moved object, and that object was moved to one of the four unoccupied locations. The new location was usually the unoccupied location closest to the original location and was at a similar distance from the center of the table, so that distance from the center could not be used as a cue to determine whether an object had moved.
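The selection rule can be sketched in Python as follows. This is a hypothetical approximation, not the authors' procedure: the nine coordinates are invented (the actual layout is not reported), and "similar distance to the center" is operationalized as a tolerance `radial_tol_cm` chosen for illustration. The text says the nearest open location was used only "usually", which the fallback branch loosely reflects.

```python
import math
import random

Point = tuple[float, float]

# Hypothetical coordinates (cm, table center at the origin) for nine possible
# positions; the actual layout is not reported in the text.
POSITIONS: list[Point] = [
    (0, 0), (20, 5), (-18, 12), (8, -25), (-22, -15),
    (30, -10), (-5, 28), (15, 22), (-30, 2),
]


def choose_configuration(positions: list[Point], rng: random.Random,
                         n_objects: int = 5, radial_tol_cm: float = 5.0):
    """Return (occupied indices, moved index, new-location index).

    Hypothetical rule approximating the text: prefer unoccupied positions whose
    distance from the table center is within radial_tol_cm of the original, and
    among those pick the one closest to the original location; if none
    qualifies, fall back to the closest unoccupied position.
    """
    occupied = rng.sample(range(len(positions)), n_objects)
    moved = rng.choice(occupied)
    free = [i for i in range(len(positions)) if i not in occupied]
    origin = positions[moved]
    r0 = math.hypot(*origin)
    similar = [i for i in free
               if abs(math.hypot(*positions[i]) - r0) <= radial_tol_cm]
    candidates = similar or free
    target = min(candidates, key=lambda i: math.dist(positions[i], origin))
    return occupied, moved, target


if __name__ == "__main__":
    rng = random.Random(2024)
    configs = [choose_configuration(POSITIONS, rng) for _ in range(40)]
    print(configs[0])
```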

Participants were randomly assigned to the familiar test view and novel test view conditions, with the restriction that equal numbers of men and women were in each condition. Forty trials were created for each participant by presenting the 40 configurations in a random order and dividing them into 8 blocks of 5 configurations. Four blocks were assigned to the table stationary condition (participants in the familiar test view group stayed stationary; participants in the novel test view group walked to the new test position). Four blocks were assigned to the table rotated condition (participants in the familiar test view group walked to the new test position; participants in the novel test view group stayed stationary). Table stationary and table rotated blocks were presented alternately. In both the familiar and novel test view groups, half of the male and half of the female participants received a table stationary block first. At the beginning of each block, participants were informed of the block's condition (table stationary or table rotated).
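The block structure can be sketched in Python. The function names are hypothetical, and the sketch assumes a fresh random order for each participant, as the text describes. The `stationary_first` flag would be set to true for half of the men and half of the women in each test view group; that assignment step is not modeled here.

```python
import random


def make_blocks(n_configs: int, stationary_first: bool, rng: random.Random,
                block_size: int = 5) -> list[tuple[str, list[int]]]:
    """Shuffle configuration indices, cut them into blocks, alternate conditions."""
    order = list(range(n_configs))
    rng.shuffle(order)
    blocks = [order[i:i + block_size] for i in range(0, n_configs, block_size)]
    first, second = ("stationary", "rotated") if stationary_first else ("rotated", "stationary")
    return [(first if k % 2 == 0 else second, block) for k, block in enumerate(blocks)]


def observer_walks(test_view: str, table: str) -> bool:
    """Familiar group walks only in rotated blocks; novel group only in stationary blocks."""
    return (test_view == "novel") == (table == "stationary")


if __name__ == "__main__":
    schedule = make_blocks(40, stationary_first=True, rng=random.Random(7))
    for table, block in schedule:
        print(table, observer_walks("novel", table), block)
```

`observer_walks` encodes the crossing described above: whether the observer moves depends jointly on the test view group and the table condition of the block.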

The primary independent variables were test view (familiar test view vs. novel test view) and table movement (table stationary vs. table rotated). Locomotion information can be used to anticipate the spatial reference direction in the table stationary condition but not in the table rotated condition. Table movement was manipulated within participants, and test view was manipulated between participants.

The dependent variable was the percentage of correct judgments of which object had changed position.

Procedure

Wearing a blindfold, participants walked into the testing room and, assisted by the experimenter, sat on the viewing chair. Each trial was initiated by a key press of the experimenter and started with a verbal instruction via the earphone (“please remove the blindfold, and try to remember the locations of the objects you are going to see.”). After three seconds, participants were instructed either to walk, while blindfolded, to the new viewing position (“please wear the blindfold, walk to the other chair”) or to remain stationary at the learning position (“please wear the blindfold”). Ten seconds after participants were instructed to stop viewing the layout, they were instructed to determine which object had been moved (“please remove the blindfold and make judgment”). Participants were instructed to respond as accurately as possible; speeded responding was discouraged. After the response, the trial was ended by a key press of the experimenter, and participants were instructed to be ready for the next trial (“please wear the blindfold and sit on the original viewing chair”). All of these instructions were prerecorded, and their presentation was sequenced by the computer to which the earphone was connected.
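The timed part of a trial can be summarized as a schedule of prerecorded instructions. The Python sketch below is illustrative: the 3 s and 10 s intervals and the instruction wording come from the procedure above, while the names and the choice to measure both intervals from instruction onset are assumptions. Audio playback and key-press handling are omitted.

```python
STUDY_S = 3.0       # viewing time before the blindfold instruction
RETENTION_S = 10.0  # delay from "stop viewing" to the test instruction


def trial_events(walk: bool) -> list[tuple[float, str]]:
    """Timed instructions for one trial as (onset in s from trial start, text).

    Assumes both delays are measured from instruction onset. The response is
    self-paced, and the closing instruction is triggered by the experimenter's
    key press, so it is not part of this timed list.
    """
    cover = ("please wear the blindfold, walk to the other chair" if walk
             else "please wear the blindfold")
    return [
        (0.0, "please remove the blindfold, and try to remember the locations "
              "of the objects you are going to see."),
        (STUDY_S, cover),
        (STUDY_S + RETENTION_S, "please remove the blindfold and make judgment"),
    ]


END_OF_TRIAL = "please wear the blindfold and sit on the original viewing chair."

if __name__ == "__main__":
    for onset, text in trial_events(walk=True):
        print(f"{onset:5.1f} s  {text}")
```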

Before the 40 experimental trials, participants practiced walking to the other chair while blindfolded until they could do so, and then completed 8 practice trials (4 for each table condition) to become familiar with the procedure.

Results

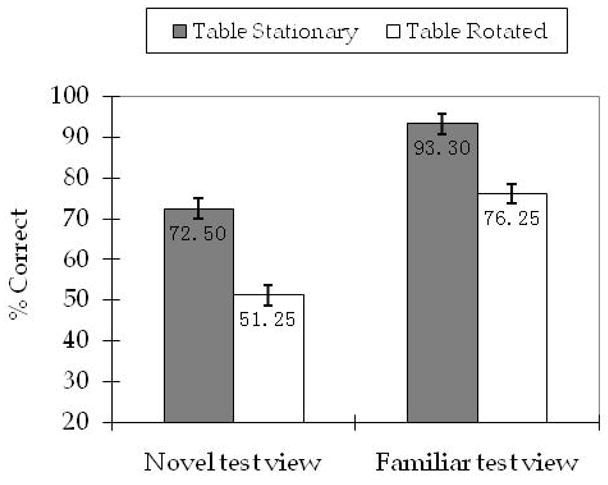

Mean percentage of correct judgments as a function of test view and table movement is plotted in Figure 2. Percentage of correct judgments was computed for each participant in each table condition and analyzed in a mixed-design analysis of variance (ANOVA) with table movement (within participants) and test view (between participants) as factors.

Figure 2.

Percentage correct in detecting position change as a function of table movement and test view in Experiment 1. (Error bars are confidence intervals corresponding to ±1 standard error of the mean, as estimated from the analysis of variance.)

The main effect of table movement was significant, F(1, 22) = 60.07, p < .001, MSE = .007. The main effect of test view was significant, F(1, 22) = 49.7, p < .001, MSE = .013. The interaction between table movement and test view was not reliable, F(1, 22) = 0.71, p = .41. Planned comparisons showed that participants in the familiar test view group were more accurate in the table stationary condition than in the table rotated condition, t(22) = 4.99, p < .001, and participants in the novel test view group were also more accurate in the table stationary condition than in the table rotated condition, t(22) = 6.22, p < .001. Planned comparisons also showed that participants in the familiar test view group were more accurate than participants in the novel test view group when the table was stationary for both groups, t(22) = 6.53, p < .001.
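A balanced 2 × 2 mixed ANOVA of this kind can be computed from sums of squares without a statistics package. The Python sketch below is an illustration, not the authors' analysis code. It assumes equal group sizes (12 per group here), uses the fact that with only two within-participant levels the within-participant effects can be computed from difference scores, and assumes that the planned comparisons of table movement within each group used the pooled within-participant error term. That last assumption is consistent with the reported df of 22 but is not stated in the text. The between-group comparison in the table stationary condition is not computed. p-values could be obtained from the F distribution, for example with `scipy.stats.f.sf(F, 1, df_error)`.

```python
from statistics import mean


def mixed_anova_2x2(groups: dict[str, list[tuple[float, float]]]) -> dict:
    """Balanced 2 (between: group) x 2 (within: table) mixed ANOVA.

    groups maps each test-view group to per-participant
    (table stationary, table rotated) proportions correct.
    Returns F ratios (all effects have df = 1), error mean squares, and
    planned-comparison t values; p-values are not computed.
    """
    names = list(groups)
    n = len(groups[names[0]])
    if len(names) != 2 or any(len(v) != n for v in groups.values()):
        raise ValueError("expected two groups of equal size")
    df_err = 2 * n - 2  # N participants minus 2 groups

    # Within-participant factor: difference scores d = stationary - rotated.
    d = {g: [s - r for s, r in groups[g]] for g in names}
    d_bar = {g: mean(d[g]) for g in names}
    d_grand = mean(list(d_bar.values()))
    mse_w = sum((x - d_bar[g]) ** 2 for g in names for x in d[g]) / 2 / df_err
    ss_table = n * d_grand ** 2  # equals N * d_grand**2 / 2 with N = 2n
    ss_inter = n * sum((d_bar[g] - d_grand) ** 2 for g in names) / 2

    # Between-participant factor: each participant's mean over the two conditions.
    m = {g: [(s + r) / 2 for s, r in groups[g]] for g in names}
    m_bar = {g: mean(m[g]) for g in names}
    m_grand = mean(list(m_bar.values()))
    mse_b = 2 * sum((x - m_bar[g]) ** 2 for g in names for x in m[g]) / df_err
    ss_group = 2 * n * sum((m_bar[g] - m_grand) ** 2 for g in names)

    return {
        "df_error": df_err,
        "F_table": ss_table / mse_w,
        "F_group": ss_group / mse_b,
        "F_interaction": ss_inter / mse_w,
        "MSE_within": mse_w,
        "MSE_between": mse_b,
        # Simple effect of table movement in each group, pooled error term.
        "t_table": {g: d_bar[g] / (2 * mse_w / n) ** 0.5 for g in names},
    }
```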

Discussion

In Experiment 1, participants were more accurate when the table was stationary than when it was rotated between study and test. More specifically, locomotion facilitated performance at the novel test view but impaired performance at the original study view. The facilitative effect of locomotion on novel-view scene recognition replicated the key finding of Simons and Wang (1998). The interference of locomotion with familiar-view scene recognition suggested that spatial updating during locomotion could not be ignored (e.g., Farrell & Robertson, 1998). In addition, when the table was stationary, participants in the familiar test view group were more accurate than participants in the novel test view group, suggesting that scene recognition was viewpoint dependent (e.g., Christou & Bülthoff, 1999; Diwadkar & McNamara, 1997) and that viewpoint dependency was not eliminated by the facilitative effect of locomotion at the novel test view (e.g., Burgess et al., 2004; Mou et al., 2004).

Experiment 2

In Experiment 2, a short chopstick coated with phosphorescent paint was placed at the center of the table at an angle of 49° counterclockwise from the study viewpoint (Figure 3) for the novel test view group and at 0° from the study viewpoint for the familiar test view group, so that the chopstick pointed to the test viewpoint for both groups. We investigated whether change detection at a novel view caused by table rotation was as accurate as change detection at a novel view caused by observer locomotion.

Figure 3.

The experiment setup in Experiment 2.

Method

Participants

Twenty-four university students (12 men and 12 women) participated in this study in return for monetary compensation.

Materials, Design, and Procedure

The materials, design, and procedure were similar to those used in Experiment 1 except for the following modifications: (a) A chopstick (7 cm long) coated with phosphorescent paint was placed at the center of the table pointing to the to-be-tested viewpoint during the learning phase but was not shown during the test phase. Specifically, the chopstick pointed to the study viewpoint for the familiar test view group and to the novel test viewpoint for the novel test view group. (b) Participants were explicitly instructed to use the chopstick to anticipate the test viewpoint. Participants in the familiar test view group were instructed that “A chopstick will be placed on the table pointing to you when you learn the layout; when you stay stationary the table will stay stationary so that the chopstick will always point to you; when you locomote to the test position the table will be rotated accordingly so that the chopstick will point to you when you stop.” Participants in the novel test view group were instructed that “A chopstick will be placed on the table pointing to the test position when you learn the layout; when you stay stationary, the table will be rotated until the chopstick points to you; when you walk to the test position the table will stay stationary so that the chopstick will point to you after you stop.” In fact, the chopstick was presented only at study and was removed at test.
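The instructions imply that, in every condition, the chopstick seen at study points to where the observer will be at test, and that the two novel-view conditions present the same 49° view of the layout. Both properties can be checked with a small Python sketch. Angles are in degrees around the table center, measured from the study chair; the counterclockwise sign convention, the names, and the representation of table rotation as an added angle are assumptions made for illustration.

```python
WALK_DEG = 49.0  # test chair relative to study chair; counterclockwise positive (assumed)

# (group, table condition) -> (chopstick direction at study,
#                               table rotation, observer direction at test)
CONDITIONS = {
    ("familiar", "stationary"): (0.0, 0.0, 0.0),             # stays, table stays
    ("familiar", "rotated"): (0.0, WALK_DEG, WALK_DEG),      # walks, table follows
    ("novel", "stationary"): (WALK_DEG, 0.0, WALK_DEG),      # walks, table stays
    ("novel", "rotated"): (WALK_DEG, -WALK_DEG, 0.0),        # stays, table turns
}


def chopstick_at_test(stick_deg: float, rotation_deg: float) -> float:
    """Direction the chopstick would point after the table rotation (mod 360)."""
    return (stick_deg + rotation_deg) % 360


def view_of_layout(observer_deg: float, rotation_deg: float) -> float:
    """Observer direction relative to the layout; 0 means the study view."""
    return (observer_deg - rotation_deg) % 360


if __name__ == "__main__":
    for (group, table), (stick, rot, obs) in CONDITIONS.items():
        aimed = chopstick_at_test(stick, rot) == obs % 360
        print(group, table, "points at observer:", aimed,
              "view:", view_of_layout(obs, rot))
```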

Results

Mean percentage of correct judgments as a function of test view and table movement is plotted in Figure 4. Percentage of correct judgments was computed for each participant in each table condition and analyzed in a mixed-design analysis of variance (ANOVA) with table movement (within participants) and test view (between participants) as factors.

Figure 4.

Percentage correct in detecting position change as a function of table movement and test view in Experiment 2.

The main effect of test view was significant, F(1, 22) = 16.45, p < .001, MSE = .022. The main effect of table movement was significant, F(1, 22) = 9.98, p = .005, MSE = .008. The interaction between table movement and test view was not reliable, F(1, 22) = 3.47, p = .076. However, planned comparisons showed that participants in the familiar test view group were more accurate in the table stationary condition than in the table rotated condition, t(22) = 3.56, p = .002, whereas participants in the novel test view group were not more accurate in the table stationary condition than in the table rotated condition, t(22) = 1.10, p = .283. Planned comparisons also showed that participants in the familiar test view group were more accurate than participants in the novel test view group when the table was stationary for both groups, t(22) = 5.08, p < .001.

Discussion

In Experiment 2, participants in the novel test view group were not more accurate in change detection when the table was stationary than when it was rotated. In other words, indicating the to-be-tested viewpoint with a chopstick on the table at study facilitated novel-view change detection to the same degree as did locomotion. We assumed that the spatial reference direction identified from the to-be-tested viewpoint was as accurate as the spatial reference direction identified from locomotion information. This result provides the first demonstration in this project that novel-view scene recognition could be as accurate when the novel view was caused by table rotation as when it was caused by observer locomotion if the spatial reference direction is identified equally accurately in these two conditions. As in Experiment 1, both the interference of locomotion with familiar-view scene recognition and viewpoint-dependent scene recognition in the table stationary condition were observed in this experiment.

Experiment 3

In Experiment 3, a short chopstick coated with phosphorescent paint was placed at the center of the table pointing to the study viewpoint during the testing phase. We investigated whether performance in position change detection at a novel view was no less accurate when the novel view was caused by table rotation than when the novel view was caused by observer locomotion.

Method

Participants

Twenty-four university students (12 men and 12 women) participated in this study in return for monetary compensation.

Materials, Design, and Procedure

The materials, design, and procedure were similar to those used in Experiment 1 except for the following modifications: (a) A chopstick (7 cm long) coated with phosphorescent paint was placed at the center of the table pointing to the study viewpoint during the test phase. (b) Participants were explicitly instructed to use the chopstick to infer their study viewpoint (“A chopstick will be added to the test scene pointing to your original study viewpoint when you make your judgment”). The chopstick was not presented during the study phase.

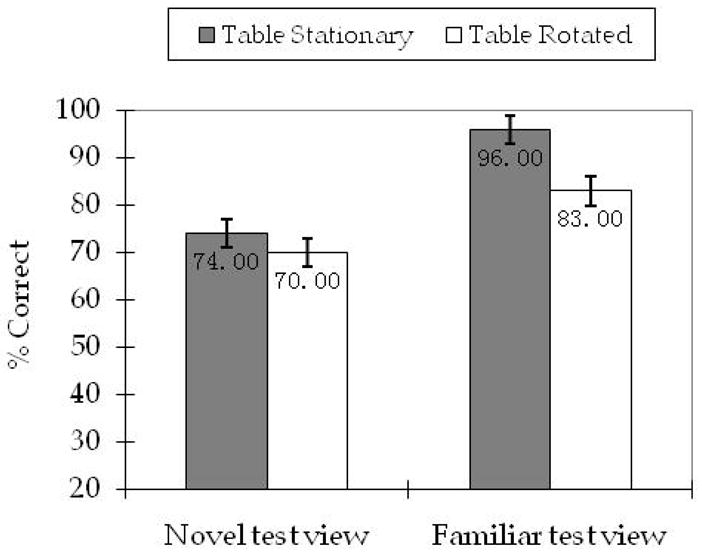

Results

Mean percentage of correct judgments as a function of test view and table movement is plotted in Figure 5. Percentage of correct judgments was computed for each participant in each table condition and analyzed in a mixed-design analysis of variance (ANOVA) with table movement (within participants) and test view (between participants) as factors.

Figure 5.

Percentage correct in detecting position change as a function of table movement and test view in Experiment 3.

The main effect of test view was significant, F(1, 22) = 4.98, p = .036, MSE = .024. The main effect of table movement was significant, F(1, 22) = 7.65, p = .011, MSE = .007. The interaction between table movement and test view was significant, F(1, 22) = 5.86, p = .024. Planned comparisons showed that participants in the familiar test view group were more accurate in the table stationary condition than in the table rotated condition, t(22) = 3.66, p = .001; participants in the novel test view group were not more accurate in the table stationary condition than in the table rotated condition, t(22) = 0.26, p = .797. Planned comparisons also showed that participants in the familiar test view group were more accurate than participants in the novel test view group when the table was stationary for both groups, t(22) = 3.04, p = .006.

Discussion

In Experiment 3, participants in the novel test view group were not more accurate in change detection when the table was stationary than when it was rotated. In other words, indicating the study view with a chopstick on the table at test facilitated change detection at least as well as did locomotion. We assume that the spatial reference direction is established parallel to the study viewing direction (e.g., Shelton & McNamara, 2001), so a chopstick pointing to the study viewpoint also indicates the spatial reference direction in the test scene. The results of Experiment 3 provide another demonstration that novel-view scene recognition could be as accurate when the novel view was caused by table rotation as when it was caused by observer locomotion if the spatial reference direction is identified equally accurately in these two conditions. As in the previous experiments, both the interference of locomotion with familiar-view scene recognition and viewpoint-dependent scene recognition in the table stationary condition were observed in this experiment.

Experiment 4

In Experiment 4, the angular distance between the study view and the novel test view was 98° instead of the 49° used in Experiment 1. Farrell and Robertson (1998, p. 229) reported a linear increase in errors when pointing to objects as a function of rotation magnitude in the updating condition, indicating that errors in updating one's position and orientation accumulate over greater distances. Accordingly, we assumed that the inaccuracy in updating the self with respect to the spatial reference direction of the scene increased with locomotion distance. Hence the inaccuracy in identifying the spatial reference direction might be as high when people used locomotion cues as when they used only interobject spatial relations. We investigated whether change detection at a novel view caused by table rotation was no less accurate than change detection at a novel view caused by observer locomotion when the angular distance between the novel view and the study view was doubled.
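The reasoning can be summarized with two assumptions introduced here for illustration; neither is estimated in this project. First, following the linear trend reported by Farrell and Robertson (1998), the spread of the error in updating one's heading relative to the spatial reference direction, σ_loc, grows in proportion to the angular change θ with an unknown constant k. Second, σ_obj(θ) denotes the error in identifying the reference direction from interobject spatial relations alone, left unspecified.

```latex
\begin{aligned}
\sigma_{\text{loc}}(\theta) &= k\,\theta
  \quad\Rightarrow\quad \sigma_{\text{loc}}(98^\circ) = 2\,\sigma_{\text{loc}}(49^\circ),\\
\text{locomotion advantage expected when}\quad
  \sigma_{\text{loc}}(\theta) &< \sigma_{\text{obj}}(\theta).
\end{aligned}
```

On this reading, the 49° advantage in Experiment 1 corresponds to the inequality holding, and Experiment 4 asks whether doubling σ_loc is enough to remove it.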

Method

Participants

Twenty-four university students (12 men and 12 women) participated in this study in return for monetary compensation.

Materials, Design, and Procedure

The materials, design, and procedure were similar to those used in Experiment 1 except that the angular distance between the study view and the novel view was 98°.

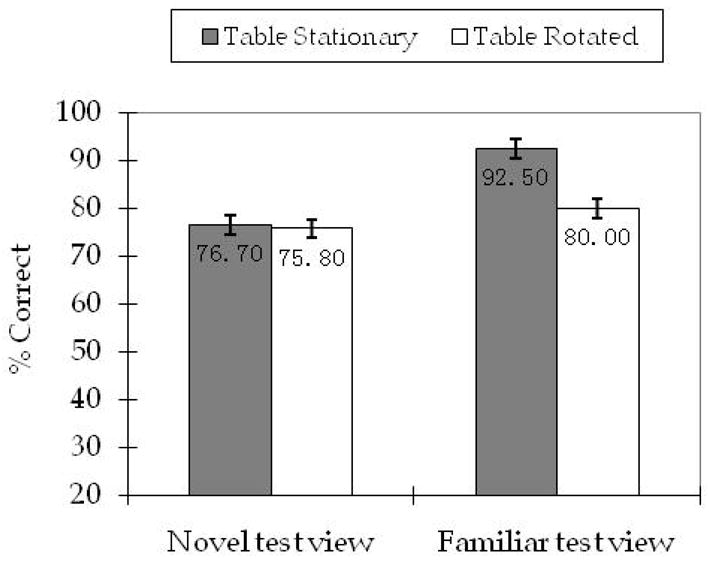

Results

Mean percentage of correct judgments as a function of test view and table movement is plotted in Figure 6. Percentage of correct judgments was computed for each participant and each table condition and analyzed in a mixed analysis of variance (ANOVA) with table movement and test view as variables. Table movement was within participants; test view was between participants.

Figure 6.

Correct percentage in detecting position change as a function of table movement and test view in Experiment 4.

The main effect of test view was significant, F(1, 22) = 87.59, p < .001, MSE = .017. The main effect of table movement was significant, F(1, 22) = 7.29, p = .013, MSE = .007. The interaction between the two variables was significant, F(1, 22) = 12.74, p = .002. Planned comparisons showed that participants in the familiar test view group were more accurate in the table stationary condition than in the table rotated condition, t(22) = 4.39, p < .001; participants in the novel test view group were not more accurate in the table stationary condition than in the table rotated condition, t(22) = −0.61, p = .548. Planned comparisons also showed that participants in the familiar test view group were more accurate than participants in the novel test view group when the table was stationary for both groups, t(22) = 11.15, p < .001.

Discussion

In Experiment 4, participants in the novel test view group were not more accurate when the table was stationary than when the table was rotated, suggesting that the facilitative effect of locomotion is limited to a small range of walking distances (e.g., 49°). This is consistent with our assumption, based on the linear increase in pointing errors with rotation magnitude reported by Farrell and Robertson (1998), that the inaccuracy in updating the self with respect to the spatial reference direction of the scene increases with locomotion distance. These results provide yet another demonstration that novel-view scene recognition can be as accurate when the novel view is caused by table rotation as when it is caused by observer locomotion if the spatial reference direction is identified equally accurately in the two conditions. As in the previous experiments, both the interference of locomotion with familiar-view scene recognition and viewpoint-dependent scene recognition in the table stationary condition were observed in this experiment.

Experiment 5

In Experiments 2 and 3, participants were informed of the to-be-tested viewpoint or the study viewpoint in both the table rotation and table stationary conditions. Participants might therefore have been able to use the chopstick cue even when the novel view was caused by their own locomotion. Consequently, we were not able to examine the relative facilitative effects of knowledge about the to-be-tested viewpoint, knowledge about the study viewpoint, and locomotion information on change detection. In Experiment 5, we addressed this issue by not informing participants about the to-be-tested or study viewpoints when they locomoted. In particular, we tested whether stationary participants who were shown the direction of the study view with a chopstick at a novel test view would be more accurate in position change detection than participants who received no chopstick cue but locomoted to the novel test view of 98°. Because this experiment relied on comparisons between participants, the sample size was increased considerably.

Method

Participants

One hundred thirty-two university students (66 men and 66 women) participated in this study in return for monetary compensation.

Materials, Design, and Procedure

The materials, design, and procedure were similar to those used in the previous experiments.

The familiar test view was not included in this experiment. All 40 configurations from the previous experiments were used, and the novel test view differed from the study view by 98°. There were three conditions for indicating the viewpoint change between the study view and the novel test view. In the first condition, the table was rotated, participants stayed stationary, and a chopstick was placed at the center of the table pointing to the test view during the learning phase, as in Experiment 2. In the second condition, the table was rotated, participants stayed stationary, and a chopstick was placed at the center of the table pointing to the study view during the test phase, as in Experiment 3. In the third condition, the table stayed stationary, participants moved to the novel view, and no chopstick was presented at either the study or the test view, as in Experiment 4.

Forty-four participants were randomly assigned to each of the three viewpoint-change conditions, with the restriction that each condition had equal numbers of men and women.

Results

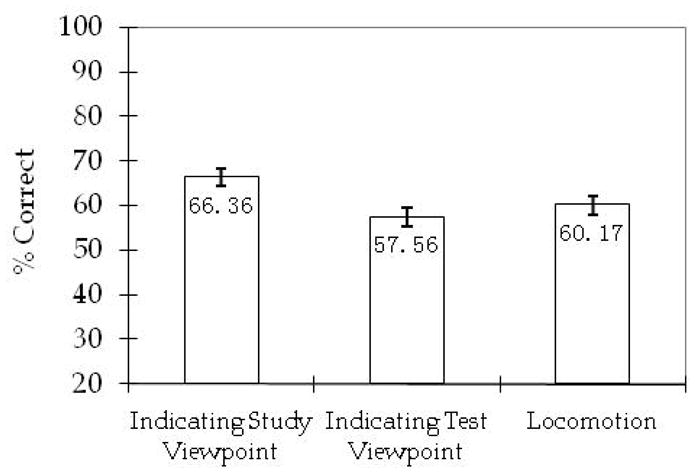

Mean percentage of correct judgments as a function of the indication of viewpoint change is presented in Figure 7. Percentage of correct judgments was computed for each participant and analyzed in a one-way analysis of variance (ANOVA) with indication of viewpoint change as a between-participants variable.

Figure 7.

Correct percentage in detecting position change as a function of the way of indicating the spatial reference direction in Experiment 5.

The main effect of indication of viewpoint change was significant, F(2, 129) = 5.04, p = .008, MSE = .018. Further comparisons showed that participants in the condition in which the study viewpoint was indicated were more accurate both than participants in the condition in which the test viewpoint was indicated, t(129) = 3.09, p = .003, and than participants in the locomotion condition without a chopstick, t(129) = 2.17, p = .032; the latter two conditions did not differ significantly, t(129) = 0.92, p = .359.
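As a quick arithmetic check on the reported degrees of freedom, the sketch below derives them from the group sizes given in the Method (three conditions of 44 participants each). It assumes a standard one-way between-participants ANOVA, for which the between-groups df is k − 1 and the within-groups df is N − k; the function name is illustrative only. The t(129) values for the pairwise comparisons carry the same df as the within-groups term, which is consistent with comparisons that use the pooled error term.

```python
def one_way_between_df(group_sizes):
    """Return (df_between, df_within) for a one-way between-participants ANOVA."""
    k = len(group_sizes)        # number of groups (conditions)
    n_total = sum(group_sizes)  # total number of participants
    return k - 1, n_total - k


# Experiment 5: three conditions with 44 participants each (132 in total).
print(one_way_between_df([44, 44, 44]))  # (2, 129)
```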

Discussion

In Experiment 5, scene recognition at the novel 98° test view was better when the novel view was caused by table rotation and the study viewpoint was indicated in the test scene than when the novel view was caused by observer locomotion and the study viewpoint was not indicated. This result verifies that performance in position change detection can be better when the novel view is caused by table rotation than when it is caused by observer locomotion if the spatial reference direction is identified more accurately in the former condition than in the latter (e.g., Farrell & Robertson, 1998).

Experiment 6

In the previous experiments, a chopstick was placed either only at study (Experiment 2 and the condition indicating the test viewpoint in Experiment 5) or only at test (Experiment 3 and the condition indicating the study viewpoint in Experiment 5). These manipulations were included to reduce the likelihood that the chopstick would influence scene recognition as a reference object. When a chopstick was presented only at study, other objects might be coded with respect to the chopstick at study, but that information could not be used at test because the chopstick was absent at test. When a chopstick was presented only at test, other objects could not be coded with respect to it because it was absent at study. Hence the facilitation produced by the chopstick could not be attributed to a representation of interobject spatial relations between other objects and the chopstick. It is possible, however, that a chopstick presented at study in Experiment 2 and in the condition indicating the test viewpoint in Experiment 5 might have improved change detection in the table rotation condition by improving the configural binding of the object positions on the tabletop during study, providing an extra referent with which to associate them. Experiment 6 was designed to test this possibility.

Experiment 6 was similar to Experiment 2 with two modifications. First, a spherical object (a hat) instead of the chopstick was placed at the center of the table (Figure 8) at study and removed at test. Second, only the conditions testing novel views were included, as the familiar views were not relevant to this issue. If the chopstick in Experiment 2 and in the test view condition of Experiment 5 facilitated novel-view recognition because it provided an extra referent with which to associate objects, the spherical object should have the same effect; hence change detection would not be better in the table stationary condition than in the table rotated condition. If the chopstick facilitated novel-view recognition only because it indicated a reference direction, the spherical object should have no such effect; hence change detection would be better in the table stationary condition than in the table rotated condition, and the difference should be comparable to that between the two novel-view conditions in Experiment 1.

Figure 8.

The experiment setup in Experiment 6.

Method

Participants

Twelve graduate students (6 men and 6 women) participated in this study in return for monetary compensation.

Materials, Design, and Procedure

The materials, design, and procedure were similar to those used in Experiment 2, with the following modifications: (a) A spherical object (a small hat) instead of the chopstick was placed at the center of the table at study and removed at test (as shown in Figure 8). (b) Only the conditions testing novel views were included. Participants were instructed that “A hat will be put at the center of the table when you study the layout. It will be removed when you make the judgment.”

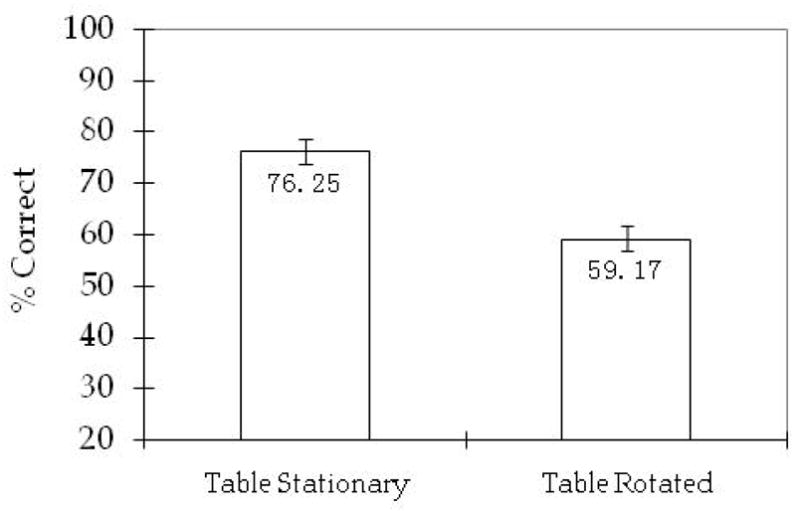

Results

Mean percentage of correct judgments as a function of table movement is plotted in Figure 9. Percentage of correct judgments was computed for each participant and table condition and analyzed in a one-way repeated-measures analysis of variance (ANOVA) with table movement (table stationary vs. table rotated) as a within-participants variable. The main effect of table movement was significant, F(1, 11) = 11.42, p = .006, MSE = .015.
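Because table movement has only two levels here, this one-way repeated-measures ANOVA is equivalent to a paired-samples t test: with 12 participants the effect has df = (1, 11), and F equals the square of the paired t, so the reported F(1, 11) = 11.42 corresponds to |t(11)| ≈ 3.38. The Python sketch below illustrates that identity. The two lists of per-participant proportions are hypothetical placeholders, not data from this experiment, and the function names are our own.

```python
import math


def implied_t_from_f(f_value):
    """For an effect with 1 numerator df, F = t**2, so |t| = sqrt(F)."""
    return math.sqrt(f_value)


def paired_t(x, y):
    """Paired-samples t statistic and its df for two within-participant conditions."""
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    mean_d = sum(diffs) / n
    var_d = sum((d - mean_d) ** 2 for d in diffs) / (n - 1)  # sample variance
    return mean_d / math.sqrt(var_d / n), n - 1


def rm_anova_two_levels(x, y):
    """One-way repeated-measures ANOVA F (and its df) for two conditions."""
    n, k = len(x), 2
    grand = (sum(x) + sum(y)) / (n * k)
    cond_means = [sum(x) / n, sum(y) / n]
    subj_means = [(a + b) / 2 for a, b in zip(x, y)]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((v - grand) ** 2 for v in x + y)
    ss_error = ss_total - ss_cond - ss_subj  # condition-by-participant residual
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    return (ss_cond / df_cond) / (ss_error / df_error), (df_cond, df_error)


# Hypothetical proportions correct for 12 participants (illustration only).
stationary = [0.80, 0.75, 0.70, 0.85, 0.65, 0.75, 0.80, 0.70, 0.90, 0.60, 0.75, 0.80]
rotated = [0.60, 0.55, 0.65, 0.60, 0.50, 0.65, 0.55, 0.60, 0.70, 0.55, 0.50, 0.65]

f_value, (df1, df2) = rm_anova_two_levels(stationary, rotated)
t_value, df_t = paired_t(stationary, rotated)
print(df1, df2, df_t)                       # 1 11 11
print(math.isclose(f_value, t_value ** 2))  # True
print(round(implied_t_from_f(11.42), 2))    # 3.38
```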

Figure 9.

Correct percentage in detecting position change at a novel view as a function of table movement in Experiment 6.

Discussion

In Experiment 6, novel-view change detection was more accurate when the table was stationary than when the table was rotated, replicating the facilitative effect of locomotion on novel-view recognition found in Experiment 1. Furthermore, the facilitative effect of locomotion was comparable in Experiment 6 (17%) and Experiment 1 (22%). Hence this result confirmed that the facilitation produced by the chopstick in the previous experiments occurred because it indicated a spatial reference direction rather than because it was used as a landmark.

General Discussion

The goal of this project was to investigate whether observer locomotion, compared with table rotation, provides unique information facilitating novel-view scene recognition. The findings of the experiments lead to a negative answer. Novel-view scene recognition was as accurate in the table rotation condition as in the observer locomotion condition when the to-be-tested viewpoint was indicated during the study phase, when the study viewing direction was indicated during the test phase, and when the novel test view was 98°. Novel-view scene recognition was even more accurate in the table rotation condition than in the observer locomotion condition when the study viewing direction was indicated during the test phase in the table rotation condition but not in the observer locomotion condition. These findings demonstrate that position change detection at a novel view can be no less accurate, or even more accurate, when the novel view is caused by table rotation than when it is caused by observer locomotion.

All of these striking findings can be explained by an elaboration of the model of spatial memory and navigation proposed by Mou, McNamara, and their colleagues (Mou et al., 2004; Mou, Fan, McNamara, & Owen, 2008). In their model, people represent interobject spatial relations and their own position in terms of spatial reference directions² (e.g., Mou & McNamara, 2002). When people navigate in the environment, they update their orientation with respect to the same spatial reference directions. In scene recognition, people need to align the spatial reference direction identified in the test scene with the intrinsic reference direction represented in memory and then compare the interobject spatial relations in the test scene with the interobject spatial relations represented in memory. Hence, the more accurately people are able to identify the spatial reference direction in the test scene, the more accurately they can detect the position change.

When people are informed of the to-be-tested viewpoint with the chopstick, they also represent the to-be-tested viewpoint with respect to the spatial reference direction that is established to represent the objects’ locations. Hence they can infer the spatial reference direction when they are tested at the test viewpoint. In the absence of other salient cues (e.g., layout geometry, environmental cues), people represent interobject spatial relations in terms of a spatial reference direction established parallel to their study viewing direction. Hence indicating the study viewing direction in the test scene can facilitate the identification of the spatial reference direction, which in turn facilitates scene recognition. Because people update their orientation in terms of the same spatial reference direction when they locomote, locomotion can also facilitate the identification of the spatial reference direction and in turn facilitate scene recognition. However, the facilitative effect of locomotion will decrease and eventually vanish as the locomotion distance increases, because the inaccuracy of updating with respect to the spatial reference direction increases with locomotion distance (e.g., Farrell & Robertson, 1998).

Even if directional cues, such as the chopstick, and feedback from locomotion are not available, people can identify the spatial reference direction using only the interobject spatial relations, because interobject spatial relations are represented with respect to the spatial reference direction. The lowest level of accuracy in detecting position change at a novel view should therefore be significantly higher than chance. In the present experiments, the lowest levels of accuracy of novel-view change detection in the table rotated condition were 51%, 56%, and 59% in Experiments 1, 4, and 6, respectively, in contrast with the chance level of 25%.

Locomotion provided an additional source of information for identifying the intrinsic reference direction, improving performance even more. The accuracy of novel-view scene recognition in the table stationary condition was 73% in Experiment 1, yielding a facilitative effect of locomotion of 22% (73% - 51%), and 76% in Experiment 6, yielding a facilitative effect of locomotion of 17% (76% - 59%).
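The facilitative effect of locomotion reported above is simply the difference, in percentage points, between novel-view accuracy in the table stationary (observer locomotion) condition and in the table rotated condition. The minimal Python sketch below reproduces that arithmetic from the means given in the text; the dictionary layout and the function name are illustrative assumptions, not part of the original analysis.

```python
# Mean percentage correct at the novel view, as reported in the text.
novel_view_accuracy = {
    "Experiment 1": {"table_stationary": 73, "table_rotated": 51},
    "Experiment 6": {"table_stationary": 76, "table_rotated": 59},
}


def locomotion_facilitation(acc):
    """Facilitative effect of locomotion in percentage points: stationary minus rotated."""
    return acc["table_stationary"] - acc["table_rotated"]


for experiment, acc in novel_view_accuracy.items():
    print(experiment, locomotion_facilitation(acc))
# Experiment 1 22
# Experiment 6 17
```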

Indicating the to-be-tested viewpoint provided yet another cue for identifying the intrinsic reference direction. The accuracy of novel-view scene recognition in the table rotated condition increased to 70% in Experiment 2, such that the facilitation from knowing the to-be-tested view was equivalent to that from locomotion. Indicating the study viewpoint provided the spatial reference direction directly, because in the absence of other cues spatial reference directions are established parallel to the study viewpoint. The accuracy of novel-view scene recognition in the table rotated condition was 76% in Experiment 3, so the facilitation from knowing the study viewpoint was at least as large as that from locomotion.

When locomotion distance increased to 98°, the facilitative effect of locomotion was eliminated, and the accuracy of novel-view scene recognition in the table stationary condition dropped to 54% in Experiment 4. Similarly, the facilitation from knowing the to-be-tested view dropped to the same degree in Experiment 5. However, the facilitation from knowing the study view was more resistant to the increase in view change than that from locomotion. This indicates that the facilitation from knowing the study viewpoint was greater than that from locomotion. These results are also consistent with the conjecture that the accuracy of identifying the spatial reference direction determines performance in position change detection, if we assume that a spatial reference direction is established parallel to the study viewpoint.

Wang and Simons (1999) reported that the facilitative effect of locomotion on novel-view scene recognition occurred even when visual information about the magnitude of the view change was available to stationary participants, who could watch a rod that was affixed to the table and extended beyond its edge. One difference between their experiment and ours is that the rod was outside the scene, affixed to the edge of the table, whereas the chopstick was in the scene, placed at the center of the table. We speculate that, given the brief viewing time, it was easier to bind the objects in the scene with the chopstick than with the rod in the same mental representation. Hence the rod in Wang and Simons’ study might not have provided information about the spatial reference direction of the array of objects as accurately as the chopstick did.

We are not claiming that the rod did not provide any information about the spatial reference direction of the array of objects. We are suggesting instead that the rod was a less effective cue than participants’ locomotion to the spatial reference direction of the scene at test; the rod might still have facilitated novel view recognition to some degree. Performance in the novel view table rotation condition (different retinal projection and unchanged observation point in Simons and Wang’s terminology) was better when the rod was used (70% correct in Experiment 1 of Wang and Simons, 1999) than when no rod was used (55% correct, Experiments 1 and 2 of Simons and Wang, 1998). As this comparison is across studies, the higher performance when the rod was used may occur because of other differences between the studies, such as different subject populations. A future systematic investigation is required to test the possible facilitation of the rod in the novel view recognition.

The robust interfering effect of locomotion on familiar-view scene recognition observed in Experiments 1 to 4 indicates that participants did update their orientation with respect to the layout during locomotion. Because participants knew on each trial whether the table would be rotated, and locomotion nonetheless interfered with familiar-view recognition, these results suggest that updating during locomotion is relatively automatic (e.g., Farrell & Robertson, 1998; Mou, Li, & McNamara, 2008; Presson & Montello, 1994; Rieser, 1989). When participants moved to a new testing position, they automatically updated the spatial reference direction relative to their new position, and such updating anticipated a novel test view. But if the familiar test view was presented, participants needed to ignore the updated spatial reference direction at their test location and retrieve the original spatial reference direction at the study location to cope with the familiar test view. We assume that ignoring the updated spatial reference direction at the test location may interfere with the retrieval of the original spatial reference direction at the study location and that such interference introduces error. Importantly, the combination of a robust interfering effect of locomotion on familiar-view scene recognition and a vanishing facilitative effect of locomotion on novel-view scene recognition in Experiments 2 to 4 strongly suggests that the interfering effect is a more sensitive indicator of spatial updating during locomotion than the facilitative effect.

Another important finding of this project is that novel-view scene recognition in the table rotated condition was as accurate as that in the table stationary (observer locomotion) condition when the to-be-tested viewpoint was indicated during the learning phase (Experiments 2 and 5). This pattern suggests that the same level of transformation error is involved in the mental transformation process in the table rotation condition and in the spatial updating process in the observer locomotion condition when coping with the viewpoint change. We conjecture that a similar error-prone mental transformation process may also underlie spatial updating during locomotion, although that process is relatively automatic and unconscious, as suggested by the interfering effect of locomotion on the familiar test view. This conjecture is consistent with the robust finding that the spatial updating invoked by locomotion does not eliminate viewpoint-dependent performance; that is, participants in the familiar test view group were more accurate than participants in the novel test view group when the table remained stationary for both groups, in Experiments 1 to 4 of this study and in other studies (e.g., Burgess et al., 2004).

In summary, the role of spatial updating during locomotion in novel-view scene recognition might have been overstated in previous studies (see also Motes, Finlay, & Kozhevnikov, 2006). The superiority of observer locomotion over table rotation in novel-view scene recognition is eliminated or reversed when the spatial reference direction is identified equally or less accurately in the observer locomotion condition than in the table rotation condition. Although people update their orientation with respect to the spatial reference direction of the scene automatically, as shown by the interfering effect of locomotion on familiar-view scene recognition, this automatic spatial updating is still an error-prone mental transformation process. Hence the spatial updating invoked by locomotion can neither eliminate viewpoint-dependent scene recognition nor facilitate scene recognition when the viewpoint change is relatively large (e.g., 98°).

Acknowledgments

Preparation of this paper and the research reported in it were supported in part by a grant from the Natural Sciences and Engineering Research Council of Canada and a grant from the National Natural Science Foundation of China (30770709) to WM and National Institute of Mental Health Grant 2-R01-MH57868 to TPM. We are grateful to Dr. Andrew Hollingworth, Dr. Daniel Simons, and one anonymous reviewer for their helpful comments on a previous version of this manuscript.

Footnotes

1. Waller and Hodgson (2006) distinguish transient and enduring spatial representations (e.g., Mou et al., 2004) and carefully refrain from specifying whether those representations are egocentric or allocentric. Because the architecture and the function of their model are similar to those in which egocentric and allocentric systems are distinguished, we have taken the liberty of grouping their model with the others.

2. To acknowledge that this project provides no direct evidence that can dissociate intrinsic reference directions from egocentric reference directions, we replace the intrinsic reference direction in the model with the spatial reference direction in explaining the current findings.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Burgess N, Spiers HJ, Paleologou E. Orientational manoeuvres in the dark: Dissociating allocentric and egocentric influences on spatial memory. Cognition. 2004;94:149–166. doi: 10.1016/j.cognition.2004.01.001.

- Burgess N. Spatial cognition and the brain. In: Year in Cognitive Neuroscience 2008. Vol. 1124. Oxford: Blackwell Publishing; 2008. pp. 77–97.

- Byrne P, Becker S, Burgess N. Remembering the past and imagining the future: A neural model of spatial memory and imagery. Psychological Review. 2007;114:340–375. doi: 10.1037/0033-295X.114.2.340.

- Christou CG, Bülthoff HH. View dependence in scene recognition after active learning. Memory & Cognition. 1999;27:996–1007. doi: 10.3758/bf03201230.

- Christou CG, Tjan BS, Bülthoff HH. Extrinsic cues aid shape recognition from novel viewpoints. Journal of Vision. 2003;3:183–198. doi: 10.1167/3.3.1.

- Diwadkar VA, McNamara TP. Viewpoint dependence in scene recognition. Psychological Science. 1997;8:302–307.

- Farrell MJ, Robertson IH. Mental rotation and the automatic updating of body-centered spatial relationships. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1998;24:227–233.

- Holmes MC, Sholl MJ. Allocentric coding of object-to-object relations in overlearned and novel environments. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2005;31:1069–1087. doi: 10.1037/0278-7393.31.5.1069.

- Motes MA, Finlay CA, Kozhevnikov M. Scene recognition following locomotion around a scene. Perception. 2006;35:1507–1520. doi: 10.1068/p5459.

- Mou W, Fan Y, McNamara TP, Owen CB. Intrinsic frames of reference and egocentric viewpoints in scene recognition. Cognition. 2008;106:750–769. doi: 10.1016/j.cognition.2007.04.009.

- Mou W, Li X, McNamara TP. Body- and environment-stabilized processing of spatial knowledge. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2008;34:415–421. doi: 10.1037/0278-7393.34.2.415.

- Mou W, McNamara TP. Intrinsic frames of reference in spatial memory. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2002;28:162–170. doi: 10.1037/0278-7393.28.1.162.

- Mou W, McNamara TP, Rump B, Xiao C. Roles of egocentric and allocentric spatial representations in locomotion and reorientation. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32:1274–1290. doi: 10.1037/0278-7393.32.6.1274.

- Mou W, McNamara TP, Valiquette CM, Rump B. Allocentric and egocentric updating of spatial memories. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2004;30:142–157. doi: 10.1037/0278-7393.30.1.142.

- Mou W, Xiao C, McNamara TP. Reference directions and reference objects in spatial memory of a briefly-viewed layout. Cognition. 2008;108:136–154. doi: 10.1016/j.cognition.2008.02.004.

- Mou W, Zhao M, McNamara TP. Layout geometry in the selection of intrinsic frames of reference from multiple viewpoints. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2007;33:145–154. doi: 10.1037/0278-7393.33.1.145.

- Presson CC, Montello DR. Updating after rotational and translational body movements: Coordinate structure of perspective space. Perception. 1994;23:1447–1455. doi: 10.1068/p231447.

- Rieser JJ. Access to knowledge of spatial structure at novel points of observation. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1989;15:1157–1165. doi: 10.1037//0278-7393.15.6.1157.

- Shelton A, McNamara TP. Systems of spatial reference in human memory. Cognitive Psychology. 2001;43:274–310. doi: 10.1006/cogp.2001.0758.

- Sholl MJ. The role of a self-reference system in spatial navigation. In: Montello D, editor. Spatial information theory: Foundations of geographical information science. Berlin: Springer-Verlag; 2001. pp. 217–232.

- Simons DJ, Wang RF. Perceiving real-world viewpoint changes. Psychological Science. 1998;9:315–320.

- Waller D, Hodgson E. Transient and enduring spatial representations under disorientation and self-rotation. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2006;32:867–882. doi: 10.1037/0278-7393.32.4.867.

- Wang RF, Simons DJ. Active and passive scene recognition across views. Cognition. 1999;70:191–210. doi: 10.1016/s0010-0277(99)00012-8.

- Wang RF, Spelke ES. Human spatial representation: Insights from animals. Trends in Cognitive Sciences. 2002;6:376–382. doi: 10.1016/s1364-6613(02)01961-7.

- Werner S, Schmidt K. Environmental frames of reference for large-scale spaces. Spatial Cognition and Computation. 1999;1:447–473.