Abstract

Fan & Li (2001) propose a family of variable selection methods via penalized likelihood using concave penalty functions. The nonconcave penalized likelihood estimators enjoy the oracle properties, but maximizing the penalized likelihood function is computationally challenging, because the objective function is nondifferentiable and nonconcave. In this article we propose a new unified algorithm based on the local linear approximation (LLA) for maximizing the penalized likelihood for a broad class of concave penalty functions. Convergence and other theoretical properties of the LLA algorithm are established. A distinguishing feature of the LLA algorithm is that at each LLA step, the LLA estimator naturally adopts a sparse representation. Thus we suggest using the one-step LLA estimator from the LLA algorithm as the final estimate. Statistically, we show that if the regularization parameter is appropriately chosen, the one-step LLA estimates enjoy the oracle properties, given good initial estimators. Computationally, the one-step LLA estimation methods dramatically reduce the computational cost of maximizing the nonconcave penalized likelihood. We conduct Monte Carlo simulations to assess the finite sample performance of the one-step sparse estimation methods. The results are very encouraging.

Key words and phrases: AIC, BIC, Lasso, One-step estimator, Oracle Properties, SCAD

1. Introduction

Variable selection and feature extraction are fundamental for knowledge discovery and predictive modeling with high-dimensional data (Fan & Li 2006). The best subset selection procedure, along with traditional model selection criteria such as AIC and BIC, becomes infeasible for feature selection from high-dimensional data because of its prohibitive computational cost. Furthermore, best subset selection suffers from several drawbacks, the most severe of which is its lack of stability, as analyzed in Breiman (1996). The LASSO (Tibshirani 1996) uses the L1 penalty to automatically select significant variables via continuous shrinkage, thus retaining the good features of both best subset selection and ridge regression. In the same spirit as the LASSO, penalized likelihood with nonconcave penalty functions has been proposed to select significant variables for various parametric models, including generalized linear regression models and robust linear regression models (Fan & Li 2001, Fan & Peng 2004), and for some semiparametric models, such as the Cox model and partially linear models (Fan & Li 2002, Fan & Li 2004, Cai, Fan, Li & Zhou 2005). Fan & Li (2001) provide deep insights into how to select a penalty function. They further advocate the use of penalty functions satisfying certain mathematical conditions such that the resulting penalized likelihood estimate possesses the properties of sparsity, continuity and unbiasedness. These mathematical conditions imply that the penalty function has to be singular at the origin and nonconvex over (0, ∞). In the work mentioned above, it has been shown that when the regularization parameter is appropriately chosen, the nonconcave penalized likelihood estimates perform as well as the oracle procedure in terms of selecting the correct subset model and estimating the true nonzero coefficients.

Although nonconcave penalized likelihood approaches have promising theoretical properties, the singularity and nonconvexity of the penalty function call for numerical algorithms capable of maximizing a nondifferentiable nonconcave function. Fan & Li (2001) suggested iteratively and locally approximating the penalty function by a quadratic function, and referred to this approximation as the local quadratic approximation (LQA). With the aid of the LQA, the penalized likelihood function can be optimized using a modified Newton-Raphson algorithm. However, as pointed out in Fan & Li (2001) and Hunter & Li (2005), the LQA algorithm shares a drawback of backward stepwise variable selection: if a covariate is deleted at any step of the LQA algorithm, it is necessarily excluded from the final selected model (see Section 2.2 for more details). Hunter & Li (2005) addressed this issue by optimizing a slightly perturbed version of the LQA, which alleviates the aforementioned drawback, but the size of the perturbation is difficult to choose. Another strategy for overcoming the computational difficulty, suggested by Fan & Li (2001), is to use the one-step (or K-step) estimates from the iterative LQA algorithm with good starting estimators. This is similar to the well-known one-step estimation argument in the maximum likelihood estimation (MLE) setting (Bickel 1975, Lehmann & Casella 1998, Robinson 1988, Cai, Fan, Zhou & Zhou 2006). See also Fan & Chen (1999), Fan, Lin & Zhou (2006) and Cai et al. (2006) for some recent work on one-step estimators in local and marginal likelihood models. However, the one-step LQA estimator cannot have a sparse representation, and thus loses the most attractive and important property of the nonconcave penalized likelihood estimator.

In this article we develop a methodology and theory for constructing an efficient one-step sparse estimation procedure in nonconcave penalized likelihood models. For that purpose, we first propose a new iterative algorithm based on local linear approximation (LLA) for maximizing the nonconcave penalized likelihood. The LLA enjoys three significant advantages over the LQA and the perturbed LQA. First, in the LLA we do not have to delete any small coefficient or choose the size of a perturbation in order to avoid numerical instability. Second, we demonstrate that the LLA is the best convex minorization-maximization (MM) algorithm, and thus prove the convergence of the LLA algorithm via the ascent property of MM algorithms (Lange, Hunter & Yang 2000). Third, the LLA naturally produces sparse estimates via continuous penalization. We then propose using the one-step LLA estimator from the LLA algorithm as the final estimate. Computationally, the one-step LLA estimates alleviate the computational burden of the iterative algorithm and overcome the potential local maxima problem in maximizing the nonconcave penalized likelihood. In addition, we can take advantage of efficient algorithms for solving the LASSO to compute the one-step LLA estimator. Statistically, we show that if the regularization parameter is appropriately chosen, the one-step LLA estimates enjoy the oracle properties, provided that the initial estimates are good enough. Therefore, the one-step LLA estimator can dramatically reduce the computational cost without losing statistical efficiency.

The rest of the paper is organized as follows. In Section 2 we introduce the local linear approximation algorithm and discuss its properties. In Section 3 we discuss the one-step LLA estimator and establish its asymptotic normality and selection consistency. Section 4 describes the implementation details, and Section 5 presents numerical examples. Proofs are given in Section 6.

2. Local Linear Approximation Algorithm

Suppose that $\{(x_i, y_i)\}_{i=1}^{n}$ are n independent and identically distributed samples, where xi denotes the p-dimensional predictor and yi is the response variable. Assume that yi depends on xi through a linear combination $x_i^T\beta$, and that the conditional log-likelihood given xi is $\ell_i(\beta, \phi)$, where ϕ is a dispersion parameter. Some models, such as logistic regression and Poisson regression, have no dispersion parameter. In the linear regression model, ϕ is the variance of the random error, and is often estimated separately after β is estimated. In most variable selection applications, the dispersion parameter is not penalized (Frank & Friedman 1993, Tibshirani 1996, Fan & Li 2001, Miller 2002). Thus, we simplify notation in the remainder of this paper by suppressing ϕ, and use ℓi(β) to stand for ℓi(β, ϕ).

2.1. Penalized likelihood

In the variable selection problem, the assumption is that some components of β are zero. The goal is to identify and estimate the subset model. In this work we consider variable selection methods that maximize a penalized likelihood function of the form

$$\sum_{i=1}^{n}\ell_i(\beta) - n\sum_{j=1}^{p}p_{\lambda_j}(|\beta_j|). \tag{2.1}$$

In principle, pλj can be different for different components (coefficients). For ease of presentation we let pλj(|βj|) = pλ(|βj|), i.e., the same penalty function is applied to every component of β. The formulation (2.1) includes many popular variable selection methods. For instance, best subset selection amounts to using the L0 penalty, while the LASSO (Tibshirani 1996) uses the L1 penalty pλ(|β|) = λ|β|. Bridge regression (Frank & Friedman 1993) uses the Lq penalty pλ(|β|) = λ|β|^q. When 0 < q < 1, the Lq penalty is concave over (0, ∞) and non-differentiable at zero. The SCAD penalty (Fan & Li 2001) is a concave function defined by pλ(0) = 0 and, for |β| > 0, by its derivative

$$p_\lambda'(|\beta|) = \lambda\left\{I(|\beta| \le \lambda) + \frac{(\alpha\lambda - |\beta|)_+}{(\alpha - 1)\lambda}\,I(|\beta| > \lambda)\right\}, \qquad \alpha > 2. \tag{2.2}$$

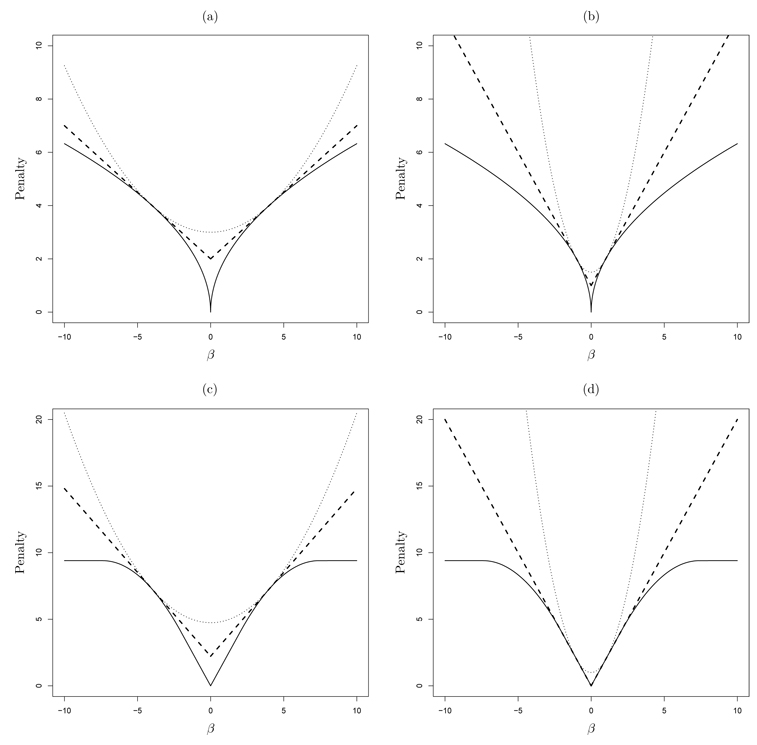

Often α = 3.7 is used. The notation z+ stands for the positive part of z: z+ is z if z > 0 and zero otherwise. The SCAD penalty and the L0.5 penalty are illustrated in Figure 1. Note that with a concave penalty the penalized likelihood in (2.1) is in general a nonconcave function, which makes its maximization challenging. Antoniadis & Fan (2001) proposed nonlinear regularized Sobolev interpolators (NRSI) and the regularized one-step estimator (ROSE) for nonconvex penalized least squares problems in wavelet settings. They further introduced the graduated nonconvexity (GNC) algorithm for minimizing high-dimensional nonconvex penalized least squares problems. The GNC algorithm was first developed for reconstructing piecewise continuous images (Black & Zisserman 1987). The GNC algorithm offers nice ideas for minimizing high-dimensional nonconvex objective functions, but in general it is computationally intensive, and its implementation depends on a sequence of tuning parameters. Fan & Li (2001) proposed the local quadratic approximation (LQA) algorithm for the nonconcave penalized likelihood, which we describe in detail in Section 2.2. Hunter & Li (2005) showed that the LQA shares the same spirit as the MM algorithm (Lange et al. 2000). Wu (2000) pointed out that the MM algorithm and the GNC algorithm share the same spirit in terms of optimization transfer. In general, GNC algorithms do not guarantee the ascent property for maximization problems, as evidenced by Figure 8(c) in Antoniadis & Fan (2001), whereas MM algorithms enjoy the ascent property, as demonstrated in Hunter & Li (2005).
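The SCAD penalty is specified in (2.2) through its derivative. As a minimal sketch (Python with NumPy is our choice; the function names are hypothetical), the penalty itself follows by integrating (2.2) from pλ(0) = 0, which gives a linear piece on [0, λ], a quadratic piece on (λ, αλ] and the constant (α + 1)λ²/2 beyond αλ. The argument `a` plays the role of α.

```python
import numpy as np


def scad_penalty(t, lam, a=3.7):
    """SCAD penalty p_lambda(|t|): integral of (2.2) from 0, with p_lambda(0) = 0."""
    t = np.abs(np.asarray(t, dtype=float))
    linear = lam * t                                                # |t| <= lam
    quadratic = -(t**2 - 2 * a * lam * t + lam**2) / (2 * (a - 1))  # lam < |t| <= a * lam
    flat = (a + 1) * lam**2 / 2                                     # |t| > a * lam
    return np.where(t <= lam, linear, np.where(t <= a * lam, quadratic, flat))


def scad_derivative(t, lam, a=3.7):
    """p'_lambda(|t|) from (2.2): lam on [0, lam], then (a * lam - |t|)_+ / (a - 1)."""
    t = np.abs(np.asarray(t, dtype=float))
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


grid = np.array([0.5, 3.0, 10.0])
print(scad_penalty(grid, lam=2.0))      # one point in each of the three pieces
print(scad_derivative(grid, lam=2.0))
```

The three pieces join continuously at λ and αλ, and the derivative vanishes beyond αλ, which is why SCAD leaves large coefficients essentially unpenalized.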

FIG. 1.

Plot of Local Quadratic Approximation (thin dotted lines) and Local Linear Approximation (thick broken lines) at β = 4 and 1. (a) and (b) are for the L0.5 penalty with λ = 2, and (c) and (d) are for the SCAD penalty with λ = 2.

2.2. Local quadratic approximation

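As Figure 1 shows, the penalty functions are singular at the origin and concave on (0, ∞); consequently, the penalized likelihood functions are non-differentiable at the origin and nonconcave with respect to β. The singularity and nonconcavity make it difficult to maximize the penalized likelihood functions. Suppose that we are given an initial value β(0) that is close to the true value of β. Fan and Li (2001) propose locally approximating the first-order derivative of the penalty function by a linear function:

$$[p_\lambda(|\beta_j|)]' = p_\lambda'(|\beta_j|)\,\mathrm{sgn}(\beta_j) \approx \frac{p_\lambda'(|\beta_j^{(0)}|)}{|\beta_j^{(0)}|}\,\beta_j, \qquad \text{for } \beta_j \approx \beta_j^{(0)}.$$

Thus, they use the following LQA to the penalty function:

$$p_\lambda(|\beta_j|) \approx p_\lambda(|\beta_j^{(0)}|) + \frac{1}{2}\,\frac{p_\lambda'(|\beta_j^{(0)}|)}{|\beta_j^{(0)}|}\left(\beta_j^2 - \{\beta_j^{(0)}\}^2\right), \qquad \text{for } \beta_j \approx \beta_j^{(0)}. \tag{2.3}$$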

Figure 1 illustrates the LQA for the L0.5 penalty and the SCAD penalty. By iteratively updating the LQA, the Newton-Raphson algorithm can be modified to maximize the penalized likelihood function. Specifically, we take the un-penalized likelihood estimate as the initial value β(0) and, for k = 0, 1, 2,…, repeatedly solve

$$\beta^{(k+1)} = \arg\max_{\beta}\left\{\sum_{i=1}^{n}\ell_i(\beta) - n\sum_{j=1}^{p}\frac{p_\lambda'(|\beta_j^{(k)}|)}{2|\beta_j^{(k)}|}\,\beta_j^2\right\}. \tag{2.4}$$

Stop the iteration if the sequence of {β(k)} converges.
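For the linear model with ℓi(β) = −(yi − xiTβ)²/2 (that is, σ² = 1, an assumption made only for this sketch), setting the gradient of the objective in (2.4) to zero shows that each LQA step is a ridge regression with coordinate-specific weights, $(X^TX + n\,\mathrm{diag}\{p_\lambda'(|\beta_j^{(k)}|)/|\beta_j^{(k)}|\})\beta = X^Ty$. The Python sketch below (hypothetical function names, SCAD penalty, illustrative λ = 0.5) implements that update; the optional argument `tau0` bounds the denominator away from zero in the spirit of the perturbed version (2.5).

```python
import numpy as np


def scad_derivative(t, lam, a=3.7):
    t = np.abs(t)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


def lqa_least_squares(X, y, lam, n_iter=20, tau0=0.0):
    """LQA iterations (2.4) for l_i(beta) = -(y_i - x_i'beta)^2 / 2 with the SCAD penalty.

    Each step solves the ridge system (X'X + n * diag(w)) beta = X'y with
    w_j = p'_lambda(|beta_j|) / (|beta_j| + tau0); tau0 > 0 perturbs the denominator as in (2.5).
    """
    n = X.shape[0]
    beta = np.linalg.lstsq(X, y, rcond=None)[0]   # beta^(0): un-penalized (OLS) estimate
    for _ in range(n_iter):
        w = scad_derivative(beta, lam) / (np.abs(beta) + tau0)
        beta = np.linalg.solve(X.T @ X + n * np.diag(w), X.T @ y)
    return beta


rng = np.random.default_rng(1)
X = rng.standard_normal((200, 8))
y = X @ np.array([3, 1.5, 0, 0, 2, 0, 0, 0]) + rng.standard_normal(200)
beta_lqa = lqa_least_squares(X, y, lam=0.5)
print(beta_lqa)                       # noise coefficients shrink towards zero ...
print(np.count_nonzero(beta_lqa))     # ... but none is exactly zero
```

Because every step is a ridge solve, the iterates only approach sparsity; this is why a deletion threshold ε0 or a perturbation τ0 is needed in practice.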

To avoid numerical instability, Fan and Li (2001) suggested that if $|\beta_j^{(k)}|$ in (2.4) is very close to 0, say $|\beta_j^{(k)}| < \varepsilon_0$ for a pre-specified value ε0, then one sets β̂j = 0 and deletes the jth component of x from the iteration. Thus, the LQA algorithm shares a drawback of backward stepwise variable selection: if a covariate is deleted at any step of the LQA algorithm, it is necessarily excluded from the final selected model. Furthermore, one has to choose ε0, which in practice becomes an additional tuning parameter. The size of ε0 potentially affects the degree of sparsity of the solution as well as the speed of convergence. Hunter and Li (2005) studied the convergence properties of the LQA algorithm. They found that the LQA algorithm is an instance of the minorize-maximize (MM) algorithms, which extend the well-known EM algorithm. They further demonstrated that the LQA algorithm behaves like an EM algorithm, with the LQA playing the role of the E-step. To avoid numerical instability and the drawback of backward stepwise variable selection, Hunter and Li (2005) suggested optimizing a slightly perturbed version of (2.4) that bounds the denominator away from zero: for k = 0, 1, 2,…, repeatedly solve

$$\beta^{(k+1)} = \arg\max_{\beta}\left\{\sum_{i=1}^{n}\ell_i(\beta) - n\sum_{j=1}^{p}\frac{p_\lambda'(|\beta_j^{(k)}|)}{2(|\beta_j^{(k)}| + \tau_0)}\,\beta_j^2\right\} \tag{2.5}$$

for a pre-specified perturbation size τ0. Stop the iteration if the sequence {β(k)} converges. In a practical implementation, one has to determine the size of the perturbation, which may be difficult; furthermore, τ0 potentially affects the degree of sparsity of the solution as well as the speed of convergence.

2.3. Local linear approximation

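To eliminate the weaknesses of the LQA, we propose a new unified algorithm based on the following local linear approximation to the penalty function:

$$p_\lambda(|\beta_j|) \approx p_\lambda(|\beta_j^{(0)}|) + p_\lambda'(|\beta_j^{(0)}|)\left(|\beta_j| - |\beta_j^{(0)}|\right), \qquad \text{for } \beta_j \approx \beta_j^{(0)}. \tag{2.6}$$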

Figure 1 illustrates the LLA for the L0.5 penalty and the SCAD penalty. Fan & Li (2001) show that in order to have a continuous thresholding rule, the penalty function must satisfy a continuity condition: the minimum of |θ| + p′λ(|θ|) is attained at zero. Although the L0.5 penalty fails to satisfy the continuity condition, we show in Section 3 that it still yields continuous one-step sparse estimates. For ease of presentation, we assume in this section, unless otherwise specified, that the right derivative of pλ(·) at 0 is finite.

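Similar to the LQA algorithm, the maximization of the penalized likelihood can be carried out as follows. Set the initial value β(0) to be the un-penalized maximum likelihood estimate. For k = 0, 1, 2,…, repeatedly solve

$$\beta^{(k+1)} = \arg\max_{\beta}\left\{\sum_{i=1}^{n}\ell_i(\beta) - n\sum_{j=1}^{p}p_\lambda'(|\beta_j^{(k)}|)\,|\beta_j|\right\}. \tag{2.7}$$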

Stop the iterations if the sequence {β(k)} converges. We refer to this algorithm as the LLA algorithm. The LLA algorithm differs from the LQA algorithm in that β(k+1), and hence the final estimate, naturally adopts a sparse representation. The LLA algorithm inherits the computational efficiency of the LASSO: each step (2.7) is a weighted L1-penalized problem, so the maximization can be carried out by efficient algorithms such as the least angle regression (LARS) algorithm (Efron, Hastie, Johnstone & Tibshirani 2004). Because (2.7) involves no division by $|\beta_j^{(k)}|$, the approximation is numerically stable, and, with the finite right derivative assumed in this section, a coefficient set to zero at one step is not permanently removed; thus the drawback of backward stepwise variable selection is avoided in the LLA algorithm.
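For the same least squares setting (σ² = 1 assumed), each LLA step (2.7) is a weighted LASSO problem. The Python sketch below solves it by coordinate descent, i.e., the shooting algorithm (Fu 1998) mentioned in Section 4, rather than by LARS; this substitution, the illustrative λ = 0.5 and all function names are our own choices. Warm-starting each step at β(k) means the coordinate updates never decrease the surrogate objective G(·|β(k)) (defined just below in this section) relative to its value at β(k), so the recorded values of Q(β(k)) should be non-decreasing, as the ascent property (2.9) predicts.

```python
import numpy as np


def scad_penalty(t, lam, a=3.7):
    t = np.abs(t)
    return np.where(t <= lam, lam * t,
                    np.where(t <= a * lam,
                             -(t**2 - 2 * a * lam * t + lam**2) / (2 * (a - 1)),
                             (a + 1) * lam**2 / 2))


def scad_derivative(t, lam, a=3.7):
    t = np.abs(t)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


def weighted_lasso_cd(X, y, w, beta, n_sweeps=500, tol=1e-10):
    """Shooting algorithm for min 0.5*||y - X b||^2 + n * sum_j w_j |b_j|, warm-started at beta."""
    n, p = X.shape
    beta = beta.copy()
    col_sq = (X**2).sum(axis=0)
    resid = y - X @ beta
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            z = X[:, j] @ resid + col_sq[j] * beta[j]                   # x_j' (partial residual)
            new = np.sign(z) * max(abs(z) - n * w[j], 0.0) / col_sq[j]  # soft-thresholding
            resid += X[:, j] * (beta[j] - new)
            max_change = max(max_change, abs(new - beta[j]))
            beta[j] = new
        if max_change < tol:
            break
    return beta


def lla_least_squares(X, y, lam, n_iter=10):
    """LLA iterations (2.7) with the SCAD penalty; also returns Q(beta^(k)) for k = 0, 1, ..."""
    n = X.shape[0]

    def Q(b):  # penalized log-likelihood (2.1) for l_i = -(y_i - x_i'b)^2 / 2
        return -0.5 * np.sum((y - X @ b) ** 2) - n * np.sum(scad_penalty(b, lam))

    beta = np.linalg.lstsq(X, y, rcond=None)[0]   # beta^(0): OLS
    history = [Q(beta)]
    for _ in range(n_iter):
        beta = weighted_lasso_cd(X, y, scad_derivative(beta, lam), beta)
        history.append(Q(beta))
    return beta, history


rng = np.random.default_rng(2)
X = rng.standard_normal((200, 8))
y = X @ np.array([3, 1.5, 0, 0, 2, 0, 0, 0]) + rng.standard_normal(200)
beta_lla, q_path = lla_least_squares(X, y, lam=0.5)
print(beta_lla)           # noise coefficients come out exactly zero
print(np.diff(q_path))    # non-negative up to rounding: the ascent property (2.9)
```

In contrast with the LQA sketch, soft-thresholding sets coefficients exactly to zero, and a zero coefficient still receives the finite weight p′λ(0) = λ at the next step, so it can re-enter if the data support it.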

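We next study the convergence of the LLA algorithm. Denote

$$Q(\beta) = \sum_{i=1}^{n}\ell_i(\beta) - n\sum_{j=1}^{p}p_\lambda(|\beta_j|)$$

and

$$G(\beta \mid \beta^{(k)}) = \sum_{i=1}^{n}\ell_i(\beta) - n\sum_{j=1}^{p}\left\{p_\lambda(|\beta_j^{(k)}|) + p_\lambda'(|\beta_j^{(k)}|)\left(|\beta_j| - |\beta_j^{(k)}|\right)\right\}.$$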

Theorem 1

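For a differentiable concave penalty function pλ(·) on [0, ∞), we have

$$Q(\beta) \ge G(\beta \mid \beta^{(k)}) \quad \text{for all } \beta, \qquad Q(\beta^{(k)}) = G(\beta^{(k)} \mid \beta^{(k)}). \tag{2.8}$$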

Furthermore, the LLA algorithm has the ascent property, i.e., for all k = 0, 1, 2,…

$$Q(\beta^{(k+1)}) \ge Q(\beta^{(k)}). \tag{2.9}$$

If the penalty function is strictly concave then we always take “>” in (2.9).

From (2.8), G(β|β(k)) is a minorization of Q(β), and finding β(k+1) is the maximization step of an MM (minorize-maximize) algorithm. Therefore, the LLA algorithm is an instance of the MM algorithms. For a survey of MM algorithms, see Heiser (1995) and Lange et al. (2000).
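Inequality (2.8) reduces, coordinate by coordinate, to the statement that the LLA of a concave penalty lies on or above the penalty, because $Q(\beta) - G(\beta \mid \beta^{(k)}) = n\sum_j\{p_\lambda(|\beta_j^{(k)}|) + p_\lambda'(|\beta_j^{(k)}|)(|\beta_j| - |\beta_j^{(k)}|) - p_\lambda(|\beta_j|)\}$. The Python sketch below (helper names hypothetical) checks this numerically on a grid for the SCAD and L0.5 penalties with λ = 2, expanding at t0 = 1 and t0 = 4 as in Figure 1. It illustrates Theorem 1 and is not a proof.

```python
import numpy as np


def scad_penalty(t, lam, a=3.7):
    t = np.abs(t)
    return np.where(t <= lam, lam * t,
                    np.where(t <= a * lam,
                             -(t**2 - 2 * a * lam * t + lam**2) / (2 * (a - 1)),
                             (a + 1) * lam**2 / 2))


def scad_derivative(t, lam, a=3.7):
    t = np.abs(t)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


def bridge_penalty(t, lam, q=0.5):
    return lam * np.abs(t) ** q


def bridge_derivative(t, lam, q=0.5):
    return lam * q * np.abs(t) ** (q - 1)   # finite only for t != 0


def lla_gap(penalty, derivative, t, t0, lam):
    """LLA of the penalty at t0 minus the penalty itself; (2.8) needs this to be >= 0."""
    lla = penalty(t0, lam) + derivative(t0, lam) * (np.abs(t) - abs(t0))
    return lla - penalty(t, lam)


t = np.linspace(-10, 10, 4001)
min_gaps = {}
for name, pen, der in [("SCAD", scad_penalty, scad_derivative),
                       ("L0.5", bridge_penalty, bridge_derivative)]:
    for t0 in (1.0, 4.0):                   # expansion points used in Figure 1
        min_gaps[(name, t0)] = float(lla_gap(pen, der, t, t0, lam=2.0).min())
print(min_gaps)    # all minima are zero up to rounding, attained at |t| = t0
```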

The convergence of the LLA algorithm can be analyzed using the general convergence results for MM algorithms. Let M(β) denote the map defined by the LLA algorithm from β(k) to β(k+1). Because the penalty function has a continuous first derivative and computing β(k+1) is a convex optimization problem, M is a continuous map. We define a stationary point of the function Q(β) to be any point β at which the gradient vector is zero.

Proposition 1

Given an initial value β(0), let β(k) = Mk(β(0)). If Q(β) = Q(M(β)) only for stationary points of Q and if β* is a limit point of the sequence {β(k)}, then β* is a stationary point of Q(β).

Proposition 1 is a slightly modified version of Lyapunov’s Theorem in Lange (1995); we omit its proof. Theorem 1 shows that the LLA of pλ(·) provides a majorization of the penalty function pλ(·). In fact, the LLA is the best convex majorization of pλ(·), as stated in the next theorem.

Theorem 2

Denote by $\Psi^*(t) = p_\lambda(|t_0|) + p_\lambda'(|t_0|)(|t| - |t_0|)$ the LLA of pλ(·) at t0. Suppose that Ψ(·) is a convex majorization function of pλ(·) at t0, i.e., $\Psi(t) \ge p_\lambda(|t|)$ for all t and $\Psi(t_0) = p_\lambda(|t_0|)$. Then Ψ(t) ≥ Ψ*(t) for all t. If the right derivative of pλ(·) at zero diverges, the above conclusions hold for t0 > 0 and t ≥ 0.

Figure 1 shows an illustration of Theorem 2 with the SCAD and L0.5 penalties. As can be seen from Figure 1, the LLA approximation is underneath the LQA approximation in all four cases.
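Why the LLA lies underneath the LQA in Figure 1 can also be seen by subtracting the two approximations directly. The following short derivation is our own check rather than part of the original argument; it writes $\Psi_{\mathrm{LQA}}(t)$ for the right-hand side of (2.3) and $\Psi^*(t)$ for the right-hand side of (2.6), both expanded at a point $t_0 \neq 0$, and assumes $p_\lambda' \ge 0$ (a nondecreasing penalty):

$$
\begin{aligned}
\Psi_{\mathrm{LQA}}(t) - \Psi^*(t)
&= p_\lambda'(|t_0|)\left[\frac{t^2 - t_0^2}{2|t_0|} - \left(|t| - |t_0|\right)\right] \\
&= \frac{p_\lambda'(|t_0|)}{2|t_0|}\left(|t|^2 - 2|t_0||t| + |t_0|^2\right)
 = \frac{p_\lambda'(|t_0|)}{2|t_0|}\left(|t| - |t_0|\right)^2 \;\ge\; 0.
\end{aligned}
$$

The gap vanishes only at |t| = |t0| and grows quadratically away from it, which matches the picture in all four panels of Figure 1.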

Like the EM algorithm, MM algorithms have the ascent property; indeed, the MM algorithm is an extension of the well-known EM algorithm. Under certain conditions, we show that the LLA algorithm can be cast as an EM algorithm.

Suppose that exp(−npλ(·)) is the Laplace transform of some non-negative function H(·). Then H(·) is the inverse Laplace transform of exp(−npλ(·)), and

$$\exp\{-np_\lambda(t)\} = \int_0^\infty \exp(-st)\,H(s)\,ds, \qquad t \ge 0. \tag{2.10}$$

For example, if pλ(|β|) = λ|β|q, the Bridge penalty (0 < q < 1), then

where and S(·) is the density of the stable distribution of index q (Mike 1984).

Let and we independently put a Laplacian prior on βj

| (2.11) |

Further regard Π as a hyper-prior on τj. Then (2.10) implies

| (2.12) |

Maximizing Q(β) is equivalent to computing the posterior mode of p(β|y), if we treat exp(−npλ(|βj|)) as the marginal prior of β. The identity (2.12) implies an EM algorithm for maximizing the posterior p(β|y).

To derive the EM algorithm, we consider τ1,…, τp as missing data. The complete log-likelihood function (CLF) is

Suppose the current estimator is β(k). The E-step computes the conditional mean of CLF

The M-step finds β(k+1) maximizing . Thus

| (2.13) |
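The key step behind Theorem 3 can be sketched as follows. This is our own reconstruction under explicit assumptions, since several displays above did not survive: we assume the Laplacian prior in (2.11) has density $(\tau_j/2)\exp(-\tau_j|\beta_j|)$, that the hyper-prior is chosen so that the conditional density of τj given βj is proportional to $\exp(-\tau_j|\beta_j|)H(\tau_j)$, and we read (2.10) as $\exp\{-np_\lambda(t)\} = \int_0^\infty e^{-st}H(s)\,ds$. Under these assumptions the E-step needs only $E(\tau_j \mid \beta_j^{(k)})$, and differentiating the Laplace-transform identity gives it in closed form:

$$
E\left(\tau_j \mid \beta_j^{(k)}\right)
= \frac{\int_0^\infty s\,e^{-s|\beta_j^{(k)}|}H(s)\,ds}{\int_0^\infty e^{-s|\beta_j^{(k)}|}H(s)\,ds}
= -\frac{d}{dt}\log\int_0^\infty e^{-st}H(s)\,ds\,\Big|_{t = |\beta_j^{(k)}|}
= n\,p_\lambda'(|\beta_j^{(k)}|).
$$

Since τj enters the complete log-likelihood only through $\log\tau_j - \tau_j|\beta_j|$, the only β-dependent part of the E-step is $-E(\tau_j \mid \beta_j^{(k)})|\beta_j|$, so the M-step maximizes $\sum_i \ell_i(\beta) - n\sum_j p_\lambda'(|\beta_j^{(k)}|)|\beta_j|$, which is exactly the LLA step (2.7).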

Theorem 3

Suppose that (2.10)–(2.13) hold for pλ(·). Then the LLA algorithm and the EM algorithm are identical. Moreover, (2.10) implies that pλ(·) must be strictly increasing and unbounded on [0, ∞). Thus, for the SCAD penalty, which is constant for |β| > αλ, exp(−npλ(·)) has no inverse Laplace transform.

In the above discussion, we have assumed all the necessary conditions to ensure that the EM algorithm is proper. If this is the case, then Theorem 3 shows that the EM algorithm is exactly the LLA algorithm. On the other hand, it is also worth noting that there are concave penalty functions for which (2.10) cannot hold. The SCAD penalty is such an example. Thus, Theorem 3 also indicates that MM algorithms are more flexible than EM algorithms.

3. One-step Sparse Estimates

In this section, we propose the one-step LLA estimator, which differs significantly from the one-step or k-step LQA estimate because it automatically adopts a sparse representation. Thus it can be used as a model selector. One may further define a k-step LLA estimator, but, in general, this is unnecessary. As demonstrated in Fan & Chen (1999) and Cai, Fan & Li (2000), both empirically and theoretically, the one-step method is as efficient as the fully iterative method, provided that the initial estimators are reasonably good. In the LQA, finding β(k+1) is a ridge regression problem, so almost surely none of the components of β(k+1) is exactly zero. Hence the one-step or k-step LQA estimates cannot achieve the goal of variable selection. To gain insight into the one-step LLA estimator, let us start with linear regression models and consider penalized least squares.

3.1. Linear regression models

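The LLA algorithm naturally provides a sparse one-step estimator. For simplicity, let the initial estimate β(0) be the ordinary least squares (OLS) estimator. Then the one-step estimator is obtained by

$$\beta^{(1)} = \arg\min_{\beta}\left\{\frac{1}{2}\sum_{i=1}^{n}(y_i - x_i^T\beta)^2 + n\sum_{j=1}^{p}p_{\lambda_n}'(|\beta_j^{(0)}|)\,|\beta_j|\right\}. \tag{3.1}$$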

We denote by β̂(ose) the one-step estimator β(1).
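In the linear model, (3.1) is a single weighted LASSO problem whose weights are computed once from the OLS fit; for SCAD, coefficients whose OLS estimates are at least αλ receive weight zero and are left unpenalized. The Python sketch below uses coordinate descent instead of the LARS algorithm discussed in Section 4, and simulates data whose design mimics Example 1 in Section 5 (with n = 200 here); the function names and the choice λ = 0.5 are illustrative assumptions, not tuned values.

```python
import numpy as np


def scad_derivative(t, lam, a=3.7):
    t = np.abs(t)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


def weighted_lasso_cd(X, y, w, n_sweeps=1000, tol=1e-10):
    """Shooting algorithm for min 0.5*||y - X b||^2 + n * sum_j w_j |b_j|, started at b = 0."""
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X**2).sum(axis=0)
    resid = np.array(y, dtype=float)          # residual y - X beta at beta = 0
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            z = X[:, j] @ resid + col_sq[j] * beta[j]
            new = np.sign(z) * max(abs(z) - n * w[j], 0.0) / col_sq[j]
            resid += X[:, j] * (beta[j] - new)
            max_change = max(max_change, abs(new - beta[j]))
            beta[j] = new
        if max_change < tol:
            break
    return beta


def one_step_scad(X, y, lam, a=3.7):
    """One-step LLA estimator (3.1) with the SCAD penalty, started from OLS."""
    beta_ols = np.linalg.lstsq(X, y, rcond=None)[0]
    w = scad_derivative(beta_ols, lam, a)     # w_j = 0 when |beta_ols_j| >= a * lam
    return weighted_lasso_cd(X, y, w)


# Design mimicking Example 1 of Section 5 (n = 200 chosen for this illustration)
rng = np.random.default_rng(0)
n, rho = 200, 0.5
beta_true = np.array([3, 1.5, 0, 0, 2, 0, 0, 0, 0, 0, 0, 0], dtype=float)
p = beta_true.size
cov = rho ** np.abs(np.subtract.outer(np.arange(p), np.arange(p)))
X = rng.multivariate_normal(np.zeros(p), cov, size=n)
y = X @ beta_true + rng.standard_normal(n)
beta_ose = one_step_scad(X, y, lam=0.5)
print(np.round(beta_ose, 3))
```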

We show that the one-step estimator enjoys the oracle properties. To this end we assume two regularity conditions:

- (A1) $y_i = x_i^T\beta_0 + \varepsilon_i$, where ε1,…, εn are independent and identically distributed random variables with mean 0 and variance σ².

- (A2) $n^{-1}X^TX \to C$, where X is the n × p design matrix with rows $x_i^T$ and C is a positive definite matrix.

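Without loss of generality, let $\beta_0 = (\beta_{10}^T, \beta_{20}^T)^T$ with β20 = 0, and partition C conformably as $C = \begin{pmatrix} C_{11} & C_{12} \\ C_{21} & C_{22} \end{pmatrix}$.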

Theorem 4

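Let pλn(·) be the SCAD penalty. If λn → 0 and $\sqrt{n}\lambda_n \to \infty$, then the one-step SCAD estimates β̂(ose) must satisfy:

(a) Sparsity: with probability tending to one, β̂(ose)2 = 0.

(b) Asymptotic normality: $\sqrt{n}(\hat\beta_1^{(\mathrm{ose})} - \beta_{10}) \to N(0, \sigma^2 C_{11}^{-1})$ in distribution.

In addition, consider pλn(·) = λnp(·). Suppose p′(·) is continuous on (0, ∞) and there is some s > 0 such that p′(θ) = O(θ−s) as θ → 0+. Then (a) and (b) hold if $\sqrt{n}\lambda_n \to 0$ and $n^{(s+1)/2}\lambda_n \to \infty$.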

3.2. Penalized likelihood

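For a general likelihood model, let $\ell(\beta) = \sum_{i=1}^{n}\ell_i(\beta)$ denote the log-likelihood. Suppose that the log-likelihood function is smooth and has first and second derivatives with respect to β. For a given initial value β(0), the log-likelihood function can be locally approximated by

$$\ell(\beta) \approx \ell(\beta^{(0)}) + \nabla\ell(\beta^{(0)})^T(\beta - \beta^{(0)}) + \frac{1}{2}(\beta - \beta^{(0)})^T\nabla^2\ell(\beta^{(0)})(\beta - \beta^{(0)}). \tag{3.2}$$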

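Let us take β(0) = β̂(mle). Then ∇ℓ(β(0)) = 0 by the definition of the MLE, so substituting (3.2) into the LLA step (2.7) gives

$$\beta^{(1)} = \arg\min_{\beta}\left\{\frac{1}{2}(\beta - \hat\beta^{(\mathrm{mle})})^T\left[-\nabla^2\ell(\hat\beta^{(\mathrm{mle})})\right](\beta - \hat\beta^{(\mathrm{mle})}) + n\sum_{j=1}^{p}p_{\lambda_n}'(|\hat\beta_j^{(\mathrm{mle})}|)\,|\beta_j|\right\}. \tag{3.3}$$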

It is interesting to see that (3.3) reduces to the one-step estimate (3.1) in linear regression models if we are willing to assume that ε ∼ N(0, σ²). However, it should be noted that the normality assumption is not needed in Theorem 4.
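The reduction of (3.3) to (3.1) can be checked in one line. Assuming, for this sketch, Gaussian errors with σ² = 1, we have $\ell(\beta) = -\tfrac12\|y - X\beta\|^2 + \text{const}$, so $-\nabla^2\ell = X^TX$ and β̂(mle) is the OLS estimator β̂, whose residual satisfies $X^T(y - X\hat\beta) = 0$. Expanding the square then gives

$$
\frac12\|y - X\beta\|^2
= \frac12\|y - X\hat\beta\|^2 + (\hat\beta - \beta)^T X^T(y - X\hat\beta) + \frac12(\beta - \hat\beta)^T X^TX(\beta - \hat\beta)
= \frac12\|y - X\hat\beta\|^2 + \frac12(\beta - \hat\beta)^T X^TX(\beta - \hat\beta),
$$

so the objectives in (3.1) and (3.3) differ only by a constant that does not depend on β. For general σ², $-\nabla^2\ell = X^TX/\sigma^2$, and multiplying (3.3) by σ² shows that it matches (3.1) with the penalty term multiplied by σ².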

We now show that, in the general likelihood setting, β(1) is the desired one-step estimate, denoted by β̂(ose). Let I(β0) be the Fisher information matrix and I1(β10) = I1(β10, 0) denote the Fisher information knowing β20 = 0. Note that I(β0) is a p × p matrix and I1(β10) is a submatrix of I(β0). It is well known that, under some regularity conditions (Lehmann & Casella 1998), $n^{-1}\nabla^2\ell(\hat\beta^{(\mathrm{mle})}) \to -I(\beta_0)$ in probability and $\sqrt{n}(\hat\beta^{(\mathrm{mle})} - \beta_0) \to N(0, I^{-1}(\beta_0))$ in distribution.

Theorem 5

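Let pλn(·) be the SCAD penalty. If λn → 0 and $\sqrt{n}\lambda_n \to \infty$, then the one-step SCAD estimates β̂(ose) must satisfy:

(a) Sparsity: with probability tending to one, β̂(ose)2 = 0.

(b) Asymptotic normality: $\sqrt{n}(\hat\beta_1^{(\mathrm{ose})} - \beta_{10}) \to N(0, I_1^{-1}(\beta_{10}))$ in distribution.

In addition, consider pλn(·) = λnp(·). Suppose p′(·) is continuous on (0, ∞) and there is some s > 0 such that p′(θ) = O(θ−s) as θ → 0+. Then (a) and (b) hold if $\sqrt{n}\lambda_n \to 0$ and $n^{(s+1)/2}\lambda_n \to \infty$.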

In Theorems 4 and 5 we have established the oracle properties of the one-step SCAD estimator. Interestingly, the choice of λn is the same as that in Theorem 2 of Fan & Li (2001). It is also worth noting that our results require fewer regularity conditions than Theorem 2 of Fan & Li (2001), since the penalty function does not need to be twice differentiable.

3.3. Continuity of the one-step estimator

For the nonconcave penalized likelihood estimates to be continuous, the minimum of the function |θ| + p′λ(|θ|) must be attained at 0 (Fan & Li 2001). The Bridge penalty (0 < q < 1) fails to satisfy this continuity condition, and is therefore considered suboptimal (Fan & Li 2001). Our results require weaker conditions to ensure a continuous thresholding estimator. Note that β̂(ose) is obtained through an ℓ1-penalized criterion whose weights p′λ(|βj(0)|) depend on the initial estimate only through p′λ. Therefore, we only require p′λ(|θ|) to be continuous for |θ| > 0 to ensure the continuity of β̂(ose). Theorems 4 and 5 indicate that the Bridge penalty pλ(|θ|) = λ|θ|^q, 0 < q < 1, can be used in the one-step estimation scheme and that its one-step estimates are continuous.

There is another interesting implication of the continuity of β̂(ose): if two penalty functions have very similar derivatives, we expect their one-step estimators to be very close as well. To illustrate this point, we consider the limiting one-step estimator with the Lq penalty as q → 0+.

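For each fixed q, we are interested in the whole profile of the one-step Lq estimator as a function of λ. Since the LLA weights of the Lq penalty are $p_\lambda'(|\beta_j^{(0)}|) = \lambda q|\beta_j^{(0)}|^{q-1}$, we can consider λ* = λq as the effective regularization parameter. On the other hand, consider the one-step estimator with the logarithm penalty pλ(|β|) = λ log|β|, whose LLA weights are $\lambda/|\beta_j^{(0)}|$.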

Proposition 2

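If q → 0+, then the profile of the one-step Lq estimator, indexed by the effective parameter λ* = λq, converges to the profile of the one-step logarithm estimator, in the sense that for every fixed λ* > 0 the one-step Lq estimator with λ = λ*/q converges to the one-step logarithm estimator with λ = λ*.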

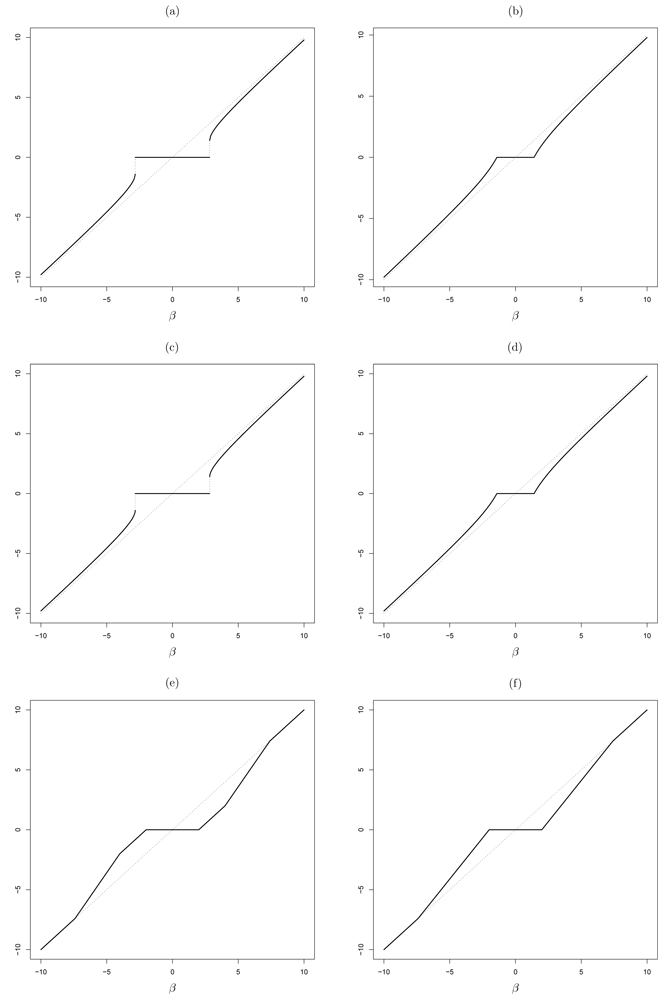

Note that the convexity of the LLA criterion is crucial for Proposition 2. We demonstrate the continuity property of the one-step estimator in linear regression models with an orthogonal design. As Figure 2 shows, in an orthogonal design the L0.01 penalty and the logarithm penalty correspond to discontinuous thresholding rules, but their one-step estimators yield continuous thresholding rules. Moreover, the one-step L0.01 estimator with λ = 200 is very similar to the one-step logarithm estimator with λ = 2 (note that 200 × 0.01 = 2), which illustrates Proposition 2. Figure 2 also shows the SCAD thresholding rule and its one-step version. Both are continuous and unbiased for large coefficients, but they are not identical.

FIG. 2.

Comparison of thresholding rules in an orthogonal design. (a) and (b) are for the logarithm penalty and its one-step LLA approximation, λ = 2; (c) and (d) are for the Bridge (L0.01) penalty and its one-step LLA approximation, λ = 200; (e) and (f) are for SCAD and its one-step LLA approximation, λ = 2.
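In an orthogonal design, scaled here so that XTX = nI (an assumption of this sketch), the objective in (3.1) separates across coordinates, and the one-step estimator is the soft-thresholding rule β̂j = sgn(zj)(|zj| − p′λ(|zj|))+ applied to the OLS estimate zj. The Python sketch below (hypothetical names) evaluates this rule for the three penalties of Figure 2 and checks numerically that the one-step L0.01 rule with λ = 200 and the one-step logarithm rule with λ = 2 nearly coincide, as Proposition 2 suggests.

```python
import numpy as np


def one_step_rule(z, weight):
    """One-step LLA thresholding in an orthogonal design: sgn(z) * (|z| - p'(|z|))_+."""
    z = np.asarray(z, dtype=float)
    return np.sign(z) * np.maximum(np.abs(z) - weight(np.abs(z)), 0.0)


def log_weight(t, lam=2.0):
    return lam / t                          # derivative of lam * log(t)


def bridge_weight(t, lam=200.0, q=0.01):
    return lam * q * t ** (q - 1.0)         # derivative of lam * t**q


def scad_weight(t, lam=2.0, a=3.7):
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


z = np.linspace(0.01, 10.0, 2000)           # positive OLS values; z = 0 excluded (log weight blows up)
rule_log = one_step_rule(z, log_weight)
rule_bridge = one_step_rule(z, bridge_weight)
rule_scad = one_step_rule(z, scad_weight)
print(np.max(np.abs(rule_log - rule_bridge)))   # small: effective parameter lam * q = 2 for both
print(rule_scad[-1])                            # SCAD leaves the large value z = 10 unshrunk
```

All three rules are continuous in z, including at their thresholds, which is the property Figure 2 contrasts with the discontinuous rules of the original penalties.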

4. Implementation

In this section we show that the LLA allows an efficient implementation of the one-step sparse estimator. The key observation is that computing β(1) is not much different from solving the LASSO. Standard quadratic programming software can be used to solve the LASSO, and the shooting algorithm also works well (Fu 1998, Yuan & Lin 2006). Efron et al. (2004) proposed an efficient path algorithm, called LARS, for computing the entire solution path of the LASSO. See also the homotopy algorithm of Osborne, Presnell & Turlach (2000). The LARS algorithm is a major breakthrough in the development of LASSO-type methods. Zou & Hastie (2005) modified the LARS algorithm to compute the solution paths of the elastic net. Rosset & Zhu (2004) generalized the LARS-type algorithm to a class of optimization problems with a LASSO penalty. The LARS algorithm was also used to simplify the computations in an empirical Bayes model for the LASSO (Yuan & Lin 2005).

We adopt the LARS idea in our implementation. Write . Observe that

where D is an n × n diagonal matrix with . In linear regression models, Dii = 2. We discuss the algorithm separately for two types of concave penalties.

Type 1: pλ(t) = λp(t) with p′(t) > 0 for all t. The Bridge penalties and the logarithm penalty belong to this category, which also covers many other penalties. We propose the following algorithm to compute the one-step estimator.

Algorithm 1

- Step 1. Create working data by

- Step 2. Apply the LARS algorithm to solve

Then it is not hard to show that

Thus, if , then xj is selected in the final model.
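Algorithm 1 can be sketched for logistic regression as follows. The working-data construction here is our own and may differ in constants from the elided formulas: we write the negative Hessian at the MLE as XTWX with W = diag{πi(1 − πi)}, set X* = W^(1/2)X and y* = X*β(0) so that the quadratic term of (3.3) becomes ½‖y* − X*β‖², and divide column j by p′(|βj(0)|) so that a common L1 penalty nλ applies. We again use coordinate descent in place of LARS, take the L0.5 penalty p(t) = t^0.5 as the type-1 example with an illustrative λ = 0.05, and all names are hypothetical.

```python
import numpy as np


def logistic_mle(X, y, n_iter=50):
    """Un-penalized logistic MLE by Newton-Raphson (assumes the MLE exists)."""
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        prob = 1.0 / (1.0 + np.exp(-X @ beta))
        hess = X.T @ (X * (prob * (1 - prob))[:, None])      # X'WX = minus the Hessian
        step = np.linalg.solve(hess, X.T @ (y - prob))
        beta += step
        if np.max(np.abs(step)) < 1e-10:
            break
    return beta


def lasso_cd(X, y, penalty, n_sweeps=2000, tol=1e-10):
    """Shooting algorithm for min 0.5*||y - X g||^2 + penalty * ||g||_1, started at g = 0."""
    p = X.shape[1]
    g = np.zeros(p)
    col_sq = (X**2).sum(axis=0)
    resid = np.array(y, dtype=float)
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            z = X[:, j] @ resid + col_sq[j] * g[j]
            new = np.sign(z) * max(abs(z) - penalty, 0.0) / col_sq[j]
            resid += X[:, j] * (g[j] - new)
            max_change = max(max_change, abs(new - g[j]))
            g[j] = new
        if max_change < tol:
            break
    return g


def one_step_type1_logistic(X, y, lam, p_prime):
    """Sketch of Algorithm 1 for logistic regression with p_lambda(t) = lam * p(t)."""
    n = X.shape[0]
    beta0 = logistic_mle(X, y)                          # initial estimate beta^(0)
    prob = 1.0 / (1.0 + np.exp(-X @ beta0))
    X_star = X * np.sqrt(prob * (1 - prob))[:, None]    # W^(1/2) X
    y_star = X_star @ beta0                             # 0.5*||y* - X* b||^2 = quadratic term of (3.3) + const
    scale = p_prime(np.abs(beta0))                      # p'(|beta0_j|) > 0 for type-1 penalties
    gamma = lasso_cd(X_star / scale, y_star, penalty=n * lam)   # common L1 penalty after rescaling
    return gamma / scale                                # beta_j = gamma_j / p'(|beta0_j|)


rng = np.random.default_rng(3)
n = 500
X = rng.standard_normal((n, 6))
beta_true = np.array([2.0, -1.5, 0.0, 0.0, 1.0, 0.0])
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-X @ beta_true))).astype(float)
beta_ose = one_step_type1_logistic(X, y, lam=0.05, p_prime=lambda t: 0.5 * t ** -0.5)  # L0.5
print(np.round(beta_ose, 3))
```

Because λ enters only through the common penalty nλ and the rescaled working data do not depend on λ, a path algorithm such as LARS could trace the whole profile in λ; the coordinate-descent sketch solves one λ at a time.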

Type 2: For some penalties, the derivative can be zero. In addition, the regularization parameter λ cannot be separated from the penalty function. The SCAD penalty is a typical example. Let us assume that

We write

We propose the following algorithm to compute β(1).

Algorithm 2

- Step 1a. Create working data by

- Step 1b. Let .

- Step 1c. Let HU be the projection matrix onto the space spanned by . Compute .

- Step 2. Apply the LARS algorithm to solve

- Step 3. Compute . Then it is not hard to show that

Thus, if , then xj is selected in the final model for j ∈ V.
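Algorithm 2 can be sketched for the linear model (3.1) with the SCAD penalty. Since the formulas of Steps 1a–3 are elided, the following reading is an assumption: coefficients with zero weight form U and are profiled out by projecting onto the orthogonal complement of the span of the U columns, the LASSO step runs on the projected V columns rescaled by their weights, and the U coefficients are then recovered by least squares. The sketch uses coordinate descent in place of LARS and hypothetical names; its output should satisfy the Karush–Kuhn–Tucker conditions of (3.1).

```python
import numpy as np


def scad_derivative(t, lam, a=3.7):
    t = np.abs(t)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


def lasso_cd(X, y, penalty, n_sweeps=2000, tol=1e-12):
    """Shooting algorithm for min 0.5*||y - X g||^2 + penalty * ||g||_1, started at g = 0."""
    p = X.shape[1]
    g = np.zeros(p)
    col_sq = (X**2).sum(axis=0)
    resid = np.array(y, dtype=float)
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            z = X[:, j] @ resid + col_sq[j] * g[j]
            new = np.sign(z) * max(abs(z) - penalty, 0.0) / col_sq[j]
            resid += X[:, j] * (g[j] - new)
            max_change = max(max_change, abs(new - g[j]))
            g[j] = new
        if max_change < tol:
            break
    return g


def one_step_scad_profiled(X, y, lam, a=3.7):
    """Sketch of Algorithm 2 for (3.1): U = unpenalized set, V = penalized set."""
    n, p = X.shape
    w = scad_derivative(np.linalg.lstsq(X, y, rcond=None)[0], lam, a)   # weights from OLS
    U, V = np.flatnonzero(w == 0), np.flatnonzero(w > 0)
    Q_u = np.linalg.qr(X[:, U])[0] if U.size else np.zeros((n, 0))    # orthonormal basis of span(X_U)

    def project_out(M):            # (I - H_U) M
        return M - Q_u @ (Q_u.T @ M)

    gamma = lasso_cd(project_out(X[:, V]) / w[V], project_out(y), penalty=n)
    beta = np.zeros(p)
    beta[V] = gamma / w[V]         # undo the column rescaling
    if U.size:                     # least squares for the unpenalized block
        beta[U] = np.linalg.lstsq(X[:, U], y - X[:, V] @ beta[V], rcond=None)[0]
    return beta


rng = np.random.default_rng(4)
X = rng.standard_normal((200, 8))
y = X @ np.array([3, 1.5, 0, 0, 2, 0, 0, 0]) + rng.standard_normal(200)
beta_ose = one_step_scad_profiled(X, y, lam=0.5)
print(np.round(beta_ose, 3))
```

Profiling out U is what lets a LASSO solver, which requires strictly positive penalties after rescaling, handle the zero SCAD weights.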

In both algorithms the LARS step requires the same order of computation as a single OLS fit (Efron et al. 2004), so the one-step estimator is very efficient to compute. Moreover, if the penalty is of type 1, the working data do not depend on λ, so the entire profile of the one-step estimator (as a function of λ) can be constructed efficiently. For SCAD-type penalties we still need to compute the one-step estimator separately for each fixed λ, because the sets U and V can change as λ varies.

5. Numerical Examples

In this section, we assess the finite sample performance of the one-step sparse estimates for linear regression models, logistic regression models and Poisson regression models in terms of model complexity (sparsity) and model error, defined by

$$\mathrm{ME}(\hat\mu) = E\{\hat\mu(x) - \mu(x)\}^2$$

for a selected model μ̂(·), where μ(x) = E(y|x) and the expectation is taken over a new observation x. We compare their performance with that of the SCAD estimator computed by the original LQA algorithm (Fan & Li 2001) and by the perturbed LQA algorithm (Hunter & Li 2005), and with best subset variable selection using AIC and BIC. For a fitted subset model ℳ, the AIC and BIC statistics are of the form

$$-2\sum_{i=1}^{n}\ell_i(\hat\beta_{\mathcal{M}}) + \lambda|\mathcal{M}|,$$

where |ℳ| is the size of the model and λ = 2 and log(n), respectively. Note that BIC is a consistent model selection criterion, while AIC is not. We further demonstrate the proposed methodology by analyzing a real data set.
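The best subset competitors can be sketched by exhaustive search over all 2^p subsets. For the linear model we assume, for this sketch only, the Gaussian log-likelihood with σ² profiled out, so that −2Σℓi(β̂ℳ) equals n log(RSSℳ/n) up to an additive constant; the function name is hypothetical and the model has no intercept, as in Example 1.

```python
import itertools

import numpy as np


def best_subset_ic(X, y, penalty):
    """Exhaustive best subset minimizing n*log(RSS/n) + penalty*|M|; penalty=2 (AIC) or log(n) (BIC)."""
    n, p = X.shape
    best_score, best_subset = n * np.log(np.sum(y**2) / n), ()     # start from the empty model
    for size in range(1, p + 1):
        for subset in itertools.combinations(range(p), size):
            cols = list(subset)
            coef = np.linalg.lstsq(X[:, cols], y, rcond=None)[0]
            rss = np.sum((y - X[:, cols] @ coef) ** 2)
            score = n * np.log(rss / n) + penalty * size
            if score < best_score:
                best_score, best_subset = score, subset
    return best_subset


rng = np.random.default_rng(5)
n = 100
X = rng.standard_normal((n, 8))
y = X @ np.array([3, 1.5, 0, 0, 2, 0, 0, 0]) + rng.standard_normal(n)
aic_model = best_subset_ic(X, y, penalty=2.0)
bic_model = best_subset_ic(X, y, penalty=np.log(n))
print(aic_model, bic_model)
```

The cost is 2^p least squares fits (4096 for the p = 12 of Example 1), which is exactly the computational burden that makes best subset selection impractical in high dimensions. Since log(n) > 2 here, the BIC model can never be larger than the AIC model.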

In our simulation studies, we examine the performance of the one-step sparse estimates with the SCAD penalty, the logarithm penalty (defined in Section 3.3) and the L0.01 penalty. Note that we expect the logarithm penalty and the L0.01 penalty to generate similar one-step sparse estimators. In Tables 1–3, one-step SCAD, one-step LOG and one-step L0.01 stand for the one-step sparse estimates with the SCAD, logarithm and L0.01 penalties, respectively; SCAD and P-SCAD represent the penalized least squares or likelihood estimators with the SCAD penalty using the LQA and perturbed LQA algorithms, respectively; and AIC and BIC denote best subset variable selection with the AIC and BIC criteria, respectively. For best subset variable selection, we exhaustively searched over all possible subsets. We used five-fold cross-validation to select the tuning parameters.

TABLE 1.

Simulation Results for Linear Regression Models.

C and IC are average numbers of coefficients, and Under-fit, Correct-fit and Over-fit are proportions of the 1000 replications (see Example 1 for definitions).

| Method | MRME | C | IC | Under-fit | Correct-fit | Over-fit |
|---|---|---|---|---|---|---|
| n = 50 | | | | | | |
| one-step SCAD | 0.208 | 3.00 | 0.55 | 0.000 | 0.771 | 0.229 |
| one-step LOG | 0.263 | 3.00 | 0.89 | 0.000 | 0.559 | 0.441 |
| one-step L0.01 | 0.262 | 3.00 | 0.90 | 0.000 | 0.555 | 0.445 |
| SCAD | 0.233 | 3.00 | 0.83 | 0.000 | 0.682 | 0.318 |
| P-SCAD | 0.235 | 3.00 | 0.64 | 0.000 | 0.701 | 0.299 |
| AIC | 0.660 | 3.00 | 1.84 | 0.000 | 0.195 | 0.805 |
| BIC | 0.401 | 3.00 | 0.63 | 0.000 | 0.576 | 0.424 |
| n = 100 | | | | | | |
| one-step SCAD | 0.234 | 3.00 | 0.55 | 0.000 | 0.784 | 0.216 |
| one-step LOG | 0.281 | 3.00 | 0.71 | 0.000 | 0.657 | 0.343 |
| one-step L0.01 | 0.281 | 3.00 | 0.71 | 0.000 | 0.657 | 0.343 |
| SCAD | 0.252 | 3.00 | 0.75 | 0.000 | 0.732 | 0.268 |
| P-SCAD | 0.262 | 3.00 | 0.63 | 0.000 | 0.711 | 0.289 |
| AIC | 0.676 | 3.00 | 1.63 | 0.000 | 0.192 | 0.808 |
| BIC | 0.337 | 3.00 | 0.32 | 0.000 | 0.728 | 0.272 |

TABLE 3.

Simulation Results for Poisson Regression Models

C and IC are average numbers of coefficients, and Under-fit, Correct-fit and Over-fit are proportions of replications (see Example 1 for definitions).

| Method | MRME | C | IC | Under-fit | Correct-fit | Over-fit |
|---|---|---|---|---|---|---|
| n = 60 | | | | | | |
| one-step SCAD | 0.284 | 2.99 | 1.35 | 0.011 | 0.386 | 0.603 |
| one-step LOG | 0.260 | 2.99 | 1.10 | 0.006 | 0.460 | 0.534 |
| one-step L0.01 | 0.260 | 2.99 | 1.10 | 0.006 | 0.460 | 0.534 |
| SCAD | 0.292 | 3.00 | 2.75 | 0.003 | 0.095 | 0.902 |
| P-SCAD | 0.327 | 2.91 | 1.72 | 0.055 | 0.270 | 0.675 |
| AIC | 0.496 | 3.00 | 1.40 | 0.001 | 0.265 | 0.734 |
| BIC | 0.228 | 3.00 | 0.34 | 0.002 | 0.735 | 0.263 |
| n = 120 | | | | | | |
| one-step SCAD | 0.271 | 3.00 | 1.00 | 0.001 | 0.552 | 0.447 |
| one-step LOG | 0.266 | 3.00 | 0.76 | 0.000 | 0.603 | 0.397 |
| one-step L0.01 | 0.266 | 3.00 | 0.77 | 0.000 | 0.601 | 0.399 |
| SCAD | 0.342 | 3.00 | 2.36 | 0.000 | 0.174 | 0.826 |
| P-SCAD | 0.356 | 2.95 | 1.60 | 0.037 | 0.322 | 0.641 |
| AIC | 0.594 | 3.00 | 1.45 | 0.000 | 0.235 | 0.765 |
| BIC | 0.277 | 3.00 | 0.25 | 0.000 | 0.790 | 0.210 |

Example 1

(Linear Model) In this example, simulation data were generated from the linear regression model

$$y = x^T\beta + \varepsilon,$$

where β = (3, 1.5, 0, 0, 2, 0, 0, 0, 0, 0, 0, 0)T, ε ∼ N(0, 1), and x follows a multivariate normal distribution with zero mean and covariance between the ith and jth elements equal to $\rho^{|i-j|}$ with ρ = 0.5. In our simulation, the sample size n is set to 50 and 100. For each case, we repeated the simulation 1000 times.

For the linear model, the model error of μ̂ = xTβ̂ is ME(μ̂) = (β̂ − β)T E(xxT)(β̂ − β). Simulation results are summarized in Table 1, in which MRME stands for the median of ratios of the ME of a selected model to that of the ordinary least squares estimate under the full model. The columns ’C’ and ’IC’ are measures of model complexity. Column ’C’ shows the average number of nonzero coefficients correctly estimated to be nonzero, and column ’IC’ presents the average number of zero coefficients incorrectly estimated to be nonzero. The column labeled ’Under-fit’ reports the proportion of the 1000 replications in which at least one nonzero coefficient is excluded. Likewise, the columns ’Correct-fit’ and ’Over-fit’ report the proportions of replications that select exactly the true subset model, and that include all three significant variables together with some noise variables, respectively.
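For the linear model, the model error above depends only on β̂ − β and E(xxT), which in Example 1 is the covariance matrix with entries ρ^|i−j| because x has mean zero. A minimal Python sketch of ME and MRME (hypothetical names):

```python
import numpy as np


def ar1_covariance(p, rho=0.5):
    """E(xx') for Example 1: entries rho**|i - j| (x has mean zero)."""
    idx = np.arange(p)
    return rho ** np.abs(idx[:, None] - idx[None, :])


def model_error(beta_hat, beta, cov):
    """ME = (beta_hat - beta)' E(xx') (beta_hat - beta)."""
    d = np.asarray(beta_hat, dtype=float) - np.asarray(beta, dtype=float)
    return float(d @ cov @ d)


def mrme(me_selected, me_full_ols):
    """Median over replications of ME(selected model) / ME(full-model OLS)."""
    return float(np.median(np.asarray(me_selected) / np.asarray(me_full_ols)))


cov = ar1_covariance(12)
beta = np.array([3, 1.5, 0, 0, 2, 0, 0, 0, 0, 0, 0, 0], dtype=float)
print(model_error(beta + np.eye(12)[0], beta, cov))                   # 1.0
print(model_error(beta + np.eye(12)[0] + np.eye(12)[1], beta, cov))   # 1 + 1 + 2 * 0.5 = 3.0
print(mrme([1.0, 2.0, 3.0], [2.0, 2.0, 2.0]))                         # median of 0.5, 1, 1.5 = 1.0
```

Using the median of ratios, rather than a ratio of means, makes MRME robust to the occasional replication in which the full-model OLS fit happens to have a very small model error.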

As can be seen from Table 1, all variable selection procedures dramatically reduce model error. One-step SCAD has the smallest model error among all competitors, followed by the SCAD and perturbed-SCAD. In terms of model error, penalized least squares methods with concave penalties outperform the best subset selection. In terms of sparsity, one-step SCAD also has the highest probability of correct fit. The SCAD penalty performs better than the other penalties. One-step LOG and one-step L0.01 perform very similarly, which numerically confirms the assertion in Proposition 2. It is also interesting to note that a simulation study by Leng, Lin & Wahba (2006) showed that in this example the LASSO did not consistently select the true model when optimizing the prediction error. In contrast, the nonconcave penalty methods and their one-step estimates all work very well in this example because of their oracle properties.

Example 2

(Logistic regression) In this example, we simulated 1000 data sets consisting of n = 200 observations from the model

$$y \mid x \sim \mathrm{Bernoulli}\{p(x^T\beta)\},$$

where p(u) = exp(u)/(1 + exp(u)), and β is the same as in Example 1. The covariate vector x is created as follows. We first generate z from a 12-dimensional multivariate normal distribution with zero mean and covariance between the ith and jth elements equal to $\rho^{|i-j|}$ with ρ = 0.5. Then we set $x_{2k-1} = z_{2k-1}$ and $x_{2k} = I(z_{2k} < 0)$ for k = 1, …, 6, where I(·) is the indicator function. Thus, x has both continuous and binary components.
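A minimal NumPy sketch of this data-generating scheme follows. Note the shift from the 1-based indices in the text to 0-based array columns: $x_{2k-1}$ maps to columns 0, 2, …, 10 and $x_{2k}$ to columns 1, 3, …, 11. The function names and the seed are hypothetical choices for illustration.

```python
import numpy as np


def make_logistic_design(n, rho=0.5, rng=None):
    """Covariates for Example 2: z ~ N(0, Sigma) with Sigma_ij = rho**|i-j| and p = 12.

    In 0-based columns, the even columns 0, 2, ..., 10 stay continuous (x_{2k-1} = z_{2k-1})
    and the odd columns 1, 3, ..., 11 become indicators (x_{2k} = I(z_{2k} < 0)).
    """
    rng = np.random.default_rng() if rng is None else rng
    p = 12
    idx = np.arange(p)
    cov = rho ** np.abs(idx[:, None] - idx[None, :])
    z = rng.multivariate_normal(np.zeros(p), cov, size=n)
    x = z.copy()
    x[:, 1::2] = (z[:, 1::2] < 0).astype(float)
    return x


def simulate_logistic(n, beta, rng):
    """Draw (x, y) with y | x ~ Bernoulli{p(x^T beta)}."""
    x = make_logistic_design(n, rng=rng)
    prob = 1.0 / (1.0 + np.exp(-(x @ beta)))  # equal to exp(u) / (1 + exp(u))
    y = rng.binomial(1, prob)
    return x, y


beta = np.array([3, 1.5, 0, 0, 2, 0, 0, 0, 0, 0, 0, 0], dtype=float)
x, y = simulate_logistic(200, beta, np.random.default_rng(1))
```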

Unlike the model error for linear regression models, there is no closed form of model error for the logistic regression model. In this example, the model error was estimated using Monte Carlo simulation. Simulation results are summarized in Table 2, in which MRME stands for median of ratios of ME of a selected model to that of the un-penalized maximum likelihood estimate under the full model, and other notation is the same as that in Table 1.
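The text does not spell out the logistic model error. The sketch below assumes, by analogy with the Poisson definition in Example 3, that ME(β̂) = E{p(xᵀβ̂) − p(xᵀβ)}², and approximates the expectation by averaging over a large independent sample of covariates drawn from the design distribution. The names `mc_model_error_logistic` and `x_pool` are hypothetical.

```python
import numpy as np


def logistic(u):
    """p(u) = exp(u) / (1 + exp(u)), written in the equivalent form 1 / (1 + exp(-u))."""
    return 1.0 / (1.0 + np.exp(-u))


def mc_model_error_logistic(beta_hat, beta, x_pool):
    """Monte Carlo estimate of the assumed model error E{p(x^T beta_hat) - p(x^T beta)}^2.

    x_pool holds a large independent sample of covariate vectors (one per row) from
    the design distribution; the average over its rows replaces the expectation.
    """
    diff = logistic(x_pool @ beta_hat) - logistic(x_pool @ beta)
    return float(np.mean(diff ** 2))
```

In a simulation, `x_pool` could be drawn once with the same scheme used for the simulated covariates and reused for every replication, so that the ME ratios are computed against a common reference sample.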

TABLE 2.

Simulation Results for Logistic Regression Model.

| Method | MRME | C | IC | Under-fit | Correct-fit | Over-fit |
|---|---|---|---|---|---|---|
| one-step SCAD | 0.238 | 2.95 | 0.82 | 0.051 | 0.565 | 0.384 |
| one-step LOG | 0.229 | 2.97 | 0.61 | 0.029 | 0.518 | 0.453 |
| one-step L0.01 | 0.230 | 2.97 | 0.61 | 0.028 | 0.516 | 0.456 |
| SCAD | 0.238 | 2.92 | 0.51 | 0.076 | 0.706 | 0.218 |
| P-SCAD | 0.237 | 2.92 | 0.50 | 0.079 | 0.707 | 0.214 |
| AIC | 0.596 | 2.98 | 1.56 | 0.021 | 0.216 | 0.763 |
| BIC | 0.208 | 2.95 | 0.22 | 0.053 | 0.800 | 0.147 |

From Table 2, it can be seen that the best subset variable selection with the BIC criterion performs the best; however, its computational cost is much higher than that of the nonconcave penalized likelihood approach. One-step sparse estimates require the least computational cost. It is interesting to see from Table 2 that the one-step SCAD performs as well as the fully iterative SCAD estimates computed by the LQA and perturbed LQA algorithms in terms of model error. The one-step estimates with the logarithm and L0.01 penalties perform very well: they have lower model error and lower rates of under-fit than the estimates with the SCAD penalty.

Example 3

(Poisson log-linear regression) In this example, we considered a Poisson regression model

$$y \mid x \sim \mathrm{Poisson}\{\lambda(x^T\beta)\},$$

where λ(u) = exp(u), β = (1.2, 0.6, 0, 0, 0.8, 0, 0, 0, 0, 0, 0, 0)T, and x is the same as in Example 1. We let the sample size be 60 and 120, and for each case we simulated 1000 data sets. The model error is $\mathrm{ME}(\hat\beta) = E\{\exp(x^T\hat\beta) - \exp(x^T\beta)\}^2$. Since x is normally distributed, this model error has a closed form, which follows from the moment generating function of the normal distribution. Simulation results are summarized in Table 3, in which notation is the same as that in Table 2.
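For x ∼ N(0, Σ), the moment generating function gives E{exp(xᵀa)} = exp(aᵀΣa/2). Expanding the square in the model error and applying this to each term yields ME(β̂) = exp(2β̂ᵀΣβ̂) − 2 exp{(β̂ + β)ᵀΣ(β̂ + β)/2} + exp(2βᵀΣβ). The short function below evaluates this expression; it is our own derivation offered as a sketch, and the function name is hypothetical.

```python
import numpy as np


def poisson_model_error(beta_hat, beta, sigma_x):
    """Closed-form E{exp(x^T beta_hat) - exp(x^T beta)}^2 for x ~ N(0, sigma_x).

    Each term of the expanded square uses E exp(x^T a) = exp(a^T sigma_x a / 2).
    """
    bh = np.asarray(beta_hat, dtype=float)
    b = np.asarray(beta, dtype=float)
    s = bh + b
    return float(np.exp(2.0 * (bh @ sigma_x @ bh))
                 - 2.0 * np.exp(0.5 * (s @ sigma_x @ s))
                 + np.exp(2.0 * (b @ sigma_x @ b)))
```

A Monte Carlo average of the squared difference over draws of x gives a quick numerical check of the formula.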

From Table 3, we can see that the one-step SCAD sparse estimate outperforms the SCAD computed by both the original LQA algorithm and the perturbed LQA algorithm in terms of model error, model complexity and the rate of correct fit. The best subset variable selection has the best rate of correct fit for both n = 60 and n = 120. The correct-fit rate of the one-step sparse estimates becomes much higher when the sample size increases from 60 to 120. This is not the case for the SCAD, P-SCAD and best subset variable selection procedures.

Example 4

(Data analysis) In this example, we demonstrate our one-step estimation methodology using the burns data, collected by the General Hospital Burn Center at the University of Southern California. The data set consists of 981 observations. Fan & Li (2001) analyzed this data set as an illustration of the nonconcave penalized likelihood methods. As in Fan & Li (2001), the binary response variable is the indicator of whether the victim survived his or her burns. Four covariates are considered: x1 = age, x2 = sex, x3 = log(burn area + 1) and the binary variable x4 = oxygen (0 = normal, 1 = abnormal). To reduce modeling bias, quadratic terms of x1 and x3 and all interaction terms were included in the logistic regression model. We computed the one-step estimators with the SCAD and logarithm penalties, choosing the regularization parameter by 5-fold cross-validation. The logarithms of the selected λ are −0.356 and −7.095 for the one-step estimates with the SCAD and logarithm penalties, respectively.

With the selected regularization parameter, the fitted one-step SCAD sparse estimate yields the following model

| (5.1) |

where Y = 1 indicates that the victim survived his or her burns. This model indicates that only x1 and x3 are significant. These are the same variables as those in the model selected by the SCAD with the LQA algorithm, as reported in Fan & Li (2001). The one-step fit with the logarithm penalty is

| (5.2) |

It selects more variables than (5.1). This is consistent with Table 2, which shows that the one-step fit with the logarithm penalty has a higher rate of over-fit than the one-step SCAD estimator. The one-step L0.01 fit is almost identical to (5.2).

6. Proofs

6.1. Proof of Theorem 1

At the kth step, define the following function of β, indexed by β(k):

Observe that Q(β(k)) = G(β(k)|β(k)), and

By the concavity of the penalty function pλ(·), we have

where the right derivative of pλ(·) is used at any point where pλ(·) is not differentiable. Thus it follows that

The above inequality becomes strict (">") if pλ(·) is strictly concave. Moreover, it is easy to check that

Hence we have that

This completes the proof.
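The ascent property can be checked numerically in the simplest setting. The sketch below assumes an orthonormal (canonical) design, so the penalized least-squares problem, written as a loss to minimize (the negative of the quantity the LLA maximizes), separates into ½Σ(zj − θj)² + Σ pλ(|θj|) with the SCAD penalty of Fan & Li (2001) and a = 3.7. Each LLA step then reduces to soft-thresholding zj at the weight p′λ(|θj(k)|), with the right derivative λ used at zero. The starting point θ(0) = z and all function names are our illustrative choices.

```python
import numpy as np


def scad_penalty(t, lam, a=3.7):
    """SCAD penalty p_lam(|t|) of Fan & Li (2001)."""
    t = np.abs(t)
    middle = -(t ** 2 - 2 * a * lam * t + lam ** 2) / (2 * (a - 1))
    return np.where(t <= lam, lam * t,
                    np.where(t <= a * lam, middle, (a + 1) * lam ** 2 / 2))


def scad_deriv(t, lam, a=3.7):
    """p'_lam(|t|); at t = 0 this is the right derivative, lam."""
    t = np.abs(t)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


def objective(theta, z, lam, a=3.7):
    """Penalized loss for an orthonormal design (to be minimized)."""
    return float(0.5 * np.sum((z - theta) ** 2) + np.sum(scad_penalty(theta, lam, a)))


def lla_orthonormal(z, lam, n_steps=10, a=3.7):
    """Run LLA from theta = z; each step soft-thresholds z at the current SCAD weights."""
    theta = z.copy()
    history = [objective(theta, z, lam, a)]
    for _ in range(n_steps):
        w = scad_deriv(theta, lam, a)                        # linearization weights
        theta = np.sign(z) * np.maximum(np.abs(z) - w, 0.0)  # minimizer of the linearized loss
        history.append(objective(theta, z, lam, a))
    return theta, history


rng = np.random.default_rng(5)
z = 2.0 * rng.standard_normal(50)
theta_1, _ = lla_orthonormal(z, lam=1.0, n_steps=1)
theta_k, hist = lla_orthonormal(z, lam=1.0, n_steps=20)
```

The recorded losses in `hist` never increase, mirroring the inequality chain in the proof. Because the SCAD derivative equals λ on [0, λ], a single step already sets every coordinate with |zj| ≤ λ exactly to zero, while coordinates with |zj| > aλ are left unshrunk.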

6.2. Proof of Theorem 2

Without loss of generality, consider t > t0. It suffices to show

| (6.1) |

Note that

Thus (6.1) is equivalent to

| (6.2) |

Take a sequence {tk} such that t0 < tk < t and tk → t0. By the convexity of ϕ(·), we have

| (6.3) |

Since ϕ(·) is a majorization of pλ(·) at t0, we have

| (6.4) |

Combining (6.3) and (6.4), we obtain

Taking the limit in the above inequality we obtain (6.2). Similar arguments can be applied to the case of t < t0.

6.3. Proof of Theorem 3

It suffices to show that

| (6.5) |

Then (2.13) is equivalent to (2.7), which in turn shows that LLA is identical to the EM algorithm.

By p(τj|β,y) ∝ p(βj|τj)π(τj), we have

Hence (6.5) is proven.

By the non-negativity of H(t), it is easy to see that exp(−npλ(|β|)) is a strictly decreasing function of |β|; thus pλ(·) is strictly increasing. To show that pλ(·) is unbounded, we use the dominated (or monotone) convergence theorem to obtain exp(−npλ(|β|)) → 0 as |β| → ∞. Hence pλ(·) is unbounded.

6.4. Proof of Theorem 4 and Theorem 5

Theorem 4 can be proven by the same argument as Theorem 5; therefore we prove only Theorem 5.

Let us define

Let û(n) = arg min[Vn(u) − Vn(0)], then . By Slutsky’s Theorem, it follows that

| (6.6) |

| (6.7) |

We can write T3 as

Note that

We now examine the behavior of . First consider the case where . When β0j ≠ 0, since , continuous mapping theorem says that . Hence yields T3j →P 0. When β0j = 0, T3j = 0 if uj = 0. For uj ≠ 0, we have

By , then from we see T3j →P ∞.

For the SCAD penalty, we reach similar conclusions. Note that p′λn(θ) = 0 if θ > aλn (a = 3.7). Thus when , then λn → 0 ensures . When β0j = 0, T3j = 0 if uj = 0. For uj ≠ 0, we have . Also note that p′λn(θ) = λn for all 0 < θ < λn, which implies that if with probability tending to one. Thus T3j → ∞.
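The dichotomy used here for the SCAD penalty (a weight that is exactly zero for nonzero coefficients and a scaled weight that diverges for zero coefficients) can be seen in a small simulation. The sketch assumes a √n-consistent initial estimator, modelled crudely as β0 + N(0, 1)/√n, and the illustrative rate λn = n^(−1/4), so that λn → 0 while √n λn → ∞; neither choice comes from the paper, and the function names are hypothetical.

```python
import numpy as np


def scad_deriv(t, lam, a=3.7):
    """SCAD derivative for t >= 0: lam on [0, lam], (a*lam - t)_+ / (a - 1) beyond."""
    t = np.abs(t)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


def scaled_one_step_weights(n, beta0, rng, a=3.7):
    """Return sqrt(n) * p'_{lam_n}(|beta_init_j|) under illustrative assumptions.

    Assumed, not from the paper: beta_init = beta0 + N(0, 1) / sqrt(n) mimics a
    root-n-consistent initial estimator, and lam_n = n**(-1/4).
    """
    lam_n = n ** -0.25
    beta_init = beta0 + rng.standard_normal(beta0.size) / np.sqrt(n)
    return np.sqrt(n) * scad_deriv(beta_init, lam_n, a)


rng = np.random.default_rng(7)
beta0 = np.array([1.0, 0.5, 0.0, 0.0])
w_small = scaled_one_step_weights(10 ** 4, beta0, rng)
w_large = scaled_one_step_weights(10 ** 8, beta0, rng)
```

For the two nonzero coefficients the weights are exactly zero at both sample sizes, while for the two zero coefficients the scaled weight equals n^(1/4) (10 at n = 10⁴, 100 at n = 10⁸), mirroring the two cases analysed above.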

Let us write . Then we have

| (6.8) |

Denote . Combining (6.6), (6.7) and (6.8) we conclude that for each fixed u,

The unique minimum of V(u) is and u20 = 0. Vn(u) − Vn(0) is a convex function of u. By epiconvergence (Geyer 1994, Knight & Fu 2000), we conclude that

| (6.9) |

| (6.10) |

Since W10 ∼ N(0, I1(β10)), (6.9) is equivalent to

Note that (6.10) implies that . We now show that, with probability tending to one, β̂ = 0; this is a stronger statement than (6.10). It suffices to prove that if β0j = 0, then P(β̂j(ose) ≠ 0) → 0. Assume β̂j(ose) ≠ 0. By the KKT conditions of (3.3), we must have

| (6.11) |

We have shown that when β0j = 0, the right-hand side goes to ∞ in probability. However, the left-hand side can be written as

By (6.9) and (6.10), the first term converges in distribution to a normal random variable, and so does the second term. Thus

7. Discussion

In this article we have proposed a new algorithm based on the LLA for maximizing the nonconcave penalized likelihood. We further suggest using the one-step LLA estimator as the final estimate, because the one-step estimator naturally adopts a sparse representation and enjoys the oracle properties. In addition, the one-step sparse estimate dramatically reduces the computational cost relative to fully iterative methods. The simulations show that the one-step sparse estimates have very competitive finite-sample performance.
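For the linear model, the one-step SCAD estimate reduces to a single weighted L1-penalized least-squares fit with weights p′λ(|β̂j(0)|) computed once from an initial estimator. The sketch below assumes σ = 1 (so the objective, divided by n, is (2n)⁻¹‖y − Xβ‖² + Σ wj|βj|), uses OLS as the initial estimator, and solves the weighted problem by plain cyclic coordinate descent. The solver, the function names and λ = 0.5 are our hypothetical choices; any weighted L1 solver could be substituted, and in practice λ would be tuned, for example by cross-validation as in Example 4.

```python
import numpy as np


def scad_deriv(t, lam, a=3.7):
    """SCAD derivative p'_lam(|t|), with the right derivative lam at t = 0."""
    t = np.abs(t)
    return np.where(t <= lam, lam, np.maximum(a * lam - t, 0.0) / (a - 1))


def weighted_lasso_cd(X, y, w, n_sweeps=1000, tol=1e-10):
    """Minimize (1/(2n))||y - X beta||^2 + sum_j w_j |beta_j| by cyclic coordinate descent."""
    n, p = X.shape
    beta = np.zeros(p)
    r = np.asarray(y, dtype=float).copy()   # current residual y - X beta
    col_sq = np.sum(X ** 2, axis=0) / n
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(p):
            old = beta[j]
            rho = X[:, j] @ r / n + col_sq[j] * old   # correlation with the partial residual
            new = np.sign(rho) * max(abs(rho) - w[j], 0.0) / col_sq[j]
            if new != old:
                r -= X[:, j] * (new - old)
                beta[j] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return beta


def one_step_scad(X, y, lam, a=3.7):
    """One-step SCAD sketch: OLS start, SCAD weights, one weighted-L1 fit."""
    beta_init, *_ = np.linalg.lstsq(X, y, rcond=None)
    w = scad_deriv(beta_init, lam, a)   # |beta_init| > a * lam gives weight 0 (no shrinkage)
    return weighted_lasso_cd(X, y, w)


# Example 1 design with n = 200 and a hypothetical lam = 0.5 (seed arbitrary).
rng = np.random.default_rng(0)
beta = np.array([3, 1.5, 0, 0, 2, 0, 0, 0, 0, 0, 0, 0], dtype=float)
idx = np.arange(beta.size)
cov = 0.5 ** np.abs(idx[:, None] - idx[None, :])
X = rng.multivariate_normal(np.zeros(beta.size), cov, size=200)
y = X @ beta + rng.standard_normal(200)
beta_one_step = one_step_scad(X, y, lam=0.5)
```

Large initial coefficients receive zero weight and are left unshrunk, while small ones receive weight λ and are typically set exactly to zero, which is how the single step produces a sparse fit.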

We have concentrated on the one-step sparse estimate for linear models and likelihood-based models, including generalized linear models. The proposed one-step sparse estimation method can be easily extended to variable selection in survival data analysis using penalized partial likelihood (Fan & Li 2002, Cai et al. 2005), variable selection for longitudinal data (Fan & Li 2004) and variable selection in semiparametric regression modeling (Li & Liang 2007).


Acknowledgements

Li’s research was supported by an NSF grant DMS-0348869 and partially supported by a National Institute on Drug Abuse (NIDA) grant P50 DA10075. The authors sincerely thank the Co-Editor, the Associate Editor and the referees for constructive comments that substantially improved an earlier version of this paper.

Footnotes

AMS 2000 subject classifications. 62J05, 62J07

REFERENCES

- Antoniadis A, Fan J. Regularization of wavelet approximations. Journal of the American Statistical Association. 2001;96:939–967.

- Bickel PJ. One-step Huber estimates in the linear model. Journal of the American Statistical Association. 1975;70:428–434.

- Black A, Zisserman A. Visual Reconstruction. Cambridge, MA: MIT Press; 1987.

- Breiman L. Heuristics of instability and stabilization in model selection. The Annals of Statistics. 1996;24:2350–2383.

- Cai J, Fan J, Li R, Zhou H. Variable selection for multivariate failure time data. Biometrika. 2005;92:303–316. doi: 10.1093/biomet/92.2.303.

- Cai J, Fan J, Zhou H, Zhou Y. Marginal hazard models with varying-coefficients for multivariate failure time data. The Annals of Statistics. 2006. To appear.

- Cai Z, Fan J, Li R. Efficient estimation and inferences for varying-coefficient models. Journal of the American Statistical Association. 2000;95:888–902.

- Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression. The Annals of Statistics. 2004;32:407–499.

- Fan J, Chen J. One-step local quasi-likelihood estimation. Journal of the Royal Statistical Society, Series B. 1999;61:927–943.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association. 2001;96:1348–1360.

- Fan J, Li R. Variable selection for Cox’s proportional hazards model and frailty model. The Annals of Statistics. 2002;30:74–99.

- Fan J, Li R. New estimation and model selection procedures for semiparametric modeling in longitudinal data analysis. Journal of the American Statistical Association. 2004;99:710–723.

- Fan J, Li R. Statistical challenges with high dimensionality: Feature selection in knowledge discovery. Proceedings of the Madrid International Congress of Mathematicians 2006. To appear.

- Fan J, Peng H. On non-concave penalized likelihood with diverging number of parameters. The Annals of Statistics. 2004;32:928–961.

- Fan J, Lin H, Zhou Y. Local partial likelihood estimation for lifetime data. The Annals of Statistics. 2006;34:290–325.

- Frank I, Friedman J. A statistical view of some chemometrics regression tools. Technometrics. 1993;35:109–148.

- Fu W. Penalized regression: The bridge versus the lasso. Journal of Computational and Graphical Statistics. 1998;7:397–416.

- Geyer C. On the asymptotics of constrained M-estimation. The Annals of Statistics. 1994;22:1993–2010.

- Heiser W. Convergent computation by iterative majorization: Theory and applications in multidimensional data analysis. Oxford: Clarendon Press; 1995.

- Hunter D, Li R. Variable selection using MM algorithms. The Annals of Statistics. 2005;33:1617–1642. doi: 10.1214/009053605000000200.

- Knight K, Fu W. Asymptotics for lasso-type estimators. The Annals of Statistics. 2000;28:1356–1378.

- Lange K. A gradient algorithm locally equivalent to the EM algorithm. Journal of the Royal Statistical Society, Series B. 1995;57:425–437.

- Lange K, Hunter D, Yang I. Optimization transfer using surrogate objective functions (with discussion). Journal of Computational and Graphical Statistics. 2000;9:1–59.

- Lehmann E, Casella G. Theory of Point Estimation. 2nd ed. Springer; 1998.

- Leng C, Lin Y, Wahba G. A note on the lasso and related procedures in model selection. Statistica Sinica. 2006;16:1273–1284.

- Li R, Liang H. Variable selection in semiparametric regression modeling. The Annals of Statistics. 2007. doi: 10.1214/009053607000000604.

- Mike W. Outlier models and prior distributions in Bayesian linear regression. Journal of the Royal Statistical Society, Series B. 1984;46:431–439.

- Miller A. Subset Selection in Regression. 2nd ed. London: Chapman and Hall; 2002.

- Osborne M, Presnell B, Turlach B. A new approach to variable selection in least squares problems. IMA Journal of Numerical Analysis. 2000;20(3):389–403.

- Robinson P. The stochastic difference between econometrics and statistics. Econometrica. 1988;56:531–547.

- Rosset S, Zhu J. Piecewise linear regularized solution paths. Technical report, Department of Statistics, University of Michigan; 2004.

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B. 1996;58:267–288.

- Wu Y. Optimization transfer using surrogate objective functions: discussion. Journal of Computational and Graphical Statistics. 2000;9:32–34.

- Yuan M, Lin Y. Efficient empirical Bayes variable selection and estimation in linear models. Journal of the American Statistical Association. 2005;100:1215–1225.

- Yuan M, Lin Y. Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society, Series B. 2006;68:49–67.

- Zou H, Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society, Series B. 2005;67:301–320.
