Abstract

Objectives. We sought to identify factors believed to facilitate or hinder evidence-based practice (EBP) implementation in public mental health service systems as a step in developing theory to be tested in future studies.

Methods. Focusing across levels of an entire large public sector mental health service system for youths, we engaged participants from 6 stakeholder groups: county officials, agency directors, program managers, clinical staff, administrative staff, and consumers.

Results. Participants generated 105 unique statements identifying implementation barriers and facilitators. Participants rated each statement on importance and changeability (i.e., the degree to which each barrier or facilitator is considered changeable). Data analyses distilled statements into 14 factors or dimensions. Descriptive analyses suggest that perceptions of importance and changeability varied across stakeholder groups.

Conclusions. Implementation of EBP is a complex process. Cross-system–level approaches are needed to bring divergent and convergent perspectives to light. Examples include agency and program directors facilitating EBP implementation by supporting staff, actively sharing information with policymakers and administrators about EBP effectiveness and fit with clients' needs and preferences, and helping clinicians to present and deliver EBPs and address consumer concerns.

As the evidence base for health care improves, a concomitant obligation arises to ensure that community-based health care providers combine the best scientific evidence with clinical expertise and consumer preferences—that is, to engage in evidence-based practice (EBP).1–3 Effective treatment practices often take 15 to 20 years to diffuse into common practice,2,4,5 a delay that causes unnecessary costs and suffering; this is a critical concern.6,7 The slow integration of scientific evidence into practice has particularly serious implications for public sector mental health systems that serve many of the most vulnerable individuals and families. Members of these populations often have difficulty accessing services and have few alternatives if treatments are not effective. It is critical that public sector mental health agencies implement and utilize the most effective services best suited to system, agency, provider, and consumer clinical and contextual circumstances.

In keeping with the National Institutes of Health, we define implementation as “the use of strategies to introduce or change evidence-based health interventions within specific settings”8 and support the need for implementation and translational research.9 There is growing interest in identifying theoretical models of key factors likely to affect implementation of EBPs.10–12 Much of the existing evidence is from outside the United States13–15 and from related studies that take place outside of health care settings.16,17

We focused on identifying factors likely to impact implementation of EBPs in public sector mental health settings by deriving data from multiple stakeholder groups, ranging from policymakers and funders to consumers. This approach values research evidence for intervention efficacy and effectiveness, considers the context into which practices are to be implemented, and strengthens the evidence–policy interface.18–21 We then conducted analyses to create a model of implementation barriers and facilitators that can serve as a heuristic for policymakers and researchers and can be tested in real-world settings. This inquiry thus complements recent work examining factors affecting EBP implementation at the state level22 and for other public sectors such as child welfare systems.23,24

This discussion raises the question of the meaning of evidence when one considers multiple stakeholder perspectives across system levels. We define a stakeholder as someone involved with the mental health service system by virtue of employment by a mental health authority, agency, or program, or via receiving mental health services. Distinct stakeholders may value different types of evidence.25,26 What intervention developers and efficacy researchers overlook (e.g., system and organizational context)19,27 may be as significant as what they consider (i.e., treatment effects). Because the present study bridges policy, management, clinician, and consumer perspectives, a closer look at this issue is warranted.

Scholars have identified multiple types of evidence used in making policy decisions including research, knowledge, ideas, political factors, and economic factors, and have determined, for instance, that researchers and policymakers may have very different agendas and decision-making processes.20,28 In short, engaging stakeholders across system levels is needed to identify potential barriers and facilitators because EBPs must integrate varying perspectives regarding research evidence, clinical expertise, and judgment, and fit with consumer choice, preference, and culture.2,29

Research conducted in a wide range of settings (e.g., health, mental health, child welfare, substance abuse treatment, business) suggests several factors likely to affect implementation of EBPs as well as other forms of organizational change. For example, changes are more likely to be implemented if they have demonstrated benefits for the adopting organizations.30 Conversely, higher perceived costs undermine change.30,31 Change is more likely to occur and persist if it fits the existing norms and processes of an organization.30–34 Supportive resources and leadership also make change much more likely to occur within organizations,35 perhaps because both ongoing training and incentives are generally necessary to support behavioral change.36–39 Change is also more likely if the individuals implementing it believe that doing so is in their best interest.30,35 Studies in the private sector have found attitudes toward organizational change to be important in the dynamics of innovation.40

Findings from research on EBP implementation in public sector agencies suggest many commonalities with factors identified in other organizational settings.41 Recent studies conducted with public sector child-welfare agencies suggest that service providers must account for intervention fit, in conjunction with intervention preference and the service delivery context.23 As in other domains, leadership emerges as salient in the public sector, with recent research showing that positive leadership in mental health agencies is associated with more favorable clinician attitudes toward adopting EBPs.42

In some respects public sector agencies have distinctive challenges that may affect EBP implementation. First, public sector service delivery is embedded in many-layered systems.43,44 These include not only the individuals most directly involved—the consumers and clinicians—but also program managers, agency directors, and local, state, and federal policymakers who may structure organization and financing in ways more or less conducive to EBPs. One reason quality improvement may not build on evidence-based models is the challenge of satisfying such diverse stakeholders.45 For example, there are marked differences in the ways that researchers and policymakers develop and use information for decision-making18,26; although models for linking research to action are being developed,46,47 they still require empirical examination to determine their viability.

The growing concern with moving research to practice makes it crucial to understand how different stakeholders perceive factors relevant to EBP implementation. As Hoagwood and Olin observe, “The science base must be made usable. To do so will require partnerships among scientists, families, providers, and other stakeholders.”12(p764) We focused on preliminary identification of implementation factors in public sector mental health services. We used concept mapping—a systems-based methodology48 that supports participatory public health research48,49—to identify and examine the perspectives of cross-system level stakeholders on implementation barriers and facilitators. The purpose of this research was to: (1) identify factors likely to facilitate or hinder EBP implementation in a large public sector mental health service system and (2) ascertain their perceived importance and changeability (i.e., the degree to which each barrier or facilitator is considered changeable), in order to provide a conceptual and heuristic model for hypotheses to be tested in future studies.

METHODS

The study took place in San Diego County, California, the sixth-largest county in the United States.50 Working with County Children's Mental Health officials, public agency directors, and program managers, we identified 32 individuals representing a diversity of organizational levels, and a broad range of mental health agencies and programs including outpatient, day treatment, case management, and residential. One participant changed jobs and withdrew, leaving 31 participants: 5 county mental health officials, 5 agency directors, 6 program managers, 7 clinicians, 3 administrative staff, and 5 mental health service consumers (i.e., parents of children receiving services).

The majority of the participants were women (61.3%) and ages ranged from 27 to 60 years, with a mean of 44.4 years (standard deviation[SD] = 10.9). The sample was 74.2% White, 9.7% Hispanic, 3.2% African American, 3.2% Asian American, and 9.7% other. A majority of participants had earned a Master's degree or higher and almost three quarters had direct experience with an EBP. The 8 agencies represented in this sample were either operated by or contracted with the county. Agencies ranged in size from 65 to 850 full-time-equivalent staff and 9 to 90 programs, with the majority located in urban settings.
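The sample description can be checked with simple arithmetic. The sketch below sums the reported stakeholder group sizes and recomputes the reported percentages. The head counts in `inferred_counts` are an assumption: they are back-calculated from the percentages and are not reported in the text.

```python
# Group sizes as reported for the 31 participants who completed the study.
group_sizes = {
    "county officials": 5,
    "agency directors": 5,
    "program managers": 6,
    "clinicians": 7,
    "administrative staff": 3,
    "consumers": 5,
}
n = sum(group_sizes.values())

# ASSUMPTION: head counts inferred from the reported percentages (not given in the text).
inferred_counts = {
    "women": 19,
    "White": 23,
    "Hispanic": 3,
    "African American": 1,
    "Asian American": 1,
    "other": 3,
}

# Percentages of the full sample, rounded to 1 decimal place as in the text.
percentages = {label: round(100 * count / n, 1) for label, count in inferred_counts.items()}

for label, pct in percentages.items():
    print(f"{label}: {pct}%")
```

Under these inferred counts, the recomputed values agree with the percentages reported above.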

Concept mapping using the Concept System software, version 3b97 (Concept Systems Inc, Ithaca, NY) was used to organize and illustrate emergent concepts based on study participants' responses. Data collection occurred during the spring and summer of 2005. First, investigators met with a mixed group of members from each stakeholder group (n = 13) and explained that the goal of the project was to identify barriers and facilitators of EBP implementation in public sector child and adolescent mental health settings. Three specific examples of EBPs were presented representing the most common types of interventions that might be implemented in this setting (i.e., individual child–focused, family-focused, and group-based). The individual child–focused intervention outlined was Cognitive Problem Solving Skills Training,51 the family-focused EBP intervention was Functional Family Therapy,52 and the group-based intervention was Aggression Replacement Training.53 In addition to a description of the intervention, participants were provided a summary of training requirements, intervention duration and frequency, therapist experience and education requirements, cost estimates, and cost–benefit estimates. The investigative team then worked with the study participants to develop the following focus statement: “What are the factors that influence the acceptance and use of evidence-based practices in publicly funded mental health programs for families and children?”

The focus statement and the 3 examples of EBP types served as focus group prompts. Separate focus group sessions were conducted with each stakeholder group to promote candid response and reduce desirability effects.54

Participants were asked to brainstorm statements describing factors likely to serve as barriers or facilitators to EBP implementation; “fit with consumer values” was one such statement. A total of 230 statements were generated across all 6 stakeholder groups. By eliminating duplicate statements and combining similar statements, the investigative team distilled these into 105 distinct statements (see the box below).48

Examples of Concept-Mapping Statements by Cluster for 14 Factors Affecting Evidence-Based Practice (EBP) Implementation: San Diego County, CA, 2005

Clinical perceptions

▪ Fit between therapist and the EBP (e.g., theoretical orientation, preference for individual versus family, group, or systems therapy)

▪ EBP challenges provider professional relationships and status (e.g., was experienced but now having to be beginner)

Staff development and support

▪ EBP's potential to reduce staff burnout

▪ Training can be used for clinician licensure hours and continuing education credits

Staffing resources

▪ Challenge of changing existing staffing structure (e.g., group or individual treatment)

▪ Staff turnover

Agency compatibility

▪ EBP compatibility with agency values, philosophy, and vision

▪ Logistics of EBP (e.g., location [clinic, school, home-based], transportation, scheduling)

EBP limitations

▪ Incorporating a structured practice into a current model

▪ Limited number of clients that can be served with an EBP

Consumer concerns

▪ Fit of EBP with consumers' culture

▪ EBP decreases stigma of having a mental health problem and seeking treatment

Impact on clinical practice

▪ Ability to individualize treatment plans

▪ EBP implementation effect on quality of therapeutic relationship

Beneficial features (of EBP)

▪ EBP seen as effective for difficult cases

▪ Potential for adaptation of the EBP without affecting outcomes

Consumer values and marketing

▪ Empowered consumers demanding measurable outcomes

▪ Communicating and marketing EBP to consumers

System readiness and compatibility

▪ Meeting standards for accountability and effective services

▪ EBP compatibility with other initiatives that are being implemented

Research and outcomes supporting EBP

▪ EBP proven effective in real-world settings

▪ EBP more likely to use data to show client progress

Political dynamics

▪ Political or administrative support for the EBP

▪ Government responsibility for fairness in selecting programs to implement EBP

Funding

▪ Willingness of funding sources to adjust requirements (productivity, case load, time frames)

▪ Funders provide clear terms or contracts and auditing requirements for EBPs

Costs of EBP

▪ Having clear knowledge of the exact costs (hidden costs; e.g., specific outcome measures, retraining, etc.)

▪ Potential risk for agency (cost–benefit in regard to outcomes)

Note: Each statement was rated by each participant for importance and changeability and all statements were sorted according to perceived similarity.

G. A. A. and K. Z. randomly renumbered statements to minimize priming effects. Next, a researcher met individually with each study participant. The researcher presented 105 cards (1 statement per card) and asked each participant to sort similar statements into the same pile, yielding as many piles as he or she deemed appropriate.55 Finally, each participant was asked to rate each statement on a zero to 4-point scale of importance (from 0 = not at all important to 4 = extremely important) and changeability (from 0 = not at all changeable to 4 = extremely changeable).
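The sorting step is typically turned into numbers by counting how often each pair of statements lands in the same pile. The following is a minimal sketch of that aggregation, not the Concept System implementation; the function name `cooccurrence_matrix` and the toy data are hypothetical.

```python
from itertools import combinations


def cooccurrence_matrix(sorts, n_statements):
    """Count, for every pair of statements, how many participants put them in the same pile.

    `sorts` holds one entry per participant; each entry is a list of piles,
    and each pile is a list of 0-based statement indices.
    """
    matrix = [[0] * n_statements for _ in range(n_statements)]
    for piles in sorts:
        for pile in piles:
            for i in pile:
                matrix[i][i] += 1  # a statement always co-occurs with itself
            for i, j in combinations(pile, 2):
                matrix[i][j] += 1
                matrix[j][i] += 1  # keep the matrix symmetric
    return matrix


# Hypothetical toy data: 3 participants sorting 5 statements
# (the study itself had 31 participants and 105 statements).
toy_sorts = [
    [[0, 1], [2, 3, 4]],
    [[0, 1, 2], [3, 4]],
    [[0], [1, 2], [3, 4]],
]
similarity = cooccurrence_matrix(toy_sorts, 5)
```

Higher counts mean that more participants judged two statements to be similar; a matrix of this kind is the usual input to the scaling and clustering steps.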

As part of the concept mapping procedures, the Concept System software uses multidimensional scaling and hierarchical cluster analysis to generate a visual display of how statements clustered across all participants.56 The result was a single concept map depicting which statements participants had frequently sorted together. G. A. A., K. Z., and L. P. independently evaluated potential solutions (e.g., 12 clusters, 15 clusters) and agreed on the final model based on a statistical stress value and interpretability.57,58 Finally, 22 of the 31 initial study participants (17 through consensus in a single group meeting and 5 through individual phone calls) participated with the research team in defining the meaning of each cluster and identifying an appropriate name for each of the 14 final clusters.
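To illustrate how candidate solutions with different numbers of clusters can be produced from one map, the sketch below runs a simple agglomerative clustering on 2-dimensional points. It is an assumption-laden illustration: it uses average linkage for brevity, which may differ from the linkage rule used by the software, and the function `agglomerate` and the coordinates are hypothetical.

```python
import math


def agglomerate(points, n_clusters):
    """Repeatedly merge the two closest clusters (average linkage) until n_clusters remain."""
    clusters = [[i] for i in range(len(points))]

    def avg_distance(a, b):
        # Mean Euclidean distance over all cross-cluster pairs of points.
        total = sum(math.dist(points[i], points[j]) for i in a for j in b)
        return total / (len(a) * len(b))

    while len(clusters) > n_clusters:
        best = None
        for x in range(len(clusters)):
            for y in range(x + 1, len(clusters)):
                d = avg_distance(clusters[x], clusters[y])
                if best is None or d < best[0]:
                    best = (d, x, y)
        _, x, y = best
        clusters[x] = clusters[x] + clusters[y]
        del clusters[y]
    return [sorted(c) for c in clusters]


# Hypothetical coordinates standing in for the scaled positions of 6 statements.
toy_points = [(0.0, 0.0), (0.1, 0.2), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9), (9.0, 0.0)]
three_clusters = agglomerate(toy_points, 3)
two_clusters = agglomerate(toy_points, 2)
```

Cutting the same hierarchy at different levels yields alternative solutions (here 3 versus 2 clusters), which is the kind of comparison the investigators made before settling on 14 clusters.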

RESULTS

The 14 identified clusters were: consumer values and marketing, consumer concerns, impact on clinical practice, clinical perceptions, EBP limitations, staff development and support, staffing resources, agency compatibility, costs of EBP, funding, political dynamics, system readiness and compatibility, beneficial features of EBP, and research and outcomes supporting EBP. The box shows examples of statements for each cluster. A stress value of 0.26 for the final solution indicated good model fit.59
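A stress value summarizes how far the distances on the map depart from the dissimilarities the map is meant to represent, with 0 indicating a perfect fit. The sketch below computes one common form, Kruskal's stress-1, under a simplifying assumption: the target dissimilarities are used directly in place of the disparities that nonmetric scaling would obtain by monotone regression. The function `stress1` and the toy data are hypothetical, and the exact formula used by the software is not stated in the text.

```python
import math


def stress1(points, dissimilarities):
    """Kruskal-style stress-1 between map distances and target dissimilarities."""
    numerator = 0.0
    denominator = 0.0
    n = len(points)
    for i in range(n):
        for j in range(i + 1, n):
            d = math.dist(points[i], points[j])  # distance on the map
            numerator += (d - dissimilarities[i][j]) ** 2
            denominator += d ** 2
    return math.sqrt(numerator / denominator)


# Hypothetical 3-point map forming a 3-4-5 right triangle.
toy_map = [(0.0, 0.0), (3.0, 0.0), (0.0, 4.0)]

# Targets identical to the map distances: stress is 0.
perfect = [[0, 3, 4], [3, 0, 5], [4, 5, 0]]
# One target changed from 5 to 6, so the map no longer fits exactly.
distorted = [[0, 3, 4], [3, 0, 6], [4, 6, 0]]

print(stress1(toy_map, perfect))
print(stress1(toy_map, distorted))
```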

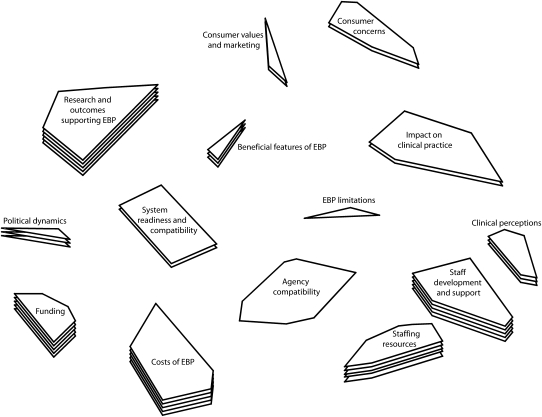

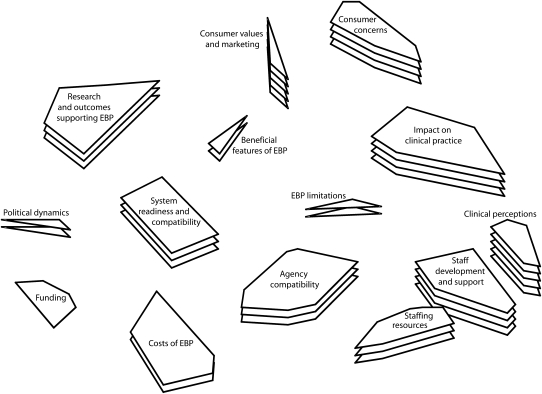

Figure 1 and Figure 2 show the concept maps for barriers and facilitators of EBP implementation. The number of layers in each cluster's stack indicates the overall importance rating in Figure 1 and the overall changeability rating in Figure 2. For example, in Figure 1, more layers for “funding” indicate higher overall importance ratings relative to clusters with fewer layers (layers are relative to the range of scores and thus do not have a 1-to-1 correspondence with mean values in the tables). A smaller cluster indicates that statements were more frequently sorted into the same piles by participants (indicating a higher degree of similarity). Clusters closer to one another are more similar than those farther away. However, the overall orientation of the concept map (e.g., top, bottom, right, or left) has no inherent meaning.
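The statement that layers are relative to the range of scores can be made concrete with equal-width binning. The sketch below is one plausible scheme, not the software's documented rule: the choice of 5 layers and the function `layers` are assumptions, while the means are the overall importance ratings from Table 1.

```python
def layers(means, n_layers=5):
    """Map each cluster mean to a layer count from 1 to n_layers using equal-width bins."""
    lowest, highest = min(means.values()), max(means.values())
    if highest == lowest:
        return {name: 1 for name in means}  # no spread, so every cluster gets one layer
    width = (highest - lowest) / n_layers
    result = {}
    for name, value in means.items():
        # The top of the range is assigned to the highest layer.
        index = min(int((value - lowest) / width), n_layers - 1)
        result[name] = index + 1
    return result


# Overall mean importance ratings from Table 1.
importance = {
    "Agency compatibility": 2.68,
    "Beneficial features of EBP": 2.94,
    "Clinical perceptions": 2.88,
    "Consumer concerns": 2.85,
    "Consumer values and marketing": 2.87,
    "Costs of EBP": 3.13,
    "EBP limitations": 2.70,
    "Funding": 3.17,
    "Impact on clinical practice": 2.81,
    "Political dynamics": 2.90,
    "Research and outcomes supporting EBP": 3.09,
    "Staff development and support": 3.16,
    "Staffing resources": 3.16,
    "System readiness and compatibility": 2.81,
}
importance_layers = layers(importance)
```

Under this scheme the full stack of layers spans only the observed range (2.68 to 3.17), so a cluster with 1 layer is not unimportant in absolute terms; it is simply the lowest of the 14.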

FIGURE 1.

Importance ratings for 14 factors affecting evidence-based practice (EBP) implementation: San Diego County, CA, 2005.

FIGURE 2.

Changeability ratings for 14 factors affecting evidence-based practice (EBP) implementation: San Diego County, CA, 2005.

Table 1 and Table 2 list the mean participant ratings for importance and changeability, respectively, of statements in each cluster, as well as the means within each stakeholder group for that cluster. Although all participants sorted and rated all statements, some clusters reference issues that might be construed as more relevant to particular stakeholder groups (e.g., consumer- or clinician-related issues). Resource issues emerged in 2 broad areas: financial (funding and costs of EBPs) and human (staffing resources and staff development and support).
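Because the stakeholder groups differ in size, an overall mean is not the simple average of the 6 group means. The sketch below shows how an overall value can be approximately recovered as a size-weighted mean, assuming every participant rated every statement (as stated above) and using the group sizes from the Methods. The helper `pooled_mean` is hypothetical, and because the published group means are rounded, small discrepancies with the published overall means are expected.

```python
# Stakeholder group sizes from the Methods section.
GROUP_SIZES = {
    "county": 5,
    "director": 5,
    "manager": 6,
    "clinician": 7,
    "admin": 3,
    "consumer": 5,
}


def pooled_mean(group_means, group_sizes=GROUP_SIZES):
    """Weight each group's mean by the number of participants in that group."""
    total = sum(group_means[g] * group_sizes[g] for g in group_sizes)
    return total / sum(group_sizes.values())


# Mean importance ratings for the funding cluster, by group (Table 1).
funding_importance = {
    "county": 3.13,
    "director": 3.25,
    "manager": 3.00,
    "clinician": 2.94,
    "admin": 3.71,
    "consumer": 3.33,
}
funding_overall = round(pooled_mean(funding_importance), 2)
print(funding_overall)  # 3.17, matching the overall funding mean in Table 1
```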

TABLE 1.

Mean Importance Ratings of Factors Affecting Evidence-Based Practice (EBP) Implementation, Overall and by Stakeholder Group: San Diego County, CA, 2005

| Cluster | Overall Rating, mean | County Rating, mean | Agency Director Rating, mean | Program Manager Rating, mean | Clinician Rating, mean | Administrative Staff Rating, mean | Consumer Rating, mean |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Agency compatibility | 2.68 | 2.36 | 2.64 | 2.54 | 2.72 | 3.11 | 2.91 |
| Beneficial features of EBP | 2.94 | 2.80 | 2.53 | 3.05 | 2.78 | 3.11 | 3.40 |
| Clinical perceptions | 2.88 | 2.70 | 2.53 | 2.82 | 3.08 | 3.33 | 2.95 |
| Consumer concerns | 2.85 | 2.59 | 2.67 | 2.84 | 2.87 | 3.26 | 3.03 |
| Consumer values and marketing | 2.87 | 2.60 | 2.73 | 2.81 | 2.67 | 3.11 | 3.47 |
| Costs of EBP | 3.13 | 2.91 | 3.42 | 3.08 | 2.96 | 3.56 | 3.09 |
| EBP limitations | 2.70 | 2.53 | 2.80 | 2.67 | 2.72 | 3.11 | 2.53 |
| Funding | 3.17 | 3.13 | 3.25 | 3.00 | 2.94 | 3.71 | 3.33 |
| Impact on clinical practice | 2.81 | 2.00 | 2.80 | 2.59 | 3.02 | 3.33 | 3.38 |
| Political dynamics | 2.90 | 2.67 | 3.13 | 2.95 | 2.78 | 3.22 | 2.80 |
| Research and outcomes supporting EBP | 3.09 | 2.91 | 3.11 | 3.21 | 2.95 | 3.15 | 3.22 |
| Staff development and support | 3.16 | 2.96 | 3.12 | 3.09 | 3.15 | 3.47 | 3.32 |
| Staffing resources | 3.16 | 3.06 | 3.26 | 3.29 | 3.05 | 3.27 | 3.08 |
| System readiness and compatibility | 2.81 | 2.50 | 3.00 | 2.60 | 2.81 | 3.06 | 3.10 |

Notes. All ratings were made for each of 105 statements on a zero to 4 scale, with 0 = not at all important and 4 = extremely important.

TABLE 2.

Mean Changeability Ratings of Factors Affecting Evidence-Based Practice (EBP) Implementation, Overall and by Stakeholder Group: San Diego County, CA, 2005

| Cluster | Overall Rating, mean | County Rating, mean | Agency Director Rating, mean | Program Manager Rating, mean | Clinician Rating, mean | Administrative Staff Rating, mean | Consumer Rating, mean |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Agency compatibility | 2.31 | 2.02 | 2.42 | 2.25 | 2.15 | 2.11 | 2.89 |
| Beneficial features of EBP | 2.24 | 2.20 | 2.13 | 2.33 | 1.78 | 2.00 | 2.93 |
| Clinical perceptions | 2.70 | 2.20 | 2.95 | 2.86 | 2.71 | 2.42 | 2.90 |
| Consumer concerns | 2.45 | 2.07 | 2.64 | 2.50 | 2.29 | 2.17 | 2.93 |
| Consumer values and marketing | 2.56 | 2.33 | 2.60 | 2.67 | 2.22 | 2.44 | 3.07 |
| Costs of EBP | 2.16 | 1.87 | 1.93 | 2.08 | 2.02 | 2.30 | 2.91 |
| EBP limitations | 2.19 | 2.00 | 1.87 | 2.19 | 2.00 | 2.11 | 3.00 |
| Funding | 1.95 | 1.58 | 1.60 | 1.84 | 1.69 | 2.08 | 3.05 |
| Impact on clinical practice | 2.55 | 2.05 | 2.70 | 2.52 | 2.44 | 2.50 | 3.10 |
| Political dynamics | 2.22 | 2.27 | 1.93 | 2.10 | 1.94 | 1.89 | 3.13 |
| Research and outcomes supporting EBP | 2.40 | 2.24 | 2.18 | 2.43 | 2.21 | 2.58 | 2.84 |
| Staff development and support | 2.54 | 2.48 | 2.76 | 2.49 | 2.25 | 2.37 | 2.90 |
| Staffing resources | 2.26 | 2.06 | 2.30 | 2.14 | 2.05 | 2.30 | 2.84 |
| System readiness and compatibility | 2.30 | 2.13 | 2.23 | 2.10 | 2.22 | 2.39 | 2.87 |

Notes. All ratings were made for each of 105 statements on a zero to 4 scale, with 0 = not at all changeable and 4 = extremely changeable.

Participants rated funding as both the most important factor (3.17 on the zero to 4 scale) and the least changeable (1.95). Staffing resources were rated as nearly as important (3.16) but as more changeable than funding (2.26, a medium level on the zero to 4 scale). Staff development and support were perceived as equal in importance to staffing resources (3.16) but more changeable, with a mean of 2.54. Participants also saw clinical perceptions as both of very great importance (2.88) and moderate to high in changeability (2.70). In addition, research and outcomes supporting EBP was viewed as having very great importance (3.09), along with medium-high changeability (2.40).
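These comparisons can be reproduced directly from the overall means in Tables 1 and 2. The sketch below finds the most important and least changeable clusters and computes a simple importance-minus-changeability gap. The gap is an illustrative summary introduced here, not a statistic used in the study.

```python
# Overall means from Table 1 (importance) and Table 2 (changeability).
importance = {
    "Agency compatibility": 2.68, "Beneficial features of EBP": 2.94,
    "Clinical perceptions": 2.88, "Consumer concerns": 2.85,
    "Consumer values and marketing": 2.87, "Costs of EBP": 3.13,
    "EBP limitations": 2.70, "Funding": 3.17,
    "Impact on clinical practice": 2.81, "Political dynamics": 2.90,
    "Research and outcomes supporting EBP": 3.09,
    "Staff development and support": 3.16, "Staffing resources": 3.16,
    "System readiness and compatibility": 2.81,
}
changeability = {
    "Agency compatibility": 2.31, "Beneficial features of EBP": 2.24,
    "Clinical perceptions": 2.70, "Consumer concerns": 2.45,
    "Consumer values and marketing": 2.56, "Costs of EBP": 2.16,
    "EBP limitations": 2.19, "Funding": 1.95,
    "Impact on clinical practice": 2.55, "Political dynamics": 2.22,
    "Research and outcomes supporting EBP": 2.40,
    "Staff development and support": 2.54, "Staffing resources": 2.26,
    "System readiness and compatibility": 2.30,
}

most_important = max(importance, key=importance.get)
least_changeable = min(changeability, key=changeability.get)
most_changeable = max(changeability, key=changeability.get)

# Illustrative gap: how much a cluster's importance exceeds its perceived changeability.
gap = {c: round(importance[c] - changeability[c], 2) for c in importance}
widest_gap = max(gap, key=gap.get)
narrowest_gap = min(gap, key=gap.get)
```

Funding has the widest gap (important but hard to change), whereas clinical perceptions has the narrowest, which is consistent with the pattern described above.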

Although the small sample size makes significance testing inappropriate, Tables 1 and 2 do suggest variability across stakeholder groups. A few examples illustrate that variability. County officials on average rated impact on clinical practice as less important than did the sample overall (2.00 versus 2.81) and likewise rated agency compatibility lower (2.36 versus the overall sample mean of 2.68). County officials rated costs as less important than did agency directors or administrative staff (2.91 versus 3.42 and 3.56, respectively). Finally, consumers rated consumer values and marketing as more important than did the sample overall (3.47 versus 2.87).
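One descriptive way to scan for such differences is to subtract the overall mean from each group's mean and look for the largest departure. The sketch below does this for the 4 importance clusters discussed above, using values from Table 1. It is a descriptive aid only and does not test significance, and the helper `largest_deviation` is hypothetical.

```python
GROUPS = ["County", "Agency director", "Program manager",
          "Clinician", "Administrative staff", "Consumer"]

# (overall mean, [group means in GROUPS order]) from Table 1.
rows = {
    "Impact on clinical practice": (2.81, [2.00, 2.80, 2.59, 3.02, 3.33, 3.38]),
    "Agency compatibility": (2.68, [2.36, 2.64, 2.54, 2.72, 3.11, 2.91]),
    "Costs of EBP": (3.13, [2.91, 3.42, 3.08, 2.96, 3.56, 3.09]),
    "Consumer values and marketing": (2.87, [2.60, 2.73, 2.81, 2.67, 3.11, 3.47]),
}


def largest_deviation(overall, group_means):
    """Return (group, deviation) for the group mean farthest from the overall mean."""
    deviations = [(g, round(m - overall, 2)) for g, m in zip(GROUPS, group_means)]
    return max(deviations, key=lambda pair: abs(pair[1]))


outliers = {cluster: largest_deviation(*values) for cluster, values in rows.items()}
for cluster, (group, deviation) in outliers.items():
    print(f"{cluster}: {group} ({deviation:+.2f})")
```

Note that each overall mean includes the group being compared, so these deviations slightly understate how far a group sits from the remaining participants.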

DISCUSSION

We identified 14 factors perceived to facilitate or impede EBP implementation in public mental health services. Factors address concerns about the strength of the evidence base, how agencies with limited financial and human resources can bear the costs attendant to changing therapeutic modalities, clinician concerns about effects on their practice, consumer concerns about quality and stigma, and potential burden for new types of services. The factors identify structures, processes, and relationships likely to be important in EBP implementation.

Subsequent work should operationalize, further explore, and test these factors in the context of EBP implementation efforts to build a body of evidence regarding which factors are more or less important in various contexts and with various types of EBPs. As research validates findings across other settings, these factors may indicate promising areas for system or organizational intervention. For example, areas higher on perceived importance may deserve more attention during the implementation planning process. The degree to which factors are deemed changeable indicates how much emphasis or effort may be needed to effect change.

The consistency of this study's findings with those from other settings suggests that some key dynamics apply across service sectors. For instance, participants reported that greater perceived benefits would facilitate EBP implementation,30 whereas greater costs would undermine it.30 Compatibility with agency norms and processes was identified as a facilitator,31–33 as were staffing resources35 and clinician perceptions of the value of these practices for themselves30,35 and their clients. Other factors are also consistent with previous findings about organizational change. For example, in proximity to beneficial features and costs are clusters representing system readiness and agency compatibility, illustrating concerns at both the system and agency levels. These factors are consistent with evidence that higher levels of congruence between organizational values and characteristics of an innovation are important in successful implementation.17,33,60

Consumer concerns were salient in the current mental health context as well as in recent research on EBP in other sectors.23 Implementation of EBP may differ from other types of major organizational change in the requirement that consumer roles are changed, sometimes radically, from receipt of care to more partnership in care. For example, study participants saw EBPs as requiring more consumer time. There was also concern that consumers might be participants in “experiments” testing new modalities. However, therapies with stronger evidence bases were thought to be potentially more effective and to offer the potential to reduce stigma associated with receiving mental health services. Clinicians and caseworkers could address consumer concerns more effectively by discussing these specific issues relative to treatment options. This would allow consumers to judge the fit with their own needs, preferences, and goals for services. These issues suggest more emphasis on making consumer perspectives integral in developing models of EBP implementation.

Resource availability for EBP implementation30 appeared particularly salient for public sector mental health services, which can be underfunded and frequently contend with high staff turnover, often averaging 25% per year or more.61 However, there is recent evidence that appropriate EBP implementation can lead to reduced staff turnover.62 Participants in the current sample voiced concern regarding their ability to secure additional resources to overcome resource barriers. Funds for mental health and social services may face competing priorities of legislatures that may favor funding to cover other increasing costs such as Medicaid and prisons.63

Such penury is not inevitable, however. For instance, in California, the Mental Health Services Act provides for a 1% tax on personal income over $1 million annually to be allocated for mental health care. This illustrates the potential for policymakers at higher levels of government to change larger system factors that can impact the county and agency levels. Part of what distinguishes the Mental Health Services Act from some other government efforts to improve public health funding has been its continuity and predictability. Such funding changes could be examined for their impact on EBP implementation.

Agency executive directors and administrators in another public sector study cited uncertainty about future allocations as a reason not to invest in EBPs.30 Previous research in other organizational settings suggests that payers can improve EBP implementation by paying for initial costs such as time spent on training.16 Clinicians in general require ongoing training to support new treatment approaches,36 and such ongoing support is especially important for public sector agencies given their high staff turnover rates.61 A one-time funding allocation, even if generous, is likely insufficient to support sustainable EBP implementation. However, varying funding strategies could be tested in future studies.

Findings also suggest some promising means of supporting staff in evidence-based implementation. First, participants believed that there were feasible ways of enhancing staff development and support for EBPs. Associations between positive leadership and positive clinician attitudes toward adopting EBPs in mental health agencies support this view.42 Another study found leadership at the supervisory level to be particularly important during EBP implementation.64 Thus, leadership appears important at multiple levels in shaping provider attitudes and facilitating a climate for innovation that can bolster buy-in for EBPs. One project is currently testing tools to help mental health leaders support staff with implementation45 and a recent review suggests that training opinion leaders can help to promote practice change.11

Finally, differences across stakeholder groups in ratings of the importance and changeability of factors affecting EBPs suggest that communication among stakeholders may facilitate a more complete understanding of what affects implementation. For instance, agency directors might share information with county-level officials about the costs of EBPs as well as challenges of achieving fit between EBPs and agency norms, cultures, and infrastructures. The organizational literature may help stakeholders consider the fit between EBP and specific agencies. For instance, larger, more formal agencies may be slower to adopt EBP, but may also be better equipped to implement changes.16 The relative credibility of key individuals favoring or opposing EBP may also affect implementation.30 County officials might advocate resources that would facilitate use of EBPs, such as information systems and forms compatible with data required for EBPs. Consumer perspectives also provide insight that is critical in tailoring implementation efforts and system and agency responsiveness to consumer preferences.

Limitations and Strengths

Some limitations of the current study should be noted. First, the sample was derived from 1 public mental health system. However, the system is in 1 of the 6 most populous counties in the United States, has a high degree of racial/ethnic diversity, and likely represents many issues applicable to other service settings. Second, the relatively small sample size, though not uncharacteristic for qualitative studies, did not allow for direct significance testing of stakeholder group differences on importance and changeability ratings. Third, other approaches to eliciting participant views, such as Delphi methods, may have elicited different, more consistent, or less consistent data.46 In addition to the methods used in the present study, the literature further reinforces the need for multiple approaches in identifying factors related to EBP implementation, including qualitative investigations of their normative and political contexts. In our developing work, quantitative and qualitative methods are being employed to address these issues.23,62 Fourth, the EBPs presented to research participants were behavioral and psychosocial interventions. The degree to which inclusion of evidence-based pharmacological treatments as an exemplar of EBP would have resulted in new, different, or redundant data is unknown. Finally, selection of stakeholder participants was done on the basis of insider knowledge of a large community mental health system. Though it is not a random sample, we believe the purposive sampling approach provided good representation and knowledge of this large urban service system.

Some study strengths should also be noted. First, the selected research approach has been effective in facilitating stakeholder understanding of factors likely to be important in EBP implementation in a complex mental health service system and for facilitating the inclusion of stakeholders across system and organizational levels. Second, the focus group structure and brainstorming process allowed stakeholders to express their perceptions in a safe environment that facilitated candor. Such processes for eliciting stakeholder input have promise for facilitating exchange among cultures of research, practice, and policy.

Conclusion

The current study provides the basis for a conceptual model of implementation barriers and facilitators in public mental health services. Future studies should test the relevance of these and other factors for EBP implementation in mental health and other human service agencies. Because of the congruence between current findings and those from studies in other service sectors, and in business and organizational settings,30–33,35 we believe that readers should be particularly attentive to these results in relation to their own implementation efforts. By considering multiple stakeholder perspectives and fostering communication across groups, leaders may better facilitate the implementation and use of effective treatment practices.

Acknowledgments

This research was supported by the National Institute of Mental Health (grants R03MH070703 and R01 MH072961).

Human Participant Protection

This study was approved by the institutional review board of the University of California, San Diego.

References

- 1.APA Presidential Task Force on Evidence-Based Practice. Evidence-based practice in psychology. Am Psychol 2006;61:271–285

- 2.Institute of Medicine Committee on the Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academy Press; 2001

- 3.Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn't. BMJ 1996;312:71–72

- 4.Balas EA, Boren SA. Managing clinical knowledge for health care improvement. In: Bemmel J, McCray AT, eds. Yearbook of Medical Informatics. Patient-Centered Systems. Stuttgart, Germany: Schattauer Verlagsgesellschaft mbH; 2000:65–70

- 5.Hoagwood K, Burns BJ, Kiser L, Ringeisen H, Schoenwald SK. Evidence-based practice in child and adolescent mental health services. Psychiatr Serv 2001;52:1179–1189

- 6.Lancaster B. Closing the gap between research and practice. Health Educ Q 1992;19:408–411

- 7.Lenfant C. Shattuck lecture—Clinical research to clinical practice—lost in translation? N Engl J Med 2003;349:868–874

- 8.National Institutes of Health. Dissemination and implementation research in health (R01) Web site. Available at: http://grants.nih.gov/grants/guide/pa-files/PAR-06-039.html. Accessed August 1, 2008

- 9.Woolf SH. The meaning of translational research and why it matters. JAMA 2008;299:211–213

- 10.Aarons GA. Measuring provider attitudes toward evidence-based practice: consideration of organizational context and individual differences. Child Adolesc Psychiatr Clin N Am 2005;14:255–271

- 11.Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation Research: A Synthesis of the Literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network; 2005. FMHI publication 231

- 12.Hoagwood K, Olin S. The NIMH blueprint for change report: research priorities in child and adolescent mental health. J Am Acad Child Adolesc Psychiatry 2002;41:760–767

- 13.Dopson S, Fitzgerald L. Knowledge to Action: Evidence-Based Care in Context. New York, NY: Oxford University Press; 2006

- 14.Dopson S, Locock L, Gabbay J, Ferlie E, Fitzgerald L. Evidence-based medicine and the implementation gap. Health (London) 2003;7:311–330

- 15.Morgenstern J. Effective technology transfer in alcoholism treatment. Subst Use Misuse 2000;35:1659–1678

- 16.Frambach RT, Schillewaert N. Organizational innovation adoption: a multi-level framework of determinants and opportunities for future research. J Business Res 2002;55(special issue):163–176

- 17.Klein KJ, Conn AB, Sorra JS. Implementing computerized technology: an organizational analysis. J Appl Psychol 2001;86:811–824

- 18.Brownson RC, Royer C, Ewing R, McBride TD. Researchers and policymakers: travelers in parallel universes. Am J Prev Med 2006;30:164–172

- 19.Glasgow RE, Emmons KM. How can we increase translation of research into practice? Types of evidence needed. Annu Rev Public Health 2007;28:413–433

- 20.Syed SB, Hyder AA, Bloom G, et al.; Future Health Systems: Innovation for Equity. Exploring evidence-policy linkages in health research plans: a case study from six countries. Health Res Policy Syst 2008;6:4

- 21.Varvasovszky Z, Brugha R. How to do (or not to do)… a stakeholder analysis. Health Policy Plan 2000;15:338–345

- 22.Isett KR, Burnam MA, Coleman-Beattie B, et al. The state policy context of implementation issues for evidence-based practices in mental health. Psychiatr Serv 2007;58:914–921

- 23.Aarons GA, Palinkas LA. Implementation of evidence-based practice in child welfare: service provider perspectives. Adm Policy Ment Health 2007;34:411–419

- 24.Palinkas LA, Aarons GA. A view from the top: executive and management challenges in a statewide implementation of an evidence-based practice to reduce child neglect. Int J Child Health Hum Dev 2009;2

- 25.Choi BC, Pang T, Lin V, et al. Can scientists and policy makers work together? J Epidemiol Community Health 2005;59:632–637

- 26.Innvaer S, Vist G, Trommald M, Oxman A. Health policy-makers' perceptions of their use of evidence: a systematic review. J Health Serv Res Policy 2002;7:239–244

- 27.Glisson C. The organizational context of children's mental health services. Clin Child Fam Psychol Rev 2002;5:233–253

- 28.Bowen S, Zwi AB. Pathways to “evidence-informed” policy and practice: a framework for action. PLoS Med 2005;2:e166

- 29.Report of the 2005 Presidential Task Force on Evidence-Based Practice. Washington, DC: American Psychological Association; 2005:1–28

- 30.Buchanan D, Fitzgerald L, Ketley D, et al. No going back: a review of the literature on sustaining organizational change. Int J Manag Rev 2005;7:189–205

- 31.Rimmer M, Macneil J, Chenhall R, Langfield-Smith K, Watts L. Reinventing Competitiveness: Achieving Best Practice in Australia. South Melbourne, Australia: Pitman; 1996

- 32.Kotter JP. Leading change: why transformation efforts fail. Harv Bus Rev 1995;73:59–67

- 33.Lozeau D, Langley A, Denis JL. The corruption of managerial techniques by organizations. Hum Relat 2002;55:537–564

- 34.Pettigrew AM, Ferlie E, McKee L. Shaping Strategic Change: Making Change in Large Organizations: The Case of the National Health Service. London, England: Sage Publications; 1992

- 35.Dale BG, Boaden RJ, Wilcox M, McQuater RE. Sustaining continuous improvement: what are the key issues? Qual Eng 1999;11:369–377

- 36.Drake RE, Torrey WC, McHugo GJ. Strategies for implementing evidence-based practices in routine mental health settings. Evid Based Ment Health 2003;6:6–7

- 37.Grimshaw JM, Russell IT. Achieving health gain through clinical guidelines II: ensuring guidelines change medical practice. Qual Health Care 1994;3:45–52

- 38.Haynes B, Haines A. Barriers and bridges to evidence based clinical practice. BMJ 1998;317:273–276

- 39.Simpson DD. A conceptual framework for transferring research to practice. J Subst Abuse Treat 2002;22:171–182

- 40.Dunham RB, Grube JA, Gardner DG, Pierce JL. The development of an attitude toward change instrument. Presented at the 49th Annual Meeting of the Academy of Management; August 1989; Washington, DC

- 41.Dopson S, Fitzgerald L. The role of the middle manager in the implementation of evidence-based health care. J Nurs Manag 2006;14:43–51

- 42.Aarons GA. Transformational and transactional leadership: association with attitudes toward evidence-based practice. Psychiatr Serv 2006;57:1162–1169

- 43.Backer TE, David SL, Soucy GE. Reviewing the Behavioral Science Knowledge Base on Technology Transfer. Rockville, MD: National Institute on Drug Abuse; 1995. NIDA research monograph 155; NIH publication no. 95-4035

- 44.Ferlie EB, Shortell SM. Improving the quality of health care in the United Kingdom and the United States: a framework for change. Milbank Q 2001;79:281–315

- 45.Hermann RC, Chan JA, Zazzali JL, Lerner D. Aligning measurement-based quality improvement with implementation of evidence-based practices. Adm Policy Ment Health 2006;33:636–645

- 46.Denis JL, Lehoux P, Hivon M, Champagne F. Creating a new articulation between research and practice through policy? The views and experiences of researchers and practitioners. J Health Serv Res Policy 2003;8(Suppl 2):44–50

- 47.Lavis JN, Lomas J, Hamid M, Sewankambo NK. Assessing country-level efforts to link research to action. Bull World Health Organ 2006;84:620–628

- 48.Trochim WM. An introduction to concept mapping for planning and evaluation. Eval Program Plann 1989;12:1–16

- 49.Burke JG, O'Campo P, Peak GL, Gielen AC, McDonnell KA, Trochim WM. An introduction to concept mapping as a participatory public health research methodology. Qual Health Res 2005;15:1392–1410

- 50.US Census Bureau. Population estimates for the 100 largest U.S. counties based on July 1, 2007 population estimates: April 1, 2000 to July 1, 2007 (CO-EST2007-07). Available at: http://www.census.gov/popest/counties/CO-EST2007-07.html. Accessed February 2, 2009

- 51.Kazdin AE, Siegel TC, Bass D. Cognitive problem-solving skills training and parent management training in the treatment of antisocial behavior in children. J Consult Clin Psychol 1992;60:733–747

- 52.Sexton TL, Alexander JF. Functional Family Therapy: Principles of Clinical Intervention, Assessment, and Implementation. Henderson, NV: RCH Enterprises; 1999

- 53.Goldstein AP, Gick B, Reiner S, Zimmerman D, Coultry TM. Aggression Replacement Training: A Comprehensive Intervention for Aggressive Youth. Champaign, IL: Research Press; 1987

- 54.Trochim WM, Milstein B, Wood BJ, Jackson S, Pressler V. Setting objectives for community and systems change: an application of concept mapping for planning a statewide health improvement initiative. Health Promot Pract 2004;5:8–19

- 55.Rosenberg S, Kim M. The method of sorting as a data-gathering procedure in multivariate research. Multivariate Behav Res 1975;10:489–502

- 56.Davison ML. Multidimensional Scaling. New York, NY: John Wiley & Sons Inc; 1983

- 57.Aarons GA, Goldman MS, Greenbaum PE, Coovert MD. Alcohol expectancies: integrating cognitive science and psychometric approaches. Addict Behav 2003;28:947–961

- 58.Kane M, Trochim W. Concept Mapping for Planning and Evaluation. Thousand Oaks, CA: Sage Publications Inc; 2007

- 59.Trochim WM, Stillman FA, Clark PI, Schmitt CL. Development of a model of the tobacco industry's interference with tobacco control programmes. Tob Control 2003;12:140–147

- 60.Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manage Rev 1996;21:1055–1080

- 61.Aarons GA, Sawitzky AC. Organizational climate partially mediates the effect of culture on work attitudes and turnover in mental health services. Adm Policy Ment Health 2006;33:289–301

- 62.Aarons GA, Sommerfeld DH, Hecht DB, Silovsky JF, Chaffin MJ. The impact of evidence-based practice implementation and fidelity monitoring on staff turnover: evidence for a protective effect. J Consult Clin Psychol 2009;77:270–280

- 63.Domino ME, Norton EC, Morrissey JP, Thakur N. Cost shifting to jails after a change to managed mental health care. Health Serv Res 2004;39:1379–1402

- 64.Aarons GA, Sommerfeld DH. Transformational leadership, team climate for innovation, and staff attitudes toward adopting evidence-based practice. Presented as a talk at the 68th Annual Meeting of the Academy of Management; August 2008; Anaheim, CA