Abstract

According to the sensorimotor theory of lexicosemantic organization, semantic representations are neurally distributed and anatomically linked to category-specific sensory areas. Previous functional neuroimaging studies have demonstrated category specificity in lexicosemantic representations. However, little evidence is available from word generation paradigms, which provide access to semantic representations while minimizing confounds resulting from low-level perceptual features of stimulus presentation. In this study, 13 healthy young adults underwent fMRI scanning while performing a word generation task, generating exemplars to nine different semantic categories. Each semantic category was assigned to one of three superordinate category types, based upon sensorimotor modalities (visual, motor, somatosensory) presumed to predominate in lexical acquisition. For word generation overall, robust activation was seen in left inferior frontal cortex. Analyses by sensorimotor modality categories yielded activations in brain regions related to perceptual and motor processing: Visual categories activated extrastriate cortex, motor categories activated the intraparietal sulcus and posterior middle temporal cortex, and somatosensory categories activated postcentral and inferior parietal regions. Our results are consistent with the sensorimotor theory, according to which lexicosemantic representations are distributed across brain regions participating in sensorimotor processing associated with the experiential components of lexicosemantic acquisition.

Introduction

A key issue in the field of cognitive neuroscience is how and where word meanings are represented and processed in the brain (Pulvermüller, 1999). It has been suggested that the neural substrates underlying lexicosemantic representations are associated with regions that were activated at the time of encoding corresponding sensorimotor experiences (Damasio et al., 1996; Wheeler et al., 2000). In addition to the classic language areas of Broca and Wernicke, researchers have identified several brain regions associated with lexicosemantic processing. In particular, neuroimaging studies have associated lexicosemantic knowledge with cortices known for their participation in motor and sensory/perceptual processing, such as the left motor cortex (Chao and Martin, 2000; Goldberg et al., 2006b; Hauk et al., 2004), fusiform gyrus and occipital cortex (Chao et al., 1999; Chao et al., 2002; Mechelli et al., 2006; Simmons et al., 2007; Pulvermüller and Hauk, 2006), and olfactory cortex (Goldberg et al., 2006a, b; Gonzalez et al., 2006, Simmons et al., 2005). These sensorimotor activations may be accounted for by the sensorimotor theory of semantic processing (Barsalou, 2008; Martin, 2007). The sensorimotor theory was first developed from studies of patients with semantic deficits that appear to differentially affect certain categories or classes of objects, in particular animate versus man-made objects. Some patients, for example, show greater picture naming deficits for animals than for other types of objects (Humphreys and Forde, 2001). Warrington (1987) proposed that sensory features are important for distinguishing between living items, while action semantics are more important for non-living items, like tools. Therefore, loss of sensory or action knowledge differentially disrupts the semantic representations of living and non-living items, respectively. 
According to the sensorimotor theory, semantic information is thus anatomically linked to category-specific sensory areas in the human brain (Martin, 2007).

Evidence from lesion patients has been more recently complemented by functional neuroimaging studies targeting brain areas specifically involved in the retrieval of concept knowledge belonging to different semantic categories. For example, studies have shown that while processing action/function related meanings, activation was found in left premotor cortex (Chao and Martin, 2000; Chao et al., 2002; Hauk et al., 2004), left precentral sulcus (Goldberg et al., 2006b), left precentral and postcentral gyrus (Kemmerer et al., 2007), and left posterior middle temporal cortex (Chao et al., 1999; Chao et al., 2002; Mechelli et al., 2006; Noppeney et al., 2005), an area involved in processing non-biological object motion (Beauchamp et al., 2003; Martin et al., 1996). Studies have also associated processing of animate categories with activations in visual cortices, such as the fusiform gyrus (Chao et al., 2002; Damasio et al., 2004; Devlin et al., 2005; Mechelli et al., 2006) and the superior temporal gyrus (Chao et al., 1999; Tyler et al., 2003), an area known to be involved in the perception of biological motion (Beauchamp et al., 2003; Pelphrey et al., 2005).

Both neuropsychological and functional neuroimaging studies have used a variety of tasks to investigate the brain representation of lexicosemantic information. The most common of these tasks are picture naming, generation of verbs to pictures, and pictorial decision. It can be argued that the use of visual stimuli in these tasks either confounds visual effects related to semantic retrieval or reduces the detection of such effects (when control stimuli of equal complexity are used). An additional, more specific concern regarding these tasks is that the perceptual complexity of the stimuli used to prompt semantic processing may differ systematically across categories of objects and may therefore contribute to between-modality differences in performance or brain activation (Devlin et al., 2005; Gerlach, 2007; Tyler et al., 2003). It is therefore important to rule out perceptual complexity when evaluating data for effects of semantic categories. In task paradigms such as word reading and lexical semantic decision, visual complexity can be controlled, but it remains a potential issue because, in comparisons of visual task and control conditions, semantic effects in visual cortex may be eliminated or underestimated. These confounds can be minimized by using word generation paradigms, which require lexical access and lessen effects related to visual presentation and perceptual complexity.

Thus, word generation paradigms are well suited for use in lexicosemantic studies. In a word generation task, participants can be given a semantic category (e.g., Animals) and can be asked to generate words belonging to this category. This generation process involves retrieval of semantic representations of potential lexical exemplars, evaluation of their appropriateness for the given category, retrieval and execution of phonological and articulatory codes, and maintenance in working memory of the category and generated lexical exemplars (Cardebat et al., 1996; Mummery et al., 1996). Word generation paradigms have been used by neuropsychologists to assess frontal lobe function (Milner, 1982) and verbal intelligence (Arffa, 2007), and to investigate cortical areas involved in language processing (Cuenod et al., 1995; Gaillard et al., 2003). However, few studies have used word generation paradigms to examine category-specific semantic representations. Vitali and colleagues (2005) used a covert word generation paradigm to examine category-specific effects while subjects silently generated animal and tool names. They found tool-specific activations in motor cortices, but no activations specific for animals. The study by Vitali et al. had two main limitations. First, participants generated names covertly, so no behavioral data were available to verify performance. Second, the categories (animals and tools) were used repeatedly, and imaging results may have been confounded by practice effects and by repeated generation of identical items.

The current study used a data set for which results related to word generation modes (overt vs. covert; paced vs. unpaced) have been previously published (Basho et al., 2007). In the present study, we investigated whether generating words belonging to categories with strong experiential sensorimotor components would activate relevant sensorimotor regions. We used a category-driven word generation task to examine category-specific activations during word generation (for a list of categories, see Table 1). We predicted that categories with highly salient visual properties (Animals, Colors, Shapes) would activate extrastriate regions, categories related to somatosensory experience (Body parts, Things you eat, Things you drink) would activate postcentral brain regions, and categories with strong motion components (Tools, Sports, Transportation) would activate primary motor or premotor regions, as well as regions associated with the perception of motion, such as superior temporal sulcus and temporoparietal area MT.

Table 1.

Semantic Categories Grouped by Modality

| Modality | Categories |
|---|---|
| “Visual” | Animals |
| | Colors |
| | Shapes |
| “Motor” (incl. motion perception) | Sports |
| | Tools |
| | Transportation |
| “Somatosensory” (incl. gustatory and olfactory modalities) | Body Parts |
| | Things you eat |
| | Things you drink |
| Categories not included in fMRI analysis | Academic subjects |
| | Car parts |
| | Musical instruments |
| | Furniture |
| | Hobbies |
| | Occupations |

Methods

Participants

Participants were 13 healthy right-handed college students ages 21–37 years (M = 25.8 yrs), including four men and nine women, all of whom were native speakers of English. The study was approved by the Institutional Review Boards of San Diego State University and University of California San Diego. All participants gave informed consent.

Tasks

Category-driven word generation was implemented using a modification of the traditional verbal fluency task. The paradigm was also designed to examine the brain activation effects of covert and overt speech as well as response pacing (paced, unpaced) (as published separately in Basho et al., 2007). The Presentation software package (Neurobehavioral Systems, Inc., 2003) was used for stimulus presentation. At the beginning of each task block, a semantic category was given by an auditory instruction (e.g., “Tell me animals”). There were two response modes. In overt conditions participants responded verbally, whereas in covert conditions they generated words without overt speech. In addition, there were two response pacing conditions. In paced versions, participants received a prompt (an exclamation mark presented for 1.5 sec) every three seconds and were instructed to name one exemplar per exclamation mark. Twelve such prompts were presented per experimental block and category. Participants were instructed to say the word “nothing” (overtly or covertly, depending on condition), if they could not think of a new exemplar when prompted. In the unpaced conditions, the exclamation point stayed continuously on the screen for 36 seconds and subjects were instructed to name as many exemplars as possible at their own pace. Similar to the paced versions, subjects were instructed to say the word “nothing” when unable to produce new exemplars, but at their own pace. In the control condition, subjects repeatedly produced the word “nothing.” Four functional runs were acquired, one for each generation mode condition of the category-driven word generation paradigm: paced-overt, paced-covert, unpaced-overt and unpaced-covert. The order of functional runs was counterbalanced across subjects. 
From a total of 16 semantic categories (pseudorandomly distributed across four generation modes) nine categories with strong experiential sensorimotor components were selected for the present study (Table 1). These nine categories were grouped into three sensorimotor domains (each containing three categories) according to the modality (visual, motor, or somatosensory) predominating the experiential bases of lexical acquisition. Note that the categories subsumed under “motor” included both action and perception-related aspects of movement (see Discussion).
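The paced timing described above can be illustrated with a short sketch. It assumes, for illustration only, that the first prompt appears at the start of the generation period (time 0); the function and constant names are hypothetical and are not taken from the original Presentation script.

```python
# Sketch of the paced prompt schedule: 12 prompts, one every 3 s,
# each exclamation mark shown for 1.5 s.
# Assumption (not stated in the text): the first prompt starts at t = 0 s.
PROMPT_INTERVAL_S = 3.0
PROMPT_DURATION_S = 1.5
N_PROMPTS = 12


def paced_prompt_schedule(n_prompts=N_PROMPTS,
                          interval_s=PROMPT_INTERVAL_S,
                          duration_s=PROMPT_DURATION_S):
    """Return (onset, offset) times in seconds for each prompt."""
    return [(i * interval_s, i * interval_s + duration_s)
            for i in range(n_prompts)]


schedule = paced_prompt_schedule()
# Total generation window covered by the paced prompts.
generation_window_s = N_PROMPTS * PROMPT_INTERVAL_S

for onset, offset in schedule:
    print(f"prompt on at {onset:4.1f} s, off at {offset:4.1f} s")
print(f"generation window: {generation_window_s:.0f} s")
```

Under these assumptions the paced window spans 12 × 3 s = 36 s, which matches the 36 seconds for which the exclamation point stayed on screen in the unpaced conditions.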

fMRI Scanning

The experiment was conducted using a Varian 3T scanner at the Center for Functional MRI of the University of California, San Diego. Four functional runs were acquired for each subject (144 time points per run, echo time [TE] = 40 ms, repetition time [TR] = 2000 ms, flip angle = 90 degrees; field of view = 21 cm, 64×64 matrix with an in-plane resolution of 3.125 mm × 3.125 mm, slice thickness = 4 mm, 32 axial slices). In each functional run, four experimental blocks (42 seconds each) were alternated with four control blocks (28 seconds each), for a total duration of 4 minutes and 48 seconds per run. Four time point volumes at the beginning of each run were discarded to allow magnetization to reach equilibrium. Structural images were sagittally acquired for each subject using a spoiled GRASS sequence (SPGR), with an isotropic voxel dimension of 1 mm³.
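The run timing can be checked with simple arithmetic. The sketch below is one reading of the reported numbers, and it assumes that the 144 time points include the four discarded volumes, which the text does not state explicitly. All names are illustrative.

```python
# Consistency check of the reported run timing.
# Assumption: the 144 time points per run include the 4 discarded volumes.
TR_S = 2.0               # repetition time in seconds
N_VOLUMES = 144          # time points per run
N_DISCARDED = 4          # volumes discarded at the start of each run
N_BLOCK_PAIRS = 4        # experimental/control block pairs per run
TASK_BLOCK_S = 42.0      # duration of one experimental block
CONTROL_BLOCK_S = 28.0   # duration of one control block


def mmss(seconds):
    """Format a duration in seconds as m:ss."""
    minutes, secs = divmod(int(round(seconds)), 60)
    return f"{minutes}:{secs:02d}"


acquired_s = N_VOLUMES * TR_S
blocks_s = N_BLOCK_PAIRS * (TASK_BLOCK_S + CONTROL_BLOCK_S)
discarded_s = N_DISCARDED * TR_S

print("acquired:", mmss(acquired_s))
print("blocks:", mmss(blocks_s))
print("discarded:", mmss(discarded_s))
```

On this reading, the blocks account for 280 s and the discarded volumes for 8 s, which together give the stated 4 minutes and 48 seconds (288 s) per run.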

Processing of behavioral data

Overt responses were recorded using a Commander XG MRI-compatible sound system (Resonance Technology, Inc., Northridge, CA), with a microphone attached to headphones worn by the subject during the MR scans. Responses were recorded on a laptop computer using SoundEdit 16 software (Macromedia, Inc., 1995) at a sampling rate of 44.1 kHz. Due to technical problems, recordings were not available for three participants. Recordings were filtered to remove scanner noise and improve intelligibility of vocal responses for the remaining participants, using Audacity (http://audacity.sourceforge.net/). The following filtering approach was adopted: Using the equalizer function we increased signal strength of frequencies <300 Hz and decreased frequencies >400 Hz. This reduced scanner noise considerably. Nonetheless, in two of the ten subjects from whom recordings were available, responses remained unintelligible, probably due to the placement of the microphone (for details see Basho et al., 2007). Behavioral results were therefore limited to eight participants. Responses were transcribed and scored to exclude any exemplars that were repeated within a category. For each modality, the mean number of exemplars across subjects and categories was calculated.
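The scoring rule described above (exclude repeats within a category, then average across subjects and categories within each modality) could be sketched as follows. The transcripts are invented toy data, the names are hypothetical, and discarding the filler word “nothing” is an assumption, since the text only mentions excluding repeated exemplars.

```python
from statistics import mean

# Modality assignment for a subset of the categories in Table 1.
MODALITY = {
    "Animals": "Visual", "Colors": "Visual", "Shapes": "Visual",
    "Sports": "Motor", "Tools": "Motor", "Transportation": "Motor",
}


def score_block(responses):
    """Count unique exemplars in one block (case-insensitive).

    Assumption: the filler response "nothing" is not an exemplar.
    """
    seen = set()
    for word in responses:
        normalized = word.strip().lower()
        if normalized == "nothing" or normalized in seen:
            continue
        seen.add(normalized)
    return len(seen)


def mean_exemplars_by_modality(transcripts):
    """Average block scores across subjects and categories per modality."""
    scores = {}
    for (_subject, category), words in transcripts.items():
        scores.setdefault(MODALITY[category], []).append(score_block(words))
    return {modality: mean(values) for modality, values in scores.items()}


# Hypothetical toy transcripts keyed by (subject, category).
toy_transcripts = {
    ("s1", "Animals"): ["dog", "cat", "dog", "nothing"],
    ("s2", "Animals"): ["horse", "cow", "pig", "Cow"],
    ("s1", "Tools"): ["hammer", "saw", "wrench"],
}
toy_means = mean_exemplars_by_modality(toy_transcripts)
print(toy_means)
```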

Image Processing and Analysis

After fieldmap correction for reduction of image distortions, data for each subject were preprocessed using the FMRIB Software Library (Smith et al., 2004). Functional runs first underwent brain extraction (BET), then image time series were motion corrected (MCFLIRT), registered to the high-resolution structural volume of the individual subject (FLIRT), and spatially smoothed (6 mm Gaussian kernel). General Linear Model (GLM) statistical analyses were performed using Analysis of Functional NeuroImages (AFNI) software (Cox, 1996) to identify word generation effects and generation mode effects (reported in Basho et al., 2007). Effects of head motion were modeled based on output from motion detection in MCFLIRT and included as 6 orthogonal regressors (3 translations and 3 rotations). Mean displacement in head position was ≤0.1mm (with no significant difference between overt and covert generation; see Basho et al. (2007) for further details).

Category-specific effects were identified by creating GLM contrasts comparing three different sensorimotor modalities (collapsing all 3 categories within each modality) against the control condition (i.e., Visual vs. Control, Motor vs. Control, and Somatosensory vs. Control). All categories with strong modality components were included in the category-specific analysis (Table 1). Statistical maps for each contrast were normalized to Talairach space (Talairach and Tournoux, 1988), and fit coefficients were entered into groupwise t-tests. To correct for multiple comparisons, cluster significance was determined by Monte Carlo-type alpha simulation (Forman et al., 1995). For all comparisons, a corrected significance threshold of p <.05 was used. Peak activation voxels were identified within each activation cluster, and the mean percent signal change was calculated for each peak voxel, participant, and category. Additional t-tests were carried out for percent signal change at each peak voxel for comparison between category types.
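The between-category comparisons at peak voxels can be illustrated with a paired t-test on per-participant percent signal change. The text does not name the exact test variant; a paired test is assumed here because it yields 12 degrees of freedom for 13 participants, as reported in Table 4. The function name and the input values are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(values_a, values_b):
    """Paired t-test statistic for two matched samples.

    Returns (mean difference, t value, degrees of freedom).
    """
    if len(values_a) != len(values_b):
        raise ValueError("samples must have the same length")
    diffs = [a - b for a, b in zip(values_a, values_b)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)  # stdev uses n - 1
    return mean(diffs), mean(diffs) / standard_error, n - 1


# Hypothetical percent signal change at one peak voxel, 13 participants.
visual = [0.52, 0.40, 0.61, 0.35, 0.48, 0.55, 0.44,
          0.39, 0.58, 0.47, 0.50, 0.42, 0.53]
motor = [0.30, 0.28, 0.35, 0.20, 0.31, 0.40, 0.22,
         0.25, 0.33, 0.29, 0.36, 0.18, 0.27]

mean_diff, t_value, df = paired_t(visual, motor)
print(f"difference = {mean_diff:.2f}, t({df}) = {t_value:.2f}")
```

The p value would then be read from the t distribution with the returned degrees of freedom, for example with a statistics package.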

Results

Behavioral findings

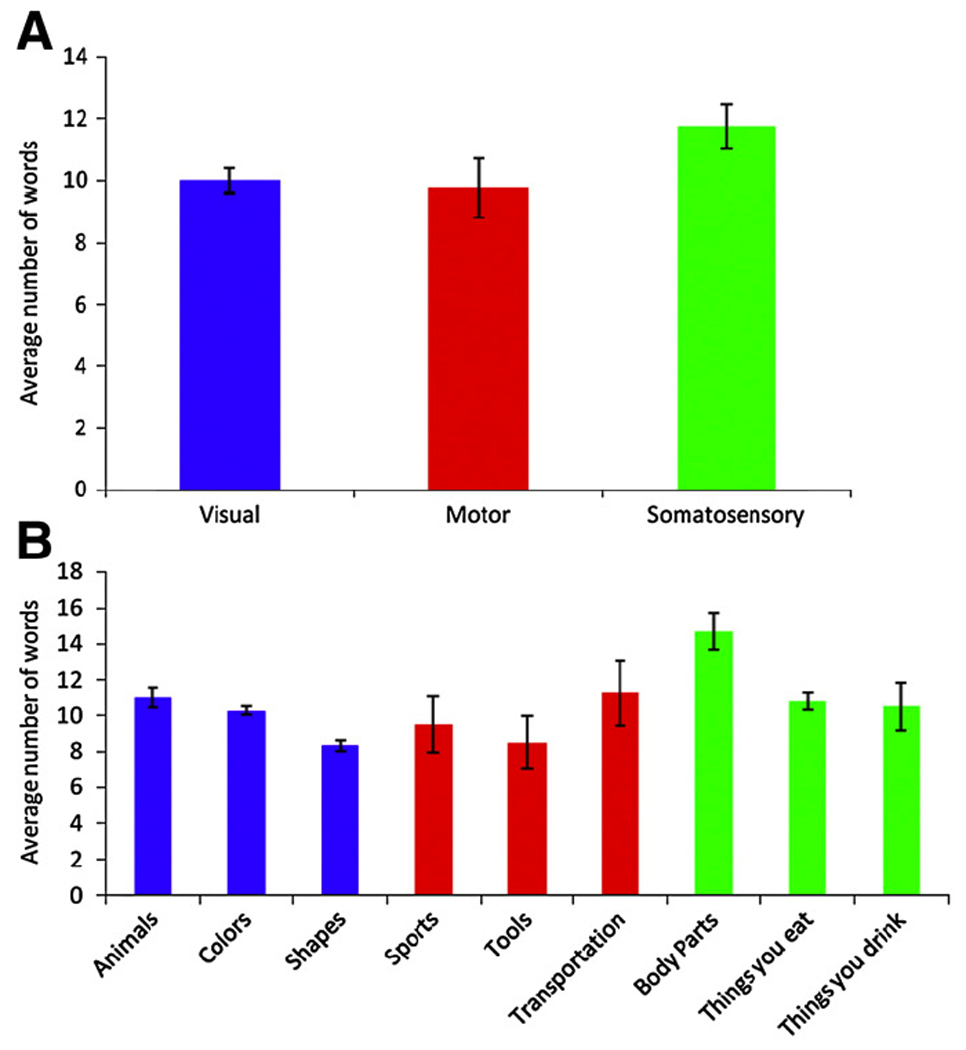

Based on the data collected from overt word generation blocks, subjects generally performed at high levels across the modality-specific categories. As can be seen in Figure 1 A, the mean number of items generated tended to be higher for somatosensory than for visual and motor categories. Figure 1 B shows that subjects performed at similar levels for visual, motor, and somatosensory categories, although the number of items produced for the category Body parts was slightly higher than for the other categories. In a within-subject repeated measures analysis of variance, the factor category did not reach significance (F(8,8) = 3.079, p = 0.066).

Figure 1.

(A) Average number of words produced per block for each modality. (B) Average number of words produced per block for each category. Error bars represent standard error.

Imaging findings

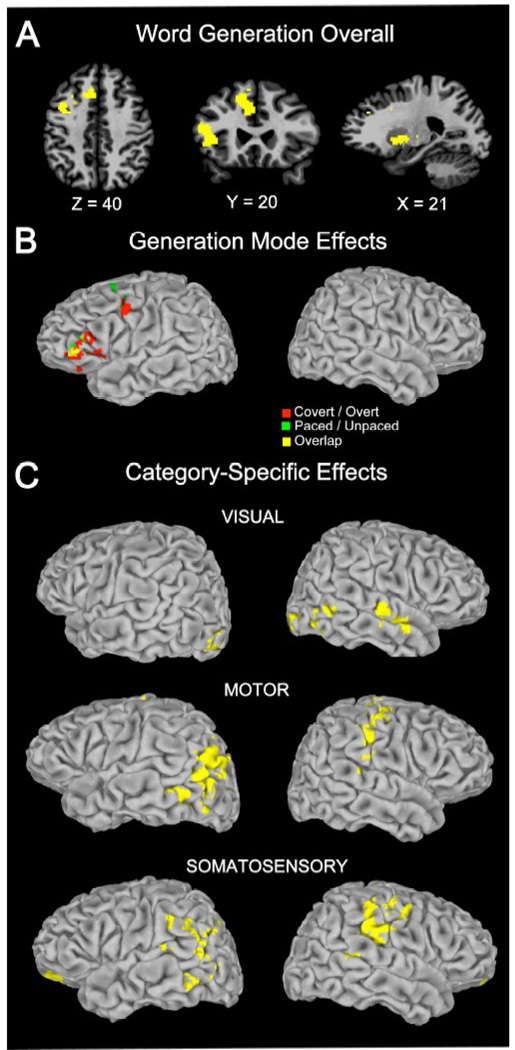

Activations for word generation overall are presented for background reference (Figure 2 panel A and Table 2; for details from a previous analysis in a largely overlapping sample, see Basho et al., 2007). Activations for word generation overall were seen in the left inferior and middle frontal gyri, left medial frontal gyrus, bilateral anterior cingulate gyri, thalamus, and basal ganglia.

Figure 2.

Activation clusters for word generation overall (panel A), generation mode effects (Covert/Overt, Paced/Unpaced) compared to baseline (panel B), and clusters for motor, visual and somatosensory categories (panel C). Category-specific effects occurred exclusively in regions outside those showing generation mode effects. All clusters p < .05 (corr.).

Table 2.

Cluster list for word generation effect (across all conditions)

| Cluster Size (µl) | Peak Talairach x | Peak Talairach y | Peak Talairach z | Peak t value | Location of peak activation (approximate Brodmann area) |
|---|---|---|---|---|---|
| 4568 | −13 | −17 | 15 | 8.9 | Left thalamus |
| | −16 | −6 | 13 | 8.0 | Left lentiform nucleus |
| 4336 | −34 | 17 | 17 | 12.5 | Left inferior frontal (44/45) |
| 2608 | −4 | 17 | 39 | 9.3 | Left cingulate (32) |
| 2336 | −24 | 2 | 52 | 9.5 | Left middle frontal (6) |
| 1752 | −9 | −58 | −2 | 7.7 | Left lingual (19) |

Note. All clusters p < .05 corrected.

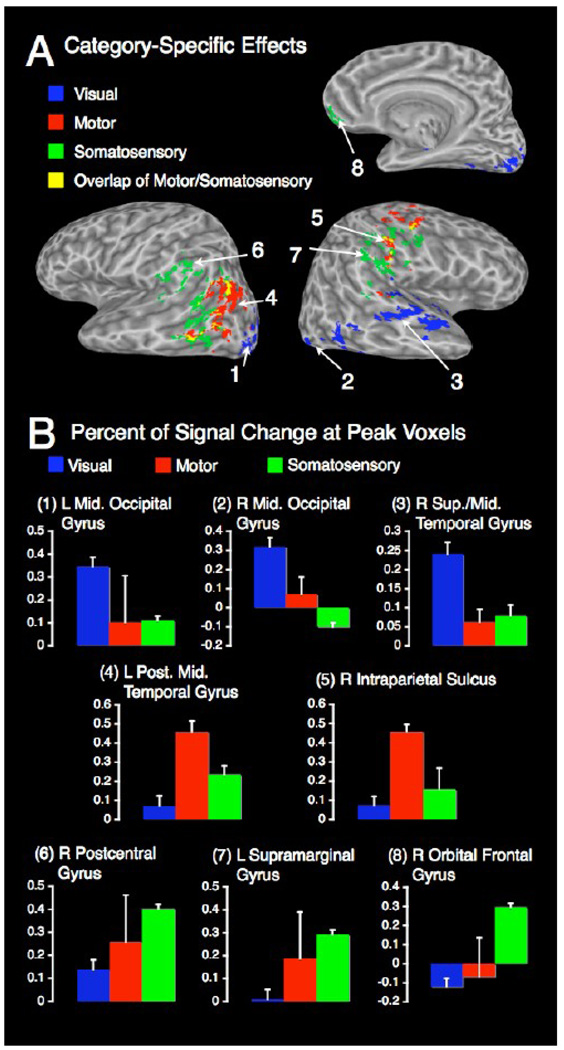

Category-specific effects were found for each of the three sensorimotor modalities (Figure 2 panel C, Figure 3, and Table 3). Visual categories were associated with activations in bilateral lateral occipital cortex and right superior temporal gyrus. For categories in the motor domain, activations were found in the left angular gyrus, left posterior middle temporal gyrus, and right intraparietal sulcus. For somatosensory categories, effects were detected in right postcentral gyrus, left supramarginal gyrus, and bilateral orbital frontal gyrus. Each of these sites showed significant category-specific activations for one modality, but not for the others (Figure 3 panel B, and Table 4), except for some overlap of category-specific activations for motor and somatosensory categories observed in the left middle temporal cortex and temporo-parietal junction and in right pericentral cortex (Figure 3 panel A).

Figure 3.

(A) Clusters of category-specific effects for each modality and regions of overlap between motor and somatosensory categories; all clusters p < .05 (corr.). (B) Percent signal change at peak voxels for each cluster indicated by numbers in panel A.

Table 3.

Cluster list for category-specific effects

| Cluster Size (µl) | Peak Talairach x | Peak Talairach y | Peak Talairach z | Peak t value | Location of peak activation(s) (approximate Brodmann area) |
|---|---|---|---|---|---|
| Visual | | | | | |
| 3112 | −28 | −90 | 4 | 6.7 | Left Middle Occipital Gyrus (18) |
| 2240 | 36 | −89 | −3 | 5.7 | Right Middle Occipital Gyrus (18) |
| 1920 | 60 | −12 | −2 | 6.4 | Right Superior Temporal Gyrus/Sulcus (22); Right Middle Temporal Gyrus (21) |
| Motor | | | | | |
| 8800 | −45 | −69 | 34 | 8.7 | Left Angular Gyrus (39); Left Posterior Middle Temporal Gyrus (21) |
| 3952 | 49 | −2.4 | 46 | 6.9 | Right Intraparietal Sulcus (2) |
| Somatosensory | | | | | |
| 3212 | 56 | −2.9 | 41 | 6.3 | Right Postcentral Gyrus (2) |
| 3976 | −56 | −44 | 31 | 5.7 | Left Supramarginal Gyrus (40) |
| 1016 | 5 | 48 | −20 | 5.9 | Right Orbital Frontal Gyrus (11) |

Note. All clusters p < .05 (corrected)

Table 4.

Between-category comparisons of mean signal change for peak loci

| Comparisons | Difference in mean percent signal change | t | df | p |
|---|---|---|---|---|
| Left Middle Occipital Gyrus | | | | |
| Visual - Motor | 0.24 | 1.16 | 12 | 0.269 |
| Visual - Somatosensory | 0.23 | 7.07 | 12 | <0.001 |
| Right Middle Occipital Gyrus | | | | |
| Visual - Motor | 0.25 | 2.47 | 12 | 0.029 |
| Visual - Somatosensory | 0.42 | 11.34 | 12 | <0.001 |
| Right Superior Temporal Sulcus | | | | |
| Visual - Motor | 0.18 | 3.97 | 12 | 0.002 |
| Visual - Somatosensory | 0.16 | 5.55 | 12 | <0.001 |
| Left Posterior Middle Temporal Gyrus | | | | |
| Motor - Visual | 0.39 | 4.96 | 12 | <0.001 |
| Motor - Somatosensory | 0.22 | 4.27 | 12 | 0.001 |
| Right Intraparietal Sulcus | | | | |
| Motor - Visual | 0.38 | 6.58 | 12 | <0.001 |
| Motor - Somatosensory | 0.3 | 3.67 | 12 | 0.003 |
| Right Postcentral Gyrus | | | | |
| Somatosensory - Visual | 0.26 | 3.71 | 12 | 0.003 |
| Somatosensory - Motor | 0.14 | 1.71 | 12 | 0.113 |
| Left Supramarginal Gyrus | | | | |
| Somatosensory - Visual | 0.28 | 2.69 | 12 | 0.020 |
| Somatosensory - Motor | 0.11 | 0.81 | 12 | 0.434 |
| Right Orbital Frontal Gyrus | | | | |
| Somatosensory - Visual | 0.42 | 5.37 | 12 | <0.001 |
| Somatosensory - Motor | 0.36 | 4.79 | 12 | <0.001 |

Discussion

We examined brain activation patterns associated with category-driven word generation. For word generation overall, patterns of activation were comparable to those in similar studies (Costafreda et al., 2006). Specifically, portions of the left inferior and middle frontal gyri were activated. These regions are believed to contribute to word retrieval and selection (Thompson-Schill et al., 1997; Kan et al., 2006), as well as verbal working memory (Braver et al., 1997; Chein et al., 2002). Additional cortical activation was seen in left anterior cingulate gyrus, which likely reflects the attentional demands of the word generation task (Abrahams et al., 2003; Phelps et al., 1997). Subcortical activations that occurred in thalamus and basal ganglia were consistent with previous imaging studies showing involvement of these regions in word generation (Crosson et al., 2003).

When categories were grouped according to modalities predominantly involved in lexical learning and were compared separately to the control condition, each comparison revealed activation in the vicinity of modality-specific sensorimotor cortices. For example, categories in which lexical items were acquired primarily based on visual perception (Colors, Shapes, and Animals) were associated with activation in lateral occipital cortex (area 18) bilaterally, which is part of extrastriate visual cortex (McFadzean et al., 1999; Tanaka, 1996; Tootell et al., 1998). This is consistent with a previous study showing that retrieval of color information activated regions associated with color perception (Simmons et al., 2007). Visual categories also activated cortex around the right superior temporal sulcus (STS). The STS has been previously found to activate during the perception of biological motion (as reviewed in Puce and Perrett, 2003). However, many studies of biological motion perception have reported activation in more posterior portions of STS, compared to the current finding.

Categories with a strong movement component (Sports, Tools, Transportation) were associated with activation in the left posterior middle temporal gyrus, left angular gyrus, and right intraparietal sulcus. The activation peak we found in left angular gyrus was located at a distance of 7 mm from area MT+ as defined by a probabilistic cytoarchitectonic map (Eickhoff et al., 2005), an area known to be crucially involved in motion perception (Grill-Spector and Malach, 2004). The left posterior middle temporal gyrus has been shown to be associated with action/function concepts in many studies (Chao et al., 2002; Martin et al., 1995; Noppeney et al., 2005). Activation in the left angular gyrus has been reported in studies of action planning and understanding (Johnson-Frey et al., 2005). We also found activation in right intraparietal sulcus. While this region has not been previously reported as associated with motor-related semantic representations, its left homologue has been shown to be activated during naming of tool pictures (Chao and Martin, 2000). Absence of effects in motor cortex may appear surprising and could be related to inclusion of categories involving strong motion perception components (see below). Note, however, that some other studies examining action-related semantics (Noppeney et al., 2005; Hauk et al., 2008) likewise did not detect significant effects in motor cortex.

Categories associated with somatosensory and proprioceptive experience (Body parts, Things you drink, Things you eat) activated right postcentral, left supramarginal, and bilateral orbitofrontal gyri. Postcentral gyrus is the location of primary somatosensory cortex. Both postcentral and supramarginal gyri have been shown to be involved in haptic perception (Fabri et al., 2005; Golaszewski et al., 2002; Jones et al., 2007). It has been further argued that anterior insula, frontal operculum and orbitofrontal cortex are involved in integrating olfactory and gustatory perception (de Araujo et al., 2003; Small et al., 2007; Small and Prescott, 2005). In particular, orbitofrontal cortex has been shown to be activated during viewing of food pictures (Gonzalez et al., 2006; Simmons et al., 2005) and judgment of gustatory attributes (Goldberg et al., 2006a, b).

Our design was based on the predominant impact of specific sensorimotor modalities on given categories. Only categories that were sufficiently “rich” to allow easy generation of words throughout a block of 36 seconds could be used in this fMRI implementation, thus constraining the selection of adequate categories. This prevented us from including the auditory modality in our study because we could not identify three primarily auditory-based categories that fulfilled the above criterion. While a few of our categories (Shapes, Colors) were almost purely unimodal, most of them were only predominantly so. Note that even a category such as Shapes, while being heavily based on visual perception, may conceivably contain some somatosensory experiential basis (e.g., what it feels like to touch a triangle versus a circle). We found several small clusters of overlapping effects for motor and somatosensory categories in left middle temporal cortex and temporo-parietal junction and in right pericentral cortex. This is not unexpected given the close relationship of motor and somatosensory systems, both functionally and with regard to the location of primary cortices. Furthermore, motor categories such as Sports and Tools clearly also relate to proprioceptive and tactile experience, just as somatosensory categories, such as Body parts, may relate to motor experiences and motion perception.

In general, our assignment of individual categories to a modality was thus based on the relative predominance of sensorimotor modality experience during semantic knowledge acquisition. For example, the acquisition of lexical items for the category “Animals” depends heavily on visual components with less prevalent somatosensory and motor components, whereas acquisition of items for “Tools” relies more strongly on motor and functional experience. Somatosensory categories (Body parts, Things you can eat/drink) were selected because pilot behavioral studies had shown that these were sufficiently rich for production of about 10 items in 36 seconds (Basho et al., 2007, and unpublished data). It is clear that these latter categories are by no means exclusively founded on somatosensory experience: All three of them also rely on visual experience, and the categories “Things you can eat/drink” also incorporate experience from the chemical senses (gustatory, olfactory) that was presumably reflected in the orbitofrontal fMRI effects presented above.

The categories attributed to the motor modality related to movement both perceptually and motorically. On the perceptual side, they were based heavily on motion perception, which is typically considered part of the visual system, in particular involving area MT (Grill-Spector and Malach, 2004) and the superior temporal sulcus (Puce and Perrett, 2003). On the motor side, these categories were heavily based on experience of one’s own actions, largely controlled by premotor and parietal regions.

Categories considered to belong to the visual modality also varied. “Colors” and “Shapes” related to “early” visual processing, while “Animals” was linked to more complex perception along the ventral stream. Our finding of bilateral activity in extrastriate occipitotemporal cortex could be attributed to the two former, less complex visual categories. However, since each category could be used in only a single block to avoid practice effects, the number of time points was too small to examine the effects of each category on its own.

A potential explanation for our category-specific activations is that subjects were engaging in mental imagery during word generation, thus inducing activations in perceptual cortices. A recent study by Hauk and colleagues (2008) addressed the possibility that category-specific activations reflect mental imagery instead of lexicosemantic processing. Using a single-word reading task, they showed that category-specific activations negatively correlated with word frequency in fusiform gyrus for vision-related words and middle temporal gyrus for action-related words. Word frequency is generally not correlated with mental imagery, but is associated with accessing lexicosemantic information. This study suggests that category-specific activations were not induced by mental imagery, but instead reflect lexicosemantic processing.

Our findings overall are consistent with the sensorimotor theory of lexicosemantic organization, according to which lexicosemantic information is grounded in the function of corresponding sensorimotor cortices (Barsalou, 1999, 2008; Martin, 2007). They are also consistent with evidence that retrieval of episodic memory information associated with a specific sensory modality activates some of the same brain regions that are involved in the perception of that information (cf. Wheeler et al., 2000).

The categories we tested were pseudo-randomly assigned to different generation mode conditions (paced/unpaced; covert/overt response); therefore it was unlikely that domain-specific effects were driven by generation mode effects of the paradigm (Basho et al., 2007). Nonetheless, we inspected the location of category-specific findings in comparison with generation mode findings. As seen in Fig. 2 panel B, generation mode effects occurred in regions that did not overlap with category-specific effects. We can therefore be confident that the category-specific effects reported here were not related to the use of different versions of the word generation task.

An advantage of implementing a task with non-pictorial stimuli for the study of lexicosemantic representation is that perceptual confounds related to the complexity of visual stimuli are minimized. Some researchers have speculated that category-specific activations observed in visual cortices in previous studies were driven by visual processing related to stimulus presentation rather than semantic retrieval (Devlin et al., 2005; Gerlach, 2007; Tyler et al., 2003). A few previous studies have utilized tasks with minimal visual stimulus presentation such as word reading, lexical decision, or auditory word presentation to study lexicosemantic representations (Hauk et al., 2004; Mechelli et al., 2006; Noppeney et al., 2006; Pulvermüller and Hauk, 2006). However, in lexical decision or reading tasks, lexicosemantic representations are activated by external stimuli, whereas our word generation task required participants to voluntarily search and retrieve internal lexicosemantic representations. Aside from minimizing perceptual confounds, as discussed above, our word generation paradigm had the advantage of requiring active retrieval of lexicosemantic representations. Cortical activations observed in the present study thus reflected the active process of lexicosemantic retrieval.

We found category-general activations in left lateral frontal cortex across all categories and modalities (Basho et al., 2007), and category-specific activations in cortices with established sensorimotor and perceptual functions. The category-general activations in left inferior frontal cortex suggest that, during word generation, this region is involved in the search for and retrieval of lexicosemantic knowledge (Kan and Thompson-Schill, 2004; Thompson-Schill, 2003). In contrast, the category-specific activations we observed in sensorimotor cortices likely reflect the brain representation of lexicosemantic knowledge itself. Our results suggest that lexicosemantic knowledge is at least partially represented in sensorimotor cortices. In particular, they suggest that vision-based semantic representations involve cortices associated with visual perception, action/function-based semantic representations involve regions associated with movement and motion processing, and semantic representations based on somatosensory, gustatory, and olfactory experiences involve corresponding sensorimotor cortices.

One effective connectivity study found that left lateral frontal cortex was functionally connected with category-specific regions during a semantic fluency task (Vitali et al., 2005). Specifically, left inferior frontal cortex was functionally connected with left motor cortex and left temporal-parietal junction for generation of tool names, and with visual association cortex for generation of animal names. These connectivity patterns suggest that left frontal cortex retrieves and selects information from category-specific regions during the word generation process.

To summarize, our results show that category-specific activation can be observed in a task that minimizes perceptual input confounds and requires participants to actively engage in lexical retrieval and selection. Further, differentially activated sensorimotor regions seem to reflect the modalities that are predominant during and crucial for word acquisition. These findings strongly suggest that at least some components of lexicosemantic representations are processed in sensorimotor cortices.

Acknowledgments

This study was supported by the National Institutes of Health, grant R01-NS43999. Thanks to Miguel Rubio for general technical assistance and to Patricia Shih for comments on the manuscript.

References

- Abrahams S, Goldstein LH, Simmons A, Brammer MJ, Williams SC, Giampietro VP, Andrew CM, Leigh PN. Functional magnetic resonance imaging of verbal fluency and confrontation naming using compressed image acquisition to permit overt responses. Hum Brain Mapp. 2003;20:29–40. doi: 10.1002/hbm.10126.

- Arffa S. The relationship of intelligence to executive function and non-executive function measures in a sample of average, above average, and gifted youth. Arch Clin Neuropsychol. 2007;22:969–978. doi: 10.1016/j.acn.2007.08.001.

- Barsalou LW. Perceptual symbol systems. Behav Brain Sci. 1999;22:577–609. discussion 610–660. doi: 10.1017/s0140525x99002149.

- Barsalou LW. Grounded cognition. Annu Rev Psychol. 2008;59:617–645. doi: 10.1146/annurev.psych.59.103006.093639.

- Basho S, Palmer ED, Rubio MA, Wulfeck B, Müller R-A. Effects of generation mode in fMRI adaptations of semantic fluency: Paced production and overt speech. Neuropsychologia. 2007;45:1697–1706. doi: 10.1016/j.neuropsychologia.2007.01.007.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. FMRI responses to video and point-light displays of moving humans and manipulable objects. J Cogn Neurosci. 2003;15:991–1001. doi: 10.1162/089892903770007380.

- Braver TS, Cohen JD, Nystrom LE, Jonides J, Smith EE, Noll DC. A parametric study of prefrontal cortex involvement in human working memory. Neuroimage. 1997;5:49–62. doi: 10.1006/nimg.1996.0247.

- Cardebat D, Demonet JF, Viallard G, Faure S, Puel M, Celsis P. Brain functional profiles in formal and semantic fluency tasks: a SPECT study in normals. Brain Lang. 1996;52:305–313. doi: 10.1006/brln.1996.0013.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. Neuroimage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Chao LL, Weisberg J, Martin A. Experience-dependent modulation of category-related cortical activity. Cereb Cortex. 2002;12:545–551. doi: 10.1093/cercor/12.5.545.

- Chein JM, Fissell K, Jacobs S, Fiez JA. Functional heterogeneity within Broca's area during verbal working memory. Physiol Behav. 2002;77:635–639. doi: 10.1016/s0031-9384(02)00899-5.

- Costafreda SG, Fu CH, Lee L, Everitt B, Brammer MJ, David AS. A systematic review and quantitative appraisal of fMRI studies of verbal fluency: role of the left inferior frontal gyrus. Hum Brain Mapp. 2006;27:799–810. doi: 10.1002/hbm.20221.

- Cox RW. AFNI: Software for analysis and visualization of functional magnetic resonance neuroimages. Computers and Biomedical Research. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014.

- Crosson B, Benefield H, Cato MA, Sadek JR, Moore AB, Wierenga CE, Gopinath K, Soltysik D, Bauer RM, Auerbach EJ, Gokcay D, Leonard CM, Briggs RW. Left and right basal ganglia and frontal activity during language generation: contributions to lexical, semantic, and phonological processes. J Int Neuropsychol Soc. 2003;9:1061–1077. doi: 10.1017/S135561770397010X.

- Cuenod CA, Bookheimer SY, Hertz-Pannier L, Zeffiro TA, Theodore WH, Le Bihan D. Functional MRI during word generation, using conventional equipment: a potential tool for language localization in the clinical environment. Neurology. 1995;45:1821–1827. doi: 10.1212/wnl.45.10.1821.

- Damasio H, Grabowski TJ, Tranel D, Hichwa RD, Damasio AR. A neural basis for lexical retrieval. Nature. 1996;380:499–505. doi: 10.1038/380499a0.

- Damasio H, Tranel D, Grabowski T, Adolphs R, Damasio A. Neural systems behind word and concept retrieval. Cognition. 2004;92:179–229. doi: 10.1016/j.cognition.2002.07.001.

- de Araujo IE, Rolls ET, Kringelbach ML, McGlone F, Phillips N. Taste-olfactory convergence, and the representation of the pleasantness of flavour, in the human brain. Eur J Neurosci. 2003;18:2059–2068. doi: 10.1046/j.1460-9568.2003.02915.x.

- Devlin JT, Rushworth MF, Matthews PM. Category-related activation for written words in the posterior fusiform is task specific. Neuropsychologia. 2005;43:69–74. doi: 10.1016/j.neuropsychologia.2004.06.013.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034.

- Fabri M, Polonara G, Salvolini U, Manzoni T. Bilateral cortical representation of the trunk midline in human first somatic sensory area. Hum Brain Mapp. 2005;25:287–296. doi: 10.1002/hbm.20099.

- Forman SD, Cohen JD, Fitzgerald M, Eddy WF, Mintun MA, Noll DC. Improved assessment of significant activation in functional magnetic resonance imaging (fMRI): use of a cluster-size threshold. Magn Reson Med. 1995;33:636–647. doi: 10.1002/mrm.1910330508.

- Gaillard WD, Sachs BC, Whitnah JR, Ahmad Z, Balsamo LM, Petrella JR, Braniecki SH, McKinney CM, Hunter K, Xu B, Grandin CB. Developmental aspects of language processing: fMRI of verbal fluency in children and adults. Hum Brain Mapp. 2003;18:176–185. doi: 10.1002/hbm.10091.

- Gerlach C. A review of functional imaging studies on category specificity. J Cogn Neurosci. 2007;19:296–314. doi: 10.1162/jocn.2007.19.2.296.

- Golaszewski SM, Siedentopf CM, Baldauf E, Koppelstaetter F, Eisner W, Unterrainer J, Guendisch GM, Mottaghy FM, Felber SR. Functional magnetic resonance imaging of the human sensorimotor cortex using a novel vibrotactile stimulator. Neuroimage. 2002;17:421–430. doi: 10.1006/nimg.2002.1195.

- Goldberg RF, Perfetti CA, Schneider W. Distinct and common cortical activations for multimodal semantic categories. Cogn Affect Behav Neurosci. 2006a;6:214–222. doi: 10.3758/cabn.6.3.214.

- Goldberg RF, Perfetti CA, Schneider W. Perceptual knowledge retrieval activates sensory brain regions. J Neurosci. 2006b;26:4917–4921. doi: 10.1523/JNEUROSCI.5389-05.2006.

- Gonzalez J, Barros-Loscertales A, Pulvermüller F, Meseguer V, Sanjuan A, Belloch V, Avila C. Reading cinnamon activates olfactory brain regions. Neuroimage. 2006;32:906–912. doi: 10.1016/j.neuroimage.2006.03.037.

- Grill-Spector K, Malach R. The human visual cortex. Annu Rev Neurosci. 2004;27:649–677. doi: 10.1146/annurev.neuro.27.070203.144220.

- Hauk O, Johnsrude I, Pulvermüller F. Somatotopic representation of action words in human motor and premotor cortex. Neuron. 2004;41:301–307. doi: 10.1016/s0896-6273(03)00838-9.

- Hauk O, Shtyrov Y, Pulvermüller F. The time course of action and action-word comprehension in the human brain as revealed by neurophysiology. J Physiol Paris. 2008;102:50–58. doi: 10.1016/j.jphysparis.2008.03.013.

- Humphreys GW, Forde EM. Hierarchies, similarity, and interactivity in object recognition: "category-specific" neuropsychological deficits. Behav Brain Sci. 2001;24:453–476. discussion 476–509.

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. A distributed left hemisphere network active during planning of everyday tool use skills. Cereb Cortex. 2005;15:681–695. doi: 10.1093/cercor/bhh169.

- Jones SR, Pritchett DL, Stufflebeam SM, Hamalainen M, Moore CI. Neural correlates of tactile detection: a combined magnetoencephalography and biophysically based computational modeling study. J Neurosci. 2007;27:10751–10764. doi: 10.1523/JNEUROSCI.0482-07.2007.

- Kan IP, Kable JW, Van Scoyoc A, Chatterjee A, Thompson-Schill SL. Fractionating the left frontal response to tools: dissociable effects of motor experience and lexical competition. J Cogn Neurosci. 2006;18:267–277. doi: 10.1162/089892906775783723.

- Kan IP, Thompson-Schill SL. Selection from perceptual and conceptual representations. Cogn Affect Behav Neurosci. 2004;4:466–482. doi: 10.3758/cabn.4.4.466.

- Kemmerer D, Castillo JG, Talavage T, Patterson S, Wiley C. Neuroanatomical distribution of five semantic components of verbs: Evidence from fMRI. Brain Lang. 2007. doi: 10.1016/j.bandl.2007.09.003.

- Martin A. The Representation of Object Concepts in the Brain. Annu Rev Psychol. 2007;58:25–45. doi: 10.1146/annurev.psych.57.102904.190143.

- Martin A, Haxby JV, Lalonde FM, Wiggs CL, Ungerleider LG. Discrete cortical regions associated with knowledge of color and knowledge of action. Science. 1995;270:102–105. doi: 10.1126/science.270.5233.102.

- Martin A, Wiggs CL, Ungerleider LG, Haxby JV. Neural correlates of category-specific knowledge. Nature. 1996;379:649–652. doi: 10.1038/379649a0.

- McFadzean RM, Condon BC, Barr DB. Functional magnetic resonance imaging in the visual system. J Neuroophthalmol. 1999;19:186–200.

- Mechelli A, Sartori G, Orlandi P, Price CJ. Semantic relevance explains category effects in medial fusiform gyri. Neuroimage. 2006;30:992–1002. doi: 10.1016/j.neuroimage.2005.10.017.

- Milner B. Some cognitive effects of frontal-lobe lesions in man. Philos Trans R Soc Lond B Biol Sci. 1982;298:211–226. doi: 10.1098/rstb.1982.0083.

- Mummery CJ, Patterson K, Hodges JR, Wise RJ. Generating 'tiger' as an animal name or a word beginning with T: differences in brain activation. Proc Biol Sci. 1996;263:989–995. doi: 10.1098/rspb.1996.0146.

- Noppeney U, Josephs O, Kiebel S, Friston KJ, Price CJ. Action selectivity in parietal and temporal cortex. Brain Res Cogn Brain Res. 2005;25:641–649. doi: 10.1016/j.cogbrainres.2005.08.017.

- Noppeney U, Price CJ, Penny WD, Friston KJ. Two distinct neural mechanisms for category-selective responses. Cereb Cortex. 2006;16:437–445. doi: 10.1093/cercor/bhi123.

- Pelphrey KA, Morris JP, Michelich CR, Allison T, McCarthy G. Functional Anatomy of Biological Motion Perception in Posterior Temporal Cortex: An fMRI Study of Eye, Mouth and Hand Movements. Cereb Cortex. 2005;15:1866–1876. doi: 10.1093/cercor/bhi064.

- Phelps EA, Hyder F, Blamire AM, Shulman RG. FMRI of the prefrontal cortex during overt verbal fluency. Neuroreport. 1997;8:561–565. doi: 10.1097/00001756-199701200-00036.

- Puce A, Perrett D. Electrophysiology and brain imaging of biological motion. Philos Trans R Soc Lond B Biol Sci. 2003;358:435–445. doi: 10.1098/rstb.2002.1221.

- Pulvermüller F. Words in the brain's language. Behav Brain Sci. 1999;22:253–259. discussion 280–336.

- Pulvermüller F, Hauk O. Category-specific conceptual processing of color and form in left fronto-temporal cortex. Cereb Cortex. 2006;16:1193–1201. doi: 10.1093/cercor/bhj060.

- Small DM, Bender G, Veldhuizen MG, Rudenga K, Nachtigal D, Felsted J. The role of the human orbitofrontal cortex in taste and flavor processing. Ann N Y Acad Sci. 2007;1121:136–151. doi: 10.1196/annals.1401.002.

- Small DM, Prescott J. Odor/taste integration and the perception of flavor. Exp Brain Res. 2005;166:345–357. doi: 10.1007/s00221-005-2376-9.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23 Suppl 1:S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Simmons WK, Martin A, Barsalou LW. Pictures of appetizing foods activate gustatory cortices for taste and reward. Cereb Cortex. 2005;15:1602–1608. doi: 10.1093/cercor/bhi038.

- Simmons WK, Ramjee V, Beauchamp MS, McRae K, Martin A, Barsalou LW. A common neural substrate for perceiving and knowing about color. Neuropsychologia. 2007;45:2802–2810. doi: 10.1016/j.neuropsychologia.2007.05.002.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme; 1988.

- Tanaka K. Inferotemporal cortex and object vision. Annu Rev Neurosci. 1996;19:109–139. doi: 10.1146/annurev.ne.19.030196.000545.

- Thompson-Schill SL. Neuroimaging studies of semantic memory: inferring "how" from "where". Neuropsychologia. 2003;41:280–292. doi: 10.1016/s0028-3932(02)00161-6.

- Thompson-Schill SL, D'Esposito M, Aguirre GK, Farah MJ. Role of left inferior prefrontal cortex in retrieval of semantic knowledge: a reevaluation. Proc Natl Acad Sci U S A. 1997;94:14792–14797. doi: 10.1073/pnas.94.26.14792.

- Tootell RB, Hadjikhani NK, Vanduffel W, Liu AK, Mendola JD, Sereno MI, Dale AM. Functional analysis of primary visual cortex (V1) in humans. Proc Natl Acad Sci U S A. 1998;95:811–817. doi: 10.1073/pnas.95.3.811.

- Tyler LK, Bright P, Dick E, Tavares P, Pilgrim L, Fletcher P, Greer M, Moss H. Do semantic categories activate distinct cortical regions? Evidence for a distributed neural semantic system. Cognitive Neuropsychology. 2003;20:541–559. doi: 10.1080/02643290244000211.

- Vitali P, Abutalebi J, Tettamanti M, Rowe J, Scifo P, Fazio F, Cappa SF, Perani D. Generating animal and tool names: An fMRI study of effective connectivity. Brain Lang. 2005;93:32–45. doi: 10.1016/j.bandl.2004.08.005.

- Warrington EK, McCarthy RA. Categories of knowledge: further fractionations and an attempted integration. Brain. 1987;110:1273–1296. doi: 10.1093/brain/110.5.1273.

- Wheeler ME, Petersen SE, Buckner RL. Memory's echo: vivid remembering reactivates sensory-specific cortex. Proc Natl Acad Sci U S A. 2000;97:11125–11129. doi: 10.1073/pnas.97.20.11125.