Abstract

Emotional signals are crucial for sharing important information with conspecifics, for example, to warn humans of danger. Humans use a range of different cues to communicate to others how they feel, including facial, vocal, and gestural signals. We examined the recognition of nonverbal emotional vocalizations, such as screams and laughs, across two dramatically different cultural groups. Western participants were compared to individuals from remote, culturally isolated Namibian villages. Vocalizations communicating the so-called “basic emotions” (anger, disgust, fear, joy, sadness, and surprise) were bidirectionally recognized. In contrast, a set of additional emotions was only recognized within, but not across, cultural boundaries. Our findings indicate that a number of primarily negative emotions have vocalizations that can be recognized across cultures, while most positive emotions are communicated with culture-specific signals.

Keywords: communication, affect, universality, vocal signals

Despite differences in language, culture, and ecology, some human characteristics are similar in people all over the world. Because we share the vast majority of our genetic makeup with all other humans, there is great similarity in the physical features that are typical for our species, even though minor characteristics vary between individuals. Like many physical features, aspects of human psychology are shared. These psychological universals can inform arguments about what features of the human mind are part of our shared biological heritage and which are predominantly products of culture and language. For example, all human societies have complex systems of communication to convey their thoughts, feelings, and intentions to those around them (1). However, although there are some commonalities between different communicative systems, speakers of different languages cannot understand each other’s words and sentences. Other aspects of communicative systems do not rely on common lexical codes and may be shared across linguistic and cultural borders. Emotional signals are an example of a communicative system that may constitute a psychological universal.

Humans use a range of cues to communicate emotions, including vocalizations, facial expressions, and posture (2–4). Auditory signals allow for affective communication when the recipient cannot see the sender, for example, across a distance or at night. Infants are sensitive to vocal cues from the very beginning of life, when their visual system is still relatively immature (5).

Vocal expressions of emotions can occur overlaid on speech in the form of affective prosody. However, humans also make use of a range of nonverbal vocalizations to communicate how they feel, such as screams and laughs. In this study, we investigate whether certain nonverbal emotional vocalizations communicate the same affective states regardless of the listener’s culture. Currently, the only available cross-cultural data on vocal signals come from studies of emotional prosody in speech (6–8). This work has indicated that listeners can infer some affective states from emotionally inflected speech across cultural boundaries. However, no study to date has investigated emotion recognition from the voice in a population that has had no exposure to other cultural groups through media or personal contact. Furthermore, emotional information overlaid on speech is restricted by several factors, such as the segmental and prosodic structure of the language and constraints on the movement of the articulators. In contrast, nonverbal vocalizations are relatively “pure” expressions of emotions. Without the simultaneous transmission of verbal information, the articulators (e.g., lips, tongue, larynx) can move freely, allowing for the use of a wider range of acoustic cues (9).

We examined vocal signals of emotions using the two-culture approach, in which participants from two populations that are maximally different in terms of language and culture are compared (10). The claim of universality is strengthened to the extent that the same phenomenon is found in both groups. This approach has previously been used in work demonstrating the universality of facial expressions of the emotions happiness, anger, fear, sadness, disgust, and surprise (11), a result that has now been extensively replicated (12). These emotions have also been shown to be reliably communicated within a cultural group via vocal cues, and additionally, vocalizations effectively signal several positive affective states (3, 13).

To investigate whether emotional vocalizations communicate affective states across cultures, we compared European native English speakers with the Himba, a seminomadic group of over 20,000 pastoral people living in small settlements in the Kaokoland region in northern Namibia. A handful of Himba settlements, primarily those near the regional capital Opuwo, are so-called “show villages” that welcome foreign tourists and media in exchange for payment. However, in the very remote settlements, where the data for the present study were collected, the individuals live completely traditional lives, with no electricity, running water, formal education, or any contact with culture or people from other groups. They have thus not been exposed to the affective signals of individuals from cultural groups other than their own.

Participants heard a short emotional story, describing an event that elicits an affective reaction: for example, that a person is very sad because a close relative of theirs has passed away [see Table S1]. After confirming that they had understood the intended emotion of the story, they were played two vocalization sounds. One of the stimuli was from the same category as the emotion expressed in the story and the other was a distractor. The participant was asked which of the two human vocalizations matched the emotion in the story (Fig. 1). This task avoids problems with direct translation of emotion terms between languages because it includes additional information in the scenarios and does not require participants to be able to read. The English sounds were from a previously validated set of nonverbal vocalizations of emotion, produced by two male and two female British English-speaking adults. The Himba sounds were produced by five male and six female Himba adults, and were selected in an equivalent way to the English stimuli (13).

Fig. 1.

Participant watching the experimenter play a stimulus (Upper) and indicating her response (Lower).

Results

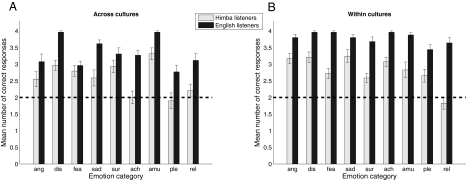

To examine the cross-cultural recognition of nonverbal vocalizations, we tested each group of listeners on the recognition of emotions from vocal signals produced by the other cultural group (Fig. 2A). The English listeners matched the Himba sounds to the story at a level that significantly exceeded chance (χ²(1) = 418.67, P < 0.0001), and they performed better than would be expected by chance for each of the emotion categories [χ²(1) = 30.15 (anger), 100.04 (disgust), 24.04 (fear), 67.85 (sadness), 44.46 (surprise), 41.88 (achievement), 100.04 (amusement), 15.38 (sensual pleasure), and 32.35 (relief), all P < 0.001, Bonferroni corrected]. These data demonstrate that the English listeners could infer the emotional state of each of the categories of Himba vocalizations.
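The per-category tests compare the number of correct choices with the 50% expected by chance in a two-alternative task. A minimal Python sketch of how such a test could be computed, assuming (the article does not state this explicitly) that each statistic is a Pearson goodness-of-fit χ² with 1 degree of freedom on correct/incorrect counts pooled across listeners, and that the Bonferroni correction multiplies each p-value by the nine emotion categories. The helper names are illustrative, and the count of 80 correct out of 104 trials (26 listeners × 4 trials) is back-calculated for illustration rather than reported; it reproduces the reported anger value of 30.15.

```python
import math


def chi_square_vs_chance(n_correct: int, n_trials: int, p_chance: float = 0.5) -> float:
    """Pearson goodness-of-fit statistic (df = 1) for correct/incorrect counts."""
    expected_correct = n_trials * p_chance
    expected_incorrect = n_trials * (1 - p_chance)
    n_incorrect = n_trials - n_correct
    return ((n_correct - expected_correct) ** 2 / expected_correct
            + (n_incorrect - expected_incorrect) ** 2 / expected_incorrect)


def p_value_df1(chi2: float) -> float:
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    # For df = 1, P(X > x) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


# Hypothetical counts: English listeners, Himba anger sounds (80 of 104 correct).
chi2 = chi_square_vs_chance(80, 104)
# Bonferroni correction over the nine emotion categories, capped at 1.
p_corrected = min(1.0, p_value_df1(chi2) * 9)
print(f"chi2(1) = {chi2:.2f}, Bonferroni-corrected p = {p_corrected:.2g}")
```

Computing p from `math.erfc` avoids a statistics dependency; with SciPy available, the chi-square survival function for 1 degree of freedom gives the same value.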

Fig. 2.

Recognition performance (out of four) for each emotion category, within and across cultural groups. Dashed lines indicate chance levels (50%). Abbreviations: ach, achievement; amu, amusement; ang, anger; dis, disgust; fea, fear; ple, sensual pleasure; rel, relief; sad, sadness; and sur, surprise. (A) Recognition of each category of emotional vocalizations for stimuli from a different cultural group for Himba (light bars) and English (dark bars) listeners. (B) Recognition of each category of emotional vocalizations for stimuli from their own group for Himba (light bars) and English (dark bars) listeners.

The Himba listeners matched the English sounds to the stories at a level that was significantly higher than would be expected by chance (χ²(1) = 271.82, P < 0.0001). For individual emotions, they performed at better-than-chance levels for a subset of the emotions [χ²(1) = 8.83 (anger), 27.03 (disgust), 18.24 (fear), 9.96 (sadness), 25.14 (surprise), and 49.79 (amusement), all P < 0.05, Bonferroni corrected]. These data show that the communication of these emotions via nonverbal vocalizations is not dependent on a shared culture between producer and listener: these signals are recognized across cultural borders.
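With nine emotion categories, a Bonferroni correction lowers the per-test threshold from 0.05 to 0.05/9. A minimal Python sketch, assuming χ² tests with 1 degree of freedom as above, finds the corresponding critical χ² value by bisection and checks the six per-category values reported for Himba listeners hearing English sounds against it. The function names are illustrative, not taken from the authors’ analysis code.

```python
import math


def p_value_df1(chi2: float) -> float:
    """Upper-tail p-value for chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2))."""
    return math.erfc(math.sqrt(chi2 / 2))


def critical_chi2_df1(alpha: float, lo: float = 0.0, hi: float = 100.0) -> float:
    """Chi-square value (1 df) at which the p-value drops to alpha, by bisection."""
    for _ in range(200):
        mid = (lo + hi) / 2
        if p_value_df1(mid) > alpha:
            lo = mid  # p still above alpha: critical value lies to the right
        else:
            hi = mid  # p at or below alpha: critical value lies to the left
    return hi


n_tests = 9  # nine emotion categories
threshold = critical_chi2_df1(0.05 / n_tests)

# Per-category values reported for Himba listeners hearing English sounds.
reported = {"anger": 8.83, "disgust": 27.03, "fear": 18.24,
            "sadness": 9.96, "surprise": 25.14, "amusement": 49.79}

print(f"Bonferroni critical value: {threshold:.2f}")
for emotion, value in reported.items():
    print(f"{emotion:10s} chi2 = {value:6.2f}  significant: {value > threshold}")
```

Bisection works here because the p-value falls monotonically as χ² grows, so the search interval always brackets the single crossing point.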

We also examined the recognition of vocalizations from the listeners’ own cultural groups (Fig. 2B). As expected, listeners from the British sample matched the British sounds to the story at a level that significantly exceeded chance (χ²(1) = 721.82, P < 0.0001), and they performed better than would be expected by chance for each of the emotion categories [χ²(1) = 81.00 (anger), 96.04 (disgust), 96.04 (fear), 81.00 (sadness), 70.56 (surprise), 96.04 (achievement), 88.36 (amusement), 51.84 (sensual pleasure), and 67.24 (relief), all P < 0.001, Bonferroni corrected]. This replicates previous findings that have demonstrated good recognition of a range of emotions from English nonverbal vocal cues both within (13) and between (3) European cultures.
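The per-category and overall statistics for a condition are linked. Assuming each test is a Pearson χ² on pooled correct/incorrect counts against 50% chance (an assumption; the article does not say how trials were pooled), a statistic of χ² on n trials implies c = n/2 + √(χ²·n)/2 correct trials. A minimal Python sketch applies this to the English-listener, English-stimulus values, with n = 100 per category (25 listeners × 4 trials). The recovered counts sum to a mean of 3.79 out of four, the value reported for this condition in the In-Group Advantage analysis. The helper name is illustrative.

```python
import math


def implied_correct(chi2: float, n_trials: int) -> int:
    """Correct-trial count implied by a 50%-chance chi-square (1 df), assuming above-chance accuracy."""
    # chi2 = 4 * (c - n/2)**2 / n  =>  c = n/2 + sqrt(chi2 * n) / 2
    return round(n_trials / 2 + math.sqrt(chi2 * n_trials) / 2)


# Reported per-category values, English listeners hearing English sounds.
reported = {"anger": 81.00, "disgust": 96.04, "fear": 96.04, "sadness": 81.00,
            "surprise": 70.56, "achievement": 96.04, "amusement": 88.36,
            "sensual pleasure": 51.84, "relief": 67.24}
n_per_category = 25 * 4  # 25 listeners x 4 trials per emotion

counts = {e: implied_correct(v, n_per_category) for e, v in reported.items()}
total_correct = sum(counts.values())
total_trials = n_per_category * len(reported)
mean_out_of_four = 4 * total_correct / total_trials
# Pooled statistic over all nine categories, using the same 50%-chance formula.
pooled_chi2 = 4 * (total_correct - total_trials / 2) ** 2 / total_trials

print(counts)
print(f"{total_correct}/{total_trials} correct, mean {mean_out_of_four:.2f} of 4, "
      f"pooled chi2(1) = {pooled_chi2:.2f}")
```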

The Himba listeners also matched the sounds from their own group to the stories at a level that was much higher than would be expected by chance (χ²(1) = 111.42, P < 0.0001), and they performed better than would be expected by chance for almost all of the emotion categories [χ²(1) = 39.86 (anger), 42.24 (disgust), 44.69 (sadness), 9.97 (surprise), 15.21 (fear), 33.14 (achievement), 19.86 (amusement), and 12.45 (sensual pleasure), all P < 0.05, Bonferroni corrected]. Only sounds of relief were not reliably paired with the relevant story. Overall, the consistently high Himba recognition of Himba sounds confirms that these stimuli constitute recognizable vocal signals of emotions to Himba listeners, and further demonstrates that this range of emotions can be reliably communicated within the Himba culture via nonverbal vocal cues.

Discussion

The emotions that were reliably identified by both groups of listeners, regardless of the origin of the stimuli, comprise the set of emotions commonly referred to as the “basic emotions.” These emotions are thought to constitute evolved functions that are shared by all human beings, both in terms of phenomenology and communicative signals (14). Notably, these emotions have been shown to have universally recognizable facial expressions (11, 12). In contrast, vocalizations of several positive emotions (achievement/triumph, relief, and sensual pleasure) were not recognized bidirectionally by both groups of listeners. This is despite the fact that, with the exception of relief, they were well recognized within each cultural group, and that nonverbal vocalizations of these emotions are recognized across several groups of Western listeners (3). This pattern suggests that there may be universally recognizable vocal signals for communicating the basic emotions, but that this universality does not extend to all affective states, including some that can be identified by listeners from closely related cultures.

Our results show that emotional vocal cues communicate affective states across cultural boundaries. The basic emotions—anger, fear, disgust, happiness (amusement), sadness, and surprise—were reliably identified by both English and Himba listeners from vocalizations produced by individuals from both groups. This observation indicates that some affective states are communicated with vocal signals that are broadly consistent across human societies, and do not require that the producer and listener share language or culture. The findings are in line with research in the domain of visual affective signals. Facial expressions of the basic emotions are recognized across a wide range of cultures (12) and correspond to consistent constellations of facial muscle movements (15). Furthermore, these facial configurations produce alterations in sensory processing, suggesting that they may have evolved to aid in preparing for action in particularly important types of situations (16). Despite the considerable variation in human facial musculature, the facial muscles that are essential to produce the expressions associated with basic emotions are constant across individuals, suggesting that specific facial muscle structures have likely been selected to allow individuals to produce universally recognizable emotional expressions (17). The consistency of emotional signals across cultures supports the notion of universal affect programs: that is, evolved systems that regulate the communication of emotions, which take the form of universal signals (18). These signals are thought to be rooted in ancestral primate communicative displays. In particular, facial expressions produced by humans and chimpanzees have substantial similarities (19). Although a number of primate species produce affective vocalizations (20), the extent to which these parallel human vocal signals is as yet unknown. The data from the current study suggest that vocal signals of emotion are, like facial expressions, biologically driven communicative displays that may be shared with nonhuman primates.

In-Group Advantage.

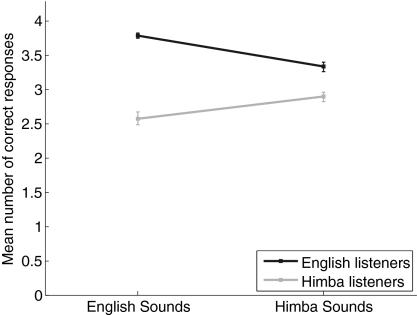

In humans, the basic emotional systems are modulated by cultural norms that dictate which affective signals should be emphasized, masked, or hidden (21). In addition, culture introduces subtle adjustments of the universal programs, producing differences in the appearance of emotional expression across cultures (12). These cultural variations, acquired through social learning, underlie the finding that emotional signals tend to be recognized most accurately when the producer and perceiver are from the same culture (12). This is thought to be because expression and perception are filtered through culture-specific sets of rules, determining what signals are socially acceptable in a particular group. When these rules are shared, interpretation is facilitated. In contrast, when cultural filters differ between producer and perceiver, understanding the other’s state is more difficult. To examine whether listeners recognized vocalizations produced by members of their own culture more accurately, we compared recognition performance across the two listener groups and the two sets of stimuli. A significant interaction between the culture of the listener and that of the stimulus producer was found (F(1,114) = 27.68, P < 0.001; means for English recognition of English sounds: 3.79; English recognition of Himba sounds: 3.34; Himba recognition of English sounds: 2.58; Himba recognition of Himba sounds: 2.90), confirming that each group performed better with stimuli produced by members of their own culture (Fig. 3). The analysis yielded no main effect of stimulus type (F < 1; mean recognition of English stimuli: 3.19; mean recognition of Himba stimuli: 3.12), indicating that, overall, the two sets of stimuli were equally recognizable. There was, however, a main effect of listener group, with the English listeners performing better on the task overall (F(1,114) = 127.31, P < 0.001; English mean: 3.56; Himba mean: 2.74). This effect likely reflects the English participants’ more extensive exposure to psychological testing and formal education.
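The reported cell means can be combined by hand to show where the marginal means and the in-group advantage come from. A minimal Python sketch, assuming the marginal means are unweighted averages of the two relevant cells (this agrees with the reported values to within rounding of the cell means). It is descriptive arithmetic only: the F statistics need participant-level scores that the article does not provide, so they are not reproduced here. Variable and function names are illustrative.

```python
# Reported cell means (out of four): (listener group, stimulus group) -> mean.
cell_means = {
    ("English", "English"): 3.79,
    ("English", "Himba"): 3.34,
    ("Himba", "English"): 2.58,
    ("Himba", "Himba"): 2.90,
}
groups = ("English", "Himba")


def marginal(factor_index: int, level: str) -> float:
    """Unweighted mean of the cells at one level of a factor (0 = listener, 1 = stimulus)."""
    values = [m for cell, m in cell_means.items() if cell[factor_index] == level]
    return sum(values) / len(values)


listener_means = {g: marginal(0, g) for g in groups}
stimulus_means = {g: marginal(1, g) for g in groups}

# In-group advantage per listener group: own-culture minus other-culture stimuli.
advantage = {
    "English": cell_means[("English", "English")] - cell_means[("English", "Himba")],
    "Himba": cell_means[("Himba", "Himba")] - cell_means[("Himba", "English")],
}
# Interaction contrast: positive when both groups do better with own-culture sounds.
interaction = advantage["English"] + advantage["Himba"]

print("listener means:", listener_means)
print("stimulus means:", stimulus_means)
print("in-group advantage:", advantage, "interaction contrast:", round(interaction, 2))
```

A crossover pattern like this one (each group better on its own stimuli) produces an interaction without any main effect of stimulus type, which matches the pattern of results described in the paragraph.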

Fig. 3.

Group averages (out of four) for recognition across all emotion categories for each set of stimuli, for Himba (black line) and English (gray line) listeners. Error bars denote standard errors.

The current study thus extends models of cross-cultural communication of emotional signals to nonverbal vocalizations of emotion, suggesting that these signals are modulated by culture-specific variation in a similar way to emotional facial expressions and affective speech prosody (12).

Positive Emotions.

Some affective states are communicated using signals that are not shared across cultures, but specific to a particular group or region. In our study, vocalizations intended to communicate a number of positive emotions were not reliably identified by the Himba listeners. Why might this be? One possibility is that this is due to the function of positive emotions. It is well established that the communication of positive affect facilitates social cohesion with group members (22). Such affiliative behaviors may be restricted to in-group members with whom social connections are built and maintained. However, it may not be desirable to share such signals with individuals who are not members of one’s own cultural group. An exception may be self-enhancing displays of positive affect. Recent research has shown that postural expressions of pride are universally recognized (23). However, pride signals high social status in the sender rather than group affiliation, differentiating it from many other positive emotions. Although pride and achievement may both be considered “agency-approach” emotions (involved in reward-related actions; see ref. 24), they differ in their signals: achievement is well recognized within a culture from vocal cues (3), whereas pride is universally well recognized from visual signals (23) but not from vocalizations (24).

We found that vocalizations of relief were not matched with the relief story by Himba listeners, regardless of whether the stimuli were English or Himba. This could imply that the Himba participants did not interpret the relief story as conveying relief. However, this explanation seems unlikely for two reasons. First, after hearing a story, each participant was asked to explain what emotion they thought the person in the story was feeling. Only once it was clear that the individual had understood the intended emotion of the story did the experimenters proceed with presenting the vocalization stimuli, thus ensuring that each participant had correctly understood the target emotion of each story. Second, Himba vocalizations expressing relief were reliably recognized by English listeners, demonstrating that the Himba individuals producing the vocalizations (prompted by the same story that was presented to the listeners) were able to produce appropriate vocal signals for the emotion of the relief story. A more parsimonious explanation for this finding may be that the sigh used by both groups to signal relief is not an unambiguous signal to Himba listeners. Although Himba individuals use sighs to signal relief, as shown by their ability to produce relief vocalizations that English listeners recognized, Himba listeners may also interpret sighs as indicating a range of other states. Whether there are affective states that can be inferred from sighs across cultures remains a question for future research.

In the present study, one type of positive vocalization was reliably recognized by both groups of participants. Listeners agreed, regardless of culture, that sounds of laughter communicated amusement, exemplified as the feeling of being tickled. Tickling triggers laugh-like vocalizations in nonhuman primates (25) as well as other mammals (26), suggesting that it is a social behavior with deep evolutionary roots (27). Laughter is thought to have originated as a part of playful communication between young infants and mothers, and also occurs most commonly in both children and nonhuman primates in response to physical play (28). Our results support the idea that the sound of laughter is universally associated with being tickled, as participants from both groups of listeners selected the amused sounds to go with the tickling scenario. Indeed, given the well-established coherence between expressive and experiential systems of emotions (15), our data suggest that laughter universally reflects the feeling of enjoyment of physical play.

In our study, laughter was cross-culturally recognized as signaling joy. In the visual domain, smiling is universally recognized as a signal of happiness (11, 12). This raises the possibility that laughter is the auditory equivalent of smiling, as both communicate a state of enjoyment. However, laughter and smiles may in fact be quite different types of signals, with smiles functioning as a signal of generally positive social intent, whereas laughter may be a more specific emotional signal, originating in play (29). This issue highlights the importance of considering positive emotions in cross-cultural research on emotions (30). The inclusion of a range of positive states should also be extended to studies of conceptual representations in semantic systems of emotions, which have so far been explored primarily in the context of negative emotions (31).

Conclusion

In this study we show that a number of emotions are cross-culturally recognized from vocal signals, which are perceived as communicating specific affective states. The emotions found to be recognized from vocal signals correspond to those universally inferred from facial expressions of emotions (11). This finding supports theories proposing that these emotions are psychological universals and constitute a set of basic, evolved functions that are shared by all humans. In addition, we demonstrate that several positive emotions are recognized within—but not across—cultural groups, which may suggest that affiliative social signals are shared primarily with in-group members.

Materials and Methods

Stimuli.

The English stimuli were taken from a previously validated set of nonverbal vocalizations of negative and positive emotions. The stimulus set comprised 10 tokens of each of nine emotions: achievement, amusement, anger, disgust, fear, sensual pleasure, relief, sadness, and surprise, based on demonstrations that all of these categories can be reliably recognized from nonverbal vocalizations by English listeners (13). The sounds were produced in an anechoic chamber by two male and two female native English speakers, and the stimulus set was normalized for peak amplitude. The actors were presented with a brief scenario for each emotion and asked to produce the kind of vocalization they would make if they felt like the person in the story. Briefly, achievement sounds were cheers, amusement sounds were laughs, anger sounds were growls, disgust sounds were retches, fear sounds were screams, sensual pleasure sounds were moans, relief sounds were sighs, sad sounds were sobs, and surprise sounds were sharp inhalations. Further details on the acoustic properties of the English sounds can be found in ref. 13.
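Peak-amplitude normalization scales each recording so that its largest absolute sample reaches a common level, which removes gross level differences between tokens without changing their waveform shape. A minimal Python sketch on plain lists of float samples; the target peak of 0.9 of full scale, the function names, and the toy waveforms are all assumptions for illustration, since the article reports neither the target level nor the software used.

```python
from typing import List, Sequence


def normalize_peak(samples: Sequence[float], target_peak: float = 0.9) -> List[float]:
    """Scale a waveform so its largest absolute sample equals target_peak."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silent or empty input: nothing to scale
    gain = target_peak / peak
    return [s * gain for s in samples]


def normalize_set(stimuli: Sequence[Sequence[float]],
                  target_peak: float = 0.9) -> List[List[float]]:
    """Apply the same peak target to every token so all tokens share one peak level."""
    return [normalize_peak(s, target_peak) for s in stimuli]


# Hypothetical toy "recordings" with very different peak levels.
quiet = [0.01, -0.05, 0.02]
loud = [0.4, -0.8, 0.3]
for token in normalize_set([quiet, loud]):
    print(max(abs(s) for s in token))
```

Peak normalization equalizes only the maximum sample; tokens can still differ in perceived loudness (for example, a brief scream versus a long sigh), which RMS-based normalization would handle differently.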

The Himba stimuli were recorded from five male and six female Himba adults, using a procedure equivalent to that used for the English stimuli, and were also matched for peak amplitude. Because it was not possible to run multiple-choice pilot tests of the stimuli with Himba participants, the researchers (D.A.S. and F.E.) screened the recordings and excluded poor exemplars. Stimuli containing speech or extensive background noise were excluded, as were multiple similar stimuli by the same speaker. Examples of the sounds can be found as Audio S1 and Audio S2.

Participants.

The total sample consisted of two English and two Himba groups. The English sample that heard the English stimuli consisted of 25 native English speakers (10 male, 15 female; mean age 28.7 years), and those who heard Himba sounds consisted of 26 native English speakers (11 male, 15 female; mean age 29.0 years). Twenty-nine participants (13 male, 16 female) from Himba settlements in northern Namibia comprised the Himba sample who heard the English sounds, and another group of 29 participants (13 male, 16 female) heard the Himba sounds. The Himba do not have a system for measuring age, but no children or very old adults were included in the study. Informed consent was given by all participants.

Design and Procedure.

We used an adapted version of a task employed in previous cross-cultural research on the recognition of emotional facial expressions (11). In the original task, a participant heard a story about a person feeling in a particular way and was then asked to choose which of three emotional facial expressions fit with the story. This task is suitable for use with a preliterate population, as it requires no ability to read, unlike the forced-choice format using multiple labels that is common in emotion-perception studies. Furthermore, the current task is particularly well suited to cross-cultural research, as it does not rely on the precise translation of emotion terms because it includes additional information in the stories. The original task included three response alternatives on each trial, with all three stimuli presented simultaneously. However, as sounds necessarily extend over time, the response alternatives in the current task had to be presented sequentially. Thus, participants were required to remember the other response alternatives as they were listening to the current response option. To avoid overloading the participants’ working memory, the number of response alternatives in the current study was reduced to two.

The English participants were tested in the presence of an experimenter; the Himba participants were tested in the presence of two experimenters and one translator. For each emotion, the participant listened to a short prerecorded emotion story describing a scenario that would elicit that emotion (Audio S3 and Audio S4). After each story, the participant was asked how the person was feeling to ensure that they had understood the story. If necessary, participants could hear the story again. No participants failed to identify the intended emotion from any of the stories, although some individuals needed to hear a story more than once to understand the emotion. The emotion stories used with the Himba participants were developed together with a local person with extensive knowledge of the culture of the Himba people, who also acted as a translator during testing. The emotion stories used with the English participants were matched as closely as possible to the Himba stories, but adapted to be easily understood by English participants. The stories were played over headphones from recordings, spoken in a neutral tone of voice by a male native speaker of each language (the Himba local language Otji-Herero and English). Once they had understood the story, the participant was played two sounds over headphones. Stimulus presentation was controlled by the experimenter pressing two computer mice in turn, each playing one of the sounds (see Fig. 1). A subgroup of the Himba participants listening to Himba sounds performed a slightly altered version of the task, where the stimuli were played without the use of computer mice, but the procedure was identical to that of the other participants in all other respects. The participant was asked which one was the kind of sound that the person in the story would make. They were allowed to hear the stimuli as many times as they needed to make a decision. 
Participants indicated their choice on each trial by pointing to the computer mouse that had played the sound appropriate for the story (see Fig. 1), and the experimenter entered their response into the computer. Throughout testing, the experimenters and the translator were naive to which response was correct and which stimulus the participant was hearing. Speaker gender was constant within any trial, with participants hearing two male and two female trials for each emotion. Thus, all participants completed four trials for each of the nine emotions, resulting in a total of 36 trials. The target stimulus was of the same emotion as the story, and the distractor varied in both valence and difficulty, such that for each emotion participants heard four types of distractor: the easiest and the hardest to distinguish from the target among emotions of the same valence, and the easiest and the hardest among emotions of the opposite valence, based on confusion data from a previous study (13). Which mouse was correct on any trial, as well as the order of stories, stimulus gender, distractor type, and whether the target was first or second, was pseudorandomized for each participant. Stimulus presentation was controlled using the Psychophysics Toolbox (32) for MATLAB, running on a laptop computer.
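The trial structure described above (nine emotions, four trials each, two male and two female speakers, one trial per distractor type, randomized target position and response mouse) can be expressed as a short list builder. A minimal Python sketch, not the authors’ PsychToolbox code: the distractor labels, the `Trial` and `build_session` names, and the random pairing of speaker gender with distractor type are hypothetical. A plain shuffle stands in for the pseudorandomization, whose exact constraints the article does not report.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

EMOTIONS = ["achievement", "amusement", "anger", "disgust", "fear",
            "sensual pleasure", "relief", "sadness", "surprise"]

# Hypothetical labels for the four distractor types described in the text.
DISTRACTOR_TYPES = ["same valence, easiest", "same valence, hardest",
                    "opposite valence, easiest", "opposite valence, hardest"]


@dataclass
class Trial:
    emotion: str          # emotion of the story and of the target sound
    speaker_gender: str   # same for target and distractor within a trial
    distractor_type: str  # which of the four distractor types is used
    target_first: bool    # True if the target is played before the distractor
    correct_mouse: str    # "left" or "right": the mouse that plays the target


def build_session(seed: Optional[int] = None) -> List[Trial]:
    """Build one participant's 36-trial list (9 emotions x 4 trials)."""
    rng = random.Random(seed)
    trials: List[Trial] = []
    for emotion in EMOTIONS:
        # Two male and two female trials per emotion; pairing gender with
        # distractor type at random is an assumption of this sketch.
        genders = ["male", "male", "female", "female"]
        rng.shuffle(genders)
        for gender, distractor in zip(genders, DISTRACTOR_TYPES):
            trials.append(Trial(
                emotion=emotion,
                speaker_gender=gender,
                distractor_type=distractor,
                target_first=rng.random() < 0.5,
                correct_mouse=rng.choice(["left", "right"]),
            ))
    # Plain shuffle of trial (story) order; a real pseudorandomization would
    # usually add constraints, such as limits on consecutive repeats.
    rng.shuffle(trials)
    return trials


session = build_session(seed=1)
print(len(session))
print(session[0])
```

Seeding a private `random.Random` instance per participant keeps each trial list reproducible without touching the global random state.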

Supplementary Material

Acknowledgments

We thank David Matsumoto for discussions. This research was funded by grants from the Economic and Social Research Council, University College London Graduate School Research Project Fund, and the University of London Central Research Fund (to D.A.S.), a contribution toward travel costs from the University College London Department of Psychology, and a grant from the Wellcome Trust (to S.K.S.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/cgi/content/full/0908239106/DCSupplemental

References

- 1. Hauser MD, Chomsky N, Fitch WT. The faculty of language: What is it, who has it, and how did it evolve? Science. 2002;298:1569–1579. doi: 10.1126/science.298.5598.1569.

- 2. Coulson M. Attributing emotion to static body postures: Recognition accuracy, confusions, and viewpoint dependence. J Nonverbal Behav. 2004;28:117–139.

- 3. Sauter DA, Scott SK. More than one kind of happiness: Can we recognize vocal expressions of different positive states? Motiv Emot. 2007;31:192–199.

- 4. Ekman P, Friesen WV, Ancoli S. Facial signs of emotional experience. J Pers Soc Psychol. 1980;39:1125–1134.

- 5. Mehler J, Bertoncini J, Barriere M, Jassik-Gerschenfeld D. Infant recognition of mother’s voice. Perception. 1978;7:491–497. doi: 10.1068/p070491.

- 6. Scherer KR, Banse R, Wallbott HG. Emotion inferences from vocal expression correlate across languages and cultures. J Cross Cult Psychol. 2001;32:76–92.

- 7. Pell MD, Monetta L, Paulmann S, Kotz SA. Recognizing emotions in a foreign language. J Nonverbal Behav. 2009;33:107–120.

- 8. Bryant G, Barrett H. Vocal emotion recognition across disparate cultures. J Cogn Cult. 2008;8:135–148.

- 9. Scott SK, Sauter D, McGettigan C. Brain mechanisms for processing perceived emotional vocalizations in humans. In: Brudzynski S, editor. Handbook of Mammalian Vocalizations. Oxford: Academic Press; 2009. pp. 187–198.

- 10. Norenzayan A, Heine SJ. Psychological universals: What are they and how can we know? Psychol Bull. 2005;131:763–784. doi: 10.1037/0033-2909.131.5.763.

- 11. Ekman P, Sorenson ER, Friesen WV. Pan-cultural elements in facial displays of emotion. Science. 1969;164:86–88. doi: 10.1126/science.164.3875.86.

- 12. Elfenbein HA, Ambady N. On the universality and cultural specificity of emotion recognition: A meta-analysis. Psychol Bull. 2002;128:203–235. doi: 10.1037/0033-2909.128.2.203.

- 13. Sauter D, Calder AJ, Eisner F, Scott SK. Perceptual cues in non-verbal vocal expressions of emotion. Q J Exp Psychol. In press. doi: 10.1080/17470211003721642.

- 14. Ekman P. An argument for basic emotions. Cogn Emotion. 1992;6:169–200.

- 15. Rosenberg EL, Ekman P. Coherence between expressive and experiential systems in emotion. In: Ekman P, Rosenberg EL, editors. What the Face Reveals: Basic and Applied Studies of Spontaneous Expression. New York: Oxford University Press; 2005. pp. 63–85.

- 16. Susskind JM, et al. Expressing fear enhances sensory acquisition. Nat Neurosci. 2008;11:843–850. doi: 10.1038/nn.2138.

- 17. Waller BM, Cray JJ, Burrows AM. Selection for universal facial emotion. Emotion. 2008;8:435–439. doi: 10.1037/1528-3542.8.3.435.

- 18. Ekman P. Facial expressions. In: Dalgleish T, Power M, editors. Handbook of Cognition and Emotion. New York: Wiley; 1999. pp. 301–320.

- 19. Parr LA, Waller BM, Heintz M. Facial expression categorization by chimpanzees using standardized stimuli. Emotion. 2008;8:216–231. doi: 10.1037/1528-3542.8.2.216.

- 20. Seyfarth RM. Meaning and emotion in animal vocalizations. Ann N Y Acad Sci. 2003;1000:32–55. doi: 10.1196/annals.1280.004.

- 21. Matsumoto D, Yoo S, Hirayama S, Petrova G. Development and initial validation of a measure of display rules: The Display Rule Assessment Inventory (DRAI). Emotion. 2005;5:23–40. doi: 10.1037/1528-3542.5.1.23.

- 22. Shiota M, Keltner D, Campos B, Hertenstein M. Positive emotion and the regulation of interpersonal relationships. In: Philippot P, Feldman R, editors. Emotion Regulation. Mahwah, NJ: Erlbaum; 2004. pp. 127–156.

- 23. Tracy J, Robins R. The nonverbal expression of pride: Evidence for cross-cultural recognition. J Pers Soc Psychol. 2008;94:516–530. doi: 10.1037/0022-3514.94.3.516.

- 24. Simon-Thomas E, Keltner D, Sauter D, Sinicropi-Yao L, Abramson A. The voice conveys specific emotions: Evidence from vocal burst displays. Emotion. In press. doi: 10.1037/a0017810.

- 25. Ross MD, Owren MJ, Zimmermann E. Reconstructing the evolution of laughter in great apes and humans. Curr Biol. 2009;19:1106–1111. doi: 10.1016/j.cub.2009.05.028.

- 26. Knutson B, Burgdorf J, Panksepp J. Ultrasonic vocalizations as indices of affective states in rats. Psychol Bull. 2002;128:961–977. doi: 10.1037/0033-2909.128.6.961.

- 27. Ruch W, Ekman P. The expressive pattern of laughter. In: Kaszniak A, editor. Emotion, Qualia and Consciousness. Tokyo: World Scientific Publisher; 2001.

- 28. Panksepp J. Rough-and-tumble play: The brain sources of joy. In: Panksepp J, editor. Affective Neuroscience: The Foundations of Human and Animal Emotions. New York: Oxford University Press; 2004. pp. 280–299.

- 29. van Hooff JARAM. A comparative approach to the phylogeny of laughter and smiling. In: Hinde RA, editor. Nonverbal Communication. Cambridge, England: Cambridge University Press; 1972.

- 30. Sauter D. More than happy: The need for disentangling positive emotions. Curr Dir Psychol Sci. In press.

- 31. Romney AK, Moore CC, Rusch CD. Cultural universals: Measuring the semantic structure of emotion terms in English and Japanese. Proc Natl Acad Sci USA. 1997;94:5489–5494. doi: 10.1073/pnas.94.10.5489.

- 32. Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10:433–436.
