Abstract

Thinking allows an animal to take an effective action in a novel situation based on a mental exploration of possibilities and previous knowledge. We describe a model animal, with a neural system based loosely on the rodent hippocampus, which performs mental exploration to find a useful route in a spatial world it has previously learned. It then mentally recapitulates the chosen route, and this intent is converted to motor acts that move the animal physically along the route. The modeling is based on spiking neurons with spike-frequency adaptation. Adaptation causes the continuing evolution in the pattern of neural activity that is essential to mental exploration. A successful mental exploration is remembered through spike-timing-dependent synaptic plasticity. The system is also an episodic memory for an animal chiefly concerned with locations.

Keywords: hippocampus, memory, planning, thought

What is mental exploration? I am on the Princeton campus and a visitor asks for walking directions to Firestone Library. I consider where I am and where the library is, mentally explore possible routes, and select one that will be easy to follow. I do not have prelearned answers to all routing problems. Instead, I have a knowledge base of relationships between locations, and I use it to mentally solve a novel routing task. At a more abstract level, all imagination of multiple possible futures, all planning of a chain of actions to achieve a novel goal, and much of what is meant by thinking involve mental exploration.

Mental exploration takes place on timescales ranging from a fraction of a second to minutes. It involves a protracted evolution of neural activity, often while an animal is stationary, followed by an apt behavioral action that directly relates to the activity during the exploration. When circumstances permit its use, mental exploration can be faster, more energy-efficient, and safer than a physical exploration to solve the same problem. Mental exploration differs from “mental time travel” (1). Mental time travel is a mental recapitulation of a prior salient physical experience, whereas mental exploration is a search for an appropriate neural activity sequence that may never before have been experienced.

In an attempt to understand such a capability, we have designed and simulated a network of neurons for mental exploration involving spatial tasks. The network controls the motor behavior of a model animal (rudimentary rodent; RR). An experimental paradigm is described for testing mental exploration, a paradigm readily extensible to real animals in real (or virtual) environments. RR moves along spatial paths as desired trajectories, not as memorized motor patterns, giving a hint as to how an ability to think may have piggybacked on an evolving ability to perform other tasks (2).

Three key ideas are embedded in the modeling. First, when a network of simple neurons has activity dynamics characterized by an attractor surface, neural adaptation can produce a continuing exploration on that surface. Second, when an activity trajectory has been experienced that achieves a desired “mental” goal, synaptic learning produces an ability to mentally repeat that intended trajectory. Third, this repetition controls the motor system in such a fashion that the physical path taken corresponds to the path described by the trajectory of mental activity. The modeling is loosely based on some of the most salient facts about the rodent hippocampus, an area involved with place, memory, and cognition (3–5). The speculation that thinking involves sequential and reverberant activation of assemblies of cells representing concepts and the environment has a long history (2, 6). This paper presents a concise model within which such ideas can be examined and related to experiments.

Model

Behavioral Paradigm.

Construct mazes α, β, γ, …, into which an animal can be inserted at any location. Each maze has walls and a floor with a variety of colors, patterns, textures, and so forth. Only local sensory information is available. Individual features are reused at several locations, so that only the ensemble of features is a unique descriptor of place. Over the course of several sessions in each environment, an animal becomes familiar with each one through passive learning.

Next, place a thirsty animal at position w in α, where the experimenter has now placed a dish of water. Let the animal drink briefly, then remove it from w and insert it at x, which may or may not be in α. The animal has learned that water is available at w, but has been placed elsewhere. It has no direct way of knowing whether x is connected to w, and water is available, or whether x is in a different maze, and water is not available. An animal that relies on physical exploration might search without waiting, making choices (initially random) at branches, until it either finds water or tires of searching. Or it could do nothing, because the likelihood of reward (water) may not be worth the cost of extensive physical exploration. An animal that can carry out mental exploration should remain at x until it finds, through mental exploration, whether x is connected to w by some pathway and, if so, how to follow that path. If x is connected to w, it should then move to w without random exploration. If x is not connected to w, it should not search for w. Behavior corresponding to this pattern would be strong circumstantial evidence that the animal carries out mental exploration.

This animal behavioral paradigm is closely related to the human “library” paradigm. The goal is stated verbally to a human but is signaled by the introduction of water in the animal case. The physical response is verbal in the library example but is appropriate motion in the case of a thirsty animal. The counterpart of the many known maze environments is the possibility of being asked, while at Princeton, how to walk to a library located at Caltech.

Neural Circuit Organization.

The overall RR system is shown in Fig. 1. Areas A and E have excitatory place cells, analogous to hippocampal place cells (7, 8), that respond selectively to spatial location. Their Gaussian receptive field size was 3% of the area of an environment. Lacking an understanding of how place cells achieve selectivity, we simply presume that a place cell has an input current, derived from the sensory system, that provides the location selectivity. Spike-timing details characteristic of the real hippocampus are not included. Each model place cell has a receptive field in each environment α, β, …, and these receptive fields are uncorrelated. The place cells have no orientation selectivity.

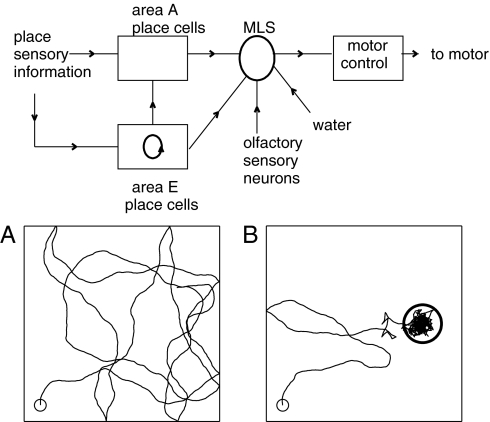

Fig. 1.

Circuit diagram and synaptic connection pathways (arrows) of the model. (A) Twenty-second segment of the path of the model system in the absence of control signals. (B) A trajectory as in A but with motor control signals from olfactory neurons relayed via area MLS. An odor source with a Gaussian profile is located at the center of the circle (radius σ).

In the absence of a control signal, the motor system moves RR ahead at constant speed in a direction that wavers, representing effects of motor and environmental noise. When RR runs into a wall, its trajectory bounces (reversal of the normal component of velocity). A typical path produced by the motor system without control is shown in Fig. 1A. Mathematical and simulation details of the motor and neural systems are given in SI Modeling and Mathematics.

Area E is responsible for finding, through mental exploration, an activity sequence representing an appropriate route. The rest of the neural circuitry is for producing movement in physical space. It illustrates how a mental trajectory that describes what an animal wants to experience (intent) can be compared with what the animal is actually experiencing (as indicated by sensory signals) to control a motor system. Such a comparison, made by area A, is essential in any real system, because the alternative (mental trajectory → motor command sequence) fails after a short time owing to noise and system imperfections.

The motor control area has a single input that can be selected from different sources (area A, area E, olfactory neurons) by area MLS (motor & learning and selection). There are synaptic connections between the excitatory cells in area E, and from area E to area A. These two sets of connections are learned from experiencing environments. MLS also contains a neuron S that can signal when a target has been found (success) by mental or physical exploration. It is driven by a true reward such as water, and by synapses from E with Hebbian learning capabilities. Although it is an essential auxiliary to a complete system, the output of S has only simple control properties (such as “learn now” or “mental exploration has found the target”) and is not modeled in detail. The switching that selects between different possible sources of input to the motor controller is also not modeled in detail.

Results

Learning Multiple Planar “Bump” Attractors.

Let a ring of N neurons have excitatory short-range and inhibitory long-range synaptic interconnections. For appropriate ranges and strengths, the stable activity states of the network are N equivalent localized bumps (9). If N is large and the bumps contain many neurons, the set of attractor points is essentially a continuous ring (or line) attractor. There is an energy function (10) for the dynamics of such systems. The minimum of this function in N-dimensional neural activity space is a valley of constant energy along a curving line, with the energy increasing rapidly in the other N − 1 directions. Planar attractors can be similarly constructed (11).
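The ring construction can be illustrated with a small rate-model sketch. The connection pattern (+1 within five cells on the ring, −0.9 beyond), the saturating threshold-linear rate function, and all numerical values are illustrative assumptions rather than the parameters used for RR; the point is only that a self-sustaining bump persists, with no input, at whatever location it was started.

```python
import numpy as np

def ring_weights(n=100, half_width=5, a=1.0, b=0.9):
    """W[i, j] = +a if cells i and j are within half_width of each other on the ring, else -b."""
    idx = np.arange(n)
    d = np.abs(idx[:, None] - idx[None, :])
    d = np.minimum(d, n - d)  # distance measured around the ring
    return np.where(d <= half_width, a, -b)

def settle(r, w, steps=2000, dt=0.01, tau=0.1):
    """Euler-integrate tau * dr/dt = -r + f(W r), with f(u) = min(max(u, 0), 1)."""
    for _ in range(steps):
        r = r + (dt / tau) * (-r + np.clip(w @ r, 0.0, 1.0))
    return r

def bump_center(r):
    """Circular center of mass of the activity, in units of cell index."""
    n = len(r)
    z = np.sum(r * np.exp(2j * np.pi * np.arange(n) / n))
    return (np.angle(z) % (2 * np.pi)) * n / (2 * np.pi)

w = ring_weights()
for c in (20, 63, 97):
    r0 = np.zeros(100)
    r0[np.arange(c - 5, c + 6) % 100] = 0.8  # a transient cue leaves 11 cells partly active
    r = settle(r0, w)                        # no input: the bump must sustain itself
    print(c, int(np.sum(r > 0.5)), round(bump_center(r), 3))
```

With these values an 11-cell block of saturated cells is an exact fixed point: every cell inside it receives a net input of at least 1.5 and every cell outside receives negative input. The same bump therefore survives at each of the three cue locations, including one that wraps around the ring.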

Planar attractors provide a short-term memory for two-dimensional location. Consider the place cells of area E and a rectangular environment R. Present the activity of the place cells in an x-y display, with each cell displayed at the center of its receptive field. When the animal is in R, the strongly active place cells (driven by sensory inputs) will be in a small region centered at the location of the animal as in Fig. 2A. In a system with a planar attractor for R, if the sensory input is turned off when the animal is at r, the bump will remain fixed (except for noise-induced drift). This feature can be used for path integration (11).

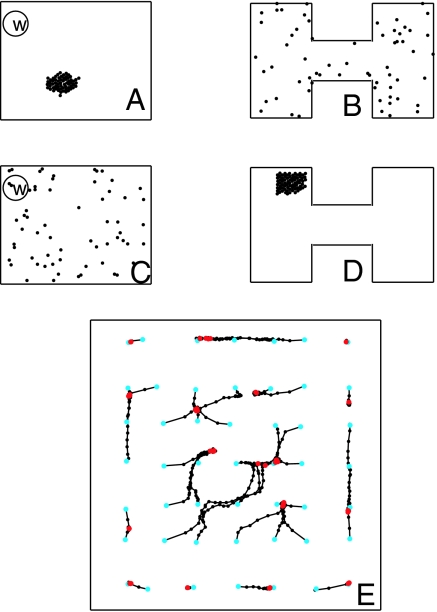

Fig. 2.

Activity bumps and bump motions. (A) Locations of strongly active place cells (dots) arrayed for environment R when the RR is at a particular location in R. (B) The same activity state as in A but with cells arranged according to their positions in environment H. (C) Same state as shown in D, but neurons are arranged according to their place fields in R. (D) A clump of strongly active neurons when the RR is in H and the cells are arrayed according to their positions in H. (E) Trajectories of the center of gravity of the bump location when started at 49 different spatial points (cyan dots) in R. Black dots are located at 0.2-s intervals. Motions are followed for 10 s. Red dots are final states.

There are multiple environments R, H, … in the experimental paradigm. For mental exploration, these multiple planar attractors must be embedded simultaneously in the synapses of area E. This can be done using learnable binary excitatory synapses (strength 0 or 1) and a fixed global inhibitory system, a procedure useful in associative memory (12). Allow RR to explore environment R for a few minutes, using its motor to move. During this exploration, place cells are active. Let there be a spike-timing-dependent potentiation (STDP) procedure for excitatory synapses that sums the effect of near-coincidences between presynaptic action potentials of neuron j and postsynaptic action potentials of neuron k, each pair weighted by $\exp(-|t_{\mathrm{pre}} - t_{\mathrm{post}}|/\tau_{\mathrm{learning}})$. Accumulate this value as $S_{kj}$ for each synapse. When the exploration is finished, for each k connect to neuron k the m presynaptic neurons with the largest values of $S_{kj}$. This procedure implements an STDP-based version of “neurons that fire together wire together.” The connections to a neuron then come from its m nearest neighbors, with minor deviations due to exploratory noise.
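The learning rule can be sketched as follows. The function names, the exclusion of self-connections, the value of τ_learning, and the toy spike trains (20 cells on a linear track, each firing a burst as the animal crosses its field) are assumptions made for illustration; only the pairwise exponential weighting and the choice of the m largest $S_{kj}$ per cell come from the text. The toy uses m = 4 rather than the m = 60 of the full simulation.

```python
import numpy as np

def coincidence_matrix(spike_times, tau_learning=0.02):
    """S[k, j]: sum over (pre spike of j, post spike of k) of exp(-|t_pre - t_post| / tau_learning)."""
    n = len(spike_times)
    s = np.zeros((n, n))
    for k in range(n):
        post = np.asarray(spike_times[k], dtype=float)
        for j in range(n):
            pre = np.asarray(spike_times[j], dtype=float)
            if j == k or post.size == 0 or pre.size == 0:
                continue  # assumption: no self-synapses
            s[k, j] = np.exp(-np.abs(post[:, None] - pre[None, :]) / tau_learning).sum()
    return s

def binary_connections(s, m):
    """Connect to each cell k the m presynaptic cells j with the largest S[k, j] (weights 0 or 1)."""
    c = np.zeros(s.shape, dtype=np.int8)
    for k in range(s.shape[0]):
        row = s[k].copy()
        row[k] = -np.inf               # never pick the cell itself
        c[k, np.argsort(row)[-m:]] = 1
    return c

# Toy run: 20 place cells on a track, each firing a 5-ms-spaced burst while the animal
# crosses its field; successive fields are crossed 0.1 s apart.
burst = np.arange(0.0, 0.3, 0.005)
trains = [i * 0.1 + burst for i in range(20)]
S = coincidence_matrix(trains)
C = binary_connections(S, m=4)
print(np.flatnonzero(C[10]))  # cells wired onto cell 10
```

Because successive fields are crossed 0.1 s apart, cell 10 ends up wired to the two cells on either side of it, the nearest-neighbor structure described above. The symmetric weighting also makes S equal to its own transpose.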

Consider a second environment shaped like the letter H, with the same excitatory place cells of area E randomly deployed over H. Make a display with the place cells arrayed according to their centers in environment H, shown in Fig. 2 B and D. Fig. 2 A and B are the same state displayed differently. Because there is no correlation of locations of place cells in the two environments, a pattern that is a local clump in one display is disorganized in the other. Sensory input to the system in environment H will produce a clump of activity as in Fig. 2D, which appears disorganized in Fig. 2C.

The learning procedure is repeated to learn additional planar attractors for additional environments. There are 2016 neurons in area E in the simulation, and thus ∼4 million possible synapses. The learning procedure chooses m = 60 incoming connections for each neuron, so only 3% of the synapses have been written in learning an environment. There is little crosstalk between desired attractors until ∼20% of the connections have been written.
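The synapse counts quoted above can be checked with a few lines of arithmetic. The extrapolation to several environments additionally assumes that each environment writes an independent, random-like subset of synapses. This is plausible because place fields are uncorrelated across environments, but it is an assumption. Under it, about seven environments can be written while keeping the written fraction below 20%, and the five environments used in the simulations occupy about 14% of the possible connections.

```python
N_E = 2016        # neurons in area E (from the text)
M_INCOMING = 60   # learned incoming connections per neuron (from the text)

possible_synapses = N_E * N_E               # 4,064,256, i.e. ~4 million
written_per_environment = M_INCOMING / N_E  # ~0.0298, i.e. ~3%

def fraction_written(n_environments):
    """Fraction of possible synapses set to 1, ASSUMING each environment writes an
    independent random-like subset of size M_INCOMING per neuron."""
    return 1.0 - (1.0 - written_per_environment) ** n_environments

def environments_before(limit=0.20):
    """Largest number of environments that keeps the written fraction below `limit`."""
    n = 0
    while fraction_written(n + 1) < limit:
        n += 1
    return n

print(possible_synapses, round(100 * written_per_environment, 2))
print(environments_before(0.20), round(fraction_written(5), 3))
```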

The flatness of the slow dynamical manifold R (nominal planar attractor) is examined in Fig. 2E. The synaptic connection matrix contains five different environments: a rectangle R, an H, and three others. The neural activity was initiated as a bump in the R environment, and sensory input then turned off. The figure shows 49 time trajectories of the location of the bump. The position slowly drifts from a starting point to a nearby final point over a time of 0–2 s. There are at least 16 point attractors, indicating that the nominally planar energy surface is gently wrinkled. When initiated in any of the five environments, the activity remained a bump in the initial representation.

During the exploratory learning, connections are learned in exactly the same fashion from place cells in area E to place cells in area A. As a result, a bump of active place cells in area E, in the representation of environment R (or H, …), can drive a corresponding bump of place cell activity in area A.

Adaptation Produces Useful Attractor Exploration.

A thirsty animal familiar with the environments of Fig. 2 experiences water at location w in R, following the experimental paradigm. Cell S in MLS is active when the RR detects water. Hebbian learning can connect the active place cells to S. S will then be active when the RR is in the vicinity of w in environment R but be inactive at all locations in other environments because, when in the others, there is never a bump of active neurons in the R format. Physically exploring an environment and noting whether S turns on somewhere would answer the question “was w located in the environment in which I am now situated?” However, this exploration can be carried out mentally if the location of the bump activity can be made to move throughout an animal's present environment while the animal is stationary. When an environmental input initiates a bump of activity in area E and is then turned off, the subsequent trajectory is very limited in its exploration (Fig. 2E). Weak added noise produces an extensive but extremely slow diffusive exploration. Strong noise induces transitions between environments and tends to destroy the bump. Noise alone does not produce useful mental exploration.

Cellular or synaptic adaptation [both of which are common in the hippocampal excitatory system (13)] produces fast and effective attractor exploration. Consider a line of neurons with synaptic connections arranged to produce a bump attractor. Without adaptation, a stable bump can be placed anywhere along the line. Adaptation destabilizes that location, resulting in the propagation of the bump with constant velocity in either direction. This propagation is illustrated in Fig. 3 B–D, based on neurons having the spike-frequency adaptation of Fig. 3A.
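A deliberately simplified sketch shows the same effect. It replaces spiking neurons with discrete-time binary units and models adaptation as a leaky trace a that is subtracted from each unit's input. The weights, the decay factor 0.5, and the adaptation strength 1.6 are hypothetical values chosen so that the behavior can be checked by hand. A “sensory” phase first drags a 10-cell bump to the right, leaving older cells more adapted, and the input is then removed.

```python
import numpy as np

N = 100
A_EXC, B_INH, HALF_WIDTH = 1.0, 0.9, 5  # local excitation, long-range inhibition
LAM, G_ADAPT = 0.5, 1.6                 # adaptation decay per step, adaptation strength

def line_weights(n=N):
    idx = np.arange(n)
    d = np.abs(idx[:, None] - idx[None, :])
    d = np.minimum(d, n - d)
    return np.where(d <= HALF_WIDTH, A_EXC, -B_INH)

def drag(start, direction, steps=20, width=10, n=N):
    """Sensory phase: clamp a block of `width` active cells and move it one cell per step,
    so that each cell accumulates adaptation a <- LAM * a + r."""
    a = np.zeros(n)
    for t in range(steps):
        lead = start + direction * t
        r = np.zeros(n)
        r[[(lead - direction * k) % n for k in range(width)]] = 1.0
        a = LAM * a + r
    return r, a

def free_run(r, a, w, g=G_ADAPT, steps=25):
    """Input off. Binary update r <- [W r - g a > 0], then a <- LAM * a + r."""
    for _ in range(steps):
        r = (w @ r - g * a > 0).astype(float)
        a = LAM * a + r
    return r

def center(r):
    return np.flatnonzero(r).mean()  # adequate here because the bump never wraps around

w = line_weights()
r0, a0 = drag(start=15, direction=+1)
moving = free_run(r0, a0, w)         # with adaptation
frozen = free_run(r0, a0, w, g=0.0)  # same start, adaptation switched off
print(center(r0), center(moving), center(frozen))
```

With adaptation the bump keeps moving one cell per step (its center advances from 29.5 to 54.5 in 25 steps) while keeping its width; with the adaptation term switched off, the same starting state merely widens to 12 cells and stays put. The direction of travel is set by which side carries the adaptation left over from earlier activity, so dragging the bump to the left produces leftward propagation instead.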

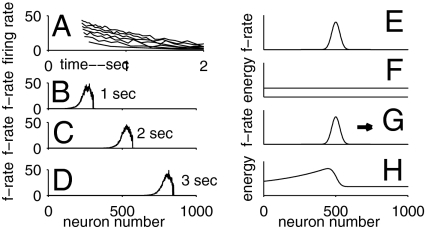

Fig. 3.

Adaptation and its consequences. (A) Firing rates of an adapting neuron as a function of time for step input currents of various sizes beginning at 0.4 s. (B–D) Snapshots of the firing rates of a line of neurons with spike-frequency adaptation, with synaptic connections for a line attractor. (E) A stable state bump of activity for the line attractor system with nonadapting neurons. (F) The energy of the system as a function of the location of the bump. (G) With adaptation, the bump moves in either direction with constant velocity, to the right in this example. (H) The frozen energy of the system as a function of bump location, defined by freezing the adaptation when the moving bump is at the location shown in G.

Bump propagation can be understood in energy function terms (10). Without adaptation, a bump (Fig. 3E) is stable anywhere, and the energy of the system is independent of bump location (Fig. 3F). The adaptive system has no defined energy function. However, if the adaptive parameters are frozen at some particular time, an energy function exists (Fig. 3 G and H). The frozen adaptation level of a neuron depends on how recently it was strongly active, so the frozen energy is raised where the bump has just been. At the bump's present location, the frozen energy function would therefore push it to the right, away from the recently active neurons. Conceptually, the bump surfs on the front of a wave of an evolving energy function that the moving bump itself created. The mathematics of adapting nonspiking (continuous variable) neurons and the associated frozen energy function is described in SI Modeling and Mathematics.

Adaptation similarly propels a bump of activity on a planar attractor. Fig. 4A shows the trajectory of the location of a bump for adapting neurons and the same synaptic network used for Fig. 2E, when started at a single location in R. The adaptation process pushes the bump out of the local minima apparent in Fig. 2E, and results in a trajectory that explores most of R. Fig. 4B shows a similar result for environment H with the same synaptic matrix. Given the size of the activity bumps illustrated in Fig. 2 A and C, the pathway in Fig. 4A would at some time excite the cluster of place cells connected to S and make S fire, whereas the pathway in Fig. 4B would not. The question “am I in the environment that contains w?” is thus answered by mental exploration. The RR could use the activity of S as a signal for starting physical exploration. But mental exploration should do far more. It should follow alternative mental paths, choose among them, recapitulate the chosen path, and use that recapitulation to direct a corresponding physical motion. To do all this, additional capabilities are needed.

Fig. 4.

Time progression of the center of the activity bump when initiated at a location in the R environment (A) or the H environment (B). Black dots are 0.2 s apart. Trajectories last 20 s.

Learning an Activity Trajectory and Repeating It Mentally.

When a smooth trajectory of area E bump activity is experienced between times t1 and t2, that activity can be used as the basis for synaptic modification. A smoothly moving bump of activity can be generated by sensory signals when an animal moves in a known environment. Initially, the attractor terrain of area E is an almost flat two-dimensional sheet (in the absence of adaptation). The following procedure embeds a furrow that follows the experienced trajectory. During the interval t1–t2, accumulate the synaptic change parameter $S_{kj}$ for all nonzero synapses in area E. Then increase the strength of those existing (i.e., nonzero) synapses that were important during the trajectory, defined as having $S_{kj}$ greater than a threshold value. The appropriate threshold depends on the speed of motion along the trajectory and could be set automatically. The minimum increase necessary depends on the flatness of the previous terrain and was between 25% and 100% in these studies. In biology, such synapse modification can be controlled by partially localized neuromodulators (14).
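The strengthening step amounts to a masked multiplication. In the sketch below, which is an illustration rather than the original code, `weights[k, j]` is the synapse from cell j to cell k, `s` holds $S_{kj}$ accumulated over t1–t2, and `boost=0.5` (a 50% increase) is one arbitrary choice inside the 25–100% range quoted above.

```python
import numpy as np

def embed_furrow(weights, s, threshold, boost=0.5):
    """Multiply by (1 + boost) every EXISTING synapse (weights[k, j] != 0) whose accumulated
    S[k, j] over the interval t1-t2 exceeds `threshold`. Absent synapses are never created."""
    important = (weights != 0) & (s > threshold)
    out = weights.astype(float)  # astype returns a copy, so the input is left unchanged
    out[important] *= 1.0 + boost
    return out

w = np.array([[0, 1, 1],
              [1, 0, 0],
              [1, 1, 0]])
s = np.array([[0.0, 2.0, 0.1],
              [5.0, 0.0, 3.0],   # S[1, 2] is large, but there is no synapse 2 -> 1 to strengthen
              [0.2, 4.0, 0.0]])
print(embed_furrow(w, s, threshold=1.0))
```

Only synapses that already exist are strengthened; the large entry `s[1, 2]` has no effect because there is no synapse from cell 2 to cell 1.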

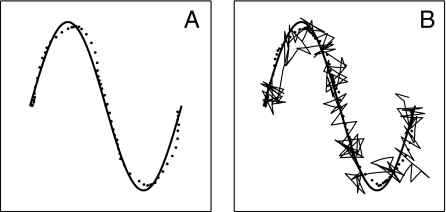

Figure 5A illustrates the ability to recapitulate a trajectory on the basis of this learning. The line shows the curved path along which the RR was carried during the time interval t1–t2, producing a corresponding activation of a moving bump of area E place cells. Synaptic changes were then made as above. Bump activity in area E was initialized at the spatial point where the learned trajectory begins, and the sensory input then turned off. The subsequent path of the center of the bump closely follows the trajectory on which the RR moved in the learning procedure. The speed of the mental trajectory depends on the adaptation parameters, not on the speed of motion during learning. The observed hippocampal mental recapitulation of overlearned spatial sequences of activation (15) during REM sleep or while a rat is stationary during the performance of the task (16) may be somewhat related to this. However, the observed replay involves representation of place in a theta-rhythm-based position code, whereas the system described here has only rate-based coding.

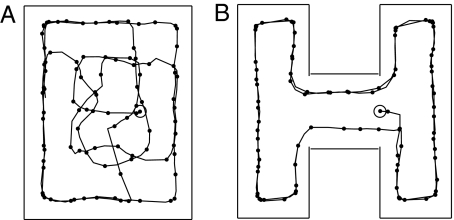

Fig. 5.

Learning to reproduce a trajectory. (A) Training trajectory used (thick solid line). Mental recapitulation of the bump trajectory after a single trial learning (dots, spaced at 0.2-s intervals). The dots are calculated as the spatial center of gravity of the firing rates of all place cells in area E. (B) The spatial trajectory of the RR in following the mental trajectory (thin solid line). The rate of adaptation has been decreased by a factor of 2 from that in A so that the mental trajectory is generated more slowly and the motor system can follow better.

Because this procedure depends only on the activity trajectory experienced in area E, the result is the same whether the trajectory was caused by physical experience or whether the trajectory during learning was generated by mental activity alone. Thus, Fig. 5A also illustrates the capability of the system to store and recapitulate a mental trajectory. An initial mental trajectory produces synaptic modification; the subsequent mental trajectory from the same starting state follows that trajectory because of the valley in the energy landscape caused by the synaptic change. A symmetric kernel has been used for selecting the synapses to strengthen, resulting in a pathway that can be mentally followed in either direction. The use of an asymmetric kernel would produce a direction-dependent additional drive to bump motion.
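The difference between a symmetric and an asymmetric kernel can be seen on a single pair of cells swept by a moving bump. The time constant and the purely causal (pre-before-post only) form of the asymmetric kernel are illustrative assumptions; the text does not specify which asymmetric kernel would be used.

```python
import math

TAU_LEARNING = 0.02  # s; illustrative value

def symmetric_kernel(dt):
    """dt = t_post - t_pre. The order of the two spikes does not matter."""
    return math.exp(-abs(dt) / TAU_LEARNING)

def causal_kernel(dt):
    """Hypothetical asymmetric variant: only pre-before-post pairs (dt > 0) count."""
    return math.exp(-dt / TAU_LEARNING) if dt > 0 else 0.0

def score(kernel, post_spikes, pre_spikes):
    """Accumulated S for one synapse, given spike times of its post- and presynaptic cells."""
    return sum(kernel(tp - tq) for tp in post_spikes for tq in pre_spikes)

# A bump sweeping past cell j and then, 10 ms later, past cell k.
spikes_j, spikes_k = [0.000], [0.010]
for name, kern in (("symmetric", symmetric_kernel), ("causal", causal_kernel)):
    s_kj = score(kern, spikes_k, spikes_j)  # synapse j -> k (along the sweep)
    s_jk = score(kern, spikes_j, spikes_k)  # synapse k -> j (against the sweep)
    print(name, round(s_kj, 3), round(s_jk, 3))
```

With the symmetric kernel both synapses of the pair receive the same score, so the furrow can be traversed either way. The causal kernel scores only the synapse pointing along the sweep, which would bias later bump motion in that direction.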

Motor Controller.

The motor control and guidance systems developed here are entirely different from those of a mammal. This elementary control was chosen so that the model describes a conceptually complete system, which requires a solution to the problem of motor equivalence (2).

The control signal to the motor consists of spikes generated by a single control neuron. When the motor receives a single spike, it approximately reverses the velocity of RR, but with noise yielding a broad distribution of direction changes (180° ± 60°). The control neuron is an integrate-and-fire neuron with an additional self-inhibitory current activated by each spike, making the cell refractory for a period after each spike. This allows one control spike time to affect the location of RR before the next one occurs. During actual behaviors, area MLS merely relays the chosen signal source to the motor control input. Every signal to the motor control neuron arrives through both an excitatory pathway producing a slow excitatory current and a balancing inhibitory pathway with a faster response.
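A minimal sketch of such a control neuron follows. It assumes a leaky integrate-and-fire membrane and a self-inhibitory current q that jumps at each spike and then decays; the time constants and jump size are hypothetical, and the balanced excitatory/inhibitory input pathways are not modeled. Driving the cell with a constant suprathreshold input shows how self-inhibition spaces out the spikes.

```python
import numpy as np

def control_neuron(drive, dt=1e-4, tau_m=0.01, tau_q=0.1, jump=2.0, threshold=1.0):
    """Leaky integrate-and-fire cell; each spike adds `jump` to a self-inhibitory current q
    that decays with time constant tau_q, making the cell refractory after each spike."""
    v, q = 0.0, 0.0
    spike_times = []
    for step, i_in in enumerate(drive):
        v += dt / tau_m * (-v + i_in - q)
        q -= dt / tau_q * q
        if v >= threshold:
            spike_times.append(step * dt)
            v = 0.0    # reset
            q += jump  # self-inhibition switched on by the spike
    return np.array(spike_times)

drive = np.full(10_000, 3.0)             # 1 s of constant suprathreshold input
with_self_inh = control_neuron(drive)
plain = control_neuron(drive, jump=0.0)  # same cell without self-inhibition
print(len(plain), len(with_self_inh))
print(np.diff(plain).min(), np.diff(with_self_inh).min())
```

Without self-inhibition the cell fires about every 4 ms. With it, successive spikes are separated by well over 10 ms. In the model, that interval gives each velocity reversal time to take effect before the next one can be issued.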

To illustrate the action of the motor controller, let area MLS send the action potentials from a pool of olfactory neurons to the motor control neuron. When the odor intensity is decreasing (increasing), the net input current is positive (negative). When the odor intensity is rapidly decreasing, the control neuron will spike. Thus, while the RR moves toward the odor source the motion simply continues, and while it is moving away a spike is soon generated that on average reverses the velocity. This neuron-based system accomplishes a control similar to that used by Escherichia coli to swim up a concentration gradient (17). Fig. 1B shows typical RR system homing-in. There is no control far from the source, where the odor is weak. Abrupt changes in direction of the path, due to spikes of the control neuron, are apparent within ∼2σ of the source. The dithering near the maximum is due to the limited accuracy in evaluating the concentration gradient because of the stochastic arrival of sensory action potentials.
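The homing behavior can be reproduced with a highly simplified stand-in for the neuron-based controller. Here the control neuron is abstracted into a rule: issue a reversal whenever the odor concentration has just fallen, unless a reversal occurred within the last 0.2 s. Sensing is noise-free, and the speed, heading noise, and odor width are hypothetical values. Only the constant speed, the Gaussian odor profile, and the 180° ± 60° reversals follow the text.

```python
import math
import random

def odor(x, y, sigma=1.0):
    """Gaussian odor profile centered on the origin."""
    return math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))

def home_in(seed, controlled=True, t_total=100.0, dt=0.01, speed=0.5,
            wander=0.3, refractory=0.2, start=(2.0, 0.0)):
    """Constant-speed walker with a wandering heading. If `controlled`, a reversal
    (180 deg +/- 60 deg) is issued whenever the odor has just decreased, at most once
    per `refractory` seconds. Returns the distance to the source at every step."""
    rng = random.Random(seed)
    x, y = start
    heading = rng.uniform(0.0, 2.0 * math.pi)
    c_prev = odor(x, y)
    since_reversal = refractory
    distances = []
    for _ in range(round(t_total / dt)):
        heading += wander * math.sqrt(dt) * rng.gauss(0.0, 1.0)  # motor/environmental noise
        x += speed * dt * math.cos(heading)
        y += speed * dt * math.sin(heading)
        c = odor(x, y)
        since_reversal += dt
        if controlled and c < c_prev and since_reversal >= refractory:
            heading += math.pi + math.radians(rng.uniform(-60.0, 60.0))
            since_reversal = 0.0
        c_prev = c
        distances.append(math.hypot(x, y))
    return distances

def late_mean(distances, n_last=3000):
    tail = distances[-n_last:]
    return sum(tail) / len(tail)

controlled = [late_mean(home_in(s)) for s in range(10)]
uncontrolled = [late_mean(home_in(s, controlled=False)) for s in range(10)]
print(sum(controlled) / 10, sum(uncontrolled) / 10)
```

The controlled walker should hover close to the source (mean distance below σ over the last 30 s, averaged over ten seeds), whereas the uncontrolled walker typically drifts away. Because sensing here is noise-free, the sketch does not reproduce the loss of control far from the source or the dithering caused by stochastic spike arrival.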

Producing a Physical Motion Following a Mental Trajectory.

The function of area A is to produce a signal to the motor system that will cause the RR to follow the same pathway in real space that area E is generating mentally. All neurons of area A are equally connected to the motor control, whose input from area A is then proportional to the total spiking activity of that area.

The place cells of area A are driven by sensory inputs having a Gaussian falloff around the place cell center. They are also driven by synaptic connections from area E to area A learned during spatial exploration of each environment, when the inputs to both areas A and E were sensory currents representing place. As a result, when a bump of place cells is active in area E in the representation of a particular environment, this bump drives a corresponding region of place cells in area A in the representation of the same environment. For controlling physical motion, the sensory and area E inputs to area A are adjusted so that neither alone drives the area A neurons sufficiently to make them fire, but the sum of the two can do so.

Consider for simplicity one known environment. Let area E be making a mental motion along a trajectory. The momentary position $x_e$ of the bump of activity in E is then independent of the spatial position x of the RR. The drive to area A neurons, represented in space, is the sum of two Gaussians, one centered on x and the other on $x_e$. If x is near $x_e$, the combined inputs will make a patch of neurons fire strongly. As x and $x_e$ become farther apart, the firing rates in this patch drop. The total spike rate from area A as a function of x is therefore equivalent to the chemosensory input whose effect is illustrated in Fig. 1B, and RR will move to be near $x_e$. If $x_e$ slowly moves, the animal will correspondingly move its preferred position. Thus, the location of RR in real space follows the mental location of the bump activity in area E. The same considerations apply when the synaptic connections represent multiple environments. In this case, area A activity is generated only when the mental trajectory in area E corresponds to the physical environment that the RR is in.
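The comparison performed by area A can be sketched in one dimension. Cell centers, the Gaussian width, and the input amplitudes are hypothetical. The only property taken from the text is that neither the sensory input (centered on x) nor the input from area E (centered on the mental position x_e) reaches threshold alone, while their sum can.

```python
import numpy as np

CELLS = np.linspace(0.0, 10.0, 1001)  # 1-D place-field centers, 0.01 apart (hypothetical)
WIDTH = 0.5                           # Gaussian width of both inputs (hypothetical)
AMP_SENSORY = 0.8                     # peak sensory drive: below threshold on its own
AMP_FROM_E = 0.8                      # peak drive from area E: below threshold on its own
THRESHOLD = 1.0

def area_a_output(x, x_e):
    """Total rate of area A: sum over cells of the drive in excess of threshold, where the
    drive is a Gaussian centered on the physical position x plus one centered on x_e."""
    drive = (AMP_SENSORY * np.exp(-(CELLS - x) ** 2 / (2 * WIDTH ** 2))
             + AMP_FROM_E * np.exp(-(CELLS - x_e) ** 2 / (2 * WIDTH ** 2)))
    return float(np.maximum(drive - THRESHOLD, 0.0).sum())

x_e = 5.0
for offset in (0.0, 0.25, 0.5, 0.75, 2.0):
    print(offset, round(area_a_output(x_e + offset, x_e), 2))
```

The summed output falls steadily as x moves away from x_e and vanishes once the two Gaussians are about two widths apart. It can therefore play the role of the odor signal in the homing controller.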

The thin solid line of Fig. 5B shows the position of the RR when the motor control system is guided by mental activity in area E. The RR recapitulates in physical motion the trajectory on which it was trained. The physical trajectory would track the mental one more closely if the mental motion in area E were slower, but that motion cannot be slowed too far without the bump getting stuck.

This system does not learn motor commands. It has learned the task at a higher level, from which (in combination with sensory information) appropriate motor commands can be generated. Perturbations that deflect a physical trajectory have little effect on this system, whereas they have disastrous accumulative effects on a system that merely recapitulates a learned sequence of motor commands.

Traveling along the pathway is a brief episode in the life of the RR, a sequence of locations traversed in time. Fig. 5A represents the mental recall of that episode, and Fig. 5B puts that memory episode to use. Although spatial pathways can also be learned as procedural memories, there is then no ability to recapitulate without physical motion.

Using Mental Exploration to Choose and Physically Follow a Path.

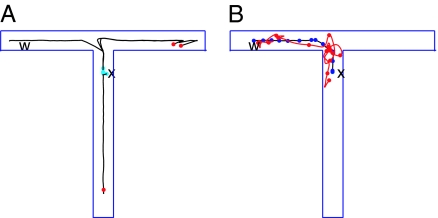

One particular example illustrates some of the exploration abilities of the complete system. The environment to be explored is the T of Fig. 6. Its branches are narrow, so an active bump of place cells spans the width of a branch. This T is one of five known environments embedded in the connection matrices. The RR has learned about a potential reward at a location w, so neuron S is activated strongly when the center of the bump attractor in E is at w. RR is now placed at position x. While the RR is stationary at x and carrying out mental exploration, a weak continuing input favors a bump of activity in E at x.

Fig. 6.

From mental exploration to physical performance. (A) The location of the center of the activity bump (solid line) when the activity was initiated at x. Red dots are ends of trajectories from which the activity bump jumped back to a location near x (cyan dots) and reinitiated an exploration. (B) The motion of the bump (blue line) and of the position of the RR (red line) after learning. Dots are spaced at 0.4-s intervals. The jagged real-space trajectory has been smoothed to facilitate clear presentation in this small-scale figure.

The learning algorithm accumulates $S_{kj}$ as in the previous section, beginning when the mental location coincides with the physical location x and continuing until neuron S is activated. The accumulation should begin anew if the mental exploration bump returns to x before S is activated, because such a path segment is not useful. An obvious signal is available for this control, for there is strong activity in area A when (and only when) the bump location in E corresponds to the RR's physical location.
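The reset-and-commit bookkeeping can be sketched on a coarse, symbolic version of the trajectory. The location labels are hypothetical stand-ins for bump positions, and a return to x is detected by exact equality rather than by strong area A activity. In the model, the returned segment would be the stretch of activity whose $S_{kj}$ values are used for strengthening.

```python
def successful_segment(bump_path, home, goal):
    """Return the part of a mental trajectory that would be written into the synapses:
    accumulation restarts each time the bump is back at `home` (the RR's physical location)
    and stops when the bump reaches `goal` (where S fires). Returns None if S never fires."""
    segment = []
    for pos in bump_path:
        if pos == home:
            segment = [pos]   # reset: whatever was explored so far led nowhere useful
            continue
        segment.append(pos)
        if pos == goal:
            return segment    # S fires: commit this segment
    return None

# Coarse labels for four exploration episodes in the T maze (labels are hypothetical).
path = ["x", "stem_up", "junction", "right_arm", "right_end",
        "x", "stem_down", "stem_bottom",
        "x", "stem_up", "junction", "right_arm",
        "x", "stem_up", "junction", "left_arm", "w"]
print(successful_segment(path, home="x", goal="w"))
```

Applied to this labeled version of the four episodes described next, only the final segment is retained: from x up the stem and into the left arm to w.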

Fig. 6A shows an example of the resulting mental exploration. (i) The bump moves up the stem of the T. (Why the initial motion is up rather than down lies in details of the synaptic matrices set by a noisy learning experience.) The bump turns right at the T junction and propagates to the end. At the end, the bump would eventually reverse, but this is not favorable because it would involve neurons recently activated. Instead, the activity bump jumps back to x because of the weak continuing input. (ii) Because of residual adaptation, the bump now propagates down to the bottom of the stem, where it stops and jumps back to x. (iii) The bump again moves upward and to the right, and then reinitiates at x. (iv) The bump moves from x upward and turns left, finally reaching w, where it activates the potential reward neuron.

The mental exploration eventually found w, which causes the synaptic change rule to be applied. Because the learning accumulation is reset every time the RR mentally returns to x, only the fourth path segment is represented in the synapse change. After the relevant connection strengths increased, when a bump is initiated in area E at x and the input to area E is then turned off, the bump will propagate directly to w. When the motor system is engaged, the RR follows this trajectory in space (Fig. 6B).

The observed behavior of the RR would be to remain stationary for a few seconds, then take the direct path to w, making no errors. No physical exploration was required. The ability of a system to mentally jump from what seems a nonproductive location or dead end back to the real-space initiation point to try another mental trajectory is one of many advantages of mental exploration over physical exploration. This elementary synapse change procedure will not find good solutions to all problems. But even such a simple procedure can successfully use mental exploration to reduce the burdens associated with physical exploration.

Discussion

We have demonstrated that a simple system, based loosely on the rodent hippocampus, generates a mental exploration of possible actions (spatial paths to take) and selects a desirable pathway. The mental recapitulation of that pathway produces motor control signals that cause the RR to follow the chosen route in physical space. The system was based on spiking neurons, whose adaptation provides the driving force for mental exploration on a low-dimensional activity landscape. The selection of an action involved a modest increase in strength of selected synapses chosen by an STDP protocol, providing a valley in an energy landscape that guides the motion. The RR system displays the hallmarks of primitive thought.

Seward (18) carried out latent learning experiments that correspond closely to the highly simplified version (Fig. 6) of the general mental exploration paradigm described here. A rat was first allowed to explore a T maze having drooping box ends that were not visible from the T intersection. The hungry rat was later placed via a trap door into one of the end boxes, where a familiar food had been placed, allowed to eat briefly, and removed from the maze. After 25 min in a detention box, the rat was inserted at the root of the T. Eighty-seven percent of the rats then chose to go to the food-reward side of the T, although the reward location was invisible from the choice point. This is consistent with the kind of mental exploration proposed. Electrophysiological experiments in freely moving rats under this paradigm could test this explanation.

The RR system can also learn a pathway that it has physically experienced, a useful natural behavior that supports learning from self-directed experience or from following others. Rodents can easily be trained to follow remarkably complex paths, either by inducing them to follow a pathway during training (in which case their own motor acts move the animal along the path) or by physically guiding them through the pathway (in which case the motor acts are only partially responsible for the animal's location). The result is the same, because what is learned is the intended pathway, not the motor actions.

In the RR, as in real rodents, place cells tend to be active when the animal is in the corresponding location. However, the word “when” does not imply causality. In the RR there are two very different circumstances of physical behavior: (i) exploration of an environment without instruction from area A; and (ii) following a mentally chosen or learned pathway. Both can exhibit “when” coordination between animal spatial location and place cell activity in area E. In the former circumstance, a sensory signal due to the spatial location of the RR causes the activity of the corresponding place cell in areas E and A. In the latter, the activity of the place cells of area E is independent of sensory input, and the activity of a patch of area E place cells causes the RR to be near the corresponding spatial point. Correlation between animal location and place cell activity remains, but the direction of causality is reversed. Activity in area E appears on average to slightly anticipate the physical motion, because this activity is what causes that motion. Anticipatory activity in CA3 is known in situations that involve well-learned tasks (5).
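
Why causal activity appears to lead the motion can be seen in a deliberately simple tracking model. The assumptions are for illustration only: the bump in area E moves at a constant speed `v`, and the motor system pulls the animal toward the bump location as a first-order lag with gain `k`. In steady state the animal trails the bump by a distance v/k, so it reaches any given point about 1/k later than the bump does.

```python
def simulate_tracking(v=0.5, k=5.0, dt=1e-3, t_end=4.0):
    """1-D Euler simulation: bump moves at speed v, animal follows with gain k."""
    n = int(round(t_end / dt))
    times, bump, animal = [], [], []
    p = 0.0                          # animal position
    for i in range(n + 1):
        t = i * dt
        b = v * t                    # bump position in area E (assumed constant speed)
        times.append(t)
        bump.append(b)
        animal.append(p)
        p += k * dt * (b - p)        # motor system pulls the animal toward the bump
    return times, bump, animal


def first_crossing(times, xs, level):
    """Earliest time at which xs reaches `level` (None if it never does)."""
    for t, x in zip(times, xs):
        if x >= level:
            return t
    return None


times, bump, animal = simulate_tracking()
lag = first_crossing(times, animal, 1.0) - first_crossing(times, bump, 1.0)
```

With k = 5 per second, `lag` comes out close to 0.2 s: the place-cell activity reaches each location before the animal does, even though that activity is what moves the animal there.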

It is tempting to identify the RR with rodent biology and to equate area A with hippocampal CA1 and area E with CA3, because that would make the excitatory connectivity pattern of the RR match that of mammals. However, even the rat system is enormously complex (head direction cells, grid cells, theta-induced timing, orientation tuning of place cells, and a complex motor system), so a simple 1:1 mapping between the two systems is unlikely. The function of area A, converting psychological intent into appropriate motor commands, might be carried out elsewhere or by a combination of areas.

A better system would control the bump speed in E depending on the tracking success of the motor system. More complex signals from area A would be necessary to control a walking gait effectively. Nevertheless, such complexities may only conceal a conceptually simple basis for mental exploration and physical recapitulation, a system that could be revealed through simultaneous recordings from many neurons within CA3, CA1, and related areas while a rat is in a situation requiring mental exploration. Comparing the time history of neural activity before a novel action and the action itself on a case-by-case basis would be particularly useful.
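
One hypothetical form of such speed control is sketched below: the bump slows linearly as the gap between bump and animal grows and halts when the gap reaches `gap_max`. The rule, its parameters, and the first-order motor model are assumptions chosen for illustration, not part of the RR as described.

```python
def max_tracking_gap(v0=0.5, k=5.0, gap_max=0.05, dt=1e-3, t_end=4.0, adaptive=True):
    """Return the largest bump-to-animal distance seen during a 1-D run."""
    b = p = 0.0                      # bump position (area E) and animal position
    worst = 0.0
    for _ in range(int(round(t_end / dt))):
        gap = b - p
        if adaptive:
            # Hypothetical rule: bump slows as tracking error grows, halting at gap_max.
            speed = v0 * max(0.0, 1.0 - gap / gap_max)
        else:
            speed = v0               # fixed bump speed, blind to tracking success
        b += speed * dt
        p += k * dt * (b - p)        # first-order motor tracking of the bump
        worst = max(worst, b - p)
    return worst
```

With these parameters the fixed-speed bump runs about 0.1 ahead of the animal, while the adaptive bump settles at roughly a third of that and never exceeds `gap_max`. The linear slowdown is simply the most basic choice that ties bump speed to tracking success.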

Reinforcement learning (19) involves an animal achieving a goal by executing complex exploratory behavior over an extended period. Learning to do a task effectively requires a credit assignment procedure that specifies which parts of a behavior over time are to be reinforced. Mental exploration does not address this credit assignment problem; it merely substitutes mental exploration for an equivalent physical exploration. However, when the exploratory process necessary to such learning can be done mentally, any particular procedure for reinforcement learning can be carried out with improved speed, safety, and energy conservation.

How a brain carries out mental explorations is a general problem, not limited to explorations that have a spatial basis. Because the fundamental attractor structure (slow dynamics manifold) that provides the basis for mental exploration is learned from experience, its dimensionality is determined by the experience itself and need not be 2 or even an integer. The RR system can also know and recapitulate many learned pathways in multiple environments, and is an episodic memory for an animal chiefly interested in space. Neurons that are synaptically coupled to each other become linked because they are active at almost the same time. In the simple sensory environment used here, this implies that linked neurons represent nearby points in space. In a general sensory environment, assemblies of active cells might represent higher concepts than “location,” and “close together in time” should then link general time-dependent sensory experiences into episodes.
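
The linking principle in this paragraph can be illustrated with place cells on a grid. The sketch assumes one place cell per grid square and a symmetric Hebbian link between cells active within `window` time steps of each other, both simplifications rather than the model's STDP rule. During a random exploratory walk, co-activity in time then produces links only between cells that represent adjacent squares.

```python
import random


def random_walk(n_steps, size=5, seed=0):
    """Exploratory walk on a size x size grid; each step moves to a neighboring square."""
    rng = random.Random(seed)
    x, y = size // 2, size // 2
    path = [(x, y)]
    moves = [(1, 0), (-1, 0), (0, 1), (0, -1)]
    while len(path) < n_steps:
        dx, dy = rng.choice(moves)
        nx, ny = x + dx, y + dy
        if 0 <= nx < size and 0 <= ny < size:   # stay inside the arena
            x, y = nx, ny
            path.append((x, y))
    return path


def link_by_coactivity(active_seq, window=1):
    """Unordered pairs of cells active within `window` time steps of each other."""
    links = set()
    for i, a in enumerate(active_seq):
        for j in range(i + 1, min(i + window + 1, len(active_seq))):
            b = active_seq[j]
            if a != b:
                links.add(frozenset((a, b)))
    return links
```

With `window=1`, every link returned by `link_by_coactivity(random_walk(200))` joins two squares one step apart, so the learned coupling encodes spatial neighborhood although only timing was used. Replacing grid squares with arbitrary sensory states leaves the code unchanged, which is the sense in which the same principle could link general experiences into episodes.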

Supplementary Material

Acknowledgments

I thank A.V. Herz, S. Leibler, and C.D. Brody for comments on the manuscript.

Footnotes

The author declares no conflict of interest.

This article contains supporting information online at www.pnas.org/cgi/content/full/0913991107/DCSupplemental.

References

- 1. Tulving E. Episodic memory: From mind to brain. Annu Rev Psychol. 2002;53:1–25. doi: 10.1146/annurev.psych.53.100901.135114.

- 2. Hebb DO. The Organization of Behavior: A Neuropsychological Theory. New York: Wiley; 1949. pp. 98–100, 153–157.

- 3. Johnson A, Redish AD. Neural ensembles in CA3 transiently encode paths forward of the animal at a decision point. J Neurosci. 2007;27:12176–12189. doi: 10.1523/JNEUROSCI.3761-07.2007.

- 4. Moser EI, Kropff E, Moser M-B. Place cells, grid cells, and the brain's spatial representation system. Annu Rev Neurosci. 2008;31:69–89. doi: 10.1146/annurev.neuro.31.061307.090723.

- 5. Eichenbaum H, Dudchenko P, Wood E, Shapiro M, Tanila H. The hippocampus, memory, and place cells: Is it spatial memory or a memory space? Neuron. 1999;23:209–226. doi: 10.1016/s0896-6273(00)80773-4.

- 6. Braitenberg V. Cell assemblies in the cerebral cortex. In: Heim R, Palm G, editors. Theoretical Approaches to Complex Systems, Lecture Notes in Biomathematics 21. Berlin: Springer; 1978. pp. 171–188.

- 7. O’Keefe J, Dostrovsky J. The hippocampus as a spatial map. Preliminary evidence from unit activity in the freely-moving rat. Brain Res. 1971;34:171–175. doi: 10.1016/0006-8993(71)90358-1.

- 8. O’Keefe J. Hippocampal neurophysiology in the behaving animal. In: Andersen P, Morris R, Amaral D, Bliss T, O'Keefe J, editors. The Hippocampus Book. New York: Oxford Univ Press; 2007. pp. 487–517.

- 9. Zhang K. Representation of spatial orientation by the intrinsic dynamics of the head-direction cell ensemble: A theory. J Neurosci. 1996;16:2112–2126. doi: 10.1523/JNEUROSCI.16-06-02112.1996.

- 10. Hopfield JJ. Neurons with graded response have collective computational properties like those of two-state neurons. Proc Natl Acad Sci USA. 1984;81:3088–3092. doi: 10.1073/pnas.81.10.3088.

- 11. Samsonovich A, McNaughton BL. Path integration and cognitive mapping in a continuous attractor neural network model. J Neurosci. 1997;17:5900–5920. doi: 10.1523/JNEUROSCI.17-15-05900.1997.

- 12. Hopfield JJ. Searching for memories, Sudoku, implicit check bits, and the iterative use of not-always-correct rapid neural computation. Neural Comput. 2008;20:1119–1164. doi: 10.1162/neco.2007.09-06-345.

- 13. Spruston N, McBain C. Structural and functional properties of hippocampal neurons. In: Andersen P, Morris R, Amaral D, Bliss T, O'Keefe J, editors. The Hippocampus Book. New York: Oxford Univ Press; 2007. pp. 139–140.

- 14. Izhikevich EM. Solving the distal reward problem through linkage of STDP and dopamine signaling. Cereb Cortex. 2007;17:2443–2452. doi: 10.1093/cercor/bhl152.

- 15. Louie K, Wilson MA. Temporally structured replay of awake hippocampal ensemble activity during rapid eye movement sleep. Neuron. 2001;29:145–156. doi: 10.1016/s0896-6273(01)00186-6.

- 16. Diba K, Buzsáki G. Forward and reverse hippocampal place-cell sequences during ripples. Nat Neurosci. 2007;10:1241–1242. doi: 10.1038/nn1961.

- 17. Segall JE, Block SM, Berg HC. Temporal comparisons in bacterial chemotaxis. Proc Natl Acad Sci USA. 1986;83:8987–8991. doi: 10.1073/pnas.83.23.8987.

- 18. Seward JP. An experimental analysis of latent learning. J Exp Psychol. 1949;39:177–186. doi: 10.1037/h0063169.

- 19. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. Cambridge, MA: MIT Press; 1998. p. 5.
