Abstract

Motivated by medical studies in which patients could be cured of disease but the disease event time may be subject to interval censoring, we present a semiparametric non-mixture cure model for the regression analysis of interval-censored time-to-event data. We develop semiparametric maximum likelihood estimation for the model using the expectation-maximization method for interval-censored data. The maximization step for the baseline function is nonparametric and numerically challenging. We develop an efficient and numerically stable algorithm via modern convex optimization techniques, yielding a self-consistency algorithm for the maximization step. We prove the strong consistency of the maximum likelihood estimators under the Hellinger distance, which is an appropriate metric for the asymptotic property of the estimators for interval-censored data. We assess the performance of the estimators in a simulation study with small to moderate sample sizes. To illustrate the method, we also analyze a real data set from a medical study of the biochemical recurrence of prostate cancer among patients who have undergone radical prostatectomy. Supplemental materials for the computational algorithm are available online.

Keywords: Convex optimization, Hellinger consistency, maximum likelihood estimation, primal-dual interior-point method, prostate cancer

1. INTRODUCTION

Medical advances have made it possible for patients to be cured of certain diseases. Monitoring for a non-fatal disease event, such as cancer biochemical recurrence or AIDS drug resistance, is usually carried out through periodic clinical visits (Lindsey and Ryan, 1998). If the disease event occurs, its exact time is known only to lie between two consecutive clinical visits; a further complication is that the event may never occur because the patient has been cured. This type of interval-censored event-time data with the possibility of cure is commonly encountered in medical studies, especially in follow-up studies in which the cure of a non-fatal event is of clinical interest.

For example, it is routine practice to monitor serum prostate-specific antigen (PSA) for cancer biochemical recurrence among patients who have undergone radical prostatectomy for clinically localized prostate cancer. Radical prostatectomy removes the entire prostate gland and presumably all tumor cells. Patients who remain free of detectable PSA for two to three years after surgery are generally considered cured of biochemical recurrence, because the operation has successfully removed all PSA-generating tumor cells (Dillioglugil et al., 1997; Pound et al., 1999). However, PSA may reappear in serum after surgery; this is called biochemical recurrence and indicates that the operation was not successful and the cancer has returned. Investigators would like to know why some patients are cured of biochemical recurrence while others are not, and how this relates to patients' baseline characteristics such as clinical stage and tumor histology. During follow-up, biochemical recurrence is identified by a detectable serum PSA level ascertained at periodic clinic visits approximately every three months after surgery. The actual time of biochemical recurrence is therefore known only to lie between two consecutive visits, and the recurrence may never occur for patients who are cured. It is thus of great interest to develop a method for analyzing the probability of cure from interval-censored data within a regression framework that incorporates patients' baseline characteristics.

Extensive statistical literature has been devoted to interval-censored data, but without incorporating the possibility of cure. The nonparametric maximum likelihood estimator (NPMLE) of the distribution function for interval-censored data was first studied by Peto (1973) and then by Turnbull (1976) using a self-consistency algorithm. Groeneboom and Wellner (1992) proposed an iterative convex minorant algorithm for current status data and case-2 interval-censored data. Wellner and Zhan (1997) discussed efficient algorithms for obtaining the NPMLE in these cases. Strong consistency of the NPMLE for interval-censored data was proved under an L1 topology (Schick and Yu, 2000; Yu et al., 2001) and under the Hellinger metric (van der Vaart and Wellner, 2000). Semiparametric regression methods for interval-censored data have been investigated for the proportional hazards model (Finkelstein, 1986), the proportional odds model (Rabinowitz et al., 1995), and the accelerated failure time model (Betensky et al., 2001). Semiparametric models have also been proposed for regression analysis of current status data (Huang, 1996; Rossini and Tsiatis, 1996; Lin et al., 1998; Tian and Cai, 2006). In addition, Lam and Xue (2005) considered a mixture cure model for current status data using the estimating equation approach.

Two major approaches to modeling the cure rate are the mixture cure model and the non-mixture cure model (Chen et al., 1999). The mixture cure model combines two separate regression models, one for the cure rate of the cured population and one for the survival function of the non-cured population, and has been investigated extensively in the literature (Berkson and Gage, 1952; Yamaguchi, 1992; Taylor, 1995; Maller and Zhou, 1996; Sy and Taylor, 2000; Li et al., 2007, among others). Chen, Ibrahim and Sinha (1999) pointed out that the mixture cure model does not preserve the assumed survival model structure, e.g., proportional hazards, for the whole population. As an alternative, the non-mixture cure model was proposed to retain the proportional hazards structure for the whole population while allowing a straightforward interpretation of the covariate effects on the probability of cure (Tsodikov, 1998; Chen et al., 1999; Tsodikov et al., 2003). As described by Chen et al. (1999) and Zeng et al. (2006), the non-mixture cure model has a biological interpretation in terms of tumor cell growth, and for this reason it is also called the promotion time cure model. Existing work on both the mixture and the non-mixture cure models has focused largely on right-censored data rather than on interval-censored data.

In this article, we consider the regression analysis of interval-censored data for a semiparametric non-mixture cure model. Let T be a nonnegative random variable representing the event time and let Z be the vector of covariates. The survival function of T conditional on the covariate Z is assumed to satisfy the following cure model:

$$S(t \mid Z) = P(T \ge t \mid Z) = \exp\{-e^{\alpha + \beta' Z} F(t)\}, \qquad (1)$$

where (α, β) are the regression coefficients and F is a completely unspecified cumulative distribution function. For right-censored data, Tsodikov (1998) considered a cure model with a bounded function F and no intercept. The regression model (1) is a natural structure for preserving the proportional hazards assumption while allowing a nonzero probability of cure as t → ∞. When F is an unbounded cumulative hazard function, setting α = 0 reduces the model to the Cox proportional hazards model. With F bounded by 1, we will show that model (1) is identifiable and leads to an improper survival function.

The paper is organized as follows. Section 2 describes the model, its identifiability and the likelihood function. In Section 3, we develop maximum likelihood estimation (MLE) based on an expectation-maximization (EM) approach for interval-censored data. The computation uses the expectation conditional-maximization (ECM) algorithm of Meng and Rubin (1993), which, in addition to the E-step, alternates an M-step for (α, β) and an M-step for F. The M-step for F is a nonparametric MLE problem that poses a numerical challenge. We apply a convex optimization method to develop a self-consistency-type algorithm in the spirit of Turnbull (1976). In Section 4, we prove strong consistency of the MLEs under the Hellinger distance using modern empirical process theory (van der Vaart and Wellner, 1996). In Section 5, we describe simulation studies that evaluate the performance of the proposed method and illustrate the method through an analysis of serum PSA recurrence among prostate cancer patients. We provide concluding remarks in Section 6 and the technical proofs in the Supplemental Materials.

2. MODEL AND LIKELIHOOD

2.1. The Model

Under the non-mixture cure model (1), the overall survival distribution falls within the framework of a proportional hazards model with baseline cumulative hazard function exp(α)F(·). The intercept α in the regression part of the cure model allows an easy interpretation of covariate effects while keeping a flexible baseline function. As t → ∞, the probability of cure for a given Z is exp{−exp(α + β′Z)}, which is a complementary log-log regression. The proposed method can be easily extended to a general regression model by replacing exp(·) with a known positive convex function. When Z = 0, the baseline cure rate is simply exp{−exp(α)}, which depends on the intercept α.
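As a small numerical illustration of the cure-probability formula, the Python sketch below evaluates exp{−exp(α + β′Z)} and inverts it with the complementary log-log link. The function names are hypothetical; only the formula comes from the text.

```python
import math

def cure_probability(alpha, beta, z):
    """Cure probability exp(-exp(alpha + beta'z)) implied by model (1) as t -> infinity."""
    linear_predictor = alpha + sum(b * zk for b, zk in zip(beta, z))
    return math.exp(-math.exp(linear_predictor))

def cloglog(prob):
    """Complementary log-log link: maps a cure probability back to alpha + beta'z."""
    return math.log(-math.log(prob))

# Baseline cure rates (Z = 0) for the two intercepts used in the simulation study.
for alpha in (0.0, 1.0):
    print(alpha, round(cure_probability(alpha, [0.5], [0.0]), 2))
```

With α = 0 and α = 1 the printed baseline cure rates are 0.37 and 0.07, the values quoted in Section 5.1.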

Let E0 denote the expectation under the true values α0, β0 and F0 that satisfy model (1). We assume that and |α0| < ∞. It is clear that the baseline survival function S0(t) = exp(−F0(t)) is not a proper survival function, because S0(t) → 1/e as t → ∞. The marginal survival function P0(T ≥ t) = E0[P0(T ≥ t | Z)] is also improper: its limit as t → ∞ is E0[exp{−exp(α0 + β0′Z)}], which is always greater than 0 and, by Jensen's inequality, is bounded below by exp[−E0{exp(α0 + β0′Z)}].

In order to ensure the identifiability of model (1), we require F to be a proper distribution function with F(t) → 1 as t → ∞. To show identifiability, let (α*, β*, F*) be a set of parameters that also satisfies (1), i.e., S*(t | Z) = exp{−exp(α* + β*′Z)F*(t)} = S(t | Z). Fixing ω in a set of probability 1 in the underlying probability space, S*(t | Z(ω)) = S(t | Z(ω)) implies that, for each t ∈ (0, ∞), exp(α* + β*′Z(ω))F*(t) = exp(α + β′Z(ω))F(t). Letting t → ∞ yields exp(α* + β*′Z(ω)) = exp(α + β′Z(ω)), because F*(t) → 1 and F(t) → 1. This implies that (β* − β)′Z = α − α* with probability 1. Suppose that P(b′Z = a) = 1 for a real number a and a real vector b only if a = 0 and b = 0, that is, Z is not concentrated on a hyperplane. Then α* = α and β* = β. Hence, F*(t) = F(t) for each t ∈ (0, ∞).

2.2. Data and Likelihood

We consider a setting in which the event time T may not be observed exactly but is instead known to have occurred in an interval [L, R]. Here, L is the latest examination time before the event, and R is the earliest examination time after the event, where R = ∞ if the event has not happened by the last follow-up. The data are n i.i.d. copies of (L, R, Z), denoted by (Li, Ri, Zi) for patient i, where Li < Ri for i = 1, ⋯, n. Denote θ′ = (α, β′) and Z̃i = (1, Zi′)′. Then model (1) can be re-expressed as SZi(t) = Sθ,F(t | Zi) = exp{−exp(θ′Z̃i)F(t)}, where the function SZi(t) is left-continuous with right limits. If Ri < ∞,

$$P_{\theta,F}(L_i \le T < R_i \mid Z_i) = S_{Z_i}(L_i) - S_{Z_i}(R_i),$$

where Pθ,F is the probability measure under the parameters θ and F. If Ri = ∞, there are two possibilities for the ith patient: either the patient is cured, or the event of interest occurs after the last examination time. The probability of this outcome is

$$P_{\theta,F}(T \ge L_i \mid Z_i) = S_{Z_i}(L_i).$$

The likelihood function for the n observed interval-censored data is then

$$L_n(\theta, F) = \prod_{i=1}^{n} \{S_{Z_i}(L_i) - S_{Z_i}(R_i)\}^{1(R_i < \infty)} \{S_{Z_i}(L_i)\}^{1(R_i = \infty)}. \qquad (2)$$

Note that unlike the standard Cox model, the full likelihood function derived under the cure model (1) needs to account for the probability of cure.

To obtain the maximum likelihood estimators (MLE) of (θ, F) based on (2), we now show that the nonparametric MLE of F is unique only up to an equivalence class. This derivation extends the method of Peto (1973) and Turnbull (1976) for the NPMLE with interval-censored data without covariates. Define a finite number of disjoint intervals [sj, rj] as follows: sj ∈ {Li : i = 1, ⋯, n}, rj ∈ {Ri : i = 1, ⋯, n}, the interval (sj, rj) contains no member of {Li, Ri : i = 1, ⋯, n}, and s1 ≤ r1 < s2 ≤ r2 < ⋯ < sm ≤ rm < sm+1 < rm+1 = ∞. It is possible that sj = rj, j = 1, ⋯, m. Here sm+1 is the longest observed follow-up time. Let 𝒞 = [s1, r1] ∪ ⋯ ∪ [sm, rm]. Note that the function x ↦ exp{−exp(θ′Z̃)x} is nonincreasing in x.
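The intervals [sj, rj] can be found with the usual sort-and-scan form of the Peto-Turnbull construction, sketched below in Python together with the membership indicators δij used in the likelihood below. This is a minimal sketch under our own conventions (hypothetical names): at tied values a left endpoint is sorted before a right endpoint, so degenerate intervals with sj = rj are kept, and R = math.inf encodes an event-free subject.

```python
import math

def innermost_intervals(L, R):
    """Peto-Turnbull innermost intervals [s_j, r_j]: s_j is an observed left endpoint,
    r_j an observed right endpoint, and no other endpoint lies strictly between them.
    Event-free subjects carry R_i = math.inf."""
    # Tag left endpoints 0 and right endpoints 1; sorting the tuples puts a left endpoint
    # before a right endpoint at tied values, which keeps degenerate intervals s_j == r_j.
    points = sorted([(l, 0) for l in L] + [(r, 1) for r in R])
    intervals = []
    for (v1, kind1), (v2, kind2) in zip(points, points[1:]):
        if kind1 == 0 and kind2 == 1:     # a left endpoint directly followed by a right one
            intervals.append((v1, v2))
    return intervals

def membership(L, R, intervals):
    """delta[i][j] = 1 if the j-th interval lies inside [L_i, R_i], else 0."""
    return [[int(l <= s and r <= ri) for s, r in intervals] for l, ri in zip(L, R)]

# Example: two interval-censored subjects and one event-free subject.
L, R = [0.0, 1.0, 4.0], [2.0, 3.0, math.inf]
iv = innermost_intervals(L, R)
print(iv)                     # [(1.0, 2.0), (4.0, inf)]
print(membership(L, R, iv))   # [[1, 0], [1, 0], [0, 1]]
```

In this example the last interval [4, ∞) plays the role of [sm+1, rm+1].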

We first examine the maximization of the likelihood function with respect to F for an arbitrary but fixed θ. For given data and fixed θ, the likelihood function (2) depends on F only through its values on 𝒞 and not through its values outside 𝒞. For fixed values of F(sj−) and F(rj+), j = 1, ⋯, m, the likelihood function does not change with the value of F in (sj, rj). Let pj = F(rj+) − F(sj−) for j = 1, ⋯, m. Then the vector p ≡ (p1, ⋯, pm), where pj ≥ 0 and p1 + ⋯ + pm = 1, defines an equivalence class of cumulative distribution functions that are constant outside 𝒞. Thus, we can restrict our search for the MLE of F to the equivalence class of the following step functions, which are right-continuous with left-hand limits:

$$F(t) = \sum_{j=1}^{m} p_j \, 1(r_j \le t), \qquad t \ge 0,$$

with the constraint p1 + ⋯ + pm = 1. Without loss of generality, we still denote a function in this equivalence class by F. The function is right-continuous, F(t) = F(t+), and takes a constant value on [rj, sj+1], equal to F(rj), for j = 1, ⋯, m. Further, F(0) = 0 and F(rm) = F(sm+1) = 1. The function F has jumps of sizes p1, ⋯, pm at r1, ⋯, rm, respectively. Consequently, the left-continuous survival function of T given Zi can be written as

$$S_{Z_i}(t) = \exp\Big\{-e^{\theta'\tilde Z_i} \sum_{j:\, r_j < t} p_j\Big\}. \qquad (3)$$

In particular, for j = 1, ⋯, m, SZi(sj−) = exp{−exp(θ′Z̃i)(p0 + p1 + ⋯ + pj−1)} and

$$S_{Z_i}(r_j+) = \exp\{-e^{\theta'\tilde Z_i}(p_0 + p_1 + \cdots + p_j)\},$$

where p0 ≡ 0 for notational convenience.

The likelihood function (2) of the observed data is now a function of the parameters p = (p1, ⋯, pm) and θ. Let δij indicate whether [sj, rj] is contained in [Li, Ri] for j = 1, ⋯, m + 1. In particular, δi,m+1 = 1 indicates that the ith person completed follow-up without occurrence of the event (i.e., Ri = ∞). Using this notation, we write the log-likelihood function of (2) as

$$l_n(\theta, p) = \sum_{i=1}^{n} \log\Big[\sum_{j=1}^{m} \delta_{ij}\{S_{Z_i}(s_j-) - S_{Z_i}(r_j+)\} + \delta_{i,m+1} S_{Z_i}(s_{m+1})\Big]. \qquad (4)$$

Since our application assumes the possibility of cure, the data contain at least one event-free subject (Ri = ∞) whose follow-up time exceeds rm. It follows that the term δi,m+1SZi(sm+1) does not vanish from (4). The likelihood contribution of an event-free subject is determined by comparing the subject's follow-up time with rm: only subjects who are event-free and have Li > rm contribute to the likelihood as cured subjects. The random times {rm, sm+1} play essentially the same role as the deterministic "cure threshold" proposed by Zeng, Yin, and Ibrahim (2006) for right-censored data. As with all cure models, the viability of this model requires the follow-up of a study to be long enough that sm+1 is sufficiently large for some subjects to be considered cured in the given scientific context.
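A direct Python transcription of the log-likelihood (4) is sketched below, assuming θ = (α, β′)′ is passed as a list, p holds the masses p1, ⋯, pm, and each row of δ has m + 1 entries whose last entry flags [sm+1, ∞). Survival values at sj− and rj+ come from the partial sums p0 + ⋯ + pj; the function name is hypothetical.

```python
import math

def log_likelihood(theta, p, delta, Z):
    """Observed-data log-likelihood (4) for masses p = (p_1, ..., p_m).

    theta = [alpha, beta_1, ..., beta_d]; Z[i] is the covariate list of subject i;
    delta[i] has m + 1 entries, the last one flagging the interval [s_{m+1}, inf)."""
    m = len(p)
    cum = [0.0]                       # cum[j] = p_0 + p_1 + ... + p_j with p_0 = 0
    for pj in p:
        cum.append(cum[-1] + pj)
    total = 0.0
    for d, z in zip(delta, Z):
        c = math.exp(theta[0] + sum(b * zk for b, zk in zip(theta[1:], z)))
        # Interval j + 1 (0-based j): S(s-) - S(r+) = exp(-c cum[j]) - exp(-c cum[j+1]).
        contrib = sum(d[j] * (math.exp(-c * cum[j]) - math.exp(-c * cum[j + 1]))
                      for j in range(m))
        contrib += d[m] * math.exp(-c * cum[m])   # S(s_{m+1}): event-free, possibly cured
        total += math.log(contrib)
    return total

# One subject with Z = 0 whose event falls in the single interval [s_1, r_1].
print(log_likelihood([0.0, 0.0], [1.0], [[1, 0]], [[0.0]]))
```

For a subject with δi,1 = δi,2 = 1 and m = 1 the two terms telescope to SZi(s1−) = 1, which is the kind of simplification used in the example below.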

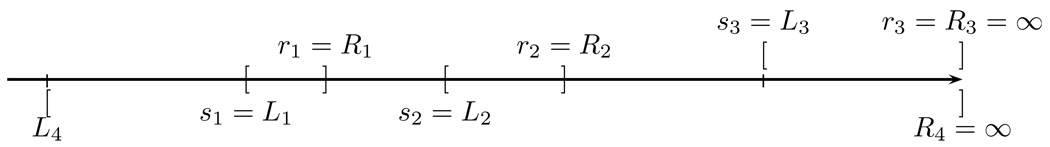

Because the notation is involved, we use a hypothetical example with 4 subjects, depicted in Figure 1, to illustrate the derivation of the equivalence class for the likelihood function. The cases for patients i = 1, 2, 3 are straightforward. We describe only the case for patient i = 4, where δ4,1 = δ4,2 = δ4,3 = 1. The contribution of patient i = 4 to the log-likelihood function (4) is , which is equal to SZ4(s1−) in the equivalence class for the likelihood function (2).

Figure 1.

A hypothetical example with n = 4. Each pair of brackets [ ] corresponds to the data of one patient.

In summary, we have derived the nonparametric MLE F̂n(θ) = argmaxFln(θ, F) by an equivalence class argument for a fixed but arbitrary θ. The MLE for θ can then be obtained by maximizing the profile log-likelihood θ ↦ ln(θ, F̂n(θ)), which is equivalent to maximizing the function θ ↦ supF Ln(θ, F). Maximizing the profile log-likelihood yields the semiparametric maximum likelihood estimator (θ̂n, F̂n).

3. COMPUTATIONAL ALGORITHM AND PROPERTIES

We now develop the computational algorithm for obtaining the MLE from (4). Maximizing the log-likelihood function (4) directly is numerically difficult because the dimension of F̂n can increase with the sample size n. To overcome this difficulty, we first consider an EM method for interval-censored data, extending the method for nonparametric estimation in the absence of covariates (Kalbfleisch and Prentice, 2002, pages 80–81). The resulting expected log-likelihood function has the appealing property of being concave. We then replace the M-step by two conditional M-steps, one for θ and one for p, which allows us to use different optimization techniques for maximizing the expected log-likelihood function in θ and in p separately. This is essentially the ECM algorithm of Meng and Rubin (1993), but we further exploit convex optimization techniques to obtain a numerically stable and efficient M-step. Our algorithm restricts the search for p to the interior of a constrained convex parameter space.

3.1. EM Method: Expectation-step

To simplify the notation in (4), for i = 1, ⋯, n, j = 1, ⋯, m, we define

The log likelihood function (4) is then

| (5) |

where for each i = 1, ⋯, n.

By the equivalence of (2) and (4), the interval-censored data are now represented by Δi = (δi,1, ⋯, δi,m, δi,m+1) for i = 1, ⋯, n. We may view Δi as incomplete data arising from an (m + 1)-class multinomial distribution with index 1. Specifically, we consider the complete data X = {Xi ≡ (Xi,1, ⋯, Xi,m, Xi,m+1), Zi : i = 1, ⋯, n}. Conditional on the covariate Zi, Xi is assumed to have an (m + 1)-class multinomial distribution with index 1, and for j = 1, ⋯, m + 1,

$$P(X_{ij} = 1 \mid Z_i) = \pi_{ij}(\theta, p) = \begin{cases} S_{Z_i}(s_j-) - S_{Z_i}(r_j+), & j = 1, \dots, m, \\ S_{Z_i}(s_{m+1}), & j = m + 1. \end{cases}$$

The Xi, i = 1, ⋯, n, are assumed to be independent of each other, so the complete-data log-likelihood is

$$l_c(\theta, p) = \sum_{i=1}^{n} \sum_{j=1}^{m+1} X_{ij} \log \pi_{ij}(\theta, p). \qquad (6)$$

From the incomplete data {Δi, i = 1, ⋯, n}, we know that the ith outcome falls into one of the classes j with δij = 1, but, except in trivial cases, we do not know the specific class into which it actually falls. For example, in Figure 1, the event for patient 4 is known to have occurred in (s1, r1), (s2, r2) or (s3, r3), but exactly which one is unknown. Under this missing-data mechanism, the log-likelihood of the incomplete data is identical to (4).

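The E-step computes the conditional expectation of the complete-data log-likelihood (6). Given the data {Δi, Zi : i = 1, ⋯, n} and the current parameter estimates (θ(k), p(k)) at the kth step, for i = 1, ⋯, n and j = 1, ⋯, m + 1,

$$\hat X_{ij}^{(k)} = E\{X_{ij} \mid \Delta_i, Z_i; \theta^{(k)}, p^{(k)}\} = \frac{\delta_{ij}\, \pi_{ij}(\theta^{(k)}, p^{(k)})}{\sum_{l=1}^{m+1} \delta_{il}\, \pi_{il}(\theta^{(k)}, p^{(k)})}, \qquad (7)$$

where πij(θ, p) = P(Xij = 1 | Zi) is the class probability under (θ, p).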
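The E-step (7) amounts to renormalizing the class probabilities over the classes compatible with the observed interval. The Python sketch below computes the class probabilities πij(θ, p) from the partial sums of p, using 1 − exp(−x) = −expm1(−x) for accuracy, and then the conditional expectations; the function names are hypothetical.

```python
import math

def cell_probabilities(theta, p, z):
    """pi_j(theta, p | z), j = 1, ..., m + 1, for the complete-data multinomial classes."""
    c = math.exp(theta[0] + sum(b * zk for b, zk in zip(theta[1:], z)))
    cum = [0.0]                                   # partial sums p_0 + ... + p_j
    for pj in p:
        cum.append(cum[-1] + pj)
    # S(s_j-) - S(r_j+) = exp(-c * cum_{j-1}) * (1 - exp(-c * p_j)); -expm1(-x) = 1 - exp(-x)
    probs = [math.exp(-c * cum[j]) * -math.expm1(-c * p[j]) for j in range(len(p))]
    probs.append(math.exp(-c * cum[-1]))          # class m + 1: S(s_{m+1}), event-free
    return probs

def e_step(theta, p, delta, Z):
    """Conditional expectations of X_ij given the observed classes, as in the E-step (7)."""
    weights = []
    for d, z in zip(delta, Z):
        pi = cell_probabilities(theta, p, z)
        numer = [dj * pij for dj, pij in zip(d, pi)]
        total = sum(numer)
        weights.append([x / total for x in numer])
    return weights

# A subject whose event lies in [s_1, r_1] or [s_2, r_2], with Z = 0 and equal masses.
print(e_step([0.0, 0.0], [0.5, 0.5], [[1, 1, 0]], [[0.0]]))
```

The class probabilities sum to one over j = 1, ⋯, m + 1 because the terms telescope, so each row of weights is a probability vector supported on the classes with δij = 1.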

Then the expected log-likelihood at the kth step is

where s0 is set to be −∞ for notational convenience. Denote , which is independent of the parameters θ and p. The expected log-likelihood can be expressed as

| (8) |

The following proposition shows that the expected log-likelihood is a concave function with respect to both θ and pj > 0, j = 1, ⋯, m, which implies that any local maximizer of the function is also a global maximizer on the set defined by and θ in an open convex set.

Proposition 1

The function l(k)(θ, p) is a concave function for each pj > 0, j = 1, ⋯, m, and for each θ that belongs to an open convex set.

Proof

The function l(k)(θ, p) is a sum of functions of the forms (θ, p) ↦ −pj exp(θ′Z̃i) and (θ, p) ↦ log{1 − exp(−pj exp(θ′Z̃i))}, which are concave by noting that (x, y) ↦ −x exp(y) is concave and (x, y) ↦ log{1 − exp(−x exp(y))} is strictly concave for x > 0 and −∞ < y < ∞, respectively. A sum of concave functions is still a concave function.

The M-step then maximizes the expected log-likelihood function using the ECM algorithm, alternating between maximization with respect to θ for fixed p and maximization with respect to p for fixed θ.

3.2. Maximization Step for Covariate Coefficients θ

The number of covariates is often much smaller than n, so it is straightforward to maximize l(k)(θ, p) directly with respect to θ for a fixed p, for example, by a quasi-Newton method such as the Broyden-Fletcher-Goldfarb-Shanno (BFGS) algorithm (Boyd and Vandenberghe, 2004, Chapter 9). The first partial derivative of l(k)(θ, p) with respect to θ is

The second partial derivative of l(k)(θ, p) with respect to θ is

We may apply the inequality 1 − x < e^{−x}, which holds for every x ≠ 0, to confirm that the Hessian matrix is negative definite (equivalently, the negative Hessian is positive definite) whenever the covariate matrix is positive definite. For every i, j, the second term in the above display is always nonnegative when pj exp(θ′Z̃i) ≥ 1. When pj exp(θ′Z̃i) < 1, this term is still positive, since pj exp(θ′Z̃i) − 1 > −exp{−pj exp(θ′Z̃i)}. Proposition 1 also implies that, for each fixed p, l(k)(θ, p) is strictly concave when the covariate matrix is positive definite and θ is in a bounded convex set ℬ in ℝ^{d+1}. Strict concavity implies that a local maximizer is also the unique global maximizer.
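To see the sign argument numerically, the Python sketch below evaluates the second derivative, with respect to the linear predictor y = θ′Z̃i, of the term log{1 − exp(−pj e^y)} that appears in the proof of Proposition 1. With u = pj e^y the closed form is u(e^u − 1 − u e^u)/(e^u − 1)², which is negative because e^{−u} > 1 − u for u > 0; a central finite difference serves as a cross-check. This is our own numerical sanity check (hypothetical names), not part of the authors' algorithm.

```python
import math

def log_term(y, x):
    """log{1 - exp(-x e^y)} as a function of the linear predictor y, for a fixed mass x > 0."""
    return math.log(-math.expm1(-x * math.exp(y)))

def log_term_second(y, x):
    """Closed-form second derivative in y: u (e^u - 1 - u e^u) / (e^u - 1)^2 with u = x e^y."""
    u = x * math.exp(y)
    return u * (math.expm1(u) - u * math.exp(u)) / math.expm1(u) ** 2

def log_term_second_fd(y, x, h=1e-4):
    """Central finite-difference approximation, used only as a cross-check."""
    return (log_term(y + h, x) - 2.0 * log_term(y, x) + log_term(y - h, x)) / h ** 2

for x in (0.1, 1.0, 5.0):
    for y in (-2.0, 0.0, 1.0):
        print(x, y, log_term_second(y, x) < 0)   # every line ends with True
```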

3.3. Maximization Step for F: Optimality Conditions

The next step in the ECM algorithm is to maximize the expected log-likelihood function with respect to p conditional on θ. Although the maximization in p is numerically challenging, we note that the function p ↦ l(k)(θ, p) is strictly concave by Proposition 1 when not all pj ∈ [0, 1] are zero for j = 1, ⋯, m, and not all are zero for j = 1, ⋯, m. Thus, a local maximizer for p, if found, is also the unique global maximizer in the conditional maximization step. We first explore the optimality conditions for the maximization step for p using convex optimization techniques (Boyd and Vandenberghe, 2004, Section 5.5). To develop a numerically efficient and stable algorithm, we apply the primal-dual interior-point method for constrained optimization (Boyd and Vandenberghe, 2004, pages 609–613), which yields a self-consistency algorithm for p in the sense of Turnbull (1976) and keeps every point mass pj positive.

For notational convenience, denote for j = 1, ⋯, m,

where is defined in (7) and k is the index for the current EM iteration. Here both θ and k are fixed, so aj, exp(θ′Z̃i) and , are positive constants in the maximization step for F. Denote a = (a1, ⋯, am)′. Note that the last term in (8) is not a function of p, so the part of the expected log-likelihood that involves p is

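with the constraints p1 + ⋯ + pm = 1 and 0 ≤ pj ≤ 1, j = 1, ⋯, m. Because f(p) is strictly concave in p, maximizing f is a concave optimization problem with constraints:

$$\max_{p \in \mathbb{R}^m} f(p) \quad \text{subject to} \quad \sum_{j=1}^{m} p_j = 1, \quad 0 \le p_j \le 1, \quad j = 1, \dots, m. \qquad (9)$$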

The first-order partial derivative of f with respect to pj is given by

The second-order partial derivative of f with respect to pj is given by

The second-order partial derivative of f with respect to pj and pj′ is zero for j ≠ j′, j, j′ = 1, ⋯, m, i.e., ∂²f(p)/∂pj∂pj′ = 0. The Hessian matrix ∇²f is thus a diagonal matrix, which we exploit to derive a simple and efficient algorithm.

First we characterize the optimal solution of the concave problem through the Lagrangian function. Let ν and τj, j = 1, ⋯, m, be the Lagrange multipliers for the equality constraint and the inequality constraints pj ≥ 0, respectively. Let τ = (τ1, ⋯, τm)ᵀ and 1 = (1, ⋯, 1)ᵀ. The Lagrangian function with multipliers τ and ν for the constrained maximization problem (9) is Γ(p, τ, ν) = f(p) + τᵀp + ν(1 − 1ᵀp). For a concave maximization problem with these linear constraints, the necessary and sufficient conditions for a point p* to be optimal are the Karush-Kuhn-Tucker (KKT) conditions (Boyd and Vandenberghe, 2004, pages 243–244). Specifically, p* in [0, 1]^m, τ* in ℝ^m and ν* in ℝ form an optimal solution if and only if

$$\nabla f(p^*) + \tau^* - \nu^* \mathbf{1} = 0, \qquad \mathbf{1}^\top p^* = 1, \qquad \tau^* \ge 0, \qquad \tau_j^* p_j^* = 0, \quad j = 1, \dots, m. \qquad (10)$$

The maximization problem is now equivalent to solving the KKT conditions (10) for (p*, τ*, ν*). The nonlinear equations in (10) have a simple form because the Hessian matrix is diagonal. Eliminating τ* from these equations, the KKT conditions become

If , then ḟj(p*) = ∞, which is impossible by the second inequality in the above display. Thus, , and the maximizers p* and ν* must satisfy:

This implies that must be less than 1 because of the constraint . The KKT conditions are necessary and sufficient for a point to solve our constrained convex maximization problem. The sufficient condition suggests starting from initial values in the "interior", i.e., 0 < pj < 1 for each j. The solutions p* and ν* are found directly from the equations for the equality constraint, while the interior-point method enforces the inequality constraints . The necessary KKT conditions imply that the interior-point constraints continue to hold strictly at each ECM iteration.
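The KKT characterization (every pj* > 0 and ∂f/∂pj = ν* at the optimum) can be checked with a simple alternative solver, sketched below in Python. It is not the authors' primal-dual method. It assumes that f has the separable form f(p) = Σj[Σi wij log{1 − exp(−pj ci)} − aj pj] with positive constants wij (E-step weights), ci = exp(θ′Z̃i) and aj; that form is our reconstruction, consistent with the concave terms in the proof of Proposition 1 and with the positive constants listed above, and all names are hypothetical. Because each ∂f/∂pj decreases from +∞ as pj ↓ 0, pj(ν) is unique, and a bisection on ν enforces Σj pj = 1.

```python
import math

def fdot(pj, wj, c, aj):
    """d f / d p_j = sum_i w_ij c_i / (exp(p_j c_i) - 1) - a_j (assumed separable f)."""
    return sum(w * ci / math.expm1(pj * ci) for w, ci in zip(wj, c)) - aj

def p_given_nu(nu, wj, c, aj, tol=1e-14):
    """Unique p_j in (0, 1] with fdot(p_j) = nu (clipped at 1); fdot is decreasing in p_j."""
    if fdot(1.0, wj, c, aj) >= nu:
        return 1.0
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if fdot(mid, wj, c, aj) > nu:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def solve_kkt(W, c, a, tol=1e-12):
    """Find nu* with sum_j p_j(nu*) = 1; W[j] lists the weights w_ij over subjects i."""
    m = len(a)
    nu_lo = min(fdot(1.0, W[j], c, a[j]) for j in range(m))        # sum p_j(nu_lo) >= 1
    nu_hi = max(fdot(1.0 / m, W[j], c, a[j]) for j in range(m))    # sum p_j(nu_hi) <= 1
    while nu_hi - nu_lo > tol:
        nu = 0.5 * (nu_lo + nu_hi)
        if sum(p_given_nu(nu, W[j], c, a[j]) for j in range(m)) > 1.0:
            nu_lo = nu
        else:
            nu_hi = nu
    nu = 0.5 * (nu_lo + nu_hi)
    return [p_given_nu(nu, W[j], c, a[j]) for j in range(m)], nu

# Symmetric toy problem: by symmetry the optimum is p = (0.5, 0.5).
print(solve_kkt([[1.0], [1.0]], [1.0], [1.0, 1.0]))
```

Nested bisection is slow but transparent; its purpose here is only to exhibit the structure of the optimality conditions.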

3.4. Maximization Step for F: Self-Consistent Algorithm

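We now describe the details of the algorithm for the maximization step (9) using the primal-dual interior-point method, which avoids the use of a generic numerical optimizer. The key step is to apply Newton's method to solve the modified KKT conditions, in which the complementary slackness condition τj pj = 0 in (10) is relaxed to τj pj = η^{−1} for a scalar parameter η > 0 that controls the inequality constraints. Specifically, Newton's method linearizes the nonlinear equations by a first-order Taylor expansion and solves the following equations for the Newton step (Δp, Δτ, Δν):

$$\begin{pmatrix} \nabla^2 f(p) & I & -\mathbf{1} \\ \operatorname{diag}(\tau) & \operatorname{diag}(p) & 0 \\ \mathbf{1}^\top & 0 & 0 \end{pmatrix} \begin{pmatrix} \Delta p \\ \Delta \tau \\ \Delta \nu \end{pmatrix} = - \begin{pmatrix} \nabla f(p) + \tau - \nu \mathbf{1} \\ \operatorname{diag}(\tau)\, p - \eta^{-1} \mathbf{1} \\ \mathbf{1}^\top p - 1 \end{pmatrix}, \qquad (11)$$

where ∇²f(p) is the Hessian matrix, diag(p) and diag(τ) are the diagonal matrices with diagonal entries p1, ⋯, pm and τ1, ⋯, τm, respectively, I is the identity matrix, and 1 is the vector with all components equal to one (Boyd and Vandenberghe, 2004, page 610).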

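We can solve (11) explicitly for (Δp, Δτ, Δν) because the Hessian matrix ∇²f is diagonal. Writing ḟj(p) = ∂f(p)/∂pj and f̈j(p) = ∂²f(p)/∂pj², system (11) reads, for j = 1, ⋯, m,

$$\tau_j \Delta p_j + p_j \Delta \tau_j = \eta^{-1} - \tau_j p_j, \qquad (12)$$

$$\ddot f_j(p)\, \Delta p_j + \Delta \tau_j - \Delta \nu = -\{\dot f_j(p) + \tau_j - \nu\}, \qquad (13)$$

$$\sum_{j=1}^{m} \Delta p_j = 1 - \sum_{j=1}^{m} p_j. \qquad (14)$$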

Denote τj+ = τj + Δτj and ν+ = ν + Δν. Equation (12) gives

$$\tau_j^{+} = \frac{\eta^{-1} - \tau_j \Delta p_j}{p_j}. \qquad (15)$$

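Equation (13) gives f̈j(p)Δpj + τj+ − ν+ = −ḟj(p). Plugging (15) into this equation, we get

$$\{p_j \ddot f_j(p) - \tau_j\}\Delta p_j = p_j\{\nu^{+} - \dot f_j(p)\} - \eta^{-1}.$$

When τj − pj f̈j(p) ≠ 0, which always holds in the interior because f̈j(p) < 0 and τj, pj > 0, we have

$$\Delta p_j = \frac{p_j\{\dot f_j(p) - \nu^{+}\} + \eta^{-1}}{\tau_j - p_j \ddot f_j(p)}. \qquad (16)$$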

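Using equation (14),

$$\sum_{j=1}^{m} \frac{p_j\{\dot f_j(p) - \nu^{+}\} + \eta^{-1}}{\tau_j - p_j \ddot f_j(p)} = 1 - \sum_{j=1}^{m} p_j.$$

This gives

$$\nu^{+} = \frac{\sum_{j=1}^{m} \{p_j \dot f_j(p) + \eta^{-1}\}/\{\tau_j - p_j \ddot f_j(p)\} - \big(1 - \sum_{j=1}^{m} p_j\big)}{\sum_{j=1}^{m} p_j/\{\tau_j - p_j \ddot f_j(p)\}},$$

provided that the denominator is not zero. The Newton step Δpj is then calculated by (16). After calculating τj+ by (15), we obtain the Newton steps Δν = ν+ − ν and Δτj = τj+ − τj. Newton's method is an iterative procedure. Given the current iterate (p, τ, ν), the next iterate is determined by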

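$$p \leftarrow p + \psi \Delta p, \qquad \tau \leftarrow \tau + \psi \Delta \tau, \qquad \nu \leftarrow \nu + \psi \Delta \nu, \qquad (17)$$

where the step size ψ ∈ (0, 1] is calculated by a standard backtracking line search, which further enforces the inequality constraints (see the supplemental materials available online).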

Equations (17), similar in spirit to the self-consistency algorithm of Turnbull (1976), are explicit and simple self-updating equations. Equations (15) and (16), together with the closed-form expression for ν+, give accurate calculations of the Newton step (Δp, Δτ, Δν). Another computational advantage of the algorithm is that it provides a numerically stable solution to the maximization problem even when the dimension of p is large.
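The closed-form Newton step can be sketched in Python as follows. The sketch assumes the separable form f(p) = Σj[Σi wij log{1 − exp(−pj ci)} − aj pj] with positive constants wij, ci = exp(θ′Z̃i) and aj (our reconstruction; names hypothetical). It computes ν+ from the primal equation (14), Δpj from (16) and τj+ from (15), then chooses ψ by a fraction-to-boundary rule followed by residual-norm backtracking in the style of Boyd and Vandenberghe (2004, Section 11.7). The barrier update η = σm/(τᵀp), i.e., taking ξ̂ to be the average of τj pj, is an assumption on our part; the authors' exact line search is described in their supplemental materials.

```python
import math

def derivatives(p, W, c, a):
    """Gradient and diagonal Hessian of the assumed separable f(p)."""
    grad, hess = [], []
    for pj, wj, aj in zip(p, W, a):
        g, h = -aj, 0.0
        for w, ci in zip(wj, c):
            em1 = math.expm1(pj * ci)                        # exp(p_j c_i) - 1
            g += w * ci / em1
            h -= w * ci * ci * (em1 + 1.0) / em1 ** 2
        grad.append(g)
        hess.append(h)
    return grad, hess

def residual_norm(p, tau, nu, inv_eta, W, c, a):
    """Norm of the modified KKT residual (dual, centrality and primal parts)."""
    g, _ = derivatives(p, W, c, a)
    r = [gj + tj - nu for gj, tj in zip(g, tau)]
    r += [tj * pj - inv_eta for tj, pj in zip(tau, p)]
    r.append(sum(p) - 1.0)
    return math.sqrt(sum(x * x for x in r))

def primal_dual_m_step(p, W, c, a, sigma=10.0, tol=1e-10, max_iter=200):
    """Maximize f(p) subject to sum(p) = 1, p > 0, starting from an interior p."""
    m = len(p)
    tau, nu = [1.0] * m, 0.0
    for _ in range(max_iter):
        inv_eta = sum(t * q for t, q in zip(tau, p)) / (sigma * m)   # 1/eta (assumed rule)
        g, h = derivatives(p, W, c, a)
        d = [t - q * hj for t, q, hj in zip(tau, p, h)]              # tau_j - p_j f''_j > 0
        nu_plus = (sum((q * gj + inv_eta) / dj for q, gj, dj in zip(p, g, d))
                   - (1.0 - sum(p))) / sum(q / dj for q, dj in zip(p, d))
        dp = [(q * (gj - nu_plus) + inv_eta) / dj for q, gj, dj in zip(p, g, d)]   # (16)
        dtau = [(inv_eta - t * dq) / q - t for t, dq, q in zip(tau, dp, p)]       # (15)
        dnu = nu_plus - nu
        psi = 1.0                       # fraction-to-boundary: keep p and tau positive
        for x, dx in list(zip(p, dp)) + list(zip(tau, dtau)):
            if dx < 0:
                psi = min(psi, -0.99 * x / dx)
        r0 = residual_norm(p, tau, nu, inv_eta, W, c, a)
        for _ in range(60):             # backtrack until the residual norm decreases
            p_new = [x + psi * dx for x, dx in zip(p, dp)]
            tau_new = [x + psi * dx for x, dx in zip(tau, dtau)]
            nu_new = nu + psi * dnu
            if residual_norm(p_new, tau_new, nu_new, inv_eta, W, c, a) <= (1 - 0.01 * psi) * r0:
                break
            psi *= 0.5
        p, tau, nu = p_new, tau_new, nu_new
        g, _ = derivatives(p, W, c, a)
        if (max(abs(gj + tj - nu) for gj, tj in zip(g, tau)) < tol
                and abs(sum(p) - 1.0) < tol
                and sum(t * q for t, q in zip(tau, p)) < tol):
            break
    return p, tau, nu

# Symmetric toy problem started away from the optimum p = (0.5, 0.5).
print(primal_dual_m_step([0.3, 0.7], [[1.0], [1.0]], [1.0], [1.0, 1.0])[0])
```

Every quantity in the loop is an explicit per-coordinate formula, which is the practical payoff of the diagonal Hessian.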

3.5. Summary of the Iterative Algorithm for MLE

We now summarize the algorithm that combines the ECM algorithm with the primal-dual interior-point method for obtaining the MLE for the cure model with interval-censored data:

1. Take a random initial value θ(0) and a random initial value p(0) that satisfies pj(0) > 0, j = 1, ⋯, m, and p1(0) + ⋯ + pm(0) = 1.

2. CM1: obtain θ(k+1) by maximizing l(k)(θ, p(k)) with respect to θ.

3. CM2 (obtain p(k+1) by the primal-dual interior-point method): given θ(k+1), define and update the constants in f, such as aj, for j = 1, ⋯, m. Start with the initial value p̃ = p(k); choose σ > 1 and τ = (τ1, ⋯, τm) with τj > 0. Repeat the following to update p̃ until convergence, which gives p(k+1):

   - Determine η = σ/ξ̂.
   - Evaluate the Newton step (Δp, Δτ, Δν) at the current p̃.
   - Update p̃, τ and ν by (17) with the standard backtracking line search described in the supplemental materials available online.

4. Repeat steps 2–3 until ‖θ(k+1) − θ(k)‖ + ‖p(k+1) − p(k)‖ < ε1 and the expected log-likelihood converges, for a prespecified small ε1 > 0.

Note that convergence of the algorithm follows from the general results for constrained ECM algorithms in Meng and Rubin (1993) and Nettleton (1999); see also Little and Rubin (2002, Theorem 8.1, page 173).

4. HELLINGER CONSISTENCY OF MLE

The technical challenge in studying the strong consistency of the MLE (θ̂n, F̂n) for the proposed cure model comes from the interval censoring, for which the classical approach via the Kullback-Leibler information cannot easily be applied. Instead, we prove the strong consistency of (θ̂n, F̂n) for the true values θ0 and F0 under the Hellinger distance, which provides global consistency. The Hellinger distance is proportional to the L2 distance between the square roots of two probability densities q1 and q2 with respect to a dominating measure ν,

$$h(q_1, q_2) = \Big\{\frac{1}{2}\int \big(q_1^{1/2} - q_2^{1/2}\big)^2 \, d\nu\Big\}^{1/2},$$

which does not depend on the choice of the dominating measure ν. It is a true distance that satisfies h(q1, q2) ≤ 1, with h(q1, q2) = 0 if and only if q1 = q2 a.e. ν. For nonparametric estimation with interval-censored data, van der Vaart and Wellner (2000) proved the strong consistency of the MLE under the Hellinger distance. We extend their method to the semiparametric MLE under the cure model for a general case of interval-censored data; the proof is largely based on modern empirical process theory (van der Vaart and Wellner, 1996, Chapter 2.4).
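For discrete distributions, where the dominating measure can be taken to be counting measure, the Hellinger distance reduces to a finite sum. The Python sketch below uses the normalization with the factor 1/2, under which h ≤ 1 (we assume this convention; some authors omit the 1/2). The function name is hypothetical.

```python
import math

def hellinger(q1, q2):
    """Hellinger distance between two probability vectors on a common finite support:
    h^2 = (1/2) * sum_k (sqrt(q1_k) - sqrt(q2_k))^2, so that 0 <= h <= 1."""
    return math.sqrt(0.5 * sum((math.sqrt(x) - math.sqrt(y)) ** 2 for x, y in zip(q1, q2)))

print(hellinger([0.5, 0.5], [0.9, 0.1]))   # strictly between 0 and 1
```

The distance equals 1 exactly when the two distributions have disjoint supports, which is the upper bound quoted in the text.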

We first describe the setup of the general case of interval-censored data, which is similar to those described by Schick and Yu (2000) and van der Vaart and Wellner (2000) but modified to allow for the possibility of cure. This setup is necessary to account for the randomness of the inspection times, but it leads to the same likelihood function (2), for which we have developed a computationally efficient algorithm for the MLE (θ̂n, F̂n).

Let K be a positive random integer that denotes the number of inspection times for one person, and let YK = {YK,1, ⋯, YK,K} denote the inspection times for the event of interest, where YK,1 < ⋯ < YK,K. In practice, it is natural to assume E0(K) < ∞. The random observation times for one person form a triangular array Y = {Yk,j : j = 1, ⋯, k, k = 1, 2, ⋯}, where k is a realization of K. Let Yk = (Yk,1, ⋯, Yk,k) be the kth row of the array Y, with realization yk = (yk,1, ⋯, yk,k).

The event time T is only known to have occurred in one of the intervals [YK,j−1, YK,j), j = 1, ⋯, K, or [YK,K, YK,K+1], where YK,0 ≡ 0 and YK,K+1 ≡ ∞. We denote Λk = (Λk,1, ⋯, Λk,k+1), where Λk,j = 1[Yk,j−1,Yk,j)(T) for j = 1, ⋯, k, and Λk,k+1 = 1[Yk,k,Yk,k+1](T), with the realization denoted by λk = (λk,1, ⋯, λk,k+1).

We now formulate the likelihood under this setup. Assume that, conditional on Z, (K, Y) is independent of the event time T. We also make the noninformative inspection assumption that the distribution of (K, YK, Z) does not depend on the event time T or on the parameters of interest θ and F. Given Z, the conditional survival function of T follows cure model (1). Conditional on (K, YK) and Z, the random vector Λk has a multinomial distribution, i.e., (Λk | K, YK, Z) ~ MultinomialK+1(1, ΔSK(Z)), where the vector

$$\Delta S_K(Z) = \big(S(Y_{K,0} \mid Z) - S(Y_{K,1} \mid Z), \; \dots, \; S(Y_{K,K-1} \mid Z) - S(Y_{K,K} \mid Z), \; S(Y_{K,K} \mid Z)\big)^\top$$

collects the probabilities of the K + 1 intervals, with S(t | Z) = exp{−exp(θ′Z̃)F(t)}.

Let V = (K, Λk, YK, Z), with realization υ = (k, λk, yk, z). Denote z̃ᵀ = (1, zᵀ). The distribution of V has a density dPV/dµ with respect to a dominating measure µ determined by the joint distribution of (K, YK, Z), which does not depend on the parameters of interest θ and F by the noninformative inspection assumption. A version of the density of V, which is the likelihood function for one observation υ that we will use, is thus given by

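$$p_{\theta,F}(\upsilon) = \prod_{j=1}^{k+1} \{\Delta S_{k,j}(z)\}^{\lambda_{k,j}}, \qquad (18)$$

where ΔSk,j(z) denotes the jth component of ΔSk(z) evaluated at the inspection times yk.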
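A small Python sketch of the cell probabilities ΔSK(Z) and of the log of the one-observation density (18) under model (1) follows. The baseline F is passed as a Python callable (a hypothetical interface of our own), S(t | z) = exp{−exp(θ′z̃)F(t)}, and YK,0 = 0, so S(YK,0 | z) = 1 whenever F(0) = 0.

```python
import math

def interval_probabilities(y, theta, z, F):
    """Cell probabilities Delta S_K(z) for inspection times y_1 < ... < y_k, with
    Y_{K,0} = 0 and Y_{K,K+1} = inf, under S(t | z) = exp(-exp(theta' z~) F(t))."""
    c = math.exp(theta[0] + sum(b * zk for b, zk in zip(theta[1:], z)))
    S = [math.exp(-c * F(t)) for t in [0.0] + list(y)]   # S(y_0), S(y_1), ..., S(y_k)
    probs = [S[j - 1] - S[j] for j in range(1, len(S))]
    probs.append(S[-1])        # event after the last inspection, including cure
    return probs

def log_density(lam, y, theta, z, F):
    """log p_{theta,F}(v) = sum_j lambda_j log(Delta S_j); lam is the 0/1 indicator vector."""
    probs = interval_probabilities(y, theta, z, F)
    return sum(math.log(q) for l, q in zip(lam, probs) if l)

# Two inspections at t = 1 and t = 2; the subject is still event-free at t = 2.
print(log_density([0, 0, 1], [1.0, 2.0], [0.0, 0.0], [0.0], lambda t: 1.0 - math.exp(-t)))
```

The probabilities telescope to one, and only the single observed cell enters the log-density because λk is a 0/1 indicator vector.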

The data are n i.i.d. copies V1, ⋯, Vn of V, where Vi = (Ki, ΛKi, YKi, Zi), i = 1, ⋯, n. For a measurable real function g on the sample space 𝒱, define ℙn g = n^{−1}{g(V1) + ⋯ + g(Vn)}, the empirical distribution applied to g. The log-likelihood function for (θ, F) of Ṽ = (V1, ⋯, Vn) is

| (19) |

where

The log-likelihood function (19) is the same as the logarithm of the original likelihood function (2) by noting that . Therefore, Li = YKi,j−1 and Ri = YKi,j if YKi,j−1 ≤ Ti < YKi,j, and if Ti ≥ YKi,Ki, then Li = YKi,Ki and Ri = ∞. This setup is flexible enough to allow a "data-generating" mechanism for the interval-censored data [Li, Ri] in which observation stops after a random number Ki of inspections. There is no need to make specific distributional assumptions about the inspection process. This setup is also convenient for the technical proof of Hellinger consistency.

We will work with the log-likelihood function (19) to prove the Hellinger consistency, and need the following regularity conditions:

C1. Parameter θ is restricted in a compact set Θ in ℝd+1, and the baseline distribution function F is in the set ℬ of all sub-distribution functions on (0, ∞).

C2. E0(‖Z‖2) < ∞ for the Euclidean norm ‖ · ‖2 in ℝ^d. For the true parameter .

C3. The true parameter function F0 is strictly increasing and satisfies

Condition C3 is a technical condition that prevents E0(1/pθ0,F0) from diverging to infinity. It regulates the variability of the inspection process {YK,1, ⋯, YK,K}. The following theorem summarizes the strong consistency of (θ̂n, F̂n). Details of the technical proofs are provided in the Supplemental Materials.

Theorem 1

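Under the regularity conditions C1–C3, the maximum likelihood estimators θ̂n and F̂n satisfy

$$h\big(p_{\hat\theta_n,\hat F_n},\, p_{\theta_0,F_0}\big) \to 0 \quad \text{almost surely as } n \to \infty,$$

where h denotes the Hellinger distance between densities with respect to µ.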
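To make the metric concrete, the sketch below computes a Hellinger distance for one piece of the problem: the distribution of the censoring cell that contains T, for a single subject with a fixed inspection schedule y1 < ⋯ < yk and a fixed linear predictor η = θ′z̃. It is only an illustration; Theorem 1 concerns the distance between the full densities pθ,F of V with respect to µ. It assumes F(0) = 0, uses the convention h²(p, q) = ½ ∑ (√p − √q)² (some authors drop the factor ½), and the helper names `cell_probs` and `hellinger` are hypothetical.

```python
import numpy as np

def cell_probs(y, eta, F):
    """Probabilities that T falls in [0, y1), [y1, y2), ..., [y_{k-1}, y_k), [y_k, inf]
    under S(t) = exp(-exp(eta) F(t)); the last cell also carries the cure mass.
    Assumes F(0) = 0, so the cell probabilities sum to S(0) = 1."""
    y = np.concatenate([[0.0], np.asarray(y, dtype=float)])
    S = np.exp(-np.exp(eta) * F(y))
    return np.append(S[:-1] - S[1:], S[-1])

def hellinger(p, q):
    """Hellinger distance with h^2 = 0.5 * sum (sqrt p - sqrt q)^2, so 0 <= h <= 1."""
    return float(np.sqrt(0.5 * np.sum((np.sqrt(p) - np.sqrt(q)) ** 2)))

# Usage: compare the true linear predictor eta = 0 with a perturbed value.
F0 = lambda t: 1.0 - np.exp(-t)
y = [0.5, 1.0, 1.5, 2.0]
print(hellinger(cell_probs(y, 0.0, F0), cell_probs(y, 0.5, F0)))
```

The distance is zero only when the two parameter values induce the same cell probabilities, which is why Hellinger consistency is a natural notion when T itself is never observed exactly.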

5. NUMERICAL STUDIES

5.1. Simulation Study

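To examine the empirical properties of the proposed method, we perform a simulation study. Given Z, the event time T is generated under the cure model (1),

$$S(t \mid Z) = \exp\{-\exp(\alpha_0 + \beta_0 Z)\, F_0(t)\},$$

where α0 and β0 are the true intercept and covariate coefficient.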

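There is a connection between the cure model (1) and the mixture cure model, as pointed out by Chen et al. (1999). The overall survival function can be written in the form of the mixture cure model:

$$S(t \mid Z) = \pi(Z) + \{1 - \pi(Z)\}\, S^*(t \mid Z),$$

where π(Z) = exp{−exp(θ′Z̃)} is the covariate-specific probability of cure, and

$$S^*(t \mid Z) = \frac{\exp\{-\exp(\theta'\tilde Z)\, F(t)\} - \pi(Z)}{1 - \pi(Z)}.$$

When F is a proper distribution function, as in this simulation, S*(t | Z) decreases from 1 at t = 0 to 0 as t → ∞, so the conditional survival function S* is a proper survival function.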
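The mixture representation can be checked numerically. The sketch below assumes F is a proper distribution function (F(0) = 0 and F(t) → 1 as t → ∞, as with the simulation's F0(t) = 1 − exp(−t)), so that π(Z) = exp{−exp(θ′Z̃)} is the cure probability and S*(t | Z) = {S(t | Z) − π(Z)}/{1 − π(Z)}. The function names `cure_prob` and `proper_survival` are hypothetical. With η = θ′Z̃ = α0, it reproduces the baseline cure rates exp(−1) ≈ 0.37 and exp(−e) ≈ 0.07 quoted for α0 = 0.0 and 1.0.

```python
import numpy as np

def cure_prob(eta):
    """pi(Z) = exp(-exp(eta)), the cure probability when F(inf) = 1 (assumed)."""
    return np.exp(-np.exp(eta))

def proper_survival(t, eta, F):
    """S*(t | Z) = [S(t | Z) - pi(Z)] / [1 - pi(Z)], survival of the uncured subgroup."""
    pi = cure_prob(eta)
    S = np.exp(-np.exp(eta) * F(t))
    return (S - pi) / (1.0 - pi)

# Check the identity S = pi + (1 - pi) S* on a grid of time points.
F0 = lambda t: 1.0 - np.exp(-t)
t = np.linspace(0.0, 10.0, 5)
eta = 0.3
lhs = np.exp(-np.exp(eta) * F0(t))
rhs = cure_prob(eta) + (1.0 - cure_prob(eta)) * proper_survival(t, eta, F0)
print(np.allclose(lhs, rhs), round(float(cure_prob(0.0)), 2), round(float(cure_prob(1.0)), 2))
```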

To simulate T under (1), we consider a setting with a single covariate Z, uniformly distributed on (−1.0, 1.0). For a given Z, we first generate a Bernoulli random variate with success probability π(Z). If it is 1, the subject is cured and T = ∞. If it is 0, we generate a random variate T* from the proper survival function S*(t | Z), using the accept-reject method (Devroye, 1986, pp. 40–65). For simplicity, we choose the baseline distribution function F0(t) = 1 − exp(−t).
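A minimal sketch of this two-step generator follows. One deliberate substitution: instead of the accept-reject method, it draws T* by inverting S*(t | Z) in closed form, which is possible here because F0(t) = 1 − exp(−t) has the explicit inverse F0⁻¹(s) = −log(1 − s). Solving S*(t | Z) = U for U uniform on (0, 1] gives F0(T*) = −log{π(Z) + (1 − π(Z))U}/exp(α + βZ), which lies in [0, 1). The function name and argument layout are hypothetical.

```python
import numpy as np

def simulate_event_times(z, alpha, beta, rng):
    """Draw T under S(t | z) = exp(-exp(alpha + beta z) F0(t)), F0(t) = 1 - exp(-t).

    Step 1: cure indicator ~ Bernoulli(pi(z)); cured subjects get T = inf.
    Step 2: uncured subjects draw T* from S*(t | z) by inverse transform
            (a swap for the accept-reject method used in the paper).
    """
    z = np.asarray(z, dtype=float)
    eta = alpha + beta * z
    pi = np.exp(-np.exp(eta))                      # covariate-specific cure probability
    cured = rng.random(z.shape) < pi
    u = 1.0 - rng.random(z.shape)                  # U uniform on (0, 1]
    # Solve S*(t) = u  <=>  exp(-exp(eta) F0(t)) = pi + (1 - pi) u
    F_t = -np.log(pi + (1.0 - pi) * u) / np.exp(eta)
    t_star = -np.log1p(-F_t)                       # F0^{-1}(s) = -log(1 - s)
    return np.where(cured, np.inf, t_star)

# Usage: one simulated sample of size 200 with alpha0 = 0.0 and beta0 = 0.5.
rng = np.random.default_rng(2024)
z = rng.uniform(-1.0, 1.0, size=200)
T = simulate_event_times(z, alpha=0.0, beta=0.5, rng=rng)
```

Because the inverse is explicit, no proposal distribution or envelope constant is needed; accept-reject remains the more general option when F0 cannot be inverted.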

The sample size n is 50, 100 or 200. The true value β0 is 0.0, 0.5 or 1.0. When β0 = 0.0, the probability of cure is exp(−exp(α0)); α0 is set to either 0.0 or 1.0, corresponding to a baseline cure rate of 0.37 or 0.07, respectively. For small sample sizes, care has been taken to ensure that each simulated data set contains at least one cured patient. The examination times are generated independently of Z and T from a homogeneous Poisson process: the inter-examination times are i.i.d. exponential with mean ζ = 1/5 year or ζ = 1/2 year, corresponding to short or long inter-examination times, respectively. The study length is 3 years with short inter-examination times and 5 years with long inter-examination times. Each scenario is replicated 1,000 times.
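The inspection scheme can be sketched in the same hedged spirit. The hypothetical helper below assumes a baseline visit at time 0, draws exponential inter-examination times with mean ζ until the study length τ is exceeded, and returns the interval [L, R) with L ≤ T < R. When no examination occurs after T (including cured subjects with T = ∞), it returns R = ∞, matching the convention Li = YKi,Ki and Ri = ∞ used for the log-likelihood (19).

```python
import numpy as np

def inspection_interval(t, zeta, tau, rng):
    """Return (L, R) with L <= t < R from Poisson-process inspections on [0, tau].

    Inter-examination times are i.i.d. exponential with mean zeta; inspections stop
    once the study length tau is exceeded. If no inspection falls after t, R = inf.
    """
    visits = [0.0]                                  # baseline visit at time 0 (assumed)
    while True:
        nxt = visits[-1] + rng.exponential(zeta)    # Generator.exponential takes the mean
        if nxt > tau:
            break
        visits.append(nxt)
    visits = np.asarray(visits)
    j = np.searchsorted(visits, t, side="right")    # index of first visit strictly after t
    L = visits[j - 1]                               # last visit at or before t
    R = visits[j] if j < len(visits) else np.inf
    return L, R

# Usage: short inter-examination scenario (zeta = 0.2 year, 3-year study).
rng = np.random.default_rng(1)
print(inspection_interval(1.7, zeta=0.2, tau=3.0, rng=rng))
```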

The results of the Monte Carlo simulations are summarized in Table 1, which reports the bias and the empirical mean squared error (MSE) of each estimator; the average standard errors of the MSEs are given in the table note. The biases of α̂ and β̂ are small even with n = 50, indicating that the algorithm performs well in small samples. The empirical standard errors and MSEs decrease as the sample size increases, with the MSE roughly halving each time n doubles. The MSEs under the long inter-examination time are slightly higher than those under the short inter-examination time.

Table 1.

Summary of the simulation results: bias and mean squared error (MSE) of the MLEs α̂ and β̂, where ζ is the mean inter-examination time (years), n is the sample size, and α0 and β0 are the true values.

| n | α0 | β0 | α̂ Bias (ζ = 0.2) | α̂ MSE (ζ = 0.2) | β̂ Bias (ζ = 0.2) | β̂ MSE (ζ = 0.2) | α̂ Bias (ζ = 0.5) | α̂ MSE (ζ = 0.5) | β̂ Bias (ζ = 0.5) | β̂ MSE (ζ = 0.5) |
|---|---|---|---|---|---|---|---|---|---|---|
| 50 | 0.0 | 0.0 | −0.007 | 0.043 | 0.010 | 0.113 | 0.023 | 0.042 | −0.012 | 0.122 |
| 50 | 0.0 | 0.5 | 0.002 | 0.040 | 0.032 | 0.120 | 0.036 | 0.043 | 0.044 | 0.133 |
| 50 | 0.0 | 1.0 | −0.003 | 0.043 | 0.042 | 0.136 | 0.052 | 0.055 | 0.133 | 0.174 |
| 50 | 1.0 | 0.0 | 0.031 | 0.040 | 0.004 | 0.073 | 0.087 | 0.046 | −0.002 | 0.125 |
| 50 | 1.0 | 0.5 | 0.042 | 0.042 | 0.021 | 0.092 | 0.110 | 0.055 | 0.113 | 0.152 |
| 50 | 1.0 | 1.0 | 0.030 | 0.048 | 0.037 | 0.093 | 0.116 | 0.069 | 0.165 | 0.151 |
| 100 | 0.0 | 0.0 | −0.009 | 0.019 | 0.011 | 0.053 | 0.023 | 0.019 | −0.014 | 0.058 |
| 100 | 0.0 | 0.5 | 0.005 | 0.020 | 0.011 | 0.057 | 0.027 | 0.020 | 0.038 | 0.063 |
| 100 | 0.0 | 1.0 | 0.004 | 0.019 | 0.021 | 0.056 | 0.041 | 0.026 | 0.103 | 0.082 |
| 100 | 1.0 | 0.0 | 0.018 | 0.022 | −0.007 | 0.034 | 0.067 | 0.023 | −0.012 | 0.058 |
| 100 | 1.0 | 0.5 | 0.021 | 0.019 | 0.012 | 0.038 | 0.078 | 0.026 | 0.089 | 0.086 |
| 100 | 1.0 | 1.0 | 0.012 | 0.022 | 0.020 | 0.043 | 0.070 | 0.024 | 0.098 | 0.056 |
| 200 | 0.0 | 0.0 | −0.006 | 0.009 | 0.004 | 0.025 | 0.024 | 0.010 | −0.003 | 0.028 |
| 200 | 0.0 | 0.5 | 0.002 | 0.009 | 0.007 | 0.027 | 0.026 | 0.010 | 0.034 | 0.032 |
| 200 | 0.0 | 1.0 | 0.001 | 0.011 | 0.011 | 0.028 | 0.042 | 0.013 | 0.085 | 0.045 |
| 200 | 1.0 | 0.0 | 0.003 | 0.010 | 0.002 | 0.017 | 0.054 | 0.012 | −0.028 | 0.026 |
| 200 | 1.0 | 0.5 | 0.008 | 0.009 | 0.006 | 0.018 | 0.066 | 0.014 | 0.074 | 0.057 |
| 200 | 1.0 | 1.0 | 0.005 | 0.010 | 0.009 | 0.020 | 0.053 | 0.011 | 0.061 | 0.023 |

NOTE: MSE was the empirical mean-squared error. The average standard errors of MSE for (α̂, β̂) were (0.036, 0.087) when ζ = 0.2, and were (0.048, 0.128) when ζ = 0.5.
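A quick arithmetic check on Table 1 supports the statement that the MSEs shrink with n. If the estimators of θ converge at the usual n^(−1/2) rate, as conjectured in Section 6, the MSE should scale roughly like c/n, so quadrupling n from 50 to 200 should cut it by a factor of about 4. The sketch below only transcribes the ζ = 0.2 MSE columns of Table 1 and computes those ratios; it is an informal check, not a test of the rate.

```python
import numpy as np

# MSE columns for zeta = 0.2 transcribed from Table 1; columns follow
# (alpha0, beta0) = (0,0), (0,0.5), (0,1), (1,0), (1,0.5), (1,1).
n = np.array([50, 100, 200])
mse_alpha = np.array([
    [0.043, 0.040, 0.043, 0.040, 0.042, 0.048],   # n = 50
    [0.019, 0.020, 0.019, 0.022, 0.019, 0.022],   # n = 100
    [0.009, 0.009, 0.011, 0.010, 0.009, 0.010],   # n = 200
])
mse_beta = np.array([
    [0.113, 0.120, 0.136, 0.073, 0.092, 0.093],
    [0.053, 0.057, 0.056, 0.034, 0.038, 0.043],
    [0.025, 0.027, 0.028, 0.017, 0.018, 0.020],
])

# If MSE ~ c / n, then n * MSE is roughly constant and MSE(50) / MSE(200) is about 4.
scaled_alpha = n[:, None] * mse_alpha
ratio_alpha = mse_alpha[0] / mse_alpha[2]
ratio_beta = mse_beta[0] / mse_beta[2]
print(np.round(scaled_alpha, 2))
print(np.round(ratio_alpha, 2), np.round(ratio_beta, 2))
```

Across the twelve columns, the ratios range from about 3.9 to 5.1.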

5.2. Data Analysis

The proposed method was motivated by a medical study of patients with clinically localized prostate cancer who were monitored for biochemical recurrence after undergoing radical prostatectomy. A widely used surgical procedure, radical prostatectomy removes the entire prostate gland and surrounding tissue to eliminate all tumor cells, leading to a very high cure rate for early prostate cancer (Kufe et al., 2003, Chapter 111). Although no cells should remain to produce prostate-specific antigen (PSA), clinical studies have shown that about 20% to 30% of these patients still experience biochemical recurrence, defined as a detectable serum PSA level (≥ 0.1 ng/mL) after surgery. Clinical studies have also shown that biochemical recurrence often occurs within 3 years after surgery, much earlier than clinical recurrence (Dillioglugil et al., 1997; Pound et al., 1999). A patient who remains free of detectable serum PSA three years after surgery is generally considered cured of biochemical recurrence. It is therefore important to know which preoperative clinical characteristics are prognostic for the cure rate of biochemical recurrence and for how soon after surgery recurrence is likely to occur.

For this purpose, we obtained clinical data on 260 men who underwent radical prostatectomy for clinically localized prostate cancer, performed by a single surgeon at an academic hospital in Houston, Texas. The mean age at surgery was 59.5 years (median 59). After surgery, these men were followed up approximately every 3 months with serum PSA examinations. All patients were alive at their last follow-up. The longest follow-up duration, sₘ₊₁, was 4.1 years, and all biochemical recurrences were observed within rₘ = 3.3 years after surgery, indicating that follow-up was adequate for analyzing the cure rate of biochemical recurrence. For a patient with recurrence, the exact recurrence time was known only to lie between the previous examination and the examination at which recurrence was detected. The potential prognostic factors for biochemical recurrence were pathologic stage at surgery (T2, clinically organ-confined; T3, locally advanced), preoperative PSA level, Gleason score, and positive surgical margins (PSM). The preoperative PSA level was log-transformed. The Gleason score grades the prostate tumor cells histologically on a scale from 2 to 10 (higher is worse); we classified it into two categories, ≤ 7 and > 7 (the baseline). Positive surgical margins, defined as the presence of cancer cells at the inked margin of a resected tumor specimen, may indicate incomplete excision of the tumor, but the medical literature is not always clear on whether PSM is associated with biochemical recurrence. Of note, being cured of biochemical recurrence differs from being cured of prostate cancer, which may be confounded with over-diagnosis of prostate cancer.

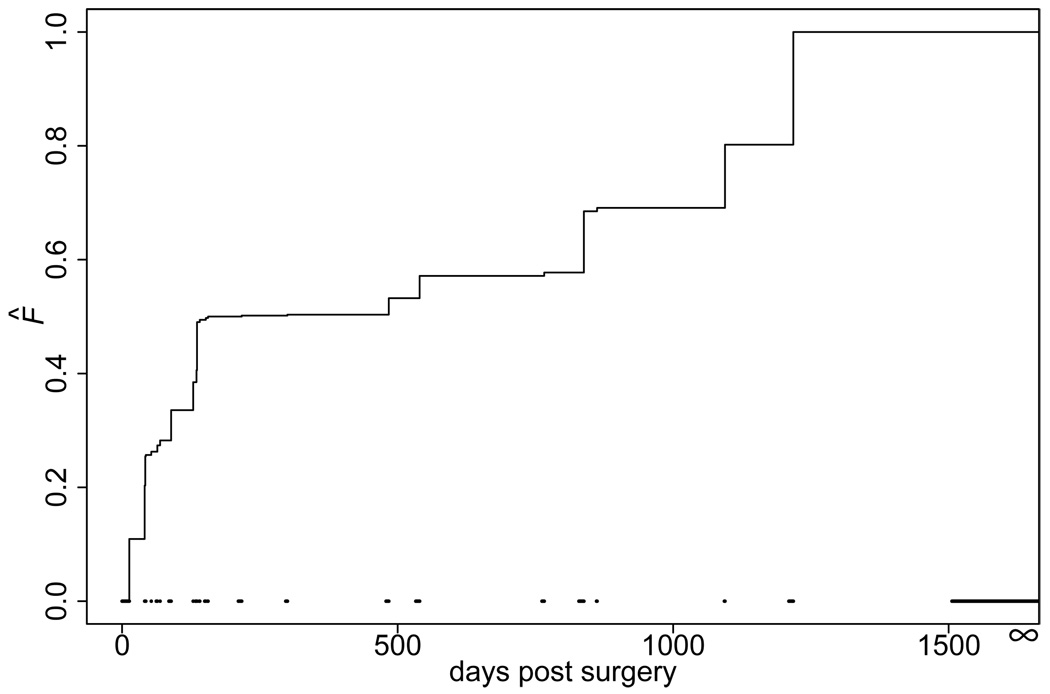

The estimated covariate coefficients are shown in Table 2, and the estimated baseline function F̂ is plotted in Figure 2. For computational convenience, we used the nonparametric bootstrap with 1,000 bootstrap replicates to estimate the standard errors. The estimates for the intercept, stage T2, and Gleason score ≤ 7 were not significantly different from zero, but all three were negative; together they imply an estimated cure rate of about 66.3% for patients with a stage T2 tumor, a preoperative PSA level of 1.0 (log PSA = 0), a Gleason score ≤ 7, and a negative surgical margin. Only the preoperative PSA level was significantly associated with cure of biochemical recurrence at the 0.05 level. Its positive estimated coefficient indicates that a lower preoperative PSA level is associated with a higher probability of cure.
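The bootstrap step can be sketched generically. The key point is that whole subjects, that is, each record (Li, Ri, Zi), are resampled with replacement and the full model is refitted on every resample. In the sketch, `estimator` is a hypothetical placeholder for the semiparametric ECM fit, which is not reproduced here; any function mapping a list of subject records to a parameter vector can be plugged in.

```python
import numpy as np

def bootstrap_se(data, estimator, n_boot=1000, seed=0):
    """Nonparametric bootstrap standard errors (generic sketch).

    data      : sequence of per-subject records, resampled with replacement
    estimator : hypothetical callable mapping a list of records to a 1-D estimate
    """
    rng = np.random.default_rng(seed)
    n = len(data)
    estimates = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)            # resample subjects with replacement
        estimates.append(estimator([data[i] for i in idx]))
    # Standard deviation of the bootstrap replicates, one value per parameter
    return np.std(np.asarray(estimates), axis=0, ddof=1)
```

For instance, with `estimator` returning the sample mean, the result should be close to the familiar s/√n.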

Table 2.

Estimates and standard error (se) for analyzing the biochemical recurrence study.

| | Intercept | Stage T2 | log PSA level | Gleason ≤ 7 | PSM |
|---|---|---|---|---|---|
| estimates | −0.34 | −0.24 | 0.62 | −0.31 | 0.06 |
| bootstrap se | 0.41 | 0.74 | 0.29 | 0.33 | 0.37 |

PSM: Positive surgical margins.
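The figures quoted in the text can be recomputed from Table 2. The sketch below assumes the covariates enter the linear predictor θ′Z̃ in the column order of Table 2, with Stage T2, Gleason ≤ 7 and PSM coded as 0/1 indicators (the base of the logarithm does not matter for the profile used here, since log 1.0 = 0). Under that coding, only log PSA has a Wald statistic exceeding 1.96 in absolute value, and the cure probability exp{−exp(θ̂′z̃)} for a stage T2 tumor, PSA level 1.0, Gleason ≤ 7 and a negative margin is about 0.663.

```python
import numpy as np

# Estimates and bootstrap standard errors transcribed from Table 2.
names = ["Intercept", "Stage T2", "log PSA level", "Gleason <= 7", "PSM"]
est = np.array([-0.34, -0.24, 0.62, -0.31, 0.06])
se = np.array([0.41, 0.74, 0.29, 0.33, 0.37])

# Wald statistics: |z| > 1.96 corresponds to significance at the 0.05 level.
z = est / se
for name, zi in zip(names, z):
    print(f"{name:15s} z = {zi:5.2f}")

# Assumed covariate coding: (1, stage T2, log PSA, Gleason <= 7, PSM) for a stage T2
# tumor, PSA = 1.0 (log PSA = 0), Gleason <= 7 and a negative surgical margin.
x = np.array([1.0, 1.0, np.log(1.0), 1.0, 0.0])
eta = x @ est                       # -0.34 - 0.24 - 0.31 = -0.89
cure_rate = np.exp(-np.exp(eta))    # pi = exp(-exp(theta' z~)), assuming F(inf) = 1
print(round(float(cure_rate), 3))   # 0.663
```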

Figure 2.

NPMLE F̂ of the baseline function F. The dashed lines at the bottom mark the non-overlapping intervals (sj, rj] for j = 1, ⋯, m + 1.

6. DISCUSSION

We have studied the semiparametric MLE for a non-mixture cure model with interval-censored data. Besides the model's attractive properties and biological interpretation, as explained by Tsodikov (1998) and Chen, Ibrahim and Sinha (1999), one advantage of the non-mixture cure model for interval-censored data is that it models covariate effects on both the cure rate and the time to event parsimoniously, through the single linear predictor θ′Z̃. This is especially appealing when the primary goal of a study is to evaluate risk factors for the cure rate. In addition, the proposed semiparametric non-mixture cure model S(t | Z) = exp{−ϕ(θ′Z̃)F(t)} is flexible enough to allow a general function ϕ(·) in place of exp(·) in the regression part. Our algorithm can easily be modified for a general ϕ as long as it is nonnegative and convex. It is important to assess the goodness of fit of the posited model (1), perhaps based on the likelihood function developed herein. Another natural extension within the regression framework is to allow for time-dependent covariates, which may be useful for checking the proportionality assumption in model (1). Although developing the estimators would not be conceptually difficult, studying their properties would require substantial effort.

Interval-censored data present both unique opportunities and challenges for analyzing the cure rate in medical studies. We developed the likelihood function through the classic equivalence-class approach of Turnbull (1976). To estimate the cure rate, the likelihood function requires that at least one event-free subject be followed longer than rₘ. In contrast to the mixture cure model, the definition of the cure fraction in the non-mixture cure model is unchanged even when follow-up is insufficient. For sound data analysis, however, one should always consult clinical investigators to assess whether the value of rₘ in a given data set is appropriate for the scientific context.
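Turnbull's equivalence classes reduce the problem to a finite set of innermost intervals (sj, rj], the intervals shown along the bottom of Figure 2, on which the NPMLE of F can place mass. A minimal sketch of the standard construction follows, assuming half-open observed intervals with right-censored subjects coded as R = ∞: sort all endpoints and keep each left endpoint that is immediately followed by a right endpoint. The function name is hypothetical. The final interval (sₘ₊₁, ∞] appears only when some subject's last examination time exceeds every finite right endpoint, which corresponds to the requirement, discussed above, that at least one event-free subject be followed longer than rₘ.

```python
def turnbull_intervals(L, R):
    """Innermost (Turnbull) intervals (s_j, r_j] for half-open observed intervals.

    An innermost interval starts at a left endpoint that is immediately followed,
    in the sorted list of all endpoints, by a right endpoint. On ties, right
    endpoints sort first, because half-open intervals that only touch do not overlap.
    """
    points = [(l, 1) for l in L] + [(r, 0) for r in R]   # 1 = left, 0 = right
    points.sort()                                         # ties: right (0) before left (1)
    intervals = []
    for (x, kind), (y, next_kind) in zip(points, points[1:]):
        if kind == 1 and next_kind == 0:
            intervals.append((x, y))
    return intervals

# Usage: two interval-censored and two right-censored subjects.
print(turnbull_intervals([1, 2, 4, 6], [3, 5, float("inf"), float("inf")]))
# [(2, 3), (4, 5), (6, inf)]
```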

Another major contribution of this work for interval-censored data is a computational algorithm that incorporates modern optimization techniques into the EM method for computing the MLE. The maximization step is a constrained optimization problem that requires an interior-point method to keep the search within the restricted parameter space. Combined with the theoretically sound primal-dual method, our algorithm provides a computationally robust solution for the nonparametric estimation of the function F. We further derived an efficient and numerically stable algorithm for the M-step for F, which is self-consistent in the sense of Turnbull (1976). In our experience, the algorithm converges very quickly within each M-step even when the dimension of p is large, although the full ECM iteration still requires additional computation time to converge.
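For intuition about what "self-consistent" means here, the sketch below implements the classic self-consistency iteration of Turnbull (1976) for the NPMLE of a distribution from interval-censored data, with no covariates and no cure fraction. It is not the authors' M-step, which also involves θ and uses the primal-dual interior-point method; it only shows the fixed-point update pⱼ ← n⁻¹ ∑ᵢ aᵢⱼ pⱼ / ∑ₖ aᵢₖ pₖ, where aᵢⱼ = 1 if innermost interval j lies inside subject i's observed interval. Observed intervals are taken as half-open (L, R], the innermost intervals are assumed to be supplied by the caller, and the function name is hypothetical.

```python
import numpy as np

def turnbull_self_consistency(L, R, intervals, tol=1e-10, max_iter=10_000):
    """Classic self-consistency (EM) iteration of Turnbull (1976), no covariates.

    L, R      : observed intervals (L_i, R_i]
    intervals : innermost intervals (s_j, r_j]
    Returns the probability masses p_j assigned to the innermost intervals.
    """
    L, R = np.asarray(L, float), np.asarray(R, float)
    s = np.array([a for a, _ in intervals], dtype=float)
    r = np.array([b for _, b in intervals], dtype=float)
    # inside[i, j] = 1 if innermost interval j lies within observed interval i
    inside = ((L[:, None] <= s[None, :]) & (r[None, :] <= R[:, None])).astype(float)
    p = np.full(len(intervals), 1.0 / len(intervals))
    for _ in range(max_iter):
        denom = inside @ p                                  # P(T in (L_i, R_i]) under p
        p_new = (inside * p).T @ (1.0 / denom) / len(L)     # average conditional share
        if np.max(np.abs(p_new - p)) < tol:
            return p_new
        p = p_new
    return p

# Usage: three overlapping intervals with innermost intervals (0, 1] and (1, 2].
print(turnbull_self_consistency([0, 0, 1], [1, 2, 2], [(0, 1), (1, 2)]))
```

Each update preserves ∑ⱼ pⱼ = 1 automatically, which is one reason self-consistency updates are numerically convenient for the nonparametric part.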

The assumption of an independent and non-informative inspection process is needed for the consistency proof. This assumption is likely satisfied in our data example, because biochemical recurrence after radical prostatectomy has been shown to occur much earlier than symptomatic clinical recurrence (Pound et al., 1999); it is therefore unlikely that the inspection process would be altered among these asymptomatic patients. The asymptotic normality and efficiency of n^(1/2)(θ̂n − θ0) may be obtained by deriving the efficient score function of θ while treating F as a nuisance function; the details will be reported elsewhere. We conjecture that θ̂n converges at the rate n^(−1/2) and F̂n at the rate n^(−1/3). The asymptotic distribution of n^(1/3)(F̂n − F0) is difficult to obtain, whereas the asymptotic normality of n^(1/2)(θ̂n − θ0) is relatively easy to prove by modifying the empirical process techniques for case-2 interval-censored data in van de Geer (2000, pp. 228–230). The nonparametric bootstrap is a valid approach to calculating the standard error of θ̂n. Alternatively, the asymptotic variance-covariance matrix of θ̂n and F̂n may be estimated by the supplemented ECM (S-ECM) algorithm of van Dyk et al. (1995), which is a computational byproduct of the ECM algorithm.

Supplementary Material

Line Search for Primal-dual Interior-point Algorithm: A brief review of the line-search procedure for primal-dual interior-point algorithm as in Boyd and Vandenberghe (2004, Chapter 11).

Proof of Hellinger consistency: Technical proof of Theorem 1.

Acknowledgments

The authors thank Dr. J. M. Levitt and Dr. K. M. Slawin for providing the data, and the associate editor and three referees for their helpful comments. The research of Hao Liu was supported in part by NIH grant P30-CA125123. The research of Yu Shen was supported in part by NIH grant R01-CA079466.

Contributor Information

Hao Liu, Division of Biostatistics, Dan L. Duncan Cancer Center, BCM 305, Baylor College of Medicine, Houston, TX 77030, U.S.A. (E-mail: haol@bcm.edu).

Yu Shen, Department of Biostatistics, M. D. Anderson Cancer Center, 1515 Holcombe Blvd, Box 447, Houston, TX 77030, U.S.A. (E-mail: yshen@mdanderson.org).

REFERENCES

- Berkson J, Gage RP. Survival curve for cancer patients following treatment. Journal of the American Statistical Association. 1952;47:501–515.

- Betensky RA, Rabinowitz D, Tsiatis AA. Computationally simple accelerated failure time regression for interval censored data. Biometrika. 2001;88(3):703–711.

- Boyd SP, Vandenberghe L. Convex Optimization. Cambridge, UK: Cambridge University Press; 2004.

- Chen M-H, Ibrahim JG, Sinha D. A new Bayesian model for survival data with a surviving fraction. Journal of the American Statistical Association. 1999;94(447):909–919.

- Devroye L. Non-Uniform Random Variate Generation. New York: Springer-Verlag; 1986.

- Dillioglugil O, Leibman BD, Kattan MW, Seale-Hawkins C, Wheeler TM, Scardino PT. Hazard rates for progression after radical prostatectomy for clinically localized prostate cancer. Urology. 1997;50(1):93–99. doi: 10.1016/S0090-4295(97)00106-4.

- Finkelstein DM. A proportional hazards model for interval-censored failure time data. Biometrics. 1986;42(4):845–854.

- Groeneboom P, Wellner JA. Information Bounds and Nonparametric Maximum Likelihood Estimation. Basel: Birkhäuser Verlag; 1992.

- Huang J. Efficient estimation for the proportional hazards model with interval censoring. Annals of Statistics. 1996;24(2):540–568.

- Kalbfleisch JD, Prentice RL. The Statistical Analysis of Failure Time Data. 2nd edition. Hoboken, NJ: Wiley-Interscience; 2002.

- Kufe DW, Pollock RE, Weichselbaum RR, Bast RC, Gansler TS, Holland JF, Frei E, editors. Holland-Frei Cancer Medicine 6. 6th edition. BC Decker Inc; 2003.

- Lam KF, Xue H. A semiparametric regression cure model with current status data. Biometrika. 2005;92(3):573–586.

- Li Y, Tiwari RC, Guha S. Mixture cure survival models with dependent censoring. Journal of the Royal Statistical Society, Series B: Statistical Methodology. 2007;69(3):285–306.

- Lin DY, Oakes D, Ying Z. Additive hazards regression with current status data. Biometrika. 1998;85(2):289–298.

- Lindsey JC, Ryan LM. Tutorial in biostatistics: methods for interval-censored data. Statistics in Medicine. 1998;17:219–238. doi: 10.1002/(sici)1097-0258(19980130)17:2<219::aid-sim735>3.0.co;2-o.

- Little RJA, Rubin DB. Statistical Analysis with Missing Data. 2nd edition. Hoboken, NJ: Wiley; 2002.

- Maller R, Zhou X. Survival Analysis with Long-term Survivors. New York: John Wiley; 1996.

- Meng X-L, Rubin DB. Maximum likelihood estimation via the ECM algorithm: a general framework. Biometrika. 1993;80(2):267–278.

- Nettleton D. Convergence properties of the EM algorithm in constrained parameter spaces. Canadian Journal of Statistics. 1999;27(3):639–648.

- Peto R. Experimental survival curves for interval-censored data. Journal of the Royal Statistical Society, Series C: Applied Statistics. 1973;22:86–91.

- Pound CR, Partin AW, Eisenberger MA, Chan DW, Pearson JD, Walsh PC. Natural history of progression after PSA elevation following radical prostatectomy. Journal of the American Medical Association. 1999;281(17):1591–1597. doi: 10.1001/jama.281.17.1591.

- Rabinowitz D, Tsiatis A, Aragon J. Regression with interval censored data. Biometrika. 1995;82:501–513.

- Rossini AJ, Tsiatis AA. A semiparametric proportional odds regression model for the analysis of current status data. Journal of the American Statistical Association. 1996;91(434):713–721.

- Schick A, Yu Q. Consistency of the GMLE with mixed case interval-censored data. Scandinavian Journal of Statistics. 2000;27(1):45–55.

- Sy JP, Taylor JMG. Estimation in a Cox proportional hazards cure model. Biometrics. 2000;56(1):227–236. doi: 10.1111/j.0006-341x.2000.00227.x.

- Taylor JMG. Semi-parametric estimation in failure time mixture models. Biometrics. 1995;51(3):899–907.

- Tian L, Cai T. On the accelerated failure time model for current status and interval censored data. Biometrika. 2006;93(2):329–342.

- Tsodikov A. A proportional hazards model taking account of long-term survivors. Biometrics. 1998;54(4):1508–1516.

- Tsodikov AD, Ibrahim JG, Yakovlev AY. Estimating cure rates from survival data: an alternative to two-component mixture models. Journal of the American Statistical Association. 2003;98(464):1063–1078. doi: 10.1198/01622145030000001007.

- Turnbull BW. The empirical distribution function with arbitrarily grouped, censored and truncated data. Journal of the Royal Statistical Society, Series B: Methodological. 1976;38(3):290–295.

- van de Geer SA. Applications of Empirical Process Theory. Cambridge, UK: Cambridge University Press; 2000.

- van der Vaart AW, Wellner JA. Weak Convergence and Empirical Processes. New York: Springer; 1996.

- van der Vaart AW, Wellner JA. Preservation theorems for Glivenko-Cantelli and uniform Glivenko-Cantelli classes. In: Giné E, Mason DM, Wellner JA, editors. High Dimensional Probability II. Boston: Birkhäuser; 2000. pp. 113–132.

- van Dyk DA, Meng X-L, Rubin DB. Maximum likelihood estimation via the ECM algorithm: computing the asymptotic variance. Statistica Sinica. 1995;5(1):55–75.

- Wellner JA, Zhan Y. A hybrid algorithm for computation of the nonparametric maximum likelihood estimator from censored data. Journal of the American Statistical Association. 1997;92(439):945–959.

- Yamaguchi K. Accelerated failure-time regression models with a regression model of surviving fraction: an application to the analysis of ‘permanent employment’ in Japan. Journal of the American Statistical Association. 1992;87(418):284–292.

- Yu QQ, Wong GYC, Li LX. Asymptotic properties of self-consistent estimators with mixed interval-censored data. Annals of the Institute of Statistical Mathematics. 2001;53(3):469–486.

- Zeng D, Yin G, Ibrahim JG. Semiparametric transformation models for survival data with a cure fraction. Journal of the American Statistical Association. 2006;101(474):670–684.
