Abstract

A tacit but fundamental assumption of the Theory of Signal Detection (TSD) is that criterion placement is a noise-free process. This paper challenges that assumption on theoretical and empirical grounds and presents the Noisy Decision Theory of Signal Detection (ND-TSD). Generalized equations for the isosensitivity function and for measures of discrimination that incorporate criterion variability are derived, and the model's relationship with extant models of decision-making in discrimination tasks is examined. An experiment that evaluates recognition memory for ensembles of word stimuli reveals that criterion noise is not trivial in magnitude and contributes substantially to variance in the slope of the isosensitivity function. We discuss how ND-TSD can help explain a number of current and historical puzzles in recognition memory, including the inconsistent relationship between manipulations of learning and the slope of the isosensitivity function, the lack of invariance of the slope with manipulations of bias or payoffs, the effects of aging on the decision-making process in recognition, and the nature of responding in Remember/Know decision tasks. ND-TSD poses novel and theoretically meaningful constraints on theories of recognition and decision-making more generally, and provides a mechanism for rapprochement between theories of decision-making that employ deterministic response rules and those that postulate probabilistic response rules.

Keywords: Signal detection, recognition memory, criteria, decision-making

The Theory of Signal Detection (TSD; Green & Swets, 1966; Macmillan & Creelman, 2005; Peterson, Birdsall, & Fox, 1954; Tanner & Swets, 1954) is a theory of decision-making that has been widely applied to psychological tasks involving detection, discrimination, identification, and choice, as well as to problems in engineering and control systems. Its historical development follows quite naturally from earlier theories in psychophysics (Blackwell, 1953; Fechner, 1860; Thurstone, 1927) and advances in statistics (Wald, 1950). The general framework has proven sufficiently flexible so as to allow substantive cross-fertilization with related areas in statistics and psychology, including mixture distributions (DeCarlo, 2002), theories of information integration in multidimensional spaces (Banks, 2000; Townsend & Ashby, 1982), models of group decision-making (Sorkin & Dai, 1994), models of response timing (Norman & Wickelgren, 1969; Sekuler, 1965; Thomas & Myers, 1972), and multiprocess models that combine thresholded and continuous evidence distributions (Yonelinas, 1999). It also exhibits well-characterized relationships with other prominent perspectives, such as individual choice theory (Luce, 1959) and threshold-based models (Krantz, 1969; Swets, 1986a). Indeed, it is arguably the most widely used and successful theoretical framework in psychology of the last half century.

The theoretical underpinnings of TSD can be summarized in four basic postulates:

1. Events are individual enumerable trials on which a signal is present or not.

2. A strength value characterizes the evidence for the presence of the signal on a given trial.

3. Random variables characterize the conditional probability distributions of strength values for signal-present and signal-absent events (for detection) or for signal-A and signal-B events (for discrimination).

4. A criterion serves to map the continuous strength variable (or its associated likelihood ratio) onto a binary (or n-ary) decision variable.

As applied to recognition memory experiments (Banks, 1970; Egan, 1958; Lockhart & Murdock, 1970; Parks, 1966), in which subjects make individual judgments about whether a test item was previously viewed in a particular delimited study episode, the “signal” is considered to be the prior study of the item. That study event is thought to confer additional strength on the item such that studied items generally, but not always, yield greater evidence for prior study than do unstudied items. Subjects then make a decision about whether they did or did not study the item by comparing the strength yielded by the current test stimulus to a decision criterion. Analytically, TSD reparameterizes the obtained experimental statistics as estimates of discriminability and response criterion or bias. Theoretical conclusions about the mnemonic aspects of recognition performance are often drawn from the form of the isosensitivity function, which is a plot of the theoretical hit rate against the theoretical false-alarm rate across all possible criterion values. The function is typically estimated from points derived from a confidence-rating procedure (Egan, 1958; Egan, Schulman, & Greenberg, 1959).
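The reparameterization described above can be illustrated with a minimal Python sketch. It assumes the equal-variance Gaussian form of TSD, with unit variances and a noise mean of zero; the function names (`yes_no_rates`, `d_prime_from_rates`, `isosensitivity_points`) are hypothetical and are introduced here only for illustration.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(0.0, 1.0)


def yes_no_rates(d_prime, criterion):
    """Theoretical (hit, false-alarm) rates under equal-variance Gaussian TSD.

    Assumption: noise evidence ~ N(0, 1), signal evidence ~ N(d_prime, 1),
    and the subject responds "yes" whenever evidence exceeds the criterion.
    """
    signal = NormalDist(d_prime, 1.0)
    hit = 1.0 - signal.cdf(criterion)
    false_alarm = 1.0 - STANDARD_NORMAL.cdf(criterion)
    return hit, false_alarm


def d_prime_from_rates(hit, false_alarm):
    """Recover discriminability as z(H) - z(F)."""
    z = STANDARD_NORMAL.inv_cdf
    return z(hit) - z(false_alarm)


def isosensitivity_points(d_prime, criteria):
    """(false-alarm, hit) pairs traced out as the criterion is swept."""
    points = []
    for c in criteria:
        hit, false_alarm = yes_no_rates(d_prime, c)
        points.append((false_alarm, hit))
    return points


if __name__ == "__main__":
    h, f = yes_no_rates(d_prime=1.5, criterion=0.75)
    print(h, f, d_prime_from_rates(h, f))
```

Sweeping the criterion while holding `d_prime` fixed traces a single isosensitivity function; in a rating experiment, each confidence boundary plays the role of one such criterion.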

TSD has been successfully applied to recognition because it provides an articulated and intuitive description of the decision portion of the task without obliging any particular theoretical account of the relevant memory processes. In fact, theoretical interpretations derived from the application of TSD to recognition memory have been cited as major constraints on process models of recognition (e.g., McClelland & Chappell, 1998; Shiffrin & Steyvers, 1997). Recent evidence reveals an increased role of TSD in research on recognition memory: The number of citations in PsycInfo that appear in response to a joint query of “recognition memory” and “signal detection” as keywords has increased from 23 in the 1980s to 39 in the 1990s to 67 in just the first seven years of this decade.

The purpose of this paper is to theoretically and empirically evaluate the postulate of a noise-free criterion (Assumption [4], above), and to describe an extension of TSD that is sufficiently flexible to handle criterion variability. The claim is that criteria may vary from trial to trial in part because of noise inherent to the processes involved with maintaining and updating them. Although this claim does not seriously violate the theoretical structure of TSD, it does have major implications for how we draw theoretical conclusions about memory, perception, and decision processes from detection, discrimination, and recognition experiments.

As we review below, concerns about variability in the decision process are apparent in a variety of literatures, and theoretical tools have been advanced to address the problems that arise from noisy decision making (Rosner & Kochanski, 2008). However, theorizing in recognition memory has mostly advanced independently of such concerns, perhaps in part because of the difficulty associated with disentangling decision noise from representational noise (see, e.g., Ratcliff & Starns, in press). This paper considers the statistical and analytic problems that arise from postulating criterion noise in the context of detection-theoretic models, and applies a novel experimental task, the ensemble recognition paradigm, to the problem of estimating criterion variance.

Historical antecedents and contemporary motivation

Considerations similar to the ones forwarded here have been previously raised in the domains of psychoacoustics and psychophysics (Durlach & Braida, 1969; Gravetter & Lockhead, 1973), but have not been broadly considered in the domain of recognition memory. An exception is the seminal “strength theory” of Wickelgren (1968; Wickelgren & Norman, 1966; Norman & Wickelgren, 1969), on whose work our initial theoretical rationale is based. That work was applied predominately to problems in short-term memory and to the question of how absolute (yes-no) and relative (forced-choice) response tasks differed from one another. However, general analytic forms for the computation of detection statistics were not provided, nor was the work applied to the relationship between the isosensitivity function and theories of recognition memory (which were not prominent at the time).

Contemporary versions of TSD are best understood by their relation to the general class of judgment models derived from Thurstone (1927). A taxonomy of those models described by Torgerson (1958) allows various restrictions on the equality of stimulus variance and of criterial variance; current applications of TSD to recognition memory vary in whether they permit stimulus variance to differ across distributions, but they almost universally disallow criterial variance. This restriction, although not unique to this field, stands in surprising contrast to work in related areas such as detection and discrimination in psychophysical tasks (Bonnel & Miller, 1994; Durlach & Braida, 1969; Nosofsky, 1983) and classification (Ashby & Maddox, 1993; Erev, 1998; Kornbrot, 1980). The extension of TSD to ND-TSD is a relaxation of this restriction: ND-TSD permits nonzero criterial variance.

The recent explosion of work evaluating the exact form of the isosensitivity function in recognition memory under different conditions (Arndt & Reder, 2002; Glanzer, Kim, Hilford, & Adams, 1999; Gronlund & Elam, 1994; Kelley & Wixted, 2001; Matzen & Benjamin, in press; Qin, Raye, Johnson, & Mitchell, 2001; Ratcliff, Sheu, & Gronlund, 1992; Ratcliff, McKoon, & Tindall, 1994; Slotnick, Klein, Dodson, & Shimamura, 2000; Van Zandt, 2000; Yonelinas, 1994, 1997, 1999) and in different populations (Healy, Light, & Chung, 2005; Howard, Bessette-Symons, Zhang, & Hoyer, 2006; Manns, Hopkins, Reed, Kitchener, & Squire, 2003; Wixted & Squire, 2004a, 2004b; Yonelinas, Kroll, Dobbins, Lazzara, & Knight, 1998; Yonelinas, Kroll, Quamme, Lazzara, Suavé, Widaman, & Knight, 2002; Yonelinas, Quamme, Widaman, Kroll, Suavé, & Knight, 2004), as well as the prominent role those functions play in current theoretical development (Dennis & Humphreys, 2001; Glanzer, Adams, Iverson, & Kim, 1993; McClelland & Chappell, 1998; Wixted, 2007; Shiffrin & Steyvers, 1997; Yonelinas, 1999), suggests the need for a thorough reappraisal of the underlying variables that contribute to those functions. Because work in psychophysics (Krantz, 1969; Nachmias & Steinman, 1963) and, more recently, in recognition memory (Malmberg, 2002; Malmberg & Xu, 2006; Wixted & Stretch, 2004) has illustrated how aspects and suboptimalities of the decision process can influence the shape of the isosensitivity function, the goals of this article are to provide an organizing framework for the incorporation of decision noise within TSD, and to help expand the various theoretical discussions within the field of recognition memory to include a role for decision variability. 
We suggest that drawing conclusions about the theoretical components of recognition memory from the form of the isosensitivity function can be a dangerous enterprise, and show how a number of historical and current puzzles in the literature may benefit from a consideration of criterion noise.

Organization of the paper

The first part of this paper provides a short background on the assumptions of traditional TSD models, as well as evidence bearing on the validity of those assumptions. Appreciating the nature of the arguments underlying the currently influential unequal-variance version of TSD is critical to understanding the principle of criterial variance and the proposed analytic procedure for separately estimating criterial and evidence variance. In the second part of the paper, we critically evaluate the assertion of a stationary and nonvariable scalar criterion value from a theoretical and empirical perspective, and in the third section, provide basic derivations for the form of the isosensitivity function in the presence of nonzero criterial variability. The fourth portion of the paper provides derivations for measures of accuracy in the presence of criterial noise, and leads to the presentation, in the fifth section, of the “ensemble recognition” task, which can be used to assess criterial noise. In the sixth part of the paper, different models of that experimental task are considered and evaluated, and estimates of criterial variability are provided. In the seventh and final part of the paper, we review the implications of the findings and discuss some of the situations in which a consideration of criterial variability might advance our progress on a number of interesting problems in recognition memory and beyond.

It is important to note that the successes of TSD have led to many unanswered questions, and that a reconsideration of basic principles like criterion invariance may provide insight into those problems. No less of an authority than John Swets, the researcher most responsible for introducing TSD to psychology, noted that it was “unclear” why, for example, the slope of the isosensitivity line for detection of brain tumors was approximately ½ the slope of the isosensitivity line for detection of abnormal tissue cells (Swets, 1986b). Within the field of recognition memory, there is evidence that certain manipulations that lead to increased accuracy, such as increased study time, are also associated with decreased slope of the isosensitivity function (Glanzer et al., 1999; Hirshman & Hostetter, 2000), whereas other manipulations that also lead to superior performance are not (Ratcliff et al., 1994). Although there are extant theories that account for changes in slope, there is no agreed-upon mechanism by which they do so, nor is an explanation of such heterogeneous effects forthcoming.

Throughout this paper, we will make reference to the recognition decision problem, but most of the considerations presented here are relevant to other problems in detection and discrimination, and we hope that the superficial application to recognition memory will not detract from the more general message about the need to consider decision-based noise in such problems (see also Durlach & Braida, 1969; Gravetter & Lockhead, 1973; Nosofsky, 1983; Wickelgren, 1968).

Assumptions about evidence distributions

The lynchpin theoretical apparatus of TSD is the probabilistic relationship between signal status and perceived evidence. The historical assumption about this relationship is that the distributions of the random variables are normal in form (Thurstone, 1927) and of equal variance, separated by some distance, d’ (Green & Swets, 1966). Whereas the former assumption has survived inquiry, the latter has been less successful.

The original (Peterson et al., 1954) and most popularly applied version of TSD assumes that signal and noise distributions are of equal variance. Although many memory researchers tacitly endorse this assumption by reporting summary measures of discrimination and criterion placement that derive from the application of the equal-variance model, such as d’ and Cj, respectively, the empirical evidence does not support the equal-variance assumption. The slope of the isosensitivity function in recognition memory is often found to be ~0.80 (Ratcliff et al., 1992), although this value may change with increasing discriminability (Glanzer et al., 1999; Heathcote, 2003; Hirshman & Hostetter, 2000). This result has been taken to imply that the evidence distribution for studied items is of greater variance than the distribution for unstudied items (Green & Swets, 1966). At stake here is not the existence of this effect but its magnitude, and whether manipulations that enhance or attenuate it actually affect representational variance.

The remarkable linearity of the isosensitivity function notwithstanding, it is critical for present purposes to note not only that the mean slope for recognition memory is often less than 1, but also that it varies considerably over situations and individuals (Green & Swets, 1966). It is considerably lower than 0.8 for some tasks (i.e., ~0.6 for the detection of brain tumors; Swets, Pickett, Whitehead, Getty, Schnur, Swets, & Freeman, 1979), higher than 1 for other tasks (like information retrieval; Swets, 1969), and around 1.0 for yet others (such as odor recognition; Rabin & Cain, 1984).

Nonunit and variable slopes reveal an inadequacy of the equal-variance model of Peterson et al. (1954) and call into question the validity of the measure d’. This failure can be addressed in several ways. It might be assumed that the distributions of evidence are asymmetric in form, for example, or that one or the other distribution reflects a mixture of latent distributions (DeCarlo, 2002; Yonelinas, 1994). The traditional and still predominant explanation, however, is the one described above: that the variances of the distributions are unequal (Green & Swets, 1966; Wixted, 2007). Because the slope of the isosensitivity function is equal to the ratio of the standard deviations of the noise and signal distributions in the unequal-variance TSD model, the empirical estimates of slope less than 1 have promoted the inference that the signal distribution is of greater variance than the noise distribution in recognition. However, the statistical theory of the form of the isosensitivity function that is used to understand nonunit slopes and slope variability has been only partially unified with the psychological theories that produce such behavior, via either the interactivity of continuous and thresholding mechanisms (Yonelinas, 1999) or the averaging process presumed by global matching mechanisms (Gillund & Shiffrin, 1984; Hintzman, 1986; Humphreys, Pike, Bain, & Tehan, 1989; Murdock, 1982). None of these prominent theories include a role for criterial variability, nor do they provide a comprehensive account of the shape of isosensitivity functions and of the effect of manipulations on that shape. Criterial variability can directly affect the slope of the isosensitivity function, a datum that opens up novel theoretical possibilities for psychological models of behavior underlying the isosensitivity function.
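The relationship between slope and the ratio of standard deviations can be checked numerically. The sketch below is illustrative only: it assumes Gaussian evidence distributions with a noise mean of zero and no criterion noise, and the names `zroc_points` and `zroc_slope` are hypothetical. The isosensitivity function is expressed in normal-deviate (z) coordinates, where the unequal-variance model predicts a straight line.

```python
from statistics import NormalDist


def zroc_points(mu_signal, sigma_signal, criteria, sigma_noise=1.0):
    """(z(F), z(H)) pairs for an unequal-variance Gaussian model.

    Assumption: noise evidence ~ N(0, sigma_noise) and signal evidence
    ~ N(mu_signal, sigma_signal), with a fixed (noise-free) criterion.
    """
    z = NormalDist().inv_cdf
    noise = NormalDist(0.0, sigma_noise)
    signal = NormalDist(mu_signal, sigma_signal)
    return [
        (z(1.0 - noise.cdf(c)), z(1.0 - signal.cdf(c)))
        for c in criteria
    ]


def zroc_slope(points):
    """Slope of the line through the first and last z-coordinate points."""
    (x0, y0), (x1, y1) = points[0], points[-1]
    return (y1 - y0) / (x1 - x0)


if __name__ == "__main__":
    pts = zroc_points(mu_signal=1.0, sigma_signal=1.25,
                      criteria=[-0.5, 0.0, 0.5, 1.0, 1.5])
    # With sigma_noise = 1 and sigma_signal = 1.25, the predicted slope
    # is sigma_noise / sigma_signal = 0.8.
    print(zroc_slope(pts))
```

Under these assumptions, a slope of 0.8 corresponds to a signal standard deviation 1.25 times that of the noise distribution, which is the inference the traditional account draws from the empirical slopes.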

The form of the isosensitivity function has been used to test the validity of assumptions built into TSD about the nature of the evidence distributions, as well as to estimate parameters for those distributions. In that sense, TSD can be said to have bootstrapped itself into its current position of high esteem: Its validity has mostly been established by confirming its implications, rather than by systematically testing its individual assumptions. This is not intended to be a point of criticism, but it must be kept in mind that the accuracy of such estimation and testing depends fundamentally on the joint assumptions that evidence is inherently variable and criterion location is not. Allowing criterial noise to play a role raises the possibility that previous explorations of the isosensitivity function in recognition memory have conflated the contributions of stimulus and criterial noise.

Evidence for criterion variability

As noted earlier, traditional TSD assumes that criterion placement is a noise-free and stationary process. Although there is some acknowledgment of the processes underlying criterion inconsistency (see, e.g., Macmillan & Creelman, 2005, p. 46), the apparatus of criterion placement in TSD stands in stark contrast with the central assumption of stimulus-related variability (see also Rosner & Kochanski, 2008). There are numerous reasons to doubt the validity of the idea that criteria are noise-free. First, there is evidence from detection and discrimination tasks of response autocorrelations, as well as systematic effects of experimental manipulations on response criteria. Second, maintaining the values of one or multiple criteria poses a memory burden and should thus be subject to forgetting and memory distortion. Third, comprehensive models of response time and accuracy in choice tasks suggest the need for criterial variability. Fourth, there is evidence from basic and well controlled psychophysical tasks of considerable trial-to-trial variability in the placement of criteria. Fifth, there are small but apparent differences between forced-choice response tasks and yes-no response tasks that indicate a violation of one of the most fundamental relationships predicted by TSD: the equality of the area under the isosensitivity function as estimated by the rating procedure and the proportion of correct responses in a two-alternative forced-choice task. This section will review each of these arguments more fully.

In each case, it is important to distinguish between systematic and nonsystematic sources of variability in criterion placement. This distinction is critical because only nonsystematic variability violates the actual underlying principle of a nonvariable criterion. Some scenarios violate the usual use of, but not the underlying principles of, TSD. This section identifies some sources of systematic variability and outlines the theoretical mechanisms that have been invoked to handle them. We also review evidence for nonsystematic sources of variability. Systematic sources of variability can be modeled within TSD by allowing criterion measures to vary with experimental manipulations (Benjamin, 2001; Benjamin & Bawa, 2004; Brown & Steyvers, 2005; Brown, Steyvers, & Hemmer, in press), by postulating a time-series criterion localization process contingent upon feedback (Atkinson, Carterette, & Kinchla, 1964; Atkinson & Kinchla, 1965; Friedman, Carterette, Nakatani, & Ahumada, 1968) only following errors (Kac, 1962; Thomas, 1973) or only following correct responses (Model 3 of Dorfman & Biderman, 1971), or as a combination of a long-term learning process and nonrandom momentary fluctuations (Treisman, 1987; Treisman & Williams, 1984). Criterial variance can even be modeled with a probabilistic responding mechanism (Parks, 1966; Thomas, 1975; White & Wixted, 1999), although the inclusion of such a mechanism violates much of the spirit of TSD.

1. Nonstationarity

When data are averaged across trials in order to compute TSD parameters, the researcher is tacitly assuming that the criterion is invariant across those trials. By extension, when parameters are computed across an entire experiment, measures of discriminability and criterion are only valid when the criterion is stationary over that entire period. Unfortunately, there is an abundance of evidence that this condition is rarely, if ever, met.

Response autocorrelations

Research more than one-half century ago established the presence of longer runs of responses than would be expected under a response-independence assumption (Fernberger, 1920; Howarth & Bulmer, 1956; McGill, 1957; Shipley, 1961; Verplanck, Collier, & Cotton, 1952; Verplanck, Cotton, & Collier, 1953; Wertheimer, 1953). More recently, response autocorrelations (Gilden & Wilson, 1995; Luce, Nosofsky, Green, & Smith, 1982; Staddon, King, & Lockhead, 1980) and response time autocorrelations (Gilden, 1997; 2001; Van Orden, Holden, & Turvey, 2003) within choice tasks have been noted and evaluated in terms of long-range fractal properties (Gilden, 2001; Thornton & Gilden, in press) or short-range response dependencies (Wagenmakers, Farrell, & Ratcliff, 2004, 2005). Such dependencies have even been reported in the context of tasks eliciting confidence ratings (Mueller & Weidemann, 2008). Numerous models were proposed to account for short-range response dependencies, most of which included a mechanism for the adjustment of the response criterion on the basis of feedback of one sort or another (e.g., Kac, 1962; Thomas, 1973; Treisman, 1987; Treisman & Williams, 1984). Because criterion variance was presumed to be systematically related to aspects of the experiment and the subject's performance, however, statistical models that incorporated random criterial noise were not applied to such tasks (e.g., Durlach & Braida, 1969; Gravetter & Lockhead, 1973; Wickelgren, 1968).

The presence of such response correlations in experiments in which the signal value is uncorrelated across trials implies shifts in the decision regime, either in terms of signal reception or transduction, or in terms of criterion location. To illustrate this distinction, consider a typical subject in a detection experiment whose interest and attention fluctuate with surrounding conditions (did an attractive research assistant just pass by the door?) and changing internal states (increasing hunger or boredom). If these distractions cause the subject to attend less faithfully to the experiment for a period of time, it could lead to systematically biased evidence values and thus biased responses. Alternatively, if a subject's criterion fluctuates because such distraction affects their ability to maintain a stable value, it will bias responses equivalently from the decision-theoretic perspective. More importantly, fluctuating criteria can lead to response autocorrelations even when the transduction mechanism does not lead to correlated evidence values. Teasing apart these two sources of variability is the major empirical difficulty of our current enterprise.
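A small simulation can make this point concrete. The sketch below is not a model proposed in the literature cited above; it simply assumes, for illustration, that evidence values are independent across trials while the criterion drifts according to a first-order autoregressive process. All names and parameter values (`simulate_responses`, `phi`, `c_sd`, and so on) are hypothetical.

```python
import random


def simulate_responses(n_trials, d_prime=1.0, c_mean=0.5, c_sd=0.0,
                       phi=0.95, seed=1):
    """Yes/no responses with independent evidence and a drifting criterion.

    Assumption: the criterion deviates from c_mean by an AR(1) process
    with autoregressive weight phi and stationary standard deviation c_sd.
    Setting c_sd = 0 gives the fixed criterion of traditional TSD.
    """
    rng = random.Random(seed)
    innovation_sd = c_sd * (1.0 - phi ** 2) ** 0.5
    drift = 0.0
    responses = []
    for _ in range(n_trials):
        drift = phi * drift + rng.gauss(0.0, innovation_sd)
        signal_present = rng.random() < 0.5  # signal status is uncorrelated
        evidence = rng.gauss(d_prime if signal_present else 0.0, 1.0)
        responses.append(1 if evidence > c_mean + drift else 0)
    return responses


def lag1_autocorrelation(xs):
    """Lag-1 autocorrelation of a sequence of numbers."""
    n = len(xs)
    mean = sum(xs) / n
    variance = sum((x - mean) ** 2 for x in xs)
    covariance = sum((xs[i] - mean) * (xs[i + 1] - mean)
                     for i in range(n - 1))
    return covariance / variance


if __name__ == "__main__":
    fixed = simulate_responses(20_000, c_sd=0.0)
    drifting = simulate_responses(20_000, c_sd=1.0)
    print(lag1_autocorrelation(fixed), lag1_autocorrelation(drifting))
```

With a fixed criterion the response autocorrelation should hover near zero, whereas the drifting criterion produces clearly positive autocorrelation even though evidence and signal status remain independent from trial to trial.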

Effects of experimental manipulations

Stronger evidence for the lability of criteria comes from tasks in which experimental manipulations are shown to induce strategic changes. Subjects appear to modulate their criterion based on their estimated degree of learning (Hirshman, 1995) and perceived difficulty of the distractor set in recognition (Benjamin & Bawa, 2004; Brown et al., in press). Subjects even appear to dynamically shift criteria in response to item characteristics, such as idiosyncratic familiarity (Brown, Lewis, & Monk, 1977) and word frequency (Benjamin, 2003). In addition, criteria exhibit reliable individual differences as a function of personality traits (Benjamin, Wee, & Roberts, 2008), thus suggesting another unmodeled source of variability in detection tasks.

It is important to note, however, that criterion changes do not always appear when expected (e.g., Stretch & Wixted, 1998; Higham, Perfect, & Bruno, in press; Verde & Rotello, 2007) and are rarely of an optimal magnitude. It is for this reason that there is some debate over whether subject-controlled criterion movement underlies all of the effects that it has been invoked to explain (Criss, 2006), and indeed, more generally, over whether a reconceptualization of the decision variable itself provides a superior explanation to that of strategic criterion-setting (for a review in the context of “mirror effects,” see Greene, 2007). For present purposes, it is worth noting that this inconsistency may well reflect the fact that criterion maintenance imposes a nontrivial burden on the rememberer, and they may occasionally forgo strategic shifting in order to minimize the costs of allocating the resources to do so.

These many contributors to criterion variability make it likely that every memory experiment contains a certain amount of systematic but unattributed sources of variance that may affect interpretations of the isosensitivity function if not explicitly modeled. To be clear, such effects are the province of the current model only if they are undetected and unincorporated into the application of TSD to the data. The systematic variability evident in strategic criterion movement may, depending on the nature of that variability, meet the assumptions of ND-TSD and thus be accounted for validly, but we will explicitly deal with purely nonsystematic variability in our statistical model.

2. The memory burden of criterion maintenance

Given the many systematic sources of variance in criterion placement, it is unlikely that recapitulation of criterion location from trial to trial is a trivial task for the subject. The current criterion location is determined by some complex function relating past experience, implicit and explicit payoffs, and experience thus far in the test, and retrieval of the current value is likely prone to error—a fact that may explain why intervening or unexpected tasks or events that disrupt the normal pace or rhythm of the test appear to affect criterion placement (Hockley & Niewiadomski, 2001). Evidence for this memory burden is apparent when comparing the form of isosensitivity functions estimated from rating procedures with estimates from other procedures, such as payoff manipulations.

Differences between rating-scale and payoff procedures

The difficulty of criterion maintenance is exacerbated in experiments in which confidence ratings are gathered because the subject is forced to maintain multiple criteria, one for each confidence boundary. Although it is unlikely that these values are maintained as independent entities (Stretch & Wixted, 1998), the burden nonetheless increases with the number of required confidence boundaries. Variability introduced by the confidence-rating procedure may explain why the isosensitivity function differs slightly when estimated with that procedure as compared to experiments that manipulate payoff matrices, and why rating-derived functions change shape slightly but unexpectedly when the prior odds of signal and noise are varied (Balakrishnan, 1998a; Markowitz & Swets, 1967; Van Zandt, 2000). These findings have been taken to indicate a fundamental failing of the basic assumptions of TSD (Balakrishnan, 1998a,b, 1999) but may simply reflect the contribution of criterion noise (Mueller & Weidemann, 2008).

Deviations of the yes-no decision point on the isosensitivity function

A related piece of evidence comes from the comparison of isosensitivity functions from rating procedures with single points derived from a yes-no judgment. As noted by Wickelgren (1968), it is not uncommon for that yes-no point to lie slightly above the isosensitivity function (Egan, Greenberg, & Schulman, 1961; Markowitz & Swets, 1967; Schulman & Mitchell, 1966; Watson, Rilling, & Bourbon, 1964; Wickelgren & Norman, 1966) and for that effect to be somewhat larger when more confidence categories are employed. This result likely reflects the fact that the maintenance of criteria becomes more difficult with increasing numbers of criterion points. In recognition memory, Benjamin, Lee, and Diaz (2008) showed that discrimination between previously studied and unstudied words was measured to be superior when subjects made yes-no discrimination judgments than when they used a four-point response scale, and superior on the four-point response scale when compared to an eight-point response scale. This result is consistent with the idea that each criterion introduces noise to the decision process, and that, in the traditional analysis, that noise inappropriately contributes to estimates of memory for the studied materials.
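The way criterion noise can masquerade as poorer memory may be sketched as follows. The example assumes equal-variance Gaussian evidence and a criterion that is itself normally distributed and independent of the evidence on each trial; under those assumptions, the difference between evidence and criterion has variance 1 + σc², so the value of z(H) − z(F) shrinks by a factor of √(1 + σc²). The function names and parameter values are hypothetical and serve only as an illustration.

```python
import random
from statistics import NormalDist


def apparent_d_prime(d_prime, criterion_sd):
    """Closed-form z(H) - z(F) when the criterion is N(c, criterion_sd).

    Assumption: unit-variance Gaussian evidence, independent of the
    criterion noise.
    """
    return d_prime / (1.0 + criterion_sd ** 2) ** 0.5


def simulated_d_prime(d_prime, criterion_sd, c_mean=0.5,
                      n_trials=50_000, seed=3):
    """Monte Carlo estimate of z(H) - z(F) with a noisy criterion."""
    rng = random.Random(seed)
    hits = 0
    false_alarms = 0
    for _ in range(n_trials):
        # One signal trial and one noise trial, each with its own
        # independently sampled criterion.
        criterion = rng.gauss(c_mean, criterion_sd)
        hits += rng.gauss(d_prime, 1.0) > criterion
        criterion = rng.gauss(c_mean, criterion_sd)
        false_alarms += rng.gauss(0.0, 1.0) > criterion
    z = NormalDist().inv_cdf
    return z(hits / n_trials) - z(false_alarms / n_trials)


if __name__ == "__main__":
    print(apparent_d_prime(1.5, 0.75), simulated_d_prime(1.5, 0.75))
```

In this sketch the underlying evidence distributions never change; only the criterion noise does, yet the traditional estimate of discriminability falls as `criterion_sd` grows.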

3. Sampling models of choice tasks

A third argument in favor of criterion variance comes from sequential sampling models that explicitly account for both response time and accuracy in two-choice decisions. Specifically, the diffusion model of Ratcliff (1978, 1988; Ratcliff & Rouder, 1998) serves as a benchmark in the field of recognition memory (e.g., Ratcliff, Thapar, & McKoon, 2004) in that it successfully accounts for aspects of data, including response times, that other models do not explicitly address. It would thus seem that general, heuristic models like TSD have much to gain from analyzing the nature of the decision process in the diffusion model.

That model only provides a full account of recognition memory when two critical parameters are allowed to vary (Ratcliff & Rouder, 1998). First is a parameter that corresponds to the variability in the rate with which evidence accumulates from trial to trial. This value corresponds naturally to stimulus-based variability and resembles the parameter governing variability in the evidence distributions in TSD. The second parameter corresponds to trial-to-trial variability in the starting point for the diffusion process. When this value moves closer to a decision boundary, less evidence is required prior to a decision—thus, this value is analogous to variability in criterion placement. A recent extension of the diffusion model to the confidence rating procedure (Ratcliff & Starns, in press) has a similar mechanism. The fact that the otherwise quite powerful diffusion model fails to provide a comprehensive account of recognition memory without possessing explicit variability in criterion suggests that such variability influences performance in recognition nontrivially.

4. Evidence from psychophysical tasks

Thurstonian-type models with criterial variability have been more widely considered in psychophysics and psychoacoustics, where they have generally met with considerable success. Nosofsky (1983) found that range effects in auditory discrimination were due to both increasing representational and criterial variance with wider ranges. Bonnel and Miller (1994) found evidence of considerable criterial variance in a same/different line-length judgment task in which attention to two stimuli was manipulated by instruction. They concluded that criterial variability was greater than representational variability in their task (see their Experiment 2) and that focused attention served to decrease that variance.

5. Comparisons of forced-choice and yes-no procedures

One of the outstanding early successes of TSD was the proof by Green (1964; Green & Moses, 1966) that the area under the isosensitivity function as estimated by the rating-scale procedure should be equal to the proportion of correct responses in a 2-alternative forced-choice task. This result generalizes across any plausible assumption about the shape of evidence distributions, as long as they are continuous, and is thus not limited by the assumption of normality typically imposed on TSD. Empirical verification of this claim would strongly support the assumptions underlying TSD, including that of a nonvariable criterion, but the extant work on this topic is quite mixed.
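Green's equivalence can be checked numerically for the normal case. The sketch below is illustrative only: the function names and parameter values are assumptions, not taken from the paper. It sweeps a noise-free criterion to trace the isosensitivity function, integrates the area beneath it, and compares that area with the probability that a studied item yields more evidence than an unstudied one (the 2-alternative forced-choice rule).

```python
from statistics import NormalDist


def roc_area(mu1, sigma1, steps=4000):
    """Trapezoidal area under the ROC traced by sweeping a fixed criterion."""
    noise, signal = NormalDist(0.0, 1.0), NormalDist(mu1, sigma1)
    lo = min(-10.0, mu1 - 10.0 * sigma1)
    hi = max(10.0, mu1 + 10.0 * sigma1)
    points = []
    for i in range(steps + 1):
        c = hi - (hi - lo) * i / steps  # strict criterion first, lax last
        points.append((1.0 - noise.cdf(c), 1.0 - signal.cdf(c)))  # (FAR, HR)
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area


def two_afc_accuracy(mu1, sigma1):
    """P(signal evidence > noise evidence) for independent normal draws."""
    return NormalDist().cdf(mu1 / (1.0 + sigma1 ** 2) ** 0.5)


if __name__ == "__main__":
    # Hypothetical parameter values chosen only for illustration.
    print(roc_area(1.0, 1.25), two_afc_accuracy(1.0, 1.25))
```

The two quantities agree here because the criterion is held fixed on every trial; the argument in the text is that this agreement need not hold once the criterion itself varies.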

In perceptual tasks, this relationship appears to be approximately correct under some conditions (Emmerich, 1968; Green & Moses, 1966; Schulman & Mitchell, 1966; Shipley, 1965; Whitmore, Williams, & Ermey, 1968), but is not as strong or as consistent as one might expect (Lapsley Miller, Scurfield, Drga, Galvin, & Whitmore, 2002). Even within a generalization of Green's principle to a wide range of other decision axes and decision variables (Lapsley Miller et al., 2002), considerable observer inconsistency was noted. Such inconsistency is the province of our exploration here. In fact, a relaxation of the assumption of nonvariable criteria permits conditions in which this relationship can be violated. Wickelgren (1968) even noted that it was “quite amazing” (p. 115) that the relationship appeared to hold even approximately.

The empirical evidence regarding the correspondence between forced-choice and yes-no recognition also suggests an inadequacy in the basic model. Green and Moses (1966) reported one experiment that conformed well to the prediction (Experiment 2) and one that violated it somewhat (Experiment 1). Most recent studies have made this comparison under the equal-variance assumption reviewed earlier as inadequate for recognition memory (Deffenbacher, Leu, & Brown, 1981; Khoe, Kroll, Yonelinas, Dobbins, & Knight, 2000; Yonelinas, Hockley, & Murdock, 1992), but experiments that have relaxed this assumption have yielded mixed results: some have concluded that TSD-predicted correspondences are adequate (Smith & Duncan, 2004) and others have concluded in favor of other models (Kroll, Yonelinas, Dobbins, & Frederick, 2002). However, Smith and Duncan (2004) used rating scales for both forced-choice and yes-no recognition, making it impossible to establish whether their correspondences were good because ratings imposed no decision noise or because the criterion variance imposed by ratings was more or less equivalent on the two tasks. In addition, amnesic patients, who might be expected to have great difficulty with the maintenance of criteria, have been shown to perform relatively more poorly on yes-no than on forced-choice recognition (Freed, Corkin, & Cohen, 1987; see also Aggleton & Shaw, 1996), although this result has not been replicated (Khoe et al., 2000; Reed, Hamann, Stefanacci, & Squire, 1997). The inconsistency in this literature may reflect the fact that criterion noise accrues throughout an experiment: Bayley, Wixted, Hopkins, and Squire (2008) recently showed that, whereas amnesics do not show any disproportionate impairment on yes-no recognition on early testing trials, their performance on later trials does indeed drop relative to control subjects.

Although we shall not pursue the comparison of forced-choice and yes-no responding further in our search for evidence of criterial variability, it is noteworthy that the evidence in support of the fundamental relationship between the two tasks reported by Green has not been abundant, and that the introduction of criterial variability allows conditions under which that relationship is violated.

Recognition memory and the detection formulation with criterial noise

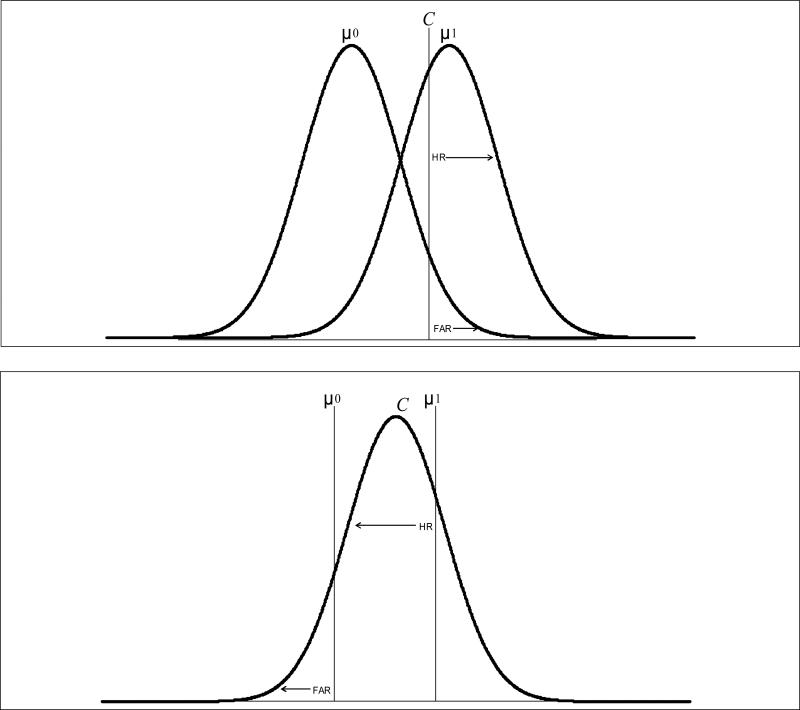

This section outlines the mathematical formulation of the decision task and the basic postulates of TSD, and extends that formulation by explicitly modeling criterion placement as a random variable with nonzero variability. To start, let us consider a subject's perspective on the task. Recognition requires the subject to discriminate between previously studied and unstudied stimuli. The traditional formulation of recognition presumes that test stimuli yield mnemonic evidence for studied status and that prior study affords discriminability between studied and unstudied stimuli by increasing the average amount of evidence provided by studied stimuli, and likely increasing variance as well. However, inherent variability within both unstudied and studied groups of stimuli yields overlapping distributions of evidence. This theoretical formulation is depicted in the top panel of Figure 1, in which normal probability distributions represent the evidence values (e) that previously unstudied (S0) and previously studied (S1) stimuli yield at test.4 If these distributions are nonzero over the full range of the evidence variable, then there is no amount of evidence that is unequivocally indicative of a particular underlying distribution (studied or unstudied). Equivalently, the likelihood ratio at criterion is –∞ < β < ∞. The response is made by imposing a decision criterion (c), such that the subject responds “old” when e exceeds c and “new” otherwise.

The indicated areas in Figure 1 corresponding to hit and false-alarm rates (HR and FAR) illustrate how the variability of the representational distributions directly implies a particular level of performance.

Figure 1.

Top panel: Traditional TSD representation of the recognition problem, including variable evidence distributions and a scalar criterion. Bottom panel: An alternative formulation with scalar evidence values and a variable criterion. Both depictions lead to equivalent performance.

Consider, as a hypothetical alternative case, a system without variable stimulus encoding. In such a system, signal and noise are represented by nonvariable (and consequently nonoverlapping) distributions of evidence, and the task seems trivial. But there is, in fact, some burden on the decision-maker in this situation. First, the criterion must be placed judiciously—were it to fall anywhere outside the two evidence points, performance would be at chance levels. Thus, as reviewed previously, criterion placement must be a dynamic and feedback-driven process that takes into account aspects of the evidence distributions and the costs of different types of errors. Here we explicitly consider the possibility that there is an inherent noisiness to criterion placement in addition to such systematic effects.

The bottom panel of Figure 1 illustrates this alternative scenario, in which the decision criterion is a normal random variable with variance greater than 0, and e is a binary variable. Variability in performance in this scenario derives from variability in criterion placement from trial to trial, but yields, in the case of this example, the same performance as in the top panel (shown by the areas corresponding to HR and FAR). This model fails, of course, to conform with our intuitions, and we shall see presently that it is untenable. However, the demonstration that criterial variability can yield the same outcomes as evidence variability illustrates the predicament we find ourselves in; namely, how to empirically distinguish between these two components of variability. The next section of this paper outlines the problem explicitly.
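The equivalence of the two panels can be made concrete with a small calculation. The following is a minimal sketch, assuming unit standard deviations and hypothetical values for the distance between the evidence values and for the mean criterion; none of the names or numbers come from the paper.

```python
from statistics import NormalDist


def rates_variable_evidence(d, c, sigma_e=1.0):
    """Top panel: evidence ~ N(0, sigma_e) or N(d, sigma_e); criterion fixed at c."""
    far = 1.0 - NormalDist(0.0, sigma_e).cdf(c)
    hr = 1.0 - NormalDist(d, sigma_e).cdf(c)
    return hr, far


def rates_variable_criterion(d, c, sigma_c=1.0):
    """Bottom panel: evidence fixed at 0 or d; criterion ~ N(c, sigma_c)."""
    crit = NormalDist(c, sigma_c)
    far = crit.cdf(0.0)  # P(criterion < 0): "old" response to an unstudied item
    hr = crit.cdf(d)     # P(criterion < d): "old" response to a studied item
    return hr, far


if __name__ == "__main__":
    # Hypothetical values: distance d = 1.0, mean criterion c = 0.5.
    print(rates_variable_evidence(1.0, 0.5))
    print(rates_variable_criterion(1.0, 0.5))
```

Both functions return the same hit and false-alarm rates whenever the two standard deviations match, which is the identifiability problem the text describes.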

Distribution of the decision variable and the isosensitivity function

Let μx and σx indicate the mean and standard deviation of distribution x, and the subscripts e and c refer to evidence and criterion, respectively. If both evidence and criterial variability are assumed to be normally distributed (N) and independent of one another, as generally assumed by Thurstone (1927) and descendant models (Kornbrot, 1980; Peterson et al., 1954; Tanner & Swets, 1954), the decision variable is distributed as

| Equation 1a |

Because the variances of the component distributions sum to form the variability of the decision variable, it is not possible to discriminate between evidence and criterial variability on a purely theoretical basis (see also Wickelgren & Norman, 1966). This constraint does not preclude an empirical resolution, however. In addition, reworking the Thurstone model such that criteria cannot violate order constraints yields a model in which theoretical discrimination between criterion and evidence noise may be possible (Rosner & Kochanski, 2008).

Performance in a recognition task can be related to the decision variable by defining areas over the appropriate evidence function and, as is typically done in TSD, assigning the unstudied (e0) distribution a mean of 0 and unit variance:

| Equation 1b |

in which “respond S” indicates a signal response, or a “yes” in a typical recognition task. These values are easiest to work with in normal-deviate coordinates:

| Equation 1c |

Substitution and rearrangement yields the general model for the isosensitivity function with both representational and criterial variability (for related derivations, see McNicol, 1972; Wickelgren, 1968):

| Equation 2 |
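For reference, the chain from the decision variable to the isosensitivity function can be written out as follows. This is a sketch derived from the assumptions stated above (independent normal evidence and criterion, unstudied distribution with mean 0 and unit variance); the second argument of N is taken to be a standard deviation, and the notation may differ from the typeset equations.

```latex
\begin{align*}
e - c &\sim N\!\left(\mu_e - \mu_c,\ \sqrt{\sigma_e^2 + \sigma_c^2}\right)
  && \text{(cf. Equation 1a)}\\[4pt]
FAR &= p(\text{respond } S \mid S_0) = p(e_0 - c > 0), \qquad
HR = p(\text{respond } S \mid S_1) = p(e_1 - c > 0)
  && \text{(cf. Equation 1b)}\\[4pt]
z_{FAR} &= \frac{-\mu_c}{\sqrt{1 + \sigma_c^2}}, \qquad
z_{HR} = \frac{\mu_1 - \mu_c}{\sqrt{\sigma_1^2 + \sigma_c^2}}
  && \text{(cf. Equation 1c)}\\[4pt]
z_{HR} &= \sqrt{\frac{1 + \sigma_c^2}{\sigma_1^2 + \sigma_c^2}}\; z_{FAR}
  + \frac{\mu_1}{\sqrt{\sigma_1^2 + \sigma_c^2}}
  && \text{(cf. Equation 2)}
\end{align*}
```

The last line follows by solving the expression for the false-alarm deviate for the mean criterion and substituting it into the expression for the hit-rate deviate.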

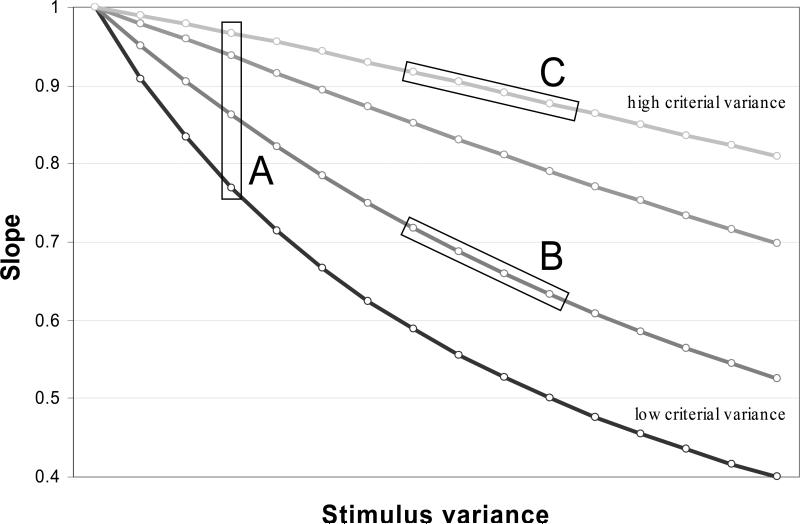

Note that, by this formulation, the slope of the function is not simply the reciprocal of the signal standard deviation, as it is in unequal-variance TSD. Increasing evidence variance will indeed decrease the slope of the function. However, the variances of the evidence and the criterion distribution also have an interactive effect: When the signal variance is greater than 1, increasing criterion variance will increase the slope. When it is less than 1, increasing criterion variance will decrease the slope. Equivalently, criterial variance reduces the effect of stimulus variance and pushes the slope towards 1.
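The interactive effect on the slope can be illustrated numerically. The sketch below assumes the slope and intercept take the form implied by the definitions above, with the unstudied distribution fixed at unit variance; the function names and the parameter values are hypothetical.

```python
import math


def zroc_slope(sigma1, sigma_c):
    """Slope of the zROC with signal SD sigma1 and criterion SD sigma_c."""
    return math.sqrt((1.0 + sigma_c ** 2) / (sigma1 ** 2 + sigma_c ** 2))


def zroc_intercept(mu1, sigma1, sigma_c):
    """y-intercept of the zROC under the same assumptions."""
    return mu1 / math.sqrt(sigma1 ** 2 + sigma_c ** 2)


if __name__ == "__main__":
    for sigma1 in (0.8, 1.0, 1.25):          # hypothetical signal SDs
        slopes = [round(zroc_slope(sigma1, sc), 3) for sc in (0.0, 0.5, 1.0, 2.0)]
        print(sigma1, slopes)
```

As criterion noise grows, each row of slopes moves monotonically toward 1, from below when the signal standard deviation exceeds 1 and from above when it is less than 1, while the intercept shrinks.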

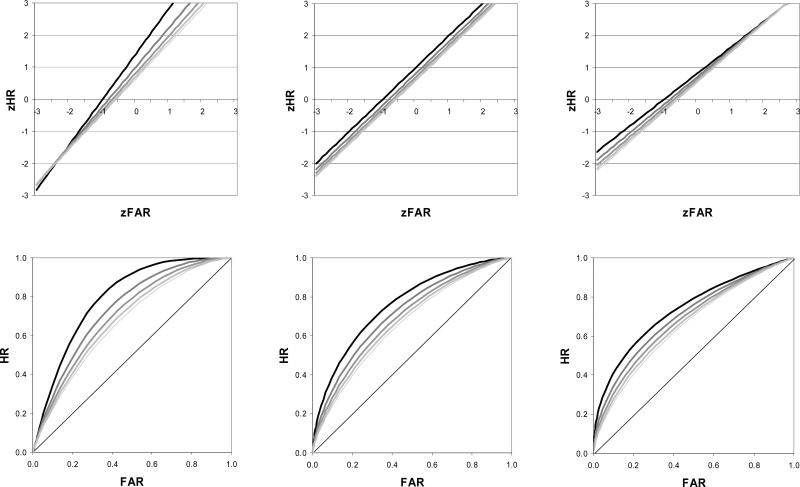

Figure 2 depicts how isosensitivity functions vary as a function of criterial variance, and confirms both the claim of previous theorists (Treisman & Faulkner, 1984; Wickelgren, 1968) and the implication of Equation 2 that criterial variability generally decreases the area under the isosensitivity function. The slight convexity at the margins of the function that results from unequal variances is an exception to that generality (see also Thomas & Myers, 1972). The left panels depict increasing criterial variance for signal variance less than 1, and the right panels for signal variance greater than 1. The middle panels show that, when signal variance is equal to noise variance, criterial variance decreases the area under the curve but the slope does not change. It is worth noting that the prominent attenuating effect of criterial variance on the area under the function is generalizable across a number of plausible alternative distributions (including the logistic and gamma distributions; Thomas & Myers, 1972).

Figure 2.

Isosensitivity functions in probability coordinates (top row) and normal-deviate coordinates (bottom row) for increasing levels of criterial noise (indicated by increasingly light contours). Left panels illustrate the case when the variability of the signal (old item) distribution is less than that of the noise distribution (which has unit variance), middle panels for when they are equal in variance, and right panels for the (typical) case when the signal distribution is more variable than the noise distribution.

When criterial variability is zero, Equation 2 reduces to the familiar form of the unequal-variance model of TSD:

in which the slope of the function is the reciprocal of the signal standard deviation and the y-intercept is μ1/σ1. When the distributions are assumed to have equal variance, as shown in the top panel in Figure 1, the slope of this line is 1.

When stimulus variability is zero and criterion variability is nonzero, as in the bottom example of the scenario depicted in Figure 1, the isosensitivity function is:

and when stimulus variability is nonzero but equal for the two distributions, the zROC is:

In both cases, the function has a slope of 1 and is thus identical to the case in which representational variability is nonzero but does not vary with stimulus type; thus, there is no principled way of using the isosensitivity function to distinguish between the two hypothetical cases shown in Figure 1, in which either evidence but not criterial variability or criterial but not evidence variability is present. Thankfully, given the actual form of the empirical isosensitivity function—which typically reveals a nonunit slope—we can use the experimental technique presented later in this paper to disentangle these two bases.
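The three special cases discussed above can be written out explicitly. The following forms are a sketch obtained by setting the relevant variance terms in the general isosensitivity function to zero or to equality, under the stated convention that the unstudied distribution has mean 0; they are offered as a reading aid and may differ in notation from the typeset equations.

```latex
\begin{align*}
\sigma_c = 0:&\qquad z_{HR} = \frac{1}{\sigma_1}\, z_{FAR} + \frac{\mu_1}{\sigma_1}\\[4pt]
\sigma_{e_0} = \sigma_{e_1} = 0,\ \sigma_c > 0:&\qquad z_{HR} = z_{FAR} + \frac{\mu_1}{\sigma_c}\\[4pt]
\sigma_{e_0} = \sigma_{e_1} = 1,\ \sigma_c > 0:&\qquad z_{HR} = z_{FAR} + \frac{\mu_1}{\sqrt{1 + \sigma_c^2}}
\end{align*}
```

The second and third lines share a unit slope and differ only in how the intercept is scaled, which is why the isosensitivity function alone cannot separate the two sources of variability in these cases.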

Measures of accuracy with criterion variability

Because empirical isosensitivity functions exhibit nonunit slope, we need to consider measures of accuracy that generalize to the case when evidence distributions are not of equal variance. This section provides the rationale and derivations for ND-TSD generalizations of two commonly used measures, da and de.

There are three basic ways of characterizing accuracy (or, variously, discriminability or sensitivity) in the detection task. First, accuracy is related to the degree to which the evidence distributions overlap, and is thus a function of the distance between them, as well as their variances. Second, accuracy is a function of the distance of the isosensitivity line from an arbitrary point on the line that represents complete overlap of the distributions (and thus chance levels of accuracy on the task). Finally, accuracy can be thought of as the amount of area below an isosensitivity line, an amount that increases to 1 when performance is perfect and drops to 0.5 when performance is at chance. Each of these perspectives has interpretive value: the distribution-overlap conceptualization is easiest to relate to the types of figures associated with TSD (like the top part of Figure 1); distance-based measures emphasize the desirable psychometric qualities of the statistic (e.g., that they are on a ratio scale; Matzen & Benjamin, in press); and area-based measures bear a direct and transparent relation with forced-choice tasks. All measures can be intuitively related to the geometry of the isosensitivity space.

To derive measures of accuracy, we shall deal with the distances from the isosensitivity line, as defined by Equation 2.5 Naturally, there are an infinite number of distances from a point to a line, so it is necessary to additionally restrict our definition. Here we do so by using the shortest possible distance from the origin to the line, which yields a simple linear transformation of da (Schulman & Mitchell, 1966). In Appendix A, we provide an analogous derivation for de, which is the distance from the origin to the point on the isosensitivity line that intersects with a line perpendicular to the isosensitivity line. These values also correspond to distances on the evidence axis scaled by the variance of the underlying evidence distributions: de corresponds to the distance between the distributions, scaled by the arithmetic average of the standard deviations, and da corresponds to distance in terms of the root-mean-square average of the standard deviations (Macmillan & Creelman, 2005). For the remainder of this paper, we will use da, as it is quite commonly used in the literature (e.g., Banks, 2000; Matzen & Benjamin, in press), is easily related to area-based measures of accuracy, and provides a relatively straightforward analytic form.

The generalized version of da can be derived by solving for the point at which the isosensitivity function must intersect with a line of slope (-1/m):

The intersection point of Equation 2 and this equation is

which yields a distance of

from the origin. This value is scaled by √2 in order to determine the length of the hypotenuse on a triangle with sides of length noisy d*a (Simpson & Fitter, 1973):

| Equation 3 |

The area measure AZ also bears a simple relationship with d*a:
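The steps of this derivation can be sketched as follows, writing the isosensitivity function as a line with slope m and intercept b and assuming the slope and intercept implied by the definitions given earlier (unstudied distribution with mean 0 and unit variance). The symbols m and b are shorthand introduced here for illustration.

```latex
\begin{align*}
m &= \sqrt{\frac{1 + \sigma_c^2}{\sigma_1^2 + \sigma_c^2}}, \qquad
b = \frac{\mu_1}{\sqrt{\sigma_1^2 + \sigma_c^2}}\\[4pt]
\text{perpendicular through the origin:}\quad z_{HR} &= -\frac{1}{m}\, z_{FAR}\\[4pt]
\text{intersection:}\quad (z_{FAR},\, z_{HR}) &= \left(\frac{-mb}{1 + m^2},\ \frac{b}{1 + m^2}\right)\\[4pt]
\text{distance from the origin:}\quad \frac{b}{\sqrt{1 + m^2}} &= \frac{\mu_1}{\sqrt{\sigma_1^2 + 2\sigma_c^2 + 1}}\\[4pt]
d_a^{*} &= \frac{\sqrt{2}\,\mu_1}{\sqrt{\sigma_1^2 + 2\sigma_c^2 + 1}}
  && \text{(cf. Equation 3)}\\[4pt]
A_Z &= \Phi\!\left(\frac{d_a^{*}}{\sqrt{2}}\right)
\end{align*}
```

Setting the criterion variance to zero recovers the familiar expression for da in unequal-variance TSD, which is a useful check on the algebra.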

Empirical estimation of sources of variability

Because both criterial and evidence variability affect the slope of the isosensitivity function, it is difficult to isolate the contributions of each to performance. To do so, we must find conditions over which we can make a plausible case for criterial and evidential variance being independently and differentially related to a particular experimental manipulation. We start by taking a closer look at this question.

Units of variability for stimulus and criterial noise

Over what experimental factor is evidence presumed to vary? Individual study items probably vary in pre-experimental familiarity and also in the effect of a study experience. In addition, the waxing and waning of attention over the course of an experiment increases the item-related variability (see also DeCarlo, 2002).

Do these same factors influence criterial variability? By the arguments presented here, criterial variability related to item characteristics is mostly systematic in nature (see, e.g., Benjamin, 2003) and is thus independent of the variability modeled by Equation 1. We have specifically concentrated on nonsystematic variability, and have argued that it is likely a consequence of the cognitive burden of criterion maintenance. Thus, the portion of criterial variability with which we concern ourselves is trial-to-trial variability on the test. What is needed is a paradigm in which item variability can be dissociated from trial variability.

Ensemble recognition

In the experiment reported here, we use a variant of a clever paradigm devised by Nosofsky (1983) to investigate range effects in the absolute identification of auditory signals. In our experiment, subjects made recognition judgments for ensembles of items that varied in size. Thus, each test stimulus included a variable number of words (1, 2, or 4), all of which were old or all of which were new. The subjects’ task was to evaluate the ensemble of items and provide an “old” or “new” judgment on the group.

The size manipulation is presumed to affect stimulus noise—because each ensemble is composed of heterogeneous stimuli and is thus subject to item-related variance—but not criterial noise, because the items are evaluated within a single trial, as a group. Naturally, this assumption might be incorrect: subjects might, in fact, evaluate each item in an ensemble independently and with heterogeneous criteria. We will examine the data closely for evidence of a violation of the assumption of criterial invariance within ensembles.

Information integration

In order to use the data from ensemble recognition to separately evaluate criterial and stimulus variance, we must have a linking model of information integration within an ensemble, that is, a model of how information from multiple stimuli is evaluated jointly for the recognition decision. We shall consider two general models. The independent variability model proposes that the variance of the strength distribution, but not that of the criterial distribution, is affected by ensemble size, as outlined in the previous section. Four submodels are considered. The first two assume that evidence is averaged across the stimuli within an ensemble and differ only in whether criterial variability is permitted to be nonzero (ND-TSD) or not (TSD). The latter two assume that evidence is summed across the stimuli within an ensemble and, as before, differ in whether criterion variability is allowed to be nonzero. These models will be compared to the OR model, which proposes that subjects respond positively to an ensemble if any member within that set yields evidence greater than a criterion. This latter model embodies a failure of the assumption that the stimuli are evaluated as a group, and its success would imply that our technique for separating criterial and stimulus noise is invalid. Thus, a total of five models of information integration are considered.

Criterion placement

For each ensemble size, five criteria had to be estimated to generate performance on a 6-point rating curve. For all models except the two summation models, a version of the model was fit in which criteria were free to vary across ensemble size (yielding 15 free parameters, and henceforth referred to as without restriction) and another version was fit in which the criteria were constrained (with restriction) to be the same across ensemble sizes (yielding only 5 free parameters). Because the scale of the mean evidence values varies with ensemble size for the summation model, only one version was fit in which there were fifteen free parameters (i.e., they were free to vary across ensemble size).

Model flexibility

One important concern in comparing models, especially non-nested models like the OR model, is that a model may benefit from undue flexibility. That is, a model may account for a data pattern more accurately not because it is a more accurate description of the underlying generating mechanisms, but rather because its mathematical form affords it greater flexibility (Myung & Pitt, 2002). It may thus appear superior to another model by virtue of accounting for nonsystematic aspects of the data. There are several approaches we have taken to reduce concerns that ND-TSD may benefit from greater flexibility than its competitors.

First, we have adopted the traditional approach of using an index of model fit that is appropriate for nonnested models and penalizes models according to the number of their free parameters (AIC; Akaike, 1973). Second, we use a correction on the generated statistic that is appropriate for the sample sizes in use here (AICc; Burnham & Anderson, 2004). Third, we additionally report the Akaike weight metric, which, unlike the AIC or AICc, has a straightforward interpretation as the probability that a given model is the best among a set of candidate models. Fourth, in addition to reporting both AICc values and Akaike weights, we also report the number of subjects best fit by each model, ensuring that no model is either excessively penalized for failing to account for only a small number of subjects (but dramatically so) or bolstered by accounting for only a small subset of subjects considerably more effectively than the other models.
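The fit indices named above follow standard definitions, which can be sketched as below. The function names and the example values are hypothetical; the details of the actual fitting procedure are those described in the paper's appendices, not this snippet.

```python
import math


def aic(log_likelihood, k):
    """Akaike information criterion for a model with k free parameters."""
    return 2.0 * k - 2.0 * log_likelihood


def aicc(log_likelihood, k, n):
    """Small-sample corrected AIC for n observations."""
    if n - k - 1 <= 0:
        raise ValueError("AICc requires n > k + 1")
    return aic(log_likelihood, k) + (2.0 * k * (k + 1)) / (n - k - 1)


def akaike_weights(scores):
    """Convert a list of AIC or AICc scores into weights that sum to 1."""
    best = min(scores)
    relative = [math.exp(-0.5 * (s - best)) for s in scores]
    total = sum(relative)
    return [r / total for r in relative]


if __name__ == "__main__":
    # Hypothetical scores for three candidate models.
    print(akaike_weights([210.0, 212.0, 220.0]))
```

Lower scores indicate better fit after the complexity penalty, so the model with the lowest AICc receives the largest weight.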

Finally, we report in Appendix C the results of a large series of Monte Carlo simulations evaluating the degree to which ND-TSD has an advantage over TSD in terms of accounting for failures of assumptions common to the two models. We consider cases in which the evidence distributions are of a different form than assumed by TSD, and cases in which the decision rule is different from what we propose. To summarize the results from that exercise here, ND-TSD never accrues a higher AIC score or Akaike weight than TSD unless the generating distribution is ND-TSD itself. These results indicate that a superior fit of ND-TSD to empirical data is unlikely to reflect undue model flexibility when compared to TSD.

Experiment: Word ensemble recognition

In this experiment, we evaluate the effects of manipulating study time on recognition of word ensembles of varying sizes. By combining ND-TSD and TSD with a few simple models of information integration, we will be able to separately estimate the influence of criterial and evidence variability on recognition across those two study conditions. This experiment pits the models outlined in the previous section against one another.

Method

Subjects

Nineteen undergraduate students from the University of Illinois participated to partially fulfill course requirements for an introductory course in psychology.

Design

Word set size (one, two or four words in each set) was manipulated within-subjects in both experiments. Each subject participated in a single study phase and a single test phase. Subjects made their recognition responses on a 6-point confidence rating scale, and the raw frequencies of each response type were fit to the models in order to evaluate performance.

Materials

All words were obtained from the English Lexicon Project (Balota, Cortese, Hutchison, Neely, Nelson, Simpson, & Treiman, 2002). We drew 909 words with a mean word length of 5.6 (range: 4 – 8 letters) and mean log HAL frequency of 10.96 (range: 5.5 – 14.5). A random subset of 420 words was selected for the test list, which consisted of 60 single-item sets, 60 double-item sets, and 60 four-item sets. A random half of the items from each ensemble-size set was assigned to the study list. All study items were presented singly, while test items were presented in sets of one, two, or four items. Words presented in a single ensemble were either all previously studied or all unstudied. This resulted in 210 study item presentations and 180 test item presentations (90 old and 90 new). Again, every test presentation included all old or all new items; there were no trials on which old and new items were mixed in an ensemble.

Procedure

Subjects were tested individually in a small, well-lit room. Stimuli were presented, and subject responses were recorded, on PC-style computers programmed using the Psychophysics Toolbox for MATLAB (Brainerd, 1997; Pelli, 1997). Prior to the study phase, subjects read instructions on the computer screen informing them that they were to be presented with a long series of words that they were to try to remember as well as they could. They began the study phase by pressing the space bar. During the study phase, words were presented for 1.5 seconds. There was a 333 ms inter-stimulus interval (ISI) between presentations. At the conclusion of the study phase, subjects were given instructions for the test phase. Subjects were informed that test items would be presented in sets of one, two, or four words, and that they were to determine if the word or words that they were presented had been previously studied or not. They began the test phase by pressing the space bar. There was no time limit on the test.

Results

Table 1 shows the frequencies by test condition summed across subjects. Discriminability (da) was estimated separately for each ensemble size and study time condition by maximum-likelihood estimation (Ogilvie & Creelman, 1968), and is also displayed in Table 1. All model fitting reported below was done on the data from individual subjects because of well-known problems with fitting group data (see, e.g., Estes & Maddox, 2005) and particular problems with recognition data (Heathcote, 2003). None of the subjects or individual trials were omitted from analysis. Details of the fitting procedure are outlined in Appendix D.

Table 1.

Summed rating frequencies over ensemble size and old/new test items. Standard equal-variance estimates of model parameters are shown on the right (μ1 = mean of the signal distribution, σ1 = standard deviation of the signal distribution, m = slope of the isosensitivity function, and da is a standard estimate of memory sensitivity).

| Condition | 1 | 2 | 3 | 4 | 5 | 6 | μ1 | σ1 | m | da |
|---|---|---|---|---|---|---|---|---|---|---|
| Size = 1 | | | | | | | | | | |
| new | 105 | 109 | 124 | 105 | 67 | 60 | 0.75 | 1.28 | 0.78 | 0.64 |
| old | 62 | 65 | 64 | 108 | 103 | 168 | | | | |
| Size = 2 | | | | | | | | | | |
| new | 107 | 139 | 115 | 99 | 70 | 40 | 1.25 | 1.52 | 0.66 | 0.88 |
| old | 47 | 67 | 81 | 80 | 82 | 213 | | | | |
| Size = 4 | | | | | | | | | | |
| new | 134 | 146 | 108 | 76 | 57 | 49 | 1.50 | 1.60 | 0.62 | 1.10 |
| old | 53 | 54 | 61 | 56 | 104 | 242 | | | | |
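To show how rating frequencies map onto points of an isosensitivity function, the sketch below converts the summed Size = 1 frequencies into cumulative hit and false-alarm rates and then into normal-deviate coordinates. It assumes that higher ratings indicate greater confidence that the ensemble is old, and it fits a line by ordinary least squares purely for illustration; this is not the maximum-likelihood procedure used in the paper, and it operates on pooled rather than individual-subject data.

```python
from statistics import NormalDist

# Summed rating frequencies for Size = 1, copied from Table 1 (ratings 1..6).
NEW_SIZE1 = [105, 109, 124, 105, 67, 60]
OLD_SIZE1 = [62, 65, 64, 108, 103, 168]


def cumulative_rates(freqs):
    """P(rating >= k) for k = 6, 5, 4, 3, 2 (one value per criterion)."""
    total = sum(freqs)
    rates, running = [], 0
    for count in reversed(freqs[1:]):  # accumulate from rating 6 down to 2
        running += count
        rates.append(running / total)
    return rates


def zroc_points(new_freqs, old_freqs):
    """(zFAR, zHR) pairs, one per criterion."""
    z = NormalDist().inv_cdf
    fars, hrs = cumulative_rates(new_freqs), cumulative_rates(old_freqs)
    return [(z(f), z(h)) for f, h in zip(fars, hrs)]


def least_squares_line(points):
    """Slope and intercept of the ordinary least-squares line through points."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxy = sum((x - mx) * (y - my) for x, y in points)
    sxx = sum((x - mx) ** 2 for x, _ in points)
    slope = sxy / sxx
    return slope, my - slope * mx


if __name__ == "__main__":
    print(least_squares_line(zroc_points(NEW_SIZE1, OLD_SIZE1)))
```

Six rating categories yield five criterion points, which is why five criteria are estimated per ensemble size.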

The subject-level response frequencies were used to evaluate the models introduced earlier. Of particular interest is the independent variability model that we use to derive separate estimates of criterial and evidence variability. That model's performance is evaluated with respect to several other models. One is a sub-model (zero criterial variance model) that is equivalent to the independent variability model but assumes no criterial variance. For both the model with criterion variability (ND-TSD) and without (TSD), two different decision rules (averaging versus summation) are tested. Another model (the OR model) assumes that each stimulus within an ensemble is evaluated independently and that the decision is made on the basis of combining those independent decisions via an OR rule. Comparison of the independent variability model with the OR model is used to evaluate the claim that the stimulus is evaluated as an ensemble, rather than as n individual items. Comparison of the independent variability model with the nested zero-criterial-variance model is used to test for the presence of criterial variability.

Independent variability models

The averaging version of this model is based on ND-TSD and the well-known relationship between the sampling distribution of the mean and sample size, as articulated by the Central Limit Theorem. Other applications of a similar rule in psychophysical tasks (e.g., Swets & Birdsall, 1967; Swets, Shipley, McKey, & Green, 1959) have confirmed this assumption of averaging stimuli or samples, but we will evaluate it carefully here because of the novelty of applying that assumption to recognition memory.

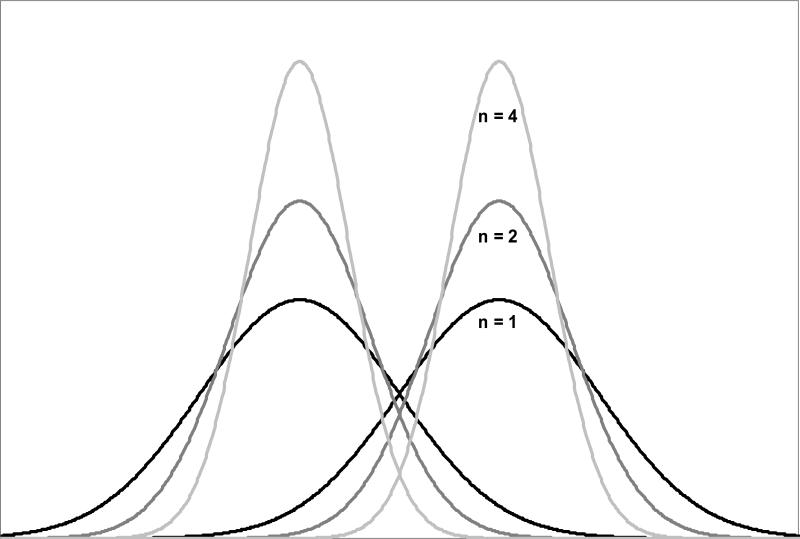

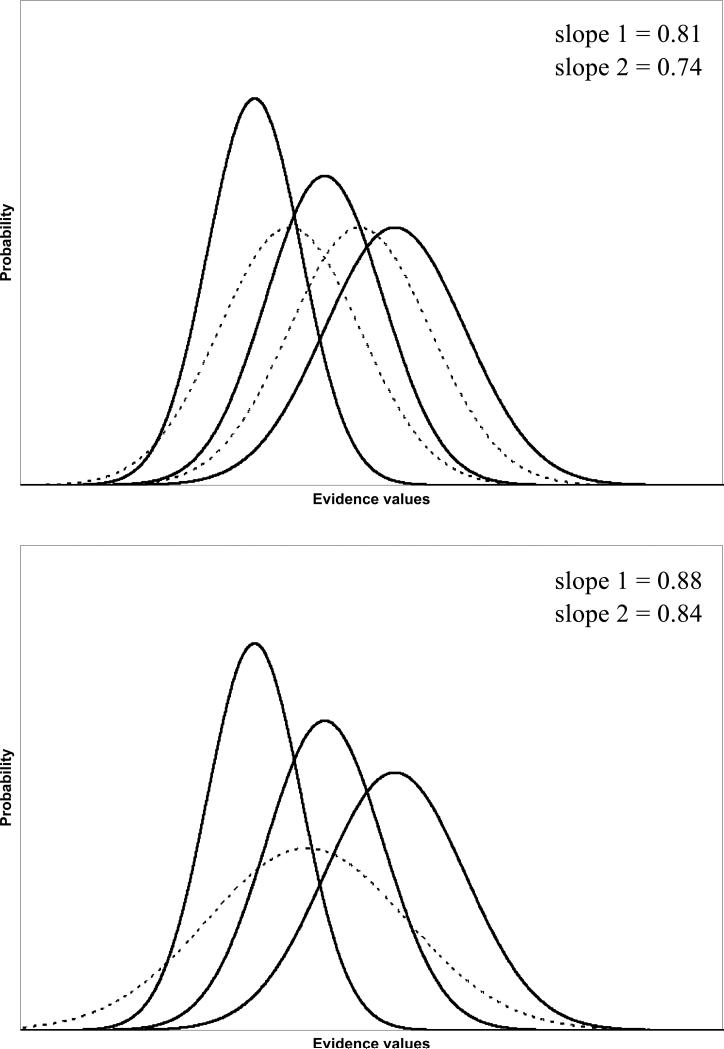

If the probability distribution of stimulus strength has variance σ², then the distribution of the mean strength of an ensemble of n stimuli drawn from that distribution has variance σ²/n. This model assumes that the distribution of strength values is affected by n, but that criterial variability is not. Thus, the isosensitivity function of the criterion-variance ensemble recognition model is:

| Equation 4 |

Because we fit the frequencies directly rather than the derived estimates of distance (unlike previous work: Nosofsky, 1983), there is no need to fix any parameters (such as the distance between the distributions) a priori. The hypothesized effect of the ensemble size manipulation is shown in Figure 3, in which the variance of the stimulus distributions decreases with increasing size. For clarity, the criterion distribution is not shown.

Figure 3.

Predictions of the variability models of information integration for the relationship between ensemble size (n) and the shapes of the evidence distributions.

The fit of this model is compared to a simpler model that assumes no criterial variability:

| Equation 5 |

Another possibility is that evidence is summed, rather than averaged, within an ensemble. In this case, the size of the ensemble scales both the signal mean and the stimulus variances, and the isosensitivity function assumes the form:

| Equation 6 |

when criterion variance is nonzero and

| Equation 7 |

when criterion variance is zero. Note that Equations 7 and 5 are equivalent, demonstrating that the summation rule is equivalent to the averaging rule when criterion variability is zero.
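The four ensemble models can be written out from the scaling assumptions just described. The forms below are a sketch: they assume the unstudied distribution has mean 0 and unit variance for a single item, that averaging divides each stimulus variance by n, and that summation multiplies the signal mean and each stimulus variance by n. Notation may differ from the typeset equations.

```latex
\begin{align*}
\text{averaging, } \sigma_c > 0:&\quad
z_{HR} = \sqrt{\frac{1/n + \sigma_c^2}{\sigma_1^2/n + \sigma_c^2}}\; z_{FAR}
  + \frac{\mu_1}{\sqrt{\sigma_1^2/n + \sigma_c^2}}
  && \text{(cf. Equation 4)}\\[4pt]
\text{averaging, } \sigma_c = 0:&\quad
z_{HR} = \frac{1}{\sigma_1}\, z_{FAR} + \frac{\sqrt{n}\,\mu_1}{\sigma_1}
  && \text{(cf. Equation 5)}\\[4pt]
\text{summation, } \sigma_c > 0:&\quad
z_{HR} = \sqrt{\frac{n + \sigma_c^2}{n\sigma_1^2 + \sigma_c^2}}\; z_{FAR}
  + \frac{n\,\mu_1}{\sqrt{n\sigma_1^2 + \sigma_c^2}}
  && \text{(cf. Equation 6)}\\[4pt]
\text{summation, } \sigma_c = 0:&\quad
z_{HR} = \frac{1}{\sigma_1}\, z_{FAR} + \frac{\sqrt{n}\,\mu_1}{\sigma_1}
  && \text{(cf. Equation 7)}
\end{align*}
```

With zero criterion variance the factor of n cancels from the slope and leaves a factor of the square root of n in the intercept under both rules, which is the equivalence noted in the text.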

We must also consider the possibility that our assumption of criterial invariance within an ensemble is wrong. If criterial variance is affected by ensemble size in the same purely statistical manner as is stimulus variance, then both stimulus and criterion variance terms are affected by n. Under these conditions, the model is:

| Equation 8 |

Two aspects of this model are important. First, it can be seen that it is impossible to separately estimate the two sources of variability, because they can be combined into a single super-parameter. Second, as shown in Appendix B, this model reduces to the same form as Equation 5, and thus can fit the data no better than the zero criterial variability model. Consequently, if the zero criterial variability model is outperformed by the independent variability model, then we have supported the assumption that criterial variability is invariant across an ensemble.
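These relationships among the ensemble models can be checked numerically. The sketch below encodes the slope and intercept implied by each scaling assumption (stimulus variances divided by n under averaging, multiplied by n under summation, and both stimulus and criterion variances divided by n in the case where criterial invariance fails). Function names and parameter values are hypothetical.

```python
import math


def averaging(mu1, sigma1, sigma_c, n):
    """Evidence averaged over n items; criterion variance unaffected by n."""
    denom = math.sqrt(sigma1 ** 2 / n + sigma_c ** 2)
    return math.sqrt(1.0 / n + sigma_c ** 2) / denom, mu1 / denom


def summation(mu1, sigma1, sigma_c, n):
    """Evidence summed over n items; criterion variance unaffected by n."""
    denom = math.sqrt(n * sigma1 ** 2 + sigma_c ** 2)
    return math.sqrt(n + sigma_c ** 2) / denom, n * mu1 / denom


def scaled_criterion(mu1, sigma1, sigma_c, n):
    """Both stimulus and criterion variances shrink by 1/n."""
    denom = math.sqrt((sigma1 ** 2 + sigma_c ** 2) / n)
    return math.sqrt((1.0 + sigma_c ** 2) / n) / denom, mu1 / denom


if __name__ == "__main__":
    for n in (1, 2, 4):
        # Each call returns (slope, intercept) of the zROC for ensemble size n.
        print(n, averaging(1.0, 1.25, 0.5, n), scaled_criterion(1.0, 1.25, 0.5, n))
```

Under the independent variability model the slope changes with ensemble size, whereas under the scaled-criterion model the slope is constant and only the intercept grows with the square root of n. That constancy is the signature shared with the zero criterial variability model, so the two cannot be told apart by fit.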

OR model

The OR model assumes that each stimulus within an ensemble is evaluated independently, and that subjects respond positively to a set if any one of those stimuli surpasses a criterion value (e.g., Macmillan & Creelman, 2005; Wickens, 2002). This is an important “baseline” against which to evaluate the information integration models because the interpretation of those models hinges critically on the assumption that the ensemble manipulation alters representational variability in predictable ways embodied by Equations 4 – 8. The OR model embodies a failure of this assumption: If subjects do not average or sum evidence across the stimuli in an ensemble, but rather evaluate each stimulus independently, then this multidimensional extension of the standard TSD model will provide a superior fit to the data.

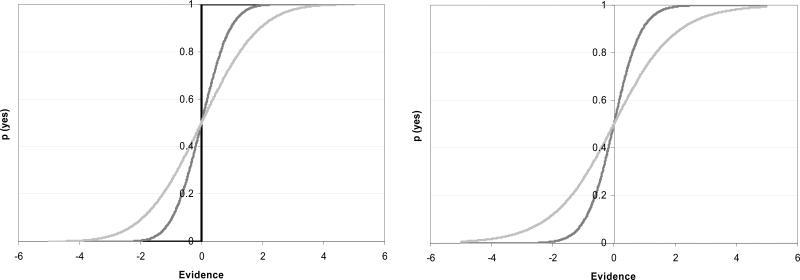

The situation is simplified because the stimuli within an ensemble (and, in fact, across the entire study set) can be thought of as multiple instances of a common random variable. The advantage of this situation is apparent in Figure 4, which depicts the 2-dimensional TSD representation of the OR model applied to 2 stimuli. Here the strength distributions are shown jointly as density contours; the projection of the marginal distributions onto either axis represents the standard TSD case. Because the stimuli are represented by a common random variable, those projections are equivalent.

Figure 4.

The multidimensional formulation of the OR model for information integration. Distributions are shown from above. Given a criterion value and performance on a single stimulus, the shaded area is equal to the complement of predicted performance on the joint stimulus.

According to the standard TSD view, a subject provides a rating of r to a stimulus if and only if the evidence value yielded by that stimulus exceeds the criterion associated with that rating, Cr. Thus, the probability of at least one of n independent and identically distributed instances of that random variable exceeding that criterion is

| Equation 9 |

The shaded portion of the figure corresponds to the bracketed term in Equation 9. Equivalently, the region of endorsement for a subject is above or to the right of the shaded area (which extends leftward and downward to -∞).
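
The OR rule can be sketched in a few lines. Because Equation 9 is not reproduced here, the code uses the standard probability that at least one of n independent and identically distributed draws exceeds a criterion, which is one minus the probability that all n fall below it. The normal evidence distribution, the function name, and the default parameters are assumptions made for illustration.

```python
from statistics import NormalDist


def or_rule_probability(criterion, n, mean=0.0, sd=1.0):
    """P(at least one of n i.i.d. normal evidence values exceeds criterion)."""
    # Probability that a single stimulus falls at or below the criterion.
    p_below = NormalDist(mean, sd).cdf(criterion)
    # All n stimuli fall below with probability p_below ** n (the region
    # extending leftward and downward from the criterion); endorsement of
    # the ensemble is the complement of that event.
    return 1.0 - p_below ** n


single = or_rule_probability(criterion=0.0, n=1)
pair = or_rule_probability(criterion=0.0, n=2)
quad = or_rule_probability(criterion=0.0, n=4)
```

With the criterion held fixed, the predicted endorsement rate rises with ensemble size for studied and unstudied ensembles alike, which is what distinguishes this rule from averaging.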

Model fitting

Details of the model-fitting procedure are provided in Appendix D.

Model results

The performance of the models is shown in Table 2, which reports AICc, Akaike weights, and the number of individual subjects best fit by each model. It is clear that the superior fit was provided by ND-TSD with the restriction of equivalent criteria across ensemble conditions, and with the averaging rather than the summation process. That model provided the best fit (lowest AICc score) for more than 80% of the individual subjects, and had (on average across subjects) a greater than 80% chance of being the best model in the set tested. This result is consistent with the presence of criterial noise and additionally with the suggestion that subjects have a very difficult time adjusting criteria across trials (e.g., Ratcliff & McKoon, 2000).
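
For readers unfamiliar with these statistics, the following sketch shows the standard definitions of the small-sample corrected AIC and of Akaike weights. It is not the fitting code described in Appendix D; the function names and the example scores are hypothetical. Because the weights in Table 2 are computed per subject and then averaged, applying this function to the mean AICc values will not reproduce the tabled mean weights.

```python
from math import exp


def aicc(log_likelihood, k, n_obs):
    """Corrected AIC for a model with k free parameters and n_obs observations."""
    aic = -2.0 * log_likelihood + 2.0 * k
    # Small-sample correction; requires n_obs > k + 1.
    return aic + (2.0 * k * (k + 1)) / (n_obs - k - 1)


def akaike_weights(scores):
    """Convert a list of AIC or AICc scores into weights that sum to 1."""
    best = min(scores)
    # Relative likelihood of each model given its distance from the best score.
    relative = [exp(-0.5 * (score - best)) for score in scores]
    total = sum(relative)
    return [value / total for value in relative]


# Hypothetical scores for three candidate models fit to one subject.
weights = akaike_weights([100.0, 102.0, 110.0])
```

Each weight can be read as the probability that the corresponding model is the best of the set under comparison, given the data.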

Table 2.

Corrected AIC values (AICc), Akaike weights, and number of subjects best fit by each model. With and without restriction refer to models in which criteria were allowed to vary across ensemble size (without restriction) or were not (with restriction).

| Model and parameter | Without restriction | With restriction |
|---|---|---|
| ND-TSD Averaging model | | |
| Mean AICc | 154 | 116 |
| Mean Akaike weight | 0.00 | 0.82 |
| Number of subjects | 0 | 16 |
| TSD Averaging model | | |
| Mean AICc | 146 | 128 |
| Mean Akaike weight | 0.00 | 0.13 |
| Number of subjects | 0 | 3 |
| ND-TSD Summation model | | |
| Mean AICc | 152 | |
| Mean Akaike weight | 0.00 | |
| Number of subjects | 0 | |
| TSD Summation model | | |
| Mean AICc | 150 | |
| Mean Akaike weight | 0.05 | |
| Number of subjects | 1 | |
| OR model | | |
| Mean AICc | 146 | 159 |
| Mean Akaike weight | 0.00 | 0.00 |
| Number of subjects | 0 | 0 |

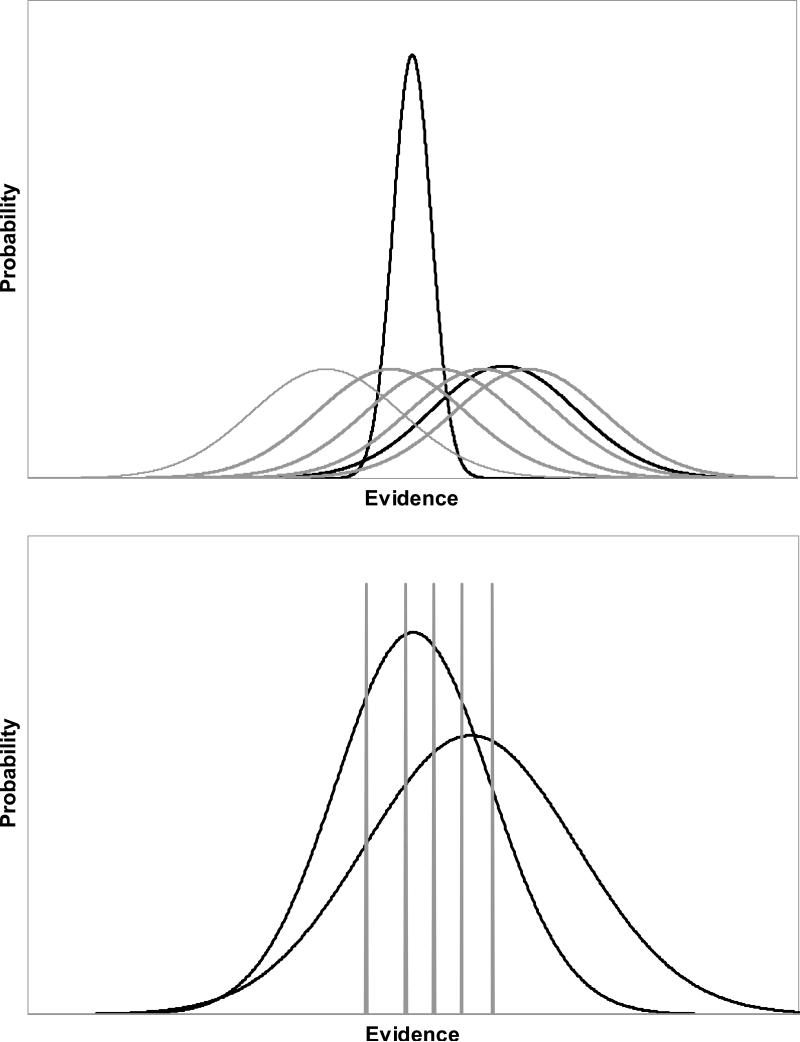

A depiction of the fit of the winning model is shown in the top panel of Figure 5, in which it can be seen that ND-TSD provides a quite different conceptualization of the recognition process than does standard TSD (shown in the bottom panel). In addition to criterial variance, the variance of the studied population of items is estimated to be much greater relative to the unstudied population. This suggests that the act of studying words may confer quite substantial variability, and that criterial variance acts to mask that variability. The implications of this will be considered in the next major section.
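
The masking described here can be illustrated with a short calculation. The sketch assumes the usual Gaussian parameterization in which unstudied items have unit variance and criterion noise adds the same variance to both decision variables, so that the normal-deviate slope is the ratio of the two resulting standard deviations. The function names and numerical values are hypothetical and are not the fitted estimates.

```python
from math import sqrt


def zroc_slope(sigma_old, sigma_criterion=0.0, sigma_new=1.0):
    """Slope of the isosensitivity function in normal-deviate coordinates."""
    return sqrt(sigma_new ** 2 + sigma_criterion ** 2) / sqrt(
        sigma_old ** 2 + sigma_criterion ** 2
    )


def implied_sigma_old(slope, sigma_criterion=0.0, sigma_new=1.0):
    """Studied-item standard deviation needed to produce an observed slope."""
    variance = (sigma_new ** 2 + sigma_criterion ** 2) / slope ** 2
    return sqrt(variance - sigma_criterion ** 2)


# The same observed slope implies more studied-item variability once
# criterion noise is admitted into the model.
without_noise = implied_sigma_old(0.8, sigma_criterion=0.0)
with_noise = implied_sigma_old(0.8, sigma_criterion=1.0)
```

In this parameterization criterion noise pulls the slope toward 1, so a model that omits it must underestimate the variance of the studied distribution in order to match a given slope.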

Figure 5.

Depiction of the results from the winning ND-TSD model (top panel) and traditional TSD (bottom panel). Dark lines are evidence distributions and lighter lines represent criteria.

Psychological implications of criterial variance

When interpreted in the context of TSD, superior performance in one condition versus another, or as exhibited by one subject over another, is attributable either to a greater distance between the means of the two probability distributions or to lesser variability of the distributions. In ND-TSD, superior performance can additionally reflect lower levels of criterial variability. In this section, we outline several current and historical problems that may benefit from an explicit consideration of criterial variability. The first two issues we consider underlie current debates about the relationship between the slope of the isosensitivity function and theoretical models of recognition and of decision-making. The third section revisits the standoff between deterministic and probabilistic response models and demonstrates how decision noise can inform that debate. The fourth, fifth, and sixth issues address the effects of aging, the consequences of fatigue, and the question of how subjects make introspective remember/know judgments in recognition tasks. These final points are all relevant to current theoretical and empirical debates in recognition memory.

Effects of recognition criterion variability on the isosensitivity function

Understanding the psychological factors underlying the slope of the isosensitivity function has proven to be somewhat of a puzzle in psychology in general and in recognition memory in particular. Different tasks appear to yield different results: for example, recognition of odors yields functions with slopes of approximately 1 (Rabin & Cain, 1984; Swets, 1986b), whereas recognition of words typically yields considerably shallower slopes (Ratcliff et al., 1992, 1994). That latter result is particularly important because it is inconsistent with a number of prominent models of recognition memory (Eich, 1982; Murdock, 1982; Pike, 1984). The form of the isosensitivity function has even been used to explore variants of recognition memory, including memory for associative relations (Kelley & Wixted, 2001; Rotello, Macmillan, & Van Tassel, 2000) and memory for source (Healy et al., 2005; Hilford et al., 2002).

One claim about the slope of the isosensitivity function in recognition memory is the constancy-of-slopes generalization, which owes to the pioneering work of Ratcliff and his colleagues (Ratcliff et al., 1992, 1994), who found that slopes were not only consistently less than unity, but also relatively invariant with manipulations of learning. Later work showed, however, that this may not be the case (Glanzer et al., 1999; Heathcote, 2003; Hirshman & Hostetter, 2000). In most cases, it appears as though variables that increase performance decrease the slope of the isosensitivity function (for a review, see Glanzer et al., 1999). This relation holds for manipulations of normative word frequency (Glanzer & Adams, 1990; Glanzer et al., 1999; Ratcliff et al., 1994), concreteness (Glanzer & Adams, 1990), list length (Elam, 1991, as reported in Glanzer et al., 1999; Gronlund & Elam, 1994; Ratcliff et al., 1994; Yonelinas, 1994), retention interval (Wais, Wixted, Hopkins, & Squire, 2006), and study time (Glanzer et al., 1999; Hirshman & Hostetter, 2000; Ratcliff et al., 1992, 1994).

These two findings—slopes of less than 1 and decreasing slopes with increasing performance—go very much hand in hand from a measurement perspective. Consider the limiting case, in which learning has been so weak and memory thus so poor, that discrimination between the old and new items on a recognition test is nil. The isosensitivity function must have a slope of 1 in both probability and normal-deviate coordinates in that case, because any change in criterion changes the HR and FAR by the same amount. As that limiting case is approached, it is thus not surprising that slopes move towards 1. The larger question in play here is whether the decrease in performance that elicits that effect owes specifically to shifting evidence distributions, or whether criterial variance might also play a role. We tackle this question below by carefully examining the circumstances in which a manipulation of learning affects the slope and the circumstances in which it does not.
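
The limiting case can be verified directly. In this sketch, which assumes Gaussian evidence with unit variance for unstudied items, the studied and unstudied distributions are made identical, so every criterion yields equal hit and false-alarm rates and the function has unit slope in normal-deviate coordinates. The function names and criterion values are hypothetical.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def rates(criterion, mu_old=0.0, sd_old=1.0):
    """Hit rate and false-alarm rate for a single criterion."""
    hit_rate = 1.0 - NormalDist(mu_old, sd_old).cdf(criterion)
    false_alarm_rate = 1.0 - STANDARD_NORMAL.cdf(criterion)
    return hit_rate, false_alarm_rate


def z_slope(criterion_a, criterion_b, mu_old=0.0, sd_old=1.0):
    """Slope between two points of the function in normal-deviate coordinates."""
    hr_a, far_a = rates(criterion_a, mu_old, sd_old)
    hr_b, far_b = rates(criterion_b, mu_old, sd_old)
    rise = STANDARD_NORMAL.inv_cdf(hr_b) - STANDARD_NORMAL.inv_cdf(hr_a)
    run = STANDARD_NORMAL.inv_cdf(far_b) - STANDARD_NORMAL.inv_cdf(far_a)
    return rise / run


# Nil discrimination: old and new items share one distribution.
nil_points = [rates(c) for c in (-1.0, 0.0, 1.0)]
nil_slope = z_slope(-1.0, 1.0)
```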

The next problem we consider is why isosensitivity functions estimated from the rating task differ from those estimated by other means and whether such differences are substantive and revealing of fundamental problems with TSD. In doing so, we consider what role decision noise might play in promoting such differences, and also whether reports of the demise of TSD (Balakrishnan, 1998a) may be premature.

Inconsistent effects of memory strength on slope

The first puzzle we will consider concerns the conflicting reports on the effects of manipulations of learning on the slope of the isosensitivity function. Some studies have revealed that the slope does not change with manipulations of learning (Ratcliff et al., 1992, 1994), whereas others have supported the idea that the slope decreases with additional learning or memory strength. While some models of recognition memory predict changes in slope (Gillund & Shiffrin, 1984; Hintzman, 1986) with increasing memory strength, others either predict unit slope (Murdock, 1982) or invariant slope with memory strength. This puzzle is exacerbated by the lack of entrenched theoretical mechanisms that offer a reason why the effect should sometimes obtain and sometimes not.

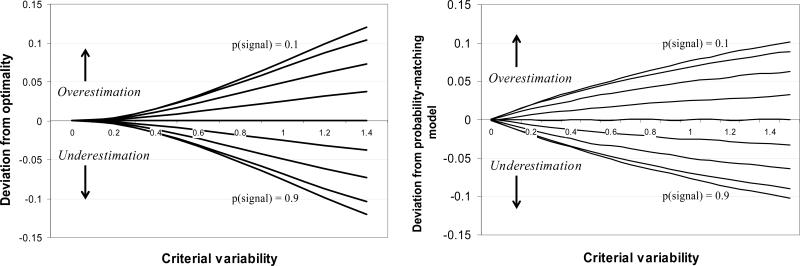

To understand the way in which criterion noise might underlie this inconsistency, it is important to note the conditions under which changes in slope are robust and the conditions under which they are not. Glanzer et al. (1999) reviewed these data and their results provide an important clue. Of the four variables for which a reasonable number of data were available (≥5 independent conditions), list length and word frequency manipulations clearly demonstrated the effect of learning on slope: shorter list lengths and lower word frequency led to higher accuracy and also exhibited a lower slope (in 94% of their comparisons). In contrast, greater study time and more repetitions led to higher accuracy but revealed the effect on slope less consistently (on only 68% of the comparisons).

To explore this discrepancy, we will consider the criterion-setting strategies that subjects bring to bear in recognition, and how different manipulations of memory might interact with those strategies. There are two details about the process of criterion setting and adjustment that are informative. First, the control processes that adjust criteria are informed by an ongoing assessment of the properties of the testing regimen. This may include information based on direct feedback (Dorfman & Biderman, 1971; Kac, 1962; Thomas, 1973, 1975) or derived from a limited memory store of recent experiences (Treisman, 1987; Treisman & Williams, 1984). In either case, criterion placement is likely to be a somewhat noisy endeavor until a steady state is reached, if it ever is. From the perspective of these models, it is not surprising that support has been found for the hypothesis that subjects set a criterion as a function of the range of experienced values (Parducci, 1984), even in recognition memory (Hirshman, 1995). These theories have at their core the idea that recognizers home in on optimal criterion placement by assessing, explicitly or otherwise, the properties of quantiles of the underlying distributions. Because this process is subject to a considerable amount of irreducible noise (for example, from the particular order in which early test stimuli are received), decision variability is a natural consequence. To the degree that criterion variability is a function of the range of sampled evidence values (cf. Nosofsky, 1983), criterion noise will be greater when that range is larger.

The second relevant aspect of the criterion-setting process is that it takes advantage of the information conveyed by the individual test stimuli. A stimulus may reveal something about the degree of learning a prior exposure would have afforded it, and subjects appear to use this information in generating an appropriate criterion (Brown et al., 1977). Such a mechanism has been proposed as a basis for the mirror effect (Benjamin, 2003; Benjamin, Bjork, & Hirshman, 1998; Hirshman, 1995), and, according to such an interpretation, reveals the ability of subjects to adjust criteria on an item-by-item basis in response to idiosyncratic stimulus characteristics. It is noteworthy that within-list mirror effects are commonplace for stimulus variables, such as word frequency, meaningfulness, and word concreteness (Glanzer & Adams, 1985, 1990), but typically absent for experimental manipulations of memory strength, such as repetition (Higham et al., in press; Stretch & Wixted, 1998) or study time (Verde & Rotello, 2007). This difference has been taken to imply that recognizers are not generally willing or able to adjust criteria within a test list based on an item's perceived strength class.