Abstract

The role of attention in perceptual learning has been a topic of controversy. Sensory psychophysicists/physiologists and animal learning psychologists have conducted numerous studies to examine this role, but because the two groups use very different approaches, their findings have never been effectively integrated. In the present article, we review studies from both lines of research and use exposure-based learning experiments to discuss the role of attention in perceptual learning. In addition, we propose a model in which exposure-based learning occurs only when a task-irrelevant feature is weak. We hope that this article will provide new insight into the role of attention in perceptual learning for both sensory psychophysicists/physiologists and animal learning psychologists.

In 1963, Eleanor Gibson defined perceptual learning as follows: “Any relatively permanent and consistent change in the perception of a stimulus array following practice or experience with this array will be considered perceptual learning” (p. 29). Today, perceptual learning is defined more broadly, generally as improvement on a perceptual or sensory task through practice or experience, a definition that includes cases in which the improvement is not accompanied by any change in perception (Fahle, Edelman, & Poggio, 1995; Watanabe, Náñez, & Sasaki, 2001).

Perceptual learning has been demonstrated in every sensory system: the visual (see, e.g., Ahissar & Hochstein, 1993, 1997; Ball & Sekuler, 1987; Beard, Levi, & Reich, 1995; Crist, Li, & Gilbert, 2001; Dosher & Lu, 1998, 1999; Fahle et al., 1995; Fine & Jacobs, 2002; Fiorentini & Berardi, 1980; Fiser & Aslin, 2002; Furmanski, Schluppeck, & Engel, 2004; Herzog & Fahle, 1998, 1999; Karni & Sagi, 1993; Koyama, Harner, & Watanabe, 2004; Poggio, Fahle, & Edelman, 1992; Ramachandran & Braddick, 1973; Schoups, Vogels, & Orban, 1995; Schoups, Vogels, Qian, & Orban, 2001; Schwartz, Maquet, & Frith, 2002; Watanabe et al., 2001), the auditory (Amitay, Irwin, & Moore, 2006; Bao, Chan, & Merzenich, 2001; Demany, 1985; Polley, Steinberg, & Merzenich, 2006), the olfactory (Bende & Nordin, 1997), the gustatory (Owen & Machamer, 1979), and the tactile (Dinse, Ragert, Pleger, Schwenkreis, & Tegenthoff, 2003; Sathian & Zangaladze, 1997).

Perceptual learning is characterized by three distinctive aspects. First, perceptual learning is often highly specific to low-level attributes of the stimulus. It is well known that visual perceptual learning is specific to stimulus features such as retinal location (Ahissar & Hochstein, 1997; Crist, Kapadia, Westheimer, & Gilbert, 1997; Fahle & Edelman, 1993; Fiorentini & Berardi, 1980; Karni & Sagi, 1991; McKee & Westheimer, 1978; Poggio et al., 1992; Saarinen & Levi, 1995; Sagi & Tanne, 1994; Shiu & Pashler, 1992), spatial frequency (Fiorentini & Berardi, 1980; Sowden, Rose, & Davies, 2002), and orientation (Fiorentini & Berardi, 1980; Poggio et al., 1992; Schoups et al., 1995; Shiu & Pashler, 1992). Psychophysical studies of visual perceptual learning have shown that, in some cases, performance on detection or discrimination tasks improves only for the features of the trained stimuli, such as their orientation, motion direction, and location. Perceptual learning also tends to be specific to the trained eye. For example, monocular training on a vernier discrimination task improved performance significantly more for the trained eye than for the untrained eye (Fahle et al., 1995). Second, perceptual learning takes time to develop. In many cases, several days of training (thousands of trials per day) are needed before even a slight improvement in performance appears. Third, perceptual learning is persistent: once formed, it lasts for months or years (Karni & Sagi, 1993; Watanabe et al., 2002).

There have been two distinctive lines of research into perceptual learning. One line has been followed by animal learning researchers (e.g., Blair & Hall, 2003; Blair, Wilkinson, & Hall, 2004; Dwyer & Mackintosh, 2002; Mackintosh, Kaye, & Bennett, 1991; McLaren, Kaye, & Mackintosh, 1989; McLaren & Mackintosh, 2000; Mitchell, Nash, & Hall, 2008; Symonds, Hall, & Bailey, 2002); the other line has been followed by sensory psychophysicists. Animal learning researchers have indicated that to fully understand the behavioral rules of learning, it is necessary to clarify the role of perceptual learning as a component of learning. Along this line, perceptual learning has been studied in relation to associative learning as a factor that influences behavior. Psychophysicists, however, have measured performance improvement mainly to understand changes in the underlying physiological and functional mechanisms of sensory information processing and have emphasized the link between their findings and physiological sensory plasticity.

These lines appear to be very different, but the differences may be merely superficial. For example, the role of attention in learning has recently become a central issue in both lines of research. This convergence may indicate that the two lines are approaching common ground despite their very different methods.

Here we take a practical approach, suggesting that researchers in either discipline may benefit from knowing more about the other line of research. For example, sensory psychophysicists who conduct perceptual learning research have usually focused only on clarifying changes in sensitivity or in a sensory system; they may gain a broader perspective by considering how perceptual learning relates to learning and behavior in general. Similarly, animal learning researchers may find cases in which apparently subtle differences in the sensory conditions of perceptual learning lead to large differences in the underlying physiological and functional mechanisms. Attention is one such case: there are different subcategories of attention (see Fan, McCandliss, Sommer, Raz, & Posner, 2002; Treue & Martínez Trujillo, 1999), and their respective effects on perceptual learning may be very different.

The Role of Attention in Perceptual Learning

The role of attention in perceptual learning is not entirely understood. There is still some controversy over whether attention to the feature to be learned is necessary for perceptual learning to occur. A considerable number of studies have tackled the question of exposure-based learning: Can mere exposure to a stimulus feature, without performing a task on that feature, induce perceptual learning? This issue may not be resolved, however, unless one takes into account that there are two types of attention: top-down and bottom-up.

Top-down and bottom-up attention differ both in their functional and physiological mechanisms and in their effects on other processing and outcomes. Top-down attention engages cognitive strategies, voluntarily biasing attention toward important features that are related to the subject's task. It has long been reported that top-down attention strongly affects sensory systems (James, 1890/1950). For example, activity in the primary visual cortex, the first cortical area to which visual signals from the retina are projected, can be strongly influenced by top-down attention (Brefczynski & DeYoe, 1999; Gandhi, Heeger, & Boynton, 1999; Motter, 1993; Somers, Dale, Seiffert, & Tootell, 1999; Watanabe, Harner, et al., 1998; Watanabe, Sasaki, et al., 1998). Top-down attention usually enhances the signals and perception of task-relevant stimuli (Moran & Desimone, 1985) and inhibits the signals and perception of task-irrelevant stimuli (Friedman-Hill, Robertson, Desimone, & Ungerleider, 2003).

Bottom-up attention, on the other hand, is shifted involuntarily by the presentation of a salient feature (Desimone & Duncan, 1995; Treisman & Gelade, 1980). Such involuntary attention usually causes the feature to which it is directed to “pop out.” For example, a target pops out when it differs sharply from the nontargets, so a visual-search task becomes more difficult as targets become more similar to nontargets (Duncan & Humphreys, 1989). In addition, less familiar perceptual information induces a stronger bottom-up attention effect (Yantis, 1993; Yantis & Hillstrom, 1994).

Because both types of attention normally work together (Egeth & Yantis, 1997), their influences are often difficult to separate. However, state-of-the-art experimental manipulations do make it possible to dissociate them (Connor, Egeth, & Yantis, 2004; Egeth & Yantis, 1997; Ogawa & Komatsu, 2004; Yantis, 1993; Yantis & Hillstrom, 1994). Therefore, the effects of the two types of attention on perceptual learning should be discussed separately.

Perceptual learning with top-down attention

It has been shown that top-down attention plays a significant role in perceptual learning. Ahissar and Hochstein (1993) found that perceptual learning occurred for a feature that was relevant to a task but not for a feature that was merely exposed. Electrophysiological and behavioral studies of hyperacuity (the ability to discriminate extremely fine-grained visual signals) have indicated that perceptual learning is influenced by specific task demands (Gilbert, Ito, Kapadia, & Westheimer, 2000; Ito, Westheimer, & Gilbert, 1998). Polley et al. (2006) exposed rats to auditory stimuli that varied in both intensity and frequency. Rats trained to discriminate frequencies showed improved performance for those frequencies and an expanded representation of them in the primary auditory cortex, whereas no learning was observed for frequencies that were not trained. In the visual cortex of monkeys, Schoups et al. (2001) found that training on an orientation-discrimination task changed the orientation tuning of neurons whose preferred orientations lay near the discriminated orientation, whereas no such change was found for neurons tuned at or near an orientation that was merely exposed.

The studies above compared task-relevant and task-irrelevant conditions and found no learning or physiological changes in the task-irrelevant conditions. These and other studies (e.g., Ahissar & Hochstein, 2004) appear to support the hypothesis that top-down attention to a sensory feature is necessary for that feature to be learned.

Perceptual learning with bottom-up attention versus mere exposure

Studies by animal learning psychologists and by sensory psychophysicists/physiologists have shown that simple exposure to stimulus features leads to perceptual learning of those features. However, the two groups' conclusions regarding the mechanism have been directly opposed with respect to the absence or presence of “attention.” Whereas animal learning psychologists have suggested that the results are sometimes due to “attention” to the features, sensory psychophysicists/physiologists have regarded the results as evidence for learning without “attention.”

In classical animal learning (i.e., associative learning) studies, Gibson and Walk (1956) demonstrated that rats learned to discriminate between a circle and a triangle more rapidly if they had lived in cages in which circles and triangles were displayed on the walls. Although mere exposure to the stimuli improved discrimination between them, Gibson and Walk suggested that the distinctive, contrasting features of the two stimuli had to be noticed, and that the learning process consisted of learning to abstract and attend to these features and to ignore other, irrelevant features (see also Gibson, 1969; Gibson & Levin, 1975). In other animal learning research, McLaren et al. (1989) found that simple exposure to stimuli that shared common elements (e.g., AX and BX) improved discriminability between the two stimuli. They suggested that exposure decreased attention to the shared elements (X), leading to a relative enhancement of attention to the unique features (A and B) (see also McLaren & Mackintosh, 2000). In these studies, since the exposed features were not relevant to a task, any attention that might have been involved should be classified as bottom-up attention.

Among sensory psychophysicists/physiologists, Frenkel et al. (2006) found in mice that repeated simple exposure to a grating of a particular orientation resulted in a persistent enhancement of visual cortical responses specific to that stimulus. Dinse et al. (2006) showed that passive tactile stimulation of two points on a human finger improved discrimination between the points. Since the learned features in these studies were not related to a task, the authors suggested that perceptual learning occurred passively, as a result of mere exposure. These results do not favor the hypothesis that top-down attention is necessary for perceptual learning, but neither do they entirely rule out the possibility that bottom-up attention plays a role.

In summary, researchers from different disciplines have drawn different conclusions from the finding that exposure to stimulus features leads to perceptual learning. This discrepancy may have arisen because neither line of study has distinguished between top-down and bottom-up attention processes. In exposure-based experiments, a role for top-down attention is ruled out by definition, because the exposed feature is task irrelevant; it remains unclear, however, whether bottom-up attention or no attention at all was involved.

Perceptual learning as a result of mere exposure

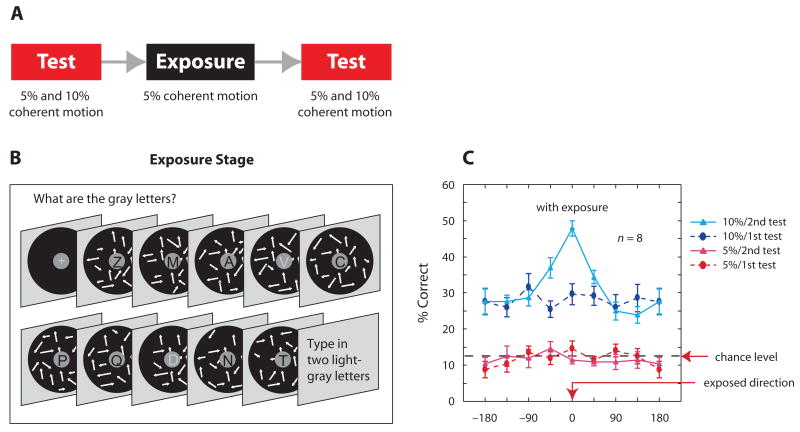

Watanabe et al. (2001) reported that perceptual learning occurred on a feature that was not only task-irrelevant but also subthreshold (detection performance at a chance level). There were two test stages, one preceding and the other following the exposure stage (Figure 1A). At the exposure stage, the subjects were repeatedly presented with a dynamic random-dot (DRD) display. The DRD display consisted of 5% coherently moving dots (signal) and 95% randomly moving dots (noise) in the background. The DRD display was presented in the periphery of the visual field, and the subjects were instructed to perform a letter-identification task in the center of the display (Figure 1B). Therefore, the 5% coherent motion was task irrelevant. When the subjects were tested with the 5% coherent-motion display before and after the exposure phase, performance was around the chance level, and, therefore, the 5% coherent motion was still subthreshold after the exposure. However, when the subjects were tested with a suprathreshold 10% coherent-motion display, sensitivity enhancement was obtained when the direction of motion was at or around that of the 5% coherent motion to which they were exposed.

Figure 1.

(A) Experimental procedure. Two motion-sensitivity tasks were conducted before and after an exposure stage. (B) Exposure stage. A sequence consisted of eight black letters and two gray letters on a gray center circle with a diameter of 1° of visual angle. Dynamic random-dot displays with subthreshold 5% coherent motion were presented in the periphery. (C) On the second test, enhanced discrimination was observed for a suprathreshold 10% coherent-motion pattern that was in the same or a similar direction (−45°) as that to which exposure had occurred. From “Perceptual Learning Without Perception,” by T. Watanabe, J. E. Náñez, and Y. Sasaki, 2001, Nature, 413, p. 844. Copyright 2001 by the Nature Publishing Group. Adapted with permission.
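To make the exposure stimulus more concrete, the following Python sketch advances one frame of a DRD display of the kind described above, in which a fixed proportion of dots (the coherence, e.g., 0.05 for 5%) moves in a common signal direction while the remaining dots move in random directions. It is a minimal illustration under our own assumptions, not the stimulus code used by Watanabe et al. (2001): the function names, the unit-square field with wraparound, the dot count, the step size, and the choice to re-draw the signal dots on every frame are all hypothetical.

```python
import math
import random


def make_dots(n_dots, rng):
    """Hypothetical helper: random initial (x, y) positions in a unit square."""
    return [(rng.random(), rng.random()) for _ in range(n_dots)]


def step_dots(dots, coherence, direction_deg, speed, rng):
    """Advance one frame of a dynamic random-dot display (illustrative sketch).

    A fraction `coherence` of the dots, chosen anew on each frame (an assumed
    convention), moves `speed` units in `direction_deg` (the signal); every
    other dot moves the same distance in an independently random direction
    (the noise). Returns the new positions and the number of signal dots.
    """
    n_signal = round(coherence * len(dots))
    signal_idx = set(rng.sample(range(len(dots)), n_signal))
    theta_signal = math.radians(direction_deg)
    new_dots = []
    for i, (x, y) in enumerate(dots):
        theta = theta_signal if i in signal_idx else rng.uniform(0.0, 2.0 * math.pi)
        # Wrap around the field edges so dot density stays constant.
        new_dots.append(((x + speed * math.cos(theta)) % 1.0,
                         (y + speed * math.sin(theta)) % 1.0))
    return new_dots, n_signal


# Usage: 60 frames of 5% coherent motion toward -45 degrees with 200 dots.
rng = random.Random(0)
dots = make_dots(200, rng)
for _ in range(60):
    dots, n_signal = step_dots(dots, coherence=0.05, direction_deg=-45,
                               speed=0.01, rng=rng)
```

With 200 dots, a coherence of 0.05 means that only 10 dots per frame carry the signal direction, which illustrates why such a weak signal can remain at chance-level detectability.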

These results indicate that exposure to the subthreshold 5% coherent motion actually enhanced sensitivity to its direction (Figure 1C), although the enhancement was not revealed in tests with the 5% coherent motion itself, which remained subthreshold. That is, the coherent motion was task irrelevant, and it must also have been subthreshold during the exposure phase, since it was still subthreshold after exposure. Thus, neither top-down nor bottom-up attention to the feature appears to have been necessary for perceptual learning. A number of subsequent studies (Hansen & Dragoi, 2007; Ludwig & Skrandies, 2002; Nishina, Seitz, Kawato, & Watanabe, 2007; Seitz, Náñez, Holloway, & Watanabe, 2005, 2006; Seitz & Watanabe, 2003; Watanabe et al., 2002) have supported the Watanabe et al. (2001) results.

The Role of Inhibition by Attention in Perceptual Learning

If attention is not necessary for a feature to be learned, what is the role of attention in perceptual learning? As mentioned above, some studies have indicated that perceptual learning does not occur for features that are merely exposed while subjects perform a task on other features (Ahissar & Hochstein, 1993; Polley et al., 2006; Shiu & Pashler, 1992). In contrast, Watanabe et al. (2001) showed that perceptual learning does occur for task-irrelevant, subthreshold features. Our recent data may resolve this controversy.

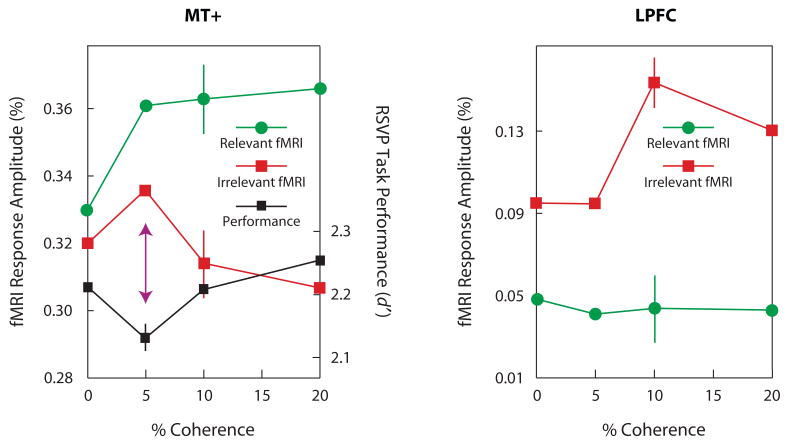

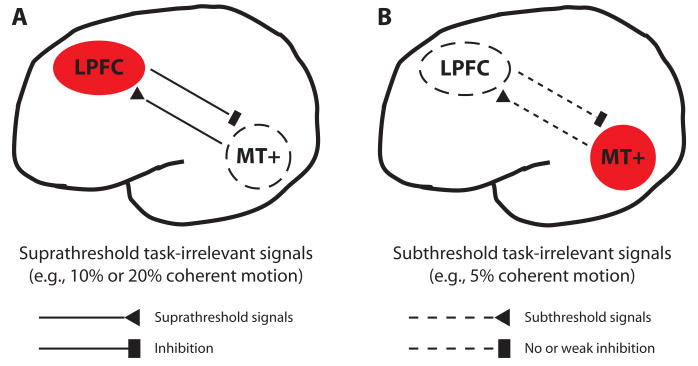

Tsushima, Sasaki, and Watanabe (2006) examined how weak signals are processed. They measured BOLD signals using a procedure similar to that of the exposure stage in Watanabe et al. (2001; see Figure 1B). Note that the number of trials was not large enough to induce significant learning. When 5% subthreshold coherent motion was presented in the periphery while the subjects performed a letter-identification task in the center, the human homologue of the middle temporal area (MT+), which is highly responsive to coherent motion (Rees, Friston, & Koch, 2000), was strongly activated. At the same time, activation in the lateral prefrontal cortex (LPFC) was no greater than with no coherent motion (Figure 2). In contrast, when suprathreshold coherent motion (10% or 20%) was presented, MT+ was less active than with the 5% subthreshold coherent motion, and the LPFC was significantly more active than with the 5% motion (Figure 2). Since one role of the LPFC may be to exert inhibitory control over task-irrelevant features (Knight, Staines, Swick, & Chao, 1999), Tsushima et al. (2006) concluded that when a suprathreshold task-irrelevant feature is presented, the LPFC “notices” it and inhibits the feature's signals (Figure 3A). When a task-irrelevant signal is subthreshold, however, the LPFC does not notice the feature and fails to exert effective inhibitory control over it (Figure 3B). That is, the attentional system may inhibit suprathreshold task-irrelevant features more strongly than subthreshold ones. This finding parallels the results of several behavioral studies indicating that subthreshold or near-threshold task-irrelevant features affect task performance more strongly than suprathreshold features do (Meteyard, Zokaei, Bahrami, & Vigliocco, 2008; Tsushima et al., 2006).

Figure 2.

fMRI activity in the middle temporal area (MT+) and the lateral prefrontal cortex (LPFC) while the subject performed a letter-identification task in the center, with task-irrelevant motion presented in the periphery. BOLD signals for MT+ at 5% (subthreshold) coherence were significantly higher than at 10% and 20% coherence. BOLD signals for LPFC at the 5% coherence ratio were significantly lower than those at the 10% and 20% ratios, with no significant difference from those at 0% coherence. From “Greater Disruption Due to Failure of Inhibitory Control on an Ambiguous Distractor,” by Y. Tsushima, Y. Sasaki, and T. Watanabe, 2006, Science, 314, p. 1787. Copyright 2006 by the American Association for the Advancement of Science. Adapted with permission.

Figure 3.

Schematic illustration of the hypothesized bidirectional interactions between the lateral prefrontal cortex (LPFC) and the middle temporal area (MT+) (e.g., in the left hemisphere). (A) The LPFC notices suprathreshold coherent-motion signals from MT+ and provides direct or indirect effective inhibitory control on MT+. (B) The LPFC fails to notice subthreshold coherent-motion signals from MT+ and therefore does not provide direct or indirect effective inhibitory control on MT+.

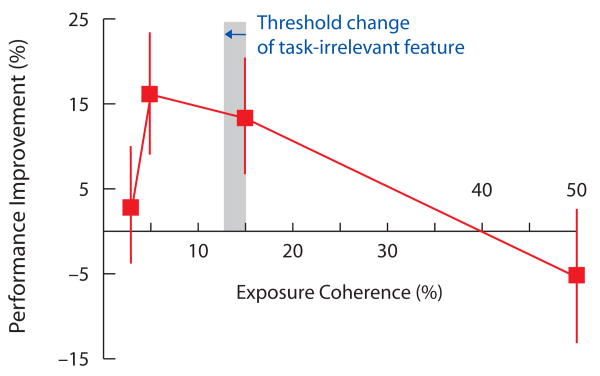

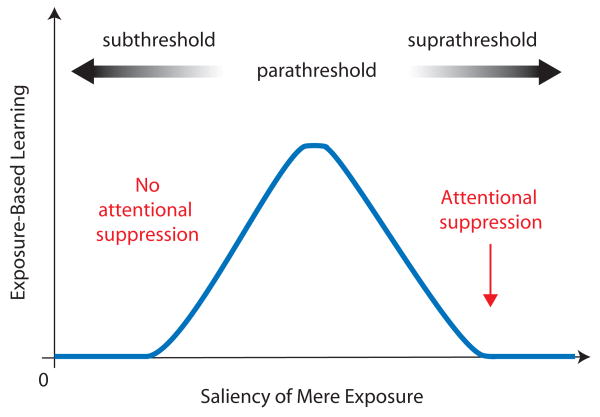

Note that Tsushima et al. (2006) was not designed to clarify the effects of subthreshold signals on learning. However, if stronger internal signals lead to stronger plasticity, learning for near-threshold task-irrelevant signals may well be stronger than learning for suprathreshold signals. To test whether this is the case, Tsushima, Seitz, and Watanabe (2008) measured the strength of perceptual learning of task-irrelevant coherent motion as a function of the coherent-motion ratio (i.e., the proportion of coherently moving [signal] dots among all dots, the remainder being randomly moving [noise] dots). They used a within-subjects design in which each subject was exposed to several directions of coherent motion, each presented at its own (higher or lower) but consistent coherence level. They found that the strongest perceptual learning was obtained for coherent-motion ratios close to the coherent-motion threshold (5%), and that no perceptual learning was observed for 50% coherent motion (Figure 4). In addition, Paffen, Verstraten, and Vidnyánszky (2008) recently found that perceptual learning of a task-relevant feature resulted in inhibition of suprathreshold task-irrelevant signals that were presented while the task-relevant feature was being learned. These results suggest that the absence of perceptual learning for suprathreshold task-irrelevant features (see also Ahissar & Hochstein, 1993; Polley et al., 2006; Shiu & Pashler, 1992) occurred not because attention to a feature is necessary for the feature to be learned, but because the irrelevant feature was noticed and inhibited by the attentional system (Figure 5). In short, whether exposure-based learning occurs for a feature depends on the strength of that feature.

Figure 4.

Performance improvement as a function of coherence of stimulus exposure. Only 5% and 15% (parathreshold) coherent-motion signals improved motion sensitivity. The horizontal arrow represents the mean threshold change of the task-irrelevant feature measured in the test stages, from before exposure to after exposure. Error bars show standard errors. From “Task-Irrelevant Learning Occurs Only When the Irrelevant Feature Is Weak,” by Y. Tsushima, A. R. Seitz, and T. Watanabe, 2008, Current Biology, 18, p. R517. Copyright 2008 by the Elsevier Corporation. Adapted with permission.

Figure 5.

Relationship between exposure-based learning and the salience of the exposed stimulus. Because the attentional system suppresses salient exposed stimuli, exposure-based learning occurs only when the exposed stimulus is parathreshold.
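The relationship summarized in Figure 5 can be caricatured in a few lines of Python. In this toy sketch, which is our own illustration and not a model fitted by Tsushima et al. (2008), the drive to plasticity is assumed to grow linearly with coherence, while attentional inhibition is assumed to switch on, following a logistic curve, once the task-irrelevant signal is strong enough to be “noticed.” The multiplicative form, the logistic shape, and the values of the hypothetical parameters NOTICE_THRESHOLD and NOTICE_WIDTH are assumptions chosen only to reproduce the qualitative pattern: learning is largest for weak, near-threshold signals and nearly absent for strong ones.

```python
import math

# Hypothetical parameters, chosen for illustration only (not fitted to data).
NOTICE_THRESHOLD = 0.10  # coherence at which inhibition is half-maximal
NOTICE_WIDTH = 0.03      # how sharply inhibition switches on around threshold


def inhibition(coherence):
    """Assumed fraction of a task-irrelevant signal suppressed by attention.

    Weak signals go largely unnoticed (inhibition near 0); clearly
    suprathreshold signals are noticed and suppressed (inhibition near 1).
    """
    z = (coherence - NOTICE_THRESHOLD) / NOTICE_WIDTH
    return 1.0 / (1.0 + math.exp(-z))


def exposure_learning(coherence):
    """Toy learning signal: stimulus drive times the unsuppressed fraction."""
    return coherence * (1.0 - inhibition(coherence))


for c in (0.0, 0.05, 0.10, 0.15, 0.50):
    print(f"coherence {c:.2f}: predicted learning {exposure_learning(c):.4f}")
```

Under these assumptions, predicted learning rises from zero, peaks near NOTICE_THRESHOLD, and falls close to zero at 50% coherence; shifting NOTICE_THRESHOLD moves the peak accordingly.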

Conclusions

Sensory psychophysicists and physiologists have manipulated stimulus features in order to clarify the role of attention in perceptual learning, the mechanisms of that role, and the sensory mechanisms that underlie it. Animal learning psychologists have broader viewpoints from which to examine the significance of perceptual learning and attention for learning and behavior. It is extremely important and useful for students of sensory psychophysics and physiology to study the achievements of animal learning psychologists, because doing so may give them a broader perspective on perceptual learning.

The message from visual psychophysicists to animal learning psychologists is that work with animals could be expanded to take into account the different attentional processes revealed by our work, since it is not clear whether the effects of exposure are due to bottom-up attention or to passive stimulus input. In addition, inhibition of task-irrelevant features is a key factor in understanding perceptual learning (Friedman-Hill et al., 2003), and one to which we hope animal learning psychologists will pay more “attention.”

We hope that this issue of Learning & Behavior will promote better understanding of perceptual learning and learning in general by both animal learning psychologists and sensory psychophysicists and physiologists.

Acknowledgments

Preparation of this article was supported by NIH–NEI Grants R21EY017737 and R21EY018925 and NSF Grant BCS-PR04-137 (CELEST).

References

- Ahissar M, Hochstein S. Attentional control of early perceptual learning. Proceedings of the National Academy of Sciences. 1993;90:5718–5722. doi: 10.1073/pnas.90.12.5718.

- Ahissar M, Hochstein S. Task difficulty and the specificity of perceptual learning. Nature. 1997;387:401–406. doi: 10.1038/387401a0.

- Ahissar M, Hochstein S. The reverse hierarchy theory of visual perceptual learning. Trends in Cognitive Sciences. 2004;8:457–464. doi: 10.1016/j.tics.2004.08.011.

- Amitay S, Irwin A, Moore DR. Discrimination learning induced by training with identical stimuli. Nature Neuroscience. 2006;9:1446–1448. doi: 10.1038/nn1787.

- Ball K, Sekuler R. Direction-specific improvement in motion discrimination. Vision Research. 1987;27:953–965. doi: 10.1016/0042-6989(87)90011-3.

- Bao S, Chan VT, Merzenich MM. Cortical remodelling induced by activity of ventral tegmental dopamine neurons. Nature. 2001;412:79–83. doi: 10.1038/35083586.

- Beard BL, Levi DM, Reich LN. Perceptual learning in parafoveal vision. Vision Research. 1995;35:1679–1690. doi: 10.1016/0042-6989(94)00267-p.

- Bende M, Nordin S. Perceptual learning in olfaction: Professional wine testers versus controls. Physiology & Behavior. 1997;62:1065–1070. doi: 10.1016/s0031-9384(97)00251-5.

- Blair CAJ, Hall G. Perceptual learning in flavor aversion: Evidence for learned changes in stimulus effectiveness. Journal of Experimental Psychology: Animal Behavior Processes. 2003;29:39–48.

- Blair CAJ, Wilkinson A, Hall G. Assessments of changes in the effective salience of stimulus elements as a result of stimulus preexposure. Journal of Experimental Psychology: Animal Behavior Processes. 2004;30:317–324. doi: 10.1037/0097-7403.30.4.317.

- Brefczynski JA, DeYoe EA. A physiological correlate of the “spotlight” of visual attention. Nature Neuroscience. 1999;2:370–374. doi: 10.1038/7280.

- Connor CE, Egeth HE, Yantis S. Visual attention: Bottom-up versus top-down. Current Biology. 2004;14:R850–R852. doi: 10.1016/j.cub.2004.09.041.

- Crist RE, Kapadia MK, Westheimer G, Gilbert CD. Perceptual learning of spatial localization: Specificity for orientation, position, and context. Journal of Neurophysiology. 1997;78:2889–2894. doi: 10.1152/jn.1997.78.6.2889.

- Crist RE, Li W, Gilbert CD. Learning to see: Experience and attention in primary visual cortex. Nature Neuroscience. 2001;4:519–525. doi: 10.1038/87470.

- Demany L. Perceptual learning in frequency discrimination. Journal of the Acoustical Society of America. 1985;78:1118–1120. doi: 10.1121/1.393034.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annual Review of Neuroscience. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Dinse HR, Kleibel N, Kalisch T, Ragert P, Wilimzig C, Tegenthoff M. Tactile coactivation resets age-related decline of human tactile discrimination. Annals of Neurology. 2006;60:88–94. doi: 10.1002/ana.20862.

- Dinse HR, Ragert P, Pleger B, Schwenkreis P, Tegenthoff M. Pharmacological modulation of perceptual learning and associated cortical reorganization. Science. 2003;301:91–94. doi: 10.1126/science.1085423.

- Dosher BA, Lu ZL. Perceptual learning reflects external noise filtering and internal noise reduction through channel reweighting. Proceedings of the National Academy of Sciences. 1998;95:13988–13993. doi: 10.1073/pnas.95.23.13988.

- Dosher BA, Lu ZL. Mechanisms of perceptual learning. Vision Research. 1999;39:3197–3221. doi: 10.1016/s0042-6989(99)00059-0.

- Duncan J, Humphreys GW. Visual search and stimulus similarity. Psychological Review. 1989;96:433–458. doi: 10.1037/0033-295x.96.3.433.

- Dwyer DM, Mackintosh NJ. Alternating exposure to two compound flavors creates inhibitory associations between their unique features. Animal Learning & Behavior. 2002;30:201–207. doi: 10.3758/bf03192829.

- Egeth HE, Yantis S. Visual attention: Control, representation, and time course. Annual Review of Psychology. 1997;48:269–297. doi: 10.1146/annurev.psych.48.1.269.

- Fahle M, Edelman S. Long-term learning in vernier acuity: Effects of stimulus orientation, range and of feedback. Vision Research. 1993;33:397–412. doi: 10.1016/0042-6989(93)90094-d.

- Fahle M, Edelman S, Poggio T. Fast perceptual learning in hyperacuity. Vision Research. 1995;35:3003–3013. doi: 10.1016/0042-6989(95)00044-z.

- Fan J, McCandliss BD, Sommer T, Raz A, Posner MI. Testing the efficiency and independence of attentional networks. Journal of Cognitive Neuroscience. 2002;14:340–347. doi: 10.1162/089892902317361886.

- Fine I, Jacobs RA. Comparing perceptual learning tasks: A review. Journal of Vision. 2002;2:190–203. doi: 10.1167/2.2.5.

- Fiorentini A, Berardi N. Perceptual learning specific for orientation and spatial frequency. Nature. 1980;287:43–44. doi: 10.1038/287043a0.

- Fiser J, Aslin RN. Statistical learning of higher-order temporal structure from visual shape sequences. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2002;28:458–467. doi: 10.1037//0278-7393.28.3.458.

- Frenkel MY, Sawtell NB, Diogo ACM, Yoon B, Neve RL, Bear MF. Instructive effect of visual experience in mouse visual cortex. Neuron. 2006;51:339–349. doi: 10.1016/j.neuron.2006.06.026.

- Friedman-Hill SR, Robertson LC, Desimone R, Ungerleider LG. Posterior parietal cortex and the filtering of distractors. Proceedings of the National Academy of Sciences. 2003;100:4263–4268. doi: 10.1073/pnas.0730772100.

- Furmanski CS, Schluppeck D, Engel SA. Learning strengthens the response of primary visual cortex to simple patterns. Current Biology. 2004;14:573–578. doi: 10.1016/j.cub.2004.03.032.

- Gandhi SP, Heeger DJ, Boynton GM. Spatial attention affects brain activity in human primary visual cortex. Proceedings of the National Academy of Sciences. 1999;96:3314–3319. doi: 10.1073/pnas.96.6.3314.

- Gibson EJ. Perceptual learning. Annual Review of Psychology. 1963;14:29–56. doi: 10.1146/annurev.ps.14.020163.000333.

- Gibson EJ. Principles of perceptual learning and development. New York: Appleton-Century-Crofts; 1969.

- Gibson EJ, Levin H. The psychology of reading. Cambridge, MA: MIT Press; 1975.

- Gibson EJ, Walk RD. The effect of prolonged exposure to visually presented patterns on learning to discriminate them. Journal of Comparative & Physiological Psychology. 1956;49:239–242. doi: 10.1037/h0048274.

- Gilbert C, Ito M, Kapadia M, Westheimer G. Interactions between attention, context and learning in primary visual cortex. Vision Research. 2000;40:1217–1226. doi: 10.1016/s0042-6989(99)00234-5. [DOI] [PubMed] [Google Scholar]

- Hansen BJ, Dragoi V. Learning by exposure in the visual system. Paper presented at the 37th Annual Meeting of the Society for Neuroscience; San Diego; 2007 Nov.

- Herzog MH, Fahle M. Modeling perceptual learning: Difficulties and how they can be overcome. Biological Cybernetics. 1998;78:107–117. doi: 10.1007/s004220050418.

- Herzog MH, Fahle M. Effects of biased feedback on learning and deciding in a vernier discrimination task. Vision Research. 1999;39:4232–4243. doi: 10.1016/s0042-6989(99)00138-8.

- Ito M, Westheimer G, Gilbert CD. Attention and perceptual learning modulate contextual influences on visual perception. Neuron. 1998;20:1191–1197. doi: 10.1016/s0896-6273(00)80499-7.

- James W. The principles of psychology. New York: Dover; 1950. Original work published 1890.

- Karni A, Sagi D. Where practice makes perfect in texture discrimination: Evidence for primary visual cortex plasticity. Proceedings of the National Academy of Sciences. 1991;88:4966–4970. doi: 10.1073/pnas.88.11.4966.

- Karni A, Sagi D. The time course of learning a visual skill. Nature. 1993;365:250–252. doi: 10.1038/365250a0.

- Knight RT, Staines WR, Swick D, Chao LL. Prefrontal cortex regulates inhibition and excitation in distributed neural networks. Acta Psychologica. 1999;101:159–178. doi: 10.1016/s0001-6918(99)00004-9.

- Koyama S, Harner A, Watanabe T. Task-dependent changes of the psychophysical motion-tuning functions in the course of perceptual learning. Perception. 2004;33:1139–1147. doi: 10.1068/p5195.

- Ludwig I, Skrandies W. Human perceptual learning in the peripheral visual field: Sensory thresholds and neurophysiological correlates. Biological Psychology. 2002;59:187–206. doi: 10.1016/s0301-0511(02)00009-1.

- Mackintosh NJ, Kaye H, Bennett CH. Perceptual learning in flavour aversion conditioning. Quarterly Journal of Experimental Psychology. 1991;43B:297–322.

- McKee SP, Westheimer G. Improvement in vernier acuity with practice. Perception & Psychophysics. 1978;24:258–262. doi: 10.3758/bf03206097.

- McLaren IPL, Kaye H, Mackintosh NJ. An associative theory of the representation of stimuli: Applications to perceptual learning and latent inhibition. In: Morris RGM, editor. Parallel distributed processing: Implications for psychology and neurobiology. Oxford: Oxford University Press; 1989. pp. 102–130.

- McLaren IPL, Mackintosh NJ. An elemental model of associative learning: I. Latent inhibition and perceptual learning. Animal Learning & Behavior. 2000;28:211–246.

- Meteyard L, Zokaei N, Bahrami B, Vigliocco G. Visual motion interferes with lexical decision on motion words. Current Biology. 2008;18:R732–R733. doi: 10.1016/j.cub.2008.07.016.

- Mitchell C, Nash S, Hall G. The intermixed–blocked effect in human perceptual learning is not the consequence of trial spacing. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2008;34:237–242. doi: 10.1037/0278-7393.34.1.237.

- Moran J, Desimone R. Selective attention gates visual processing in the extrastriate cortex. Science. 1985;229:782–784. doi: 10.1126/science.4023713.

- Motter BC. Focal attention produces spatially selective processing in visual cortical areas V1, V2, and V4 in the presence of competing stimuli. Journal of Neurophysiology. 1993;70:909–919. doi: 10.1152/jn.1993.70.3.909.

- Nishina S, Seitz AR, Kawato M, Watanabe T. Effect of spatial distance to the task stimulus on task-irrelevant perceptual learning of static Gabors. Journal of Vision. 2007;7:1–10. doi: 10.1167/7.13.2.

- Ogawa T, Komatsu H. Target selection in area V4 during a multidimensional visual search task. Journal of Neuroscience. 2004;24:6371–6382. doi: 10.1523/JNEUROSCI.0569-04.2004.

- Owen DH, Machamer PK. Bias-free improvement in wine discrimination. Perception. 1979;8:199–209. doi: 10.1068/p080199.

- Paffen CLE, Verstraten FAJ, Vidnyánszky Z. Attention-based perceptual learning increases binocular rivalry suppression of irrelevant visual features. Journal of Vision. 2008;8:1–11. doi: 10.1167/8.4.25.

- Poggio T, Fahle M, Edelman S. Fast perceptual learning in visual hyperacuity. Science. 1992;256:1018–1021. doi: 10.1126/science.1589770.

- Polley DB, Steinberg EE, Merzenich MM. Perceptual learning directs auditory cortical map reorganization through top-down influences. Journal of Neuroscience. 2006;26:4970–4982. doi: 10.1523/JNEUROSCI.3771-05.2006.

- Ramachandran VS, Braddick O. Orientation-specific learning in stereopsis. Perception. 1973;2:371–376. doi: 10.1068/p020371.

- Rees G, Friston K, Koch C. A direct quantitative relationship between the functional properties of human and macaque V5. Nature Neuroscience. 2000;3:716–723. doi: 10.1038/76673.

- Saarinen J, Levi DM. Perceptual learning in vernier acuity: What is learned? Vision Research. 1995;35:519–527. doi: 10.1016/0042-6989(94)00141-8.

- Sagi D, Tanne D. Perceptual learning: Learning to see. Current Opinion in Neurobiology. 1994;4:195–199. doi: 10.1016/0959-4388(94)90072-8.

- Sathian K, Zangaladze A. Tactile learning is task specific but transfers between fingers. Perception & Psychophysics. 1997;59:119–128. doi: 10.3758/bf03206854.

- Schoups AA, Vogels R, Orban GA. Human perceptual learning in identifying the oblique orientation: Retinotopy, orientation specificity and monocularity. Journal of Physiology. 1995;483:797–810. doi: 10.1113/jphysiol.1995.sp020623.

- Schoups A, Vogels R, Qian N, Orban G. Practicing orientation identification improves orientation coding in V1 neurons. Nature. 2001;412:549–553. doi: 10.1038/35087601.

- Schwartz S, Maquet P, Frith C. Neural correlates of perceptual learning: A functional MRI study of visual texture discrimination. Proceedings of the National Academy of Sciences. 2002;99:17137–17142. doi: 10.1073/pnas.242414599.

- Seitz AR, Náñez JE, Sr, Holloway SR, Watanabe T. Visual experience can substantially alter critical flicker fusion thresholds. Human Psychopharmacology. 2005;20:55–60. doi: 10.1002/hup.661.

- Seitz AR, Náñez JE, Sr, Holloway SR, Watanabe T. Perceptual learning of motion leads to faster flicker perception. PLoS ONE. 2006;1:e28. doi: 10.1371/journal.pone.0000028.

- Seitz AR, Watanabe T. Psychophysics: Is subliminal learning really passive? Nature. 2003;422:36. doi: 10.1038/422036a.

- Shiu LP, Pashler H. Improvement in line orientation discrimination is retinally local but dependent on cognitive set. Perception & Psychophysics. 1992;52:582–588. doi: 10.3758/bf03206720.

- Somers DC, Dale AM, Seiffert AE, Tootell RBH. Functional MRI reveals spatially specific attentional modulation in human primary visual cortex. Proceedings of the National Academy of Sciences. 1999;96:1663–1668. doi: 10.1073/pnas.96.4.1663.

- Sowden PT, Rose D, Davies IRL. Perceptual learning of luminance contrast detection: Specific for spatial frequency and retinal location but not orientation. Vision Research. 2002;42:1249–1258. doi: 10.1016/s0042-6989(02)00019-6.

- Symonds M, Hall G, Bailey GK. Perceptual learning with a sodium depletion procedure. Journal of Experimental Psychology: Animal Behavior Processes. 2002;28:190–199.

- Treisman AM, Gelade G. A feature-integration theory of attention. Cognitive Psychology. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- Treue S, Martínez Trujillo JC. Feature-based attention influences motion processing gain in macaque visual cortex. Nature. 1999;399:575–579. doi: 10.1038/21176.

- Tsushima Y, Sasaki Y, Watanabe T. Greater disruption due to failure of inhibitory control on an ambiguous distractor. Science. 2006;314:1786–1788. doi: 10.1126/science.1133197.

- Tsushima Y, Seitz AR, Watanabe T. Task-irrelevant learning occurs only when the irrelevant feature is weak. Current Biology. 2008;18:R516–R517. doi: 10.1016/j.cub.2008.04.029.

- Watanabe T, Harner AM, Miyauchi S, Sasaki Y, Nielsen M, Palomo D, Mukai I. Task-dependent influences of attention on the activation of human primary visual cortex. Proceedings of the National Academy of Sciences. 1998;95:11489–11492. doi: 10.1073/pnas.95.19.11489.

- Watanabe T, Náñez JE, Sr, Koyama S, Mukai I, Liederman J, Sasaki Y. Greater plasticity in lower-level than higher-level visual motion processing in a passive perceptual learning task. Nature Neuroscience. 2002;5:1003–1009. doi: 10.1038/nn915.

- Watanabe T, Náñez JE, Sasaki Y. Perceptual learning without perception. Nature. 2001;413:844–848. doi: 10.1038/35101601.

- Watanabe T, Sasaki Y, Miyauchi S, Putz B, Fujimaki N, Nielsen M, et al. Attention-regulated activity in human primary visual cortex. Journal of Neurophysiology. 1998;79:2218–2221. doi: 10.1152/jn.1998.79.4.2218.

- Yantis S. Stimulus-driven attentional capture. Current Directions in Psychological Science. 1993;2:156–161.

- Yantis S, Hillstrom AP. Stimulus-driven attentional capture: Evidence from equiluminant visual objects. Journal of Experimental Psychology: Human Perception & Performance. 1994;20:95–107. doi: 10.1037//0096-1523.20.1.95.