Abstract

Objective To evaluate the effectiveness of an educational visit to help obstetricians and midwives select and use evidence from a Cochrane database containing 600 systematic reviews.

Design Randomised single blind controlled trial with obstetric units allocated to an educational visit or control group.

Setting 25 of the 26 district general obstetric units in two former NHS regions.

Subjects The senior obstetrician and midwife from each intervention unit participated in educational visits. Clinical practices of all staff were assessed in 4508 pregnancies.

Intervention Single informal educational visit by a respected obstetrician including discussion of evidence based obstetrics, guidance on implementation, and donation of Cochrane database and other materials.

Main outcome measures Rates of perineal suturing with polyglycolic acid, ventouse delivery, prophylactic antibiotics in caesarean section, and steroids in preterm delivery, before and 9 months after visits, and concordance of guidelines with review evidence for same marker practices before and after visits.

Results Rates varied greatly, but the overall baseline mean of 43% (986/2312) increased to 54% (1189/2196) 9 months later. Rates of ventouse delivery increased significantly in intervention units but not in control units; there was no difference between the two types of units in uptake of other practices. Pooling rates from all 25 units, use of antibiotics in caesarean section and use of polyglycolic acid sutures increased significantly over the period, but use of steroids in preterm delivery was unchanged. Labour ward guidelines seldom agreed with evidence at baseline; this hardly improved after visits. Educational visits cost £860 each (at 1995 prices).

Conclusions There was considerable uptake of evidence into practice in both control and intervention units between 1994 and 1995. Our educational visits added little to this, despite the informal setting, targeting of senior staff from two disciplines, and donation of educational materials. Further work is needed to define cost effective methods to enhance the uptake of evidence from systematic reviews and to clarify leadership and roles of senior obstetric staff in implementing the evidence.

Key messages

There was marked variation in four common obstetric practices known to improve patient outcomes in 25 district general obstetric units across south east England in both 1994 and 1995

Labour ward guidelines in the 25 units showed little concordance with Cochrane review evidence in 1994 and 1995

The gap between Cochrane review evidence and clinical practice narrowed in 1995, but 46% (1007) of 2196 pregnant women studied were still not managed according to current evidence

Educational visits to senior staff led to a significant but clinically modest uptake of evidence from systematic reviews in only one of the four practices studied

Reducing practice variations and improving clinical knowledge management by helping clinicians to locate, select, and implement systematic review evidence remain important challenges for the NHS

Introduction

Although local circumstances must always be taken into account, it is acknowledged that as far as possible clinical practice should be guided by rigorous evidence from large trials or systematic reviews.1,2 This is because traditional review articles or textbooks often contain recommendations based on clinical impression or evidence outdated years ago.3 Much time and effort are being invested in writing systematic reviews, but it is unclear whether reviews can be used directly by those in charge of clinical units to inform and improve local practice and patient outcome.

Like other clinical specialists,4,5 obstetricians6,7 have found it difficult to change their practice in line with mounting evidence—for example, giving corticosteroids to fewer than 20% of women in preterm labour.8 However, in the United Kingdom, 83% of consultant obstetricians stated that they would be willing to change their practice if provided with conclusive evidence from randomised trials.9 The Cochrane Collaboration published a comprehensive database of over 600 systematic reviews on pregnancy and childbirth, the Cochrane module on pregnancy and childbirth,10 so we sought a method to help obstetric units use the evidence contained in the reviews to inform their clinical practice.

To identify existing information sources for obstetricians11 and potential local obstacles to change12,13 in English obstetric units, we surveyed all UK teaching hospitals and a random sample of obstetric units in district general hospitals.14,15 As well as identifying potential barriers to evidence based obstetrics, our survey showed that only 1 in 6 district general units had access to the Cochrane review database. After publication of our survey, several NHS regions distributed copies of the database to district general hospitals, and some organised formal didactic conferences to introduce the Cochrane module on pregnancy and childbirth to clinicians. However, even posting attractively presented information to healthcare professionals can fail to change their practice16,17; posting a database containing systematic reviews seemed even less likely to succeed. Similarly, large scale, formal continuing educational activities usually fail to change clinical practice, whereas small scale educational sessions in which the participants decide the agenda are more effective.17,18

To ensure that evidence from systematic reviews informs clinical practice in district general hospitals, we believe that those professionals who lead clinical departments should appreciate evidence based medicine and how to incorporate review evidence into effective implementation methods to influence their staff, such as wall posters or practice guidelines.12,17–19 Junior staff alone are unlikely to bring about significant innovation, especially if it requires new equipment or supplies, without the support of senior staff to mandate and fund such changes. In addition, senior clinical staff share some characteristics with the locally nominated opinion leaders whom Lomas and colleagues showed can be a powerful force in changing clinical practice.20 Finally, targeting two or three senior staff rather than all unit clinicians costs less NHS time, makes it easier to arrange meetings without disrupting clinical activity, and leaves senior staff free to reflect on the evidence, local barriers, constraints, and needs before they select targets and implementation methods sensitive to local circumstances. Thus, for example, if heads of units identify low rates of prophylactic antibiotic use in caesarean section as a priority, they may want to approach anaesthetists rather than their own junior staff if this seems the most appropriate implementation route.

To help senior unit staff to appreciate evidence based medicine and how to incorporate review evidence into effective implementation methods, we decided to use educational visits. Educational outreach or academic detailing visits are effective at changing specific clinical practices21 and are extensively used by the pharmaceutical industry to manipulate physicians’ prescribing for commercial reasons. In educational outreach a knowledgeable person visits each target clinician to explore a problem and possible local solutions, discuss their concerns, and provide attractive documents summarising key facts.22 However, the time and travel required can be costly17 and there are reports of outreach failing,23,24 perhaps because of failure to identify local barriers to change. In Canada, outreach visits to opinion leaders in midwifery failed to change unit midwifery practice, probably because obstetricians were not targeted at the same time.24

Educational visits have not been evaluated for their potential to bring about a general change in emphasis, such as a greater appreciation of evidence based medicine. Current evidence suggests that directing two or more implementation strategies, such as educational visits and donated materials, at those individuals who are best placed to determine local barriers to change and adjust unit policy is most likely to be effective.12,17–19 Our aim was to test whether this strategy was an effective, economical method to enhance the uptake of evidence from Cochrane reviews in district general obstetric units, as a pilot for other Cochrane specialty databases.

Subjects and methods

We conducted a randomised controlled trial to test the hypothesis that a single educational visit to the lead obstetrician and midwife in district general obstetric units, outlining the principles of evidence based medicine and ways they might apply evidence from Cochrane pregnancy and childbirth reviews in their unit, would enhance application of this evidence after 9 months, measured by changes in four marker clinical practices.

Intervention

We targeted our educational visits to the lead obstetrician and midwife on the labour ward, whom we equated with Lomas and colleagues’ opinion leaders20 because they had usually been nominated to hold these positions by peers as being the most involved in labour ward management, policy making, and training. We deliberately limited the intervention to a single informal 1.5-3 hour visit by RJ (a nationally respected obstetrician and author of several Cochrane pregnancy and childbirth reviews) and a research midwife, as there is good evidence that a single educational visit can be effective.21 Also, if the intervention proved cost effective it could be used nationally.

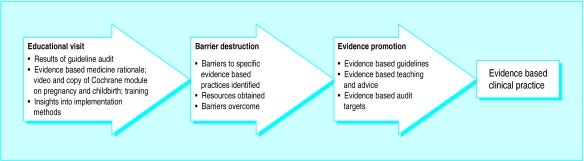

During the visit, RJ outlined the principles of evidence based medicine, and helped lead staff to understand how to find and select Cochrane pregnancy and childbirth reviews and apply them to inform their own clinical practice and that of other unit staff (fig 1). Topics covered during the educational visit were determined by lead staff but included feedback on the quality of their labour ward guidelines, demonstration of the Cochrane module, and description of methods to promote evidence based practice such as evidence based labour ward guidelines, audit targets, one to one training, and wall posters. RJ gave each intervention unit a copy of the Cochrane module on pregnancy and childbirth and a short video about evidence based medicine and the Cochrane pregnancy and childbirth reviews,25 copies of overhead slides on evidence based medicine and effective dissemination methods, and a telephone number for further contact. He did not focus on any specific obstetric practice, and he was blind to our choice of marker practices. We collected the same baseline and follow up data in all obstetric units, but control units received no educational visit, no feedback on guideline quality, and no copy of the Cochrane module from us.

Figure 1. Anticipated role of educational visits in enhancing uptake of evidence in obstetric practice

Trial design

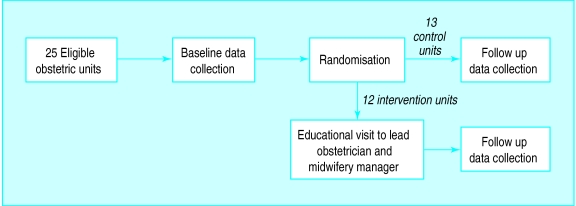

Our intention was to enhance the application of evidence by the whole labour ward team so, to minimise contamination, the unit of randomisation and analysis was the obstetric unit. Each obstetric unit was given an identifier then stratified according to NHS region, annual delivery rate, possession of the Cochrane module on pregnancy and childbirth at baseline, and distance from the nearest teaching hospital. Annual delivery rate, possession of the Cochrane module, and distance from the nearest teaching hospital were taken as surrogates for exposure to evidence based medicine. Obstetric units were allocated to intervention or control group by the toss of a coin (fig 2). To eliminate bias during data collection at follow up by a second research midwife, and to allow blinded assessment of guideline quality, the allocation was concealed from everyone except JCW, DGA, RJ, and the first research midwife. As only 25 obstetric units were available for randomisation, and accurate baseline figures for the rates and variability of the four marker clinical practices were not available, sample size calculation was not carried out, but confidence intervals are given for the results.

Figure 2. Trial design

Participants

We included all consultant led district general obstetric units with more than 1500 deliveries per annum in two former NHS regions (North East and South West Thames). We excluded one smaller unit (1200 deliveries per annum) and three university teaching units because we suspected that the professional roles and relationships in these differed from the large district general units, which form 90% of UK units. Our sample included 25 district units in the two regions and formed 15% of all English obstetric units. The consultant obstetrician designated as head of labour ward, and the labour ward midwifery manager, participated in the educational visits.

Measures

One research midwife collected the baseline data in 1994 in all 25 obstetric units, and another collected the follow up data 9 months later. The research midwives were blind to which were intervention units. At each unit the research midwife conducted chart audits and obtained copies of labour ward guidelines. Data collection was preceded by a letter from the regional health authority informing staff, and by telephone calls to arrange an appointment. To reduce Hawthorne effects, data from patients discharged less than 1 month before either data collection exercise were excluded, and staff were not informed about data collection at follow up until 2 weeks beforehand. Researchers reassured staff that no data would be attributable to individual staff members or obstetric units.

Since we wanted to change clinical practices, not merely attitudes or guidelines, we audited four marker clinical practices, selected because of their clear effects on patient outcome. Two of the practices also required and reflected changes in unit purchasing policy for equipment (ventouse) or disposables (polyglycolic acid). These practices have subsequently been recognised by the Royal College of Obstetricians and Gynaecologists as providing “effective procedures in maternity care suitable for audit.”26 The practices were: (1) use of polyglycolic acid sutures for repair of deep muscle after perineal tears or episiotomies; (2) use of ventouse for instrumental delivery, defined as instrumental delivery patients in whom the instrument of first choice was the ventouse, even if unsuccessful; (3) use of prophylactic antibiotics in caesarean section, defined as caesarean section patients in whom any antibiotic was given within 6 hours of surgery; and (4) use of prophylactic corticosteroids in preterm deliveries, defined as patients who delivered at <34 weeks’ gestation in whom any steroid was given within 7 days before delivery.

Rates of practices for all the procedures except the use of ventouse were obtained from chart audits of approximately 30 consecutive patients at baseline and follow up, each representing between 10 and 14 days’ clinical practice per unit. To locate charts the researcher used the labour ward delivery book, counting back from 1 month before the date of data collection. Rates for use of ventouse were derived direct from labour ward registers.

To assign each labour ward guideline a score for the extent to which it was evidence based, two experienced obstetricians (NMF and SP-B) independently applied prewritten rules, blind to the hospital of origin. A Bland-Altman plot indicated good agreement with no bias.27
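As an illustration of the agreement check described above, the following minimal sketch computes the two quantities a Bland-Altman analysis summarises: the mean difference between the two assessors (the bias) and the 95% limits of agreement. The guideline scores are hypothetical, invented only for this example; the assessors' actual scores are not reported here, and the function name `bland_altman` is our own.

```python
from statistics import mean, stdev


def bland_altman(scores_a, scores_b):
    """Return (bias, lower_limit, upper_limit) for paired ratings.

    bias is the mean of the paired differences; the limits of agreement
    are bias +/- 1.96 standard deviations of those differences.
    """
    if len(scores_a) != len(scores_b):
        raise ValueError("ratings must be paired")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread


# Hypothetical guideline scores (0 to 16 scale) from two assessors.
judge_1 = [2.0, 0.0, 4.5, 7.5, 1.5, 3.0]
judge_2 = [2.5, 0.5, 4.0, 7.0, 2.0, 2.5]

bias, lower, upper = bland_altman(judge_1, judge_2)
print(f"bias={bias:.2f}, limits of agreement {lower:.2f} to {upper:.2f}")
```

A bias close to zero with narrow limits would correspond to the "good agreement with no bias" reported for the real scores.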

Data analysis

Because we randomised obstetric units, to avoid the “unit of analysis” error we analysed the rates of marker clinical practices by obstetric unit.28 No unit was excluded after randomisation, all intervention units participated in the visits, and data on clinical practices were available for all units, although smaller numbers of case notes were obtainable than planned for steroid usage.

To reduce the impact of ceiling effects, the proportion of cases in which clinicians failed to carry out each clinical practice was recorded for each obstetric unit at baseline and follow up, and the ratio of the follow up proportion to the baseline proportion was then computed to yield the risk ratio for failure to implement each practice in each unit (a ratio below 1 indicates that failure became less common). The overall change for each practice between baseline and follow up visit was estimated by combining the individual ratios for each obstetric unit in the intervention group and control group with the Mantel-Haenszel risk ratio method. The change in practice between the two types of units was then compared using Student’s t test on the logarithm of the overall risk ratio.
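The pooling step can be sketched as follows. This is a minimal illustration of the standard Mantel-Haenszel risk ratio estimator, treating each obstetric unit as a stratum; it is not the software used in the trial, which is not named here. The per-unit counts are hypothetical, since unit level data are not reported in this paper, and the function name `mantel_haenszel_rr` is our own.

```python
import math


def mantel_haenszel_rr(strata):
    """Pooled risk ratio of failure (follow up versus baseline).

    Each stratum (one obstetric unit) is a tuple:
    (failures_follow_up, n_follow_up, failures_baseline, n_baseline).
    """
    numerator = 0.0
    denominator = 0.0
    for fail_follow, n_follow, fail_base, n_base in strata:
        total = n_follow + n_base
        numerator += fail_follow * n_base / total
        denominator += fail_base * n_follow / total
    return numerator / denominator


# Hypothetical counts for three units (not data from the trial).
units = [
    (10, 30, 20, 30),
    (15, 30, 18, 30),
    (5, 28, 12, 31),
]

pooled = mantel_haenszel_rr(units)
log_pooled = math.log(pooled)  # the comparison between groups uses the log scale
print(f"pooled risk ratio {pooled:.2f}, log {log_pooled:.2f}")
```

With a single stratum the estimator reduces to the ordinary ratio of the two failure proportions, which is a useful sanity check on any implementation.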

Ethics

Approval for the trial was given by regional research and development and audit directors. Senior staff in all obstetric units gave permission for chart audits as part of an external audit project approved and funded by the regional health authority. Ethical advice indicated that, since we were only providing information to clinicians, there was no reason to seek patient consent.

Results

Characteristics and comparability of obstetric units

The mean annual delivery rate in the 25 obstetric units was 3200 births (range 1800-4500): 3330 in intervention units and 3050 in control units. However, despite randomisation there were baseline differences in two of the four clinical practices: use of ventouse was 36% (130/360) in intervention units and 54% (212/390) in control units, and use of polyglycolic acid sutures was 9% (30/347) in intervention units and 25% (89/354) in control units. There were no other baseline differences.

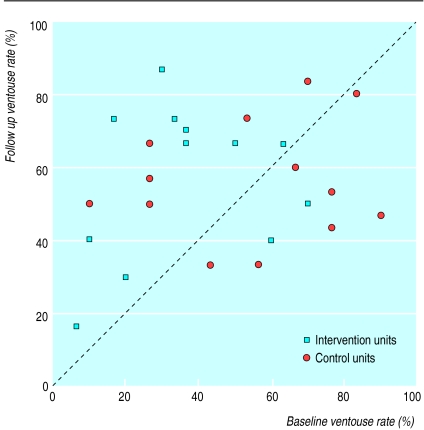

Actual clinical practices

The average rates for all 25 obstetric units at baseline were 17% (119/701) for use of polyglycolic acid sutures, 46% (342/750) for use of ventouse, 58% (412/707) for use of prophylactic antibiotics in caesarean section, and 73% (113/154) for use of steroids in preterm delivery (table). However, these mean figures hide wide baseline variations between individual units (fig 3).

Figure 3. Rates of ventouse use at baseline and follow up for each obstetric unit. Units above diagonal increased ventouse usage during trial

The table shows the mean rates of marker clinical practices for the 13 obstetric units in the control group and the 12 obstetric units in the intervention group at baseline and at follow up. There was a significantly greater increase in the use of ventouse in intervention units compared with control units: risk ratio for failure to use ventouse was 0.68 (95% confidence interval 0.59 to 0.78) and 0.96 (0.82 to 1.12) respectively. However, as the intervention units and control units showed a similar performance at follow up, this might be due to regression to the mean.
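The direction and size of these ratios can be checked against the pooled ventouse counts in the table. The sketch below computes crude (unstratified) risk ratios for failure to use ventouse from those counts. These are not the Mantel-Haenszel estimates and carry no confidence intervals, but they agree with the reported values to two decimal places. The function name `failure_risk_ratio` is our own.

```python
def failure_risk_ratio(done_base, n_base, done_follow, n_follow):
    """Crude risk ratio of failure: follow up proportion over baseline proportion."""
    fail_base = (n_base - done_base) / n_base
    fail_follow = (n_follow - done_follow) / n_follow
    return fail_follow / fail_base


# Ventouse counts taken from the table (procedure carried out / patients).
intervention_rr = failure_risk_ratio(130, 360, 204, 360)
control_rr = failure_risk_ratio(212, 390, 219, 390)

print(round(intervention_rr, 2), round(control_rr, 2))  # prints: 0.68 0.96
```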

For the other three clinical practices, the risk ratio for failure to bring practice into line with evidence did not differ significantly between intervention units and control units. When the data from all 25 obstetric units for these practices were pooled, failure to use antibiotics in caesarean section decreased by about a third overall (risk ratio for all 25 units 0.67, 0.58 to 0.77, P<0.0001), as did failure to use polyglycolic acid sutures (0.71, 0.65 to 0.77, P<0.0001). Failure to use steroids in threatened preterm delivery did not change significantly (1.13, 0.82 to 1.57, P=0.46), although this is based on data from only 298 patients.

Availability of the Cochrane module on pregnancy and childbirth, and guideline quality

At baseline, the Cochrane module on pregnancy and childbirth was available in six of 13 (46%) control units and six of 12 (50%) intervention units. At follow up the module was available in 10 (77%) control units; we had donated a copy to all intervention units.

There was excellent correlation between the two judges on the extent to which labour ward guidelines were based on evidence; scores agreed within four points on all but one occasion. All scores were skew distributed with median (range) in control units at baseline of 2 (0-7.5) out of a maximum of 16 which increased to 4 (0-9.5) at follow up. In intervention units the median score increased from 1.5 (0-7.8) to 2.75 (0-9.5).

Costs

The fixed cost of preparing the video was £5000, and the variable costs per visit for travel (£25), hotel accommodation (£60), staff time (£330), and sundries totalled £445. Thus, the mean cost per visit was £860 (at 1995 prices).
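The arithmetic behind the mean cost can be reconstructed as below. This sketch assumes that the fixed video cost is spread evenly over the 12 visits to intervention units; the number of visits used as the divisor is our assumption, inferred from the 12 intervention units. The sundries figure is implied by the stated total rather than reported separately.

```python
FIXED_VIDEO_COST = 5000        # pounds, one-off cost of preparing the video
VARIABLE_COST_PER_VISIT = 445  # pounds, stated total of travel, hotel, staff time, sundries
N_VISITS = 12                  # assumption: one visit per intervention unit

# Itemised variable costs; sundries is implied by the stated total.
travel, hotel, staff_time = 25, 60, 330
sundries = VARIABLE_COST_PER_VISIT - (travel + hotel + staff_time)

mean_cost_per_visit = FIXED_VIDEO_COST / N_VISITS + VARIABLE_COST_PER_VISIT
print(f"sundries: {sundries}, mean cost per visit: {mean_cost_per_visit:.2f}")
```

Under these assumptions the result is about £862, which is consistent with the reported figure of £860 after rounding.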

Discussion

Our data show encouraging trends in the number of obstetric units practising according to the evidence contained in the Cochrane module on pregnancy and childbirth. During the study period ventouse usage increased significantly more in intervention units than in control units, but there was no difference in the use of steroids for preterm delivery in either control units or intervention units. Increases in use of polyglycolic acid sutures and use of antibiotics in caesarean section over the study period were similar in control units and intervention units, with no difference attributable to the visit. Educational visits were associated with a significantly higher uptake of Cochrane review evidence relevant to only one of the four clinical practices studied.

Taking a conservative view, the educational visit led to only a modest difference between control units and intervention units in the extent to which clinical practice was based on evidence, and no change in the extent to which practice guidelines were based on evidence. This is similar to the results of a more intensive social marketing intervention to Canadian midwives by Hodnett and colleagues, though these investigators attributed their failure to excluding obstetricians.24

Internal and external validity

Our study was a rigorous randomised trial of educational visits as a method for helping obstetricians and midwives identify and implement evidence from the Cochrane module on pregnancy and childbirth in their units. We recruited, randomised, and followed up 25 of the 26 district general obstetric units in two former NHS regions. Four representative, common clinical practices linked closely to patient outcomes were assessed. The assessor was blind to unit allocation. A Hawthorne effect is unlikely as clinical practice data were obtained from notes of patients who gave birth at least 1 month before data collection, and we informed obstetric units that we were conducting a regionally coordinated audit only a fortnight beforehand. While we did not directly measure patient outcomes, we chose our four marker practices because they have been shown in systematic reviews to improve major outcomes, so were valid surrogates.10,29

Study limitations

There are several possible explanations for our failure to show much effect of the educational visits. It is possible that longer or repeated visits might work better, but these would be harder to deploy on a national scale. Single visits have worked well in the past for influencing individual clinical practices, and usually do so quite rapidly.21 Contamination between intervention units and control units is unlikely as randomisation was by hospital, rather than by patient or professional. We did not ask obstetric unit staff to nominate colleagues most influential to their education, as has been done in other studies,20 but instead targeted the obstetrician most involved with the labour ward, and the midwifery manager. These individuals may not be as academically influential as university based opinion leaders,20 but they are constantly on hand, and they are in a stronger position to identify and remove barriers to evidence based practice in their own unit than outsiders.

At baseline in 1994, two of the four marker practices were already being carried out according to Cochrane review evidence in more than half of the deliveries studied (table). This shows encouraging progress between 1990-92 (reference 8) and 1994, and achieving further increases may have been difficult because of a ceiling effect.30 The wide variation in clinical practices between units, and the marked chance differences in baseline rates for use of polyglycolic acid sutures and ventouse between control units and intervention units, made follow up rates hard to interpret (table).

In 1993 we found that the Cochrane module on pregnancy and childbirth was available in only 16% of UK district hospital obstetric units,14 but by 1994 it was available in six of our 13 (46%) control units and by 1995 in 10 (77%) of the units, suggesting that passive diffusion of the concept of evidence based medicine was taking place.31 During the study period there were several regional and national initiatives to enhance the uptake of clinical evidence, such as the Royal College of Obstetricians and Gynaecologists’ Minimum Standards of Care in Labour, which may have facilitated this.32 It is possible that our data collection at follow up occurred too early in the process of innovation31 to observe changes in clinical practice, since other changes may need to precede this, such as enhanced awareness of the role of evidence and changed unit policy. However, we did not find that written unit guidelines were more evidence based in intervention units than in control units.

Study implications

Important lessons from our study for others conducting such implementation research are firstly, that it is hard to improve on baseline rates for clinical practices of 60% or 80%. By analogy with clinical practice, where specific treatment is given only to diseased patients, our support should be focused where it is most needed. Secondly, the heterogeneity of clinical practice (rates for all practices varied between units from 0 to 100%) or the passive diffusion of innovation in control units during the study period must not be underestimated. Finally, data we shall report elsewhere show very large mismatches between the policies claimed by unit staff, their written guidelines, and actual clinical practice, emphasising the need to measure actual clinical practice and not rely on clinicians’ statements or written guidelines.33

Educational visits are clearly one effective way of implementing change in clinical practice, although this conclusion rests mainly on studies showing improved prescribing, for example of antibiotics and other drugs.21,22,34 Our study is the first randomised trial examining how to enhance the uptake of Cochrane review evidence using educational visits. Our failure to show much effect on obstetric practice does not contradict other studies of educational visits, as different mechanisms may be active when trying to change the basis on which a medical specialty rests. However, our study shows that educational visits, even to senior staff in two disciplines, are insufficient to convert an obstetric unit to evidence based practice, and that other techniques will be needed to enhance the impact of Cochrane reviews. It would be unfortunate if health services in the United Kingdom and elsewhere invested in educational visits to spread the ideas of evidence based medicine without further rigorous evaluation.

Table. Mean rates of four marker practices in 13 control, 12 intervention, and all 25 obstetric units at baseline and follow up. Figures are number (percentage) of patients in whom procedure carried out, and 95% confidence interval

| Obstetric unit | Antibiotics in caesarean sections: No of patients | Antibiotics in caesarean sections: Percentage (95% CI) | Ventouse in instrumental deliveries: No of patients | Ventouse in instrumental deliveries: Percentage (95% CI) | Polyglycolic acid in episiotomies: No of patients | Polyglycolic acid in episiotomies: Percentage (95% CI) | Steroids in preterm deliveries: No of patients | Steroids in preterm deliveries: Percentage (95% CI) | Overall mean for all practices: No of patients | Overall mean for all practices: Percentage (95% CI) |
|---|---|---|---|---|---|---|---|---|---|---|
| At baseline | | | | | | | | | | |
| Control | 196/364 | 54 (38 to 70) | 212/390 | 54 (40 to 69) | 89/354 | 25 (2 to 48) | 66/84 | 79 (61 to 96) | 563/1192 | 47 (40 to 55) |
| Intervention | 216/343 | 63 (46 to 80) | 130/360 | 36 (24 to 48) | 30/347 | 9 (1 to 16) | 47/70 | 67 (55 to 79) | 423/1120 | 38 (28 to 48) |
| Total | 412/707 | 58 (47 to 69) | 342/750 | 46 (36 to 55) | 119/701 | 17 (5 to 29) | 113/154 | 73 (63 to 84) | 986/2312 | 43 (35 to 50) |
| At follow up | | | | | | | | | | |
| Control | 221/297 | 74 (63 to 85) | 219/390 | 56 (47 to 65) | 144/351 | 41 (16 to 66) | 54/75 | 72 (61 to 83) | 638/1113 | 57 (49 to 65) |
| Intervention | 224/314 | 71 (54 to 89) | 204/360 | 57 (45 to 69) | 80/340 | 24 (5 to 42) | 43/69 | 62 (49 to 76) | 551/1083 | 51 (39 to 63) |
| Total | 445/611 | 73 (63 to 83) | 423/750 | 56 (49 to 64) | 224/691 | 32 (17 to 48) | 97/144 | 67 (59 to 76) | 1189/2196 | 54 (47 to 61) |

Acknowledgments

We thank Iain Chalmers, Mark Starr, and David Spiegelhalter for support, Liz Byford and Val Dineen for data collection, and all clinicians who collaborated.

Editorial by Keirse

Footnotes

Funding: This study was funded by regional research implementation initiatives of the North Thames and South Thames regional health authorities; the Imperial Cancer Research Fund; and North Staffordshire Hospital Trust.

Conflict of interest: None.

References

- 1.Peto R, Collins R, Gray R. Large-scale randomised evidence: large, simple trials and overviews of trials. Annals NY Acad Sci. 1993;703:314–340. doi: 10.1111/j.1749-6632.1993.tb26369.x. [DOI] [PubMed] [Google Scholar]

- 2.Sackett DL, Rosenberg WM, Gray JAM, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn’t. BMJ. 1996;312:71–72. doi: 10.1136/bmj.312.7023.71. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Antman E, Lau J, Kupelnick B, Mosteller F, Chalmers T. A comparison of the results of meta-analysis of randomised controlled trials and recommendations of clinical experts. JAMA. 1992;268:240–248. [PubMed] [Google Scholar]

- 4.Stross J. Relationship between knowledge and experience in use of anti-rheumatics. JAMA. 1989;262:2721–2723. [PubMed] [Google Scholar]

- 5.Bath PMW, Prasad A, Brown MM, MacGregor GA. Survey of use of anticoagulation in patients with atrial fibrillation. BMJ. 1993;307:1045. doi: 10.1136/bmj.307.6911.1045. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.House of Commons Health Committee. Maternity services. London: HMSO; 1992. [Google Scholar]

- 7.Steer P. Rituals in antenatal care: do we need them? BMJ. 1993;307:697–698. doi: 10.1136/bmj.307.6906.697. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Donaldson LJ. Maintaining excellence: the preservation and development of specialised services. BMJ. 1992;305:1280–1284. doi: 10.1136/bmj.305.6864.1280. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Penn Z, Steer P. How obstetricians manage the problem of preterm delivery with special reference to preterm breech. Br J Obs Gynaecol. 1991;98:531–534. doi: 10.1111/j.1471-0528.1991.tb10365.x. [DOI] [PubMed] [Google Scholar]

- 10.Enkin MW, Keirse MJNC, Renfrew MJ, Neilson JP, editors. Cochrane collaboration module on pregnancy and childbirth [Updated 1995]. Cochrane Collaboration; Issue 2. Oxford: Update Software; 1995. [Google Scholar]

- 11.Wyatt J. Use and sources of medical knowledge. Lancet. 1991;338:1368–1373. doi: 10.1016/0140-6736(91)92245-w. [DOI] [PubMed] [Google Scholar]

- 12.Haines A, Jones R. Implementing the findings of research. BMJ. 1994;308:1488–1492. doi: 10.1136/bmj.308.6942.1488. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Lomas J. Teaching old (and not so old) docs new tricks: Effective ways to implement research findings. In: Dunn E, Norton P, Stewart M, Bass M, Tudiver F, editors. Disseminating new knowledge and having an impact on practice. Newbury Park: Sage Press; 1994. [Google Scholar]

- 14.Paterson-Brown S, Wyatt J, Fisk N. Are clinicians interested in up-to-date reviews of effective care? BMJ. 1993;307:1464. doi: 10.1136/bmj.307.6917.1464. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Paterson-Brown S, Fisk N, Wyatt J. Uptake of meta-analytical overviews of effective care in English obstetric units. Br J Obstet Gynaecol. 1995;102:297–301. doi: 10.1111/j.1471-0528.1995.tb09135.x. [DOI] [PubMed] [Google Scholar]

- 16. Evans CE, Haynes RB, Birkett NJ, Gilbert JR, Taylor DW, Sackett DL, et al. Does a mailed continuing education package improve physician performance? Results of a randomised trial. JAMA. 1986;255:501–504.

- 17. Davis DA, Thomson MA, Oxman AD, Haynes RB. A systematic review of the effect of continuing medical education strategies. JAMA. 1995;274:700–705. doi: 10.1001/jama.274.9.700.

- 18. Oxman AD, Thomson MA, Davis DA, Haynes RB. No magic bullets: a systematic review of 102 trials of interventions to improve professional practice. Can Med Assoc J. 1995;153:1423–1431.

- 19. Greco PJ, Eisenberg JM. Changing physicians’ practices. New Engl J Med. 1993;329:1271–1273. doi: 10.1056/NEJM199310213291714.

- 20. Lomas J, Enkin M, Anderson GM, Hannah WJ, Vayda E, Singer J. Opinion leaders vs audit and feedback to implement practice guidelines. JAMA. 1991;265:2202–2207.

- 21. Thomson MA, Oxman AD, Davis DA, Haynes RB, Freemantle N, Harvey EL. Outreach visits to improve health professional practice and patient outcomes. In: Bero L, Grilli R, Grimshaw J, Oxman A, editors. Collaboration on effective professional practice module, Cochrane Database of Systematic Reviews [updated 1 September 1997]. The Cochrane Library [database on disk and CD ROM]. Cochrane Collaboration; Issue 4. Oxford: Update Software; 1997. Updated quarterly.

- 22. Soumerai SB, Avorn J. Principles of educational outreach (‘academic detailing’) to improve clinical decision-making. JAMA. 1990;263:549–556.

- 23. de Burgh S, Mant A, Mattick RP, Donnely N, Hall W, Bridges-Webb C. A controlled trial of educational visiting to improve benzodiazepine prescribing in general practice. Aust J Public Health. 1995;19:142–148. doi: 10.1111/j.1753-6405.1995.tb00364.x.

- 24. Hodnett E, Kaufman K, O’Brien-Pallas L, Chipman M, Watson-McDonell J, Hunsberger W. A strategy to promote research-based nursing care: effects on childbirth outcomes. Res Nurs Health. 1996;19:13–20. doi: 10.1002/(SICI)1098-240X(199602)19:1<13::AID-NUR2>3.0.CO;2-O.

- 25. Johanson R. Promoting effective care in pregnancy and childbirth. Stoke on Trent: Keele University Audiovisual Services; 1994. [Video.]

- 26. Benbow A, Semple D, Maresh M. Effective procedures in maternity care suitable for audit. London: Royal College of Obstetricians and Gynaecologists; 1997.

- 27. Bland JM, Altman DG. Statistical methods for assessing agreement between two methods of clinical measurement. Lancet. 1986;1:307–310.

- 28. Whiting-O’Keefe QE, Henke C, Simborg DW. Choosing the correct unit of analysis in medical care experiments. Med Care. 1984;22:1101–1114. doi: 10.1097/00005650-198412000-00005.

- 29. Mant J, Hicks N. Detecting differences in quality of care: the sensitivity of measures of process and outcome in treating acute myocardial infarction. BMJ. 1995;311:793–796. doi: 10.1136/bmj.311.7008.793.

- 30. Streiner DL, Norman GR. Health measurement scales: a practical guide to their development and use. Oxford: Oxford University Press; 1993. p. 80.

- 31. Rogers EM. Diffusion of innovations, 3rd ed. New York: Free Press; 1983.

- 32. Royal College of Obstetricians and Gynaecologists. Minimum standards of care in labour: report of a working party. London: RCOG; 1994.

- 33. Clinical Standards Advisory Group. Women in normal labour. London: HMSO; 1995.

- 34. Soumerai SB, McLaughlin TJ, Avorn J. Improving drug prescribing in primary care: a critical analysis of the experimental literature. Milbank Q. 1989;67:268–317.