Abstract

Performance evaluations of health services providers burgeon. Similarly, analyzing spatially related health information, ranking teachers and schools, and identification of differentially expressed genes are increasing in prevalence and importance. Goals include valid and efficient ranking of units for profiling and league tables, identification of excellent and poor performers, the most differentially expressed genes, and determining “exceedances” (how many and which unit-specific true parameters exceed a threshold). These data and inferential goals require a hierarchical, Bayesian model that accounts for nesting relations and identifies both population values and random effects for unit-specific parameters. Furthermore, the Bayesian approach coupled with optimizing a loss function provides a framework for computing non-standard inferences such as ranks and histograms.

Estimated ranks that minimize Squared Error Loss (SEL) between the true and estimated ranks have been investigated. The posterior mean ranks minimize SEL and are “general purpose,” relevant to a broad spectrum of ranking goals. However, other loss functions and optimizing ranks that are tuned to application-specific goals require identification and evaluation. For example, when the goal is to identify the relatively good (e.g., in the upper 10%) or relatively poor performers, a loss function that penalizes classification errors produces estimates that minimize the error rate. We construct loss functions that address this and other goals, developing a unified framework that facilitates generating candidate estimates, comparing approaches and producing data analytic performance summaries. We compare performance for a fully parametric, hierarchical model with Gaussian sampling distribution under Gaussian and a mixture of Gaussians prior distributions. We illustrate approaches via analysis of standardized mortality ratio data from the United States Renal Data System.

Results show that SEL-optimal ranks perform well over a broad class of loss functions but can be improved upon when classifying units above or below a percentile cut-point. Importantly, even optimal rank estimates can perform poorly in many real-world settings; therefore, data-analytic performance summaries should always be reported.

Keywords: percentiling, Bayesian models, decision theory, operating characteristic

1 Introduction

Performance evaluation burgeons in many areas including health services (Goldstein and Spiegelhalter 1996; Christiansen and Morris 1997; Normand et al. 1997; McClellan and Staiger 1999; Landrum et al. 2000, 2003; Daniels and Normand 2006; Austin and Tu 2006), drug evaluation (DuMouchel 1999), disease mapping (Devine and Louis 1994; Devine et al. 1994; Conlon and Louis 1999; Wright et al. 2003; Diggle et al. 2006), and education (Lockwood et al. 2002; Draper and Gittoes 2004; McCaffrey et al. 2004; Rubin et al. 2004; Tekwe et al. 2004; Noell and Burns 2006). Goals of such investigations include valid and efficient estimation of population parameters such as average performance (over clinics, physicians, health service regions or other “units of analysis”), estimation of between-unit variation (variance components) and unit-specific evaluations. The latter include estimating unit-specific performance, computing the probability that a unit's true, underlying performance is in a specific region, ranking units for use in profiling and league tables (Goldstein and Spiegelhalter 1996), and identifying excellent and poor performers.

Bayesian models coupled with optimizing a loss function provide a framework for computing non-standard inferences such as ranks and histograms and producing data-analytic performance assessments. Inferences depend on the posterior distribution, and how the posterior is used should depend on inferential goals. Gelman and Price (1999) showed that no single set of estimates can simultaneously optimize loss functions targeting the unit-specific parameters (e.g., unit-specific means, optimized by the posterior mean) and those targeting the ranks of these parameters. For example, as Shen and Louis (1998) and Liu et al. (2004) showed, ranking the unit-specific maximum likelihood estimates (MLEs) performs poorly, as does ranking Z-scores for testing whether a unit's mean equals the population mean. In some situations, ranking the posterior means of unit-specific parameters can perform well, but in general an optimal approach to estimating ranks is needed.

In the Shen and Louis (1998) approach, SEL operates on the difference between the estimated and true ranks. But, in many applications interest focuses on identifying the relatively good (e.g., in the upper 10%) or relatively poor performers, a down/up classification. For example, quality improvement initiatives should be targeted at health care providers that have the highest likelihood of being the poorest performers; geography-specific, environmental assessments should be targeted at the most likely high incidence locations (Wright et al. 2003); genes with differential expression in the top 1% (say) should be selected for further study.

We construct new loss functions that focus on down/up classification and derive the optimizers for a subset of them. We develop connections between the new optimizers and others in the literature; report performance evaluations among the new ranking methods and other candidates; and identify appropriate uncertainty assessments, including a new performance measure. We evaluate performance for a fully parametric hierarchical model with unit-specific Gaussian sampling distributions and assuming either a Gaussian or a mixture of Gaussians prior. We evaluate performance and robustness under the prior and loss function that were used to generate the ranks as well as under other priors and loss functions. Shen and Louis (1998) showed that when the posterior distributions are stochastically ordered, maximum likelihood estimate based ranks, posterior mean based ranks, SEL-optimal ranks and those based on most other rank-specific loss functions are identical. We report performance assessments for the stochastically ordered case and compare approaches for situations when the posterior distributions are not stochastically ordered. We illustrate approaches using Standardized Mortality Ratio (SMR) data from the United States Renal Data System (USRDS).

2 The two-stage, Bayesian hierarchical model

We consider a two-stage model with independent identically distributed (iid) sampling from a known prior G with density g and possibly different unit-specific sampling distributions fk:

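$$\theta_1, \ldots, \theta_K \overset{iid}{\sim} G, \qquad Y_k \mid \theta_k \overset{ind}{\sim} f_k(Y_k \mid \theta_k), \quad k = 1, \ldots, K. \tag{1}$$

From model (1) we can derive the independent (ind) posterior distributions for Bayesian inferences:

$$\theta_k \mid Y_k \overset{ind}{\sim} g_k(\theta_k \mid Y_k) = \frac{f_k(Y_k \mid \theta_k)\, g(\theta_k)}{\int f_k(Y_k \mid s)\, g(s)\, ds},$$

with posterior cdf Gk(t | Yk).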

For computational convenience, we assume that the θs are iid, though model (1) can be generalized to allow a regression structure in the prior and extended to three stages. Our theoretical results hold for these more general situations.

2.1 Loss functions and decisions

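Let θ = (θ1, …, θK) and Y = (Y1, …, YK). For a loss function L(θ, a), the optimal Bayesian a(Y) minimizes the posterior Bayes risk,

$$R_G(\mathbf{a}(\mathbf{Y}), \mathbf{Y}) = E_G\left[L(\boldsymbol\theta, \mathbf{a}(\mathbf{Y})) \mid \mathbf{Y}\right],$$

and thereby the pre-posterior risk

$$R_G(\mathbf{a}) = E_{f_G}\left[R_G(\mathbf{a}(\mathbf{Y}), \mathbf{Y})\right],$$

where fG denotes the marginal distribution of Y. Also, for any a(Y) we can compute the frequentist risk:

$$R(\boldsymbol\theta, \mathbf{a}) = E\left[L(\boldsymbol\theta, \mathbf{a}(\mathbf{Y})) \mid \boldsymbol\theta\right].$$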

3 Ranking

Laird and Louis (1989) represented the ranks by,

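$$R_k(\boldsymbol\theta) = \operatorname{rank}(\theta_k) = \sum_{j=1}^{K} I\{\theta_k \ge \theta_j\}, \tag{3}$$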

with the smallest θ having rank 1 and the largest having rank K. The non-linear form of (3) implies that, in general, the optimal ranks are neither the ranks of the observed data nor the ranks of the posterior means of the θs. A loss function is necessary to formalize developing estimates and related uncertainties.

3.1 Squared-error loss (SEL)

Squared error loss (SEL) is the most common loss function used in estimation. It is optimized by the posterior mean of the target parameter. For example, under model (1), with the unit-specific θs as the target, the loss function is L(θ, a) = (θ − a)² and the optimal estimator is the posterior mean (PM), E(θk | Y).

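When ranks are the target, SEL-optimal ranks are produced by minimizing

$$\hat L = \hat L(\mathbf{R}^{est}, \mathbf{R}(\boldsymbol\theta)) = \frac{1}{K}\sum_{k=1}^{K}\left(R^{est}_k - R_k(\boldsymbol\theta)\right)^2, \tag{4}$$

which is achieved by setting Rk^est equal to

$$\bar R_k(\mathbf{Y}) = E_G[R_k(\boldsymbol\theta) \mid \mathbf{Y}] = \sum_{j=1}^{K} \mathrm{pr}_G(\theta_k \ge \theta_j \mid \mathbf{Y}). \tag{5}$$

The R̄k are shrunk towards the mid-rank (K + 1)/2, and generally are not integers (Shen and Louis 1998). Optimal integer ranks are obtained by ranking them:

$$\hat R_k(\mathbf{Y}) = \operatorname{rank}\left(\bar R_k(\mathbf{Y})\right). \tag{6}$$

See Appendix A for additional details on producing optimal ranks under weighted SEL.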
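As a sketch of how (5) and (6) might be computed in practice, the Python snippet below approximates R̄k by averaging within-draw ranks over Monte Carlo draws from the posterior, then ranks the R̄k. It assumes an (M, K) array of joint posterior draws of θ; the function names and the Gaussian posteriors in the usage lines are illustrative assumptions, not part of the original analysis.

```python
import numpy as np


def ranks_within_draws(theta_draws):
    """Rank the K units within each posterior draw, as in (3); the smallest theta gets rank 1."""
    return np.argsort(np.argsort(theta_draws, axis=1), axis=1) + 1


def sel_optimal_ranks(theta_draws):
    """Return (R_bar, R_hat): Monte Carlo posterior mean ranks (5) and their integer ranks (6)."""
    draw_ranks = ranks_within_draws(theta_draws)   # (M, K) posterior draws of R_k
    R_bar = draw_ranks.mean(axis=0)                 # estimates E[R_k | Y]
    R_hat = np.argsort(np.argsort(R_bar, kind="stable"), kind="stable") + 1
    return R_bar, R_hat


# Usage: four units with independent Gaussian posteriors of unequal spread (illustrative numbers).
rng = np.random.default_rng(0)
post_mean = np.array([-1.0, 0.0, 0.2, 1.5])
post_sd = np.array([0.3, 2.0, 0.3, 0.5])
theta_draws = rng.normal(post_mean, post_sd, size=(4000, 4))
R_bar, R_hat = sel_optimal_ranks(theta_draws)
print(R_bar, R_hat)
```

Because the ranks in every draw sum to K(K + 1)/2, so do the R̄k; they are shrunk toward the mid-rank and are generally not integers, which is why the final step ranks them.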

3.2 Notation

Henceforth, we drop dependency on θ and omit conditioning on Y whenever this does not cause confusion. For example, Rk stands for Rk(θ) and R̂k stands for R̂k(Y). Furthermore, use of the ranks facilitates notation in mathematical proofs, but percentiles

$$P_k = \frac{R_k}{K+1}, \qquad \hat P_k = \frac{\hat R_k}{K+1}, \qquad P^{est}_k = \frac{R^{est}_k}{K+1} \tag{7}$$

normalize large sample performance and aid in communication. For example, Lockwood et al. (2002) showed that mean square error (MSE) for percentiles rapidly converges to a function that does not depend on K; the same normalization strategy applies in the loss functions below.

4 Upper 100(1 − γ)% loss functions

L̂ (equation (4), computed on the percentile scale (7)) evaluates general performance without specific attention to identifying the relatively well or poorly performing units. To attend to this goal, for 0 < γ < 1 we investigate loss functions that focus on identifying the upper 100(1 − γ)% of the units, with loss depending on the correctness of classification and, possibly, a distance penalty; identification of the lower 100γ% group is similar. For notational convenience, we assume that γK is an integer, so γ(K + 1) is not an integer and in the following it is not necessary to distinguish between (>, ≥) or (<, ≤).

4.1 Summed, unit-specific loss functions

For 0 < γ < 1, let

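$$AB_k(\gamma, \mathbf{P}, \mathbf{P}^{est}) = I\{P_k > \gamma,\ P^{est}_k < \gamma\}, \qquad BA_k(\gamma, \mathbf{P}, \mathbf{P}^{est}) = I\{P_k < \gamma,\ P^{est}_k > \gamma\}. \tag{8}$$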

ABk and BAk indicate the two possible modes of misclassification. ABk indicates that the true percentile is above the cutoff, but the estimated percentile is below the cutoff. Similarly, BAk indicates that the true percentile is below the cutoff while the estimated percentile is above it.

For p, q, c ≥ 0 define,

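$$\begin{aligned}
\tilde L(\gamma, p, q, c) &= \frac{1}{K}\sum_{k=1}^{K}\left\{|\gamma - P^{est}_k|^{p}\, AB_k + c\,|P^{est}_k - \gamma|^{q}\, BA_k\right\}\\
L^{\dagger}(\gamma, p, q, c) &= \frac{1}{K}\sum_{k=1}^{K}\left\{|P_k - \gamma|^{p}\, AB_k + c\,|\gamma - P_k|^{q}\, BA_k\right\}\\
L^{\ddagger}(\gamma, p, q, c) &= \frac{1}{K}\sum_{k=1}^{K}\left\{|P_k - P^{est}_k|^{p}\, AB_k + c\,|P^{est}_k - P_k|^{q}\, BA_k\right\}\\
L_{0/1}(\gamma) &= \frac{1}{K}\sum_{k=1}^{K}\left\{AB_k + BA_k\right\} = \tilde L(\gamma, 0, 0, 1) = L^{\dagger}(\gamma, 0, 0, 1) = L^{\ddagger}(\gamma, 0, 0, 1)
\end{aligned} \tag{9}$$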

The loss functions confer no penalty if the pair of estimated and true unit-specific percentiles, (Pk^est, Pk), are either both above or both below the γ cut point. If they are on different sides of γ, L̃ penalizes by an amount that depends on the distance of the estimated percentile from γ, L† by the distance of the true percentile from γ, and L‡ by the distance between the true and estimated percentiles. Parameters p and q adjust the intensity of the penalties; p ≠ q and c ≠ 1 allow for different penalties for the two kinds of misclassification. L0/1(γ) is the proportion of misclassified units and is equivalent to setting p = q = 0, c = 1; we use this relation in its definition. In practice, L† and L‡ would be harder to use than L̃ because their penalizing quantities depend on the unknown Pk. However, including them in our investigation helps to calibrate the robustness of other estimators.

Our mathematical analyses apply to the general loss functions (9), but our simulations are conducted for p = q = 2, c = 1. Within this setting, we denote the first three loss functions in (9) as L̃(γ), L†(γ) and L‡(γ).
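The loss functions in (8) and (9) translate directly into code. The sketch below is a plain transcription for one set of true and estimated percentiles, with the simulation settings p = q = 2, c = 1 as defaults; the function name upper_gamma_losses is hypothetical.

```python
import numpy as np


def upper_gamma_losses(P_true, P_est, gamma, p=2.0, q=2.0, c=1.0):
    """Evaluate the loss functions in (9) for one set of true and estimated percentiles."""
    P_true = np.asarray(P_true, dtype=float)
    P_est = np.asarray(P_est, dtype=float)
    # Misclassification indicators of (8)
    AB = ((P_true > gamma) & (P_est < gamma)).astype(float)   # truly above, estimated below
    BA = ((P_true < gamma) & (P_est > gamma)).astype(float)   # truly below, estimated above
    return {
        "L_tilde": np.mean(np.abs(gamma - P_est) ** p * AB + c * np.abs(P_est - gamma) ** q * BA),
        "L_dagger": np.mean(np.abs(P_true - gamma) ** p * AB + c * np.abs(gamma - P_true) ** q * BA),
        "L_ddagger": np.mean(np.abs(P_true - P_est) ** p * AB + c * np.abs(P_est - P_true) ** q * BA),
        "L_01": np.mean(AB + BA),
    }


# Usage: K = 5 units and gamma = 0.6 (so gamma*K = 3); units 3 and 4 swap sides of the cut point.
K = 5
P_true = np.arange(1, K + 1) / (K + 1)
P_est = np.array([1, 2, 4, 3, 5]) / (K + 1)
losses = upper_gamma_losses(P_true, P_est, gamma=0.6)
print(losses)
```

In this toy case one unit is of each misclassification type, so L0/1 = 2/5, and L‡ charges each of the two errors (1/6)², the squared distance between adjacent percentiles.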

We do not investigate the “all or nothing, experiment-wise” loss function, with zero loss when all units are correctly classified as above γ or below γ and a penalty of 1 if any unit is misclassified. While this loss function is one of the most fundamental and provides the framework for many multiple comparison methods, it does not provide a good guideline in our ranking problem. Finding the optimal classification is computationally challenging and there will be many nearly optimal solutions. Furthermore, in the spirit of the false discovery rate, loss functions that compute average performance over units or a subset of units are more appropriate in most applications.

5 Optimizers and other candidate ranks

We find ranks/percentiles that optimize L0/1 and L̃ and study an estimator that performs well for L‡, but is not optimal. First, note that optimizers for the loss functions in (9) and L̂ are equal when the posterior distributions are stochastically ordered (the Gk(t | Yk) never cross). So, in this case P̂k, which minimizes L̂ (see equations (4), (6) and (7)), is optimal for a broad class of loss functions (see Theorem 4). Also, it is straightforward to show that all rank/percentile estimators operating through the posterior distribution of the ranks are monotone transform invariant; that is, they are unchanged by monotone transforms of the target parameter.

5.1 Optimizing L0/1

Theorem 1

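L0/1 loss is minimized by

$$\tilde R_k(\gamma) = \operatorname{rank}\{\mathrm{pr}(P_k \ge \gamma \mid \mathbf{Y})\}, \qquad \tilde P_k(\gamma) = \frac{\tilde R_k(\gamma)}{K+1}. \tag{10}$$

These are not unique optimizers.

See Appendix B.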
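Theorem 1 suggests a simple Monte Carlo recipe: estimate pr(Pk ≥ γ | Y) as the fraction of joint posterior draws in which unit k lands at or above the γ percentile, then rank those fractions. This is a sketch assuming joint posterior draws are available; p_tilde is a hypothetical name. Because γK is an integer, exactly (1 − γ)K units exceed γ in every draw, so the estimated probabilities sum to (1 − γ)K.

```python
import numpy as np


def p_tilde(theta_draws, gamma):
    """Monte Carlo version of (10): percentiles obtained by ranking pr(P_k >= gamma | Y)."""
    K = theta_draws.shape[1]
    P_draws = (np.argsort(np.argsort(theta_draws, axis=1), axis=1) + 1) / (K + 1)
    exceed = (P_draws >= gamma).mean(axis=0)            # estimates pr(P_k >= gamma | Y)
    R_tilde = np.argsort(np.argsort(exceed, kind="stable"), kind="stable") + 1
    return R_tilde / (K + 1), exceed


# Usage: K = 10 units, gamma = 0.8; every other unit has a diffuse posterior (illustrative).
rng = np.random.default_rng(1)
K, gamma = 10, 0.8
post_mean = np.linspace(-1.0, 1.0, K)
post_sd = np.where(np.arange(K) % 2 == 0, 0.2, 1.5)
theta_draws = rng.normal(post_mean, post_sd, size=(4000, K))
P_tilde, exceed = p_tilde(theta_draws, gamma)
print(np.round(exceed, 3), P_tilde)
```

Comparing P_tilde with SEL-optimal percentiles computed from the same draws is one way to see where the two criteria disagree when posterior variances differ.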

5.2 Optimizing L̃

Theorem 2

The P̃k(γ) optimize L̃. In Appendix C, we show in detail that the P̃k(γ) are also optimal for more general loss functions with the distance penalties replaced by any nondecreasing functions of |Pk^est − γ|. The proof has three steps: first, classify the units into (above γ)/(below γ) groups; second, inside each group, rewrite the posterior risk as the inner product of the discrepancy vector and the misclassification probability vector; third, repeatedly use the rearrangement inequality (Hardy et al. 1967) to minimize the inner product.

The Normand et al. (1997) estimate

Normand et al. (1997) proposed using the posterior probability pr(θk > t | Y), and ranks based on it, to compare the performance of medical care providers. The cut point t is an application-relevant threshold. Using this approach, we define P*k(γ) with a properly chosen cut point t and show that P*k(γ) is essentially identical to P̃k(γ).

Definition of P*k(γ): Let

$$\bar G_{\mathbf{Y}}(t) = \frac{1}{K}\sum_{k=1}^{K} G_k(t \mid \mathbf{Y}), \tag{11}$$

the posterior expected empirical distribution function of the θs, and define P*k(γ) as the percentiles produced by ranking the pr(θk ≥ ḠY^{−1}(γ) | Y). Section 6.2 gives a relation among P̂k, P̃k(γ) and P*k(γ). Theorems 5 and 6 show that P̃k(γ) is asymptotically equivalent to P*k(γ).

By making a direct link to the original θ scale, P*k(γ) is straightforward to explain and interpret. Furthermore, for a desired accuracy, computing P*k(γ) is substantially faster than computing P̃k(γ), since the former requires only accurate computation of the individual posterior distributions and of ḠY, whereas the latter requires the posterior distribution of the ranks.
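To illustrate why the estimate defined through (11) is cheap, the sketch below assumes Gaussian posteriors (as in the Gaussian-Gaussian model of Section 8.1), so each Gk(t | Y) is available in closed form. It averages the posterior cdfs to get ḠY, inverts ḠY by bisection (our choice of root finder, not one prescribed by the method), and ranks the resulting exceedance probabilities; no draws of the joint rank distribution are needed. All function names are hypothetical.

```python
import math

import numpy as np


def norm_cdf(x):
    """Standard normal cdf via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def g_bar(t, post_mean, post_sd):
    """G-bar_Y(t) of (11): the average of the unit-specific posterior cdfs at t."""
    return float(np.mean([norm_cdf((t - m) / s) for m, s in zip(post_mean, post_sd)]))


def g_bar_inverse(gamma, post_mean, post_sd, tol=1e-10):
    """Solve G-bar_Y(t) = gamma by bisection (G-bar_Y is continuous and increasing)."""
    lo = min(m - 10.0 * s for m, s in zip(post_mean, post_sd))
    hi = max(m + 10.0 * s for m, s in zip(post_mean, post_sd))
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g_bar(mid, post_mean, post_sd) < gamma:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def p_star(post_mean, post_sd, gamma):
    """Percentiles from ranking pr(theta_k >= G-bar_Y^{-1}(gamma) | Y)."""
    t = g_bar_inverse(gamma, post_mean, post_sd)
    exceed = np.array([1.0 - norm_cdf((t - m) / s) for m, s in zip(post_mean, post_sd)])
    ranks = np.argsort(np.argsort(exceed, kind="stable"), kind="stable") + 1
    return ranks / (len(exceed) + 1), exceed, t


# Usage: five units with Gaussian posteriors (illustrative numbers).
post_mean = [-1.0, -0.2, 0.0, 0.4, 1.2]
post_sd = [0.5, 1.5, 0.3, 0.5, 0.4]
P_star, exceed, t_gamma = p_star(post_mean, post_sd, gamma=0.6)
print(np.round(exceed, 3), P_star, round(t_gamma, 4))
```

Since the exceedance probabilities are 1 − Gk(t | Y) at t = ḠY^{−1}(γ), they sum to K(1 − γ), mirroring the fact that (1 − γ)K units lie above γ.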

5.3 Optimizing L†

Appendix D presents an optimization procedure for the case p = q = 2, −∞ < c < ∞. However, other than brute force (complete enumeration), we have not found an algorithm for general (p, q, c). As for L0/1, performance depends only on the optimal allocation into the (above γ)/(below γ) groups. Additional criteria are needed to specify the within-group order.

5.4 Optimizing L‡

We have not found a simple means of optimizing this loss function, but Appendix E develops a helpful relation.

5.5 Other ranking estimators

Traditional rank estimators include ranks based on maximum likelihood estimates, those based on posterior means of the θs and those based on hypothesis testing statistics (Z-scores, P-values). MLE-based ranks are monotone transform invariant, but the others are not. As shown in Liu et al. (2004), MLE-based ranks will tend to give units with relatively large variances extreme ranks, while hypothesis testing statistics based ranks will tend to place units with relatively small variances at the extremes. Though not an optimal solution to this problem, modified hypothesis testing statistics moderate this shortcoming by reducing the ratio of the variances (Tusher et al. 2001; Efron et al. 2001).

A two stage ranking estimator

Ranking estimator P̃k(γ) optimizes the (above γ)/(below γ) misclassification loss L0/1, and P̂k optimizes L̂, which penalizes the distance |Pk^est − Pk|. A convex combination loss function, Lw = wL̂ + (1 − w)L0/1 with 0 ≤ w ≤ 1, thus addresses both inferential goals, as L‡ similarly does, and motivates P̆k(γ), a two stage hybrid ranking estimator.

Definition of P̆k(γ): Use P̃k(γ) to classify units into (above γ)/(below γ) percentile groups. Then, within each percentile group, order the estimates by P̂k.

Theorem 3

P̆k(γ) minimizes L0/1 and, conditional on this (above γ)/(below γ) classification, produces optimal SEL estimates within the two groups.

See Appendix F.

Furthermore, it is straightforward to show that for Lw there exists a w1 > 0 such that for all w ≤ w1, P̆k(γ) is optimal, and there exists a w2 < 1 such that for all w ≥ w2, P̂k is optimal.
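A sketch of the two-stage hybrid from joint posterior draws, assuming γK is an integer: stage 1 places the (1 − γ)K units with the largest pr(Pk ≥ γ | Y) in the upper group, which is the classification the ranking in (10) produces; stage 2 orders units within each group by their posterior mean ranks R̄k, as P̂k does. The function name and the simulated posteriors are illustrative.

```python
import numpy as np


def two_stage_percentiles(theta_draws, gamma):
    """Two-stage hybrid: classify by pr(P_k >= gamma | Y), then order within groups by R_bar_k."""
    K = theta_draws.shape[1]
    ranks = np.argsort(np.argsort(theta_draws, axis=1), axis=1) + 1
    R_bar = ranks.mean(axis=0)                               # posterior mean ranks (5)
    exceed = (ranks / (K + 1) >= gamma).mean(axis=0)         # pr(P_k >= gamma | Y)
    n_above = K - int(round(gamma * K))                      # (1 - gamma)K units above gamma
    # Stage 1: the n_above units with the largest exceedance probabilities form the upper group.
    upper = np.zeros(K, dtype=bool)
    upper[np.argsort(-exceed, kind="stable")[:n_above]] = True
    # Stage 2: within each group, order the units by their posterior mean ranks.
    R_two = np.empty(K, dtype=int)
    low_idx, up_idx = np.flatnonzero(~upper), np.flatnonzero(upper)
    R_two[low_idx[np.argsort(R_bar[low_idx], kind="stable")]] = np.arange(1, K - n_above + 1)
    R_two[up_idx[np.argsort(R_bar[up_idx], kind="stable")]] = np.arange(K - n_above + 1, K + 1)
    return R_two / (K + 1), upper


# Usage: K = 10, gamma = 0.8, with alternating tight and diffuse posteriors (illustrative).
rng = np.random.default_rng(2)
K, gamma = 10, 0.8
post_mean = np.linspace(-1.0, 1.0, K)
post_sd = np.where(np.arange(K) % 2 == 0, 0.2, 1.5)
theta_draws = rng.normal(post_mean, post_sd, size=(4000, K))
P_two, upper = two_stage_percentiles(theta_draws, gamma)
print(P_two, upper)
```

Keeping the two stages separate makes the design explicit: stage 1 alone fixes the L0/1 performance, and stage 2 only rearranges units on the same side of γ.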

6 Relations among estimators

In this section we develop relations among the estimators, using ranks or percentiles, whichever is more convenient in context.

6.1 A general relation

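Let ν = [γK] and define

$$\tilde R^{*}_k(\nu) = c_\nu\, \mathrm{pr}(R_k \ge \nu \mid \mathbf{Y}), \qquad c_\nu = \frac{K(K+1)}{2(K - \nu + 1)}.$$

Then the ranked R̃*k(ν) equal the ranked pr(Rk ≥ ν) and so each generates the R̃k(γ). Note that cν is a constant used to standardize R̃*k(ν) such that:

$$\sum_{k=1}^{K} \tilde R^{*}_k(\nu) = \sum_{k=1}^{K} \bar R_k = \frac{K(K+1)}{2}.$$

Because Σν I{Rk ≥ ν} = Rk,

$$\bar R_k = \sum_{\nu=1}^{K} \mathrm{pr}(R_k \ge \nu \mid \mathbf{Y}) = \sum_{\nu=1}^{K} c_\nu^{-1}\, \tilde R^{*}_k(\nu), \qquad \sum_{\nu=1}^{K} c_\nu^{-1} = 1.$$

Theorem 4

R̄k is a linear (indeed convex) combination of the R̃*k(ν) with respect to ν, and so for any convex loss function the R̄k outperform the R̃*k(ν) for at least one value of ν = 1,…,K. For SEL, R̄k dominates R̃*k(ν) for all ν. As shown in Appendix A, the R̂k = rank(R̄k) also dominate rank(R̃*k(ν)) for all ν.

See Appendix G.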
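The identity underlying Theorem 4 can be checked numerically. In every posterior draw Σν I{Rk ≥ ν} = Rk, so R̄k = Σν pr(Rk ≥ ν | Y). The sketch assumes the standardizing constants cν = K(K + 1)/{2(K − ν + 1)}, which make each rescaled set sum to K(K + 1)/2 and whose reciprocals sum to 1, so R̄k is a weighted average of the rescaled exceedance probabilities. The simulated posteriors are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(3)
M, K = 5000, 6
post_mean = rng.normal(size=K)
post_sd = rng.uniform(0.3, 2.0, size=K)
theta_draws = rng.normal(post_mean, post_sd, size=(M, K))
ranks = np.argsort(np.argsort(theta_draws, axis=1), axis=1) + 1   # posterior draws of R_k

R_bar = ranks.mean(axis=0)
nus = np.arange(1, K + 1)
exceed = np.array([(ranks >= nu).mean(axis=0) for nu in nus])   # row nu-1: pr(R_k >= nu | Y)
c = K * (K + 1) / (2.0 * (K - nus + 1))                           # assumed standardizing constants
R_star = c[:, None] * exceed                                      # each row sums to K(K+1)/2
weights = 1.0 / c                                                 # nonnegative weights over nu

# R_bar is the weighted average of the rescaled exceedance rankings.
print(np.allclose(weights @ R_star, R_bar))
```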

6.2 Relating P̂k, P̃k(γ) and P*k(γ)

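From (3), (5) and (11), and the posterior independence of the θk, we have that,

$$E[R_k \mid \theta_k, \mathbf{Y}] = 1 + \sum_{j \ne k} G_j(\theta_k \mid \mathbf{Y}) = K\bar G_{\mathbf{Y}}(\theta_k) + 1 - G_k(\theta_k \mid \mathbf{Y}), \qquad \bar R_k = E\left\{E[R_k \mid \theta_k, \mathbf{Y}] \mid \mathbf{Y}\right\}.$$

The P*k(γ) are generated by ranking the pr(θk ≥ ḠY^{−1}(γ) | Y), which is equivalent to ranking the pr(ḠY(θk) ≥ γ | Y). By the foregoing, KḠY(θk) ≤ E[Rk | θk, Y] ≤ KḠY(θk) + 1, so this is essentially equivalent to ranking the pr(E[Rk | θk, Y] ≥ γK | Y), which is similar to pr(Rk ≥ γK | Y), the generator of P̃k(γ). The R̂k are produced by ranking the R̄k, which is the same as ranking the expectation of the random variables used to produce the P*k(γ) or R̃k(γ).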

6.3 Approximate equivalence of P̃k(γ) and P*k(γ)

Theorem 5

Assume that … and that the posterior cumulative distribution function (cdf) of each θk is continuous and differentiable at G−1(γ). If Gk(· | Y) has a universally bounded second moment, then for K → ∞, P*k(γ) is equivalent to P̃k(γ).

See Section Appendix H.

Theorem 6

Assume that … and that the posterior cumulative distribution function (cdf) of each θk is continuous and differentiable at G−1(γ). Furthermore, assume that the empirical distribution function (edf) of the ζk converges to a probability distribution. If Gk(· | Y, ζ) has a universally bounded second moment, then for K → ∞, P*k(γ) is equivalent to P̃k(γ).

Proof

Regard ζk as part of the observed data, that is, apply Theorem 5 with (Yk, ζk) in place of Yk.

Theorems 5 and 6 imply that P*k(γ) is asymptotically optimal for L̃ and provide a loss function basis for the Normand et al. (1997) estimates.

6.4 A unifying score function

We provide a unified approach to loss function based ranking. To this end, we define a non-negative, nondecreasing scoring function S(P) : (0, 1) → [0, 1]. S(P) can be regarded as the score (reward) a unit receives if its percentile is P. It relates percentiles, P, to “consequences” S(P). These relations can help in eliciting an application-relevant loss function and in interpreting loss-function based percentiles. We use SEL for S(P) to produce percentiles and ranks, specifically:

$$\hat L_S(\mathbf{S}^{est}, \mathbf{P}) = \frac{1}{K}\sum_{k=1}^{K}\left(S^{est}_k - S(P_k)\right)^2. \tag{12}$$

The optimal Sk^est satisfy Sk^est = E[S(Pk) | Y], and we use the ranks and percentiles based on them.

When S(P) = aP + b, a > 0, i.e., the reward is linear in the percentile, taking Sk^est = S(Pk^est) gives L̂S = a²L̂, and so P̂k is associated with linear rewards. When S(P) = I{P > γ}, i.e., the reward depends only on whether the percentile of a unit is beyond the threshold γ, there is no constraint on the rankings of units inside each of the (above γ)/(below γ) groups. With this setting, there exist many optimizers and P̃k(γ) is one of them. For the two stage ranking estimator P̆k(γ), let S(P) = aP + I{P > γ}, so that Sk^est = aR̄k/(K + 1) + pr(Pk > γ | Y). When a is sufficiently close to zero, P̆k(γ) is optimal. More S(P) are given in Appendix I.
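The score-function view is easy to explore numerically, since the optimal estimates are posterior expectations E[S(Pk) | Y]. The sketch below assumes joint posterior draws and evaluates three scores: a linear reward (whose estimates are an increasing linear function of R̄k), the threshold reward I{P > γ} (giving pr(Pk > γ | Y)), and the two-stage score aP + I{P > γ}. The helper names and the choice a = 0.001 are hypothetical.

```python
import numpy as np


def score_estimates(theta_draws, score):
    """S_est_k = E[S(P_k) | Y], the minimizer of the posterior risk under (12)."""
    K = theta_draws.shape[1]
    P_draws = (np.argsort(np.argsort(theta_draws, axis=1), axis=1) + 1) / (K + 1)
    return score(P_draws).mean(axis=0)


gamma, a = 0.8, 1e-3


def linear_score(P):       # S(P) = 2P + 1: estimates are increasing in R_bar_k
    return 2.0 * P + 1.0


def threshold_score(P):    # S(P) = I{P > gamma}: estimates are pr(P_k > gamma | Y)
    return (P > gamma).astype(float)


def hybrid_score(P):       # S(P) = aP + I{P > gamma}, the two-stage score
    return a * P + (P > gamma)


rng = np.random.default_rng(4)
K = 10
post_mean = np.linspace(-1.0, 1.0, K)
post_sd = np.where(np.arange(K) % 2 == 0, 0.2, 1.5)
theta_draws = rng.normal(post_mean, post_sd, size=(4000, K))
S_lin = score_estimates(theta_draws, linear_score)
S_thr = score_estimates(theta_draws, threshold_score)
S_hyb = score_estimates(theta_draws, hybrid_score)
print(np.round(S_hyb, 4))
```

Because expectation is linear, the hybrid estimates are exactly a times the posterior mean percentile plus the exceedance probability, which is the decomposition used above for the two-stage estimator.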

7 Performance evaluations and comparisons

7.1 Posterior and pre-posterior performance evaluations

In a Bayesian model, an estimator’s performance can be evaluated by using the posterior distribution (data analytic evaluations) and by using the marginal distribution of the data (pre-posterior evaluations). For example, preposterior SEL performance is the expected posterior variance plus the expected squared posterior bias.

We provide evaluations relative to the loss function used to produce the estimate and other potentially relevant loss functions. For example, performance with respect to SEL should be computed for the SEL optimizer and for other estimators. These comparisons help to determine the efficiency of an estimator that optimizes one loss function when evaluated for other loss functions. Procedures that are robust to the choice of loss functions will be attractive in applications.

7.2 The (above γ)/(below γ) operating characteristic

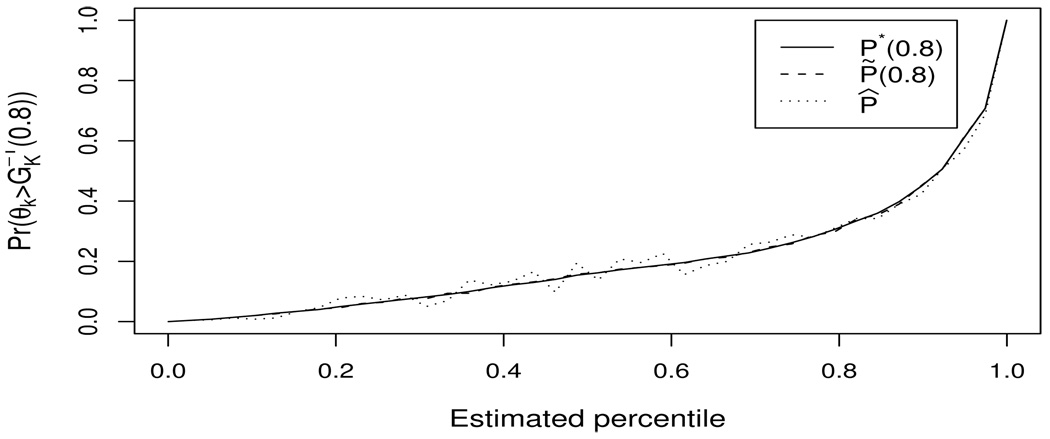

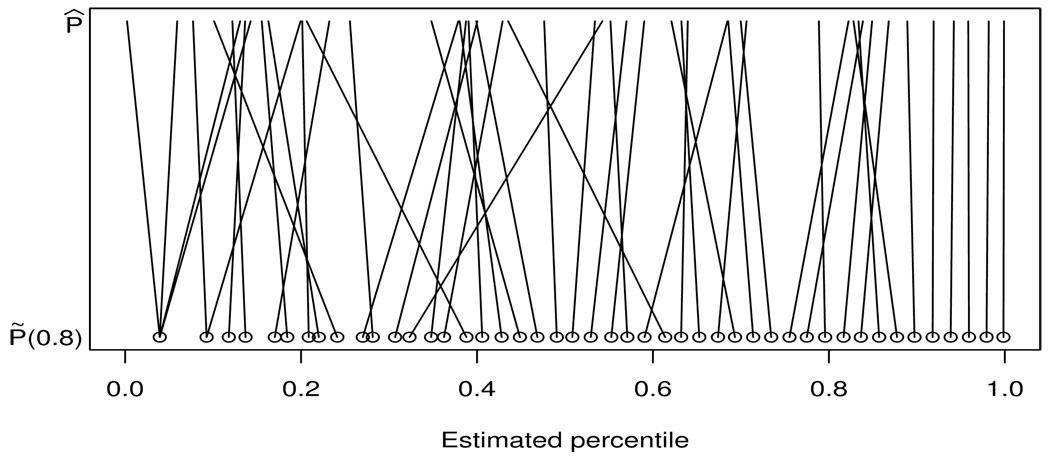

For (above γ)/(below γ) classification, plots of the posterior probability of exceeding γ versus estimated percentiles are informative (see Figure 4). Such plots can be summarized by the a posteriori operating characteristic (OC). For any percentiling method, define,

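$$\mathrm{OC}(\gamma) = \mathrm{pr}(P_k < \gamma \mid P^{est}_k > \gamma, \mathbf{Y}) + \mathrm{pr}(P_k > \gamma \mid P^{est}_k < \gamma, \mathbf{Y}) = \frac{\sum_k E[BA_k \mid \mathbf{Y}]}{(1-\gamma)K} + \frac{\sum_k E[AB_k \mid \mathbf{Y}]}{\gamma K} = \frac{E[L_{0/1}(\gamma) \mid \mathbf{Y}]}{2\gamma(1-\gamma)}, \tag{13}$$

with each probability computed for a unit selected at random from those with Pk^est above (respectively below) γ, and the last equality following from the identity Σk ABk = Σk BAk (both the true and the estimated percentiles place exactly (1 − γ)K units above γ). OC(γ) is the sum of two misclassification probabilities and is proportional to the posterior L0/1 risk, so it is optimized by P̃k(γ). It is normalized so that if the data provide no information on the θk, then for all γ, OC(γ) ≡ 1. Evaluating performance using only one of the probabilities, e.g., pr(Pk < γ | Pk^est > γ, Y), is analogous to computing the false discovery rate (Benjamini and Hochberg 1995; Storey 2002, 2003).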

Figure 4.

Pr(θk > 0.18 | Y) with X-axis percentiles determined separately by the three percentiling methods.
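The a posteriori OC can be estimated from joint posterior draws for any fixed set of percentile estimates. The sketch below assumes such draws and returns both the sum of the two average misclassification probabilities and the posterior expected L0/1; when exactly (1 − γ)K estimates exceed γ, the former equals the latter divided by 2γ(1 − γ). The exchangeable case illustrates the no-information value of 1. Function and variable names are hypothetical.

```python
import numpy as np


def operating_characteristic(theta_draws, P_est, gamma):
    """A posteriori OC(gamma) for fixed percentile estimates P_est, from joint posterior draws."""
    K = theta_draws.shape[1]
    P_draws = (np.argsort(np.argsort(theta_draws, axis=1), axis=1) + 1) / (K + 1)
    pr_above = (P_draws > gamma).mean(axis=0)              # pr(P_k > gamma | Y)
    est_above = np.asarray(P_est) > gamma
    false_above = np.sum(1.0 - pr_above[est_above])        # expected number of BA errors
    false_below = np.sum(pr_above[~est_above])             # expected number of AB errors
    oc = false_above / est_above.sum() + false_below / (~est_above).sum()
    expected_l01 = (false_above + false_below) / K         # posterior expected L_{0/1}(gamma)
    return oc, expected_l01


rng = np.random.default_rng(5)
K, gamma = 10, 0.8
theta_draws = rng.normal(np.linspace(-1.0, 1.0, K), 0.7, size=(4000, K))
P_est = np.arange(1, K + 1) / (K + 1)      # these posteriors are stochastically ordered
oc, el01 = operating_characteristic(theta_draws, P_est, gamma)

# No information: exchangeable posteriors give OC close to 1 for any estimate.
noinfo_draws = rng.normal(0.0, 1.0, size=(4000, K))
oc_noinfo, _ = operating_characteristic(noinfo_draws, P_est, gamma)
print(round(oc, 4), round(el01, 4), round(oc_noinfo, 4))
```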

7.3 Unit-specific performance

For loss functions that sum over unit-specific components, performance can also be evaluated for individual units and, in a frequentist evaluation, for individual θ vectors. These evaluations are in Section 9.3.

8 Simulation scenarios

We evaluate pre-posterior performance for the Gaussian sampling distribution with K = 200 using 2000 simulation replications. We compute pr(Rk = ℓ | Y) using independent-sample Monte Carlo with 2000 draws. All simulations are for loss functions with p = q = 2 and c = 1.

8.1 The Gaussian-Gaussian model

We evaluate estimators for the Gaussian/Gaussian, two-stage model with a Gaussian prior and Gaussian sampling distributions and allow for varying unit-specific variances. Without loss of generality we assume that the prior mean is µ = 0 and the prior variance is τ² = 1. Specifically,

$$\theta_k \overset{iid}{\sim} N(0, 1), \qquad Y_k \mid \theta_k \overset{ind}{\sim} N(\theta_k, \sigma_k^2).$$

This yields

$$\theta_k \mid Y_k \overset{ind}{\sim} N\left((1 - B_k)Y_k,\ (1 - B_k)\sigma_k^2\right),$$

where Bk = σk²/(σk² + 1). When the unit-specific variances σk² are all equal, the posterior distributions are stochastically ordered and all ranking methods we investigate are identical. Evaluation for this case provides a baseline performance with respect to the set of loss functions. In practice, the σk² can vary substantially and we evaluate this situation using two departures from the equal-variance case. In each case, the equal variance scenario is produced by rls = 1:

log uniform: Ordered, geometric sequences of the σk² with ratio of the largest σk² to the smallest, rls = σK²/σ1², and geometric mean gmv = (σ1²σ2² ⋯ σK²)^(1/K).

two clusters: The first half of the σk² equal σL²; for the second half, σk² = rls·σL². Here, σL² = rls^(−1/2), so that gmv = 1.

In both cases the variance sequence is monotone in k, but simulation results would be the same if the indices were permuted. These variance sequences (constant, uniform on the log scale, and clustered at the extremes of the range) triangulate possible patterns, though specific applications can, of course, have their unique features.
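A sketch of the simulation ingredients for one replicate, assuming the log-uniform sequence is spread symmetrically around log gmv on the log scale and the two clusters sit at gmv/√rls and gmv·√rls; these placements are our reading of the descriptions above, and the function names are hypothetical. The posterior uses the conjugate formulas with shrinkage factor Bk = σk²/(σk² + τ²), with τ² = 1 in the simulations.

```python
import numpy as np


def log_uniform_variances(K, rls, gmv=1.0):
    """Geometric sequence sigma_1^2 < ... < sigma_K^2 with max/min = rls and geometric mean gmv."""
    return gmv * rls ** np.linspace(-0.5, 0.5, K)


def two_cluster_variances(K, rls, gmv=1.0):
    """First half at gmv / sqrt(rls), second half rls times larger (K assumed even)."""
    small = gmv / np.sqrt(rls)
    return np.where(np.arange(K) < K // 2, small, small * rls)


def gaussian_posterior(Y, sigma2, tau2=1.0):
    """Posterior mean and variance of theta_k for a N(0, tau2) prior and N(theta_k, sigma2_k) data."""
    B = sigma2 / (sigma2 + tau2)                 # shrinkage factor B_k
    return (1.0 - B) * Y, (1.0 - B) * sigma2


# One simulated data set for the log-uniform pattern with K = 200, rls = 100, gmv = 1.
rng = np.random.default_rng(6)
K = 200
sigma2 = log_uniform_variances(K, rls=100.0)
theta = rng.normal(0.0, 1.0, size=K)
Y = rng.normal(theta, np.sqrt(sigma2))
post_mean, post_var = gaussian_posterior(Y, sigma2)
print(sigma2.min(), sigma2.max(), post_var.min(), post_var.max())
```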

8.2 A Mixture prior

This prior is a mixture of two Gaussian distributions with the mixture constrained to have mean 0 and variance 1:

where

We present results for ε = 0.1, Δ = 3.40, ξ² = 0.25, γ = 0.9 and compute the preposterior risk for estimators that are computed from the posterior produced by this mixture and for estimators that are based on a standard, Gaussian prior.

9 Simulation results

9.1 SEL for P̂k and estimated θ-based percentiles

Table 1 documents SEL (L̂) performance for P̂k, the optimal estimator, and for percentiled Yk (the MLE), percentiled posterior means E(θk | Y) and percentiled E(exp(θk) | Y) (the posterior mean of exp(θk)).

Table 1.

Simulated preposterior SEL (10000 × L̂) for gmv = 1.

| rls | P̂k | Percentiled PM of θk | Percentiled PM of exp(θk) | Percentiled Yk |
|---|---|---|---|---|
| 1 | 516 | 516 | 516 | 516 |
| 25 | 517 | 517 | 534 | 582 |
| 100 | 522 | 525 | 547 | 644 |

The posterior mean of exp(θk) is presented to assess performance for a monotone, non-linear transform of the target parameters. For rls = 1, the posterior distributions are stochastically ordered and the four sets of percentiles are identical, as is their performance. As rls increases, performance of Yk-derived percentiles degrades, those based on the posterior means of the θk are quite competitive with P̂k, but performance for percentiles based on the posterior mean of exp(θk) rapidly degrades. Results show that, though the posterior mean can perform well for some models and target parameters, in general it is not competitive with rank-based approaches.

9.2 Comparisons among loss function-based estimates

Table 2 reports results for P̂k, P̃k(γ) and the two-stage estimator P̆k(γ) under four loss functions and for the “log-uniform” variance pattern. For the “two-clusters” pattern, differences between estimators are modified relative to those for the log-uniform pattern, but preference relations are unchanged. For example, the L̂ risks are generally smaller for the “two-clusters” variance pattern than for the “log-uniform” pattern, but the reverse is true for L̃.

Table 2.

Simulated preposterior risk for gmv = 1. All values are 10000×(Loss). The first block is for the Gaussian-Gaussian model; the second for the Gaussian mixture prior assuming the mixture; the third for the Gaussian mixture prior, but with analysis based on a single Gaussian prior.

| Prior (analysis) | γ | rls | L0/1: P̂k | L0/1: P̃k(γ) | L̂: P̂k | L̂: P̃k(γ) | L̂: two-stage | L̃: P̂k | L̃: P̃k(γ) | L̃: two-stage | L‡: P̂k | L‡: P̃k(γ) | L‡: two-stage |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Gaussian | 0.5 | 1 | 2508 | 2511 | 517 | 518 | 517 | 104 | 105 | 104 | 336 | 337 | 336 |
| Gaussian | 0.5 | 25 | 2506 | 2508 | 519 | 524 | 519 | 98 | 96 | 98 | 340 | 335 | 340 |
| Gaussian | 0.5 | 100 | 2503 | 2503 | 521 | 530 | 521 | 93 | 90 | 93 | 342 | 334 | 342 |
| Gaussian | 0.6 | 100 | 2432 | 2422 | 522 | 537 | 523 | 91 | 87 | 91 | 324 | 316 | 327 |
| Gaussian | 0.8 | 25 | 1740 | 1717 | 517 | 558 | 517 | 67 | 59 | 67 | 175 | 170 | 181 |
| Gaussian | 0.8 | 100 | 1742 | 1689 | 523 | 595 | 523 | 71 | 57 | 71 | 178 | 170 | 189 |
| Gaussian | 0.9 | 1 | 1059 | 1058 | 515 | 520 | 515 | 30 | 30 | 30 | 73 | 73 | 73 |
| Gaussian | 0.9 | 25 | 1060 | 1032 | 518 | 609 | 519 | 37 | 29 | 37 | 75 | 72 | 81 |
| Gaussian | 0.9 | 100 | 1048 | 1005 | 523 | 673 | 523 | 43 | 29 | 42 | 77 | 70 | 86 |
| Mixture (mixture) | 0.8 | 1 | 1469 | 1471 | 565 | 567 | 565 | 54 | 54 | 54 | 150 | 150 | 150 |
| Mixture (mixture) | 0.8 | 25 | 1524 | 1494 | 566 | 606 | 567 | 59 | 51 | 59 | 161 | 158 | 168 |
| Mixture (mixture) | 0.9 | 1 | 782 | 782 | 565 | 575 | 565 | 14 | 14 | 14 | 42 | 42 | 42 |
| Mixture (mixture) | 0.9 | 25 | 823 | 783 | 564 | 699 | 564 | 23 | 14 | 23 | 51 | 48 | 58 |
| Mixture (Gaussian) | 0.8 | 1 | 1473 | 1470 | 565 | 566 | 565 | 54 | 54 | 54 | 150 | 150 | 150 |
| Mixture (Gaussian) | 0.8 | 25 | 1496 | 1493 | 567 | 615 | 567 | 58 | 52 | 59 | 159 | 158 | 168 |
| Mixture (Gaussian) | 0.9 | 1 | 782 | 782 | 565 | 570 | 565 | 14 | 14 | 14 | 42 | 42 | 42 |
| Mixture (Gaussian) | 0.9 | 25 | 788 | 783 | 565 | 664 | 564 | 22 | 14 | 23 | 50 | 45 | 58 |

When rls = 1, P̂k, P̃k(γ) and the two-stage estimator P̆k(γ) coincide, and so differences in the SEL results in the first and seventh rows quantify residual simulation variation and Monte Carlo uncertainty in computing the probabilities used in equation (10) to produce the P̃k(γ). Results for other values of rls show that under L̂, P̂k outperforms P̃k(γ) and P̆k(γ), as must be the case, since P̂k is optimal under SEL. Similarly, P̃k(γ) optimizes L0/1 and L̃, and for rls ≠ 1 outperforms its competitors. Though P̆k(γ) optimizes the convex combination wL̂ + (1 − w)L0/1 (see Section 5.5) for sufficiently small w, it performs relatively poorly for the seemingly related L‡; P̃k(γ) appears to dominate and P̂k performs well. The poor performance of P̆k(γ) shows that unit-specific combining of a misclassification penalty with squared-error loss is fundamentally different from using them in an overall convex combination.

Similar relations among the estimators hold for the two component Gaussian mixture prior and for a “frequentist scenario” with a fixed set of parameters and repeated sampling only from the Gaussian sampling distribution conditional on these parameters.

Results in Table 2 are based on gmv = 1. Relations among the estimators for other values of gmv are similar, but a look at extreme gmv is instructive. Results (not shown) indicate that for rls = 1, the risk associated with L0/1 is of the form a(gmv)γ(1 − γ), where a(gmv) is a constant depending only on the value of gmv. By identity (13), this implies that the expectation of OC(γ) is approximately constant. When gmv = 0, the data are fully informative, Yk ≡ θk and all risks are 0. When σk² → ∞ for all k, gmv = ∞ and the Yk provide no information on the θs nor on the Pk. Table 3 displays the preposterior risk for this no information case, with values providing an upper bound for results in Table 2.

Table 3.

Preposterior risk for rls = 1 when gmv = ∞. All values are 10000×Risk.

| γ | L0/1 | L̂ | L‡ | L̃ and L† |
|---|---|---|---|---|
| General γ | 20000γ(1−γ) | 1667 | 3333γ(1−γ) | 3333γ(1−γ)[γ³ + (1−γ)³] |
| 0.5 | 5000 | 1667 | 833 | 208 |
| 0.6 | 4800 | 1667 | 800 | 224 |
| 0.8 | 3200 | 1667 | 533 | 277 |
| 0.9 | 1800 | 1667 | 300 | 219 |
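The L0/1 column of Table 3 can be checked by simulation. With no information, the estimated percentiles behave like a random permutation of the true ones, so each of the (1 − γ)K truly-above units falls below γ with probability γ and, symmetrically, the expected unscaled L0/1 is 2γ(1 − γ) (3200 on the table's 10000× scale for γ = 0.8). The sketch assumes a uniformly random permutation as the no-information estimate; the function names are hypothetical.

```python
import numpy as np


def l01(P_true, P_est, gamma):
    """L_{0/1}(gamma): the proportion of units whose true and estimated percentiles straddle gamma."""
    AB = (P_true > gamma) & (P_est < gamma)
    BA = (P_true < gamma) & (P_est > gamma)
    return np.mean(AB | BA)


def no_information_l01(K, gamma, n_rep, seed=0):
    """Average L_{0/1} when the estimated percentiles are a random permutation of the true ones."""
    rng = np.random.default_rng(seed)
    P_true = np.arange(1, K + 1) / (K + 1)
    return float(np.mean([l01(P_true, rng.permutation(P_true), gamma) for _ in range(n_rep)]))


risk = no_information_l01(K=200, gamma=0.8, n_rep=2000)
print(10000 * risk, 10000 * 2 * 0.8 * 0.2)   # simulated vs. 10000 * 2 * gamma * (1 - gamma)
```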

Under L0/1, P̃k(γ) is optimal, and the difference between P̂k and P̃k(γ) depends on the magnitude of rls. That P̃k(γ) is only moderately better than P̂k under L̃ is due in part to our having considered only the case p = q = 2, c = 1, which makes L̃ very similar to L̂. For larger p and q there would be a more substantial difference.

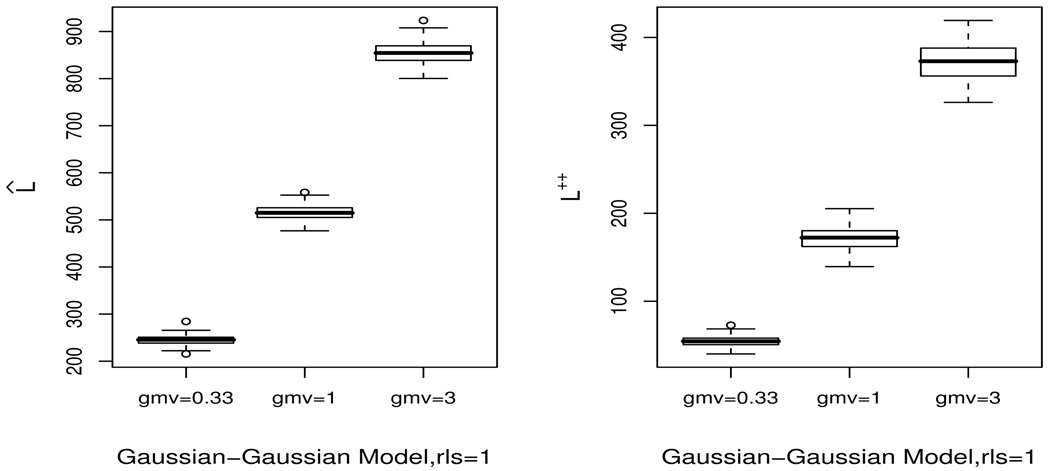

Figures 1–3 are based on the Gaussian-Gaussian model. Figure 1 displays the dependence of risk on gmv for the exchangeable model (rls = 1). As expected, risk increases with gmv. For rls = 1, expected unit-specific loss equals the overall average risk and so the box plots summarize the sampling distribution of unit-specific risk.

Figure 1.

Unit-specific, L̂ and L‡ risk classified by gmv for K = 200, γ = 0.8.

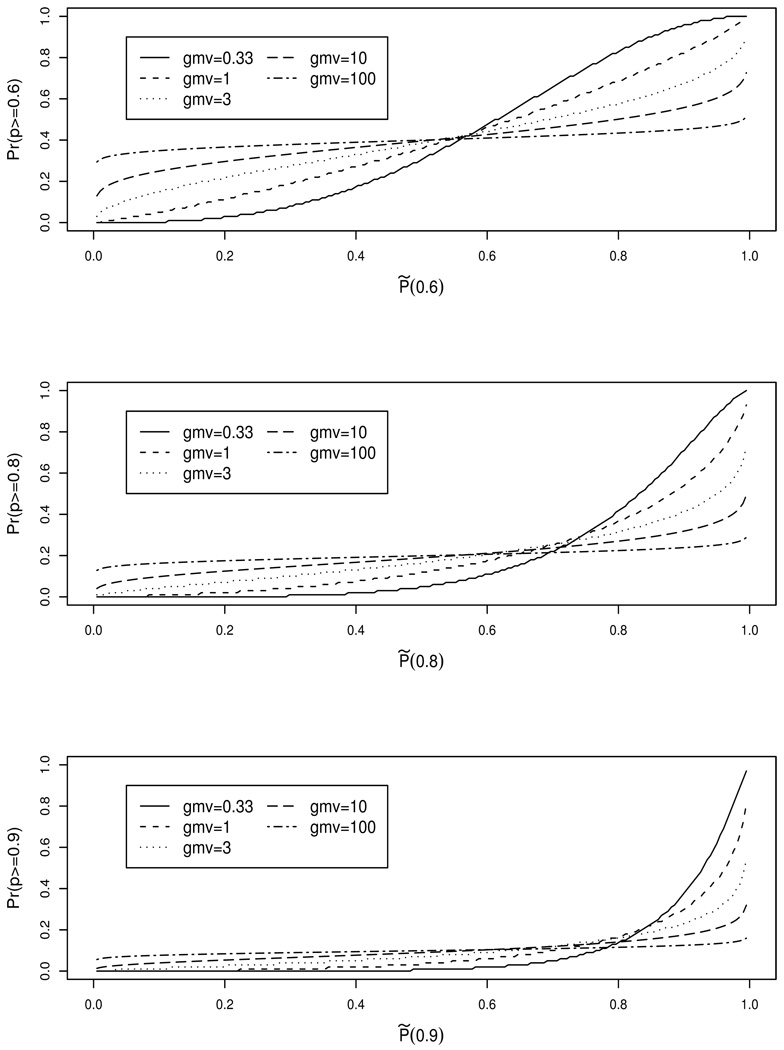

Figure 3.

Average posterior classification probabilities as a function of the optimally estimated percentiles for rls = 1, γ = (0.6, 0.8, 0.9).

9.3 Unit-specific performance

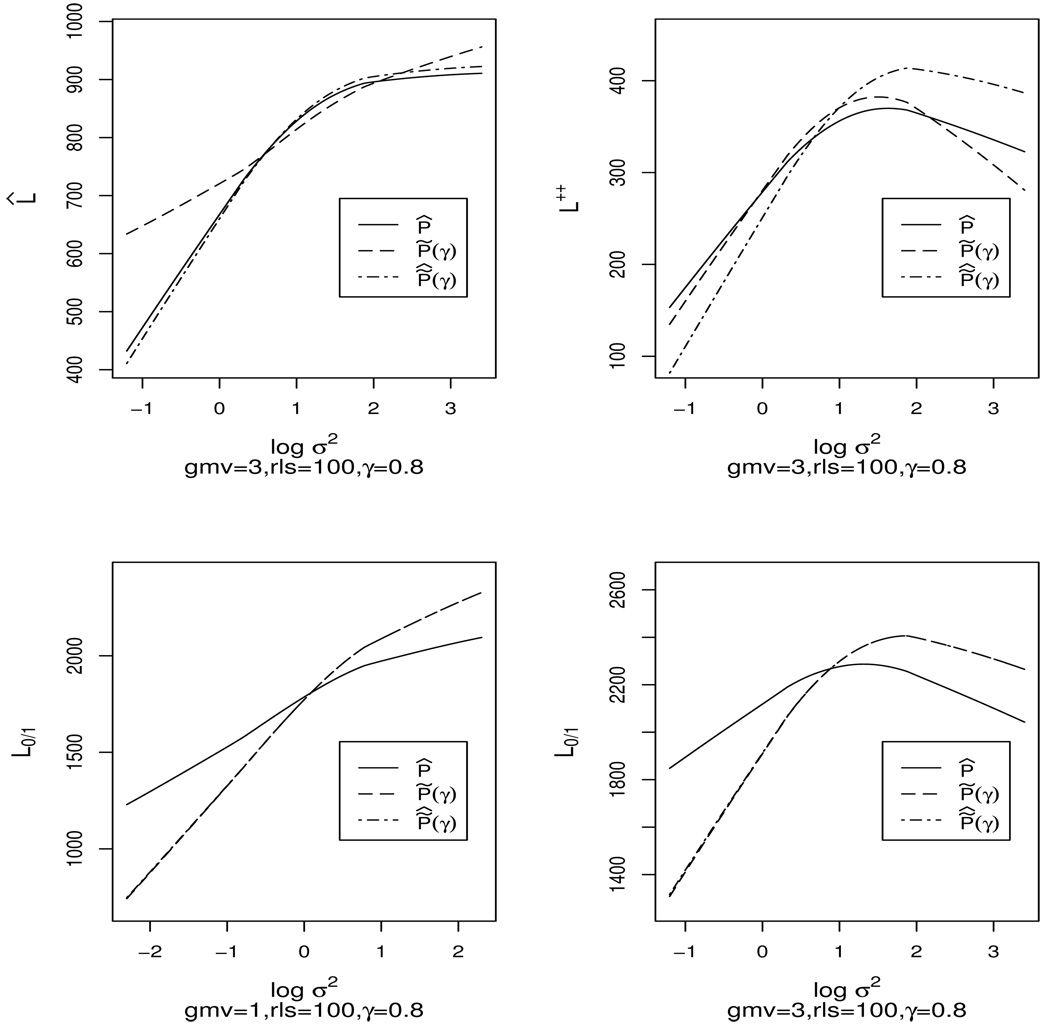

When rls = 1, pre-posterior risk is the same for all units. However, when rls > 1, the σk² form a geometric sequence and preposterior risk depends on the unit. We study this non-exchangeable situation by simulation. Figure 2 displays loess smoothed performance of P̂k, P̃k(γ) and the two-stage estimator P̆k(γ) for L0/1, L̂ and L‡ as a function of unit-specific variance for gmv = 1 and 3, rls = 100 and γ = 0.8. Results for L̂ (gmv = 3) and L0/1 (gmv = 1) are intuitive in that risk increases with increasing unit-specific variance. However, in the displays for L0/1 (gmv = 3) and for L‡, for all estimators the risk increases and then decreases as a function of σk². For gmv and rls sufficiently large, similar patterns hold for other γ-values, with the presence and location of a downturn depending on |γ − 0.5|.

Figure 2.

Loess smoothed, unit-specific performance of P̂k, P̃k(γ) and the two-stage estimator P̆k(γ) under L̂, L‡, and L0/1 as a function of unit-specific variance (σk²).

These apparent anomalies are explained as follows. If γ is near 1 (or, equivalently, near 0) and if the σk² differ sufficiently (rls ≫ 1), estimates for the high variance units perform better than for those with mid-level variance. This relation is due to the improved classification of high-variance units into (above γ)/(below γ) groups, with substantial shrinkage of the percentile estimates towards 0.5. For example, with γ = 0.8, a priori 80% of the percentiles should be below 0.8. Estimated percentiles for the high variance units are essentially guaranteed to be below 0.8 and so the classification error for the large-variance units converges to 0.20 as rls → ∞. Generally, low variance units have small misclassification probabilities, but percentiles for units with intermediate variances are not shrunken sufficiently toward 0.5 to produce a low L0/1.

9.4 Classification performance

As shown in the foregoing tables and by Liu et al. (2004) and Lockwood et al. (2002), even the optimal ranks and percentiles can perform poorly unless the data are very informative. Figure 3 displays average posterior classification probabilities as a function of the optimally estimated percentile for gmv = 0.33, 1, 10, 100 and γ = 0.6, 0.8, 0.9, when rls = 1. The pattern shown by the three panels should hold for other γ choices and we use the γ = 0.8 panel as the typical example for further comments. Discrimination improves with decreasing gmv, but even when gmv = 0.33 (the σk² are 1/3 of the prior variance), the model-based, posterior probability of Pk > 0.8 is only 0.42 for a unit with P̃k(0.8) = 0.8. For this probability to exceed 0.95 (i.e., to be reasonably certain that Pk > 0.80) requires that P̃k(0.8) > 0.97. It can be shown that as gmv → ∞ the plots converge to a horizontal line at (1 − γ) and that as gmv → 0 the plots converge to a step function that jumps from 0 to 1 at γ.

9.5 The Poisson-Gamma model

We conducted investigations analogous to all of the foregoing for the Poisson sampling distribution with a Gamma prior, with equal or unequal variances for the unit-specific MLEs (determined by the expected counts). Results are qualitatively and quantitatively very similar to those we report for the Gaussian sampling distribution.

10 Analysis of USRDS standardized mortality ratios

The United States Renal Data System (USRDS) uses provider-specific standardized mortality ratios (SMRs) as a quality indicator for its nearly 4000 dialysis centers (Lacson et al. 2001; End-Stage Renal Disease (ESRD) Network 2000; United States Renal Data System (USRDS) 2005). Under the Poisson likelihood (last line of model (14)), with Yk the observed and mk the expected deaths computed from a case-mix adjustment (Wolfe et al. 1992), the MLE is ρ̂k = Yk/mk, with variance ρk/mk. For the “typical” dialysis center ρk ≈ 1, so the mk control the variance of the MLEs. The observed mk range from near 0 to more than 100; the ratio of the largest to the smallest mk, which is analogous to the rls in the foregoing simulations, is around 258,000.
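Under the Poisson model Var(Yk) = ρk mk, so Var(Yk/mk) = ρk mk/mk² = ρk/mk, which is why centers with small mk have very noisy SMRs. A minimal sketch, assuming the mk are already available from the case-mix adjustment (not modeled here), computes the MLE with a plug-in variance and checks the variance formula by simulation. The function names and the example counts are hypothetical, and the Poisson sampler is hand-written because Python's standard library does not provide one.

```python
import math
import random


def smr_mle(observed, expected):
    """MLE rho_hat = Y / m and its plug-in variance rho_hat / m under Y ~ Poisson(rho * m)."""
    rho_hat = observed / expected
    return rho_hat, rho_hat / expected


def poisson_draw(lam, rng):
    """Knuth's multiplication method; adequate for the small means used here."""
    limit = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > limit:
        k += 1
        prod *= rng.random()
    return k


def simulated_smr_variance(rho, m, n_sim=20_000, seed=1):
    """Monte Carlo variance of Y / m with Y ~ Poisson(rho * m); should be near rho / m."""
    rng = random.Random(seed)
    smrs = [poisson_draw(rho * m, rng) / m for _ in range(n_sim)]
    mean = sum(smrs) / n_sim
    return sum((s - mean) ** 2 for s in smrs) / (n_sim - 1)


print(smr_mle(12, 10))                 # hypothetical center: 12 observed, 10 expected deaths
print(simulated_smr_variance(1.0, 4))  # compare with rho / m = 0.25
```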

In this “profiling” application, the loss function should reflect the end use of the ranks or percentiles. For example, suppose that the following monetary reward (increased reimbursement) and penalty (increased scrutiny) system is in place:

A provider either does or does not receive the reward depending on whether its percentile is or is not beyond (for SMRs, below) a γ threshold.

Providers that do get rewards receive varying amounts depending on their position among those receiving rewards.

Providers not receiving rewards undergo increased scrutiny from an oversight committee.

The distance from the threshold γ is used to monetize rewards or intensify scrutiny.

Loss function L̃ embodies this system with the values of p, q and c controlling the award/penalty differences within the (above γ)/(below γ) groups. If rewarded providers all get the same amount, then L0/1(γ) can be used. Alternatively, rewards and scrutiny can depend on the posterior probability of exceeding or falling below γ, with both P̃k(γ) and optimizing the evaluation.

Liu et al. (2004) analyzed the 1998 data, and Lin et al. (2004) extended these analyses to 1998–2001 for the 3173 centers with complete data, using an autoregressive model. We illustrate the new loss functions and performance measures using the 1998 data and the model,

| (14) |

For these data, . Table 4 gives the posterior risks. For all loss functions investigated, MLE-based ranks have the poorest performance, while methods based on the posterior distributions generally perform well. As Theorem 6 indicates, P̃k(γ) and have almost identical risk.

Table 4.

Posterior, loss function risk for different ranking methods using the USRDS 1998 data. All values are 10000×(Loss). and are percentiles based on the and the Yk respectively.

| P̂k | P̃k(γ) | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| L̂ | 741 | 872 | 740 | 769 | 741 | 769 | ||||

| L̃ | 108 | 164 | 107 | 100 | 107 | 100 | ||||

| L† | 97 | 130 | 96 | 102 | 102 | 102 | ||||

| L‡ | 281 | 401 | 279 | 275 | 285 | 276 | ||||

| L0/1 | 2001 | 2062 | 2006 | 1992 | 1991 | 1995 |

Figure 4 displays pr(θk > 0.18 | Y) with X-axis percentiles determined separately by the three percentiling methods. As shown by Theorem 6, the and P̃k(0.8) curves are monotone and approximately equal; the P̂k curve is not monotone, but is close to the other curves. The OC(0.8) value for and P̃k(0.8) is 0.64 (the optimal classification produces an error rate that is 64% of that for the no information case) and for P̂k is 0.65, showing that for γ = 0.8, using P̂k to classify is nearly fully efficient. Figure 4 also shows that for centers classified in the top 10%, the probability that they are truly in the top 20% (γ = 0.8) can be as low as 0.45. Lin et al. (2004) showed that, by using data from 1998–2001, this probability increases to 0.57. Evaluators should take this relatively poor classification performance into account by tempering rewards and scrutiny.

Figure 5 displays the relation between P̃k(0.8) and P̂k for 50 dialysis centers spaced uniformly according to P̃k(0.8). Because P̃k(0.8) is based on pr(Pk > 0.8 | Y) estimated from MCMC samples, ties occur when this probability is close to zero: of the 3173 dialysis centers, 249 have an estimated exceedance probability of 0, and all are assigned the percentile 125/3174 = 0.039. Though P̂k is highly efficient, some of its percentiles differ substantially from the optimal ones. As further evidence of this discrepancy, of the 635 dialysis centers classified by P̃k(0.8) in the top 20%, 39 are not so classified by P̂k, most of them near the γ = 0.8 threshold. Estimated percentiles are very similar for centers classified in the top 10%.
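The reported tied percentile is what average (mid) ranks produce: the 249 tied centers share ranks 1 through 249, whose average is (1 + 249)/2 = 125, and dividing by K + 1 = 3174 gives 125/3174 ≈ 0.039. The sketch below assumes ties are resolved this way, which is consistent with the reported value; only the counts (3173 centers, 249 zero probabilities) come from the text, and the nonzero probabilities are made up. The function name is hypothetical.

```python
def midrank_percentiles(scores):
    """Percentiles R_k / (K + 1), where tied scores share their average rank."""
    K = len(scores)
    order = sorted(range(K), key=lambda k: scores[k])
    ranks = [0.0] * K
    start = 0
    while start < K:
        end = start
        # Extend the run of tied scores that begins at position `start`.
        while end + 1 < K and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        avg_rank = (start + 1 + end + 1) / 2  # mean of ranks start+1 .. end+1
        for pos in range(start, end + 1):
            ranks[order[pos]] = avg_rank
        start = end + 1
    return [r / (K + 1) for r in ranks]


# Hypothetical exceedance probabilities: 249 exact zeros, the rest distinct and positive.
probs = [0.0] * 249 + [(j + 1) / 10_000 for j in range(3173 - 249)]
pct = midrank_percentiles(probs)
print(round(pct[0], 3))  # 0.039
```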

Figure 5.

Circles represent 50 USRDS dialysis centers evenly spread across percentiles determined by P̃k(0.8) using 1998 SMR data. Lines connect P̃k(0.8) and P̂k. Ties appear in the lower percentile area.

11 Discussion

Effective ranking or percentiling should be based on a loss function computed from the estimated and true ranks, or should be asymptotically equivalent to such loss-function-based estimates. Doing so produces optimal or near-optimal performance and ensures desirable properties such as invariance under monotone transformations. In general, percentiles based on MLEs or on posterior means of the target parameter can perform poorly, as can percentiles based on hypothesis tests.

Our performance evaluations are primarily for the fully parametric model with a Gaussian sampling distribution, though we do investigate departures from the Gaussian prior. Simulations for the Poisson/Gamma model produce relative performance very similar to that for the Gaussian. The P̂k that optimize L̂ (SEL) are “general purpose,” with no explicit attention to optimizing the (above γ)/(below γ) classification. The optimal (above γ)/(below γ) ranks are asymptotically equivalent to the “exceedance probability” procedure proposed in Normand et al. (1997). This near-equivalence provides insight into goals and a route to efficient computation.

We report loss function comparisons and plots based on unit-specific performance. These can be augmented by bivariate and multivariate summaries of properties, for example pair-wise posterior distributions or pair-wise operating characteristics.

When posterior distributions are not stochastically ordered, so that the choice of ranking method does matter, our simulations show that although P̃k(γ) and are optimal for their respective loss functions and outperform P̂k, P̂k performs well for a broad range of γ values. In contrast, P̂k(γ) can have poor SEL performance. However, in some scenarios the benefit of using an optimal procedure is considerable, so the choice of estimator should be guided by the inferential goals.

Performance evaluations for three-level models with a hyper-prior and robust analyses based on the non-parametric maximum likelihood prior or a fully Bayesian nonparametric prior (Paddock et al. 2006) showed that SEL-optimal ranks perform well over a wide range of prior specifications.

Other loss functions and estimates can be considered. Weighted combinations of several loss functions can be used to broaden the class of loss functions. If an application-relevant loss function cannot be optimized, our evaluations provide a framework for comparing candidate estimators. Our scoring function approach can help practitioners elicit a meaningful loss function with an intuitive interpretation.

Though there is a wide variety of candidate loss functions and, thereby, of candidate estimated percentiles, our investigations show that in most applications one can choose between P̂k and (equivalently, P̃k(γ)). The P̂k are general purpose and are recommended when the full spectrum of percentiles is important, for example for identifying units as low, medium or high performers. This is the case in educational assessments: schools and school districts want to track their performance over time irrespective of whether they are low, high or in the middle. The focus on a specific (above γ)/(below γ) cut point and are recommended in situations where identifying one extreme is the dominant goal. Selection of the most differentially expressed genes, with γ chosen to deliver a manageable number for further analysis, is a prime example.

Whatever percentiling method is used, plots such as Figure 4 can be constructed with those percentiles on the X-axis. In general, the plot will not be monotone unless the are used, but use of the P̂k produces a nearly monotone plot and very good OC(γ) performance. Therefore, unless there is a compelling reason to optimize relative to a specific (above γ)/(below γ) cut point, the P̂k are preferred.

Importantly, as do Liu et al. (2004) and Lockwood et al. (2002), we show that unless data are highly informative, even the optimal estimates can perform poorly. It is therefore important to select estimates suited to the inferential goal, especially when performance differences between estimators are large. Data-analytic performance summaries, such as SEL, OC(γ), and plots like Figure 3 and Figure 4, should accompany any analysis.

Acknowledgments

This work was supported by grant 1-R01-DK61662 from the U.S. NIH, National Institute of Diabetes, Digestive and Kidney Diseases. The authors are grateful to the editors and referees for helpful comments.

Appendix

Appendix A Optimizing weighted squared error loss (WSEL)

Theorem 7

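Under weighted squared error loss,

$$\mathrm{WSEL}(\mathbf{R}^{\mathrm{est}}, \mathbf{R}) = \frac{1}{K}\sum_{k=1}^{K} w_k \left(R^{\mathrm{est}}_k - R_k\right)^2, \tag{15}$$

the optimal rank estimates are the posterior mean ranks R̄k = E(Rk | Y). To see this (we drop conditioning on Y), note that

$$E\left[\frac{1}{K}\sum_{k=1}^{K} w_k \left(R^{\mathrm{est}}_k - R_k\right)^2\right] = \frac{1}{K}\sum_{k=1}^{K} w_k \left(R^{\mathrm{est}}_k - \bar R_k\right)^2 + \frac{1}{K}\sum_{k=1}^{K} w_k \operatorname{Var}(R_k).$$

Only the first term depends on the estimates. Thus, the R̄k are optimal.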

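When all wk ≡ w, R̂k = rank(R̄k) optimizes (15) subject to the estimated ranks exhausting the integers (1, …, K). To see this, suppose 0 ≤ E(Ri) = mi ≤ E(Rj) = mj and ri < rj. Then

$$\left[(r_j - m_i)^2 + (r_i - m_j)^2\right] - \left[(r_i - m_i)^2 + (r_j - m_j)^2\right] = 2(r_j - r_i)(m_j - m_i) \ge 0,$$

so assigning the smaller integer rank to the unit with the smaller posterior mean rank never increases the loss, and the R̂k are optimal.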
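Both quantities are straightforward to approximate from posterior draws: rank the units within each draw, average these ranks to estimate R̄k = E(Rk | Y), and then rank the R̄k to obtain the integer R̂k. A minimal sketch, assuming the draws are available as a list of K-vectors (for example from MCMC); the function names and the Gaussian posteriors in the usage lines are hypothetical.

```python
import random


def ranks_of(values):
    """Integer ranks 1..K (1 = smallest); ties broken by index (stable sort)."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0] * len(values)
    for position, k in enumerate(order, start=1):
        ranks[k] = position
    return ranks


def sel_optimal_ranks(draws):
    """From S posterior draws of (theta_1, ..., theta_K), return (Rbar, Rhat):
    Monte Carlo posterior mean ranks and Rhat = rank(Rbar)."""
    S, K = len(draws), len(draws[0])
    totals = [0] * K
    for theta in draws:
        for k, r in enumerate(ranks_of(theta)):
            totals[k] += r
    rbar = [t / S for t in totals]
    return rbar, ranks_of(rbar)


# Hypothetical posteriors: theta_k | Y ~ N(mean_k, sd_k), sampled independently.
rng = random.Random(2024)
means = [0.0, 0.4, -0.3, 1.2, 0.5]
sds = [0.2, 1.5, 0.3, 0.4, 0.1]
draws = [[rng.gauss(m, s) for m, s in zip(means, sds)] for _ in range(4000)]
rbar, rhat = sel_optimal_ranks(draws)
print([round(r, 2) for r in rbar], rhat)
```

Ties among the R̄k are broken by unit index here; by the inequality above, swapping the integer ranks of two units with equal R̄k leaves the loss unchanged, so the tie-breaking rule does not matter.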

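For general wk there is no closed-form solution, but a sorting-based algorithm guides the optimization. With current integer ranks ri and rj, switching units i and j reduces the expected loss (15) if and only if

$$w_i \left(r_i - \bar R_i\right)^2 + w_j \left(r_j - \bar R_j\right)^2 > w_i \left(r_j - \bar R_i\right)^2 + w_j \left(r_i - \bar R_j\right)^2. \tag{16}$$

When the weights are equal, (16) reduces to the inequality above, so reversing any two estimated ranks that do not align with the R̄k reduces the squared error.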

Theorem 8

Start from any initial ranks and implement the recursion: If inequality (16) is satisfied, switch the position of unit i and unit j, i, j = 1,…, K. The unique fixed point will minimize weighted squared error loss (15).

Since each switch decreases the expected loss and there are at most K! possible rank assignments, a fixed point is reached after finitely many switches. At the fixed point, no (i, j) pair satisfies inequality (16), and so the fixed point gives the WSEL minimum.

In the standard sorting problem, the quantities to be sorted do not depend on the current positions of the units, whereas the quantity in (16) does. For this reason, the algorithm can converge very slowly: after units i and j have been compared and ordered, if unit i is then compared with some other unit and a switch occurs, unit i must be compared with unit j again. This pairwise-switch optimization algorithm is therefore computationally impractical.
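The switch recursion of Theorem 8 can be sketched directly. This is an illustration rather than an efficient implementation: the function names are hypothetical, the sweep order over pairs is an arbitrary choice, and the R̄k and weights in the usage lines are made up. Each accepted switch lowers the expected loss by more than a small tolerance, so the loop terminates; as noted above, the repeated comparisons make it slow for large K.

```python
from itertools import combinations


def expected_wsel(ranks, rbar, weights):
    """Expected WSEL up to an additive constant: sum_k w_k (r_k - Rbar_k)^2."""
    return sum(w * (r - m) ** 2 for r, m, w in zip(ranks, rbar, weights))


def pairwise_switch(rbar, weights, initial=None, tol=1e-12):
    """Swap the ranks of units i and j whenever inequality (16) holds,
    i.e. whenever the swap lowers the weighted loss, until a full sweep
    over all pairs makes no swap."""
    K = len(rbar)
    ranks = list(initial) if initial is not None else list(range(1, K + 1))
    changed = True
    while changed:
        changed = False
        for i, j in combinations(range(K), 2):
            ri, rj = ranks[i], ranks[j]
            keep = weights[i] * (ri - rbar[i]) ** 2 + weights[j] * (rj - rbar[j]) ** 2
            swap = weights[i] * (rj - rbar[i]) ** 2 + weights[j] * (ri - rbar[j]) ** 2
            if keep - swap > tol:  # inequality (16): switching strictly reduces the loss
                ranks[i], ranks[j] = rj, ri
                changed = True
    return ranks


rbar = [3.2, 1.5, 2.7, 4.9, 1.1]  # hypothetical posterior mean ranks
print(pairwise_switch(rbar, [1.0] * 5))  # [4, 2, 3, 5, 1]
print(pairwise_switch(rbar, [1.0, 5.0, 1.0, 1.0, 0.2]))
```

With equal weights the recursion returns rank(R̄k), as the equal-weight argument requires.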

Appendix B Optimizing L0/1

Proof of Theorem 1

Rewrite the loss function as a function of the number of units that are not classified in the top (1 − γ)K but should have been. Then, , where A is the set of indices of the units classified in the top group and T is the true set of indices for which rank(θk) > γK. We need to maximize the expected number of correctly classified coordinates:

To optimize L0/1, compute pr(Pk > γ | Y) for each θk, rank these probabilities and assign the units with the (1 − γ)K largest values to the (above γ) group; this produces the optimal (above γ)/(below γ) classification. The computation can be time-consuming but is readily implemented by Monte Carlo.

The P̂k(γ) optimize L0/1. The optimizer is not unique, because L0/1 depends only on the (above γ)/(below γ) categorization and not on the ordering within groups. For example, permuting the ranks of the units classified in A, or permuting the ranks of those in Aᶜ, yields the same posterior risk for L0/1.
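The recipe above is easy to implement by Monte Carlo. A minimal sketch, assuming posterior draws of (θ1, …, θK) are available and that Pk = Rk/(K + 1) with rank 1 for the smallest θ; the function names and the Gaussian draws in the usage lines are hypothetical. The size of the (above γ) group is taken as the number of integer ranks whose percentile exceeds γ, which equals (1 − γ)K whenever that is an integer.

```python
import random


def exceedance_probs(draws, gamma):
    """Monte Carlo estimate of pr(P_k > gamma | Y), with P_k = R_k / (K + 1)
    and R_k the rank (1 = smallest) of theta_k within a posterior draw."""
    S, K = len(draws), len(draws[0])
    hits = [0] * K
    for theta in draws:
        order = sorted(range(K), key=lambda k: theta[k])
        for rank, k in enumerate(order, start=1):
            if rank / (K + 1) > gamma:
                hits[k] += 1
    return [h / S for h in hits]


def l01_classification(draws, gamma):
    """(above gamma) set: the units with the largest exceedance probabilities,
    as many as there are integer ranks whose percentile exceeds gamma."""
    K = len(draws[0])
    probs = exceedance_probs(draws, gamma)
    n_above = sum(1 for rank in range(1, K + 1) if rank / (K + 1) > gamma)
    by_prob = sorted(range(K), key=lambda k: probs[k], reverse=True)
    return set(by_prob[:n_above]), probs


# Hypothetical posterior draws for K = 10 units.
rng = random.Random(7)
means = [0.1 * k for k in range(10)]
draws = [[rng.gauss(m, 0.5) for m in means] for _ in range(2000)]
above, probs = l01_classification(draws, 0.8)
print(sorted(above), [round(p, 2) for p in probs])
```

Only set membership enters L0/1, so the order in which units are listed within either group does not affect the posterior risk.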

Appendix C Optimizing L̃

Lemma 1

If a1 + a2 ≥ 0 and b1 ≤ b2, then

Lemma 2

Rearrangement Inequality (Hardy et al. 1967): If a1 ≤ a2 ≤ … ≤ an and b1 ≤ b2 ≤ … ≤ bn, and b(1), b(2), …, b(n) is a permutation of b1, b2, …, bn, then

$$\sum_{i=1}^{n} a_i b_{n+1-i} \;\le\; \sum_{i=1}^{n} a_i b_{(i)} \;\le\; \sum_{i=1}^{n} a_i b_i.$$

For n = 2 we use the ranking inequality:

$$(a_2 - a_1)(b_2 - b_1) \ge 0 \quad\Longleftrightarrow\quad a_1 b_2 + a_2 b_1 \le a_1 b_1 + a_2 b_2.$$

For n > 2, the n! possible sums of products attain a minimum and a maximum. By the result for n = 2, a necessary condition for the sum to attain the minimum is that, for any pair of indices (i1, i2), the pairs $(a_{i_1}, a_{i_2})$ and $(b_{(i_1)}, b_{(i_2)})$ are in opposite orders; to attain the maximum, they must be in the same order. Therefore, except in the trivial cases where there are ties within {ai} or {bi}, $\sum_i a_i b_{n+1-i}$ is the only candidate to attain the minimum and $\sum_i a_i b_i$ is the only candidate to attain the maximum.

Proof of Theorem 2. Denote by R(i) the rank random variables for units whose ranks are estimated as i. Then,

For optimum ranking, the following conditions are necessary:

By Lemma 1, for any (i1, i2) satisfying 1 ≤ i1 ≤ [γ(K + 1)] and [γ(K + 1)] + 1 ≤ i2 ≤ K, it is required that pr(R(i1) ≥ γ(K + 1)) ≤ pr(R(i2) ≥ γ(K + 1)). To satisfy this condition, divide the units into two groups by placing the units with the (1 − γ)K largest values of pr(Rk ≥ γ(K + 1)) in the (above γ) group.

By Lemma 2, for the set {k : Rk = R(i), i = 1, …, [γ(K + 1)]}, since $|\gamma(K+1) - i|^p$ is a decreasing function of i, we require that pr(R(i1) ≥ γ(K + 1)) ≥ pr(R(i2) ≥ γ(K + 1)) if i1 > i2. Therefore, for the units with ranks (1, …, γK), the ranks should be determined by ranking the pr(Rk ≥ γ(K + 1)).

For the set {k : Rk = R(i), i = [γ(K + 1)] + 1, …, K}, since $|i - \gamma(K+1)|^q$ is an increasing function of i, we require that pr(R(i1) ≥ γ(K + 1)) ≥ pr(R(i2) ≥ γ(K + 1)) if i1 > i2. Therefore, for the units with ranks (γK + 1, …, K), the ranks should be determined by ranking the pr(Rk ≥ γ(K + 1)).

These conditions imply that the R̃k(γ) (equivalently, the P̃k(γ)) are optimal. By the proof of Lemma 2, the optimizer is not unique when there are ties in pr(Rk ≥ γ(K + 1)).

Appendix D Optimization procedure for L†

As in the proof of Theorem 2, we begin with a necessary condition for optimization. Denote by R(i1), R(i2) the rank random variables for units whose ranks are estimated as i1, i2, where i1 < γ(K + 1), i2 > γ(K + 1). Let,

For the index selection to be optimal,

The following is equivalent to the foregoing:

Therefore, with pk = pr(Rk ≥ γ(K + 1)) the optimal ranks split the θs into a lower fraction and an upper fraction by ranking the quantity,

This result is useful and differs from the WSEL result in Appendix A in that the quantity to be ranked depends only on the unit index k, not on the current positions of the units. However, as for L0/1, optimizing L† does not induce an optimal ordering within the two groups. A second-stage loss, for example SEL, can be imposed within the two groups.

Appendix E Optimizing L‡

As for WSEL (Appendix A), a pairwise-switch algorithm is computationally challenging, because the decision to switch a pair of units depends on their relative positions and on their estimated ranks; thus, in each iteration all pairwise relations have to be checked. We have not identified a general representation or an efficient algorithm for the optimal ranks. However, we have established the following relation among L†, L̃ and L‡. When a unit is misclassified, its true and estimated percentiles lie on opposite sides of γ, so γ lies between them. Equivalently, for such a unit the distance between the true and estimated percentiles is the sum of the distance from the true percentile to γ and the distance from γ to the estimated percentile.

Now, suppose c > 0, p ≥ 1, q ≥ 1 and let m = max(p, q). Then, using the inequality $2^{1-m} \le a^m + (1-a)^m \le 1$ for 0 ≤ a ≤ 1 and m ≥ 1, we have (L̃ + L†) ≤ L‡ ≤ $2^{m-1}$(L̃ + L†). Specifically, if p = q = 1, L‡ = L̃ + L†; if p = q = 2, then (L̃ + L†) ≤ L‡ ≤ 2(L̃ + L†). Similarly, when c > 0, 0 < p ≤ 1 and 0 < q ≤ 1, the inequality reverses to $1 \le a^m + (1-a)^m \le 2^{1-m}$, and with m = min(p, q) we have (L̃ + L†) ≥ L‡ ≥ $2^{m-1}$(L̃ + L†). Therefore, L̃ and L† can be used to control L‡.
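Both the elementary inequality and the per-unit bound it implies can be checked numerically. The sketch assumes, as the argument above requires, that for a misclassified unit the distance entering L‡ is the sum x + y of the two nonnegative distances entering L̃ and L†, so that a = x/(x + y). The helper names are hypothetical, and a numerical check on random inputs illustrates, but does not prove, the bounds.

```python
import random


def ratio_range(m, n_grid=1001):
    """Min and max of a**m + (1 - a)**m over an even grid of a in [0, 1] (m > 0)."""
    grid = [i / (n_grid - 1) for i in range(n_grid)]
    values = [a ** m + (1 - a) ** m for a in grid]
    return min(values), max(values)


def check_split_bound(m, n_trials=10_000, seed=0):
    """For random x, y >= 0, check that (x + y)**m lies between
    x**m + y**m and 2**(m - 1) * (x**m + y**m); which of the two is the
    lower bound depends on whether m >= 1 or 0 < m <= 1."""
    rng = random.Random(seed)
    for _ in range(n_trials):
        x, y = rng.random(), rng.random()
        split, whole = x ** m + y ** m, (x + y) ** m
        lo, hi = sorted((split, 2 ** (m - 1) * split))
        if not (lo - 1e-12 <= whole <= hi + 1e-12):
            return False
    return True


print(ratio_range(2.0), ratio_range(0.5))
print(all(check_split_bound(m) for m in (0.3, 0.5, 1.0, 1.5, 2.0, 3.0)))
```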

Appendix F Proof of Theorem 3

Since the (above γ)/(below γ) groups are formed by P̃k(γ), minimizes L0/1. For constrained SEL minimization we prove the more general result that, for any (above γ)/(below γ) categorization, ordering within the groups by P̂k produces the constrained solution. To see this, without loss of generality, assume that coordinates (1, …, γK) are in the (below γ) group and (γK + 1, …, K) are in the (above γ) group. As in Appendix A,

Nothing can be done to reduce the variance terms. The summed squared bias partitions into,

which must be minimized subject to the constraints that . We deal only with the (below γ) group; the (above γ) group is handled in the same manner. Without loss of generality assume that R̄1 < R̄2 < … < R̄γK and compare SEL for to any other assignment. It is straightforward to show that switching any pair that does not follow the R̄k order reduces SEL. Iterating this and noting that the R̂k = rank(R̄k) produces the result.

Appendix G Proof of Theorem 4

Recall that for a positive, integer-valued random variable R the expected value can be computed as a sum of one minus the cdf, $E(R) = \sum_{\nu \ge 1} \mathrm{pr}(R \ge \nu)$, so

$$\bar R_k = E(R_k \mid \mathbf{Y}) = \sum_{\nu=1}^{K} \mathrm{pr}(R_k \ge \nu \mid \mathbf{Y}). \tag{17}$$

Relation (17) can be used to show that when the posterior distributions are stochastically ordered, R̂k ≡ R̃k(γ): the ordering of the units by pr(Rk ≥ ν | Y) is then the same for every ν, and hence for every γ, and by (17) the R̄k inherit this ordering.
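Relation (17) and its consequence can be checked with exact arithmetic on a small example. The sketch assumes a posterior rank distribution is given as a dictionary from rank to probability; the two distributions and the function names are hypothetical. If one unit's tail probabilities dominate another's at every ν, (17) forces its posterior mean rank to be at least as large, which is the mechanism behind the stochastic-ordering argument.

```python
from fractions import Fraction


def tail_prob(pmf, nu):
    """pr(R >= nu) for a rank distribution given as {rank: probability}."""
    return sum(p for r, p in pmf.items() if r >= nu)


def mean_rank(pmf):
    """E(R) computed directly from the pmf."""
    return sum(r * p for r, p in pmf.items())


def mean_rank_from_tails(pmf, K):
    """E(R) via relation (17): the sum over nu = 1..K of pr(R >= nu)."""
    return sum(tail_prob(pmf, nu) for nu in range(1, K + 1))


def tail_dominates(pmf_a, pmf_b, K):
    """True if pr(R_a >= nu) >= pr(R_b >= nu) for every nu = 1..K."""
    return all(tail_prob(pmf_a, nu) >= tail_prob(pmf_b, nu) for nu in range(1, K + 1))


# Hypothetical posterior rank distributions for two units with K = 3.
unit_a = {1: Fraction(1, 6), 2: Fraction(1, 3), 3: Fraction(1, 2)}
unit_b = {1: Fraction(1, 2), 2: Fraction(1, 3), 3: Fraction(1, 6)}
print(mean_rank(unit_a), mean_rank_from_tails(unit_a, 3))  # 7/3 7/3
print(tail_dominates(unit_a, unit_b, 3), mean_rank(unit_b))  # True 5/3
```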

Appendix H Proof of Theorem 5

In this proof we use YK rather than Y to stress that the length of Y changes as K goes to infinity. For , we prove: as , where Pk is the true percentile of θk and YK is the vector (Y1, Y2, …, YK).

The posterior independence of the θk is straightforward. Denote θ = (θ1, …, θk, …, θK) and θ(−k) = (θ1, …, θk−1, θk+1, …, θK), where . Let θ(γ) = θ(i,K) be the γth quantile of θ, if , where θ(i,K) is the ith smallest value of θ. Correspondingly, is the γth quantile of θ(−k). We also denote by θ(i−1,K−1) the (i − 1)th smallest value of θ(−k).

For the P̃k(γ)’s generator:

| (18) |

For the second term in (18)

We have the inequality , θ(γ) = θ(i,K) by definition. Consider the relation between and γ:

If pr(θ(i−1,K−1) ≤ θk < θ(i,K)|YK) = 0 and pr(θ(i,K) < θk ≤ θ(i−1,K−1)|YK) = 0;

If pr(θ(i,K−1) < θk ≤ θ(i,K)|YK) = 0 and pr(θ(i,K) < θk ≤ θ(i−1,K−1)|YK) = 0.

Thus the second term in (18) is zero,

| (19) |

In (19), is the γth quantile of K − 1 non-iid samples from K − 1 posterior distributions. By Theorem 5.2.1 of David and Nagaraja (2003) and large-sample results for order statistics under iid sampling, we have in probability as K goes to ∞. Since we assume that θk|Yk has a uniformly bounded, finite second moment, so does . Thus .

The generator of is:

| (20) |

Appendix I Scoring function

For each function S(P), there will be an optimal SEL ranking estimator. For instance,

indicates that the reward or penalty is the same for all units below the threshold γ; for units above γ the reward/penalty is linearly related to the rank.

We study the (above γ)/(below γ) classification, but more than two ordinal categories can be of interest. For example, educational institutions might be classified into three categories: poor, average and excellent. The following two S(P) capture this goal. Let J ≥ 3 be the number of ordered categories; then

or

References

- Austin PC, Tu JV. Comparing Clinical Data with Administrative Data for Producing Acute Myocardial Infarction Report Cards. Journal of the Royal Statistical Society, Series A: Statistics in Society. 2006;169(1):115–126.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society, Series B, Methodological. 1995;57:289–300.

- Christiansen CL, Morris CN. Improving the statistical approach to health care provider profiling. Annals of Internal Medicine. 1997;127:764–768. doi: 10.7326/0003-4819-127-8_part_2-199710151-00065.

- Conlon EM, Louis TA. Addressing Multiple Goals in Evaluating Region-specific Risk using Bayesian methods. Chapter 3 in: Lawson A, Biggeri A, Böhning D, Lesaffre E, Viel J-F, Bertollini R, editors. Disease Mapping and Risk Assessment for Public Health. Wiley; 1999. pp. 31–47.

- Daniels M, Normand S-LT. Longitudinal profiling of health care units based on continuous and discrete patient outcomes. Biostatistics. 2006;7:1–15. doi: 10.1093/biostatistics/kxi036.

- David HA, Nagaraja HN. Order Statistics. 3rd edition. Wiley; 2003.

- Devine OJ, Louis TA. A constrained empirical Bayes estimator for incidence rates in areas with small populations. Statistics in Medicine. 1994;13:1119–1133. doi: 10.1002/sim.4780131104.

- Devine OJ, Louis TA, Halloran ME. Empirical Bayes estimators for spatially correlated incidence rates. Environmetrics. 1994;5:381–398.

- Diggle PJ, Thomson MC, Christensen OF, Rowlingson B, Obsomer V, Gardon J, Wanji S, Takougang I, Enyong P, Kamgno J, Remme JH, Boussinesq M, Molyneux DH. Spatial modelling and prediction of Loa loa risk: decision making under uncertainty. Technical report, Department of Mathematics and Statistics, Lancaster University; 2006.

- Draper D, Gittoes M. Statistical Analysis of Performance Indicators in UK Higher Education. Journal of the Royal Statistical Society, Series A: Statistics in Society. 2004;167(3):449–474.

- DuMouchel W. Bayesian Data Mining in Large Frequency Tables, With An Application to the FDA Spontaneous Reporting System (with discussion). The American Statistician. 1999;53:177–190.

- Efron B, Tibshirani R, Storey JD, Tusher V. Empirical Bayes analysis of a microarray experiment. Journal of the American Statistical Association. 2001;96(456):1151–1160.

- End-Stage Renal Disease (ESRD) Network. 1999 Annual Report: ESRD Clinical Performance Measures Project. Technical report, Health Care Financing Administration; 2000.

- Gelman A, Price PN. All maps of parameter estimates are misleading. Statistics in Medicine. 1999;18:3221–3234. doi: 10.1002/(sici)1097-0258(19991215)18:23<3221::aid-sim312>3.0.co;2-m.

- Goldstein H, Spiegelhalter DJ. League tables and their limitations: statistical issues in comparisons of institutional performance (with discussion). Journal of the Royal Statistical Society, Series A. 1996;159:385–443.

- Hardy GH, Littlewood JE, Pólya G. Inequalities. 2nd edition. Cambridge University Press; 1967.

- Lacson E, Teng M, Lazarus JM, Lew N, Lowrie EG, Owen WF. Limitations of the facility-specific standardized mortality ratio for profiling health care quality in Dialysis. American Journal of Kidney Diseases. 2001;37:267–275. doi: 10.1053/ajkd.2001.21288.

- Laird NM, Louis TA. Empirical Bayes ranking methods. Journal of Educational Statistics. 1989;14:29–46.

- Landrum MB, Bronskill SE, Normand S-LT. Analytic methods for constructing cross-sectional profiles of health care providers. Health Services and Outcomes Research Methodology. 2000;1:23–48.

- Landrum MB, Normand S-LT, Rosenheck RA. Selection of Related Multivariate Means: Monitoring Psychiatric Care in the Department of Veterans Affairs. Journal of the American Statistical Association. 2003;98(461):7–16.

- Lin R, Louis TA, Paddock SM, Ridgeway G. Ranking of USRDS, provider-specific SMRs from 1998–2001. Technical Report 67, Johns Hopkins University, Dept. of Biostatistics Working Papers; 2004. http://www.bepress.com/jhubiostat/paper67.

- Liu J, Louis TA, Pan W, Ma J, Collins A. Methods for estimating and interpreting provider-specific, standardized mortality ratios. Health Services and Outcomes Research Methodology. 2004;4:135–149. doi: 10.1023/B:HSOR.0000031400.77979.b6.

- Lockwood JR, Louis TA, McCaffrey DF. Uncertainty in rank estimation: Implications for value-added modeling accountability systems. Journal of Educational and Behavioral Statistics. 2002;27(3):255–270. doi: 10.3102/10769986027003255.

- McCaffrey DF, Lockwood JR, Koretz D, Louis TA, Hamilton L. Models for value-added modeling of teacher effects. Journal of Educational and Behavioral Statistics. 2004;29(1):67–101. doi: 10.3102/10769986029001067.

- McClellan M, Staiger D. The Quality of Health Care Providers. Technical Report 7327, National Bureau of Economic Research Working Paper; 1999.

- Noell GH, Burns JL. Value-added assessment of teacher preparation - An illustration of emerging technology. Journal of Teacher Education. 2006;57(1):37–50.

- Normand S-LT, Glickman ME, Gatsonis CA. Statistical methods for profiling providers of medical care: Issues and applications. Journal of the American Statistical Association. 1997;92:803–814.

- Paddock SM, Ridgeway G, Lin R, Louis TA. Flexible distributions for triple-goal estimates in two-stage hierarchical models. Computational Statistics & Data Analysis. 2006;50(11):3243–3262. doi: 10.1016/j.csda.2005.05.008.

- Rubin DB, Stuart EA, Zanutto EL. A potential outcomes view of value-added assessment in education. Journal of Educational and Behavioral Statistics. 2004;29(1):103–116.

- Shen W, Louis TA. Triple-goal estimates in two-stage, hierarchical models. Journal of the Royal Statistical Society, Series B. 1998;60:455–471.

- Storey JD. A direct approach to false discovery rates. Journal of the Royal Statistical Society, Series B, Methodological. 2002;64(3):479–498.

- Storey JD. The Positive False Discovery Rate: A Bayesian Interpretation and the q-Value. The Annals of Statistics. 2003;31(6):2013–2035.

- Tekwe CD, Carter RL, Ma CX, Algina J, Lucas ME, Roth J, Ariet M, Fisher T, Resnick MB. An empirical comparison of statistical models for value-added assessment of school performance. Journal of Educational and Behavioral Statistics. 2004;29(1):11–35.

- Tusher VG, Tibshirani R, Chu G. Significance analysis of microarrays applied to the ionizing radiation response. Proceedings of the National Academy of Sciences. 2001;98(9):5116–5121. doi: 10.1073/pnas.091062498.

- United States Renal Data System (USRDS). 2005 Annual Data Report: Atlas of end-stage renal disease in the United States. Technical report, Health Care Financing Administration; 2005.

- Wolfe R, Gaylin D, Port F, Held P, Wood C. Using USRDS generated mortality tables to compare local ESRD mortality rates to national rates. Kidney International. 1992;42(4):991–996. doi: 10.1038/ki.1992.378.

- Wright DL, Stern HS, Cressie N. Loss functions for estimation of extrema with an application to disease mapping. The Canadian Journal of Statistics. 2003;31(3):251–266.