Abstract

We apply the nonconcave penalized likelihood approach to obtain variable selections as well as shrinkage estimators. This approach relies heavily on the choice of regularization parameter, which controls the model complexity. In this paper, we propose employing the generalized information criterion (GIC), encompassing the commonly used Akaike information criterion (AIC) and Bayesian information criterion (BIC), for selecting the regularization parameter. Our proposal makes a connection between the classical variable selection criteria and the regularization parameter selections for the nonconcave penalized likelihood approaches. We show that the BIC-type selector enables identification of the true model consistently, and the resulting estimator possesses the oracle property in the terminology of Fan and Li (2001). In contrast, however, the AIC-type selector tends to overfit with positive probability. We further show that the AIC-type selector is asymptotically loss efficient, while the BIC-type selector is not. Our simulation results confirm these theoretical findings, and an empirical example is presented. Some technical proofs are given in the online supplementary material.

Keywords: AIC, BIC, GIC, LASSO, Nonconcave penalized likelihood, SCAD

1 Introduction

Using the penalized likelihood function to simultaneously select variables and estimate unknown parameters has received considerable attention in recent years. To avoid the instability of the classical subset selection procedure, Tibshirani (1996) proposed the Least Absolute Shrinkage and Selection Operator (LASSO). In the same spirit as LASSO, Fan and Li (2001) introduced a unified approach via a nonconcave penalized likelihood function, and demonstrated its usefulness for both linear and generalized linear models. Subsequently, Fan and Li (2002) employed the nonconcave penalized likelihood approach for Cox models, while Fan and Li (2004) used it on semiparametric regression models. In addition, Fan and Peng (2004) investigated the theoretical properties of the nonconcave penalized likelihood when the number of parameters tends to infinity as the sample size increases. More recently, many researchers have applied penalized approaches to variable selection (e.g., Park and Hastie, 2007; Wang and Leng, 2007; Yuan and Lin, 2007; Zhang and Lu, 2007; Li and Liang, 2008).

In employing the nonconcave penalized likelihood in regression analysis, we face two challenges. The first hurdle is to compute the nonconcave penalized likelihood estimate. This issue has been carefully studied in the recent literature: (i) Fan and Li (2001) proposed the local quadratic approximation (LQA) algorithm, which was further analyzed by Hunter and Li (2005); (ii) Efron, Hastie, Johnstone, and Tibshirani (2004) introduced the LARS algorithm, which can be used for the adaptive LASSO (Zou, 2006; Wang, Li, and Tsai, 2007a; Zhang and Lu, 2007). With the aid of the local linear approximation (LLA) algorithm (Zou and Li, 2008), LARS can be adopted to solve optimization problems of nonconcave penalized likelihood functions. However, the above computational procedures rely on the regularization parameter. Hence, the selection of this parameter becomes the second challenge, and it is the primary aim of our paper.

In the literature, selection criteria are usually classified into two categories: consistent (e.g., the Bayesian information criterion BIC, Schwarz, 1978) and efficient (e.g., the Akaike information criterion AIC, Akaike, 1974; the generalized cross-validation GCV, Craven and Wahba, 1979). A consistent criterion identifies the true model with a probability that approaches 1 in large samples when a set of candidate models contains the true model. An efficient criterion selects the model so that its average squared error is asymptotically equivalent to the minimum offered by the candidate models when the true model is approximated by a family of candidate models. Detailed discussions on efficiency and consistency can be found in Shibata (1981, 1984), Li (1987), Shao (1997) and McQuarrie and Tsai (1998). In the context of linear and generalized linear models (GLIM) with a nonconcave penalized function, Fan and Li (2001) proposed applying GCV to choose the regularization parameter. Recently, Wang, Li, and Tsai (2007b) found that the resulting model selected by GCV tends to overfit, while BIC is able to identify the finite-dimensional true linear and partial linear models consistently. Wang et al. (2007b) also indicated that GCV is similar to AIC. However, they only studied the penalized least squares function with the smoothly clipped absolute deviation (SCAD) penalty. This motivated us to study the issues of regularization parameter selection for penalized likelihood-based models with a nonconcave penalized function.

In this paper, we adopt Nishii's (1984) generalized information criterion (GIC) to choose regularization parameters in nonconcave penalized likelihood functions. This criterion not only contains AIC and BIC as its special cases, but also bridges the connection between the classical variable selection criteria and the nonconcave penalized likelihood methodology. This connection provides more flexibility for practitioners to employ their own favored variable selection criteria in choosing desirable models. When the true model is among a set of candidate models with the GLIM structure, we show that the BIC-type tuning parameter selector enables us to identify the true model consistently, whereas the AIC-type selector tends to yield an overfitted model. On the other hand, if the true model is approximated by a set of candidate models with the linear structure, we demonstrate that the AIC-type tuning parameter selector is asymptotically loss efficient, while the BIC-type selector does not have this property in general. These findings are consistent with the features of AIC (see Shibata, 1981; Li, 1987) and BIC (see Shao, 1997) used in best subset variable selections.

The rest of the paper is organized as follows. Section 2 proposes the generalized information criterion under a general nonconcave penalized likelihood setting. Section 3 studies the consistency property of GIC for generalized linear models, while Section 4 investigates the asymptotic loss efficiency of the AIC-type selector for linear regression models. Monte Carlo simulations and an empirical example are presented in Section 5 to illustrate the use of the regularization parameter selectors. Section 6 provides discussions, and technical proofs are given in the Appendix.

2 Nonconcave penalized likelihood function

2.1 Penalized estimators and penalty conditions

Consider data (x1, y1), · · · , (xn, yn) that are independent and identically distributed, where yi is the response from the ith subject, and xi is the associated d-dimensional predictor variable. Let $\ell(\beta)$ be the log likelihood-based (or loss) function of the d-dimensional parameter vector $\beta = (\beta_1, \ldots, \beta_d)^T$. Then, adopting Fan and Li's (2001) approach, we define a penalized likelihood to be

$$Q(\beta) = \frac{1}{n}\ell(\beta) - \sum_{j=1}^{d} p_\lambda(|\beta_j|), \tag{1}$$

where pλ(·) is a penalty function with regularization parameter λ. Several penalty functions have been proposed in the literature. For example, the Lq (0 < q < 2) penalty, namely $p_\lambda(|\beta|) = q^{-1}\lambda|\beta|^q$, leads to the bridge regression (Frank and Friedman, 1993). In particular, the L1 penalty yields the LASSO estimator (Tibshirani, 1996). Fan and Li (2001) proposed the nonconcave penalized likelihood method and advocated the use of the smoothly clipped absolute deviation (SCAD) penalty, whose first derivative is given by

$$p'_\lambda(\theta) = \lambda\left\{ I(\theta \le \lambda) + \frac{(a\lambda - \theta)_+}{(a-1)\lambda}\, I(\theta > \lambda) \right\}, \quad \theta > 0,$$

with a = 3.7 and pλ(0) = 0. With a properly chosen regularization parameter λ from 0 to its upper limit, λmax, the resulting penalized estimator is sparse and therefore suitable for variable selection.
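As a minimal sketch, the SCAD derivative and the penalty obtained by integrating it from 0 (so that pλ(0) = 0) can be written in a few lines of Python. The function names `scad_derivative` and `scad_penalty` are illustrative and do not come from any package.

```python
def scad_derivative(theta, lam, a=3.7):
    """First derivative p'_lambda(theta) of the SCAD penalty for theta >= 0."""
    if theta < 0:
        raise ValueError("theta is an absolute value and must be non-negative")
    if theta <= lam:
        return lam
    # Linearly decaying part; it reaches zero at theta = a * lam and stays there.
    return max(a * lam - theta, 0.0) / (a - 1.0)


def scad_penalty(theta, lam, a=3.7):
    """SCAD penalty p_lambda(theta): integral of the derivative, with p_lambda(0) = 0."""
    if theta < 0:
        raise ValueError("theta is an absolute value and must be non-negative")
    if theta <= lam:
        return lam * theta  # L1-like region near the origin
    if theta <= a * lam:
        return (2.0 * a * lam * theta - theta ** 2 - lam ** 2) / (2.0 * (a - 1.0))
    return (a + 1.0) * lam ** 2 / 2.0  # constant: large coefficients are not shrunk
```

The derivative equals λ near the origin, which produces sparsity, and vanishes beyond aλ, which is why large coefficients are left essentially unpenalized.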

To investigate the asymptotic properties of the regularization parameter selectors, we present the penalty conditions given below.

(C1) Assume that λmax depends on n and satisfies λmax → 0 as n → ∞.

(C2) There exists a constant m such that the penalty pλ(·) satisfies $p'_\lambda(\theta) = 0$ for θ > mλ.

(C3) If λn → 0 as n → ∞, then the penalty function satisfies

$$\liminf_{n\to\infty}\ \liminf_{\theta\to 0^+} \frac{p'_{\lambda_n}(\theta)}{\lambda_n} > 0.$$

Condition (C1) indicates that a smaller regularization parameter is needed if the sample size is large. Condition (C2) assures that the resulting penalized likelihood estimate is asymptotically unbiased (Fan and Li, 2001). Both the SCAD and Zhang's (2007) minimax concave penalties (MCP) satisfy this condition. Condition (C3) is adapted from Fan and Li's (2001) Equation (3.5), which is used to study the oracle property.

Remark 1. Both the SCAD penalty and Lq penalty for 0 < q ≤ 1 are singular at the origin. Hence, it becomes challenging to maximize their corresponding penalized likelihood functions. Accordingly, Fan and Li (2001) proposed the LQA algorithm for finding the solution of the nonconcave penalized likelihood. In LQA, pλ(|β|) is locally approximated by a quadratic function qλ(|β|), whose first derivative is given by

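$$q'_\lambda(|\beta_j|) = \frac{p'_\lambda(|\beta_j^{(k)}|)}{|\beta_j^{(k)}|}\,\beta_j.$$

The above equation is evaluated at the (k + 1)-step iteration of the Newton-Raphson algorithm if $\beta_j^{(k)}$ is not very close to zero. Otherwise, the resulting parameter estimator of βj is set to 0.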
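To illustrate the LQA idea in the simplest possible setting, the sketch below iterates the quadratic approximation for a single coefficient. It assumes a one-dimensional objective 0.5(z − β)² + pλ(|β|) with the SCAD penalty, which is an illustrative simplification and not the general algorithm of Fan and Li (2001). The names `lqa_univariate`, `zero_tol` and `step_tol` are hypothetical.

```python
def scad_derivative(theta, lam, a=3.7):
    """SCAD derivative p'_lambda(theta) for theta >= 0."""
    if theta <= lam:
        return lam
    return max(a * lam - theta, 0.0) / (a - 1.0)


def lqa_univariate(z, lam, a=3.7, zero_tol=1e-8, step_tol=1e-12, max_iter=1000):
    """LQA iterations for minimizing 0.5 * (z - beta)**2 + p_lambda(|beta|).

    Assumption: a single coefficient with unit design, chosen only for illustration.
    """
    beta = z  # start from the unpenalized solution
    for _ in range(max_iter):
        if abs(beta) < zero_tol:
            return 0.0  # iterate is very close to zero: set the estimate to 0
        # Weight of the local quadratic approximation: p'(|beta_k|) / |beta_k|.
        weight = scad_derivative(abs(beta), lam, a) / abs(beta)
        # Stationarity of the approximated objective: (beta - z) + weight * beta = 0.
        new_beta = z / (1.0 + weight)
        if abs(new_beta - beta) < step_tol:
            return new_beta
        beta = new_beta
    return beta
```

With λ = 1, an input of z = 1.5 converges to the soft-thresholded value 0.5, z = 0.5 is set to zero, and z = 5 (beyond aλ) is returned unshrunk.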

2.2 Generalized information criterion

Before introducing the generalized information criterion, we first define the candidate model, which is involved in variable selections.

Definition 1 (Candidate Model). We define α, a subset of $\bar{\alpha} = \{1, \ldots, d\}$, as a candidate model, meaning that the corresponding predictors labelled by α are included in the model. Accordingly, $\bar{\alpha}$ is the full model. In addition, we denote the size of model α (i.e., the number of nonzero parameters in α) and the coefficients associated with the predictors in model α by dα and βα, respectively. Moreover, we denote the collection of all candidate models by $\mathcal{A}$. For a penalized estimator $\hat{\beta}_\lambda$ that maximizes the objective function (1), denote the model associated with $\hat{\beta}_\lambda$ by αλ.

In the normal linear regression model, $y_i = x_i^T\beta + e_i$ and ei ~ N(0, σ2) for i = 1, · · · , n, Nishii (1984) proposed the generalized information criterion for classical variable selections. It is

$$\mathrm{GIC}_{\kappa_n}(\alpha) = \log(\hat{\sigma}_\alpha^2) + \frac{1}{n}\kappa_n d_\alpha,$$

where βα is the parameter of the candidate model α, $\hat{\sigma}_\alpha^2$ is the maximum likelihood estimator of σ2, and κn is a positive number that controls the properties of variable selection. Note that Nishii's GIC is different from the GIC proposed by Konishi and Kitagawa (1996). When κn = 2, GIC becomes AIC, while κn = log(n) leads to GIC being BIC. Because GIC contains a broad range of selection criteria, this motivates us to propose the following GIC-type regularization parameter selector,

$$\mathrm{GIC}_{\kappa_n}(\lambda) = \frac{1}{n}\left\{ G(y, \hat{\beta}_\lambda) + \kappa_n\, \mathrm{df}_\lambda \right\}, \tag{2}$$

where $G(y, \hat{\beta}_\lambda)$ measures the fitting of model αλ, y = (y1, · · · , yn)T, $\hat{\beta}_\lambda$ is the penalized parameter estimator obtained by maximizing Equation (1) with respect to β, and dfλ is the degrees of freedom of model αλ. For any given κn, we select λ that minimizes GICκn(λ). We can see that the larger κn is, the higher the penalty for models with more variables. Therefore, for some given data, the size of the selected model decreases as κn increases.
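The selection rule can be sketched as follows. The sketch assumes the criterion has the form (fit + κn · df)/n, where the 1/n factor does not affect the minimizer, and the `path` of (λ, fit, df) triples is made up purely for illustration. The names `gic` and `select_lambda` are hypothetical.

```python
import math


def gic(fit, df, n, kappa):
    """GIC value (fit + kappa * df) / n for one candidate lambda."""
    return (fit + kappa * df) / n


def select_lambda(path, n, kappa):
    """Return the lambda on the path that minimizes GIC.

    path: list of (lam, fit, df) triples, one per candidate lambda.
    """
    return min(path, key=lambda row: gic(row[1], row[2], n, kappa))[0]


n = 200
# Hypothetical solution path: larger lambda gives a sparser but worse-fitting model.
path = [(0.50, 260.0, 1), (0.20, 214.0, 3), (0.05, 209.0, 5), (0.00, 205.0, 12)]

aic_choice = select_lambda(path, n, kappa=2.0)          # AIC selector
bic_choice = select_lambda(path, n, kappa=math.log(n))  # BIC selector
```

On this made-up path the AIC selector picks λ = 0.05 (five variables) while the BIC selector picks λ = 0.20 (three variables), in line with the remark that the selected model shrinks as κn grows.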

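Remark 2. For any given model α (including the penalized model αλ and the full model $\bar{\alpha}$), we are able to obtain the non-penalized parameter estimator $\hat{\beta}^*_\alpha$ by maximizing the log-likelihood function $\ell(\beta)$ in (1). Then, Equation (2) becomes

$$\mathrm{GIC}^*_{\kappa_n}(\alpha) = \frac{1}{n}\left\{ G(y, \hat{\beta}^*_\alpha) + \kappa_n d_\alpha \right\}, \tag{3}$$

which can be used for classical variable selections. In addition, $\mathrm{GIC}^*_{\kappa_n}(\alpha)$ turns into Nishii's GICκn(α) if we replace $G(y, \hat{\beta}^*_\alpha)$ in (3) with the –2 log-likelihood function of the fitted normal regression model.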

We next study the degrees of freedom used in the second term of GIC. In the selection of the regularization parameter, Fan and Li (2001, 2002) proposed that the degrees of freedom be the trace of the approximate linear projection matrix, i.e.

| (4) |

where , , and for j, j′ such that and . To understand the large sample property of dfL(λ), we show its asymptotic behavior given below.

Proposition 1. Assume that the penalized likelihood estimator $\hat{\beta}_\lambda$ is sparse (i.e., with probability tending to one, $\hat{\beta}_{\lambda j} = 0$ if the true value of βj is 0) and consistent, where $\hat{\beta}_{\lambda j}$ is the j-th component of $\hat{\beta}_\lambda$. Under conditions (C1) and (C2), we have

$$P\{\mathrm{df}_L(\lambda) = d_{\alpha_\lambda}\} \to 1,$$

where dαλ is the size of model αλ.

Proof. After algebraic simplifications, , where . Because of the consistency and sparsity of , converges to βj with probability tending to 1 for all j such that . Hence, those are all bounded from 0. This result, together with conditions (C1) and (C2), implies that Σλ = 0 with probability tending to 1. Subsequently, using the fact that , we complete the proof.

The above proposition suggests that the difference between dfL(λ) and the size of the model, dαλ, is small. Because dαλ is simple to calculate, we use it as the degrees of freedom dfλ in (2). In linear regression models, Efron et al. (2004) and Zou, Hastie, and Tibshirani (2007) also suggested using dαλ as an estimator of the degrees of freedom for LASSO. Moreover, Zou et al. (2007) showed that dαλ is an asymptotically unbiased estimator. In this article, our asymptotical results are valid without regard to the use of dfL(λ) or dαλ as the degrees of freedom. When the sample size is small, however, dfL(λ) should be considered. In the following section, we explore the properties of GIC for the generalized linear models which have been widely used in various disciplines.

3 Consistency

In this section, we assume that the set of candidate models contains the unique true model, and that the number of parameters in the full model is finite. Under this assumption, we are able to study the asymptotic consistency of GIC by introducing the following definition and condition.

Definition 2 (Underfitted and Overfitted Models). We assume that there is a unique true model α0 in the collection of candidate models $\mathcal{A}$, whose corresponding coefficients are nonzero. Therefore, any candidate model α ⊅ α0 is referred to as an underfitted model, while any α ⊃ α0 other than α0 itself is referred to as an overfitted model.

Based on the above definitions, we partition the tuning parameter interval [0, λmax] into the underfitted, true, and overfitted subsets, respectively,

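$$\Omega_- = \{\lambda : \alpha_\lambda \not\supset \alpha_0\}, \quad \Omega_0 = \{\lambda : \alpha_\lambda = \alpha_0\}, \quad \Omega_+ = \{\lambda : \alpha_\lambda \supset \alpha_0,\ \alpha_\lambda \neq \alpha_0\}.$$

This partition allows us to assess the performance of regularization parameter selections.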
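Definition 2 translates directly into a set comparison. The following sketch labels a candidate model, given as a collection of predictor indices, relative to the true model; the function name `classify_model` is illustrative.

```python
def classify_model(alpha, alpha0):
    """Label a candidate model relative to the true model alpha0.

    Both arguments are iterables of predictor indices.
    """
    alpha, alpha0 = set(alpha), set(alpha0)
    if alpha == alpha0:
        return "true"
    if alpha0 <= alpha:
        return "overfitted"   # contains every true predictor plus extras
    return "underfitted"      # misses at least one true predictor


true_model = {1, 2, 10}
labels = [
    classify_model({1, 2, 10}, true_model),
    classify_model({1, 2, 5, 10}, true_model),
    classify_model({1, 5, 10}, true_model),
]
```

Note that a model which drops one true predictor and adds an irrelevant one is still counted as underfitted, because it does not contain α0.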

To investigate the asymptotic properties of the regularization parameter selectors, we introduce the technical condition given below.

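(C4) For any candidate model $\alpha \in \mathcal{A}$, there exists cα > 0 such that $\frac{1}{n} G(y, \hat{\beta}^*_\alpha) \to c_\alpha$ in probability. In addition, for any underfitted model α ⊅ α0, cα > cα0, where cα0 is the limit of $\frac{1}{n} G(y, \beta_{\alpha_0})$ and βα0 is the parameter vector of the true model α0.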

The above condition assures that the underfitted model yields a larger measure of model fitting than that of the true model. We next explore the asymptotic consistency of GIC for the generalized linear models which have been used in various disciplines.

Consider the generalized linear model (GLIM, see McCullagh and Nelder, 1989), whose conditional density function of yi given xi is

$$f_i(y_i; \theta_i, \phi) = \exp\left\{ \frac{y_i\theta_i - b(\theta_i)}{a(\phi)} + c(y_i, \phi) \right\}, \tag{5}$$

where a(·), b(·) and c(·, ·) are suitably chosen functions, θi is the canonical parameter, E(yi|xi) = μi = b′(θi), g(μi) = θi, g is a link function, and ϕ is a scale parameter. Throughout this paper, we assume that ϕ is known (such as in the logistic regression model and the Poisson log-linear model) or that it can be estimated by fitting the data with the full model (for instance, the normal linear model). In addition, we follow the classical regression approach to model θi by $\theta_i = x_i^T\beta$. Based on (5), the log likelihood-based function in (1) is

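$$\ell(\beta) = \ell(\mu; y) = \sum_{i=1}^{n} \left[ \frac{y_i\theta_i - b(\theta_i)}{a(\phi)} + c(y_i, \phi) \right], \tag{6}$$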

where μ = (μ1, · · · , μn)T, y = (y1, · · · , yn)T, and θ = (θ1, · · · , θn)T. Then, the resulting scaled deviance of a penalized estimate is

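$$D(y; \hat{\mu}_\lambda) = 2\{\ell(y; y) - \ell(\hat{\mu}_\lambda; y)\},$$

where $\hat{\mu}_\lambda = (g^{-1}(x_1^T\hat{\beta}_\lambda), \ldots, g^{-1}(x_n^T\hat{\beta}_\lambda))^T$.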

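For model αλ, we employ the scaled deviance as the goodness-of-fit measure, $G(y, \hat{\beta}_\lambda) = D(y; \hat{\mu}_\lambda)$, in (2) so that the resulting generalized information criterion for GLIM is

$$\mathrm{GIC}_{\kappa_n}(\lambda) = \frac{1}{n}\left\{ D(y; \hat{\mu}_\lambda) + \kappa_n\, \mathrm{df}_\lambda \right\}. \tag{7}$$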
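For binary responses the saturated log-likelihood is zero, so the scaled deviance reduces to minus twice the Bernoulli log-likelihood. The sketch below evaluates a deviance-based GIC for a logistic model under two assumptions: the criterion has the form (deviance + κn · df)/n, and df is taken to be the number of nonzero coefficients. All function names are illustrative.

```python
import math


def logistic_mean(eta):
    """Inverse logit link: mu = exp(eta) / (1 + exp(eta))."""
    return 1.0 / (1.0 + math.exp(-eta))


def bernoulli_deviance(y, mu):
    """Scaled deviance 2{l(y; y) - l(mu; y)} for 0/1 responses (phi = 1)."""
    total = 0.0
    for yi, mi in zip(y, mu):
        # The saturated model fits each 0/1 response exactly, so l(y; y) = 0.
        total += -2.0 * (math.log(mi) if yi == 1 else math.log(1.0 - mi))
    return total


def gic_logistic(y, X, beta, kappa):
    """(deviance + kappa * df) / n, with df = number of nonzero coefficients."""
    n = len(y)
    mu = [logistic_mean(sum(b * x for b, x in zip(beta, row))) for row in X]
    df = sum(1 for b in beta if b != 0.0)
    return (bernoulli_deviance(y, mu) + kappa * df) / n


y = [1, 0, 1, 0]
X = [[1.0, 0.3], [1.0, -0.2], [1.0, 1.1], [1.0, -0.7]]
null_gic = gic_logistic(y, X, [0.0, 0.0], kappa=2.0)  # all fitted means equal 0.5
```

For the all-zero coefficient vector every fitted mean is 0.5 and df is 0, so the criterion equals 2 log 2 regardless of κn.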

In addition, when we fit the data with the non-penalized likelihood approach under model α, GIC becomes

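$$\mathrm{GIC}^*_{\kappa_n}(\alpha) = \frac{1}{n}\left\{ D(y; \hat{\mu}^*_\alpha) + \kappa_n d_\alpha \right\}, \tag{8}$$

where $\hat{\mu}^*_\alpha = (g^{-1}(x_1^T\hat{\beta}^*_\alpha), \ldots, g^{-1}(x_n^T\hat{\beta}^*_\alpha))^T$, and $\hat{\beta}^*_\alpha$ is the non-penalized maximum likelihood estimator of β. Accordingly, GIC* can be used in classical variable selection (see Eq. (3.10) of McCullagh and Nelder, 1989). Next, we show the asymptotic performances of GIC.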

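Theorem 1. Suppose the density function of the generalized linear model satisfies Fan and Li's (2001) three regularity conditions (A)–(C) and that the technical condition (C4) holds.

(A) If there exists a positive constant M such that κn < M, then the tuning parameter $\hat{\lambda}$ selected by minimizing GICκn(λ) in (7) satisfies

$$P\{\hat{\lambda} \in \Omega_-\} \to 0 \quad \text{and} \quad \liminf_{n\to\infty} P\{\hat{\lambda} \in \Omega_+\} \ge \pi,$$

where Ω− and Ω+ denote the underfitted and overfitted subsets of [0, λmax], respectively, and π is a nonzero probability.

(B) Suppose that conditions (C1)-(C3) are satisfied. If κn → ∞ and $\kappa_n/\sqrt{n} \to 0$, then the tuning parameter $\hat{\lambda}$ selected by minimizing GICκn(λ) in (7) satisfies $P\{\alpha_{\hat{\lambda}} = \alpha_0\} \to 1$.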

The proof is given in Appendix A.

Theorem 1 provides guidance on the choice of the regularization parameter. Theorem 1(A) implies that the GIC selector with bounded κn tends to overfit without regard to which penalty function is being used. Analogous to the classical variable selection criterion AIC, we refer to GIC with κn = 2 as the AIC selector, while we name GIC with κn → 2 the AIC-type selector. In contrast, because κn = log(n) fulfills the conditions of Theorem 1(B), we call GIC with κn → ∞ and $\kappa_n/\sqrt{n} \to 0$ the BIC-type selector. Theorem 1(B) indicates that the BIC-type selector identifies the true model consistently. Thus, the nonconcave penalized likelihood of the generalized linear model with the BIC-type selector possesses the oracle property.

Remark 3. In linear regression models, Fan and Li (2001) applied the GCV selector given below to choose the regularization parameter,

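$$\mathrm{GCV}(\lambda) = \frac{1}{n}\,\frac{\|y - X\hat{\beta}_\lambda\|^2}{\{1 - \mathrm{df}_L(\lambda)/n\}^2}, \tag{9}$$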

where ∥ · ∥ denotes the Euclidean norm, , and dfL(λ) is defined in (4). To extend the application of GCV, we replace the residual sum of squares in (9) by , and then choose λ that minimizes

| (10) |

Using the Taylor expansion, we further have that

Because is bounded via Condition (C4), GCV yields an overfitted model with a positive probability. Therefore, the penalized partial likelihood with the GCV selector does not possess the oracle property.

Remark 4. In linear regression models, Wang et al. (2007b) demonstrated that Fan and Li's (2001) GCV-selector for the SCAD penalized least squares procedure cannot select the tuning parameter consistently. They further proposed the following BIC tuning parameter selector,

where . Using the result that log(1 + t) ≈ t for small t, is approximately equal to

where is the scaled deviance of the normal distribution, and is the dispersion parameter estimator computed from the full model. It can be seen that BIC** is a BIC-type selector. Under the conditions in Theorem 1(B), the SCAD penalized least squares procedure with the BIC** selector possesses the oracle property, which is consistent with the findings in Wang et al. (2007b).

4 Efficiency

Under the assumption that the true model is included in a family of candidate models, we established the consistency of BIC-type selectors. In practice, however, this assumption may not be valid, which motivates us to study the asymptotic efficiency of the AIC-type selector. In the literature, the L2 norm has been commonly used to assess the efficiency of the classical AIC procedure (see Shibata, 1981, 1984 and Li, 1987) in linear regression models. Hence, we focus on the efficiency of linear regression model selections via L2 norm.

Consider the following model

$$y = \mu + \varepsilon,$$

where y = (y1, ..., yn)T, μ = (μ1, ..., μn)T is an unknown mean vector, and ε = (ε1, ..., εn)T, whose components εi are independent and identically distributed (i.i.d.) random errors with mean 0 and variance σ2. Furthermore, we assume that Xβ constitutes the nearest representation of the true mean vector μ, and hence the full model is not necessarily a correct model. Adapting the formulation of Li (1987), we allow d, the dimension of β, to tend to infinity with n, but d/n → 0.

For the given data set {(xi, yi) : i = 1, · · · , n}, we follow the formulation of (1) to define the penalized least squares function

| (11) |

The resulting penalized estimator of μ in model αλ is . In addition, the non-penalized estimator of μ in model α is . It is noteworthy that and have been defined in Definition 1 and Remark 2, respectively. In practice, the tuning parameter λ is unknown and can be selected by minimizing

| (12) |

where σ2 is assumed to be known, and the case with the unknown σ2 will be discussed later. When in (12) is replaced by the least squares estimator , with κn = 2 becomes the Mallows’ Cp criterion that has been used for classical variable selections.

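To assess the performance of the tuning parameter selector, we adopt the approach of Shibata (1981) (also see Li, 1987; Shao, 1997), and define the average squared loss (or the L2 loss) associated with the estimator $\hat{\beta}_\lambda$ to be

$$L(\hat{\beta}_\lambda) = \frac{1}{n}\|\mu - \hat{\mu}_\lambda\|^2, \quad \hat{\mu}_\lambda = X\hat{\beta}_\lambda. \tag{13}$$

Accordingly, the risk function is $R(\hat{\beta}_\lambda) = E\{L(\hat{\beta}_\lambda)\}$.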

Using the average squared loss measure, we further define the asymptotic loss efficiency.

Definition 3 (Asymptotically Loss Efficient). A tuning parameter selection procedure is said to be asymptotically loss efficient if

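$$\frac{L(\hat{\beta}_{\hat{\lambda}})}{\inf_{\lambda \in [0, \lambda_{\max}]} L(\hat{\beta}_\lambda)} \to 1 \tag{14}$$

in probability, where $\hat{\beta}_{\hat{\lambda}}$ is associated with the tuning parameter $\hat{\lambda}$ selected by this procedure. We also say $\hat{\beta}_{\hat{\lambda}}$ is asymptotically loss efficient if (14) holds.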

Moreover, we introduce the following technical conditions for studying the asymptotic loss efficiency of the AIC-type selector in linear regression.

(C5) exists, and its largest eigenvalue is bounded by a constant number C.

(C6) , for some positive integer q.

- (C7) The risks of the least squares estimators for all satisfy

- (C8) Let b = (b1, ..., bd)T, where for all j such that , and bj = 0 otherwise, and is the j-th component of the penalized estimator . In addition, let be the least squares estimator of β obtained from model αλ. Then, we assume that, in probability,

Condition (C5) has been commonly considered in the literature. Conditions (C6) and (C7) are adopted from conditions (A.2) and (A.3), respectively, in Li (1987). It can be shown that if the true model is approximated by a set of the candidate models (e.g., the true model is of infinite dimension), then Condition (C7) holds. Condition (C8) ensures that the difference between the penalized mean function estimator and the corresponding least squares mean function estimator is small in comparison with the risk of the least squares estimator (see Lemma 3 in Appendix B). Sufficient conditions for (C8) are also given in Appendix C. We next show the asymptotic efficiency of the AIC-type selector.

Theorem 2. Assume conditions (C5)-(C8) hold. Then, the tuning parameter $\hat{\lambda}$ selected by minimizing GICκn(λ) in (12) with κn → 2 yields an asymptotically loss efficient estimator, $\hat{\beta}_{\hat{\lambda}}$, in the sense of (14).

The proof is given in Appendix B.

Theorem 2 demonstrates that the AIC-type selector is asymptotically loss efficient. In addition, using the result that log(1 + t) ≈ t for small t, the AIC selector behaves similarly to the following AIC* selector,

Accordingly, both AIC and AIC* selectors are asymptotically loss efficient.

Applying Lemma 4 and Equation (26) in Appendix B, we find that if in probability, then is asymptotically loss efficient. Note that it can be shown is bounded by 1. As a result, κn → 2 (including κn = 2) is critical in establishing the asymptotic loss efficiency. This finding is similar to the classical efficient criteria (see Shibata, 1980, Shao, 1997 and Yang, 2005). It is also not surprising that the BIC-type selectors do not possess asymptotic loss efficiency, which is consistent with the finding of classical variable selections (see Li, 1987 and Shao, 1997).

In practice, σ2 is often unknown. It is natural to replace the σ2 in by its consistent estimator (see Shao, 1997). The following corollary shows that the asymptotical property of GIC still holds.

Corollary 1. If the tuning parameter is selected by minimizing with κn → 2 and σ2 being replaced by its consistent estimator , then the resulting procedure is also asymptotically loss efficient.

The proof is given in Appendix B.

Remark 5. As suggested by an anonymous reviewer, we study the asymptotic loss efficiency of the generalized linear model in (5). Following the spirit of the deviance measure commonly used in the GLIM, we adopt the Kullback-Leibler (KL) distance measure to define the KL loss of an estimate (either the maximum likelihood estimate or the nonconcave penalized estimate ), as

| (15) |

where is defined in (6), θ0 = (θ01, · · · , θ0n)T is the true unknown canonical parameter, under canonical link function, and E0 denotes the expectation under the true model (see McQuarrie and Tsai, 1998, for example). It can be shown that the KL loss is identical to the squared loss for normal linear regression models with known variance. To obtain the asymptotic loss efficiency for GLIM, however, it is necessary to employ the Taylor expansion to expand at the true value of θ0i for i = 1, · · · , n. Accordingly, we need to impose some strong assumptions to establish the asymptotic loss efficiency for the nonconcave penalized estimate under the KL loss if the tuning parameter is selected by minimizing GIC in (7) with κn → 2. To avoid considerably increasing the length of the paper, we do not present detailed discussions, justifications, and proofs here, but they are given in the online supplemental material available in JASA website.

5 Numerical studies

In this section, we present three examples comprised of two Monte Carlo experiments and one empirical example. It is noteworthy that Example 1 considers a setting in which the true model is in a set of candidate models with logistic regressions. This setting allows us to examine the finite sample performance of the consistent selection criterion, which is expected to perform well via Theorem 1. In contrast, Example 2 considers a setting in which the true model is not included in a set of candidate models with Gaussian regressions. This setting enables us to assess the finite sample performance of the efficient selection criterion, which is expected to perform well via Theorem 2.

Example 1. Adapting the model setting from Tibshirani (1996) and Fan and Li (2001), we simulate the data from the logistic regression model, y|x ~ Bernoulli{p(xT β)}, where

$$p(u) = \frac{\exp(u)}{1 + \exp(u)}.$$

In addition, β = (3, 1.5, 0, 0, 0, 0, 0, 0, 0, 2, 0, 0)T, x is a 12-dimensional random vector, the first 9 components of x are multivariate normal with covariance matrix Σ = (σij), in which $\sigma_{ij} = 0.5^{|i-j|}$, and the last 3 components of x are generated from an independent Bernoulli distribution with success probability 0.5. Moreover, we conduct 1000 realizations with the sample sizes n = 200 and 400.
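One way to generate a data set from this design is sketched below, using only the Python standard library. The sketch assumes zero-mean normal covariates (the text specifies only the covariance) and obtains the correlation 0.5^|i−j| through an AR(1) recursion; the function names and the seed are illustrative.

```python
import math
import random

BETA = [3, 1.5, 0, 0, 0, 0, 0, 0, 0, 2, 0, 0]


def draw_covariates(rng, rho=0.5):
    """One 12-dimensional x: nine correlated normals followed by three Bernoulli(0.5)."""
    x = [rng.gauss(0.0, 1.0)]
    for _ in range(8):
        # AR(1) recursion: unit variances and corr(x_i, x_j) = rho ** |i - j|.
        x.append(rho * x[-1] + math.sqrt(1.0 - rho ** 2) * rng.gauss(0.0, 1.0))
    x.extend(1.0 if rng.random() < 0.5 else 0.0 for _ in range(3))
    return x


def simulate_logistic(n, seed=0):
    """Simulate (X, y) with y | x ~ Bernoulli(p(x' beta)), p the logistic function."""
    rng = random.Random(seed)
    X, y = [], []
    for _ in range(n):
        x = draw_covariates(rng)
        eta = sum(b * v for b, v in zip(BETA, x))
        p = 1.0 / (1.0 + math.exp(-eta))
        X.append(x)
        y.append(1 if rng.random() < p else 0)
    return X, y


X, y = simulate_logistic(200)
```

Repeating the call with 1000 different seeds would reproduce the structure of the Monte Carlo experiment, although not the exact random draws used in the paper.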

To assess the finite sample performance of the proposed methods, we report the percentage of models correctly fitted, underfitted, and overfitted with 1, 2, 3, 4, 5 or more parameters by SCAD-AIC (i.e., κn = 2), SCAD-BIC (i.e., κn = log(n)), SCAD-GCV, AIC, and BIC selectors as well as via the oracle procedure (i.e., the simulated data were fitted with the true model). Their corresponding standard errors can be calculated by $\sqrt{\hat{p}(1 - \hat{p})/1000}$, where p̂ is the observed proportion in 1000 simulations. Moreover, we report the average number of zero coefficients that were correctly (C) and incorrectly (I) identified by different methods. To compare model fittings, we further calculate the following model error for the new observation (x, y),

$$\mathrm{ME}(\hat{\beta}) = E_x\{\mu(x^T\hat{\beta}) - \mu(x^T\beta)\}^2,$$

where the expectation is taken with respect to the new observed covariate vector x, and μ(xT β) = E(y|x). Then, we report the median of the relative model error (MRME), where the relative model error is defined as RME = ME/MEfull, and MEfull is the model error calculated by fitting the data with the full model.
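The two summary statistics described above are simple to compute. The sketch below gives the binomial standard error of a selection proportion over 1000 replications and the median of the relative model errors; the function names are illustrative, and the example proportion 0.837 is the exact-fit rate of SCAD-BIC at n = 200 reported in Table 1.

```python
import math
import statistics


def proportion_se(p_hat, n_rep=1000):
    """Standard error sqrt(p_hat * (1 - p_hat) / n_rep) of an observed proportion."""
    return math.sqrt(p_hat * (1.0 - p_hat) / n_rep)


def median_relative_model_error(me, me_full):
    """Median over replications of RME = ME / ME_full."""
    return statistics.median(m / f for m, f in zip(me, me_full))


se_exact = proportion_se(0.837)  # roughly 0.012, i.e. about 1.2 percentage points
```

Because the standard error is largest at p̂ = 0.5, no proportion based on 1000 replications has a standard error above about 1.6 percentage points.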

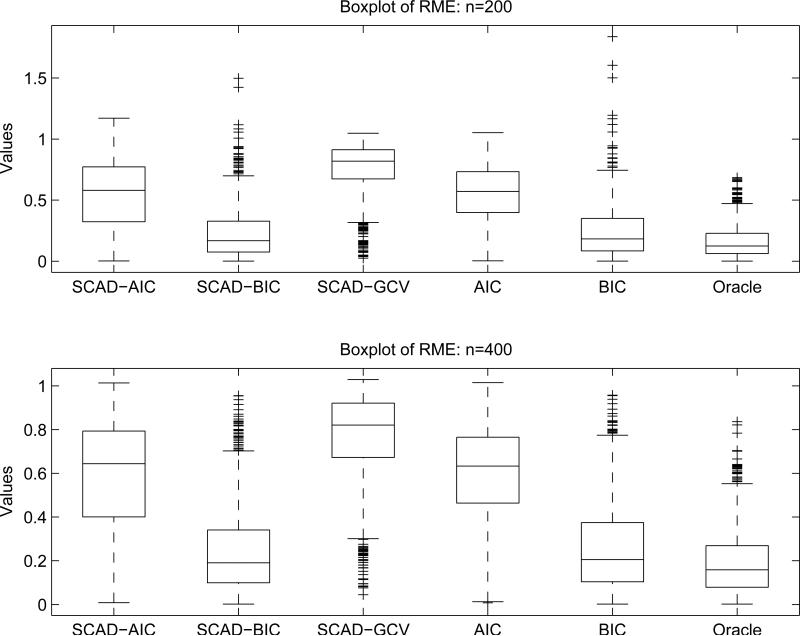

Table 1 shows that the MRME of SCAD-BIC is smaller than that of SCAD-AIC. As the sample size increases, the MRME of SCAD-BIC approaches that of the oracle estimator, whereas the MRME of SCAD-AIC remains at the same level. Hence, SCAD-BIC is superior to SCAD-AIC in terms of model error measures in this example. Moreover, Figure 1 presents the boxplots of RMEs, which lead to a similar conclusion.

Table 1.

Simulation results for the logistic regression model. MRME is the median relative model error; C denotes the average number of the nine true zero coefficients that were correctly identified as zero, while I denotes the average number of the three nonzero coefficients that were incorrectly identified as zero; the numbers in parentheses are standard errors; Under, Exact and Overfitted represent the proportions of corresponding models being selected in 1000 Monte Carlo realizations, respectively.

| Method | MRME (%) | Zeros: C | Zeros: I | Under (%) | Exact (%) | Overfitted by 1 (%) | Overfitted by 2 (%) | Overfitted by 3 (%) | Overfitted by 4 (%) | Overfitted by ≥ 5 (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| n = 200 | | | | | | | | | | |
| SCAD-AIC | 58.03 | 7.4 (1.6) | 0.0 (0.0) | 0.2 | 30.2 | 23.2 | 19.6 | 13.6 | 7.5 | 5.9 |
| SCAD-BIC | 16.90 | 8.8 (0.5) | 0.0 (0.1) | 0.7 | 83.7 | 13.2 | 2.4 | 0.4 | 0.0 | 0.0 |
| SCAD-GCV | 81.91 | 5.8 (1.9) | 0.0 (0.0) | 0.0 | 7.8 | 12.0 | 18.1 | 18.8 | 17.6 | 25.7 |
| AIC | 57.05 | 7.4 (1.2) | 0.0 (0.0) | 0.1 | 19.9 | 31.5 | 26.7 | 14.8 | 4.3 | 2.8 |
| BIC | 18.27 | 8.8 (0.5) | 0.0 (0.1) | 0.8 | 80.4 | 17.0 | 2.3 | 0.1 | 0.0 | 0.0 |
| Oracle | 12.49 | 9.0 (0.0) | 0.0 (0.0) | 0.0 | 100.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| n = 400 | | | | | | | | | | |
| SCAD-AIC | 64.45 | 7.4 (1.5) | 0.0 (0.0) | 0.0 | 29.4 | 21.9 | 21.0 | 15.1 | 8.5 | 4.1 |
| SCAD-BIC | 19.03 | 8.9 (0.4) | 0.0 (0.0) | 0.0 | 89.9 | 7.9 | 1.8 | 0.2 | 0.2 | 0.0 |
| SCAD-GCV | 82.13 | 6.0 (1.9) | 0.0 (0.0) | 0.0 | 9.2 | 14.7 | 18.6 | 19.8 | 15.9 | 21.8 |
| AIC | 63.17 | 7.4 (1.2) | 0.0 (0.0) | 0.0 | 21.7 | 29.2 | 27.3 | 14.6 | 5.5 | 1.7 |
| BIC | 20.46 | 8.8 (0.4) | 0.0 (0.0) | 0.0 | 86.3 | 12.1 | 1.4 | 0.2 | 0.0 | 0.0 |
| Oracle | 15.88 | 9.0 (0.0) | 0.0 (0.0) | 0.0 | 100.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |

Figure 1.

Boxplot of relative model error (RME) with n=200 and n=400.

In model identifications, SCAD-BIC has a higher chance than SCAD-AIC of correctly setting the nine true zero coefficients to zero, while SCAD-BIC is slightly more prone than SCAD-AIC to incorrectly set the three nonzero coefficients to zero when the sample size is small. In addition, SCAD-BIC has a much higher possibility of correctly identifying the true model than that of SCAD-AIC. Moreover, among the overfitted models, the SCAD-BIC method is likely to include only one irrelevant variable, whereas the SCAD-AIC method often includes two or more. When the sample size increases, the SCAD-BIC method yields a lesser degree of overfitting, while the SCAD-AIC method still overfits quite seriously. These results are consistent with the theoretical findings presented in Theorem 1.

It is not surprising that SCAD-GCV performs similarly to SCAD-AIC. Furthermore, the classical AIC and BIC selection criteria behave like SCAD-AIC and SCAD-BIC, respectively, in terms of model errors and identifications. It is of interest to note that SCAD-AIC usually suggests a sparser model than AIC. In sum, SCAD-BIC performs the best.

Example 2. In practice, it is plausible that the full model fails to include some important explanatory variable. This motivates us to mimic such a situation and then assess various selection procedures. Accordingly, we consider a linear regression model $y_i = x_i^T\beta + \varepsilon_i$, where xi's are i.i.d. multivariate normal random variables with dimension 13, the correlation between the j-th and k-th components of xi is $0.5^{|j-k|}$, and εi's are i.i.d. N(0, σ2) with σ = 4. In addition, we partition $x_i = (x_{\mathrm{full}}^T, x_{\mathrm{exc}})^T$, where xfull contains d = 12 covariates of the full model and xexc is the covariate excluded from model fittings. Accordingly, we partition $\beta = (\beta_{\mathrm{full}}^T, \beta_{\mathrm{exc}})^T$, where βfull is a 12 × 1 vector and βexc is a scalar. To investigate the performance of the proposed methods under various parameter structures, we let $\beta_{\mathrm{full}} = \beta_0 + \gamma\delta/\sqrt{n}$, where β0 = (3, 1.5, 0, 0, 0, 0, 0, 0, 0, 2, 0, 0)T, δ = (0, 0, 1.5, 1.5, 1, 1, 0, 0, 0, 0, 0.5, 0.5)T, γ ranges from 0 to 10, and βexc = 0.2. Because the candidate model is the subset of the full model that contains 12 covariates in xfull, the above model settings ensure that the true model is not included in the set of candidate models.

We simulate 1000 data sets with n = 400, 800, and 1600. To study the performance of selectors, we define the finite sample's loss efficiency,

where $\hat{\lambda}$ is chosen by SCAD-GCV, SCAD-AIC, or SCAD-BIC. Because βfull depends on the sample size and γ, a sensible approach to comparing the loss efficiency of a selection criterion across different sample sizes is to compare the loss efficiency under the same value of βfull.
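As a rough illustration of how such a loss efficiency could be computed on a grid of tuning parameters, the sketch below assumes that the efficiency is the ratio of the smallest loss attainable over the grid to the loss at the selected tuning parameter. That ratio form is an assumption made for this example, and the function name and the loss values are hypothetical.

```python
def loss_efficiency(losses, selected):
    """Ratio of the smallest loss on the grid to the loss at the selected index.

    Assumed form: LE = min_lambda L(lambda) / L(lambda_hat), so LE lies in
    (0, 1] and equals 1 when the selector picks the loss-minimizing lambda.
    """
    chosen = losses[selected]
    if chosen <= 0:
        raise ValueError("loss at the selected tuning parameter must be positive")
    return min(losses) / chosen

# Hypothetical losses on a grid of three tuning parameters.
grid_losses = [4.0, 2.0, 3.0]
print(loss_efficiency(grid_losses, selected=1))  # selector hit the minimizer
print(loss_efficiency(grid_losses, selected=0))  # selector chose a worse lambda
```

Averaging this quantity over simulated data sets would give one point on a curve such as those in Figure 2.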

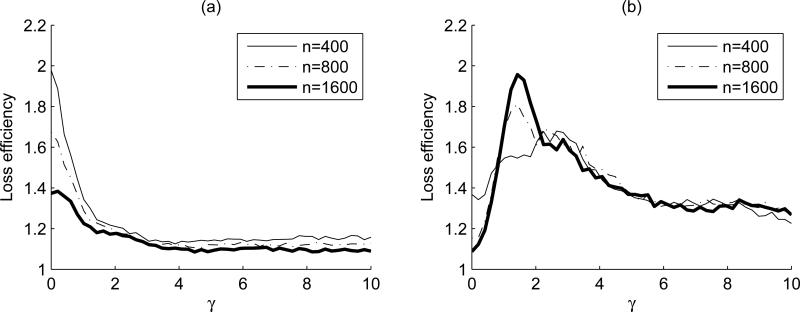

Because the performance of SCAD-GCV is very similar to that of SCAD-AIC, it is not reported here. Figures 2(a) and 2(b) depict the LEs of SCAD-AIC and SCAD-BIC, respectively, across various γ. Figure 2(a) clearly indicates that the loss efficiency of SCAD-AIC converges to 1 regardless of the value of γ, which corroborates the theoretical finding in Theorem 2. However, the loss efficiency of SCAD-BIC in Figure 2(b) does not show this tendency. Specifically, when γ is close to 0 (i.e., the model is nearly sparse), SCAD-BIC results in a smaller loss due to its larger penalty. As γ increases so that the full model contains medium-sized coefficients, SCAD-BIC is likely to choose a model that is too sparse, which increases the loss. When γ becomes large enough that the coefficient βexc is dominated by the remaining coefficients in βfull, the consistency of SCAD-BIC again tends to yield a smaller loss. In sum, Figure 2 shows that SCAD-AIC is efficient, whereas SCAD-BIC is not. Finally, we examine the efficiencies of AIC and BIC and find that their performances are similar to those of SCAD-AIC and SCAD-BIC, respectively; hence they are omitted.

Figure 2.

(a) The loss efficiency of SCAD-AIC; (b) The loss efficiency of SCAD-BIC.

Example 3. (Mammographic mass data) Mammography is the most effective method for screening for the presence of breast cancer. However, mammogram interpretations yield approximately a 70% rate of unnecessary biopsies with benign outcomes. Hence, several computer-aided diagnosis (CAD) systems have been proposed to assist physicians in predicting breast biopsy outcomes from the findings of BI-RADS (Breast Imaging Reporting And Data System). To examine the capability of two novel CAD approaches, Elter, Schulz-Wendtland, and Wittenberg (2007) recently analyzed a data set containing 516 benign and 445 malignant instances. We downloaded this data set from the UCI Machine Learning Repository and excluded 131 cases with missing values and 15 cases with coding errors. As a result, we considered a total of 815 cases.

To study the severity (benign or malignant), Elter et al. (2007) considered the following three covariates: x1-birads, BI-RADS assessment assigned in a double-review process by physicians (definitely benign=1 to highly suggestive of malignancy=5); x2-age, patient's age in years; x3-mass density, high=1, iso=2, low=3, fat-containing=4. In addition, we employ the dummy variables, x4 to x6, to represent the four mass shapes (round=1, oval=2, lobular=3, irregular=4), where “irregular” is used as the baseline. Analogously, we apply the dummy variables, x7 to x10, to represent the five mass margins (circumscribed=1, microlobulated=2, obscured=3, ill-defined=4, spiculated=5), where “spiculated” is used as the baseline.

To predict the value of the binary response, y = 0 (benign) or y = 1 (malignant), on the basis of the 10 explanatory variables, we fit the data with the following logistic regression model:

where x = (x1, . . . , x10)T, β = (β1, . . . , β10)T, and p(xTβ) is the probability of the case being classified as malignant. As a result, the tuning parameters selected by SCAD-AIC and SCAD-BIC are 0.0332 and 0.1512, respectively, and SCAD-GCV yields the same model as SCAD-AIC. Because the true model is unknown, we follow an anonymous referee's suggestion to include the models selected by the delete-one cross validation (delete-1 CV) and the 5-fold cross validation (5-fold CV). The detailed procedures for fitting those models can be obtained from the first author.

Because the model selected by the delete-1 CV is the same as SCAD-AIC, Table 2 only presents the non-penalized maximum likelihood estimates from the full model as well as the SCAD-AIC, SCAD-BIC, and 5-fold CV estimates, together with their standard errors. Table 2 indicates that the non-penalized maximum likelihood approach fits five spurious variables (x3, x6, and x8 to x10), while SCAD-AIC and 5-fold CV include two variables (x6 and x9) and one variable (x9), respectively, with insignificant effects at a level of 0.05. In contrast, all variables (x1, x2, x4, x5, and x7) selected by SCAD-BIC are significant. Because the sample size n = 815 is large, these findings are consistent with Theorem 1 and the simulation results. In addition, the p-value of the deviance test for assessing the SCAD-BIC model against the full model is 0.41, and as a result, there is no evidence of lack of fit in the SCAD-BIC model.
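The significance statements above can be checked approximately from the estimates and standard errors reported in Table 2. The sketch below assumes that significance is judged by a two-sided Wald z-test (the text does not state which test was used), and the helper name `wald_p_value` is hypothetical.

```python
import math

def wald_p_value(estimate, std_error):
    """Two-sided p-value of the Wald z statistic under a standard normal."""
    z = estimate / std_error
    # 2 * (1 - Phi(|z|)) written with the complementary error function.
    return math.erfc(abs(z) / math.sqrt(2.0))

# (estimate, standard error) pairs copied from Table 2.
scad_aic = {"sLobular (x6)": (-0.54, 0.34), "mObscured (x9)": (-0.42, 0.30)}
scad_bic = {"sRound (x4)": (-0.80, 0.34), "mCircum (x7)": (-1.01, 0.30)}

for name, (est, se) in {**scad_aic, **scad_bic}.items():
    p = wald_p_value(est, se)
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{name}: p = {p:.3f} ({verdict} at the 0.05 level)")
```

Under this assumption, x6 and x9 in the SCAD-AIC fit have p-values above 0.05, whereas the SCAD-BIC coefficients shown have p-values below 0.05, in line with the discussion of Table 2.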

Table 2.

Estimates for mammographic mass data with standard deviations in parentheses

| | MLE | SCAD-AIC | SCAD-BIC | 5-Fold CV |
|---|---|---|---|---|
| β0 | −11.04 (1.48) | −11.17 (1.13) | −11.16 (1.07) | −11.49 (1.14) |
| BIRADS (x1) | 2.18 (0.23) | 2.19 (0.23) | 2.25 (0.23) | 2.23 (0.23) |
| age (x2) | 0.05 (0.01) | 0.04 (0.01) | 0.04 (0.01) | 0.05 (0.01) |
| density (x3) | −0.04 (0.29) | 0 (-) | 0 (-) | 0 (-) |
| sRound (x4) | −0.98 (0.37) | −0.99 (0.37) | −0.80 (0.34) | −0.81 (0.35) |
| sOval (x5) | −1.21 (0.32) | −1.22 (0.32) | −1.07 (0.30) | −1.07 (0.30) |
| sLobular (x6) | −0.53 (0.35) | −0.54 (0.34) | 0 (-) | 0 (-) |
| mCircum (x7) | −1.05 (0.42) | −0.98 (0.32) | −1.01 (0.30) | −1.07 (0.31) |
| mMicro (x8) | −0.03 (0.65) | 0 (-) | 0 (-) | 0 (-) |
| mObscured (x9) | −0.48 (0.39) | −0.42 (0.30) | 0 (-) | −0.47 (0.30) |
| mIlldef (x10) | −0.09 (0.33) | 0 (-) | 0 (-) | 0 (-) |

Based on the model selected by SCAD-BIC, we conclude that a higher BI-RADS assessment or a greater age results in a higher chance of malignancy. In addition, the oval or round mass shape yields lower odds of malignancy than does the irregular mass shape, while the odds of malignancy associated with the lobular mass shape are not significantly different from those of the irregular mass shape. Moreover, the odds of malignancy indicated by the microlobulated, obscured, and ill-defined mass margins are not significantly different from those of the spiculated mass margin. However, the circumscribed mass margin leads to lower odds of malignancy than the four other types of mass margins.
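Because the fitted model is a logistic regression, each coefficient can be read as a log odds ratio, so exponentiating the SCAD-BIC estimates in Table 2 makes the statements above quantitative. The following is a small sketch of that conversion; the dictionary and variable names are chosen here for illustration only.

```python
import math

# SCAD-BIC coefficient estimates copied from Table 2 (intercept omitted).
scad_bic_coefficients = {
    "BIRADS (x1)": 2.25,
    "age (x2)": 0.04,
    "sRound (x4)": -0.80,
    "sOval (x5)": -1.07,
    "mCircum (x7)": -1.01,
}

# exp(coefficient) is the multiplicative change in the odds of malignancy
# for a one-unit increase in the covariate, other covariates held fixed.
odds_ratios = {name: math.exp(b) for name, b in scad_bic_coefficients.items()}

for name, ratio in odds_ratios.items():
    print(f"{name}: odds ratio = {ratio:.2f}")
```

Odds ratios above 1 (BI-RADS assessment, age) correspond to higher odds of malignancy, while ratios below 1 (round and oval shapes, circumscribed margin) correspond to lower odds relative to the respective baseline category.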

6 Discussion

In the context of variable selection, we propose the generalized information criterion to choose regularization parameters for nonconcave penalized likelihood functions. Furthermore, we study the theoretical properties of GIC. If we believe that the true model is contained in a set of candidate models with the GLIM structure, then the BIC-type selector identifies the true model with probability tending to 1, while the GIC selectors with bounded κn tend to overfit with positive probability. However, if the true model is approximated by a family of candidate models, the AIC-type selector is asymptotically loss efficient, whereas the BIC-type selector is not, in general. Simulation studies support the finite sample performance of the selection criteria.
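To make the contrast between the AIC-type and BIC-type selectors concrete, the sketch below selects a tuning parameter by minimizing a criterion of the assumed form (deviance + κn · df)/n, with κn = 2 for the AIC-type selector and κn = log n for the BIC-type selector. The exact normalization is an assumption of this example (it does not affect the minimizer), the function names are hypothetical, and the path values are invented purely for illustration.

```python
import math

def gic(deviance, df, n, kappa):
    """Assumed GIC form: goodness of fit plus kappa times model complexity."""
    return (deviance + kappa * df) / n

def select_lambda(path, n, kappa):
    """Return the lambda on the path with the smallest GIC value.

    path: list of (lambda, deviance, degrees_of_freedom) triples.
    """
    return min(path, key=lambda t: gic(t[1], t[2], n, kappa))[0]

# Hypothetical solution path: larger lambda gives a sparser but worse fit.
n = 815
path = [(0.03, 700.0, 8), (0.15, 710.0, 6), (0.40, 760.0, 3)]

print("AIC-type choice:", select_lambda(path, n, kappa=2.0))
print("BIC-type choice:", select_lambda(path, n, kappa=math.log(n)))
```

With these made-up numbers the AIC-type selector keeps the larger model, while the heavier log n penalty moves the BIC-type selector to a sparser one, which mirrors the qualitative behavior described above.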

Although we obtain the theoretical properties of GIC for GLIM, the application of GIC is not limited to this setting. For example, we could employ GIC to select the regularization parameter in Cox proportional hazards and quasi-likelihood models. We believe that these efforts would further enhance the usefulness of GIC in real data analysis.

Acknowledgments

We are grateful to the editor, the associate editor and three referees for their helpful and constructive comments that substantially improved an earlier draft. Zhang's research is supported by National Institute on Drug Abuse grants R21 DA024260 and P50 DA10075 as a research assistant. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIDA or the NIH. Li's research is supported by National Science Foundation grants DMS 0348869, 0722351 and 0926187.

Appendix A. Proof of Theorem 1

Before proving the theorem, we show the following two lemmas. Then, Theorems 1(A) and 1(B) follow from Lemmas 1 and 2, respectively. For the sake of convenience, we denote in (7) and in (8) by D(λ) and D*(α), respectively.

Lemma 1. Suppose the density function of the generalized linear model satisfies Fan and Li's (2001) three regularity conditions (A)—(C). Assume that there exists a positive constant M such that κn < M. Then under condition (C4), we have

| (16) |

and

| (17) |

as n → ∞.

Proof. For a given λ, the non-penalized maximum likelihood estimator, , maximizes under model αλ. This implies D(λ) ≥ D*(αλ) for any given λ, which leads to

| (18) |

Then, we obtain that

holds true for any λ ∈ Ω– = {λ : αλ ⊅ α0}. This together with condition (C4) and obtained from the fact of κn < M, we have

| (19) |

as n → ∞. The last step uses the fact that the number of underfitting models is finite, hence minα⊅α0 cα is strictly greater than (i.e., cα0) under condition (C4). The above equation yields (16) immediately.

Next we show that the model selected by the GIC selector with bounded κn is overfitted with probability bounded away from zero. For any λ ∈ Ω0, αλ = α0. Then subtracting from both sides of (18) and subsequently taking infλ∈Ω0 over GICκn(λ), we have

| (20) |

Note that the right-hand side of the above equation does not involve λ.

, where and are the non-penalized maximum likelihood estimators computed via the true and full models, respectively. Under regularity conditions (A)—(C) in Fan and Li (2001), it can be shown that and are consistent and asymptotically normal. Hence, the likelihood ratio test statistic . This result, together with κn < M and (20), yields

This implies (17), and we complete the proof of Lemma 1.
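A small Monte Carlo sketch can illustrate why a bounded κn leaves a positive overfitting probability. It assumes the criterion has the form (deviance + κn · df)/n and uses the asymptotic chi-square distribution of the likelihood ratio statistic between the true model and the full model, so that the full model attains a smaller criterion value than the true model whenever a chi-square variable with d − dα0 degrees of freedom exceeds κn(d − dα0). The function name is hypothetical, and the choice of 9 extra coefficients echoes the nine true zero coefficients of the first simulation example.

```python
import math
import random

def overfit_probability(extra, kappa, draws=20000, seed=1):
    """Estimate P(chi-square_extra > kappa * extra) by simulation.

    extra: number of truly zero coefficients in the full model.
    kappa: penalty multiplier (2 for AIC-type, log n for BIC-type).
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(draws):
        # A chi-square draw is a sum of squared standard normal draws.
        lr = sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(extra))
        if lr > kappa * extra:
            hits += 1
    return hits / draws

p_aic = overfit_probability(extra=9, kappa=2.0)
p_bic = overfit_probability(extra=9, kappa=math.log(400))
print(f"kappa = 2:        {p_aic:.4f}")
print(f"kappa = log(400): {p_bic:.4f}")
```

This only compares the true model with the full model, so it is a lower bound on the overall chance of overfitting; the point is that the probability stays away from zero for fixed κn but vanishes as κn grows.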

To prove Theorem 1(B), we present the following lemma.

Lemma 2. Suppose the density function of the generalized linear model satisfies Fan and Li's (2001) three regularity conditions (A)—(C). Assume conditions (C1)—(C4) hold, and let . If κn satisfies κn → ∞ and λn → 0 as n → ∞, then

| (21) |

and

| (22) |

Proof. Without loss of generality, we assume that the first dα0 coefficients of βα0 in the true model are nonzero and the rest are zeros. Note that the density function of the generalized linear model satisfies Fan and Li's (2001) three regularity conditions (A)—(C). These conditions, together with condition (C3) and the assumptions of κn stated in Lemma 2, allow us to apply Fan and Li's Theorems 1 and 2 to show that, with probability tending to 1, the last d – dα0 components of are zeros and the first dα0 components of satisfy the normal equations

| (23) |

where , and is the j-th component of .

Using the oracle property, we have . Then, under conditions (C1) and (C2), there exists a constant m so that for min1≤j≤dα0 |βj| > mλn as n gets large. Accordingly, P(bλnj = 0) → 1 for j = 1, ..., dα0. This together with (23) implies that, with probability tending to 1, the first dα0 components of solve the normal equations

and the remaining d – dα0 components are zeros. This is exactly the same as the normal equation in solving the non-penalized maximum likelihood estimator . As a result, with probability tending to 1. It follows that

Moreover, using the result from Proposition 1, we have P{dfλn = dα0} → 1. Consequently,

The proof of (21) is complete.

We next show that, for any λ which cannot identify the true model, the resulting GICκn(λ) is consistently larger than GICκn(λn). To this end, we consider two cases, underfitting and overfitting.

Case 1: Underfitted model (i.e., λ ∈ Ω– so that αλ ⊅ α0). Applying (18) and (21), we obtain that, with probability tending to 1,

Subsequently, take infλ∈Ω– of both sides of the above equation, which yields

| (24) |

This, in conjunction with condition (C4) and the number of underfitted models being finite, leads to

as n → ∞. As a result,

Case 2: Overfitted model (i.e., λ ∈ Ω+ so that αλ ⊋ α0). According to Lemma 1, with probability tending to 1, D(λn) = D*(α0). In addition, Proposition 1 indicates that dfλ – dfλn > τ + OP (1) for some τ > 0 and λ ∈ Ω+. Moreover, as noticed in the proof of Lemma 1, D(λ) ≥ D*(αλ). Therefore, with probability tending to 1,

Because D*(α0) – D*(α) follows a χ² distribution with dα – dα0 degrees of freedom asymptotically for any α ⊋ α0, it is of order OP (1). Accordingly,

| (25) |

Using the fact that κn goes to infinity as n → ∞, the right-hand side of equation (25) goes to positive infinity, which guarantees that the left-hand side of equation (25) is positive as n → ∞. Hence, we finally have

The results of Cases 1 and 2 complete the proof of (22).

Proofs of Theorem 1. Lemma 1 implies that GICκn(λ), which produces the underfitted model, is consistently larger than . Thus, the optimal model selected by minimizing the GICκn(λ) must contain all of the significant variables with probability tending to one. In addition, Lemma 1 indicates that there is a nonzero probability that the smallest value of GICκn(λ) for λ ∈ Ω0 is larger than that of the full model. As a result, there is a positive probability that any λ associated with the true model cannot be selected by GICκn(λ) as the regularization parameter. Theorem 1(A) follows.

Lemma 2 indicates that the model identified by λn converges to the true model as the sample size gets large. In addition, it shows that those λ's that fail to identify the true model cannot be selected by GICκn(λ) asymptotically. Theorem 1(B) follows.

Appendix B. Proofs of Theorem 2 and Corollary 1

Before proving the theorem, we establish the following two lemmas. Lemma 3 evaluates the difference between a penalized mean estimator and its corresponding least squares mean estimator , while Lemma 4 demonstrates that the losses of and are asymptotically equivalent.

Lemma 3. Under condition (C5),

where C is the constant number in condition (C5) and b is defined in condition (C8).

Proof. Without loss of generality, we assume that the first dαλ components of and are nonzero, and denote them by and , respectively. Thus, and . From the proofs of Theorems 1 and 2 in Fan and Li (2001), with probability tending to 1, we have that is the solution of the following equation,

where b(1) is the subvector of b that corresponds to . Accordingly,

where . In addition, the eigenvalues of Vαλ are bounded under condition (C5). Hence,

for some positive constant number C. This completes the proof.

Lemma 4. If conditions (C5)—(C8) hold, then

in probability.

Proof. After algebraic simplification, we have

Under conditions (C6) and (C7), Li (1987) showed that

This, together with condition (C8) and Lemma 3, implies

Applying the Cauchy-Schwarz inequality, we next obtain

As a result, , and Lemma 4 follows immediately.

Proof of Theorem 2. To show the asymptotic efficiency of the AIC-type selector, it suffices to demonstrate that minimizing with κn → 2 is the same as minimizing asymptotically. To this end, we need to prove that, in probability,

| (26) |

Let the projection matrix corresponding to the model α be . Then,

| (27) |

Let and J5 = (κn – 2)σ2dαλ/n. Using Lemma 3, Lemma 4 and similar arguments used in Li (1987), we obtain that, in probability,

Because κn – 2 and the fact that is bounded by 1, we can further show that using Lemma 4 and condition (C7). Accordingly, (26) holds, which implies that the difference between and is negligible in comparison to . This completes the proof.

Proof of Corollary 1. When σ2 is unknown, the in (27) becomes

Using Lemma 4 and condition (C7), we have

in probability, which completes the proof.

Appendix C. Sufficient conditions for (C8)

We first present three sufficient conditions for (C8).

(S1) There exists a constant M1 such that λmax satisfies for all n.

(S2) There exists a constant M2 such that pλ(θ) satisfies for any θ.

(S3) The average error of the full model , i.e. , satisfies , as n → ∞.

We next provide motivations for these conditions. Assume that the true model is for i = 1, . . . , n, and then write . Under the condition d = O(nν) with ν < ¼, Fan and Peng (2004) proved that there exists a local maximum of the penalized least squares function, so that . Thus, conditions (S1) and (S2) ensure that the penalized estimator is . As for condition (S3), consider a case where the full model misses an important variable xexc that is orthogonal to the rest of the variables. Accordingly, , and hence is of the same order as . Consequently, (S3) holds if the coefficient βexc satisfies , which is valid, for example, when βexc is fixed.

In contrast to imposing condition (S3) on the error of the full model, an alternative condition (S3*) motivated by Fan and Peng (2004) is given below.

(S3*) The proportion of penalized coefficients satisfies , as n → ∞, where m is defined in (C2).

Condition (S3*) means that the proportion of small or moderate size coefficients is vanishing. This condition is satisfied under the identifiability assumption of Fan and Peng (2004) (i.e., minj:β0≠0 |β0j|/λ → ∞, as n → ∞). As a byproduct, a similar condition can also be established for the adaptive LASSO, which employs data-driven penalties with for some k > 0 to cope with the bias of LASSO. In sum, (S1) to (S3) (or S3*) are mild conditions, and two propositions given below demonstrate their sufficiency for condition (C8).

Proposition 2. Under (S1) to (S3), condition (C8) holds.

Proof. It is noteworthy that

| (28) |

On the right-hand side of (28), the first term dominates the second term via condition (S3). From (S1), (S2), and (28), we have that

which goes to zero independently of λ. This completes the proof.

Proposition 3. Assume that the penalty function satisfies condition (C2). Under (S1) to (S3*), condition (C8) holds.

Proof. Under condition (C2), the components in b are zero except for those . Then, employing (S2) and (28), we obtain that

On the right-hand side of the above equation, the first term is constant, the second term is bounded under (S1), and the third term goes to zero uniformly in λ under (S3*). This completes the proof.

Footnotes

Technical Details for Remark 5: Detailed discussions, justifications, and proofs referenced in Remark 5 are available under the Paper information link at the JASA website http://pubs.amstat.org/loi/jasa (pdf).

References

- Akaike H. A New Look at the Statistical Model Identification. IEEE Trans. on Automatic Control. 1974;19:716–723.
- Craven P, Wahba G. Smoothing Noisy Data with Spline Functions. Numerische Mathematik. 1979;31:377–403.
- Efron B, Hastie T, Johnstone IM, Tibshirani R. Least Angle Regression. The Annals of Statistics. 2004;32:407–499.
- Elter M, Schulz-Wendtland R, Wittenberg T. The Prediction of Breast Cancer Biopsy Outcomes Using Two CAD Approaches that Both Emphasize an Intelligible Decision Process. Medical Physics. 2007;34:4164–4172. doi: 10.1118/1.2786864.
- Fan J, Li R. Variable Selection via Nonconcave Penalized Likelihood and Its Oracle Properties. Journal of the American Statistical Association. 2001;96:1348–1360.
- Fan J, Li R. Variable Selection for Cox's Proportional Hazards Model and Frailty Model. The Annals of Statistics. 2002;30:74–99.
- Fan J, Li R. New Estimation and Model Selection Procedures for Semiparametric Modeling in Longitudinal Data Analysis. Journal of the American Statistical Association. 2004;99:710–723.
- Fan J, Peng H. Nonconcave Penalized Likelihood with a Diverging Number of Parameters. The Annals of Statistics. 2004;32:928–961.
- Frank IE, Friedman JH. A Statistical View of Some Chemometrics Regression Tools. Technometrics. 1993;35:109–148.
- Hunter D, Li R. Variable Selection Using MM Algorithms. The Annals of Statistics. 2005;33:1617–1642. doi: 10.1214/009053605000000200.
- Konishi S, Kitagawa G. Generalised Information Criteria in Model Selection. Biometrika. 1996;83:875–890.
- Li K-C. Asymptotic Optimality for Cp, CL, Cross-Validation and Generalized Cross-Validation: Discrete Index Set. The Annals of Statistics. 1987;15:958–975.
- Li R, Liang H. Variable Selection In Semiparametric Regression Modeling. The Annals of Statistics. 2008;36:261–286. doi: 10.1214/009053607000000604.
- McCullagh P, Nelder JA. Generalized Linear Models. Chapman & Hall/CRC; New York: 1989.
- McQuarrie ADR, Tsai C-L. Regression and Time Series Model Selection. 1st ed. World Scientific Publishing Co, Pte. Ltd.; Singapore: 1998.
- Nishii R. Asymptotic Properties of Criteria for Selection of Variables in Multiple Regression. The Annals of Statistics. 1984;12:758–765.
- Park M-Y, Hastie T. An L1 Regularization-path Algorithm for Generalized Linear Models. Journal of the Royal Statistical Society, Series B. 2007;69:659–677.
- Schwarz G. Estimating the Dimension of a Model. The Annals of Statistics. 1978;6(2):461–464.
- Shao J. An Asymptotic Theory for Linear Model Selection. Statistica Sinica. 1997;7:221–264.
- Shibata R. Asymptotically Efficient Selection of the Order of the Model for Estimating Parameters of a Linear Process. The Annals of Statistics. 1980;8:147–164.
- Shibata R. An Optimal Selection of Regression Variables. Biometrika. 1981;68:45–54.
- Shibata R. Approximation Efficiency of a Selection Procedure for the Number of Regression Variables. Biometrika. 1984;71:43–49.
- Tibshirani R. Regression Shrinkage and Selection via LASSO. Journal of the Royal Statistical Society, Series B. 1996;58:267–288.
- Wang H, Leng C. Unified LASSO Estimation via Least Squares Approximation. Journal of the American Statistical Association. 2007;102:1039–1048.
- Wang H, Li G, Tsai C-L. Regression coefficient and autoregressive order shrinkage and selection via LASSO. Journal of the Royal Statistical Society, Series B. 2007a;69:63–78.
- Wang H, Li R, Tsai C-L. Tuning Parameter Selectors for the Smoothly Clipped Absolute Deviation Method. Biometrika. 2007b;94:553–568. doi: 10.1093/biomet/asm053.
- Yang Y. Can the Strengths of AIC and BIC be shared? A Conflict Between Model Identification and Regression Estimation. Biometrika. 2005;92:937–950.
- Yuan M, Lin Y. On the Non-Negative Garrotte Estimator. Journal of the Royal Statistical Society, Series B. 2007;69:143–161.
- Zhang C-H. Penalized linear unbiased selection. Department of Statistics, Rutgers University; 2007. Technical Report No. 2007-003.
- Zhang HH, Lu W. Adaptive LASSO for Cox's Proportional Hazards Model. Biometrika. 2007;94:691–703.
- Zou H. The Adaptive LASSO and its Oracle Properties. Journal of the American Statistical Association. 2006;101:1418–1429.
- Zou H, Hastie T, Tibshirani R. On the Degrees of Freedom of the LASSO. The Annals of Statistics. 2007;35:2173–2192.
- Zou H, Li R. One-Step Sparse Estimates in Nonconcave Penalized Likelihood Models. The Annals of Statistics. 2008;36:1509–1533. doi: 10.1214/009053607000000802.
