Abstract

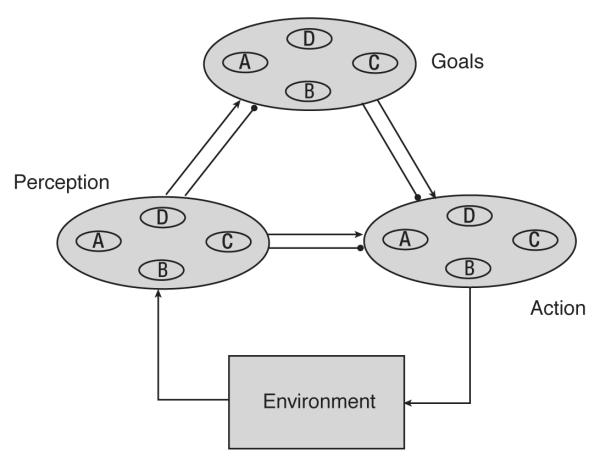

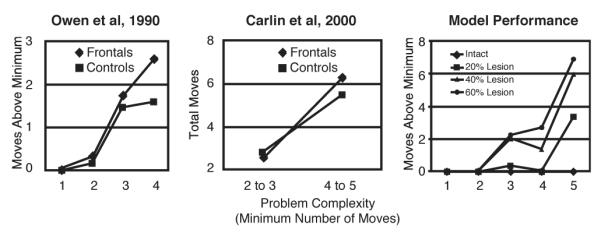

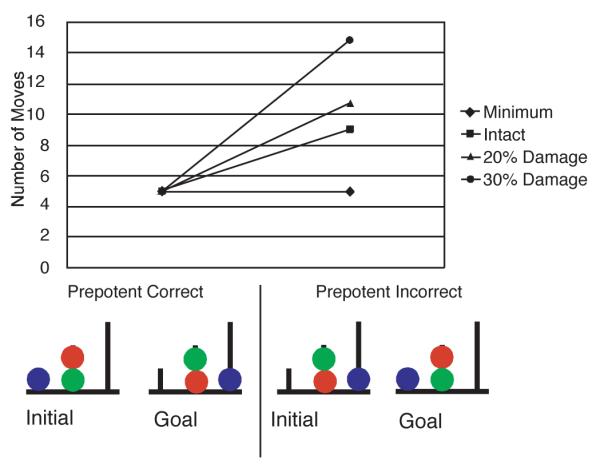

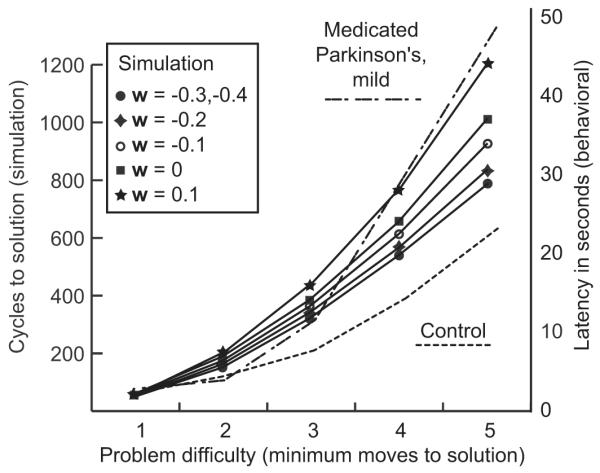

Researchers studying complex cognition have grown increasingly interested in mapping symbolic cognitive architectures onto subsymbolic brain models. Such a mapping seems essential for understanding cognition under all but the most extreme viewpoints (namely, that cognition consists exclusively of digitally implemented rules; or instead, involves no rules whatsoever). Making this mapping reduces to specifying an interface between symbolic and subsymbolic descriptions of brain activity. To that end, we propose parameterization techniques for building cognitive models as programmable, structured, recurrent neural networks. Feedback strength in these models determines whether their components implement classically subsymbolic neural network functions (e.g., pattern recognition), or instead, logical rules and digital memory. These techniques support the implementation of limited production systems. Though inherently sequential and symbolic, these neural production systems can exploit principles of parallel, analog processing from decision-making models in psychology and neuroscience to explain the effects of brain damage on problem solving behavior.

Keywords: problem solving, production system, neural network, diffusion, decision making, symbolic, subsymbolic

1. Introduction

In order to build biologically plausible cognitive models that exhibit the full range of human behavior — from playing chess to balancing on skates — it seems that researchers will inevitably require models that can implement finite state automata (e.g., for representing and executing sequences of chess moves), and at the same time, feedback controllers for dynamical systems (e.g., for correcting a destabilizing wobble). Similarly, it will almost surely require models that are robust to perceptual noise, but that can behave stochastically when desired; and that require no central clock to govern synchronous, digital circuitry, but that can still time intervals and represent symbols.

We present a technique for building cognitive models that may be able to satisfy the disparate and seemingly paradoxical requirements outlined above. This technique draws on the strengths of existing symbolic (or quasi-symbolic) cognitive architectures, such as Soar (Laird et al., 1987) and ACT-R (Anderson and Lebiere, 1998) among others, but it implements every feature in a physical-level design that di ers substantially from the design of standard computer hardware. It also draws on the strengths of existing subsymbolic cognitive models, including models of associative memory and of decision making.

Using this technique amounts to hardwiring neural networks to produce complex models of approximately symbolic processing. What is left out of this hardwiring approach, of course, is one of the primary virtues of neural networks — the simple and powerful learning algorithms that can be applied to them (e.g., Rumelhart et al., 1986; Ackley et al., 1985; Williams and Zipser, 1989; Sutton and Barto, 1998). Our hope, however, is that in showing how a hardwired system may resolve some of the differences between strictly symbolic and strictly subsymbolic cognitive models, we will have presented a well-defined target architecture that future learning algorithms may be designed to acquire through experience.

To best achieve our purpose, and to show that an account of a symbolic / subsymbolic interface can have practical consequences in cognitive modeling, this paper investigates the functionality underlying human problem solving. Problem solving has been argued to exemplify complex cognition (Miller et al., 1960; Newell and Simon, 1972), but it is not typically modeled with neural networks (although see Dehaene and Changeux, 1997). While what we present is by no means a comprehensive theory of human problem solving, we hope that it illustrates the leverage that can be gained from extracting symbolic processing out of a subsymbolic system.

We begin from the same starting point assumed in some of the earliest cognitive modeling efforts: namely, we model problem solving as a process of heuristic search (Newell and Simon, 1963). However, our approach differs from early symbolic modeling efforts in that it does not presuppose any hard and fast distinction between software and hardware. Instead, it directly addresses a lower level of description whose fundamental atoms we propose to be standard, artificial neural network units. We choose this level of description despite the fact that it, like a purely symbolic approach itself, sacrifices a great deal of biophysical detail. We note at the outset, however, that there is growing evidence that this type of neural network may plausibly be reduced even further to models whose greater level of physical detail is more appropriate to single-cell physiology than to whole-brain function (Wang, 2002; Wong and Wang, 2006).

In what follows, we build from this model of the physical processing level up to an architecture capable of problem-space search, presenting possible solutions to problems that arise along the way. Admittedly, this is an architecture with several limitations — including an inability to implement firstorder logic — that all appear to reduce to what is commonly known as “the binding problem”, and which further work must address. However, a variety of promising approaches to this problem already exist that seem compatible with the proposed architecture, including the binding of object features through temporal synchrony of activations (Shastri and Ajjanagadde, 1993) — an approach which supports analogical reasoning in some systems (Hummel and Holyoak, 1997) — and, without relying on synchrony, using layers of conjunctive processing units (O’Reilly and Busby, 2002) to bind features. The general problem of using neural networks to represent first-order logic statements and to carry out deductive inference has also been addressed by a number of approaches, several of which are detailed in Hammer and Hitzler (2007).

The organization of the paper parallels both the design-level hierarchy of modern computer engineering (Hayes, 1993) and the levels-of-analysis hierarchy made famous by David Marr in vision research (Marr, 1982). Section 2 covers the basic ‘physical-level’ (Hayes, 1993) or ‘implementational-level’ (Marr, 1982) building block that we will use for cognitive modeling — a stochastic version of a classic artificial neural network unit. Section 3 covers the decision making networks that form the basic components of the architecture’s ‘logic level’, in engineering terms. Sections 4 and 5 cover the composition of these networks into sequential processing systems equivalent to simple production systems (collections of if-then rules coupled with a working memory). Because of their computational power and psychological plausibility, production systems are used widely in symbolic cognitive modeling (e.g. Anderson and Lebiere, 1998; Just and Carpenter, 1992; Laird et al., 1987; Kieras and Meyer, 1997).

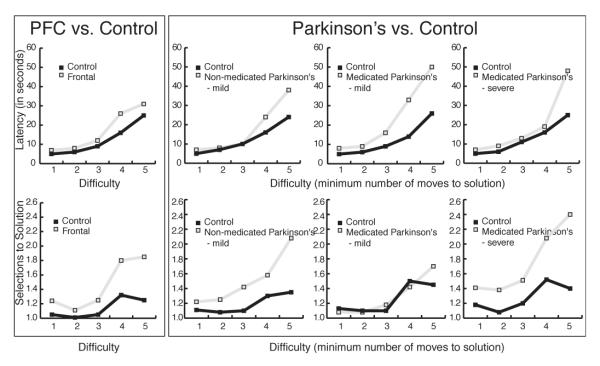

In order to demonstrate the usefulness of a well-specified symbolic/subsymbolic interface for cognitive modeling, section 6 reviews published results from a model that incorporates this interface. We applied it to human performance data from a problem solving task used in psychology to assess cognitive deficits in brain damage and disease (the Tower of London task of Shallice, 1982). These sections correspond to the ‘architecture level’ in engineering and span the implementational and ‘algorithmic’ levels in Marr (1982); they provide most of the essential pieces of a cognitive architecture — that is, a framework of core assumptions that defines a space of possible cognitive models (Newell, 1990).

We conclude with a general discussion in section 7. In the supplementary materials, we give a specification of the previously mentioned cognitive model of human performance in the Tower of London task (the details of which have not been published and may interest modelers seeking to replicate or generalize our modeling results). This example serves as an existence proof that recurrent neural networks may serve as a bridge between low-level, biophysically detailed neuron models and high-level psychological models.

2. Basic building block

In this section, we define a stochastic version of a classical model of neural population activity that has received empirical support from neurophysiology (e.g., Shadlen and Newsome, 1998). This population model will serve as the basic building block of a proposed cognitive architecture. In its deterministic form (cf. Cohen and Grossberg, 1983; Hertz et al., 1991; Hopfield, 1984; Lapique, 1907; Wilson and Cowan, 1972), this simple model feeds a linear combination of a unit’s inputs into a system defined by one of the simplest possible nonlinear differential equations. It is formally equivalent to an electric circuit of resistive inputs feeding into a capacitor, or leaky integrator, whose output is boosted by an operational amplifier (Mead, 1989).

Computing linear combinations or weighted sums of inputs allows a model neural population to perform arbitrary linear transformations of its inputs. This capability has proven useful for modeling fundamental human learning and categorization capacities by a number of authors (e.g., Anderson et al., 1977; Rosenblatt, 1958). It will form the basis of our subsymbolic approach to simple decision making in section 3 and to more complex decision making in section 5, where we equate making a decision to voting for outcomes in an election. Further, it is consistent with the basic phenomena in synaptic transmission between neurons, which lend themselves well to a linear description if plasticity is not too great (Dayan and Abbott, 2001). Importantly, a linear model for integrating multiple inputs also has the advantage of allowing the large body of linear systems theory to aid in the formal analysis of models. Subsequently transforming these linear combinations with a nonlinear tranformation (namely a squashing function) then allows a tremendous increase in functionality (such as the ability to represent any continuous function; see, e.g., Bishop, 2006) without a complete loss of analytical tractability.

In addition to the linear combination of momentary inputs, the model assumes the addition of Gaussian noise to these inputs, followed by leaky integration of these noise-corrupted combinations from moment to moment prior to application of the nonlinear transformation. Leaky integration is formally defined in appendix A, where we also discuss the stochastic numerical integration technique used to simulate the proposed architecture on a computer.1 Similar behavior occurs in a resistor-capacitor (RC) circuit: when the input voltage to such an RC circuit is abruptly changed, the output voltage approaches the new value at an exponential rate determined by the value of the circuit’s time constant, which equals the inverse of the resistance times the capacitance (Oppenheim and Willsky, 1996).

It is from this property that we derive two of the most important reasons to use this stochastic model. One is functional, which is that the RC factor (equivalently, the time constant) determines the model’s ability both to act as a low-pass filter — that is, to filter out high-frequency noise — and to act as a short-term memory. Thermal noise occurs at roughly equal power at all frequencies in electrical circuits (Gardiner, 2004), and is therefore referred to as ‘white’ noise. In contrast, most signals of interest will have an upper frequency bound, leaving a high-frequency band filled entirely with noise. Thus, attenuating high frequencies is probably critical for the survival of organisms that use electrical activity to process information. At least a small amount of such attenuation is, in any case, probably unavoidable in any physically implemented system: the small capacitances in digital, sequential circuits provide a small amount of noise reduction, for example.2 When applied to Gaussian signals corrupted by Gaussian white noise, this approach to filtering is in fact equivalent to the optimal Bayesian signal estimation procedure (Poor, 1994); when it is not optimal, it can frequently still approximate such a procedure, and unlike a true Bayesian approach, it requires no explicit priors and is computationally tractable under all circumstances. Furthermore, noise allows random behavior, which is essential in competitive games (Von Neumann and Morgenstern, 1944).

The other major reason for using this model is empirical, since individual neurons themselves have a capacitive membrane with resistive conducting pores (Hodgkin and Huxley, 1945). Thus leaky integration is known to occur in the brain (albeit in the context of a variety of more complex processes). Simple, leaky integrators can be related to more complex models of neural activity (Gerstner, 2000; Wang, 2002; Wong and Wang, 2006) that can in turn be related to the widely accepted model of Hodgkin and Huxley (1952), but the simplicity of leaky integrator models allows analytical solutions that more complex models lack.

As we discuss in the next section, however, the time constant of individual neurons is much too small to provide the kind of noise filtering and slow memory decay that may be required for typical cognitive tasks (instead, individual neurons appear to be optimized for millisecond-level computation). Nevertheless, populations of neurons acting in concert may be able to achieve time constants that are much larger than that of an individual neuron (Wang, 2001). In addition, Seung et al. (2000) discuss a method that we review here for using recurrent, self-excitatory feedback within such a population to achieve an arbitrarily large time constant, including an ‘infinite’ time constant that causes a self-exciting unit to act as a perfect integrator of its inputs (see Eq. 20). This fact will allow us to design mechanisms that time intervals and provide feedback control with desirable properties (namely, properties that allow cognitive models to make decisions robustly). These mechanisms in turn will enable the construction of models that carry out problem space search without the use of a central, oscillating clock and a traditional, synchronous circuit design.

Obviously, electric circuit models also describe electrical activity in the type of hardware underlying programmable computers. As a result, a single form of mathematics can be used for our ultimate goal of linking psychological models to neurophysiological models, and also for translating between the symbolic computational level and the physical level of transistors, resistors and capacitors in modern computing technology.

Formally, a low-pass filter coupled with a nonlinear activation function forms a system defined by a stochastic differential equation (SDE), Eq. 1, and a squashing function, Eq. 2:

| (1) |

| (2) |

Here, V is taken to represent the average firing rate of a neural population; λ determines the slope of the sigmoidal activation function f, and β represents the offset voltage, or equivalently, the value of the input x such that f(x) = 0.5. By arguments in Appendix B, however, we can use a single, much more manageable equation with approximately the same behavior (cf. Cohen and Grossberg, 1983):

| (3) |

Here I represents a weighted sum of inputs from other units: . The variable c’ represents the weighted sum of noise terms, which averages out to 0 in the limit of a large number of uncorrelated noise terms.

Now we turn to compositions of these building blocks for carrying out an essential operation in both computer technology and human and animal behavior: namely, decision making.

3. Composition of decision making circuits

With basic computing elements in hand, we now describe an implementation of symbols and logical rules. Symbols arise in our analog system through a quantization (Gray and Neuhoff, 1998) or categorization process. The particular categorization process we use is equivalent to a well-supported model of decision making in psychology and neuroscience known as the diffusion (or drift-diffusion) model (cf. Ratcliff, 1978), which itself is inspired by the physics of Brownian motion (Gardiner, 2004). A ‘decision’ is the selection of a unique outcome from among a finite or countably infinite set of discrete possibilities, although the inputs to such a process are often continuous.

This quantization approach is also at the heart of basic decision-making operations in digital electronics (and in symbolic cognitive modeling approaches like production systems). Decisions are the fundamental logic-level operations that allow a purely symbolic (i.e., binary) description of the physical state of a circuit and a description of its dynamics in terms of propositional logic, even though the physical laws governing its behavior are defined by differential equations and continuously varying quantities. Applying logical operations to symbolic descriptions then allows engineers to design at the hierarchically higher architecture level (and software engineers to program at a still higher level) using much simpler Boolean algebra. We hypothesize that symbolic search processes arise in cognition for similar reasons of increased efficiency.

3.1. A standard voltage binarization scheme

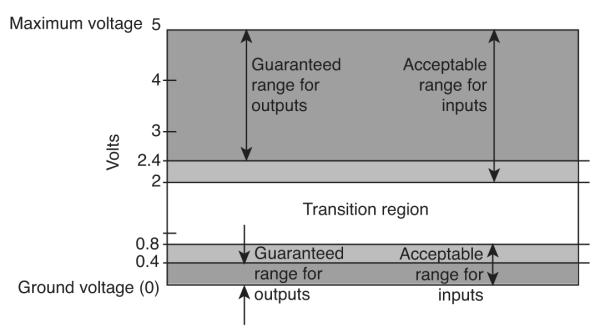

Although we will argue that analog computation should not be ignored in cognitive modeling, the way in which standard computers implement digital behavior in inherently analog circuitry will nevertheless provide a paradigm for our own analog-to-digital (AD) conversions when they occur. Given that continuous change in the output voltage is produced by continuous change in the input voltage, all-or-none switching behavior in networks of transistors, resistors and capacitors is determined simply by choosing a convention for voltage levels that correspond to ‘all’ (1) and to ‘none’ (0). This convention assigns a band of acceptable voltage values for representing a 0 to small voltages, and a wider band of acceptable voltage values for representing a 1 centered at a higher voltage (Hayes, 1993). The conventions for transistor-transistor logic (TTL) circuits, which are used for a wide range of digital electronic circuits, are shown in Fig. 1.

Figure 1.

Voltage bands corresponding to 1 (voltages greater than 2), and 0 (voltages less than 0.8). These ranges define the limits for acceptable inputs to a logic gate. Output voltage criteria are stricter. Greater reliability is achieved when logic component manufacturers adhere to this scheme, because one gate in a chain can effectively clean up extreme noise in its inputs before propagating its output.

In a digital system, symbolic representation is achieved by the use of these bands. When the output voltage of a transistor-based logic gate falls within one band, it will be virtually guaranteed to produce an output in downstream components that also falls within one of these bands. The width of these bands is set so that noise cannot erroneously flip a bit, except in circumstances of very small probability (and error-checking schemes are built in to digital circuits to further reduce this probability). Noise is an ever-present element of real, electronic system operation due, among other reasons, to the heat generated by electric current flowing through resistive material. Dealing with this noise when we abandon a digital interpretation of voltages is of major importance.

In a noisy environment, the task of detecting whether a signal is present can be non-trivial. The same is true inside an electronic system that does not adhere at all times to a TTL voltage scheme (i.e., one that combines analog and digital circuitry). When many signals are possible, and evidence for each conflicts with evidence for the others, the task of deciding on a signal’s identity is all the more difficult.

Fortunately, the study of signal detection and decision making that has taken place since the 1940s — in engineering, statistics and psychology — has led to a clear understanding of optimal performance in these tasks. It has also produced a rigorous analysis of various algorithms for carrying them out. A great deal of behavioral research in psychology has furthermore been devoted to examining these algorithms as models of human and animal decision making. As we will show, all of this work provides a strong incentive for choosing some variety of a random walk as our algorithm for signal detection and decision making. More fortunately still, recent work in psychology and neuroscience provides us with a simple mapping from these algorithms onto neural networks, our computational medium of choice (Bogacz et al., 2006).

In this section we draw on this work to develop an attractor network mechanism for resolving conflict between the possible outputs of a decision making element: this mechanism uses lateral inhibition to implement a process of competition between responses. Lateral inhibition is an old idea in neuroscience (Hartline and Ratliff, 1957) and neural networks (McClelland and Rumelhart, 1981; Grossberg, 1980b), and underlies many associative memory models based on attractor networks in psychology. We draw on this work to formalize our approach to the basic decision making operations of our system when ambiguous inputs attempt to produce more than one output from an element.

It is at this point that analog numerical representation begins to impact the method by which neural networks emulate finite state automata and production systems: unlike standard computer hardware components, individual units in a decision making element will now represent real numerical quantities of evidence using an analog code. Specifically, this code relates activation levels monotonically to the likelihood ratio of the hypotheses (or preferences) under consideration.

We now examine how theories of signal detection and decision making, particularly random walk models, contribute to our story. We will then be ready to address the implementation of productions and the resolution of conflict.

3.2. Theories of signal detection and decision making in statistics, psychology and biology

A great deal of psychological research has focused on the processes leading up to human responses in simple decision making tasks. Signal detection — the subject of psychophysical investigation since Weber in the early 1800s — involves a single response to stimuli of a single category. Signal discrimination or choice reaction — also studied since the 1800s using reaction time techniques developed by Donders — involves multiple stimulus classes and responses (Green and Swets, 1966). In our analysis, decision making will be taken to include these simple processes, as well as other, more complex processes leading to discrete responses (for example, choosing a car to purchase; cf. Roe et al., 2001). Our purpose in this section, though, is to situate the building blocks of a neural cognitive architecture in a framework that has recently connected neurobiological research on decision making to behavioral reaction time research (Smith and Ratcliff, 2004). For that reason, we will focus on the favored task in this domain: discrimination tasks involving two stimulus categories, each associated with its own response.

In this domain, models that employ a technique known as sequential sampling have been used to explain some widely observed features of response time (RT) and accuracy data (Luce, 1986) — in particular, the specific shape of the long-tailed RT distributions that typically occur in human reaction time experiments. In sequential sampling models, the stimulus is assumed to consist of a stream of samples from one of two probability distributions (Fig. 2A illustrates an example of two Gaussian distributions). To determine which distribution is actually generating the stimulus, the samples are accumulated over time. Evidence in favor of one or the other hypothesis thus builds up until a response criterion — or decision threshold — has been reached. Sequential sampling models explain speed-accuracy tradeoffs in decision making performance in terms of shifts in the response threshold toward or away from the starting point of the decision variable trajectory: closer thresholds produce shorter RTs and higher error rates on average (Grice, 1972; Laming, 1968; Ratcliff, 1978; Reddi and Carpenter, 2000).

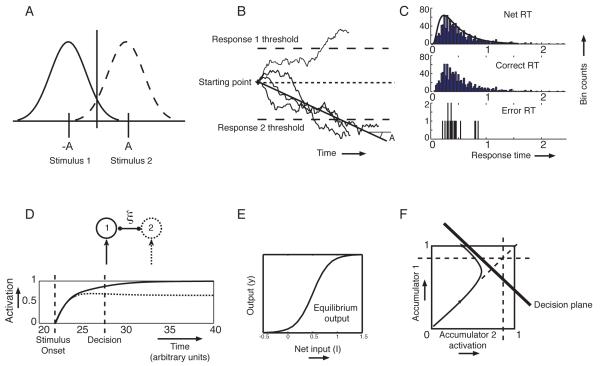

Figure 2.

A: Two possible stimulus distributions; B: Four sample paths of a drift-diffusion process; C: Long-tailed analytical RT density (solid curve) and simulated RT histogram (top), correct RT histogram (middle), error RT histogram (bottom); D: Time courses of noise-free, mutually inhibitory evidence accumulation units with sigmoid activation functions and mutual inhibition of strength ξ; E: The sigmoid activation function; F: A smoothed sample path of mutually inhibitory accumulator activations in the (y1, y2)-phase space showing rapid attraction to a line (the ‘decision plane’) followed by drift and diffusion in its neighborhood.

In accumulator versions of sequential sampling models, evidence accumulates independently in a set of accumulators, one of which is assigned to each hypothesis. In random walk versions, in contrast, each sample that increases the evidence for one hypothesis (i.e., the likelihood of that hypothesis given the data) correspondingly reduces the evidence in favor of the other. This is a form of competition that effectively reduces two decision variables to one: this variable equals the difference in accumulated evidence for each hypothesis. (Fig. 2B shows the trajectory of this variable plotted against time for four different decisions superimposed on each other. Fig. 2C shows the resulting response time distributions over many decisions.)

The ratio of the likelihoods of the two hypotheses is the quantity that is implicitly used to make the decision in most random walk models: when the likelihood ratio approaches 0, the hypothesis corresponding to the denominator is almost certainly true; when the ratio approaches infinity, the hypothesis corresponding to the numerator is almost certainly true. Assuming independence of individual samples, the current likelihoods can be updated to incorporate a new sample quite easily: the likelihood of the hypothesis given the single sample is multiplied against the total likelihood of the hypothesis given all previous samples.

When the logarithm of the likelihood is taken, this multiplication becomes an addition. Similarly, dividing one likelihood by the other becomes subtraction when the logarithm of the ratio is taken. Steps in the random walk thus equal increments to the logarithm of the likelihood ratio for one hypothesis over the other. This property makes such a model equivalent to the sequential probability ratio test, or SPRT (Stone, 1960). This fact is encouraging for the use of random walk models, since the SPRT is optimal in a statistically stationary environment, in the sense that no other test can achieve higher expected accuracy in the same expected time; conversely, no other test can reach a decision faster for a given level of accuracy (Wald and Wolfowitz, 1948).

The drift-diffusion model (DDM) (Ratcliff, 1978) is a sequential sampling model in which stimuli are sampled continuously rather than at discrete intervals, like the continuous-time low-pass filter mechanism of Eq. 3 (we will soon review a proof that the DDM can in fact be approximated by a suitably organized neural network). With human subjects, the DDM has accounted for response time distributions and choice probabilities in a wide range of two-alternative tasks (Ratcliff and Rouder, 1998; Smith and Ratcliff, 2004).

During decision making by the DDM, the difference between the means of the two possible stimulus distributions (see Fig. 2A), imposes a constant drift of net evidence toward one threshold, and the variance imposes a Brownian motion that may lead tofferrors. The DDM is defined by the following stochastic differential equation (SDE):

| (4) |

We examined similar equations when discussing analog computation in section 2. Here, the equation is arguably simpler. A is the signal strength; when it is nonzero, it produces a tendency for trajectories x(t) to move, or ‘drift’, in the direction of the signal. Brownian motion produced by integrating a white noise process W, causes diffusion of a substance within a liquid — hence the term ‘diffusion’ in the name of the model. The factor c weights the intensity of this di usive component of x’s motion.

3.2.1. Sequential sampling by leaky integrator networks

In monkeys performing oculomotor tasks, the continuously evolving firing rates of neurons in the lateral intraparietal sulcus (area LIP) have been related to competing evidence accumulators that approximately implement a drift-diffusion process (Gold and Shadlen, 2001; Roitman and Shadlen, 2002; Shadlen and Newsome, 2001). Similar findings have been reported for frontal structures responsible for controlling eye movements (Hanes and Schall, 1996). We now examine how a network of leaky integrator units can implement the DDM in this way, thereby achieving nearly optimal decision making capabilities in addition to nearly optimal signal estimation capabilities in a way that is consistent with evidence from neuroscience.

Evidence accumulation can be approximated by a simple neural network with two leaky integrators, each of which responds preferentially to one of the stimuli. Each integrator is also subject to inhibition from the other integrator (see Fig. 2D), as proposed by Usher and McClelland (2001) (cf. Bogacz et al., 2006; Gold and Shadlen, 2002; Grossberg, 1982). The evolving activation of each unit (indexed by i) is determined by an SDE, the deterministic part of which is given by Eq. 5:

| (5) |

Eq. 5 is a version of the basic leaky integrator unit (Eq. 3) that is linearized for easier analysis. In this equation, Ii is the input to unit i. It is usually assumed to be a step function of time (corresponding to stimulus onset). The parameter ξ represents the inhibitory strength of symmetric connections between the two decision units; −ξyj represents inhibition from the other unit(s).

When noise is included, the pair of linearized units is governed by Eqs. 6-7:

| (6) |

| (7) |

Assuming linearity also allows us to make the relationship between the DDM and its neural network implementation explicit. By adding the two, noise-free, linearized equations (Eqs. 6-7), we get a quantity, yc = y1(t) + y2(t), that approaches an attracting line — defined in the (y1, y2) plane by y1 + y2 = (I1 + I2)/(1 + ξ) — exponentially at rate 1 + ξ. Subtracting the second equation from the first yields an Ornstein-Uhlenbeck process for the net accumulated evidence, x = y1 − y2:

| (8) |

We will refer to the attracting line as the ‘decision line’ (following Bogacz et al., 2006). The difference quantity, y1 − y2, represents movement along this plane in one of two possible directions. By using strongly self-exciting units to implement thresholds on the activation values, y1 and y2, decisions can be read out of this system. These thresholds define lines in the phase space of unit activations that intersect the decision plane (the dashed lines in Fig. 2F). These intersections are equivalent to decision thresholds applied to a process of drift and diffusion along the decision line.

If leakage and inhibition are balanced (ξ = 1), the drift term is a constant, A, and the system is equivalent to the DDM (Eq. 4) with A = I1 − I2 representing the difference in inputs (Brown et al., 2005; Holmes et al., 2005).

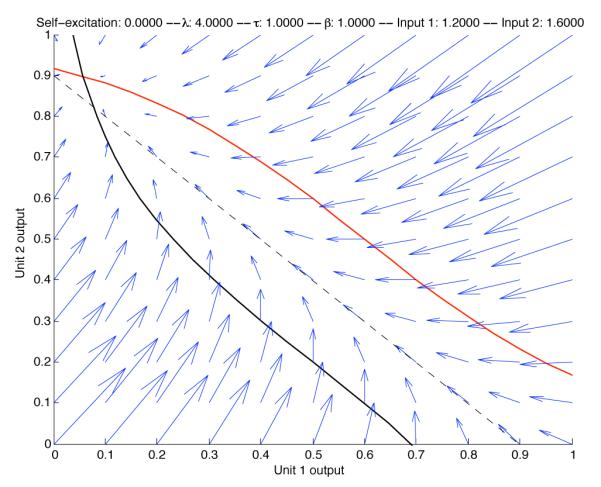

Fig. 2F shows the evolution of the activations y1(t) and y2(t) over time. After stimulus onset, the system state (y1, y2) approaches the decision plane. Projection of the state (y1, y2) onto this line yields the net accumulated evidence x(t), which approximates the DDM as shown in Fig. 2B. Fig. 3 shows that including nonlinear activation functions does not dramatically change the decision making dynamics.

Figure 3.

The phase portrait of a nonlinear two-unit system in which the decision plane is a good approximation of where decision making takes place. The red curve illustrates the isocline of zero vertical velocity, and the black curve represents the isocline of zero horizontal velocity. Thus, their intersection is a stable attractor. Here unit 1 is given a net input of 1.2, and unit 2 is given a net input of 1.6.

We have now addressed the evidence accumulation aspect of two-alternative decision making, but we have not addressed how a surplus of evidence in favor of one hypothesis is ‘read out’ into a decision. We must address this issue because of a conceptual problem: if a surplus of size x is sufficient for making a decision that in many cases leads to a motor action, then why is x−∊ not sufficient, for any ∊ > 0? How can an arbitrarily small change in the surplus make the difference between taking an action and remaining still? We can address this problem easily by adding a second layer of strongly self-exciting units that implement step functions (approximately). We discuss this solution in detail in section 4.

Thus, extremely simple leaky integrator units that provide nearly optimal signal estimation can also perform nearly optimal decision making in the context of difficult, two-alternative tasks.

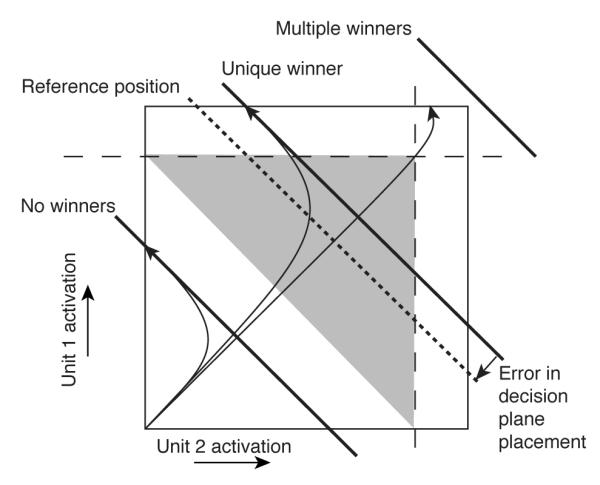

3.3. Attractor and winner-take-all networks in higher dimensions

We have shown a simple example of an attractor network for the case of two-alternative decisions. The pair of threshold detector units used to detect threshold crossings in the accumulator/leaky integrator layer has four attractors in the state space of activations: both units near 0; one near 0 and the other near 1; and both near 1. Assuming normal operating conditions for a properly parameterized two-alternative decision making circuit, and assuming one of the two signals is present, the expected behavior is that ultimately, one of the two units will achieve an activation near 1, and the other will remain at an activation near 0. Thus, the system of two threshold units acts as a winner-take-all (WTA) network. This scheme can be generalized to n units and constitutes a 2-layer building block for a cognitive architecture that is analogous to the logic gates in computers.

We can also collapse the accumulator layer and the threshold layer of units into a single layer of self-exciting units, as in the model of Wong and Wang (2006). Analysis of the relationship between the SPRT and such models is not as well developed as for the two layer network, but such networks exhibit similar dynamics and may allow a simpler, one-layer building block.

Here we generalize this two-channel WTA network to n channels. Technically, depending on the interconnections among units, such a network could have an arbitrarily large number of attractors in the state space of possible activations of all units (Amit et al., 1985). However, we are only interested in attractors that we can easily use to do computation. Thus we will primarily investigate networks whose attractors under normal conditions consist of exactly n + 1 patterns of activation: one in which all units are near 0 activation, and for each of the n channels, one pattern in which the given channel’s threshold unit is near 1 while all the others are near 0 (i.e., a localist representation).

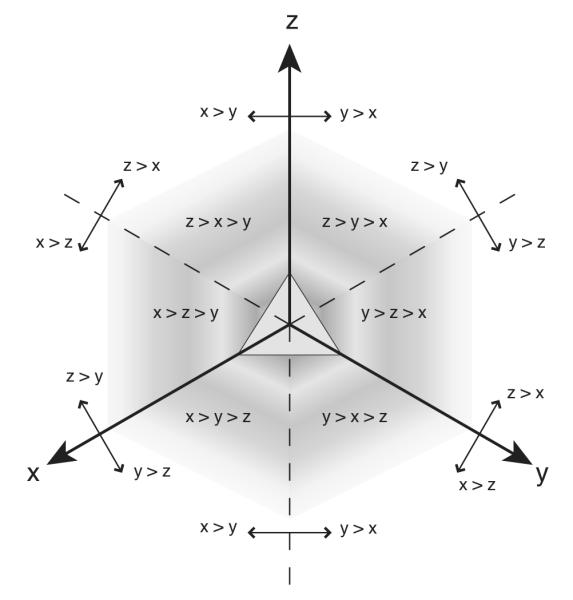

We can generalize beyond two dimensions by considering first three dimensions, defined by Eqs. 9:

| (9) |

A general, n-dimensional version of Eqs. 9 is a nonhomogeneous linear system that can be transformed into a system with 2 unique eigenvalues (of multiplicities 1 and n − 1) and n orthogonal eigenvectors (McMillen and Holmes, 2006). The eigenvalue of multiplicity 1 corresponds to the eigenvector , which defines the position of a decision plane — an n − 1-dimensional generalization of the 1-dimensional decision line in the two-alternative case. The remaining eigenvalues correspond to orthogonal directions within the decision plane that push the system toward a threshold on the plane.

Returning to the three-dimensional situation, let B = y1 +y2 + y3. Then Eqs. 9 imply Eq. 10:

| (10) |

Setting to 0 gives the following relationship between the three activation variables:

| (11) |

This defines a 2-dimensional decision plane that, like the 1-dimensional decision line in the two-alternative case, cuts diagonally through the activation bounding box. This intersection forms a triangular area (see the triangular surface in Fig. 22) within which drift and diffusion take place (McMillen and Holmes, 2006). The boundaries of the triangle are absorbing, meaning that as soon as the system hits one of them, the process stops. Thus they act as response thresholds in a decision making context. (The decision plane can also form a hexagonal bounding area with three absorbing and three reflecting boundaries, depending on the precise decision plane placement. Reflecting boundaries prevent the system from going beyond them, but do not stop the evolution of the process; thus the system can hit a reflecting boundary and then move back away from it over time.)

In general, we can continue this pattern for n alternatives to get a decision plane defined by Eq. 12:

| (12) |

We can always generate a winner by exciting one unit more strongly than all others by some margin. The problem is that we can also easily produce either no winner, or multiple winners, by carelessly defining input strengths and the connection strengths from the accumulator layer to the threshold layer. These problems are addressed in appendix C, where nonlinear, integral feedback control is used to ensure the WTA property of our decision making networks.

We now address the means by which connections and connection strengths can be programmed to implement competing if-then rules, or productions, of varying degrees of preference, as well as the state-maintenance and sequential state transitions that define a finite state automaton (FSA).

4. Sequential processing

We have discussed how a simple, low-pass filtering mechanism can be applied to the problem of signal estimation and decision making. We now address how a network of these mechanisms can emulate a memory-limited Turing machine, or equivalently, an FSA (Sipser, 1997). For our cognitive modeling goal, the FSA must itself implement a production system that in turn must implement a problem-space search algorithm. And given our commitment to biologically plausibility, our system must do all this without relying on a centralized system clock governing a sequential, synchronous processing architecture, as standard computers do. Nevertheless, we need a standard computer’s capability for sequential processing, because when humans solve problems — and more generally, when they carry out the type of symbolic processing exemplified by problem solving — they frequently appear to mentally simulate a sequence of state-to-state transitions in a space of possible task states (Miller et al., 1960; Newell and Simon, 1972). Indeed, it is this fact — especially in the case of mathematical proofs and computations — that inspired the Church-Turing Thesis, which states that computation in any form is essentially equivalent to the operation of an FSA controlling a memory tape (Sipser, 1997).

The primary external signal given to the type of problem-solving models we envision within the proposed architecture (we discuss one such model in detail in the supplementary materials) will be an initial problem space configuration and a goal configuration. At that point, the model will need to move through the problem space at its own pace, and various parallel processing pathways will need to coordinate the timing of their processing. Thus the FSAs we need to emulate have states in which the next state is determined without reference to any signal from the environment. The components of such an FSA will need to time their own operations, determining how long to remain in a given state before transitioning without using a centralized, oscillating clock as a trigger. In our case, we would like to know how to make the system operate as quickly as possible, moving through the problem space at maximum speed, while maintaining some specified level of accuracy in its transitions. This section covers the state-maintenance and self-timing mechanisms that our system will require in order to do this.

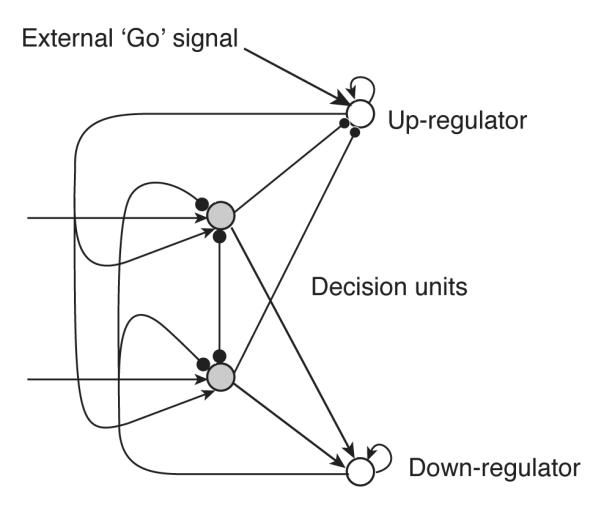

In another contrast to standard sequential circuit designs, these circuits will also involve concurrent operations which frequently conflict with each other (as in the competitive dynamics of the circuit in Fig. 4). In particular, a key operation of the system will be to select an action to take in a given problem solving task, and the production system emulated by the network will frequently match multiple, mutually exclusive rules specifying which action should be selected. Thus, the system must carry out a process that is equivalent to decision making among more and less preferred alternatives. Finally, the system will also frequently need to decide among alternatives that are all equally preferred. This fact requires that our system emulate a probabilistic FSA: an FSA in which the probabilities of particular state transitions, rather than the transitions themselves, are what are determined by the current state and current input.

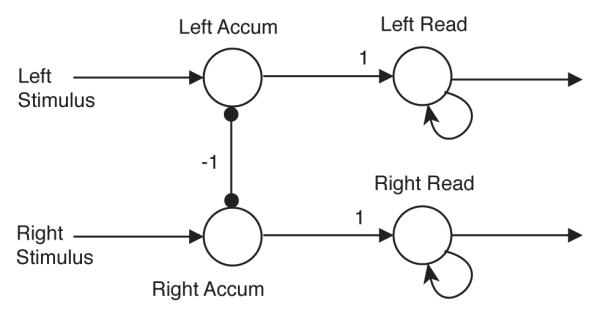

Figure 4.

A two-layer decision network, in which the first layer of non-self-exciting, approximately linear units weighs evidence represented by an analog code, and the second layer of strongly self-exciting, highly nonlinear units reads out the decision into an approximately binary code. Here, arrows represent excitatory connections, and circular arrowheads represent inhibitory connections.

4.1. Mapping finite automata onto neural networks via symbolic dynamics: symbols = state-space regions

The first complete analysis of the computational capabilities of finite state automata — showing specifically that every FSA essentially computes whether an input string of symbols matches some regular expression — was given by Kleene in the context of a model of neural processing (Kleene, 1956). It would therefore seem that we do not have to do any work in order to construct a mapping between FSAs and neural networks. However, Kleene’s construction involved discrete time and the use of noiseless, McCulloch-Pitts neurons: units which compute a weighted sum of inputs and then apply a step function to the sum, producing an instantaneously responsive, binary output. (In contrast, our leaky integrator units compute a weighted sum of inputs and then asymptotically approach the value defined by a sigmoidal function of that weighted sum.) Given the constraints that we derived in the previous section, however, it is not yet clear that a neural network of the type that interests us (i.e., one that operates in continuous time and is analog, asynchronous and noisy) can implement finite automata and carry out problem-space search. In this section, we outline a mapping from finite automata onto neural networks that meets our constraints. In the sections that follow, we will fill in the details about the critical mechanisms that are sketched here.

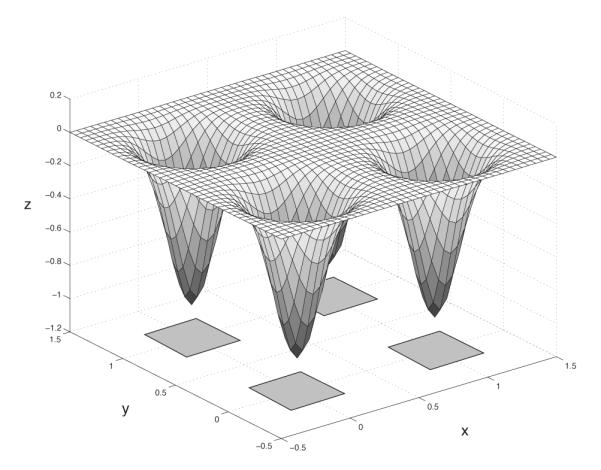

We can think of the state of a two-dimensional analog system as the x and y coordinates of a ball rolling around on an energy surface (a function z = f(x, y)). If noise is present, the ball will also be constantly jostled by random perturbations. The ball will tend to come to rest in any valleys, or wells, in the surface (e.g., see Fig. 5).3 In order to implement an FSA in an analog system, we need to create a mapping between non-overlapping regions of the underlying analog system’s state space (the analog states, defined by coordinates x and y in Fig. 5) and the states of the FSA (the FSA states). These analog-state regions must not overlap, so that the mapping from FSA states onto regions is one-to-one and the system will never be confused about what state it is in.

Figure 5.

Four potential wells, implementing four symbols in the (x, y) state space of two units’ activations.

As a reminder, the analog state space (or phase space) of the system we will examine consists of vectors of real numbers, each greater than or equal to 0 and less than or equal to 1. When we are faced with more than two phase space dimensions (i.e., more than two units), the energy surface is difficult to depict graphically. Nevertheless, the analog state is equivalent to a point moving inside a hypercube (i.e., a ‘brain state in a box’, in the colorful description of Anderson et al., 1977). Each dimension corresponds to one unit in the system, and an energy surface can still be well-defined.

Since we assume the presence of noise, the non-overlapping regions of state space must be separated by a no-man’s-land that is not associated with any state. Otherwise, a stochastic process involving white noise perturbations that is leaving one region and entering another is likely to make multiple, back-and-forth crossings of a region-boundary during a single intended FSA transition. A separated analog state region is consistent with the TTL voltage band convention. Fig. 5 shows regions in the x − y plane that denote four distinct symbols, separated by large regions that do not encode any particular state of an emulated four-state FSA.

4.2. Threshold mechanisms

Since the behavior of an emulated FSA will be conditioned on the entry of an analog state into a symbol region, threshold-crossing detection will be an essential function of our system (as it is in any computer). Mathematically, it is easy to define a quantity (an indicator variable) that specifies whether the analog state of a system is within a symbol region, such as the TTL regions for 0 and 1 in digital systems. An indicator variable for a single voltage takes on the value 1 when a voltage is inside a region, and 0 when it is outside, making it a step function — or Heaviside function — of the analog state variable.

4.2.1. McCulloch-Pitts neurons

In general, approximations to step functions will play critical roles in our models in determining whether or not to make a response. However, using step functions per se would present serious difficulties for the type of model that we address in section 6. Ultimately, we will derive instead a simple mechanism for approximating a step function with arbitrary precision using only the leaky integrator units we presented in section 2. First, though, we state the definition of McCulloch-Pitts neurons (McCulloch and Pitts, 1943) explicitly in Eq. 14, and we analyze the problems this model presents, especially when the discrete time steps (n) are generalized to continuous time (t).

Formally, the activation Vi of the ith unit in a system of McCulloch-Pitts neurons in response to input Ii is as follows:

| (13) |

| (14) |

Eq. 14 is very similar to Eq. 3 in that it computes a weighted sum of its inputs. The effective threshold can be increased or decreased from Θ by providing a constant input (or bias) to the unit. A positive bias in effect shifts the function to the left (decreasing the threshold), and a negative bias shifts it to the right (increasing the threshold). A simple but effective technique for adapting response thresholds (and speed-accuracy tradeoffs) to changing task conditions derives from this fact (Simen et al., 2006).

However, there are critical problems with this idealized approach to thresholds regarding its physical and biological plausibility. Furthermore, a disastrous, noise-induced ‘chatter’ effect is easily produced by artificial mechanisms that approximate these idealized thresholds. Without compensating mechanisms, thermostats that use temperature readings to govern a furnace, for example, can rapidly switch a burner on and off as a room’s temperature hovers noisily around the thermostat’s temperature threshold, thereby wasting energy and possibly damaging the furnace.

For our purposes, the worst problem with McCulloch-Pitts units is that, by themselves, they cannot maintain an encoded FSA state in the interim between received state-transition signals. Therefore, we either need to guarantee that the intervals between input signals to the FSA are shorter than the decay time of our state-maintaining units (an unnecessarily strong constraint), or we need to ensure that state can be encoded indefinitely (or latched) as is done in digital electronics. Latching is the path we will take.

We achieve latching and avoid the chatter problem with the use of strong, recurrent self-excitation in ‘readout’ units, like those in the second layer of Fig. 4. This results in bistable dynamical systems with exactly two equilibrium points that produce all-or-none behavior of the desired type. Bistability will play an important role in our proposed architecture, as it does in other neural modeling approaches and in digital electronic circuit components (Hayes, 1993). Bistable striatal neurons in mammals, for example, are thought to produce action initiation by promoting signal propagation through the basal ganglia (Alexander et al., 1986). Because of its role in action initiation and sequencing (Aldridge and Berridge, 1998), the most recent version of ACT-R has associated production firing with the basal ganglia in its mapping from the architecture onto the brain (Anderson et al., 2004). We proposed a similar mapping in Simen et al. (2004).

Thus recurrent excitation defines the symbolic/subsymbolic interface in our approach to cognitive modeling, by turning a nearly linear system into a highly nonlinear (nearly binary) one.

4.2.2. Hysteresis

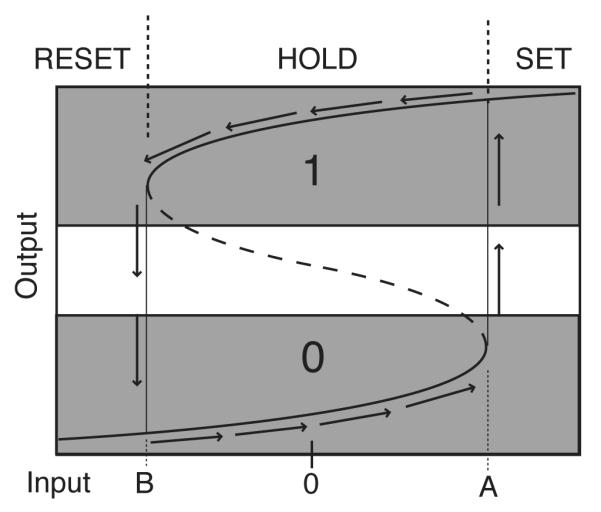

In general, bistability and latching are properties of many systems that display hysteresis: that is, systems whose outputs do not depend exclusively on their immediate inputs, but also on the recent history of the system’s outputs. Fig. 6 shows a classic example of a bistable system with hysteresis. The vertical axis represents output values, and the horizontal axis represents input values. The solid curves denote stable equilibrium output values as two, distinct functions of the input values. The dashed curve represents unstable outputs as a function of inputs: for any coordinate pair that lies on this curve, any perturbation of the output will cause it either to increase to the upper solid curve segment or to decrease to the lower solid curve. The resulting dynamics of such a system are that upward velocities occur as the input increases above point A if the output starts out on the lower solid curve (these velocities become large as the input grows greater than A). Similarly, downward velocities occur as the input decreases below B if the output starts out on the upper solid curve. For any input I in the range between B and A, the system’s output value has either the lower or upper solid curve as its equilibrium, depending on whether the previous output values were, respectively, below or above the dashed curve.

Figure 6.

A latch based on hysteresis. The solid curves denote stable, attracting points: for any given, constant level of input, I, the system will eventually converge to a point on one of these curves centered above I on the horizontal axis. Where a dashed curve is plotted, two possible attractors exist for the same input value. Which curve the system converges to (assuming I is held fixed) is determined by the current output value of the system: if it is above the dashed curve, it will converge to the upper solid curve, otherwise to the lower curve. If the system starts out on the solid curve in the lower left corner of the diagram, and input increases, it will follow the trajectory denoted by the arrows. Input value A then defines a threshold for inputs that drive the system to a high level of output activation (corresponding to a binary 1). If inputs then drop below A, the system retains nearly maximal activation until input drops below the value B, at which point output will plunge to the lower attractor (a binary 0). It can store a 1 or a 0 as long as the input is between A and B, and will be least susceptible to noise at the midpoint between them.

Hysteresis has historically been employed for a variety of functions in psychological and neural models, including shortterm memory (Cragg and Temperley, 1955; Harth et al., 1970; Nakahara and Doya, 1998), abrupt changes in conditioning and extinction (Frey and Sears, 1978), and critically, the implementation of population thresholds for activity that are robust to noise (Wilson and Cowan, 1972). A simple method for controlling the amount of hysteresis in the bistable units we propose for threshold-crossing detectors will be the principal technique that allows us to construct models capable of complex, sequential processing. Also, while we have noted that the vertical translations in the hysteresis diagram in Fig. 6 can be rapid, circuits with cyclic connection patterns (as discussed below) will sometimes require in addition a method for controlling the speed of the vertical translations. In fact, for inputs only slightly greater than A, the upward vertical velocity is very small for solutions that are leaving the lower, stable equilibrium curve, so that input strengths can be tuned to achieve arbitrarily slow translations in the absence of noise.

We now show how input latching and resetting can be achieved if we can assume that our units display hysteresis. Rather than using an ‘enable’ line, as in typical electronic latches, this latch operates more like a static RAM cell in computer memory: it loads a new value when forced with a strong input that overwrites its currently stored value. Here, ‘strong’ inputs are those that are greater than A or less than B. As we show below, we can parameterize our units so that input signals of strength near 0 occur at the midpoint of the unstable (dashed) curve, as in Fig. 6. When strongly positive inputs greater than A are received for a duration long enough to drive the output into the 1 region, the latch is ‘set’, independently of the previously stored value. When strongly negative values (less than B) are received for long enough to drive the output into the 0 region, the latch is ‘reset’, again independently of the previously stored value. When inputs in the intermediate range are received, they change the output value slightly, but they are incapable of driving the output out of the symbol region for the currently stored symbol.

4.2.3. Threshold-crossing detectors and latches built from self-exciting, sigmoidal, low-pass filter units

Positive feedback in sigmoidal units is the key to generating the hysteresis properties that we need for threshold-crossing detection and latching, just as it was the key to achieving a larger time constant for linear units in section 2. We therefore consider the dynamics of a self-exciting unit, whose output value V is weighted by a nonzero synaptic strength w and added to the weighted sum of its other inputs. A standard result for such units is that supplying them with positive feedback is mathematically equivalent to changing the shape of their activation functions. We can achieve latching in this way, and we can also achieve precise control over the speed of this latching. Control over this aspect of hysteresis is what will allow us to connect units into network topologies containing small cycles, which in turn will allow us to build concurrently operating, self-timing circuit components with ease.

We can determine the effects of recurrent excitation by two methods: the first is the hysteresis diagram that we have already discussed, which will allow us to interpret self-excitation as a deformation of a unit’s activation function; the second method, based on ‘cobweb diagrams’ (Jordan and Smith, 1999), is covered in the supplementary materials. The latter technique is extremely useful for model construction, allowing the designer of a model to estimate the rate of change in activation at a given pair of input and output values by visual inspection. This method in turn supports an efficient procedure for setting the connection strengths between units in large networks so that desired behaviors are achieved. However, the workings of architecture components can be understood without delving deeply into the details of this technique.

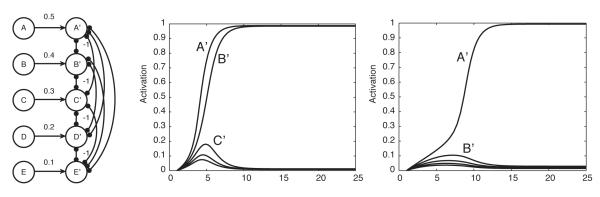

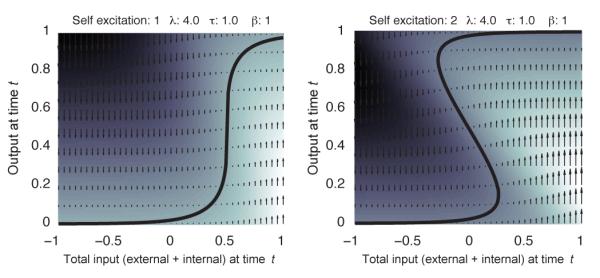

Diagrams like those in Fig. 6 are sufficient for this purpose. These figures can be computed by numerically finding the points in the input-output plane at which the derivative dV/dt in Eq. 3 is equal to 0, using Newton’s method, for example. (The utility of cobweb diagrams is that they do not require this computationally expensive step.) The activation derivative can also be computed at a grid of points in the input-output plane, so that nonzero velocity vectors can be plotted (with arrowlength proportional to magnitude) to give a global picture of how the system changes as a function of position. The plots in Fig. 7 show this approach: a system with self-excitation that perfectly balances its leak is plotted on the left (we will refer to such units as balanced); a system with stronger self-excitation results in bistability and is plotted on the right (we will refer to such units as strongly self-exciting); units with weaker self-excitation (not shown) will be referred to as weakly self-exciting (a weakly self-exciting unit’s activation function will look more like the non-self-exciting activation function shown in Fig. 2E; weak self-exciters act as leaky integrators with an adjustable time constant that increases as the recurrent weight increases, as in Eq. 20).

Figure 7.

Phase plots for units with balanced (left) and strong (right) recurrent connections to themselves. Here equilibrium curves are plotted as solid curves, and velocities are plotted by arrows and shading (white corresponds to positive, black to negative velocities).

A unit’s activation function can also be computed over a range of self-excitation strengths and plotted as a surface. Fig. 8 illustrates this graphical approach. This type of diagram is a depiction of a ‘cusp catastrophe’ (Thom, 1989), in which a particular type of sudden, ‘catastrophic’ change occurs in the shape of the equilibrium curve (which in our case is an activation function) as some parameter of the system changes continuously (in this case, the strength of self-excitation). The equilibrium curves in the bottom plots of Fig. 7 are equivalent to vertical slices through this surface that run parallel to the Input axis. The catastrophic change that occurs as recurrent weight strength increases is the sudden appearance of three equilibrium points for certain input levels (and therefore hysteresis too), whereas for smaller recurrent weights, there is only one equilibrium point (and no hysteresis). The so-called ‘bifurcation’ value of self-excitation at which this change occurs happens to be equal to the slope of the original sigmoid activation function at its inflection point, as shown in the supplementary materials. At this special value (i.e., when it is balanced), the system approximates a perfect (non-leaky) integrator. We discuss an interval timer in section 6 that exploits these dynamics in order to time out unsuccessful steps of computation and generate subgoals for problem solving.

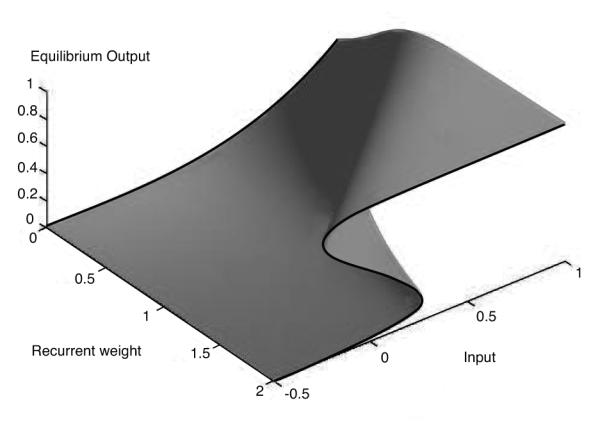

Figure 8.

Effective activation functions for a range of self-excitatory, recurrent connection strengths, plotted as a ‘catastrophe manifold’. This system produces a cusp catastrophe as self-excitation increases above 1 (in general, such catastrophes occur when self-excitation increases above λ/4, where λ is the maximum slope of the sigmoid activation function (i.e., the activation function for self-excitation equal to 0). Given a particular recurrent self-excitation strength, w, a vertical slice through this surface parallel to the Input axis and positioned at w on the Recurrent Strength axis gives the effective activation function for that value of w.

4.2.4. The closed-loop problem

The primary self-timing operation that independent, concurrent processes in our model will invoke once activated is to prevent other processes from interfering with them until they have finished their work (if possible). This includes preventing new inputs to a process from being accepted once the process begins. It also includes waiting for indications that the result of a process has been computed, and then cancelling itself. Both of these types of handshake operation can be achieved with cyclic connection patterns involving excitation and inhibition (Sparso and Furber, 2002).

Here we examine the simplest example of such a system, depicted in Fig. 9: two units, IN and OUT, in which IN excites OUT, which in turn inhibits IN. Once IN is inactive and OUT is active, we might want OUT to persist in its activity indefinitely, or to persist until being inhibited by some other unit (not depicted), or finally to return to inactivity after a delay of controllable duration. We now address parameterizations and a technique for finding them that allow these behaviors to be realized.

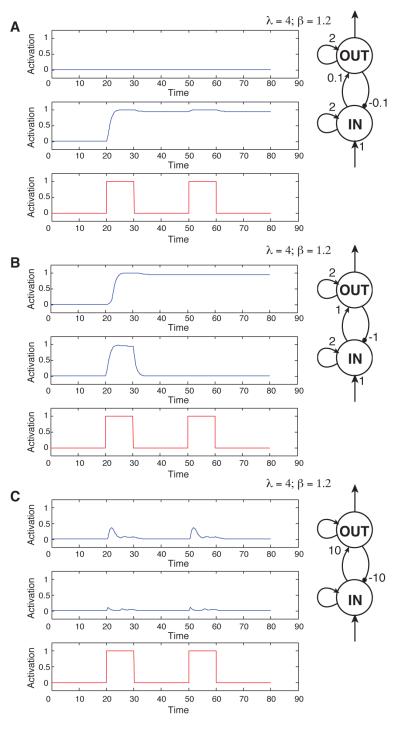

Figure 9.

Three different parameterizations of a two-unit closed-loop system intended to propagate a 1 forever (from the OUT unit) and to wipe out input layer activity (in the unit IN) once the first input pulse is detected. Activation over time is shown for each unit in the top two rows of each subfigure. The input signal is shown in the bottom row. A: Feedforward and feedback connection strengths between IN and OUT are too weak to cause propagation of the input pulses; B: Interconnection strengths suffice for the desired behavior; C: Interconnection strengths are too strong, once again causing failure of input pulse propagation.

Fig. 9 shows the activation levels of the two units over time, under three different parameterizations, in response to two step pulses of input of amplitude 1. The only parameter that varies in the three sets of plots is the strength of the connections between IN and OUT. The intended behavior of the system is to detect the first input pulse, thereafter propagating a 1 from OUT and making IN unreceptive to external inputs. Both units are parameterized with bias β equal to 1.2, gain λ equal to 4, and recurrent excitatory connection strength 2. Each subfigure of Fig. 9 shows the timecourse of activation in a two unit network receiving a pulsed input. The top two graphs of each subfigure show the activation of the unit illustrated next to the graph. Subfigure A and C both show failures to achieve the intended behavior due to interconnection strengths that are too weak and too strong, respectively. Subfigure B shows the intended behavior being executed.

4.3. Elementary logic functions

Using hysteresis diagrams, we now demonstrate that self-exciting, nonlinear leaky integrator units can implement a complete set of logic functions (a set, like {AND, NOT}, that can be used to compute any propositional logic function). We will then have the means to compute the state-transition table for any FSA.

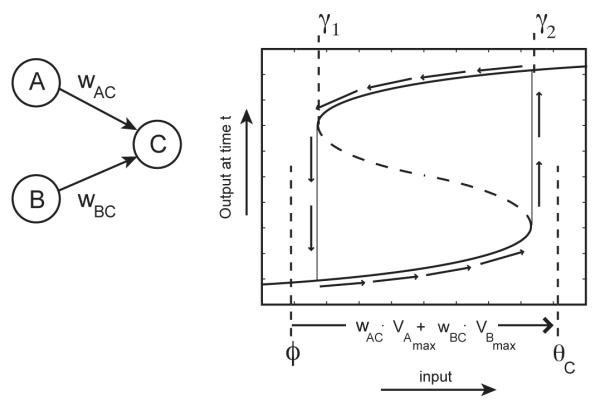

Consider a system of two upstream units, A and B, and one downstream unit C, with feedforward excitation from A and B to C, as in Fig. 10. In order for C to respond as the neural equivalent of an AND gate in digital logic, the following behavior is required: when A and B are both highly active, C should be highly active. If either A or B are inactive, C should be inactive. C should never linger at values that are far from 0 or far from 1.

Figure 10.

Parameterization for an AND gate, C.

The following parameterizations, illustrated in Fig. 10, give this behavior. C should be strongly self-exciting, and θC should be greater than γ2, the bifurcation point at which two stable equilibria and one unstable equilibrium collapse to a single stable equilibrium. The connection strengths from A and B to C should sum to a value sufficient to exceed this threshold when added to the baseline level of input to C (in Fig. 10, this level is a point ϕ to the left of γ1). Thus when A and B are highly active, C’s effective activation curve will have a single equilibrium value near the maximum possible output value of 1. Without a drop in A or B, C will eventually become highly active. The time that C takes to become active will depend on how far to the right of γ2 is the weighted sum of C’s inputs, because this horizontal position determines the size of the upward velocity vectors (shown in Fig. 7). For horizontal coordinates arbitrarily close to, and greater than, γ2, the diminishing upward velocity vector length indicates that C’s ramp-up time will be arbitrarily long. (Strictly speaking, the time to approach an attractor is always infinite, since attractors are approached exponentially. By ‘ramp-up time’ we refer instead to the time required to approach within some nonzero-diameter neighborhood of an attractor, which happens in finite time.) Thus, faster decision making requires the sum of input from A and B to exceed γ2 by a larger amount (that is, to excite C more strongly).

With strong A → C and B → C connections (denoted wCA and wCB respectively), however, there is the potential problem that strong activation in only one of A or B will be sufficient to activate C. In order for C to retain the property of being a conjunction detector, the connection strength from each of A and B to C must be less than θC − γ1. Thus a requirement for faster conjunction detection requires both that θC be larger and that the feedforward connection strengths be larger and roughly equal, each less than θC − ϕ, and with sum greater than θC − ϕ.

Finally, we note that the connection strengths used in–this discussion are approximations. The units A and B in this example will never quite reach an activation of 1 or 0. Weights and θ’s must therefore be set with a margin for error that is discovered through trial and error when building a system (in our experience, this is quite easy to do).

We have shown the parameterization of a three-unit network that computes an AND function. We omit the description of OR and NOT functions; interconnection strengths that compute these functions are straightforward modifications of the values depicted in Fig. 10.

4.4. Using attractors in the form of latches to maintain state

We now address the means by which the proposed system can maintain the representation of an FSA state in between the reception of transition-triggering signals, and the means by which signals can trigger transitions from one FSA state into another.

We do this by parameterizing the strength of a connection from the old state-encoding unit (or units) to the new state-encoding unit(s) — and from the input to the new state-encoding unit — so that together, the old state plus the new input cause activation of the new state. That is, each state-encoding unit acts as an AND gate that detects the conjunction of the old state and the new input, and whose hysteresis properties maintain the new state after the input disappears, even in the presence of noise. In this way, state can be maintained with high probability and for long durations in between signals (although noise will eventually produce an escape from the potential well defining a state with probability 1 — see Gardiner, 2004 — implying a practical limit on working memory duration).

4.5. Using flip-flops to prevent critical race conditions

There is a well-known problem with using latches to maintain state that has been solved in digital logic design with the use of flip-flops (Hayes, 1993). The problem is that computations often need to use the maintained state in order to compute the next state. If state is maintained by latches, then the old state can begin to be overwritten by a new internal signal even as that signal is being computed. This can easily result in a failure to complete the computation of the next state. In that case, the result is an unpredictable state, or a rapid oscillation between 1 and 0 known as a ‘critical race’ condition, or even metastability: persistence in a state in the analog state space that is not within any of the symbol regions.

To handle these problems, flip-flops are used in digital logic to ensure that only one transition is possible before some additional control signal is received on an enable line, usually from a central clock. Flip-flops maintain two copies of a bit value: the current bit value, and a new value that will become current when the next control signal is received. Rules can be applied to transform old values into new values, but without overwriting the old values until the next control signal. This eliminates any possibility that in the process of computing a new value, the old value becomes destabilized before the computation of the new value is complete. The use of clocked flip-flops is the defining feature of synchronous digital circuit design, and it heavily influences the discrete time-cycle view embodied in most production systems and AI models.

Because we cannot assume a central clock, however, we make each concurrent process determine for itself whether or not to accept input. The method we have used to handle this issue is to cause one or more units involved in a process to strongly inhibit all of that process’s input units once the process has begun, as in our closed-loop example. When reduced to near-0 activation, the input units can neither excite nor inhibit the units carrying out the process. Thus, no input will be accepted by a process until it detects that its processing is complete, or until it decides that too much time has gone by (we discuss the latter in section 6). Nevertheless, we can still make use of locally clocked flip-flops to solve problems involved in generating subgoals during problem solving, as we discuss in the supplementary materials.

To achieve flip-flop behavior, we define a gate to be a copy of an upstream latch component, with one-to-one feedforward connections from upstream units to their corresponding downstream units.4 A second set of inputs to the gate component is strongly inhibitory and reduces all activity in the gate to baseline when propagation of outputs is not desired. The purpose of a gate is to prevent propagation of a latch’s contents without wiping out its activation-based memory. We note that while neural latches are analogous to latches in digital systems, gates as we have defined them are distinctly different than digital components with the same name (AND, NAND, OR gates, etc.). We use this term for our neural component because it is a common term for mechanisms with the same function in the cognitive neuroscience literature (e.g. Frank et al., 2001; Braver and Cohen, 2000).

We have now described the operations of decision making under uncertainty, thresholding, computing logic functions and maintaining state, and we have proposed neural network mechanisms to implement them. These operations give us sufficient computational power to emulate any FSA (and if we allowed ourselves infinitely many filter units, we could emulate any Turing machine with an infinitely long tape — see Simen et al., 2003). The mechanisms are simple: self-exciting, bistable units maintain binary representations of state for arbitrary durations; connection strengths between units and the bias parameters within units determine the logical function that a unit computes on its binary inputs (thereby implementing an FSA’s statetransition table); finally, the bistability of these units leads to approximately punctate transitions into the next state. Aside from the absence of a central clock (which we can achieve by the use of asynchronous timing methods based on closed-loop circuit connections; cf. Sutherland and Ebergen, 2002, and Sparso and Furber, 2002), the result is a network mechanism that is not all that different from a standard, digital electronic circuit, in which units play the role of capacitors and transistors, and connections play the role of resistors. Neural automata of this type can then be used to control a hierarchy of truly subsymbolic processing, as might be carried out in hybrid neural-symbolic models of motor control in animals and robots (e.g. Ritter et al., 2007).

Our discussion of logic-level mechanisms is not yet complete though. We must now consider how decisions are made in the presence of processing conflict — an operating condition described below that typifies the operation of parallel-processing systems such as production systems, but a condition that is purposely precluded by standard sequential-circuit design techniques in digital electronics in order to ensure predictable operation (Hayes, 1993). Since the brain appears to be a parallel processing device, conflict constitutes a central concept in cognitive neuroscience (Botvinick et al., 2001). Aside from the capacity for subsymbolic decision making, it is the presence of processing conflict and mechanisms for resolving it that most distinguish our proposed architecture from standard digital computing techniques.

5. Voting processes that implement if-then rules

Production systems5 (e.g., Anderson and Lebiere, 1998; Just and Carpenter, 1992; Laird et al., 1987; Kieras and Meyer, 1997) are used widely in cognitive psychology and AI to model cognition. These systems exhibit flexibility in their operation relative to standard computer programs, because they decompose the potentially long, complex routines of a standard program into sequences of smaller instructions – if-then rules, or ‘productions’ – which can be conditioned on the state of the world and on current goals, and which can be executed in parallel. Thus production systems are able to sample the world frequently, detect and handle errors quickly, and interrupt long routines if necessary. They are also amenable to powerful learning strategies such as ‘chunking’ (Anderson and Lebiere, 1998; Laird et al., 1986; Miller, 1956) that compile successful rule-sequences into single rules, thereby speeding performance, as well as stochastic, exploratory rule-creation processes in some systems (Holland, 1986a).

Production systems consist of a working memory (WM) for symbolic information (whose contents are typically updated frequently), and a long-term memory of productions (whose contents typically endure for much longer durations). The typical processing scheme for such a system is that the conditions of the production rules are repeatedly matched against the contents of WM. Any rule whose conditions are satisfied becomes a candidate to make the changes to WM specified by its postcondition. These rules are said to ‘match’. Conflict resolution processes that vary among production system architectures may then determine which rules actually execute their postconditions, or ‘fire’. These changes typically occur at the onset of the next processing cycle and do not produce matches of other rules on the current cycle. In Soar, many productions can fire in parallel and generate preferences about the next sequential step to take in problem solving. A separate decision cycle then consults the preferences and commits to a specific mental operator (Laird and Congdon, 2006). ACT-R instead allows only one production at a time to fire (Anderson et al., 2004).

In order to implement production systems in neural networks, the mechanisms underlying this cyclical process must be addressed (or the cyclical model must be modified) in order to observe a constraint that we and others have hypothesized: this is that the brain lacks a global, synchronizing clock circuit. Furthermore, a means must be addressed by which circuits can make decisions about which rules to fire when there is processing conflict between multiple matching rules.

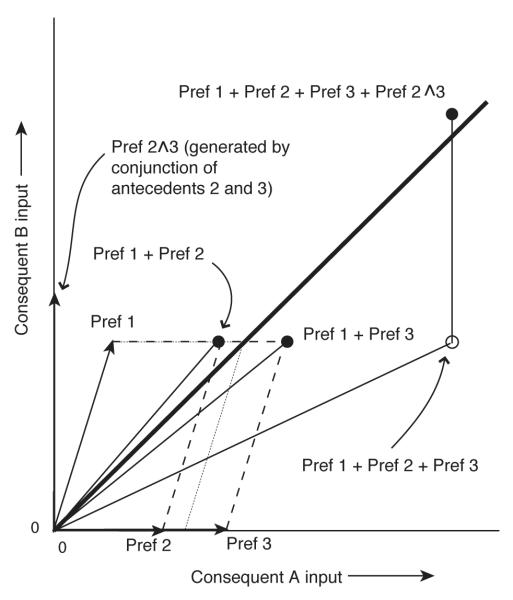

This section addresses both issues in the same manner as Polk et al. (2002). It emulates ‘matching’ by a voting process among competing candidate rules (cf. Grossberg, 1980b; Feldman and Ballard, 1982). This voting is implemented as a high-dimensional diffusion process in a competitive attractor network (or ‘module’), driven by connection strengths that implement preferences among candidates and that connect modules together (cf. the similar feedforward network approach, or ‘Core Method’, for encoding propositional logic statements in Bader et al., 2007). It emulates ‘firing’ as a threshold-crossing event, implemented by strongly self-excitatory neural network units that can operate without governance by global clock signals.

5.1. Production implementations: if-then rules and conflict

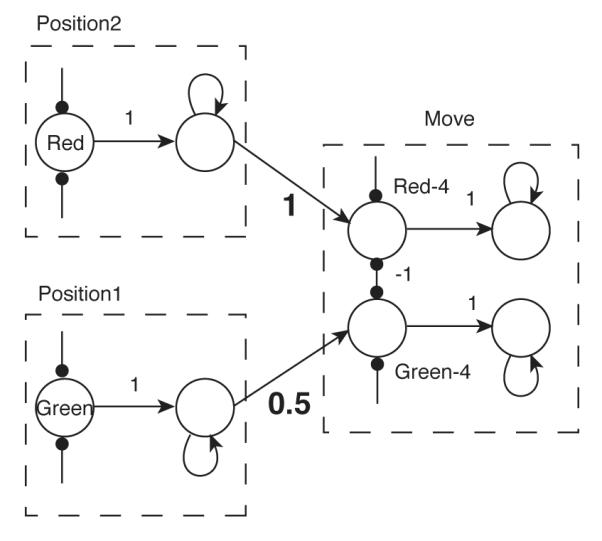

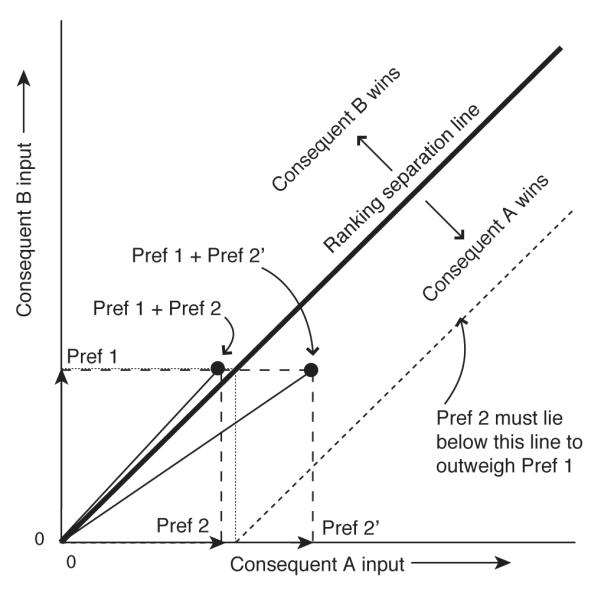

We characterized the operation of n-dimensional attractor networks in response to a vector of n constant inputs in section 3. We are now ready to take our most significant step toward the implementation of production systems, by implementing working memory symbols and symbolic productions that may conflict with each other, as was done in Polk et al. (2002). To do so, we consider a simple example of the type of productions necessary for performing the Tower of London task (Shallice, 1982).

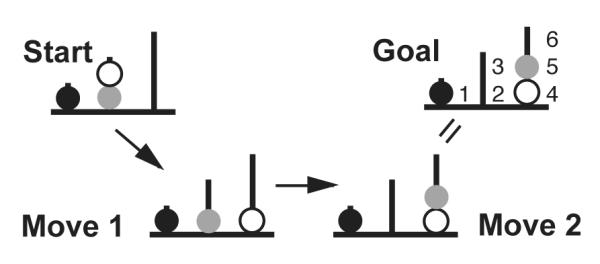

This task is depicted in Fig. 11. In it, a participant is shown a starting configuration and a goal configuration of colored balls on pegs; the participant is then asked to transform the starting configuration into the goal configuration by moving one ball at a time. The TOL task has been used extensively to assess planning impairments and is thought to depend crucially on goal management (Shallice, 1982). It is a variant of the Tower of Hanoi problem (Simon, 1975), but in contrast to that task, there are no constraints specifying which balls can be placed on which others. Instead, the pegs differ in how many balls they can hold at one time (the first peg can hold one ball, the second peg can hold two, and the third peg can hold three). There is typically one red, one green and one blue ball. Participants are usually asked to try to figure out how to achieve the goal in the minimum number of moves and are sometimes asked to plan out the entire sequence of moves before they begin (Carlin et al., 2000; Shallice, 1982; Ward and Allport, 1997).

Figure 11.

The Tower of London task. Here we have arbitrarily numbered the possible goal positions for easier reference.