Abstract

Neurons in auditory cortex are central to our perception of sounds. However, the underlying neural codes, and the relevance of millisecond-precise spike timing in particular, remain debated. Here, we addressed this issue in the auditory cortex of alert nonhuman primates by quantifying the amount of information carried by precise spike timing about complex sounds presented for extended periods of time (random tone sequences and natural sounds). We investigated the dependence of stimulus information on the temporal precision at which spike times were registered and found that registering spikes at a precision coarser than a few milliseconds significantly reduced the encoded information. This dependence demonstrates that auditory cortex neurons can carry stimulus information at high temporal precision. In addition, we found that the main determinant of finely timed information was rapid modulation of the firing rate, whereas higher-order correlations between spike times contributed negligibly. Although the neural coding precision was high for both random tone sequences and natural sounds, the information lost at a precision coarser than a few milliseconds was higher for the stimulus sequence that varied on a faster time scale (random tones), suggesting that the precision of cortical firing depends on the stimulus dynamics. Together, these results provide a neural substrate for the recently reported behavioral relevance of precisely timed activity patterns within auditory cortex. In addition, they highlight the importance of millisecond-precise neural coding as a general functional principle of auditory processing, from the periphery to cortex.

Keywords: hearing, neural code, spike timing, natural sounds, mutual information

Our auditory system can reliably encode natural sounds that vary simultaneously over many time scales, and from these sounds, it can extract behaviorally relevant information such as a speaker's identity or the sound qualities of musical instruments (1, 2). Neurons in the auditory cortex are sensitive to temporally structured acoustic stimuli and likely play a central role in mediating their perception (3). Yet, how exactly auditory cortex neurons use their temporal patterns of action potentials (spikes) to provide an information-rich representation of complex or natural sounds remains debated.

One central question pertains to the relevance of millisecond-precise spike times of individual auditory cortical neurons for stimulus encoding. Previous work has shown that onset responses to isolated brief sounds can be millisecond-precise and carry acoustic information (4–6). However, auditory cortex neurons cannot lock their responses to rapid sequences of short stimuli. For example, during the repetitive presentation of brief sounds, their response precision degrades when the intersound interval becomes shorter than ≈10–20 ms. This finding argues against a role of millisecond-precise spike timing in the encoding of fast sequences of short sounds (7, 8). In addition, studies on the decoding of complex sounds from single-trial responses reported time scales of 10–50 ms as optimal (9) or suggested only small benefits of knowing the spike timing in addition to knowing the spike rate (10, 11). Together, these results could be taken to suggest that the millisecond-precise spike timing of individual auditory cortex neurons does not encode additional information about complex sounds beyond that contained in the spike rate on a 10- to 20-ms scale. However, it could also be that the importance of precisely stimulus-locked spike patterns was masked in previous studies, because anesthetic drugs can suppress stimulus-synchronized spikes (12, 13), because stimulus-induced temporal patterns of cortical activity may be less precise during the presentation of brief artificial sounds than during the presentation of extended periods of naturalistic sounds (14, 15), or because some previously used analysis methods did not capture all possible information carried by the responses (16).

Here, we investigated the role of millisecond-precise spike timing of individual neurons in the auditory cortex of alert nonhuman primates. In particular, unlike previous studies that used isolated and brief sounds, we considered the encoding of prolonged complex sounds that were presented as a continuous sequence. We computed direct estimates of the mutual information between stimulus and neural response, which can capture all of the information that neural responses carry about the sound stimuli. We found that auditory cortex neurons encode complex sounds by millisecond-precise variations in firing rates. Registering the same responses at a coarser (e.g., tens of milliseconds) precision considerably reduced the amount of information that can be recovered.

Results

Encoding of Complex Sounds.

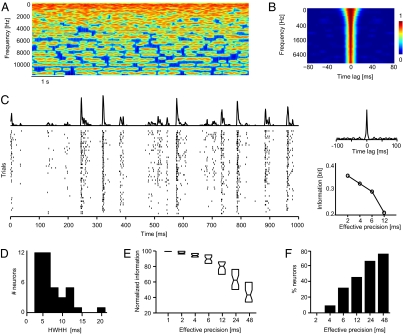

We recorded single neurons’ responses in the caudal auditory cortex of passively listening macaque monkeys. In a first experiment, we recorded the responses of 41 neurons to a sequence of pseudorandom tones (so-called “random chords”; Fig. 1A). Given that this stimulus has a short (few milliseconds) correlation time scale (Fig. 1B), and given the debate about whether there could be a precise temporal encoding in the absence of response locking to fast sequences of stimulus presentations (7), random chords are particularly suited to determine whether precise spike timing can contribute to the encoding of complex sounds within a rich acoustic background. We found that responses to random chords were highly reliable and precisely time-locked across stimulus repeats, resulting in good alignment of spikes across trials and sharp peaks in the trial-averaged response (the peristimulus time histogram, PSTH). This precision is visualized by the example responses in Fig. 1C and Fig. S1. The width of the peaks in the PSTH reflects the duration of stimulus-induced firing events and their trial-to-trial variability and can be used as a simple measure of response precision (17, 18). Following Desbordes et al. (19), we used the PSTH autocorrelogram to quantify the width of such peaks. For many neurons, this analysis revealed very narrow correlogram peaks (as illustrated by the example data; Fig. 1C and Fig. S1), indicating a response precision finer than 10 ms (the population median of the half-width at half-height of the PSTH autocorrelogram was 5 ms; Fig. 1D).
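As an illustration of this PSTH-based precision measure, the following minimal Python sketch computes the half-width at half-height (HWHH) of a PSTH autocorrelogram. It assumes that responses are given as a trials × bins array, that the PSTH mean is subtracted before correlating, and that the half-height is measured relative to the zero-lag peak; the function name and these choices are ours, and the exact normalization used by Desbordes et al. (19) may differ.

```python
import numpy as np

def psth_autocorr_hwhh(spikes, bin_ms=1.0, max_lag_ms=100):
    """Half-width at half-height (ms) of the PSTH autocorrelogram.

    spikes: array (n_trials, n_bins) of spike counts in bins of bin_ms.
    Assumptions: mean-subtracted PSTH, half height relative to zero lag.
    """
    psth = np.asarray(spikes, dtype=float).mean(axis=0)   # trial-averaged response
    x = psth - psth.mean()
    n = x.size
    max_lag = int(max_lag_ms / bin_ms)
    # Unnormalized autocorrelation for non-negative lags
    ac = np.array([np.dot(x[: n - k], x[k:]) for k in range(max_lag + 1)])
    ac = ac / ac[0]                       # zero-lag peak set to 1
    below = np.nonzero(ac < 0.5)[0]       # lags where the correlogram is under half height
    if below.size == 0:
        return float("nan")               # peak wider than max_lag_ms
    k = below[0]
    # Linear interpolation between lags k-1 and k for sub-bin resolution
    frac = (ac[k - 1] - 0.5) / (ac[k - 1] - ac[k])
    return (k - 1 + frac) * bin_ms
```

For a PSTH made of isolated Gaussian peaks with a standard deviation of 3 ms, this returns a value close to 5 ms, because the autocorrelation of a Gaussian is a Gaussian that is wider by a factor of √2.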

Fig. 1.

Responses to random chords. (A) Time-frequency representation of a short stimulus section. Red colors indicate high sound amplitude, and individual tones of the random chord stimulus are visible as red lines. (B) Autocorrelation of the amplitude of individual sound frequency bands. Strong correlations (red colors) are limited to within ±20 ms. (C) Example data showing the response of a single neuron for a stimulus section of 1-s duration. (Left) Spike times on individual stimulus repeats and the trial-averaged response (PSTH). (Right) Autocorrelation of the PSTH (Upper) and stimulus information (in bits) obtained at different effective temporal precisions (Lower). The high response precision of the neuron is visible as the tight alignment of spikes across trials, the narrow peak of the correlogram, and the rapid drop of information with decreasing effective precision. Note that information values are only shown for precision <2 ms, because finer precision did not add information (compare E and F). (D) Distribution of the PSTH autocorrelogram half-width (HWHH) across neurons (n = 41). The median value is 5 ms. (E) Normalized stimulus information obtained from responses sampled at different effective precisions. For each neuron, the absolute information values were normalized (100%) to the value at 1-ms precision. Boxplots indicate the median and 25th and 75th percentile across neurons. Information values from 1 to 6 ms were calculated by using Δt = 1-ms bins, whereby the effective precisions of 2, 3, and 6 ms were obtained by shuffling spikes in two, three, and six neighboring bins; values for 12-, 24-, and 48-ms precision were computed by using bins of Δt = 2, 4, and 8 ms, respectively, and by shuffling spikes in six neighboring bins. (F) Percentage of neurons with significant information loss (compared with 1-ms precision) at each effective temporal precision (bootstrap procedure, P < 0.05). Note that neurons with a significant information loss at a given precision (e.g., 4 ms) also lose information at even coarser precisions, hence resulting in an increasing proportion of neurons with decreasing effective precision.

Yet, the width of PSTH peaks does not fully establish the temporal scale at which spikes carry information because, for example, spikes might encode stimulus information depending on where they occur within a PSTH peak. We thus used Shannon information [I(S;R); Eq. 1] to directly quantify the information carried by temporal spike patterns sampled at different temporal precisions. Shannon information measures the reduction of uncertainty about the stimulus gained by observing a single-trial response (averaged over all stimuli and responses) and was computed from many (on average 55) repeats of the same stimulus sequence by using the direct method (20). We computed the stimulus information carried by spike patterns characterized as “binary” (spike/no spike) sequences sampled in fine (1-ms) time bins and used a temporal shuffling procedure to compare the stimulus information encoded at different “effective” response precisions. This procedure entailed shuffling spikes among nearby time bins (compare Methods and Fig. S2A); it progressively degrades the effective precision of a spike train without affecting the statistical dimensionality of the data. As “stimuli” we considered eight randomly selected sections from the long stimulus sequence (Fig. S2B). The resulting information estimates hence indicate how well these different sounds can be discriminated given the observed responses.
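The first step of this analysis, turning spike times into binary words, can be sketched as follows. This is a hypothetical helper written for illustration only: it assumes spike times in milliseconds for each trial, a known onset of the stimulus section, and words formed from consecutive, nonoverlapping groups of n bins.

```python
import numpy as np

def binary_words(trial_spike_times, section_start, section_len, dt=1.0, n=6):
    """Return an array (n_trials, n_windows, n) of 0/1 letters.

    A letter is 1 if at least one spike falls in the corresponding bin of
    width dt (ms); spikes outside [section_start, section_start + section_len]
    are ignored. Trailing bins that do not fill a whole word are dropped.
    """
    n_bins = int(round(section_len / dt))
    n_windows = n_bins // n
    edges = section_start + dt * np.arange(n_bins + 1)
    words = np.zeros((len(trial_spike_times), n_windows, n), dtype=np.uint8)
    for i, st in enumerate(trial_spike_times):
        counts, _ = np.histogram(st, bins=edges)
        binary = (counts > 0).astype(np.uint8)          # spike / no spike
        words[i] = binary[: n_windows * n].reshape(n_windows, n)
    return words
```

With 1-ms bins and n = 6, each word covers a 6-ms window; two spikes falling in the same bin are registered as a single 1.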

Estimating stimulus information from responses at effective precisions coarser than 1 ms resulted in a substantial information loss. For the example neurons, stimulus information dropped markedly as temporal precision was progressively degraded (Fig. 1C and Fig. S1A). Across the population of neurons, we quantified the dependence of stimulus information on response precision by normalizing the information values at coarser precisions by the information derived from the original (1-ms precision) response (Fig. 1E). The median information loss across neurons amounted to 5, 11, and 20% for effective precisions of 4, 6, and 12 ms, respectively, demonstrating that a considerable fraction of the encoded stimulus information is lost when responses are sampled at coarser resolution.

We evaluated the statistical significance of the information lost at coarser precision for individual neurons by using a bootstrap test. For each neuron, we estimated the information lost by reducing the temporal precision of an (artificially generated) response that does not carry information at that particular precision (compare Methods). This bootstrap test showed that the information loss was significant (P < 0.05) for some neurons already at 4-ms effective precision (7% of the neurons) and for nearly half of the neurons (46%) at 12-ms precision (Fig. 1F). Moreover, no neuron showed a significant information loss when the response precision was reduced from 1- to 2-ms bins. This result demonstrates that, for the present dataset, the temporal precision of information coding is coarser than 1 ms but finer than ≈4–6 ms.

The information that each spike carries when registered at high precision can be better appreciated by expressing information values as information per spike. For those neurons with a significant loss at 12-ms precision (46% of the neurons), information per spike was 4.3 bits/spike (median) at 1-ms response precision and dropped to 3.6 and 2.6 bits/spike at 6- and 12-ms precision, respectively.
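Information per spike is commonly obtained by dividing the information per response window by the mean spike count per window. The following is a minimal sketch under that assumption, with a hypothetical helper name; because it works on binary words pooled over stimuli and trials, the spike count is approximated by the number of occupied bins.

```python
import numpy as np

def bits_per_spike(info_bits_per_window, words):
    """Convert information per window (bits) into bits per spike.

    words: array (..., n_letters) of 0/1 letters pooled over all stimuli
    and trials. Assumption: multiple spikes in one bin count as one spike.
    """
    mean_spikes = np.asarray(words).sum(axis=-1).mean()   # mean spikes per window
    if mean_spikes == 0:
        return float("nan")
    return info_bits_per_window / mean_spikes
```

Because sparse responses contain few spikes per 6-ms window, values of several bits per spike, as reported here, are compatible with a much smaller amount of information per window.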

Response Features Contributing to Millisecond-Precise Coding.

The above analysis demonstrates that auditory cortex neurons encode sounds by using responses with a temporal precision of a few milliseconds. This result raises the question of which specific aspects of the spike train carry this information. It could be that the only information-bearing feature is the temporal modulation of the PSTH, that is, the time-dependent firing rate. However, it could also be that higher-order correlations within the spike response pattern carry additional information. We quantified the relative importance of PSTH modulations and of correlations between spike times by computing the information measure defined in SI Methods (Eq. S1), which is a lower bound to the amount of stimulus information that can be extracted by an observer that considers only the time-dependent firing rate but ignores higher-order correlations (21).


We found that this lower bound accounted for 99 ± 0.2% of the total information I(S;R) that can be derived from the responses at precisions finer than 48 ms and for 96 ± 1.6% at 48-ms precision (mean ± SEM across neurons). This high fraction demonstrates that essentially the entire stimulus information can be extracted without knowledge of higher-order correlations. Modulations of the time-dependent firing rate are thus a key factor in the auditory cortical representation of sounds.
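To make the idea of a rate-only observer concrete, the following sketch computes one standard lower bound of this kind: the information retained when stimuli are decoded with a model that uses only the per-bin spike probabilities (the time-dependent rate) and treats bins as independent, evaluated on the true response distribution. This formula is our assumption for illustration; Eq. S1 itself is given in SI Methods and may differ in detail, and no sampling-bias correction is applied.

```python
import numpy as np

def rate_only_lower_bound(words_by_stimulus):
    """Lower-bound information (bits) for a decoder ignoring correlations.

    Assumed form: sum_{s,r} P(s) P(r|s) log2[P_ind(s|r) / P(s)], where
    P_ind(r|s) is the product of per-bin spike probabilities and P(s) is
    uniform. words_by_stimulus: list over stimuli of (n_trials, n) 0/1 arrays.
    """
    n_stim = len(words_by_stimulus)
    p_s = 1.0 / n_stim
    # Per-bin spike probability given each stimulus (time-dependent rate)
    rates = [np.asarray(w, dtype=float).mean(axis=0) for w in words_by_stimulus]

    def p_ind(r, q):
        # Probability of word r if bins were independent with probabilities q
        return np.prod(np.where(r == 1, q, 1.0 - q))

    info = 0.0
    for s, w in enumerate(words_by_stimulus):
        w = np.asarray(w)
        uniq, counts = np.unique(w, axis=0, return_counts=True)
        for r, c in zip(uniq, counts):
            p_r_s = c / len(w)                          # empirical P(r|s)
            lik = np.array([p_ind(r, q) for q in rates])
            post = lik[s] / lik.sum()                   # P_ind(s|r) under uniform P(s)
            info += p_s * p_r_s * np.log2(post / p_s)
    return info
```

In a toy code where two stimuli differ only in the correlation between two bins (same rates, different joint patterns), this quantity is zero even though the full information is 1 bit, which is exactly the contribution that the comparison above found to be negligible.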


Encoding of Natural Sounds.

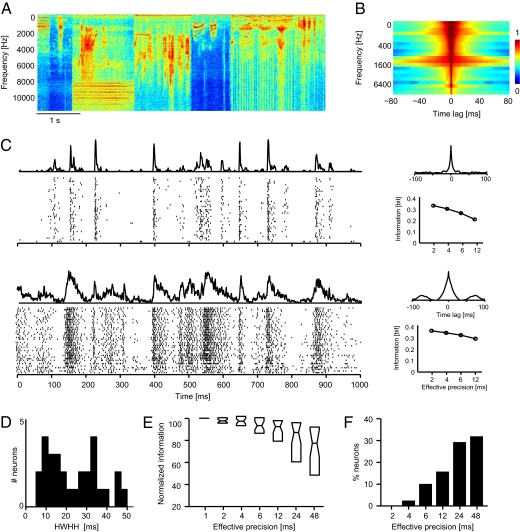

In a second experiment, we further tested the importance of sampling responses at millisecond precision using an additional set of neurons (n = 31) recorded during the presentation of natural sounds. This stimulus (Fig. 2A), which has a correlation time scale of a few tens of milliseconds (Fig. 2B), comprised a continuous sequence of environmental sounds, animal vocalizations, and conspecific macaque vocalizations.

Fig. 2.

Responses to natural sounds. (A) Time-frequency representation of a short stimulus section. Red colors indicate high sound amplitude. (B) Autocorrelation of the amplitude of individual sound frequency bands. Strong correlations (red colors) extend over several tens of milliseconds. (C) Example data showing the responses of two neurons for a stimulus section of 1-s duration. (Left) Spike times on individual stimulus repeats and the trial-averaged response (PSTH). (Right) Autocorrelation of the PSTH (Upper) and stimulus information (in bits) obtained at different effective temporal precisions (Lower). The upper neuron is characterized by narrow peaks in the PSTH and the PSTH autocorrelogram, whereas the lower neuron is characterized by broad peaks and only a marginal drop of stimulus information obtained at different effective temporal resolutions. Note that information values are only shown for precision <2 ms, because finer precision did not add information (compare E and F). (D) Distribution of the PSTH autocorrelogram half-width (HWHH) across neurons (n = 31). (E) Normalized stimulus information obtained from responses sampled at different effective precisions. For each neuron, the absolute information values were normalized (100%) to the value at 1-ms precision. Boxplots indicate the median and 25th and 75th percentile across neurons. (F) Percentage of neurons with significant information loss (compared with 1-ms precision) at each effective temporal precision (bootstrap procedure, P < 0.05). Note that neurons with a significant information loss at a given precision (e.g., 4 ms) also lose information at even coarser precisions, hence resulting in an increasing proportion of neurons with decreasing effective precision.

We found that responses to natural sounds were also characterized by high temporal precision: Several neurons (e.g., Fig. 2C, Upper) exhibited responses as precise as those observed during random chord stimulation. For these neurons, spike times were reliable and precisely time-locked across stimulus repeats, resulting in sharp peaks in the trial-averaged response and narrow peaks in the PSTH autocorrelogram. However, other neurons exhibited responses with coarser precision and wider peaks in the PSTH (e.g., Fig. 2C, Lower). This neuron-by-neuron variability is reflected in the wide distribution of the PSTH-derived response precision (median, 16 ms; Fig. 2D) and the wide distribution of information loss when reading responses at different effective temporal precisions (Fig. 2E). In fact, across the entire sample of neurons, the proportional information lost by reducing the effective temporal precision was smaller than that obtained during stimulation with random chords (compare Fig. 1).

Importantly, however, a subset of neurons carried considerable stimulus information at high temporal precision and showed a significant information loss when the effective response precision was degraded. The fraction of neurons with significant information loss (bootstrap test; P < 0.05) was 11 and 17% at 6 and 12 ms, respectively (Fig. 2F). For those with a significant loss at 12 ms, the information transmitted per spike dropped from 3.8 bits/spike at 1 ms to 3.2 and 2.7 bits/spike (median) at 6- and 12-ms precision, respectively. This result demonstrates that millisecond-precise spike timing can also carry additional information about natural sounds that cannot be recovered from the spike count on a scale of ≈10 ms or coarser.

Discussion

Temporal Encoding of Sensory Information.

Determining the precision of the neural code (i.e., the coarsest resolution sufficient to extract all of the information carried by a neuron's response) is a fundamental prerequisite for characterizing and understanding sensory representations (17, 18, 22–24). In recent years, the temporal precision of neural codes has been systematically investigated in several sensory structures, and in many cases, it was suggested that the nervous system uses millisecond-precise activity to encode sensory information (22, 25, 26). For example, neurons in rat somatosensory cortex encode information about whisker movements with a precision of a few milliseconds (27), and the responses of retinal ganglion cells can account for the visual discrimination performance of the animal only if they are registered with millisecond precision (28).

In the auditory system, the role of millisecond-precise spike times is well established at the peripheral and subcortical level (29, 30), but it remains debated whether auditory cortical neurons encode acoustic information with millisecond-precise spike timing (8, 9, 11). Our results demonstrate that neurons in primate auditory cortex can indeed use spike times with a precision of a few milliseconds to carry information about complex and behaviorally relevant sounds, albeit at a precision coarser than found for peripheral or subcortical auditory structures. When sampling or decoding these responses at millisecond precision, considerably more information can be obtained than when decoding the same responses at coarser precision. For example, about a third of the neurons exhibited a significant information loss at 6-ms effective precision. Our results also revealed that essentially all of the information in the spike train is provided by the time-dependent firing rate and that higher-order correlations between spike times add negligibly to this. Together, these results show that auditory cortex neurons encode complex sounds by using finely timed modulations of the firing rate that occur on the scale of a few milliseconds.

It is tempting to hypothesize that such millisecond-precise single-neuron encoding serves as a neural substrate for recently reported behavioral correlates of millisecond-precise cortical activity. For example, trained rats were shown to be able to report millisecond differences in patterns of electrical microstimulation applied to auditory cortex (31), demonstrating that auditory cortex can access temporally precise patterns of local activity. In addition, another study demonstrated that behavioral performance can be better explained when decoding neural population activity sampled at a precision well below 10 ms than when decoding the same responses at coarser time scales (32). The present results demonstrate that the responses of individual neurons can indeed contribute to such millisecond encoding of complex sounds.

Our finding that millisecond-precise spike patterns can carry considerable information about the acoustic environment suggests that neurons in higher auditory cortices should be sensitive to the spike timing of neurons in earlier cortical stages to extract such information. Acoustic information might hence be passed through the different stages of acoustic analysis either by preserving the temporal precision of activity volleys generated in the auditory periphery or by creating de novo temporally precise activity patterns that encode nontemporal features. In either case, our findings emphasize that precise response timing not only prevails at peripheral stages of the auditory system but is a general principle of auditory coding throughout the sensory pathway.

Stimulus Decoding Versus Direct Information Estimates.

Several previous analyses of the time scales of stimulus representations in auditory cortex were based on decoding techniques, which predict the most likely stimulus given the observation of a neural response (e.g., ref. 9), rather than on direct estimates of mutual information as used here. By making specific assumptions, these methods reduce the statistical complexity and can yield reliable results even when only small amounts of experimental data are available. However, even well-constructed decoders may not be able to capture all of the information carried by neural responses (16). To determine whether such methodological aspects may affect the apparent temporal encoding precision, we compared results from linear stimulus decoding and direct information methods (compare SI Materials and Methods and SI Results). We found that linear decoding resulted in smaller losses of stimulus information when degrading temporal precision and, hence, would have indicated a coarser encoding time scale than that found with direct estimates of the stimulus information (SI Results and Fig. S3). This lower temporal sensitivity suggests that some decoding procedures may fail to reveal part of the information encoded by neurons at very fine precisions.

Dependency of Coding Precision on Stimulus Dynamics.

Our results reveal a dependency of neural response precision on the prominent stimulus time scales: The average PSTH-derived response precision (Figs. 1D and 2D) was finer, and the information loss with decreasing response precision (Figs. 1E and 2E) was larger, for the chord stimulus, which had a much shorter autocorrelation time scale than the natural sounds (compare Figs. 1B and 2B). Our results hence suggest that the neural response precision and the importance of millisecond-precise spike patterns may depend on the overall dynamics of stimulus presentation. In this regard, our findings parallel previous results obtained in the visual thalamus of anesthetized cats, where visual neurons were found to exhibit higher response precision for faster visual stimuli (18). Our results hence generalize this dependency of neural coding time scales upon stimulus time scales to the auditory system, sensory cortex, and the alert primate.

The apparent dependency of the encoding precision on stimulus dynamics also suggests that even finer encoding precisions than reported here may be found when using stimuli with yet faster dynamics. In light of this stimulus dependency, our estimates may represent only a lower bound on the achievable encoding precision, leaving open the possibility that auditory cortex neurons encode sensory information at the (sub)millisecond scale.

What biophysical mechanisms could be responsible for the generation of stimulus information at high temporal spike precision in the context of more rapidly modulating sensory stimuli? A simple hypothesis is that the precision of spike times is determined by the slope of the membrane voltage when crossing the firing threshold, with steeper slopes resulting in more precise spike times (17, 33). On the basis of this hypothesis, our results suggest that stimuli with faster dynamics elicit more rapid membrane depolarizations and, hence, more precisely timed spikes that, in turn, encode stimulus information at high temporal precision. Moreover, a recent study proposed that cortical networks driven by static or slowly varying inputs are unable to encode information by reliable and precise spike times (34). In light of this previous result, our results suggest that, during stimulation with complex sounds, the input to auditory cortex varies sufficiently rapidly to enable the representation of stimulus information by millisecond-precise spike timing.
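The slope hypothesis can be made concrete with a first-order (linearized) argument, added here as an illustration rather than as a model used in this study. If the membrane potential V(t) reaches the threshold θ at time t*, a small voltage perturbation δV shifts the crossing time by δt, where V(t*) + V̇(t*) δt + δV ≈ θ. Hence

$$\delta t \approx -\frac{\delta V}{\dot V(t^{*})}, \qquad \sigma_t \approx \frac{\sigma_V}{\lvert \dot V(t^{*}) \rvert}$$

so that, for a fixed amount of voltage noise σ_V, doubling the depolarization slope at threshold roughly halves the spike-time jitter σ_t. The approximation assumes that the noise is small relative to the voltage change over the jitter interval.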

In addition to depending on the overall stimulus dynamics, auditory cortex responses are also modulated by sounds that occurred several seconds in the past (35, 36). This history or context dependence might be very relevant to the study of neural codes: For example, previous studies reporting a coarser coding precision of 10–20 ms for auditory cortex neurons were based on the presentation of short stimuli (natural sounds or clicks) interspersed with long periods of silence (9, 11). In such paradigms, individual “target” sounds are presented in a context of silence. In contrast, we presented continuous sequences of sounds, hence effectively embedding each target sound in the context of other (similar) sounds. Our finding of a higher coding precision than in previous studies might well result from this difference in stimulation paradigm. Future studies should directly investigate the context dependency of neural coding time scales, for example, by presenting the same set of stimuli either separated by extended periods of silence or embedded in a context of stimuli of the same kind. Addressing this issue will be crucial to understand how the brain forms sensory representations that can operate efficiently in continuously changing sensory environments.

Materials and Methods

Recording Procedures and Data Extraction.

All procedures were approved by the local authorities (Regierungspräsidium Tübingen) and were in full compliance with the guidelines of the European Community (Directive 86/609/EEC). Neural activity was recorded from the caudal auditory cortex of three adult rhesus monkeys (Macaca mulatta) by using procedures detailed previously (15, 37). Briefly, single-neuron responses were recorded by using multiple microelectrodes (1–6 MΩ impedance), amplified (Alpha Omega system), and digitized at 20.83 kHz. Recordings were performed in a dark and anechoic booth while the animals passively listened to the acoustic stimuli. Recording sites were assigned to auditory fields (primary field A1 and caudal belt fields CM and CL) based on stereotaxic coordinates, frequency maps constructed for each animal, and the responsiveness to tone vs. band-passed stimuli (37). Spike-sorted activity was extracted by using commercial spike-sorting software (Plexon Offline Sorter) after high-pass filtering the raw signal at 500 Hz (third-order Butterworth filter). Only units with a high signal-to-noise ratio (SNR > 10) and <1% of spikes with interspike intervals shorter than 2 ms were included in this study.
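The interspike-interval inclusion criterion can be written as a short check. This is a hypothetical helper for illustration; it assumes the criterion is evaluated as the fraction of interspike intervals (rather than spikes) below 2 ms, and it does not implement the SNR criterion.

```python
import numpy as np

def passes_isi_criterion(spike_times_ms, min_isi_ms=2.0, max_fraction=0.01):
    """True if fewer than max_fraction of ISIs are shorter than min_isi_ms."""
    isi = np.diff(np.sort(np.asarray(spike_times_ms, dtype=float)))
    if isi.size == 0:
        return True                       # fewer than two spikes: no violations
    return bool(np.mean(isi < min_isi_ms) < max_fraction)
```

A well-isolated unit should rarely fire twice within 2 ms because of refractoriness, so a higher fraction of such intervals suggests contamination by other units.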

Acoustic Stimuli.

Sounds (average 65 dB sound pressure level) were delivered from two calibrated free-field speakers (JBL Professional) at 70-cm distance. Two different kinds of stimuli were used. The first was a 40-s sequence of pseudorandom tones (“random chords”). This sequence was generated by presenting multiple tones (125-ms duration) at different frequencies (12 fixed frequency bins per octave), with each tone frequency appearing (independently of the others) with an exponentially distributed intertone interval (range 30–1,000 ms, median 250 ms; see Fig. 1A for a spectral representation). The second was a continuous 52-s sequence of natural sounds. This sequence was created by concatenating 21 snippets of various naturalistic sounds, each 1–4 s long, without periods of silence in between (animal vocalizations, environmental sounds, conspecific vocalizations, and short samples of human speech; Fig. 2A). Each stimulus was repeated multiple times (on average 55 repeats of the same stimulus; range, 39–70 repeats), and for a given recording site only one of the two stimuli was presented.
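The timing of a random chord sequence can be sketched as independent tone schedules, one per frequency channel. This is an illustration under several assumptions that are not specified above: the intertone interval is measured from the offset of one tone to the onset of the next tone in the same channel, truncation to 30–1,000 ms is done by rejection sampling (which shifts the median somewhat away from 250 ms), and the number of channels is a free parameter.

```python
import numpy as np

def random_chord_onsets(n_channels, duration_ms, tone_ms=125.0, median_ms=250.0,
                        min_ms=30.0, max_ms=1000.0, rng=None):
    """Tone onset times (ms) for each frequency channel, drawn independently."""
    rng = np.random.default_rng() if rng is None else rng
    scale = median_ms / np.log(2.0)      # exponential scale whose median is median_ms

    def draw_gap():
        # Truncate to [min_ms, max_ms] by rejection sampling
        while True:
            gap = rng.exponential(scale)
            if min_ms <= gap <= max_ms:
                return gap

    onsets = []
    for _ in range(n_channels):          # channels do not interact
        times, t = [], draw_gap()
        while t < duration_ms:
            times.append(t)
            t += tone_ms + draw_gap()    # assumed: gap runs from offset to next onset
        onsets.append(np.array(times))
    return onsets
```

Because each channel draws its own intervals, the number of simultaneously sounding tones fluctuates over time, which gives the stimulus its short correlation time.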

The temporal structure of these stimuli was quantified by using the autocorrelation of individual frequency bands. The envelope of individual quarter octave bands was extracted by using third-order Butterworth filters and applying the Hilbert transform, and the autocorrelation was computed. This calculation revealed that the correlation time constant of the random chords extended over <20 ms (Fig. 1B) and was considerably shorter than that of the natural sounds (Fig. 2B).
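The envelope autocorrelation of a single frequency band can be sketched in NumPy as follows. The band-pass filtering step is assumed to have been done already (the input is one band-limited signal), and the analytic signal is built directly with the FFT, which is the standard construction of the Hilbert transform for a sampled signal; the helper name and the lag range are illustrative choices.

```python
import numpy as np

def envelope_autocorr(band_signal, fs, max_lag_s=0.1):
    """Normalized autocorrelation of the Hilbert envelope of a band-passed signal."""
    x = np.asarray(band_signal, dtype=float)
    n = x.size
    # Analytic signal: keep DC (and Nyquist), double positive, drop negative frequencies
    h = np.zeros(n)
    h[0] = 1.0
    if n % 2 == 0:
        h[n // 2] = 1.0
        h[1:n // 2] = 2.0
    else:
        h[1:(n + 1) // 2] = 2.0
    env = np.abs(np.fft.ifft(np.fft.fft(x) * h))   # amplitude envelope
    env = env - env.mean()
    max_lag = int(round(max_lag_s * fs))
    ac = np.array([np.dot(env[: n - k], env[k:]) for k in range(max_lag + 1)])
    return ac / ac[0]
```

For an amplitude-modulated tone with a 10-Hz envelope, the result is close to −1 at a 50-ms lag and close to +1 at 100 ms (scaled down slightly by the shrinking overlap), whereas an envelope that fluctuates quickly, as for the random chords, decorrelates within a few tens of milliseconds.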

Information Theoretic Analysis.

Information theoretic analysis was based on direct estimates of stimulus information I(S;R) and followed detailed procedures (15, 38, 39). The Shannon (mutual) information between a set of stimuli S and a set of neural responses R is defined as

$$I(S;R) = \sum_{s} P(s) \sum_{r} P(r \mid s) \log_2 \frac{P(r \mid s)}{P(r)} \qquad (1)$$

with P(s) the probability of stimulus s, P(r|s) the probability of response r given presentation of stimulus s, and P(r) the probability of response r across all trials to any stimulus. It is important to note that, in the formalism used here, the information theoretic calculation does not make assumptions about the particular acoustic features driving the responses (see also refs. 15 and 40). As a result, our information estimates quantify the overall amount of knowledge about the sensory environment that can be gained from the neural response.
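A direct ("plug-in") evaluation of Eq. 1 replaces the probabilities with observed frequencies of response words. The sketch below assumes equiprobable stimuli and uses a hypothetical function name; it deliberately omits the limited-sampling bias correction described in SI Materials and Methods, so on small datasets it overestimates the information.

```python
import numpy as np
from collections import Counter

def plugin_information(words_by_stimulus):
    """Plug-in estimate of Eq. 1 in bits, assuming uniform P(s).

    words_by_stimulus: list over stimuli; each entry is a sequence of
    response words (one per trial), e.g. tuples or arrays of 0/1 letters.
    """
    n_stim = len(words_by_stimulus)
    p_s = 1.0 / n_stim
    cond = []                                    # P(r|s) for each stimulus
    for trials in words_by_stimulus:
        keys = [tuple(np.asarray(w).ravel()) for w in trials]
        counts = Counter(keys)
        cond.append({r: c / len(keys) for r, c in counts.items()})
    p_r = Counter()                              # P(r) = sum_s P(s) P(r|s)
    for d in cond:
        for r, p in d.items():
            p_r[r] += p_s * p
    info = 0.0
    for d in cond:
        for r, p in d.items():
            info += p_s * p * np.log2(p / p_r[r])
    return info
```

With eight stimuli the estimate is bounded by log2(8) = 3 bits per window; two stimuli that always evoke distinct words give exactly 1 bit, and identical response distributions give 0 bits.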

The stimuli for the information analysis were defined by eight (randomly selected) 1-s-long sections from the long sound sequences (Fig. S2B). For each section, the time axis was divided into a number of nonoverlapping time windows of length T (here ranging from 6 to 48 ms), and for each window, we computed the information carried by the neural responses about which of these eight different complex sounds was being presented. Information values were then averaged over all time windows and over 50 sets of eight randomly selected sound sections to ensure that the results did not depend on the exact selection of stimulus sections. The limited-sampling bias of the information values was corrected by using established procedures described in SI Materials and Methods.
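The averaging scheme can be sketched as a loop over random stimulus sets and time windows. This illustration assumes that the long sequence has already been cut into consecutive 1-s sections and converted into response words; `info_fn` is a hypothetical callback standing in for a (bias-corrected) information estimator.

```python
import numpy as np

def averaged_information(words, info_fn, n_stimuli=8, n_sets=50, rng=None):
    """Average windowed information over windows and random stimulus sets.

    words: array (n_trials, n_sections, n_windows, n_letters) of 0/1 letters.
    info_fn: callback taking a list of n_stimuli arrays (n_trials, n_letters),
    one per stimulus, and returning information in bits.
    """
    rng = np.random.default_rng() if rng is None else rng
    _, n_sections, n_windows, _ = words.shape
    set_means = []
    for _ in range(n_sets):
        chosen = rng.choice(n_sections, size=n_stimuli, replace=False)
        # One information value per window, comparing the chosen sections
        per_window = [info_fn([words[:, s, w, :] for s in chosen])
                      for w in range(n_windows)]
        set_means.append(np.mean(per_window))
    return float(np.mean(set_means))
```

Each window position is treated as a separate eight-way discrimination problem, so the result is an average information per window of length T.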

Information Dependence on Effective Response Precision.

Responses were characterized as binary n-letter words, whereby the letter in each time bin (of width Δt) indicates the absence (0) or presence (1) of at least one spike. Here, we considered bins of Δt = 1, 2, 4, and 8 ms and patterns of length n = 6. To quantify the impact of reading responses at different effective precisions, we compared the information in the full pattern with that in patterns of degraded precision. To reduce the effective response precision, we shuffled (independently across trials and stimuli) the responses in groups of N neighboring time bins, where the parameter N indicates the temporal extent of the shuffling (Fig. S2A). It is important to note that this shuffling procedure does not alter the statistical dimensionality (number of time bins n) of the data and allows one to investigate different effective temporal precisions without changing the absolute length of the time window T. The latter is important because the associated biases of the information estimate are kept constant (38) and because it avoids spurious scaling of the information values caused by changing the considered time window T: Direct information estimates increase rapidly with decreasing window T because the use of short windows can underestimate the redundancy of the information carried by spikes in adjacent response windows when these windows become too short (41, 42). Note that this shuffling procedure is equivalent to decreasing the spiking precision by randomly jittering spike times within the window T with a jitter taken from a uniform distribution.
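The precision-degrading shuffle can be sketched as follows. The sketch assumes that the groups of N neighboring bins are consecutive and nonoverlapping and that the word length is a multiple of N; the function name is hypothetical. Each word, that is, each trial and window, is shuffled independently.

```python
import numpy as np

def degrade_precision(words, group_size, rng):
    """Shuffle letters within consecutive groups of group_size bins.

    words: array (..., n) of 0/1 letters; a shuffled copy is returned.
    The number of spikes within each group is preserved, but their exact
    position within the group is lost.
    """
    words = np.array(words, copy=True)
    n = words.shape[-1]
    if n % group_size:
        raise ValueError("word length must be a multiple of group_size")
    flat = words.reshape(-1, n)          # view: one row per trial/window
    for row in flat:
        for start in range(0, n, group_size):
            rng.shuffle(row[start:start + group_size])   # in-place on the view
    return words
```

With Δt = 1 ms and n = 6, group sizes of 2, 3, and 6 bins yield effective precisions of 2, 3, and 6 ms while every word keeps six letters, which is what keeps the dimensionality, and hence the sampling bias, constant.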

To quantify the information loss induced by ignoring response precision, we normalized (for each neuron) the information values obtained at coarser precisions by the value at 1-ms precision (hence defined as 100%; compare Figs. 1E and 2E) and calculated the information loss as 100% minus the normalized information. To evaluate the statistical significance of the information loss for individual neurons, we used a nonparametric bootstrap procedure: for each unit, we first randomly shuffled the neural response along the time dimension, independently in each trial, and then computed the percent information loss when evaluating this randomized response at coarser effective precisions. This procedure was repeated 500 times, and the 95th percentile of the resulting distribution (P = 0.05) was used as the statistical threshold to identify significant information loss.
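The normalization and the significance test can be sketched as below. This is a hedged illustration, not the authors' code: `loss_fn` is a hypothetical callable that returns the percent information loss for a response array (for example, computed from information estimates at 1-ms and at coarser effective precision), responses are assumed to be an array with one row of time bins per trial, and the function names are hypothetical.

```python
import numpy as np


def percent_information_loss(info_coarse, info_1ms):
    """Information loss (%) relative to the 1-ms value, which defines 100%."""
    return 100.0 - 100.0 * info_coarse / info_1ms


def null_losses(responses, loss_fn, n_iter=500, seed=0):
    """Null distribution of the loss from time-shuffled responses.

    responses: (n_trials, n_bins) array, one row per trial.
    loss_fn: hypothetical callable mapping a response array to a percent
    information loss.
    """
    rng = np.random.default_rng(seed)
    losses = np.empty(n_iter)
    for i in range(n_iter):
        # Permute bins along the time axis, independently in every trial
        shuffled = np.array([rng.permutation(trial) for trial in responses])
        losses[i] = loss_fn(shuffled)
    return losses


def is_significant(observed_loss, losses, percentile=95.0):
    """The loss is significant if it exceeds the chosen null percentile."""
    return bool(observed_loss > np.percentile(losses, percentile))
```

For example, an information value of 0.75 bits at a coarse precision against 1.0 bit at 1-ms precision corresponds to a 25% loss, which would be called significant only if it exceeded the 95th percentile of the 500 shuffled values.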

Acknowledgments

We are supported by the Max Planck Society, the Brain Machine Interface (BMI) project of the Italian Institute of Technology, the Compagnia di San Paolo, and the Bernstein Center for Computational Neuroscience Tübingen.

Footnotes

The authors declare no conflict of interest.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1012656107/-/DCSupplemental.

References

- 1. Plack CJ, Oxenham AJ, Fay RR, Popper AN. Pitch: Neural Coding and Perception. New York: Springer; 2005.

- 2. Rosen S. Temporal information in speech: Acoustic, auditory and linguistic aspects. Philos Trans R Soc Lond B Biol Sci. 1992;336:367–373. doi: 10.1098/rstb.1992.0070.

- 3. Zatorre RJ. Pitch perception of complex tones and human temporal-lobe function. J Acoust Soc Am. 1988;84:566–572. doi: 10.1121/1.396834.

- 4. DeWeese MR, Wehr M, Zador AM. Binary spiking in auditory cortex. J Neurosci. 2003;23:7940–7949. doi: 10.1523/JNEUROSCI.23-21-07940.2003.

- 5. Heil P, Irvine DR. First-spike timing of auditory-nerve fibers and comparison with auditory cortex. J Neurophysiol. 1997;78:2438–2454. doi: 10.1152/jn.1997.78.5.2438.

- 6. Furukawa S, Xu L, Middlebrooks JC. Coding of sound-source location by ensembles of cortical neurons. J Neurosci. 2000;20:1216–1228. doi: 10.1523/JNEUROSCI.20-03-01216.2000.

- 7. Lu T, Liang L, Wang X. Temporal and rate representations of time-varying signals in the auditory cortex of awake primates. Nat Neurosci. 2001;4:1131–1138. doi: 10.1038/nn737.

- 8. Wang X, Lu T, Bendor D, Bartlett E. Neural coding of temporal information in auditory thalamus and cortex. Neuroscience. 2008;157:484–494. doi: 10.1016/j.neuroscience.2008.07.050.

- 9. Schnupp JW, Hall TM, Kokelaar RF, Ahmed B. Plasticity of temporal pattern codes for vocalization stimuli in primary auditory cortex. J Neurosci. 2006;26:4785–4795. doi: 10.1523/JNEUROSCI.4330-05.2006.

- 10. Bizley JK, Walker KM, King AJ, Schnupp JW. Neural ensemble codes for stimulus periodicity in auditory cortex. J Neurosci. 2010;30:5078–5091. doi: 10.1523/JNEUROSCI.5475-09.2010.

- 11. Nelken I, Chechik G, Mrsic-Flogel TD, King AJ, Schnupp JW. Encoding stimulus information by spike numbers and mean response time in primary auditory cortex. J Comput Neurosci. 2005;19:199–221. doi: 10.1007/s10827-005-1739-3.

- 12. Ter-Mikaelian M, Sanes DH, Semple MN. Transformation of temporal properties between auditory midbrain and cortex in the awake Mongolian gerbil. J Neurosci. 2007;27:6091–6102. doi: 10.1523/JNEUROSCI.4848-06.2007.

- 13. Rennaker RL, Carey HL, Anderson SE, Sloan AM, Kilgard MP. Anesthesia suppresses nonsynchronous responses to repetitive broadband stimuli. Neuroscience. 2007;145:357–369. doi: 10.1016/j.neuroscience.2006.11.043.

- 14. Schroeder CE, Lakatos P, Kajikawa Y, Partan S, Puce A. Neuronal oscillations and visual amplification of speech. Trends Cogn Sci. 2008;12:106–113. doi: 10.1016/j.tics.2008.01.002.

- 15. Kayser C, Montemurro MA, Logothetis NK, Panzeri S. Spike-phase coding boosts and stabilizes information carried by spatial and temporal spike patterns. Neuron. 2009;61:597–608. doi: 10.1016/j.neuron.2009.01.008.

- 16. Quian Quiroga R, Panzeri S. Extracting information from neuronal populations: Information theory and decoding approaches. Nat Rev Neurosci. 2009;10:173–185. doi: 10.1038/nrn2578.

- 17. Mainen ZF, Sejnowski TJ. Reliability of spike timing in neocortical neurons. Science. 1995;268:1503–1506. doi: 10.1126/science.7770778.

- 18. Butts DA, et al. Temporal precision in the neural code and the timescales of natural vision. Nature. 2007;449:92–95. doi: 10.1038/nature06105.

- 19. Desbordes G, et al. Timing precision in population coding of natural scenes in the early visual system. PLoS Biol. 2008;6:e324. doi: 10.1371/journal.pbio.0060324.

- 20. Rieke F, Warland D, de Ruyter van Steveninck RR, Bialek W. Spikes: Exploring the Neural Code. Cambridge, MA: MIT Press; 1999.

- 21. Latham PE, Nirenberg S. Synergy, redundancy, and independence in population codes, revisited. J Neurosci. 2005;25:5195–5206. doi: 10.1523/JNEUROSCI.5319-04.2005.

- 22. Panzeri S, Brunel N, Logothetis NK, Kayser C. Sensory neural codes using multiplexed temporal scales. Trends Neurosci. 2010;33:111–120. doi: 10.1016/j.tins.2009.12.001.

- 23. Bialek W, Rieke F, de Ruyter van Steveninck RR, Warland D. Reading a neural code. Science. 1991;252:1854–1857. doi: 10.1126/science.2063199.

- 24. Victor JD. How the brain uses time to represent and process visual information. Brain Res. 2000;886:33–46. doi: 10.1016/s0006-8993(00)02751-7.

- 25. Reinagel P, Reid RC. Temporal coding of visual information in the thalamus. J Neurosci. 2000;20:5392–5400. doi: 10.1523/JNEUROSCI.20-14-05392.2000.

- 26. Reich DS, Mechler F, Purpura KP, Victor JD. Interspike intervals, receptive fields, and information encoding in primary visual cortex. J Neurosci. 2000;20:1964–1974. doi: 10.1523/JNEUROSCI.20-05-01964.2000.

- 27. Arabzadeh E, Panzeri S, Diamond ME. Deciphering the spike train of a sensory neuron: Counts and temporal patterns in the rat whisker pathway. J Neurosci. 2006;26:9216–9226. doi: 10.1523/JNEUROSCI.1491-06.2006.

- 28. Jacobs AL, et al. Ruling out and ruling in neural codes. Proc Natl Acad Sci USA. 2009;106:5936–5941. doi: 10.1073/pnas.0900573106.

- 29. Johnson DH. The relationship between spike rate and synchrony in responses of auditory-nerve fibers to single tones. J Acoust Soc Am. 1980;68:1115–1122. doi: 10.1121/1.384982.

- 30. Lesica NA, Grothe B. Dynamic spectrotemporal feature selectivity in the auditory midbrain. J Neurosci. 2008;28:5412–5421. doi: 10.1523/JNEUROSCI.0073-08.2008.

- 31. Yang Y, DeWeese MR, Otazu GH, Zador AM. Millisecond-scale differences in neural activity in auditory cortex can drive decisions. Nat Neurosci. 2008;11:1262–1263. doi: 10.1038/nn.2211.

- 32. Engineer CT, et al. Cortical activity patterns predict speech discrimination ability. Nat Neurosci. 2008;11:603–608. doi: 10.1038/nn.2109.

- 33. Azouz R, Gray CM. Cellular mechanisms contributing to response variability of cortical neurons in vivo. J Neurosci. 1999;19:2209–2223. doi: 10.1523/JNEUROSCI.19-06-02209.1999.

- 34. London M, Roth A, Beeren L, Häusser M, Latham PE. Sensitivity to perturbations in vivo implies high noise and suggests rate coding in cortex. Nature. 2010;466:123–127. doi: 10.1038/nature09086.

- 35. Asari H, Zador AM. Long-lasting context dependence constrains neural encoding models in rodent auditory cortex. J Neurophysiol. 2009;102:2638–2656. doi: 10.1152/jn.00577.2009.

- 36. Ulanovsky N, Las L, Farkas D, Nelken I. Multiple time scales of adaptation in auditory cortex neurons. J Neurosci. 2004;24:10440–10453. doi: 10.1523/JNEUROSCI.1905-04.2004.

- 37. Kayser C, Petkov CI, Logothetis NK. Visual modulation of neurons in auditory cortex. Cereb Cortex. 2008;18:1560–1574. doi: 10.1093/cercor/bhm187.

- 38. Panzeri S, Senatore R, Montemurro MA, Petersen RS. Correcting for the sampling bias problem in spike train information measures. J Neurophysiol. 2007;98:1064–1072. doi: 10.1152/jn.00559.2007.

- 39. Magri C, Whittingstall K, Singh V, Logothetis NK, Panzeri S. A toolbox for the fast information analysis of multiple-site LFP, EEG and spike train recordings. BMC Neurosci. 2009;10:81. doi: 10.1186/1471-2202-10-81.

- 40. Strong SP, Koberle R, de Ruyter van Steveninck RR, Bialek W. Entropy and information in neural spike trains. Phys Rev Lett. 1998;80:197–200.

- 41. Panzeri S, Schultz SR. A unified approach to the study of temporal, correlational, and rate coding. Neural Comput. 2001;13:1311–1349. doi: 10.1162/08997660152002870.

- 42. Oizumi M, Ishii T, Ishibashi K, Hosoya T, Okada M. Mismatched decoding in the brain. J Neurosci. 2010;30:4815–4826. doi: 10.1523/JNEUROSCI.4360-09.2010.