Abstract

Observing other people’s actions activates a network of brain regions that is also activated during the execution of these actions. Here, we used functional magnetic resonance imaging to test whether these “mirror” regions in frontal and parietal cortices primarily encode the spatiomotor aspects or the functional goal-related aspects of observed tool actions. Participants viewed static depictions of actions consisting of a tool object (e.g., key) and a target object (e.g., keyhole). They judged the actions either with regard to whether the objects were oriented correctly for the action to succeed (spatiomotor task) or whether an action goal could be achieved with the objects (function task). Compared with a control condition, both tasks activated regions in left frontoparietal cortex previously implicated in action observation and execution. Of these regions, the premotor cortex and supramarginal gyrus were primarily activated during the spatiomotor task, whereas the middle frontal gyrus was primarily activated during the function task. Regions along the intraparietal sulcus were more strongly activated during the spatiomotor task but only when the spatiomotor properties of the tool object were unknown in advance. These results suggest a division of labor within the action observation network that maps onto a similar division previously proposed for action execution.

Keywords: intraparietal sulcus, middle frontal gyrus, mirror neurons, premotor cortex, tool use

Introduction

What sets human action apart from even our closest relatives in the animal kingdom is the capacity for complex tool use (cf. Johnson-Frey 2003). The ability to act “with” objects rather than just “on” them has unlocked a vast range of effects humans can produce in the environment (e.g., Johnson-Frey and Grafton 2003). Great strides forward have been made in identifying the brain systems that guide tool actions (for reviews, see Johnson-Frey et al. 2005; Lewis 2006), but it is unclear how this important class of actions is understood when observed.

Complex tool use differs from simple object-directed actions (e.g., grasping) in that successful action requires not only a visual alignment of hand and goal object but also the application of learned knowledge about proper object use (e.g., Buxbaum 2001; Johnson-Frey and Grafton 2003). This knowledge appears to be hierarchically organized, linking action goals both to the objects that have to be used and to the motor behaviors that have to be performed with these objects for the action to be successful (e.g., Nowak et al. 2000; Lindemann et al. 2006; Grafton and Hamilton 2007; Botvinick et al. 2009). For instance, to unlock a door, one needs to correctly insert (the motor act) a key (the tool). The distinction between proper object selection and proper motor performance features prominently in models of action production (e.g., Milner and Goodale 1995; Oztop and Arbib 2002; Lindemann et al. 2006; Botvinick et al. 2009). That both kinds of knowledge contribute to tool use is demonstrated by patients who can select the appropriate tool for a task but fail to use it correctly, or who perform the correct movements with the wrong tool (e.g., brushing their teeth with a comb), while their grasping is often unaffected (e.g., Ochipa et al. 1989; Sirigu et al. 1991; Buxbaum and Saffran 1998; Hodges et al. 1999).

It has been proposed that, conversely, observers can derive the goal of an observed action if the action’s perceivable attributes—the motor behaviors performed and the objects used—can be mapped onto the control hierarchy that also guides its production (e.g., Bach et al. 2005; Grafton and Hamilton 2007). Evidence for this view comes from the finding that the observation of motor acts engages a network of regions that is also centrally involved in the spatiomotor control of these actions, such as the ventral and dorsal premotor cortices (PMC), as well as regions along the left postcentral and intraparietal sulci (IPS) (e.g., di Pellegrino et al. 1992; Iacoboni et al. 1999; Buccino et al. 2001; Grèzes et al. 2003; Rozzi et al. 2008; for tool actions, see Manthey et al. 2003; Baumgaertner et al. 2007). However, these so-called mirror networks appear to represent the actions primarily in terms of their spatiomotor aspects, such as trajectory and hand posture information (e.g., Hamilton and Grafton 2006; Lestou et al. 2008), but do not appear to take the identity of the objects used into account (Nelissen et al. 2005; Shmuelof and Zohary 2006). They are involved even when tool actions are pantomimed without objects (Buxbaum et al. 2005; Villarreal et al. 2008) or when skilled dance movements are observed (Calvo-Merino et al. 2005; Cross et al. 2006), consistent with a mapping based on the actions’ spatiomotor properties.

It therefore remains unresolved which regions mediate the influence of object identity on action observation. If human action representations link action goals not only to the required motor behaviors but also to the objects that have to be used, then object identity might be a second source of information about the action’s goal. Indeed, both behavioral and electrophysiological studies confirm that humans naturally infer the meaning and goals of an action from the objects used (e.g., Sitnikova et al. 2003; Bach et al. 2005, 2008; Boria et al. 2009). Moreover, because objects (and the goals that can be achieved with them) are linked to specific motor behaviors that have to be performed (e.g., Goodale and Humphrey 1998; Creem and Proffitt 2001), object information may also bias the spatiomotor action observation processes toward the particular motor acts that are required in a given situation. Indeed, in a recent behavioral study, we found that the identities of the objects involved in a seen action both determined whether a goal-based representation of the action could be established and facilitated judgments of whether the tool was applied correctly to the goal object for the action to succeed (Bach et al. 2005; see also Riddoch et al. 2003; Green and Hummel 2006; van Elk et al. 2008).

Here, we investigate the pathways via which object information determines both goal and spatiomotor representations of observed tool actions. We hypothesize that these processes draw upon the same representations that also supply knowledge about proper tool selection and use during action production. Knowledge about how to act with objects appears to rely on a left-hemispheric network comprising parietal, temporal, and prefrontal regions (for a review, see Johnson-Frey 2004). Anterior and posterior regions in the temporal lobe act as general repositories for semantic/functional knowledge and visual information about objects (e.g., Hodges et al. 2000; Kellenbach et al. 2003; Ebisch et al. 2007; Canessa et al. 2008). In contrast, lesion and imaging studies have indicated that 2 regions are central for applying this knowledge “in action”: the left middle frontal gyrus (mFG) and regions along the IPS (e.g., Rumiati et al. 2004; Johnson-Frey et al. 2005; for reviews, see Koski et al. 2002; Frey 2007). Lesions in both areas produce ideomotor apraxia and deficits in accessing skilled tool knowledge (Haaland et al. 2000; Goldenberg and Spatt 2009) but often leave grasping unaffected. These regions have therefore been identified with the human “acting with” system that guides learned actions with tools, in contrast to the more basic “acting on” system that guides the spatiomotor alignments required for grasping (Johnson-Frey and Grafton 2003; see also Buxbaum 2001).

Of these areas, the regions along the IPS are strongly associated with storing specific motor patterns relevant for using a tool, such as the hand postures required for its use or which of its parts are relevant for action (e.g., Buxbaum et al. 2006, 2007). In contrast, the mFG appears to represent action knowledge associated with a tool on a relatively high and abstract level. It supports the planning but not the actual performance of tool actions (Johnson-Frey et al. 2005) and mediates all aspects of the action knowledge associated with a tool: the actions that can be performed with it, their functional and spatiomotor properties (Grabowski et al. 1998; Ebisch et al. 2007; Weisberg et al. 2007), and the typical goal objects to which they are applied (Goldenberg and Spatt 2009). The mFG may therefore hold relatively high-level representations of tool actions, linking the use of tools both to potential action goals and to the proper ways to perform the associated motor behaviors.

The Present Study

This study uses functional magnetic resonance imaging (fMRI) to identify the pathways via which the objects used determine both goal and spatiomotor representations of observed tool actions. Participants were presented with 2-frame sequences of tool actions (see Fig. 1 for examples), consisting of a hand holding a tool (e.g., a key) and a goal object (e.g., a keyhole). The task was varied between blocks of trials to engage regions establishing either a spatiomotor or a goal-related representation of the observed action. First, in the “function task,” participants judged whether the tool and goal object could be used together to achieve an action goal. This task should engage regions that establish a representation of the action’s goal based on the identity of the objects involved. Second, the “space task” was designed to engage regions establishing a spatiomotor representation of how the tool is applied to the goal object. Here, participants judged whether the tool and goal object were correctly oriented relative to one another. This task should engage regions that derive a spatiomotor description of how the objects are applied to one another based on knowledge of how the objects should be used. Importantly, both tasks were performed on exactly the same stimulus sequences. Any differences observed therefore reflect not stimulus differences but the participants’ attentional focus on either the functional or the spatiomotor aspects of the observed actions.

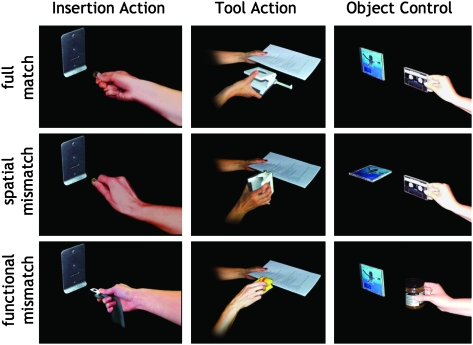

Figure 1.

Example stimuli for correct actions in the 3 stimulation conditions and the respective mismatches. For illustration purposes, instruments and goal objects are combined in one frame but were presented separately in the experiment (see Fig. 2). Left column (insertion action condition): coin inserted into vending machine with correct orientation, coin inserted into vending machine with incorrect orientation, and safety belt inserted into the vending machine. Middle column (tool action condition): hole puncher applied to paper in correct orientation, hole puncher applied to paper in incorrect orientation, and sponge being applied to paper. Right column (object control condition): compact disc and music cassette with same orientations, compact disc and music cassette with different orientations, and compact disc and jar of honey.

Participants performed these tasks in 2 action observation conditions and in 1 control condition (varied between runs). In the control condition, participants made similar judgments of object sequences that did not form prototypical actions but which were nevertheless semantically/functionally related (e.g., a soap bar and a shampoo bottle). The control condition was used to restrict our analysis to regions involved in action observation, as opposed to those more generally involved in processing spatial and semantic/functional object properties.

The 2 action observation conditions were designed to further subdivide the regions involved in the space task into regions that establish a spatiomotor description of how the objects are applied to one another based on their directly perceivable attributes and regions that derive how the tool should in fact be oriented for the action to succeed. In the first action observation condition, “insertion action,” only insertion actions were presented, such as the insertion of a key into a keyhole or of a coin into the slot of a vending machine. Because these actions were stereotypical, the space task in the insertion action condition could be performed on the available visual information alone, by checking whether the tool had the correct orientation for insertion into the goal object, without the need to access object-based action knowledge. This was not the case in the second action observation condition, “tool action.” Here, a more variable set of actions was presented, such as cutting a piece of paper with scissors or cleaning a plate with a sponge. Crucially, the tools were chosen so that each had to be applied to the goal object differently for the action to succeed. For instance, whereas a sponge should have the same orientation as the plate to be cleaned, scissors should be oriented orthogonally to the piece of paper one wants to cut. Thus, although the insertion action condition minimizes the need for knowledge about how to act with the given objects, this knowledge is critical for the space task in the tool action condition. Comparing these conditions therefore allows us to dissociate regions that establish how the seen objects are actually applied to one another (necessary in both conditions) from regions that derive how an action with the specific objects should be performed for the action’s goal to be achieved (necessary only in the tool action condition).

Note that the stronger reliance on object-based action knowledge in the tool action condition has been confirmed in behavioral studies using the same tasks and stimuli (Bach et al. 2005). There, we argued that knowledge about how to use an object can be derived in 2 ways. First, there is evidence that seeing objects automatically activates posterior parietal regions representing tool-specific motor knowledge (e.g., Buxbaum et al. 2007; Mahon et al. 2007). Such regions should be specifically involved in the space task of the tool action condition but not in that of the insertion action condition. Second, knowledge about how to align the objects may also be derived from higher level goal-related representations of the actions, such as those hypothesized for the mFG in the dorsolateral prefrontal cortex. There is evidence that such goal-related action representations are directly tied to the motor behaviors required for the actions to succeed (Bach et al. 2005; Ebisch et al. 2007; van Elk et al. 2008). If this is the case, then regions that establish such high-level action representations in the function task should also be activated in the space task of the tool action condition.

Materials and Methods

Participants

Fifteen participants, all students at Bangor University, United Kingdom, took part in the experiment and were paid for their participation (£20). They ranged in age from 18 to 34 years, were right-handed, and had normal or corrected-to-normal vision. They satisfied all requirements in volunteer screening and gave informed consent. The study was approved by the School of Psychology at Bangor University and the North West Wales Health Trust and was conducted in accordance with the Declaration of Helsinki.

Stimuli

For each of the 3 stimulation conditions (tool action, insertion action, and object control), a separate set of stimulus sequences was created (see Supplementary Table 1 for a complete list of sequences in each condition). In all conditions, these sequences consisted of 2 photographs that were presented briefly (300 ms each, ISI = 200 ms), with the first showing an object held in a hand and the second showing a potential goal object. In the insertion action condition, these sequences depicted insertion actions, such as the insertion of a key into a keyhole or of a ticket into a ticket canceler. Because all actions were insertion actions, proper motor performance in this condition required the inserted object and the slot of the goal object to have identical orientations. In the tool action condition, the sequences depicted more complex actions of tool use, such as hole punching a stack of papers or cleaning a plate with a sponge. To ensure that the proper spatial relationship was not predictable in these sequences, the stimuli were chosen so that one-half of the actions required identical orientations of tool and goal object (e.g., sponge and plate), while the other half required orthogonal orientations (e.g., scissors and piece of paper). Finally, the object control condition consisted of 2 objects that did not imply an action but that were nevertheless functionally related, such as a compact disc and an audiocassette or a bottle of milk and a bottle of orange juice (see Fig. 1 for examples).

The sequences in each condition were assembled from 32 different photographs. Of these 32 pictures, 16 showed a hand holding 1 of 8 objects, either vertically or horizontally. The other 16 pictures showed 8 corresponding goal objects, again either vertically or horizontally. Fully matching actions were created by combining a functionally appropriate tool and goal object in the correct orientations for action. Spatially mismatching actions were created by combining this tool with the appropriate goal object but in an inappropriate orientation. Functionally mismatching actions were created by combining the tool with an inappropriate goal object in an appropriate orientation (see Fig. 1 for examples). Thus, there were 64 possible action sequences in each stimulation condition.

The stimuli used in the action observation conditions have been extensively tested in prior studies. A rating task confirmed that the stimuli strongly evoke the actions’ goals (Bach et al. 2005). Moreover, these studies showed that response times (RTs) are comparable across the space and function tasks in the insertion action condition, suggesting similar perceptual processing demands. In the tool action condition, RTs are longer in the space task, consistent with the prediction that the space task here is more complex because it requires prior processing of goal/object information (Bach et al. 2005).

Static images were used because they allow for efficient experimental manipulation of objects and orientations. Furthermore, there is considerable evidence that the processing of static images of actions in many respects resembles that of full-action displays. For example, the motion implied by actions presented in static images is extracted automatically (Proverbio et al. 2009) and activates motion-selective brain areas (e.g., Kourtzi and Kanwisher 2000). Moreover, evidence from behavioral (e.g., Stürmer et al. 2000), imaging (e.g., Johnson-Frey et al. 2003), and transcranial magnetic stimulation studies (Urgesi et al. 2006) indicates that actions in static displays are mapped onto the observers’ motor system in a similar manner to those in full-motion displays.

Task

In the space task of the action conditions, participants judged whether tool and goal object were applied to one another correctly. They had to report mismatches in which the orientations of instrument and goal object were not appropriate (e.g., a vertically oriented key inserted into a keyhole with a horizontal slot). In the function task of the action conditions, participants judged whether the tool and goal object could be used together to achieve an action goal. Participants had to report mismatches in which no goal could be derived because the tool was applied to an inappropriate object (e.g., a screwdriver rather than a key is inserted into the keyhole).

In the space task of the control condition, the participants had to detect mismatches in which the physical orientation of the 2 objects was different (e.g., vertical CD and horizontal music cassette). In the function task of the control condition, they had to detect mismatches in which the 2 objects were not used in the same contexts (e.g., a milk bottle and a piece of soap). As such, the tasks in the control condition were based on the same component processes as those in the action observation conditions (i.e., deriving object orientation and semantic object knowledge). However, since the stimuli neither depicted typical actions nor required action judgments of the participants, they did not require processing the stimuli as actions. The control condition should therefore capture particularly the basic spatial/semantic object analysis processes common to both the action observation and the control condition but not those processes that are specific to the action understanding tasks.

Procedure

Before the experiment, all participants were informed about their task and were familiarized with the stimuli so that all objects were recognized before the scanning session. We also ensured that the participants understood the difference between the tool action and the insertion action conditions: all actions in the insertion action condition would be insertion actions and could therefore be judged in the same manner, whereas in the tool action condition the proper way to apply instrument and goal object had to be derived from the objects used. This explicit instruction was given to prevent entrainment effects, so that participants would neither be surprised by the more complex tool action task after completing the insertion action condition nor, conversely, continue to use the more complex, unnecessary strategy of the tool action condition in the insertion action condition.

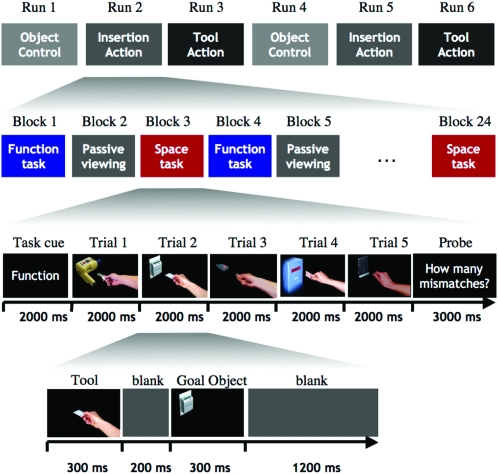

Subjects then performed 6 runs of the experiment (see Fig. 2 for the complete structure of the experiment). In each run, they saw sequences from only one stimulation condition (insertion action, tool action, or object control). The order of stimulation conditions followed an ABCABC order and was counterbalanced across subjects. At the start of each run, participants were informed via an on-screen instruction about the stimuli they would see. They then saw 24 short (15 s) blocks of 5 actions each (120 actions per run). Each block started with a 2-s cue that notified the participants about their task in this block (indicated by the words “space,” “function,” and “passive viewing”). Data from the passive viewing blocks were not analyzed further because subjects reported that they failed to consistently pay attention to the stimuli in this condition. Task order was counterbalanced across runs. In each of the 5 trials within a block, one of the 2-frame stimulus sequences was shown for 800 ms (300 ms for each photograph, 200-ms ISI). At the end of each block (i.e., after 5 trials), subjects reported how many relevant mismatches they had observed by pressing a button once for each mismatch they had identified. Compared with go–no go paradigms, this task temporally separates action observation and overt motor responses and ensures that any observed responses in sensorimotor areas do not simply reflect the participants’ requirement to process the action with regard to an immediate motor goal (e.g., making or withholding a response). Mismatches were rare: of the 480 actions the participants judged in the experiment, on average every eighth action contained either a spatial or a functional mismatch (60 trials total). Mismatches were distributed over the 5-action blocks so that a mismatch had to be reported on average every 3 blocks. Spatial and functional mismatches occurred equally often in both tasks.

Figure 2.

Schematic illustration of the design of the experiment, showing from top to bottom: runs within the experiment, blocks within runs, trials within the blocks, and the course of each trial.
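As a consistency check on the counts given in the Procedure, the following Python sketch derives them from the stated design. It assumes that the 24 blocks per run were split equally among the 3 block types (8 space, 8 function, and 8 passive viewing blocks); this split is not stated explicitly, but it is the one that reproduces the reported 480 judged actions. Under that assumption, the 30 task-relevant mismatches spread over 96 judged blocks give one relevant mismatch every 3.2 blocks, in line with the reported "every 3 blocks."

```python
# Sketch: reconstructing the trial and mismatch counts described in the Procedure.
# ASSUMPTION: the 24 blocks per run are split equally among the 3 block types
# (space, function, passive viewing), i.e., 8 blocks of each type per run.

N_RUNS = 6
BLOCKS_PER_RUN = 24
TRIALS_PER_BLOCK = 5
BLOCK_TYPES = ("space", "function", "passive viewing")
JUDGED_TYPES = ("space", "function")  # passive viewing blocks were not analyzed
MISMATCH_RATE = 1 / 8  # "on average, every eighth action"


def design_counts():
    """Return the trial and mismatch counts implied by the design."""
    total_blocks = N_RUNS * BLOCKS_PER_RUN
    total_trials = total_blocks * TRIALS_PER_BLOCK
    # Equal split across block types (assumption stated above).
    judged_blocks = total_blocks * len(JUDGED_TYPES) // len(BLOCK_TYPES)
    judged_trials = judged_blocks * TRIALS_PER_BLOCK
    mismatches = round(judged_trials * MISMATCH_RATE)
    # Spatial and functional mismatches occur equally often in both tasks,
    # so only half of all mismatches are relevant to the current task.
    relevant = mismatches // 2
    return {
        "total_trials": total_trials,
        "judged_blocks": judged_blocks,
        "judged_trials": judged_trials,
        "mismatches": mismatches,
        "relevant_mismatches": relevant,
        "blocks_per_relevant_mismatch": judged_blocks / relevant,
    }


if __name__ == "__main__":
    for name, value in design_counts().items():
        print(f"{name}: {value}")
```

Running the script prints 720 presented trials, 96 judged blocks, 480 judged trials, 60 mismatches, 30 relevant mismatches, and 3.2 judged blocks per relevant mismatch.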

After the experiment, all participants filled out a questionnaire (described in detail in Bach et al. 2005) and rated each action with respect to 1) how apparent the action’s goal was, 2) how much visuomotor experience they had had with the action, and 3) how strongly the instruments and goal objects were associated.

Data Acquisition and Analysis

All data were acquired on a 1.5T Philips MRI scanner equipped with a parallel head coil. For functional imaging, an echo-planar imaging sequence was used (repetition time [TR] = 3000 ms, echo time [TE] = 50 ms, flip angle = 90°, field of view = 192 mm, 30 axial slices, 64 × 64 in-plane matrix, 4-mm slice thickness). The scanned area covered the whole cortex and most of the cerebellum. Preprocessing and statistical analysis of the magnetic resonance imaging data were performed using BrainVoyager 4.9 and QX (Brain Innovation). Functional data were motion corrected and spatially smoothed with a Gaussian kernel (6-mm full-width at half-maximum), and low-frequency drifts were removed with a temporal high-pass filter (0.006 Hz). Functional data were manually coregistered with 3D anatomical T1 scans (1 × 1 × 1.3 mm resolution) and then resampled to isotropic 3 × 3 × 3 mm voxels with trilinear interpolation. The 3D scans were transformed into Talairach space (Talairach and Tournoux 1988), and the parameters for this transformation were subsequently applied to the coregistered functional data.

To generate predictors for the multiple regression analyses, the event time series for each condition were convolved with a delayed gamma function (δ = 2.5 s; τ = 1.25 s). Six predictors of interest were used to model the 2 tasks in the 3 stimulation conditions (space-tools, space-inserts, space-objects, function-tools, function-inserts, and function-objects). Seven additional predictors of no interest were included to model the effects of mismatches. The first 6 of these additional predictors modeled the mismatches in each task and condition separately (space-tools, space-inserts, space-objects, function-tools, function-inserts, and function-objects), and the seventh modeled the effects of the relevant mismatches (i.e., spatial mismatches in the space task and functional mismatches in the function task). Voxel time series were z-normalized for each run, and additional predictors accounting for baseline differences between runs were included in the design matrix. The regressors were fitted to the MR time series in each voxel. Whole-brain random-effects contrasts were corrected for multiple comparisons using the false discovery rate (FDR) approach (q < 0.05) implemented in BrainVoyager (Genovese et al. 2002).
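The predictor construction described above can be illustrated with a minimal Python/NumPy sketch. It uses a delayed gamma function of the form h(t) = ((t − δ)/τ)^(n−1) e^(−(t − δ)/τ) / (τ (n − 1)!) with the stated δ = 2.5 s and τ = 1.25 s; the shape parameter n = 3, the 0.1-s time grid, and the 30-s kernel length are assumptions. The helper names (`boynton_hrf`, `block_predictor`, `zscore`, `fit_glm`) are hypothetical, and the sketch fits ordinary least squares to a single synthetic voxel rather than reproducing BrainVoyager's implementation (run-baseline and mismatch predictors are omitted).

```python
import math

import numpy as np


def boynton_hrf(t, delta=2.5, tau=1.25, n=3):
    """Delayed gamma HRF: ((t - delta)/tau)**(n-1) * exp(-(t - delta)/tau) / (tau * (n-1)!).

    delta and tau follow the Methods; the shape parameter n = 3 is an ASSUMPTION.
    The response is zero before the delay t = delta.
    """
    s = np.clip((np.asarray(t, dtype=float) - delta) / tau, 0.0, None)
    return s ** (n - 1) * np.exp(-s) / (tau * math.factorial(n - 1))


def block_predictor(onsets, durations, n_scans, tr=3.0, dt=0.1):
    """Boxcar (1 during a task block, 0 elsewhere) convolved with the HRF, sampled once per TR.

    Onsets and durations are in seconds; the 0.1-s grid and 30-s kernel are ASSUMPTIONS.
    """
    n_fine = int(round(n_scans * tr / dt))
    t_fine = np.arange(n_fine) * dt
    boxcar = np.zeros(n_fine)
    for onset, duration in zip(onsets, durations):
        boxcar[(t_fine >= onset) & (t_fine < onset + duration)] = 1.0
    kernel = boynton_hrf(np.arange(0.0, 30.0, dt))
    predicted = np.convolve(boxcar, kernel)[:n_fine] * dt  # discrete convolution integral
    return predicted[:: int(round(tr / dt))]


def zscore(y):
    """Normalize a run's time series to zero mean and unit variance."""
    y = np.asarray(y, dtype=float)
    return (y - y.mean()) / y.std()


def fit_glm(y, predictors):
    """Ordinary least squares fit of the predictors plus a constant; the constant's beta is last."""
    X = np.column_stack([*predictors, np.ones(len(y))])
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas


if __name__ == "__main__":
    # Hypothetical run: three 15-s blocks, 60 scans at TR = 3 s.
    x = block_predictor(onsets=[10, 60, 110], durations=[15, 15, 15], n_scans=60)
    y = 2.0 * x + 5.0  # noise-free synthetic voxel
    print(fit_glm(y, [x]))  # approximately [2. 5.]
```

With n = 3, the modeled response peaks (n − 1)τ = 2.5 s after the delay, that is, 5 s after stimulus onset, and the kernel integrates to 1, so a sustained 15-s block drives the predictor to a plateau near 1.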

Regions of interest (ROIs) derived from both the main analysis and the post hoc effective connectivity analyses were defined by their “peak” voxel (for coordinates, see Table 1) and all contiguous voxels that met the statistical threshold of P < 0.001 and fell within a cube with 15 mm length, width, and depth centered on the peak voxel. The average signal across voxels in the ROI was then submitted to a further ROI general linear model (GLM) analysis. The betas from these regression analyses provided estimates of the response to the experimental conditions, which were subsequently analyzed with analyses of variance (ANOVAs).
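The ROI definition above can be sketched as a flood fill from the peak voxel. At the 3-mm resampled resolution, a 15-mm cube spans 5 voxels per side (the peak ±2 voxels). The sketch assumes that "contiguous" means face-sharing (6-connected) neighbors and takes a precomputed map of voxelwise P values as input; the connectivity rule and the function names are assumptions rather than details given in the Methods.

```python
from collections import deque

import numpy as np

# ASSUMPTION: "contiguous" is taken to mean face-sharing (6-connected) voxels.
FACE_NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def define_roi(pmap, peak, p_thresh=0.001, cube_mm=15.0, voxel_mm=3.0):
    """Voxels with p < p_thresh that connect to the peak inside a cube centered on it."""
    supra = np.asarray(pmap) < p_thresh
    roi = np.zeros(supra.shape, dtype=bool)
    peak = tuple(peak)
    if not supra[peak]:
        return roi
    half = int(cube_mm / voxel_mm) // 2  # 15 mm / 3 mm = 5 voxels -> peak +/- 2 voxels
    lo = [max(c - half, 0) for c in peak]
    hi = [min(c + half, size - 1) for c, size in zip(peak, supra.shape)]
    roi[peak] = True
    queue = deque([peak])
    while queue:  # breadth-first flood fill restricted to the cube
        voxel = queue.popleft()
        for step in FACE_NEIGHBORS:
            nb = tuple(v + s for v, s in zip(voxel, step))
            inside = all(l <= c <= h for c, l, h in zip(nb, lo, hi))
            if inside and supra[nb] and not roi[nb]:
                roi[nb] = True
                queue.append(nb)
    return roi


def roi_mean_timecourse(data, roi):
    """Average an (n_scans, x, y, z) array over the ROI voxels, giving shape (n_scans,)."""
    return data[:, roi].mean(axis=1)
```

The averaged time course returned by `roi_mean_timecourse` is what would then enter the ROI-level GLM; suprathreshold voxels inside the cube that are not connected to the peak are excluded.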

Table 1.

Brain regions/Brodmann areas (BAs) more activated in the 2 action observation conditions (insertion action, tool action) than in the object control condition, and the P values of the effects obtained in the subsequent ANOVAs in these regions

| Region (BA) | x, y, z (Talairach) | Peak t / cluster size (mm³) | Condition (P) | Task (P) | Task × condition (P) |
|---|---|---|---|---|---|
| mFG (9) | −46, 22, 35 | 6.69ᵃ / 685 | 0.99 | 0.17 | **<0.05** |
| PMC (6) | −51, 4, 37 | 8.77ᵃ / 1213 | 0.50 | **<0.01** | 0.35 |
| SMG (40) | −55, −28, 38 | 7.05ᵃ / 1049 | **<0.01** | **<0.001** | 0.91 |
| aIPS (40) | −34, −41, 38 | 8.89ᵃ / 1387 | 0.27 | **<0.001** | 0.08 |
| cIPS (7) | −19, −70, 49 | 4.51 / 131 | 0.76 | **<0.004** | **<0.05** |

Bold values indicate significant effects.

Notes: ᵃPeak responses that pass an FDR threshold of q < 0.05. Cluster extents are based on thresholding at P < 0.001, as used for the region of interest analysis.
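For readers unfamiliar with the FDR correction used for the whole-brain maps (Genovese et al. 2002), the following sketch implements the Benjamini–Hochberg step-up rule in the variant that assumes independent or positively dependent tests. Whether BrainVoyager applied this variant or the more conservative one for arbitrary dependence is not stated here, so treat that choice as an assumption; the function name is hypothetical. It returns the largest P value that survives at a given q, and all voxels at or below it are declared significant.

```python
import numpy as np


def bh_fdr_threshold(pvals, q=0.05):
    """Benjamini-Hochberg step-up threshold (independence/positive-dependence variant).

    Returns the largest sorted p(i) with p(i) <= (i / m) * q, or None if no test survives.
    """
    p = np.sort(np.asarray(pvals, dtype=float).ravel())
    m = p.size
    passes = p <= q * np.arange(1, m + 1) / m  # compare each p(i) with its rank-scaled bound
    if not passes.any():
        return None
    return float(p[np.nonzero(passes)[0][-1]])  # largest rank that passes


if __name__ == "__main__":
    print(bh_fdr_threshold([0.001, 0.012, 0.025, 0.035, 0.3]))  # 0.035
```

Because the rule is step-up, 2 tests with P = 0.03 both survive at q = 0.05, even though the smaller one on its own would have to satisfy P ≤ 0.025.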

Results

Behavioral Data

For the analysis of the behavioral data, the detection rates in the 3 conditions (insertion action, tool action, and object control) and the 2 tasks (space and function) were entered into a repeated measures ANOVA with the factors Condition and Task. Due to a computer error, the button press data from 2 subjects were not available, leaving 13 subjects for the behavioral analysis. The analysis did not reveal a main effect of Task (F(1,12) = 1.0), showing that overall the space and function tasks were of similar difficulty. There was a marginally significant main effect of Condition (F(2,24) = 3.3, P < 0.1, ε = 0.79) that was qualified by an interaction of Condition and Task (F(2,24) = 4.1, P < 0.05, ε = 0.81). Post hoc t-tests showed that the overall detection probabilities did not differ between the insertion action condition and tool action condition (P = 0.13). However, whereas for insertion actions the participants detected marginally more mismatches in the space task (M = 84%, s = 22%) than in the function task (M = 71%, s = 22%, P < 0.1), the opposite was the case for tool actions. Here, the participants detected more mismatching actions in the function task (M = 91%, s = 13%) than in the space task (M = 79%, s = 21%, P < 0.05). This difference was predicted from prior behavioral work showing that the space task for tools is more complex because it involves the additional step of deriving what the appropriate motor act for the given action would be (Bach et al. 2005). For the object control condition, the detection rates in both tasks did not differ (space, M = 93%, s = 11%; function, M = 82%, s = 28%, n.s.).

fMRI Data

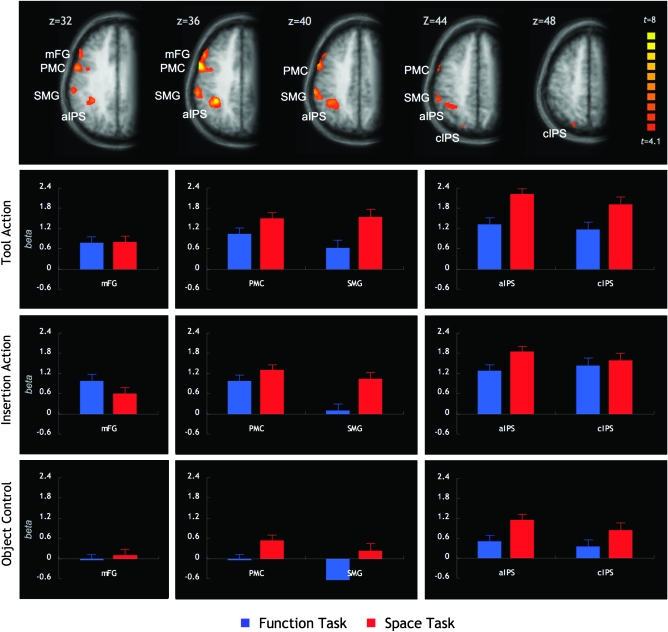

The analysis of the fMRI data followed a 2-step procedure. First, to identify brain areas involved in action comprehension as opposed to areas more generally involved in representing spatial and functional object features, we contrasted activation for trials in the 2 action observation conditions (tool action and insertion action) with that for trials in the object control condition. This comparison (FDR corrected at q < 0.05) revealed an exclusively left-hemispheric network (Table 1 and Fig. 3; see Supplementary Figs 2 and 3 for the activation in the 3 conditions against baseline). It comprised brain structures typically found in action observation tasks, such as the PMC and the supramarginal gyrus (SMG) of the inferior parietal lobe (IPL). In addition, regions along the anterior sections of the intraparietal sulcus (aIPS) and a more rostral region in the mFG were strongly activated. At a relaxed threshold (P < 0.001, uncorrected), activation was also observed in the caudal sections of the intraparietal sulcus (cIPS). The cIPS is also discussed in the following, as responses in this region are expected from prior work on action and tool representation (Shikata et al. 2001, 2003; Culham et al. 2003; Mahon et al. 2007). This left-hemisphere action observation network was also robustly activated when both tasks were compared separately against the object control condition (Supplementary Fig. 1). Areas more strongly activated for the object control condition than for the action observation conditions included areas in the anterior and middle cingulate cortices and in visual cortex (Supplementary Table 2).

Figure 3.

The upper panel shows areas activated more strongly in the 2 action observation conditions (tool action and insertion action) than in the object control condition (thresholded at P < 0.001, as used for the ROI analysis). The lower 3 panels show the beta estimates in the 3 experimental conditions (tool action, insertion action, object control) and the two tasks (space, function). Left panel: region in the mFG with a selective engagement in spatial and functional tasks requiring object-based action knowledge. Middle panel: premotor and supramarginal regions showing a stronger response for spatiomotor judgments in both action observation conditions. Right panel: intraparietal regions showing stronger responses for spatiomotor judgments in the tool action condition, in particular.

To ensure that we did not miss any regions that play a role in action as opposed to object judgments, we also computed the whole-brain interaction contrast ((tools_space + inserts_space) − (tools_function + inserts_function)) − ((2 × objects_space) − (2 × objects_function)), which identifies regions whose involvement in the space and function tasks differs between the 2 action observation conditions and the object control condition. In addition, we computed the interaction contrast ((tools_space + inserts_function) − (inserts_space + tools_function)) to identify regions whose involvement in the 2 tasks differs between the 2 action observation conditions. However, neither analysis yielded activation that survived correction for multiple comparisons (FDR, q < 0.05).
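The weights of the 2 interaction contrasts can be written out explicitly. In the minimal sketch below, the condition labels follow the contrast notation above, while the ordering, the helper `apply_contrast`, and the beta values are hypothetical illustrations rather than the study's analysis code. Both weight vectors sum to zero, so an effect that is constant across all 6 cells does not contribute.

```python
# Condition order used for the weight vectors (hypothetical labeling for illustration).
CONDITIONS = ["tools_space", "tools_function", "inserts_space",
              "inserts_function", "objects_space", "objects_function"]

# ((tools_space + inserts_space) - (tools_function + inserts_function))
#   - ((2 x objects_space) - (2 x objects_function))
TASK_BY_ACTION_VS_OBJECT = [1, -1, 1, -1, -2, 2]

# (tools_space + inserts_function) - (inserts_space + tools_function)
TASK_BY_ACTION_CONDITION = [1, -1, -1, 1, 0, 0]


def apply_contrast(weights, betas):
    """Weighted sum of condition betas, i.e., a GLM contrast estimate."""
    return sum(w * betas[name] for w, name in zip(weights, CONDITIONS))


# Interaction contrasts must sum to zero.
for w in (TASK_BY_ACTION_VS_OBJECT, TASK_BY_ACTION_CONDITION):
    assert sum(w) == 0

# The two contrasts are orthogonal (zero dot product).
assert sum(a * b for a, b in zip(TASK_BY_ACTION_VS_OBJECT, TASK_BY_ACTION_CONDITION)) == 0

# Hypothetical betas: space > function by 1.0 in both action conditions, no task effect for objects.
betas = {"tools_space": 3.0, "tools_function": 2.0, "inserts_space": 2.5,
         "inserts_function": 1.5, "objects_space": 0.5, "objects_function": 0.5}
print(apply_contrast(TASK_BY_ACTION_VS_OBJECT, betas))  # 2.0
print(apply_contrast(TASK_BY_ACTION_CONDITION, betas))  # 0.0
```

A space > function difference of equal size in both action conditions yields 0 on the second contrast but a positive value on the first, because the task difference is absent for objects.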

The second step investigated in more detail the role of the action-related areas in the 2 tasks and the 2 action observation conditions. We extracted GLM parameter estimates for each ROI, subject, and condition and entered them into a 3-way ANOVA with the factors Task (space and function), Condition (insertion action and tool action), and ROI (see Table 1). This ANOVA revealed a 3-way interaction of Task, Condition, and ROI (F(5,70) = 2.6, P < 0.05, Huynh–Feldt ε = 1), indicating that the regions were differentially involved in the 2 tasks and the 2 action observation conditions.

To further characterize these ROIs, the parameter estimates of each ROI were entered into separate ANOVAs with the factors Task (space and function) and Condition (insertion action and tool action). Table 1 shows the resulting main effects and interaction for each ROI; Figure 3 shows the beta estimates in each ROI for the 2 tasks and the 3 conditions (tool action, insertion action, object control). All ROIs responded strongly during the observation of implied actions but not (or only weakly) during the observation of objects.
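Because each factor in these per-ROI ANOVAs has only 2 levels, every effect can be computed as a one-sample t-test on a per-subject contrast score, with F(1, n − 1) = t²; with 2 levels, no sphericity correction is needed. The sketch below illustrates this equivalence under the assumption of one beta estimate per subject and cell; the function names and data are hypothetical, not the authors' analysis code.

```python
import math
from statistics import mean, stdev


def one_sample_t(scores):
    """t statistic for the null hypothesis that the mean of `scores` is zero."""
    return mean(scores) / (stdev(scores) / math.sqrt(len(scores)))


def anova_2x2_within(data):
    """F(1, n - 1) for Task, Condition, and Task x Condition in a 2 x 2 within-subjects design.

    `data` holds one dict per subject with keys ("tool", "space"), ("tool", "function"),
    ("insert", "space"), and ("insert", "function"). Each F is the squared
    one-sample t of the corresponding per-subject contrast score.
    """
    scores = {"task": [], "condition": [], "task_x_condition": []}
    for d in data:
        ts, tf = d[("tool", "space")], d[("tool", "function")]
        is_, if_ = d[("insert", "space")], d[("insert", "function")]
        scores["task"].append((ts + is_) - (tf + if_))              # space vs. function
        scores["condition"].append((ts + tf) - (is_ + if_))         # tool vs. insertion
        scores["task_x_condition"].append((ts - tf) - (is_ - if_))  # difference of differences
    return {effect: one_sample_t(s) ** 2 for effect, s in scores.items()}


# Hypothetical betas for 4 subjects: (tool/space, tool/function, insert/space, insert/function).
keys = [("tool", "space"), ("tool", "function"), ("insert", "space"), ("insert", "function")]
rows = [(4, 2, 3, 2), (5, 2, 3, 3), (3, 1, 2, 1), (4, 3, 3, 2)]
data = [dict(zip(keys, r)) for r in rows]
res = anova_2x2_within(data)
print(res["task"], res["condition"])  # 121.0 25.0
```

If the follow-up comparisons reported below (e.g., space vs. function within the tool action condition) are paired t-tests, they correspond to `one_sample_t` applied to the per-subject differences between the 2 cells.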

The SMG was the only area that showed a main effect of Condition, with overall stronger responses in the tool action condition than in the insertion action condition. A main effect of Task (space > function) was found in the PMC and all parietal regions (SMG, aIPS, and cIPS), reflecting a stronger overall involvement in the space task than in the function task. In the PMC and SMG, this stronger involvement was independent of the action observation condition and present both in the tool action condition (P < 0.0005 for both) and in the insertion action condition (SMG, P < 0.0005; PMC, P < 0.05).

In the cIPS, the main effect of Task was qualified by a significant interaction of Task and Condition. The cIPS responded more strongly in the space task than in the function task in the tool action condition (cIPS, t = 5.1, P < 0.0005), where spatiomotor judgments required knowledge of object identity. In the insertion action condition, however, where the space task could be performed on the basis of immediate perceptual information alone, this effect was not present (t < 1). A similar (but marginally significant) interaction was found in the aIPS region. Since for the aIPS the stronger responses in the space task over the function task were significant for both the insertion action condition and the tool action condition (P < 0.0005 for both), the interaction indicates a more pronounced difference in the tool action condition relative to the insertion action condition.

The mFG was the only area in the action observation network that did not show overall stronger responses in the space task. Interestingly, the ANOVA revealed an interaction of Condition and Task (Table 1), reflecting significantly stronger responses in the function task than in the space task in the insertion action condition (t = 2.8, P < 0.02) but equal activation across both tasks in the tool action condition (t < 1), where both functional and spatiomotor judgments required knowledge of object identity.

We were also interested in whether the areas of the action observation network play a role in judgments made outside an action context. We therefore used simple t-tests to compare activation in the space and function tasks in the object control condition, where no actions were involved. We found stronger responses in the space task than in the function task in all premotor and parietal regions (P < 0.005 for PMC, SMG, and aIPS; P < 0.05 for cIPS), suggesting that these regions are also involved in deriving spatial relations between objects that are not involved in an action. The mFG did not differentiate between the 2 tasks in the object control condition.

Analysis of Effective Connectivity

The ANOVAs on the parameter estimates for the mFG, aIPS, and cIPS (see above) revealed interactions of Task and Condition (marginal for the aIPS), suggesting a role in deriving knowledge about how an action with the objects should be performed in the space task of the tool action condition (see Discussion). To further investigate these interactions, we performed a functional connectivity analysis to identify regions whose effective connectivity with these regions increases in the space task of the tool action condition compared with the insertion action condition. We employed the BrainVoyager Granger Causality Mapping Plug-In (Roebroeck et al. 2005), which uses an autoregressive model to obtain Granger causality maps (GCMs) between a reference ROI and all other recorded voxels. Our TR of 3 s does not allow us to investigate directional “causal” influences (from the reference region to target voxels or vice versa), but it does allow us to identify voxels whose time courses are correlated with that of the reference region within the experimental conditions of interest. Such “instantaneous” influence exists when the time course of the reference ROI improves prediction of the values of the target voxels (or vice versa) beyond what is predicted from the past of both the reference region and the target voxels. Enhanced synchrony with target voxels in one experimental condition compared with another can be taken as an indicator of enhanced functional connectivity that is not due to changes or synchronies in the hemodynamic response (cf. Roebroeck et al. 2005).

To test for such differences in functional connectivity between the space task of the tool action condition and that of the insertion action condition, 2 GCMs were generated for each subject and each reference region (mFG, aIPS, and cIPS): 1 for the space task of the tool action condition and 1 for the space task of the insertion action condition, using each subject’s average time course in the relevant ROI as the reference. To ensure a consistent time course across the voxels of each reference region, we used smaller ROIs than in the main analysis (cubes with 10-mm side length, centered on the peak voxel; R Goebel, personal communication). For each of the 3 reference regions, a single-factor within-subjects analysis of covariance with the levels tool action and insertion action was run on the data of all subjects to identify voxels that, across subjects, showed stronger functional connectivity with the reference region in the space task of the tool action condition than in that of the insertion action condition.
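Selecting a cubic reference ROI and averaging its time course can be sketched as follows. The 3-mm isotropic voxel grid, the peak at the origin, the function names, and the time courses are all hypothetical; only the 10-mm cube centered on the peak voxel comes from the text.

```python
from statistics import fmean


def cube_roi(voxel_coords, peak, side_mm=10.0):
    """Indices of voxels whose centers lie inside an axis-aligned cube centered on `peak` (mm)."""
    half = side_mm / 2.0
    return [i for i, c in enumerate(voxel_coords)
            if all(abs(ci - pi) <= half for ci, pi in zip(c, peak))]


def reference_time_course(time_courses, indices):
    """Mean time course across the selected voxels, one value per volume."""
    if not indices:
        raise ValueError("the ROI contains no voxels")
    n_volumes = len(time_courses[indices[0]])
    return [fmean(time_courses[i][t] for i in indices) for t in range(n_volumes)]


# Hypothetical 3-mm isotropic grid (mm coordinates) around a hypothetical peak at the origin.
grid = [(x, y, z) for x in range(-9, 10, 3) for y in range(-9, 10, 3) for z in range(-9, 10, 3)]
roi = cube_roi(grid, peak=(0, 0, 0), side_mm=10.0)
# Hypothetical time courses: 4 volumes per voxel.
time_courses = [[i + float(t) for t in range(4)] for i in range(len(grid))]
ref_tc = reference_time_course(time_courses, roi)
print(len(roi), ref_tc)  # 27 [171.0, 172.0, 173.0, 174.0]
```

On a 3-mm grid, a 10-mm cube centered on a voxel includes the voxels at offsets of −3, 0, and 3 mm along each axis, that is, 27 voxels; a different voxel size would change this count.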

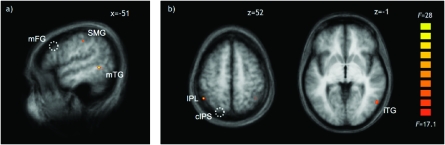

For the mFG, 2 regions showed changes in effective connectivity whose peak voxels survived correction for multiple comparisons (FDR, q < 0.05; Fig. 4a and Table 2). One region was located in the anterior part of the IPL, directly adjacent to or overlapping with the SMG ROI. Indeed, an ANOVA on the beta estimates of this region mirrored the results of the SMG (Table 2), revealing a stronger involvement in the space task than in the function task in both the tool action condition (t > 10, P < 0.001) and the insertion action condition (t = 4.0, P < 0.005). The other region was located in the left posterior middle temporal gyrus, where prior studies have demonstrated selective responses to visual representations of tools/artifacts over natural kinds (e.g., Chao et al. 1999, 2002; Malach et al. 2002). Consistent with the idea that this region provides knowledge about how the seen tools have to be used, a Task × Condition ANOVA on the parameter estimates of this region showed no main effects but a significant Task × Condition interaction (Table 2), with stronger responses in the space task than in the function task in the tool action condition (t = 2.9, P < 0.05) and marginally stronger responses in the function task in the insertion action condition (t = 2.0, P = 0.07). Thus, the effective connectivity analysis supports the idea that the mFG interacts with regions involved in the space task when knowledge about the objects used in each trial is required (i.e., in the tool action condition).

Figure 4.

Regions showing significant changes in effective connectivity with the mFG (a) and cIPS (b) between the space task in the tool action condition, where the space task depended on object use, and the space task in the insertion action condition, where it was independent of object use (thresholded at P < 0.001, as used for the ROI analysis). Note that (b) also shows an additional cluster in the right IPL with enhanced connectivity to the cIPS that did not, however, pass correction for multiple comparisons (FDR, q < 0.05). Dotted circles show the approximate positions of the reference regions (a, mFG; b, cIPS; see also Fig. 3). mTG, middle temporal gyrus.

Table 2.

Brain regions/Brodmann areas (BAs) identified by the functional connectivity analysis for the 2 reference regions (mFG, cIPS), and the P values of the effects obtained in the subsequent ANOVAs in these regions

| Region (BA) | x, y, z | F / cluster extent (mm³) | Condition | Task | Task × condition |
|---|---|---|---|---|---|
| Changes in functional connectivity to the mFG | | | | | |
| Left SMG (40) | −52, −24, 40 | 26.6ᵃ / 34 | **<0.05** | **<0.001** | 0.19 |
| Left mTG (21/37) | −51, −49, −2 | 28.3ᵃ / 40 | 0.07 | 0.59 | **<0.005** |
| Changes in functional connectivity to the cIPS | | | | | |
| Left IPL (40) | −45, −49, 52 | 33.6ᵃ / 25 | 0.79 | **<0.05** | 0.08 |
| Right iTG (37) | 54, −61, −1 | 27.4ᵃ / 104 | **<0.005** | **<0.005** | 0.09 |

Bold values indicate significant effects.

Notes: The F statistic reflects the difference in effective connectivity between the space task in the tool action condition and in the insertion action condition. mTG, middle temporal gyrus; iTG, inferior temporal gyrus.

ᵃPeak responses that pass an FDR threshold of q < 0.05. Cluster extents are based on thresholding at P < 0.001, as used for the region of interest analysis.

For the cIPS, 2 regions showed stronger effective connectivity in the space task of the tool action condition than in that of the insertion action condition (see Fig. 4b and Table 2). One region was located lateral to the aIPS ROI identified in the main analysis, in the inferior parietal lobule (see Table 2 for the results of the ANOVAs performed on these regions). It responded more strongly in the space task than in the function task in the tool action condition (left IPL, t = 4.1, P < 0.005), where the space task required object-based action knowledge, but not in the insertion action condition, where the space task could be performed on the basis of directly available perceptual features (t < 1.4). Increased effective connectivity was also found with a region within the right inferior temporal lobe. Its peak coordinates place it in close proximity to the body-selective extrastriate body area (EBA; Downing et al. 2001; Peelen and Downing 2005) and to motion-selective areas, including human homologues of MT (Greenlee 2000). As for the IPL, this region responded more strongly in the space task than in the function task, with the difference being larger in the tool action condition (t = 4.2, P < 0.001) than in the insertion action condition (t = 1.9, P = 0.08). For the aIPS and the other regions that did not show a significant Task × Condition interaction (i.e., the PMC and SMG), the functional connectivity analysis did not reveal any significant changes.

Correlations with Subjective Action Judgments

The participants filled out a questionnaire (described in detail in Bach et al. 2005) that assessed, for each action, 1) how apparent its goal was, 2) how much visuomotor experience the participants had with the action (how often they had seen and performed it), and 3) how strongly the instruments and goal objects were associated with one another. One participant did not fill out the questionnaire and could not be reached afterward. The questionnaire data were then correlated, separately for each participant, stimulus condition, and task, with the brain activation in the ROIs when viewing the corresponding actions. This analysis was based on 32 predictors, modeling the responses to each of the 8 different actions in each of the 2 stimulus conditions and each of the 2 action judgment tasks. Table 3 shows the Fisher-transformed correlations between the beta estimates in the 6 ROIs and the ratings of the corresponding actions, averaged across subjects. Each cell reflects the average correlation between activity in a region and one of the ratings, averaged across both tasks and both action observation conditions. One-sample t-tests against zero (df = 13) were performed to assess the significance of the averaged correlations for each ROI. Across participants, brain activity in all ROIs was significantly stronger for actions with which the participants had less visuomotor experience. In addition, brain activity in both the mFG and the PMC was stronger for actions whose goal was less apparent. None of the ROIs showed a relationship to the participants’ subjective judgments of how strongly the instruments and goal objects were associated.

Table 3.

Average across-person correlations of brain activity in the 6 ROIs with the participants’ ratings of the actions seen at the same time, with regard to how apparent the actions’ goal is (goals), how much sensorimotor experience the participants had with the actions (experience), and how much they perceived the instruments and goal objects to be associated (association)

| Region | Goals | Experience | Association |
|---|---|---|---|
| mFG | 0.13* | −0.16** | −0.07 |
| PMC | 0.11* | −0.17** | −0.03 |
| SMG | 0.11 | −0.29*** | 0.01 |
| aIPS | 0.07 | −0.22*** | −0.06 |
| cIPS | 0.08 | −0.17*** | −0.02 |

Note: Significance was assessed with simple t-tests against zero (*P < 0.05, **P < 0.01, ***P < 0.001).

Discussion

Previous research has focused on how others’ actions can be recognized from the motor behaviors performed. The present study investigated the complementary question of how the objects used can contribute to action understanding. Participants judged 2-frame sequences of tool actions with regard to either whether an action goal could, in principle, be achieved by applying the objects to one another (function task) or whether the objects were oriented correctly to each other for the action to succeed (space task). These tasks should engage regions that identify the actions (and their goals) on the basis of the objects involved (function task) and regions that establish a spatiomotor representation of how the objects are applied to one another (space task).

Compared with a tightly matched control condition, both tasks activated a common left-hemispheric network. It included regions in the dorsal PMC and the SMG that are also involved in the observation of simple object-directed actions (e.g., Buccino et al. 2001; Grèzes et al. 2003). In addition, regions in the mFG and along the IPS, specifically implicated in representing learned knowledge of how to “act with” tools (e.g., Buxbaum 2001; Johnson-Frey and Grafton 2003), were strongly activated. The finding that both types of action judgments activated the same regions more strongly than the control tasks reveals a specific tuning to action information and a tight coupling of spatiomotor and goal-related action knowledge (Hodges et al. 2000; Bach et al. 2005; Ebisch et al. 2007). Nevertheless, the extent to which these regions were activated differed between tasks and action observation conditions. The data revealed a distinction between regions involved in basic spatiomotor action representation and additional regions that link tools not only to the goals that can be achieved with them but also to how one has to act with them for these goals to be achieved.

Regions Deriving Spatiomotor Action Representations

The dorsal PMC and the SMG generally responded more strongly in the space task than in the function task, indicating a role in establishing a spatiomotor rather than goal-related representation of the seen actions. Importantly, this stronger involvement was found in both action observation conditions, irrespective of whether the space task could be based on directly available perceptual information alone (insertion action condition) or whether it required specific knowledge about how to use the tools (tool action condition). These regions are therefore unlikely to be involved in retrieving knowledge about the correct way to handle the objects but instead provide a more basic spatiomotor description of how the instrument is aligned to the goal object, which is required in both conditions.

Very similar networks have been described for the core “mirror circuits,” in which observed actions are matched to the observer’s own action representations (e.g., di Pellegrino et al. 1992; Gallese et al. 1996). Activation in these regions is often equated with action understanding in general and with the mapping of seen actions onto the observer’s motor system (e.g., Buccino et al. 2001; Grèzes et al. 2003; for reviews, see Rizzolatti and Craighero 2004; Wilson and Knoblich 2005; Binkofski and Buccino 2006). Our data suggest that in these regions this matching is based on the actions’ spatiomotor attributes rather than on object or high-level goal information (see also Brass et al. 2007; de Lange et al. 2008). Consistent with this, fMRI investigations have indicated an encoding of spatiomotor properties of seen actions in these regions, such as object affordances, hand postures, or kinematics (e.g., Shmuelof and Zohary 2006; Grafton and Hamilton 2007; Chong et al. 2008; Lestou et al. 2008). Moreover, the specific role in encoding spatial relationship information found here is consistent with the role of these regions during action execution, where they are involved in spatially matching hands to goal objects (Binkofski et al. 1998; Castiello 2005; Johnson-Frey et al. 2005; for reviews, see Rizzolatti and Luppino 2001; Glover 2004; for tool actions, see Goldenberg 2009) on the basis of the “difference vectors” between object features and actual hand postures (Shikata et al. 2003; Frey et al. 2005; Tunik et al. 2005, 2008; Begliomini et al. 2007). However, even though such coding of spatial relationship information is now well established for action execution, to our knowledge, this is the first study to suggest that these regions may support a similar type of processing during action observation.

Regions Deriving Tool-Specific Spatiomotor Knowledge

The areas along the aIPS and the caudal sections of the intraparietal sulcus (cIPS) also responded more strongly in the space task than in the function task. In these regions, however, this difference was more pronounced (aIPS) or only observed (cIPS) in the tool action condition, where the space task required knowledge about the proper way to act with the objects. Whereas the more anterior premotor–SMG circuits are involved in basic spatiomotor action representation, the more posterior IPS regions may therefore support these processes by providing information about how a tool has to be wielded to achieve the action’s goal, such as which of its parts are relevant for action (e.g., Goodale and Humphrey 1998; Creem and Proffitt 2001) or the hand postures required for its use (Buxbaum et al. 2003, 2006; Daprati and Sirigu 2006).

A role in providing such “acting with” information is consistent with lesion studies implicating these regions in deficits of skilled tool action knowledge during action execution (e.g., Liepmann 1920; Haaland et al. 2000; Buxbaum et al. 2007; Goldenberg and Spatt 2009). Moreover, fMRI studies have consistently demonstrated visual tool-specific responses in these areas (e.g., Chao and Martin 2000; Rumiati et al. 2004; Buxbaum et al. 2006) that suggest an automatic activation of tool-specific motor skills (Kellenbach et al. 2003; Creem-Regehr et al. 2007; Valyear et al. 2007). Similarly, the posterior intraparietal regions are implicated in extracting spatial object properties for action control (Shikata et al. 2001, 2003; Culham et al. 2003) and are sensitive to action information associated with objects (Mahon et al. 2007).

The effective connectivity analysis provided further insights into how tool-specific action knowledge is derived in the posterior intraparietal areas. Several regions showed increased effective connectivity with the cIPS during the space task of the tool action condition, when knowledge about how to use a tool was required. One region was located in the right inferior temporal gyrus, in close proximity to the body-selective EBA (Downing et al. 2001). Effective connectivity also increased to bilateral regions in the IPL, which are centrally implicated in representing learned knowledge about how to act with objects (Johnson-Frey and Grafton 2003; Boronat et al. 2005; Buxbaum et al. 2005). Indeed, the response patterns in both the temporal and the parietal regions reveal a specific involvement in the space task of the tool action condition, which required such tool-specific action knowledge. When the spatiomotor properties of the tool are not known in advance, the cIPS may therefore act as an interface between basic spatiomotor representations of the seen objects and stored knowledge about how one has to act with a given object to achieve the action’s goal.

An additional finding was that the parietal and premotor areas that responded more strongly in the space task of the action observation conditions showed similar differences in the object control condition, even though the objects were judged outside an action context. This suggests either that spatiomotor action judgments draw on more basic processes of visuospatial object analysis or, conversely, that object orientation judgments employ action resources. Recent research supports the latter view. Mental rotation of objects, particularly tools, has been shown behaviorally to rely on motor processes (e.g., Wohlschläger and Wohlschläger 1998) and involves the same brain networks that support actual rotation (e.g., Vingerhoets et al. 2002; for a review, see Zacks 2008). Here, our analysis relating brain activity to the participants’ ratings of the stimuli supported an action-related role. Particularly in the regions showing stronger responses in the space task, brain activity was stronger for actions with which the participants had less sensorimotor experience. Such a dependence on prior experience has been demonstrated before for mirror processing in behavioral and imaging studies (Knoblich and Flach 2001; Calvo-Merino et al. 2005; Cross et al. 2006) and also for motor knowledge associated with tools (Vingerhoets 2008). The correlational data therefore indicate that processing in these areas is not tied to abstract stimulus aspects but is “grounded” in the observer’s sensorimotor experience, with processing being more difficult the less familiar the actions were.

Regions Deriving Functional Action Knowledge

The final and most frontal region of the network was the left mFG, a region consistently implicated in the representation of skilled action and associated, when lesioned, with impairments in skilled tool knowledge and ideomotor apraxia (Haaland et al. 2000; Goldenberg and Spatt 2009). The mFG differed from all other regions in the action observation network in that it was not specifically associated with the space task. Rather, for insertion actions, it was more strongly activated in the function task, when participants had to decide whether an action goal could be achieved with the objects. This demonstrates a role in using object identity to derive what the action is “for.” Importantly, however, in the tool action condition, the mFG was not engaged only by these goal judgments: the difference disappeared, and the mFG was strongly and equally engaged by both tasks. This suggests that the mFG is involved not only in deriving which goals can be achieved with the objects but also in deriving how the objects have to be applied to one another when this knowledge is required, as in the space task of the tool action condition.

One way to interpret these findings is to assume that the mFG holds relatively high-level goal-related action representations, in which the use of objects is linked both to the associated action goals and to knowledge about how the objects have to be applied to one another for the actions to succeed. This interpretation is consistent with behavioral work using the same stimuli showing that deriving an action’s goals from object identity automatically activates information about the objects’ proper spatial alignment (Bach et al. 2005; see also van Elk et al. 2008). It is also consistent with imaging studies that implicate the left mFG in accessing skilled actions from memory, particularly on the basis of object information (i.e., “action semantics,” cf. Hauk et al. 2004; Johnson-Frey 2004). The left mFG is activated when participants derive action knowledge associated with a tool (Grabowski et al. 1998; Weisberg et al. 2007) and, similar to what was found here, when this knowledge concerns a tool’s functional and motor properties (e.g., Ebisch et al. 2007; for similar lesion data, see Goldenberg and Spatt 2009).

Although this proposal is preliminary, it is supported by further analyses of our data. First, the analysis linking brain activity to the participants’ subjective judgments of the actions supported a role in deriving action goals. Whereas in all other regions of the action observation network brain activity varied predominantly with the participants’ prior sensorimotor experience with the actions, in the mFG (and potentially the PMC) it additionally depended on how apparent the actions’ goal was, being stronger for actions whose goal was harder to extract. Thus, the correlational analysis supports the notion that the mFG represents actions in a more high-level, goal-related manner than the predominantly spatiomotor-attuned parietal–premotor networks.

Second, the analysis of effective connectivity supported both the proposed involvement of the mFG in the space task and the idea that processing in the mFG is based on object information. In the space task of the tool action condition, effective connectivity increased, first, to a region in the left SMG, directly adjacent to or overlapping with the SMG activation involved in basic spatiomotor processes. Second, effective connectivity also increased to a region in the left posterior middle temporal gyrus that has previously been implicated in action observation and the visual representation of tools and artifacts (e.g., Chao et al. 1999, 2002; Malach et al. 2002; Tyler et al. 2003). Responses in this area are driven primarily by the stimulus rather than by the task (for a review, see Noppeney 2008), consistent with its lack of activation in the comparison with the control condition (in which similar body parts and possible tool objects were presented). The effective connectivity analysis therefore supports the notion that the goal representations in the mFG interact with basic spatiomotor processes. It also reveals an important contribution of the posterior temporal lobe to tool action observation that was not identified by the main analysis and that might reflect the visual/semantic object representations on which processing in the mFG is based.

Conclusions

This study provided evidence for a division of labor during tool action observation. Whereas typical “mirror” regions in the PMC and the SMG relied on directly perceivable aspects of the objects to establish a basic spatiomotor description of how the action is performed irrespective of the objects used, regions along the IPS and in the mFG extracted action knowledge based on the identities of the objects. Of these 2 regions, the intraparietal regions appeared to be primarily concerned with deriving tool-specific spatiomotor knowledge: how a tool should be used motorically or which of its parts are relevant for action. In contrast, the mFG appeared to derive more general high-level action representations that are linked both to information about the actions’ goal and to spatiomotor knowledge about how the action has to be performed.

The distinction between the purely spatiomotor premotor–inferior parietal systems and the frontoposterior parietal systems that supply object information closely maps onto the “acting on” and “acting with” streams described by Johnson-Frey and Grafton (2003; see also Buxbaum 2001). According to this account, the premotor–parietal circuits instantiate evolutionarily old systems for interacting with objects on the basis of their perceptual attributes (acting on). In contrast, the acting with system, realized in the IPL and the mFG, provides action schemata for the skilled use of tools. The actual use of a tool requires the cooperation of both systems. Our findings indicate that very similar interactions and brain networks may be involved in the observation of tool actions.

Funding

Wellcome Trust Programme grant awarded to S.P.T.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/

Acknowledgments

The authors would like to thank the radiographers at Ysbyty Gwynedd Hospital, Bangor, North Wales. Conflict of Interest: None declared.

References

- Bach P, Gunter TC, Knoblich G, Prinz W, Friederici AD. N400-like negativities in action perception reflect two components of an action representation. Soc Neurosci. 2008;4(3):212–232. doi: 10.1080/17470910802362546. [DOI] [PubMed] [Google Scholar]

- Bach P, Knoblich G, Gunter TC, Friederici AD, Prinz W. Action comprehension: deriving spatial and functional relations. J Exp Psychol Hum Percept Perform. 2005;31(3):465–479. doi: 10.1037/0096-1523.31.3.465. [DOI] [PubMed] [Google Scholar]

- Baumgaertner A, Buccino G, Lange R, McNamara A, Binkofski F. Polymodal conceptual processing of human biological actions in the left inferior frontal lobe. Eur J Neurosci. 2007;25(3):881–889. doi: 10.1111/j.1460-9568.2007.05346.x. [DOI] [PubMed] [Google Scholar]

- Begliomini C, Caria A, Grodd W, Castiello U. Comparing natural and constrained movements: new insights into the visuomotor control of grasping. PLoS One. 2007;2(10):e1108. doi: 10.1371/journal.pone.0001108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Binkofski F, Buccino G. The role of ventral premotor cortex in action execution and action understanding. J Physiol. 2006;99:396–405. doi: 10.1016/j.jphysparis.2006.03.005. [DOI] [PubMed] [Google Scholar]

- Binkofski F, Dohle C, Posse S, Stephan KM, Hefter H, Seitz RJ, Freund HJ. Human anterior intraparietal area subserves prehension: a combined lesion and functional MRI activation study. Neurology. 1998;50:1253–1259. doi: 10.1212/wnl.50.5.1253.

- Boria S, Fabbri-Destro M, Cattaneo L, Sparaci L, Sinigaglia C, Santelli E, Cossu G, Rizzolatti G. Intention understanding in autism. PLoS One. 2009;4(5):e5596. doi: 10.1371/journal.pone.0005596.

- Boronat C, Buxbaum LJ, Coslett H, Tang K, Saffran E, Kimberg D, Detre J. Distinctions between manipulation and function knowledge of objects: evidence from functional magnetic resonance imaging. Brain Res Cogn Brain Res. 2005;23:361–373. doi: 10.1016/j.cogbrainres.2004.11.001.

- Botvinick MM, Buxbaum LJ, Bylsma LM, Jax SA. Toward an integrated account of object and action selection: a computational analysis and empirical findings from reaching-to-grasp and tool-use. Neuropsychologia. 2009;47(3):671–683. doi: 10.1016/j.neuropsychologia.2008.11.024.

- Brass M, Schmitt R, Spengler S, Gergely G. Investigating action understanding: inferential processes versus motor simulation. Curr Biol. 2007;17(24):2117–2121. doi: 10.1016/j.cub.2007.11.057.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund HJ. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur J Neurosci. 2001;13:400–404.

- Buxbaum LJ. Ideomotor apraxia: a call to action. Neurocase. 2001;7:445–458. doi: 10.1093/neucas/7.6.445.

- Buxbaum LJ, Kyle KM, Grossman M, Coslett HB. Left inferior parietal representations for skilled hand-object interactions: evidence from stroke and corticobasal degeneration. Cortex. 2007;43(3):411–423. doi: 10.1016/s0010-9452(08)70466-0.

- Buxbaum LJ, Kyle KM, Menon R. On beyond mirror neurons: internal representations subserving imitation and recognition of skilled object-related actions in humans. Brain Res Cogn Brain Res. 2005;25(1):226–239. doi: 10.1016/j.cogbrainres.2005.05.014.

- Buxbaum LJ, Saffran EM. Knowing “how” vs “what for”: a new dissociation. Brain Lang. 1998;65:73–86.

- Buxbaum LJ, Sirigu A, Schwartz M, Klatzky R. Cognitive representations of hand posture in ideomotor apraxia. Neuropsychologia. 2003;41(8):1091–1113. doi: 10.1016/s0028-3932(02)00314-7.

- Buxbaum LJ, Kyle KM, Tang K, Detre JA. Neural substrates of knowledge of hand postures for object grasping and functional object use: evidence from fMRI. Brain Res. 2006;1117(1):175–185. doi: 10.1016/j.brainres.2006.08.010.

- Calvo-Merino B, Glaser DE, Grèzes J, Passingham RE, Haggard P. Action observation and acquired motor skills: an fMRI study with expert dancers. Cereb Cortex. 2005;15:1243–1249. doi: 10.1093/cercor/bhi007.

- Canessa N, Borgo F, Cappa SF, Perani D, Falini A, Buccino G, Tettamanti M, Shallice T. The different neural correlates of action and functional knowledge in semantic memory: an FMRI study. Cereb Cortex. 2008;18(4):740–751. doi: 10.1093/cercor/bhm110.

- Castiello U. The neuroscience of grasping. Nat Rev Neurosci. 2005;6:726–736. doi: 10.1038/nrn1744.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. NeuroImage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Chao LL, Weisberg J, Martin A. Experience-dependent modulation of category-related brain activity. Cereb Cortex. 2002;12(5):545–551. doi: 10.1093/cercor/12.5.545.

- Chong T, Cunnington R, Williams M, Kanwisher N, Mattingley J. fMRI adaptation reveals mirror neurons in human inferior parietal cortex. Curr Biol. 2008;18(20):1576–1580. doi: 10.1016/j.cub.2008.08.068.

- Creem SH, Proffitt DR. Grasping objects by their handles: a necessary interaction between cognition and action. J Exp Psychol Hum Percept Perform. 2001;27(1):218–228. doi: 10.1037//0096-1523.27.1.218.

- Creem-Regehr SH, Dilda V, Vicchrilli AE, Federer FL, James N. The influence of complex action knowledge on representations of novel graspable objects: evidence from functional magnetic resonance imaging. J Int Neuropsychol Soc. 2007;13(6):1009–1020. doi: 10.1017/S1355617707071093.

- Cross ES, Hamilton AF, Grafton ST. Building a motor simulation de novo: observation of dance by dancers. NeuroImage. 2006;31(3):1257–1267. doi: 10.1016/j.neuroimage.2006.01.033.

- Culham JC, Danckert SL, DeSouza JF, Gati JS, Menon RS, Goodale MA. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Exp Brain Res. 2003;153:180–189. doi: 10.1007/s00221-003-1591-5.

- Daprati E, Sirigu A. How we interact with objects: learning from brain lesions. Trends Cogn Sci. 2006;10:265–270. doi: 10.1016/j.tics.2006.04.005.

- de Lange FP, Spronk M, Willems RM, Toni I, Bekkering H. Complementary systems for understanding action intentions. Curr Biol. 2008;18:454–457. doi: 10.1016/j.cub.2008.02.057.

- di Pellegrino G, Fadiga L, Fogassi L, Gallese V, Rizzolatti G. Understanding motor events: a neurophysiological study. Exp Brain Res. 1992;91:176–189. doi: 10.1007/BF00230027.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–2473. doi: 10.1126/science.1063414.

- Ebisch SJ, Babiloni C, Del Gratta C, Ferretti A, Perrucci MG, Caulo M, Sitskoorn MM, Romani GL. Human neural systems for conceptual knowledge of proper object use: a functional magnetic resonance imaging study. Cereb Cortex. 2007;17(11):2744–2751. doi: 10.1093/cercor/bhm001.

- Frey SH. What puts the how in where: semantic and sensori-motor bases of everyday tool use. Cortex. 2007;43:368–375. doi: 10.1016/s0010-9452(08)70462-3.

- Frey SH, Vinton D, Norlund R, Grafton ST. Cortical topography of human anterior intraparietal cortex active during visually guided grasping. Brain Res Cogn Brain Res. 2005;23(2–3):397–405. doi: 10.1016/j.cogbrainres.2004.11.010.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119:593–609. doi: 10.1093/brain/119.2.593.

- Genovese CR, Lazar NA, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. NeuroImage. 2002;15:870–878. doi: 10.1006/nimg.2001.1037.

- Glover S. Separate visual representations in the planning and control of action. Behav Brain Sci. 2004;27:3–78. doi: 10.1017/s0140525x04000020.

- Goldenberg G. Apraxia and the parietal lobes. Neuropsychologia. 2009;47(6):1449–1459. doi: 10.1016/j.neuropsychologia.2008.07.014.

- Goldenberg G, Spatt J. The neural basis of tool use. Brain. 2009;132:1645–1655. doi: 10.1093/brain/awp080.

- Goodale MA, Humphrey GK. The objects of action and perception. Cognition. 1998;67(1–2):181–207. doi: 10.1016/s0010-0277(98)00017-1.

- Grabowski TJ, Damasio H, Damasio AR. Premotor and prefrontal correlates of category-related lexical retrieval. NeuroImage. 1998;7(3):232–243. doi: 10.1006/nimg.1998.0324.

- Grafton ST, Hamilton AF. Evidence for a distributed hierarchy of action representation in the brain. Hum Mov Sci. 2007;26(4):590–616. doi: 10.1016/j.humov.2007.05.009.

- Green CB, Hummel JE. Familiar interacting object pairs are perceptually grouped. J Exp Psychol Hum Percept Perform. 2006;32(5):1107–1119. doi: 10.1037/0096-1523.32.5.1107.

- Greenlee MW. Human cortical areas underlying the perception of optic flow: brain imaging studies. Int Rev Neurobiol. 2000;44:269–292. doi: 10.1016/s0074-7742(08)60746-1.

- Grèzes J, Armony JL, Rowe J, Passingham RE. Activations related to “mirror” and “canonical” neurones in the human brain: an fMRI study. NeuroImage. 2003;18:928–937. doi: 10.1016/s1053-8119(03)00042-9.

- Haaland KY, Harrington DL, Knight RT. Neural representations of skilled movement. Brain. 2000;123:2306–2313. doi: 10.1093/brain/123.11.2306.

- Hamilton AF, Grafton ST. Goal representation in human anterior intraparietal sulcus. J Neurosci. 2006;26:1133–1137. doi: 10.1523/JNEUROSCI.4551-05.2006.

- Hauk O, Johnsrude I, Pulvermueller F. Somatotopic representation of action words in human motor and premotor cortex. Neuron. 2004;41:301–307. doi: 10.1016/s0896-6273(03)00838-9.

- Hodges JR, Bozeat S, Lambon Ralph MA, Patterson K, Spatt J. The role of conceptual knowledge in object use: evidence from semantic dementia. Brain. 2000;123:1913–1925. doi: 10.1093/brain/123.9.1913.

- Hodges JR, Spatt J, Patterson K. “What” and “how”: evidence for the dissociation of object knowledge and mechanical problem-solving skills in the human brain. Proc Natl Acad Sci U S A. 1999;96:9444–9448. doi: 10.1073/pnas.96.16.9444.

- Iacoboni M, Woods RP, Brass M, Bekkering H, Mazziotta JC, Rizzolatti G. Cortical mechanisms of human imitation. Science. 1999;286:2526–2528. doi: 10.1126/science.286.5449.2526.

- Johnson-Frey SH. What’s so special about human tool use? Neuron. 2003;39:201–204. doi: 10.1016/s0896-6273(03)00424-0.

- Johnson-Frey SH. The neural bases of complex tool use in humans. Trends Cogn Sci. 2004;8(2):71–78. doi: 10.1016/j.tics.2003.12.002.

- Johnson-Frey SH, Grafton ST. From “acting on” to “acting with”: the functional anatomy of action representation. In: Prablanc C, Pelisson D, Rossetti Y, editors. Space coding and action production. New York: Elsevier; 2003. pp. 127–139.

- Johnson-Frey SH, Maloof FR, Newman-Norlund R, Farrer C, Inati S, Grafton ST. Actions or hand-object-interactions: human inferior frontal cortex in action observation. Neuron. 2003;39:1053–1058. doi: 10.1016/s0896-6273(03)00524-5.

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. A distributed left hemisphere network active during planning of everyday tool use skills. Cereb Cortex. 2005;15(6):681–695. doi: 10.1093/cercor/bhh169.

- Kellenbach ML, Brett M, Patterson K. Actions speak louder than functions: the importance of manipulability and action in tool representation. J Cogn Neurosci. 2003;15(1):30–46. doi: 10.1162/089892903321107800.

- Knoblich G, Flach R. Predicting the effects of actions: interactions of perception and action. Psychol Sci. 2001;12:467–472. doi: 10.1111/1467-9280.00387.

- Koski L, Iacoboni M, Mazziotta JC. Deconstructing apraxia: understanding disorders of intentional movement after stroke. Curr Opin Neurol. 2002;15(1):71–77. doi: 10.1097/00019052-200202000-00011.

- Kourtzi Z, Kanwisher N. Activation in human MT/MST by static images with implied motion. J Cogn Neurosci. 2000;12(1):48–55. doi: 10.1162/08989290051137594.

- Lestou V, Pollick F, Kourtzi Z. Neural substrates for action understanding at different description levels in the human brain. J Cogn Neurosci. 2008;20:324–341. doi: 10.1162/jocn.2008.20021.

- Lewis JW. Cortical networks related to human use of tools. Neuroscientist. 2006;12:211–231. doi: 10.1177/1073858406288327.

- Liepmann H. Apraxie: Brugschs Ergebnisse der Gesamten Medizin. Berlin (Germany): Urban & Schwarzenberg; 1920.

- Lindemann O, Stenneken P, van Schie HT, Bekkering H. Semantic activation in action planning. J Exp Psychol Hum Percept Perform. 2006;32:633–643. doi: 10.1037/0096-1523.32.3.633.

- Mahon BZ, Milleville SC, Negri GAL, Rumiati RI, Caramazza A, Martin A. Action-related properties shape object representations in the ventral stream. Neuron. 2007;55(3):507–520. doi: 10.1016/j.neuron.2007.07.011.

- Malach R, Levy I, Hasson U. The topography of high-order human object areas. Trends Cogn Sci. 2002;6(4):176–184. doi: 10.1016/s1364-6613(02)01870-3.

- Manthey S, Schubotz RI, von Cramon DY. Premotor cortex in observing erroneous action: an fMRI study. Brain Res Cogn Brain Res. 2003;15:296–307. doi: 10.1016/s0926-6410(02)00201-x.

- Milner AD, Goodale MA. The visual brain in action. Oxford: Oxford University Press; 1995.

- Nelissen K, Luppino G, Vanduffel W, Rizzolatti G, Orban GA. Observing others: multiple action representation in the frontal lobe. Science. 2005;310:332–336. doi: 10.1126/science.1115593.

- Noppeney U. The neural systems of tool and action semantics: a perspective from functional imaging. J Physiol Paris. 2008;102(1–3):40–49. doi: 10.1016/j.jphysparis.2008.03.009.

- Nowak MA, Plotkin JB, Jansen VAA. The evolution of syntactic communication. Nature. 2000;404:495–498. doi: 10.1038/35006635.

- Ochipa C, Rothi LJG, Heilman KM. Ideational apraxia: a deficit in tool selection and use. Ann Neurol. 1989;25:190–193. doi: 10.1002/ana.410250214.

- Oztop E, Arbib MA. Schema design and implementation of the grasp-related mirror neuron system. Biol Cybern. 2002;87:116–140. doi: 10.1007/s00422-002-0318-1.

- Peelen MV, Downing PE. Within-subject reproducibility of category-specific visual activation with functional MRI. Hum Brain Mapp. 2005;25:402–408. doi: 10.1002/hbm.20116.

- Proverbio AM, Riva F, Zani A. Observation of static pictures of dynamic actions enhances the activity of movement-related brain areas. PLoS One. 2009;4(5):e5389. doi: 10.1371/journal.pone.0005389.