Abstract

Spike trains commonly exhibit interspike interval (ISI) correlations caused by spike-activated adaptation currents. Here we investigate how the dynamics of adaptation currents can represent spike pattern information generated from stimulus inputs. By analyzing dynamical models of stimulus-driven single neurons, we show that the activation states of the correlation-inducing adaptation current are themselves statistically independent from spike to spike. This paradoxical finding suggests a biophysically plausible means of information representation. We show that adaptation independence is elicited by input levels that produce regular, non-Poisson spiking. This adaptation-independent regime is advantageous for sensory processing because it does not require sensory inferences on the basis of multivariate conditional probabilities, reducing the computational cost of decoding. Furthermore, if the kinetics of postsynaptic activation are similar to the adaptation, the activation state information can be communicated postsynaptically with no information loss, leading to an experimental prediction that simple synaptic kinetics can decorrelate the correlated ISI sequence. The adaptation-independence regime may underlie efficient weak signal detection by sensory afferents that are known to exhibit intrinsic correlated spiking, thus efficiently encoding stimulus information at the limit of physical resolution.

Keywords: neural correlations, spike train information

Negative-feedback processes are ubiquitous in biological systems. In neurons, spike-triggered adaptation processes inhibit subsequent spiking and can produce temporal correlations in the spike train, in which a longer than average interspike interval (ISI) makes a shorter subsequent ISI more likely and vice versa. Such correlations are common in neurons (1–7) and model neurons (8–14). The impact of temporal relationships in spike trains, including ISI correlations, on neural information processing has been under intense investigation (1, 2, 15–22). Temporally correlated spike trains pose a challenge for ISI-based sensory inferences because each ISI depends on both present and past stimulus activity, requiring complex strategies to make accurate inferences (23, 24). Some ISI correlations can be attributed to long-time-scale autocorrelations of the input (23, 25) or correlations related to long-time-scale adaptive responses (23, 26). However, if the ISI correlations arise from inputs or noise with fast autocorrelation time scales relative to both the mean ISI and the adaptation time scales of the cell, the resulting fine-grained time structure of spike trains can shape information processing of the underlying slower input fluctuations (2, 3, 11, 15, 18, 19, 27–29). Specifically, Jacobs et al. (29) have recently shown that fine-grained temporal coding of ISIs that takes into account adaptive responses (e.g., refractoriness) not only conveys significant information, but the information benefit accounts for behaviorally important levels of stimulus discrimination.

If fine-grained temporal encoding is utilized for neural spike patterns, how are the temporal codes computed and communicated to postsynaptic neurons? This question is commonly posed as a “decoding” problem, in which statistical models are used to infer likely inputs (15, 17, 22, 29, 30). Whereas these analyses can evaluate if an encoding of the spike train is viable, in this article we investigate the subjacent question of how internal cellular dynamics can represent a fine-grained spike train encoding and carry out decoding.

Commonly, spike-triggered adaptation currents (ACs) decay exponentially, reflecting state transitions of a large number of independent molecules governing the current’s conductance (31). Through analysis of mathematical models, we show that an AC can efficiently represent spike train information. Our main result is that the dynamics of correlated spike emission can create statistical independence in the adaptation process. That is, we have found an independent statistical decomposition of correlated spike trains. Decoding of correlated ISIs involves inferences on the basis of conditional probability distributions (11, 29, 30). Yet, we find the AC activation states are a probabilistically independent and biophysical representation of the ISI code that does not require decoding on the basis of more computationally costly conditional probabilities. This dynamical coding property is distinct from coarse-grained integrative coding by slow-time-scale, activity-dependent processes (32–35). We further show that the entropy of the AC states represents the maximal stimulus information when the AC system is sufficiently activated and displays weak signal detectability levels equivalent to noncorrelated Poisson ISI coding but at a much-reduced firing-rate gain. We conclude by showing how simple synaptic dynamics may communicate adaptation state information to postsynaptic targets, which provides a testable experimental prediction of our theory. The adaptation-independence property is relevant to information transmission in regular-firing sensory afferents (1, 3, 36), in which correlated spike sequences are used to discriminate weak underlying signals from noise (2, 3, 11).

Results

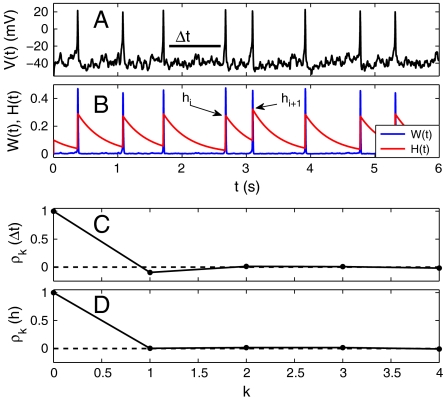

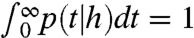

Noisy stimulus current injection x(t) to a Morris–Lecar (ML) model (37), with generic parameters (38), produced input fluctuation-driven spike patterns (Fig. 1A and SI Text, SI Methods). We set the constant injected current Im = 38 nA/cm2 to be just below the deterministic threshold for firing, so that fluctuations in the noisy input x(t) determine spike times {ti}. The strength of the fluctuations was large enough to perturb V(t) past spiking threshold but small enough to not obscure the intrinsic spike kinetics. In addition to the fast-activating potassium current W(t) responsible for spike repolarization, the model possessed a slower-decaying spike-activated AC: H(t) (31, 35) (Fig. 1B). The AC kinetics were modeled as a voltage-gated potassium channel, similar to a KV3.1 channel (2, 31).

Fig. 1.

Morris–Lecar model exhibits fast-fluctuating input-driven spike trains (A). A spike at time ti activates the AC, which can vary in peak amplitude hi, depending on the previous ISI: Δti-1 = ti - ti-1 (B). Serial ISI correlations ρk between Δti and Δti+k exist only between subsequent ISI pairs (k = 1), whereas longer-range pairs (k > 1) are uncorrelated (C). Paradoxically, peak AC amplitudes (hi) are uncorrelated (D).

Activation of the AC hyperpolarizes the membrane and discourages spiking. Spikes that occur in quick succession induce elevated AC activation, making the later ISIs longer on average, whereas low AC values make subsequent ISIs shorter on average; both cases result in temporal ISI correlations. Monte Carlo simulations reveal that ISI correlations ρk = Corr(Δti,Δti+k) (where Δti = ti+1 - ti) exist only between subsequent ISIs (Δti and Δti+1), but all later ISIs (k > 1) have effectively zero correlation in the parameter regime we have chosen (Fig. 1C). Note that without random fluctuations in x(t) there would be no ISI correlations and only rhythmic firing or silence, depending on the bias current Im. Hence, the ISI correlations are induced by the fast-fluctuating input x(t), and so the correlations can be used to infer properties of x(t).

In addition to ISI correlations, we also investigated correlations in the AC conductance H(t). We measured the peak activation of H(t), defined as hi, that occurs immediately after each spike (see Fig. 1B). The hi value defines the activation level of the current, and so we refer interchangeably to hi as the AC activation state associated with each spike. Paradoxically, the hi activation states are uncorrelated (Fig. 1D). How this zero correlation property emerges through the dynamical coupling between the adaptation H(t) and the spike generation mechanism is investigated in the following.

Mathematical Analysis.

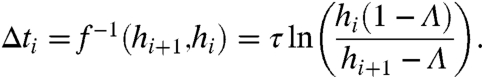

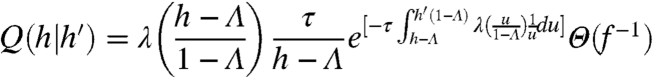

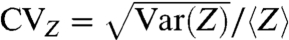

What conditions allow for ISI correlations to emerge together with uncorrelated AC activation states hi? To answer this question, we derived a more analytically tractable model of AC-limited spike emission, starting with H(t). To simplify, note that the smooth H dynamics in Fig. 1B exhibit a long exponential decay phase between spikes followed by a fast activation phase during a spike. By approximating these phases of H(t) with piecewise exponentials (see SI Text, Approximation of H(t) and Derivation of Q(h|h′)), we derived a map from hi to hi+1:

$$h_{i+1} = f(h_i,\Delta t_i) \equiv \Lambda + (1-\Lambda)\,h_i\,e^{-\Delta t_i/\tau} \tag{1}$$

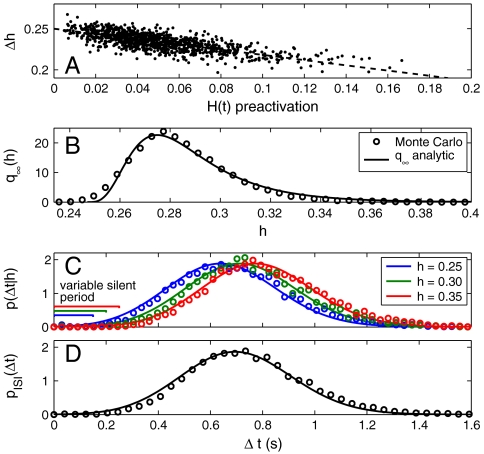

The parameter Λ∈(0,1) is the minimum activation level for H(t), and τ is the decay time scale. Setting Λ = 1 is an extreme case in which the current is always maximally activated for every spike, whereas the other extreme (Λ = 0) is a nonadapting process [H(t) = 0]. The linear map [1] approximates the activation states hi of H(t) as evidenced in the plot of the activation change Δh ≡ hi+1 - hie-Δti/τ as a function of the AC level just prior to the activation phase H ≈ hie-Δti/τ (Fig. 2A). This linear relationship has a y intercept Λ and slope -Λ.

Fig. 2.

Analytical model [6] captures the spike statistics of the biophysically realistic model with α = 16.7, β = 29, and τ = 400 ms. (A) The H(t) pre- minus postactivation change Δh = hi+1 - hie-Δti/τ exhibits a linear relationship with the preactivation level H(t) ≈ hie-Δti/τ with slope -Λ ≈ -0.246. The Morris–Lecar system’s empirical limiting distributions (dots) of AC states h (B), and ISI distributions, conditioned on h, with h-dependent variable silent periods (C), and unconditioned (D), are fit by Eqs. 1–6 (solid lines).

Because Λ < 1, the map f, [1], can always be inverted to solve for Δti:

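$$\Delta t_i = f^{-1}(h_{i+1},h_i) = \tau\ln\!\left[\frac{h_i(1-\Lambda)}{h_{i+1}-\Lambda}\right] \tag{2}$$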

Hence, the hi sequence contains the same information as the ISI sequence. Note that the hi values are contained in the interval (Λ,1), from which we infer from [2] that Δti≥0 implies hi+1 ≤ Λ + hi(1 - Λ).
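The equivalence between the two sequences can be illustrated with a short round trip. This is a minimal sketch that assumes the map has the form h_{i+1} = Λ + (1 - Λ) h_i e^{-Δt_i/τ}, which is the form implied by the intercept Λ and slope -Λ quoted for Fig. 2A; the function names, the parameter values, and the ISI list are hypothetical and chosen only for illustration.

```python
import math

def ac_map(h_prev, dt, lam_min, tau):
    """Assumed form of map [1]: peak activation after the next spike."""
    return lam_min + (1.0 - lam_min) * h_prev * math.exp(-dt / tau)

def isi_from_states(h_next, h_prev, lam_min, tau):
    """Inverse of the map in its ISI argument: the interval implied by two peaks."""
    return tau * math.log(h_prev * (1.0 - lam_min) / (h_next - lam_min))

LAM_MIN, TAU = 0.2, 0.4            # Lambda and tau (s); illustrative values
isis = [0.35, 0.80, 0.52, 1.10]    # hypothetical ISIs (s)
states = [0.3]                     # hypothetical initial peak activation

# Forward pass: ISIs -> activation states.
for dt in isis:
    states.append(ac_map(states[-1], dt, LAM_MIN, TAU))

# Backward pass: activation states -> ISIs.
recovered = [isi_from_states(states[i + 1], states[i], LAM_MIN, TAU)
             for i in range(len(isis))]
print(states)
print(recovered)
```

The recovered intervals match the inputs to floating-point precision, and every state respects the bound h_{i+1} ≤ Λ + h_i(1 - Λ) that follows from nonnegative ISIs.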

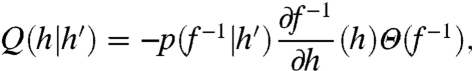

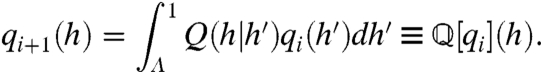

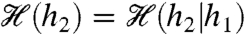

The sequence from hi to hi+1 is determined by the intervening ISI, which is stochastically determined by the fluctuating input x(t). Here we derive the stochastic dynamics of the hi sequence by approximating the stochastic spike process that generates Δti. Following Muller et al. (12), the likely times of the next ISI, conditioned on hi, are decided by the probability density p(Δti|hi) (defined below). Longer ISIs should be more likely for larger h values and vice versa (Fig. 2C). Assuming for now that p(Δti|hi) is known, we define the Markov transition function Q from h′ ≡ hi to h ≡ hi+1 by substituting Δt = f-1(h,h′):

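$$Q(h|h') = -\,p\!\left(f^{-1}(h,h')\,\middle|\,h'\right)\frac{\partial f^{-1}}{\partial h}\,\Theta\!\left(f^{-1}\right) \tag{3}$$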

where the negative sign ensures positivity of the operator, ∂f-1/∂h is the Jacobian of f-1 [we abbreviate f-1 = f-1(h,h′)], and Θ(x) is the Heaviside step function (see SI Text, Approximation of H(t) and Derivation of Q(h|h′)). The Heaviside factor in [3] disallows (h,h′) combinations that lead to impossible negative ISIs. We define the stochastic dynamics of the h sequence in terms of the evolution of an h distribution qi(h′) at the ith spike, to the next qi+1(h):

$$q_{i+1}(h) = \int_{\Lambda}^{1} Q(h|h')\,q_i(h')\,dh' \equiv \mathcal{Q}[q_i](h) \tag{4}$$

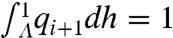

Note that $\int_\Lambda^1 Q(h|h')\,dh = 1$ and $\int_\Lambda^1 q_i(h')\,dh' = 1$ imply $\int_\Lambda^1 q_{i+1}(h)\,dh = 1$. Thus, [4] maps densities to densities. Under mild conditions of irreducibility and ergodicity, Frobenius–Perron theory (39) predicts that there exists a unique limiting distribution q∞(h) such that $\lim_{i\to\infty}\mathcal{Q}^i[q_0] = q_\infty$, in which $\mathcal{Q}^i$ is the ith composition of the operator $\mathcal{Q}$ defined by [4], for any starting distribution q0. Given q∞ exists, the ISI density is calculated as

$$p_{ISI}(\Delta t) = \int_{\Lambda}^{1} p(\Delta t|h)\,q_\infty(h)\,dh \tag{5}$$

Fig. 2 B–D shows analytical approximations to the ML Monte Carlo results for q∞(h), p(Δt|h), and pISI(Δt), respectively, which are derived as follows.

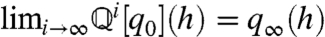

We generated p(Δt|h) with a spike rate function λ(H)≥0, in which the probability of a spike in a small time interval δt is λ(H)δt (12, 30, 40). To correctly model the effect of the AC on spiking, λ(H) must elicit lower rates for large H and vice versa. The conditional ISI density is calculated as $p(\Delta t|h) = \lambda\!\left(he^{-\Delta t/\tau}\right)\exp\!\left[-\int_0^{\Delta t}\lambda\!\left(he^{-t/\tau}\right)dt\right]$. Without loss of generality, we used the specific model (12):

$$\lambda(H) = \alpha\,e^{-\beta H} \tag{6}$$

The parameter α > 0 sets the overall spike rate of the cell, and β≥0 sets the strength of AC. If β is large, then moderate activation levels of H induce a silent period until H(t) decays sufficiently (see Fig. 2C). Conversely, a small β reduces the effect that H has on spike probability. Choosing β = 0 or Λ = 0 yields homogeneous Poisson firing statistics from the model in [6] with rate α. Hence, there is a homotopy between adapting and Poisson trains through β or Λ.
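The silent period induced by a large β can be made concrete by tabulating the conditional ISI density numerically. The sketch below assumes the exponential rate λ(H) = α e^{-βH} and the standard inhomogeneous-Poisson first-spike density, with H(t) = h e^{-t/τ} between spikes. The helper names, the integration grid, and the unit choices (α in 1/s, τ in s) are assumptions made for illustration.

```python
import math

def rate(H, alpha, beta):
    """Assumed form of the rate function [6]: alpha * exp(-beta * H)."""
    return alpha * math.exp(-beta * H)

def conditional_isi_density(h, alpha, beta, tau, t_max=5.0, n=5000):
    """Tabulate p(dt | h) = rate(H(dt)) * exp(-integrated rate), H(t) = h * exp(-t / tau)."""
    step = t_max / n
    times, density = [], []
    cumulative = 0.0                       # running integral of the rate (trapezoid rule)
    previous = rate(h, alpha, beta)
    for k in range(1, n + 1):
        t = k * step
        current = rate(h * math.exp(-t / tau), alpha, beta)
        cumulative += 0.5 * (previous + current) * step
        times.append(t)
        density.append(current * math.exp(-cumulative))
        previous = current
    return times, density, step

def summarize(h, lam_min, alpha, beta, tau):
    """Return total mass, mean ISI, and mass falling inside the nominal silent period."""
    times, density, step = conditional_isi_density(h, alpha, beta, tau)
    silent = tau * math.log(h / lam_min)   # time for H(t) to decay from h to Lambda
    total = sum(density) * step
    mean = sum(t * p for t, p in zip(times, density)) * step
    early = sum(p for t, p in zip(times, density) if t < silent) * step
    return total, mean, early

# Fig. 2 values; alpha assumed in 1/s and tau in s.
LAM_MIN, ALPHA, BETA, TAU = 0.246, 16.7, 29.0, 0.4
total_lo, mean_lo, early_lo = summarize(0.26, LAM_MIN, ALPHA, BETA, TAU)
total_hi, mean_hi, early_hi = summarize(0.40, LAM_MIN, ALPHA, BETA, TAU)
print(total_hi, mean_hi - mean_lo, early_hi)
```

With these values almost no probability mass falls before the AC has decayed to Λ, and raising the starting state from 0.26 to 0.40 lengthens the mean ISI by very nearly τ ln(0.40/0.26), that is, by the extra deterministic decay time alone.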

The exponential dependence of firing rate on H in [6] arises commonly from spiking neural models on the basis of diffusion processes, where the effect of noisy input x(t) is represented as a probability of spiking per unit time (12, 14, 30, 40–42). Moreover, Eq. 6 approximates biophysically realistic models such as ML (Fig. 2) effectively over a realistic range of baseline input and fluctuation levels, provided that the autocorrelation time scale of input current is fast relative to the mean ISI (12, 40), which is consistent with the fast-fluctuating noisy input x(t) used in Fig. 1.

Adaptation Independence.

By analyzing the transition function Q in [3], we can show that the sequential h values can be not only uncorrelated (Fig. 1D) but also statistically independent. For a general rate function λ(H), Eq. 3 becomes

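$$Q(h|h') = \frac{\tau}{h-\Lambda}\,\lambda\!\left(\frac{h-\Lambda}{1-\Lambda}\right)\exp\!\left[-\tau\int_{(h-\Lambda)/(1-\Lambda)}^{h'}\frac{\lambda(u)}{u}\,du\right]\Theta\!\left(f^{-1}\right) \tag{7}$$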

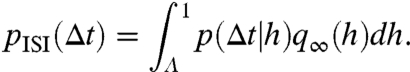

(see SI Text, Approximation of H(t) and Derivation of Q(h|h′)). Independence is established by proving that the Markov transition [7] does not depend on h′: Q(h|h′) = Q(h), which also implies q∞(h) = Q(h). Independence is achieved if the rate function

$$\lambda(H) \approx 0 \quad \text{for } H \ge \Lambda \tag{8}$$

The condition [8] requires the peak activation h≥Λ to inhibit spiking for a nonzero time period until the AC decays. For the specific λ in [6], independence occurs if β (the strength of the AC) is sufficiently large: βΛ - ln(ατ)≫1. The physiological meaning of the condition [8] is that each AC activation must induce a nonzero postspike silent period Δw ≡ τ ln(h′/Λ), as is illustrated in Fig. 2C, in addition to the usual absolute and relative refractory periods. The silent period is nonstochastic for a given h′ and ends when H(t) decays below the threshold value Λ so that λ[H(t)] > 0—the larger the h′ value, the longer the silent period. The selection of h is determined by the remaining stochastic portion of the ISI Δt - Δw, which is a renewal process, and thus is independent of h′. Note also that the independence condition [8] is generic for all AC time scales τ because λτ in the integral of [7] is nondimensional. Of course, an AC-mediated silent period is a common phenomenon, so the result is broadly applicable, including to the model in Figs. 1 and 2 and SI Text, Fitting the ML Model.
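The role of the silent period can be checked numerically by evaluating the transition density for two different previous states. The sketch below is not taken from the SI: it assumes the exponential rate λ(H) = α e^{-βH} and obtains Q(h|h′) by changing variables from the ISI to h in the first-spike density, using u = (h - Λ)/(1 - Λ) for the AC level just before the spike. All function names, the quadrature scheme, and the test points are hypothetical.

```python
import math

def rate(H, alpha, beta):
    """Assumed form of the rate function [6]."""
    return alpha * math.exp(-beta * H)

def scaled_rate_integral(u_lo, u_hi, alpha, beta, tau, n=2000):
    """tau * integral of rate(v) / v from u_lo to u_hi (midpoint rule in w = ln v)."""
    a, b = math.log(u_lo), math.log(u_hi)
    dw = (b - a) / n
    total = sum(rate(math.exp(a + (k + 0.5) * dw), alpha, beta) for k in range(n))
    return tau * dw * total

def transition_density(h, h_prev, lam_min, alpha, beta, tau):
    """Q(h | h_prev) from a change of variables ISI -> h (assumed form of [7])."""
    u = (h - lam_min) / (1.0 - lam_min)       # AC level just before the spike
    if u <= 0.0 or u > h_prev:                # Heaviside factor: excludes negative ISIs
        return 0.0
    jacobian = tau / (h - lam_min)            # |d(ISI)/dh|
    survival = math.exp(-scaled_rate_integral(u, h_prev, alpha, beta, tau))
    return jacobian * rate(u, alpha, beta) * survival

def relative_spread(h, beta, lam_min=0.246, alpha=16.7, tau=0.4):
    """Relative change in Q(h | h_prev) between two previous states."""
    q_a = transition_density(h, 0.26, lam_min, alpha, beta, tau)
    q_b = transition_density(h, 0.40, lam_min, alpha, beta, tau)
    return abs(q_a - q_b) / q_a

spread_strong = relative_spread(0.28, beta=29.0)   # strong AC: silent period present
spread_weak = relative_spread(0.28, beta=5.0)      # weak AC: no effective silent period
print(spread_strong, spread_weak)
```

For the strong-AC case the two evaluations agree to well under one percent, whereas for the weak-AC case the density depends clearly on the previous state, which is the behavior the silent-period condition describes.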

To prove that [8] implies independence, assume [8] holds. Then the upper integration limit of [7] can be replaced with the lower bound h′ = Λ ≥ Λ(1 - Λ) or any value above it with no consequence because the integrand is effectively zero above the bound. Furthermore, [8] gives an upper bound for h, because Q(h|h′) ∼ 0 for h ≥ Λ(1 - Λ) + Λ ≥ Λ, so h∈[Λ, min(2Λ - Λ², 1)]. Note that h∈[Λ, min(2Λ - Λ², 1)] implies f-1 > 0, and so the Heaviside factor in [7] can be omitted. Finally, the Jacobian ∂f-1/∂h in [3] depends only on h and not h′. Taken together, the above deductions imply Q(h|h′) = Q(h) = q∞(h), which is independence (see SI Text, Fitting the ML Model). In the following, we analyze how changes in mean firing rate affect adaptation independence and spike statistics, including correlations.

Spike Train Statistics.

In the independence regime, realistic correlated spike trains can be generated from independent realizations of q∞-distributed activation states {hi} by f-1(hi+1,hi) = Δti. Independence also explains why only adjacent ISIs are correlated in Fig. 1C: Δti and Δti+1 both depend on hi+1 and so are correlated, whereas Δti and Δti+k for k≥2 are independent because they are determined by distinct and independent activation states. Conversely, in the nonindependent regime, nonadjacent ISIs exhibit correlations (see SI Text, Fitting the ML Model, and Fig. 3 below).
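The correlation structure described here can be reproduced with a small Monte Carlo sketch of the rate model. The code assumes λ(H) = α e^{-βH} and the map h_{i+1} = Λ + (1 - Λ) h_i e^{-Δt_i/τ}, and it draws each ISI by thinning a Poisson process of rate α, which is valid because the assumed rate never exceeds α. The sampler, the seed, the sample size, and the unit choices are illustrative assumptions rather than the simulation procedure used for the figures.

```python
import math
import random

def sample_isi(h, alpha, beta, tau, rng):
    """Draw one ISI by thinning a Poisson process of rate alpha (an upper bound on the rate)."""
    t = 0.0
    while True:
        t += rng.expovariate(alpha)
        level = h * math.exp(-t / tau)               # AC level at the candidate time
        if rng.random() < math.exp(-beta * level):   # accept with probability rate / alpha
            return t

def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def serial_correlation(x, lag):
    """Correlation between x_i and x_{i+lag}."""
    return pearson(x[:-lag], x[lag:])

# Fig. 2 values; alpha assumed in 1/s and tau in s.
LAM_MIN, ALPHA, BETA, TAU = 0.246, 16.7, 29.0, 0.4
rng = random.Random(1)
h, states, isis = 0.3, [], []
for _ in range(20000):
    dt = sample_isi(h, ALPHA, BETA, TAU, rng)
    states.append(h)
    isis.append(dt)
    h = LAM_MIN + (1.0 - LAM_MIN) * h * math.exp(-dt / TAU)   # assumed form of map [1]

rho_isi_1 = serial_correlation(isis, 1)
rho_isi_2 = serial_correlation(isis, 2)
rho_h_1 = serial_correlation(states, 1)
print(rho_isi_1, rho_isi_2, rho_h_1)
```

In this parameter regime the adjacent-ISI coefficient comes out clearly negative, while the lag-2 ISI coefficient and the lag-1 coefficient of the activation states are indistinguishable from zero at this sample size.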

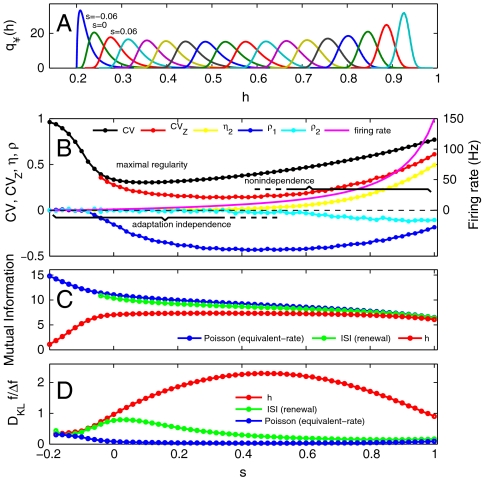

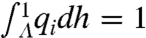

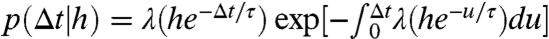

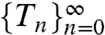

Fig. 3.

Input discrimination from AC states is efficient relative to similar uncorrelated spike trains. (A) Limiting distributions q∞(h) over a range of inputs s from s = -0.06 to 1 for the model (Eq. 6, τ = 400 ms, Λ = 0.2, α = 14.63 s-1, β = 30; see SI Text, Fitting the ML Model for an analysis of parameter variation). (B) CV versus input s has Poisson statistics (CV = 1) for low input levels (s < 0). Increased input leads to more regular spiking that overlaps the region of adaptation independence (s approximately less than 0.4) where the secondary eigenvalue of the h operator is essentially zero (η2 ∼ 0). As input increases, it causes a loss of independence (η2 > 0), corresponding to increased spike train irregularity measured by CVZ. (C) Mutual information IAC exhibits a plateau region of elevated information for sufficiently activated AC states but is uniformly lower than the mutual information of the renewal ISI process [pISI(Δt), Eq. 5, lower points omitted but are very near Poisson] and the bounding equivalent-rate Poisson mutual information. (D) The information gain DKL divided by the proportional change in firing rate ΔfA/fA for Δs = 0.02 shows the AC system has greater discriminability per firing-rate change compared to the equivalent renewal ISI process and the equivalent-rate Poisson process.

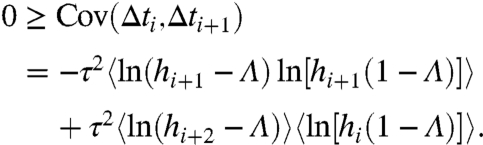

The variability in the hi sequence is also the source of the negative ISI correlation structure in the adaptation-independence regime (Fig. 1C). By inserting f-1(hi+1,hi) = Δti into the ISI covariance formula Cov(Δti,Δti+1) = 〈ΔtiΔti+1〉-〈Δti〉2 and using Chebyshev’s algebraic inequality (see SI Text, ISI Correlations), we deduce from independence that

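$$\mathrm{Cov}(\Delta t_i,\Delta t_{i+1}) = \tau^2\left[\langle\ln h\rangle\langle\ln(h-\Lambda)\rangle - \langle\ln(h)\,\ln(h-\Lambda)\rangle\right] \le 0 \tag{9}$$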

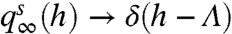

Thus, sequential ISIs have nonpositive correlations in the adaptation-independent regime, consistent with Fig. 1C. Furthermore, ISI correlations are zero only if h does not vary. That is, Cov(Δti,Δti+1) → 0 only if q∞(h) → δ(h - 〈h〉), where δ(·) is the Dirac functional, which occurs only in the limit of zero stochastic input [i.e., x(t) → 0].

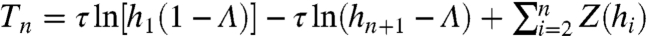

Negative serial ISI correlations regularize the spike train over long time scales, as can be understood by analyzing the variability of the sum of n sequential ISIs: $T_n = \sum_{i=1}^{n}\Delta t_i$ (10). Recall that Δti/τ = ln[hi(1 - Λ)] - ln(hi+1 - Λ), so Tn is a telescoping sum of n + 1 summands: $T_n = \tau\ln[h_1(1-\Lambda)] + \sum_{i=2}^{n} Z(h_i) - \tau\ln(h_{n+1}-\Lambda)$, where

$$Z(h) \equiv \tau\ln\!\left[\frac{h(1-\Lambda)}{h-\Lambda}\right] \tag{10}$$

In the adaptation-independent regime, the Z(hi) terms are independent time intervals making up Tn, with mean 〈Z〉 = 〈Δt〉. If there is independence, the variance of Tn is

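$$\mathrm{Var}(T_n) = n\,\mathrm{Var}(\Delta t) + 2(n-1)\,\mathrm{Cov}(\Delta t_i,\Delta t_{i+1}) \tag{11}$$

$$\mathrm{Var}(T_n) = \mathrm{Var}(\Delta t) + (n-1)\,\mathrm{Var}(Z) \tag{12}$$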

Note that Var(Z) is the dominant term in [12] for large n and thus is a good measure of long-time-scale spike train variability. Note, however, with nonindependence [11] and [12] would contain higher-order covariance terms, and, in general, Var(Z) can be computed from moments of Z given q∞ (as in Fig. 3). However, in the adaptation-independence regime we deduce from [11] that

$$\mathrm{Var}(Z) = \mathrm{Var}(\Delta t) + 2\,\mathrm{Cov}(\Delta t_i,\Delta t_{i+1}) \tag{13}$$

The first term of [13] accounts for intrinsic variability of a single ISI, and the negative-valued second term (see [9]) lowers the resulting variance. Thus, spike pattern regularity is a direct result of ISI covariance. In the following, we study how the variability in the spike train and adaptation independence are modulated as a function of mean firing rate.
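The variance bookkeeping can be verified on synthetic data. The sketch below takes Z(h) = τ ln[h(1 - Λ)/(h - Λ)], the per-state interval implied by telescoping Δt_i/τ = ln[h_i(1 - Λ)] - ln(h_{i+1} - Λ), and checks both the telescoped sum and the relation Var(Z) = Var(Δt) + 2 Cov(Δt_i, Δt_{i+1}) for independent states. The uniform distribution used to draw the states is a hypothetical stand-in for q∞, chosen only so that every implied ISI is positive; it is not fit to any model.

```python
import math
import random

LAM_MIN, TAU = 0.2, 0.4    # Lambda and tau (s); illustrative values
rng = random.Random(2)

# Hypothetical stand-in for q_inf: i.i.d. h = Lambda + (1 - Lambda) * u, u ~ Uniform(0.02, 0.1).
states = [LAM_MIN + (1.0 - LAM_MIN) * rng.uniform(0.02, 0.1) for _ in range(50001)]

def log_gain(h):
    """tau * ln[h (1 - Lambda)]"""
    return TAU * math.log(h * (1.0 - LAM_MIN))

def log_offset(h):
    """tau * ln(h - Lambda)"""
    return TAU * math.log(h - LAM_MIN)

def covariance(x, y):
    """Population covariance of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    return sum((a - mx) * (b - my) for a, b in zip(x, y)) / n

# ISIs implied by successive states, and the per-state intervals Z(h).
isis = [log_gain(states[i]) - log_offset(states[i + 1]) for i in range(len(states) - 1)]
z_values = [log_gain(h) - log_offset(h) for h in states]

var_isi = covariance(isis, isis)
cov_adjacent = covariance(isis[:-1], isis[1:])
var_z = covariance(z_values, z_values)

# Telescoped form of T_n: two boundary terms plus the interior Z(h_i).
t_direct = math.fsum(isis)
t_telescoped = log_gain(states[0]) + math.fsum(z_values[1:-1]) - log_offset(states[-1])
print(var_isi, cov_adjacent, var_z, t_direct - t_telescoped)
```

The adjacent-ISI covariance is negative, Var(Z) is smaller than the single-ISI variance by about twice that covariance, and the direct and telescoped sums agree to rounding error.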

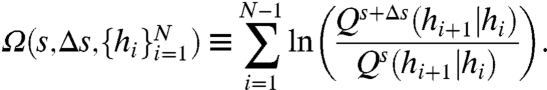

Suppose we introduce a nonfluctuating baseline input conductance s to the model: λ(H - s). Increasing (decreasing) the input s has the same effect as increasing (decreasing) injected current Im in the ML model (see SI Text, Fitting the ML Model). The baseline s sets the overall firing rate of the cell by effectively scaling α. The input s modulates the ISI correlations, ISI variability, and adaptation independence, as we now show.

The baseline s defines a set of operators $\mathcal{Q}_s$, [4], and operator spectra. For each operator $\mathcal{Q}_s$ there is a single unit eigenvalue η1 = 1 and corresponding eigenfunction $q_\infty^s$ (Fig. 3A). The secondary spectrum (ηj for j > 1) is effectively zero for low input values (s ≲ 0.4). Increasing s leads to an increase in the secondary spectrum from zero at s ∼ 0.4, corresponding to a loss of adaptation independence (η2, Fig. 3B). The secondary spectrum η2 measures the degree of independence because it is the fractional contraction due to $\mathcal{Q}_s$ [4] of the linear subspace orthogonal to q∞ (see SI Text, Fitting the ML Model).

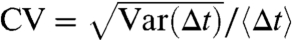

The loss of adaptation independence for increasing baseline levels s occurs concomitantly with changes in ISI regularity, which is measured by the coefficient of variation of the ISI [$\mathrm{CV} = \sqrt{\mathrm{Var}(\Delta t)}/\langle\Delta t\rangle$]. There is a nonmonotonicity in the s-input-CV graph (Fig. 3B), first declining from unity, which is associated with a transition from subthreshold and very-low-firing-rate Poisson statistics, to more regular firing at a minimum CV value (s ∼ 0.1). This drop is associated with baseline input levels near the deterministic spike threshold (see SI Text, Fitting the ML Model). The CV then increases for higher baseline levels, which is a unique feature of adapting models (14, 40) and contrasts with the monotonic s-input-CV graph of nonadapting models (42). Very high baselines (s ≳ 0.8) result in exponential firing-rate gains that are considered nonphysiological and will not be considered further (see SI Text, Fitting the ML Model).

Long-time-scale spike train regularity, measured by the CVZ [$\mathrm{CV}_Z = \sqrt{\mathrm{Var}(Z)}/\langle Z\rangle$], exhibits a local minimum similar to the CV, but it occurs at a higher baseline level that is near the upper boundary of adaptation independence (s ∼ 0.4). This minimum point occurs for input levels above the deterministic threshold for spiking (see SI Text, Fitting the ML Model). The minimum point of CVZ depends on the variability of ISIs but is dominated by the minimum point of the first serial correlation coefficient ρ1 (see Eq. 13). Previous studies have detailed how ISI correlations can increase spike train regularity and enhance coarse-grained firing-rate-coding precision (1, 2). Here we observe a broad range of baseline inputs exhibiting high spike train regularity associated with a sufficiently activated AC. However, our analysis also reveals that there is a subset of this range that exhibits adaptation independence, which we will show provides additional advantages for fine-grained sensory coding. In the next two sections, we investigate information-theoretical measures of the AC (Fig. 3C), and we show how independent adaptation states can be utilized to detect small changes in the baseline Δs (Fig. 3D).

Information-Theoretic Analysis of Activation States.

The ISI sequence encodes information about the fluctuating stimulus x(t) (17) that is accessible from the AC activation states hi. We find that the AC states better encode the effect of stimulus fluctuations x(t) when the AC is activated sufficiently to produce discernible variations in the AC from spike to spike. To measure this property, we derived the mutual information (MI) per spike IAC between the stimulus x(t) and the AC states and discovered that it was the entropy of the h-process

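$$I_{AC} = -\int_{\Lambda}^{1}\!\!\int_{\Lambda}^{1} Q(h|h')\,q_\infty(h')\,\ln Q(h|h')\,dh\,dh' - \ln(\delta h) \tag{14}$$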

where 0 < δh ≪ 1 sets the resolution of the MI (43). A rigorous derivation of [14] and the representation of x(t) in the rate model [6] is given in SI Text, Mutual Information. Note that adaptation independence implies $I_{AC} = -\int_\Lambda^1 q_\infty(h)\ln q_\infty(h)\,dh - \ln(\delta h)$, which is significant because the MI of any N-spike sequence length (i.e., “word” length; see ref. 20) scales linearly as $N I_{AC}$. Fig. 3C shows a broad “plateau” region of elevated MI (red) for baseline levels -0.06 ≲ s ≲ 1 associated with higher-variance $q_\infty^s$ distributions (Fig. 3A). This plateau decreases to zero MI for decreasing baseline levels because $q_\infty^s(h) \to \delta(h-\Lambda)$: this is a regime of very long ISIs (minimal h values) that can be distinguished only by small differences in AC states. Thus, AC-based encoding carries more information if the AC is sufficiently activated by the input s.

Fig. 3C also shows the MI of the renewal ISI process pISI(Δt) (see Eq. 5). The renewal ISI information is close to the MI of a maximal-entropy Poisson spike train that bounds all processes with the equivalent mean firing rate: IPoisson = ln(〈Δt〉) + 1 - ln(δt) (see SI Text, Mutual Information) for λ = 〈Δt〉-1. Thus, there is a significant loss of MI when using AC states for input coding relative to similar renewal processes, coding that is worse for low baselines where the AC is minimally activated. However, this information loss does not hinder detection of weak changes in the baseline input Δs, as we show in the next section.
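The Poisson bound quoted above can be checked against the exact entropy of binned exponential ISIs. The sketch below uses the fact that the bin index of an exponential interval sampled at resolution δt follows a geometric law, whose entropy has a closed form; the mean ISI and the resolution are hypothetical values chosen for illustration, and entropies are in nats.

```python
import math

def binned_exponential_entropy(mean_isi, dt):
    """Exact entropy (nats) of exponential ISIs binned at resolution dt.

    The bin index k has the geometric law P(k) = (1 - r) * r**k with r = exp(-dt / mean_isi).
    """
    r = math.exp(-dt / mean_isi)
    return -math.log(1.0 - r) - r * math.log(r) / (1.0 - r)

def poisson_information(mean_isi, dt):
    """Small-dt expression quoted in the text: ln(mean ISI) + 1 - ln(dt)."""
    return math.log(mean_isi) + 1.0 - math.log(dt)

MEAN_ISI, DT = 0.5, 0.001    # hypothetical mean ISI and time resolution (s)
exact = binned_exponential_entropy(MEAN_ISI, DT)
approx = poisson_information(MEAN_ISI, DT)
print(exact, approx)
```

For a resolution much finer than the mean ISI the two values agree closely, which is the regime in which the expression for IPoisson applies.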

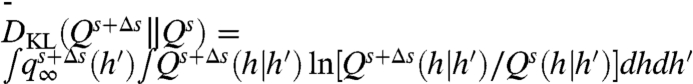

Weak Signal Detection.

Changes in the AC states can be used to discriminate small changes in the baseline Δs. We consider Δs as an adiabatic change in the baseline, effectively constant over the time period of many spikes. Such signal detection is commonly performed by correlated fast-spiking sensory afferents that detect small changes in mean input level in the presence of noisy fluctuations (1, 3, 27). We wish to determine if a given h sequence $\{h_i\}_{i=1}^{N}$ more likely originated from baseline level s or baseline level s + Δs. The most statistically efficient discriminator for this task is the log likelihood ratio (LLR) (43)

$$\Omega = \sum_{i=1}^{N-1}\ln\frac{Q_{s+\Delta s}(h_{i+1}|h_i)}{Q_{s}(h_{i+1}|h_i)} \tag{15}$$

If the h data originate from a perturbed-input distribution (Δs ≠ 0), the LLR will grow positive on average with increasing N; a threshold can then be set and utilized for hypothesis testing (43). The average rate of growth of the LLR, termed the information gain, is equal to the Kullback–Leibler divergence between distinct h distributions: $D_{KL} = \left\langle\ln\left[Q_{s+\Delta s}(h|h')/Q_{s}(h|h')\right]\right\rangle_{s+\Delta s}$, so that 〈Ω〉 = (N - 1)DKL. For the exponential rate function λ(H - s) in [6], the information gain is

$$D_{KL} = \beta\,\Delta s + e^{-\beta\Delta s} - 1 \tag{16}$$

as detailed in SI Text, Information Gain. Thus, the information gain using the h data depends only on Δs and β but is independent of s, α, Λ, and τ. The uniformity over s is a unique property of the rate function in [6]. Because [16] is independent of all parameters but β and Δs, the result holds for a continuum of spike train behaviors, from those with strong AC currents (large Λ) that have ISI correlations and adaptation independence to the limiting case of a pure Poisson process with a nonexistent AC (Λ → 0): λ(-s) = αeβs. This Poisson process with rate λ(-s), which has no ISI correlations and maximal entropy, provides a useful comparison to AC systems and highlights an important aspect of how AC states represent information: Knowledge of the h activation states yields the equivalent information gain to that obtained from Poisson ISIs undergoing the same baseline change Δs; however, to achieve this gain, the Poisson model λ(-s) undergoes a much larger (exponential) change in firing rate λ[-(s + Δs)] because there is no additional activation of the AC to counteract the baseline change Δs.
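The Poisson limit of this statement is easy to check by simulation. The sketch below assumes the exponential rate, so that the Poisson model has rate α e^{βs}, and compares the average per-ISI log likelihood ratio for intervals drawn at baseline s + Δs with the expression βΔs + e^{-βΔs} - 1. That closed form is the Kullback–Leibler divergence between two exponential ISI densities whose rates differ by the factor e^{βΔs}; it is used here as an assumed form of the information gain, and the helper names, seed, and sample size are hypothetical.

```python
import math
import random

def info_gain(beta, ds):
    """Assumed closed form of the information gain: beta*ds + exp(-beta*ds) - 1 (nats per spike)."""
    x = beta * ds
    return x + math.exp(-x) - 1.0

def mean_llr_poisson(alpha, beta, s, ds, n, rng):
    """Average per-ISI log likelihood ratio for Poisson ISIs drawn at baseline s + ds."""
    lam0 = alpha * math.exp(beta * s)           # rate under baseline s
    lam1 = alpha * math.exp(beta * (s + ds))    # rate under baseline s + ds
    total = 0.0
    for _ in range(n):
        dt = rng.expovariate(lam1)
        # ln[p1(dt) / p0(dt)] for exponential densities with rates lam1 and lam0
        total += math.log(lam1 / lam0) - (lam1 - lam0) * dt
    return total / n

ALPHA, BETA, DS = 14.63, 30.0, 0.02    # Fig. 3 values; alpha in 1/s
rng = random.Random(3)
gain = info_gain(BETA, DS)
llr_means = [mean_llr_poisson(ALPHA, BETA, s, DS, 50000, rng) for s in (0.0, 0.3)]
print(gain, llr_means)
```

The simulated averages match the closed form at both baselines, which illustrates the stated independence from s and α even though the firing rates at the two baselines differ by several orders of magnitude.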

Alternatively, the Poisson equivalence property above ([16]) can be expressed by computing the proportional firing-rate change ΔfA/fA produced by Δs in the AC model and comparing the information gain with that of a Poisson spike train undergoing the equivalent firing-rate change. Fig. 3D plots DKL [16] divided by ΔfA/fA (red) versus s, for Δs = 0.02. The ratio DKLfA/ΔfA reaches a maximum at baseline levels that coincide with the point of maximal regularity of the Tn interval (CVZ) and with the transition to the non-adaptation-independence regime (Fig. 3B). This coincidence indicates that the maximal inhibitory effect of the AC on firing-rate change occurs at the baseline level where the AC can no longer produce a significant silent period. Conversely, for a Poisson model with the equivalent rate change, DKLfA/ΔfA is, to first order, inversely related to that of the AC system: as one increases, the other decreases (Fig. 3D; see SI Text, Poisson Information Gain for the calculation). Therefore, the signal detection afforded by fine-grained AC coding, per change in firing rate, is significantly enhanced for AC systems relative to ISI coding of both Poisson and similar renewal trains.
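
The first-order inverse relation can be illustrated with a short worked expansion. It assumes, as a simplification not spelled out in this section, that the Poisson comparison train has exponential ISI densities and that its rate changes by the same proportion x = ΔfA/fA as the AC model. The symbol x and the superscript "Poisson" are introduced here only for illustration; the full calculation is in SI Text, Poisson Information Gain.

```latex
\begin{aligned}
D_{\mathrm{KL}}^{\mathrm{Poisson}}(x)
  &= \ln(1+x) + \frac{1}{1+x} - 1
   = \frac{x^{2}}{2} + O\!\left(x^{3}\right), \\[4pt]
\frac{D_{\mathrm{KL}}^{\mathrm{Poisson}}(x)}{x}
  &\approx \frac{x}{2},
\qquad
\frac{D_{\mathrm{KL}}}{x} \;\propto\; \frac{1}{x}
\quad \text{(AC model, } D_{\mathrm{KL}} \text{ in [16] fixed by } \beta \text{ and } \Delta s\text{)}.
\end{aligned}
```

Under these assumptions the Poisson ratio grows in proportion to x, whereas the AC ratio falls as 1/x because its information gain does not depend on s. This is one way to read the statement that the two quantities move in opposite directions.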

We also plotted DKLfA/ΔfA for uncorrelated (renewal) ISI sequences drawn from the ISI distribution pISI(Δt) (Eq. 5; Fig. 3D, green). For low s values (s ≲ -0.04), the AC model has no ISI correlations (see Fig. 3B) and approximates a Poisson process, so all three models in Fig. 3D are approximately equivalent. As the input increases, DKLfA/ΔfA increases for both the adapting and renewal ISI models and remains approximately the same because there are still no significant ISI correlations in the AC model. At s ∼ 0.04 the renewal ISI model peaks (Fig. 3D) at the minimum CV point, whereas the adapting model diverges from it as significant negative ISI correlations allow for greater information gain and less firing-rate gain relative to the renewal models.

As stated in the previous section, AC states resolve the underlying ISIs better when the baseline level s is high enough to produce broad h distributions (Fig. 3A), thus eliciting the plateau MI region (Fig. 3C). In addition to resolvability, decoding is less computationally costly for independent AC states. In the nonindependent regime, the LLR [15] is computed by using the conditional distribution Q(h|h′), which requires a decoder to represent and compute multivariate data (h′ and h) in independent memory buffers. In contrast, in the independence regime, only univariate data must be represented for decoding because the AC dynamics “self-decorrelate” the ISI information. Therefore, sensory information is more economically decoded for baseline excitability levels below the transition to non-adaptation independence yet high enough for sufficient AC activation (-0.06 ≲ s ≲ 0.4).

Synaptic Decoding of AC States.

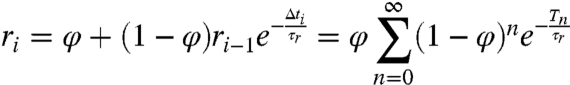

How then are the h data, a sequence of variables hidden from direct experimental observation, accessed by postsynaptic decoders? Spike-triggered exponential processes such as H(t) are ubiquitous in the nervous system. In particular, standard models of postsynaptic receptor binding dynamics are characterized as exponentially activating and decaying processes (31). By analogy with hi, we let ri be the peak activation of postsynaptic receptors, the first of possibly many stages of postsynaptic processing. We define φ to be the minimum activation level, a parameter similar to Λ, and τr to be the decay time scale. Together these define a map similar to that in [1]:

[17]

[18]

The r sequence is a function of the n-interval Tn sequence [17], so the statistical properties of Tn are transmitted postsynaptically. Moreover, if φ and τr are near Λ and τ, respectively, then ri will approximate hi, and with identical parameters there is equality: ri = hi [18]. This finding suggests an original experimental prediction: If synaptic kinetics are matched to presynaptic adaptation kinetics, then postsynaptic responses can exhibit the independence property and effectively represent the h-code information postsynaptically.
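
The matching argument can be illustrated with a small simulation. This is a sketch under a stated assumption: the spike-to-spike map is taken to be x[i+1] = floor + x[i]·exp(-Δt[i]/τ), a generic spike-triggered exponential process whose peak value never falls below `floor`. That form, the function name, the ISI statistics, and every numerical value are hypothetical and are not taken from [1], [17], or [18].

```python
import math
import random

def peak_activations(isis, floor, tau):
    """Iterate the assumed map x[i+1] = floor + x[i] * exp(-isi[i] / tau).
    `floor` plays the role of the minimum activation level (Lambda or phi),
    `tau` the decay time scale (tau or tau_r). Starts from x[0] = floor."""
    x = floor
    peaks = [x]
    for dt in isis:
        x = floor + x * math.exp(-dt / tau)
        peaks.append(x)
    return peaks

# Hypothetical spike train: 1,000 ISIs (in seconds) from a 50 Hz Poisson process.
rng = random.Random(1)
isis = [rng.expovariate(50.0) for _ in range(1000)]

# Presynaptic AC peak states with illustrative Lambda = 0.3 and tau = 20 ms.
h = peak_activations(isis, floor=0.3, tau=0.02)

# Postsynaptic peak activations: matched kinetics, then slightly mismatched kinetics.
r_matched = peak_activations(isis, floor=0.3, tau=0.02)
r_near = peak_activations(isis, floor=0.31, tau=0.021)

max_err_matched = max(abs(a - b) for a, b in zip(h, r_matched))
max_err_near = max(abs(a - b) for a, b in zip(h, r_near))
```

With identical parameters the two sequences coincide exactly, because both are the same deterministic function of the ISI sequence, while the mismatched case gives a nonzero deviation in `max_err_near`. How quickly that deviation degrades the independence property would depend on the actual model and is not addressed by this sketch.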

Discussion

We have discovered a stochastic regime of input-driven spiking models associated with correlated spiking, in which the activation states of the AC are probabilistically independent from spike to spike. Adaptation independence is met by the mild physiological condition that the minimum activation state is strong enough to stop spiking for a brief period. Independence occurs in a regime associated with perithreshold regular firing, and so we speculate that it is a common property of neurons (see SI Text, Fitting the ML Model).

Sensory afferent cells are challenged with representing information at the limits of physical resolution, in which noise fluctuations must be quickly disambiguated from baseline signals over a wide range of intensities. The adaptation-independent regime is important in this context because it enhances signal detection with minimal firing-rate change, by using a fine-grained code that utilizes both the ISIs and ISI correlations (11, 29, 36). This fine-grained coding contrasts with the previously reported regularization effect of ISI correlations on coarse-grained rate coding (2, 3) or Poisson coding commonly reported in central neurons (44). We have shown that AC activation states represent the same information gain that can be achieved with Poisson spike statistics but at a reduced firing-rate change.

It has been proposed that decoding of stimulus information is achieved through inferences on the basis of conditional probabilities from sequential data (e.g., Δti+1, conditioned on Δti, and so on) (1, 11, 20, 29, 30). This scheme requires a postsynaptic decoder to represent multivariate data in independent memory buffers and compute conditional probability distributions. It has been proposed, yet is unproven (11), that synaptic processing could perform such a computation or be used for Bayesian inference (45). However, we have shown that correlated spike trains can be represented independently causa sui by the adaptation dynamics, providing a simple biophysical means of information representation that does not require more costly multivariate decoding.

We have also shown that simple postsynaptic dynamics can represent the AC states. This result suggests a testable experimental prediction: synaptic activation kinetics can decorrelate the correlated ISI sequence, and synaptic and dendritic nonlinearities (46) could decode the AC activation.

Methods

All numerical computations were performed on a Macintosh computer using MATLAB software. See SI Text, Notes to Numerical Computations for specific methods used in Figs. 1–3.

ACKNOWLEDGMENTS.

The authors acknowledge funding from Canadian Institutes of Health Research Grant 6027 and Natural Sciences and Engineering Research Council.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1008587107/-/DCSupplemental.

References

- 1. Ratnam R, Nelson ME. Nonrenewal statistics of electrosensory afferent spike trains: Implications for the detection of weak sensory signals. J Neurosci. 2000;20:6672–6683. doi: 10.1523/JNEUROSCI.20-17-06672.2000.

- 2. Chacron MJ, Longtin A, Maler L. Negative interspike interval correlations increase the neuronal capacity for encoding time-dependent stimuli. J Neurosci. 2001;21:5328–5343. doi: 10.1523/JNEUROSCI.21-14-05328.2001.

- 3. Sadeghi SG, Chacron MJ, Taylor MC, Cullen KE. Neural variability, detection thresholds, and information transmission in the vestibular system. J Neurosci. 2007;27:771–781. doi: 10.1523/JNEUROSCI.4690-06.2007.

- 4. Goldberg JM, Adrian HO, Smith FD. Response of neurons of the superior olivary complex of the cat to acoustic stimuli of long duration. J Neurophysiol. 1964;27:706–749. doi: 10.1152/jn.1964.27.4.706.

- 5. Yamamoto M, Nakahama H. Stochastic properties of spontaneous unit discharges in somatosensory cortex and mesencephalic reticular formation during sleep-waking states. J Neurophysiol. 1983;49:1182–1198. doi: 10.1152/jn.1983.49.5.1182.

- 6. Neiman AB, Russell DF. Two distinct types of noisy oscillators in electroreceptors of paddlefish. J Neurophysiol. 2004;92:492–509. doi: 10.1152/jn.00742.2003.

- 7. Farkhooi F, Strube-Bloss MF, Nawrot MP. Serial correlation in neural spike trains: Experimental evidence, stochastic modelling, and single neuron variability. Phys Rev E. 2009;79:021905. doi: 10.1103/PhysRevE.79.021905.

- 8. Chacron M, Longtin A, St.-Hilaire M, Maler L. Suprathreshold stochastic firing dynamics with memory in p-type electroreceptors. Phys Rev Lett. 2000;85:1576–1579. doi: 10.1103/PhysRevLett.85.1576.

- 9. Liu YH, Wang XJ. Spike-frequency adaptation of a generalized leaky integrate-and-fire model neuron. J Comput Neurosci. 2001;10:25–45. doi: 10.1023/a:1008916026143.

- 10. Brandman R, Nelson ME. A simple model of long-term spike train regularization. Neural Comput. 2002;14:1575–1597. doi: 10.1162/08997660260028629.

- 11. Lüdke N, Nelson ME. Short-term synaptic plasticity can enhance weak signal detectability in nonrenewal spike trains. Neural Comput. 2006;18:2879–2916. doi: 10.1162/neco.2006.18.12.2879.

- 12. Muller E, Buesing L, Schemmel J, Meier K. Spike-frequency adapting neural ensembles: beyond mean adaptation and renewal theories. Neural Comput. 2007;19:2958–3010. doi: 10.1162/neco.2007.19.11.2958.

- 13. Prescott SA, Sejnowski TJ. Spike-rate coding and spike-time coding are affected oppositely by different adaptation mechanisms. J Neurosci. 2008;28:13649–13661. doi: 10.1523/JNEUROSCI.1792-08.2008.

- 14. Schwalger T, Lindner B. Higher-order statistics of a bistable system driven by dichotomous colored noise. Phys Rev E. 2008;78:021121. doi: 10.1103/PhysRevE.78.021121.

- 15. Bialek W, Rieke F, de Ruyter van Steveninck RR, Warland D. Reading a neural code. Science. 1991;252:1854–1857. doi: 10.1126/science.2063199.

- 16. Koch K, et al. Efficiency of information transmission by retinal ganglion cells. Curr Biol. 2004;14:1523–1530. doi: 10.1016/j.cub.2004.08.060.

- 17. Lundstrom BN, Fairhall A. Decoding stimulus variance from a distributional neural code of interspike intervals. J Neurosci. 2006;26:9030–9037. doi: 10.1523/JNEUROSCI.0225-06.2006.

- 18. Reinagel P, Reid RC. Temporal coding of visual information in the thalamus. J Neurosci. 2000;20:5392–5400. doi: 10.1523/JNEUROSCI.20-14-05392.2000.

- 19. Panzeri S, Petersen RS, Schultz S, Lebedev M, Diamond ME. The role of spike timing in the coding of stimulus location in rat somatosensory cortex. Neuron. 2001;29:769–777. doi: 10.1016/s0896-6273(01)00251-3.

- 20. Strong SP, Koberle R, de Ruyter van Steveninck RR, Bialek W. Entropy and information in neural spike trains. Phys Rev Lett. 1998;80:197–200.

- 21. Borst A, Theunissen FE. Information theory and neural coding. Nat Neurosci. 1999;2:947–957. doi: 10.1038/14731.

- 22. Thomson EE, Kristan WB. Quantifying stimulus discriminability: a comparison of information theory and ideal observer analysis. Neural Comput. 2005;17:741–778. doi: 10.1162/0899766053429435.

- 23. Fairhall A, Lewen GD, Bialek W, de Ruyter van Steveninck R. Efficiency and ambiguity in an adaptive neural code. Nature. 2001;412:787–792. doi: 10.1038/35090500.

- 24. Fellous JM, Tiesinga PHE, Thomas PJ, Sejnowski TJ. Discovering spike patterns in neuronal responses. J Neurosci. 2004;24:2989–3001. doi: 10.1523/JNEUROSCI.4649-03.2004.

- 25. Middleton JW, Chacron MJ, Lindner B, Longtin A. Firing statistics of a neuron model driven by long-range correlated noise. Phys Rev E. 2003;68:021920. doi: 10.1103/PhysRevE.68.021920.

- 26. Brenner N, Bialek W, de Ruyter van Steveninck R. Adaptive rescaling maximizes information transmission. Neuron. 2000;26:695–702. doi: 10.1016/s0896-6273(00)81205-2.

- 27. Theunissen F, Miller JP. Temporal encoding in nervous systems: A rigorous definition. J Comput Neurosci. 1995;2:149–162. doi: 10.1007/BF00961885.

- 28. Butts DA, et al. Temporal precision in the neural code and the timescales of natural vision. Nature. 2007;449:92–95. doi: 10.1038/nature06105.

- 29. Jacobs AL, et al. Ruling out and ruling in neural codes. Proc Natl Acad Sci USA. 2009;106:5936–5941. doi: 10.1073/pnas.0900573106.

- 30. Paninski L, Pillow J, Lewi J. Statistical models for neural encoding, decoding, and optimal stimulus design. Prog Brain Res. 2007;165:493–507. doi: 10.1016/S0079-6123(06)65031-0.

- 31. Hille B. Ionic Channels of Excitable Membranes. 2nd Ed. Sunderland, MA: Sinauer; 1984.

- 32. Storm JF. Temporal integration by a slowly inactivating K+ current in hippocampal neurons. Nature. 1988;336:379–381. doi: 10.1038/336379a0.

- 33. Sobel EC, Tank DW. In vivo Ca2+ dynamics in a cricket auditory neuron: An example of chemical computation. Science. 1994;263:823–825. doi: 10.1126/science.263.5148.823.

- 34. Wang XJ. Calcium coding and adaptive temporal computation in cortical pyramidal neurons. J Neurophysiol. 1998;79:1549–1566. doi: 10.1152/jn.1998.79.3.1549.

- 35. Wang XJ, Liu Y, Sanchez-Vives MV, McCormick DA. Adaptation and temporal decorrelation by single neurons in the primary visual cortex. J Neurophysiol. 2003;89:3279–3293. doi: 10.1152/jn.00242.2003.

- 36. Kara P, Reinagel P, Reid RC. Low response variability in simultaneously recorded retinal, thalamic, and cortical neurons. Neuron. 2000;27:635–646. doi: 10.1016/s0896-6273(00)00072-6.

- 37. Morris C, Lecar H. Voltage oscillations in the barnacle giant muscle fiber. Biophys J. 1981;35:193–213. doi: 10.1016/S0006-3495(81)84782-0.

- 38. Rinzel J, Ermentrout B. In: Methods of Neural Modelling. 2nd Ed. Koch C, Segev I, editors. Cambridge, MA: Massachusetts Institute of Technology; 1998.

- 39. Lasota A, Mackey MC. Chaos, Fractals, and Noise: Stochastic Aspects of Dynamics. 2nd Ed. New York: Springer-Verlag; 1994.

- 40. Nesse WH, Del Negro CA, Bressloff PC. Oscillation regularity in noise-driven excitable systems with multi-time-scale adaptation. Phys Rev Lett. 2008;101:088101. doi: 10.1103/PhysRevLett.101.088101.

- 41. Plesser HE, Gerstner W. Noise in integrate-and-fire neurons: From stochastic input to escape rates. Neural Comput. 2000;12:367–384. doi: 10.1162/089976600300015835.

- 42. Lindner B, Schimansky-Geier L, Longtin A. Maximizing spike train coherence or incoherence in the leaky integrate-and-fire model. Phys Rev E. 2002;66:031916. doi: 10.1103/PhysRevE.66.031916.

- 43. Cover T, Thomas J. Elements of Information Theory. New York: Wiley-Interscience; 1991.

- 44. Ma WJ, Beck JM, Latham PE, Pouget A. Bayesian inference with probabilistic population codes. Nat Neurosci. 2006;9:1432–1438. doi: 10.1038/nn1790.

- 45. Pfister JP, Dayan P, Lengyel M. Know thy neighbour: A normative theory of synaptic depression. Adv Neural Inf Process Syst. 2009;22:1464–1472.

- 46. Polsky A, Mel B, Schiller J. Encoding and decoding bursts by NMDA spikes in basal dendrites of layer 5 pyramidal neurons. J Neurosci. 2009;29:11891–11903. doi: 10.1523/JNEUROSCI.5250-08.2009.
