Abstract

Recent research in neuroeconomics has demonstrated that the reinforcement learning model of reward learning captures the patterns of both behavioral performance and neural responses during a range of economic decision-making tasks. However, this powerful theoretical model has its limits. Trial-and-error is only one of the means by which individuals can learn the value associated with different decision options. Humans have also developed efficient, symbolic means of communication that allow learning without the need to commit multiple errors across trials. In the present study, we observed that instructed knowledge of cue-reward probabilities improves behavioral performance and diminishes reinforcement learning-related blood-oxygen level-dependent (BOLD) responses to feedback in the nucleus accumbens, ventromedial prefrontal cortex, and hippocampal complex. The decrease in BOLD responses in these brain regions to reward-feedback signals was functionally correlated with activation of the dorsolateral prefrontal cortex (DLPFC). These results suggest that when learning action values, participants use the DLPFC to dynamically adjust outcome responses in valuation regions depending on the usefulness of action-outcome information.

Keywords: functional MRI, striatum, instruction, computational modeling, prediction error

Maximizing reward obtained over time can be a daunting challenge for any organism (1). Without explicit instruction, an animal can only develop and fine-tune its reward-harvesting strategy through trial and error. Reinforcement learning (RL) theory has formalized this intuition and linked the prediction error, the ongoing difference between experienced and expected reward, to phasic changes in the activity of dopaminergic neurons (2). Under this framework, prediction error signals are thought to be broadcast to valuation structures, such as the striatum and ventromedial prefrontal cortex (vmPFC), where they direct learning and are integrated with other streams of information to facilitate decision making (3–6).

Recent research in neuroeconomics has demonstrated that this theoretical model of trial-and-error reward learning captures the patterns of both behavioral performance and the blood-oxygen level-dependent (BOLD) signals during a range of economic decision-making tasks (3, 7–10), demonstrating important cross-species similarities in the mechanisms of reward learning (11). As a result, RL models have become a primary means of characterizing neural responses in neuroeconomics. However, this powerful theoretical model has its limits. Trial and error is only one of the means by which individuals can learn the values associated with different decision options. Humans have also developed efficient, symbolic means of communication, namely language, that allow information about value to be shared socially without the need to commit multiple errors across trials. Little is known about how this explicit, symbolic knowledge infiltrates the valuation structures mentioned above and influences action selection, or how the brain’s implementation of the RL algorithm changes in the presence of instructed knowledge.

To address these questions, we used functional MRI (fMRI) together with a probabilistic reward task (Fig. 1) to assess the relative contributions of trial-and-error feedback and instructed knowledge to choice selection (12, 13). We designed an experiment with two sessions. In the “feedback” session, participants’ choices could be based only on win/loss feedback, whereas in the “instructed” session participants could also use the correct cue-reward probability information provided by the experimenter to guide their choices. We hypothesized that (i) RL is a robust algorithm for explaining and predicting choice behavior and BOLD responses in an environment where trial-and-error feedback is the only information available to guide learning and influence choices (13–17), and (ii) when instructed knowledge about reward probabilities is also available, participants use this extra information to improve performance by modulating the degree to which RL algorithms are engaged. We also explored which brain systems may implement instructed knowledge by modulating BOLD responses in brain areas typically implicated in RL, valuation, and choice selection (13, 18–26).

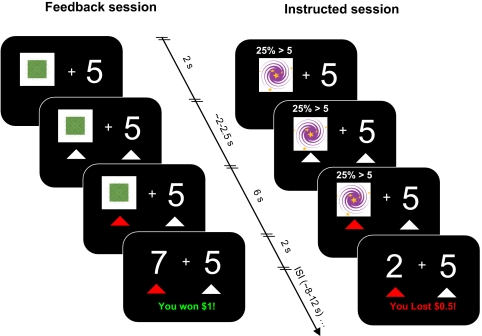

Fig. 1.

Experimental design. In the feedback session, the number 5 and a specific visual cue were displayed on the screen. In the instructed session, additional probability information was displayed on top of the visual cue.

Results

Behavioral Results.

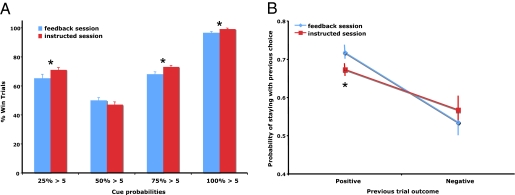

For both sessions, the frequency of win trials varied across the four probability conditions (F(3,76) > 90, P < 0.0001). A two-way repeated-measures ANOVA was performed with probability condition (25, 50, 75, and 100%) and session (feedback and instructed) as within-participant factors. As expected, participants performed better, as indexed by the frequency of win trials, in the instructed session (F(1,152) = 5.8, P < 0.02) (Fig. 2A). Post hoc t tests showed that this difference in performance was present in the 25, 75, and 100% probability conditions (P < 0.05), but not in the 50% condition (P = 0.16) (Fig. 2A). We then examined how the most recent outcome influenced participants’ subsequent choices. For both sessions, we calculated how likely participants were to stay with their previous choice when the outcome of that choice was positive (win) or negative (loss) (Fig. 2B). A two-way ANOVA with previous-trial outcome (win or loss) and session (feedback or instructed) as factors revealed a main effect of outcome type: participants were more likely to repeat their previous choice when its outcome was positive (F(1,76) = 26.9, P < 0.001). Post hoc analysis further showed that participants’ subsequent choices were more influenced by positive outcomes in the feedback session than in the instructed session (P < 0.05). A similar trend was observed for negative outcomes, but it was not significant (P = 0.18) (Fig. 2B). Overall, loss trials made up less than 30% of all trials, which reduced statistical power for analyses of loss relative to win trials.

Fig. 2.

Behavioral results for both sessions. (A) Percentage of win trials (±SEM) for the different visual cue probabilities for both sessions. (B) The probability of staying with the previous choice (±SEM) given its outcome (positive or negative) for both sessions. (*, significant difference between sessions, P < 0.05).
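The stay-probability analysis described above can be sketched in a few lines of Python. This is an illustration only: it assumes that “previous choice” means the previous choice made for the same cue, and the function name, response labels, and input format are hypothetical rather than taken from the original analysis code.

```python
def stay_probabilities(cues, choices, wins):
    """Estimate P(stay | previous win) and P(stay | previous loss).

    cues:    cue identifier shown on each trial, in presentation order.
    choices: response on each trial (hypothetical labels, e.g. "higher"/"lower").
    wins:    True if that trial was a win, False if it was a loss.
    "Previous" is taken to mean the last trial with the same cue (an assumption).
    """
    last = {}                            # cue -> (previous choice, previous win)
    stays = {True: 0, False: 0}
    totals = {True: 0, False: 0}
    for cue, choice, win in zip(cues, choices, wins):
        if cue in last:
            prev_choice, prev_win = last[cue]
            totals[prev_win] += 1
            if choice == prev_choice:    # participant repeated the earlier choice
                stays[prev_win] += 1
        last[cue] = (choice, win)

    def rate(key):
        return stays[key] / totals[key] if totals[key] else float("nan")

    return {"win": rate(True), "loss": rate(False)}
```

Comparing the returned "win" and "loss" values between the feedback and instructed sessions corresponds to the contrast plotted in Fig. 2B.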

We fitted Q-learning models to participants’ choice behavior in both the feedback and instructed sessions using maximum likelihood estimation, and compared several prominent RL models to determine which best fit the behavioral data. We considered two popular models: an RL model with a single learning rate for both positive and negative prediction errors (PEs) (δ+ and δ−), and an RL model with separate learning rates for positive and negative PEs (δ+ and δ−). In the instructed session, we also included an RL model that assigns a “confirmation bias” to outcomes that match the instructions (27, 28) (SI Appendix, Tables S1 and S2). In the feedback session, the simple RL model with a single learning rate (α) for both positive and negative PEs tended to fit participants’ behavior better. However, a Q-learning model with two different learning rates (α+ and α−) for positive and negative PEs (δ+ and δ−) best explained participants’ behavior in the instructed session (implementation of model fitting is detailed in SI Appendix). McFadden’s pseudo R-square was 0.50 for the feedback session and 0.61 for the instructed session (SI Appendix, Table S1). A single learning rate of 0.24 was estimated for the feedback session, whereas in the instructed session the learning rate for positive PEs (α+) was 0.05 and that for negative PEs (α−) was 0 (SI Appendix, Tables S1 and S2). This significant difference in learning rates indicates that PEs were incorporated less efficiently into action-value updating in the instructed session, especially when the outcome was worse than participants expected. These results suggest that participants’ actions were largely governed by the a priori action values instructed by the experimenter, as reflected in the initial Q values associated with the different stimuli (α− = 0) (SI Appendix, Table S2).
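To make the model comparison concrete, the sketch below computes the negative log-likelihood of a choice sequence under a softmax Q-learning model with separate learning rates for positive and negative PEs; setting α+ = α− recovers the single-learning-rate model. It is a minimal illustration under stated assumptions, not the fitting code described in SI Appendix: rewards are assumed to be coded 1 (win) and 0 (loss), and the response labels, the inverse temperature β, the default initial value of 0.5, and all function names are hypothetical. A coarse grid search stands in for a proper numerical optimizer, and instructed initial values can be supplied through `q_init`.

```python
import math

ACTIONS = ("higher", "lower")  # hypothetical labels for the two responses


def negative_log_likelihood(alpha_pos, alpha_neg, beta, trials,
                            q_init=None, default_q=0.5):
    """Negative log-likelihood of choices under a softmax Q-learning model.

    trials: list of (cue, choice, reward), reward = 1 for a win, 0 for a loss.
    q_init: optional {(cue, action): initial Q}; otherwise default_q is used.
    """
    q = dict(q_init or {})
    nll = 0.0
    for cue, choice, reward in trials:
        values = [q.get((cue, a), default_q) for a in ACTIONS]
        # Softmax over the two actions (subtract the max for numerical stability).
        top = max(values)
        weights = [math.exp(beta * (v - top)) for v in values]
        p_choice = weights[ACTIONS.index(choice)] / sum(weights)
        nll -= math.log(p_choice)
        # Prediction error for the chosen action; asymmetric learning rates.
        q_chosen = q.get((cue, choice), default_q)
        delta = reward - q_chosen
        alpha = alpha_pos if delta > 0 else alpha_neg
        q[(cue, choice)] = q_chosen + alpha * delta
    return nll


def fit_by_grid(trials, rates=(0.0, 0.05, 0.1, 0.25, 0.5),
                betas=(1.0, 3.0, 10.0)):
    """Coarse grid-search MLE; returns (nll, alpha_pos, alpha_neg, beta)."""
    best = None
    for a_pos in rates:
        for a_neg in rates:
            for beta in betas:
                nll = negative_log_likelihood(a_pos, a_neg, beta, trials)
                if best is None or nll < best[0]:
                    best = (nll, a_pos, a_neg, beta)
    return best
```

With α− = 0, as estimated for the instructed session, losses leave the chosen value at its initial level, so choices stay anchored to whatever initial values are supplied. In practice the same function would be minimized with a continuous optimizer such as `scipy.optimize.minimize`.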

Functional MRI Results.

RL model predicts BOLD signals in the feedback session.

Our behavioral results suggest that an RL model captures participants’ performance in the feedback session but does not adequately describe learning in the instructed session. To explore whether a similar pattern was reflected in BOLD responses, we constructed a general linear model (GLM) with PE regressors generated from the best-fitting Q-learning models for both sessions (SI Appendix, Tables S1 and S2) and investigated the neural correlates of PE in the feedback and instructed sessions (Fig. 3A). Ventral striatum BOLD responses were significantly correlated with PE signals in the feedback session [P < 0.05, corrected; peak Montreal Neurological Institute (MNI) coordinate (−27 3 0), z = 3.59] (Fig. 3A and SI Appendix, Table S2). No such correlation was observed in the instructed session, even at a more relaxed threshold (P < 0.01, uncorrected). A direct comparison of PE-related BOLD responses between the feedback and instructed sessions further confirmed that the striatum’s involvement in encoding PEs differed between the two sessions (SI Appendix, Fig. S1). Because PE and monetary outcome tend to be correlated, the monetary outcome regressor was included in the GLM for both sessions to separate PE-related BOLD responses from outcome-related ones.
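A GLM of the kind described above can be sketched as a design matrix with an outcome-onset regressor, a win-versus-loss regressor, and a PE parametric regressor, each convolved with a hemodynamic response function (HRF) and fit by ordinary least squares. This is a simplified, hypothetical version: the double-gamma HRF only approximates SPM’s canonical shape, the parametric regressors are mean-centred but not serially orthogonalized, and the function names are illustrative. Because the outcome and PE regressors enter the model together, the PE beta reflects PE-related variance not explained by the outcome regressor.

```python
import math

import numpy as np


def canonical_hrf(tr, length=32.0):
    """Double-gamma HRF sampled every `tr` seconds (SPM-like shape, unit sum)."""
    t = np.arange(0.0, length, tr)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)          # gamma pdf, shape 6
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)  # gamma pdf, shape 16
    hrf = peak - undershoot / 6.0
    return hrf / hrf.sum()


def convolved_regressor(onsets, amplitudes, n_scans, tr):
    """Place scaled stick functions at outcome onsets and convolve with the HRF."""
    sticks = np.zeros(n_scans)
    for onset, amp in zip(onsets, amplitudes):
        sticks[int(round(onset / tr))] += amp
    return np.convolve(sticks, canonical_hrf(tr))[:n_scans]


def design_matrix(onsets, won, pe, n_scans, tr):
    """Columns: outcome onset, win vs. loss, PE (both mean-centred), intercept."""
    won = np.asarray(won, dtype=float)
    pe = np.asarray(pe, dtype=float)
    return np.column_stack([
        convolved_regressor(onsets, np.ones_like(won), n_scans, tr),
        convolved_regressor(onsets, won - won.mean(), n_scans, tr),
        convolved_regressor(onsets, pe - pe.mean(), n_scans, tr),
        np.ones(n_scans),
    ])


def fit_glm(X, y):
    """Ordinary least-squares betas for one voxel or ROI time series."""
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas
```

In a whole-brain analysis, `fit_glm` would be applied to every voxel and the PE beta taken to the group level.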

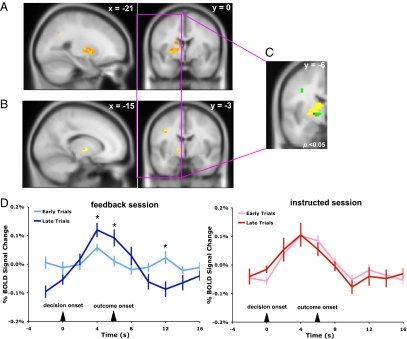

Fig. 3.

BOLD responses for prediction errors in both sessions. (A) Activity of the striatum showed significant correlation with the PE signal in the feedback session (P < 0.05, corrected). No such correlation was observed in these structures in the instructed session, even at P < 0.01, uncorrected. (B) A two-way ANOVA showed an interaction between session (feedback and instructed) and learning phase (early and late) in the left striatum. (C) Striatal activation identified in the PE (A, yellow) and session × learning phase interaction (B, green) analyses, and the overlapping region (red). (D) BOLD response patterns in the overlapping region for the early and late phases of learning in the feedback and instructed sessions (*, time points with significantly different BOLD responses between early and late learning phases, P < 0.05; ± SEM).

As a learning signal, PE has a characteristic temporal profile. Early in learning, PE signals respond to the onset of outcome delivery, but as learning progresses the PE signal shifts toward the onset of the cue/decision, accompanied by a diminished response to the actual outcome. To further test whether striatal BOLD responses encode PEs in the feedback session, we conducted an independent two-way ANOVA to identify brain regions whose activity was modulated by the interaction between session (instructed vs. feedback) and learning phase (early vs. late learning). We hypothesized that if an RL mechanism was engaged differentially in the instructed and feedback sessions, we should observe an interaction between the session and learning phase factors. Indeed, this analysis yielded brain regions similar to those identified by the PE regression analysis [P < 0.05, small volume corrected for 343 surrounding voxels; peak MNI coordinate (−15 −6 0), F(1,304) = 15.55, z = 3.72] (Fig. 3B). A further region of interest (ROI) time-series analysis in the region of overlap within the ventral striatum (Fig. 3C) revealed a pattern of BOLD responses consistent with a PE learning signal in the feedback session: in early trials, striatal activation peaked at the onset of the outcome, and as learning progressed, the peak shifted toward the onset of the decision (Fig. 3D, Left). This characteristic PE response pattern was absent in the instructed session (Fig. 3D, Right).
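The shift of the PE from outcome to cue can be reproduced with a minimal temporal-difference sketch. It assumes a single cue that is always rewarded (as for the 100% cue), no discounting, and a cue whose onset is itself unpredicted, so the PE at cue onset equals the learned cue value while the PE at outcome equals reward minus that value. The function name and learning rate are illustrative.

```python
def td_prediction_errors(n_trials, alpha=0.2, reward=1.0):
    """Return (cue PEs, outcome PEs) per trial for an always-rewarded cue.

    Assumes no discounting and a zero-value baseline before cue onset, so the
    PE at the cue is V(cue) - 0 and the PE at the outcome is reward - V(cue).
    """
    v = 0.0
    cue_pes, outcome_pes = [], []
    for _ in range(n_trials):
        cue_pes.append(v)            # jump from the zero baseline to V(cue)
        delta = reward - v           # surprise at outcome delivery
        outcome_pes.append(delta)
        v += alpha * delta           # Rescorla-Wagner / TD value update
    return cue_pes, outcome_pes
```

Early trials produce a large outcome PE and a near-zero cue PE; with learning, the cue PE grows while the outcome PE shrinks, the signature that the ROI time-series analysis looks for in the striatum.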

Reduced BOLD responses to outcomes in the instructed session.

Motivated by the finding that previous trial outcomes influenced participants’ choices differently in the two sessions (Fig. 2B), we examined BOLD responses as participants processed monetary outcomes (win or loss) in both sessions. A general contrast of win over loss at the onset of outcome revelation across both sessions revealed significant activation in the nucleus accumbens (NAc) [peak MNI coordinate (−2 12 −10), z = 6.12] and vmPFC [peak MNI coordinate (−4 42 −10), z = 5.94; P < 0.05 corrected] (Fig. 4A), regions previously linked to the brain’s reward valuation system (25, 29–34) (SI Appendix, Table S3). In addition, we observed bilateral activation in the hippocampal complex [peak MNI coordinates (−18 −18 −20), z = 4.31 and (28 −18 −20), z = 3.61], centered on the perirhinal cortex, a region that has been implicated in processing item-reward associations (35–37) (Fig. 4A). Similar outcome-related activation patterns were observed when the prediction error regressor was included in the GLM.

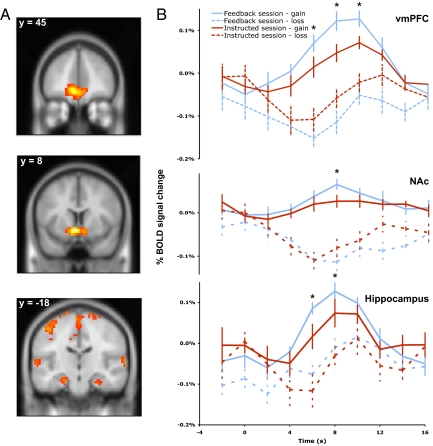

Fig. 4.

BOLD responses discriminating win and loss for both sessions. (A) A whole-brain analysis revealed greater activation in the NAc, vmPFC, and bilateral hippocampal complex for win than loss trials across both sessions (P < 0.05, corrected). (B) BOLD time course of activation in the NAc, vmPFC, and bilateral hippocampal complex for win and loss trials in the feedback and instructed sessions (*, significant difference of time points near activation peaks, P < 0.05; ± SEM).

ROI analyses of these brain regions showed that overall BOLD responses to outcomes (win minus loss) were smaller in the instructed session than in the feedback session. Examining win and loss trials separately revealed diminished activation to monetary gains in the instructed session in all three regions (P < 0.05 at the activation peaks). Although a similar pattern was observed for loss trials, loss-evoked responses did not differ significantly between the two sessions, perhaps because of the reduced statistical power resulting from fewer loss trials overall (Fig. 4B).
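The session comparison at the activation peak amounts to a within-participant test. Assuming a paired t test on per-participant peak ROI responses (the specific test is not named in this section), the statistic can be sketched as follows; the function name is illustrative, and `scipy.stats.ttest_rel` computes the same statistic together with a P value.

```python
import math
from statistics import mean, stdev


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b.

    a, b: per-participant values, e.g. peak win-trial BOLD in the feedback
    and instructed sessions for the same ROI (hypothetical inputs).
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1
```

With only a minority of loss trials per participant, the per-participant loss estimates are noisier, which inflates the standard deviation of the differences and lowers t, consistent with the power limitation noted above.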

Higher dorsolateral prefrontal cortex activity paralleled better performance in the instructed session.

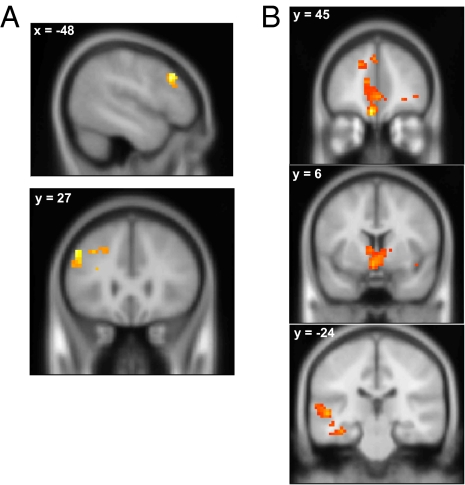

RL model fitting of the behavioral data suggested that participants were less influenced by monetary outcomes in the instructed session, most likely because of the strong a priori instructed knowledge of the cue-reward probabilities. Accordingly, participants achieved better performance in the instructed session. This reliance on instructed knowledge reduced BOLD responses in regions implicated in reward learning, suggesting that instructed knowledge enables the brain to diminish the impact of outcome feedback on decision making. If this account is correct, there should also be a corresponding increase in activation in brain regions that mediate the implementation of instructed knowledge. To determine which brain regions may enable the effects of instructed knowledge on trial-and-error reward learning, we conducted an exploratory analysis to locate brain areas where activation to monetary outcomes was greater in the instructed than in the feedback session. We focused on win trials because our previous analyses found significantly diminished BOLD responses to wins in reward learning (NAc and hippocampal complex) and valuation (vmPFC) regions in the instructed session. This analysis revealed that the left dorsolateral prefrontal cortex (DLPFC) [P < 0.05 corrected, peak MNI coordinate (−48 24 33), z = 3.98] showed a greater BOLD response to win outcomes during the instructed session (Fig. 5A and SI Appendix, Table S5).

Fig. 5.

Left DLPFC activity showed negative functional connectivity to brain structures related to reward valuation. (A) Left DLPFC showed relatively greater activation to monetary gains in the instructed than the feedback session (P < 0.05, corrected). (B) PPI analysis showing regions negatively correlated with the left DLPFC on win trials in the instructed session (P < 0.05, corrected) but not in the feedback session (P < 0.01, uncorrected) (SI Appendix, Fig. S2).

Functional connectivity between DLPFC and reward-related brain structures.

The DLPFC has previously been implicated in decision-making and emotion regulation tasks that require the top-down modulation of valuation regions (25, 26, 34). To determine whether the left DLPFC acted as a cognitive modulator of reward learning regions in the presence of instructed knowledge, we conducted a psychophysiological interaction (PPI) analysis using the peak voxels in the left DLPFC (Fig. 5A) as the seed region, and tested which brain areas showed significant functional connectivity on win vs. loss trials. We found an inverse, win-specific functional connectivity between the DLPFC and the NAc [peak MNI coordinate (−3 6 −12), z = 3.17], vmPFC [peak MNI coordinate (−6 48 −18), z = 4.86], and left parahippocampal gyrus [peak MNI coordinate (−36 −24 −18), z = 3.77] only in the instructed session (P < 0.05 corrected) (Fig. 5B and SI Appendix, Table S6). Similar results were obtained by directly comparing the functional connectivity between the DLPFC and these reward-related areas across the feedback and instructed sessions (SI Appendix, Fig. S2). This result is particularly interesting because the valuation structures whose BOLD responses were negatively correlated with the left DLPFC when reliable instructed knowledge was available to guide choices (vmPFC, NAc, and hippocampal complex) overlap with the regions showing diminished responses to reward outcomes in the instructed session (Figs. 4 and 5).
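The core of a PPI analysis is a regression that includes the seed time course, the psychological (task) regressor, and their product. The sketch below is a simplified version that forms the interaction directly on the BOLD signal; standard implementations (e.g., in SPM) first deconvolve the seed signal to an estimated neural time course, multiply, and then reconvolve, which this sketch omits. The ±1 coding of win and loss scans and the function name are assumptions.

```python
import numpy as np


def ppi_betas(seed, psych, target):
    """OLS betas for [seed, psych, seed x psych, intercept] predicting `target`.

    seed:   BOLD time series from the seed ROI (e.g., left DLPFC).
    psych:  task regressor in scan units, e.g. +1 after wins, -1 after losses,
            0 elsewhere (assumed coding).
    target: BOLD time series of a candidate region (e.g., NAc).
    The seed-target slope is betas[0] + betas[2] on win scans and
    betas[0] - betas[2] on loss scans, so a negative interaction beta means
    the coupling is more negative on win scans than on loss scans.
    """
    seed = np.asarray(seed, dtype=float)
    psych = np.asarray(psych, dtype=float)
    seed_c = seed - seed.mean()      # centre so the interaction is interpretable
    X = np.column_stack([seed_c, psych, seed_c * psych, np.ones_like(seed)])
    betas, *_ = np.linalg.lstsq(X, np.asarray(target, dtype=float), rcond=None)
    return betas
```

Repeating the fit at every voxel and testing the interaction beta across participants yields maps like Fig. 5B.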

Discussion

Optimal decision making requires the brain to dynamically allocate control among different types of information for action selection (17, 38, 39). When feedback is the only source of information, choice-dependent outcomes can be evaluated and fed back to valuation systems to provide a better approximation of action values and guide individuals toward choices that maximize accumulated reward in the long run. The RL algorithm provides a formal framework for incorporating feedback information to facilitate learning and decision making (1, 2, 40–43). Consistent with this previous research, in the feedback session of our task we fit an RL model to participants’ behavioral data and, using two independent approaches (Fig. 3 A and B), located the neural correlates of PE in the ventral striatum (Fig. 3 and SI Appendix, Table S1). Additional ROI time-series analyses in the feedback session further revealed that striatal BOLD responses were sensitive to the onset of both decisions and outcomes early in learning, but migrated to the onset of the decision as learning progressed. This pattern is consistent with the characteristic profile of PE learning signals (2) and was absent in the instructed session (Fig. 3D).

In contrast, when correct instructed knowledge about the cue-reward probabilities was available, participants used this information to achieve better performance, and the RL model was less successful in accounting for participants’ behavior and BOLD activation patterns (Fig. 3D and SI Appendix, Fig. S1). Previous research has formalized the intuition of instructional control with a “confirmation bias” model that amplifies the effect of positive PEs and diminishes the effect of negative PEs when participants make choices based on instructed information (27, 28). We compared the performance of different RL models [including the confirmation-bias model suggested by Doll et al. (27)], and, interestingly, the RL model with different learning rates (α+ and α−) for positive and negative PEs (δ+ and δ−) tended to fit participants’ behavior best in the instructed session (see SI Appendix for technical details). Using the PEs generated from this best-fitting RL model, our fMRI analysis did not reveal a correlation between the PE signal and striatal BOLD responses in the instructed session, even at P < 0.01, uncorrected. Taken together, these results suggest that participants might rely less on PE signals for action-value updating when symbolic, instructed knowledge of the reward probabilities is available. Consistent with this hypothesis, both behavior (Fig. 2B) and BOLD responses were less influenced by outcomes in the instructed session. Indeed, we observed relatively smaller activations in brain areas (NAc, vmPFC, and hippocampal complex) typically associated with reward learning and valuation (11, 25, 30, 31, 33, 35–37, 44–50) when participants were rewarded for their choices in the instructed session (Fig. 4B and SI Appendix, Table S4). These findings suggest that the brain assigns less weight to actual outcomes when other reliable sources of information (instructed knowledge) about cue-reward probabilities and optimal choice strategies are available.

The mechanism by which participants dynamically adjust their reliance on outcome information when symbolic knowledge of the reward probabilities is available was revealed in an exploratory analysis that showed higher left DLPFC activity when participants experienced monetary wins in the instructed relative to the feedback session (Fig. 5A). Importantly, PPI analysis using this DLPFC area as a seed region revealed negative functional connectivity between BOLD activity in the left DLPFC and that in brain regions related to reward learning and valuation (NAc, vmPFC, and the hippocampal complex), among other brain areas (Fig. 5B and SI Appendix, Fig. S2 and Table S6). Interestingly, these regions overlapped remarkably well with those identified through the win-loss contrast (Figs. 4A and 5B). We therefore propose a functional link between the DLPFC and an outcome-valuation learning system. This latter system is pivotal in providing correct value or “utility” information to support learning from monetary feedback, but appears to be less important when preexisting, symbolic knowledge is available to guide choices.

Taken together, these results suggest that when participants learn action values, the DLPFC tends to dynamically adjust outcome responses in reward-related brain regions depending on the usefulness of action-outcome information relative to the explicit knowledge participants obtained directly through social communication. The current study demonstrates the importance of this circuitry linking the DLPFC and reward-related structures for learning and reward processing, and previous neuroeconomic research has outlined similar circuitry across a range of decision-making tasks in which preexisting reward values represented in valuation regions can be modulated by social processes (51, 52), goals (34), or other cognitive factors (52, 53). Although it has been suggested that the DLPFC and reward-related regions represent independent systems in the brain that compete for control of action selection (27, 28, 53, 54), our results are more consistent with a general role for the DLPFC in modulating the engagement of reward-related regions depending on the relative importance of the information available during learning.

Our findings also lend neural evidence in support of recent computational approaches that reconcile a broad body of literature suggesting multiple representation systems in the brain for behavioral control. One such system deploys a model-free method and “learns putatively simpler quantities,” such as policies that are sufficient to permit optimal performance, by processing action outcomes; this computation has been proposed to be carried out in the dorsolateral striatum. The other system, which relies on the prefrontal cortex, adopts a model-based method that makes use of available or learned rules and derives optimal choices through dynamic programming. The brain arbitrates between these representation systems according to the uncertainty estimated for each system (38, 39, 55). In our task, the state transition probabilities (reward probabilities) were more accurate in the instructed session, where they were provided by the experimenter; thus the model-based approach would dominate participants’ choices by recruiting the DLPFC to bias responses in the reward-valuation systems.
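Under the uncertainty-based arbitration account, the instructed session can be pictured as follows: a model-based system computes action values directly from the instructed probability, a model-free system holds values learned from feedback, and control goes to whichever system is less uncertain. The sketch below is a deliberately reduced, hypothetical illustration; scalar uncertainties stand in for the full posterior variances used in such models, and the response labels and function names are not from the study.

```python
def model_based_values(p_higher):
    """Action values computed directly from an instructed P(number > 5)."""
    return {"higher": p_higher, "lower": 1.0 - p_higher}


def arbitrate(mf_values, mf_uncertainty, mb_values, mb_uncertainty):
    """Return (controller, values) for whichever system is less uncertain.

    Uncertainties are hypothetical scalars (e.g., posterior variances).
    Ties go to the model-free system, an arbitrary choice in this sketch.
    """
    if mb_uncertainty < mf_uncertainty:
        return "model-based", mb_values
    return "model-free", mf_values
```

When the experimenter supplies the correct probabilities, the model-based uncertainty is low from the first trial, so control would pass to the model-based system and feedback-driven values would matter less.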

The brain’s reward-learning circuitry as instantiated in the RL model is a phylogenetically old system for learning through trial and error. When social structures and means of communication become more complex, these basic reward-learning processes may not be optimal for promoting the best decisions. Errors are costly and unnecessary when additional, symbolic information about the best decision is available. The current study demonstrates how the DLPFC interacts with the reward-learning circuitry to diminish the impact of actual trial-outcome information, presumably enabling symbolic knowledge of reward probability to guide choices. Our data add to the growing literature on how different types of information interact to achieve optimal behavior in decision making, and provide direct support for the computational theory that arbitrates between representation systems by assigning control to the one with less uncertainty about the correct action values.

Methods

Participants.

Twenty participants were recruited and tested in compliance with the guidelines of the University Committee on Activities Involving Human Subjects (UCAIHS) at New York University. The experiment was approved by the UCAIHS, and all participants provided informed consent before the experiment. Of the 20 participants, 7 were male and 9 were non-Caucasian; the average age was 21.6 y (SD = 3.72).

Experimental Procedures.

Each participant played two sessions of the task: a “feedback” session and an “instructed” session (Fig. 1). For both sessions, participants were told that they would see different visual cues representing how likely the number underneath the cue was to be greater or less than 5 (value of the underlying number ∈ {1, 2, 3, 4, 6, 7, 8, 9}). The order of the two sessions was randomized across participants, with 10 of the 20 participants completing the feedback session first. In both sessions, four visual cues representing different probabilities (P ∈ {25, 50, 75, 100%}) that the number underneath the cue was greater than 5 were presented. On each trial, participants saw a cue next to the number 5. Each cue was presented 20 times in random order, for a total of 80 trials per session (see SI Appendix for details).
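A hypothetical trial generator for one session is sketched below. It assumes that each cue’s probability is realized exactly over its 20 presentations (for example, 15 of 20 numbers above 5 for the 75% cue) and that numbers are drawn uniformly from the allowed values on the relevant side of 5. The Methods do not state either detail, so both are assumptions, and the function name is illustrative.

```python
import random


def make_session(probabilities=(0.25, 0.5, 0.75, 1.0), reps=20, seed=None):
    """Return a shuffled list of (cue probability, hidden number) trials.

    Assumes each cue's probability is realized exactly over its `reps`
    presentations; hidden numbers never equal 5.
    """
    rng = random.Random(seed)
    trials = []
    for p in probabilities:
        n_high = round(p * reps)                      # trials with number > 5
        highs = [rng.choice([6, 7, 8, 9]) for _ in range(n_high)]
        lows = [rng.choice([1, 2, 3, 4]) for _ in range(reps - n_high)]
        trials.extend((p, number) for number in highs + lows)
    rng.shuffle(trials)                               # random cue order
    return trials
```

Four cues with 20 presentations each give the 80 trials per session stated above.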

Functional MRI Image Acquisition.

Scanning was performed on all 20 participants with a 3-T Siemens Allegra head-only scanner and a Siemens standard head coil at New York University's Center for Brain Imaging (see SI Appendix for details).

Behavioral Analysis.

Participants’ choice behavior in both sessions was modeled with a simple RL algorithm (see SI Appendix for details). We tested our model against others suggested in the literature for similar tasks (27, 28), using the Bayesian information criterion (BIC) for model selection. For the feedback session, the simple RL model with one learning rate (α) for both positive and negative PEs fit participants’ behavior better, whereas the RL model with different learning rates (α+ and α−) for positive and negative PEs (δ+ and δ−) fit participants’ choices best in the instructed session (see SI Appendix for details).
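Both the Bayesian information criterion used for model selection and McFadden’s pseudo R-square reported in the Results can be computed from a fitted model’s negative log-likelihood. The sketch below assumes a two-alternative choice, so that a random-choice null model assigns probability 0.5 to every choice; that null model and the function names are assumptions for illustration.

```python
import math


def bic(nll, n_params, n_trials):
    """Bayesian information criterion; lower values indicate a better model."""
    return 2.0 * nll + n_params * math.log(n_trials)


def mcfadden_r2(nll_model, n_trials, n_options=2):
    """McFadden's pseudo R-square relative to a random-choice null model."""
    nll_chance = n_trials * math.log(n_options)
    return 1.0 - nll_model / nll_chance
```

Because BIC penalizes each extra parameter by log(n_trials), the two-learning-rate model must improve the fit by more than about log(80)/2 in negative log-likelihood per participant over 80 trials to be preferred over the single-rate model.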

Imaging Analysis.

We first regressed whole-brain BOLD signals at the revelation of the monetary outcome on the PEs generated for the feedback and instructed sessions using the best-fitting parameters, to identify brain areas whose activity correlated with PE. Monetary outcomes were also included as dummy regressors to account for the magnitude of the reward.

A two-way repeated-measures ANOVA with two factors (session and learning phase) was performed on the functional imaging data at the onset of feedback. The finite impulse response from 0 to 12 s (TR0 to TR6) was generated by resampling the BOLD time series of each voxel in the brain and averaging across the 40 trials in each of the early and late learning phases in both sessions. Because the canonical hemodynamic response function typically peaks 6 to 8 s after stimulus onset, the two-way ANOVA was performed at both TR3 (6 s) and TR4 (8 s). These whole-brain analyses were performed voxel by voxel to identify brain regions that showed a significant interaction between learning phase (i.e., early vs. late learning) and session (i.e., feedback vs. instructed session).
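The finite impulse response step can be illustrated with a simplified sketch: for each outcome onset (assumed here to be already aligned to a scan index, so no resampling is done), take the next seven scans (TR0 to TR6), average across trials, and then form the session × learning-phase interaction contrast at one TR. The function names and the contrast shortcut are illustrative; the analysis described above used a full repeated-measures ANOVA at each voxel.

```python
import numpy as np


def epoch_average(bold, onset_scans, n_tr=7):
    """Mean BOLD at TR0..TR(n_tr - 1) after each onset (onsets in scan units).

    Every onset must leave at least n_tr scans before the end of the run.
    """
    bold = np.asarray(bold, dtype=float)
    epochs = np.stack([bold[s:s + n_tr] for s in onset_scans])
    return epochs.mean(axis=0)


def interaction_contrast(fb_early, fb_late, ins_early, ins_late, tr_index):
    """(feedback early - late) minus (instructed early - late) at one TR."""
    return ((fb_early[tr_index] - fb_late[tr_index])
            - (ins_early[tr_index] - ins_late[tr_index]))
```

Evaluating the contrast at index 3 or 4 corresponds to the TR3 (6 s) and TR4 (8 s) time points tested above.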

Finally, we conducted a PPI analysis to investigate the connectivity between brain regions that may modulate the impact of instructed knowledge on RL learning signals (see SI Appendix for technical details).


Acknowledgments

We thank K. Sanzenbach and the Center for Brain Imaging at New York University for technical assistance. This study was funded by a James S. McDonnell Foundation grant and National Institute of Mental Health Grants MH 080756 (to E.A.P.) and MH 084081 (to M.R.D.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1014938108/-/DCSupplemental.

References

1. Sutton RS, Barto AG. Reinforcement Learning: An Introduction. Cambridge, Mass.: MIT Press; 1998. p. 322.

2. Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

3. Montague PR, King-Casas B, Cohen JD. Imaging valuation models in human choice. Annu Rev Neurosci. 2006;29:417–448. doi: 10.1146/annurev.neuro.29.051605.112903.

4. Tanaka SC, et al. Brain mechanism of reward prediction under predictable and unpredictable environmental dynamics. Neural Netw. 2006;19:1233–1241. doi: 10.1016/j.neunet.2006.05.039.

5. Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

6. Rudebeck PH, et al. Frontal cortex subregions play distinct roles in choices between actions and stimuli. J Neurosci. 2008;28:13775–13785. doi: 10.1523/JNEUROSCI.3541-08.2008.

7. McClure SM, Berns GS, Montague PR. Temporal prediction errors in a passive learning task activate human striatum. Neuron. 2003;38:339–346. doi: 10.1016/s0896-6273(03)00154-5.

8. King-Casas B, et al. Getting to know you: Reputation and trust in a two-person economic exchange. Science. 2005;308(5718):78–83. doi: 10.1126/science.1108062.

9. Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

10. Hare TA, O'Doherty J, Camerer CF, Schultz W, Rangel A. Dissociating the role of the orbitofrontal cortex and the striatum in the computation of goal values and prediction errors. J Neurosci. 2008;28:5623–5630. doi: 10.1523/JNEUROSCI.1309-08.2008.

11. Breiter HC, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–639. doi: 10.1016/s0896-6273(01)00303-8.

12. Delgado MR, Miller MM, Inati S, Phelps EA. An fMRI study of reward-related probability learning. Neuroimage. 2005;24:862–873. doi: 10.1016/j.neuroimage.2004.10.002.

13. Burke CJ, Tobler PN, Baddeley M, Schultz W. Neural mechanisms of observational learning. Proc Natl Acad Sci USA. 2010;107:14431–14436. doi: 10.1073/pnas.1003111107.

14. Kaelbling LP, Littman ML, Moore AW. Reinforcement learning: A survey. J Artif Intell Res. 1996;4:237–285.

15. Bogacz R, McClure SM, Li J, Cohen JD, Montague PR. Short-term memory traces for action bias in human reinforcement learning. Brain Res. 2007;1153:111–121. doi: 10.1016/j.brainres.2007.03.057.

16. Schönberg T, Daw ND, Joel D, O'Doherty JP. Reinforcement learning signals in the human striatum distinguish learners from nonlearners during reward-based decision making. J Neurosci. 2007;27:12860–12867. doi: 10.1523/JNEUROSCI.2496-07.2007.

17. Niv Y. Reinforcement learning in the brain. J Math Psychol. 2009;53(3):139–154.

18. Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

19. Ochsner KN, Gross JJ. The cognitive control of emotion. Trends Cogn Sci. 2005;9:242–249. doi: 10.1016/j.tics.2005.03.010.

20. LaBar KS, Cabeza R. Cognitive neuroscience of emotional memory. Nat Rev Neurosci. 2006;7:54–64. doi: 10.1038/nrn1825.

21. Li J, McClure SM, King-Casas B, Montague PR. Policy adjustment in a dynamic economic game. PLoS ONE. 2006;1:e103. doi: 10.1371/journal.pone.0000103.

- 22.Knoch D, Fehr E. Resisting the power of temptations: The right prefrontal cortex and self-control. Ann N Y Acad Sci. 2007;1104:123–134. doi: 10.1196/annals.1390.004. [DOI] [PubMed] [Google Scholar]

- 23.Sakai K. Task set and prefrontal cortex. Annu Rev Neurosci. 2008;31:219–245. doi: 10.1146/annurev.neuro.31.060407.125642. [DOI] [PubMed] [Google Scholar]

- 24.Kouneiher F, Charron S, Koechlin E. Motivation and cognitive control in the human prefrontal cortex. Nat Neurosci. 2009;12:821–822. doi: 10.1038/nn.2321. [DOI] [PubMed] [Google Scholar]

- 25.McClure SM, et al. Neural correlates of behavioral preference for culturally familiar drinks. Neuron. 2004;44:379–387. doi: 10.1016/j.neuron.2004.09.019. [DOI] [PubMed] [Google Scholar]

- 26.Li J, Xiao E, Houser D, Montague PR. Neural responses to sanction threats in two-party economic exchange. Proc Natl Acad Sci USA. 2009;106:16835–16840. doi: 10.1073/pnas.0908855106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Doll BB, Jacobs WJ, Sanfey AG, Frank MJ. Instructional control of reinforcement learning: A behavioral and neurocomputational investigation. Brain Res. 2009;1299:74–94. doi: 10.1016/j.brainres.2009.07.007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Biele G, Rieskamp J, Gonzalez R. Computational models for the combination of advice and individual learning. Cogn Sci. 2009;33:206–242. doi: 10.1111/j.1551-6709.2009.01010.x. [DOI] [PubMed] [Google Scholar]

- 29.Delgado MR, Nystrom LE, Fissell C, Noll DC, Fiez JA. Tracking the hemodynamic responses to reward and punishment in the striatum. J Neurophysiol. 2000;84:3072–3077. doi: 10.1152/jn.2000.84.6.3072. [DOI] [PubMed] [Google Scholar]

- 30.de Quervain DJ, et al. The neural basis of altruistic punishment. Science. 2004;305:1254–1258. doi: 10.1126/science.1100735. [DOI] [PubMed] [Google Scholar]

- 31.Kuhnen CM, Knutson B. The neural basis of financial risk taking. Neuron. 2005;47:763–770. doi: 10.1016/j.neuron.2005.08.008. [DOI] [PubMed] [Google Scholar]

- 32.Delgado MR. Reward-related responses in the human striatum. Ann N Y Acad Sci. 2007;1104:70–88. doi: 10.1196/annals.1390.002. [DOI] [PubMed] [Google Scholar]

- 33.Glimcher PW. Neuroeconomics: Decision Making and the Brain. Burlington, MA: Academic Press; 2008. p. 552. [Google Scholar]

- 34.Hare TA, Camerer CF, Rangel A. Self-control in decision-making involves modulation of the vmPFC valuation system. Science. 2009;324:646–648. doi: 10.1126/science.1168450. [DOI] [PubMed] [Google Scholar]

- 35.Liu Z, Murray EA, Richmond BJ. Learning motivational significance of visual cues for reward schedules requires rhinal cortex. Nat Neurosci. 2000;3:1307–1315. doi: 10.1038/81841. [DOI] [PubMed] [Google Scholar]

- 36.Liu Z, Richmond BJ. Response differences in monkey TE and perirhinal cortex: Stimulus association related to reward schedules. J Neurophysiol. 2000;83:1677–1692. doi: 10.1152/jn.2000.83.3.1677. [DOI] [PubMed] [Google Scholar]

37. Mogami T, Tanaka K. Reward association affects neuronal responses to visual stimuli in macaque TE and perirhinal cortices. J Neurosci. 2006;26:6761–6770. doi: 10.1523/JNEUROSCI.4924-05.2006.

38. Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

39. Dayan P, Daw ND. Decision theory, reinforcement learning, and the brain. Cogn Affect Behav Neurosci. 2008;8:429–453. doi: 10.3758/CABN.8.4.429.

40. McClure SM, Daw ND, Montague PR. A computational substrate for incentive salience. Trends Neurosci. 2003;26:423–428. doi: 10.1016/s0166-2236(03)00177-2.

41. O'Doherty JP, Dayan P, Friston K, Critchley H, Dolan RJ. Temporal difference models and reward-related learning in the human brain. Neuron. 2003;38:329–337. doi: 10.1016/s0896-6273(03)00169-7.

42. O'Doherty J, et al. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

43. Hampton AN, Bossaerts P, O'Doherty JP. Neural correlates of mentalizing-related computations during strategic interactions in humans. Proc Natl Acad Sci USA. 2008;105:6741–6746. doi: 10.1073/pnas.0711099105.

44. O'Doherty J, Kringelbach ML, Rolls ET, Hornak J, Andrews C. Abstract reward and punishment representations in the human orbitofrontal cortex. Nat Neurosci. 2001;4:95–102. doi: 10.1038/82959.

45. O'Doherty J, Rolls ET, Francis S, Bowtell R, McGlone F. Representation of pleasant and aversive taste in the human brain. J Neurophysiol. 2001;85:1315–1321. doi: 10.1152/jn.2001.85.3.1315.

46. Knutson B, Fong GW, Adams CM, Varner JL, Hommer D. Dissociation of reward anticipation and outcome with event-related fMRI. Neuroreport. 2001;12:3683–3687. doi: 10.1097/00001756-200112040-00016.

47. Aharon I, et al. Beautiful faces have variable reward value: fMRI and behavioral evidence. Neuron. 2001;32:537–551. doi: 10.1016/s0896-6273(01)00491-3.

48. Anderson AK, et al. Dissociated neural representations of intensity and valence in human olfaction. Nat Neurosci. 2003;6:196–202. doi: 10.1038/nn1001.

49. Small DM, et al. Dissociation of neural representation of intensity and affective valuation in human gustation. Neuron. 2003;39:701–711. doi: 10.1016/s0896-6273(03)00467-7.

50. Zink CF, Pagnoni G, Martin-Skurski ME, Chappelow JC, Berns GS. Human striatal responses to monetary reward depend on saliency. Neuron. 2004;42:509–517. doi: 10.1016/s0896-6273(04)00183-7.

51. Spitzer M, Fischbacher U, Herrnberger B, Grön G, Fehr E. The neural signature of social norm compliance. Neuron. 2007;56(1):185–196. doi: 10.1016/j.neuron.2007.09.011.

52. Delgado MR, Gillis MM, Phelps EA. Regulating the expectation of reward via cognitive strategies. Nat Neurosci. 2008;11:880–881. doi: 10.1038/nn.2141.

53. McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306:503–507. doi: 10.1126/science.1100907.

54. Sanfey AG, Rilling JK, Aronson JA, Nystrom LE, Cohen JD. The neural basis of economic decision-making in the Ultimatum Game. Science. 2003;300:1755–1758. doi: 10.1126/science.1082976.

55. Gläscher J, Daw N, Dayan P, O'Doherty JP. States versus rewards: Dissociable neural prediction error signals underlying model-based and model-free reinforcement learning. Neuron. 2010;66:585–595. doi: 10.1016/j.neuron.2010.04.016.
