Abstract

The post-experiment processing of X-ray Diffraction Microscopy data is often time-consuming and difficult. This is mostly due to the fact that even if a preliminary result has been reconstructed, there is no definitive answer as to whether or not a better result with more consistently retrieved phases can still be obtained. We show here that the first step in data analysis, the assembly of two-dimensional diffraction patterns from a large set of raw diffraction data, is crucial to obtaining reconstructions of highest possible consistency. We have developed software that automates this process and results in consistently accurate diffraction patterns. We have furthermore derived some criteria of validity for a tool commonly used to assess the consistency of reconstructions, the phase retrieval transfer function, and suggest a modified version that has improved utility for judging reconstruction quality.

1. Introduction

X-ray diffraction microscopy (XDM; also called coherent diffraction imaging or CDI) provides an alternative approach to more conventional forms of lens-based x-ray microscopy in that it does not rely on inefficient optics and thus helps reduce the radiation dose administered to the sample [1]. This is especially important with regards to biological imaging where radiation dose is limiting the maximum achievable resolution [2]. The idea of phase retrieval from recorded diffraction intensities alone was first conceived by Sayre in 1952 [3]. The first experimental demonstration of XDM was achieved by Miao et al. in 1999 on a fabricated test pattern [4]. Since then the technique has been successfully applied in 2D to biological [5–7] and material science samples [8], and in 3D to test structures [9], material science [10,11] and biological [12] samples.

A typical experimental setup involves recording the far-field diffraction pattern of a plane wave incident on an isolated object. Since the detector, usually a CCD, only records intensities, the phases need to be retrieved computationally using a reconstruction algorithm. The first algorithm to successfully retrieve phases from far-field intensity measurements was demonstrated by Fienup in 1978 [13]. Several generalizations have since been developed [14,15], all of which are based on iteratively enforcing constraints in real and Fourier space. In Fourier space, the present guess of the complex amplitude is adjusted towards the measured Fourier magntiudes. In real space, the present guess of the object wavefield is adjusted to enforce a finite support constraint, so that pixels outside the support (the array subspace within which the object is supposed to lie) are assumed to produce no scattering. The support guess is periodically updated (either by hand or in an automated fashion using the shrinkwrap algorithm [16]) until a support is found that tightly fits the actual object.

The far-field diffraction geometry has certain advantages in experimental simplicity (no nanofocusing optics or nanopositioning stages are required), and in insensitivity to certain errors such as small shifts in the transverse position of the object (the shift theorem of Fourier transforms shows that such shifts produce only linear phase ramps in Fourier space which are not encoded in the Fourier plane intensities). At the same time, alternative experimental geometries have been developed with different tradeoffs. By using curved wavefront illumination in a near-field or Fresnel scheme [17, 18] one gains more rapid and robust reconstruction convergence, while ptychographic [19–22] and keyhole [23] methods limit the illumination footprint and thus overcome the need for the object to be constrained inside a finite support. Because these other methods still involve the use of Fourier plane intensities and iterative algorithms for reconstruction, improvements to the data handling and object reconstruction of far-field methods can often be of benefit in these other techniques.

We describe here three improvements to the processing and iterative reconstruction of images from measured far-field intensities. In Sec. 2, we describe an algorithmic and automated procedure for improved merging of multiple Fourier plane intensity recordings. In Sec. 3, we show that incorporation of a Wiener filter into the phase retrieval transfer function (PRTF) improves the PRTF’s interpretability and utility for judging reconstruction validity. In Sec. 4, we examine different approaches for iterate averaging [6,24] and their impact on reconstruction validity. The collective improvement on reconstructed image quality is illustrated using recent experimental data from beamline 9.0.1 at the Advanced Light Source at Lawrence Berkeley Laboratory that yielded 13 nm resolution images of specifically-labeled freeze-dried yeast cells [25].

2. Automated Merging Program (AMP) for Fourier intensities

When recording far-field diffraction intensities, one must be mindful of the experience in small-angle scattering that intensity I tends to drop off with spatial frequency as I(f) ∝ f−m, where f = θ/λ is the spatial frequency and m = 3–4 with m = 4 suggested by Porod’s law. Since data is usually recorded over at least two orders of magnitude range in spatial frequency, this means that the Fourier plane intensity tends to span six or more orders of magnitude. This can present challenges for many pixelated x-ray detectors; for example, in using direct detection on CCDs one generates several hundred electron-hole pairs per soft x-ray photon absorbed, which when coupled with a full-well charge capacity of 105–106 electrons means that a dynamic range of only something over three orders of magnitude can be achieved in a single recording. (Pixel array detectors are beginning to overcome these limitations, but high pixel number detectors with good sensitivity for soft x rays are not yet widely available). As a result, a common experimental strategy is to to use an adjustable beamstop to block various parts of the strong, low spatial frequency signal while adjusting the exposure time to collect the weaker, high spatial frequency signals. These various intensity recordings must then be combined to yield a merged measurement of the Fourier plane intensities. These merged intensities must satisfy some key conditions. There should be no scaling errors between regions recorded with different exposure times. Saturated pixels should be removed before merging the raw data; as well as anomalously high pixel values due to cosmic rays incident on the CCD. Finally, noise in the raw data should be suppressed; this is especially important in the high spatial frequency regime, where the scattering signal is weak.

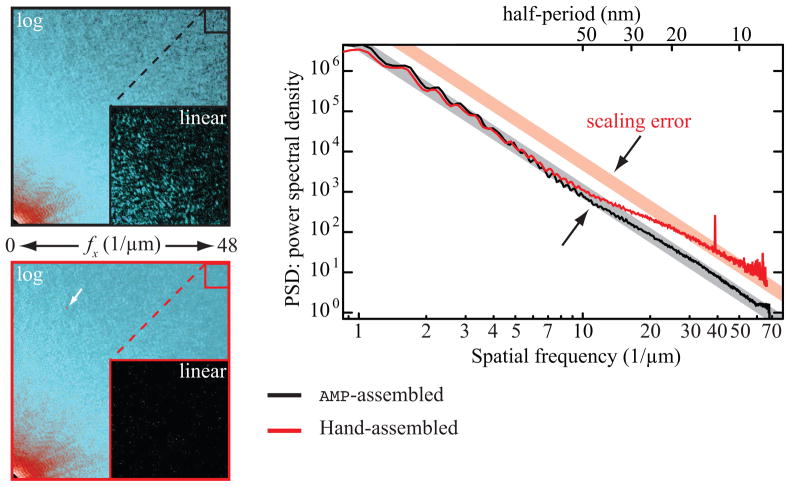

In previous work, we have been been merging multiple Fourier plane intensity recordings by using a per-dataset procedure based on manual adjustments of noise thresholds and requested exposure times. Besides being tedious, this has produced slight user-dependent variations in the assembled Fourier intensities. We have therefore developed an Automated Merging Program AMP to perform this task which we now describe, with its final results illustrated in Fig. 1.

Fig. 1.

Assembled diffraction intensities of data collected on a freeze-dried labeled yeast cell. On the left, the same quadrant of the entire array is shown on a false color logarithmic scale for both AMP-assembled data (in black) and data assembled by hand (in red). An inset shows the highest spatial frequencies of the merged intensities on a false color linear scale. The AMP-assembled intensities show good contrast for speckles all the way to highest spatial frequencies where the hand assembled intensities are dominated by noise. On the right, the power spectral densities for both arrays are plotted on a log-log scale. The hand-assembled data does not follow a straight power law indicating scaling errors between low and high spatial frequency data. The peak at 40 μm−1 in the PSD of the hand-assembled data is due to a cosmic ray; its location is indicated by the white arrow.

2.1. Data assembly: previous practice

In a typical per-dataset assembly, the following procedure is performed for each beamstop position. Saturated pixels (where the full-well capacity of the CCD had been reached) are first removed. Next, pixels with anomalously high values due to large charge deposition by cosmic ray events are found and removed, as are pixels with anomalous values due to either manufacturing flaws or radiation-induced damage. Individual recordings are then normalized to the synchrotron beam current, after which images with the same exposure time are averaged and a noise threshold floor is applied. The area behind the beamstop is then masked. Beam-normalized averages from the different exposure times are then scaled and averaged, taking care to include only those pixels with non-zero signal. We refer to the result as a “hand-assembled” data set.

2.2. Automated assembly: improvements provided by AMP

The assembly performed by AMP improves upon this basic assembly protocol in several key areas. The first difference is a quantitative analysis of the CCD chip. Given a series of dark current images at different exposure times, AMP will calculate an average dark current and the variation in dark current either as an average for all pixels in the chip, or (if enough redundant dark current files are present) on a per-pixel basis. The variance in each pixel corresponds to the total CCD noise comprised of thermal noise and readout noise. From these data the scaling of average dark current and CCD noise with exposure time is determined from a linear fit. This dark current information is used twice: first to subtract an average dark current signal from each recorded image, and second to calculate an error value for each pixel. The latter is determined by the square root sum of CCD noise and noise due to initial photon statistics; this error array is kept throughout the entire assembly process and updated according to the rules of error propagation. It is a crucial ingredient to two other improvements that AMP introduces: weighted averages and weighted normalizations.

During the assembly process, arrays are frequently normalized with respect to some constant (such as exposure time or ring current) and subsequently averaged such that in the end there is only one data set containing all the information from all initially recorded images. Even though the arrays are normalized, problems may arise from insufficient knowledge of the normalization constants. We have found for instance that our shutter timing (which ultimately determines the exposure time) is not very accurate at short exposure times. This will lead to scaling errors between different regions of the final assembled array. To overcome this problem, AMP calculates a normalization correction based on pixels that are common to the two arrays about to be averaged. This correction is applied just after the “regular” normalization (i.e. with respect to beam current or exposure time), before the arrays are averaged. For both the calculation of the normalization correction and the averaging of two arrays, AMP makes use of their error arrays by weighting each pixel’s influence on the result with its respective error. This is justified as we want pixels with smaller error to contribute more to the final result than pixels with higher uncertainties. Given two previously normalized arrays 1 and 2 with intensity values at the k-th pixel of Ik,1 and Ik,2 respectively, the normalization correction c is calculated from the minimum of the goodness-of-fit parameter

| (1) |

where σk is the effective total error for the k-th pixel. To calculate σk we express the intensity at the k-th pixel of the i-th array Ik,i as the sum of true signal and error σk,i. With this, Eq. (1) becomes

| (2) |

Rearranging the left hand side to

| (3) |

and assuming c ≈ 1, we arrive at

| (4) |

| (5) |

where we have assumed that the errors are uncorrelated, i.e. Σσk,1 σk,2 = 0. Now we can go back to the original idea and calculate the normalization constant by taking the derivative of Eq. (1) with respect to c

| (6) |

Performing the derivation and solving for c, we end up with

| (7) |

Note that the sum above is performed over pixels that are defined (i.e. greater than some threshold or zero and not saturated) in both arrays. The implementation of the weighted normalization in software is illustrated in pseudocode in the Appendix (Algorithm 1). After normalizing the arrays in the pre-described manner, we can average them. As with the normalization, we have to make sure that we give more weight to pixels with little uncertainty than to pixels with high uncertainty. Therefore AMP calculates for each pixel k the weighted average over all arrays i = 0,….,N as

| (8) |

where σi,k is the previously normalized error of the k-th pixel in the i-th image. The new error σk for each pixel can then be calculated as the square root sum of all errors of pixels that were averaged, or

| (9) |

which can be used in subsequent analysis. The implementation of the weighted averaging in software is illustrated in pseudocode in the Appendix (Algorithm 2).

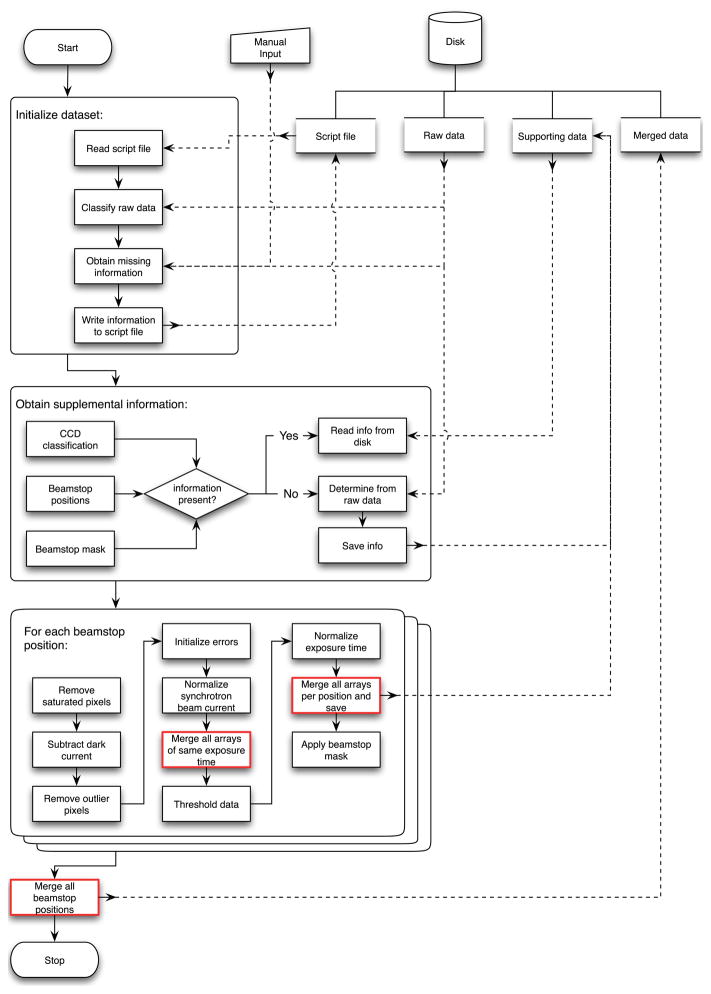

Apart from providing a more rigorous defined and consistent assembly of the data, AMP was also written to facilitate and speed up the process of assembling a 2D diffraction pattern. A simple script file indicating the names of the raw data files to be assembled is sufficient to start AMP. Given such a basic script file, AMP will attempt to infer all information it needs directly from the data; for all else, it prompts the user for input. As the assembly progresses, AMP will write important data-set-specific parameters it determined to the script file for future reference. It also automatically saves information that can be reused for a subsequent assembly of the same data and even for other data sets if applicable, such as if the same dark current parameters can be used for data sets recorded with the same CCD, or the same beamstop mask pattern for data sets that were recorded using the same beamstop. Finally, AMP will save the final assembled diffraction intensities along with meta data important for reconstruction into a custom defined file format based on the widely available, platform independent HDF5 standard. Automating these steps is especially important for data intensive three-dimensional x-ray diffraction microscopy [9, 26], where 2D diffraction patterns are recorded over a wide angular range with small angular steps prior to mapping the resulting Ewald spheres into a 3D data cube. A flowchart of our software implementation of the program is shown in Fig. 8 of the Appendix. The software is also available through Concurrent Versioning System upon request. The 2D Fourier plane data assembled by the above automated procedure is referred to as “AMP-assembled” data in what follows.

Figure 1 illustrates some advantages AMP-assembled data has over hand-assembled data. Subsections of the assembled diffraction intensities for both AMP-assembled data (black) and hand-assembled data (red) on a logarithmic intensity scale are shown on the left. The x-axis spatial frequency range in each case is from 0 to ≈ 48 μm−1. The insets show a zoomed in view of the highest spatial frequencies on a false color linear scale. While the AMP-assembled array shows speckle with good contrast, the hand-assembled array is dominated by noise at these spatial frequencies. Scaling issues are present in the hand-assembled array but not in the AMP-assembled array. This is illustrated by the plot of the power spectral densities (PSD) for each array, shown on the right on a log-log scale. While the PSD of AMP-assembled data (in black) follows a straight line as would be expected for most objects, the PSD of hand-assembled data (red) changes its slope at a spatial frequency of around 10 μm−1 suggesting that low and high spatial frequency data have not been properly scaled. Another prominent difference is the occurrence of a sharp peak at ≈ 40 μm−1 in the PSD of the hand-assembled data. This peak, presumably due to a cosmic ray incident on our CCD at the time of data collection, is found in one single exposure of the recorded raw data. Due to the large standard deviation of the affected pixels it is filtered out by weighted averaging early on in the AMP assembly process. This is not true for the hand-assembled data where the peak ends up in the final assembled array, as is indicated by the white arrow in the image of the merged intensities of the hand-assembled data. We note that while a more careful assembly by hand is possible, it would be considerably more time consuming and its steps would have to be readjusted for each new data set.

2.3. Automated assembly: evaluation from reconstructed images

The ultimate judgement of the quality of data assembly comes from seeing the quality of the reconstructed image. In this section we compare images reconstructed from AMP-assembled versus hand-assembled diffraction data.

Iterative phase retrieval in the far-field geometry works by finding a complex wavefield which satisfies real-space constraints such as the imposition of a finite support (and possibly others such as a limit on maximum phase variation), and the Fourier-space constraint of adjustment towards the measured diffraction magnitudes. Because reciprocal plane and real space information are related by a complex-valued Fourier transform, one can start the algorithm with random phases and converge to a solution. Since the real-space constraints are not known perfectly, and since random and systematic errors are possible in the measurement of the Fourier plane magnitudes, one cannot find a single, numerically unique solution to the complex wavefield (though in “good” reconstructions the variations between different possible solutions are small). As a result, various iterate averaging procedures have been adopted [6, 9, 24], based on the idea that consistent phases add coherently, while inconsistent phases add incoherently. This averaging is applied in the Fourier plane, where the magnitudes were measured but the phases were not. While Sec. 4 below involves a comparison between different iterate averaging procedures, in this section we used a variation of an already-demonstrated averaging procedure [24].

We first carried out a reconstruction where the object’s support mask was found first from the autocorrelation of the diffraction pattern, then by application of the shrinkwrap algorithm [16] with occasional by-hand adjustment. This support mask was then used in 10 separate reconstructions with different random starting phases [27]. In each reconstruction, the difference map algorithm [15] was used with a positivity constraint on the imaginary part of the object’s exit wave (this corresponds to a maximum phase shift of π as induced by 1.5 μm of solid dry protein for X-rays of wavelength 1.65 nm), and a linear phase ramp was continuously removed (thus constraining the object to be centered in the real space array). In each of these separate reconstructions, every 50th iterate from iterations 5,000 to 10,000 was set aside; the global or zero-spatial-frequency phase of each real-space iterate was adjusted to a common value [9], and the complex iterates were then averaged together. Finally, the 10 separate reconstructions were averaged together, again with the global phase adjusted to a common value (the global phase has no effect on diffraction intensities and thus is unconstrained by measured data). This procedure yields a reconstruction with minimal sensitivity to those phases that are poorly constrained by the data.

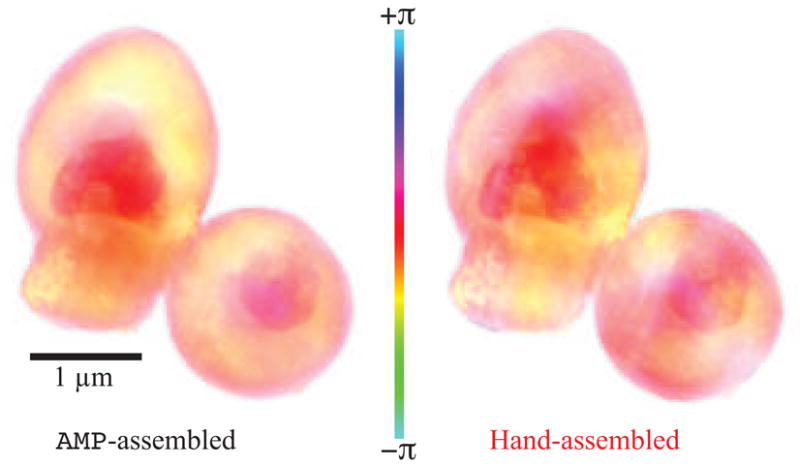

The images reconstructed using the above procedure on both hand-assembled and AMP-assembled data are shown in Fig. 2. Magnitude is displayed as brightness, and phase as hue with a color bar illustrating the phase–hue relationship. (Note that because the global or zero-spatial-frequency phase is unknown, the color bar serves only as an indicator for relative phase differences). Both reconstructions agree in key features; however, the AMP-assembled reconstruction shows less phase variation at low spatial frequencies. This is in better agreement with what would be expected from less-dense areas of the yeast cell which should have greater uniformity of projected thickness. It is also similar to the goal of maximum-entropy methods of image reconstruction, which seek to find the image with the least variation yet which is still consistent with the measured constraints. If we assume that the hand-assembled Fourier magnitudes have variations associated with erroneous assembly rather than with scattering properties of the specimen, then we would expect the reconstruction from hand-assembled magnitudes to give rise to more, but erroneous, contrast in the reconstructed image.

Fig. 2.

Final averaged reconstructions of both AMP-assembled diffraction intensities and hand-assembled diffraction intensities obtained in experiments on gold-labeled freeze-dried yeast [25]. Magnitude is represented as brightness and phase as hue according to the inset color bar. The hand-assembled reconstruction shows streaks and other variations in intensity that would not be expected in the cell; the AMP-assembled reconstruction provides an improved visual appearance.

3. Wiener-filtered phase retrieval transfer function (wPRTF)

The first papers [4,5] in x-ray diffraction microscopy used the presence of measured diffraction signal as a function of spatial frequency (the power spectral density or PSD of the diffraction pattern) to estimate the resolution achieved in the reconstruction. However, the simple presence of signal is only part of the story: one must consider the presence of noise, partial coherence in the beam, and the possible presence of small scatterers outside of the assumed support constraint. Taken together, these effects can lead to a decrease in the consistency of the estimated phases, and Fourier plane pixels which cannot be reliably retrieved will not contribute useful and reproducible information to the reconstructed image. The iterative averaging procedure described in Sec. 2.3 above provides a measure of the reproducibility of Fourier plane phasing through a phase retrieval transfer function (PRTF) [9] of

| (10) |

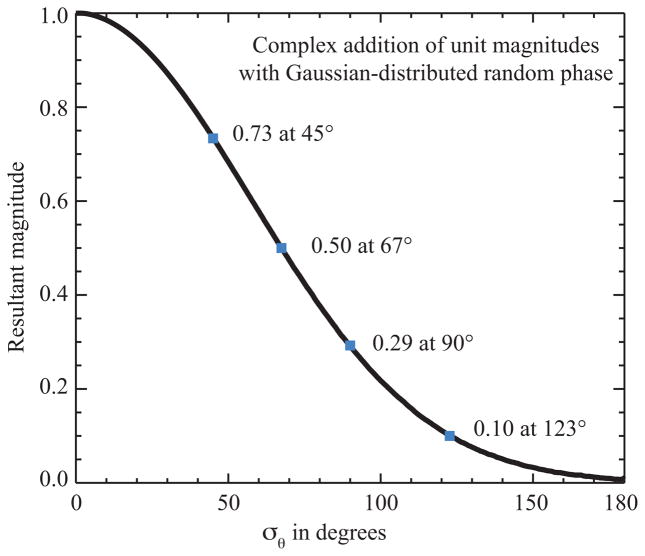

which is essentially the square root of a similar measure called the intensity ratio [6, 24]. Measurement of the spatial frequency at which the PRTF has suffered a significant decline can be used to provide an estimate of the spatial resolution of the reconstructed image [6,9,24], since of course a PRTF value of 1 indicates perfectly reproducible phases while a value of 0 indicates completely random phases. Unfortunately no consensus has emerged on what PRTF value should be used to judge reconstructed image resolution, with various authors using values of about 0.4 [6], 0.1 [28], “close to zero” [29], or unspecified values [12] as their criteria. These various criteria can be evaluated by examination of Fig. 3, which shows the relationship between σθ in Gaussian-distributed random phases, and the magnitude obtained by averaging unit-magnitude and random phase vectors.

Fig. 3.

The magnitude resulting from a sum of random phases with Gaussian phase distribution characterized by σθ, calculated for a range of values of σθ. Since the phase retrieval transfer function or PRTF measures the magnitude (at a particular spatial frequency) of the average of many iterates, this figure provides insight into the range of phase variations between the iterates.

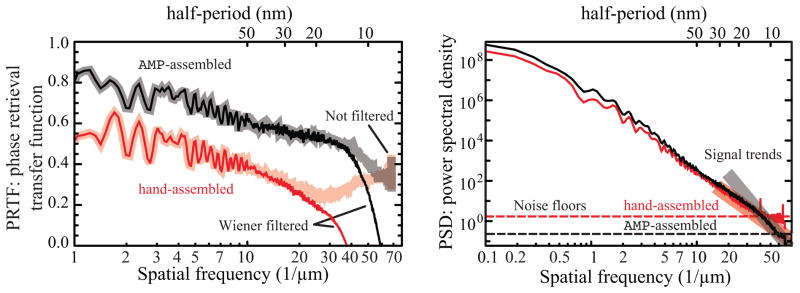

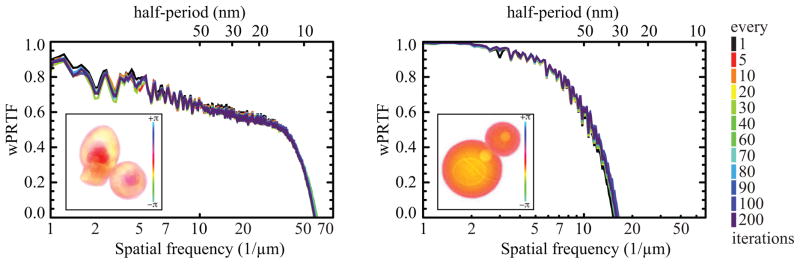

The PRTF can also be difficult to interpret. The shaded “Not filtered” curves shown at left in Fig. 4 show the PRTF for the reconstructions of both the hand-assembled (in red) and AMP-assembled (in black) Fourier plane intensities. The PRTF for the AMP-assembled data shows a steady decrease, with a “knee” at about 40 μm−1 corresponding to a real space half-period of 13 nm; this is consistent with the resolution estimated from examining real-space features [25]. The PRTF for the hand-assembled reconstruction is more difficult to interpret, in that it decreases steadily to a spatial frequency of about 25 μm, but it then increases. This pathological behavior can result from a too-small support constraint, or from errors in measurement of the Fourier plane intensity, or from noise which might place consistent but erroneous structure within the support constraint.

Fig. 4.

The use of a Wiener filter provides an improved measure of reconstructed image quality. This figure shows the phase retrieval transfer function (PRTF; shown in thick shaded lines at left) and power spectral density (PSD; right) curves corresponding to the reconstructions of Fig. 2. In the PSD curves, the square of the spatial-frequency-independent noise floor value |N|2 is shown by a dashed line, and a linear fit to the square of the high spatial frequency trend of the measured signal |C(f)|2 is shown with a thick shaded line. A Wiener filter function was then calculated according to Eqs. 11 and 12 and applied to the PRTF curve, leading to the Wiener-filtered PRTF curve or wPRTF curve which is shown in thin, non-shaded lines at left. Applying the Wiener-filter suppresses the artificially high PRTF-values of the hand-assembled reconstructed data (red) above a spatial frequency of about 25 μm−1 and underlines the higher quality of the reconstruction of the AMP-assembled data.

What is needed is a way to combine the PRTF’s measure of reconstruction consistency with a measure of data quality. We propose that a Wiener filter [30] provides such a measure. The Wiener filter is designed to optimally remove noise from a measured signal. It is applied in inverse space and suppresses the Fourier components of the measured signal that are dominated by noise. Here we will apply it to the PRTF to remove artifacts that are associated with noise in the reconstruction. If one can estimate the spatial frequency dependent trend S(f) of the true signal, and the trend N(f) in noise, the Wiener filter W (f) is formed from

| (11) |

so that it varies smoothly between 1 for signal dominated and 0 for noise dominated spatial frequencies. Since many noise sources (such as photon statistical noise, and thermal charge fluctuations in CCD detectors) are uncorrelated pixel-to-pixel, the power spectral density PSD of noise usually follows the form of the Fourier transform of a delta function: namely, a “flat” power spectrum consisting of a constant value at all spatial frequencies. The diffraction signal is much different; as was noted at the start of Sec. 2, it tends to decline as I ∝ f−m with m ≃ 3–4. We can therefore follow a straightforward procedure to generate a Wiener filter from the power spectral density of a measured diffraction pattern: If we assume the measured signal C(f) to consist of true signal S(f) plus frequency independent noise N, such that its power spectral density is given by

| (12) |

then the trend of |C(f)|2 can be found from a straight line fit in a log-log plot, while the square of the spatial frequency independent noise floor |N| can be found from where the power spectral density rolls off to a constant at high spatial frequencies. From these two quantities, we can extrapolate the square of the true signal as |S(f)|2 = |C(f)|2 − |N|2 and use this to determine the Wiener filter according to Eq. (11). This procedure is illustrated at right in Fig. 4; it has been used with success for image deconvolution [31] and phase contrast Fourier filtering [32] in lens-based x-ray microscopy.

Application of the Wiener filter to the phase retrieval transfer function [wPRTF(f) = W (f)PRTF(f)] provides an improved measure of the reconstructed image (here abbreviated as wPRTF). In Fig. 4, we show the wPRTF for the AMP-assembled and hand-assembled reconstructed images of Fig. 2. While the non-filtered PRTF of the hand-assembled data has a pathological increase at higher spatial frequencies, the wPRTF shows a sharp decrease. In addition, the wPRTF of the hand-assembled data is now below that of the AMP-assembled data, which is consistent with the improved visual impression of the reconstructed images in Fig. 2.

3.1. wPRTF and varied specimen exposures

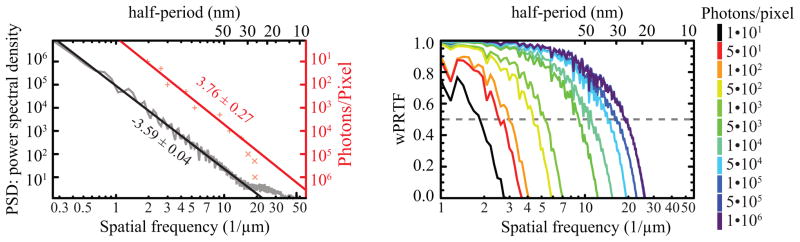

To make sure that Wiener-filtered PRTFs are a reliable tool to assess the quality of reconstructions of a wide variety of diffraction data, we reconstructed diffraction data from a simulated object at various different incident photons per pixel values. The simulated object was designed to approximate a pair of frozen-hydrated biological cells in a 5123 array with 15 nm pixel size, similar to simulated cells we have used in other computational studies [1]. The larger cell has an outer diameter of 2.1 μm while the smaller cell has an outer diameter of 1.27 μm. Together they are embedded in a 30 nm thin layer of ice. Both cells have a 45 nm thick double-layer cell membrane made from 50/50 protein and lipid, and are filled with a 10:1 ice and protein mixture. Several lipid balls of 60 nm diameter are distributed throughout the volume of both cells. Each cell also has a cell nucleus (assumed to be filled with chromatin) with a 15 nm thin single layer cell membrane made from the same composition as the outer cell membrane. Finally, each cell has a vacuole that is filled with ice and has a 15 nm thin lipid membrane. The values of the refractive index are calculated according to tabulated data of Henke et al. [33] assuming a stoichiometric composition of H48.6C32.9N8.9O8.9S0.3 and density of ρ = 1.35 g/cm3 for protein, H62.5C31.5O6.3 with ρ = 1.0 g/cm3 for lipid [34], and H49.95C24.64N8.66O15.57P1.07S0.03 and ρ = 1.527 g/cm3 for chromatin [35]. Assuming an x-ray energy within the “water window” [36,37] of 520 eV, an exit wave leaving the object was calculated using a multislice propagation process [24,38] and then propagated to the far-field. Diffraction patterns were simulated for 11 different exposures with photons per pixel values ranging from 101 to 106, with simulated Poisson noise included [1]. The power spectral density of the highest exposure diffraction pattern shown in the grey curve at left in Fig. 5 indicates that the simulated cell showed strong scattering out to a spatial frequency of about 13 μm−1. Each data set was reconstructed similar to what has been described above for the experimental data, except that averaging was applied to every 10th iterate starting at 2,000 iterations up until a total of 10,000 iterations had been run.

Fig. 5.

The Wiener-filtered phase retrieval transfer function (wPRTF) provides a good measure of reconstructed image quality over a wide range of photon exposures. Shown at right are a series of wPRTF curves for reconstructions of simulated data with several different photons per pixel values and simulated Poisson noise. The spatial frequency at which the wPRTF crosses the dashed 0.5 line is taken as effective resolution for each data set. On left, a power law fit to the power spectral density (PSD) of the data set with the highest photons per pixel value (black) is compared to a power law fit to the dose–resolution data (red) derived from the figure on the right. The magnitudes of both slopes agree within their error, indicating that the degree to which scattering decreases with spatial frequency in an object is equal to the degree at which reconstructed image resolution falls off with decreasing exposure [1].

Results from these simulations are shown at right in Fig. 5. As expected [1], lower exposures lead to poorer signal-to-noise values in the final reconstructions and thus poorer resolution. We took the spatial frequency at which the filtered PRTF curve falls below 0.5 as a measure of the effective resolution of the reconstruction at each photon exposure value. These values are plotted as red + marks at left in Fig. 5; plotting the values against the simulated photons per pixel values results in a power-law fit with an exponent of 3.68 ± 0.36 (after excluding resolution measures above the 13 μm−1 spatial frequency at which there was little signal present in the simulated object; these are shown as red × marks). Also shown in Fig. 5 is a fit to the power spectral density of the highest exposure diffraction pattern; this gave a slope of −3.59 ± 0.04. The magnitude of both exponents agree within error; this is as expected, since one needs signal at a spatial frequency to see structure over the corresponding length scale, so that achievable resolution should follow the same spatial frequency trend with exposure as the spatial frequency content of the object does [1]. The fact that the Wiener-filtered PRTF provides such a straightforward illustration of this result of this confirms the utility of the wPRTF measure.

4. Iterate averaging procedures

Iterate averaging provides a way to improve image reproducibility, and to measure the resolution via the Wiener-filtered phase retrieval transfer function (wPRTF). In this section we consider how many iterates should be averaged, and at what frequency the iterates should be sampled. Sufficient averaging supresses the contribution of poorly-phased Fourier scattering to the real-space image, since phases which are not reliably retrieved will add up incoherently. A lack of sufficient averaging will lead to artificially high PRTF values, since not enough potentially inconsistent phases will have been sampled. Averaging procedures can be implemented in Fourier or real space; here we consider the averaging of real space iterates.

How frequently should one sample particular iterates for averaging? To address this question, we reconstructed both the AMP-assembled experimental data, and the simulated data set described above, for 5,000 iterations of the difference map algorithm. We then ran the algorithm further, but averaged over a total of 100 iterates taken every ith iteration (i ∈ {1,5,10,20,30,40,60,70,80,90,100,200}) to obtain the final result. The resulting wPRTF curves shown in Fig. 6 are nearly identical for all different averaging intervals. This suggests that the frequency at which iterates are sampled is unimportant.

Fig. 6.

Illustration of the effect of choosing different iterate averaging frequencies. For both the AMP-assembled experimental data at left, and the simulated data set at right, the difference map algorithm was first run for 5,000 iterations. Next, 100 iterates were taken every ith iteration (i ∈ {1,5,10,20,30,40,60,70,80,90,100,200}) to obtain a final result. Wiener-filtered phase retrieval transfer function (wPRTF) curves are plotted for each of the iterate averaging frequencies. An example reconstructed image is shown as an inset. As judged by the wPRTF, all iterate sampling frequencies give essentially the same result.

How many iterates should be averaged? One would expect that the result would depend on the quality of the data, since data with systematic errors should show more fluctuations in the reconstructed phase. Rather than plot a series of individual wPRTF curves as in Fig. 6, in this case we decided to measure the RMS residual change in the wPRTF as one went from i to i + 1 averages:

| (13) |

where the sum extends over all N spatial frequencies up to the spatial frequency where the PSD rolls off to a steady noise floor for a given reconstruction. We calculated the RMS residual according to the above equation for the set of reconstructions with 12 different averaging frequencies that were already used for the analysis leading to Fig. 6. Since this analysis showed that the consistency in phase retrieval as measured by the w PRTF does not depend on the averaging frequency, we can assume only statistical differences between these reconstructions and calculate the mean of all RMS residuals and their standard deviation as a function of number of iterates averaged. Based on examination of the resulting average RMS residual on a linear-log plot, we then fitted the average RMS residual to a function of the form y(x) = axb + c in order to characterize the residual trend.

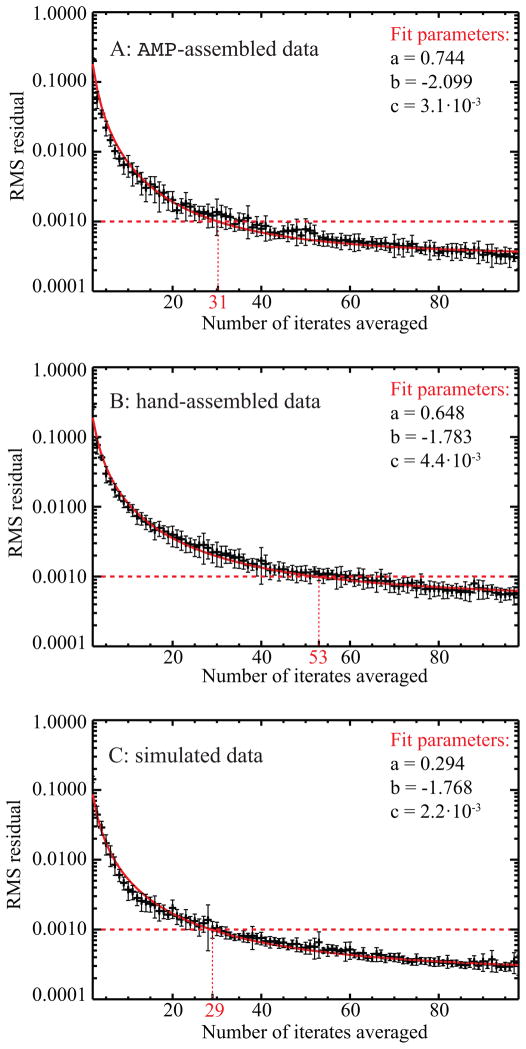

Figure 7 shows graphs of this analysis for reconstructions of A) the AMP-assembled data set, B) the hand-assembled data set, and C) the simulated data set. The calculated means of all 12 reconstructions with different iterate averaging frequencies are plotted as crosses with error bars indicating their standard deviation. The fitted function is plotted in red and its fit parameters are indicated for each respective graph. An arbitrary threshold of 0.001 RMS residual was chosen to define convergence of the wPRTF; it is marked in the graph by a horizontal dashed line. The number of iterates at which the fitted function falls below the threshold (i.e., the number of averages at which we declare the wPRTF to have converged) is indicated for each data set by a vertical dotted line. The reconstructions of both the AMP-assembled and the simulated data set converge after ≈ 30 averaged iterates, while the reconstruction of the hand-assembled data set converges only after about 50 averaged iterates. This result confirms that a AMP-assembly leads to data sets that have fewer systematic errors in the Fourier plane intensities. It also gives an estimate as to how many iterates need to be averaged for the PRTF to be a valid representation of the consistency in phase retrieval of a reconstruction.

Fig. 7.

Changes in the wPRTF as a function of number of iterates averaged for A) AMP-assembled experimental data, B) hand-assembled experimental data, and C) simulated data. The RMS residual changes in the wPRTF (calculated using Eq. (13)) as one goes from i to i + 1 iterates averaged were then fitted to a function of the form y(x) = axb + c, plotted in red. The error bars indicate the standard deviation between 12 different averaging frequencies. A horizontal dashed line marks a value of RMS residual of 0.001 selected to compare the results for the three different data sets. Not surprisingly, the simulated data with no systematic errors converges most quickly with only 29 iterates averaged, while the higher quality AMP-assembled experimental data requires 31 iterates averaged and the hand-assembled experimental data requires 53 iterates averaged.

5. Conclusion

We have developed an automated merging program, dubbed AMP, with a simple text file driven user interface that determines parameters relevant for the assembly directly from the raw data and thus speeds up the assembly process. This results in higher quality reconstructions compared to a standard hand assembly protocol, and it also aids 3D reconstructions where a large number of 2D diffraction projections need to be processed. We have looked in greater detail at the properties of the phase retrieval transfer function (PRTF), showing that the frequency of iterate averaging is not important and that averaging over 50 iterates should be sufficient for data with some degree of systematic error in the Fourier plane intensities. Finally, we propose that the PRTF be combined with a Wiener filter in a wPRTF for more reliable interpretation and estimation of the resolution of a reconstructed image. Taken together, these developments give us a more systematic understanding of the properties of finite support iterative phase retrieval of far-field diffraction data.

In summary, our studies indicate that in order to obtain high quality reconstructions one has to carefully assemble the raw data into a 2D diffraction pattern. In particular, one should perform weighted averaging and calculate a weighted normalization factor from commonly defined pixels. To evaluate both reconstruction consistency and maximum information transfer, one should use a Wiener-filtered PRTF, where the Wiener filter is determined from the PSD of the reconstructed image. This measurement will be valid if at least 50 iterates have been averaged to obtain the final result.

Acknowledgments

We wish to thank David Sayre, Malcolm Howells, Aaron Neiman and Janos Kirz for their continued contribution. We also wish to thank the Division of Materials Sciences and Engineering, Office of Basic Energy Sciences, at the Department of Energy for support of x-ray diffraction microscopy methods and instrumentation development under contract DE-FG02-07ER46128 and the National Institute for General Medical Services at the National Institutes for Health for support of the application of this method to biological imaging under contract 5R21EB6134.

A. Software implementation of the Automated Merging Program (AMP)

Algorithm 1.

Weighted normalization

| Require: SIZE(arrays) = SIZE(errors) = [n,nx,ny]{n arrays to be normalized with errors} |

| for i = 0 to n −1 do |

| for j = 0 to n −1; j ≠ i do |

| indicesi j ←WHERE((arrays[i,*,*] AND arrays[j,*,*]) > threshold) |

| end for |

| alli ← union of all indicesi* |

| stddev[i,*,*] ← errors[i,*,*] · arrays[i,*,*] |

| end for |

| arrays[k,*,*]; where allk has the most elements nall {Find reference array} |

| if nall > 100 then |

| for i = 0 to n −1; i ≠ k do |

| using indices allk, calculate σ using Eq. (5) and c for arrays[i] and arrays[k] using Eq. (7) |

| arrays[i] *= c |

| end for |

| else {normalizing in pairs instead} |

| ni j ← number of elements of indicesi j |

| p,q ← indices of maxval(ni j) |

| for l = 0 to n − 2 do |

| calculate total error σ from stddev[p] and stddev[q] using Eq. (5) |

| using indicespq, calculate c for arrays[p] and arrays[q] according to Eq. (7) |

| arrays[p] *= c |

| p̄,q̄ ← p,q |

| np̄q̄ ← 0 |

| p,q ← indices of maxval(np̄*, n*q̄) |

| end for |

| end if |

Algorithm 2.

Weighted averaging

| Require: SIZE(arrays) = SIZE(errors) = [n,nx,ny]{n arrays to be averaged with errors} |

| for i = 0 to n − 1 do |

| indices ← WHERE(arrays[i,*,*] > threshold) |

| stddev[i,*,*] ← errors[i,*,*] · arrays[i,*,*] {calculate average according to Eq. (8)} |

| numerator[indices] += arrays[i,indices]/stddev[i,indices]2 |

| enominator[indices] + = 1/stddev[i,indices]2 {calculate new errors according to Eq. (8)} |

| newerror[indices] += 1/stddev[i,indices]2 |

| end for |

| average ← numerator/denominator |

| newerror ← 1/(newerror ·average) |

Fig. 8.

Flowchart of AMP. The processes in red involve weighted normalization and weighted averaging as described in Sec. 2.2 and outlined in pseudocode in Algorithm 1 and Algorithm 2, respectively.

References and links

- 1.Huang X, Miao H, Steinbrener J, Nelson J, Shapiro D, Stewart A, Turner J, Jacobsen C. Signal-to-noise and radiation exposure considerations in conventional and diffraction x-ray microscopy. Opt Express. 2009;17:13541–13553. doi: 10.1364/oe.17.013541. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Howells M, Beetz T, Chapman H, Cui C, Holton J, Jacobsen C, Kirz J, Lima E, Marchesini S, Miao H, Sayre D, Shapiro D, Spence J, Starodub D. An assessment of the resolution limitation due to radiation-damage in x-ray diffraction microscopy. J Elec Spectroscopy and Related Phenom. 2009;170:4–12. doi: 10.1016/j.elspec.2008.10.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Sayre D. Some implications of a theorem due to Shannon. Acta Cryst. 1952;5:843. [Google Scholar]

- 4.Miao J, Charalambous P, Kirz J, Sayre D. An extension of the methods of x-ray crystallography to allow imaging of micron-size non-crystalline specimens. Nature (London) 1999;400:342–344. [Google Scholar]

- 5.Miao J, Hodgson K, Ishikawa T, Larabell C, LeGros M, Nishino Y. Imaging whole escherichia coli bacteria by using single-particle x-ray diffraction. Proc Nat Acad Sci. 2003;100:110–112. doi: 10.1073/pnas.232691299. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Shapiro D, Thibault P, Beetz T, Elser V, Howells M, Jacobsen C, Kirz J, Lima E, Miao H, Neiman AM, Sayre D. Biological imaging by soft x-ray diffraction microscopy. Proc Nat Acad Sci. 2005;102:15343–15346. doi: 10.1073/pnas.0503305102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Huang X, Nelson J, Kirz J, Lima E, Marchesini S, Miao H, Neiman A, Shapiro D, Steinbrener J, Stewart A, Turner J, Jacobsen C. Soft x-ray diffraction microscopy of a frozen hydrated yeast cell. Phys Rev Lett. 2009;103:198101. doi: 10.1103/PhysRevLett.103.198101. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Robinson I, Vartanyants I, Williams G, Pfeifer M, Pitney J. Reconstruction of the shapes of gold nanocrystals using coherent x-ray diffraction. Phys Rev Lett. 2001;87:195505. doi: 10.1103/PhysRevLett.87.195505. [DOI] [PubMed] [Google Scholar]

- 9.Chapman H, Barty A, Marchesini S, Noy A, Hau-Riege SP, Cui C, Howells M, Rosen R, He H, Spence J, Weierstall U, Beetz T, Jacobsen C, Shapiro D. High resolution ab initio three-dimensional x-ray diffraction microscopy. J Opt Soc Am A. 2006;23:1179–1200. doi: 10.1364/josaa.23.001179. [DOI] [PubMed] [Google Scholar]

- 10.Miao J, Chen CC, Song C, Nishino Y, Kohmura Y, Ishikawa T, Ramunno-Johnson D, Lee TK, Risbud SH. Three-dimensional GaN-Ga2O3 core shell structure revealed by x-ray diffraction microscopy. Phys Rev Lett. 2006;97:215503. doi: 10.1103/PhysRevLett.97.215503. [DOI] [PubMed] [Google Scholar]

- 11.Barty A, Marchesini S, Chapman HN, Cui C, Howells MR, Shapiro DA, Minor AM, Spence JCH, Weierstall U, Ilavsky J, Noy A, Hau-Riege SP, Artyukhin AB, Baumann T, Willey T, Stolken J, van Buuren T, Kinney JH. Three-dimensional coherent x-ray diffraction imaging of a ceramic nanofoam: Determination of structural deformation mechanisms. Phys Rev Lett. 2008;101:055501. doi: 10.1103/PhysRevLett.101.055501. [DOI] [PubMed] [Google Scholar]

- 12.Nishino Y, Takahashi Y, Imamoto N, Ishikawa T, Maeshima K. Three-dimensional visualization of a human chromosome using coherent x-ray diffraction. Phys Rev Lett. 2009;102:018101. doi: 10.1103/PhysRevLett.102.018101. [DOI] [PubMed] [Google Scholar]

- 13.Fienup J. Reconstruction of an object from the modulus of its Fourier transform. Opt Lett. 1978;3:27–29. doi: 10.1364/ol.3.000027. [DOI] [PubMed] [Google Scholar]

- 14.Fienup J. Phase retrieval algorithms: a comparison. Appl Opt. 1982;21:2758–2769. doi: 10.1364/AO.21.002758. [DOI] [PubMed] [Google Scholar]

- 15.Elser V. Phase retrieval by iterated projections. J Opt Soc Am A. 2003;20:40–55. doi: 10.1364/josaa.20.000040. [DOI] [PubMed] [Google Scholar]

- 16.Marchesini S, He H, Chapman H, Hau-Riege S, Noy A, Howells M, Weierstall U, Spence J. X-ray image reconstruction from a diffraction pattern alone. Phys Rev B. 2003;68:140101. [Google Scholar]

- 17.Nugent KA, Peele AG, Quiney HM, Chapman HN. Diffraction with wavefront curvature: a path to unique phase recovery. Acta Cryst A. 2005;61:373–381. doi: 10.1107/S010876730501055X. [DOI] [PubMed] [Google Scholar]

- 18.Williams GJ, Quiney HM, Dhal BB, Tran CQ, Nugent KA, Peele AG, Paterson D, de Jonge MD. Fresnel coherent diffractive imaging. Phys Rev Lett. 2006;97:025506. doi: 10.1103/PhysRevLett.97.025506. [DOI] [PubMed] [Google Scholar]

- 19.Rodenburg J, Bates R. The theory of super-resolution electron microscopy via wigner-distribution deconvolution. Phil Trans Roy Soc (London) 1992;339:521–553. [Google Scholar]

- 20.Rodenburg J, Hurst A, Cullis A, Dobson B, Pfeiffer F, Bunk O, David C, Jefimovs K, Johnson I. Hard-x-ray lensless imaging of extended objects. Phys Rev Lett. 2007;98:034801. doi: 10.1103/PhysRevLett.98.034801. [DOI] [PubMed] [Google Scholar]

- 21.Thibault P, Dierolf M, Menzel A, Bunk O, David C, Pfeiffer F. High-resolution scanning x-ray diffraction microscopy. Science. 2008;321:379–382. doi: 10.1126/science.1158573. [DOI] [PubMed] [Google Scholar]

- 22.Maiden AM, Rodenburg JM. An improved ptychographical phase retrieval algorithm for diffractive imaging. Ultramicroscopy. 2009;109:1256–1262. doi: 10.1016/j.ultramic.2009.05.012. [DOI] [PubMed] [Google Scholar]

- 23.Abbey B, Nugent K, Williams G, Clark J, Peele A, Pfeifer M, de Jonge M, McNulty I. Keyhole coherent diffractive imaging. Nature Physics. 2008;4:394–398. [Google Scholar]

- 24.Thibault P, Elser V, Jacobsen C, Shapiro D, Sayre D. Reconstruction of a yeast cell from x-ray diffraction data. Acta Cryst A. 2006;62:248–261. doi: 10.1107/S0108767306016515. [DOI] [PubMed] [Google Scholar]

- 25.Nelson J, Huang X, Steinbrener J, Shapiro D, Kirz J, Marchesini S, Neiman AM, Turner JJ, Jacobsen C. High-resolution x-ray diffraction microscopy of specifically labeled yeast cells. Proc Nat Acad Sci. 2010;107:7235–7239. doi: 10.1073/pnas.0910874107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Miao J, Ishikawa T, Johnson B, Anderson E, Lai B, Hodgson K. High resolution 3D x-ray diffraction microscopy. Phys Rev Lett. 2002;89:088303. doi: 10.1103/PhysRevLett.89.088303. [DOI] [PubMed] [Google Scholar]

- 27.Chen CC, Miao J, Wang CW, Lee TK. Application of optimization technique to noncrystalline x-ray diffraction microscopy: Guided hybrid input-output method. Phys Rev B. 2007;76:064113. [Google Scholar]

- 28.Schroer CG, Boye R, Feldkamp JM, Patommel J, Schropp A, Schwab A, Stephan S, Burghammer M, Schoeder S, Riekel C. Coherent x-ray diffraction imaging with nanofocused illumination. Phys Rev Lett. 2008;101:090801. doi: 10.1103/PhysRevLett.101.090801. [DOI] [PubMed] [Google Scholar]

- 29.Giewekemeyer K, Thibault P, Kalbfleisch S, Beerlink A, Kewish CM, Dierolf M, Pfeiffer F, Salditt T. Quantitative biological imaging by ptychographic x-ray diffraction microscopy. Proc Nat Acad Sci. 2010;107:529–534. doi: 10.1073/pnas.0905846107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Wiener N. Extrapolation, Interpolation, and Smoothing of Stationary Time Series. The MIT Press; 1964. [Google Scholar]

- 31.Jacobsen C, Williams S, Anderson E, Browne MT, Buckley CJ, Kern D, Kirz J, Rivers M, Zhang X. Diffraction-limited imaging in a scanning transmission x-ray microscope. Opt Commun. 1991;86:351–364. [Google Scholar]

- 32.Hornberger B, Feser M, Jacobsen C. Quantitative amplitude and phase contrast imaging in a scanning transmission X-ray microscope. Ultramicroscopy. 2007;107:644–655. doi: 10.1016/j.ultramic.2006.12.006. [DOI] [PubMed] [Google Scholar]

- 33.Henke BL, Gullikson EM, Davis JC. X-ray interactions: Photoabsorption, scattering, transmission, and reflection at E=50–30,000 eV, Z=1–92. Atomic Data and Nuclear Data Tables. 1993;54:181–342. [Google Scholar]

- 34.London RA, Rosen MD, Trebes JE. Wavelength choice for soft x-ray laser holography of biological samples. Appl Opt. 1989;28:3397–3404. doi: 10.1364/AO.28.003397. [DOI] [PubMed] [Google Scholar]

- 35.Koch MHJ, Vega MC, Sayers Z, Michon AM. The superstructure of chromatin and its condensation mechanism. Eur Biophys J. 1987;14:307–319. doi: 10.1007/BF00254896. [DOI] [PubMed] [Google Scholar]

- 36.Wolter H. Spiegelsysteme streifenden Einfalls als abbildende Optiken für Röntgenstrahlen. Annalen d Physik. 1952;10(286):94–114. [Google Scholar]

- 37.Sayre D, Kirz J, Feder R, Kim DM, Spiller E. Transmission microscopy of unmodified biological materials: Comparative radiation dosages with electrons and ultrasoft x-ray photons. Ultramicroscopy. 1977;2:337–341. doi: 10.1016/s0304-3991(76)91997-5. [DOI] [PubMed] [Google Scholar]

- 38.Cowley JM, Moodie AF. Fourier images. I. The point source. Proc Phys Soc B. 1957;70:486–496. [Google Scholar]