Abstract

Efron, Hastie, Johnstone and Tibshirani (2004) proposed Least Angle Regression (LAR), a solution path algorithm for least squares regression. They pointed out that a slight modification of the LAR gives the LASSO (Tibshirani, 1996) solution path. However, it is largely unknown how to extend this solution path algorithm to models beyond least squares regression. In this work, we propose an extension of the LAR for generalized linear models and the quasi-likelihood model by showing that the corresponding solution path is piecewise given by solutions of ordinary differential equation systems. Our contribution is twofold. First, we provide a theoretical understanding of how the corresponding solution path propagates. Second, we propose an ordinary differential equation based algorithm to obtain the whole solution path.

Keywords: generalized linear model, LARS, LASSO, ordinary differential equation, solution path algorithm, QuasiLARS, quasi-likelihood model

1 Introduction

Recently we have seen exploding growth of research in variable selection popularized by Tibshirani (1996), which uses the L1 penalty to regularize least squares regression. Following this line of research, many other techniques have been proposed. They include the SCAD (Fan and Li, 2001), the LARS (Efron et al., 2004), the elastic net (Zou and Hastie, 2005), the Dantzig selector (Candes and Tao, 2007), the adaptive LASSO (Zou, 2006; Zhang and Lu, 2007), and their related methods.

Computationally, the LASSO, elastic net, and adaptive LASSO can all be solved by any quadratic programming (QP) solver. The Dantzig selector involves a linear programming problem. The SCAD penalty leads to a non-convex optimization problem, for which Fan and Li (2001) proposed a local quadratic approximation (LQA) algorithm and Zou and Li (2008) proposed a local linear approximation (LLA) algorithm. They are two instances of the MM algorithm (Hunter and Li, 2005) and each step of the LQA or LLA involves a QP problem. All these algorithms share one characteristic in common: they solve the corresponding optimization for one regularization parameter at a time.

Efron et al. (2004) proposed the Least Angle Regression (LAR) algorithm and illustrated its close connection to the LASSO and Forward Stagewise linear regression. Together these algorithms are called LARS. With a slight modification, their algorithm provides the whole exact solution path for the LASSO. The LARS solution paths are piecewise linear. Another algorithm for the LASSO is the homotopy algorithm of Osborne, Presnell and Turlach (2000). Rosset and Zhu (2007) derived a general characterization of the loss-penalty pairs that lead to piecewise linear solution paths.

Note that the piecewise quadratic condition of Rosset and Zhu (2007) is not satisfied by generalized linear models (GLMs). The corresponding L1 regularized solution path is not piecewise linear, as demonstrated by Figure 2. To our limited knowledge, it is largely unknown how to extend the LARS to GLMs, and more generally to the quasi-likelihood model (QLM), to get an exact solution path. Yet some approximate solution path algorithms are available. Madigan and Ridgeway (2004) discussed one possible extension to GLMs. Rosset (2004) suggested a general second-order path-following algorithm to track the curved regularized optimization solution path. The algorithm of Park and Hastie (2007) is based on the predictor-corrector method of convex optimization. To control the overall accuracy, Park and Hastie (2007) pointed out that it is critical to select the step length of the regularization parameter, for which strategies are proposed. These two papers try to approximate the whole regularization solution path by providing a series of solution sets at different regularization parameters, but different strategies are proposed to select the set of regularization parameters to control the approximation error. Yuan and Zou (2009) proposed an efficient global approach to approximate nonlinear L1 regularization solution paths. Their method is based on the approximation of a general loss function by quadratic splines. In this way, the global loss approximation error can be controlled and a generalized LARS-type algorithm is devised to compute the corresponding exact solution path for the approximate quadratic spline loss. This path approximates the original nonlinear regularization solution path, and theory is provided to show that the path approximation error is controlled by the global loss approximation error. On one hand, increasing the number of knots in the quadratic spline approximation makes the approximate solution path more accurate. On the other hand, it increases the number of pieces in the corresponding piecewise linear solution path and therefore the computational cost as well (Section 4 of Yuan and Zou, 2009). They further commented that “If the user wants to get the exact solution path from the EGA solution, then it seems not worthy to use a large number of knots.”

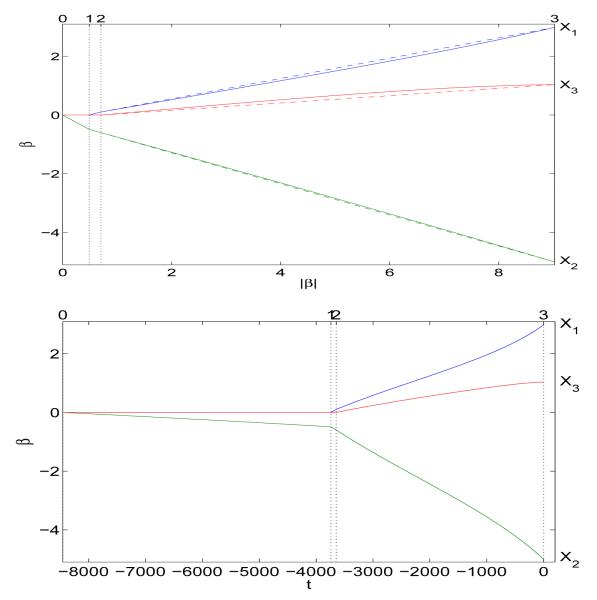

Figure 2.

Poisson QuasiLARS solution path of the toy example: the top panel gives the solution path of the Poisson QuasiLARS with respect to the one norm of β(t); the bottom panel plots the same path with respect to t.

The need for an exact solution path calls for another algorithm. This is exactly the goal of the current paper. We extend the LAR to the QLM and name our extension QuasiLAR. Piecewise, our QuasiLAR solution path is given by solutions of ordinary differential equation (ODE) systems. We also discuss how QuasiLAR can be modified to get the whole solution path of the LASSO regularized quasi-likelihood, and this modified algorithm is called QuasiLASSO. Putting them together, we name our new algorithm QuasiLARS. The QuasiLARS differs from the existing algorithms mentioned in the previous paragraph in that they all provide approximate solution paths. Our contribution is twofold. On one hand, the current paper helps us to understand the corresponding optimization problem better by answering the question of how the general LASSO regularized solution path changes as the regularization parameter varies. On the other hand, we present an ODE based solution path algorithm, which provides a potential way to evaluate how well the existing solution path algorithms approximate the true solution path. Other papers on solution path algorithms include Zhu, Rosset, Hastie and Tibshirani (2004), Hastie, Rosset, Tibshirani and Zhu (2004), Wang and Shen (2006), Yuan and Lin (2007), Li, Liu and Zhu (2007), Wang and Zhu (2007), Wang, Shen and Liu (2008), Li and Zhu (2008), Zou (2008), Rocha, Zhao and Yu (2008), Wu, Shen and Geyer (2009), and references therein. In particular, Friedman, Hastie and Tibshirani (2010) focused on GLMs as well. They proposed a coordinate descent algorithm, which works for a fixed regularization parameter. They get a solution path by obtaining and connecting solutions at a pre-specified (potentially dense) grid of the regularization parameter.

The rest of the article is organized as follows. Section 2 details the LARS and motivates the QuasiLARS. In Section 3, we present the QuasiLARS. Details for a key step are discussed in Section 4. Section 5 gives some properties of the QuasiLARS path. Numerical examples in Section 6 illustrate how our QuasiLARS works. We conclude with Section 7. All technical proofs are collected in the supplementary online material.

2 LARS

Before delving into details, let us see how the LAR works. To facilitate our later discussion, let us consider a general regression with a univariate response Y and predictor vector X = (X1, ⋯ , Xp)T, where p denotes the number of predictor variables. The QLM assumes that μ(x) ≜ E(Y|X = x) = g−1(η(x)) with η(x) = β0 + xTβ, and Var(Y|X = x) = V (μ(x)) for some known monotonic link function g(·) and positive variance function V (·). Define the quasi-likelihood $Q(\mu, y) = \int_y^{\mu} \frac{y - s}{V(s)}\,ds$ and denote our observed data set by {(xi, yi) : i = 1, ⋯ , n} with xi = (xi1, ⋯ , xip)T, and n being the sample size. Predictors have been standardized such that $\sum_{i=1}^n x_{ij} = 0$ and $\sum_{i=1}^n x_{ij}^2 = 1$, j = 1, ⋯, p. The QLM estimates β0 and β by solving

$$\max_{\beta_0,\,\beta} \; R(\beta, \beta_0) \triangleq \sum_{i=1}^n Q\big(g^{-1}(\beta_0 + x_i^T \beta),\, y_i\big). \tag{1}$$

The QLM includes GLMs as special cases by choosing g(·) and V(·) appropriately.

The ordinary least squares (OLS) regression is a special case with g(μ) = μ and V(μ) = σ2. In this case, by demeaning the response if necessary to ensure $\sum_{i=1}^n y_i = 0$, (1) reduces to

$$\min_{\beta} \; \sum_{i=1}^n \big(y_i - x_i^T \beta\big)^2. \tag{2}$$

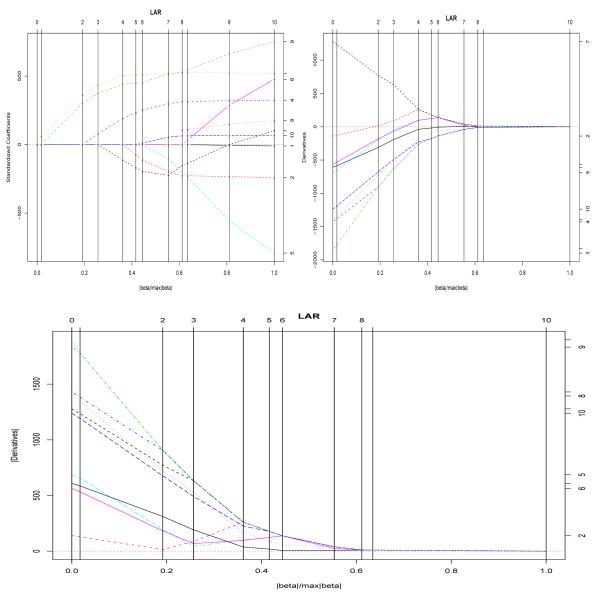

For OLS (2), the LAR provides a solution path β(t) indexed by t ∈ [0, ∞), with β(0) = 0. For large enough t, β(t) is the same as the full OLS solution to (2). The solution path in between is piecewise linear. Over each piece, it moves along the direction that keeps the correlation between the current residuals and each active predictor equal in absolute value. Define current residuals ei(β(t)) = yi − xiTβ(t) for i = 1, ⋯ , n. In terms of the current residual vector e(β(t)) = (e1(β(t)), ⋯ , en(β(t)))T and the jth predictor vector x(j) = (x1j, ⋯ , xnj)T, the current correlation e(β(t))T x(j) has the same absolute value for each active predictor j. Note that the first-order partial derivative of the objective function in (2) with respect to βj is proportional to e(β(t))T x(j). This implies that the absolute values of the objective function’s first-order derivatives are equal for each active predictor variable along the LAR solution path, that is, for any j and j’ in the active predictor set at t. In this paper we will take advantage of this observation and extend LARS to the more general QLM. For the diabetes data in the R package LARS, we plot the LAR solution path in the top left panel of Figure 1. The derivatives along the LAR solution path are shown in the top right panel of Figure 1. The derivatives in absolute value are given in the bottom panel of Figure 1. It clearly shows that, at the end of each LAR step, a new predictor variable joins the group of active predictor variables that share the honor of having the same largest absolute value of the first-order derivatives. The LAR algorithm terminates at the full OLS estimate of (2) when all the first-order partial derivatives are exactly zero.

Figure 1.

LAR solution path of the diabetes data: the top left panel gives the solution path of the LAR; the top right panel and the bottom panel plot the derivatives of (2) and their absolute values, respectively, along the LAR solution path.

3 QuasiLARS: extension of LARS

Note first that in general β0 of the QLM cannot be removed from equation (1) by location and scale transformations as in the least squares regression. However for any β, the quasi-likelihood function (1) is concave in β0. Thus we can define the marginal maximizer of β0 as a function of β. Namely for any β, define

$$\beta_0(\beta) = \arg\max_{\beta_0} \; R(\beta, \beta_0). \tag{3}$$

We denote and R(β) = R(β, β0(β)).

Based on the above observation that the LAR produces a solution path along which the objective function’s first-order partial derivatives have the same absolute value for each active predictor variable, our extension QuasiLAR seeks a solution path β(t) such that ∣∂R(β(t))/∂βj∣ = ∣∂R(β(t))/∂βj’∣ for j and j’ that are active at t. More explicitly, at any t, the solution should move in a special direction dβ(t)/dt, which is chosen in a way to ensure that the first-order partial derivatives have the same absolute value for each active predictor variable j.

For R(β), denote its vector of first-order partial derivatives by b(β) = (b1(β), ⋯ , bp(β))T and matrix of second-order partial derivatives by M(β) = (mjk(β))1≤j,k≤p, where bj(β) = ∂R(β)/∂βj and mjk(β) = ∂²R(β)/∂βj∂βk for 1 ≤ j, k ≤ p.

As in LAR, we use t to index our QuasiLAR solution path β(t). Denote the active index set at t by and also by . We will use and interchangeably.

Note that b(β(t + dt)) ≈ b(β(t)) + M(β(t)) {β(t + dt) − β(t)} for small dt > 0 by Taylor expansion. Thus, for the absolute values of the first-order partial derivatives with respect to all active predictor variables to decrease while remaining equal, our solution path updating direction β(t + dt) − β(t) should be such that bj(β(t + dt)) − bj(β(t)) has the opposite sign of bj(β(t)) and has the same absolute value for each active predictor j. Here the first requirement guarantees that the first-order partial derivatives of active predictor variables are decreasing in absolute value, and the second requirement ensures that they decrease at the same speed. This motivates our definition of an appropriate solution path updating direction. The above discussion can be made rigorous by using differential operators when dt > 0 is infinitesimal, as presented next.

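At any t with solution β(t), denote the solution path updating direction by a(β(t)) = dβ(t)/dt. For any inactive variable j, we keep βj(t) = 0 and do not change it; thus aj(β(t)) = 0 for every inactive j. Consequently we only care about aj(β(t)) for active predictors j. For any two index sets $\mathcal{A}$ and $\mathcal{B}$, vector a, and matrix M, denote by $a_{\mathcal{A}}$ the sub-vector of a consisting of those elements with index in $\mathcal{A}$ and by $M_{\mathcal{A},\mathcal{B}}$ the sub-matrix of M consisting of those elements with row index in $\mathcal{A}$ and column index in $\mathcal{B}$. When $\mathcal{A} = \{j\}$ or $\mathcal{B} = \{k\}$ is a singleton, we also write $M_{j,\mathcal{B}}$ and $M_{\mathcal{A},k}$, which are essentially a row vector and a column vector, respectively. Denote the complement of $\mathcal{A}$ by $\mathcal{A}^c$. With these notations, and with $\mathcal{A}$ denoting the active set at t, our solution path updating direction for active predictor variables should satisfy

$$M_{\mathcal{A},\mathcal{A}}(\beta(t))\, a_{\mathcal{A}}(\beta(t)) = -\operatorname{sign}\big(b_{\mathcal{A}}(\beta(t))\big), \tag{4}$$

where sign(·) is applied componentwise. The argument is based on the previous paragraph with infinitesimal dt. Thus our solution path should be updated using $d\beta_{\mathcal{A}}(t)/dt = a_{\mathcal{A}}(\beta(t))$ and $d\beta_{\mathcal{A}^c}(t)/dt = 0$ with

$$a_{\mathcal{A}}(\beta(t)) = -\big(M_{\mathcal{A},\mathcal{A}}(\beta(t))\big)^{-1} \operatorname{sign}\big(b_{\mathcal{A}}(\beta(t))\big) \tag{5}$$

being the solution of (4), where the invertibility of $M_{\mathcal{A},\mathcal{A}}(\beta(t))$ is not an issue as long as the quasi-likelihood is well defined. Here we use 0 to denote a column vector of zeros with length depending on the context. Note further that this updating scheme implies that d∣bj(β(t))∣/dt = −1 for each active j because $db_{\mathcal{A}}(\beta(t))/dt = M_{\mathcal{A},\mathcal{A}}(\beta(t))\, a_{\mathcal{A}}(\beta(t)) = -\operatorname{sign}(b_{\mathcal{A}}(\beta(t)))$.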
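The updating direction can be illustrated numerically. The following is a minimal sketch, assuming the unit-speed reading of the requirement above, namely that the active block of the direction solves M_AA a_A = −sign(b_A) so that each active derivative shrinks in absolute value at rate one. The function name `path_direction` and the toy numbers are hypothetical and chosen only for illustration.

```python
import numpy as np

def path_direction(b, M, active):
    """Direction a with a[active] = -M_AA^{-1} sign(b_A) and zeros elsewhere."""
    a = np.zeros_like(b, dtype=float)
    idx = np.asarray(active)
    M_AA = M[np.ix_(idx, idx)]          # active-by-active block of the Hessian
    a[idx] = -np.linalg.solve(M_AA, np.sign(b[idx]))
    return a

# Toy concave objective: a negative definite Hessian (hypothetical numbers).
M = -np.array([[2.0, 0.5, 0.1],
               [0.5, 1.0, 0.2],
               [0.1, 0.2, 3.0]])
b = np.array([1.5, -1.5, 0.4])          # predictors 0 and 1 are tied and active
a = path_direction(b, M, active=[0, 1])
db = M @ a                              # first-order change of b per unit t
```

In this toy case `db[0]` and `db[1]` have opposite signs to `b[0]` and `b[1]` and equal magnitude, so the two active derivatives stay tied while shrinking, whereas the inactive coefficient does not move.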

In integration format, they become

| (6) |

| (7) |

where is given by (5). Note that we consider small dt > 0 in all the above discussion and assume that between t and t + dt the active index has not changed. Consequently, beginning at t we may keep updating the solution path using (6) until the active set changes at some t’ > t. This happens when another predictor variable joins the active set to share the honor of having the largest absolute value of the first-order partial derivatives, that is, ∣bj’(β(t’))∣ = ∣bj(β(t’))∣ for any active predictor . At this point, we update the active set by setting .

Now we present our extension QuasiLAR for the QLM. We initialize our solution path by identifying the predictor variable j so that the objective function R(β) changes fastest with respect to βj beginning at β = 0. We first set t0 = −maxj=1,⋯,p ∣bj(0)∣. It will be clear later why we choose t0 in this way. Our solution path begins with β(t0) = 0 and β0(t0) = β0(β(t0)) defined in terms of (3). The initial active predictor set consists of the index j attaining this maximum.

With t0, β(t0), and , we update our solution path using (6) until a new variable joins the active set at some t1(> t0) to be determined. That means the solution for any t > t0 may be temporarily updated by and . Here is a temporary solution path defined for any t > t0. For any , define , where . Then t1 is given by and we call t1 a transition point in that the set of active predictors changes at t = t1.

Then our QuasiLAR algorithm updates by setting , , and β0(t) = β0(β(t)) for all t ∈ [t0, t1]. The active predictor set stays the same for t ∈ [t0, t1), namely . At t1, we update the active predictor set by setting . At t = t1, the number of active predictors is two. Due to (5), and the definitions of , Tj and t1, β(t1) satisfies , where . Note further that (7) and the definition of t0 ensure that for any .

Our QuasiLAR algorithm continues with t1, β(t1), and . The full algorithm is given by Algorithm 1. Note that at the end of the mth QuasiLAR step, tm, β(tm), and satisfy for any and , for any .

Algorithm 1. QuasiLAR for the QLM.

Step 1: Initialize by setting t0 = −maxj=1,⋯,p ∣bj(0)∣, β(t0) = 0, β0(t0) = β0(β(t0)) as defined in (3), and the initial active set to the index j attaining this maximum.

Step 2: For m = 0, ⋯ , p −2, define a tentative solution path using

for t ≥ tm. Define a new transition point , where . Update solution path by setting , , and β0(t) = β0(β(t)) for t ∈ [tm, tm+1]. for t ∈ [tm, tm+1) and .

Step 3: At the end of Step 2, the active set should be exactly {1, ⋯ , p}. Next we update the solution path by the same updating rule, now with all predictors active, for t between tp−1 and tp = 0.

Note that at the end of the (p − 1)th QuasiLAR step in Step 2 of Algorithm 1, all predictors are active. Then, in Step 3, the QuasiLAR path moves along a direction such that the absolute values of the first-order partial derivatives decrease at the same speed until all the first-order partial derivatives are exactly zero, which happens at t = 0. The solution at t = 0 corresponds exactly to the full solution of the QLM obtained by solving (1), just as the LAR ends at the full OLS estimate. This completes our QuasiLAR algorithm.

Remark 1

Note that the QuasiLAR instantaneous path updating direction is given by . For least squares regression, the objective function is exactly quadratic and thus depends only on the active predictor set , but not on the current solution values . Note that does not change in a small neighborhood of t. This implies that, within a small neighborhood of t, the instantaneous path updating direction is the same for least squares regression. This leads to the piecewise linear solution path of the LAR and Rosset and Zhu (2007) in general.

3.1 Quasi-LASSO modification

Efron et al. (2004) discovered that the LASSO solution path can be obtained by a slight modification of the LAR. Next we make a parallel extension by showing that the QuasiLAR can be modified to get the whole LASSO regularized quasi-likelihood solution path.

Now consider the LASSO regularized quasi-likelihood in two different formats

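$$\min_{\beta} \; -R(\beta, \beta_0(\beta)) + \lambda \lVert \beta \rVert_1 \tag{8}$$

$$\min_{\beta} \; -R(\beta, \beta_0(\beta)) \quad \text{subject to} \quad \lVert \beta \rVert_1 \le s, \tag{9}$$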

which are equivalent with one-to-one correspondence between λ ≥ 0 and s ≥ 0.

Let β̂ be a LASSO solution to (8). Then we can show that the sign of any nonzero component β̂j must agree with the sign of the current first-order partial derivative bj(β̂). This is stated as Lemma 2 in Section 5.

Suppose t = t* at the end of a QuasiLAR step with a new active set . At the next QuasiLAR step with t ∈ [t*, T] for some T to be determined, the QuasiLAR solution path moves along the following tentative solution path

| (10) |

for t ≥ t*. Denote for . Then the end point T is given by ,

However may have changed sign at some point between t* and T for some , in which case the sign restriction in Lemma 2 must have been violated. We define for , where is the jth component of defined by (10). If defined by (10) cannot be a LASSO quasi-likelihood solution since the sign restriction in Lemma 2 has already been violated. The following Quasi-LASSO modification can be applied to ensure that we can get the LASSO regularized quasi-likelihood solution path.

Quasi-LASSO modification

If S < T, stop the ongoing QuasiLAR step at S and remove from the active set the predictor whose coefficient would change sign at S. At the new transition point S, the new path updating direction is calculated based on the new active predictor set.

The following theorem guarantees that the Quasi-LASSO modification leads to the LASSO regularized quasi-likelihood solution path. We call the modified algorithm QuasiLASSO and use QuasiLARS to refer to both QuasiLAR and QuasiLASSO.

Note that at each transition point of our QuasiLARS solution path, two kinds of event can happen: either an inactive predictor joins the active predictor set or an active predictor is removed from the active predictor set. As in Efron et al. (2004), we assume that a “one at a time” condition holds. With the “one at a time” condition, at each transition point t*, only one single event can happen, namely either one inactive predictor variable becomes active or one currently active predictor variable becomes inactive.

Theorem 1

With the Quasi-LASSO modification, and assuming the “one at a time” condition, the QuasiLARS algorithm yields the LASSO quasi-likelihood solution path.

Remark 2

Here we make the “one at a time” assumption. However, even when the “one at a time” condition does not hold, a QuasiLASSO solution path is still available. The same discussion in Efron et al. (2004) applies. For practical applications, some slight jittering may simply be applied, if necessary, to ensure the “one at a time” condition.

3.2 Updating via ODE

Our solution path algorithm QuasiLARS involves an essential piecewise updating step, beginning at a transition point t* with solution β(t*) and a given active predictor set. This piecewise updating can be achieved by setting βj(t) = 0 for every inactive j and t > t*, and solving the ODE system for the active coefficients defined by the path updating direction (5), with the initial value given by β(t*). This is a standard initial-value ODE system, for which many efficient solvers are available. We have implemented our QuasiLARS using the Matlab ODE solver “ode45.”
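To make the piecewise ODE updating concrete, the sketch below traces a QuasiLAR-type path for a Poisson model with log link. It is an illustration under stated assumptions rather than the implementation used for the results in this paper: it swaps the adaptive Matlab solver for a fixed-step classical Runge-Kutta scheme, locates transition points by bisection on the step length, uses the unit-speed direction −M_AA⁻¹ sign(b_A), and profiles out the intercept in closed form. All function names and the simulated data are hypothetical.

```python
import numpy as np

def grad_hess(beta, X, y):
    """Gradient b and Hessian M of the Poisson profile log-likelihood R(beta)."""
    eta = X @ beta
    w = np.exp(eta - eta.max())
    w /= w.sum()                               # weights proportional to exp(x_i' beta)
    xbar = X.T @ w
    b = X.T @ y - y.sum() * xbar
    M = -y.sum() * ((X.T * w) @ X - np.outer(xbar, xbar))
    return b, M

def rk4_step(beta, A, signs, h, X, y):
    """One classical Runge-Kutta step of d beta_A/dt = -M_AA^{-1} signs."""
    def f(bt):
        _, M = grad_hess(bt, X, y)
        d = np.zeros_like(bt)
        d[A] = -np.linalg.solve(M[np.ix_(A, A)], signs)
        return d
    k1 = f(beta)
    k2 = f(beta + 0.5 * h * k1)
    k3 = f(beta + 0.5 * h * k2)
    k4 = f(beta + h * k3)
    return beta + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6.0

def quasilar_poisson(X, y, n_steps=2000):
    """Return (t, beta, active set) at the start, each transition point, and t = 0."""
    p = X.shape[1]
    beta = np.zeros(p)
    b, _ = grad_hess(beta, X, y)
    t = -np.abs(b).max()                       # t0 = -max_j |b_j(0)|
    h = -t / n_steps
    A = [int(np.argmax(np.abs(b)))]
    signs = np.sign(b[A])                      # held fixed along each piece
    knots = [(t, beta.copy(), list(A))]

    def excess(s):
        # Largest inactive |b_j| minus the active level -(t + s) after a step s.
        inactive = [j for j in range(p) if j not in A]
        if not inactive:
            return -np.inf, None
        bs, _ = grad_hess(rk4_step(beta, A, signs, s, X, y), X, y)
        j = max(inactive, key=lambda k: abs(bs[k]))
        return abs(bs[j]) + t + s, j

    while t < 0:
        s = min(h, -t)
        val, j = excess(s)
        if val >= 0:                           # an inactive predictor catches up
            lo, hi = 0.0, s
            for _ in range(60):                # bisection on the step length
                mid = 0.5 * (lo + hi)
                if excess(mid)[0] >= 0:
                    hi = mid
                else:
                    lo = mid
            s = hi
            j = excess(s)[1]
        beta = rk4_step(beta, A, signs, s, X, y)
        t += s
        if val >= 0:                           # record the transition point
            A.append(j)
            b, _ = grad_hess(beta, X, y)
            signs = np.sign(b[A])
            knots.append((t, beta.copy(), list(A)))
    knots.append((t, beta.copy(), list(A)))
    return knots

# Simulated data (hypothetical): standardized predictors, Poisson counts.
rng = np.random.default_rng(0)
Z = rng.normal(size=(40, 3))
y = rng.poisson(np.exp(1.0 + 0.5 * Z[:, 0] - 0.4 * Z[:, 1] + 0.3 * Z[:, 2])).astype(float)
X = Z - Z.mean(axis=0)
X /= np.sqrt((X ** 2).sum(axis=0))
knots = quasilar_poisson(X, y, n_steps=500)
```

Holding the sign vector fixed along each piece is a design choice made for numerical robustness near t = 0, where the active derivatives vanish; an adaptive solver with event detection could replace the bisection step.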

4 Details for deriving the path updating direction

Note that the path updating direction defined by (5) asks for and . By the chain rule, we are required to have the implicit partial derivatives and . Next we show how to obtain them.

According to its definition (3), β0(β) satisfies , where . Now treat β0 as a function of β and take derivative of each term with respect to βj. We should get , where . Thus by solving for , we get , where for i = 1, ⋯, n. To get the second-order partial derivatives , we may apply another layer of differential operator .
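The implicit differentiation can be written out as follows. This is a sketch assuming the standard quasi-likelihood, for which ∂Q(μ, y)/∂μ = (y − μ)/V(μ); the shorthand $h_i$ and $\eta_i$ is introduced here for illustration only and need not match the notation of the original derivation.

$$
\begin{aligned}
&\text{First-order condition for } \beta_0(\beta): \quad \sum_{i=1}^n h_i(\eta_i) = 0, \qquad \eta_i = \beta_0(\beta) + x_i^T\beta, \qquad h_i(\eta) = \frac{y_i - g^{-1}(\eta)}{V\big(g^{-1}(\eta)\big)\, g'\big(g^{-1}(\eta)\big)}, \\
&\text{Differentiating in } \beta_j: \quad \sum_{i=1}^n h_i'(\eta_i)\left(\frac{\partial \beta_0(\beta)}{\partial \beta_j} + x_{ij}\right) = 0
\quad\Longrightarrow\quad
\frac{\partial \beta_0(\beta)}{\partial \beta_j} = -\,\frac{\sum_{i=1}^n h_i'(\eta_i)\, x_{ij}}{\sum_{i=1}^n h_i'(\eta_i)}.
\end{aligned}
$$

For a canonical link, V(μ)g′(μ) = 1, so $h_i(\eta) = y_i - g^{-1}(\eta)$ and $h_i'(\eta_i) = -V(\mu_i)$ with $\mu_i = g^{-1}(\eta_i)$; the derivative then reduces to a weighted average, $\partial\beta_0/\partial\beta_j = -\sum_i V(\mu_i)\, x_{ij} / \sum_i V(\mu_i)$.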

For some particular generalized linear models, it may be much simpler to get those partial derivatives as shown in the following subsections.

4.1 Binomial

For the Binomial distribution, the data set is given by {(xi, yi) : i = 1, ⋯, n} with yi ∈ {0, 1}. With the canonical logit link η(x) = log(μ(x)/(1−μ(x))), the corresponding loglikelihood function is given by . Then for any β, the corresponding optimal β0(β) is given by the solution of which is equivalent to . We next differentiate both sides with respect to βj and solve for to get . We may take another layer of differentiation to get second-order partial derivatives.
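For the logit link, the first-order condition for the intercept works out to Σi (yi − μi) = 0, which has no closed-form solution but is easy to solve numerically. The sketch below, with hypothetical function names and simulated data, solves it by Newton's method and evaluates the implied derivative ∂β0/∂βj = −Σi wi xij / Σi wi with wi = μi(1 − μi); it assumes both response classes are present so that a finite solution exists.

```python
import numpy as np

def beta0_binomial(beta, X, y, tol=1e-12, max_iter=100):
    """Solve sum_i (y_i - mu_i) = 0 for the intercept by Newton's method."""
    eta = X @ beta
    b0 = 0.0
    for _ in range(max_iter):
        mu = 1.0 / (1.0 + np.exp(-(b0 + eta)))
        step = np.sum(y - mu) / np.sum(mu * (1.0 - mu))
        b0 += step
        if abs(step) < tol:
            break
    return b0

def dbeta0_dbeta(beta, X, y):
    """Implicit derivative of the profiled intercept with respect to beta."""
    b0 = beta0_binomial(beta, X, y)
    mu = 1.0 / (1.0 + np.exp(-(b0 + X @ beta)))
    w = mu * (1.0 - mu)
    return -(X.T @ w) / w.sum()

# Simulated data (hypothetical), only to exercise the two functions.
rng = np.random.default_rng(1)
X = rng.normal(size=(30, 2))
y = rng.binomial(1, 0.5, size=30).astype(float)
beta = np.array([0.3, -0.2])
b0 = beta0_binomial(beta, X, y)
grad_b0 = dbeta0_dbeta(beta, X, y)
```

A second differentiation of the same identity would give the second-order derivatives needed for M(β).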

4.2 Poisson

In the case of Poisson distribution with the canonical log link η(x) = log μ(x), the likelihood function is given, up to a constant, by . For any β, the maximizer β0(β) of is given by . We may take differentiation to get partial derivatives and .

With the closed-form formula of β0(β), we may simply plug it into the likelihood and get , which corresponds to our notation R(β).
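For the Poisson log-likelihood Σi {yi ηi − exp(ηi)}, setting the derivative in β0 to zero gives β0(β) = log Σi yi − log Σi exp(xiTβ). The sketch below uses this closed form to evaluate the profile objective R(β) up to an additive constant, together with its gradient b(β). The function names and the simulated data are hypothetical.

```python
import numpy as np

def beta0_poisson(beta, X, y):
    """Closed-form maximizer of the Poisson log-likelihood over the intercept."""
    return np.log(y.sum()) - np.log(np.exp(X @ beta).sum())

def profile_loglik(beta, X, y):
    """R(beta) up to an additive constant (the log y_i! terms are dropped)."""
    eta = beta0_poisson(beta, X, y) + X @ beta
    return np.sum(y * eta - np.exp(eta))

def profile_gradient(beta, X, y):
    """b(beta) = X'(y - mu), where mu_i = (sum_i y_i) * softmax(X beta)_i."""
    w = np.exp(X @ beta)
    w /= w.sum()
    return X.T @ y - y.sum() * (X.T @ w)

# Simulated data (hypothetical), only to exercise the functions.
rng = np.random.default_rng(2)
X = rng.normal(size=(25, 3))
y = rng.poisson(np.exp(0.5 + 0.3 * X[:, 0])).astype(float)
beta = np.array([0.2, -0.1, 0.4])
b = profile_gradient(beta, X, y)
```

Because ∂R/∂β0 vanishes at β0(β), the chain-rule term through the intercept drops out of the gradient, which is why `profile_gradient` needs no derivative of `beta0_poisson`.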

5 Properties of QuasiLARS

We next establish some properties of QuasiLARS solution path and prove Theorem 1.

With the “one at a time” condition, at each transition point t*, only one single event can happen, namely either one inactive predictor variable becomes active or one currently active predictor variable becomes inactive. For the first type of event, it means the active set changes from to for some . We next show in Lemma 1 that this new active variable j* joins in a “correct” manner. This is the key result for proving Theorem 1. Lemma 1 applies to QuasiLARS (both QuasiLAR and QuasiLASSO).

Lemma 1

For any transition point t* during the QuasiLARS solution path, if predictor variable j* is the only addition to the active set at t* with solution β(t*) and active set changing from to , then the path updating direction α(β(t*)) at t* has its j*th component agreeing in sign with the current first-order partial derivative bj*(β(t*)).

Our next four lemmas concern properties of the LASSO regularized quasi-likelihood solution. These lemmas will lead to the proof of Theorem 1. For any s ≥ 0, we denote the solution of (9) by , which is unique for each s and continuous in s. The uniqueness is due to the convexity of and the strict convexity of −R(β, β0(β)). Throughout the paper, the hat notation always refers to the LASSO regularized quasi-likelihood solution. For any s ≥ 0, let denote the index set of nonzero components of . We will show that the nonzero set is also the active predictor set that determines the QuasiLARS path updating direction.

Let be a solution of (8). Next we can show that the sign of any non-zero component must agree with the sign of the current first-order partial derivative, namely for .

Lemma 2

A LASSO regularized quasi-likelihood solution to (8) satisfies for any .

Let be an open interval of the s axis, with infimum s, within which the nonzero set of the corresponding LASSO regularized quasi-likelihood solution remains constant, namely, for and some .

Lemma 3

For , the LASSO regularized quasi-likelihood estimate updates along the QuasiLARS path updating direction.

Lemma 4

For an open interval with a constant nonzero set over the LASSO regularized quasi-likelihood solution path , let . Then for , the first-order partial derivatives of R(β, β0(β)) at must satisfy for and for .

Let s denote such a point, as in Lemma 4, with the LASSO regularized quasi-likelihood solution , current derivatives , and maximum absolute derivative . Define , , and . Define for some , T(γ) = R(β(γ), β0(β(γ))) and . Denote , , and .

Lemma 5

At s, we have

| (11) |

with equality only if dj = 0 for and for . If so,

| (12) |

One implication of Lemma 5 is that, at any transition point, the active predictor set of the LASSO regularized quasi-likelihood solution is a subset of . Note that the LASSO regularized quasi-likelihood minimizes −R(β, β0(β)) subject to a constraint on the one norm of β. Locally around , we are maximizing T(γ) subject to an upper bound on S(γ). The first part of Lemma 5 implies that the instantaneous relative changing rate of T(γ) and S(γ) is at most . For β(γ), its one norm S(γ) is increasing in γ as long as and the best instantaneous relative changing rate is achieved whenever dj = 0 for and for . Note that is the same to say that the jth predictor variable is changing from inactive to active. Then, with the “one at a time” condition, the set is singleton and the requirement for is thus guaranteed for our LARS path updating direction due to Lemma 1.

The second part of Lemma 5 provides a closer look at the relative changing rate by checking the second-order derivative . As we only care about direction, we assume that for some fixed △ > 0. Note that . Then we need to find the best direction d to maximize T(γ) among all the possible direction d satisfying and for . By taking the second-order information into account, we need to solve

| (13) |

for some △ > 0 to select the optimal solution updating direction d. As we only care about direction, △ > 0 can be any number. Our next lemma shows that the optimal direction corresponding to (13) is exactly given by our QuasiLARS path updating direction.

Lemma 6

Our QuasiLARS path updating direction matches the direction corresponding to the solution to (13).

6 QuasiLARS in Action

In this section, we apply QuasiLARS to different datasets with different models. In our implementation, we first calculate t0. Then we set δt = −t0/K with a large positive K. For our numerical examples, we set K = 2000. In addition to the transition points tk, we evaluate the solution over our solution path at a grid of size δt. More specifically, for each piece of our solution path over [tk, tk+1], we calculate our solution β(t) at t = tk + mδt for m = 0, 1, ⋯ , [(tk+1 − tk)/δt], where [a] denotes the integer part of a.
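The evaluation grid can be sketched as follows. The helper name `evaluation_times` and the example transition points are hypothetical, and the range of m (starting at zero, so that each transition point is itself included) is an assumption about the implementation.

```python
import math

def evaluation_times(knots, K):
    """Grid t_k + m*dt on each piece [t_k, t_{k+1}], m = 0..floor((t_{k+1}-t_k)/dt)."""
    dt = -knots[0] / K                      # knots[0] is t0 < 0
    times = []
    for t_k, t_next in zip(knots[:-1], knots[1:]):
        m_max = math.floor((t_next - t_k) / dt)
        times.append([t_k + m * dt for m in range(m_max + 1)])
    return times

# Hypothetical transition points with t0 = -10 and K = 5, so dt = 2.
grid = evaluation_times([-10.0, -7.0, -3.0, 0.0], K=5)
```

Here the first piece is evaluated at −10 and −8, the second at −7, −5 and −3, and the last at −3 and −1.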

The first toy example with a Poisson distribution is used to demonstrate that the LASSO regularized quasi-likelihood does have a nonlinear solution path. In Example 2, we consider the Diabetes data with a Gaussian distribution to compare QuasiLARS and LARS. The response of the Diabetes data is actually positive integer valued, and thus can be thought of as coming from some Poisson model. In Example 3, we apply QuasiLARS with a Poisson distribution to the Diabetes data. Binomial QuasiLARS is considered in Example 4 with the Wisconsin Diagnostic Breast Cancer (WDBC) Data (available online at http://archive.ics.uci.edu/ml/datasets/Breast+Cancer+Wisconsin+(Diagnostic)).

Example 1 (A Poisson toy example) We set p = 3 and n = 40. The predictor covariates are generated from X ~ N(0, ∑), where ∑ is the variance-covariance matrix with its (i, j) element being 1 if i = j and 0.9 otherwise. Conditional on X = (x1, x2, x3)T, the response is generated from a Poisson distribution with mean exp(4+3x1−5x2+x3). We apply our QuasiLARS with the canonical link function η(x) = logμ(x) and the identity variance function V(μ(x)) = μ(x) of the Poisson distribution.
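A data set of this form can be simulated along the following lines. This is only a sketch: the random seed is arbitrary, so the draws will not reproduce the data behind Figure 2.

```python
import numpy as np

rng = np.random.default_rng(2024)           # arbitrary seed, not from the paper
n, p = 40, 3

# Covariance with unit variances and 0.9 for every off-diagonal entry.
Sigma = np.full((p, p), 0.9)
np.fill_diagonal(Sigma, 1.0)

X = rng.multivariate_normal(np.zeros(p), Sigma, size=n)
mean = np.exp(4.0 + 3.0 * X[:, 0] - 5.0 * X[:, 1] + 1.0 * X[:, 2])
y = rng.poisson(mean)                       # Poisson counts given X
```

The predictors would still need to be standardized as described in Section 2 before running the path algorithm.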

For this toy example, the QuasiLAR and QuasiLASSO lead to the same solution path. In the top panel of Figure 2, we plot our solution path by solid lines. The horizontal axis corresponds to the one norm of β(t). If you connect the solutions at different transition points by straight lines, then you get the dashed lines. It clearly demonstrates that the true solution path for the LASSO regularized quasi-likelihood is not piecewise linear. In the bottom panel, the solution β(t) is plotted with respect to t.

Example 2 (Gaussian with Diabetes data) In this example, we use the diabetes data (Efron et al., 2004) to compare the solution path of our extension QuasiLARS with that of the original LARS algorithm. In this data set, ten baseline variables (age, sex, body mass index, average blood pressure, and six blood serum measurements) were obtained for each of n = 442 diabetes patients, as well as the response of interest, a quantitative measure of disease progression one year after baseline. We run our extension QuasiLAR algorithm on this data set. Our QuasiLAR solution path matches the LAR solution path obtained by the R package LARS, which is shown in the top left panel of Figure 1. The maximum difference between the LAR solution βLAR and the QuasiLAR solution βQuasiLAR over all transition points is very small, and in fact bounded from above by 5.0 × 10⁻⁷. Compared with QuasiLAR, the QuasiLASSO solution path has two more transition points. This is consistent with the result of the R package LARS. With LASSO, the maximum solution difference at all transition points is similarly bounded from above. This example confirms that the QuasiLARS matches the LARS in the Gaussian case and works correctly. To save space, we do not plot our QuasiLAR and QuasiLASSO paths.

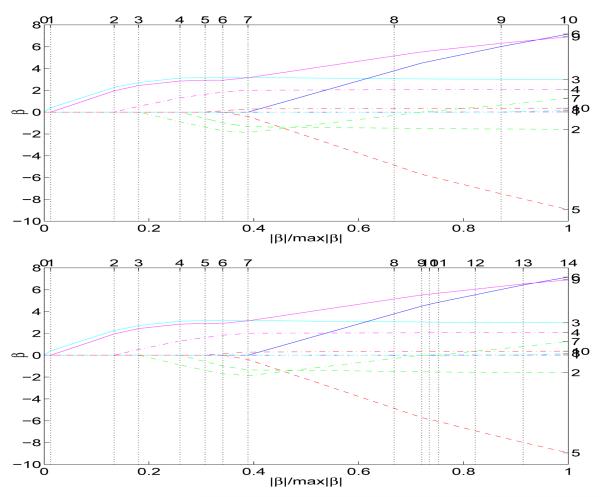

Example 3 (Poisson with Diabetes data) The response in the diabetes data is in fact positive integer valued. We apply our QuasiLARS algorithm by choosing Poisson distribution with the canonical log link function and identity variance function, namely, η(x) = log μ(x) and V (μ(x)) = μ(x). Results are shown in Figure 3. As in the Gaussian example, some discrepancy between the QuasiLAR and QuasiLASSO solution paths is observed. The QuasiLASSO has four more transition points than the QuasiLAR does.

Figure 3.

Poisson QuasiLAR (top) and QuasiLASSO (bottom) solution paths for the Diabetes data.

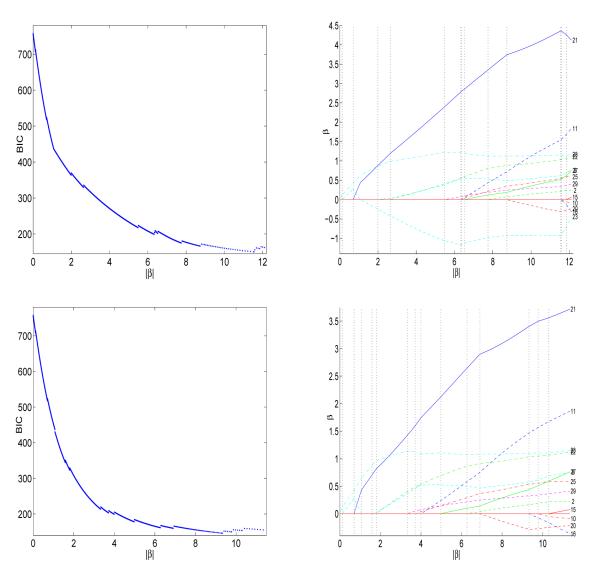

Example 4 (Binomial with WDBC Data) The WDBC data is based on n = 569 patients. The number of predictors is p = 30. The response is binary in that each patient is diagnosed either as malignant (Y = 1) or benign (Y = 0). We first standardize each predictor variable to have mean zero and variance one. Our QuasiLARS with Binomial distribution is applied to this data set with the logit link and variance function V (μ(x)) = μ(x)(1 − μ(x)). Many predictor variables are available. To locate an “optimal” solution along the QuasiLARS solution path, we use the Bayesian Information Criterion (BIC) defined by BIC(β(t)) = −2 Σi log L(xi, yi; β(t), β0(β(t))) + k(β(t)) log n, where L(xi, yi; β(t), β0(β(t))) denotes the Binomial likelihood and k(β(t)) = #{1 ≤ j ≤ p : ∣βj(t)∣ > 0} denotes the number of nonzero coefficients of β(t). For the LASSO regularized least squares regression, Zou, Hastie and Tibshirani (2007) proved that the number of nonzero coefficients is an unbiased and asymptotically consistent estimator of the degrees of freedom. Park and Hastie (2007) provided a heuristic proof for the case of generalized linear models. The optimal solution is given by β(t*) with t* = argmint∈[t0,0] BIC(β(t)).
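The selection criterion can be sketched as below, assuming the usual form of the BIC (minus twice the log-likelihood plus log n times the number of nonzero coefficients, with the intercept not counted). The function name `binomial_bic` and the simulated data are hypothetical.

```python
import numpy as np

def binomial_bic(beta, beta0, X, y):
    """BIC = -2 * Binomial log-likelihood + (number of nonzero beta_j) * log(n)."""
    eta = beta0 + X @ beta
    # log-likelihood of the logit model: sum_i [y_i * eta_i - log(1 + exp(eta_i))]
    loglik = np.sum(y * eta - np.logaddexp(0.0, eta))
    k = np.count_nonzero(beta)
    return -2.0 * loglik + k * np.log(len(y))

# Simulated data (hypothetical), only to exercise the function.
rng = np.random.default_rng(0)
X = rng.normal(size=(10, 2))
y = rng.integers(0, 2, size=10).astype(float)
bic_null = binomial_bic(np.zeros(2), 0.0, X, y)
```

Evaluating this function at each stored point of the solution path and taking the minimizer gives the selected solution.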

For the QuasiLAR, nonzero elements of the optimal solution are given in the second column of Table 1. The BIC score is plotted with respect to the solution’s one norm in the top left panel of Figure 4 for t ∈ [t0, T], where T is a little beyond t* corresponding to the “optimal” solution. The top right panel of Figure 4 gives the solution path for t ∈ [t0, T]. We truncate the figures at T for clarity.

Table 1.

Nonzero elements of the optimal solution selected by BIC for Example 4

| | QuasiLAR | QuasiLASSO |
|---|---|---|
| β2 | 0.2077 | 0.1624 |
| β8 | 0.6170 | 0.5767 |
| β11 | 1.5370 | 1.4667 |
| β20 | −0.3169 | −0.2833 |
| β21 | 4.3576 | 3.4047 |
| β22 | 1.0325 | 1.0343 |
| β23 | −0.9287 | |
| β25 | 0.5470 | 0.5339 |
| β27 | 0.5176 | 0.4395 |
| β28 | 1.1496 | 1.0998 |
| β29 | 0.3378 | 0.3257 |

Figure 4.

Binomial QuasiLARS paths for the WDBC data: the top left panel plots the BIC score along the Binomial QuasiLAR solution path; the top right panel gives part of the Binomial QuasiLAR solution path; the bottom left panel plots the BIC score along the Binomial QuasiLASSO solution path; the bottom right panel gives part of the Binomial QuasiLASSO solution path.

For the QuasiLASSO, the optimal solution’s nonzero elements are shown in the third column of Table 1. The corresponding plots of the BIC and solution path are given in the two bottom panels of Figure 4.

From the QuasiLAR solution path given in the top right panel of Figure 4, we can see that one solution component changes sign between the second and third transition points. This sign change violates the sign constraint of the LASSO regularized quasi-likelihood solution path. Thus, in the QuasiLASSO solution path, another transition is added at this point to avoid violating the sign constraint.
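The check that triggers this extra transition can be sketched as follows. The code scans coefficient vectors recorded at successive points along a path and reports the first component whose sign flips. The function names are hypothetical, and the linear interpolation used to estimate the crossing point is a simplifying assumption, since the QuasiLARS path is curved between transition points.

```python
def first_sign_change(path):
    """Return (step, j) for the first coefficient that flips sign.

    `path` is a list of coefficient vectors at successive path points.
    A flip means the product of consecutive values is strictly negative,
    so a coefficient entering or leaving zero is not counted.
    Returns None when no component changes sign.
    """
    for step in range(1, len(path)):
        pairs = zip(path[step - 1], path[step])
        for j, (prev, cur) in enumerate(pairs):
            if prev * cur < 0:
                return step, j
    return None


def crossing_fraction(prev, cur):
    """Fraction of the step at which a straight line from prev to cur hits zero."""
    return prev / (prev - cur)
```

For example, a component moving from 0.2 to −0.1 between two recorded points is estimated to cross zero about two thirds of the way through the step; a LASSO-type path would place a transition there and remove that variable from the active set.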

This example demonstrates that our extension QuasiLARS may be applied to high dimensional data sets. However, there is no need to compute the whole solution path. We may choose an optimality criterion, say the BIC, and use it to identify the optimal solution as the QuasiLARS solution path progresses. Early termination is thus possible, which saves computational effort because solving the ODE system is expensive when the active predictor set is large.
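One way such an early termination could be organized is sketched below. The stopping rule (stop once the criterion has failed to improve for a fixed number of consecutive path points, called `patience` here) is an assumption made for illustration; it is not a rule stated for the QuasiLARS, and all names are hypothetical.

```python
def run_with_early_stop(solutions, score, patience=3):
    """Walk along a path and stop once the score stops improving.

    `solutions` is any iterable yielding solutions in path order (it may be
    a generator that computes each piece lazily), and `score` maps a
    solution to a number to be minimized, for instance a BIC value.
    Returns (best solution, best score, number of solutions visited).
    """
    best_solution = None
    best_score = float("inf")
    since_best = 0
    visited = 0
    for solution in solutions:
        visited += 1
        current = score(solution)
        if current < best_score:
            best_solution, best_score = solution, current
            since_best = 0
        else:
            since_best += 1
            if since_best >= patience:
                break  # remaining path pieces are never computed
    return best_solution, best_score, visited
```

Because the criterion need not be unimodal along the path, a rule of this kind is a heuristic: a larger `patience` lowers the risk of stopping before a later, better minimum at the cost of solving more ODE pieces.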

7 Conclusion

In this work, we extend the LARS algorithm to the QLM. Over each piece, the solution path is obtained by solving an initial-value ordinary differential equation system. Several examples are used to demonstrate how it works with real data. In particular, Example 4 uses the BIC to select the “optimal solution” along the solution path to show that the QuasiLARS algorithm may be applied to high dimensional data and an earlier termination is possible. One interesting future research topic is to study how to define degrees of freedom for the QuasiLARS as studied in Zou et al. (2007). This will provide an elegant criterion to select the “optimal solution.”

The LARS is attractive because of its speed, which is possible because the corresponding path is piecewise linear. The QuasiLARS solution path, however, is not piecewise linear due to the nature of the QLM, so we cannot expect the QuasiLARS to be as fast as the LARS. We have implemented a primitive version of our algorithm using the Matlab ODE solver ode45, which works fairly fast.

Acknowledgements

The author thanks Jianqing Fan and Chuanshu Ji for mentoring and longtime encouragement. The author also thanks Dennis Boos, Jingfang Huang, Yufeng Liu, John Monahan, and Leonard Stefanski for helpful comments and discussions. This work is supported in part by NSF grant DMS-0905561, NIH/NCI grant R01-CA149569, and NCSU Faculty Research and Professional Development Award.

References

- Candes E, Tao T. The Dantzig selector: statistical estimation when p is much larger than n. The Annals of Statistics. 2007:2313–2351.

- Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression (with discussion). The Annals of Statistics. 2004;32:409–499.

- Fan J, Li R. Variable selection via penalized likelihood. Journal of the American Statistical Association. 2001;96:1348–1360.

- Friedman J, Hastie T, Tibshirani R. Regularized paths for generalized linear models via coordinate descent. Journal of Statistical Software. 2010;33.

- Hastie T, Rosset S, Tibshirani R, Zhu J. The entire regularization path for the support vector machine. Journal of Machine Learning Research. 2004;5:1391–1415.

- Hunter DR, Li R. Variable selection using MM algorithm. The Annals of Statistics. 2005;33:1617–1642. doi: 10.1214/009053605000000200.

- Li Y, Liu Y, Zhu J. Quantile regression in reproducing kernel Hilbert spaces. Journal of the American Statistical Association. 2007;102:255–268.

- Li Y, Zhu J. l1-norm quantile regressions. Journal of Computational and Graphical Statistics. 2008;17:163–185.

- Madigan D, Ridgeway G. Discussion on “least angle regression”. The Annals of Statistics. 2004;32:465–469.

- Osborne M, Presnell B, Turlach B. A new approach to variable selection in least squares problems. IMA Journal of Numerical Analysis. 2000;20:389–403.

- Park MY, Hastie T. l1-regularization path algorithm for generalized linear models. Journal of the Royal Statistical Society, Series B. 2007;69:659–677.

- Rocha G, Zhao P, Yu B. A path following algorithm for sparse pseudo-likelihood inverse covariance estimation (SPLICE). Technical Report. 2008.

- Rosset S. Tracking curved regularized optimization solution paths. Advances in Neural Information Processing Systems. 2004;13.

- Rosset S, Zhu J. Piecewise linear regularized solution paths. The Annals of Statistics. 2007;35:1012–1030.

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B. 1996;58:267–288.

- Wang J, Shen X, Liu Y. Probability estimation for large margin classifiers. Biometrika. 2008;95:149–167.

- Wang L, Shen X. Multicategory support vector machines, feature selection and solution path. Statistica Sinica. 2006;16:617–634.

- Wang L, Zhu J. Image denoising via solution paths. Annals of Operations Research (Special issue on data mining). 2007.

- Wu S, Shen X, Geyer C. Adaptive regularization through entire solution surface. Biometrika. 2009:513–527. doi: 10.1093/biomet/asp038.

- Yuan M, Lin Y. On the nonnegative garrote estimator. Journal of the Royal Statistical Society, Series B. 2007;69:143–161.

- Yuan M, Zou H. Efficient global approximation of generalized nonlinear l1 regularized solution paths and its applications. Journal of the American Statistical Association. 2009:1562–1574.

- Zhang HH, Lu W. Adaptive lasso for Cox’s proportional hazards model. Biometrika. 2007;94:691–703.

- Zhu J, Rosset S, Hastie T, Tibshirani R. 1-norm support vector machines. Neural Information Processing Systems. 2004;16.

- Zou H. The adaptive lasso and its oracle properties. Journal of the American Statistical Association. 2006;101:1418–1429.

- Zou H. A note on path-based variable selection in the penalized proportional hazards model. Biometrika. 2008;95:241–247.

- Zou H, Hastie T. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society, Series B. 2005;67:301–320.

- Zou H, Hastie T, Tibshirani R. On the degrees of freedom of the lasso. The Annals of Statistics. 2007:2173–2192.

- Zou H, Li R. One-step sparse estimates in nonconcave penalized likelihood models (with discussion). The Annals of Statistics. 2008;36:1509–1566. doi: 10.1214/009053607000000802.
