Abstract

Recent research shows that our actions can influence how we think. A separate body of research shows that the gestures we produce when we speak can also influence how we think. Here we bring these two literatures together to explore whether gesture has an impact on thinking by virtue of its ability to reflect real-world actions. We first argue that gestures contain detailed perceptual-motor information about the actions they represent, information often not found in the speech that accompanies the gestures. We then show that the action features in gesture do not just reflect the gesturer’s thinking—they can feed back and alter that thinking. Gesture actively brings action into a speaker’s mental representations, and those mental representations then affect behavior—at times more powerfully than the actions on which the gestures are based. Gesture thus has the potential to serve as a unique bridge between action and abstract thought.

In recent years, cognitive scientists have reworked the traditional view of the mind as an abstract information processor to include connections with the body. Theories of embodied cognition suggest that our internal representations of objects and events are not grounded solely in amodal propositional code, but are also linked to the sensorimotor systems that govern acting on these objects and events (e.g., Barsalou, 1999; Glenberg, 1997; Garbarini & Adenzato, 2004; Wilson, 2002; Zwaan, 1999). This analysis has roots in ecological psychology, which argues against a distinct division between perception and action (Gibson, 1979).

The embodied viewpoint leads to a number of specific ideas about links between cognition and action (cf. Wilson, 2002). One such idea is that our action experiences change how we think about the objects we encounter by interconnecting our representations of these objects with the sensorimotor experiences associated with acting on the objects. These interconnections then play a role in thinking even when there is no intent to act (Beilock & Holt, 2007). In this article, we move beyond activities commonly studied in exploring this prediction, activities ranging from dancing (Calvo-Merino, Glaser, Grezes, Passingham, & Haggard, 2005) to playing ice hockey (Beilock, Lyons, Mattarella-Micke, Nusbaum, & Small, 2008), to focus on gesture. Gestures are an interesting test case for the embodied view. Because they involve movements of the hand, gestures are clearly actions. However, gestures do not have a direct effect on the world the way most actions do; instead, gestures are representational. The embodied viewpoint suggests that previous action experiences can influence how we think. We examine here the hypothesis that the representational gestures we produce also influence thinking. We begin by noting that the gestures that accompany speech are not mindless hand waving; they convey substantive information. Moreover, the information conveyed in gesture is often not conveyed anywhere in the speech that accompanies it. In this way, gesture reflects thoughts that speakers may not explicitly know they have. But gesture does more than reflect thought: it plays a role in changing thought. The mechanism underlying gesture’s effect on thinking is, however, unclear. The hypothesis we explore here is that gesture influences thought, at least in part, by grounding thought in action.

Gestures vary in how closely they mirror the actions they represent. Some gestures simulate a person’s actions; for example, moving the hands as though pouring water from one container into another. In these gestures, often called character viewpoint gestures (McNeill, 1992), the gesturer takes the perspective of the character being described and, in effect, becomes that character in terms of the movements she makes. In the previous example, the gesturer assumes the role of pourer and moves her hand accordingly. Other gestures, called observer viewpoint gestures (McNeill, 1992), depict characters or scenes as though viewing them (as opposed to doing them); for example, tracing the trajectory of the water as it is poured from one container to another.

In addition to character and observer viewpoint gestures, which depict action movements, gesturers can use metaphoric gestures. Metaphoric gestures represent abstract ideas rather than concrete objects or actions, often referring not to the movements used to carry out an activity, but rather to its goal or outcome. For example, sweeping the left hand under the left side of a mathematical equation and then sweeping the right hand under the right side of the equation can be used to indicate that the numbers on the left should add up to the same sum as the numbers on the right (Perry, Church & Goldin-Meadow, 1988).

Actions, like gestures, are not a random collection of movements, but rather can be organized into relative hierarchies of motor control (Grafton & Hamilton, 2007). Actions range from the specific kinematics of movement itself (e.g., in terms of grasping and moving an object, whether one uses a power or precision grip), to the goal-object (e.g., in our grasping example, the identity of the object being grasped), to the outcome (e.g., how the world will be altered as a function of the object that is grasped and moved). The three gesture types outlined above (character, observer, metaphoric) map neatly onto the action hierarchy. Character gestures can be seen as capturing lower-level action kinematics in that they reflect the actual movements being performed. Observer gestures capture the goal-object in that they represent the objects being acted upon and/or the trajectory that those objects follow. Finally, metaphoric gestures reflect higher-level outcomes. This mapping of gesture types to action types allows us to, first, disentangle different forms of gesture and, second, ask questions about whether the way in which a gesture represents an action (in particular, whether it captures movements that are situated on lower vs. higher levels in a hierarchy of motor control) influences the impact that the gesture has on thinking.

The structure of our argument elaborates this hypothesis. We begin by reviewing evidence that our actions can influence our thoughts. We then review evidence that the gestures we produce when we speak can also influence our thoughts. We then join these two literatures and ask whether gestures influence thinking by virtue of the actions they represent. We end by speculating that gesture may be the ideal vehicle by which thought can move from the concrete to the abstract.

1. The Embodied Viewpoint: Action influences thought

Traditional views of cognition suggest that conscious experience gives rise to abstract codes that are arbitrarily related to the objects or concepts they represent (Kintsch, 1988; Newell & Simon, 1972; Pylyshyn, 1986). Broadly speaking, an individual’s knowledge is conceptualized as a network of connected nodes or concepts in the form of amodal propositions (e.g., Collins & Quillian, 1969). More recently, however, embodied approaches have proposed that amodal propositions are not the only manner in which knowledge is represented. Theories of embodied cognition such as perceptual symbol systems (PSS; Barsalou, 1999) suggest that our representations of objects and events are built on a system of activations, much as in amodal views of cognition. However, in contrast to amodal views, PSS holds that current neural representations of events are based on the brain states that were active in the past, during the actual perception of and interaction with objects and events in the real world. That is, our cognitive representations of a particular action, item, or event reflect the states that produced these experiences. Perceptual symbols are believed to be multimodal traces of neural activity that contain at least some of the motor information present during actual sensorimotor experience (Barsalou, 1999; for embodied cognition reviews, see Garbarini & Adenzato, 2004; Glenberg, 1997; Niedenthal, Barsalou, Winkielman, Krauth-Gruber, & Ric, 2005; Wilson, 2002; Zwaan, 1999).

To the extent that PSS, and the embodied viewpoint more generally, captures the way individuals understand and process the information they encounter, we can make some straightforward predictions about how acting in the world and, specifically, one’s previous action experiences, should influence cognition. For instance, if neural operations that embody previous actions and experiences underlie our representations of those actions, then individuals who have had extensive motor skill experience in a particular domain should perceive and represent information in that domain differently from individuals without such experiences. There is evidence to support this prediction.

Experience doing an action influences perception of the action

In one of the first studies to address differences in the neural activity that underlies action observation in people with more or less motor experience with those actions, Calvo-Merino, Glaser, Grezes, Passingham, and Haggard (2005) used functional magnetic resonance imaging (fMRI) to study brain activation patterns when individuals watched an action in which they were skilled, compared to one in which they were not skilled. Experts in classical ballet or Capoeira (a Brazilian art form that combines elements of dance and martial arts) watched videos of the two activities while their brains were being scanned. Brain activity when individuals watched their own dance style was compared to brain activity when they watched the other unfamiliar dance style (e.g., ballet dancers watching ballet versus ballet dancers watching Capoeira). Greater activation was found when experts viewed the familiar vs. the unfamiliar activity in a network of brain regions thought to support both the observation and production of action (e.g., bilateral activation in premotor cortex and intraparietal sulcus, right superior parietal lobe, and left posterior superior temporal sulcus; Rizzolatti, Fogassi, & Gallese, 2001).

To explore whether doing (as opposed to seeing) the actions was responsible for the effect, Calvo-Merino, Grezes, Glaser, Passingham, and Haggard (2006) examined brain activation in male and female ballet dancers. Each gender performs several moves not performed by the other gender. But because male and female ballet dancers train together, they have extensive experience seeing (although not doing) the other gender’s moves. Calvo-Merino and colleagues found greater premotor, parietal, and cerebellar activity when dancers viewed moves from their own repertoire, compared to moves performed by the opposite gender. Having produced an action affected the way the dancers perceived the action, suggesting that the systems involved in action production subserve action perception.

Experience doing an action influences comprehension of descriptions of the action

Expanding on this work, Beilock, Lyons, Mattarella-Micke, Nusbaum, and Small (2008; see also Holt & Beilock, 2006) showed that action experience not only changes the neural basis of action observation, but also facilitates the comprehension of action-related language. Expert ice-hockey players and hockey novices passively listened to sentences depicting ice-hockey action scenarios (e.g., “The hockey player finished the stride”) or everyday action scenarios (e.g., “The individual pushed the bell”) during fMRI. Both groups then performed a comprehension task that gauged their understanding of the sentences they had heard.1

As expected, all participants, regardless of hockey experience, were able to comprehend descriptions of everyday action scenarios like pushing a bell. However, hockey experts understood hockey-language scenarios better than hockey novices (see Beilock et al., 2008, for details). More interestingly, the relation between hockey experience and hockey-language comprehension was mediated by neural activity in left dorsal premotor cortex [Talairach center-of-gravity = (-45, 9, 41)]. The more hockey experience participants had, the more the left dorsal premotor cortex was activated in response to the hockey language; and, in turn, the more activation in this region, the better their hockey-language comprehension. These observations support the hypothesis that auditory comprehension of action-based language can be accounted for by experience-dependent activation of left dorsal premotor cortex, a region thought to support the selection of well-learned action plans and procedures (Grafton, Fagg, & Arbib, 1998; O’Shea, Sebastian, Boorman, Johansen-Berg, & Rushworth, 2007; Rushworth, Johansen-Berg, Gobel, & Devlin, 2003; Schluter, Krams, Rushworth, & Passingham, 2001; Toni, Shah, Fink, Thoenissen, Passingham, & Zilles, 2002; Wise & Murray, 2000).

In sum, people with previous experience performing activities that they are currently either seeing or hearing about call upon different neural regions when processing this visual or auditory information than people without such action experience. This is precisely the pattern an embodied viewpoint would predict—namely, that our previous experiences acting in the world change how we process the information we encounter by allowing us to call upon a greater network of sensorimotor regions, even when we are merely observing or listening without intending to act (for further examples, see Beilock & Holt, 2007; Yang, Gallo, & Beilock, 2009).

Experience doing an action influences perceptual discrimination of the action

Finally, motor experience-driven effects do more than facilitate perception of an action, or comprehension of action-related language (for a review, see Wilson & Knoblich, 2004). Recent work by Casile and Giese (2006) demonstrates that motor experience can have an impact on individuals’ ability to make perceptual discriminations among different actions that they observe.

Typical human gait patterns are characterized by a phase difference of approximately 180° between the two opposite arms and the two opposite legs. Casile and Giese trained individuals to perform an unusual gait pattern: arm movements that matched a phase difference of 270° (rather than the typical 180°). Participants were trained blindfolded with only minimal verbal and haptic feedback from the experimenter.

Before and after training, participants performed a visual discrimination task in which they were presented with two point-light walkers and had to determine whether the gait patterns of the point-light walkers were the same or different. In each display, one walker’s gait pattern corresponded to a phase difference of 180°, 225°, or 270° (the phase difference participants were trained to perform). The other point-light walker had a phase difference either slightly lower or slightly higher than that prototype.

As one might expect, before motor training, participants performed at a high level of accuracy on the 180° discriminations, as these are the gait patterns most similar to what people see and perform on a daily basis. However, participants’ discrimination ability was poor for the two unusual gait patterns, 225° and 270°. After motor training, participants again performed well on the 180° discriminations. Moreover, they improved on the 270° displays—the gait they had learned to perform—but not on the 225° displays. Interestingly, the better participants learned to perform the 270° gait pattern, the better their performance on the perceptual discrimination task. This result suggests a direct influence between learning a motor sequence and recognizing that sequence—an influence that does not depend on visual learning as individuals were blindfolded during motor skill acquisition.

To summarize thus far, action experience has a strong influence on the cognitive and neural processes called upon during action observation, language comprehension, and perceptual discrimination. Action influences thought.

2. Gesture influences thought

2.1. Background on gesture

The gestures that speakers produce when they talk are also actions, but they do not have a direct effect on the environment. They do, however, have an effect on communication. Our question, then, is whether actions whose primary function is to represent ideas influence thinking in the same way as actions whose function is to directly impact the world—by virtue of the fact that both are movements of the body. Unlike other movements of the body (often called body language, e.g., whether speakers move their bodies, make eye contact, or raise their voices), which provide cues to the speaker’s attitude, mood, and stance (Knapp, 1978), the gestures that speakers produce when they talk convey substantive information about a speaker’s thoughts (McNeill, 1992). Gestures, in fact, often display thoughts not found anywhere in the speaker’s words (Goldin-Meadow, 2003).

Although there is no right or wrong way to gesture, particular contexts tend to elicit consistent types of gestures in speakers. For example, children asked to explain whether they think that water poured from one container into another is still the same amount all produce gestures that can be classified into a relatively small set of spoken and gestural rationales (Church & Goldin-Meadow, 1986; e.g., children say the amount is different because “this one is taller than that one,” while indicating with a flat palm the height of the water first in the tall container and then in the short container). As a result, it is possible to establish “lexicons” for gestures produced in particular contexts that can be used to code and classify the gestures speakers produce (Goldin-Meadow, 2003, chapter 3).

Not only is it possible for researchers to reliably assign meanings to gestures, but ordinary listeners who have not been trained to code gesture can also get meaning from the gestures they see (Cassell, McNeill, & McCullough, 1999; Kelly & Church, 1997, 1998; Graham & Argyle, 1975; Holle & Gunter, 2007; Kelly, Kravitz, & Hopkins, 2004; Özyürek, Willems, Kita & Hagoort, 2007; Wu & Coulson, 2005). Listeners are more likely to deduce a speaker’s intended message when speakers gesture than when they do not gesture, whether the listener is observing combinations of character and observer viewpoint gestures (Goldin-Meadow, Wein & Chang, 1992) or metaphoric gestures (Alibali, Flevares, & Goldin-Meadow, 1997). Listeners can even glean specific information from gesture that is not conveyed in the accompanying speech (Beattie & Shovelton, 1999; Goldin-Meadow, Kim & Singer, 1999; Goldin-Meadow & Sandhofer, 1999; Goldin-Meadow & Singer, 2003). Gesture thus plays a role in communication.

However, speakers continue to gesture even when their listeners cannot see those gestures (Bavelas, Chovil, Lawrie & Wade, 1992; Iverson & Goldin-Meadow, 1998, 2001), suggesting that speakers may gesture for themselves as well as for their listeners. Indeed, previous work has shown that gesturing while speaking frees up working memory resources, relative to speaking without gesturing—whether the speaker produces combinations of character and observer viewpoint gestures (Ping & Goldin-Meadow, 2010) or metaphoric gestures (Goldin-Meadow, Nusbaum, Kelly & Wagner, 2001; Wagner, Nusbaum & Goldin-Meadow, 2004). Gesture thus plays a role in cognition.

In the next three sections, we first establish that gesture predicts changes in thinking. We then show that, like action, gesture can play a role in changing thinking, both through its effect on communication (the gestures learners see others produce) and through its effect on cognition (the gestures learners themselves produce). Finally, we turn to the question of whether gesture influences thought by linking it to action.

2.2. Gesture predicts changes in thought

The gestures a speaker produces on a task can predict whether the speaker is likely to learn the task. We see this phenomenon in tasks that typically elicit either character or observer viewpoint gestures. Take, for example, school-aged children learning to conserve quantity across perceptual transformations like pouring water. Children who refer in gesture to one aspect of the conservation problem (e.g., the width of the container), while referring in speech to another aspect (e.g., “this one is taller”), are significantly more likely to benefit from instruction on conservation tasks than children who refer to the same aspects in both speech and gesture (Church & Goldin-Meadow, 1986; see also Pine, Lufkin, & Messer, 2004; Perry & Elder, 1997).

We see the same phenomenon in tasks that elicit metaphoric gestures, as in the following example. Children asked to solve and explain math problems such as 6+4+2=__+2 routinely produce gestures along with their explanations and those gestures often convey information that is not found in the children’s words. For example, a child puts 12 in the blank and justifies his answer by saying, “I added the 6, the 4, and the 2” (i.e., he gives an add-to-equal-sign problem-solving strategy in speech). At the same time, the child points at the 6, the 4, the 2 on the left side of the equation, and the 2 on the right side of the equation (i.e., he gives an add-all-numbers strategy in gesture).2 Here again, children who convey different information in their gestures and speech are more likely to profit from instruction than children who convey the same information in the two modalities (Perry, Church & Goldin-Meadow, 1988; Alibali & Goldin-Meadow, 1993).3
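The mismatch between the two strategies in this example can be made concrete with a little arithmetic. The sketch below applies each strategy to the problem 6+4+2=__+2; the function names and the list-based encoding of the problem are illustrative assumptions, not part of the coding systems used in the cited studies.

```python
# Illustrative sketch only: the function names and the list encoding of the
# problem are assumptions made for this example.

def add_to_equal_sign(left, right):
    """Add only the numbers to the left of the equal sign."""
    return sum(left)

def add_all_numbers(left, right):
    """Add every number in the problem, on both sides of the equal sign."""
    return sum(left) + sum(right)

def balancing_value(left, right):
    """Value for the blank that makes the two sides equal."""
    return sum(left) - sum(right)

# The problem 6+4+2=__+2: addends on the left, known addend on the right.
left, right = [6, 4, 2], [2]

print(add_to_equal_sign(left, right))  # 12, the answer justified in speech
print(add_all_numbers(left, right))    # 14, the strategy conveyed in gesture
print(balancing_value(left, right))    # 10, the value that balances the equation
```

The two strategies yield different totals for the same problem, which is what makes the child's speech and gesture count as conveying different information.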

Gesture thus predicts changes in thought. But gesture can do more—it can bring about changes in thought in (at least) two ways: The gestures that learners see and the gestures that learners produce can influence what they learn.

2.3. Gesture changes thought

Seeing gesture changes thought

Children who are given instruction that includes both speech and gesture learn more from that instruction than children who are given instruction that includes only speech. This effect has been demonstrated for character and observer gestures in conservation tasks (Ping & Goldin-Meadow, 2008), and for metaphoric gestures in tasks involving mathematical equivalence (Church, Ayman-Nolley, & Mahootian, 2004; Perry, Berch, & Singleton, 1995) or symmetry (Valenzeno, Alibali, & Klatzky, 2003).

There is, moreover, evidence that the particular gestures used in the lesson can affect whether learning takes place. Singer and Goldin-Meadow (2005) varied the types of metaphoric gestures that children saw in a mathematical equivalence lesson. They found that children profited most from the lesson when the instructor produced two different problem-solving strategies at the same time, one in speech and the other in gesture; for example, for the problem 6+4+3=__+3, the teacher said, “we can add 6 plus 4 plus 3 which equals 13; we want to make the other side of the equal sign the same amount and 10 plus 3 also equals 13, so 10 is the answer” (an equivalence strategy), while pointing at the 6, the 4, and the left 3, and producing a take-away gesture near the right 3 (an add-subtract strategy). This type of lesson was more effective than one in which the instructor produced one strategy in speech along with the same strategy in gesture, or with no gesture at all. Most strikingly, learning was worst when the instructor produced two different strategies (equivalence and add-subtract) both in speech. Thus, instruction containing two strategies was effective as long as one of the strategies was conveyed in speech and the other in gesture. Seeing gesture can change the watcher’s thinking.

Producing gesture changes thought

Can doing gesture change the doer’s thinking? Yes. Telling children to gesture either before or during instruction makes them more likely to profit from that instruction. Again, we see the effect in character and observer viewpoint gestures, as well as metaphoric gestures.

For example, children were asked on a pretest to solve a series of mental rotation problems by picking the shape that two pieces would make if they were moved together. The children were then given a lesson in mental rotation. During the lesson, one group was told to “show me with your hands how you would move the pieces to make one of these shapes.” In response to this instruction, children produced character (they rotated their hands in the air as though holding and moving the pieces) and observer (they traced the trajectory of the rotation in the air that would allow the goal object to be formed) viewpoint gestures—akin to the kinematic and goal-object components of the motor hierarchy mentioned earlier (Grafton & Hamilton, 2007). The other group of children was told to use their hands to point to the pieces. Both groups were then given a posttest. Children told to produce gestures that exemplified the kinematics and the trajectories needed to form the goal-object during the lesson were more likely to improve after the lesson than children told only to point (Ehrlich, Tran, Levine & Goldin-Meadow, 2009; see also Ehrlich, Levine & Goldin-Meadow, 2006). Not only does gesturing affect learning, but the type of gesture matters—producing gestures that exemplify the actions needed to produce a desired outcome, as well as gestures that reflect the goal-object itself, both led to learning; pointing gestures did not.

As an example of metaphoric gestures, Broaders, Cook, Mitchell and Goldin-Meadow (2008) encouraged children to gesture when explaining their answers to a series of math problems right before they were given a math lesson. One group of children was told to move their hands as they explained their solutions; the other group was told not to. Both groups were then given the lesson and a posttest. Children told to gesture prior to the lesson were more likely to improve after the lesson than children told not to gesture. Interestingly, the gestures that the children produced conveyed strategies that they had never expressed before, in either speech or gesture, and often those strategies, if implemented, would have led to correct problem solutions (e.g., the child swept her left hand under the left side of the equation and her right hand under the right side of the equation, the gestural equivalent of the equivalence strategy). Being told to gesture seems to activate ideas that were likely to have been present, albeit unexpressed, in the learner’s pre-lesson repertoire. Expressing these ideas in gesture then leads to learning.

Gesturing can also create new knowledge. Goldin-Meadow, Cook and Mitchell (2009) taught children hand movements instantiating a strategy for solving the mathematical equivalence problems that the children had never expressed in either gesture or speech: the grouping strategy. Before the lesson began, all of the children were taught to say, “to solve this problem, I need to make one side equal to the other side,” the equivalence strategy. Some children were also taught hand movements instantiating the grouping strategy. The experimenter placed a V-hand under the 6+3 in the problem 6+3+5=__+5, followed by a point at the blank (grouping 6 and 3 and putting the sum in the blank leads to the correct answer). The children were told to imitate the movements.
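As a worked illustration of why the grouping strategy yields the correct answer, the sketch below computes the blank for 6+3+5=__+5 in two ways. The function names and the list encoding are hypothetical and chosen only for this example; they are not taken from the study.

```python
# Illustrative sketch only: names and problem encoding are assumptions.

def grouping(left, right):
    """Sum the left-side addends that are not repeated on the right side."""
    remaining = list(left)
    for n in right:
        if n in remaining:
            remaining.remove(n)  # set aside the addend shared by both sides
    return sum(remaining)

def equivalence(left, right):
    """Make both sides equal: total of the left side minus the known right side."""
    return sum(left) - sum(right)

# The problem 6+3+5=__+5.
left, right = [6, 3, 5], [5]

print(grouping(left, right))     # 9, from grouping the 6 and the 3
print(equivalence(left, right))  # 9, so the two strategies agree
```

On problems of this form, where one addend appears on both sides, grouping the remaining left-side addends gives the same value as balancing the two sides.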

All of the children were then given a math lesson. The instructor taught the children using the equivalence strategy in speech and produced no gestures. During the lesson, children were asked to produce the words or words+hand movements they had been taught earlier. They were then given a posttest. Children who produced the grouping hand movements during the lesson improved on the posttest more than children who did not. Moreover, they produced the grouping strategy in speech for the very first time when asked to justify their posttest responses. Producing hand movements reflecting the grouping strategy led to acquisition of the strategy. Gesture can thus introduce new ideas into a learner’s repertoire (see also Cook, Mitchell & Goldin-Meadow, 2008; Cook & Goldin-Meadow, 2010).

3. Gesture affects thinking by grounding it in action

We have seen that gesture can influence thought, but the mechanism that underlies this effect is, as yet, unclear. We suggest that gesture affects thinking by grounding it in action. To bolster this argument, we first provide evidence that how speakers gesture is influenced by the actions they do and see in the world. We then show that gestures that incorporate components of actions into their form (what we are calling action gestures) can change the way listeners think and, even more striking, the way gesturers themselves think.

3.1. Gestures reflect actions

Hostetter and Alibali (2008; see also Kita, 2000; McNeill, 1992; Streeck, 1996) have proposed that gestures are an outgrowth of simulated action and perception. According to this view, a gesture is born when simulated action, which involves activating the premotor action states associated with selecting and planning a particular action, spreads to the motor areas involved in the specific step-by-step instantiation of that action.

Character viewpoint gestures, which resemble real-world actions, provide face-value support for this hypothesis. For example, when explaining his solution to a mental rotation problem, one child produced the following character viewpoint gesture: his hands were shaped as though he were holding the two pieces; he held his hands apart and then rotated them together. This gesture looks like the movements that would be produced had the pieces been moved (in fact, the pieces were drawings and thus could not be moved). Similarly, when explaining why the water in one container changed in amount when it was poured into another container, a child produced a pouring motion with her hand shaped as though she were holding the container (here again, the child had not done the actual pouring but had observed the experimenter making precisely those movements).

Although examples of this sort suggest that gestures reflect real-world actions, more convincing evidence comes from a recent study by Cook and Tanenhaus (2009). Adults in the study were asked to solve the Tower of Hanoi problem, in which four disks of different sizes must be moved from the leftmost of three pegs to the rightmost peg; only one disk can be moved at a time and a bigger disk can never be placed on a smaller disk (Newell & Simon, 1972). There were two groups in the study: one solved the Tower of Hanoi problem with real objects and had to physically move the disks from one peg to another; the other solved the same task on a computer and used a mouse to move the disks. Importantly, the computer disks could be dragged horizontally from one peg to another without being lifted over the top of the peg; the real disks, of course, had to be lifted over the pegs.

After solving the problem, the adults were asked to explain how they solved it to a listener. Cook and Tanenhaus (2009) examined the speech and gestures produced in those explanations, and found no differences between the two groups in the types of words used in the explanations, nor in the number of gestures produced per word. There were, however, differences in the gestures themselves. The group who had solved the problem with the real objects produced more gestures with grasping hand shapes than the group who had solved the problem on the computer. Their gestures also had more curved trajectories (representing the path of the disk as it was lifted from peg to peg) than the gestures produced by the computer group, which tended to mimic the horizontal path of the computer mouse. The gestures incorporated the actions that each group had experienced when solving the problem, reflecting kinematic, object, and outcome details from the motor plan they had used to move the disks.

3.2. The actions reflected in gesture influence thought

Seeing action gestures changes thinking

We have seen that speakers incorporate components of their actions into the gestures they produce while talking. Importantly, those action components affect the way their listeners think. After the listeners in the Cook and Tanenhaus (2009) study heard the explanation of the Tower of Hanoi problem, they were asked to solve a Tower of Hanoi problem themselves on the computer. The listeners’ performance was influenced by the gestures they had seen. Listeners who saw explanations produced by adults in the real objects condition were more likely to make the computer disks follow real-world trajectories (i.e., they ‘lifted’ the computer disks up and over the peg on the screen even though there was no need to). Listeners who saw explanations produced by adults in the computer condition were more likely to move the computer disks laterally from peg to peg, tracking the moves of the computer mouse. Moreover, within the real objects condition, there was a significant positive relation between the curvature of the gestures particular speakers produced during the explanation phase of the study and the curvature of the mouse movements produced by the listeners who saw those gestures when they themselves solved the problem during the second phase of the study. The more curved the trajectory in the speaker’s gestures, the more curved was the trajectory in the listener’s mouse movements.

Thus, the listeners were sensitive to quantitative differences in the gestures they saw. Moreover, their own behavior when asked to subsequently solve the problem was shaped by those differences, suggesting that seeing gestures reflecting action information changes how observers go about solving the problem themselves.
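The speaker-listener relation described above is a correlation between two curvature measures. As a minimal sketch of how such a relation could be quantified, the following computes a Pearson correlation. The curvature values are hypothetical placeholders chosen for illustration (they are not data from Cook and Tanenhaus, 2009), and the function and variable names are our own assumptions.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squares around each mean
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# HYPOTHETICAL curvature scores in arbitrary units, one pair per
# speaker-listener dyad. These are illustrative values, not study data.
speaker_gesture_curvature = [0.2, 0.5, 0.9, 1.4, 1.8]
listener_mouse_curvature = [0.1, 0.4, 0.6, 1.1, 1.3]

r = pearson_r(speaker_gesture_curvature, listener_mouse_curvature)
print(round(r, 3))
```

A positive value of r corresponds to the pattern reported in the text: the more curved the speaker's gestures, the more curved the listener's mouse movements.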

Doing action gestures changes thinking

As described earlier, gestures can affect not only the observer but also the gesturer himself or herself. We next show that this effect may grow out of gesture’s ability to solidify action information in the speaker’s own mental representations. Beilock and Goldin-Meadow (2009) asked adults to solve the Tower of Hanoi problem twice, the first time with real objects (TOH1): four disks, of which the smallest weighed the least (0.8 kg) and the largest the most (2.9 kg). The smallest disk could be moved using either one hand or two, but the largest disk required two hands to move successfully because it was so heavy.
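For readers unfamiliar with the task, the following is a minimal sketch of the shortest solution to a four-disk Tower of Hanoi problem. It assumes the standard rules (one disk moved at a time, never a larger disk on a smaller one); the peg labels and function name are our own. It is meant only to illustrate what "number of moves" measures and how often the smallest disk is handled, not to describe how participants actually solved the problem.

```python
def solve_hanoi(n_disks, source="A", target="C", spare="B"):
    """Return the shortest move list as (disk, from_peg, to_peg) tuples.

    Disk 1 is the smallest disk; disk n_disks is the largest.
    """
    if n_disks == 0:
        return []
    # Move the n-1 smaller disks out of the way, move the largest disk,
    # then move the n-1 smaller disks on top of it.
    moves = solve_hanoi(n_disks - 1, source, spare, target)
    moves.append((n_disks, source, target))
    moves += solve_hanoi(n_disks - 1, spare, target, source)
    return moves


moves = solve_hanoi(4)
smallest_disk_moves = sum(1 for disk, _, _ in moves if disk == 1)
print(len(moves))           # 15 moves in the shortest four-disk solution
print(smallest_disk_moves)  # 8 of those moves involve the smallest disk
```

Even in the shortest solution, the smallest disk is moved more often than any other disk, which may be one reason it is a natural focus for analyses of one- versus two-handed movements.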

After solving the problem, the adults explained their solution to a confederate (Explanation). All of the adults spontaneously produced action gestures during their explanations. Gestures in which the adults used only one hand when describing how the smallest disk was moved were classified as ‘one-handed’; gestures in which the adults used two hands when describing how the smallest disk was moved were classified as ‘two-handed’.

In the final phase of the study, adults solved the Tower of Hanoi problem a second time (TOH2). Half of the adults solved TOH2 using the original set of disks (No-Switch condition); half used disks whose weights had been switched so that the smallest disk now weighed the most and the largest the least (Switch condition). Importantly, when the smallest disk weighed the most, it was too heavy to be picked up with one hand; the adults had to use two hands to pick it up successfully.

Not surprisingly, adults in the No-Switch condition, who solved the problem using the same tower that they had previously used, improved on the task (they took less time on TOH2 than TOH1 and also used fewer moves). But adults in the Switch condition did not improve and, in fact, took more time (and used more moves) to solve TOH2 than TOH1. The interesting result is that, in the Switch condition, performance on TOH2 could be predicted by the particular gestures adults produced during the Explanation—the more one-handed gestures they produced when describing how they moved the smallest disk, the worse they did on TOH2. Remember, when the disks were switched, the smallest disk could no longer be lifted with one hand.

Adults who had used one-handed gestures when talking about the smallest disk may have begun to represent the disk as light, which, after the switch, was the wrong way to represent this disk; hence, their poor performance. Importantly, in the No-Switch condition, there was no relation between the percentage of one-handed gestures used to describe the smallest disk during the Explanation and change in performance from TOH1 to TOH2. The disk was still light, so representing it as light was consistent with the actions needed to move it. In other words, adults in the No-Switch condition could use either one- or two-handed gestures to represent the smallest disk without jeopardizing their performance on TOH2, as either one or two hands could be used to move a light disk.

Disk weight is not a relevant factor in solving the Tower of Hanoi problem. Thus, when adults explained how they solved TOH1 to the confederate, they never talked about the weight of the disks or the number of hands they used to move the disks. However, it is difficult not to represent disk weight when gesturing—using a one-handed vs. a two-handed gesture implicitly captures the weight of the disk, and this gesture choice had a clear effect on TOH2 performance. Moreover, the number of hands that adults in the Switch group actually used when acting on the smallest disk in TOH1 did not predict performance on TOH2; only the number of one-handed gestures predicted performance. This finding suggests that gesture added action information to the adults’ mental representation of the task, and did not merely reflect what they had previously done.

If gesturing really is changing the speaker’s mental representations, rather than just reflecting those representations, then it should be crucial to the effect—adults who do not gesture between TOH1 and TOH2 should not show a decrement in performance when the disk weights are switched. Beilock and Goldin-Meadow (2009) asked a second group of adults to solve TOH1 and TOH2, but this time the adults were not given an Explanation task in between and, as a result, did not gesture. These adults improved on TOH2 in both the Switch and No-Switch conditions. Switching the weights of the disks interfered with performance only when the adults had previously produced action gestures that were no longer compatible with the movements needed to act on the smallest disk.

Gesturing about an action thus appears to solidify in mental representation the particular components of the action reflected in the gesture. When those components are incompatible with subsequent actions, performance suffers. When the components are compatible with future actions, gesturing will presumably facilitate the actions.

4. Gesture may be a more powerful influence on thought than action itself

Although gesturing is based in action, it is not a literal replay of the movements involved in action. Thus, it is conceivable that gesture could have a different impact on thought than action itself. Arguably, gesture should have less impact than action, precisely because gesture is ‘less’ than action; that is, it is only a representation, not a literal recreation, of action. Alternatively, this ‘once-removed-from-action’ aspect of gesture could have a more, not less, powerful impact on thought. Specifically, when we perform a particular action on an object (e.g., picking up a small, light disk in the TOH problem), we do not need to hold in mind a detailed internal representation of the action (e.g., the plans specifying a series of goals or specific movements needed to achieve the desired outcome; Newell & Simon, 1972) simply because some of the information is present in our perception of the object itself (via the object’s affordances). An affordance is a quality of an object or the environment that allows an individual to perform a particular action on it (Gibson, 1979); for example, a light disk affords grasping with one or two hands; a heavy disk affords grasping with only two hands.

In contrast to action, when we gesture, our movements are not tied to perceiving or manipulating real objects. As a result, we cannot rely on the affordances of the object to direct our gestures, but must instead create a rich internal representation of the object and the sensorimotor properties required to act on it. In this way, gesturing (versus acting) may lead to a more direct link between action and thinking because, in gesturing, one has to generate an internal representation of the object in question (with all the sensorimotor details needed to understand and act on it), whereas, in acting, some of this information is embedded in, or ‘off-loaded’ to, the environment.

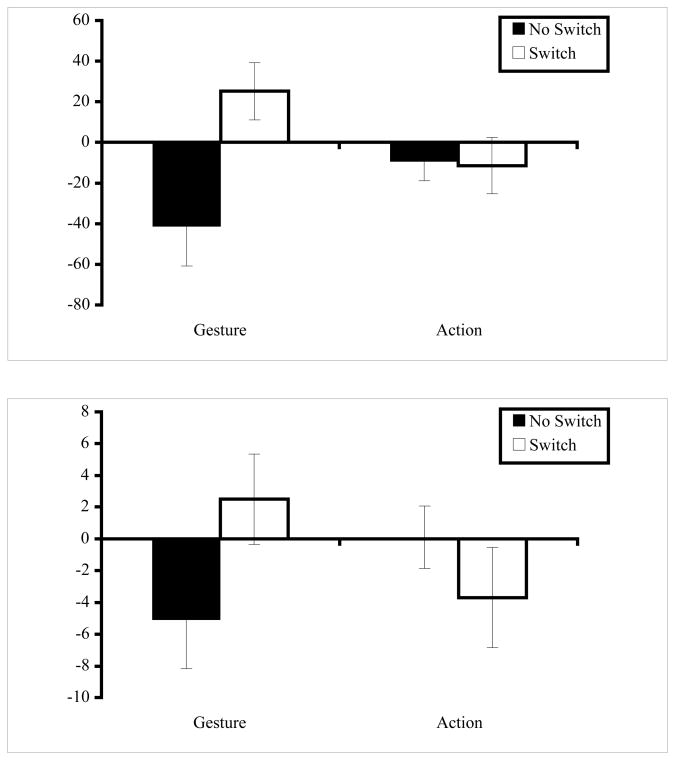

To explore whether gesture is, in fact, more powerful in linking thought and action than acting itself, we conducted a new study in which we again asked adults to solve TOH twice. However, in this study, after solving TOH1, only one group of adults was asked to explain how they solved the task; this group gestured about moving the disks (N=20, the Gesture group). A second group was asked to solve the task again (rather than talk about solving the task) after solving TOH1; this group actually moved the disks (N=20, the Action group). This protocol directly contrasts gesture with action. Both groups then solved TOH2, and, as in the original study, half of the adults in each group solved TOH2 using the tower they used in TOH1 (the smallest disk was the lightest, the No-Switch condition), and half solved TOH2 using the switched tower (the smallest disk was the heaviest and thus required two hands to lift, the Switch condition).

To make sure that we had created a fair contest between gesture and action, we began by demonstrating that there was no reliable difference between the mean number of one-handed gestures adults in the Gesture group produced when describing how they moved the smallest disk (M=7.4, SD=4.66) and the mean number of one-handed moves adults in the Action group produced when actually moving the smallest disk (M=5.9, SD=4.67), F=1, p=.30. Moreover, both the Gesture and Action groups used one hand in relation to the smallest disk a majority of the time.
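The group comparison above can be approximately reconstructed from the reported summary statistics. The sketch below assumes a one-way between-subjects ANOVA with two independent groups and that all 20 adults in each group contributed to the means; both are assumptions on our part, since the text does not spell out the test. The function name is hypothetical.

```python
def one_way_f_two_groups(mean_a, sd_a, mean_b, sd_b, n_per_group):
    """F ratio for two equal-sized independent groups, from summary statistics.

    Assumes equal group sizes (an assumption, not stated in the text).
    Returns the F ratio and its (numerator, denominator) degrees of freedom.
    """
    grand_mean = (mean_a + mean_b) / 2
    ss_between = n_per_group * (
        (mean_a - grand_mean) ** 2 + (mean_b - grand_mean) ** 2
    )
    ms_between = ss_between / 1  # df_between = number of groups - 1 = 1
    # With equal n, the within-group mean square is the average variance.
    ms_within = (sd_a ** 2 + sd_b ** 2) / 2
    df_within = 2 * (n_per_group - 1)
    return ms_between / ms_within, (1, df_within)


# Reported values: Gesture M=7.4, SD=4.66; Action M=5.9, SD=4.67; N=20 each
f_stat, dfs = one_way_f_two_groups(7.4, 4.66, 5.9, 4.67, 20)
print(round(f_stat, 2), dfs)  # 1.03 (1, 38)
```

Under these assumptions the F ratio is about 1.03, in line with the reported F=1.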

If using one hand when either gesturing about the small disk or moving the small disk serves to create a representation of the small disk as light (and thus liftable with one hand), then switching disk weights should hurt performance equally in both conditions. In other words, if action works in the same way as gesture to solidify information in mental representation, then performance in the Action and Gesture groups ought to be identical: when the disk weights are switched, both groups should perform less well on TOH2 than TOH1 (our dependent variable, as in Beilock & Goldin-Meadow, 2009, was the difference in time, or number of moves, taken to solve the problem, between TOH1 and TOH2). However, if action solidifies representations less effectively than gesture, then the impact of switching weights for the Action group should be smaller than the impact of switching weights for the Gesture group, and that is precisely what we found. There was a significant 2(group: Gesture, Action) × 2(switch: No-Switch, Switch) interaction for time, F(1,36)=5.49, p<.03, and number of moves, F(1,36)=4.12, p<.05.
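To make the logic of the dependent variable and the 2 × 2 interaction concrete, the sketch below computes change scores (TOH2 minus TOH1) and the interaction contrast, that is, the switch effect in the Gesture group minus the switch effect in the Action group. All solution times are hypothetical placeholders chosen only to illustrate the arithmetic; they are not the study's data, and the contrast is not a substitute for the F tests reported above. Function names are our own.

```python
from statistics import mean


def change_scores(toh1, toh2):
    """Per-participant change score: TOH2 minus TOH1 (positive = got worse)."""
    return [after - before for before, after in zip(toh1, toh2)]


def interaction_contrast(cells):
    """Difference of differences for a 2 x 2 design.

    cells maps (group, condition) to a list of change scores.
    Returns (Gesture Switch - Gesture No-Switch)
          - (Action Switch - Action No-Switch).
    """
    gesture_effect = mean(cells[("Gesture", "Switch")]) - mean(
        cells[("Gesture", "No-Switch")]
    )
    action_effect = mean(cells[("Action", "Switch")]) - mean(
        cells[("Action", "No-Switch")]
    )
    return gesture_effect - action_effect


# HYPOTHETICAL solution times in seconds (three participants per cell).
cells = {
    ("Gesture", "No-Switch"): change_scores([120, 110, 130], [100, 95, 105]),
    ("Gesture", "Switch"): change_scores([115, 125, 120], [130, 135, 125]),
    ("Action", "No-Switch"): change_scores([118, 122, 120], [108, 110, 112]),
    ("Action", "Switch"): change_scores([121, 119, 123], [113, 109, 111]),
}

print(interaction_contrast(cells))  # 30: switch cost appears only for Gesture
```

A contrast near zero would indicate that switching disk weights affected the two groups equally; a positive contrast indicates a larger switch cost after gesturing than after acting.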

This result is displayed in Figure 1. For the Action group, switching the weight of the disks across TOH attempts had no impact on performance. Specifically, there was no difference between the Switch and No-Switch conditions in the Action group (TOH2-TOH1) in either time, F<1, or number of moves, F=1, p>.3; both groups improved somewhat across the problem-solving attempts.

Figure 1.

Difference in time (top graph) and moves (bottom graph) taken to solve the TOH problem (TOH2 – TOH1) for adults who, after completing TOH1, explained how they solved the task and gestured about moving the disks (Gesture group, left bars); and adults who solved the task rather than explain it and thus actually moved the disks (Action group, right bars). In the Gesture group, adults in the Switch condition showed less improvement (i.e., a more positive change score) from TOH1 to TOH2 than adults in the No-Switch condition. In contrast, in the Action group, adults showed no difference between conditions.

In contrast, for the Gesture group, there were differences between the Switch and No-Switch conditions (TOH2-TOH1) in both time, F(1,18)=7.73, p<.02, and number of moves, F(1,18)=3.25, p=.088, although the difference in number of moves was only marginally significant. Figure 1 shows clearly that whereas the No-Switch group improved across time, the Switch group performed worse (thus replicating Beilock & Goldin-Meadow, 2009).

To our knowledge, this study is the first to directly test the hypothesis that gesture about action is more powerful than action itself in its effect on thought. Producing one-handed gestures before the second problem-solving attempt slowed performance on the task (in terms of both time and number of moves), but producing one-handed action movements did not. Gesturing about the actions involved in solving a problem thus appears to exert more influence on how the action components of the problem will be mentally represented than actually performing the actions.

5. Gesture as a bridge between action and abstract thought

Hostetter and Alibali (2008) propose that gestures are simulated action: they emerge from perceptual and motor simulations that underlie embodied language and mental imagery. Character viewpoint gestures are the most straightforward case. They are produced as the result of a motor simulation in which speakers simulate an action in terms of the specific effectors and movement patterns needed to reach a desired outcome, as though they themselves were performing the action (thus corresponding to the lower-level action kinematics in a hierarchy of motor control, Grafton & Hamilton, 2007). Although observer viewpoint gestures do not simulate the character’s actions, they result from simulated object properties such as the trajectory of an object or the identity of the object (and, in this sense, correspond to the goal-object in a hierarchy of motor control, Grafton & Hamilton, 2007). For example, speakers can use their hands to simulate the motion path that an object takes as it moves or is moved from one place to another or the end result of the object itself. Finally, metaphoric gestures arise from perceptual and motor simulations of the schemas on which the metaphor is based (and thus correspond to higher-level outcomes in a hierarchy of motor control, Grafton & Hamilton, 2007). For example, speakers who talk about fairness while alternately moving their hands (with palms facing up) up and down are simulating two opposing views as though balancing objects on a scale. Gestures are thus closely tied to action and display many of the characteristics of action.

Our findings take the Hostetter and Alibali (2008) proposal one important step further. We suggest that the action features that find their way into gesture do not just reflect the gesturer’s thinking; they can feed back and alter that thinking. Speakers who produce gestures simulating actions that could be performed on a light object (i.e., a one-handed movement that could lift a light but not a heavy object) come to mentally represent that object as light. If the object, without warning, becomes heavy, the speakers who produced the gestures are caught off-guard and perform less well on a task involving this object. In other words, gesture does not just passively reflect action; it actively brings action into a speaker’s mental representations, and those mental representations then affect behavior, sometimes for the better and sometimes for the worse. Importantly, our new evidence suggests that gesture may do even more than action to change thought. Problem-solvers who use one-handed movements to lift the smallest disk, thus treating it as a light rather than heavy disk, do not seem to mentally represent the disk as light. When the disk, without warning, becomes heavy, their performance on a task involving the now-heavy disk is unaffected. Gesture may be more effective in linking thought and action than acting itself.

Are observer viewpoint and metaphoric gestures as effective as character viewpoint gestures in grounding thought in action? Previous work tells us that all three gesture types can play an active role in learning, changing the way the learner thinks. Our current findings suggest that the mechanism underlying this effect for character viewpoint gestures may be the gesture’s ability to ground thought in action. That is, producing character viewpoint gestures brings action into the speaker’s mental representations, and does so more effectively than producing the actions on which the gestures are based. Future work is needed to determine whether observer viewpoint and metaphoric gestures serve the same grounding function equally well. As an example, we can ask whether producing a concrete character viewpoint gesture during instruction on a mental rotation task facilitates learning better than producing a more abstract observer viewpoint (or metaphoric) gesture.

Character viewpoint gestures appear to be an excellent vehicle for bringing action information into a learner’s mental representations. But, as Beilock and Goldin-Meadow (2009) show, action information can, at times, hinder rather than facilitate performance. Indeed, the more abstract observer viewpoint gestures, because they strip away some of the action details that are particular to an individual problem, may be even better at promoting generalization across problems than character viewpoint gestures (compare findings in the analogy literature showing that abstract representations are particularly good at facilitating transfer to new problems, e.g., Gick & Holyoak, 1980).

We might further suggest that character and observer viewpoint gestures, if used in sequence, could provide a bridge between concrete actions and more abstract representation. For example, a child learning to solve mental rotation problems might first be encouraged to use a character viewpoint gesture, using her hands to represent the actual movements that would be used to move the two pieces together (e.g., two C-shaped hands mimicking the way the pieces are held and rotated toward one another). In the next stage, she might be encouraged to use the more abstract observer viewpoint gesture and use her hands to represent the movement of the pieces without including information about how the pieces would be held (e.g., two pointing hands rotated toward one another). In this way, producing the concrete character viewpoint gesture could serve as an entry point into the action details of a particular problem. These details may then need to be stripped away if the learner is to generalize beyond one particular problem, a process that could be facilitated by producing the more abstract observer viewpoint gesture. If so, learners taught to first produce the character viewpoint gesture and next produce the observer viewpoint gesture may do better than learners taught to produce the two gestures in the opposite order, or than learners taught to produce either gesture on its own, or no gesture at all.

To summarize, many lines of previous research have shown that the way we act influences the way we think. A separate literature has shown that the way we gesture also influences the way we think. In this manuscript, we have attempted to bring these two research themes together, and explore whether gesture plays its role in thinking by virtue of the fact that it uses action to represent action. Gesture is a unique case because, although it is an action, it does not have a direct effect on the world the way other actions usually do. Gesture has its effect by representing ideas. We have argued here that actions whose primary function is to represent ideas—that is, gestures—can influence thinking, perhaps even more powerfully than actions whose function is to impact the world more directly.

Acknowledgments

Supported by NICHD grant R01 HD47450 and NSF Award No. BCS-0925595 to SGM, and NSF Award No. SBE 0541957 to SB for the Spatial Intelligence Learning Center. We thank Mary-Anne Decatur, Sam Larson, Carly Kontra, and Neil Albert for help with data collection and analysis.

Footnotes

In the comprehension test, after the participants heard each sentence, they saw a picture. Their task was to judge whether the actor in the picture had been mentioned in the sentence. In some pictures, the actor performed the action described in the sentence; in some, the actor performed an action not mentioned in the sentence; and in some, the actor pictured was not mentioned at all in the sentence (these were control sentences). If participants are able to comprehend the actions described in the sentences, they should be faster to correctly say that the actor in the picture had been mentioned in the sentence if that actor was pictured doing the action described in the sentence than if the actor was pictured doing a different action.

Note that the strategy the child produces in gesture leads to an answer that is different from 12 (i.e., 14). Although children are implicitly aware of the answers generated by the strategies they produce in gesture (Garber, Alibali & Goldin-Meadow, 1998), they very rarely explicitly produce those answers.

In this example, the strategies produced in both speech and gesture lead to incorrect (albeit different, see preceding footnote) answers. Children also sometimes produce a correct strategy in gesture paired with an incorrect strategy in speech (however, they rarely do the reverse, i.e., a correct strategy in speech paired with an incorrect strategy in gesture). Responses of this sort also predict future success on the problem (Perry et al., 1988).

References

- Alibali MW, Flevares L, Goldin-Meadow S. Assessing knowledge conveyed in gesture: Do teachers have the upper hand? Journal of Educational Psychology. 1997;89:183–193.

- Alibali M, Goldin-Meadow S. Gesture-speech mismatch and mechanisms of learning: what the hands reveal about a child’s state of mind. Cognitive Psychology. 1993;25(4):468–523. doi: 10.1006/cogp.1993.1012.

- Barsalou LW. Perceptual symbol systems. Behavioral & Brain Sciences. 1999;22:577–660. doi: 10.1017/s0140525x99002149.

- Bavelas JB, Chovil N, Lawrie DA, Wade A. Interactive gestures. Discourse Processes. 1992;15:469–489.

- Beattie G, Shovelton H. Mapping the range of information contained in the iconic hand gestures that accompany spontaneous speech. Journal of Language and Social Psychology. 1999;18:438–462.

- Beilock S, Goldin-Meadow S. Gesture changes thought by grounding it in action. 2009. Under review. doi: 10.1177/0956797610385353.

- Beilock SL, Holt LE. Embodied preference judgments: Can likeability be driven by the motor system? Psychological Science. 2007;18:51–57. doi: 10.1111/j.1467-9280.2007.01848.x.

- Beilock SL, Lyons IM, Mattarella-Micke A, Nusbaum HC, Small SL. Sports experience changes the neural processing of action language. Proceedings of the National Academy of Sciences, USA. 2008;105:13269–13273. doi: 10.1073/pnas.0803424105.

- Broaders S, Cook SW, Mitchell Z, Goldin-Meadow S. Making children gesture brings out implicit knowledge and leads to learning. Journal of Experimental Psychology: General. 2007;136(4):539–550. doi: 10.1037/0096-3445.136.4.539.

- Casile A, Giese MA. Nonvisual motor training influences biological motion perception. Current Biology. 2006;16:69–74. doi: 10.1016/j.cub.2005.10.071.

- Calvo-Merino B, Glaser DE, Grezes J, Passingham RE, Haggard P. Action observation and acquired motor skills: An fMRI study with expert dancers. Cerebral Cortex. 2005;15:1243–1249. doi: 10.1093/cercor/bhi007.

- Calvo-Merino B, Grezes J, Glaser DE, Passingham RE, Haggard P. Seeing or doing? Influence of visual and motor familiarity on action observation. Current Biology. 2006;16:1905–1910. doi: 10.1016/j.cub.2006.07.065.

- Cassell J, McNeill D, McCullough K-E. Speech-gesture mismatches: Evidence for one underlying representation of linguistic and nonlinguistic information. Pragmatics & Cognition. 1999;7(1):1–34.

- Church RB, Ayman-Nolley S, Mahootian S. The effects of gestural instruction on bilingual children. International Journal of Bilingual Education and Bilingualism. 2004;7(4):303–319.

- Church RB, Goldin-Meadow S. The mismatch between gesture and speech as an index of transitional knowledge. Cognition. 1986;23:43–71. doi: 10.1016/0010-0277(86)90053-3.

- Collins AM, Quillian MR. Retrieval time from semantic memory. Journal of Verbal Learning and Verbal Behavior. 1969;8:240–247.

- Cook SW, Goldin-Meadow S. The Role of Gesture in Learning: Do children use their hands to change their minds? Journal of Cognition & Development. 2006;7(2):211–232.

- Cook SW, Goldin-Meadow S. Gesture leads to knowledge change by creating implicit knowledge. 2010. Under review.

- Cook SW, Mitchell Z, Goldin-Meadow S. Gesturing makes learning last. Cognition. 2008;106:1047–1058. doi: 10.1016/j.cognition.2007.04.010.

- Cook SW, Tanenhaus MK. Embodied communication: Speakers’ gestures affect listeners’ actions. Cognition. 2009;113:98–104. doi: 10.1016/j.cognition.2009.06.006.

- Ehrlich S, Tran K, Levine S, Goldin-Meadow S. Gesture training leads to improvement in children’s mental rotation skill. Paper presented at the biennial meeting of the Society for Research in Child Development; Denver. 2009.

- Ehrlich SB, Levine SC, Goldin-Meadow S. The importance of gesture in children’s spatial reasoning. Developmental Psychology. 2006;42:1259–1268. doi: 10.1037/0012-1649.42.6.1259.

- Garbarini F, Adenzato M. At the root of embodied cognition: Cognitive science meets neurophysiology. Brain and Cognition. 2004;56:100–106. doi: 10.1016/j.bandc.2004.06.003.

- Garber P, Alibali MW, Goldin-Meadow S. Knowledge conveyed in gesture is not tied to the hands. Child Development. 1998;69:75–84.

- Gibson JJ. The ecological approach to visual perception. London: Erlbaum; 1979.

- Gick ML, Holyoak KJ. Analogical problem solving. Cognitive Psychology. 1980;12:306–355.

- Glenberg AM. What memory is for. Behavioral & Brain Sciences. 1997;20:1–55. doi: 10.1017/s0140525x97000010.

- Goldin-Meadow S. Hearing gesture: How our hands help us think. Cambridge, MA: Harvard University Press; 2003.

- Goldin-Meadow S, Alibali MW, Church RB. Transitions in concept acquisition: Using the hand to read the mind. Psychological Review. 1993;100(2):279–297. doi: 10.1037/0033-295x.100.2.279.

- Goldin-Meadow S, Cook SW, Mitchell ZA. Gesturing gives children new ideas about math. Psychological Science. 2009;20(3):267–272. doi: 10.1111/j.1467-9280.2009.02297.x.

- Goldin-Meadow S, Kim S, Singer M. What the teacher’s hands tell the student’s mind about math. Journal of Educational Psychology. 1999;91:720–730.

- Goldin-Meadow S, Nusbaum H, Kelly S, Wagner S. Explaining math: Gesturing lightens the load. Psychological Science. 2001;12:516–522. doi: 10.1111/1467-9280.00395.

- Goldin-Meadow S, Sandhofer CM. Gesture conveys substantive information about a child’s thoughts to ordinary listeners. Developmental Science. 1999;2:67–74.

- Goldin-Meadow S, Singer MA. From children’s hands to adults’ ears: Gesture’s role in teaching and learning. Developmental Psychology. 2003;39(3):509–520. doi: 10.1037/0012-1649.39.3.509.

- Goldin-Meadow S, Wein D, Chang C. Assessing knowledge through gesture: Using children’s hands to read their minds. Cognition and Instruction. 1992;9:201–219.

- Grafton S, Fagg A, Arbib M. Dorsal premotor cortex and conditional movement selection: A PET functional mapping study. The Journal of Neurophysiology. 1998;79:1092–1097. doi: 10.1152/jn.1998.79.2.1092.

- Grafton S, Hamilton A. Evidence for a distributed hierarchy of action representation in the brain. Human Movement Science. 2007;26:590–616. doi: 10.1016/j.humov.2007.05.009.

- Graham JA, Argyle M. A cross-cultural study of the communication of extra-verbal meaning by gestures. International Journal of Psychology. 1975;10(1):57–67.

- Holle H, Gunter TC. The Role of Iconic Gestures in Speech Disambiguation: ERP Evidence. Journal of Cognitive Neuroscience. 2007;19(7):1175–1192. doi: 10.1162/jocn.2007.19.7.1175.

- Holt LE, Beilock SL. Expertise and its embodiment: Examining the impact of sensorimotor skill expertise on the representation of action-related text. Psychonomic Bulletin & Review. 2006;13:694–701. doi: 10.3758/bf03193983.

- Hostetter AB, Alibali MW. Visible embodiment: Gestures as simulated action. Psychonomic Bulletin and Review. 2008;15:495–514. doi: 10.3758/pbr.15.3.495.

- Iverson JM, Goldin-Meadow S. Why people gesture as they speak. Nature. 1998;396:228. doi: 10.1038/24300.

- Iverson JM, Goldin-Meadow S. The resilience of gesture in talk: Gesture in blind speakers and listeners. Developmental Science. 2001;4:416–422.

- Kelly SD, Church RB. Can children detect conceptual information conveyed through other children’s nonverbal behaviors? Cognition and Instruction. 1997;15:107–134.

- Kelly SD, Church RB. A comparison between children’s and adults’ ability to detect conceptual information conveyed through representational gestures. Child Development. 1998;69:85–93.

- Kelly SD, Kravitz C, Hopkins M. Neural correlates of bimodal speech and gesture comprehension. Brain and Language. 2004;89(1):253–260. doi: 10.1016/S0093-934X(03)00335-3.

- Kintsch W. The role of knowledge in discourse comprehension: A construction integration model. Psychological Review. 1988;95:163–182. doi: 10.1037/0033-295x.95.2.163.

- Kita S. How representational gestures help speaking. In: McNeill D, editor. Language and gesture. N.Y.: Cambridge University Press; 2000. pp. 162–185.

- Knapp ML. Nonverbal communication in human interaction. 2nd ed. New York: Holt, Rinehart & Winston; 1978.

- McNeill D. Hand and mind: What gestures reveal about thought. Chicago: University of Chicago Press; 1992.

- Newell A, Simon HA. Human problem solving. Englewood Cliffs, NJ: Prentice-Hall; 1972.

- Niedenthal PM, Barsalou LW, Winkielman P, Krauth-Gruber S, Ric F. Embodiment in attitudes, social perception, and emotion. Personality and Social Psychology Review. 2005;9:184–211. doi: 10.1207/s15327957pspr0903_1.

- O’Shea J, Sebastian C, Boorman E, Johansen-Berg H, Rushworth M. Functional specificity of human premotor-motor cortical interactions during action selection. European Journal of Neuroscience. 2007;26:2085–2095. doi: 10.1111/j.1460-9568.2007.05795.x.

- Özyürek A, Willems RM, Kita S, Hagoort P. On-line integration of semantic information from speech and gesture: Insights from event-related brain potentials. Journal of Cognitive Neuroscience. 2007;19(4):605–616. doi: 10.1162/jocn.2007.19.4.605.

- Perry M, Berch DB, Singleton JL. Constructing Shared Understanding: The Role of Nonverbal Input in Learning Contexts. Journal of Contemporary Legal Issues. 1995 Spring;(6):213–236.

- Perry M, Church RB, Goldin-Meadow S. Transitional knowledge in the acquisition of concepts. Cognitive Development. 1988;3:359–400.

- Perry M, Elder AD. Knowledge in transition: Adults’ developing understanding of a principle of physical causality. Cognitive Development. 1997;12:131–157.

- Pine KJ, Lufkin N, Messer D. More gestures than answers: Children learning about balance. Developmental Psychology. 2004;40:1059–1067. doi: 10.1037/0012-1649.40.6.1059.

- Ping R, Goldin-Meadow S. Hands in the air: Using ungrounded iconic gestures to teach children conservation of quantity. Developmental Psychology. 2008;44:1277–1287. doi: 10.1037/0012-1649.44.5.1277. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ping R, Goldin-Meadow S. Gesturing saves cognitive resources when talking about non-present objects. Cognitive Science. 2010 doi: 10.1111/j.1551-6709.2010.01102.x. in press. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pylyshyn ZW. Computation and cognition: Toward a foundation for cognitive science. Cambridge, MA: MIT Press; 1986.

- Rizzolatti G, Fogassi L, Gallese V. Neurophysiological mechanisms underlying understanding and imitation of action. Nature Reviews Neuroscience. 2001;2:661–670. doi: 10.1038/35090060.

- Rosenbaum DA, Carlson RA, Gilmore RO. Acquisition of intellectual and perceptual-motor skills. Annual Review of Psychology. 2001;52:453–470. doi: 10.1146/annurev.psych.52.1.453.

- Rushworth M, Johansen-Berg H, Gobel S, Devlin J. The left parietal and premotor cortices: Motor attention and selection. NeuroImage. 2003;20:S89–100. doi: 10.1016/j.neuroimage.2003.09.011.

- Schluter N, Krams M, Rushworth M, Passingham R. Cerebral dominance for action in the human brain: The selection of actions. Neuropsychologia. 2001;39:105–113. doi: 10.1016/s0028-3932(00)00105-6.

- Singer MA, Goldin-Meadow S. Children learn when their teachers’ gestures and speech differ. Psychological Science. 2005;16:85–89. doi: 10.1111/j.0956-7976.2005.00786.x.

- Streeck J. How to do things with things. Human Studies. 1996;19(4):365–384.

- Toni I, Shah NJ, Fink GR, Thoenissen D, Passingham RE, Zilles K. Multiple movement representations in the human brain: An event-related fMRI study. Journal of Cognitive Neuroscience. 2002;14:769–784. doi: 10.1162/08989290260138663.

- Valenzeno L, Alibali MW, Klatzky R. Teachers’ gestures facilitate students’ learning: A lesson in symmetry. Contemporary Educational Psychology. 2003;28:187–204.

- Wagner S, Nusbaum H, Goldin-Meadow S. Probing the mental representation of gesture: Is handwaving spatial? Journal of Memory and Language. 2004;50:395–407.

- Wilson M. Six views of embodied cognition. Psychonomic Bulletin & Review. 2002;9:625–636. doi: 10.3758/bf03196322.

- Wilson M, Knoblich G. The case for motor involvement in perceiving conspecifics. Psychological Bulletin. 2005;131:460–473. doi: 10.1037/0033-2909.131.3.460.

- Wise S, Murray E. Arbitrary associations between antecedents and actions. Trends in Neurosciences. 2000;23:271–276. doi: 10.1016/s0166-2236(00)01570-8.

- Wu YC, Coulson S. Meaningful gestures: Electrophysiological indices of iconic gesture comprehension. Psychophysiology. 2005;42:654–667. doi: 10.1111/j.1469-8986.2005.00356.x.

- Yang S, Gallo D, Beilock SL. Embodied memory judgments: A case of motor fluency. Journal of Experimental Psychology: Learning, Memory, & Cognition. 2009;35:1359–1365. doi: 10.1037/a0016547.

- Zwaan RA. Embodied cognition, perceptual symbols, and situation models. Discourse Processes. 1999;28:81–88.