Abstract

The state estimation problem for discrete-time recurrent neural networks with both interval discrete and infinite-distributed time-varying delays is studied in this paper, where the interval discrete time-varying delay lies in a given range. The activation functions are assumed to be globally Lipschitz continuous. A delay-dependent condition for the existence of state estimators is proposed based on new bounding techniques. Via solutions to certain linear matrix inequalities, general full-order state estimators are designed that ensure global asymptotic stability of the estimation error. A significant feature is that no inequality is needed to seek upper bounds for the inner product between two vectors, which can reduce the conservatism of the criterion obtained with the new bounding techniques. Two illustrative examples are given to demonstrate the effectiveness and applicability of the proposed approach.

Keywords: Delay-dependent condition, State estimator, Interval discrete time-varying delays, Infinite-distributed delays, Linear matrix inequality

Introduction

Recent years have witnessed a growing interest in investigating recurrent neural networks (RNNs), including the well-known cellular neural networks, Hopfield neural networks, bidirectional associative memory neural networks and Cohen-Grossberg neural networks. This is mainly due to their widespread applications in areas such as signal processing, fixed-point computation, model identification, optimization, pattern recognition and associative memory. It has been shown that such applications rely heavily on the dynamical behavior of the neural networks. For instance, when a neural network is employed to solve an optimization problem, it is highly desirable for the network to have a unique and globally stable equilibrium. Therefore, stability analysis of different neural networks has received much attention, and various stability conditions have been obtained. RNNs have also found extensive applications across engineering (Mandic and Chambers 2001; Hirose 2006; Mandic and Goh 2009; Arena et al. 1998). In addition, time delays are an inherent feature of signal transmission between neurons, and it is now well known that they are one of the main causes of instability and oscillations in neural networks. Therefore, the stability analysis of delayed neural networks is of great importance and has attracted considerable attention, and a great number of results on this issue have been reported in the past few years (Cao and Wang 2005; Hu and Wang 2006; Shi et al. 2007; Cao et al. 2007; Yu et al. 2003; Xu et al. 2005; Arik 2003; Park 2006; Shen and Wang 2008; Cao and Wang 2003; Qi 2007; Jiao and Wang 2010; Jiao and Wang 2007). It is worth pointing out that distributed delays occur frequently in practice and have been an attractive subject of research in the past few years. However, almost all existing works on distributed delays have focused on continuous-time systems. 
It is known that discrete-time systems are better suited than their continuous-time analogues to modeling digitally transmitted signals in a dynamical way. On the other hand, a neural network usually has a spatial nature due to the presence of a multitude of parallel pathways with a variety of axon sizes and lengths (Principe et al. 1994; Tank and Hopfield 1987), which gives rise to possible distributed delays in discrete-time systems. Recently, the global stability problem for neural networks with discrete and distributed delays has been drawing increasing attention (Cao et al. 2006; Wang et al. 2005; Shu et al. 2009). With the increasing application of digitalization, distributed delays may emerge in a discrete-time manner; it is therefore desirable to study the stability of discrete-time neural networks with distributed delays, see e.g., Liu et al. (2009).

On the other hand, in relatively large-scale neural networks, normally only partial information about the neuron states is available in the network outputs. Therefore, in order to utilize such neural networks, one needs to estimate the neuron states from the available measurements. Elanayar and Shin (1994) presented a neural network approach to approximate the dynamic and static equations of stochastic nonlinear systems and to estimate the state variables. An adaptive state estimator was described in Parlos et al. (2001) using techniques from optimization theory, the calculus of variations and gradient descent dynamics. The state estimation problem for neural networks with time-varying delays was studied in He et al. (2006), where a linear matrix inequality (LMI) approach (Boyd et al. 1994) was developed to solve the problem. When time delays and parameter uncertainties appear simultaneously, the robust state estimation problem was solved in Huang et al. (2008), where sufficient conditions for the existence of state estimators were obtained in terms of LMIs. The state estimation problem for neural networks with both discrete and distributed time delays was studied in Liu et al. (2007) and Li and Fei (2007), in which design methods based on LMIs were presented. It should be pointed out that the aforementioned state estimation results, for both the discrete-delay and distributed-delay cases, concern continuous-time models. In the implementation and application of neural networks, however, discrete-time neural networks play a more important role than their continuous-time counterparts in today's digital world. Recently, the dynamics analysis problem for discrete-time recurrent neural networks with or without time delays has received considerable research interest; see, e.g., Lu (2007), Liang and Cao (2005), Liu and Han (2007), Chen et al. (2006), Wang and Xu (2006). 
The corresponding results for the case of discrete-time distributed delays can be found in Liu et al. (2009). Although delay-dependent state estimation results for discrete-time recurrent neural networks with interval time-varying delay were proposed in Lu (2008), no delay-dependent state estimation results for discrete-time recurrent neural networks with interval discrete and distributed time-varying delays are available in the literature, and the problem remains open. The objective of this paper is to address this unsolved problem.

This paper deals with the problem of state estimation for discrete-time recurrent neural networks with interval discrete and infinite-distributed time-varying delays, where the time-varying delay has both a lower and an upper bound. A delay-dependent condition for the existence of estimators is proposed and an LMI approach is developed. A general full-order estimator is sought to guarantee that the resulting error system is globally asymptotically stable. Desired estimators can be obtained from the solution to certain LMIs, which can be solved efficiently by standard numerical algorithms (Gahinet et al. 1995). It is worth emphasizing that no inequality is introduced to seek upper bounds of the inner product between two vectors. This feature has the potential to reduce the conservatism of the criterion through the new bounding technique. Finally, two illustrative examples are provided to demonstrate the effectiveness of the proposed method.

Throughout this paper, the notation X ≥ Y (X > Y) for symmetric matrices X and Y means that the matrix X − Y is positive semi-definite (respectively, positive definite), and Z^T denotes the transpose of a matrix Z.

Preliminaries

Consider the following discrete-time recurrent neural network with interval discrete and infinite-distributed time-varying delays

(1)

where x(k) = (x1(k), x2(k), …, xn(k))^T is the state vector; A = diag(a1, a2, …, an) with |ai| < 1, i = 1, 2, …, n, is the state feedback coefficient matrix; the remaining three coefficient matrices in (1) are, respectively, the connection weight matrix, the discretely delayed connection weight matrix and the distributively delayed connection weight matrix; g(·) is the neuron activation function with g(0) = 0; and τ(k) is the time-varying delay of the system satisfying

$$\tau_1 \le \tau(k) \le \tau_2 \tag{2}$$

where 0 ≤ τ1 ≤ τ2 are known integers, and I is the constant input vector.

Remark 2.1 The delay term

$$\sum_{d=1}^{\infty} \mu_d \, g(x(k-d))$$

in the system (1) is the so-called infinitely distributed delay in the discrete-time setting, which can be regarded as the discretization of the infinite integral form

$$\int_{-\infty}^{t} \kappa(t-s)\, g(x(s))\, \mathrm{d}s$$

for continuous-time systems, where κ(·) is the delay kernel. The importance of distributed delays has been widely recognized and intensively studied (Kuang et al. 1991; Xie et al. 2001). However, almost all existing references concerning distributed delays consider continuous-time systems, where the distributed delays are described in integral form. The notion of discrete-time distributed delays has been proposed in Wei et al. (2009), and in this paper we formulate the state estimation problem for discrete-time recurrent neural networks with interval discrete and distributed time-varying delays.
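To make the discretization in Remark 2.1 concrete, the following sketch assumes a hypothetical exponential kernel κ(s) = e^{−s} and a right-endpoint Riemann sum with step h, which gives weights μd = h·κ(dh). This is one possible discretization chosen for illustration only, not a construction taken from the paper; it shows that the resulting weights are positive and summable, with a total that approaches ∫_0^∞ κ(s) ds = 1 as h → 0 (the exact total of the infinite sum is h/(e^h − 1)).

```python
import math

def riemann_weights(kernel, h, n_terms):
    """Weights mu_d = h * kernel(d * h), d = 1..n_terms (right-endpoint Riemann sum)."""
    return [h * kernel(d * h) for d in range(1, n_terms + 1)]

def kernel(s):
    """Hypothetical exponential delay kernel; its integral over [0, inf) equals 1."""
    return math.exp(-s)

for h in (0.1, 0.01, 0.001):
    # Truncate where the remaining kernel mass is negligible (about e^-50).
    mu = riemann_weights(kernel, h, int(50 / h))
    print(f"h = {h}: sum of mu_d = {sum(mu):.6f}")
```

Smaller steps h bring the total closer to 1, at the cost of more terms before the tail becomes negligible.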

Remark 2.2 Time-varying delays are common in signal processing, where they are often realized with all-pass filters acting as delay elements; this area is known as fractional delay processing. An all-pass filter is a signal processing filter that passes all frequencies with equal gain but changes the phase relationship between them. It does this by varying its phase shift as a function of frequency, and it is commonly characterized by the frequency at which the phase shift crosses 90° (i.e., when the input and output signals are in quadrature, with a quarter wavelength of delay between them); see, e.g., Boukis et al. (2006).
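The sketch below illustrates the all-pass property with a first-order section H(z) = (a + z^{−1})/(1 + a z^{−1}), a standard textbook form chosen here for illustration (it is not taken from Boukis et al. 2006). It checks that the gain is 1 at every frequency and locates, by bisection, the frequency at which the phase shift reaches −90°; for a = 0 the section is a pure one-sample delay and the crossing is at ω = π/2.

```python
import cmath
import math

def allpass(a, w):
    """Response of H(z) = (a + z^-1) / (1 + a z^-1) at z = e^{jw}; assumes |a| < 1."""
    z_inv = cmath.exp(-1j * w)
    return (a + z_inv) / (1 + a * z_inv)

def quadrature_frequency(a, iters=100):
    """Bisection for the w in (0, pi) where the phase of H equals -90 degrees.

    For |a| < 1 the phase decreases monotonically from 0 at w = 0 to -pi at w = pi.
    """
    lo, hi = 0.0, math.pi
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if cmath.phase(allpass(a, mid)) > -math.pi / 2:
            lo = mid          # phase has not yet passed -90 degrees
        else:
            hi = mid
    return 0.5 * (lo + hi)

for a in (0.0, 0.3, -0.3):
    gains = [abs(allpass(a, w)) for w in (0.1, 1.0, 2.0, 3.0)]
    print(f"a = {a:+.1f}: gains = {[round(x, 12) for x in gains]}, "
          f"-90 deg at w = {quadrature_frequency(a):.6f} rad/sample")
```

Changing the coefficient a moves the quadrature frequency while the gain stays at 1, which is what makes such sections usable as tunable delay elements.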

In order to obtain our main results, we assume that the neuron activation functions in (1) are bounded, and we impose the following assumptions.

Assumption 1

For each i ∈ {1, 2, …, n}, the neuron activation function gi: R → R is Lipschitz continuous with Lipschitz constant αi, that is, for any ς1, ς2 ∈ R with ς1 ≠ ς2,

$$|g_i(\varsigma_1) - g_i(\varsigma_2)| \le \alpha_i \, |\varsigma_1 - \varsigma_2| \tag{3}$$

where αi > 0, i = 1,2,…, n.
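A quick way to sanity-check a claimed constant αi in (3) is to sample difference quotients on a grid, as sketched below for a hypothetical activation gi(s) = αi·tanh(s) with αi = 0.5 (not one of the activations used in the examples). Because sampling only yields a lower bound on the true Lipschitz constant, this can expose a wrong αi but cannot prove (3).

```python
import math

def lipschitz_lower_bound(g, lo=-5.0, hi=5.0, n=10001):
    """Largest |g(b) - g(a)| / (b - a) over adjacent points of a uniform grid.

    Sampling gives only a lower bound on the true Lipschitz constant, so this can
    expose a wrong alpha_i but cannot prove (3).
    """
    h = (hi - lo) / (n - 1)
    xs = [lo + i * h for i in range(n)]
    return max(abs(g(b) - g(a)) / (b - a) for a, b in zip(xs, xs[1:]))

alpha = 0.5                      # hypothetical Lipschitz constant alpha_i

def g(s):
    """Hypothetical activation g_i(s) = alpha * tanh(s), with g_i(0) = 0."""
    return alpha * math.tanh(s)

est = lipschitz_lower_bound(g)
print(f"claimed alpha = {alpha}, sampled estimate = {est:.6f}")
```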

Assumption 2

The constants μd ≥ 0 (d = 1, 2, …) satisfy the following convergence condition

(4)
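Condition (4) is not reproduced above. Assuming it requires the first moment of the weights to be finite, in the form Σ_{d=1}^∞ μd ≤ Σ_{d=1}^∞ d·μd < +∞ used for infinite-distributed delays by Liu et al. (2009), the sketch below checks it numerically for the sequence μd = 2^{−3−d} chosen in both examples. With 60 terms the neglected tail is below 10^{−15}, and the two sums are close to 2^{−3} and 2^{−2}.

```python
# mu_d = 2^(-3-d), the sequence chosen in Examples 1 and 2, truncated at D terms;
# the neglected tail of sum(d * mu_d) is below 1e-15 for D = 60.
D = 60
mu = [2.0 ** (-3 - d) for d in range(1, D + 1)]

mu_bar = sum(mu)                                               # approximates sum of mu_d
first_moment = sum(d * m for d, m in enumerate(mu, start=1))   # approximates sum of d * mu_d

print(f"sum mu_d = {mu_bar:.6f}, sum d*mu_d = {first_moment:.6f}")
```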

It is worth noting that information about the neuron states in large-scale neural networks is commonly incomplete in the network measurements; that is, the neuron states are often not fully available in the network outputs in practical applications. Our goal in this paper is to provide an efficient estimation algorithm that observes the neuron states from the available network outputs. For this reason, the network measurements are assumed to satisfy

$$y(k) = C x(k) + q(k, x(k)) \tag{5}$$

where y(k) ∈ R^m is the measurement output, C ∈ R^{m×n} is a known constant matrix, and q(k, x(k)) is a neuron-dependent nonlinear disturbance on the network outputs that satisfies the following Lipschitz condition:

$$\|q(k, x) - q(k, y)\| \le \|\Sigma (x - y)\| \tag{6}$$

where x = [x1, x2, …, xn]^T ∈ R^n and y = [y1, y2, …, yn]^T ∈ R^n are arbitrary vectors, and Σ is a known real constant matrix.
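The sketch below illustrates the measurement model (5) together with condition (6) on a hypothetical disturbance q(k, x) = 0.05·sin(Cx), applied componentwise and taken time-invariant for simplicity. Since sin is 1-Lipschitz in each component, ‖q(k, x) − q(k, y)‖ ≤ 0.05‖C(x − y)‖, so (6) holds with Σ = 0.05C; the random test merely confirms this numerically. The matrices C and Σ are illustrative assumptions, not those of the examples.

```python
import numpy as np

# Hypothetical data: n = 3 neurons, m = 2 outputs.
C = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, -1.0]])
Sigma = 0.05 * C          # candidate Sigma in (6) for the disturbance below

def q(x):
    """Hypothetical output disturbance q(k, x) = 0.05 * sin(C x) (time-invariant here)."""
    return 0.05 * np.sin(C @ x)

def measure(x):
    """Measurement model (5): y(k) = C x(k) + q(k, x(k))."""
    return C @ x + q(x)

rng = np.random.default_rng(0)
worst = 0.0
for _ in range(10_000):
    # u, v play the role of the generic vectors x, y in (6).
    u, v = rng.normal(size=3), rng.normal(size=3)
    lhs = np.linalg.norm(q(u) - q(v))
    rhs = np.linalg.norm(Sigma @ (u - v))
    worst = max(worst, lhs - rhs)     # should never exceed rounding level
print("largest violation of (6):", worst)
print("sample measurement:", measure(np.array([0.5, -1.0, 2.0])))
```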

For systems (1) and (5), we now consider the following full-order estimator:

(7)

where x̂(k) is the estimate of the neuron state and L ∈ R^{n×m} is the estimator gain matrix to be determined.

Our goal is to choose a suitable L so that x̂(k) approaches x(k) asymptotically. Let

$$e(k) = x(k) - \hat{x}(k) \tag{8}$$

be the state estimation error.

Then, in terms of (1), (5), (7) and (8), the error-state dynamics can be expressed as

(9)

where

The purpose of this paper is to develop delay-dependent conditions for the existence of estimators for the discrete-time recurrent neural network with interval discrete and infinite-distributed time-varying delays. Specifically, for given lower and upper delay bounds τ1 and τ2, we seek an estimator of the form (7) such that the error-state system (9) is globally asymptotically stable for any time-varying delay satisfying τ1 ≤ τ(k) ≤ τ2.
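To see the estimation scheme in action, the sketch below simulates a hypothetical two-neuron instance. Because the explicit forms of (1) and (7) are not reproduced above, it assumes the common structure x(k+1) = Ax(k) + W0 g(x(k)) + W1 g(x(k − τ(k))) + W2 Σd μd g(x(k − d)) + I for the network, and the same right-hand side plus the output-injection term L[y(k) − Cx̂(k) − q(k, x̂(k))] for the estimator; W0, W1, W2 and all numerical values are illustrative. The infinite sum is truncated at D = 30 terms, and the gain L = 0.2I is picked by hand (not from LMI (22)) so that the estimation error contracts.

```python
import numpy as np

# Hypothetical 2-neuron instance. The forms of (1) and (7) used below are assumptions;
# W0, W1, W2 are illustrative names for the three connection weight matrices.
A = np.diag([0.4, 0.3])
W0 = np.array([[0.1, -0.05], [0.05, 0.1]])
W1 = np.array([[0.05, 0.05], [-0.05, 0.05]])
W2 = 0.1 * np.eye(2)
I_in = np.array([0.1, -0.2])             # constant input vector I
C = np.eye(2)                            # output matrix (m = n = 2 for simplicity)
L = 0.2 * np.eye(2)                      # estimator gain, NOT obtained from LMI (22)
TAU1, TAU2, D = 2, 4, 30                 # delay bounds and truncation of the infinite sum
mu = 2.0 ** (-3 - np.arange(1, D + 1))   # mu_d = 2^(-3-d), d = 1..D

def g(v):
    return np.tanh(v)                    # activation, Lipschitz constant 1, g(0) = 0

def q(v):
    return 0.05 * np.sin(v)              # output disturbance satisfying (6) with Sigma = 0.05 I

def at(hist, j):
    return hist[max(j, 0)]               # states before k = 0 equal the initial state

def network_map(hist, k, tau):
    """Assumed right-hand side of (1), evaluated on a trajectory history."""
    dist = sum(mu[d - 1] * g(at(hist, k - d)) for d in range(1, D + 1))
    return A @ hist[k] + W0 @ g(hist[k]) + W1 @ g(at(hist, k - tau)) + W2 @ dist + I_in

def simulate(steps=300, seed=0):
    rng = np.random.default_rng(seed)
    x = [np.array([1.0, -0.5])]          # true state x(k)
    xh = [np.zeros(2)]                   # estimate x_hat(k)
    for k in range(steps):
        tau = int(rng.integers(TAU1, TAU2 + 1))   # tau(k) drawn from [TAU1, TAU2]
        y = C @ x[k] + q(x[k])                    # measurement (5)
        x.append(network_map(x, k, tau))
        # Assumed estimator (7): copy of the network plus output-injection term.
        xh.append(network_map(xh, k, tau) + L @ (y - C @ xh[k] - q(xh[k])))
    return np.array(x), np.array(xh)

x, xh = simulate()
err = np.linalg.norm(x - xh, axis=1)     # ||e(k)|| with e(k) = x(k) - x_hat(k), as in (8)
print(f"||e(0)|| = {err[0]:.3f}, ||e(300)|| = {err[-1]:.2e}")
```

With these values the error norm shrinks by at least three orders of magnitude over 300 steps for this particular delay sequence; a gain obtained from Theorem 3.1 would instead carry a guarantee for every admissible delay sequence.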

Mathematical formulation of the proposed approach

This section derives delay-dependent conditions under which the error-state system (9) is globally asymptotically stable. Specifically, an LMI approach is employed to design an estimator gain that makes system (9) globally asymptotically stable. We first establish Lemmas 3.1 and 3.2, which are essential to the proof of the main theorem of this section.
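As a much simplified, delay-free analogue of the LMI machinery (not the LMI (22) itself), the sketch below checks stability of a linear error recursion e(k+1) = Ae e(k) by solving the discrete Lyapunov equation Ae^T P Ae − P = −Q through the Kronecker-product identity vec(Ae^T P Ae) = (Ae^T ⊗ Ae^T) vec(P). A symmetric P > 0 certifies that V(e) = e^T P e decreases along trajectories, which is the principle that the Lyapunov–Krasovskii argument extends to delayed systems. The matrix Ae is hypothetical.

```python
import numpy as np

def dlyap(Ae, Q):
    """Solve Ae^T P Ae - P = -Q using vec(Ae^T P Ae) = (Ae^T kron Ae^T) vec(P)."""
    n = Ae.shape[0]
    K = np.kron(Ae.T, Ae.T)
    vec_p = np.linalg.solve(np.eye(n * n) - K, Q.reshape(-1))
    P = vec_p.reshape(n, n)
    return 0.5 * (P + P.T)               # symmetrize against rounding

Ae = np.array([[0.2, 0.3],               # hypothetical error-system matrix (e.g. A - L C)
               [-0.1, 0.5]])
Q = np.eye(2)
P = dlyap(Ae, Q)
print("eigenvalues of P:", np.linalg.eigvalsh(P))
print("residual:", np.linalg.norm(Ae.T @ P @ Ae - P + Q))
```

Here P = Q + Ae^T P Ae ≥ Q, so every eigenvalue of P is at least 1; for an unstable Ae no positive definite solution would exist.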

Lemma 3.1 Let Z1 > 0, Z2 > 0, Ei, Ti and Si (i = 1, 2) be real matrices of appropriate dimensions. Then the following matrix inequalities hold:

(10)

(11)

(12)

where


Proof See Appendix 1.

Lemma 3.2 Let Z ∈ R^{n×n} be a positive semidefinite matrix, let xi ∈ R^n, and let βi > 0 (i = 1, 2, …) be constants. If the series concerned are convergent, then the following inequality holds:

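$$\left(\sum_{i=1}^{\infty} \beta_i x_i\right)^{T} Z \left(\sum_{i=1}^{\infty} \beta_i x_i\right) \le \left(\sum_{i=1}^{\infty} \beta_i\right) \sum_{i=1}^{\infty} \beta_i \, x_i^{T} Z x_i \tag{13}$$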
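Inequality (13) is a Jensen-type bound for a convex quadratic form. The sketch below checks it on random data with βi = 2^{−i}, a hypothetical choice, truncating the series at 40 terms; for finite sums the inequality holds exactly by convexity, so this illustrates (13) rather than proving it.

```python
import numpy as np

def lemma_32_gap(seed, n=3, terms=40):
    """Return RHS - LHS of (13) for random data; (13) says this is >= 0."""
    rng = np.random.default_rng(seed)
    M = rng.normal(size=(n, n))
    Z = M @ M.T                                 # positive semidefinite Z
    beta = 0.5 ** np.arange(1, terms + 1)       # beta_i = 2^-i > 0 (hypothetical)
    xs = rng.normal(size=(terms, n))            # row i holds the vector x_i
    s = beta @ xs                               # truncated sum of beta_i x_i
    lhs = s @ Z @ s
    rhs = beta.sum() * sum(b * (x @ Z @ x) for b, x in zip(beta, xs))
    return rhs - lhs

gaps = [lemma_32_gap(seed) for seed in range(5)]
print("smallest gap:", min(gaps))
```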

For any matrices Ei, Ti and Si (i = 1, 2) of appropriate dimensions, it can be shown that

(14)

(15)

(16)

(17)

(18)

On the other hand, under the conditions (10–12), the following inequalities are also true

(19)

(20)

(21)

where


Based on Lemmas 3.1 and 3.2, the following theorem provides a solution to the formulated state estimation problem.

Theorem 3.1 Under Assumptions 1 and 2, given integers 0 ≤ τ1 < τ2, the error-state dynamics (9) with interval time-varying delay τ(k) satisfying (2) is globally asymptotically stable if there exist matrices P > 0, Q1 > 0, Q2 > 0, Z1 > 0, Z2 > 0, Z3 > 0, a nonsingular matrix H1, diagonal matrices R1 > 0, R2 > 0 and matrices Y1, Ei, Si and Ti (i = 1, 2) of appropriate dimensions such that the following LMI holds:

(22)

where

and

In this case, a desired estimator gain matrix is given by L = H1^{−1}Y1.

Proof See Appendix 2.
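Once an LMI solver returns H1 and Y1, the gain in Theorem 3.1 follows from the change of variable Y1 = H1L. The sketch below recovers L by solving the linear system H1L = Y1 instead of forming H1^{−1} explicitly, which is numerically preferable. The matrices are hypothetical placeholders (not the solutions from the examples), sized for n = 3 states and m = 2 outputs so that L is n × m, matching the output-injection term of the estimator.

```python
import numpy as np

def estimator_gain(H1, Y1):
    """Recover L from the change of variable Y1 = H1 L, i.e. L = H1^{-1} Y1.

    Solving H1 L = Y1 avoids forming the inverse explicitly.
    """
    return np.linalg.solve(H1, Y1)

# Hypothetical LMI solution blocks (n = 3 states, m = 2 outputs); not from the examples.
H1 = np.array([[2.0, 0.1, 0.0],
               [0.1, 1.5, 0.2],
               [0.0, 0.2, 1.0]])
Y1 = np.array([[0.4, 0.0],
               [0.1, 0.3],
               [0.0, 0.2]])
L = estimator_gain(H1, Y1)
print(L.shape)
print(np.allclose(H1 @ L, Y1))
```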

Remark 3.1 Based on the new bounding technique (Lemma 3.1), Theorem 3.1 provides a delay-dependent criterion under which the state estimator for the discrete-time recurrent neural network with interval discrete and infinite-distributed time-varying delays can be designed by solving an LMI. The free-weighting matrices Ei, Si and Ti (i = 1, 2) are introduced into the LMI condition (22); note that they are not required to be symmetric. These free-weighting matrices are introduced to reduce the conservatism of the criterion for system (9).

Remark 3.2 In deriving the delay-dependent estimators in Theorem 3.1, no model transformation incorporating Moon's bounding inequality (Moon et al. 2001) is used to bound the inner product of the cross terms involved. This feature has the potential to yield less conservative results by means of Lemma 3.1.

Remark 3.3 Theorem 3.1 provides a sufficient condition for the global asymptotic stability of the error-state system (9) associated with the discrete-time recurrent neural network (1) with interval discrete and infinite-distributed time-varying delays, and the resulting criterion depends on both the upper and lower bounds of the delay. Even for τ1 = 0, Theorem 3.1 still yields a delay-dependent criterion: setting Z2 = εI with a sufficiently small scalar ε > 0 and Ti = 0 (i = 1, 2), Theorem 3.1 reduces to a delay-dependent criterion for the error-state dynamics (9) that involves only the upper bound of the delay.

Two illustrative examples are now presented to demonstrate the usefulness of the proposed approach.

Examples

Example 1 Consider the discrete-time recurrent neural network (1) with parameters as follows


In this example, we assume that the activation functions satisfy Assumption 1 with α1 = 0.562 and α2 = 0.047. Take the activation function as  . The nonlinearity q(k, x(k)) is assumed to satisfy (6) with  , and for the network output the parameter C is given as  . Choosing the constants μi = 2^{−3−i}, we easily find that  , which satisfies the convergence condition (4). Using the Matlab LMI Control Toolbox to solve the LMI (22) for all interval time-varying delays satisfying 0 ≤ τ(k) ≤ 6 (i.e. the lower bound τ1 = 0 and the upper bound τ2 = 6), a feasible solution is obtained as


Therefore, by Theorem 3.1, the state estimation problem is solvable, and a desired estimator gain is given by L = H1^{−1}Y1 as

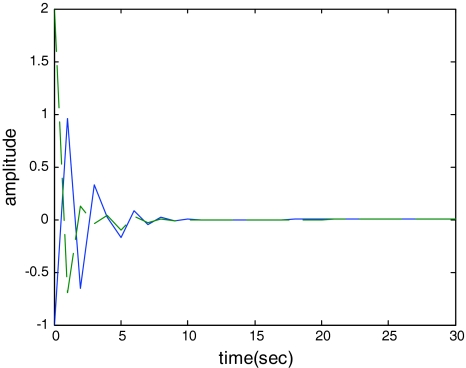

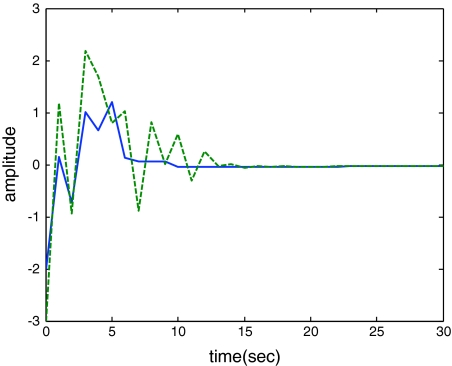

Such a conclusion is further supported by the simulation results given in Fig. 1.

Fig. 1.

Response of the error state e(t)

Example 2 Consider the discrete-time recurrent neural network (1) with the following parameters


The activation functions in this example are assumed to satisfy Assumption 1 with α1 = 0.034, α2 = 0.429 and α3 = 0.508. Take the activation function as  . The nonlinearity q(k, x(k)) is assumed to satisfy (6) with  , and for the network output the parameter C is given as  . Choosing the constants μi = 2^{−3−i}, we easily find that  , which satisfies the convergence condition (4). Using the Matlab LMI Control Toolbox, it can be verified that the LMI (22) in Theorem 3.1 is feasible for all interval time-varying delays satisfying 2 ≤ τ(k) ≤ 4 (i.e. the lower bound τ1 = 2 and the upper bound τ2 = 4), and a feasible solution is obtained as


Therefore, according to Theorem 3.1, a desired estimator gain can be computed as


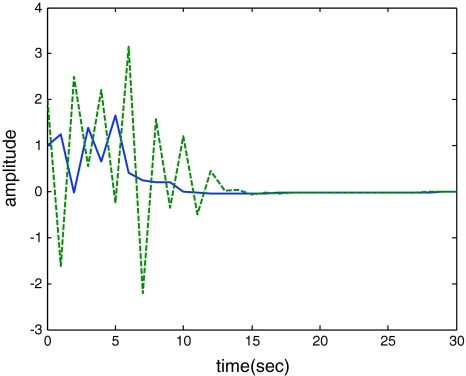

Thus, by Theorem 3.1, the desired estimator guarantees asymptotic stability of the error-state system (9) for all delays satisfying 2 ≤ τ(k) ≤ 4. This conclusion is further supported by the simulation results given in Fig. 2.

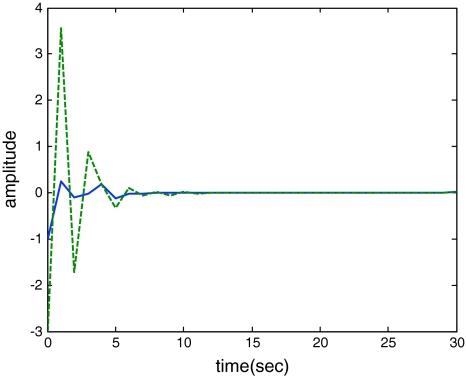

Fig. 2.

Response of the state x1(t) (solid) and its estimate x̂1(t) (dashed)

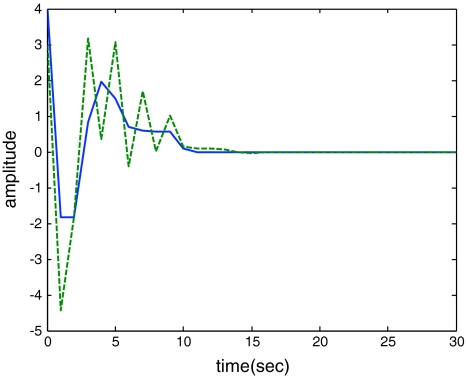

Conclusions

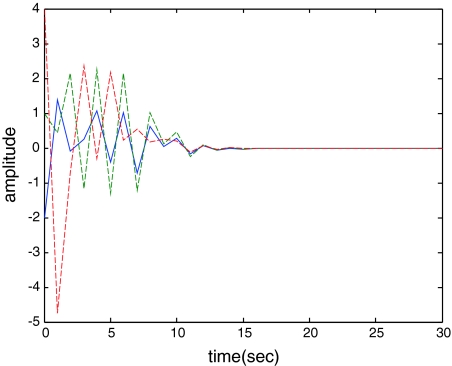

In this paper, the problem of state estimation for a discrete-time recurrent neural network with interval discrete and infinite-distributed time-varying delays has been studied. A delay-dependent sufficient condition for the solvability of this problem, which takes into account the range of the time delay, has been established by using Lyapunov–Krasovskii stability theory and the LMI technique. Lemma 3.1 has been incorporated into the state estimation criterion to reduce conservatism, since no bounding of the inner product of the cross terms is required. The desired estimator guarantees asymptotic stability of the estimation error system. Illustrative examples have been presented to demonstrate the effectiveness of the proposed approach (Figs 3, 4, 5, 6, 7).

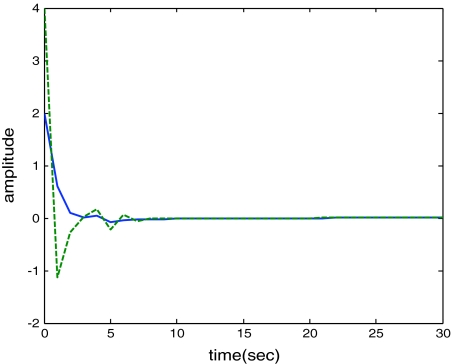

Fig. 3.

Response of the state x2(t) (solid) and its estimate x̂2(t) (dashed)

Fig. 4.

Response of the error state e(t)

Fig. 5.

Response of the state x1(t) (solid) and its estimate x̂1(t) (dashed)

Fig. 6.

Response of the state x2(t) (solid) and its estimate x̂2(t) (dashed)

Fig. 7.

Response of the state x3(t) (solid) and its estimate x̂3(t) (dashed)

Appendix 1: Proof of Lemma 3.1

Proof It follows from (11) that


then one has


The proofs of (10) and (12) follow along similar lines and are omitted here to avoid repetition.

Appendix 2: Proof of Theorem 3.1

Proof Define the following Lyapunov–Krasovskii functional candidate for the error-state system (9) as

(23)

Then, the difference of V(k) along the solutions of (9) is given by

(24)

(25)

(26)

(27)

(28)

where

Substituting (19)–(21) and (24)–(28) into ΔV(k) and performing some algebraic manipulations, it follows that

(29)

Using Assumption 1 and noting that R1 > 0 and R2 > 0 are diagonal matrices, one has

(30)

(31)

where

Moreover, since the function q(k, ·) satisfies (6), it follows that

(32)

where Σ is the known real constant matrix.

Substituting (30–32) into (29), it is not difficult to deduce that

(33)

where


Applying the change of variable Y1 = H1L and the Schur complement, we obtain ΔV(k) < 0 whenever (22) holds. Therefore, the system (9) is globally asymptotically stable for any interval time-varying delay τ(k) satisfying (2). This completes the proof of Theorem 3.1. □
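The last step of the proof relies on the Schur complement: a symmetric block matrix [[S11, S12], [S12^T, S22]] is negative definite if and only if S22 < 0 and S11 − S12 S22^{−1} S12^T < 0. The sketch below checks this equivalence on two small hypothetical cases, one satisfying the condition and one violating it; it illustrates the lemma and is not the LMI (22) itself.

```python
import numpy as np

def is_neg_def(M, tol=1e-12):
    """True if the symmetric matrix M is negative definite."""
    return bool(np.max(np.linalg.eigvalsh(M)) < -tol)

def schur_test(S11, S12, S22):
    """Schur complement test: S22 < 0 and S11 - S12 S22^{-1} S12^T < 0."""
    return is_neg_def(S22) and is_neg_def(S11 - S12 @ np.linalg.solve(S22, S12.T))

S11 = np.diag([-2.0, -3.0])
S22 = -np.eye(2)
for S12 in (np.eye(2), 2.0 * np.eye(2)):
    M = np.block([[S11, S12], [S12.T, S22]])
    # Both tests agree: True/True for the first coupling, False/False for the second.
    print(is_neg_def(M), schur_test(S11, S12, S22))
```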

References

- Arena P, Muscato G, Fortuna L. Neural networks in multidimensional domains: fundamentals and new trends in modelling and control. Berlin: Springer; 1998.

- Arik S. Global asymptotic stability of a larger class of neural networks with constant time delay. Phys Lett A. 2003;311:504–511. doi: 10.1016/S0375-9601(03)00569-3.

- Boukis C, Mandic DP, Constantinides AG, Polymenakos LC. A novel algorithm for the adaptation of the pole of Laguerre filters. 2006;13:429–432.

- Boyd S, El Ghaoui L, Feron E, Balakrishnan V. Linear matrix inequalities in system and control theory. Philadelphia, PA: SIAM; 1994.

- Cao J, Wang J. Global asymptotic stability of a general class of recurrent neural networks with time-varying delays. IEEE Trans Circuits Syst I Fundam Theory Appl. 2003;50:34–44. doi: 10.1109/TCSI.2002.807494.

- Cao J, Wang J. Global asymptotic and robust stability of recurrent neural networks with time delays. IEEE Trans Circuits Syst I Regul Pap. 2005;52:417–426. doi: 10.1109/TCSI.2004.841574.

- Cao J, Yuan K, Li HX. Global asymptotical stability of recurrent neural networks with multiple discrete delays and distributed delays. IEEE Trans Neural Netw. 2006;17:1646–1651. doi: 10.1109/TNN.2006.881488.

- Cao J, Ho DWC, Huang X. LMI-based criteria for global robust stability of bidirectional associative memory networks with time delay. Nonlinear Anal. 2007;66:1558–1572. doi: 10.1016/j.na.2006.02.009.

- Chen WH, Lu X, Liang DY. Global exponential stability for discrete-time neural networks with variable delays. Phys Lett A. 2006;358:186–198. doi: 10.1016/j.physleta.2006.05.014.

- Elanayar VTS, Shin YC. Approximation and estimation of nonlinear stochastic dynamic systems using radial basis function neural networks. IEEE Trans Neural Netw. 1994;5:594–603. doi: 10.1109/72.298229.

- Gahinet P, Nemirovsky A, Laub AJ, Chilali M. LMI control toolbox: for use with MATLAB. Natick, MA: The MathWorks; 1995.

- He Y, Wang QG, Wu M, Lin C. Delay-dependent state estimation for delayed neural networks. IEEE Trans Neural Netw. 2006;17:1077–1081. doi: 10.1109/TNN.2006.875969.

- Hirose A. Complex-valued neural networks: theories and applications. New Jersey: World Scientific Publishing; 2006.

- Hu S, Wang J. Global robust stability of a class of discrete-time interval neural networks. IEEE Trans Circuits Syst I Regul Pap. 2006;53:129–138. doi: 10.1109/TCSI.2005.854288.

- Huang H, Feng G, Cao J. Robust state estimation for uncertain neural networks with time-varying delay. IEEE Trans Neural Netw. 2008;19:1329–1339. doi: 10.1109/TNN.2008.2000206.

- Jiao X, Wang R. Synchronous firing and its control in neuronal population with time delay. Adv Cogn Neurodyn. 2007:213–217.

- Jiao X, Wang R. Synchronous firing patterns of neuronal population with excitatory and inhibitory connections. Int J Non-linear Mech. 2010;45:647–651. doi: 10.1016/j.ijnonlinmec.2008.11.020.

- Kuang Y, Smith H, Martin R. Global stability for infinite-delay, dispersive Lotka-Volterra systems: weakly interacting populations in nearly identical patches. J Dyn Differ Equ. 1991;3:339–360. doi: 10.1007/BF01049736.

- Li T, Fei SM. Exponential state estimation for recurrent neural networks with distributed delays. Neurocomputing. 2007;71:428–438. doi: 10.1016/j.neucom.2007.07.005.

- Liang J, Cao J. Convergence of discrete-time recurrent neural networks with variable delay. Int J Bifurcat Chaos. 2005;15:595–5811.

- Liu P, Han QL. Discrete-time analogs for a class of continuous-time recurrent neural networks. IEEE Trans Neural Netw. 2007;18:1343–1355. doi: 10.1109/TNN.2007.891593.

- Liu Y, Wang Z, Liu X. Design of exponential state estimators for neural networks with mixed time delays. Phys Lett A. 2007;364:401–412. doi: 10.1016/j.physleta.2006.12.018.

- Liu Y, Wang Z, Liu X. Asymptotic stability for neural networks with mixed time-delays: the discrete-time case. Neural Netw. 2009;22:67–74. doi: 10.1016/j.neunet.2008.10.001.

- Lu CY. A delay-range-dependent approach to global robust stability for discrete-time uncertain recurrent neural networks with interval time-varying delay. Proc IMechE Part I J Syst Control Eng. 2007;221:1123–1132. doi: 10.1243/09596518JSCE451.

- Lu CY. A delay-range-dependent approach to design state estimator for discrete-time recurrent neural networks with interval time-varying delay. IEEE Trans Circuits Syst II Express Briefs. 2008;55:1163–1167. doi: 10.1109/TCSII.2008.2001988.

- Mandic DP, Chambers JA. Recurrent neural networks for prediction: learning algorithms, architectures and stability. New York: Wiley; 2001.

- Mandic DP, Goh V. Complex valued nonlinear adaptive filters: noncircularity, widely linear and neural models. New York: Wiley; 2009.

- Moon YS, Park P, Kwon WH, Lee YS. Delay-dependent robust stabilization of uncertain state-delayed systems. Int J Control. 2001;74(14):1447–1455. doi: 10.1080/00207170110067116.

- Park JH. On global stability criterion for neural networks with discrete and distributed delays. Chaos Solitons Fractals. 2006;30:897–902. doi: 10.1016/j.chaos.2005.08.147.

- Parlos AG, Menon SK, Atiya AF. An algorithmic approach to adaptive state filtering using recurrent neural networks. IEEE Trans Neural Netw. 2001;12:1411–1432. doi: 10.1109/72.963777.

- Principe JC, Kuo JM, Celebi S. An analysis of the gamma memory in dynamic neural networks. IEEE Trans Neural Netw. 1994;5:337–361. doi: 10.1109/72.279195.

- Qi H. New sufficient conditions for global robust stability of delayed neural networks. IEEE Trans Circuits Syst I Regul Pap. 2007;54:1131–1141. doi: 10.1109/TCSI.2007.895524.

- Shen Y, Wang J. An improved algebraic criterion for global exponential stability of recurrent neural networks with time-varying delays. IEEE Trans Neural Netw. 2008;19:528–531. doi: 10.1109/TNN.2007.911751.

- Shi P, Zhang J, Qiu J, Xing L. New global asymptotic stability criterion for neural networks with discrete and distributed delays. Proc IMechE Part I J Syst Control Eng. 2007;221:129–135. doi: 10.1243/09596518JSCE287.

- Shu H, Wang Z, Liu Z. Global asymptotic stability of uncertain stochastic bi-directional associative memory networks with discrete and distributed delays. Math Comput Simul. 2009;80:490–505. doi: 10.1016/j.matcom.2008.07.007.

- Tank DW, Hopfield JJ. Neural computation by concentrating information in time. Proc Natl Acad Sci USA. 1987;84:1896–1900. doi: 10.1073/pnas.84.7.1896.

- Wang L, Xu Z. Sufficient and necessary conditions for global exponential stability of discrete-time recurrent neural networks. IEEE Trans Circuits Syst I Regul Pap. 2006;53:1373–1380. doi: 10.1109/TCSI.2006.874179.

- Wang Z, Liu Y, Liu X. On global asymptotic stability of neural networks with discrete and distributed delays. Phys Lett A. 2005;345:299–308. doi: 10.1016/j.physleta.2005.07.025.

- Wei G, Feng G, Wang Z. Robust H∞ control for discrete-time fuzzy systems with infinite-distributed delays. IEEE Trans Fuzzy Syst. 2009;17:224–232. doi: 10.1109/TFUZZ.2008.2006621.

- Xie L, Fridman E, Shaked U. Robust H∞ control of distributed delay systems with application to combustion control. IEEE Trans Automat Contr. 2001;46:1930–1935. doi: 10.1109/9.975483.

- Xu S, Lam J, Ho DWC, Zou Y. Delay-dependent exponential stability for a class of neural networks with time delays. J Comput Appl Math. 2005;183:16–28. doi: 10.1016/j.cam.2004.12.025.

- Yu GJ, Lu CY, Tsai JSH, Liu BD, Su TJ. Stability of cellular neural networks with time-varying delay. IEEE Trans Circuits Syst I Fundam Theory Appl. 2003;50:677–678. doi: 10.1109/TCSI.2003.811031.