Abstract

Because many illnesses show heterogeneous response to treatment, there is increasing interest in individualizing treatment to patients [11]. An individualized treatment rule is a decision rule that recommends treatment according to patient characteristics. We consider the use of clinical trial data in the construction of an individualized treatment rule leading to the highest mean response. This is a difficult computational problem because the objective function is the expectation of a weighted indicator function that is non-concave in the parameters. Furthermore, there are frequently many pretreatment variables that may or may not be useful in constructing an optimal individualized treatment rule, yet cost and interpretability considerations imply that only a few variables should be used by the individualized treatment rule. To address these challenges we consider estimation based on l1-penalized least squares. This approach is justified via a finite sample upper bound on the difference between the mean response due to the estimated individualized treatment rule and the mean response due to the optimal individualized treatment rule.

Keywords and phrases: decision making, l1 penalized least squares, Value

1. Introduction

Many illnesses show heterogeneous response to treatment. For example, a study on schizophrenia [12] found that patients who take the same antipsychotic (olanzapine) may have very different responses. Some may have to discontinue the treatment due to serious adverse events and/or acutely worsened symptoms, while others may experience few if any adverse events and have improved clinical outcomes. Results of this type have motivated researchers to advocate the individualization of treatment to each patient [16, 24, 11]. One step in this direction is to estimate each patient’s risk level and then match treatment to risk category [5, 6]. However, this approach is best used to decide whether to treat; otherwise it assumes knowledge of the best treatment for each risk category. Alternatively, there is an abundance of literature focusing on predicting each patient’s prognosis under a particular treatment [10, 28]. Thus an obvious way to individualize treatment is to recommend the treatment achieving the best predicted prognosis for that patient. In general the goal is to use data to construct individualized treatment rules that, if implemented in the future, will optimize the mean response.

Consider data from a single-stage randomized trial involving several active treatments. A first natural procedure to construct the optimal individualized treatment rule is to maximize an empirical version of the mean response over a class of treatment rules (assuming larger responses are preferred). As will be seen, this maximization is computationally difficult because the mean response of a treatment rule is the expectation of a weighted indicator that is discontinuous and non-concave in the parameters. To address this challenge we make a substitution. That is, instead of directly maximizing the empirical mean response to estimate the treatment rule, we use a two-step procedure that first estimates a conditional mean and then derives the estimated treatment rule from this estimated conditional mean. As will be seen in Section 3, even if the optimal treatment rule is contained in the space of treatment rules considered by the substitute two-step procedure, the estimator derived from the two-step procedure may not be consistent. However, if the conditional mean is modeled correctly, then the two-step procedure consistently estimates the optimal individualized treatment rule. This motivates consideration of rich conditional mean models with many unknown parameters. Furthermore, there are frequently many pretreatment variables that may or may not be useful in constructing an optimal individualized treatment rule, yet cost and interpretability considerations imply that fewer rather than more variables should be used by the treatment rule. This consideration motivates the use of l1-penalized least squares (l1-PLS).

We propose to estimate an optimal individualized treatment rule using a two-step procedure that first estimates the conditional mean response using l1-PLS with a rich linear model and second, derives the estimated treatment rule from the estimated conditional mean. For brevity, throughout, we call the two-step procedure the l1-PLS method. We derive several finite sample upper bounds on the difference between the mean response to the optimal treatment rule and the mean response to the estimated treatment rule. All of the upper bounds hold even if our linear model for the conditional mean response is incorrect and, to our knowledge, are up to constants the best available. We use the upper bounds in Section 3 to illuminate the potential mismatch between using least squares in the two-step procedure and the goal of maximizing mean response. The upper bounds in Section 4.1 involve a minimized sum of the approximation error and estimation error; both errors result from the estimation of the conditional mean response. We shall see that l1-PLS estimates a linear model that minimizes this approximation plus estimation error sum among a set of suitably sparse linear models.

If the part of the model for the conditional mean involving the treatment effect is correct, then the upper bounds imply that, although a surrogate two-step procedure is used, the estimated treatment rule is consistent. The upper bounds provide a convergence rate as well. Furthermore in this setting the upper bounds can be used to inform how to choose the tuning parameter involved in the l1-penalty to achieve the best rate of convergence. As a by-product, this paper also contributes to existing literature on l1-PLS by providing a finite sample prediction error bound for the l1-PLS estimator in the random design setting without assuming the model class contains or is close to the true model.

The paper is organized as follows. In Section 2, we formulate the decision making problem. In Section 3, for any given decision rule, e.g. an individualized treatment rule, we relate the reduction in mean response to the excess prediction error. In Section 4, we estimate an optimal individualized treatment rule via l1-PLS and provide a finite sample upper bound on the maximal reduction in optimal mean response achieved by the estimated rule. In Section 5, we consider a data dependent tuning parameter selection criterion. This method is evaluated using simulation studies and illustrated with data from the Nefazodone-CBASP trial [13]. Discussion and future work are presented in Section 6.

2. Individualized treatment rules

We use upper case letters to denote random variables and lower case letters to denote values of the random variables. Consider data from a randomized trial. On each subject we have the pretreatment variables X ∈ 𝒳, treatment A taking values in a finite, discrete treatment space 𝒜, and a real-valued response R (assuming large values are desirable). An individualized treatment rule (ITR) d is a deterministic decision rule from 𝒳 into the treatment space 𝒜.

Denote the distribution of (X, A, R) by P. This is the distribution of the clinical trial data; in particular, denote the known randomization distribution of A given X by p(·|X). The likelihood of (X, A, R) under P is then f0(x)p(a|x)f1(r|x, a), where f0 is the unknown density of X and f1 is the unknown density of R conditional on (X, A). Denote expectations with respect to the distribution P by E. For any ITR d : 𝒳 → 𝒜, let Pd denote the distribution of (X, A, R) in which d is used to assign treatments. Then the likelihood of (X, A, R) under Pd is f0(x)1{a = d(x)}f1(r|x, a). Denote expectations with respect to the distribution Pd by Ed. The Value of d is defined as V(d) = Ed(R). An optimal ITR, d0, is a rule that has the maximal Value, i.e.

$$d_0 \in \arg\max_{d} V(d),$$

where the argmax is over all possible decision rules. The Value of d0, V(d0), is the optimal Value.

Assume P[p(a|X) > 0] = 1 for all a ∈ 𝒜 (i.e. all treatments in 𝒜 are possible for all values of X a.s.). Then Pd is absolutely continuous with respect to P and a version of the Radon-Nikodym derivative is dPd/dP = 1{a = d(x)}/p(a|x). Thus the Value of d satisfies

$$V(d) = E_d(R) = \int R \, \frac{dP_d}{dP} \, dP = E\left[\frac{1_{\{A = d(X)\}}}{p(A|X)} R\right]. \tag{2.1}$$
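Equation (2.1) suggests a direct sample analogue of the Value: replace E by an average over trial subjects. The sketch below is our illustration, not a procedure from this paper. It assumes the toy model used in Section 3 (X ~ U[−1, 1], A = ±1 with probability 1/2, R ~ N((X − 1/3)²A, 1)), under which d0(X) = 1 has Value 4/9 and the rule sign(2/3 − X) has Value 29/81; all function names are hypothetical.

```python
import random

def simulate_toy_trial(n, rng):
    """Draw n (X, A, R) triples from the toy model assumed here:
    X ~ U[-1, 1], A = +/-1 with probability 1/2, R ~ N((X - 1/3)^2 * A, 1)."""
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        a = 1 if rng.random() < 0.5 else -1
        r = rng.gauss((x - 1.0 / 3.0) ** 2 * a, 1.0)
        data.append((x, a, r))
    return data

def ipw_value(data, rule, prop):
    """Sample analogue of (2.1): average of 1{A = d(X)} * R / p(A|X)."""
    total = 0.0
    for x, a, r in data:
        if a == rule(x):
            total += r / prop(a, x)
    return total / len(data)

def prop(a, x):
    """Known randomization probability p(a|x); 1/2 for both arms in the toy model."""
    return 0.5

def d0(x):
    """Optimal rule in the toy model: always assign a = 1."""
    return 1

def d_star(x):
    """Rule sign(2/3 - x); the tie x = 2/3 has probability zero."""
    return 1 if x < 2.0 / 3.0 else -1

rng = random.Random(2011)
data = simulate_toy_trial(200_000, rng)
v0_hat = ipw_value(data, d0, prop)
v_star_hat = ipw_value(data, d_star, prop)
print(f"estimated V(d0) = {v0_hat:.3f}, estimated V(d*) = {v_star_hat:.3f}")
```

With this sample size the Monte Carlo error of each estimate is a few thousandths, so the two estimates should land near 4/9 ≈ 0.444 and 29/81 ≈ 0.358. Only the known randomization probability p(a|x) enters the weights, which is why (2.1) can be evaluated without modeling f0 or f1.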

Our goal is to estimate d0, i.e. the ITR that maximizes (2.1), using data from distribution P. When X is low dimensional and the best rule within a simple class of ITRs is desired, empirical versions of the Value can be used to construct estimators [21, 27]. However if the best rule within a larger class of ITRs is of interest, these approaches are no longer feasible.

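Define Q0(X, A) ≜ E(R|X, A) (Q0(X, A) is sometimes called the “Quality” of treatment a at observation x). It follows from (2.1) that for any ITR d,

$$V(d) = E\left[\sum_{a \in \mathcal{A}} 1_{\{d(X) = a\}} Q_0(X, a)\right] = E\left[Q_0(X, d(X))\right].$$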

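Thus $V(d_0) = E[Q_0(X, d_0(X))] \le E[\max_{a \in \mathcal{A}} Q_0(X, a)]$. On the other hand, by the definition of d0,

$$E\Big[\max_{a \in \mathcal{A}} Q_0(X, a)\Big] = V(\tilde d) \le V(d_0), \quad \text{where } \tilde d(X) \in \arg\max_{a \in \mathcal{A}} Q_0(X, a).$$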

Hence an optimal ITR satisfies $d_0(X) \in \arg\max_{a \in \mathcal{A}} Q_0(X, a)$ a.s.

3. Relating the reduction in Value to excess prediction error

The above argument indicates that the estimated ITR will be of high quality (i.e. have high Value) if we can estimate Q0 accurately. In this section, we justify this by providing a quantitative relationship between the Value and the prediction error.

Because 𝒜 is a finite, discrete treatment space, given any ITR, d, there exists a square integrable function Q : 𝒳 × 𝒜 → ℝ for which $d(X) \in \arg\max_a Q(X, a)$ a.s. Let L(Q) ≜ E[R − Q(X, A)]² denote the prediction error of Q (also called the mean quadratic loss). Suppose that Q0 is square integrable and that the randomization probability satisfies p(a|x) ≥ S⁻¹ for an S > 0 and all (x, a) pairs. Murphy [23] showed that

$$V(d_0) - V(d) \le 2\left[S\{L(Q) - L(Q_0)\}\right]^{1/2}. \tag{3.1}$$

Intuitively, this upper bound means that if the excess prediction error of Q (i.e. E(R − Q)² − E(R − Q0)²) is small, then the reduction in Value of the associated ITR d (i.e. V(d0) − V(d)) is small. Furthermore, the upper bound provides a rate of convergence for an estimated ITR. For example, suppose Q0 is linear, that is, Q0 = Φ(X, A)θ0 for a given vector-valued basis function Φ on 𝒳 × 𝒜 and an unknown parameter θ0. Suppose further that we use a correct linear model for Q0 (here “linear” means linear in parameters), say the model 𝒬 = {Φ(X, A)θ : θ ∈ ℝ^dim(Φ)} or a linear model containing 𝒬 with dimension of parameters fixed in n. If we estimate θ by least squares and denote the estimator by θ̂, then the prediction error of Q̂ = Φθ̂ converges to L(Q0) at rate 1/n under mild regularity conditions. This together with inequality (3.1) implies that the Value obtained by the estimated ITR, $\hat d(X) \in \arg\max_a \hat Q(X, a)$, will converge to the optimal Value at rate at least 1/√n.
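Spelled out, and assuming as above that L(Q̂) − L(Q0) = Op(1/n) (with L(Q̂) evaluated on a new observation while θ̂ is held fixed), the rate follows by applying (3.1) with Q = Q̂:

$$V(d_0) - V(\hat d) \le 2\left[S\{L(\hat Q) - L(Q_0)\}\right]^{1/2} = 2\left[S \cdot O_p(n^{-1})\right]^{1/2} = O_p(n^{-1/2}).$$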

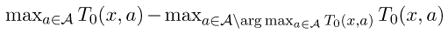

In the following theorem, we improve this upper bound in two respects. First, we show that an upper bound with exponent larger than 1/2 can be obtained under a margin condition, which implies a faster rate of convergence. Second, it turns out that the upper bound need only depend on one term in the function Q; we call this the treatment effect term, T. For any square integrable Q, the associated treatment effect term is defined as T(X, A) ≜ Q(X, A) − E[Q(X, A)|X]. Note that $d(X) \in \arg\max_a T(X, a) = \arg\max_a Q(X, a)$ a.s. Similarly, the true treatment effect term is given by

$$T_0(X, A) \triangleq Q_0(X, A) - E[Q_0(X, A) \mid X]. \tag{3.2}$$

T0(x, a) is the centered effect of treatment A = a at observation X = x; d0(X) ∈ arg maxa T0(X, a).

Theorem 3.1

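Suppose p(a|x) ≥ S⁻¹ for a positive constant S for all (x, a) pairs. Assume there exist constants C > 0 and α ≥ 0 such that

$$P\left(\max_{a \in \mathcal{A}} T_0(X, a) - \max_{a \in \mathcal{A} \setminus \arg\max_{a} T_0(X, a)} T_0(X, a) \le \varepsilon\right) \le C\varepsilon^{\alpha} \tag{3.3}$$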

for all positive ε. Then for any ITR d : 𝒳 → 𝒜 and square integrable function Q : 𝒳 × 𝒜 → ℝ such that $d(X) \in \arg\max_{a \in \mathcal{A}} Q(X, a)$ a.s., we have

$$V(d_0) - V(d) \le C'\left[L(Q) - L(Q_0)\right]^{(1+\alpha)/(2+\alpha)} \tag{3.4}$$

and

$$V(d_0) - V(d) \le C'\left[E(T - T_0)^2\right]^{(1+\alpha)/(2+\alpha)}, \tag{3.5}$$

where $C' = (2^{2+3\alpha} S^{1+\alpha} C)^{1/(2+\alpha)}$.

The proof of Theorem 3.1 is in Appendix A.1.
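The bounds (3.4) and (3.5) have the form V(d0) − V(d) ≤ C′[excess error]^{(1+α)/(2+α)}, so they are easy to evaluate numerically. The sketch below (hypothetical helper names; the excess error 0.01 is a made-up input, not a result) checks that C = 1, α = 0 reproduces the bound 2[S{L(Q) − L(Q0)}]^{1/2} of (3.1), and evaluates the margin example from the remarks, C = 1/2 and α = 1.

```python
import math

def c_prime(alpha, S, C):
    """C' = (2^(2 + 3*alpha) * S^(1 + alpha) * C)^(1 / (2 + alpha)) from Theorem 3.1."""
    return (2.0 ** (2 + 3 * alpha) * S ** (1 + alpha) * C) ** (1.0 / (2 + alpha))

def value_loss_bound(excess_error, alpha, S, C):
    """Right-hand side C' * excess_error^((1 + alpha)/(2 + alpha)) of (3.4)/(3.5)."""
    exponent = (1 + alpha) / (2 + alpha)
    return c_prime(alpha, S, C) * excess_error ** exponent

S = 2.0        # p(a|x) >= 1/S; S = 2 for two equally likely treatments
excess = 0.01  # hypothetical excess prediction error L(Q) - L(Q0)

# C = 1, alpha = 0: the bound coincides with 2 * sqrt(S * excess), i.e. (3.1).
print(value_loss_bound(excess, 0.0, S, 1.0), 2.0 * math.sqrt(S * excess))
# Margin example from the remarks (C = 1/2, alpha = 1): exponent 2/3 instead of 1/2.
print(value_loss_bound(excess, 1.0, S, 0.5))
```

For this small excess error the α = 1 bound is the smaller of the two, which is the sense in which a margin condition sharpens (3.1).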

Remarks

1. We set the second maximum in (3.3) to −∞ if, for an x, T0(x, a) is constant in a and thus the set $\mathcal{A} \setminus \arg\max_{a \in \mathcal{A}} T_0(x, a) = \emptyset$.

2. Condition (3.3) is similar to the margin condition in classification [25, 18, 32]; in classification this assumption is often used to obtain sharp upper bounds on the excess 0–1 risk in terms of other surrogate risks [1]. Here

$$\max_{a \in \mathcal{A}} T_0(x, a) - \max_{a \in \mathcal{A} \setminus \arg\max_{a} T_0(x, a)} T_0(x, a)$$

can be viewed as the “margin” of T0 at observation X = x. It measures the difference in mean responses between the optimal treatment(s) and the best suboptimal treatment(s) at x. For example, suppose X ~ U[−1, 1], P(A = 1|X) = P(A = −1|X) = 1/2 and T0(X, A) = XA. Then the margin at x is 2|x|, so P(2|X| ≤ ε) ≤ ε/2 and the margin condition holds with C = 1/2 and α = 1. Note that the margin condition does not exclude multiple optimal treatments for any observation x. However, when α > 0, it does exclude suboptimal treatments that yield a conditional mean response very close to the largest conditional mean response for a set of x with nonzero probability.

3. For C = 1 and α = 0, Condition (3.3) always holds for all ε > 0; in this case (3.4) reduces to (3.1).

4. The larger α is, the larger the exponent (1 + α)/(2 + α) and thus the stronger the upper bounds in (3.4) and (3.5). However, the margin condition is unlikely to hold for all ε if α is very large. An alternate margin condition and upper bound are as follows. Suppose p(a|x) ≥ S⁻¹ for all (x, a) pairs. Assume there is an ε > 0 such that

$$P\left(\max_{a \in \mathcal{A}} T_0(X, a) - \max_{a \in \mathcal{A} \setminus \arg\max_{a} T_0(X, a)} T_0(X, a) \ge \varepsilon\right) = 1. \tag{3.6}$$

Then V(d0) − V(d) ≤ 4S[L(Q) − L(Q0)]/ε and V(d0) − V(d) ≤ 4SE(T − T0)²/ε. The proof is essentially the same as that of Theorem 3.1 and is omitted. Condition (3.6) means that T0 evaluated at the optimal treatment(s) minus T0 evaluated at the best suboptimal treatment(s) is bounded below by a positive constant for almost all X observations. If X assumes only a finite number of values, then this condition always holds, because we can take ε to be the smallest difference in T0 when evaluated at the optimal treatment(s) and the suboptimal treatment(s) (note that if T0(x, a) is constant for all a ∈ 𝒜 for some observation X = x, then all treatments are optimal for that observation).

5. Inequality (3.5) cannot be improved in the sense that choosing T = T0 yields zero on both sides of the inequality. Moreover, an inequality in the opposite direction is not possible, since each ITR is associated with many non-trivial T-functions. For example, suppose X ~ U[−1, 1], P(A = 1|X) = P(A = −1|X) = 1/2 and T0(X, A) = (X − 1/3)²A. The optimal ITR is d0(X) = 1 a.s. Consider T(X, A) = θA. Then maximizing T(X, A) yields the optimal ITR as long as θ > 0. This means that the left hand side (LHS) of (3.5) is zero, while the right hand side (RHS), which depends on E(T − T0)² = E[θ − (X − 1/3)²]², is always positive no matter what value θ takes.
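The margin example in remark 2 can be checked by simulation. The sketch below is our illustration with hypothetical helper names. It computes the margin of T0(x, a) = xa (which equals 2|x|), returns +∞ when every treatment is optimal, following remark 1, and compares the empirical frequency of {margin ≤ ε} with Cε^α for C = 1/2 and α = 1. Because P(2|X| ≤ ε) = ε/2 exactly for ε ≤ 2, the frequencies should track ε/2 closely.

```python
import random

def margin_xa(x, treatments=(-1, 1)):
    """Margin of T0(x, a) = x * a: best value minus best suboptimal value.
    Returns +inf when every treatment is optimal (second max set to -inf)."""
    values = [x * a for a in treatments]
    best = max(values)
    suboptimal = [v for v in values if v < best]
    if not suboptimal:
        return float("inf")
    return best - max(suboptimal)

rng = random.Random(0)
margins = [margin_xa(rng.uniform(-1.0, 1.0)) for _ in range(100_000)]

C, alpha = 0.5, 1.0
freq = {}
for eps in (0.05, 0.2, 0.5, 1.0):
    freq[eps] = sum(m <= eps for m in margins) / len(margins)
    print(f"eps={eps}: empirical P(margin <= eps) = {freq[eps]:.4f}, "
          f"C*eps^alpha = {C * eps ** alpha:.4f}")
```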

Theorem 3.1 supports the approach of minimizing the estimated prediction error to estimate Q0 or T0 and then maximizing this estimator over a ∈ 𝒜 to obtain an ITR. It is natural to expect that even when the approximation space used in estimating Q0 or T0 does not contain the truth, this approach will provide the best (highest Value) of the considered ITRs. Unfortunately this does not occur, due to the mismatch between the loss functions (weighted 0–1 loss and quadratic loss). This mismatch is indicated by remark 5 above. More precisely, note that the approximation space, say 𝒬 for Q0, places implicit restrictions on the class of ITRs that will be considered. In effect, the class of ITRs is $\mathcal{D} = \{d(X) \in \arg\max_a Q(X, a) : Q \in \mathcal{Q}\}$. It turns out that minimizing the prediction error may not result in the ITR in 𝒟 that maximizes the Value. This occurs when the approximation space 𝒬 does not provide a treatment effect term close to the treatment effect term in Q0. In the following toy example, the optimal ITR d0 belongs to 𝒟, yet the prediction error minimizer over 𝒬 does not yield d0.

A toy example

Suppose X is uniformly distributed in [−1, 1], A is binary {−1, 1} with probability 1/2 each and is independent of X, and R is normally distributed with mean Q0(X, A) = (X − 1/3)²A and variance 1. It is easy to see that the optimal ITR satisfies d0(X) = 1 a.s. and V(d0) = E(X − 1/3)² = 4/9. Consider the approximation space 𝒬 = {Q(X, A; θ) = (1, X, A, XA)θ : θ ∈ ℝ⁴} for Q0. Thus the space of ITRs under consideration is 𝒟 = {d(X) = sign(θ3 + θ4X) : θ3, θ4 ∈ ℝ}. Note that d0 ∈ 𝒟, since d0(X) can be written as sign(θ3 + θ4X) for any θ3 > 0 and θ4 = 0; hence d0 is the best treatment rule in 𝒟. However, minimizing the prediction error L(Q) over 𝒬 yields Q*(X, A) = (4/9 − 2X/3)A. The ITR associated with Q* is $d^*(X) = \arg\max_{a \in \{-1, 1\}} Q^*(X, a) = \operatorname{sign}(2/3 - X)$, which has lower Value than d0: V(d*) = E[(X − 1/3)² sign(2/3 − X)] = 29/81 < 4/9 = V(d0).
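The numbers in the toy example can be reproduced by one-dimensional integration. Because A is independent of X with E(A) = 0 and A² = 1, the columns A and XA are orthogonal in L2(P) to 1, X and to each other, and Q0 is orthogonal to 1 and X; so the least squares coefficients of A and XA are θ3 = E(X − 1/3)² and θ4 = E[X(X − 1/3)²]/E(X²). The sketch below (hypothetical helper names) evaluates these coefficients and the two Values with the midpoint rule.

```python
def uniform_mean(g, n_grid=100_000):
    """E[g(X)] for X ~ U[-1, 1], approximated by the midpoint rule on n_grid cells."""
    h = 2.0 / n_grid
    return sum(g(-1.0 + (i + 0.5) * h) for i in range(n_grid)) / n_grid

def q0_effect(x):
    """Toy model: Q0(x, a) = (x - 1/3)^2 * a."""
    return (x - 1.0 / 3.0) ** 2

# Least squares coefficients of the A and XA columns (orthogonal design, see text).
theta3 = uniform_mean(q0_effect)
theta4 = uniform_mean(lambda x: x * q0_effect(x)) / uniform_mean(lambda x: x * x)

def value(rule):
    """V(d) = E[Q0(X, d(X))] for a rule taking values in {-1, 1}."""
    return uniform_mean(lambda x: q0_effect(x) * rule(x))

def d0(x):
    return 1

def d_star(x):
    """argmax over a in {-1, 1} of Q*(x, a) = (theta3 + theta4 * x) * a."""
    return 1 if theta3 + theta4 * x > 0 else -1

print(theta3, theta4, value(d0), value(d_star))
```

The printed coefficients agree with 4/9 and −2/3, and the Values with 4/9 and 29/81, up to quadrature error.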

4. Estimation via l1-penalized least squares

To deal with the mismatch between minimizing the prediction error and maximizing the Value discussed in the prior section, we consider a large linear approximation space 𝒬 for Q0. Since overfitting is likely (due to the potentially large number of pretreatment variables and/or large approximation space for Q0), we use penalized least squares (see Section S.1 of the supplementary material for further discussion of the overfitting problem). Furthermore, we use l1-penalized least squares (l1-PLS, [31]) because the l1 penalty performs some variable selection and as a result leads to ITRs that are cheaper to implement (fewer variables to collect per patient) and easier to interpret. See Section 6 for a discussion of other potential penalization methods.

Let {(Xi, Ai, Ri), i = 1, …, n} represent i.i.d. observations on n subjects in a randomized trial. For convenience, we use En to denote the associated empirical expectation (i.e. $E_n f(X, A, R) = n^{-1}\sum_{i=1}^{n} f(X_i, A_i, R_i)$ for any real-valued function f on 𝒳 × 𝒜 × ℝ). Let 𝒬 := {Q(X, A; θ) = Φ(X, A)θ, θ ∈ ℝ^J} be the approximation space for Q0, where Φ(X, A) = (φ1(X, A), …, φJ(X, A)) is a 1 by J vector composed of basis functions on 𝒳 × 𝒜, θ is a J by 1 parameter vector, and J is the number of basis functions (for clarity here J will be fixed in n; see Appendix A.2 for results with J increasing as n increases). The l1-PLS estimator of θ is

$$\hat\theta_n = \arg\min_{\theta \in \mathbb{R}^J} \left\{ E_n\left[R - \Phi(X, A)\theta\right]^2 + \lambda_n \sum_{j=1}^{J} \hat\sigma_j |\theta_j| \right\}, \tag{4.1}$$

where σ̂j = [Enφj(X, A)²]^{1/2}, θj is the jth component of θ, and λn is a tuning parameter that controls the amount of penalization. The weights σ̂j are used to balance the scale of different basis functions; these weights were also used in Bunea et al. [4] and van de Geer [33]. In some situations, it is natural to penalize only a subset of coefficients and/or use different weights in the penalty; see Section S.2 of the supplementary material for the required modifications. The resulting estimated ITR satisfies

$$\hat d_n(X) \in \arg\max_{a \in \mathcal{A}} \Phi(X, a)\hat\theta_n. \tag{4.2}$$
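A common way to compute a weighted l1-penalized least squares fit of this kind is cyclic coordinate descent. The sketch below is our illustration, not the authors' software. It assumes the objective En[R − Φθ]² + λn Σj σ̂j|θj|, the toy basis Φ(X, A) = (1, X, A, XA) of Section 3, simulated toy data, and hypothetical helper names. Holding the other coordinates fixed, the objective in θj equals σ̂j²θj² − 2bjθj + λnσ̂j|θj| plus a constant, where bj = En[φj × (partial residual)], so its exact minimizer is the soft-threshold S(bj, λnσ̂j/2)/σ̂j². The estimated ITR then follows (4.2).

```python
import random

def soft_threshold(z, t):
    """S(z, t) = sign(z) * max(|z| - t, 0)."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0

def l1_pls(features, responses, lam, max_sweeps=200, tol=1e-10):
    """Cyclic coordinate descent for
    (1/n) * sum_i (r_i - phi_i . theta)^2 + lam * sum_j sigma_hat_j * |theta_j|.
    Assumes every column of `features` is nonzero (sigma_hat_j > 0)."""
    n, J = len(features), len(features[0])
    sigma_hat = [(sum(row[j] ** 2 for row in features) / n) ** 0.5 for j in range(J)]
    theta = [0.0] * J
    resid = list(responses)  # current residuals r_i - phi_i . theta
    for _ in range(max_sweeps):
        max_change = 0.0
        for j in range(J):
            # b_j = E_n[phi_j * (residual with coordinate j added back)]
            b = sum(row[j] * (e + row[j] * theta[j]) for row, e in zip(features, resid)) / n
            new = soft_threshold(b, lam * sigma_hat[j] / 2.0) / sigma_hat[j] ** 2
            delta = new - theta[j]
            if delta != 0.0:
                for i, row in enumerate(features):
                    resid[i] -= row[j] * delta
                theta[j] = new
                max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return theta

def phi(x, a):
    """Toy basis (1, x, a, x*a), assumed for illustration."""
    return [1.0, x, float(a), x * a]

def estimated_itr(theta, treatments=(-1, 1)):
    """Rule (4.2): choose the treatment a maximizing Phi(x, a) . theta."""
    def rule(x):
        return max(treatments, key=lambda a: sum(p * t for p, t in zip(phi(x, a), theta)))
    return rule

rng = random.Random(1)
rows, ys = [], []
for _ in range(5000):
    x = rng.uniform(-1.0, 1.0)
    a = 1 if rng.random() < 0.5 else -1
    rows.append(phi(x, a))
    ys.append(rng.gauss((x - 1.0 / 3.0) ** 2 * a, 1.0))

theta_ols = l1_pls(rows, ys, lam=0.0)  # lam = 0: ordinary least squares
theta_pen = l1_pls(rows, ys, lam=0.1)  # penalized fit, shrunk toward zero
d_hat = estimated_itr(theta_pen)
print(theta_ols, theta_pen, d_hat(0.0), d_hat(0.9))
```

Setting λn = 0 recovers ordinary least squares, while larger values shrink coefficients and set some exactly to zero, which is the variable-selection behavior that motivates l1-PLS. The value 0.1 is arbitrary here; the sketch makes no claim about how λn should be chosen.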

4.1. Performance guarantee for the l1-PLS

In this section we provide finite sample upper bounds on the difference between the optimal Value and the Value obtained by the l1-PLS estimator in terms of the prediction errors resulting from the estimation of Q0 and T0. These upper bounds guarantee that if Q0 (or T0) is consistently estimated, the estimator of d0 will be consistent and will inherit a rate of convergence from the rate of convergence of the estimator of Q0 (or T0). Perhaps more importantly, the finite sample upper bounds provided below do not require the assumption that either Q0 or T0 is consistently estimated. Thus each upper bound includes approximation error as well as estimation error. The estimation error decreases as the model becomes sparser (fewer nonzero coefficients) and as the sample size increases. An “oracle” model for Q0 (or T0) minimizes the sum of these two errors among suitably sparse linear models (see remark 2 after Theorem 4.3 for a precise definition of the oracle model). In finite samples, the upper bounds imply that d̂n, the ITR produced by the l1-PLS method, will have Value roughly as if the l1-PLS method detects the sparsity of the oracle model and then estimates from the oracle model using ordinary least squares (see remark 3 below).

Define the prediction error minimizer θ* ∈ ℝJ by

$$\theta^* \in \arg\min_{\theta \in \mathbb{R}^J} L(\Phi\theta). \tag{4.3}$$

For expositional simplicity assume that θ* is unique, and define the sparsity of θ ∈ ℝ^J by its l0 norm, ||θ||0 (see Appendix A.2 for a more general setting, where θ* is not unique and a laxer definition of sparsity is used). As discussed above, for finite n, instead of estimating θ*, the l1-PLS estimator θ̂n estimates a parameter, $\theta_n^{**}$, possessing small prediction error but with controlled sparsity. For any bounded function f on 𝒳 × 𝒜, let $\|f\|_\infty \triangleq \sup_{x \in \mathcal{X}, a \in \mathcal{A}} |f(x, a)|$. The parameter $\theta_n^{**}$ lies in the set of parameters Θn defined by

| (4.4) |

where σj = [Eφj(X, A)²]^{1/2}, and η, β and U are positive constants that will be defined in Theorem 4.1.

The first two conditions in (4.4) restrict Θn to θ’s with controlled distance in sup norm and with controlled distance in prediction error via first order derivatives (note that ∂L(Φθ)/∂θj = 2E[(Φθ − Q0)φj], which vanishes at θ = θ*). The third condition restricts Θn to sparse θ’s. Note that as n increases this sparsity requirement becomes laxer, ensuring that θ* ∈ Θn for sufficiently large n.

When Θn is non-empty, $\theta_n^{**}$ is given by

| (4.5) |

Note that $\theta_n^{**}$ is at least as sparse as θ*, since by (4.3), L(Φθ) ≥ L(Φθ*) for any θ such that ||θ||0 > ||θ*||0.

The following theorem provides a finite sample performance guarantee for the ITR produced by the l1-PLS method. Intuitively, this result implies that if Q0 can be well approximated by a sparse linear representation (so that both $L(\Phi\theta_n^{**}) - L(Q_0)$ and $\|\theta_n^{**}\|_0$ are small), then d̂n will have Value close to the optimal Value in finite samples.

Theorem 4.1

Suppose p(a|x) ≥ S⁻¹ for a positive constant S for all (x, a) pairs and the margin condition (3.3) holds for some C > 0, α ≥ 0 and all positive ε. Assume

1. the error terms εi = Ri − Q0(Xi, Ai), i = 1, …, n, are independent of (Xi, Ai), i = 1, …, n, and are i.i.d. with E(εi) = 0 and $E|\varepsilon_i|^l \le l!\, c^{l-2} \sigma^2 / 2$ for some c, σ² > 0 and all l ≥ 2;

2. there exist finite, positive constants U and η such that $\max_{j=1,\ldots,J} \|\phi_j\|_\infty/\sigma_j \le U$ and ||Q0 − Φθ*||∞ ≤ η; and

3. E[(φ1/σ1, …, φJ/σJ)ᵀ(φ1/σ1, …, φJ/σJ)] is positive definite, with smallest eigenvalue denoted by β.

Consider the estimated ITR d̂n defined by (4.2) with tuning parameter

| (4.6) |

where k = 82 max{c, σ, η}. Let Θn be the set defined in (4.4). Then for any n ≥ 24U² log(Jn) for which Θn is non-empty, we have, with probability at least 1 − 1/n, that

| (4.7) |

where $C' = (2^{2+3\alpha} S^{1+\alpha} C)^{1/(2+\alpha)}$.

The result follows from inequality (3.4) in Theorem 3.1 and inequality (4.10) in Theorem 4.3. Similar results in a more general setting can be obtained by combining (3.4) with inequality (A.7) in Appendix A.2.

Remarks

1. Note that $\theta_n^{**}$ is the minimizer of the upper bound on the RHS of (4.7) and that $\theta_n^{**}$ is contained in the set { : m ⊂ {1, …, J}}. Each satisfies ; that is, minimizes the prediction error of the model indexed by the set m (i.e. model {Σj∈m φjθj : θj ∈ ℝ}) (within Θn). For each , the first term in the upper bound in (4.7) (i.e. ) is the approximation error of the model indexed by m within Θn. As in van de Geer [33], we call the second term the estimation error of the model indexed by m. To see why, first put . Then, ignoring the log(n) factor, the second term is a function of the sparsity of model m relative to the sample size, n. Up to constants, the second term is a “tight” upper bound for the estimation error of the OLS estimator from model m, where “tight” means that the convergence rate in the bound is the best known rate. Note that $\theta_n^{**}$ is the parameter that minimizes the sum of the two errors over all models. Such a model (the model corresponding to $\theta_n^{**}$) is called an oracle model. By using the l1-PLS method, we pay a factor of log(n) in the estimation error, and in exchange the l1-PLS estimator behaves roughly as if it knew the sparsity of the oracle model and as if it were estimated from the oracle model using OLS. Thus the log(n) factor can be viewed as the price paid for not knowing the sparsity of the oracle model and thus having to conduct model selection. See remark 2 after Theorem A.1 for the precise definition of the oracle model and its relationship to $\theta_n^{**}$.

2. Suppose λn = o(1). Then in large samples the estimation error term is negligible. In this case, $\theta_n^{**}$ is close to θ*. When the model Φθ* approximates Q0 sufficiently well, we see that setting λn equal to its lower bound in (4.6) provides the fastest rate of convergence of the upper bound to zero. More precisely, suppose Q0 = Φθ* (i.e. L(Φθ*) − L(Q0) = 0). Then inequality (4.7) implies that $V(d_0) - V(\hat d_n) \le O_p\big((\log n/n)^{(1+\alpha)/(2+\alpha)}\big)$. A convergence in mean result is presented in Corollary 4.1.

3. In finite samples, the estimation error is nonnegligible. The argument of the minimum in the upper bound (4.7), $\theta_n^{**}$, minimizes prediction error among parameters with controlled sparsity. In remark 2 after Theorem 4.3, we discuss how this upper bound is a tight upper bound for the OLS estimator from an oracle model in the step-wise model selection setting. In this sense, inequality (4.7) implies that the decision rule produced by the l1-PLS method will have a reduction in Value roughly as if it knew the sparsity of the oracle model and were estimated from the oracle model using OLS.

4. Assumptions 1–3 in Theorem 4.1 are employed to derive the finite sample prediction error bound for the l1-PLS estimator θ̂n defined in (4.1). Below we briefly discuss these assumptions.

   Assumption 1 implies that the error terms do not have heavy tails. This condition is often assumed to show that the sample mean of a variable is concentrated around its true mean with high probability. It is easy to verify that this assumption holds if each εi is bounded. Moreover, it also holds for some commonly used error distributions that have unbounded support, such as the normal or double exponential.

   Assumption 2 is also used to show the concentration of the sample mean around the true mean. It is possible to replace the boundedness condition by a moment condition similar to Assumption 1. This assumption requires that all basis functions and the difference between Q0 and its best linear approximation are bounded. Note that we do not assume 𝒬 to be a good approximation space for Q0. However, if Φθ* approximates Q0 well, η will be small, which will result in a smaller upper bound in (4.7). In fact, in the generalized result (Theorem A.1) we allow U and η to increase in n.

   Assumption 3 is employed to avoid collinearity. In fact, we only need

   | (4.8) |

   for θ, θ′ belonging to a subset of ℝ^J (see Assumption A.3), where M0(θ) ≜ {j = 1, …, J : θj ≠ 0}. Condition (4.8) has been used in van de Geer [33]. This condition is also similar to the restricted eigenvalue assumption in Bickel et al. [3], in which E is replaced by En and a fixed design matrix is considered. Clearly, Assumption 3 is a sufficient condition for (4.8). In addition, condition (4.8) is satisfied if the correlation |Eφjφk|/(σjσk) is small for all k ∈ M0(θ), j ≠ k and a subset of θ’s (similar results in a fixed design setting have been proved in Bickel et al. [3]; the condition on correlation is also known as the “mutual coherence” condition in Bunea et al. [4]). See Bickel et al. [3] for other sufficient conditions for (4.8).
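The correlation condition in remark 4 is easy to inspect on data. The sketch below computes an empirical analogue of max over j ≠ k of |Eφjφk|/(σjσk), with En in place of E and uncentered second moments as in the remark. The two bases are hypothetical examples: the toy basis (1, X, A, XA) of Section 3, whose population correlations are all zero, and a basis with two nearly identical columns, which violates the condition. This only screens for pairwise collinearity; it does not verify (4.8) itself.

```python
import random

def mutual_coherence(features):
    """max over j != k of |E_n[phi_j phi_k]| / (sigma_hat_j * sigma_hat_k),
    an empirical analogue of |E phi_j phi_k| / (sigma_j sigma_k)."""
    n, J = len(features), len(features[0])
    gram = [[sum(row[j] * row[k] for row in features) / n for k in range(J)] for j in range(J)]
    sigma_hat = [gram[j][j] ** 0.5 for j in range(J)]
    return max(abs(gram[j][k]) / (sigma_hat[j] * sigma_hat[k])
               for j in range(J) for k in range(J) if j != k)

rng = random.Random(3)
toy_rows, collinear_rows = [], []
for _ in range(20_000):
    x = rng.uniform(-1.0, 1.0)
    a = 1 if rng.random() < 0.5 else -1
    toy_rows.append([1.0, x, float(a), x * a])  # toy basis of Section 3
    # hypothetical basis with a near-duplicate of the x column
    collinear_rows.append([1.0, x, x + 0.01 * rng.gauss(0.0, 1.0)])

print(mutual_coherence(toy_rows), mutual_coherence(collinear_rows))
```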

The above upper bound for V(d0) − V(d̂n) involves L(Φθ) − L(Q0), which measures how well the conditional mean function Q0 is approximated by the linear model {Φθ: θ ∈ ℝJ}. As we have seen in Section 3, the quality of the estimated ITR depends only on the estimator of the treatment effect term T0. Below we provide a strengthened result in the sense that the upper bound depends only on how well we approximate the treatment effect term.

First we identify terms in the linear model that approximate T0 (recall that T0(X, A) ≜ Q0(X, A) − E[Q0(X, A)|X]). Without loss of generality, we rewrite the vector of basis functions as Φ(X, A) = (Φ(1)(X), Φ(2)(X, A)), where Φ(1) = (φ1(X), …, φJ(1)(X)) is composed of all components in Φ that do not contain A and Φ(2) = (φJ(1)+1(X, A), …, φJ(X, A)) is composed of all components in Φ that contain A. Since A takes only finitely many values and the randomization distribution p(a|x) is known, we can code A so that E[Φ(2)(X, A)T|X] = 0 a.s. (see Section 5.2 and Appendix A.3 for examples). For any θ = (θ1, …, θJ)T ∈ ℝJ, denote θ(1) = (θ1, …, θJ(1))T and θ(2) = (θJ(1)+1, …, θJ)T. Then Φ(1)θ(1) approximates E(Q0(X, A)|X) and Φ(2)θ(2) approximates T0.
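A small sketch of why such a coding works: each component of Φ(2) has the form φ(X)·c(A) for some code c, so E[φ(X)c(A)|X] = φ(X)·Σa p(a|X)c(a), and it suffices that the code has conditional mean zero. The check below uses exact fractions; the three-arm probabilities assume 1:1:1 randomization, and `centered_code` (subtracting the conditional mean when randomization is unequal) is our illustration rather than the paper's prescription.

```python
from fractions import Fraction


def conditional_mean_of_code(code, probs):
    """E[code(A) | X = x] for a finite treatment set with known p(a | x)."""
    return sum(probs[a] * code[a] for a in probs)


def centered_code(code, probs):
    """Shift the code so that its conditional mean given X = x is zero."""
    m = conditional_mean_of_code(code, probs)
    return {a: code[a] - m for a in code}


# Binary treatment coded -1/+1 with p(a | x) = 1/2 (as in Section 5.1).
binary_probs = {-1: Fraction(1, 2), 1: Fraction(1, 2)}
binary_code = {-1: -1, 1: 1}

# Three arms with the contrast codes of Section 5.2, assuming 1:1:1 randomization.
arm_probs = {"combination": Fraction(1, 3), "CBASP": Fraction(1, 3), "nefazodone": Fraction(1, 3)}
A1 = {"combination": 2, "CBASP": -1, "nefazodone": -1}
A2 = {"combination": 0, "CBASP": 1, "nefazodone": -1}

print(conditional_mean_of_code(binary_code, binary_probs))  # 0
print(conditional_mean_of_code(A1, arm_probs))              # 0
print(conditional_mean_of_code(A2, arm_probs))              # 0

# Unequal (hypothetical) randomization: centring restores the zero conditional mean.
skewed = {-1: Fraction(1, 3), 1: Fraction(2, 3)}
print(centered_code(binary_code, skewed))  # {-1: Fraction(-4, 3), 1: Fraction(2, 3)}
```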

The following theorem implies that if the treatment effect term T0 can be well approximated by a sparse representation, then d̂n will have Value close to the optimal Value.

Theorem 4.2

Suppose p(a|x) ≥ S⁻¹ for a positive constant S for all (x, a) pairs and the margin condition (3.3) holds for some C > 0, α ≥ 0 and all positive ε. Assume E[Φ(2)(X, A)T|X] = 0 a.s. Suppose Assumptions 1–3 in Theorem 4.1 hold. Let d̂n be the estimated ITR with λn satisfying condition (4.6). Let Θn be the set defined in (4.4). Then for any n ≥ 24U² log(Jn) for which Θn is non-empty, we have, with probability at least 1 − 1/n, that

| (4.9) |

where $C' = \left(2^{2+3\alpha} S^{1+\alpha} C\right)^{1/(2+\alpha)}$.

The result follows from inequality (3.5) in Theorem 3.1 and inequality (4.11) in Theorem 4.3.

Remarks

Inequality (4.9) improves inequality (4.7) in the sense that it guarantees a small reduction in Value of d̂n as long as the treatment effect term T0 is well approximated by a sparse linear representation; it does not require that the entire conditional mean function Q0 be well approximated. In many situations Q0 may be very complex, but T0 could be very simple. Thus T0 is much more likely than Q0 to be well approximated (indeed, if there is no difference between treatments, then T0 ≡ 0).

Inequality (4.9) cannot be improved in the sense that if there is no treatment effect (i.e. T0 ≡ 0), then both sides of the inequality are zero. This result implies that minimizing the penalized empirical prediction error indeed yields high Value (at least asymptotically) if T0 can be well approximated.

The following asymptotic result follows from Theorem 4.2. Note that when E[Φ(2)(X, A)T|X] = 0 a.s. (see Section 5 for examples), E(Φθ − Q0)² = E(Φ(1)θ(1) − E(Q0|X))² + E(Φ(2)θ(2) − T0)², because Φ(2)θ(2) − T0 has conditional mean zero given X, so the cross term vanishes. Thus the estimation of the treatment effect term T0 is asymptotically separated from the estimation of the main effect term E(Q0|X). In this case, Φ(2)θ(2),* is the best linear approximation of the treatment effect term T0, where θ(2),* is the vector of components in θ* corresponding to Φ(2).
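A quick Monte Carlo check of this orthogonal decomposition, using the design and Q0 of example 2 in Appendix A.3 (X ~ U[−1, 1]⁵, A = ±1 with probability 1/2 each) and an arbitrary, hypothetical θ. The two sides agree up to simulation error because the cross term has conditional mean zero given X.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200_000
X = rng.uniform(-1, 1, size=(n, 5))
A = rng.choice([-1.0, 1.0], size=n)              # p(a | x) = 1/2, hence E[A | X] = 0

main = 1 + 2 * X[:, 0] + X[:, 1] + 0.5 * X[:, 2]   # E(Q0 | X) in example 2
T0 = 0.424 * (1 - X[:, 0] - X[:, 1]) * A           # treatment effect term
Q0 = main + T0

Phi1 = np.column_stack([np.ones(n), X])            # components without A
Phi2 = Phi1 * A[:, None]                           # components with A: (A, XA)
theta1 = np.array([0.5, 0.0, 0.0, 0.0, 0.0, 0.0])  # arbitrary illustrative values
theta2 = np.array([0.3, 0.1, 0.0, 0.0, 0.0, 0.0])

lhs = np.mean((Phi1 @ theta1 + Phi2 @ theta2 - Q0) ** 2)
rhs = np.mean((Phi1 @ theta1 - main) ** 2) + np.mean((Phi2 @ theta2 - T0) ** 2)
print(lhs, rhs)   # equal up to Monte Carlo error
```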

Corollary 4.1

Suppose p(a|x) ≥ S⁻¹ for a positive constant S for all (x, a) pairs and the margin condition (3.3) holds for some C > 0, α ≥ 0 and all positive ε. Assume E[Φ(2)(X, A)T|X] = 0 a.s. In addition, suppose Assumptions 1–3 in Theorem 4.1 hold. Let d̂n be the estimated ITR with tuning parameter for a constant k1 ≥ 82 max{c, σ, η}. If T0(X, A) = Φ(2)θ(2),*, then

This result provides a guarantee on the convergence rate of V(d̂n) to the optimal Value. More specifically, it means that if T0 is correctly specified by the linear model (i.e. T0 = Φ(2)θ(2),*), then the Value of d̂n converges to the optimal Value in mean at a rate at least as fast as $(\log n/n)^{(1+\alpha)/(2+\alpha)}$ with an appropriate choice of λn.

4.2. Prediction error bound for the l1-PLS estimator

In this section we provide a finite sample upper bound for the prediction error of the l1-PLS estimator θ̂n. This result is needed to prove Theorem 4.1. Furthermore, this result strengthens the existing literature on the l1-PLS method in prediction. Finite sample prediction error bounds for the l1-PLS estimator in the random design setting have been provided in Bunea et al. [4] for quadratic loss, van de Geer [33] mainly for Lipschitz loss, and Koltchinskii [15] for a variety of loss functions. With regard to quadratic loss, Koltchinskii [15] requires that the response Y be bounded, while both Bunea et al. [4] and van de Geer [33] assume the existence of a sparse θ ∈ ℝJ such that E(Φθ − Q0)² is upper bounded by a quantity that decreases to 0 at a certain rate as n → ∞ (by permitting J to increase with n so that Φ depends on n as well). We improve on these results in the sense that we make no such assumptions (see Appendix A.2 for results when Φ and J are indexed by n and J increases with n).

As in the prior sections, the sparsity of θ is measured by its l0 norm, ||θ||0 (see Appendix A.2 for proofs with a laxer definition of sparsity). Recall that the parameter defined in (4.5) has small prediction error and controlled sparsity.

Theorem 4.3

Suppose Assumptions 1–3 in Theorem 4.1 hold. For any η1 ≥ 0, let θ̂n be the l1-PLS estimator defined by (4.1) with tuning parameter λn satisfying condition (4.6). Let Θn be the set defined in (4.4). Then for any n ≥ 24U² log(Jn) for which Θn is non-empty, we have, with probability at least 1 − 1/n, that

| (4.10) |

Furthermore, suppose E[Φ(2)(X, A)T|X] = 0 a.s. Then with probability at least 1 − 1/n,

| (4.11) |

The results follow from Theorem A.1 in Appendix A.2 with ρ = 0, γ = 1/8, η1 = η2 = η, t = log 2n and some simple algebra (note that Assumption 3 in Theorem 4.1 is a sufficient condition for Assumptions A.3 and A.4).

Remarks

Inequality (4.11) provides a finite sample upper bound on the mean square difference between T0 and its estimator. This result is used to prove Theorem 4.2. The remarks below discuss how inequality (4.10) contributes to the l1-penalization literature in prediction.

The conclusion of Theorem 4.3 holds for all choices of λn that satisfy (4.6). Suppose λn = o(1); then as n → ∞ (since ||θ||0 is bounded). Then (4.10) implies that L(Φθ̂n) − L(Φθ*) → 0 in probability. To achieve the best rate of convergence, (4.6) should hold with equality.


Note that minimizes . Below we demonstrate that the minimum of can be viewed as the approximation error plus a “tight” upper bound on the estimation error of an “oracle” in the stepwise model selection framework (when “=” is taken in (4.6)). Here “tight” means that the convergence rate in the bound is the best known rate, and “oracle” is defined as follows. Let m denote a non-empty subset of the index set {1, …, J}. Then each m represents a model that uses a non-empty subset of {φ1, …, φJ} as basis functions (there are 2^J − 1 such subsets). Define and θ*,(m) = arg min{θ ∈ ℝJ: θj = 0 for all j ∉ m} L(Φθ). In this setting, an ideal model selection criterion will pick model m* such that . is referred to as an “oracle” in Massart [20]. Note that the excess prediction error of each can be written as the sum of two terms, where the first term is called the approximation error of model m and the second term is the estimation error. It can be shown [2] that, under appropriate technical conditions, for each model m and xm > 0 the estimation error satisfies an upper bound depending on xm and |m| with probability at least 1 − exp(−xm), where |m| is the cardinality of the index set m. To our knowledge this is the best rate known so far. Taking xm = log n + |m| log J and using the union bound argument, we have with probability at least 1 − O(1/n),

| (4.12) |

On the other hand, take λn so that condition (4.6) holds with “=”. Then (4.10) implies that, with probability at least 1 − 1/n, a bound of the same form holds, which is essentially (4.12) with the constraint θ ∈ Θn. (The “constant” in the above inequalities may take different values.) Since minimizes the approximation error plus a tight upper bound for the estimation error in the oracle model, within θ ∈ Θn, we refer to as an oracle.
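The O(1/n) failure probability in the union-bound step can be checked by direct arithmetic: with xm = log n + |m| log J, summing exp(−xm) over all 2^J − 1 non-empty models gives, by the binomial theorem, ((1 + 1/J)^J − 1)/n ≤ (e − 1)/n. The sketch below (function name ours) evaluates the sum model size by model size.

```python
import math


def union_bound_total(J, n):
    """Sum of exp(-x_m) over all 2**J - 1 non-empty models, x_m = log n + |m| log J."""
    total = 0.0
    for size in range(1, J + 1):
        # math.comb(J, size) models share the same |m| = size
        total += math.comb(J, size) * math.exp(-(math.log(n) + size * math.log(J)))
    return total


J, n = 12, 500
print(union_bound_total(J, n), ((1 + 1 / J) ** J - 1) / n, (math.e - 1) / n)
```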


The result can be used to emphasize that the l1 penalty behaves similarly to the l0 penalty. Note that θ̂n minimizes the empirical prediction error, En(R − Φθ)², plus an l1 penalty, whereas θ**(un) minimizes the prediction error L(Φθ) plus an l0 penalty. We provide an intuitive connection between these two quantities. First note that En(R − Φθ)² estimates L(Φθ) and σ̂j estimates σj. We use “≈” to denote this relationship. Thus

| (4.13) |

where θ̂n,j is the jth component of θ̂n. In Appendix B we show that for any θ ∈ Θn, is upper bounded by up to a constant with high probability. Thus θ̂n minimizes (4.13) and θ**(un) roughly minimizes an upper bound of (4.13).

The constants involved in the theorem can be improved; we focused on readability as opposed to providing the best constants.

5. A Practical Implementation and an Evaluation

In this section we develop a practical implementation of the l1-PLS method, compare this method to two commonly used alternatives and lastly illustrate the method using the motivating data from the Nefazodone-CBASP trial [13].

A realistic implementation of the l1-PLS method should use a data-dependent method to select the tuning parameter, λn. Since the primary goal is to maximize the Value, we select λn to maximize a cross-validated Value estimator. For any ITR d, it is easy to verify that E[(R − V(d))1{A=d(X)}/p(A|X)] = 0. Thus an unbiased estimator of V(d) is

[21] (recall that the randomization distribution p(a|X) is known). We split the data into 10 roughly equal-sized parts; then for each λn we apply the l1-PLS based method to each combination of 9 parts to obtain an ITR, and estimate the Value of this ITR using the remaining part; the λn that maximizes the average of the 10 estimated Values is selected. Since the Value of an ITR is discontinuous in the parameters, this usually results in a set of candidate λn’s achieving the maximal Value. In the simulations below the resulting λn is nonunique in around 97% of the data sets. If necessary, as a second step we reduce the set of λn’s by keeping only those λn’s leading to ITRs that use the fewest variables. In the simulations below this second criterion reduced the number of candidate λn’s in around 25% of the data sets; however, multiple λn’s still remained in around 90% of the data sets. This is not surprising since the Value of an ITR depends only on the relative magnitudes of the parameters in the ITR. In the third step we select the λn that minimizes the 10-fold cross-validated prediction error estimator from the remaining candidate λn’s; that is, minimization of the empirical prediction error is used as a final tie breaker.
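Solving the empirical analogue of the estimating equation above for V(d) gives the weighted average in `value_estimate` below; we assume this is the estimator meant (in the spirit of [21]). The numerator alone divided by n is also unbiased, since the weights 1{A=d(X)}/p(A|X) have mean one. `select_lambda` sketches the three-step tie-breaking rule just described, assuming the 10-fold cross-validated Values, variable counts and prediction errors have already been computed for each candidate λn. Both function names are hypothetical.

```python
import numpy as np


def value_estimate(R, A, recommended, propensity):
    """Weighted Value estimate of an ITR on held-out data.

    Solves the empirical estimating equation
        E_n[(R - V) 1{A = d(X)} / p(A | X)] = 0
    for V. recommended holds d(X_i); propensity holds the known p(A_i | X_i).
    """
    w = (A == recommended) / propensity
    if w.sum() == 0:
        raise ValueError("no held-out subject received the recommended treatment")
    return float(np.sum(w * R) / np.sum(w))


def select_lambda(lambdas, cv_values, n_vars, cv_pred_errors):
    """Three-step rule: max CV Value, then fewest variables, then min CV prediction error."""
    lambdas, cv_values = np.asarray(lambdas), np.asarray(cv_values)
    n_vars, cv_pred_errors = np.asarray(n_vars), np.asarray(cv_pred_errors)
    keep = cv_values == cv_values.max()          # step 1: maximal Value (often ties)
    keep &= n_vars == n_vars[keep].min()         # step 2: fewest variables among ties
    idx = np.flatnonzero(keep)
    return float(lambdas[idx[np.argmin(cv_pred_errors[idx])]])  # step 3: tie breaker


R = np.array([1.0, 2.0, 3.0, 4.0])
A = np.array([1, -1, 1, -1])
d = np.ones(4, dtype=int)                        # ITR recommending treatment 1 to all
p = np.full(4, 0.5)
print(value_estimate(R, A, d, p))                # 2.0
print(select_lambda([0.1, 0.2, 0.3, 0.4], [1.0, 1.2, 1.2, 1.2], [5, 3, 2, 2], [0.5, 0.4, 0.6, 0.55]))  # 0.4
```

Exact equality is used for ties in step 1 because rules that coincide on every fold produce identical averaged estimates; a tolerance could be added if desired.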

5.1. Simulations

A first alternative to l1-PLS is to use ordinary least squares (OLS). The estimated ITR is d̂OLS ∈ arg maxa Φ(X, a)θ̂OLS, where θ̂OLS is the OLS estimator of θ. A second alternative is called “prognosis prediction” [14]. Usually this method employs multiple data sets, each of which involves one active treatment. Then the treatment that is associated with the best predicted prognosis [14] is selected. We implement this method by estimating E(R|X, A = a) via least squares with l1 penalization for each treatment group (that is, for each treatment a) separately. The tuning parameter involved in each treatment group is selected by minimizing the 10-fold cross-validated prediction error estimator. The resulting ITR satisfies d̂PP(X) ∈ arg maxa Ê(R|X, A = a), where the subscript “PP” denotes prognosis prediction.

For simplicity we consider binary A. All three methods use the same number of data points and the same number of basis functions but use these data points/basis functions differently. l1-PLS and OLS use all J basis functions to conduct estimation with all n data points whereas the prognosis prediction method splits the data into the two treatment groups and uses J/2 basis functions to conduct estimation with the n/2 data points in each of the two treatment groups. To ensure the comparison is fair across the three methods, the approximation model for each treatment group is consistent with the approximation model used in both l1-PLS and OLS (e.g. if Q0 is approximated by (1, X, A, XA)θ in l1-PLS and OLS, then in prognosis prediction we approximate E(R|X, A = a) by (1, X)θPP for each treatment group). We do not penalize the intercept coefficient in either prognosis prediction or l1-PLS.
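The two alternatives can be sketched as follows for binary A. As a simplification, `pp_rule` fits each arm by unpenalized least squares rather than the l1-penalized, cross-validated fits described above; both rules use the matched approximation models (1, X) per arm and (1, X, A, XA) pooled. The function names and the simulated data (patterned on example 2 of Appendix A.3) are our own.

```python
import numpy as np


def _lstsq(D, y):
    """Ordinary least squares coefficients of y on the columns of D."""
    return np.linalg.lstsq(D, y, rcond=None)[0]


def pp_rule(X, A, R, arms=(-1.0, 1.0)):
    """Prognosis prediction: regress R on (1, X) separately within each arm."""
    coefs = []
    for a in arms:
        in_arm = A == a
        D = np.column_stack([np.ones(in_arm.sum()), X[in_arm]])
        coefs.append(_lstsq(D, R[in_arm]))

    def rule(X_new):
        D_new = np.column_stack([np.ones(len(X_new)), X_new])
        preds = np.column_stack([D_new @ c for c in coefs])   # one column per arm
        return np.asarray(arms)[np.argmax(preds, axis=1)]

    return rule


def ols_rule(X, A, R, arms=(-1.0, 1.0)):
    """OLS on (1, X, A, XA) with all n points; recommend the arm with the larger fit."""
    def design(Xm, a):
        return np.column_stack([np.ones(len(Xm)), Xm, a, Xm * a[:, None]])

    theta = _lstsq(design(X, A), R)

    def rule(X_new):
        preds = np.column_stack(
            [design(X_new, np.full(len(X_new), a)) @ theta for a in arms]
        )
        return np.asarray(arms)[np.argmax(preds, axis=1)]

    return rule


# Usage on data resembling example 2 (correctly specified treatment effect term).
rng = np.random.default_rng(3)
n = 5000
X = rng.uniform(-1, 1, size=(n, 5))
A = rng.choice([-1.0, 1.0], size=n)
R = (1 + 2 * X[:, 0] + X[:, 1] + 0.5 * X[:, 2]
     + 0.424 * (1 - X[:, 0] - X[:, 1]) * A + rng.normal(size=n))

X_test = rng.uniform(-1, 1, size=(10_000, 5))
d0 = np.where(1 - X_test[:, 0] - X_test[:, 1] > 0, 1.0, -1.0)   # optimal rule here
print(np.mean(pp_rule(X, A, R)(X_test) == d0), np.mean(ols_rule(X, A, R)(X_test) == d0))
```

Both rules should agree with d0 on most test points in this correctly specified setting; the differences highlighted in the text arise under misspecification and with penalization.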

The three methods are compared using two criteria: 1) Value maximization; and 2) simplicity of the estimated ITRs (measured by the number of variables/basis functions used in the rule).

We illustrate the comparison of the three methods using 4 examples selected to reflect three scenarios; please see Section S.3 of the supplementary material for 4 further examples.

There is no treatment effect (i.e. Q0 is constructed so that T0 = 0; example 1). In this case, all ITRs yield the same Value. Thus the simplest rule is preferred.

There is a treatment effect and the treatment effect term T0 is correctly modeled (example 4 for large n, and example 2). In this case, minimizing the prediction error will yield the ITR that maximizes the Value.

There is a treatment effect and the treatment effect term T0 is misspecified (example 4 for small n, and example 3). In this case, there might be a mismatch between prediction error minimization and Value maximization.

The examples are generated as follows. The treatment A is generated uniformly from {−1, 1}, independently of X. The response R is normally distributed with mean Q0(X, A). In examples 1–3, X ~ U[−1, 1]⁵ and we consider three simple examples for Q0. In example 4, X ~ U[0, 1] and we use a complex Q0, where Q0(X, 1) and Q0(X, −1) are similar to the blocks function used in Donoho and Johnstone [8]. Further details of the simulation design are provided in Appendix A.3.

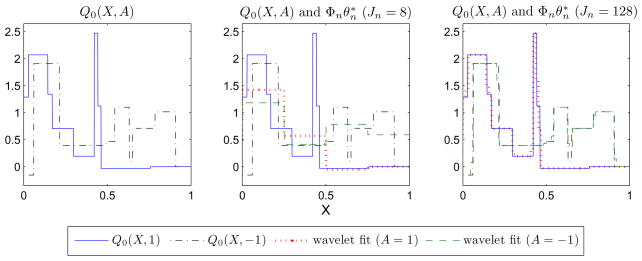

We consider two types of approximation models for Q0. In examples 1–3, we approximate Q0 by (1, X, A, XA)θ. In example 4, we approximate Q0 by Haar wavelets. The number of basis functions may increase as n increases (we index J, Φ and θ* by n in this case). Plots for Q0(X, A) and the associated best wavelet fits are provided in Figure 1.

Fig 1.

Plots for: the conditional mean function Q0(X, A) (left), Q0(X, A) and the associated best wavelet fit when Jn = 8 (middle), and Q0(X, A) and the associated best wavelet fit when Jn = 128 (right) (example 4).

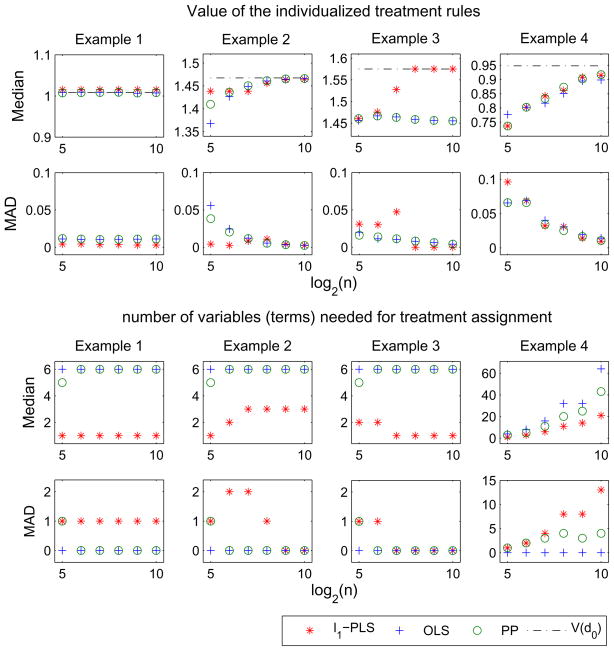

For each example, we simulate data sets of sizes $n = 2^k$ for k = 5, …, 10. For each sample size, 1000 data sets are generated. The Value of each estimated ITR is evaluated via Monte Carlo using a test set of size 10,000. The Value of the optimal ITR is also evaluated using the test set.

Simulation results are presented in Figure 2. When the approximation model is of high quality, all methods produce ITRs with similar Value (see examples 1, 2 and example 4 for large n). However, when the approximation model is poor, the l1-PLS method may produce the highest Value (see example 3). Note that in example 3, when the sample size is small, the Value of the ITR produced by the l1-PLS method has a larger median absolute deviation (MAD) than that of the other two methods. One possible reason is that, due to the mismatch between maximizing the Value and minimizing the prediction error, the Value estimator plays a strong role in selecting λn. The non-smoothness of the Value estimator combined with the mismatch results in very different λn’s, and thus the estimated decision rules vary greatly from data set to data set in this example. Nonetheless, the l1-PLS method is still preferred after taking the variation into account; indeed l1-PLS produces ITRs with higher Value than both OLS and PP in around 46%, 55% and 67% of the data sets of sizes n = 32, 64 and 128, respectively. Furthermore, the l1-PLS method generally uses far fewer variables for treatment assignment than the other two methods. This is expected because the OLS method does not have variable selection functionality and the PP method will use all variables that are predictive of the response R, whereas the use of the Value in selecting the tuning parameter in l1-PLS discounts variables that are only useful in predicting the response (and less useful in selecting the best treatment).

Fig 2.

Comparison of the l1-PLS based method with the OLS method and the PP method (examples 1–4): plots of medians and median absolute deviations (MAD) of the Value of the estimated decision rules (top panels) and the number of variables (terms) needed for treatment assignment (including the main treatment effect term, bottom panels) over 1000 samples versus sample size on the log scale. The black dash-dotted line in each plot in the first row denotes the Value of the optimal treatment rule, V(d0), for each example. (n = 32, 64, 128, 256, 512, 1024. The corresponding numbers of basis functions in example 4 are Jn = 8, 16, 32, 64, 64, 128.)

5.2. Nefazodone-CBASP trial example

The Nefazodone-CBASP trial was conducted to compare the efficacy of several alternative treatments for patients with chronic depression. The study randomized 681 patients with non-psychotic chronic major depressive disorder (MDD) to nefazodone, cognitive behavioral-analysis system of psychotherapy (CBASP), or the combination of the two treatments. Various assessments were taken throughout the study, among which the score on the 24-item Hamilton Rating Scale for Depression (HRSD) was the primary outcome. Low HRSD scores are desirable. See Keller et al. [13] for more details of the study design and the primary analysis.

In the data analysis, we use a subset of the Nefazodone-CBASP data consisting of 656 patients for whom the response HRSD score was observed. In this trial, pairwise comparisons show that the combination treatment resulted in significantly lower HRSD scores than either of the single treatments. There was no overall difference between the single treatments.

We use l1-PLS to develop an ITR. In the analysis the HRSD score is reverse coded so that higher is better. We consider 50 pretreatment variables X = (X1,…, X50). Treatments are coded using contrast coding of dummy variables A = (A1, A2), where A1 = 2 if the combination treatment is assigned and −1 otherwise, and A2 = 1 if CBASP is assigned, −1 if nefazodone is assigned and 0 otherwise. The vector of basis functions, Φ(X, A), is of the form (1, X, A1, XA1, A2, XA2), so the number of basis functions is J = 1 + 50 + 1 + 50 + 1 + 50 = 153. For comparison, we also consider the OLS method and the PP method (separate prognosis prediction for each treatment). The vector of basis functions used in PP is (1, X) for each of the three treatment groups. Neither the intercept term nor the main treatment effect terms are penalized in l1-PLS or PP (see Section S.2 of the supplementary material for the modification of the weights σ̂j used in (4.1)).
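The following sketch builds Φ(X, A) = (1, X, A1, XA1, A2, XA2) from the contrast codes above and recommends the arm that maximizes Φ(x, a)θ, as an l1-PLS or OLS rule would. The covariates are random placeholders (not trial data), θ is hypothetical, and the helper names `basis` and `recommend` are ours.

```python
import numpy as np

# (A1, A2) contrast codes described above.
CODES = {
    "combination": (2.0, 0.0),
    "CBASP": (-1.0, 1.0),
    "nefazodone": (-1.0, -1.0),
}


def basis(X, arms):
    """Phi(X, A) = (1, X, A1, X*A1, A2, X*A2), one row per patient."""
    codes = np.array([CODES[a] for a in arms])
    A1, A2 = codes[:, :1], codes[:, 1:]               # (n, 1) columns broadcast over X
    ones = np.ones((X.shape[0], 1))
    return np.hstack([ones, X, A1, X * A1, A2, X * A2])


def recommend(x, theta):
    """Arm maximizing Phi(x, a) theta for one patient's covariate vector x."""
    scores = {a: float((basis(x[None, :], [a]) @ theta)[0]) for a in CODES}
    return max(scores, key=scores.get)


rng = np.random.default_rng(4)
X = rng.normal(size=(6, 50))              # placeholder covariates, not trial data
Phi = basis(X, ["combination", "CBASP", "nefazodone"] * 2)
print(Phi.shape)                          # (6, 153)

theta = np.zeros(Phi.shape[1])
theta[51] = 1.0                           # hypothetical: only the A1 main effect is non-zero
print(recommend(X[0], theta))             # combination
```

With only the A1 main effect non-zero, no covariate enters the rule and the combination treatment is recommended to everyone, which mirrors the form of the l1-PLS rule reported below.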

The ITR given by the l1-PLS method recommends the combination treatment to all patients (so none of the pretreatment variables enter the rule). On the other hand, the PP method produces an ITR that uses 29 variables. If the rule produced by PP were used to assign treatment for the 656 patients in the trial, it would recommend the combination treatment for 614 patients and nefazodone for the other 42 patients. In addition, the OLS method uses all 50 variables. If the ITR produced by OLS were used to assign treatment for the 656 patients in the trial, it would recommend the combination treatment for 429 patients, nefazodone for 145 patients and CBASP for the other 82 patients.

6. Discussion

Our goal is to construct a high quality ITR that will benefit future patients. We considered an l1-PLS based method and provided a finite sample upper bound for V (d0) − V (d̂n), the excess Value of the estimated ITR.

The use of an l1 penalty allows us to consider a large model for the conditional mean function Q0 yet permits a sparse estimated ITR. In fact, many other penalization methods such as SCAD [9] and l1 penalty with adaptive weights (adaptive Lasso; [37]) also have this property. We choose the non-adaptive l1 penalty to represent these methods. Interested readers may justify other PLS methods using similar proof techniques.

The high probability finite sample upper bounds (i.e. (4.7) and (4.9)) cannot be used to construct a prediction/confidence interval for V (d0) − V (d̂n) due to the unknown quantities in the bound. How to develop a tight computable upper bound to assess the quality of d̂n is an open question.

We used cross validation with Value maximization to select the tuning parameter involved in the l1-PLS method. As compared to the OLS method and the PP method, this method may yield higher Value when T0 is misspecified. However, since only the Value is used to select the tuning parameter, this method may produce a complex ITR for which the Value is only slightly higher than that of a much simpler ITR. In this case, a simpler rule may be preferred due to the interpretability and cost of collecting the variables. Investigation of a tuning parameter selection criterion that trades off the Value with the number of variables in an ITR is needed.

This paper studied a one stage decision problem. However, it is evident that some diseases require time-varying treatment. For example, individuals with a chronic disease often experience a waxing and waning course of illness. In these settings the goal is to construct a sequence of ITRs that tailor the type and dosage of treatment through time according to an individual’s changing status. There is an abundance of statistical literature in this area [29, 30, 22, 23, 26, 17, 34, 35]. Extension of the least squares based method to the multi-stage decision problem has been presented in Murphy [23]. The performance of l1 penalization in this setting is unclear and worth investigation.

Supplementary Material

Acknowledgments

The authors thank Martin Keller and the investigators of the Nefazodone-CBASP trial for use of their data. The authors also thank John Rush, MD, for technical support and Bristol-Myers Squibb for helping fund the trial. The authors thank Eric B. Laber and Peng Zhang for valuable comments.

APPENDIX

A.1. Proof of Theorem 3.1

For any ITR d, denote ΔTd(X) ≜ maxa T0(X, a) − T0(X, d(X)). Using arguments similar to those in Section 2, we have V(d0) − V(d) = E(ΔTd). If V(d0) − V(d) = 0, then (3.4) and (3.5) automatically hold. Otherwise, E(ΔTd)² ≥ (EΔTd)² > 0. In this case, for any ε > 0, define the event

Then ΔTd ≤ (ΔTd)2/ε on the event . This together with the fact that ΔTd ≤ (ΔTd)2/ε + ε/4 implies

where the last inequality follows from the margin condition (3.3). Choosing $\varepsilon = \left(4E(\Delta T_d)^2/C\right)^{1/(2+\alpha)}$ to minimize the above upper bound yields

| (A.1) |
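The elementary fact ΔTd ≤ (ΔTd)²/ε + ε/4 used above holds for every real ΔTd and every ε > 0; it follows by completing the square:

```latex
\frac{(\Delta T_d)^2}{\varepsilon} + \frac{\varepsilon}{4} - \Delta T_d
  = \frac{1}{\varepsilon}\left(\Delta T_d - \frac{\varepsilon}{2}\right)^2 \;\ge\; 0 ,
```

with equality exactly when ΔTd = ε/2.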

Next, for any d and Q such that d(X) ∈ arg maxa Q(X, a), and any decomposition of Q(X, A) into W(X) + T(X, A),

where the last inequality follows from the fact that neither |maxa T0(X, a) − maxa T(X, a)| nor |T(X, d(X)) − T0(X, d(X))| is larger than maxa |T(X, a) − T0(X, a)|. Since p(a|x) ≥ S⁻¹ for all (x, a) pairs, we have

| (A.2) |

Inequality (3.5) follows by substituting (A.2) into (A.1) and setting W(X) = E[Q(X, A)|X]. Inequality (3.4) follows by setting W(X) = 0 and noticing that ΔTd(X) = maxa Q0(X, a) − Q0(X, d(X)).

A.2. Generalization of Theorem 4.3

In this section, we present a generalization of Theorem 4.3 in which J may depend on n and the sparsity of any θ ∈ ℝJ is measured by the number of “large” components in θ, as described in Zhang and Huang [36]. In this case, J, Φ and the prediction error minimizer θ* are denoted as Jn, Φn and θn*, respectively. All relevant quantities and assumptions are restated below.

Let |M| denote the cardinality of any index set M ⊆ {1,…, Jn}. For any θ ∈ ℝJn and constant ρ ≥ 0, define

Then Mρλn (θ) is the smallest index set that contains only “large” components in θ. |Mρλn (θ)| measures the sparsity of θ. It is easy to see that when ρ = 0, M0(θ) is the index set of nonzero components in θ and |M0(θ)| = ||θ||0. Moreover, Mρλn (θ) is an empty set if and only if θ = 0.

Let [ ] be the set of most sparse prediction error minimizers in the linear model, i.e.

| (A.3) |

Note that [ ] depends on ρλn.

To derive the finite sample upper bound for L(Φnθ̂n), we need the following assumptions.

Assumption A.1

The error terms εi, i = 1,…, n are independent of (Xi, Ai), i = 1,…, n and are i.i.d. with E(εi) = 0 and for some c, σ2 > 0 for all l ≥ 2.

Assumption A.2

For all n ≥ 1,

there exists an 1 ≤ Un < ∞ such that maxj=1,…,Jn ||φj||∞/σj ≤ Un, where .

there exists an 0 < η1,n < ∞, such that .

For any 0 ≤ γ < 1/2, η2,n ≥ 0 (which may depend on n) and tuning parameter λn, define

Assumption A.3

For any n ≥ 1, there exists a βn > 0 such that

for all , θ̃ ∈ ℝJn and .

When E[Φn(2)(X, A)T|X] = 0 a.s. (Φ(2) is defined in Section 4.1), we need an extra assumption to derive the finite sample upper bound for the mean square error of the treatment effect estimator Φn(2)θ̂n(2) (recall that T0(X, A) ≜ Q0(X, A) − E[Q0(X, A)|X]).

Assumption A.4

For any n ≥ 1, there exists a βn > 0 such that

for all , θ̃ ∈ ℝJn and , where

is the smallest index set that contains only large components in θ(2).

Note that here, for simplicity, we assume that Assumptions A.3 and A.4 hold with the same value of βn. Without loss of generality, we can always choose βn small enough that ρβn ≤ 1 for a given ρ.

For any t > 0, define

| (A.4) |

Note that we allow Un, η1,n, η2,n and to increase as n increases. However, if those quantities are small, the upper bound in (A.7) will be tighter.

Theorem A.1

Suppose Assumptions A.1 and A.2 hold. For any given 0 ≤ γ < 1/2, η2,n > 0, ρ ≥ 0 and t > 0, let θ̂n be the l1-PLS estimator defined in (4.1) with tuning parameter

| (A.5) |

Suppose Assumption A.3 holds with ρβn ≤ 1. Let Θn be the set defined in (A.4) and assume Θn is non-empty. If

| (A.6) |

then with probability at least , we have

| (A.7) |

where and $K_n = \dfrac{40\gamma(12\beta_n\rho + 2\gamma + 5)}{(1 - 2\gamma)(2\gamma + 19)} + \dfrac{130(12\beta_n\rho + 2\gamma + 5)^2}{9(2\gamma + 19)^2}$.

Furthermore, suppose E[Φn(2)(X, A)T|X] = 0 a.s. If Assumption A.4 holds with ρβn ≤ 1, then with probability at least , we have

where .

Remark

Note that Kn is upper bounded by a constant under the assumption βnρ ≤ 1. In the asymptotic setting when n → ∞ and Jn → ∞, (A.7) implies that with probability tending to 1, if (i) , (ii) and for some sufficiently small positive constants k1 and k2, and (iii) for a sufficiently large constant k3, where (take t = log Jn).
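The constant Kn can be evaluated directly. The sketch below reads the superscripts in the definition of Kn as squares and uses exact fractions; with the choices γ = 1/8 and ρ = 0 used for Theorem 4.3 it gives 350/121. For fixed γ, Kn increases with βnρ, so under βnρ ≤ 1 it is at most its value at βnρ = 1, which is the boundedness claimed above. The function name is ours.

```python
from fractions import Fraction


def K_n(gamma, beta_rho):
    """Constant K_n of Theorem A.1 for 0 <= gamma < 1/2 and beta_rho = beta_n * rho."""
    a = 12 * beta_rho + 2 * gamma + 5
    b = 2 * gamma + 19
    return 40 * gamma * a / ((1 - 2 * gamma) * b) + 130 * a ** 2 / (9 * b ** 2)


# Theorem 4.3 uses gamma = 1/8 and rho = 0.
print(K_n(Fraction(1, 8), 0))                  # 350/121
# Upper bound on K_n over all beta_n * rho <= 1 for gamma = 1/8.
print(float(K_n(Fraction(1, 8), 1)))
```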


Below we briefly discuss Assumptions A.2 – A.4.

Assumption A.2 is very similar to Assumption 2 in Theorem 4.1 (which is used to prove the concentration of the sample mean around the true mean), except that Un and η1,n may increase as n increases. This relaxation allows the use of basis functions for which the sup norm maxj ||φj||∞ is increasing in n (e.g. the wavelet basis used in example 4 of the simulation studies).

Assumption A.3 is a generalization of condition (4.8) (which is discussed in remark 4 following Theorem 4.1) to the case where Jn may increase in n and the sparsity of a parameter is measured by the number of “large” components, as described at the beginning of this section. This condition is used to avoid the collinearity problem. It is easy to see that when ρ = 0 and βn is fixed in n, this assumption simplifies to condition (4.8).

Assumption A.4 puts a stronger constraint on the linear model of the treatment effect part than Assumption A.3 does. This assumption, together with Assumption A.3, is needed to derive the upper bound for the mean square error of the treatment effect estimator. It is easy to verify that if is positive definite, then both A.3 and A.4 hold. Although the result is about the treatment effect part, which is asymptotically independent of the main effect of X (when E[Φn(2)(X, A)T|X] = 0 a.s.), we still need Assumption A.3 to show that the cross product term is upper bounded by a quantity converging to 0 at the desired rate. We may use a very poor model for the main effect part E(Q0(X, A)|X) (e.g. ), and Assumption A.4 implies Assumption A.3 when ρ = 0. This poor model only affects the constants involved in the result. When the sample size is large (so that λn is small), the estimated ITR will be of high quality as long as T0 is well approximated.

Proof

For any θ ∈ Θn, define the events

Then there exists a such that

where the first equality follows from the fact that E[(R − Φnθo)φj] = 0 for any prediction error minimizer θo and j = 1,…, Jn, and the last inequality follows from the definition of .

Based on Lemma A.1 below, we have that on the event Ω1 ∩ Ω2(θ) ∩Ω3(θ),

Similarly, when E[Φn(2)(X, A)T|X] = 0 a.s., by Lemma A.2, we have that on the event Ω1 ∩ Ω2(θ) ∩ Ω3(θ),

The conclusion of the theorem follows from the union bound applied to the probability bounds on the complements of the events Ω1, Ω2(θ) and Ω3(θ) provided in Lemmas A.3, A.4 and A.5.

Below we state the lemmas used in the proof of Theorem A.1. The proofs of the lemmas are given in Section S.3 of the supplementary material.

Lemma A.1

Suppose Assumption A.3 holds with ρβn ≤ 1. Then for any θ ∈ Θn, on the event Ω1 ∩ Ω2(θ) ∩ Ω3(θ), we have

| (A.8) |

and

| (A.9) |

Remark

This lemma implies that θ̂n is close to each θ ∈ Θn on the event Ω1 ∩ Ω2(θ) ∩ Ω3(θ). The intuition is as follows. Since θ̂n minimizes (4.1), the first order conditions imply that maxj |En(R − Φnθ̂n)φj/σ̂j| ≤ λn/2. A similar property holds for θ on the event Ω1 ∩ Ω3(θ). Assumption A.3 together with the event Ω2(θ) ensures that there is no collinearity in the n × Jn design matrix. These two properties guarantee the closeness of θ̂n to θ.
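A minimal sketch of the estimator and of the first order condition quoted in this remark. Display (4.1) is not reproduced in this section; we assume it is En(R − Φθ)² + λn Σj σ̂j|θj| with σ̂j = (En φj²)^{1/2}, which is the objective whose first order conditions give maxj |En(R − Φθ̂n)φj/σ̂j| ≤ λn/2. The sketch solves it by cyclic coordinate descent with soft-thresholding (our choice of algorithm), penalizes every coefficient (unlike the implementation in Section 5, which leaves the intercept and main treatment effects unpenalized), and uses simulated data patterned on example 2; `l1_pls` and `kkt_gap` are hypothetical names.

```python
import numpy as np


def l1_pls(Phi, R, lam, n_sweeps=500, tol=1e-10):
    """Coordinate descent for E_n(R - Phi theta)^2 + lam * sum_j sigma_hat_j |theta_j|.

    sigma_hat_j = sqrt(E_n phi_j^2). This objective is our reading of (4.1);
    every coefficient (including the intercept) is penalized in this sketch.
    """
    n, J = Phi.shape
    s = np.mean(Phi ** 2, axis=0)            # sigma_hat_j ** 2
    sigma_hat = np.sqrt(s)
    theta = np.zeros(J)
    resid = np.array(R, dtype=float)         # R - Phi @ theta for theta = 0
    for _ in range(n_sweeps):
        max_change = 0.0
        for j in range(J):
            old = theta[j]
            # E_n[phi_j * (partial residual that excludes coordinate j)]
            z = np.mean(Phi[:, j] * resid) + s[j] * old
            thr = lam * sigma_hat[j] / 2
            new = np.sign(z) * max(abs(z) - thr, 0.0) / s[j]   # soft-thresholding
            if new != old:
                resid -= Phi[:, j] * (new - old)
                theta[j] = new
                max_change = max(max_change, abs(new - old))
        if max_change < tol:
            break
    return theta


def kkt_gap(Phi, R, theta, lam):
    """max_j |E_n[(R - Phi theta) phi_j] / sigma_hat_j| - lam / 2 (<= 0 at the minimizer)."""
    sigma_hat = np.sqrt(np.mean(Phi ** 2, axis=0))
    score = np.mean((R - Phi @ theta)[:, None] * Phi, axis=0) / sigma_hat
    return float(np.max(np.abs(score)) - lam / 2)


rng = np.random.default_rng(5)
n = 1000
X = rng.uniform(-1, 1, size=(n, 5))
A = rng.choice([-1.0, 1.0], size=n)
Phi = np.column_stack([np.ones(n), X, A, X * A[:, None]])
R = (1 + 2 * X[:, 0] + X[:, 1] + 0.5 * X[:, 2]
     + 0.424 * (1 - X[:, 0] - X[:, 1]) * A + rng.normal(size=n))
lam = 0.1
theta_hat = l1_pls(Phi, R, lam)
print(kkt_gap(Phi, R, theta_hat, lam))   # non-positive up to rounding
print(np.count_nonzero(theta_hat))       # some coefficients are set exactly to zero
```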

Lemma A.2

Suppose E[Φn(2)(X, A)T|X] = 0 a.s. and Assumption A.4 holds with ρβn ≤ 1. Then for any θ ∈ Θn, on the event Ω1 ∩ Ω2(θ) ∩ Ω3(θ), we have

| (A.10) |

and

| (A.11) |

Lemma A.3

Suppose Assumption A.2(a) and inequality (A.6) hold. Then , where .

Lemma A.4

Suppose Assumption A.2(a) holds. Then for any t > 0 and θ ∈ Θn, P({Ω2(θ)}C) ≤ 2 exp(−t)/3.

Lemma A.5

Suppose Assumptions A.1 and A.2 hold. For any t > 0, if λn satisfies condition (A.5), then for any θ ∈ Θn, we have P({Ω3(θ)}C) ≤ 2 exp(−t)/3.

A.3. Design of simulations in Section 5.1

In this section, we present the detailed simulation design of the examples used in Section 5.1. These examples satisfy all assumptions listed in the theorems (this is easy to verify for examples 1–3; the validity of the assumptions for example 4 is addressed in the remark after example 4). In addition, Θn defined in (4.4) is non-empty as long as n is sufficiently large (note that the constants involved in Θn can be improved and are not very meaningful; we focused on a presentable result instead of finding the best constants).

In examples 1–3, X = (X1, …, X5) is uniformly distributed on [−1, 1]⁵. The treatment A is then generated independently of X, uniformly from {−1, 1}. Given X and A, the response R is generated from a normal distribution with mean Q0(X, A) = 1 + 2X1 + X2 + 0.5X3 + T0(X, A) and variance 1. We consider the following three examples for T0.

Example 1. T0(X, A) = 0 (i.e., there is no treatment effect).

Example 2. T0(X, A) = 0.424(1 − X1 − X2)A.

Example 3. T0(X, A) = 0.446 sign(X1)(1 − X1)²A.

Note that in each example T0(X, A) equals the treatment effect term, Q0(X, A) − E[Q0(X, A)|X], because A is independent of X and uniform on {−1, 1}, so that E[T0(X, A)|X] = 0. We approximate Q0 by the linear model class {(1, X, A, XA)θ : θ ∈ ℝ¹²}. Thus in examples 1 and 2 the treatment effect term T0 is correctly modeled, while in example 3 it is misspecified.

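The generative model for examples 1–3 and the 12-column working model (1, X, A, XA) can be sketched as follows. The function names `simulate_example`, `linear_design` and `recommended_treatment` are hypothetical, and the use of NumPy's default generator is our own choice. Under the linear model, argmax over a ∈ {−1, 1} of (1, x, a, xa)θ equals the sign of θA + xᵀθXA, which is what the last helper returns (ties are sent to A = 1 arbitrarily).

```python
import numpy as np

def simulate_example(example, n, rng):
    """Draw (X, A, R) from examples 1-3: X ~ U[-1, 1]^5, A uniform on {-1, 1}, R ~ N(Q0, 1)."""
    X = rng.uniform(-1.0, 1.0, size=(n, 5))
    A = rng.choice([-1.0, 1.0], size=n)          # randomized: P(A = 1 | X) = 1/2
    if example == 1:
        T0 = np.zeros(n)
    elif example == 2:
        T0 = 0.424 * (1 - X[:, 0] - X[:, 1]) * A
    elif example == 3:
        T0 = 0.446 * np.sign(X[:, 0]) * (1 - X[:, 0]) ** 2 * A
    else:
        raise ValueError("example must be 1, 2 or 3")
    Q0 = 1 + 2 * X[:, 0] + X[:, 1] + 0.5 * X[:, 2] + T0
    R = Q0 + rng.normal(size=n)
    return X, A, R

def linear_design(X, A):
    """Rows (1, X, A, XA): 1 + 5 + 1 + 5 = 12 columns."""
    return np.column_stack([np.ones(len(A)), X, A, X * A[:, None]])

def recommended_treatment(X, theta):
    """Treatment maximizing (1, x, a, xa) theta over a in {-1, 1}."""
    return np.where(theta[6] + X @ theta[7:] >= 0, 1.0, -1.0)

rng = np.random.default_rng(1)
X, A, R = simulate_example(2, 100, rng)
Phi = linear_design(X, A)
# True coefficients of example 2 in the (1, X, A, XA) parameterization.
theta_true = np.array([1, 2, 1, 0.5, 0, 0, 0.424, -0.424, -0.424, 0, 0, 0])
print(Phi.shape, recommended_treatment(X, theta_true)[:5])
```

In example 2 the recommended treatment under the true coefficients is A = 1 exactly when 1 − X1 − X2 ≥ 0.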

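The parameters in examples 2 and 3 are chosen to reflect a medium effect size according to Cohen’s d index. When there are two treatments, the Cohen’s d effect size index is defined as the standardized difference in mean responses between the two treatment groups, i.e.

$$d = \frac{\left|E(R \mid A = 1) - E(R \mid A = -1)\right|}{\sqrt{\left[\operatorname{Var}(R \mid A = 1) + \operatorname{Var}(R \mid A = -1)\right]/2}}.$$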

Cohen [7] tentatively defined the effect size as “small” if the Cohen’s d index is 0.2, “medium” if the index is 0.5 and “large” if the index is 0.8.
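As a rough numerical check of the effect sizes, the sketch below estimates d by Monte Carlo for examples 2 and 3. It takes d to be the absolute difference in mean responses between the A = 1 and A = −1 groups divided by the pooled standard deviation {[Var(R|A = 1) + Var(R|A = −1)]/2}^{1/2}; this form of the definition is an assumption on our part. The function name `cohens_d_mc`, the sample size and the seed are illustrative.

```python
import numpy as np

def cohens_d_mc(example, n=1_000_000, seed=0):
    """Monte Carlo estimate of Cohen's d for example 2 or 3 (pooled-SD definition)."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(-1.0, 1.0, size=(n, 5))
    A = rng.choice([-1.0, 1.0], size=n)
    if example == 2:
        T0 = 0.424 * (1 - X[:, 0] - X[:, 1]) * A
    elif example == 3:
        T0 = 0.446 * np.sign(X[:, 0]) * (1 - X[:, 0]) ** 2 * A
    else:
        raise ValueError("example must be 2 or 3")
    R = 1 + 2 * X[:, 0] + X[:, 1] + 0.5 * X[:, 2] + T0 + rng.normal(size=n)
    r_treat, r_ctrl = R[A == 1], R[A == -1]
    pooled_sd = np.sqrt((r_treat.var() + r_ctrl.var()) / 2)
    return abs(r_treat.mean() - r_ctrl.mean()) / pooled_sd

print(cohens_d_mc(2), cohens_d_mc(3))
```

Working out the conditional means and variances by hand under this definition gives d ≈ 0.50 for both examples, so both estimates should land near the "medium" value 0.5.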

In example 4, X is uniformly distributed on [0, 1]. Treatment A is generated independently of X uniformly from {−1, 1}. The response R is generated from a normal distribution with mean Q0(X, A) and variance 1, where , and ϑ’s and u’s are parameters specified in (A.12). The effect size is small.

| (A.12) |

We approximate Q0 by Haar wavelets

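where $h_0(x) = 1_{\{x \in [0,1]\}}$ and $h_{lk}(x) = 2^{l/2}\bigl(1_{\{2^l x \in [k+1/2,\,k+1)\}} - 1_{\{2^l x \in [k,\,k+1/2)\}}\bigr)$ for $l = 0, \dots, \bar l_n$. We choose $\bar l_n = \lfloor 3\log_2 n/4 \rfloor - 2$. For a given $l$ and sample $\{X_i\}_{i=1}^n$, $k$ takes integer values from $\lfloor 2^l \min_i X_i \rfloor$ to $\lceil 2^l \max_i X_i \rceil - 1$. Then $J_n = 2^{\lfloor 3\log_2 n/4 \rfloor} \le n^{3/4}$.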
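The Haar basis above can be built directly from its definition. The sketch assumes that each Haar function h(X) enters the approximation both on its own and multiplied by A; that assumption is what makes the number of columns equal $2^{\lfloor 3\log_2 n/4 \rfloor}$, since levels $0, \dots, \bar l_n$ plus $h_0$ give at most $2^{\bar l_n + 1}$ functions. The function name `haar_design` and the equally spaced design points are hypothetical choices made so the checks are exact.

```python
import numpy as np

def haar_design(x):
    """Columns h_0(x) and h_lk(x) for l = 0..l_bar and the sample-dependent range of k."""
    n = len(x)
    m = int(np.floor(3 * np.log2(n) / 4))
    l_bar = m - 2
    cols = [((x >= 0) & (x <= 1)).astype(float)]         # h_0
    for l in range(l_bar + 1):
        s = 2.0 ** l * x
        k_lo = int(np.floor(2.0 ** l * x.min()))
        k_hi = int(np.ceil(2.0 ** l * x.max())) - 1
        for k in range(k_lo, k_hi + 1):
            up = (s >= k + 0.5) & (s < k + 1)
            down = (s >= k) & (s < k + 0.5)
            cols.append(2.0 ** (l / 2) * (up.astype(float) - down.astype(float)))
    return np.column_stack(cols), m

n = 1024
x = (np.arange(n) + 0.5) / n                 # equally spaced points in (0, 1)
A = np.where(np.arange(n) % 2 == 0, 1.0, -1.0)
H, m = haar_design(x)
Phi = np.hstack([H, H * A[:, None]])         # assumed: each h(X) alone and times A
print(Phi.shape[1], 2 ** m, n ** 0.75)
```

For n = 1024 this yields 64 Haar columns, so Jn = 128 = 2⁷ ≤ 1024^{3/4} ≈ 181. With these points the empirical Gram matrix HᵀH/n is the identity, illustrating orthogonality, and the largest |h| is 2^{5/2} ≈ 5.66, which is below n^{3/8}/2 ≈ 6.73.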

Remark

In example 4, we allow the number of basis functions Jn to increase with n. The corresponding theoretical result can be obtained by combining Theorem 3.1 and Theorem A.1. Below we verify the assumptions used in these theorems.

Theorem 3.1 requires that the randomization probability satisfy p(a|x) ≥ S⁻¹ for some positive constant S and all (x, a) pairs, and that the margin condition (3.3) or (3.6) hold. Under the generative model, p(a|x) = 1/2 and condition (3.6) holds.

Theorem A.1 requires that Assumptions A.1–A.4 hold and that Θn defined in (A.4) be non-empty. Since the error terms are normal, Assumption A.1 holds. The Haar wavelet basis functions are orthogonal, and it is easy to verify that Assumptions A.3 and A.4 hold with βn = 1 and that Assumption A.2 holds with $U_n = n^{3/8}/2$ and (since each ). Since Q0 is piecewise constant, we can also verify that . Thus for sufficiently large n, Θn is non-empty and (A.6) holds. The right-hand side of (A.5) converges to zero as n → ∞.

Contributor Information

Min Qian, Email: minqian@umich.edu.

Susan A. Murphy, Email: samurphy@umich.edu.

References

- 1. Bartlett PL, Jordan MI, McAuliffe JD. Convexity, classification, and risk bounds. Journal of the American Statistical Association. 2006;101(473):138–156.

- 2. Bartlett PL. Fast rates for estimation error and oracle inequalities for model selection. Econometric Theory. 2008;24(2):545–552.

- 3. Bickel PJ, Ritov Y, Tsybakov AB. Simultaneous analysis of Lasso and Dantzig selector. The Annals of Statistics. 2009;37(4):1705–1732.

- 4. Bunea F, Tsybakov AB, Wegkamp MH. Sparsity oracle inequalities for the Lasso. Electronic Journal of Statistics. 2007;1:169–194.

- 5. Cai T, Tian L, Lloyd-Jones DM, Wei LJ. Evaluating subject-level incremental values of new markers for risk classification rule. Harvard University Biostatistics Working Paper Series. Working Paper 91. 2008a. doi: 10.1007/s10985-013-9272-6.

- 6. Cai T, Tian L, Uno H, Solomon SD, Wei LJ. Calibrating parametric subject-specific risk estimation. Harvard University Biostatistics Working Paper Series. Working Paper 92. 2008b.

- 7. Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. Hillsdale, NJ: Lawrence Erlbaum Associates, Inc; 1988.

- 8. Donoho D, Johnstone I. Ideal spatial adaptation by wavelet shrinkage. Biometrika. 1994;81(3):425–455.

- 9. Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association. 2001;96:1348–1360.

- 10. Feldstein ML, Savlov ED, Hilf R. A statistical model for predicting response of breast cancer patients to cytotoxic chemotherapy. Cancer Research. 1978;38(8):2544–2548.

- 11. Insel TR. Translating scientific opportunity into public health impact: a strategic plan for research on mental illness. Archives of General Psychiatry. 2009;66(2):128–133. doi: 10.1001/archgenpsychiatry.2008.540.

- 12. Ishigooka J, Murasaki M, Miura S; The Olanzapine Late-Phase II Study Group. Olanzapine optimal dose: results of an open-label multicenter study in schizophrenic patients. Psychiatry and Clinical Neurosciences. 2000;54(4):467–478. doi: 10.1046/j.1440-1819.2000.00738.x.

- 13. Keller MB, McCullough JP, Klein DN, Arnow B, Dunner DL, Gelenberg AJ, Markowitz JC, Nemeroff CB, Russell JM, Thase ME, Trivedi MH, Zajecka J. A comparison of nefazodone, the cognitive behavioral-analysis system of psychotherapy, and their combination for the treatment of chronic depression. The New England Journal of Medicine. 2000;342(20):1462–1470. doi: 10.1056/NEJM200005183422001.

- 14. Kent DM, Hayward RA, Griffith JL, Vijan S, Beshansky JR, Califf RM, Selker HP. An independently derived and validated predictive model for selecting patients with myocardial infarction who are likely to benefit from tissue plasminogen activator compared with streptokinase. The American Journal of Medicine. 2002;113(2):104–111. doi: 10.1016/s0002-9343(02)01160-9.

- 15. Koltchinskii V. Sparsity in penalized empirical risk minimization. Annales de l’Institut Henri Poincaré, Probabilités et Statistiques. 2009;45(1):7–57.

- 16. Lesko LJ. Personalized medicine: elusive dream or imminent reality? Clinical Pharmacology and Therapeutics. 2007;81:807–816. doi: 10.1038/sj.clpt.6100204.

- 17. Lunceford JK, Davidian M, Tsiatis AA. Estimation of survival distributions of treatment policies in two-stage randomization designs in clinical trials. Biometrics. 2002;58:48–57. doi: 10.1111/j.0006-341x.2002.00048.x.

- 18. Mammen E, Tsybakov AB. Smooth discrimination analysis. The Annals of Statistics. 1999;27:1808–1829.

- 19. Massart P. Concentration inequalities and model selection. Ecole d’Eté de Probabilités de Saint-Flour XXXIII. Springer; 2003.

- 20. Massart P. A non asymptotic theory for model selection. In: Laptev A, editor. Proceedings of the 4th European Congress of Mathematicians. European Mathematical Society; 2005. pp. 309–323.

- 21. Murphy SA, van der Laan MJ, Robins JM; CPPRG. Marginal mean models for dynamic regimes. Journal of the American Statistical Association. 2001;96:1410–1423. doi: 10.1198/016214501753382327.

- 22. Murphy SA. Optimal dynamic treatment regimes. Journal of the Royal Statistical Society, Series B (with discussion). 2003;65(2):331–366.

- 23. Murphy SA. A generalization error for Q-learning. Journal of Machine Learning Research. 2005;6:1073–1097.

- 24. Piquette-Miller M, Grant DM. The art and science of personalized medicine. Clinical Pharmacology and Therapeutics. 2007;81:311–315. doi: 10.1038/sj.clpt.6100130.

- 25. Polonik W. Measuring mass concentrations and estimating density contour clusters: an excess mass approach. The Annals of Statistics. 1995;23(3):855–881.

- 26. Robins JM. Optimal-regime structural nested models. In: Lin DY, Heagerty P, editors. Proceedings of the Second Seattle Symposium on Biostatistics. Lecture Notes in Statistics. New York: Springer; 2004.

- 27. Robins JM, Orellana L, Rotnitzky A. Estimation and extrapolation of optimal treatment and testing strategies. Statistics in Medicine. 2008;27(23):4678–4721. doi: 10.1002/sim.3301.

- 28. Stoehlmacher J, Park DJ, Zhang W, Yang D, Groshen S, Zahedy S, Lenz HJ. A multivariate analysis of genomic polymorphisms: prediction of clinical outcome to 5-FU/oxaliplatin combination chemotherapy in refractory colorectal cancer. British Journal of Cancer. 2004;91(2):344–354. doi: 10.1038/sj.bjc.6601975.

- 29. Thall PF, Millikan RE, Sung HG. Evaluating multiple treatment courses in clinical trials. Statistics in Medicine. 2000;19:1011–1028. doi: 10.1002/(sici)1097-0258(20000430)19:8<1011::aid-sim414>3.0.co;2-m.

- 30. Thall PF, Sung HG, Estey EH. Selecting therapeutic strategies based on efficacy and death in multicourse clinical trials. Journal of the American Statistical Association. 2002;97:29–39.

- 31. Tibshirani R. Regression shrinkage and selection via the Lasso. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 1996;58(1):267–288.

- 32. Tsybakov AB. Optimal aggregation of classifiers in statistical learning. The Annals of Statistics. 2004;32:135–166.

- 33. van de Geer S. High-dimensional generalized linear models and the Lasso. The Annals of Statistics. 2008;36(2):614–645.

- 34. van der Laan MJ, Petersen ML, Joffe MM. History-adjusted marginal structural models and statically-optimal dynamic treatment regimens. The International Journal of Biostatistics. 2005;1(1): Article 4.

- 35. Wahed AS, Tsiatis AA. Semiparametric efficient estimation of survival distribution for treatment policies in two-stage randomization designs in clinical trials with censored data. Biometrika. 2006;93:163–177.

- 36. Zhang CH, Huang J. The sparsity and bias of the Lasso selection in high-dimensional linear regression. The Annals of Statistics. 2008;36(4):1567–1594.

- 37. Zou H. The adaptive Lasso and its oracle properties. Journal of the American Statistical Association. 2006;101(476):1418–1429.
