Abstract

Computational models of the neuromuscular system hold the potential to allow us to reach a deeper understanding of neuromuscular function and clinical rehabilitation by complementing experimentation. By serving as a means to distill and explore specific hypotheses, computational models emerge from prior experimental data and motivate future experimental work. Here we review computational tools used to understand neuromuscular function including musculoskeletal modeling, machine learning, control theory, and statistical model analysis. We conclude that these tools, when used in combination, have the potential to further our understanding of neuromuscular function by serving as a rigorous means to test scientific hypotheses in ways that complement and leverage experimental data.

Index Terms: Biomechanics, computational methods, modeling, neuromuscular control

I. Introduction: Why is Neuromuscular Modeling So Difficult?

For the purposes of this review, we define computational models of neuromuscular function to be algorithmic representations of the coupling among three elements: the physics of the world and skeletal anatomy, the physiological mechanisms that produce muscle force, and the neural processes that issue commands to muscles based on sensory information, intention, and a control law. Some of the difficulties and challenges of neuromuscular modeling arise from differences between the engineering approach to modeling and the scientific approach to hypothesis testing. From the engineering perspective, computational modeling is a proven tool because we are able to use modeling to design and build very complex systems. For example, airliners, skyscrapers, and microprocessors are systems that are almost entirely developed using computational modeling. The obvious extension of these successes is to expect neuromuscular modeling to have already yielded deeper understanding of brain–body interactions in vertebrates, and to have revolutionized rehabilitation medicine.

To explain why this is not a reasonable extrapolation, we point out that engineers tend to apply an inductive approach and build models from the bottom-up, where the constitutive parts are computational implementations of laws of physics and mechanics known to be valid for a particular regime (e.g., turbulent versus laminar flow, continuum versus rigid body mechanics, etc.) that we understand well, or have at least been validated against experimental data in those regimes. The behavior of the model that emerges from the interactions among constitutive elements is carefully compared against the engineers' intuition and further experimental data before it is accepted as valid.

Neuromuscular modeling, on the other hand, tends to be used for scientific inquiry via a deductive approach to proceed from observed behavior in a particular regime that is measured accurately (e.g., gait, flight, manipulation), to building models that are computational implementations of hypotheses about the constitutive parts and the overall behavior. This deductive top-to-bottom approach makes the emergent behavior of the model difficult to compare against intuition, or even other models, because the differences that invariably emerge between model predictions and experimental data can be attributed to a variety of sources ranging from the validity of the scientific hypothesis being tested, to the choice of each constitutive element, or even their numerical implementation. Even when models are carefully built from the bottom-up, the modeler is confronted with choices that often affect the predictions of the model in counterintuitive ways. Some examples of choices are the types of models for joints (e.g., a hinge versus articulating surfaces), muscles (e.g., Hill-type versus populations of motor units), controllers (e.g., proportional-derivative versus linear quadratic regulator), and solution methods (e.g., forward versus inverse).

Therefore, we have structured this review in a way that first presents a critical overview of different modeling choices, and then describes methods by which the set of feasible predictions of a neuromuscular model can be used to test hypotheses.

II. Overview of Musculoskeletal Modeling

Computational models of the musculoskeletal system (i.e., the physics of the world and skeletal anatomy, and the physiological mechanisms that produce muscle force) are a necessary foundation when building models of neuromuscular function. Musculoskeletal models have been widely used to characterize human movement and understand how muscles can be coordinated to produce function. While experimental data are the most reliable source of information about a system, computer models can give access to parameters that cannot be measured experimentally and give insight into how these internal variables change during the performance of the task. Such models can be used to simulate neuromuscular abnormalities, identify injury mechanisms, and plan rehabilitation [1]–[3]. Surgeons can use them to simulate tendon transfer [4]–[6] and joint replacement surgeries [7]. They can also be used to analyze the energetics of human movement [8] and athletic performance [9], and to design prosthetics and biomedical implants [10] and functional electrical stimulation controllers [11]–[13].

Naturally, the type, complexity, and physiological accuracy of the models vary depending on the purpose of the study. Extremely simple models that are not physiologically realistic can and do give insight into biological function (e.g., [14]). On the other hand, more complex models that describe the physiology closely might be necessary to explain some other phenomenon of interest [15]. Most models used in understanding neuromuscular function lie in-between, with a combination of physiological reality and modeling simplicity. While several papers [16]–[23] and books [24]–[26] discuss the importance of musculoskeletal models and how to build them, we will give a brief overview of the necessary steps and discuss some commonly performed analyses and limitations using these models. We will illustrate the procedure for building a musculoskeletal model by considering the example of the human arm consisting of the forearm and upper arm linked at the elbow joint as shown in Fig. 1.

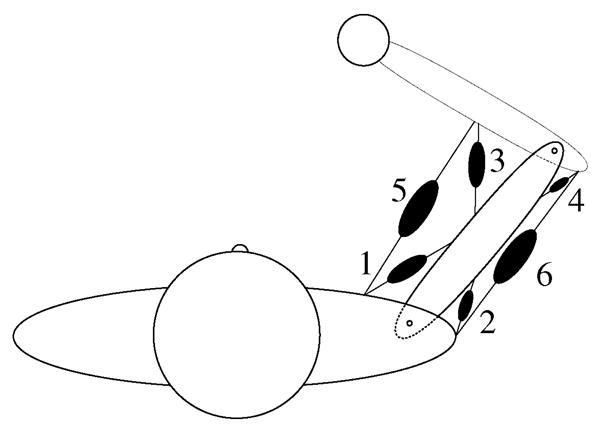

Fig. 1. Simple model of the human arm consisting of two planar joints and six muscles.

A. Computational Environments

Graphical/computational packages like SIMM (Motion Analysis Corporation), AnyBody (AnyBody Technology), MSMS, etc. [27]–[29] are designed to build graphical representations of musculoskeletal systems and translate them into code that is readable by multibody dynamics computational packages like SDFast (PTC), Autolev (Online Dynamics Inc.), ADAMS (MSC Software Corp.), MATLAB (Mathworks Inc.), etc., or to simulate them with their own dynamics solvers. These packages allow users to define musculoskeletal models and calculate quantities such as moment arms and musculotendon lengths.

This engineering approach dates back to the use of computer-aided design tools and finite-element analysis packages to study bone structure and function in the 1960s, which grew to include rigid body dynamics simulators in the mid 1980s like ADAMS and Autolev. Before the advent of these programming environments (as in the case of computer-aided design), engineers had to generate their own equations of motion or Newtonian analysis by hand, and write their own code to solve the system for the purpose of interest. Available packages for musculoskeletal modeling have now empowered researchers without training in engineering mechanics to assemble and simulate complex nonlinear dynamical systems. The risk, however, is that the lack of engineering intuition about how complex dynamical systems behave can lead the user to accept results that one otherwise would not. In addition, to our knowledge, multibody dynamics computational packages have not been cross-validated against each other, or a common standard, to the extent that finite-element analysis code has [30]. The simulation of nonlinear dynamical systems also remains an area of study, with improved integrators and collision algorithms developed every year. An exercise the user can do is to simulate the same planar double or triple pendulum (i.e., a limb) in different multibody dynamics computational packages and compare results after a few seconds of simulation. The differences are attributable to the nuances of the computational algorithms used, which are often beyond the view and control of the user. Whether these shortcomings in dynamical simulators affect the results of the investigation can only be answered by the user and reviewers on a case-by-case basis, and experts can also disagree on computational results in the mainstream of research like gait analysis [31]–[33].
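The sensitivity to the numerical algorithm can be illustrated without any commercial package. The following is a minimal sketch, not a reproduction of any particular simulator: it integrates a single frictionless pendulum (a simplification of the double or triple pendulum suggested above) with two textbook integrators, explicit Euler and fourth-order Runge-Kutta, using the same time step. The parameter values, function names, and the choice of integrators are assumptions made for illustration.

```python
import math

G_OVER_L = 9.81 / 1.0  # gravity over pendulum length (1/s^2); illustrative values


def deriv(state):
    """Time derivative of (angle, angular velocity) for a frictionless pendulum."""
    theta, omega = state
    return (omega, -G_OVER_L * math.sin(theta))


def euler_step(state, dt):
    """One explicit (forward) Euler step."""
    d = deriv(state)
    return (state[0] + dt * d[0], state[1] + dt * d[1])


def rk4_step(state, dt):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(s, d, h):
        return (s[0] + h * d[0], s[1] + h * d[1])

    k1 = deriv(state)
    k2 = deriv(shifted(state, k1, dt / 2))
    k3 = deriv(shifted(state, k2, dt / 2))
    k4 = deriv(shifted(state, k3, dt))
    return (state[0] + dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
            state[1] + dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))


def energy(state):
    """Mechanical energy per unit mass and per unit length squared."""
    theta, omega = state
    return 0.5 * omega ** 2 - G_OVER_L * math.cos(theta)


def simulate(step, state, dt, duration):
    """Advance the state with the given step function for `duration` seconds."""
    for _ in range(round(duration / dt)):
        state = step(state, dt)
    return state


start = (1.0, 0.0)  # released from rest at 1 rad
end_euler = simulate(euler_step, start, 0.01, 5.0)
end_rk4 = simulate(rk4_step, start, 0.01, 5.0)

# The true system conserves energy, so any drift is a numerical artifact.
drift_euler = energy(end_euler) - energy(start)
drift_rk4 = energy(end_rk4) - energy(start)
```

Comparing `end_euler` with `end_rk4`, or the two energy drifts, shows how far two solutions of the same model can separate after a few seconds purely because of the integrator.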

B. Dimensionality and Redundancy

The first decision to be made when assembling a musculoskeletal model is to define dimensionality of the musculoskeletal model (i.e., number of kinematic degrees-of-freedom and the number of muscles acting on them). If the number of muscles exceeds the minimal number required to control a set of kinematic degrees-of-freedom, the musculoskeletal model will be redundant for some submaximal tasks. The validity and utility of the model to the research question will be affected by the approach taken to address muscle redundancy. Most musculoskeletal models have a lower dimensionality than the actual system they are simulating because it simplifies the mathematical implementation and analysis, or because a low-dimensional model is thought sufficient to simulate the task being analyzed. Kinematic dimensionality is often reduced to limit motion to a plane when simulating arm motion at the level of the shoulder [34]–[36], when simulating fingers flexing and extending [37], or when simulating leg movements during gait [38]. Similarly, the number of independently controlled muscles is often reduced [39] for simplicity, or even made equal to the number of kinematic degrees-of-freedom to avoid muscle redundancy [40]. While reducing the dimensionality of a model can be valid in many occasions, one needs to be careful to ensure it is capable of replicating the function being studied. For example, an inappropriate kinematic model can lead to erroneous predictions [41], [42], or reducing a set of muscles too severely may not be sufficiently realistic for clinical purposes.

A subtle but equally important risk is that of assembling a kinematic model with a given number of degrees-of-freedom, but then not considering the full kinematic output. For example, a three-joint planar linkage system to simulate a leg or a finger has three kinematic degrees-of-freedom at the input, and also three kinematic degrees-of-freedom at the output: the x and y location of the endpoint plus the orientation of the third link. As a rule, the number of rotational degrees-of-freedom (i.e., joint angles) maps into as many kinematic degrees-of-freedom at the endpoint [43]. Thus, for example, studying muscle coordination to study endpoint location without considering the orientation of the terminal link can lead to variable results. As we have described in the literature [44], [45], the geometric model and Jacobian of the linkage system need to account for all input and output kinematic degrees-of-freedom to properly represent the mapping from muscle actions to limb kinematics and kinetics.
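The three-joint planar example can be made concrete with a short sketch. The code below, written under the assumption of an ideal serial chain of hinge joints with hypothetical link lengths, computes the endpoint position and terminal-link orientation and the corresponding 3 × 3 Jacobian. Function names and numerical values are illustrative and do not come from the cited work.

```python
import math


def forward_kinematics(thetas, lengths):
    """Return (x, y, phi): endpoint position and orientation of the last link."""
    x = y = phi = 0.0
    for theta, length in zip(thetas, lengths):
        phi += theta                      # absolute orientation of this link
        x += length * math.cos(phi)
        y += length * math.sin(phi)
    return x, y, phi


def jacobian(thetas, lengths):
    """3 x n Jacobian mapping joint velocities to (x_dot, y_dot, phi_dot)."""
    n = len(thetas)
    abs_angles = []
    total = 0.0
    for theta in thetas:
        total += theta
        abs_angles.append(total)
    jac = [[0.0] * n for _ in range(3)]
    for j in range(n):
        # Joint j rotates every link from j out to the tip.
        jac[0][j] = -sum(lengths[k] * math.sin(abs_angles[k]) for k in range(j, n))
        jac[1][j] = sum(lengths[k] * math.cos(abs_angles[k]) for k in range(j, n))
        jac[2][j] = 1.0                   # every joint rotates the terminal link
    return jac


def det3(m):
    """Determinant of a 3 x 3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


lengths = (0.05, 0.03, 0.02)   # hypothetical finger-like link lengths (m)
thetas = (0.3, 0.5, 0.4)       # joint angles (rad)
full_jacobian = jacobian(thetas, lengths)
position_only = full_jacobian[:2]   # drops the orientation row
```

With all three output rows the Jacobian is square, and for this chain its determinant equals l1 · l2 · sin(θ2), so it is invertible away from a straight proximal pair of links. The 2 × 3 `position_only` matrix always has a null space, which is one way to see why ignoring the orientation of the terminal link leaves the problem underdetermined.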

C. Skeletal Mechanics

In neuromuscular function studies, skeletal segments are generally modeled as rigid links connected to one another by mechanical pin joints with orthogonal axes of rotation. These assumptions are tenable in most cases, but their validity may depend on the purpose of the model. Some joints like the thumb carpometacarpal joint, the ankle and shoulder joints are complex and their rotational axes are not necessarily perpendicular [46]–[48], or necessarily consistent across subjects [46], [49], [50]. Assuming simplified models may fail to capture the real kinematics of these systems [51]. While passive moments due to ligaments and other soft tissues of the joint are often neglected, at times they are modeled as exponential functions of joint angles [52], [53] at the extremes of range of motion to passively prevent hyper-rotation. In other cases, passive moments well within the range of motion could be particularly important in the case of systems like the fingers [54], [55] where skin, fat, and hydrostatic pressure tend to resist flexion.

Modeling of contact mechanics could be important for joints like the knee and the ankle where there is significant loading on the articulating surfaces of the bones, and where muscle force predictions could be affected by contact pressure. Joint mechanics are also of interest for the design of prostheses, where the knee or hip could be simulated as contact surfaces rolling and sliding with respect to each other [56]–[58]. Several studies estimate contact pressures using quasi-static models with deformable contact theory (e.g., [59]–[62]). But these models fail to predict muscle forces during dynamic loading. Multibody dynamic models with rigid contact fail to predict contact pressures [7].

For the illustrative example carried throughout this review, we will use the simple two-joint, six-muscle planar limb shown in Fig. 1. We model the upper arm and the forearm as two rigid cylindrical links, with the shoulder and elbow joints each represented as a hinge (pin) joint. We will neglect the torque due to passive structures and assume frictionless joints. We will not consider any contact mechanics at the joints. This model will simulate the movement and force production of the hand (i.e., a fist with a frozen wrist) in a two-dimensional plane perpendicular to the torso as is commonly done in studies of upper extremity function [34]–[36].

Commentary 1

Modeling contact mechanics is the first of several elements we will point out throughout this review where the community of modelers diverge in approach and/or opinion. The computational approach to use when simulating contact mechanics among rigid and deformable bodies remains an area of active research and debate, and no definitive method exists to our knowledge. This affects neuromuscular modeling in two areas.

Joint mechanics. An anatomical joint is a mechanical system where two or more rigid bodies make contact at their articular surfaces (e.g., the femoral head and acetabulum for the hip, the distal femur, patella and tibial plateau for the knee, or the eight wrist bones and distal radius for the wrist). Their congruent anatomical shape, ligaments, synovial capsule, and muscle forces interact to induce kinematic constraints and produce the function of a kinematic joint. These mechanical systems are quite complex and their behavior can be load-dependent [63]. Most modelers correctly assume that the system can be approximated as a system of well-defined centers of rotation for the purposes of whole-limb kinematics and kinetics (e.g., [12], [29], [64]). However, including contact mechanics in joints like the knee and ankle could affect force predictions in muscles crossing these joints. For example, modeling a joint as deformable surfaces that remain in contact introduces additional constraints, thereby reducing the solution space when solving for muscle forces from joint torques [65]. If joint behavior or the specific loading of the articular surfaces is the purpose of the study as when studying cartilage loading, osteoarthritis or joint prostheses (e.g., [56], etc., among many), then it is critical to have detailed models of the multiple constitutive elements of the joint. Recent studies have combined dynamic multibody modeling in conjunction with deformable contact theory for articular contact which makes it possible to simultaneously determine contact pressures and muscle forces during dynamic loading [65]–[69].

Body-world interactions. Faithful and accurate simulations of the interactions among rigid and deformable bodies have been an active area of investigation, including foot–floor contact, accident simulation, surgical simulation, and hand–object interactions (e.g., [70]–[72]). Most recently, there have been advances that have crossed over from the computer animation and gaming world that provide so-called “dynamics engines” that can rapidly compute multibody contact problems [70], [73], [74]. Some recent examples of fast algorithms to simulate body–object interactions include [73] and [75]. While some of these dynamics engines emphasize speed and a realistic look over mechanical accuracy, some examples of new techniques can be both accurate and fast [75], [76].

D. Musculotendon Routing

Next, we need to select the routing of the musculotendon unit consisting of a muscle and its tendon in series [77], [78]. The reason we speak in general about musculotendons (and not simply tendons) is that in many cases it is the belly of the muscle that wraps around the joint (e.g., gluteus maximus over the hip, medial deltoid over the shoulder). In other cases, however, it is only the tendon that crosses any joints as in the case of the patellar tendon of the knee or the flexors of the wrist. In addition, the properties of long tendons affect the overall behavior of muscle, for example by stretching out the force-length curve of the muscle fibers [77]. Most studies assume correctly that musculotendons insert into bones at single points or multiple discrete points (if the actual muscle attaches over a long or broad area of bone). Musculotendon routing defines the direction of travel of the force exerted by a muscle when it contracts. This defines the moment arm r of a muscle about a particular joint, where r is the minimal perpendicular distance of the musculotendon from the joint center for the planar (scalar) case [78]. The moment arm determines both the excursion δs the musculotendon will undergo as the joint rotates by an angle δθ, given by δs = r · δθ, and the joint torque τ at that joint due to the muscle force fm transmitted by the tendon, given by τ = r · fm. For the three-dimensional (3-D) case, the torque is calculated by the cross product of the moment arm with the vector of muscle force, τ = r × fm.
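The two roles of the moment arm can be checked numerically for a planar joint. The sketch below assumes a straight-line musculotendon path between one origin on a fixed proximal segment and one insertion on a rotating distal segment. The attachment distances, the 200 N force, and all function names are hypothetical.

```python
import math


def attachment_points(theta, a=0.25, b=0.04):
    """Origin on the fixed proximal segment, insertion on the rotating distal one.

    The joint center is at (0, 0); a and b are attachment distances in meters.
    """
    origin = (-a, 0.0)
    insertion = (b * math.cos(theta), b * math.sin(theta))
    return origin, insertion


def path_length(theta):
    """Straight-line musculotendon length s(theta)."""
    (ox, oy), (ix, iy) = attachment_points(theta)
    return math.hypot(ix - ox, iy - oy)


def moment_arm_geometric(theta):
    """Perpendicular distance from the joint center to the line of action."""
    (ox, oy), (ix, iy) = attachment_points(theta)
    cross = ox * iy - oy * ix           # z-component of origin x insertion
    return abs(cross) / math.hypot(ix - ox, iy - oy)


def moment_arm_from_excursion(theta, h=1e-5):
    """|ds/dtheta| by central difference, i.e., r = delta_s / delta_theta."""
    return abs(path_length(theta + h) - path_length(theta - h)) / (2 * h)


theta = math.radians(60)
r = moment_arm_geometric(theta)
torque = r * 200.0   # joint torque (N*m) for a hypothetical 200 N muscle force
```

For this geometry the two estimates of r agree to within numerical error, which is the planar relation δs = r · δθ read in both directions.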

In today's models, musculotendon paths are modeled and visualized either by straight lines joining the points of attachment of the muscle; straight lines connecting “via points” attached to specific points on the bone which are added or removed depending on joint configuration [79] or as cubic splines with sliding and surface constraints [80]. Several advances also allow representing muscles as volumetric entities with data extracted from imaging studies [81], [82], and defining tendon paths as wrapping in a piecewise linear way around ellipses defining joint locations [12], [64]. The path of the musculotendon in these cases is defined based on knowledge of the anatomy. Sometimes, it may not be necessary to model the musculotendon paths but obtaining a mathematical expression for the moment arm (r) could suffice. The moment arm is often a function of joint angle and can be obtained by recording incremental tendon excursions (δs) and corresponding joint angle changes (δθ) in cadaveric specimens (e.g., [83], [84]).

For the arm model example (Fig. 1), we will model musculotendon paths as straight lines connecting their points of insertion. We will attach single-joint flexors and extensors at the shoulder (pectoralis and deltoid) and elbow (biceps long head and triceps lateral head) and double-joint muscles across both joints (biceps short head and triceps long head). Muscle origins and points of insertion are estimated from the anatomy. In our model of the arm in Fig. 1, we shall model musculotendons as simple linear springs. We then assign values to model parameters like segment inertia, elastic properties of the musculotendons, etc. At this point the model is complete and ready for dynamical analysis.
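As a hedged illustration of how such a model maps muscle forces to joint torques, the sketch below builds a constant 2 × 6 moment arm matrix for the six muscles named above and evaluates the joint torques for two different force patterns. The moment arm values and sign convention (positive for flexion) are hypothetical, and real moment arms generally vary with joint angle.

```python
MUSCLES = ["pectoralis", "deltoid", "biceps long head",
           "triceps lateral head", "biceps short head", "triceps long head"]

# Rows: shoulder, elbow. Entries are moment arms in meters, positive for flexion.
# All values are hypothetical and held constant for simplicity.
MOMENT_ARMS = [
    [0.04, -0.04, 0.00, 0.00, 0.03, -0.03],
    [0.00, 0.00, 0.03, -0.02, 0.03, -0.02],
]


def joint_torques(moment_arms, forces):
    """Net torque at each joint: one row of moment arms times the force vector."""
    return [sum(r * f for r, f in zip(row, forces)) for row in moment_arms]


# Two different muscle force patterns (N), in the order listed in MUSCLES.
forces_a = [100.0, 0.0, 50.0, 0.0, 0.0, 0.0]
forces_b = [150.0, 50.0, 50.0, 0.0, 0.0, 0.0]   # adds shoulder co-contraction

torques_a = joint_torques(MOMENT_ARMS, forces_a)
torques_b = joint_torques(MOMENT_ARMS, forces_b)
```

Both patterns produce the same net torques (about 4.0 N·m at the shoulder and 1.5 N·m at the elbow), a small instance of the muscle redundancy discussed in Section II-B.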

Commentary 2

Until recently, tendon routing was defined and computed using via points along the portions of its path where it crossed a joint. A more realistic extension of this process uses tendon paths that wrap around tessellated arbitrary bone surfaces. These paths are still defined to pass along specific via points, but the tendon path between via points need not be straight and can be affected by the shape of the bones and the tension in the tendon [76], [80]. Another approach is to eliminate via points altogether and calculate the behavior of the tendons as they drape over surfaces. This allows calculating the way tendon structures slide over complex bones, where tension transmission is affected by finger posture and tendon loading [80], [85]–[88]. These methods come at a computational cost but are arguably necessary in some cases, as when simulating the tendinous networks of the hand [80], [86], [88].

E. Musculotendon Models

The most commonly used computational model of musculotendon force is the one based on the Hill-type model of muscle [77], largely because of its computational efficiency, scalability, and because it is included in simulation packages like SIMM (Motion Analysis Corporation). In Hill-type models, the entire muscle is considered to behave like a large sarcomere with its length and strength scaled-up, respectively, to the fiber length and physiological cross-sectional area of the muscle of interest. This model consists of a parallel elastic element representing passive muscle stiffness, a parallel dashpot representing muscle viscosity, and a parallel contractile element representing activation-contraction dynamics, all in series with a series elastic element representing the tendon. The force generated by a muscle depends on muscle activation, physiological cross-sectional area of the muscle, pennation angle, and force-length and force-velocity curves for that muscle. These parameter values are generally based on animal or cadaveric work [89]. Five parameters define the properties of this musculotendon model. Four of these are specific to the muscle: the optimal muscle fiber length, the peak isometric force (found by multiplying maximal muscle stress by physiological cross-sectional area), the maximal muscle shortening velocity, and the pennation angle. The fifth is the slack length of the tendon (tendon cross-sectional area is assumed to scale with its muscle's physiological cross-sectional area [90]). Model activation-contraction dynamics is adjusted to match the properties of slow or fast muscle fiber types by changing the activation and deactivation time constants of a first order differential equation [77].
This Hill-type model has undergone several modifications but remains a first-order approximation to muscle as a large sarcomere with limited ability to simulate the full spectrum of muscles, or of fiber types found within a same muscle, or the properties of muscle that arise from it being composed of populations of motor units such as signal dependent noise, etc. Several researchers have developed alternative models for muscle contraction, which were used in specific studies [91]–[94].
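To make the structure of a Hill-type model concrete, the following is a minimal sketch under strong simplifying assumptions: the tendon is treated as rigid (so the tendon slack length and series elastic element are omitted), the dashpot is omitted, and the force-length, force-velocity, and passive curves are generic illustrative shapes rather than the curves of [77] or of any simulation package. All names, curve shapes, and default constants are assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class HillMuscle:
    f_max: float             # peak isometric force (N)
    l_opt: float             # optimal fiber length (m)
    v_max: float             # maximal shortening velocity (optimal lengths per s)
    pennation: float         # pennation angle (rad)
    tau_act: float = 0.01    # activation time constant (s), illustrative
    tau_deact: float = 0.04  # deactivation time constant (s), illustrative


def force_length(l_norm, width=0.45):
    """Illustrative bell-shaped active force-length curve, peak of 1 at l_norm = 1."""
    return math.exp(-((l_norm - 1.0) / width) ** 2)


def force_velocity(v_norm, a_hill=0.25, f_ecc=1.4):
    """Illustrative force-velocity curve; v_norm > 0 is shortening (fraction of v_max)."""
    if v_norm >= 1.0:
        return 0.0
    if v_norm >= 0.0:
        return (1.0 - v_norm) / (1.0 + v_norm / a_hill)   # Hill hyperbola
    stretch = -v_norm
    return 1.0 + (f_ecc - 1.0) * stretch / (stretch + a_hill)  # saturates at f_ecc


def passive_force(l_norm, k=5.0, strain_max=0.6):
    """Illustrative exponential parallel elastic element, zero below optimal length."""
    if l_norm <= 1.0:
        return 0.0
    return (math.exp(k * (l_norm - 1.0) / strain_max) - 1.0) / (math.exp(k) - 1.0)


def muscle_force(m, activation, fiber_length, shortening_velocity):
    """Force along the tendon for a given activation and fiber state."""
    l_norm = fiber_length / m.l_opt
    v_norm = shortening_velocity / (m.v_max * m.l_opt)
    active = activation * force_length(l_norm) * force_velocity(v_norm)
    return m.f_max * (active + passive_force(l_norm)) * math.cos(m.pennation)


def activation_step(m, activation, excitation, dt):
    """One Euler step of first-order activation dynamics."""
    tau = m.tau_act if excitation > activation else m.tau_deact
    return activation + dt * (excitation - activation) / tau
```

In this sketch a fully activated muscle held isometric at optimal fiber length with zero pennation produces exactly `f_max`, and changing `tau_act` and `tau_deact` is the only way fiber type enters, mirroring the description above.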

The alternative approach has been to model muscles as populations of motor units. While this is much more computationally expensive, it is done with the purpose of being more physiologically realistic and enabling explorations of other features of muscle function. A well-known model is that proposed by Fuglevand and colleagues [95], which has been used extensively to investigate muscle physiology, electromyography, and force variability. However, the computational overhead of this model has largely limited it to studies of single muscles, and it is not usually part of neuromuscular models of limbs. In order to develop a population-based model that could be used easily by researchers, Loeb and colleagues developed the Virtual Muscle software package [96]. It integrates motor recruitment models from the literature and extensive experimentation with musculotendon contractile properties into a software package that can be easily included in multibody dynamic models run in MATLAB (The Mathworks, Natick, MA).

Commentary 3

Most investigators will agree that defining and implementing more realistic muscle models is a critical challenge to be overcome in musculoskeletal modeling. The reasons include the following.

Muscles are the actuators in musculoskeletal systems, and the neural control and mechanical performance of the system depend heavily on their properties. There is abundant experimental evidence that the nonlinear, time-varying, highly individuated properties of muscles determine much about neuromuscular function and performance in health and disease. Therefore, before realistic muscle models are available, testing theories of motor control will remain a challenge.

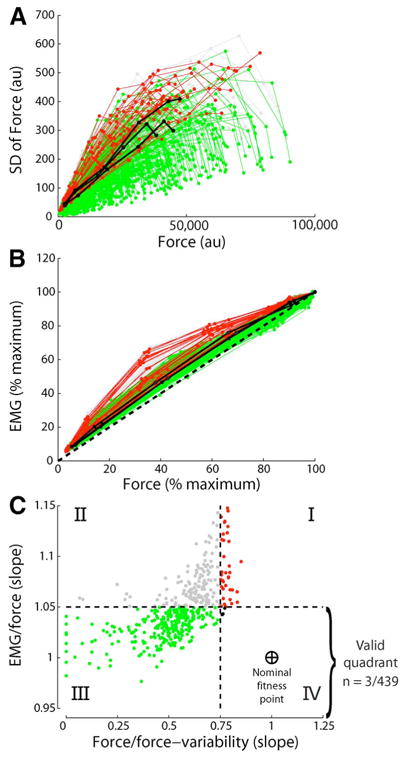

Muscle models today fall short of replicating some fundamental physiological and mechanical features of muscles. In a recent study, for example, Keenan and Valero-Cuevas [97] showed that the most widely used model of populations of motor units does not robustly replicate two fundamental tenets of muscle function: the scaling of EMG and force variability with increasing muscle force. Therefore, there are some critical neural features of muscle function that are yet to be characterized experimentally and encoded computationally (for another example, see [98]).

Is it even desirable or possible to build a “complete” model of muscle function? A good model is best tailored to a specific question because it can make testable predictions and/or explain a specific experimental phenomenon. Thus, such models are more likely to be valid and useful. For example, some researchers focus on time- and context-sensitive properties like residual force enhancement [98] or force depression [99], others investigate the complex 3-D architecture of muscles and muscle fibers [100], and others mentioned above focus on total force production or populations of motor units. Therefore the challenge is to decide what is the best combination of mechanistic and phenomenological elements to make the model valid and useful for the study at hand.

Muscle energetics is another important aspect of modeling that deserves attention. An obvious disadvantage of Hill-type muscle models is that they do not capture the distribution of cross bridge conformations for a given muscle state (length, velocity, activation, etc.) because the details of energy storage and release in eccentric and concentric contractions associated with cross bridge state and parallel elastic elements are only vaguely understood [101], [102]. Therefore, muscle energetics is a clear case where, in spite of what is said in the above paragraph, it may be necessary to create models that span multiple “scales” or “levels of complexity.” Several authors (e.g., [103], [104]) have repeatedly pointed out the need for accurate muscle energetics to understand real-world motor tasks.

Lastly, modeling and understanding muscle function will require embracing the fact that muscle contraction is an emergent dynamical phenomenon mediated (or even governed?) by spinal circuitry. So far most modelers have focused on driving muscle force with an unadulterated motor command. Motor unit recruitment, muscle tone, spasticity, clonus, signal dependent noise, to name a few, are features of muscle function affected to a certain extent by muscle spindles, Golgi tendon organs, and spinal circuitry. Thus advancing and using models of muscle proprioceptors and spinal circuitry will become critical to our understanding of physiological muscle function [105]–[107].

III. Forward and Inverse Simulations

In “forward” models, the behavior of the neuromuscular system is calculated in the natural order of events: from neural or muscle command to limb forces and movements. In “inverse” models, the behavior is assumed or measured and the model is used to infer and predict the time histories of neural, muscle, or torque commands that produced it. The same biomechanical model governed by Newtonian mechanics is used in either approach, but it is used differently in each analysis [24], [26].

A. Forward Models

The inputs to a forward musculoskeletal model are usually in the form of muscle activations (or torque commands if the model is torque driven) and the outputs are the forces and/or movements generated by the musculoskeletal system. The system dynamics is represented using the following equation:

$$I(\theta)\,\ddot{\theta} + C(\theta, \dot{\theta}) + G(\theta) = M(\theta)\,F_M + F_{ext} \tag{1}$$

where I is the system mass matrix, θ̈ the vector of joint accelerations, θ the vector of joint angles, C the vector of Coriolis and centrifugal forces, G the gravitational torque, M the instantaneous moment arm matrix, F_M the vector of muscle forces, and F_ext the vector of external torques due to ground reaction forces and other environmental forces. This system of ordinary differential equations is numerically integrated to obtain the time course of all the states (joint angles θ and joint angular velocities θ̇) of the system. The input muscle activations could be derived from measurements of muscle activity (electromyogram) or from an optimization algorithm that minimizes some cost function, for example, the error in joint angle trajectory for all joints and energy consumed [108]. Forward dynamics has also been used to determine internal forces that cannot be measured experimentally, such as ligament forces during activity or contact loads in the joints. It gives insight into energy utilization, stability, and muscle activity during function, for example in walking simulations [109]. It gives the user access to all the parameters of the system and makes it possible to simulate the effects of changing them. This makes it a useful tool to study pathological motion and for rehabilitation. Reference [22] provides a review of many of the applications of forward dynamics modeling.
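A forward simulation of the two-joint arm can be sketched in a few dozen lines. The example below is torque driven and assumes the equations of motion take the common rigid-body form I(θ)θ̈ + C(θ, θ̇) + G(θ) = τ, where τ stands in for the net muscle and external torque. The segment parameters are hypothetical, gravity is assumed to act in the plane of motion, and a fixed-step fourth-order Runge-Kutta integrator is used purely for illustration.

```python
import math

# Hypothetical segment parameters: mass (kg), length (m), center-of-mass
# distance (m), and moment of inertia about the center of mass (kg*m^2).
M1, L1, LC1, I1 = 2.0, 0.30, 0.15, 0.015
M2, L2, LC2, I2 = 1.5, 0.30, 0.15, 0.011
GRAV = 9.81  # in-plane gravity (assumption); set to 0.0 for a horizontal plane


def mass_matrix(q):
    """Entries (m11, m12, m22) of the symmetric 2 x 2 mass matrix."""
    c2 = math.cos(q[1])
    m11 = M1 * LC1 ** 2 + I1 + M2 * (L1 ** 2 + LC2 ** 2 + 2 * L1 * LC2 * c2) + I2
    m12 = M2 * (LC2 ** 2 + L1 * LC2 * c2) + I2
    m22 = M2 * LC2 ** 2 + I2
    return m11, m12, m22


def gravity_torques(q):
    """Gravitational torque vector G, angles measured from the horizontal."""
    g2 = M2 * LC2 * GRAV * math.cos(q[0] + q[1])
    g1 = (M1 * LC1 + M2 * L1) * GRAV * math.cos(q[0]) + g2
    return g1, g2


def accelerations(q, qd, tau):
    """Solve the equations of motion for the joint accelerations."""
    m11, m12, m22 = mass_matrix(q)
    h = M2 * L1 * LC2 * math.sin(q[1])
    c1 = -h * (2 * qd[0] * qd[1] + qd[1] ** 2)   # Coriolis and centrifugal terms
    c2 = h * qd[0] ** 2
    g1, g2 = gravity_torques(q)
    b1, b2 = tau[0] - c1 - g1, tau[1] - c2 - g2
    det = m11 * m22 - m12 * m12
    return (m22 * b1 - m12 * b2) / det, (m11 * b2 - m12 * b1) / det


def deriv(state, tau):
    """State is (theta1, theta2, omega1, omega2)."""
    qdd = accelerations(state[:2], state[2:], tau)
    return (state[2], state[3], qdd[0], qdd[1])


def rk4_step(state, tau, dt):
    def add(s, d, h):
        return tuple(si + h * di for si, di in zip(s, d))

    k1 = deriv(state, tau)
    k2 = deriv(add(state, k1, dt / 2), tau)
    k3 = deriv(add(state, k2, dt / 2), tau)
    k4 = deriv(add(state, k3, dt), tau)
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def total_energy(state):
    """Kinetic plus gravitational potential energy (J)."""
    q, qd = state[:2], state[2:]
    m11, m12, m22 = mass_matrix(q)
    kinetic = 0.5 * (m11 * qd[0] ** 2 + 2 * m12 * qd[0] * qd[1] + m22 * qd[1] ** 2)
    potential = GRAV * (M1 * LC1 * math.sin(q[0])
                        + M2 * (L1 * math.sin(q[0]) + LC2 * math.sin(q[0] + q[1])))
    return kinetic + potential


def simulate(state, tau, dt, steps):
    """Integrate forward with a constant joint torque vector tau."""
    for _ in range(steps):
        state = rk4_step(state, tau, dt)
    return state


start = (0.0, 0.0, 0.0, 0.0)                      # arm horizontal and at rest
end = simulate(start, (0.0, 0.0), 0.001, 1000)    # 1 s of passive motion
```

With zero applied torque the total energy should stay constant, which gives a simple check on both the equations and the integrator. In a muscle-driven version, `tau` would be replaced by the product of the moment arm matrix and the muscle forces.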

B. Inverse Models

Inverse dynamics consists of determining joint torque and muscle forces from experimentally measured movements and external forces. Since the number of muscles crossing a joint is higher than the number of degrees-of-freedom at the joint, multiple sets of muscle forces could give rise to the same joint torques. This is the load-sharing problem in biomechanics [110]. A single combination is chosen by introducing constraints such that the number of unknown variables is reduced and/or based on some optimization criterion, like minimizing the sum of muscle forces or muscle activations. Several optimization criteria have been used in the literature [111]–[113]. Muscle forces determined by this analysis are often corroborated by electromyogram recordings from specific muscles [114], [115]. Since inverse dynamics consists of using the outputs of the real system as inputs to a mathematical model whose dynamics do not exactly match those of the real system, the predicted behavior of the model does not necessarily match the measured behavior of the real system. This is an important problem in inverse dynamics and is discussed in more detail in [116].
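The load-sharing problem can be illustrated for a single joint, where one commonly used type of criterion has a closed-form solution. The sketch below minimizes the sum of squared normalized muscle forces, Σ (f_i / F0_i)², subject to the torque constraint Σ r_i f_i = τ and f_i ≥ 0. This criterion is only one illustrative choice among the many used in the literature. The upper bound f_i ≤ F0_i is not enforced, and the muscle parameters and function names are hypothetical.

```python
def share_load(torque, moment_arms, f_max):
    """Muscle forces minimizing sum((f_i / f_max_i)**2) for one joint.

    Muscles whose moment arm opposes the required torque receive zero force;
    the rest share the load in proportion to r_i * f_max_i**2.
    The constraint f_i <= f_max_i is NOT enforced in this sketch.
    """
    forces = [0.0] * len(moment_arms)
    if torque == 0.0:
        return forces
    agonists = [i for i, r in enumerate(moment_arms) if r * torque > 0.0]
    if not agonists:
        raise ValueError("no muscle can produce torque in the required direction")
    denom = sum((moment_arms[i] * f_max[i]) ** 2 for i in agonists)
    for i in agonists:
        forces[i] = torque * moment_arms[i] * f_max[i] ** 2 / denom
    return forces


def cost(forces, f_max):
    """The optimization criterion: sum of squared normalized forces."""
    return sum((f / f0) ** 2 for f, f0 in zip(forces, f_max))


# Hypothetical elbow: two flexors and one extensor (moment arms in m, forces in N).
moment_arms = [0.04, 0.03, -0.025]
f_max = [600.0, 400.0, 800.0]

optimal = share_load(10.0, moment_arms, f_max)   # 10 N*m of flexion torque
single = [250.0, 0.0, 0.0]                       # same torque from one muscle only
```

For these values the two flexors carry about 200 N and 66.7 N, the extensor is silent, and the cost is lower than when a single muscle produces the whole torque. A different criterion would select a different member of the same family of feasible solutions.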

Both forward and inverse models are useful and can be complementary and the choice is largely driven by the goals of the study. The main challenge with both these analyses is experimental validation because many of the variables determined using either approach cannot be measured directly. The reader is directed to articles and textbooks that describe these methods in detail [12], [24], [64], [117]–[119].

IV. Computational Methods for Model Learning, Analysis, and Control

We have discussed the computational methods used to define and assemble known musculoskeletal elements of models. However, there exist complementary computational methods to expand the utility of these models in several ways. For example:

- use experimental data to “learn” the complex patterns or functional relationships, and thereby create model elements that are not otherwise available (e.g., the inverse dynamics of a complex limb, mass properties, complex joint kinematics, etc.);
- find families of feasible solutions when problems are high dimensional, nonlinear, etc. (e.g., characterize kinematic and kinetic redundancy);
- find specific optimized solutions for a specific task;
- establish the consequences of parameter variability and uncertainty;
- explore possible control strategies used by the nervous system;
- predict the consequences of disease, treatment, and other changes in the neuromusculoskeletal system;
- consider noise in sensors and actuators.

The computational methods that allow such explorations stem from the interface of three established fields combining engineering, statistics, computer science, and applied mathematics: machine learning, control theory, and estimation-detection theory. While these fields are vast, and the subject of active research in their own right, we portray a categorization of their techniques and interactions as they relate to our topic (Fig. 2). Experts in these fields will have valid and understandable objections to our specific simplifications and categorizations. However, we believe that nonspecialists will nevertheless benefit from this categorization at the outset of their exploration of these areas, and that nuance will emerge as they become proficient.

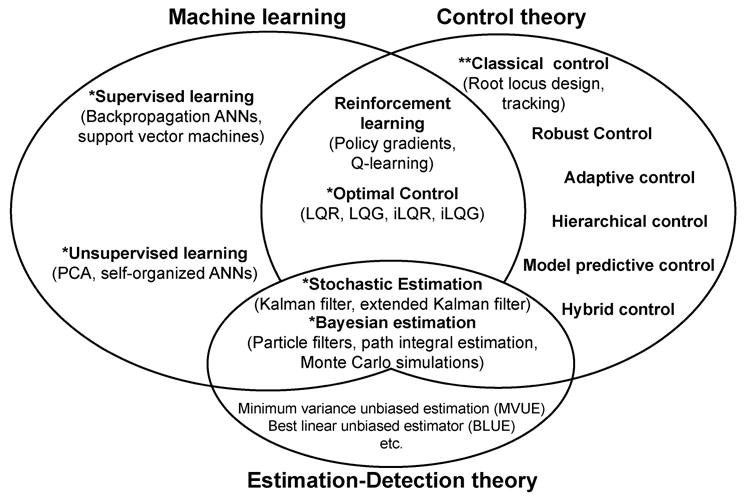

Fig. 2.

Schematic description of the interactions among machine learning, control theory, and estimation-detection theory.

What is most important to extract from this categorization is that, even though most of these areas matured decades ago, only a few techniques are commonly used in neuromuscular modeling (indicated with **) and a few others are beginning to be used (indicated with *). To be clear, several of these techniques are routinely used, and even overused, in the context of psychophysics, biomechanical analysis, gait and EMG analysis, data processing, motor control, etc. Therefore, they will not be altogether new to someone familiar with those fields. However, neuromuscular modeling has not yet fully tapped into these available computational techniques. Our aim here is to succinctly describe them in the context of neuromuscular modeling and point to useful literature.

Another important idea we wish to convey is that the expertise you may have with one of these techniques in a different context enables its use for neuromuscular modeling. For example, you may be familiar with the use of principal components analysis for EMG analysis, and the same technique can be used to approximate the main interactions among the parameters of a model.

Lastly, we wish to invite the community of practitioners and students in machine learning, control theory, and estimation-detection theory to join forces with our community of neuromuscular modelers. For example, we can find collaborators in those fields, train students with backgrounds in those fields, or expand our use of those techniques. This commitment is particularly necessary to move beyond traditional discipline-based training where, for example, control theory is taught in the electrical engineering curriculum, and machine learning in computer science—and each is taught as mutually independent, and separate from the problems of neuromuscular systems.

V. Machine-Learning Techniques for Neuromuscular Modeling

Machine learning is the general term used for a scientific discipline whose purpose is to design and develop computational algorithms that allow computers to learn based on available data (such as from experiments or databases) or on-line during iterative or exploratory behavior [120]–[122]. For the purposes of this review, we will use the two-link arm model introduced in Section II to illustrate two main classes of machine-learning approaches.

Learning functional relationships. It is often necessary to use experimental data to arrive at a computational representation of model elements lacking analytical description. Or even if such analytical representation exists, it may only be an approximation that needs to be refined due to structural or parameter uncertainty. Learning functional relationships has been called a “black box” approach.

Learning solutions to redundant problems (i.e., one-to-many mappings). Machine-learning techniques can be used to solve the redundancy problem common in neuromuscular systems when these solutions cannot be found analytically, particularly, if the problem is nonlinear, nonconvex or high-dimensional.

A. Learning Functional Relationships

In neuromuscular models, a functional relationship may describe, for example, the inertia tensor, moment arm matrix, Jacobian matrix, or inverse dynamics. Such relationships could be derived analytically, but often an analytical solution is not available or feasible, e.g., due to intersubject variability or structural uncertainties, like variability or uncertainty about link lengths, joint centers of rotation, centers of mass, and inertial properties. For minor uncertainties, where only a few parameters need to be determined, these parameters could be inferred by fitting the model to experimental data. For example, limb lengths could be extracted from motion tracking data using probabilistic-inference methods [123]. Such an approach, however, becomes increasingly difficult if too many parameters are unknown or uncertain. Apart from computational problems, the state that fully defines the dynamics of the neuromuscular system may be unobservable [124]. These shortcomings motivate methods to learn functional relationships, as described in this section. These methods focus on the so-called “model-free” approach that does not require an a priori analytical model.

This model-free approach avoids finding the underlying structure of a system. Examples of finding the structure, e.g., the number of model elements and their connectivity, can be found in [87] and [125]–[127]. Typically, the search space for these problems is large and the fitness landscape is often fragmented and discontinuous: that is, the fitness of a model can change dramatically when a model element is added or removed [87], [128]. In this section, however, we focus on the aim of replacing unknown elements of neuromuscular models by learned functional representations.

We illustrate learning functional relationships using our arm-model example (Section II). Our task is to track a given trajectory with the hand. Here, we omit finding and implementing a controller. Instead, we want to find a computational representation of the inverse dynamics—which in turn may be used by a controller for tracking. For this simple example, the inverse dynamics can be found analytically, but for illustration purposes, we assume it is unknown.

In our task, the goal of the machine-learning algorithm is to find a computational function that maps from desired accelerations of the endpoint onto joint torques. Before learning this mapping, we need to identify the dependencies across variables so that they can be measured. That is, the appropriate data need to be collected. Note that this implies that the modeler has (or will spend time acquiring) an intuitive sense of the underlying causal interactions at play to properly identify the data to collect. For example, the joint torques τ will depend on the limb's mass and inertial properties, the state variables of the system (joint angles, x, and angular velocities, ẋ), and, finally, on the desired hand acceleration, ẍ*; thus, the torques are τ = f(x, ẋ, ẍ*) if mass and inertia parameters are assumed constant. For ease of illustration, we assume that the limb is controlled by torque motors (finding muscle commands is illustrated later in this section) and that the Jacobian of the system is full rank (i.e., the dynamics is invertible). Problems with noninvertible mappings are illustrated in Section V-B. We now critically review several techniques to find the target mapping from measurements.

1) Computational Representation of Functional Relationships

A foundation of machine-learning methods is to find numerical functions that approximate relationships in data. These functions can take numerous forms that range from linear and polynomial to Gaussian and sinusoidal or sum of these. In the machine-learning framework, these functions are called basis functions [120], [122]. A typical scenario in a machine-learning problem is for the modeler to prespecify the basis functions to fit to the data. In this case, the modeler has an a priori opinion of what the underlying structure of the mapping should be. If the a priori opinion is valid, then these algorithms converge quickly to the desired mapping and have good performance. However, for many problems in neuromuscular biomechanics, such intuition or prior knowledge is not available. More advanced machine-learning algorithms can select from among families of basis functions, as well as estimate their parameter values [122], [129]. As the basis functions become more complex, however, the model becomes more opaque and provides less intuition. We now discuss the use of basis functions in the context of supervised learning.
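To make the idea of prespecified basis functions concrete, the following sketch fits a linear combination of Gaussian basis functions to noisy samples of a sine wave by ordinary least squares. The target function, the number of centers, the width, and the helper name `gaussian_features` are all assumptions chosen for illustration; none of them comes from the reviewed literature.

```python
import numpy as np

def gaussian_features(x, centers, width):
    """Evaluate one Gaussian basis function per center at each input x."""
    return np.exp(-0.5 * ((x[:, None] - centers[None, :]) / width) ** 2)

rng = np.random.default_rng(0)
x_train = np.linspace(-np.pi, np.pi, 200)
y_train = np.sin(x_train) + 0.02 * rng.standard_normal(x_train.size)

centers = np.linspace(-np.pi, np.pi, 10)   # prespecified by the modeler
width = 0.7                                # also a modeling choice
Phi = gaussian_features(x_train, centers, width)

# Only the weights of the basis functions are learned (a linear problem).
weights, *_ = np.linalg.lstsq(Phi, y_train, rcond=None)

x_test = np.linspace(-3.0, 3.0, 50)
y_pred = gaussian_features(x_test, centers, width) @ weights
max_error = np.max(np.abs(y_pred - np.sin(x_test)))
```

Because the centers and width are fixed in advance, the fit is only as good as that a priori choice; learning the centers and widths as well turns the problem into a nonlinear optimization.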

2) Supervised Learning Methods

In supervised learning, for a given input pattern, we posit an a priori function to produce the corresponding output pattern. Thus, the problem is function approximation, which is also known as regression analysis. Generally, the input–output relationship will be nonlinear. A common approach to nonlinear regression is to approximate an input–output relationship with a linear combination of basis functions [121]. Popular examples of this approach are neural networks [130], support vector regression [131], and Gaussian process regression [132]; the latter has been introduced to the machine-learning community by Williams and Rasmussen [133], but the algorithm is the same as the 50-year-old “Kriging” interpolation [134], [135] developed by Daniel Krige and Georges Matheron.

Some supervised learning methods go beyond producing a functional mapping, and also predict confidence boundaries for each predicted output. Gaussian process regression is an example of these methods that has a solid probabilistic foundation and therefore enjoys high academic interest. Unfortunately, however, Gaussian process regression is computationally expensive: the training time (i.e., computational cost) scales with the cube of the number of training patterns. Faster variants have been developed, but they essentially rely on choosing a small enough set of representative data points to make the solution computationally feasible [132]. If the computation of confidence boundaries is not important, then support vector regression is a faster alternative because the training time scales with the square of the number of training patterns.
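The sketch below illustrates how Gaussian process regression returns both a prediction and a confidence estimate, and where the cubic cost arises. It is a bare-bones zero-mean implementation with a squared-exponential kernel; the kernel, its length scale, the noise level, and the toy data are assumptions made for illustration only.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=1.0):
    """Squared-exponential covariance between 1-D input arrays a and b."""
    return np.exp(-0.5 * ((a[:, None] - b[None, :]) / length_scale) ** 2)

def gp_predict(x_train, y_train, x_test, noise_std=0.1):
    """Posterior mean and standard deviation of a zero-mean GP."""
    K = rbf_kernel(x_train, x_train) + noise_std**2 * np.eye(x_train.size)
    L = np.linalg.cholesky(K)          # factorization cost grows as n**3
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    K_s = rbf_kernel(x_train, x_test)
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = 1.0 - np.sum(v**2, axis=0)   # prior variance is 1 for this kernel
    return mean, np.sqrt(np.maximum(var, 0.0))

x_train = np.linspace(-3.0, 3.0, 15)
y_train = np.sin(x_train)
# One query inside the training range and one far outside it.
mean, std = gp_predict(x_train, y_train, np.array([0.5, 6.0]))
```

The predicted standard deviation is small near the training data and returns toward the prior value far from it, which is the kind of confidence boundary referred to above.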

An alternative for fast computation and with the option to compute confidence boundaries is locally weighted linear regression [136]–[138]. A challenge with locally weighted regression is the placement of the basis functions, which are typically Gaussian. An optimal choice for centering Gaussian functions is often numerically infeasible. A further problem of locally confined models arises in high-dimensional spaces: the proportional volume of the neighborhood decreases exponentially with increasing dimensionality; thus, eventually this volume may not contain enough data points for a meaningful estimation of the regression coefficients—see the “curse of dimensionality” [139]. Counteracting this problem using local models with broad Gaussian basis functions is often infeasible, since these may lead to over-smoothing and loss of detail. Fortunately, many biological data distributions are confined to low-dimensional manifolds, which can be exploited for supervised learning [137], [138], [140].
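A simplified, query-centered variant of locally weighted linear regression can be sketched as follows. Here a single Gaussian weighting function is centered on each query point, which sidesteps the placement problem discussed above but is not the receptive-field scheme of the cited methods. The bandwidth and the toy data are assumptions.

```python
import numpy as np

def lwr_predict(x_query, x_train, y_train, bandwidth=0.3):
    """Predict y at x_query with a weighted straight-line fit around x_query."""
    # Gaussian weights emphasize training points close to the query.
    w = np.exp(-0.5 * ((x_train - x_query) / bandwidth) ** 2)
    X = np.column_stack([np.ones_like(x_train), x_train - x_query])
    # Weighted normal equations: (X^T W X) beta = X^T W y.
    XtW = X.T * w
    beta = np.linalg.solve(XtW @ X, XtW @ y_train)
    return beta[0]   # the intercept is the prediction at x_query

x_train = np.linspace(0.0, 2.0 * np.pi, 100)
y_train = np.sin(x_train)
y_hat = lwr_predict(np.pi / 2.0, x_train, y_train)
```

A broader bandwidth would include more data points in each local fit but would flatten the peak of the sine, which is the over-smoothing trade-off noted in the text.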

Generally, finding the model parameters to fit a functional relationship is an optimization problem; therefore, we briefly discuss convergence and local minima. Some of the above-mentioned techniques, like linear regression and Kriging interpolation, provide analytic solutions to function approximation and, thus, avoid problems with lack of convergence and local minima. However, finding a proper family of basis functions and their parameters is typically a complex optimization problem requiring an iterative solution. Whereas most established methods have guaranteed convergence [122], they may result in local minima, which are not globally optimal. This problem has been addressed by using, for example, annealing schemes [141], [142] and genetic algorithms [143]. The latter are particularly useful if the parameter domain is discrete, as it is for the topology of neural networks [144]. As a downside, these optimization methods tend to be computationally complex and provide no guarantee of finding a globally optimal solution; the problem of local minima remains, therefore, an area of active research.

Commentary 4

Artificial neural networks (ANNs) are perhaps the best-known example of supervised learning. They are widespread, but their use has also been controversial.

There are largely two communities who use ANNs. From the perspective of one community, the network connectivity, parallel processing, and learning rules are biologically inspired. Therefore, the focus is on understanding computation in biological neurons, and the fact that certain networks can do function approximation efficiently is simply an additional benefit [145]. In contrast, the statistical-machine-learning community sees ANNs as a specific algorithmic implementation and focuses on the function-approximation problem per-se and, thus, sees no need to address this problem exclusively with neural networks [121], [122].

The selection of the topology of the network (number of neurons and their connectivity) is to a large extent heuristic, and unrelated to the a priori knowledge of the underlying structure of the mapping.

The more complex the network, the more it will tend to overfit the data and lack generalization. Heuristics have been developed to mitigate overfitting: for example, the number of parameters to learn should be less than 1/10 of the training data [130].

3) Data Collection and Learning Schemes

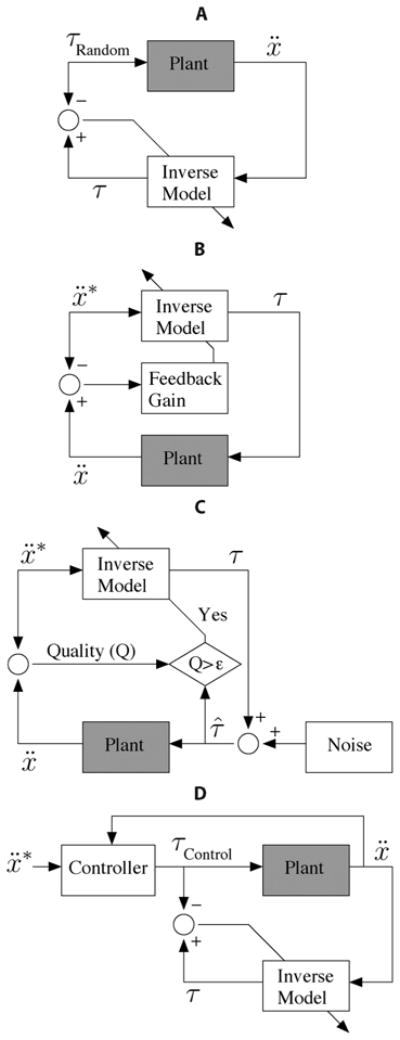

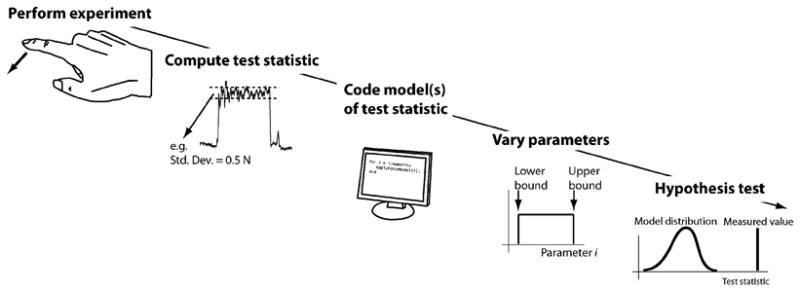

Having presented the nature of function approximation, we now describe different strategies for collecting training data necessary to compute the approximation (Fig. 3). Here, we focus on learning inverse mappings, like the inverse dynamics of a limb, which pose a challenge for data collection (Fig. 4).

Fig. 3.

Block diagram representation of data collection and supervised learning schemes (see text for detailed description of each case). In every case, data is collected in the real world by feeding joint torques to the real-world Plant (gray block). These torques can be: (A) selected at random, (B) based on a preliminary inverse model that may (C) include noise and selective use of training data, or (D) selected with the benefit of a demonstrator. For simplicity of illustration, the dependence of the inverse model and controller on state, x, ẋ, is omitted. (A) Direct inverse modeling. (B) Feedback-error learning. (C) Staged learning. (D) Learning from demonstration.

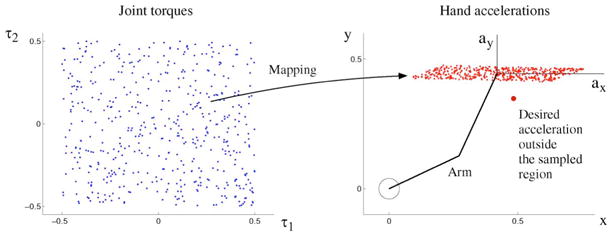

Fig. 4.

Illustration that an exploration in input space (here, torque) may not sample a desired output (acceleration). Sampling in input space is limited to the range ±0.5—any practical setting requires limits of exploration.

In direct inverse modeling [146], a sequence of random torques is delivered to the system to produce and record hand accelerations [Fig. 3(a)]. To assemble input–output training patterns, we take as input the observed time series of the arm state (posture, velocity) and acceleration, and as output the corresponding torque time series. The inverse mapping is then obtained using a supervised learning method (e.g., locally weighted linear regression with Gaussian basis functions [136]). Whereas feeding random sequences of torques is the most straightforward way to collect training patterns, its disadvantage is that it may not produce the desired accelerations, and therefore, the mapping found may not generalize well to the desired accelerations—see Fig. 4 and [147].
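The coverage problem of direct inverse modeling can be illustrated with a one-dimensional toy plant. In this sketch the plant equation, the exploration range of ±0.5, and the use of a cubic polynomial fit (standing in for the locally weighted regression mentioned above) are all assumptions made for brevity.

```python
import numpy as np

def plant(tau):
    """Hypothetical nonlinear plant: torque in, acceleration out."""
    return 2.0 * tau + 4.0 * tau**3

rng = np.random.default_rng(0)
tau_train = rng.uniform(-0.5, 0.5, 200)   # random torque exploration
acc_train = plant(tau_train)              # observed accelerations, within +/-1.5

# Supervised learning of the inverse map (acceleration -> torque).
inverse_model = np.poly1d(np.polyfit(acc_train, tau_train, deg=3))

def tracking_error(acc_desired):
    """Apply the learned torque to the plant and compare with the target."""
    return abs(plant(inverse_model(acc_desired)) - acc_desired)

err_inside = tracking_error(1.0)    # target covered by the exploration
err_outside = tracking_error(4.0)   # target never produced during exploration
```

The learned inverse works for accelerations that the random torques happened to produce and fails for a desired acceleration outside that set, which is the limitation that motivates the goal-directed schemes described next.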

To better explore the desired set of accelerations, feedback-error learning [148] and distal supervised learning [147] directly feed the inverse model with desired accelerations [Fig. 3(b)]. This method requires a preliminary inverse model, found perhaps using direct inverse modeling. Since the errors in torque space are not directly accessible, the resulting errors in acceleration space are mapped back onto torque space. Feedback-error learning uses a linear mapping, and distal supervised learning requires the ability to do error-backpropagation (as in ANNs [130]) through an a priori learned forward model (which learns the opposite direction). If the errors are small and the underlying mapping is locally linear, feedback-error learning is the method of choice. However, small errors require a well initialized inverse mapping. Distal supervised learning, to our knowledge, is not often used in practice today.
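A heavily simplified, scalar illustration of the feedback-error idea is given below: the acceleration error is mapped linearly into torque space and used to refine a poorly initialized inverse model. The linear plant, the gains, the learning rate, and the update rule are assumptions chosen so that the example stays transparent; they are not the formulation of [148].

```python
import numpy as np

plant_gain = 2.5       # hypothetical plant, unknown to the learner: a = plant_gain * tau
w = 0.2                # preliminary inverse model: tau = w * a_desired
feedback_gain = 0.3    # linear map from acceleration error to torque error
learning_rate = 0.5

rng = np.random.default_rng(1)
for _ in range(200):
    a_desired = rng.uniform(-1.0, 1.0)
    tau = w * a_desired                  # command from the current inverse model
    a_actual = plant_gain * tau          # response of the plant
    # The error is only observable in acceleration space; map it to torque space.
    tau_error = feedback_gain * (a_desired - a_actual)
    # Use the torque-space error as the training signal for the inverse model.
    w += learning_rate * tau_error * a_desired
```

In this linear setting the inverse-model parameter converges to the reciprocal of the plant gain. With a strongly nonlinear plant or a poor initial model, the linear error mapping is no longer adequate, which is the restriction noted in the text.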

Staged learning [149], [150] also feeds the inverse model with desired accelerations, but does not require a well initialized model [Fig. 3(c)]. The output of the inverse model is augmented with noise before applying it as torques to the arm. If the resulting accelerations show a better performance—based on some quality criterion—the applied torque is used as training pattern for a new generation (new stage) of inverse models. Compared to feedback-error learning, this method can be applied to a broader set of problems (see feedback-error learning above), but comes at the expense of a longer training time.

Alternatively, we may learn from demonstration. For example, a proportional-integral-derivative (PID) controller could be used to demonstrate (i.e., bias and/or guide) the production of training data to learn the inverse-dynamics mapping close to the region of interest [Fig. 3(d)] [151]–[153]. If a suitable demonstrator is available, this last option is the method of choice.

B. Learning Solutions to Redundant Problems

There is a long history of ways to solve the “muscle redundancy” problem with linear and nonlinear optimization methods based on specific cost functions [110], [154]. However, these methods provide single solutions that minimize that specific cost function, which is often open to debate. An alternative method is to solve for the entire solution space so as to explore the features of alternative solutions. If the system is linear for a given posture of the limb [44], [45], [155], the complete solution space can be found, which explicitly identifies the following:

the set of feasible control commands, e.g., the feasible activation set for muscles;

the set of feasible outputs, e.g., the feasible set of accelerations or forces a limb can produce;

the set of unique control commands that achieve the limits of performance;

the nullspace associated with a given submaximal output, e.g., the set of muscle activations that produce a given submaximal acceleration or force.

By knowing the structure of these bounded regions (i.e., feasible sets of muscle activations, limb outputs, and nullspaces), the modeler can explore the consequences of different families of inputs and outputs such as the level of cocontraction, joint loading, metabolic cost, etc. Methods to find these bounded regions are well known in computational geometry [44], [45], [156]. However, these methods risk failure if the problem is high dimensional or nonlinear. In these cases, it is best to first use machine-learning algorithms to “learn” the topology of the bounded regions, and then use that knowledge to explore specific solutions.
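For the linear case, the nullspace listed above can be computed directly. The sketch below uses a hypothetical moment-arm matrix for two joints and four muscles at one posture and finds a basis for the muscle-force patterns that produce zero net joint torque. The matrix entries and force values are illustrative assumptions.

```python
import numpy as np

# Hypothetical moment-arm matrix (m): 2 joints x 4 muscles at one posture.
R = np.array([[0.04, -0.03, 0.02, 0.00],
              [0.00, 0.00, 0.03, -0.025]])

# Rows of vt beyond the rank of R span the nullspace {f : R @ f = 0}.
_, s, vt = np.linalg.svd(R)
rank = int(np.sum(s > 1e-12))
null_basis = vt[rank:].T

f0 = np.array([100.0, 50.0, 80.0, 40.0])         # one force pattern (N)
f1 = f0 + null_basis @ np.array([20.0, -10.0])   # same torques, different sharing
```

Both force patterns yield identical joint torques but differ in how the load is shared among muscles. Imposing the bounds on each muscle (zero to maximal force) would cut this unbounded subspace down to the bounded feasible region discussed in the text.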

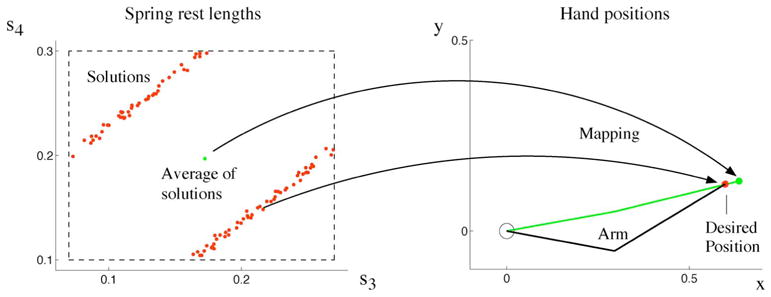

1) Redundancy Poses a Challenge to Learning

We use again our two-link arm model to illustrate a challenge in redundancy for learning. Note, the redundancy could be eliminated by providing sufficiently many constraints. Here, however, we focus on problems where such constraints are missing. In our model, we want to learn the set of muscle activations that bring the hand to a given equilibrium position. For simplicity, we model muscles as springs (see Section II); thus, we control spring rest lengths. The mapping from spring rest lengths onto hand position is unique. However, the inverse mapping is one-to-many (Fig. 5). Moreover, we map a single hand position onto a nonconvex region.1 For such a mapping, function approximation fails because it will average over the many possible solutions, i.e., over the nonconvex region, to obtain a single output [157]. Applying this output to our arm model, however, does not bring the hand to the desired position (Fig. 5). Thus, a different approach is needed to learn this mapping. This mapping problem could be addressed in the following ways.

Fig. 5.

Mapping from spring resting lengths (si) to hand positions (x, y). Several redundant resting lengths are solutions for one desired hand position (red). The graph on the left shows a two-dimensional projection of a cross section of the six-dimensional nullspace of spring resting lengths: s1 and s2 were set to constant values; s3 and s4 were randomly drawn (within dashed box), and all values of s5 and s6 were projected onto the displayed plane. The original six-dimensional nullspace in rest-length space is, therefore, nonconvex. Thus, the average of all rest-lengths solutions does not map onto the desired hand position.

Instead of learning a mapping onto a point, we could learn a mapping onto a probability distribution, and thus, accommodate the above-mentioned nonconvex nature of the solution space. Diffusion networks address this task [157].

Recurrent neural networks store training patterns as stable states [158]. In our case, such a pattern could be a combination of muscle activation and hand position. If only part of a pattern is specified (e.g., the hand position), the network dynamics completes this pattern to obtain the complement (here, the muscle activation). For fully connected symmetric networks, the dynamics converge to a stable pattern [158]. As an example of such an application, Cruse and Steinkühler showed that the relaxation in a recurrent neural network can be used to solve the inverse kinematics of a redundant robot arm [159], [160].

Finally, analogous to the use of recurrent neural networks, we could first learn a representation of the manifold or distribution of the data points that contain input and output, and then use this learned representation to compute a suitable mapping. Here, we focus on this latter solution.
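The failure of averaging over a nonconvex solution set, described earlier in this subsection, can be reproduced with a deliberately simple toy map rather than the six-spring arm model. In this sketch the forward function and the ring-shaped solution set are assumptions chosen only to make the effect visible.

```python
import numpy as np

def forward(s):
    """Toy forward map from two 'rest lengths' to a scalar 'hand position'."""
    return s[..., 0] ** 2 + s[..., 1] ** 2

# Every point on the unit circle is a valid solution for the target 1.0.
angles = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
solutions = np.column_stack([np.cos(angles), np.sin(angles)])

# A function approximator trained on these pairs tends toward their average.
mean_solution = solutions.mean(axis=0)
position_from_mean = forward(mean_solution)
```

Each individual solution reaches the target, yet their average lies at the center of the ring and maps to a position near zero instead of the target.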

2) Learning the Structure of Data Sets: Unsupervised Learning Methods

Unsupervised learning methods are designed to find the structure in data sets and do not need pairs of input and target patterns. Several methods exist for extracting linear and nonlinear approximations to the distribution of data points that will represent such a data structure. In this context, the data set represents a manifold in a multidimensional space, and learning the structure of this manifold is the goal.

Here, we will only briefly mention various linear and nonlinear methods—see references for more details. Methods for finding linear subspaces that represent data distributions are principal components analysis (PCA) [161], probabilistic PCA [162], independent component analysis [163], and nonnegative matrix factorization [164]. When applied to nonlinear distributions, these linear methods may give misleading solutions [165], [166].
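As a brief illustration of the linear case, the sketch below applies PCA, computed through a singular value decomposition, to synthetic two-dimensional data that lie close to a line. The data-generating direction and the noise level are assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
latent = rng.standard_normal(500)
direction = np.array([3.0, 4.0]) / 5.0    # unit vector along the assumed subspace
data = np.outer(latent, direction) + 0.05 * rng.standard_normal((500, 2))

# PCA: center the data, then take the right singular vectors as components.
centered = data - data.mean(axis=0)
_, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
explained = singular_values**2 / np.sum(singular_values**2)
first_component = vt[0]
```

The first component recovers the generating direction (up to sign) and explains nearly all of the variance. If the same data lay on a curved manifold, the leading components would still be straight lines through the data, which is how linear methods can mislead.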

Several methods exist to find the structure of nonlinear manifolds in data: auto-associative neural networks [145], [167], point-wise dimension estimation [166], self-organizing maps (SOMs) [168]–[170], probabilistic SOM [171], [172], semidefinite embedding [173], locally linear embedding [174], Isomap [175], Laplacian eigenmaps [176], stochastic neighbor embedding [177], kernel PCA [178], [179], and mixtures of spatially confined linear models (PCA or probabilistic PCA are commonly used as their linear models) [130], [150], [165], [180].

3) Going From the Structure of an Input–Output Data Set to Creating a Functional Mapping

Once a representation has been found, we need to construct a mapping from a specified input to the corresponding output. This mapping could be obtained as follows.

An input pattern specifies a constrained space in the joint space of input and output. To find output samples, this constrained space can be intersected with the learned representation of the data distribution/manifold. One possibility is to find the point on the constrained space that has the smallest Euclidean distance to our manifold representation [150], [165], [172]. For mixtures of locally linear models, efficient algorithms exist to find such a solution [150], [165]. If the manifold representation intersects the constrained space at several or infinitely many points, a solution has to be chosen out of this set of intersections.

Alternative to the minimum distance, we could define arbitrary cost functions on the set of intersections and find a solution accordingly. This path has not been fully exploited and explored.

VI. Applications of Control Theory for Neuromuscular Modeling

Control theory is a vast field of engineering where information about a dynamical system (from internal sensors, outputs, or predictions) is used to issue commands (corrective, anticipatory, or steering) with the goal of achieving a particular performance. We begin by giving a short overview of the uses of classical and optimal control theories as they are now used in the context of neuromuscular modeling. We then provide an overview of alternative approaches such as hierarchical optimal control, model predictive control, and hybrid optimal control. Our presentation of each of these types of optimal control is motivated by the characteristics of the dynamical systems found in neuromuscular systems. Hierarchical optimal control is motivated by the high dimensionality of neuromuscular dynamics; model predictive control is motivated by the need to impose state and control constraints such as unidirectional muscle activation (e.g., muscles can actively pull and resist tension, but cannot push). Finally, hybrid optimal control is motivated by the need to incorporate discontinuities and/or changes in the dynamics arising from making and breaking contact with objects and the environment (e.g., as in locomotion, grasping and object manipulation).

In the context of neuromuscular modeling, a dynamical system is one where differential equations can describe the evolution of the dynamical variables (called the state vector denoted by x) and their response to the vector of control signals (denoted by u). The reader is referred to any introductory text in control theory such as [181] for details. The dynamics of neuromuscular systems is generally nonlinear and they are formulated by the following equations:

$$\dot{x} = f(x, u), \qquad y = h(x, u) \tag{2}$$

For the dynamics of a limb model (Fig. 1), x is the state vector of two angles and two angular velocities, while u is the vector of controls that correspond to the two applied joint torques. The control of nonlinear systems is a problem with no general solution, and the traditional approach is to linearize the nonlinear dynamics around an operating point, or a sequence of operating points in state and control space. In the linearized version of the problem, the linear dynamics (3) are valid for small deviations from the operating point. For the example of the limb model, the operating point can be a prespecified arm posture, or a sequence of prespecified arm postures. The linearized dynamics have the form

$$\dot{x} = A x + B u, \qquad y = H x + D u \tag{3}$$

The matrix A is the state transition matrix that defines how the current state x affects the derivative of the state ẋ (i.e., like when the change in position of a pendulum along its arc defines its velocity). B is the control transition matrix that defines how the control signals u affect the state derivatives. The matrix H is the measurement matrix that defines how the state of the system produces the output y. In some cases, the control signals u can also act directly on the outputs y via the matrix D, which is called the control output matrix. Control theory comes into the picture when we apply a control signal u to correct or guide the evolution of the state variables.
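Linearization around an operating point can be sketched numerically. The example below uses a damped pendulum as a stand-in for the limb model and estimates the matrices A and B by central finite differences. The pendulum parameters and the function names are assumptions introduced for illustration.

```python
import numpy as np

def pendulum(x, u, g=9.81, length=0.3, mass=1.0, damping=0.1):
    """Nonlinear dynamics dx/dt = f(x, u) with x = [angle, angular velocity]."""
    theta, omega = x
    accel = (-(g / length) * np.sin(theta) - damping * omega
             + u[0] / (mass * length**2))
    return np.array([omega, accel])

def linearize(f, x0, u0, eps=1e-6):
    """Central-difference Jacobians A = df/dx and B = df/du at (x0, u0)."""
    n, m = x0.size, u0.size
    A = np.zeros((n, n))
    B = np.zeros((n, m))
    for i in range(n):
        dx = np.zeros(n)
        dx[i] = eps
        A[:, i] = (f(x0 + dx, u0) - f(x0 - dx, u0)) / (2 * eps)
    for j in range(m):
        du = np.zeros(m)
        du[j] = eps
        B[:, j] = (f(x0, u0 + du) - f(x0, u0 - du)) / (2 * eps)
    return A, B

# Operating point: pendulum hanging at rest with zero applied torque.
A, B = linearize(pendulum, np.array([0.0, 0.0]), np.array([0.0]))
```

The resulting matrices are valid only for small deviations from the chosen operating point; tracking a movement would require repeating the procedure along a sequence of postures.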

With very few exceptions, the vast majority of neuromuscular modeling attempts to find the sequence of control signals u_{t1}, u_{t2}, …, u_{tT} that will force the neuromuscular system to execute a task, which in most cases is to track a prespecified kinematic or kinetic trajectory over the time horizon t_1, …, t_T. Importantly, a valid sequence of control signals u = u(t) is defined as one that meets the constraints imposed by the prespecified trajectories. The underlying control strategy is open loop. Any small disturbance or change in dynamics will therefore cause the controller to fail to drive the system to the desired state, because the controller is “blind” to any state changes. We draw the analogy to inverse modeling (see Section III), where an inverse Newtonian analysis is used to find the muscle forces or joint torques that are compatible with the measured kinematics and kinetics. Inaccuracies, simplifications, and assumptions in the analysis invariably produce solutions that do not yield stable behavior when “played forward” to drive forward simulations. Thus, most of the work in control of neuromuscular systems to date has two dominant shortcomings:

Control problems are formulated as tracking problems and need a prespecified trajectory in state space. This approach can be very problematic for high-dimensional systems where part of the state is hidden or only obtained by approximation. For example, if the model includes muscle activation-contraction dynamics, then muscle activation becomes part of the state vector. Usually, EMG is used to estimate muscle activation, but it is a poor predictor of the actual activation state of the muscle (for a brief discussion of limitations of EMG and references to follow, see [182]). Therefore, even though the part of the state vector obtained from measured limb kinematics and kinetics is well defined, the part of the state vector related to muscle activation is effectively hidden and must be approximated.

Control policies are open loop u = u(t) and apply only to the time histories used to calculate them. Therefore, if used to drive a forward simulation, they are independent of the new time history of the state. In these conditions, the stability of the neuromuscular system is not guaranteed, even for small disturbances, inaccuracies or noise in the dynamics.
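The second shortcoming can be demonstrated on a scalar, unstable toy plant. In this sketch the plant dx/dt = x + u, the perturbed initial state, and the feedback gain are assumptions; the open-loop command is the one that would be correct for the unperturbed initial state.

```python
def simulate(controller, x0=0.01, dt=0.01, steps=500):
    """Euler-integrate the unstable scalar plant dx/dt = x + u."""
    x = x0
    for k in range(steps):
        u = controller(k * dt, x)
        x += dt * (x + u)
    return x

# Open loop: u(t) was precomputed for the nominal start x0 = 0, so u(t) = 0.
x_open = simulate(lambda t, x: 0.0)

# Closed loop: u = -2 x reacts to the actual state (the gain 2 is arbitrary).
x_closed = simulate(lambda t, x: -2.0 * x)
```

A perturbation of 0.01 in the initial state grows by two orders of magnitude under the open-loop command, whereas the state-dependent command drives it toward zero.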

The remainder of this section is motivated by the need to overcome these two shortcomings. We attempt to provide an overview of techniques that have the potential to lead to control frameworks for high-dimensional nonlinear dynamical systems with hidden states that produce stable closed-loop feedback control laws.

A. Optimal Control

In the optimal control framework as described by [124], [183], and [184], the goal is to control a dynamical system while optimizing an objective function. In optimal control theory, the controller has direct or indirect access to the state variables x (often estimated from sensors and/or predictions) and output variables y to be able to both implement a control law and quantify the performance of the system (3). The objective function is an equation that quantifies how well a specified task is achieved. In mathematical terms, a general optimal control problem can be formulated as

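$$V(x) = \min_{u} J(x, u) = \min_{u} \left[ \phi(x_{t_N}) + \int_{t_0}^{t_N} \left( q(x) + u^{T} R u \right) dt \right] \tag{4}$$

subject to

$$\dot{x} = F(x, u, w) \tag{5}$$

$$y = H(x, u, \omega) \tag{6}$$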

where x ∈ ℜ^{n×1} is the state of the system (e.g., joint angles, velocities, muscle activations), and u ∈ ℜ^{m×1} are the control signals (e.g., torques, muscle forces, neural commands). The quantity y ∈ ℜ^{p×1} corresponds to observations or outputs that are functions of the state. The stochastic variables w ∈ ℜ^{n×1} and ω ∈ ℜ^{p×1} correspond to process and observation noise. For neuromuscular systems, the process noise can be signal-dependent while the proprioceptive sensory noise plays the role of observation noise. The cost to minimize J(x, u) consists of three terms. The quantity ϕ(x_{tN}) is the terminal cost that is state-dependent (e.g., how well a target was reached); the term q(x) is the state-dependent cost accumulated over the time horizon t_N − t_0 (e.g., were large velocities needed to perform the task?), and u^T R u is the control-dependent cost accumulated over the time horizon (e.g., how much control effort was used to achieve the task). The control cost does not have to be quadratic; the quadratic form is used mostly for computational convenience. The term J(x, u) is the standard variable used for the cost function and V(x) is the scalar value representing the minimal value of the cost function, indicating that the task was performed (locally or globally) optimally as per this formulation of the problem and choice of cost function.

For the case of deterministic linear systems F(x, u, w) = Ax + Bu, with quadratic state cost q(x) = x^T Q x and full state observation y = H(x, u, v), the solution to the optimal control problem can be found analytically and is one of the more significant achievements of engineering theory in the 20th century. The solution provides controls of the form u = −Kx with feedback gains K ∈ ℜ^{m×n}, which guarantee stability of the system while minimizing the objective function J(x, u). This is called the Linear Quadratic Regulator (LQR) method, and it is one of the best-known and most thoroughly explored frameworks in control theory. Some examples of using this approach in neuromuscular modeling are [185]–[187].
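As a minimal numerical sketch of the LQR result, the following Python snippet computes a feedback gain K by iterating the Riccati recursion to convergence and then checks that the closed-loop matrix A − BK is stable. The discrete-time formulation, the plant (a unit point mass), the time step, the weights Q and R, and the helper name `dlqr_gain` are illustrative assumptions; none of them is taken from [185]–[187].

```python
import numpy as np

def dlqr_gain(A, B, Q, R, iters=10000, tol=1e-12):
    """Iterate the discrete-time Riccati recursion until the gain K settles."""
    P = Q.copy()
    K = np.zeros((B.shape[1], A.shape[0]))
    for _ in range(iters):
        # Gain that minimizes the one-step cost plus the cost-to-go x^T P x.
        K_new = np.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        # Cost-to-go matrix under that gain (Riccati update).
        P = Q + A.T @ P @ (A - B @ K_new)
        converged = np.max(np.abs(K_new - K)) < tol
        K = K_new
        if converged:
            break
    return K, P

# Assumed plant: unit point mass, state x = [position, velocity], control u = force.
dt = 0.01
A = np.array([[1.0, dt], [0.0, 1.0]])
B = np.array([[0.0], [dt]])
Q = np.diag([1.0, 0.1])   # state cost weights (assumed)
R = np.array([[0.01]])    # control cost weight (assumed)

K, P = dlqr_gain(A, B, Q, R)

# The open-loop plant has both eigenvalues at 1, so it is not asymptotically
# stable; with u = -Kx all eigenvalues of A - BK should lie inside the unit circle.
closed_loop_radius = np.max(np.abs(np.linalg.eigvals(A - B @ K)))
print("K =", K, "closed-loop spectral radius =", closed_loop_radius)
```

Changing the ratio of Q to R in this sketch trades tracking accuracy against control effort, which is the role the weights play in the objective function J(x, u).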

Under certain conditions, optimal control can be applied to stochastic linear and nonlinear dynamical systems with noise that can be either state- or control-dependent. For linear stochastic systems F(x, u, w) = Ax + Bu + Γw, in the presence of observation noise y = Hx + v, optimal stochastic filtering is required (Fig. 2). Kalman filtering (KF) is an algorithm for estimating the states of dynamical systems in the presence of process and observation noise. For linear systems with Gaussian process and observation noise, KF is the optimal estimator since it is the minimum variance unbiased estimator (MVUE) [188]. The intuition behind KF is that, if x̂_{t_k} is the current estimate of the state, KF provides the Kalman gains L that, under the update law, are guaranteed to reduce the variance E{(x(t) − x̂(t)) (x(t) − x̂(t))^T} = E{e(t)e(t)^T}, where the term e(t) is the estimation error defined as e(t) = x(t) − x̂(t).
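The variance-reduction property can be illustrated with a short Python sketch of one predict/update cycle of a discrete-time Kalman filter. The discrete-time form, the point-mass model, the noise covariances W and V, and the function name `kalman_step` are assumptions chosen for illustration; the control input is omitted for brevity.

```python
import numpy as np

def kalman_step(x_hat, P, y, A, H, W, V):
    """One predict/update cycle of a discrete-time Kalman filter."""
    # Predict: propagate the estimate and its error covariance.
    x_pred = A @ x_hat
    P_pred = A @ P @ A.T + W
    # The Kalman gain L weighs the prediction against the new observation.
    S = H @ P_pred @ H.T + V
    L = P_pred @ H.T @ np.linalg.inv(S)
    # Update: correct the prediction with the innovation y - H x_pred.
    x_new = x_pred + L @ (y - H @ x_pred)
    P_new = (np.eye(len(x_hat)) - L @ H) @ P_pred
    return x_new, P_new, P_pred, L

# Assumed model: point mass observed through noisy position measurements.
dt = 0.01
A = np.array([[1.0, dt], [0.0, 1.0]])
H = np.array([[1.0, 0.0]])
W = 1e-4 * np.eye(2)      # process noise covariance (assumed)
V = np.array([[1e-2]])    # observation noise covariance (assumed)

rng = np.random.default_rng(0)
x = np.array([1.0, 0.0])  # true state
x_hat = np.zeros(2)       # initial estimate
P = np.eye(2)             # initial error covariance
variance_drops = []
for _ in range(200):
    x = A @ x + rng.multivariate_normal(np.zeros(2), W)
    y = H @ x + rng.multivariate_normal(np.zeros(1), V)
    x_hat, P, P_pred, L = kalman_step(x_hat, P, y, A, H, W, V)
    # Reduction in total error variance produced by the measurement update.
    variance_drops.append(np.trace(P_pred) - np.trace(P))
```

In this sketch every measurement update lowers the trace of the error covariance relative to the prediction, which is the sense in which the gain L reduces E{e e^T}.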

The full treatment of optimal control and estimation is the so-called Linear Quadratic Gaussian Regulator (LQG) control scheme. The equations for the LQG are summarized below:

| (7) |

| (8) |

| (9) |

| (10) |

| (11) |

Since the very first applications of optimal control, it has been known that the stability of the estimation and control problems affects the stability of the LQG controller. To see the connection between the stability of estimation and control and the overall stability, we need to combine both problems under one mathematical formulation. It can be shown that [124], [184]

| (12) |

or

| (13) |

where the matrices F and G are appropriately defined.

The stability of the LQG controller depends on the eigenvalues of the state transition matrix F. Since F is block lower triangular, its eigenvalues are given by the eigenvalues of its diagonal blocks A − BK and A − LH. In addition, the control gain K stabilizes the matrix A − BK while the Kalman gain L stabilizes the matrix A − LH. Therefore, the overall LQG controller is stable if and only if the state and estimation dynamics are stable.
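The eigenvalue argument can be checked numerically. The Python sketch below assembles a block lower triangular matrix for the joint dynamics of the estimation error e = x − x̂ and the state x, and compares its eigenvalues with those of A − BK and A − LH. The plant matrices, the ordering [e; x], and the hand-picked gains K and L (chosen to place the eigenvalues, not computed from Riccati equations) are assumptions for illustration only.

```python
import numpy as np

# Assumed open-loop-unstable plant with a single input and a single output.
A = np.array([[0.0, 1.0], [2.0, -0.5]])
B = np.array([[0.0], [1.0]])
H = np.array([[1.0, 0.0]])

# Hand-picked (not optimal) gains: eig(A - BK) = {-2, -3}, eig(A - LH) = {-4, -5}.
K = np.array([[8.0, 4.5]])
L = np.array([[8.5], [17.75]])

# Joint noise-free dynamics with u = -K x_hat and e = x - x_hat, ordered [e; x]:
#   de/dt = (A - LH) e
#   dx/dt = (A - BK) x + BK e
F = np.block([
    [A - L @ H, np.zeros((2, 2))],
    [B @ K,     A - B @ K],
])

eig_F = np.sort(np.linalg.eigvals(F).real)
eig_blocks = np.sort(np.concatenate([
    np.linalg.eigvals(A - B @ K),
    np.linalg.eigvals(A - L @ H),
]).real)
print("eig(F)      =", eig_F)
print("eig(blocks) =", eig_blocks)
```

Because the off-diagonal block BK does not influence the spectrum, the combined system is stable exactly when both diagonal blocks are stable.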

Another important characteristic of LQG for linear systems is the separation principle. The separation principle states that the optimal control and estimation problems are separated and, therefore, the control gains are independent of the Kalman gains. Finding the control gains requires using the backward control Riccati equation, which does not depend on the Kalman gain L, nor on the mean and covariance of the process and observation noise. Similarly, computation of the Kalman gain requires the use of the forward estimation Riccati equation, which is not a function of the control gain K nor of the weight matrices Q, R in the objective function J(x, u).

Importantly, when multiplicative noise with respect to the control signals is considered, the separation principle breaks down and the control gains are a function of the estimation gains (Kalman gains). The stochastic optimal controller for a dynamical system with control-dependent noise will only be active in those dimensions of the state relevant to the task. If the controller were active in all dimensions, it would necessarily be suboptimal because control actions add more noise in the dynamics.

The use of stochastic optimal control theory as a conceptual tool towards understanding neuromuscular behavior was proposed in, for example, [189]–[191]. In that work, a stochastic optimal control framework for systems with linear dynamics and control-dependent noise was used to understand the variability profiles of reaching movements. The influential work by [191] established the minimal intervention principle in the context of optimal control. The minimal intervention principle was developed based on the characteristics of stochastic optimal controllers for systems with multiplicative noise in the control signals.

The LQR and LQG optimal control methods have been mostly tested on linear dynamical systems for modeling sensorimotor behavior; e.g., in reaching tasks, linear models were used to describe the kinematics of the hand trajectory [190], [192]. In neuromuscular modeling, however, linear models cannot capture the nonlinear behavior of muscles and multibody limbs. In [187], an Iterative Linear Quadratic Regulator (iLQR) was first introduced for the optimal control of nonlinear neuromuscular models. The proposed method is based on linearization of the dynamics. An influential aspect of this work for subsequent studies on optimal control of neuromuscular models is that it requires no prespecified desired trajectory in state space. By contrast, most approaches for neuromuscular optimization that use classical control theory (see Section VI) require target time histories of limb kinematics, kinetics, and/or muscle activity. In [193], the iLQR method was extended to nonlinear stochastic systems with state- and control-dependent noise. The resulting algorithm is the Iterative Linear Quadratic Gaussian Regulator (iLQG). This extension allows the use of stochastic nonlinear models for muscle force as a function of fiber length and fiber velocity. Fig. 6 illustrates the application of iLQG to our arm model (Section II). Further theoretical developments in [194] and [195] allowed the use of an Extended Kalman Filter (EKF) for the case of sensory feedback noise. The EKF is an extension of the Kalman filter to nonlinear systems.

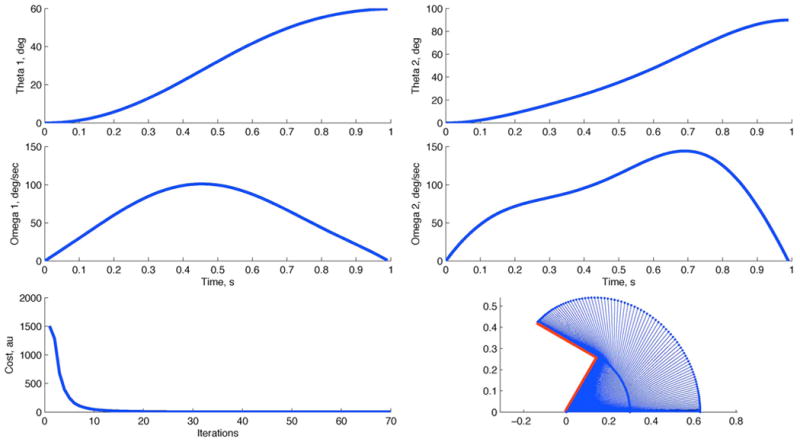

Fig. 6.

Simulation results for our two-link arm model using an optimal feedback controller. The task is to move the two-link arm from the initial configuration of (θ1, θ2) = (0, 0) to (θ1, θ2) = (60°, 90°) in the time horizon of 1 s and with zero terminal velocity (ω1(T), ω2(T)) = (0, 0). The lower left panel illustrates the reduction of the cost function for every iteration of the iLQG algorithm. The algorithm converges quickly (after about 15 iterations), and yields smooth joint-space trajectories with close to bell-shaped velocity profiles.
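The iterative methods discussed above rest on linearizing the nonlinear dynamics around the current trajectory. The Python sketch below shows only that linearization step, using central finite differences on a damped, torque-driven pendulum. The pendulum, its parameters, and the function names are hypothetical stand-ins; this is not the arm model of Fig. 6 nor the implementation of [187] or [193].

```python
import numpy as np

G, LEN, MASS, DAMP = 9.81, 0.3, 1.0, 0.5  # assumed pendulum parameters

def f(x, u):
    """Continuous-time dynamics dx/dt = f(x, u) of a damped, torque-driven pendulum."""
    theta, omega = x
    domega = -(G / LEN) * np.sin(theta) - DAMP * omega + u[0] / (MASS * LEN**2)
    return np.array([omega, domega])

def linearize(f, x0, u0, eps=1e-6):
    """Central-difference Jacobians A = df/dx and B = df/du evaluated at (x0, u0)."""
    n, m = len(x0), len(u0)
    A = np.zeros((n, n))
    B = np.zeros((n, m))
    for i in range(n):
        dx = np.zeros(n)
        dx[i] = eps
        A[:, i] = (f(x0 + dx, u0) - f(x0 - dx, u0)) / (2 * eps)
    for j in range(m):
        du = np.zeros(m)
        du[j] = eps
        B[:, j] = (f(x0, u0 + du) - f(x0, u0 - du)) / (2 * eps)
    return A, B

# Linearize around one point of a nominal trajectory (values chosen arbitrarily).
x0 = np.array([np.pi / 4, 0.2])
u0 = np.array([0.1])
A, B = linearize(f, x0, u0)

# Analytic Jacobians of the same pendulum, for comparison.
A_exact = np.array([[0.0, 1.0], [-(G / LEN) * np.cos(x0[0]), -DAMP]])
B_exact = np.array([[0.0], [1.0 / (MASS * LEN**2)]])
```

In an iterative scheme of this kind, such local A and B matrices would be recomputed along the trajectory at every iteration and passed to a linear-quadratic solver.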

1) Hierarchical Control

The hierarchical optimal control approach is motivated by the redundancy and the hierarchical structure of neuromuscular systems. The hierarchical optimal control framework is described in, for example, [196] and [197] for the case of a two-link, muscle-driven arm with six muscles. In [198], the complete treatment of the control of a 7-DOF arm with 14 muscles (two for each joint) is presented.

In the hierarchical control framework, the dynamics of neuromuscular systems are separated into different levels. For the case of the arm [197], the dynamics can be separated into two levels. The high-level dynamics include the kinematics of the end effector, such as position p, velocity v, and force f. The low-level dynamics consist of the joint angles θ and joint velocities θ̇, as well as the muscle activation a. The state-space model of the high-level dynamics can be represented as

| (14) |

| (15) |

| (16) |

where m is the average hand mass, uH is the control at the high level, and f is the force at the end effector. The terms Hf(p, v, m) and Hv(p, v, m) are functions of position, velocity, and mass that correspond to the approximation error of the high-level dynamics. A cost function relative to a task is imposed and the optimal control problem at the high level can be defined as

| (17) |

subject to the equations of the kinematics of the end effector. The optimal control for the high-level dynamics provides the required input force, that is, the control uH. The low-level dynamics are defined by the forward dynamics of the arm and the muscle dynamics

| (18) |

| (19) |

| (20) |

The matrix I(θ) ∈ ℜ^{n×n} is the inertia matrix, C(θ, θ̇) ∈ ℜ^{n×1} is the vector of centripetal and Coriolis forces, and G(θ) is the gravitational force. The term T(a, l(θ), l̇(θ, θ̇)) is the tension of the muscle, which depends on the level of activation a, the length l, and the velocity l̇ of the corresponding muscle. The low-level control is u. The low-level dynamics are related to the high-level dynamics through the equations p = Φ(θ) and v = J(θ)θ̇, where J(θ) is the Jacobian. The end-effector forces are related to the torques produced by the muscles and gravity according to the equation J(θ)^T f = τmuscles − G(θ). The analysis is simplified with zero gravity and therefore the end-effector forces are specified by

| (21) |
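The kinematic relations that link the two levels can be checked numerically for a planar two-link arm. The Python sketch below evaluates p = Φ(θ), the end-effector velocity v = J(θ)θ̇, and the zero-gravity torque relation τ = J(θ)^T f. The link lengths, joint velocities, and end-effector force are arbitrary assumed values, and the function names are hypothetical.

```python
import numpy as np

L1, L2 = 0.30, 0.25  # assumed link lengths (m)

def forward_kinematics(theta):
    """End-effector position p = Phi(theta) of a planar two-link arm."""
    t1, t12 = theta[0], theta[0] + theta[1]
    return np.array([L1 * np.cos(t1) + L2 * np.cos(t12),
                     L1 * np.sin(t1) + L2 * np.sin(t12)])

def jacobian(theta):
    """Jacobian J(theta) = dPhi/dtheta of the same arm."""
    t1, t12 = theta[0], theta[0] + theta[1]
    return np.array([[-L1 * np.sin(t1) - L2 * np.sin(t12), -L2 * np.sin(t12)],
                     [ L1 * np.cos(t1) + L2 * np.cos(t12),  L2 * np.cos(t12)]])

theta = np.radians([60.0, 90.0])     # joint configuration
theta_dot = np.array([0.5, -0.3])    # joint velocities (rad/s), assumed
f_end = np.array([2.0, -1.0])        # end-effector force (N), assumed

J = jacobian(theta)
v = J @ theta_dot                    # end-effector velocity
tau = J.T @ f_end                    # joint torques equivalent to f_end (zero gravity)

# Finite-difference check of v = J(theta) theta_dot along the direction theta_dot.
h = 1e-6
v_fd = (forward_kinematics(theta + h * theta_dot)
        - forward_kinematics(theta - h * theta_dot)) / (2 * h)
```

The same Jacobian maps joint velocities to end-effector velocities and, through its transpose, end-effector forces to joint torques, so the mechanical power f·v equals τ·θ̇.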

Under the assumption that ȧ ≫ θ̇, differentiation of the end-effector force leads to ḟ = J(θ)+ · M(θ) · Fvl(l(θ), l̇(θ, θ̇)) · ȧ. Since ḟ = −c(f − mg) + uH, it can be shown that uH = β2QuL, where Q is defined as Q = J(θ)+ · M(θ) · Fvl(l(θ), l̇(θ, θ̇)). The low-level optimization is formulated as

| (22) |

subject to 0 < uL < 1 and with H = β2QTQ + rI and b = −βQuH. The cost function above is chosen so that the control energy of the low-level controller is minimized.
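A low-level problem of this kind can be sketched as a box-constrained quadratic program. The Python snippet below assumes the objective has the standard form ½ uL^T H uL + b^T uL and solves it by projected gradient descent. Several choices here are assumptions rather than statements about the original formulation: β2 is read as β squared, b is computed with Q^T so that it has one entry per muscle, the bounds are treated as closed (0 ≤ uL ≤ 1), and the matrix Q, the values of β and r, and the high-level command u_H are made up for illustration.

```python
import numpy as np

def solve_box_qp(H, b, iters=5000):
    """Minimize 0.5 u^T H u + b^T u subject to 0 <= u <= 1 by projected gradient descent."""
    step = 1.0 / np.linalg.eigvalsh(H).max()  # 1 / Lipschitz constant of the gradient
    u = np.full(len(b), 0.5)
    for _ in range(iters):
        # Gradient step followed by projection onto the box [0, 1]^n.
        u = np.clip(u - step * (H @ u + b), 0.0, 1.0)
    return u

# Hypothetical map Q from six muscle controls to a 2-D end-effector quantity.
Q = np.array([[1.0, -0.8, 0.5, -0.4, 0.9, -0.7],
              [0.3,  0.2, 0.8, -0.9, 0.6, -0.5]])
beta, r = 1.0, 0.1             # assumed scaling and effort weight
u_H = np.array([0.5, 0.3])     # assumed high-level command

H = beta**2 * Q.T @ Q + r * np.eye(Q.shape[1])
b = -beta * Q.T @ u_H          # Q^T is an assumption made for dimensional consistency

u_L = solve_box_qp(H, b)

# At a constrained minimizer, a projected gradient step leaves u_L unchanged.
residual = np.linalg.norm(u_L - np.clip(u_L - (H @ u_L + b), 0.0, 1.0))
print("u_L =", u_L, "fixed-point residual =", residual)
```

With these definitions the objective equals, up to a constant, ½‖u_H − βQ uL‖² + ½ r‖uL‖², so the term rI penalizes control energy while the first term tracks the high-level command.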

The main idea in the hierarchical optimal control problem is to split the higher-dimensional optimal control problem into smaller optimization problems. For the case of arm movements, the high-level optimization provides the control forces in end-effector space. These end-effector forces play the role of the desired output for the low-level dynamics. The goal of the optimization for the low-level dynamics is to find the optimal muscle activation profiles that can deliver the desired end-effector forces. The muscle activation is optimal with respect to a minimum-energy cost. Thus, by starting from the higher level and solving smaller optimization problems that specify the desired output for the next lower level in the hierarchy, the hierarchical optimal control approach addresses the high dimensionality of neuromuscular structures. The dimensionality reduction and the computational efficiency that are achieved with hierarchical optimal control come at the cost of suboptimality.

A recent development in stochastic optimal control introduces a hierarchical control scheme applicable to a large family of problems [199], [200]. The low level is a collection of feedback controllers which are optimal for different instances of the task. The high-level controller then computes state-dependent activations of these primitive controllers, and in this way achieves optimal performance for new instances of the task. When the new tasks belong to a nonlinear manifold spanned by the primitive tasks, the hierarchical controller is exactly optimal; otherwise, it is an approximation. An appealing feature of this framework is that, once a controller is optimized for a specific instance of the task, it can be added to the collection of primitives and thereby extend the manifold of exactly solvable tasks.

2) Hybrid Control

In tasks that involve contact with surfaces such as locomotion, grasping, and object manipulation, the control problem becomes more difficult. From a control-theoretic standpoint, the challenges are due to changes in the dynamics of the system when mechanical constraints are added or removed, for example, when transitioning between the swing and stance phases of gait, or during grasp acquisition. This change in plant dynamics requires switching control laws (hence the term “hybrid”). From the neuromuscular control point of view, recent experimental findings about muscle coordination during finger tapping [201], [202] demonstrated a switch between mutually incompatible control strategies: from the control of finger motion before contact, to the control of well-directed isometric force after contact. These experimental findings motivated the work by [203] to extend the iLQR framework to model contact transitions with the fingertip. For the motion phase of the tapping task, the objective of the optimal controller is to find the control law that minimizes the function

| (23) |

where ϕ(x_{t_N}) = (x_{t_N} − x*)^T Q_N (x_{t_N} − x*) and subject to the dynamics

| (24) |

The state x contains the angles and velocities of the neuromuscular system. For the case of the index finger, the state x includes the kinematics of the metacarpophalangeal (MCP), proximal interphalangeal (PIP), and distal interphalangeal (DIP) joints. Upon contact with the rigid surface, the optimal control problem is formulated as

| (25) |

where ϕ(x_{t_N}) = (x_{t_N} − x*)^T Q_N (x_{t_N} − x*) and subject to the constrained dynamics

| (26) |

where f is the contact force between the fingertip and the constraint surface. The relation between the contact forces f and the Lagrange multipliers λ in the cost function is given by f = ∇Φ(p)λ, where p is the position vector of the fingertip that satisfies the constraint Φ(p) during contact. The formulation for hybrid iLQR is rather general and can be applied to a variety of tasks that involve contact with surfaces and switching dynamics. It is also an elegant methodology since it provides the optimal control gains during motion as well as during contact. The main limitation of the method is that it requires an a priori known switching time between the two control laws, instead of making the switching time itself a parameter to optimize. It is an open question whether or not optimal control for nonlinear stochastic systems can incorporate the time of the switch as a variable to optimize. In addition, further theoretical developments are required for the hybrid optimal control of stochastic systems with state and control multiplicative noise.

3) Model Predictive Control