Abstract

Decades of research have documented the specialization of fusiform gyrus (FG) for facial information processes. Recent theories indicate that FG activity is shaped by input from amygdala, but effective connectivity from amygdala to FG remains undocumented. In this fMRI study, 39 participants completed a face recognition task. Eleven participants underwent the same experiment approximately four months later. Robust face-selective activation of FG, amygdala, and lateral occipital cortex was observed. Dynamic causal modeling and Bayesian Model Selection (BMS) were used to test the intrinsic connections between these structures, and their modulation by face perception. BMS results strongly favored a dynamic causal model with bidirectional, face-modulated amygdala-FG connections. However, the right hemisphere connections diminished at time 2, with the face modulation parameter no longer surviving Bonferroni correction. These findings suggest that amygdala strongly influences FG function during face perception, and that this influence is shaped by experience and stimulus salience.

Keywords: Functional MRI, Face processing, Amygdala, Effective connectivity, Dynamic causal modeling

Introduction

Until recently, it was widely held that portions of visual cortex function in a relatively modular way in implementing facial information processes. This perspective was the inheritance of decades of research showing selective deficits in various face tasks subsequent to ventral temporal lobe injury (see Bruce and Young, 1986). However, a growing number of studies indicate that face-selective areas of the brain – namely, portions of fusiform gyrus (FG) – are strongly influenced by activity in other areas implementing more diverse functions (Brassen et al., 2010; Fairhall and Ishai, 2007; Vuilleumier et al., 2001, 2003, 2004; Vuilleumier and Pourtois, 2007). Hypotheses regarding the influence of amygdala on FG figure prominently in this literature, as the decoding of emotional expressions is a core element of facial information processing.

FG and amygdala are structurally connected, lying on opposite ends of the inferior longitudinal fasciculus (Amaral, 2002). Hypotheses regarding their functional connection have emerged from the work of several groups, including Vuilleumier et al. (2001, 2003, 2004) who showed increases in FG activation for emotional faces that ostensibly resulted from direct inputs from amygdala. Their evidence came primarily from two experimental manipulations. The first consisted of a task in which participants were required to attend to specific portions of a display that simultaneously presented face and house stimuli. The faces were wearing either fearful or neutral expressions. FG activation was sensitive to whether faces were the focus of attention, and to the emotional valence of those faces (irrespective of attentional focus). Amygdala activation was also sensitive to the valence of the facial expression regardless of attention focus, leading the authors to propose that the enhanced FG activation observed for fearful faces was due to input from amygdala. The second manipulation was at the group level, comparing clinical to nonclinical participants; using the same face/house paradigm, a group of adults with childhood-onset amygdala damage failed to show increased FG activation for fearful faces. These studies present compelling, but indirect, evidence for the influence of amygdala on FG activation.

There are presently multiple visual information processing models proposing inputs to amygdala that initially bypass visual cortex. One of the first such models is comprised of a subcortical pathway proceeding from superior colliculus to the posterior nuclei of the thalamus, then to amygdala. This network has been hypothesized to provide access to coarse but emotionally salient visual information in the environment (LeDoux, 1996; Linke et al., 1999; Morris et al., 1998, 1999, 2001; Pasley et al., 2004; Romanski et al., 1997). Although supported by multiple studies, numerous aspects of this subcortical network have recently been called into question (for review see Pessoa and Adolphs, 2010). It now appears likely that amygdala receives visual inputs from multiple cortical and subcortical networks (Pasley et al., 2004; Pessoa and Adolphs, 2010; Pessoa, 2008; Vuilleumier and Pourtois, 2007). These inputs likely coincide with re-entrant inputs from visual cortex (including FG) to amygdala and then back to the ventral temporal pathway (Vuilleumier and Pourtois, 2007; Amaral, 2002; Amaral and Price, 1984).

Despite the recent findings of Vuilleumier and others regarding the functional connectivity of FG and amygdala (i.e., correlation), the directionality of the connection has not been firmly established (i.e., effective connectivity). In one of the only studies on this topic measuring effective connectivity, Fairhall and Ishai (2007) used dynamic causal modeling (DCM) to examine connectivity between “core” and “extended” face processing systems. As proposed by Haxby et al. (2000, 2002), the core system includes FG (also commonly referred to as the fusiform face area, FFA), superior temporal sulcus (STS), and the inferior portion of lateral occipital gyrus (IOG; also commonly referred to as the occipital face area, OFA), whereas the extended system includes amygdala, inferior frontal gyrus (IFG), and orbitofrontal cortex (OFC). The authors presented evidence that FG activity significantly influenced amygdala activity while passively viewing faces. The authors indicated that they also tested a bidirectional amygdala/FG connectivity model; however, they did not provide statistical information about how this model fared vis-à-vis the others, only stating that the simple feed-forward model fared better. They did note that their findings did not preclude the existence of a feedback connection, but this connection would likely be minor relative to FG's influence on amygdala. However, given the limited statistical power available to the study (including only 10 participants) and the absence of specific quantitative information, the hypothesis of amygdala-to-FG connectivity would appear to be largely untested.

If identified, an amygdalar influence on FG function would have widespread implications for typical and atypical (clinical) populations. In particular, it would provide a neurobiological basis for the direct integration of emotional and cognitive processes that are often construed as distinct (for a recent consideration of this issue see Pessoa, 2008). The tuning of visual processing centers by emotion structures represents a potentially critical mechanism whereby an individual can allocate resources towards specific sensory information and coordinate an appropriate response.

Implications for psychopathology are equally compelling. Multiple psychological disorders are associated with abnormalities in social perceptual processes (e.g. face recognition), including autism spectrum disorders (ASD, including diagnoses of autism, Asperger's Disorder and Pervasive Developmental Disorder — Not Otherwise Specified; Harms et al., 2010; Wolf et al., 2008), schizophrenia (Aleman and Kahn, 2005), and mood and anxiety disorders (Phillips et al., 2003). Each of these disorders is also associated with abnormalities in processes related to emotional and social functions, which in turn can be affected by amygdala dysfunction (Barbour et al., 2010; Monk, 2008; Schultz, 2005). Thus, models of amygdala/FG connectivity propose a direct link between emotion dysregulation and visual information processing deficits in these disorders.

In the current study we test the hypothesis that connectivity between FG and amygdala during face processing is reciprocal rather than unidirectional among typically developing individuals. Our goal in this study was to use a paradigm that would be highly sensitive to activation within, and connectivity between, key face processing regions. We therefore included emotional as well as neutral faces in the experimental paradigm (angry, happy, surprised, and neutral). As recently summarized by Vuilleumier and Pourtois (2007), FG activity is enhanced during the perception of emotional facial expressions “presumably through direct feedback connections from the amygdala” (p. 174). The present study attempts to provide concrete evidence for this proposed modulatory effect of amygdala on FG. Evidence in favor of reciprocal FG/amygdala connectivity would provide critical support to developmental models that emphasize the role of emotional salience and reward value in shaping visual expertise for faces. Conversely, it would support models of abnormal development that associate facial recognition deficits with attenuated connectivity between socio-emotional and visual information processing structures (as has been proposed in ASD; Minshew and Williams, 2007; Schultz, 2005).

An additional hypothesis in this study examines the role of novelty and stimulus salience on FG/amygdala connectivity. It was predicted that FG/amygdala connectivity would decrease upon subsequent viewing of the same faces, as their social relevance would have diminished (Wendt et al., 2010). This hypothesis represents an important test of theories implicating amygdala in monitoring the environment and coordinating responses for socially relevant stimuli (Barrett and Bar, 2009). Eleven of the 39 participants in this study completed the same fMRI paradigm twice, permitting the examination of repetition-based changes in FG/amygdala connectivity strength.

Participants

Data from 39 typically developing participants (19 female, mean/SD age 23.61/3.01) were gathered retrospectively from a variety of facial information processing studies carried out in 2006 and 2007 as part of a larger research program (the present data have not been published elsewhere to date). All participants in this study completed an identical paradigm: a 4.82-minute “face identity localizer” task designed to elicit activation in face-selective portions of FG. Eleven of the participants completed the same face localizer again an average of 124 days after their first scan, as part of a separate study. All participants denied a present or prior history of psychopathology, head injury, or developmental delay. All participants reported normal or corrected-to-normal vision (contact lenses or MR-safe eyeglasses were used as necessary based on participants' specific prescriptions).

Experimental task

During fMRI scanning, participants completed a two-alternative forced-choice identity discrimination task. The paradigm had been developed by our lab over many years such that task difficulty was optimal for driving the FG face recognition system. Two images of either unfamiliar faces (Ekman and Friesen, 1976; Endl et al., 1998; Tottenham et al., 2009) or houses were presented side-by-side on a black background for 3500 ms (followed by a 1000 ms interstimulus interval). Participants were instructed to indicate via button press whether the two images were the same or different based on identity (i.e., subordinate-level discrimination). All faces had emotional expressions as an implicit dimension (happy, surprised, angry, and neutral). Faces were cropped to remove hair, ears, most of the neck, and shirt collars. In order to avoid direct picture matching (“pop-out” effects on same trials, as opposed to identity matching), faces were rotated ±2° (in-plane), given small differences in brightness, and given slightly different cropping contours. Trials were blocked according to stimulus type, including four alternating blocks of faces and four of houses (i.e., an ABAB block design), with 5 trials per block (yielding 31 fMRI volumes per stimulus type). While event-related designs are generally viewed as more amenable to connectivity analyses than block designs (due to increased variability in task-based BOLD responses), the block design used in this task was chosen to provide the most robust face-sensitive activation possible in a relatively short single-run task (ideal for the ultimate objective of examining face processing networks in clinical populations).

Although formal considerations of the necessity of per-subject localization for connectivity analyses are scant, there appears to be some consensus in the neuroimaging community that seeds for connectivity analyses should ideally be defined separately for each participant based on significant task-based activations (for one empirical paper in support of this perspective, see Smith et al., 2011). Our block design permitted the localization of the core face processing network in almost all participants (as described below). We were therefore able to offset the relative disadvantage of a block design in elucidating connectivity with the advantage of increased statistical power via a large sample and well-defined network.

Prior studies from our lab using similar paradigms also indicated that FG activation is maximized when the emotional expressions of the faces vary over the course of an fMRI run. For this reason, each of the four face blocks was comprised entirely of happy, surprised, angry, or mixed expressions (i.e., all three), respectively (all face pairs presented identical expressions). Blocks were 22.5 s long and were separated by a 10 s rest period during which two asterisks were presented side-by-side on the screen.

MRI data collection

MRI data were collected on a Siemens Trio 3T scanner. FMRI data consisted of a single run of gradient-echo echo-planar images (40 oblique axial slices, number of repetitions = 126, isotropic voxel size = 3.5 mm, TR = 2320 ms, TE = 25 ms, flip angle = 60°). Two structural MR images were also acquired for the registration of fMRI data to standard space: an MPRAGE sequence providing full head coverage (176 sagittal slices, isotropic voxel size = 1 mm, TR = 300 ms, TE = 2.46 ms, flip angle = 60°) and a high-resolution FLASH sequence collected in the same axial plane as the fMRI data (number of slices = 40, slice thickness = 3.5 mm, in-plane resolution = 0.88 mm, TR = 2530 ms, TE = 3.34 ms, flip angle = 7°).

FMRI data reduction and analysis

Preprocessing and first-level (time series) analysis

Functional image processing and statistical analyses were implemented primarily using FEAT (FMRIB's Expert Analysis Tool, http://www.fmrib.ox.ac.uk/analysis/research/feat), part of FMRIB's Software Library (FSL) analysis package (http://www.fmrib.ox.ac.uk/fsl). Dynamic causal modeling analyses (described below) were carried out using SPM8 (http://www.fil.ion.ucl.ac.uk/spm). Asymmetry analyses were carried out using locally written software.

Prior to image processing, the first four time points of each fMRI time series were discarded to allow the MR signal to reach a steady state. Each time series was motion-corrected, intensity-normalized, and temporally filtered (nonlinear high-pass filter with a 1/64 Hz cutoff; Jenkinson et al., 2002). A 3D Gaussian filter (FWHM = 5 mm) was applied to account for individual differences in brain morphology and local variations in noise.

Per-voxel regression analyses were performed on each participant's time series using FILM (FMRIB's Improved Linear Model; Woolrich et al., 2001). An explanatory variable (EV) was created for each trial type (faces and houses) and convolved with a gamma function to better approximate the temporal course of the blood-oxygen-level-dependent (BOLD) hemodynamic response. Each EV yielded a per-voxel parameter estimate (PE, or beta) map representing the magnitude of activity associated with that EV. All functional activation maps and their corresponding structural MRI maps were transformed into stereotactic space (MNI) using FMRIB's Non-linear Image Registration Tool (FNIRT).
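The first-level model described above can be illustrated with a minimal sketch. It is not the FILM implementation: ordinary least squares stands in for FILM's prewhitened estimation, the gamma parameters (mean lag 6 s, SD 3 s) are assumed rather than taken from the text, and the block onsets and simulated voxel are hypothetical. Only the TR, the block and rest durations, and the number of retained volumes come from the paper.

```python
import math
import numpy as np

TR = 2.32                      # seconds (from the acquisition parameters)
N_VOLS = 122                   # 126 volumes minus the 4 discarded time points
BLOCK_S, REST_S = 22.5, 10.0   # block and rest durations in seconds

def gamma_hrf(tr, mean_lag=6.0, sd=3.0, length=30.0):
    """Gamma-density response sampled every `tr` seconds (assumed parameters)."""
    shape = (mean_lag / sd) ** 2
    scale = sd ** 2 / mean_lag
    t = np.arange(0.0, length, tr)
    pdf = t ** (shape - 1) * np.exp(-t / scale) / (math.gamma(shape) * scale ** shape)
    return pdf / pdf.sum()

def boxcar(onsets, duration, n_vols, tr):
    """1 during each block, 0 elsewhere, sampled at volume acquisition times."""
    times = np.arange(n_vols) * tr
    box = np.zeros(n_vols)
    for onset in onsets:
        box[(times >= onset) & (times < onset + duration)] = 1.0
    return box

# Hypothetical onsets: an initial rest, then alternating face and house blocks.
cycle = BLOCK_S + REST_S
face_onsets = [REST_S + 2 * i * cycle for i in range(4)]
house_onsets = [REST_S + (2 * i + 1) * cycle for i in range(4)]

hrf = gamma_hrf(TR)
faces_ev = np.convolve(boxcar(face_onsets, BLOCK_S, N_VOLS, TR), hrf)[:N_VOLS]
houses_ev = np.convolve(boxcar(house_onsets, BLOCK_S, N_VOLS, TR), hrf)[:N_VOLS]
design = np.column_stack([faces_ev, houses_ev, np.ones(N_VOLS)])

# Simulated face-selective voxel (hypothetical effect sizes and noise level).
rng = np.random.default_rng(0)
voxel = 3.0 * faces_ev + 1.0 * houses_ev + 100.0 + rng.normal(0.0, 0.5, N_VOLS)

# Ordinary least squares estimate of the PEs and the faces > houses contrast.
betas, *_ = np.linalg.lstsq(design, voxel, rcond=None)
faces_gt_houses = betas[0] - betas[1]
```

In this sketch `betas[0]` and `betas[1]` play the role of the per-voxel PEs for faces and houses, and `faces_gt_houses` corresponds to the contrast carried forward to the group analysis.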

Group-level analysis: identification of the core face processing network

Data from each participant were submitted to a group-wise per-voxel GLM implemented by the FSL program FLAME (Beckmann et al., 2003). For those participants who were scanned twice, only data from their first session were included in this analysis (i.e., 39 total participants, 1 scan session per participant). The key analysis contrasted activity during face blocks with activity during house blocks. This contrast was implemented in the context of a mixed-effects model including the random effect of participant.

Although false discovery rate (FDR) correction (Genovese et al., 2002) was initially used to control family-wise error (FWE) in the group-level analysis, this approach yielded large clusters of activation that, while centered on a priori areas, spread to multiple adjacent structures. Bonferroni correction, though based on statistical assumptions that are generally violated by fMRI maps (Chumbley et al., 2010; Friston, 2010), nevertheless provided an appropriately conservative threshold and more discrete clusters of activation. We therefore applied a per-voxel threshold to statistical maps that reduced Type I error to a Bonferroni-corrected level of p<.05.
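As a rough illustration of the per-voxel Bonferroni threshold, the sketch below converts a family-wise alpha of .05 into a critical Z value. The voxel count is hypothetical (the number of voxels tested is not reported here), and a one-sided test is assumed.

```python
from statistics import NormalDist

def bonferroni_z_threshold(n_voxels, alpha=0.05, two_sided=False):
    """Critical Z such that each voxel is tested at alpha / n_voxels."""
    per_voxel_alpha = alpha / n_voxels
    tail = per_voxel_alpha / 2 if two_sided else per_voxel_alpha
    return NormalDist().inv_cdf(1.0 - tail)

n_voxels = 50_000  # hypothetical number of in-brain voxels
z_crit = bonferroni_z_threshold(n_voxels)
```

With tens of thousands of voxels the critical value falls well above Z = 4, which is why this threshold yields more discrete clusters than FDR correction.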

The effect of novelty on FG, amygdala and IOG activity was evaluated by comparing face vs. house activation maps for the 11 participants who completed the fMRI experiment twice. GLM-based analyses (i.e., t-tests, Pearson correlations) were carried out for the activation magnitude and location of the peak voxel for these structures between time points.

Post-hoc analyses examined hemispheric asymmetries in the core face processing system. Although numerous papers have claimed that FG activation is right-lateralized during face tasks, almost none of them have implemented procedures that directly and robustly test for this (see Herrington et al., 2006, 2010). The asymmetry analysis procedure used here closely followed that of Herrington et al. (2010), directly testing the PE at each voxel against that of its contralateral homologue (i.e., the voxel with the opposite sign in the x dimension) via repeated measures ANOVA (including the random effect of participant). For this analysis, all data were registered to a symmetrical brain template (http://www.bic.mni.mcgill.ca/ServicesAtlases/ICBM152NLin2009). FWE was controlled in this analysis via the application of FDR correction (Genovese et al., 2002).
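The voxel-versus-homologue comparison can be sketched as follows. This is not the locally written software used in the study. It assumes that each participant contributes a single faces-minus-houses contrast map on a symmetric grid with x as the first spatial axis, and it uses a paired t-test as a stand-in for the repeated measures ANOVA (for a two-level hemisphere factor, the interaction F equals the square of this t). The data are simulated.

```python
import numpy as np

def asymmetry_t_map(contrast_maps):
    """Paired t-statistic of each voxel against its mirror voxel across x.

    contrast_maps: array of shape (n_subjects, nx, ny, nz) on a symmetric template.
    """
    mirrored = contrast_maps[:, ::-1, :, :]      # flip left and right
    diff = contrast_maps - mirrored
    n_subjects = diff.shape[0]
    mean_diff = diff.mean(axis=0)
    se_diff = diff.std(axis=0, ddof=1) / np.sqrt(n_subjects)
    # Midline voxels mirror onto themselves, so their statistic is set to 0.
    return np.divide(mean_diff, se_diff, out=np.zeros_like(mean_diff), where=se_diff > 0)

# Simulated data: 12 participants, one voxel with a one-sided advantage.
rng = np.random.default_rng(1)
maps = rng.normal(size=(12, 6, 4, 4))
maps[:, 5, 2, 2] += 3.0
t_map = asymmetry_t_map(maps)
```

The resulting map is antisymmetric about the midline, which is why clusters from this kind of analysis appear mirrored across hemispheres.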

For connectivity analyses, regions of interest (ROIs) were extracted from nodes of the core face processing network of individual participants, in both hemispheres, including FG, amygdala, and IOG (although STS is also generally considered part of the core system, we did not predict that we would see STS activation in a subordinate-level identity judgment task, and therefore excluded it from DCM analysis). ROIs were defined as spheres (radius = 2 voxels, or 7 mm) around activation peaks within Bonferroni-corrected clusters for each region.
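A spherical ROI of this kind can be sketched in a few lines. The peak location and data array below are hypothetical, and averaging across voxels is an assumption made for illustration; the text does not state how the ROI time series were summarized.

```python
import numpy as np

def sphere_mask(shape, peak_voxel, radius_vox=2.0):
    """Boolean mask of voxels within `radius_vox` voxels of `peak_voxel`."""
    grid = np.indices(shape)
    dist_sq = sum((axis - centre) ** 2 for axis, centre in zip(grid, peak_voxel))
    return dist_sq <= radius_vox ** 2

def roi_timeseries(data_4d, mask):
    """Mean time series over masked voxels; data_4d has shape (x, y, z, time)."""
    return data_4d[mask].mean(axis=0)

# Hypothetical 4D data set and peak voxel.
rng = np.random.default_rng(2)
data = rng.normal(size=(20, 20, 20, 122))
mask = sphere_mask(data.shape[:3], peak_voxel=(10, 9, 8))
series = roi_timeseries(data, mask)
```

On a 3.5 mm isotropic grid, a 2-voxel radius corresponds to the 7 mm sphere described above and contains 33 voxels when the peak is away from the volume edge.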

For the right hemisphere (RH) ROIs, all 39 participants had an identifiable FG cluster, while 37 of them had an identifiable amygdala cluster (both at Bonferroni-corrected p<.05, as described above). One participant did not have an identifiable right IOG peak for the time 1 scan, but did have one at time 2. The time 2 data for this participant were therefore included in the full group analyses but dropped from time 1/time 2 difference analyses (leaving N = 10 for the latter, with an average separation of 133.20 days, SD = 42.51 days).

For the left hemisphere (LH) ROIs: all 39 participants had identifiable FG and IOG clusters. 34 had an identifiable left amygdala cluster, thus leaving a group N of 34 for LH connectivity analyses. As only 7 of the 11 participants who were scanned twice had an identifiable left amygdala cluster, time 1/time 2 difference analyses were not conducted for LH ROIs.

Dynamic causal modeling and Bayesian Model Selection

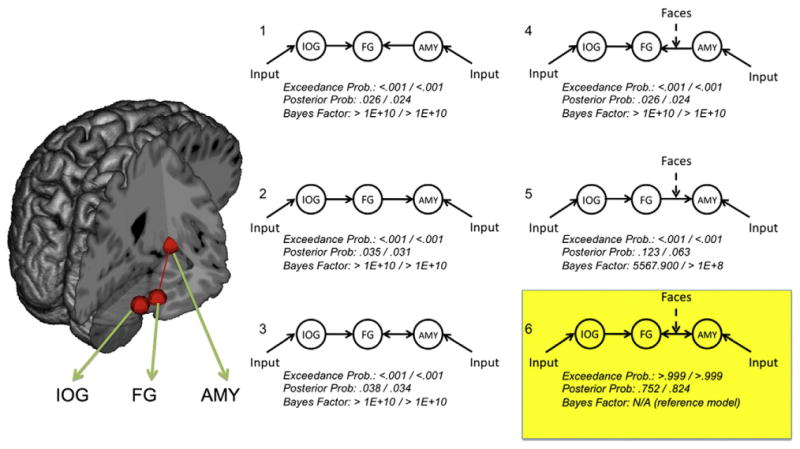

Connectivity analyses focused on the hypotheses that 1) FG and amygdala would show bidirectional rather than unidirectional connectivity and 2) this connectivity would be modulated by the experimental paradigm, i.e., greater during face perception. These hypotheses were tested via dynamic causal modeling — a technique that estimates neural dynamics between brain structures. A dynamic causal model (i.e., a DCM, henceforth referring to specific models rather than the general modeling procedure) involves the specification of one or more inputs into a network of brain areas, a set of “intrinsic” connections between structures (defined functionally as well as structurally), and modulations of these intrinsic connections by exogenous factors (i.e., bilinear terms reflecting experimentally induced changes in cognitive context; Stephan et al., 2009). Six DCMs were calculated in each hemisphere for each participant using variations of these parameters (see Fig. 2). Each of the six models included separate inputs to IOG and amygdala — reflecting the influence of cortical and subcortical routes in facial expression recognition (Vuilleumier and Pourtois, 2007). The models varied according to the direction of communication between FG and amygdala (FG to amygdala, amygdala to FG, or both), and the presence or absence of a bilinear term reflecting the modulation of the FG/amygdala connection by face perception (i.e., face blocks within the experimental task).
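The neural part of the bidirectional, face-modulated model can be illustrated with the standard bilinear DCM state equation, dz/dt = (A + u_faces·B)z + C·u_stim. The sketch below is a toy simulation only: all coupling values are hypothetical, the assignment of a single driving input to IOG and amygdala is an assumption, and the hemodynamic forward model and Bayesian estimation performed by SPM8 are omitted.

```python
import numpy as np

REGIONS = ["IOG", "FG", "AMY"]

# Intrinsic connections, A[to, from]; negative diagonal = self-decay (hypothetical values).
A = np.array([
    [-1.0, 0.0, 0.0],   # IOG receives no intrinsic input from FG or AMY
    [0.4, -1.0, 0.2],   # FG receives input from IOG and AMY
    [0.0, 0.2, -1.0],   # AMY receives input from FG
])

# Modulation of both FG/AMY connections by face blocks (hypothetical values).
B_FACES = np.zeros((3, 3))
B_FACES[1, 2] = 0.3     # AMY -> FG
B_FACES[2, 1] = 0.3     # FG -> AMY

# Driving input to IOG and AMY (hypothetical values).
C = np.array([1.0, 0.0, 0.5])

def simulate(stimulus, faces, dt=0.01):
    """Euler integration of dz/dt = (A + faces * B_FACES) z + C * stimulus."""
    z = np.zeros(len(REGIONS))
    trace = np.empty((len(stimulus), len(REGIONS)))
    for step, (u_stim, u_faces) in enumerate(zip(stimulus, faces)):
        dz = (A + u_faces * B_FACES) @ z + C * u_stim
        z = z + dt * dz
        trace[step] = z
    return trace

n_steps = 2000  # 20 s of simulated time at dt = 0.01
face_block = simulate(np.ones(n_steps), np.ones(n_steps))
house_block = simulate(np.ones(n_steps), np.zeros(n_steps))
fg_gain = face_block[-1, 1] - house_block[-1, 1]
```

With these illustrative values, FG activity settles at a higher level during face blocks than during house blocks because the modulatory term strengthens both FG/amygdala connections.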

Fig. 2.

Dynamic causal models of face network connectivity. Six dynamic causal models (DCMs) were tested against one another using Bayes factor analyses and Bayesian Model Selection (BMS). Each model included lateral occipital cortex (IOG), fusiform gyrus (FG), and amygdala (AMY), illustrated schematically on the brain to the left (shown in the right hemisphere only; see Table 1 for MNI coordinates). The right side of the figure illustrates the six DCMs included in analyses. All six models included inputs to IOG and AMY and unidirectional intrinsic connections from IOG to FG. Models varied according to whether the FG/AMY connection was unidirectional from FG (models 1 and 4), unidirectional to FG (models 2 and 5), or bidirectional (models 3 and 6). Models also varied according to whether the specific FG/AMY connection under consideration was modulated by the processing of face stimuli (models 1–3 = no modulation, models 4–6 = modulation). Bayes factor and Bayesian Model Selection analyses converged overwhelmingly on model 6 as the most likely model (highlighted in yellow). BMS exceedance probabilities, posterior probabilities, and Bayes factors (relative to model 6) are listed below each model. Results for the left-hemisphere model are listed to the left of the /; right-hemisphere models are listed to the right of the /.

DCMs can be examined according to a number of parameters suitable for both frequentist (e.g., GLM-based) and Bayesian statistics. As our hypotheses concerned specific, competing models of FG/amygdala connectivity, analyses focused primarily on estimates of the group-wise cumulative evidence for each DCM. Two procedures were used to compare the six models. First, log-likelihood estimates of each model were compared to one another. These estimates consist of bounds on the evidence for each model (derived from the principle of free-energy) whereby the brain is assumed to maintain equilibrium with respect to allocated and unallocated resources (Friston, 2010). Competing models were compared to one another via the calculation of Bayes factors, i.e., ratios of the evidences of two models (equivalently, exponentiated differences in their log-evidences; Raftery, 1995; Kass and Raftery, 1995). Bayes factors were calculated comparing the hypothesized model (bidirectional, task-modulated FG/amygdala connectivity) to each of the five alternative models. Bayes factors greater than 150 are generally viewed as very strong evidence favoring one model over another (Raftery, 1995).
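The relationship between log-evidences and Bayes factors can be made concrete with a short sketch. It assumes that the group-wise comparison sums log-evidence differences across participants (a fixed-effects comparison), which is one reading of "cumulative evidence"; the log-evidence values are hypothetical.

```python
import math
import numpy as np

def group_log_bayes_factor(log_evidence_a, log_evidence_b):
    """Sum over participants of log-evidence differences (model A minus model B)."""
    return float(np.sum(np.asarray(log_evidence_a) - np.asarray(log_evidence_b)))

# Hypothetical per-participant log-evidences for two competing models.
log_ev_bidirectional = [-1200.0, -1150.5, -1310.2]
log_ev_unidirectional = [-1203.0, -1152.5, -1313.8]

log_bf = group_log_bayes_factor(log_ev_bidirectional, log_ev_unidirectional)
bayes_factor = math.exp(log_bf)
exceeds_threshold = bayes_factor > 150  # threshold discussed above (Raftery, 1995)
```

Because evidence accumulates additively on the log scale, modest per-participant advantages can combine into very large group-level Bayes factors.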

The second group-wise comparison procedure consisted of random-effects Bayesian Model Selection (BMS), a technique that focuses on the estimation of model probabilities according to a Dirichlet distribution. This probability reflects how frequently a specified model “occurred” among individuals in a given sample (Stephan et al., 2009). This procedure has advantages over Bayes factor estimation in that it accounts for within-group heterogeneity and is less sensitive to outliers. BMS yields exceedance probabilities reflecting the strength of the belief that one model better reflects the observed data than the other models.
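A compact sketch of random-effects BMS in the spirit of Stephan et al. (2009) is given below. It is not the SPM8 routine used in the study: the uniform Dirichlet prior, the convergence tolerance, and the sampling-based estimate of exceedance probabilities are choices made for illustration, and the log-evidences are synthetic.

```python
import numpy as np
from scipy.special import digamma

def random_effects_bms(log_evidence, n_samples=100_000, tol=1e-6, max_iter=500, seed=0):
    """Variational estimate of Dirichlet parameters over model frequencies.

    log_evidence: array of shape (n_subjects, n_models).
    Returns (alpha, expected model frequencies, exceedance probabilities).
    """
    lme = np.asarray(log_evidence, dtype=float)
    n_models = lme.shape[1]
    alpha0 = np.ones(n_models)          # uniform Dirichlet prior (assumed)
    alpha = alpha0.copy()
    for _ in range(max_iter):
        # Posterior probability that each subject's data came from each model.
        log_u = lme + digamma(alpha) - digamma(alpha.sum())
        log_u -= log_u.max(axis=1, keepdims=True)   # numerical stability
        u = np.exp(log_u)
        g = u / u.sum(axis=1, keepdims=True)
        new_alpha = alpha0 + g.sum(axis=0)
        converged = np.linalg.norm(new_alpha - alpha) < tol
        alpha = new_alpha
        if converged:
            break
    expected_freq = alpha / alpha.sum()
    # Exceedance probability: how often each model has the largest sampled frequency.
    rng = np.random.default_rng(seed)
    samples = rng.dirichlet(alpha, size=n_samples)
    winners = samples.argmax(axis=1)
    exceedance = np.bincount(winners, minlength=n_models) / n_samples
    return alpha, expected_freq, exceedance

# Synthetic example: 17 of 20 participants favor the third model by 3 log units.
lme = np.tile([-3.0, -3.0, 0.0], (20, 1))
lme[:3] = [0.0, -3.0, -3.0]
alpha, expected_freq, exceedance = random_effects_bms(lme)
```

Here `expected_freq` corresponds to the expected posterior probabilities reported for each model, and `exceedance` to the exceedance probabilities.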

The reliability of right hemisphere DCM results was examined by comparing data between time points 1 and 2 for the 10 participants who completed the fMRI task on two occasions and had significant clusters of activation in the entire right hemisphere network. Analyses consisted of t-tests (all two-tailed) and Pearson correlations between time points 1 and 2 for 1) the exceedance probabilities of each of the 6 models, and 2) the parameters reflecting the strength of intrinsic (DCM.A matrix), modulatory (DCM.B matrix), and model inputs (DCM.C matrix).
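The time 1/time 2 comparison for a single DCM parameter can be sketched as a paired, two-tailed t-test plus a Pearson correlation. The weights below are hypothetical values for 10 participants and do not reproduce the study's data.

```python
import numpy as np
from scipy import stats

# Hypothetical amygdala-to-FG intrinsic weights for 10 participants.
time1 = np.array([0.42, 0.35, 0.51, 0.30, 0.44, 0.39, 0.47, 0.36, 0.41, 0.33])
time2 = np.array([0.31, 0.33, 0.40, 0.22, 0.38, 0.35, 0.36, 0.30, 0.37, 0.25])

# Paired two-tailed t-test with n - 1 = 9 degrees of freedom.
t_stat, p_val = stats.ttest_rel(time1, time2)

# Test-retest correlation of the same parameter.
r_val, r_p = stats.pearsonr(time1, time2)

# The same t-statistic computed by hand from the difference scores.
diff = time1 - time2
t_manual = diff.mean() / (diff.std(ddof=1) / np.sqrt(diff.size))
```

The t-test asks whether the mean weight changed between sessions, whereas the correlation asks whether participants kept their rank order, so the two can diverge.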

Results

Task accuracy

Mean task accuracy percentages/SDs were 86.8/8.03 for face trials, and 96.7/4.78 for house trials. Mean/SD response times (in ms) were 1644/327 for faces and 1332/294 for houses. Participants were significantly faster (t(35) = 7.74, p<.01) and more accurate (t(35) = 6.31, p<.01) for houses than faces. However, post-hoc analyses indicated that neither face nor house task results were significantly correlated with any of the DCM weights for the bidirectional face-modulated FG/amygdala model, indicating that these connectivity parameters (and face modulation effects) are not likely related to task performance.

Group-level analysis: identification of face-selective regions

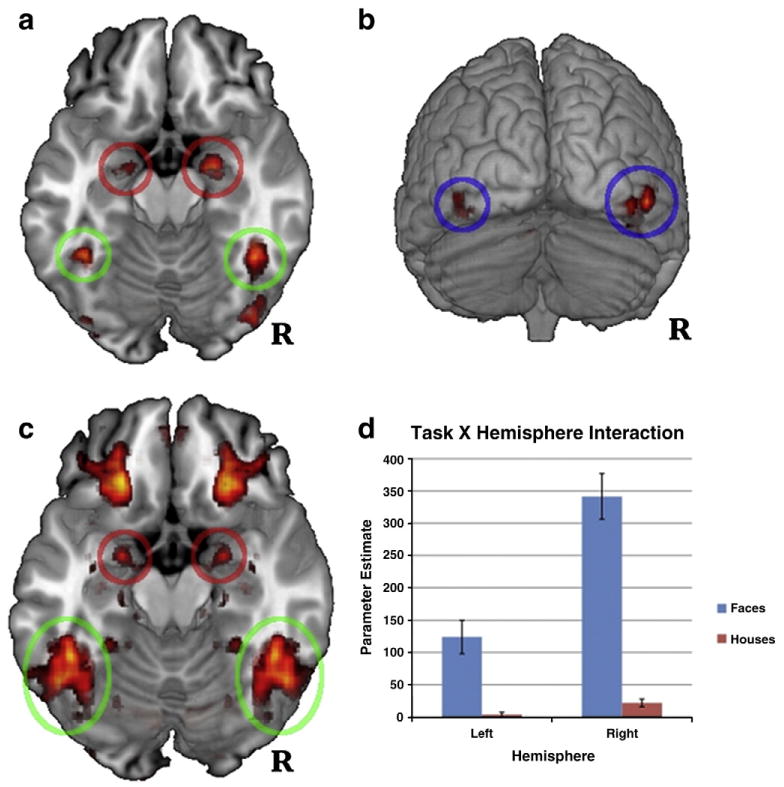

As predicted, group-level maps included significant clusters of activation for faces>houses in bilateral FG, amygdala, and IOG (see Fig. 1 panels 1 and 2, and Table 1; see Supplementary Table 1 for activation clusters across the entire brain and for houses>faces). Overall, group maps closely followed a priori predictions regarding face-selective regions of both the core and extended face processing systems.

Fig. 1.

Per-voxel tests of faces greater than houses. Circles highlight regions from which ROIs were extracted for connectivity analyses (green = fusiform gyrus [FG], red = amygdala [AMY], blue = inferior lateral occipital cortex [IOG]). Panel 1: bilateral FG and AMY clusters at p<.05, Bonferroni-corrected. Panel 2: IOG clusters at p<.05, Bonferroni-corrected. Panel 3: per-voxel tests of hemispheric asymmetry, FDR-corrected. Clusters of activity correspond to areas that showed significant task X hemisphere interactions at p<.05 (activation is symmetrical because hemisphere was included as a formal factor in the analyses). Panel 4: histogram of activation for the peak voxel within FG of each participant for the asymmetry analyses (Panel 3). The histogram illustrates significantly right-lateralized activation for faces (MNI peak = ±46, −44, −20, z = 5.307).

Table 1.

Activation for faces within the face processing network.

| Region | Left hemisphere: MNI coordinates | Left hemisphere: cluster size (# voxels) | Left hemisphere: peak Z-value | Right hemisphere: MNI coordinates | Right hemisphere: cluster size (# voxels) | Right hemisphere: peak Z-value |
|---|---|---|---|---|---|---|
| Fusiform gyrus | 42,−46,−22 | 341 | 8.46 | −42,−48,−22 | 172 | 7.9 |
| Amygdala | 22,−6,−14 | 122 | 7.20 | −20,−6,−16 | 50 | 5.91 |
| Lateral occipital gyrus (inferior) | 48,−70,−8 | 491 | 6.96 | −42,−82,−8 | 123 | 6.03 |

Note. All clusters were significant at p<.05 (Bonferroni-corrected for multiple comparisons). MNI coordinates represent peak voxels for each cluster. See Supplementary Material for listing of all activation clusters.

FG, amygdala, and IOG results were remarkably robust across participants. All 39 participants had an identifiable right FG activation cluster (faces>houses) at a Bonferroni-corrected p<.05 threshold, and 37 of 39 participants had a right amygdala cluster. T-tests for the 11 participants who completed the face task twice showed no significant differences between time points in peak location or magnitude in any of the three regions. Pearson correlations between peak activation magnitudes were large for each of the three regions (though p-values were limited by the small sample size; FG r = .59, p = .055; amygdala r = .55, p = .080; IOG r = .57, p = .068).

Hemispheric asymmetry analysis provided the most robust evidence to date that FG activation is significantly right-lateralized during face processing. Per-voxel tests of the task (faces>houses) X hemisphere interaction indicated that faces yielded significantly more right-lateralized activation than houses (MNI peak = ±46, −44, −20, z = 5.31; see Fig. 1, panel 3). Parameter estimates (PEs) for faces were significantly greater than houses in the left and right hemispheres, t(38) = 4.92, p<.001 and t(38) = 10.11, p<.001, respectively, but significantly more so in the right hemisphere (mean PEs in the left and right hemispheres were 120.36 and 319.71, respectively; see Fig. 1, panel 4). Amygdala showed a very similar pattern of right-lateralized activation for faces (MNI peak = ±22, −2, −16, z = 4.29; see Fig. 1, panel 3).

Dynamic causal modeling and Bayesian Model Selection

As predicted, the DCM with bidirectional, face-modulated connections between FG and amygdala (model 6 in Fig. 2) was selected as substantially more probable than the unidirectional alternatives (with and without face modulation) in both left and right hemisphere networks. Bayes factors for the bidirectional modulation DCM were extremely large (see Fig. 2), all well beyond the standard threshold of 150 for “very probable” models (Raftery, 1995). In both hemispheres, the model with the second-highest relative log-evidence was the one containing only a unidirectional connection from FG to amygdala, modulated by face task. The Bayes factor for the bidirectional model relative to this second-best unidirectional model was 5568 in the left hemisphere and >1×10^8 in the right hemisphere.

BMS results were also strongly in favor of the bidirectional task-modulated DCM, with exceedance probabilities asymptoting to 1 in both hemispheres and expected posterior probabilities of .82 in the right hemisphere and .75 in the left. The 5 remaining models had near-zero exceedance probabilities for both hemispheres.

In addition to the overall DCM comparison via BMS, the specific parameters of a particular DCM can be tested for statistical significance and effect direction (e.g., significant increased modulation of a given connection by task versus significantly decreased modulation or no effect). These tests were carried out for the model favored by BMS (as described above), namely the bidirectional FG/amygdala model with modulation by faces. Connection weights for each component of this DCM were significantly greater than zero at a Bonferroni-corrected threshold of p<.05 for both hemispheres (including weights for IOG and amygdala inputs, intrinsic connections to and from amygdala/FG, and the modulation of both intrinsic connections by the Face condition). In the right hemisphere, 36 of 37 participants showed positive connectivity weights for the modulation of the FG-to-amygdala connection by faces (Pearson Chi-square = 33.11, p<.001), and 34 of 37 showed positive face modulation of the amygdala-to-FG connection (Chi-square = 25.97, p<.001). In the left hemisphere, these proportions were 33/34 (Chi-square = 30.12, p<.001) and 27/34 (Chi-square = 11.76, p<.001), respectively.
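The reported Chi-square values are reproduced by a one-degree-of-freedom goodness-of-fit test against equal proportions of positive and negative weights. The sketch below makes that arithmetic explicit; that this was the exact test used is an inference from the numbers, not something stated in the text.

```python
import math

def sign_chi_square(n_positive, n_total):
    """Pearson chi-square (df = 1) for n_positive of n_total vs. a 50/50 split."""
    expected = n_total / 2
    n_negative = n_total - n_positive
    chi_sq = ((n_positive - expected) ** 2 + (n_negative - expected) ** 2) / expected
    p_value = math.erfc(math.sqrt(chi_sq / 2))  # chi-square survival function, df = 1
    return chi_sq, p_value

# Counts of participants with positive face-modulation weights, as reported above.
reported_counts = {
    "RH FG-to-amygdala": (36, 37),
    "RH amygdala-to-FG": (34, 37),
    "LH FG-to-amygdala": (33, 34),
    "LH amygdala-to-FG": (27, 34),
}
chi_results = {name: sign_chi_square(*counts) for name, counts in reported_counts.items()}
```

Rounded to two decimals, the four statistics in `chi_results` match the reported values of 33.11, 25.97, 30.12, and 11.76.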

Time 1/time 2 differences in DCM parameters

Because too few of the time 1/time 2 participants showed significant activation clusters in the left hemisphere core face processing system, only the RH system was examined for stability of DCM parameters between time points. Of the six weights reflecting system inputs (to IOG and amygdala), intrinsic connections (FG to/from amygdala, IOG to FG), and the face-dependent modulation of FG/amygdala connections, only one statistically significant difference was noted between time points: the intrinsic connection from amygdala to FG was decreased at time 2, t(9) = 3.46, p = .007. This result indicates that, irrespective of the experimental manipulation (i.e., face or house perception), the influence of amygdala on FG activation decreased on the second presentation of the task. Furthermore, although the amygdala-to-FG modulation parameter did not significantly differ between time points, t(9) = 1.03, n.s., this parameter was significantly greater than zero at time 1, t(9) = 3.49, p = .007, but not at time 2 when applying the appropriate Bonferroni-corrected significance threshold, t(9) = 2.58, p = .02. These data suggest that the influence of amygdala on FG activation is modulated by prior history with perceived stimuli.

Discussion

The present study was the first to use directional connectivity analyses to establish the influence of amygdala on FG function during face perception. BMS results indicated that a model of bidirectional FG/amygdala communication substantially outperformed either unidirectional model in predicting observed brain activity. Results further indicated that the strength of the bidirectional communication was enhanced by stimulus context, namely face perception. Thus, as participants made identity discriminations on faces, there was significantly greater bidirectional communication between amygdala and FG. These findings, alongside those of Vuilleumier et al. (2001, 2003, 2004), have significant implications for our understanding of higher-order visual information processes. Specifically, they suggest that visual processes such as face perception are under the direct influence of structures commonly associated with affect and reward. Stated differently: the way we perceive the world may in fact be under the influence of the very motivational dispositions for which perception is so critical.
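As an orientation aid, the terms "intrinsic connection", "modulation", and "input" used in this article map onto the matrices of the standard bilinear neural state equation of DCM for fMRI. The equation below is the generic textbook form and rests on the assumption that the models in this study follow it; the symbols are not taken from this article.

```latex
\dot{z} = \Bigl( A + \sum_{j} u_j \, B^{(j)} \Bigr) z + C u
```

Here $z$ is the vector of neural states (one per region: IOG, FG, and amygdala), $u_j$ is the $j$-th experimental input, $A$ holds the intrinsic connections, $B^{(j)}$ holds the change in those connections induced by input $j$ (here, the Face condition acting on the FG-to-amygdala and amygdala-to-FG connections), and $C$ holds the driving inputs (here, to IOG and amygdala). Under this reading, "bidirectional, face-modulated" connectivity means that both off-diagonal FG/amygdala entries of $A$ and of the face-related $B$ matrix are free parameters.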

The finding of decreased amygdala-to-FG connectivity upon repeated exposure to the same stimuli is among the first uses of dynamic causal modeling to track experience-based changes in communication between brain structures. This finding is consistent with a recent study by Wendt et al. (2010), who showed decreased correlation between amygdala and inferior temporal cortex after the repeated presentation of emotional scenes. However, the present finding must be interpreted cautiously, for two reasons. First, unlike the DCM analyses that tested competing models, this finding was not tested against alternative or control models (no other modulatory connections were of interest), and is therefore vulnerable to the effects of regression to the mean. Second, the analysis was based on a relatively small sample (though one still comparable to many fMRI studies).

Nevertheless, if this finding is replicated, it would provide strong support for a prevailing account of the significance of FG/amygdala connectivity, one emphasizing stimulus salience and resource allocation. According to this account, amygdala serves to enhance FG function when an individual is presented with stimuli of high social/emotional relevance, thereby prioritizing processing (Pessoa and Adolphs, 2010; Vuilleumier and Pourtois, 2007). The present data are in keeping with this account insofar as the diminished amygdala-to-FG connection strength may reflect the attenuated emotional salience of faces that have already been seen, and are therefore less relevant to the perceiver. This finding has important practical implications for any studies comparing individuals or groups: participants need to be equated on prior experience with experimental stimuli; otherwise, results on amygdala connectivity may be distorted.

Similarities between these findings and a related study point to intriguing hypotheses regarding the time course of amygdala influences on FG. Vuilleumier et al. (2004) reported decreased FG activation for emotional faces in a cross-sectional study of adults with amygdala damage originating in childhood. They concluded that this decrease was the direct consequence of the absence of amygdala input and that, conversely, amygdala plays an important role in upregulating FG activity. If repeated exposure to identical emotional faces results in attenuated amygdala/FG connectivity (as observed here), the data from Vuilleumier and colleagues would lead one to predict that FG activity would also be attenuated after repeated exposure to identical faces. However, a significant decrease in FG activation was not observed in the present study. One possibility is that, although prior exposure attenuated the amygdala-to-FG connectivity in this sample, this attenuation was minuscule in comparison to that associated with amygdala damage. A second, more intriguing possibility is that amygdala influences FG function on multiple time scales: in milliseconds, with respect to stimulus responses, and in months and years, in terms of the maturation of FG. In other words, in a typically developing healthy adult, years of experience with faces may result in a highly responsive FG that continues to be influenced by amygdala, but also maintains substantial functional independence from it. This hypothesis is consistent with data indicating that the specialization of FG for faces typically does not emerge until around late childhood/early adolescence (Scherf et al., 2007). It is also consistent with Wendt et al. (2010), who found decreased amygdala/inferior temporal cortex connectivity after repeated presentation of emotional scenes despite no change in inferior temporal cortex activation per se.

The present findings of amygdala influence on FG have implications for our understanding of both typical and atypical development. The implications for ASD are particularly noteworthy. Decades of writings in the clinical and research literatures have documented reduced attention to faces among those with ASD (see Schultz, 2005; Dawson et al., 2005; Sasson et al., 2008). Accounts of this diminished attention have focused increasingly on developmental neurobiology. Two prominent theories of brain development in ASD posit that a deficit in reward processes (mediated in part by amygdala) results in an attenuation of the typical pattern of intense interest in faces and people among infants and toddlers (Schultz, 2005; Dawson et al., 2005; also see Dziobek et al., 2010). According to these models, the absence of reward value for faces initiates a cascade of developmental and neurobiological deficits, including the abnormal functioning of face-selective portions of FG. The current data point to an intriguing developmental hypothesis: that facial information processing deficits in ASD are the accumulated effect of years of abnormal tuning of FG by amygdala (Schultz, 2005). This hypothesis would be best addressed by longitudinal studies examining face processes as they emerge in childhood.

The key analyses in this study leveraged a relatively large sample size (likely offsetting the relative disadvantage of relying on a block design for connectivity analyses). Given the limited temporal resolution of fMRI (and subsequent limitations in identifying directional communication between brain structures), it seems likely that large sample sizes will be needed in future studies examining amygdala/FG connectivity effects in detail. On the other hand, findings regarding time-dependent changes in amygdala/FG connectivity relied only on data from a small portion of the overall sample. Clearly more research is needed to determine which of the findings of interest in this study represent large effects that are most amenable to individual difference analyses (as in the study of clinical populations).

More research is also needed to examine the specificity of amygdala influences on FG function. It is important to note that the paradigm used in this study did not include enough trials of neutral faces to support the separate analysis of implicit emotional and non-emotional face processes with respect to regional brain activity or effective connectivity. It is therefore unclear whether the observed results relate to face processes in general, or facial emotion processes in particular. However, existing evidence (Vuilleumier and Pourtois, 2007) indicates that the modulation of FG by amygdala is specific to the emotional content of faces. Relatedly, the present paradigm included three types of emotional expressions, but did not include enough trials to examine their separate effects. It is possible that some facial expressions, particularly fear, modulate amygdala/FG connectivity more than others. This is an important hypothesis for future studies.

Furthermore, the DCMs tested in this study do not include alternate pathways between amygdala and FG, and therefore cannot speak directly to the question of whether such pathways may better account for the observed data. The limiting of conclusions to explicitly defined models is in fact a limitation inherent in DCM and most connectivity measures. Replications of the present results could be strengthened by including DCMs with additional structures that could serve either as competing hypotheses or as controls for primary hypotheses. However, there are few alternative functional routes between amygdala and FG that seem likely. For example, although it has been hypothesized that FG activity may be modulated via subcortical visual pathways excluding amygdala (i.e., basal forebrain), these models have generally failed to obtain empirical support (Amaral et al., 2003; Catani et al., 2003).

A final consideration for future research in this area is that it is presently unclear what kind of visual information amygdala is most sensitive to. This information may prove critical in understanding the developmental progression of face selectivity in the FG, and in facial information processing more generally. The presence of networks for face processing that bypass lower-level visual processing areas is remarkable in its own right; we are only beginning to understand the stimulus parameters that this network is sensitive to (for example, conveyance of lower versus higher spatial frequency information; Pessoa and Adolphs, 2010; Rotshtein et al., 2007; Vuilleumier et al., 2003).

The present findings indicate that connectivity models may provide essential insights into the role of amygdala in orienting attention and tuning visual cortex. Future studies will benefit from testing broader models that encompass structures outside of temporo-occipital cortex. The inclusion of orbitofrontal cortex in these models may be particularly fruitful, as recent evidence implicates this area in emotional surveillance and the rapid processing of low spatial frequency information (Bar et al., 2006; Barrett and Bar, 2009). Ultimately, insights into the mechanics of amygdala-mediated pathways to visual cortex promise to greatly influence models of normal and abnormal development.

Acknowledgments

We would like to thank Elinora Hunyadi, Mikle South and Julie Wolf for their roles in recruiting and testing research participants for this study. Funding for this project was provided by an award from NIMH (R01MH073084) to R. Schultz.

Footnotes

Supplementary materials related to this article can be found online at doi:10.1016/j.neuroimage.2011.03.072.

References

- Aleman A, Kahn RS. Strange feelings: do amygdala abnormalities dysregulate the emotional brain in schizophrenia? Prog Neurobiol. 2005;77(5):283–298. doi: 10.1016/j.pneurobio.2005.11.005.

- Amaral DG. The primate amygdala and the neurobiology of social behavior: implications for understanding social anxiety. Biol Psychiatry. 2002;51(1):11–17. doi: 10.1016/s0006-3223(01)01307-5.

- Amaral DG, Price JL. Amygdalo-cortical projections in the monkey (Macaca fascicularis). J Comp Neurol. 1984;230(4):465–496. doi: 10.1002/cne.902300402.

- Amaral DG, Behniea H, Kelly JL. Topographic organization of projections from the amygdala to the visual cortex in the macaque monkey. Neuroscience. 2003;118(4):1099–1120. doi: 10.1016/s0306-4522(02)01001-1.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmid AM, Schmidt AM, Dale AM, Hämäläinen MS, Marinkovic K, Schacter DL, Rosen BR, Halgren E. Top-down facilitation of visual recognition. Proc Natl Acad Sci USA. 2006;103(2):449–454. doi: 10.1073/pnas.0507062103.

- Barbour T, Murphy E, Pruitt P, Eickhoff SB, Keshavan MS, Rajan U, Zajac-Benitez C, Diwadkar VA. Reduced intra-amygdala activity to positively valenced faces in adolescent schizophrenia offspring. Schizophr Res. 2010;123(2–3):126–136. doi: 10.1016/j.schres.2010.07.023.

- Barrett LF, Bar M. See it with feeling: affective predictions during object perception. Philos Trans R Soc Lond B Biol Sci. 2009;364(1521):1325–1334. doi: 10.1098/rstb.2008.0312.

- Beckmann CF, Jenkinson M, Smith SM. General multilevel linear modeling for group analysis in FMRI. Neuroimage. 2003;20(2):1052–1063. doi: 10.1016/S1053-8119(03)00435-X.

- Brassen S, Gamer M, Rose M, Büchel C. The influence of directed covert attention on emotional face processing. Neuroimage. 2010;50(2):545–551. doi: 10.1016/j.neuroimage.2009.12.073.

- Bruce V, Young A. Understanding face recognition. Br J Psychol. 1986;77(Pt 3):305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Catani M, Jones DK, Donato R, Ffytche DH. Occipito-temporal connections in the human brain. Brain. 2003;126(Pt 9):2093–2107. doi: 10.1093/brain/awg203.

- Chumbley JR, Flandin G, Seghier ML, Friston KJ. Multinomial inference on distributed responses in SPM. Neuroimage. 2010;53(1):161–170. doi: 10.1016/j.neuroimage.2010.05.076.

- Dawson G, Webb SJ, McPartland J. Understanding the nature of face processing impairment in autism: insights from behavioral and electrophysiological studies. Dev Neuropsychol. 2005;27(3):403–424. doi: 10.1207/s15326942dn2703_6.

- Dziobek I, Bahnemann M, Convit A, Heekeren H. The role of the fusiform-amygdala system in the pathophysiology of autism. Arch Gen Psychiatry. 2010;67(4):397–405. doi: 10.1001/archgenpsychiatry.2010.31.

- Ekman P, Friesen W. Pictures of facial affect. 1976 Unpublished manuscript.

- Endl W, Walla P, Lindinger G, Lalouschek W, Barth FG, Deecke L, Lang W. Early cortical activation indicates preparation for retrieval of memory for faces: an event-related potential study. Neurosci Lett. 1998;240(1):58–60. doi: 10.1016/s0304-3940(97)00920-8.

- Fairhall SL, Ishai A. Effective connectivity within the distributed cortical network for face perception. Cereb Cortex. 2007;17(10):2400–2406. doi: 10.1093/cercor/bhl148.

- Friston K. The free-energy principle: a unified brain theory? Nat Rev Neurosci. 2010;11(2):127–138. doi: 10.1038/nrn2787.

- Genovese C, Lazar N, Nichols T. Thresholding of statistical maps in functional neuroimaging using the false discovery rate. Neuroimage. 2002;15(4):870–878. doi: 10.1006/nimg.2001.1037.

- Harms MB, Martin A, Wallace GL. Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol Rev. 2010;20(3):290–322. doi: 10.1007/s11065-010-9138-6.

- Haxby J, Hoffman E, Gobbini M. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4(6):223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Haxby JV, Hoffman EA, Gobbini MI. Human neural systems for face recognition and social communication. Biol Psychiatry. 2002;51(1):59–67. doi: 10.1016/s0006-3223(01)01330-0.

- Herrington J, Koven N, Miller G, Heller W. Biology of Personality and Individual Differences. Guilford Press; New York: 2006. Mapping the Neural Correlates of Dimensions of Personality, Emotion and Motivation.

- Herrington JD, Heller W, Mohanty A, Engels AS, Banich MT, Webb AG, Miller GA. Localization of asymmetric brain function in emotion and depression. Psychophysiology. 2010;47(3):442–454. doi: 10.1111/j.1469-8986.2009.00958.x.

- Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17(2):825–841. doi: 10.1016/s1053-8119(02)91132-8.

- Kass R, Raftery A. Bayes factors. J Am Stat Assoc. 1995;90(430):773–795.

- LeDoux J. The Emotional Brain: The Mysterious Underpinnings of Emotional Life. Simon and Schuster; New York: 1996.

- Linke R, De Lima AD, Schwegler H, Pape HC. Direct synaptic connections of axons from superior colliculus with identified thalamo-amygdaloid projection neurons in the rat: possible substrates of a subcortical visual pathway to the amygdala. J Comp Neurol. 1999;403(2):158–170.

- Minshew NJ, Williams DL. The new neurobiology of autism: cortex, connectivity, and neuronal organization. Arch Neurol. 2007;64(7):945–950. doi: 10.1001/archneur.64.7.945.

- Monk CS. The development of emotion-related neural circuitry in health and psychopathology. Dev Psychopathol. 2008;20(4):1231–1250. doi: 10.1017/S095457940800059X.

- Morris J, Friston K, Buchel C, Frith C, Young A, Calder A, Dolan R. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- Morris JS, Ohman A, Dolan RJ. A subcortical pathway to the right amygdala mediating “unseen” fear. Proc Natl Acad Sci U S A. 1999;96(4):1680–1685. doi: 10.1073/pnas.96.4.1680.

- Morris JS, DeGelder B, Weiskrantz L, Dolan RJ. Differential extrageniculostriate and amygdala responses to presentation of emotional faces in a cortically blind field. Brain. 2001;124(Pt 6):1241–1252. doi: 10.1093/brain/124.6.1241.

- Pasley B, Mayes L, Schultz R. Subcortical discrimination of unperceived objects during binocular rivalry. Neuron. 2004;42(1):163–172. doi: 10.1016/s0896-6273(04)00155-2.

- Pessoa L. On the relationship between emotion and cognition. Nat Rev Neurosci. 2008;9(2):148–158. doi: 10.1038/nrn2317.

- Pessoa L, Adolphs R. Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat Rev Neurosci. 2010;11(11):773–783. doi: 10.1038/nrn2920.

- Phillips ML, Drevets WC, Rauch SL, Lane R. Neurobiology of emotion perception II: implications for major psychiatric disorders. Biol Psychiatry. 2003;54(5):515–528. doi: 10.1016/s0006-3223(03)00171-9.

- Raftery A. Bayesian Model Selection in Social Research. In: Marsden P, editor. Sociological Methodology. Cambridge, MA: 1995. pp. 111–196.

- Romanski LM, Giguere M, Bates JF, Goldman-Rakic PS. Topographic organization of medial pulvinar connections with the prefrontal cortex in the rhesus monkey. J Comp Neurol. 1997;379(3):313–332.

- Rotshtein P, Vuilleumier P, Winston J, Driver J, Dolan R. Distinct and convergent visual processing of high and low spatial frequency information in faces. Cereb Cortex. 2007;17(11):2713–2724. doi: 10.1093/cercor/bhl180.

- Sasson NJ, Turner-Brown LM, Holtzclaw TN, Lam KSL, Bodfish JW. Children with autism demonstrate circumscribed attention during passive viewing of complex social and nonsocial picture arrays. Autism Res. 2008;1(1):31–42. doi: 10.1002/aur.4.

- Scherf KS, Behrmann M, Humphreys K, Luna B. Visual category-selectivity for faces, places and objects emerges along different developmental trajectories. Dev Sci. 2007;10(4):F15–F30. doi: 10.1111/j.1467-7687.2007.00595.x.

- Schultz RT. Developmental deficits in social perception in autism: the role of the amygdala and fusiform face area. Int J Dev Neurosci. 2005;23(2–3):125–141. doi: 10.1016/j.ijdevneu.2004.12.012.

- Smith SM, Miller KL, Salimi-Khorshidi G, Webster M, Beckmann CF, Nichols TE, Ramsey JD, Woolrich MW. Network modelling methods for FMRI. Neuroimage. 2011;54(2):875–891. doi: 10.1016/j.neuroimage.2010.08.063.

- Stephan KE, Penny WD, Daunizeau J, Moran RJ, Friston KJ. Bayesian model selection for group studies. Neuroimage. 2009;46(4):1004–1017. doi: 10.1016/j.neuroimage.2009.03.025.

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, Marcus DJ, Westerlund A, Casey BJ, Nelson C. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Res. 2009;168(3):242–249. doi: 10.1016/j.psychres.2008.05.006.

- Vuilleumier P, Pourtois G. Distributed and interactive brain mechanisms during emotion face perception: evidence from functional neuroimaging. Neuropsychologia. 2007;45(1):174–194. doi: 10.1016/j.neuropsychologia.2006.06.003.

- Vuilleumier P, Armony J, Driver J, Dolan R. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30(3):829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci. 2003;6(6):624–631. doi: 10.1038/nn1057.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat Neurosci. 2004;7(11):1271–1278. doi: 10.1038/nn1341.

- Wendt J, Weike AI, Lotze M, Hamm AO. The Functional Connectivity Between Amygdala and Extrastriate Visual Cortex Activity During Emotional Picture Processing Depends on Stimulus Novelty. Biol Psychol. 2010. doi: 10.1016/j.biopsycho.2010.11.009.

- Wolf JM, Tanaka JW, Klaiman C, Cockburn J, Herlihy L, Brown C, South M, McPartland J, Kaiser MD, Phillips R, Schultz RT. Specific impairment of face-processing abilities in children with autism spectrum disorder using the Let's Face It! skills battery. Autism Res. 2008;1(6):329–340. doi: 10.1002/aur.56.

- Woolrich MW, Ripley BD, Brady M, Smith SM. Temporal autocorrelation in univariate linear modeling of fMRI data. Neuroimage. 2001;14:1370–1386. doi: 10.1006/nimg.2001.0931.
