The owl captures prey using sound localization. In the classical model, the owl infers sound direction from the position in a brain map of auditory space with the largest activity. However, this model fails to describe the actual behavior. While owls accurately localize sources near the center of gaze, they systematically underestimate peripheral source directions. Here we demonstrate that this behavior is predicted by statistical inference, formulated as a Bayesian model that emphasizes central directions. We propose that there is a bias in the neural coding of auditory space, which, at the expense of inducing errors in the periphery, achieves high behavioral accuracy at the ethologically relevant range. We then show that the owl's map of auditory space decoded by a population vector is consistent with the behavioral model. Thus, a probabilistic model describes both how the map of auditory space supports behavior and why this representation is optimal.

Behavioral experiments have shown that owls are very accurate at localizing sounds near the center of gaze but systematically underestimate the direction of sources in the periphery of the horizontal plane1,2 (Fig. 1a). This underestimation is also observed in cats3, monkeys4, ferrets5, and humans6. The localization of sources in the horizontal direction depends on the timing of the signals received at the two ears, termed interaural time difference7 (ITD). Processing of ITD at multiple stages of the owl's auditory system8–10 ultimately leads to a representation of auditory space in the optic tectum (OT; homolog of mammalian superior colliculus) where stimulation induces head saccades11.

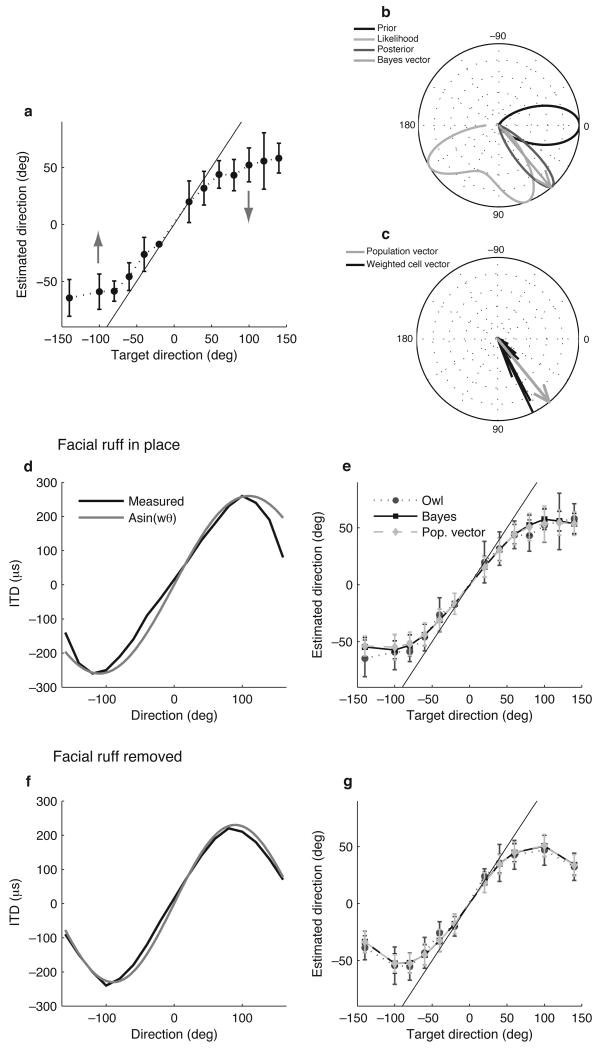

Figure 1.

Models of the owl's behavior. (a) Owl's behavior, modified from ref. 2. The solid gray line is the identity. (b) The Bayesian estimate is the direction of the vector found by averaging unit vectors in each direction weighted by the posterior density (medium gray). The posterior is proportional to the product of the likelihood (light gray) and the prior (black). All probability densities were normalized by their peak for display. The source direction is 70 degrees, at one of the peaks of the likelihood. (c) The population vector (gray) is the average of the preferred direction vectors of the neurons, weighted by the firing rates (black). (d) Measured relationship between direction and interaural time difference (ITD) (black) under normal conditions2, along with the sinusoidal approximation (gray). (e) Owl's behavior2 (medium gray circle, dotted line), Bayesian estimator (black square, solid line), and population vector (light gray diamond, dashed line) under the normal condition. Error bars represent the standard deviation over trials. (f) Measured relationship between direction and ITD (black) under ruff-removed conditions2, along with the sinusoidal approximation (gray). (g) Owl's behavior2, Bayesian estimator, and population vector under the ruff-removed condition.

The classical view of auditory-space coding in the owl is that sound source direction is represented in a place code12,13. In this framework, the direction of a sound source is determined by the position in a topographic map of auditory space with the greatest activity level. However, this model has not been directly compared to the owl's behavior. Thus, although considerable progress has been made in determining how ITD is encoded13, it remains unclear how ITD is decoded to support the owl's localization behavior.

The estimation of sound source direction from ITD is an inherently ambiguous problem because of the non-unique dependence of ITD on sound direction2. Bayesian inference has been used to show how combining prior and sensory information can explain biased perception of ambiguous sensory signals14,15. Here we consider the hypothesis that a Bayesian estimator, with a prior distribution that emphasizes directions near the center of gaze (Fig. 1b), can explain the owl's localization based on ITD. This provides an opportunity to address an open question in neuroscience: the degree to which perception and behavior are consistent with statistical inference.

Results

Bayesian model of behavior

Under the Bayesian model, the owl's estimate of source direction depends on two factors: the statistical dependence of ITD on direction, and a bias for particular directions. The direction-dependence of ITD is known to be approximately sinusoidal from direct measurements of the sound signals reaching the owl's tympanic membranes2. ITD is also subject to variability due to the type of sound signal, environmental conditions, and noise in the neural computation of ITD16–19. Therefore, we modeled ITD as a sinusoidal function of source direction that is corrupted by Gaussian noise (Fig. 1d; amplitude = 260 μs, angular frequency = 0.0143 rad/degree). Note that the maximal ITDs are not at positive and negative ninety degrees because the facial ruff of the owl causes a phase shift relative to the owl's head2. The conditional probability over ITD given a source direction defined by this model p(ITD|θ) is called the likelihood function. The owl's localization behavior shows a clear bias, corresponding to an underestimation of directions away from the center of gaze. We modeled this bias using a Gaussian-shaped probability density of sound-source directions that peaks at the center of gaze and decays for peripheral directions (Fig. 1b). This bias constitutes the prior for Bayesian inference p(θ). While we include the prior in the model to capture the behavior, we note that the actual distribution of target directions measured in behavioral studies of the interaction between barn owls and prey is consistent with a centrality prior20 (Fig. 2). The likelihood function and the prior are combined according to Bayes' rule to give the posterior density p(θ|ITD), which is the probability density over sound-source direction given a value of ITD (Fig. 1b). The Bayesian estimate of source direction θ given a value of ITD is taken to be the mean of θ under the posterior p(θ|ITD). 
This leads to a probabilistic model of the relationship between direction and ITD with two parameters: the width of the prior probability density and the variance of the noise corrupting the computed ITD.
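The estimator described above can be sketched numerically. The following is a minimal illustration, not the authors' code: it evaluates the unnormalized posterior on a grid of directions and returns the direction of the posterior-weighted mean unit vector. The amplitude, angular frequency, noise s.d. and prior s.d. are the values quoted in the text; the grid step, the function names and the use of a noise-free ITD in the demo loop are assumptions made for illustration.

```python
import math

# Values quoted in the text for the normal (ruff-intact) condition.
A_US = 260.0         # amplitude of the ITD sinusoid, microseconds
OMEGA = 0.0143       # angular frequency, radians per degree
SIGMA_ITD = 41.2     # s.d. of the noise corrupting ITD, microseconds
SIGMA_PRIOR = 23.3   # s.d. of the Gaussian-shaped prior, degrees


def itd_of(theta_deg):
    """Noise-free ITD (microseconds) for a source at theta_deg."""
    return A_US * math.sin(OMEGA * theta_deg)


def bayes_estimate(itd_us, sigma_itd=SIGMA_ITD, sigma_prior=SIGMA_PRIOR, step=0.5):
    """Direction (degrees) of the posterior-weighted mean unit vector."""
    x = y = 0.0
    for i in range(int(round(360.0 / step))):   # grid step is an assumption
        theta = -180.0 + i * step
        likelihood = math.exp(-0.5 * ((itd_us - itd_of(theta)) / sigma_itd) ** 2)
        prior = math.exp(-0.5 * (theta / sigma_prior) ** 2)
        weight = likelihood * prior              # unnormalized posterior
        x += weight * math.cos(math.radians(theta))
        y += weight * math.sin(math.radians(theta))
    return math.degrees(math.atan2(y, x))


if __name__ == "__main__":
    for source in (0, 20, 40, 70, 90):
        print(source, round(bayes_estimate(itd_of(source)), 1))
```

The normalizing constant of the posterior is never computed because it cancels in the direction of the mean vector. Reproducing the trial-averaged curves in Fig. 1e would additionally require adding Gaussian noise to the ITD on each trial.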

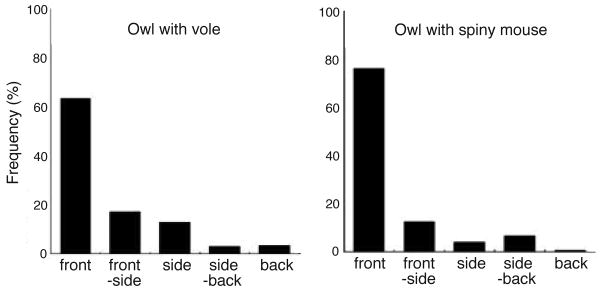

Figure 2.

Measured prior distribution of target direction. The relative frequency of different oppositions between an owl and two types of prey (vole on the left and spiny mouse on the right) during prey capture (modified from Fig. 3 in ref. 20). Front is the prey positioned at 0 deg relative to the owl's center of gaze, front-side corresponds to the regions centered at ± 45 deg, side corresponds to the regions centered at ± 90 deg, side-back corresponds to the regions centered at ± 135 deg, and back corresponds to the region centered at 180 deg.

With the two parameters fitted to the behavioral data, the Bayesian estimator is consistent with the owl's localization behavior (Fig. 1e; root-mean-square error (RMSE) between the average behavior and the average Bayesian estimate was 1.66 deg). In addition, the precision of the Bayesian estimator is comparable to the behavioral precision measured for owls1,2 (s.d. of direction estimates: 9.0 ± 0.5 deg for the model and 13.91 ± 5.26 deg for the owl2). Differences between the average Bayesian estimate and the behavior are primarily due to two features of the owl's behavior that are not matched by the model: the asymmetry in the maximal leftward and rightward head turns and the nonsmooth variation of the behavioral data with target direction.

We tested the Bayesian model using data from two independent experiments: one that alters the relationship between ITD and sound direction, and another that changes noise in the measured ITD. The removal of the facial ruff, the heart-shaped array of dense feathers that collects and shapes incoming sound, alters the relationship between direction and ITD (Fig. 1f). This manipulation produces a corresponding change in the owl's behavior where the owl no longer reaches a plateau of direction estimates for sources in the periphery2 (Fig. 1g). We simulated the ruff removal by increasing the frequency and decreasing the amplitude of the sinusoid that describes the measured mapping of direction to ITD (Fig. 1f and Supplementary Fig. 1; amplitude = 230 μs, angular frequency = 0.0175 rad/degree). The parameters were determined by fitting the sinusoid to the measured mapping of direction to ITD after ruff removal2. Using unchanged parameters for the widths of the likelihood and the prior, the Bayesian model predicted the owl's behavior under ruff-removed conditions (Fig. 1g; RMSE between the average behavior and the average Bayesian estimate was 0.44 deg).

We used a second, independent test of the Bayesian model, by examining direction estimates under varying interaural correlation, i.e. the degree of similarity of the sounds reaching the left and right ears. Changes in interaural correlation represent a means to increase the variability in ITD. In particular, as the interaural correlation decreases, the ITD determined by the peak in a cross-correlation of the left and right input signals21,22 is influenced more by the independent noise at the left and right ears than by the coherent source signal21. Behaviorally, owls show a greater underestimation of sound source direction when interaural correlation decreases21.

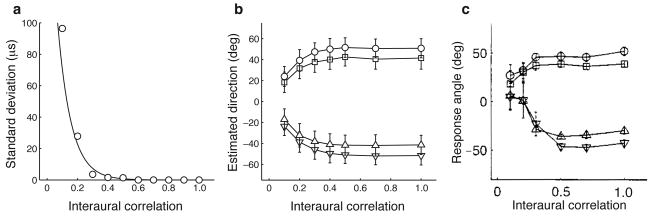

We used a cross-correlation model to determine how interaural correlation affects the variability of the measured ITD21,22. For a fixed ITD of the input signals, we observed an exponential decrease in the variability of ITD as interaural correlation increases, where the standard deviation of ITD reaches a minimum for interaural correlation values greater than 0.5 (Fig. 3a). We tested the Bayesian model using values of the standard deviation of the noise corrupting ITD that followed this exponential relationship, with a minimum value of 41.2 μs set by fitting the parameters of the Bayesian model to the behavioral data, as described above. Using this type of noise, the model predicted direction estimates that became increasingly biased toward zero as interaural correlation decreased (Fig. 3b), consistent with the owl's behavior (Fig. 3c). The model predictions show a qualitative match with observations of the owl's sound-localizing behavior when interaural correlation is manipulated21. In particular, direction estimates of the model and the owl decrease toward zero for interaural correlation values less than 0.5 (Fig. 3b,c). Given the asymmetry in the owl's estimates for directions on the left and right sides (Fig. 3c), the model captures the central feature of the owl's behavior, which is an increased bias toward zero as interaural correlation decreases.

Figure 3.

Predicted behavior under varying levels of interaural correlation. (a) Variability of ITD with interaural correlation. ITD was estimated from the peak of the cross-correlation of the left and right input signals. (b) Direction estimates from the Bayesian model using levels of the standard deviation of the noise corrupting ITD that follow the exponential relationship shown in (a) with a minimum value of 41.2 μs, estimated from the behavioral data (s.d. = 219.34 exp(−11.31×IC)+41.2, where IC is the interaural correlation). Symbols correspond to four different source directions (± 55, ± 75 degrees). Error bars represent the standard deviation over trials. (c) The predicted trend is similar to observations in behaving owls (modified from Fig. 1 in ref. 21).
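The exponential fit quoted in the caption can be written out directly. The sketch below evaluates that fit and, as an added illustration that is not part of the paper, a small-angle approximation of how strongly the posterior mean is pulled toward zero: near the center of gaze ITD ≈ Aωθ, so the model is approximately linear-Gaussian and the estimate is scaled by σ_p² / (σ_p² + (σ/(Aω))²). The function names are hypothetical, and the approximation is not valid for the peripheral directions (± 55, ± 75 degrees) shown in the figure.

```python
import math

A_US = 260.0         # ITD amplitude, microseconds (from the text)
OMEGA = 0.0143       # angular frequency, radians per degree (from the text)
SIGMA_PRIOR = 23.3   # prior s.d., degrees (from the text)


def itd_noise_sd(ic):
    """S.d. of the ITD noise (microseconds) at interaural correlation ic (Fig. 3 fit)."""
    return 219.34 * math.exp(-11.31 * ic) + 41.2


def central_shrinkage(ic):
    """Small-angle approximation (an assumption) of estimate / true direction."""
    sigma_theta = itd_noise_sd(ic) / (A_US * OMEGA)   # noise s.d. expressed in degrees
    return SIGMA_PRIOR ** 2 / (SIGMA_PRIOR ** 2 + sigma_theta ** 2)


if __name__ == "__main__":
    for ic in (0.0, 0.1, 0.25, 0.5, 1.0):
        print(ic, round(itd_noise_sd(ic), 1), round(central_shrinkage(ic), 3))
```

The noise s.d. is nearly flat above an interaural correlation of 0.5 and rises steeply below it, which is why the bias toward zero appears mainly at low correlation.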

Assessment of Bayesian model parameters

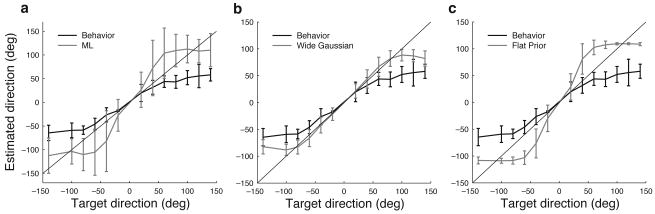

The presence of a prior distribution that emphasizes central directions is necessary to explain the owl's localization behavior. The sinusoidal direction-dependence of ITD produces a likelihood function that has multiple equivalent peaks for any given ITD (Fig. 1b). Therefore, estimates of a given source direction computed from the likelihood function alone would be expected to fall into multiple distinct regions, rather than into a single cluster as is seen in the owl's behavior1,2. As a consequence, a maximum likelihood estimator fails to capture the bias in the owl's localization behavior (Fig. 4a). The prior distribution must be incorporated into the estimation process to induce the owl to consistently localize directions near the more central of the two possible directions.

Figure 4.

Performance of alternative estimators. (a) Owl's behavior2 (bold black) and maximum likelihood (ML) estimate (gray). The thin black line is the identity. Error bars represent the standard deviation over trials. (b) Owl's behavior2 (bold black) and Bayesian estimate using the mean of the posterior distribution when using a Gaussian-shaped prior that is wider than the optimal value (gray). (c) Owl's behavior2 (bold black) and Bayesian estimate using the mean of the posterior distribution when using a flat prior (gray).

The standard deviation of the prior in the model was 23.3 degrees. This causes the estimator to favor directions near the center of gaze, but is wide enough to allow for detection of sources in the periphery. Support for this shape of the prior is given by the observation that a Bayesian estimator using a wider or flat prior fails to capture the bias in the owl's localization behavior (Fig. 4b,c).

The likelihood function used in the Bayesian model is consistent with the natural variation of ITD. The standard deviation of the noise corrupting ITD was 41.2 μs. To assess the plausibility of this value, we used barn owl head-related transfer functions19 to determine the natural variability in ITD of the signals received at the ears. For horizontal directions ranging over the frontal hemisphere, we measured the variability of ITD computed for a bank of natural sounds at different elevations23. Across horizontal directions, the median standard deviation of ITD was 24.4 μs (19 directions, interquartile range 6.7 μs). The standard deviation of ITD did not vary with the magnitude of the horizontal direction (r² = 0.02, P = 0.68). While lower than the standard deviation of the noise corrupting ITD in the model, this value does not take into account variability due to environmental conditions16 or to the neural computation of ITD17,18.

Neural decoding

We then asked how the Bayesian estimate of source direction could be implemented in the neural circuitry of the owl's auditory system. Neural decoding of directional variables has often been investigated using the population vector24,25. The population vector is obtained by averaging the preferred direction vectors of neurons in the population, weighted by the firing rates of the neurons (Fig. 1c). It can be shown that if (i) the neural tuning curves are proportional to the likelihood function, (ii) the preferred directions are distributed according to the prior, and (iii) the neural population is large enough, then the population vector will be consistent with the Bayesian estimate26 (Supplementary Discussion).
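The argument behind these three conditions can be summarized in one line. The following is a sketch of the reasoning under the stated conditions, using mean firing rates (the trial-to-trial noise averages out in a large population); the symbols PV, a_n, u, c and N are notation chosen here for illustration.

```latex
\begin{align}
\mathrm{PV}(\mathrm{ITD})
  &= \frac{1}{N}\sum_{n=1}^{N} a_n(\mathrm{ITD})\, u(\theta_n),
  \qquad \theta_n \sim p(\theta), \quad a_n(\mathrm{ITD}) = c\, p(\mathrm{ITD}\mid\theta_n) \\
  &\xrightarrow[N \to \infty]{} c \int u(\theta)\, p(\mathrm{ITD}\mid\theta)\, p(\theta)\, d\theta
  \;\propto\; \int u(\theta)\, p(\theta\mid\mathrm{ITD})\, d\theta .
\end{align}
```

The first step is the law of large numbers applied to preferred directions sampled from the prior; the second is Bayes' rule. Because only the direction of the vector matters, the constant c and the normalization p(ITD) do not affect the estimate.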

To test the consistency of a population vector decoder with a Bayesian estimator, we constructed a model network using 500 neurons with preferred directions drawn from the prior distribution and tuning curves that are proportional to the likelihood function. Example tuning curves from the model network are shown in Figure 6a. We computed the population vector estimate of direction under both the normal condition and the ruff-removed condition2. In each simulation, the firing rates of neurons were drawn independently from Poisson distributions with mean values given by the values of the tuning curves.
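A population-vector decoder of this kind can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' simulation code: preferred directions are drawn from the Gaussian-shaped prior, tuning curves are Gaussian in ITD with the same width as the likelihood, and spike counts are Poisson. The parameter values come from the text; the number of trials, the seed, the helper names and the hand-written Poisson sampler are assumptions.

```python
import math
import random

A_US, OMEGA = 260.0, 0.0143          # ITD sinusoid, normal condition (from the text)
SIGMA_ITD, SIGMA_PRIOR = 41.2, 23.3  # likelihood and prior widths (from the text)
MAX_RATE = 10.0                      # peak firing rate (from the text)


def poisson(lam, rng):
    """Knuth's multiplication method; adequate for the small means used here."""
    limit, k, p = math.exp(-lam), 0, rng.random()
    while p > limit:
        k += 1
        p *= rng.random()
    return k


def population_vector(theta_deg, n_neurons=500, n_trials=100, seed=0):
    """Mean population-vector estimate (degrees) over noisy trials."""
    rng = random.Random(seed)
    preferred = [rng.gauss(0.0, SIGMA_PRIOR) for _ in range(n_neurons)]
    preferred_itd = [A_US * math.sin(OMEGA * p) for p in preferred]
    estimates = []
    for _ in range(n_trials):
        # Noisy ITD for this trial, then Poisson counts around each tuning curve.
        itd = A_US * math.sin(OMEGA * theta_deg) + rng.gauss(0.0, SIGMA_ITD)
        x = y = 0.0
        for p, mu in zip(preferred, preferred_itd):
            mean_rate = MAX_RATE * math.exp(-0.5 * ((itd - mu) / SIGMA_ITD) ** 2)
            count = poisson(mean_rate, rng)
            x += count * math.cos(math.radians(p))
            y += count * math.sin(math.radians(p))
        estimates.append(math.degrees(math.atan2(y, x)))
    return sum(estimates) / len(estimates)


if __name__ == "__main__":
    for source in (0.0, 30.0, 70.0):
        print(source, round(population_vector(source), 1))
```

No explicit prior appears in the decoding step: the bias toward the center of gaze comes entirely from the higher density of neurons with central preferred directions.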

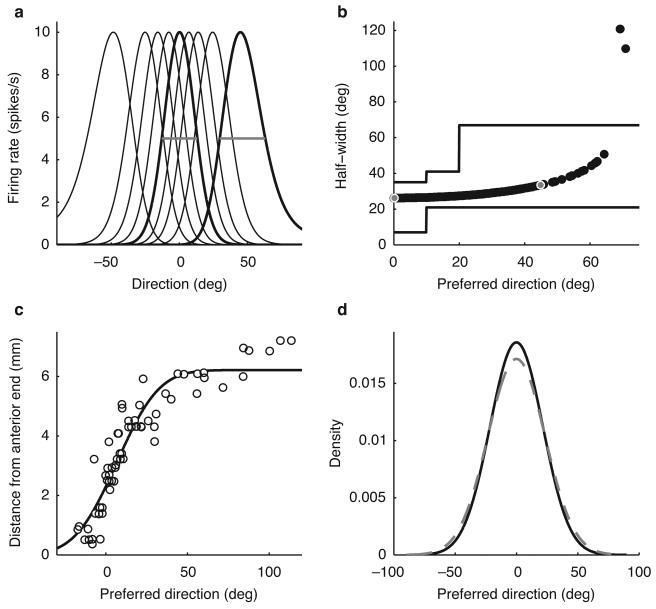

Figure 6.

Predicted midbrain representation of auditory space. (a) Example tuning curves in the model optic tectum (OT) population. (b) Plot of model tuning curve half-widths (black circles) along with experimental data measured in the OT28 (solid lines, showing plus/minus 1 standard deviation, as reported in ref. 28). Gray and white circles correspond to the tuning curves highlighted in (a). The two outlier points correspond to receptive fields in the periphery that wrap around the owl's head, and which are indeed observed in the owl's OT data as well28. (c) Measured values of space map positions of OT neurons (modified from ref. 28) together with the fit by a scaled cumulative Gaussian distribution function (solid line). (d) The model prior density of preferred direction (dashed gray) and the measured bilateral density (solid black) found by combining the unilateral densities derived from the cumulative Gaussian in (c).

The population vector estimate using the model network shown in Figure 6a matched both the owl's behavior and the Bayesian estimate in both conditions (Fig. 1e,g), with an error of less than 2 degrees. The RMSE between the average behavior and the average population vector estimate was 1.44 deg in the normal condition and 0.39 deg in the ruff removal condition. The RMSE between the average estimates of the Bayesian model and the population vector was 0.22 deg in the normal condition and 0.05 deg in the ruff removal condition.

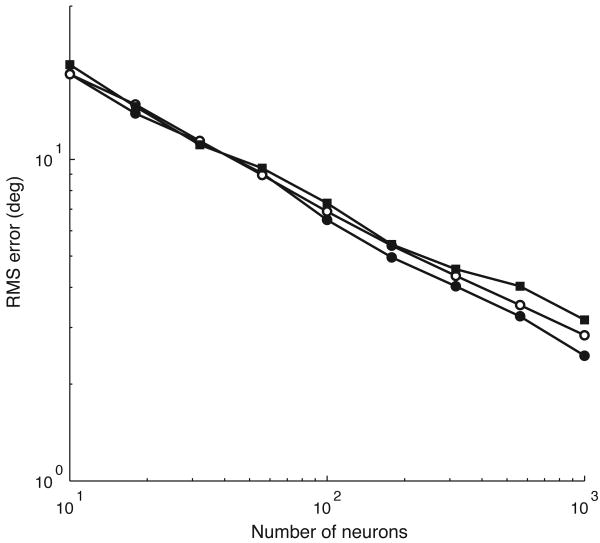

We examined the accuracy of the population vector in approximating a Bayesian estimate as a function of population size and correlation of firing-rate variability. In these simulations, firing rates of the neurons were drawn from a multivariate Gaussian distribution with mean given by the firing-rate values of the tuning curves and correlation matrix with entries that depend on the product of the tuning curve values between neurons. The Gaussian model with multiplicative noise produces neural responses where the variance increases with the mean firing rate, as in the Poisson case. The Gaussian model was used for computational convenience. In the behaviorally relevant range, the RMSE in the approximation of the Bayesian estimate by the population vector decreased as $1/\sqrt{N}$, where N is the number of neurons, for each value of the correlation coefficient (Fig. 5). Given the large number of neurons in the output layers of the OT, the population vector decoder of OT responses should approximate the Bayesian estimate with an error of less than two degrees. The robustness of this approximation indicates that the structure of the neural noise will not determine the form of the population code that produces the optimal representation of auditory space.

Figure 5.

Population vector approximation to Bayesian estimator. The root-mean-square (RMS) difference in direction estimates between the population vector and the Bayesian estimator for different correlation coefficients in the noise between neurons (black circles 0.25, white circles 0.5, black squares 0.75).

Predicting the neural representation

We used the neural population model to predict the representation of auditory space in the owl's OT. The model consists of a population of neurons with preferred directions covering the frontal hemisphere, but with greatest density near the center of gaze (Fig. 6a). Note that the population vector estimates shown in Figure 1 were computed from the same population. As a function of stimulus direction, the tuning curves were approximately Gaussian-shaped with widths that increase with eccentricity (Fig. 6a,b), in accordance with the Gaussian likelihood function that describes the statistical dependence of ITD on sound-source direction.

The nonuniform population predicted by the model is consistent with the representation of auditory space in the owl's OT. First, tuning curves of midbrain neurons can be described using Gaussian functions27. Second, the widths of the tuning curves in the model increase with eccentricity and fall directly in the bounds measured for space-specific neurons in OT28 (Fig. 6b). The increase in width with eccentricity is due to the sinusoidal function of direction that appears in the likelihood function. This occurs because the sine function describing the mapping from direction to ITD changes faster for directions near the center of gaze than for directions near the side of the head. Therefore, for preferred directions near the center of gaze, a small range of source directions will produce ITDs that are near the preferred ITD of the neuron. In contrast, for preferred directions near the side of the head, a larger range of directions will produce ITDs that are near the preferred ITD of the neuron, thus leading to wider tuning curves for neurons with peripheral preferred directions. Finally, the distribution of preferred directions in the model is consistent with the experimental distribution; that is, with the auditory space map in the OT. Assuming that cell density is homogeneous in OT, the physical distance between points corresponding to different preferred directions in OT's auditory space map will be proportional to the number of cells that lie between those directions. Thus, the shape of the auditory-space map on each side should be described by a curve that is proportional to the cumulative distribution function of the unilateral density of preferred directions. To determine the unilateral distribution, we fit the relationship between preferred direction and position in the OT space map measured in ref. 28 with a curve that is proportional to a cumulative Gaussian-distribution function (Fig. 6c; mean 6.8, s.d. 20.3 degrees). 
The bilateral density of preferred direction was obtained by linearly combining two Gaussians with means given by the estimated unilateral value and its negative. The density of preferred directions predicted by the Bayesian model is consistent with the measured density in OT, where locations representing central directions cover a greater area than locations representing peripheral directions (Fig. 6d; measured density: mean 0, s.d. 20.3 deg; Bayesian model density: mean 0, s.d. 23.3 deg.). Therefore, the shapes of the tuning curves in OT are consistent with the likelihood function and the shape of the auditory space map is consistent with the prior distribution.
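The dependence of tuning width on eccentricity can be checked numerically. The sketch below assumes that "half-width" means half of the full width at half-maximum of the direction tuning curve, which may differ from the definition used for the experimental data in ref. 28; the function names and the search step are also assumptions. The tuning curve is the Gaussian in ITD described in the text, evaluated through the sinusoidal mapping from direction to ITD.

```python
import math

A_US, OMEGA, SIGMA_ITD = 260.0, 0.0143, 41.2   # values quoted in the text


def itd_of(theta_deg):
    """Noise-free ITD (microseconds) for a source at theta_deg."""
    return A_US * math.sin(OMEGA * theta_deg)


def relative_rate(theta_deg, preferred_deg):
    """Tuning curve normalized to 1 at the preferred direction."""
    delta = itd_of(theta_deg) - itd_of(preferred_deg)
    return math.exp(-0.5 * (delta / SIGMA_ITD) ** 2)


def half_width_deg(preferred_deg, step=0.1):
    """Half of the full width at half-maximum, found by stepping outward."""
    upper = preferred_deg
    while upper < 180.0 and relative_rate(upper, preferred_deg) >= 0.5:
        upper += step
    lower = preferred_deg
    while lower > -180.0 and relative_rate(lower, preferred_deg) >= 0.5:
        lower -= step
    return 0.5 * (upper - lower)


if __name__ == "__main__":
    for preferred in (0, 15, 30, 45, 60):
        print(preferred, round(half_width_deg(preferred), 1))
```

Although the width parameter in ITD is identical for every neuron, the widths in direction grow with eccentricity because the slope of the sinusoid flattens toward the side of the head.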

Discussion

We have described a principle that explains both the sound-localizing behavior and the neural representation of auditory space in the owl. This principle is the implementation of approximate Bayesian inference using a population vector. The idea that source direction is decoded from the auditory space map using a population vector revises the classical place-coding model of sound localization in barn owls12,13. To decode source direction, the population vector utilizes the entire OT population and takes into account the overrepresentation of frontal space. Thus, the population-vector estimate is biased toward the center of gaze by the uneven distribution of preferred directions (Fig. 1c). This model provides an explanation for the owl's underestimation of source direction, which is a common perceptual bias in sound localization across species3–6.

The model presented here provides a theoretical explanation for the representation of auditory space in the owl that is more complete than previous theories. Previously, principles of optimal coding used to explain the neural representation of ITD have not addressed the issue of behavior29. Here, we used optimal Bayesian inference and naturalistic constraints to analyze the function of the localization pathway in the owl. Having localization behavior that is consistent with the Bayesian estimator ensures that the owl will strike sources near the center of gaze more accurately, at the expense of underestimating the direction of sound sources in the periphery. Note that, even though the prior emphasizes central directions, sounds arising from behind the owl will lead to head turns toward the sound source and allow for localization after multiple head turns (Fig. 1). Given that owls strongly rely on capturing animals in the dark and the inherent uncertainty in sound localization cues, maximizing the ability to localize within the sound-localizing ‘fovea’ may be the key for survival.

In our analysis of the owl's behavior, we find that a prior that emphasizes central directions is necessary to explain the observed bias in sound localization. The shape of the prior in the model comes directly from fitting the model to the behavioral data. The need for the prior is due to the ambiguous direction-dependence of ITD2. The presence of a bias in localization is not restricted to the case of the owl; the dependence of sound localization on a prior that emphasizes central directions is consistent with the biases seen in sound localization across species3–6. In fact, multiple studies of human lateralization of tones, narrowband sounds, and broadband sounds show that a centrality weighting function is necessary to predict human behavior30. Whether, and how, a prior is used in the sound localization behavior of other species remain open questions.

Behavioral experiments show that when the owl is engaged in capturing prey, the actual distribution of target directions is consistent with the centrality prior in the Bayesian model20 (Fig. 2). This behavior alternates between chase and periods when the owl and prey are immobile. The majority of the time when the owl and its prey are immobile, before a sound produced by the prey initiates chase, occurs with the owl facing its prey. The owl likely integrates information during the chase and utilizes multiple head turns to accurately track prey, and thereby ends up facing the prey more often than not. This indicates that sounds from a target source that the owl is engaged in capturing may, in natural conditions, occur with greatest frequency from directions in front of the owl. Thus, considering sources of interest that the owl engages with31, sound directions can show a distribution consistent with the prior20. Certainly, the initial location of arbitrary sound sources should be uniformly distributed in space. Even so, maximizing the ability to localize within the sound-localizing ‘fovea’ may represent an efficient strategy, across trials.

The model presented here describes the owl's localization behavior in the horizontal dimension using ITD as the sound localization cue. A complete account of the owl's sound localization behavior will require additional sound localization cues, integration of auditory and visual information, and temporal integration of sensory signals. These computations can naturally be incorporated into a Bayesian framework. Although the general solution will require additional components, this model describes how the owl solves the ethologically relevant problem of determining the horizontal direction of a sound source13.

Bayesian approaches to modeling perception and behavior have a powerful theoretical basis and may serve as a unifying principle across species and modalities. A significant open question has been how probabilistic information, in particular a prior distribution, can be represented in a neural system32. It has been suggested that a prior can be implemented in the distribution of neural tuning curves26,32,33. In particular, maximizing the mutual information between a stimulus and a population response leads to a population code where the prior is encoded in the density of preferred stimuli and the widths of the tuning curves33. In addition, a population of Poisson neurons with divisive normalization can implement Bayesian inference if the tuning curves are proportional to the likelihood and the preferred stimuli are drawn from the prior density26. The analysis here uses a similar argument to show that a population vector can implement an approximate Bayesian inference. Our analysis also predicts that the prior is encoded in the density of preferred stimuli and the shapes of the tuning curves are determined solely by the likelihood function. The population vector implementation of Bayesian inference does not require a particular distribution of neural noise. The prediction of both the distribution of preferred stimuli and the shapes of the tuning curves were supported by experimental data (Fig. 6). This analysis provides a reinterpretation of a neural decoder that is generally viewed as suboptimal for heterogeneous populations34,35. We note that a Bayesian estimate from the posterior distribution conditioned on the neural responses does not match the owl's behavior unless the distribution of model preferred directions is wider than the measured distribution in OT and the model tuning curves are much sharper than the measured tuning curves (Supplementary Fig. 2). 
The conditions for optimality of the population vector, though not trivial, are likely to occur in other cases, as non-uniform representations of stimulus parameters are common26,33,36. For example, the oblique effect in visual perception has been described as Bayesian inference with a prior emphasizing cardinal axes37 and distributions of preferred locations are non-uniform, with a higher density of cells on the horizontal and vertical axes26,33,38. This provides a solution to what has been an open issue in applying Bayesian models across multiple levels of analysis from behavior to neural implementation.

Methods

Behavior

Behavioral data were provided by ref. 2. The mean and standard deviation of direction estimates are taken from the combined results for three owls.

ITD model

ITD was modeled as a sinusoidal function of source direction, corrupted by Gaussian noise. The amplitude and frequency of the sinusoid A sin(ωθ) were fit to the mapping between azimuth and the ITD in the signals received near the tympanic membranes measured by ref. 2. The period corresponds to the interpeak distance and the amplitude is the average magnitude of the positive and negative extrema. The fit was performed for the mapping measured for an owl in the normal condition and for the owl after the facial ruff feathers were removed2.
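The fitted sinusoid determines where the ITD is maximal, which is why the maxima under normal conditions do not fall at ± 90 degrees. The sketch below uses the amplitudes and angular frequencies quoted in the Results for the two conditions; the function names and the dictionary layout are hypothetical.

```python
import math

# (amplitude in microseconds, angular frequency in radians per degree), from the text.
CONDITIONS = {
    "normal": (260.0, 0.0143),
    "ruff_removed": (230.0, 0.0175),
}


def itd_us(theta_deg, amplitude_us, omega):
    """Noise-free ITD predicted by the sinusoidal model A*sin(omega*theta)."""
    return amplitude_us * math.sin(omega * theta_deg)


def peak_direction_deg(omega):
    """Direction at which A*sin(omega*theta) first reaches its maximum."""
    return math.pi / (2.0 * omega)


if __name__ == "__main__":
    for name, (amplitude, omega) in CONDITIONS.items():
        print(name,
              round(peak_direction_deg(omega), 1),
              round(itd_us(90.0, amplitude, omega), 1))
```

With these values the maximal ITD lies near 110 degrees in the normal condition and near 90 degrees after ruff removal.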

Bayesian estimate of direction

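The Bayesian estimate of stimulus direction θ from ITD is given by the mean of θ under the posterior distribution p(θ|ITD). The mean direction is found by first computing the vector that points in the mean direction as $\bar{v}(\mathrm{ITD}) = \int u(\theta)\, p(\theta|\mathrm{ITD})\, d\theta \propto \int u(\theta)\, p(\mathrm{ITD}|\theta)\, p(\theta)\, d\theta$, where $u(\theta) = (\cos\theta, \sin\theta)$ is a unit vector pointing in direction θ and the proportionality follows from Bayes' rule:

$p(\theta|\mathrm{ITD}) = p(\mathrm{ITD}|\theta)\, p(\theta) / p(\mathrm{ITD})$

The direction estimate is computed from the mean vector using the inverse tangent function as

$\hat{\theta}(\mathrm{ITD}) = \tan^{-1}\left(\bar{v}_y(\mathrm{ITD}) / \bar{v}_x(\mathrm{ITD})\right)$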

Alternative models of behavior: flat prior and maximum likelihood

To test the performance of a Bayesian estimator with a flat prior, we used a prior distribution that was constant over the circle.

The maximum likelihood estimate is the direction that maximizes the likelihood function p(ITD|θ).
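Both alternative estimators can be illustrated with a short sketch. It assumes the normal-condition parameters quoted in the Results, a 0.5-degree grid over the circle and a noise-free ITD; the function names and the 0.99 threshold for "equivalent" peaks are hypothetical choices. The first function lists the directions at which the likelihood is (near) maximal, and the second returns the posterior-mean direction under a prior that is constant over the circle.

```python
import math

A_US, OMEGA, SIGMA_ITD = 260.0, 0.0143, 41.2     # values quoted in the text
GRID = [-180.0 + 0.5 * i for i in range(720)]    # grid step is an assumption


def itd_of(theta_deg):
    return A_US * math.sin(OMEGA * theta_deg)


def likelihood(itd_us, theta_deg):
    """Gaussian likelihood p(ITD | theta), up to a constant factor."""
    return math.exp(-0.5 * ((itd_us - itd_of(theta_deg)) / SIGMA_ITD) ** 2)


def ml_peaks(itd_us):
    """Local maxima of the likelihood that are within 1% of the global maximum."""
    values = [likelihood(itd_us, t) for t in GRID]
    best = max(values)
    peaks = []
    for i in range(1, len(GRID) - 1):
        is_local_max = values[i] > values[i - 1] and values[i] >= values[i + 1]
        if is_local_max and values[i] >= 0.99 * best:
            peaks.append(GRID[i])
    return peaks


def flat_prior_estimate(itd_us):
    """Direction of the likelihood-weighted mean unit vector (flat prior)."""
    x = sum(likelihood(itd_us, t) * math.cos(math.radians(t)) for t in GRID)
    y = sum(likelihood(itd_us, t) * math.sin(math.radians(t)) for t in GRID)
    return math.degrees(math.atan2(y, x))


if __name__ == "__main__":
    itd = itd_of(70.0)
    print(ml_peaks(itd), round(flat_prior_estimate(itd), 1))
```

For a source at 70 degrees the likelihood has a second, equally high peak on the far side of the sinusoid's maximum, so neither estimator is pulled toward the center of gaze.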

Natural variability of ITD

We measured the variability in the ITD of the signals received at the owl's ears due to signal type and elevation using head-related transfer functions19 (HRTF). The HRTF is the transfer function that describes the mapping from a sound signal at a direction in space to the sound signal measured near the tympanic membrane. For each horizontal direction, we computed the ITD in the signals obtained by filtering a source signal with HRTFs at the horizontal direction and over the range of elevations covering the frontal hemisphere in five degree steps, measured in double polar coordinates. Source signals were taken from a set of 200 natural sound segments obtained by randomly selecting 20 segments of 100 ms each from 10 natural sound signals23. ITD was computed from the peak in the cross-correlation of the left and right signals, with the range of possible ITDs limited to ±260 μs.

We used the cross-correlation model of ref. 21, where input signals are first filtered with a bank of gammatone bandpass filters, then cross-correlation is performed in each frequency channel, and finally the resulting cross-correlation outputs from each frequency channel are linearly combined using a frequency-dependent weighting that matches the owl's sensitivity to frequency.

Variability of ITD and interaural correlation

We used the cross-correlation model to determine how variability in ITD depends on interaural correlation. Interaural correlation was varied by adding independent noise to the left and right ear input signals. The noise and input signals were Gaussian signals with a flat spectrum up to 12 kHz (ref. 21). Here, we calculate the variability in ITD due solely to interaural correlation; in the model, this relationship does not depend on sound direction. Therefore, the ITD of the input signals was set to 0 μs. Interaural correlation was given by $1/(1+k^2)$, where k is the ratio of the root-mean-square amplitudes of the noise and target sound signals (ref. 21).
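
The relation between the noise ratio k and interaural correlation, 1/(1+k²), can be checked numerically. The Python sketch below adds independent Gaussian noise scaled by k to a common Gaussian target at each ear and compares the measured correlation with the formula. It uses white noise without the 12 kHz band limit; the sample count, seed, and function names are assumptions for illustration.

```python
import math
import random

def interaural_correlation(k):
    """Predicted correlation when independent noise with RMS ratio k is added at each ear."""
    return 1.0 / (1.0 + k ** 2)

def pearson(x, y):
    """Sample correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

rng = random.Random(1)
k = 0.5                      # noise RMS relative to target RMS
n = 20000
target = [rng.gauss(0.0, 1.0) for _ in range(n)]
left = [s + k * rng.gauss(0.0, 1.0) for s in target]    # independent noise per ear
right = [s + k * rng.gauss(0.0, 1.0) for s in target]
measured = pearson(left, right)
print(interaural_correlation(k), round(measured, 3))
```

The formula follows because the shared target contributes variance 1 to the covariance, while each ear's total variance is 1 + k².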

Optic tectum model

The model consisted of a population of N direction-selective neurons. The preferred directions were drawn independently from the Gaussian-shaped prior distribution on direction. The prior density is given by $p(\theta) = \frac{1}{Z}\exp\left(-\theta^2 / 2\sigma_p^2\right)$, where $\sigma_p$ is the width of the prior and the constant Z normalizes the density over the circle.

The neural tuning curves, as a function of ITD, are proportional to the likelihood function and are given by $a_n(\mathrm{ITD}) = a_{\max}\exp\left(-(\mathrm{ITD}-\mu_n)^2 / 2\sigma^2\right)$, where $a_{\max}$ is the maximum firing rate, $\mu_n = A\sin(\omega\theta_n)$, and $\theta_n$ is the preferred direction. Responses to a given stimulus direction were simulated by first generating an ITD value according to $\mathrm{ITD} = A\sin(\omega\theta)+\eta$, where η is drawn from a zero-mean Gaussian distribution with variance $\sigma^2$. The tuning curves as a function of direction are found by inserting the sinusoidal mapping from direction to ITD into the above equation and are given by $a_n(\mathrm{ITD}(\theta)) = a_{\max}\exp\left(-(A\sin(\omega\theta) - A\sin(\omega\theta_n))^2 / 2\sigma^2\right)$. Note that the width parameter σ is the same for all neurons. The maximum firing rate was set to 10 spikes/s. During simulations, the neurons have independent Poisson distributed firing rates with mean values given by the neural tuning curves $a_n(\mathrm{ITD}(\theta))$.
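
A single simulated trial of this population model might look like the following Python sketch. It assumes a Gaussian-shaped prior centered on 0 degrees (sampled by rejection so that preferred directions stay on the circle) and Gaussian tuning in ITD with a common width, and it treats rates as spike counts in a 1 s window. The maximum rate of 10 spikes/s is from the text; every other parameter value, the population size, and the function names are assumptions for illustration.

```python
import math
import random

# Illustrative values only (assumptions), except MAX_RATE from the text.
A, OMEGA = 260.0, 0.0143   # ITD = A sin(OMEGA * theta); microseconds, radians/degree
SIGMA_ITD = 40.0           # tuning width and ITD noise s.d. (microseconds)
SIGMA_PRIOR = 25.0         # width of the Gaussian-shaped prior (degrees)
MAX_RATE = 10.0            # maximum firing rate (spikes/s)
N = 500                    # number of model neurons

rng = random.Random(2)

def sample_preferred_direction():
    """Draw from the Gaussian-shaped prior, rejecting draws outside the circle."""
    while True:
        theta = rng.gauss(0.0, SIGMA_PRIOR)
        if -180.0 <= theta < 180.0:
            return theta

def tuning(itd, theta_n):
    """Mean rate a_n(ITD): Gaussian in ITD centered on mu_n = A sin(OMEGA * theta_n)."""
    mu_n = A * math.sin(OMEGA * theta_n)
    return MAX_RATE * math.exp(-((itd - mu_n) ** 2) / (2.0 * SIGMA_ITD ** 2))

def poisson(mean):
    """Knuth's multiplication method; adequate for the small means used here."""
    limit, k, p = math.exp(-mean), 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1

preferred = [sample_preferred_direction() for _ in range(N)]

def respond(theta):
    """One trial: noisy ITD for a source at theta, then independent Poisson counts."""
    itd = A * math.sin(OMEGA * theta) + rng.gauss(0.0, SIGMA_ITD)
    return [poisson(tuning(itd, th)) for th in preferred]

rates = respond(30.0)
print(sum(rates) / N)
```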

To determine the density of preferred directions in OT, we extracted the measured pairs of preferred direction and auditory space map position from Figure 13A in ref. 28 using the MATLAB function grabit.m (MATLAB Central, MathWorks).

Population vector

The population vector is computed as a linear combination of the preferred direction vectors of the neurons, weighted by the firing rates:

$$\mathbf{PV}(\mathrm{ITD}) = \frac{1}{N}\sum_{n=1}^{N} r_n(\mathrm{ITD})\, u(\theta_n),$$

where $u(\theta_n)$ is a unit vector pointing in the nth neuron's preferred direction and $r_n(\mathrm{ITD})$ is the firing rate of the nth neuron, drawn from either a Poisson or a Gaussian distribution (see Optic tectum model and Modeling correlated variability). The direction estimate is found by computing the direction of the population vector using the inverse tangent, as described above for the Bayesian estimate.
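
A minimal Python sketch of this decoding step is given below. It averages the preferred-direction unit vectors weighted by the firing rates and returns the direction of the result. The function name, the use of `atan2` to resolve the quadrant, and the toy rates are assumptions for illustration.

```python
import math

def population_vector_direction(rates, preferred_deg):
    """Direction (degrees) of the rate-weighted average of preferred-direction unit vectors."""
    n = len(rates)
    x = sum(r * math.cos(math.radians(t)) for r, t in zip(rates, preferred_deg)) / n
    y = sum(r * math.sin(math.radians(t)) for r, t in zip(rates, preferred_deg)) / n
    # Dividing by n does not change the direction; it only scales the vector.
    return math.degrees(math.atan2(y, x))

preferred = [-40.0, -20.0, 0.0, 20.0, 40.0]   # toy preferred directions (degrees)
rates = [0.0, 1.0, 4.0, 9.0, 4.0]             # toy firing rates (spikes/s)
direction = population_vector_direction(rates, preferred)
print(round(direction, 1))
```

Because the toy rates peak at the neuron preferring 20 degrees but are skewed toward the center, the decoded direction lies between 0 and 20 degrees.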

Modeling correlated variability

To test the effect of correlated firing rate variability on the approximation of the Bayesian estimator by the population vector, simulations were performed in which the neurons have jointly Gaussian distributed firing rates with mean values given by the neural tuning curves $a_n(\mathrm{ITD}(\theta))$ and covariance matrix Σ with entries $\Sigma_{ij} = \left[\delta_{ij} + \rho(1-\delta_{ij})\right]\sqrt{a_i(\mathrm{ITD}(\theta))\,a_j(\mathrm{ITD}(\theta))}$, where ρ is the correlation coefficient between neurons and $\delta_{ij} = 1$ if i = j and $\delta_{ij} = 0$ if i ≠ j. This form of the covariance matrix causes the variance to equal the mean, as in the Poisson model.
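
One way to draw such correlated rates is sketched below in Python. It assumes covariance entries of the form Σij = [δij + ρ(1 − δij)]·sqrt(ai·aj), which gives a variance equal to the mean and a uniform correlation coefficient ρ between neurons. The shared-plus-private noise construction, the toy means, and the function name are assumptions chosen for illustration, and the construction is valid only for 0 ≤ ρ ≤ 1.

```python
import math
import random

def correlated_gaussian_rates(means, rho, rng):
    """Sample rates with covariance [delta_ij + rho(1 - delta_ij)] * sqrt(a_i * a_j).

    One shared standard-normal variable plus one private variable per neuron
    reproduces this covariance for 0 <= rho <= 1. Samples can be negative,
    since the Gaussian model does not enforce non-negative rates.
    """
    shared = rng.gauss(0.0, 1.0)
    out = []
    for a in means:
        private = rng.gauss(0.0, 1.0)
        z = math.sqrt(rho) * shared + math.sqrt(1.0 - rho) * private
        out.append(a + math.sqrt(a) * z)   # variance of this term equals the mean a
    return out

rng = random.Random(3)
means = [4.0, 9.0]   # toy mean rates (spikes/s)
rho = 0.5
trials = [correlated_gaussian_rates(means, rho, rng) for _ in range(20000)]
m0 = sum(t[0] for t in trials) / len(trials)
m1 = sum(t[1] for t in trials) / len(trials)
var0 = sum((t[0] - m0) ** 2 for t in trials) / len(trials)
cov01 = sum((t[0] - m0) * (t[1] - m1) for t in trials) / len(trials)
print(round(var0, 2), round(cov01, 2))
```

For these toy means the target variance of the first neuron is 4 and the target covariance is 0.5 × sqrt(4 × 9) = 3, which the empirical values approximate.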

For all simulations, direction estimates were obtained for the population vector and the Bayesian estimators over 150 trials.

Supplementary Material

Supplementary Figure 1 Direction-dependence of ITD.

Supplementary Figure 2 Performance of the probabilistic population code (PPC)

Supplementary Discussion

Acknowledgments

We thank L. Hausmann and H. Wagner for providing the behavioral data and C. Keller and T. Takahashi for providing the head-related transfer functions. We thank S. Edut, D. Eilam, E. Knudsen, M. Konishi, and K. Saberi whose work significantly contributed to testing our hypothesis. We thank S. Deneve, M. Konishi, A. Margolis, A. Oster, O. Schwartz, and A. Wohrer for advice and comments on the manuscript. This study was supported by the US National Institutes of Health.

Footnotes

Author Contributions: B.J.F. designed the model and performed the model simulations. J.L.P. supervised the project. B.J.F. and J.L.P. wrote the paper.

References

- 1. Knudsen EI, Blasdel GG, Konishi M. Sound localization by the barn owl (Tyto alba) measured with the search coil technique. J Comp Physiol. 1979;133:1–11.

- 2. Hausmann L, von Campenhausen M, Endler F, Singheiser M, Wagner H. Improvements of sound localization abilities by the facial ruff of the barn owl (Tyto alba) as demonstrated by virtual ruff removal. PLoS ONE. 2009;4(11):e7721. doi: 10.1371/journal.pone.0007721.

- 3. Populin LC, Yin TC. Behavioral studies of sound localization in the cat. J Neurosci. 1998;18:2147–2160. doi: 10.1523/JNEUROSCI.18-06-02147.1998.

- 4. Jay MF, Sparks DL. Localization of auditory and visual targets for the initiation of saccadic eye movements. In: Berkley MA, Stebbins WC, editors. Comparative perception: Basic mechanisms. Wiley; New York, USA: 1990. pp. 351–374.

- 5. Nodal FR, Bajo VM, Parsons CH, Schnupp JW, King AJ. Sound localization behavior in ferrets: comparison of acoustic orientation and approach-to-target responses. Neuroscience. 2008;154:397–408. doi: 10.1016/j.neuroscience.2007.12.022.

- 6. Zahn JR, Abel LA, Dell'Osso LF. Audio-ocular response characteristics. Sens Processes. 1978;2:32–37.

- 7. Blauert J. Spatial hearing: The psychophysics of human sound localization. MIT Press; Cambridge, Massachusetts, USA: 1983.

- 8. Carr CE, Konishi M. A circuit for detection of interaural time differences in the brain stem of the barn owl. J Neurosci. 1990;10:3227–3246. doi: 10.1523/JNEUROSCI.10-10-03227.1990.

- 9. Knudsen EI, Konishi M. A neural map of auditory space in the owl. Science. 1978;200:795–797. doi: 10.1126/science.644324.

- 10. Olsen JF, Knudsen EI, Esterly SD. Neural maps of interaural time and intensity differences in the optic tectum of the barn owl. J Neurosci. 1989;9:2591–2605. doi: 10.1523/JNEUROSCI.09-07-02591.1989.

- 11. Masino T, Knudsen EI. Horizontal and vertical components of head movements are controlled by distinct neural circuits in the barn owl. Nature. 1990;345:434–437. doi: 10.1038/345434a0.

- 12. Jeffress LA. A place theory of sound localization. J Comp Physiol Psychol. 1948;41:35–39. doi: 10.1037/h0061495.

- 13. Konishi M. Coding of auditory space. Annu Rev Neurosci. 2003;26:31–55. doi: 10.1146/annurev.neuro.26.041002.131123.

- 14. Weiss Y, Simoncelli EP, Adelson EH. Motion illusions as optimal percepts. Nature Neurosci. 2002;5:598–604. doi: 10.1038/nn0602-858.

- 15. Stocker AA, Simoncelli EP. Noise characteristics and prior expectations in human visual speed perception. Nature Neurosci. 2006;9:578–585. doi: 10.1038/nn1669.

- 16. Nix J, Hohmann V. Sound source localization in real sound fields based on empirical statistics of interaural parameters. J Acoust Soc Am. 2006;119:463–479. doi: 10.1121/1.2139619.

- 17. Christianson GB, Peña JL. Noise reduction of coincidence detector output by the inferior colliculus of the barn owl. J Neurosci. 2006;26:5948–5954. doi: 10.1523/JNEUROSCI.0220-06.2006.

- 18. Pecka M, Siveke I, Grothe B, Lesica NA. Enhancement of ITD coding within the initial stages of the auditory pathway. J Neurophysiol. 2010;103:38–46. doi: 10.1152/jn.00628.2009.

- 19. Keller CH, Hartung K, Takahashi TT. Head-related transfer functions of the barn owl: measurement and neural responses. Hearing Research. 1998;118:13–34. doi: 10.1016/s0378-5955(98)00014-8.

- 20. Edut S, Eilam D. Protean behavior under barn-owl attack: voles alternate between freezing and fleeing and spiny mice flee in alternating patterns. Behav Brain Res. 2004;155:207–216. doi: 10.1016/j.bbr.2004.04.018.

- 21. Saberi K, Takahashi Y, Konishi M, Albeck Y, Arthur BJ, Farahbod H. Effects of interaural decorrelation on neural and behavioral detection of spatial cues. Neuron. 1998;21:789–798. doi: 10.1016/s0896-6273(00)80595-4.

- 22. Fischer BJ, Christianson GB, Peña JL. Cross-correlation in the auditory coincidence detectors of the owl. J Neurosci. 2008;28:8107–8115. doi: 10.1523/JNEUROSCI.1969-08.2008.

- 23. Smith EC, Lewicki MS. Efficient auditory coding. Nature. 2006;439:978–982. doi: 10.1038/nature04485.

- 24. Georgopoulos AP, Kalaska JF, Crutcher MD, Caminiti R, Massey JT. The representation of movement direction in the motor cortex: single cell and population studies. In: Edelman GM, Goll WE, Cowan WM, editors. Dynamic aspects of neocortical function. Neurosciences Research Foundation; New York, USA: 1984. pp. 501–524.

- 25. van Hemmen JL, Schwartz AB. Population vector code: a geometric universal as actuator. Biol Cybern. 2008;98:509–518. doi: 10.1007/s00422-008-0215-3.

- 26. Shi L, Griffiths TL. Neural implementation of hierarchical Bayesian inference by importance sampling. In: Bengio Y, Schuurmans D, Lafferty J, Williams CKI, Culotta A, editors. Advances in Neural Information Processing Systems NIPS. Vol. 22. MIT Press; Cambridge, Massachusetts: 2009.

- 27. Perez ML, Shanbhag SJ, Peña JL. Auditory spatial tuning at the crossroads of the midbrain and forebrain. J Neurophysiol. 2009;102:1472–1482. doi: 10.1152/jn.00400.2009.

- 28. Knudsen EI. Auditory and visual maps of space in the optic tectum of the owl. J Neurosci. 1982;2:1177–1194. doi: 10.1523/JNEUROSCI.02-09-01177.1982.

- 29. Harper NS, McAlpine D. Optimal neural population coding of an auditory spatial cue. Nature. 2004;430:682–686. doi: 10.1038/nature02768.

- 30. Stern RM, Trahiotis C. Models of binaural perception. In: Gilkey R, Anderson TR, editors. Binaural and Spatial Hearing in Real and Virtual Environments. Lawrence Erlbaum Associates; New York, USA: 1996. pp. 499–531.

- 31. Bergan JF, Ro P, Ro D, Knudsen EI. Hunting increases adaptive auditory map plasticity in adult barn owls. J Neurosci. 2005;25:9816–9820. doi: 10.1523/JNEUROSCI.2533-05.2005.

- 32. Simoncelli EP. Optimal estimation in sensory systems. In: Gazzaniga M, editor. The Cognitive Neurosciences, IV. MIT Press; Cambridge, Massachusetts, USA: 2009. pp. 525–535.

- 33. Ganguli D, Simoncelli EP. Implicit encoding of prior probabilities in optimal neural populations. In: Lafferty J, Williams CKI, Shawe-Taylor J, Zemel RS, Culotta A, editors. Advances in Neural Information Processing Systems NIPS. Vol. 23. MIT Press; Cambridge, Massachusetts: 2010.

- 34. Seung HS, Sompolinsky H. Simple models for reading neural population codes. Proc Natl Acad Sci USA. 1993;90:10749–10753. doi: 10.1073/pnas.90.22.10749.

- 35. Salinas E, Abbott LF. Vector reconstruction from firing rates. J Comput Neurosci. 1994;1:89–107. doi: 10.1007/BF00962720.

- 36. Köver H, Bao S. Cortical plasticity as a mechanism for storing Bayesian priors in sensory perception. PLoS ONE. 2010;5(5):e10497. doi: 10.1371/journal.pone.0010497.

- 37. Girshick AR, Landy MS, Simoncelli EP. Bayesian line orientation perception: Human prior expectations match natural image statistics. CoSyne Abstracts. 2010.

- 38. Li B, Patterson MR, Freeman RD. Oblique effect: A neural basis in the visual cortex. J Neurophysiol. 2003;90:204–217. doi: 10.1152/jn.00954.2002.
