Abstract

We investigated how visual and linguistic information interact in the perception of emotion. We borrowed a phenomenon from film theory according to which presenting a visual scene that is neutral in itself intensifies the percept of fear or suspense induced by a different channel of information, such as language. Our main aim was to investigate how neutral visual scenes can enhance responses to fearful language content in parts of the brain involved in the perception of emotion. Healthy participants’ brain activity was measured (using functional magnetic resonance imaging) while they read fearful and less fearful sentences presented with or without a neutral visual scene. The main idea is that the visual scenes intensify the fearful content of the language by subtly implying and concretizing what is described in the sentence. Activation levels in the right anterior temporal pole were selectively increased when a neutral visual scene was paired with a fearful sentence, compared both to reading the sentence alone and to reading non-fearful sentences presented with the same neutral scene. We conclude that the right anterior temporal pole serves a binding function for emotional information across domains such as visual and linguistic information.

Keywords: language, emotion, film theory, fMRI, scenes, temporal pole

INTRODUCTION

It is relatively well known how emotionally laden stimuli such as fearful or threatening faces activate parts of the neural circuitry involved in emotion perception (e.g. Pessoa and Ungerleider, 2004; de Gelder, 2006; Adolphs, 2008). Reading emotionally relevant words also activates parts of the emotional circuitry in the brain. For instance, Isenberg and colleagues (1999) showed increased activation levels in the amygdala bilaterally when healthy participants read fearful words (e.g. ‘death’, ‘threat’, ‘suffocate’) as compared to more neutral words (e.g. ‘desk’, ‘sweater’, ‘consider’) (see also Herbert et al., 2009). In the present study we investigate how linguistic and visual information interact in influencing emotion perception in the brain. To this end we used a phenomenon from film theory which implies that the presence of a neutral visual scene intensifies the percept of fear or suspense induced by a different channel of information (such as language; we elaborate on this below). So instead of focusing on the perception of emotion in linguistic or visual stimuli per se, we investigate how visual and linguistic information interact in the perception of emotion, more specifically the perception of fear.

We borrow a phenomenon from film theory which describes how pairing a fearful context with a neutral visual scene leads to a stronger sense of suspense than when the same fearful context is paired with a horror-type scene that is emotional/fearful on its own. Director Alfred Hitchcock, for instance, describes how giving the audience additional information not known to the characters in a movie can create a strong sense of suspense (e.g. Pisters, 2004). The idea is to engage the audience by letting them ‘play God’ (Gottlieb, 1995), which leads to a much more implied type of fear or suspense than gruesome horror scenes produce. A modern example is the movie The Blair Witch Project (1999), which has a high amount of suspense without ever showing anything that is literally scary: all the suspense is implied, mainly by using an unsteady home-video style of filming. The apparent neutrality of the visual scene is dramatically altered by the information that the audience has, even though the visual information is not fearful in its own right (Gottlieb, 1995; Pisters, 2004).

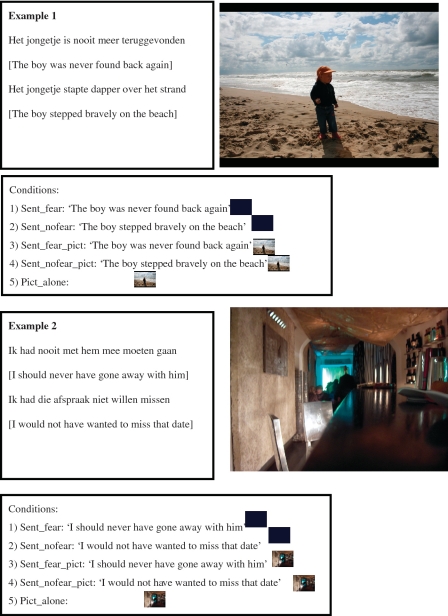

In our experiment we operationalized this by presenting sentences with fearful/suspenseful content together with neutral pictures, and compared neural responses to these stimulus combinations with responses to sentences with less fearful content presented together with the same neutral pictures. Consider the first example in Table 1. In this example we paired the visual scene of a young child on the beach either with the sentence ‘They never found the boy back again’ or with ‘The boy stepped bravely across the beach’. The first sentence is clearly more emotionally laden than the more neutral second sentence, since it implies that something bad happened to the child. The idea is that by presenting the emotional sentence together with the picture, which is neutral in itself, the audience/reader becomes more strongly emotionally engaged with the stimulus because the reader knows/infers that something happened to this child. We hypothesize that this combination will elicit a stronger emotional response than reading the sentence without a picture, or reading the less emotional sentence with the same neutral picture. It should be clear from the examples in Table 1 that our sentence materials were not fearful in an obvious ‘shocking’ sense, but rather implied suspense. The sentences did not contain words that were in themselves obviously fearful; instead, fear was implied by the output of a compositional process over a series of non-fearful words. We label these sentences ‘fearful’ in the remainder of the manuscript mainly for ease of reading.

Table 1.

Two examples of the stimuli


Stimuli consisted of emotion-inducing sentences and sentences without clear emotional implications. The sentences were either presented together with a picture of a neutral visual scene or in the absence of a picture (on a black background). The top panel of each example shows the sentences in Dutch as well as their literal English translation. On the right side is the neutral picture that went together with these sentences. The box labeled ‘conditions’ illustrates the five experimental conditions. The first two conditions are the fearful (i) and non-fearful (ii) sentences presented without visual input (black screen). Conditions 3 and 4 are the same two sentences but now combined with the neutral visual scene, and condition 5 is presentation of the visual scene without language.

We also investigated a closely related question, namely whether reading fearful/suspenseful sentences leads to stronger activation of the emotional system as compared to less fearful sentences. Previous research on emotional language processing has mostly studied single words expressing emotions (emotion words). Examples are fearful words (e.g. ‘threat’ and ‘death’, etc.) as well as words expressing positive emotions (e.g. ‘successful’, ‘happy’) (Herbert et al., 2009). In our materials fear was not described by the sentence, but implied by the combination of non-fearful words. The important question is whether implied meaning as conveyed through language will also mediate responses in areas usually implicated in the direct perception of emotional events or by emotional words. Such an effect of emotional word meaning upon for instance the amygdala is reported in some (Isenberg et al., 1999; Strange et al., 2000; Herbert et al., 2009), but not in other (Beauregard et al., 1997; Cato et al., 2004; Kuchinke et al., 2005) neuroimaging investigations.

Finally, we investigated how perceiving neutral visual scenes together with sentences expressing fearful content changes subsequent perception of the visual scenes in isolation. In the first two runs of the experiment participants read sentences presented together with neutral pictures, sentences presented on their own, or pictures presented on their own. In the third and final run of the experiment we presented the neutral pictures again, now without concomitant sentences. The rationale of this experimental manipulation was to see whether the pairing of a neutral picture with a fearful sentence would carry over to the perception of the visual scene when presented on its own. An indication of such a carry-over effect would be increased activation to pictures paired with fearful sentences as compared to activation to pictures paired with non-fearful sentences in parts of the brain involved in emotion.

In summary, the purpose of the present study was threefold. First, we explored the influence of pairing emotion-inducing language with visual scenes. Importantly, we paired language with neutral visual scenes, the rationale for which we described above. We hypothesize that parts of the brain circuitry involved in emotion will show a stronger effect of the fearful content expressed by sentences when these sentences are paired with a neutral visual scene than when they are presented on their own. A prime candidate to show such an effect is the amygdala, which has been implicated in the reading of emotional language (Herbert et al., 2009; Isenberg et al., 1999) but which is perhaps activated more robustly when emotional visual scenes (e.g. faces) are perceived (e.g. Adolphs, 2008). Alternatively, it may be that some areas ‘bind’ information from language and picture together, and that they do so more strongly in the case of emotional language content than in the case of less emotion-inducing language. A prime candidate to show such an effect is the right temporal pole, since a previous study found an interaction between emotion expressed by faces and the valence of a preceding context (Mobbs et al., 2006).

Second, we investigated whether reading emotional sentences modulates activity in the neural circuitry implicated in the perception of emotions. This would be analogous to previous findings implicating sensori-motor cortex in the understanding of action-related or ‘visual’ language (e.g. Pulvermüller, 2005; Willems and Hagoort, 2007; Barsalou, 2008). It would also extend previous literature, which has mainly focused on reading of emotional words, whereas in our materials the emotional meaning was established at the sentence level (cf. Razafimandimby et al., 2009).

Finally, we looked at how pairing a neutral picture with a fearful sentence carries over to perception of the same picture in isolation. We expected to see a ‘tag’ for the emotional content that the pictures had been paired with, expressed as increased activation in parts of the brain that are involved in emotion processing.

METHODS

Participants

Fifteen young healthy individuals took part in the study (12 female, mean age 20.6 years, range 18–25). All participants were right-handed as assessed with the Edinburgh handedness inventory (Oldfield, 1971), and none had a history of neurological or psychiatric problems. Participants had no dyslexia as assessed through self-report and all had normal or corrected-to-normal vision and reported having Dutch as their mother tongue. The study was in line with the regulations laid down in the Declaration of Helsinki and was approved by the local ethics committee. Participants were paid for participation.

Stimuli

We created 180 pairs of sentences in Dutch. Each sentence pair consisted of one item with fearful content (sentence_fear) and one item with less fearful content (sentence_nofear). The emotional content of the stimuli was assessed in a separate pretest in which raters (n = 12), who did not participate in the functional magnetic resonance imaging session, rated a larger set of sentences on how much fear they thought was expressed by each sentence (7-point scale, 1–7). Sentences were selected based upon the results of the pretest such that the fearful sentences were perceived as more fearful (mean = 3.89, s.d. = 0.72) and the non-fearful sentences as less fearful (mean = 1.27, s.d. = 0.28). This difference was statistically significant [t(178) = 47.2, P < 0.001]. Sentences in the two conditions were matched for sentence length (number of words; t < 1), lexical frequency of the words [derived from the CELEX database (Baayen et al., 1993); t < 1] and syntactic structure.

The visual stimuli were photographs of neutral scenes. They encompassed a wide variety of topics, from holiday-type pictures of landscapes to everyday situations such as people walking on the street. Importantly, none of these pictures displayed emotional or fearful content.

In accordance with the rationale for the experiment, sentences and pictures were presented in five conditions (see also Table 1):

1. Sentence fear (sent_fear);
2. Sentence no fear (sent_nofear);
3. Sentence fear + picture (sent_fear_pict);
4. Sentence no fear + picture (sent_nofear_pict);
5. Picture alone (pict_alone).
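Each stimulus set (Table 1) can appear in any of these five conditions. Because conditions were rotated over participants so that each participant saw only one version of a set, one natural way to build the five stimulus lists is a Latin-square rotation. The Python sketch below is a hypothetical illustration of that scheme, not the authors' list-construction script. It assumes 150 stimulus sets, matching the list length given in the procedure.

```python
# Hypothetical sketch of a Latin-square rotation of the five conditions
# over five stimulus lists (not the authors' actual list-building script).
CONDITIONS = ["sent_fear", "sent_nofear", "sent_fear_pict",
              "sent_nofear_pict", "pict_alone"]


def build_lists(n_sets, conditions=CONDITIONS):
    """Return one list per rotation; each entry is (set_index, condition).

    In rotation `shift`, stimulus set i appears in condition
    (i + shift) mod k, so every list holds exactly one version of each set.
    """
    k = len(conditions)
    return [[(i, conditions[(i + shift) % k]) for i in range(n_sets)]
            for shift in range(k)]


if __name__ == "__main__":
    lists = build_lists(150)  # 150 items per list, as stated in the text
    for n, stim_list in enumerate(lists, start=1):
        counts = {c: sum(1 for _, cond in stim_list if cond == c)
                  for c in CONDITIONS}
        print(f"list {n}: {counts}")
```

With 150 sets, every list contains 30 items per condition, and across the five lists each set appears once in every condition.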

The sentences were presented below the pictures in the conditions in which sentences and pictures were paired.

Experimental procedure

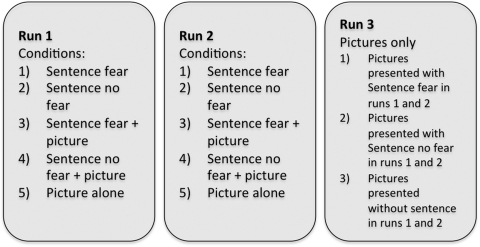

Participants took part in three runs (Figure 1). In runs 1 and 2, participants were presented with the stimuli in the five conditions outlined above. In run 3, participants were presented with the pictures that they had perceived in runs 1 and 2. Note that in run 3 only pictures were presented.

Fig. 1.

Experimental design. The experiment consisted of three runs: in runs 1 and 2, the five conditions as illustrated in Table 1 were presented. That is, participants read fear-inducing or non-fearful sentences presented without a visual scene (conditions 1 and 2), or presented together with a neutral visual scene (conditions 3 and 4), or observed the visual scenes without language (condition 5). In run 3, participants observed the same visual scenes as they had observed in runs 1 and 2, but now presented without language. The visual scenes could have been previously paired with fear-inducing language (i), with non-fearful language (ii) or presented without language (iii) in runs 1 and 2.

Stimuli were presented in an event-related manner. In runs 1 and 2 trial duration was 4 s, with a variable inter-trial interval ranging between 2 and 4 s (mean = 3 s) in steps of 250 ms, to jitter the onset of stimulus presentation relative to image acquisition (Dale, 1999). This presentation scheme allows the height of the hemodynamic response function to be estimated efficiently, even though the BOLD response peaks several seconds after presentation of a stimulus (Miezin et al., 2000). Conditions were rotated over participants such that each participant saw only one item from each stimulus set (Table 1). This means that there were five stimulus lists, each consisting of 150 items. Stimuli were presented in two blocks of 75 items, lasting 12 min each. Eight (out of 15) participants were presented with a mirrored version of the stimulus list.
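The jittered timing described above can be sketched as follows. The Python code draws inter-trial intervals from the nine allowed values (2.00, 2.25, …, 4.00 s) and accumulates onsets for 4 s trials. Sampling each interval uniformly at random is an assumption of this sketch, because the text does not say how the intervals were ordered. Under that assumption the mean interval is 3 s in expectation rather than exactly 3 s in every block.

```python
import random

# Hypothetical sketch: jittered inter-trial intervals (ITIs) between 2 and
# 4 s in 250 ms steps, and the resulting trial onsets for 4 s trials.
# Uniform sampling over the nine allowed values is an assumption; it gives
# a mean ITI of 3 s in expectation, not exactly in every sample.


def jittered_itis(n_trials, lo=2.0, hi=4.0, step=0.25, seed=None):
    """Draw n_trials ITIs (s) uniformly from lo, lo + step, ..., hi."""
    rng = random.Random(seed)
    n_values = int(round((hi - lo) / step)) + 1  # 9 values for 2..4 s
    values = [lo + i * step for i in range(n_values)]
    return [rng.choice(values) for _ in range(n_trials)]


def trial_onsets(iti_list, trial_dur=4.0):
    """Onset (s) of each trial: previous onset + trial duration + ITI."""
    onsets, t = [], 0.0
    for iti in iti_list:
        onsets.append(t)
        t += trial_dur + iti
    return onsets


jitter = jittered_itis(75, seed=1)  # one block of 75 trials
onsets = trial_onsets(jitter)
```

Because onsets then fall at many different phases relative to the 2340 ms TR, the hemodynamic response is effectively sampled at more time points than the TR alone would allow.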

In run 3 (when only pictures were presented) stimulus duration was 2 s, with the same inter-trial interval as in runs 1 and 2. Participants were presented with 108 items in total, and eight (out of 14) participants were presented with a mirrored version of the stimulus list. Each participant was presented with all pictures that had been shown in the previous runs, resulting in three conditions: pictures previously presented without a sentence (pict_nosent), pictures previously presented with a fearful sentence (pict_fear), and pictures previously presented with a non-fear sentence (pict_nofear).

The order of runs 1 and 2 was counterbalanced across participants. Run 3 by necessity always followed runs 1 and 2, given that the conditions in run 3 depended upon how pictures were presented in runs 1 and 2.

In all runs, participants were instructed to attentively read and watch the materials. Participants were told that general questions about the materials would be asked after the experiment, but that they did not have to memorize the items. We chose not to have an explicit task so as not to focus participants’ attention on a particular aspect of the stimuli. Moreover, previous work from our laboratory shows that presenting sentence materials without an explicit task leads to robust and reproducible activations (e.g. Hagoort et al., 2004; Özyürek et al., 2007; Tesink et al., 2008; Willems et al., 2007, 2008a, b). Since no behavioral response was required, we used an infrared eyetracker to assess the vigilance/wakefulness of participants: it was checked online whether participants remained attentive with their eyes open during the whole session. Participants were familiarized with the experimental procedure by means of 10 practice trials shown before the start of the first run. These contained materials not used in the remainder of the experiment. The practice trials also served to ensure that participants could read the sentences.

Data acquisition and analysis

Echo-planar images (EPI) covering the whole brain were acquired with an 8-channel head coil on a Siemens MR system with 1.5 T magnetic field strength (TR = 2340 ms; TE = 35 ms; flip angle 90°; 32 transversal slices; voxel size 3.125 × 3.125 × 3.5 mm; 0.35 mm gap between slices). Data analysis was done using SPM5 (http://www.fil.ion.ucl.ac.uk/spm/software/spm5/). Preprocessing involved realignment through rigid-body registration to correct for head motion, and slice-timing correction to the onset of the first slice. Subsequently, for each participant a mean image of all EPI images was created, and this image was normalized to Montreal Neurological Institute (MNI) space by means of a least-squares affine transformation. The parameters obtained from the normalization procedure were applied to all EPI images. Data were interpolated to 2 × 2 × 2 mm voxel size and spatially smoothed with an isotropic Gaussian kernel of 8 mm full-width at half maximum.

First-level analysis involved estimation of beta weights in a multiple regression analysis with regressors describing the expected hemodynamic responses (Friston et al., 1995) for each of the conditions (sent_fear, sent_nofear, sent_fear_pict, sent_nofear_pict, pict_alone in runs 1 and 2, and pict_fear, pict_nofear, pict_nosent in run 3). Stimuli were modeled with their actual duration (4 s in data from runs 1 and 2, 2 s in data from run 3), and regressors were convolved with a canonical two-gamma hemodynamic response function (e.g. Friston et al., 1996). The six motion parameters obtained from the motion correction algorithm (three translations and three rotations) were included as regressors of no interest.
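The Python sketch below shows how one condition regressor of the kind described above is typically built. A boxcar spanning each stimulus duration is convolved with a double-gamma hemodynamic response function on a fine time grid and then sampled once per scan. The HRF parameters (response gamma with shape 6, undershoot gamma with shape 16, undershoot ratio 1/6) are assumed SPM-like defaults. The function names are hypothetical, and SPM's own implementation differs in details such as microtime resolution and scaling.

```python
import math

# Sketch of building one condition regressor: a boxcar of the stimulus
# duration at each onset, convolved with a double-gamma HRF. The HRF below
# assumes SPM-like defaults (response gamma with shape 6, undershoot gamma
# with shape 16, undershoot ratio 1/6); SPM's own implementation differs in
# details such as microtime resolution and scaling.


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Difference of two unit-scale gamma densities evaluated at t (s)."""
    if t <= 0:
        return 0.0
    g1 = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    g2 = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return g1 - ratio * g2


def make_regressor(onsets, duration, n_scans, tr=2.34, dt=0.1, hrf_len=32.0):
    """Convolve a boxcar (onsets/duration in s) with the HRF on a fine
    time grid of step dt, then sample it at the start of every scan."""
    n_fine = int(round(n_scans * tr / dt))
    box = [0.0] * n_fine
    for onset in onsets:
        start = int(round(onset / dt))
        stop = min(n_fine, int(round((onset + duration) / dt)))
        for i in range(start, stop):
            box[i] = 1.0
    kernel = [double_gamma_hrf(k * dt) * dt
              for k in range(int(round(hrf_len / dt)))]
    conv = [0.0] * n_fine
    for i, b in enumerate(box):
        if b:
            for k, h in enumerate(kernel):
                if i + k >= n_fine:
                    break
                conv[i + k] += b * h
    return [conv[int(round(s * tr / dt))] for s in range(n_scans)]


# A single 4 s sentence trial at t = 0, sampled with TR = 2.34 s.
reg = make_regressor([0.0], duration=4.0, n_scans=20)
```

For run 3 the same function would be called with `duration=2.0`. The six motion parameters would enter the design matrix as additional columns that are not convolved.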

Second-level group analysis involved testing a mixed model with subjects as a random factor (‘random effects analysis’) (Friston et al., 1999). We first looked at which areas responded more strongly to fearful than to non-fearful sentences, collapsed over conditions (sent_fear + sent_fear_pict > sent_nofear + sent_nofear_pict). Given our hypothesis about the ‘additive’ effect of adding a neutral picture to a fearful sentence, we next looked for regions in which the difference between fearful and non-fearful sentences was larger when the sentences were combined with a picture than when they were presented without a picture (a directed interaction effect: [(sent_fear_pict > sent_nofear_pict) > (sent_fear > sent_nofear)]). Finally, we tested which areas were more strongly activated by fearful than by non-fearful sentences presented without pictures (sent_fear > sent_nofear), and which areas responded more strongly to fearful sentences combined with a picture than to non-fearful sentences combined with a picture (sent_fear_pict > sent_nofear_pict). Group statistical maps were corrected for multiple comparisons by combining an activation level threshold of P < 0.001 at the individual voxel level with a cluster extent threshold computed using the theory of Gaussian random fields, to arrive at a statistical threshold with a P < 0.05 significance level, corrected for multiple comparisons (Friston et al., 1996; Poline et al., 1997).
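The comparisons listed above correspond to contrast weight vectors over the five conditions of runs 1 and 2. The Python sketch below writes them out and applies them to one set of betas. The condition order is an assumption of the sketch, and the betas are made-up values for illustration only. In SPM the weights would also be repeated for each run and padded with zeros for the motion regressors. Typically the resulting per-participant contrast images are then entered into the second-level random effects test.

```python
# Contrast weights over the five run 1/2 conditions for the second-level
# comparisons described above. The condition order is an assumption of this
# sketch; in SPM the weights would also be repeated for each run and padded
# with zeros for the motion regressors.
CONDITIONS = ["sent_fear", "sent_nofear", "sent_fear_pict",
              "sent_nofear_pict", "pict_alone"]

CONTRASTS = {
    # (sent_fear + sent_fear_pict) > (sent_nofear + sent_nofear_pict)
    "fear > nofear (collapsed)": [1, -1, 1, -1, 0],
    # (sent_fear_pict - sent_nofear_pict) - (sent_fear - sent_nofear)
    "directed interaction": [-1, 1, 1, -1, 0],
    "sent_fear > sent_nofear": [1, -1, 0, 0, 0],
    "sent_fear_pict > sent_nofear_pict": [0, 0, 1, -1, 0],
}


def contrast_value(weights, betas):
    """Weighted sum of condition betas; betas maps condition name -> value."""
    return sum(w * betas[name] for w, name in zip(weights, CONDITIONS))


# Hypothetical betas for one voxel of one participant (illustration only).
betas = {"sent_fear": 1.0, "sent_nofear": 0.8, "sent_fear_pict": 1.5,
         "sent_nofear_pict": 1.0, "pict_alone": 0.5}
for name, w in CONTRASTS.items():
    print(f"{name}: {contrast_value(w, betas):+.2f}")
```

Every vector sums to zero and gives pict_alone a weight of zero. The directed interaction equals the picture simple effect minus the no-picture simple effect, which is what makes it sensitive to the predicted ‘additive’ pattern.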

Given our a priori hypothesis (see Introduction section), we assessed responses of left and right amygdala in anatomically defined regions of interest (ROIs). These were created using the automated anatomical labeling (AAL) template, which is based upon a landmark-guided parcellation of the MNI brain template (Tzourio-Mazoyer et al., 2002). In addition, in line with our prediction for this area (see Introduction section), we used the AAL template to create an ROI encompassing the right temporal pole (both its superior and middle parts).
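An ROI analysis of this kind reduces each condition to one number per participant. The condition's parameter estimates are averaged over the voxels in the anatomical mask, and the result is expressed as percent signal change. The Python sketch below illustrates this with toy values. Scaling by the constant (session mean) term and by the regressor's peak height is one common convention and an assumption here. It is not necessarily the exact procedure used for the bar graphs.

```python
# Hypothetical sketch of an ROI analysis: average each condition's parameter
# estimates over the voxels of an anatomical mask (e.g. an AAL amygdala
# label), then express the effect as percent signal change. Scaling by the
# constant (session mean) term and by the regressor's peak height is one
# common convention and an assumption here, not necessarily the authors' own.


def roi_mean(values, mask):
    """Mean of voxel values inside a boolean mask (equal-length sequences)."""
    inside = [v for v, m in zip(values, mask) if m]
    if not inside:
        raise ValueError("ROI mask contains no voxels")
    return sum(inside) / len(inside)


def percent_signal_change(beta_cond, beta_const, regressor_peak=1.0):
    """Condition effect relative to the implicit baseline, in percent."""
    return 100.0 * beta_cond * regressor_peak / beta_const


# Toy example with six voxels, three of which fall inside the ROI.
cond_betas = [2.0, 1.0, 3.0, 0.5, 4.0, 0.0]
const_betas = [100.0, 90.0, 110.0, 95.0, 90.0, 100.0]
mask = [True, False, True, False, True, False]
psc = percent_signal_change(roi_mean(cond_betas, mask),
                            roi_mean(const_betas, mask))
```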

RESULTS

Results from sentence reading (runs 1 and 2)

We first tested for areas that responded more strongly to fearful sentences as compared to non-fearful sentences, collapsed over conditions with and without a picture (sent_fear + sent_fear_pict > sent_nofear + sent_nofear_pict). This comparison revealed areas in left inferior frontal gyrus, the middle temporal gyri bilaterally, as well as the temporal poles bilaterally, to be more strongly activated to fearful as compared to non-fearful sentences (Table 2 and Figure 2).

Table 2.

Results from whole brain analysis of runs 1 and 2

| Comparison | MNI coordinates (x, y, z) | T(max) | Size (2 × 2 × 2 mm voxels) |
|---|---|---|---|
| Fear > no fear | | | |
| Left anterior inferior frontal gyrus | −46, 28, 4 | 4.90 | 1768 |
| Left middle temporal gyrus | −62, −24, −14 | | |
| Left temporal pole | −44, 24, −22 | | |
| | −48, 12, −28 | | |
| Right middle temporal gyrus | 48, −30, −8 | 4.18 | 200 |
| | 50, −18, −14 | | |
| | 48, −32, 2 | | |
| Right temporal pole | 52, 12, −22 | 4.05 | 161 |
| | 46, 10, −36 | | |
| | 48, 22, −22 | | |
| Sent_fear > Sent_nofear | | | |
| Left insula | −44, 24, −22 | 4.66 | 185 |
| | −32, 26, −8 | | |
| Left temporal pole | −30, 20, −32 | | |
| Left anterior inferior frontal gyrus | −56, 20, 2 | 4.28 | 195 |
| | −54, 22, 12 | | |
| Sent_fear_pict > Sent_nofear_pict | | | |
| Left temporal pole/middle temporal sulcus | −60, −26, −6 | 3.91 | 244 |
| | −64, −4, −16 | | |
| | −62, −14, −28 | | |
| Right cerebellum | 18, −76, −38 | 4.52 | 162 |
| | 14, −72, −22 | | |

Half of the stimuli consisted of fearful or non-fearful sentences presented alone (sent_fear, sent_nofear). The other half of the stimuli were fearful and non-fearful sentences presented with neutral pictures (sent_fear_pict and sent_nofear_pict). The table shows the specific comparison, a description of the activated region, the MNI coordinates, the t-value of peak activations within a cluster, and the size of each cluster in number of voxels (2 × 2 × 2 mm voxels). Results are corrected for multiple comparisons at the P < 0.05 level.

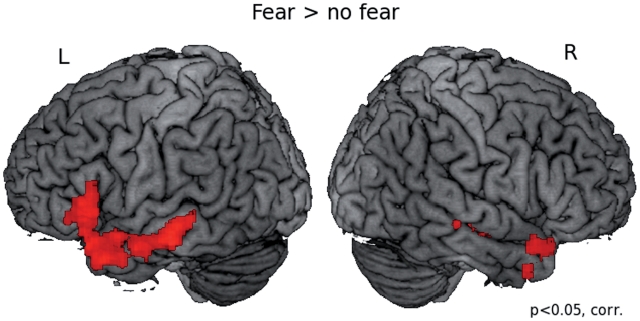

Fig. 2.

Results of whole brain analysis comparing fearful to non-fearful sentences. This contrast collapsed over the sentence-alone and sentence-with-picture conditions, testing a main effect of fear > no fear [(sent_fear + sent_fear_pict) > (sent_nofear + sent_nofear_pict)]. Results are corrected for multiple comparisons at the P < 0.05 level and displayed on a brain rendering that was created using MRIcron (http://www.sph.sc.edu/comd/rorden/mricron/).

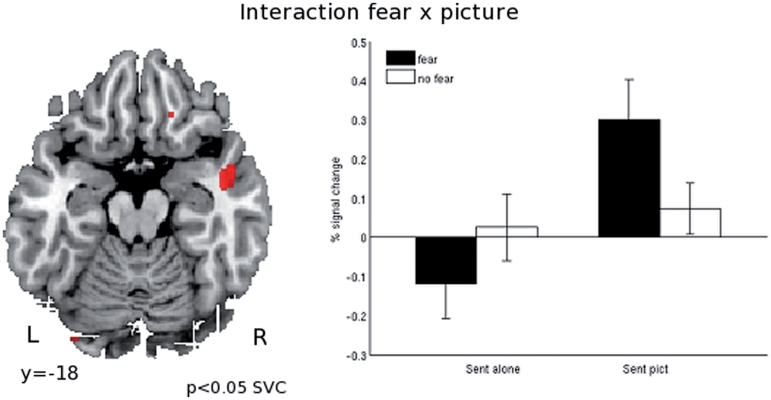

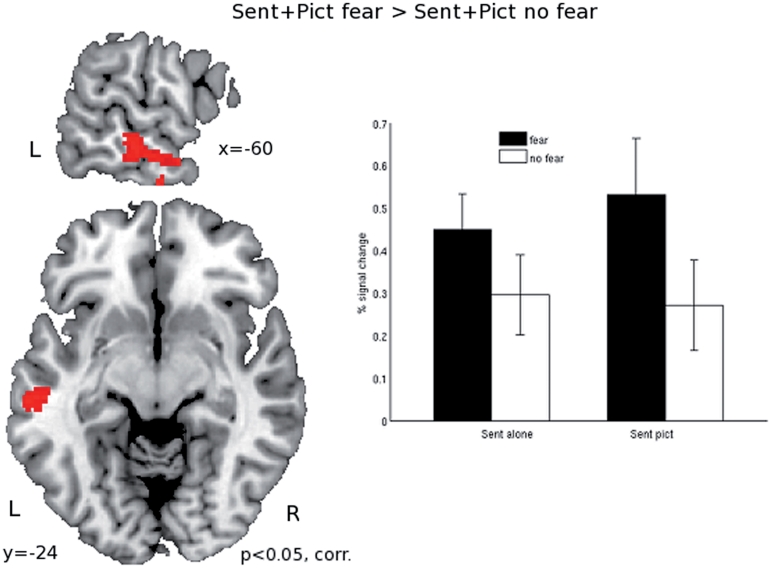

We next tested for areas in which the influence of fearful content was bigger when a picture was presented than when no picture was presented [i.e. the directed interaction effect (sent_fear_pict > sent_nofear_pict) > (sent_fear > sent_nofear)]. This is the interaction effect that we predicted in the Introduction section. Small volume correction (SVC) within the anatomically defined right temporal pole showed this area to be sensitive to this comparison. At the whole brain level no clusters survived the cluster extent threshold, but the cluster in right temporal pole was sensitive to this comparison at an uncorrected threshold of P < 0.001 (Figure 3). No areas were sensitive to the opposite contrast.

Fig. 3.

Results of whole brain analysis testing the additive effect of combining a neutral picture with a fear-inducing sentence. The directed interaction effect tested for regions that showed a bigger differential response to fearful than to non-fearful sentences when combined with a neutral picture, as compared to the difference between fearful and non-fearful sentences presented without a neutral picture [(sent_fear_pict > sent_nofear_pict) > (sent_fear > sent_nofear)]. No areas survived a whole-brain corrected statistical threshold. Correction for multiple comparisons was done by means of small volume correction (SVC) with the right temporal pole as the region of interest. The bar graphs represent percent signal change compared to the implicit session baseline. Coordinates refer to the MNI coordinates at which the image is displayed.

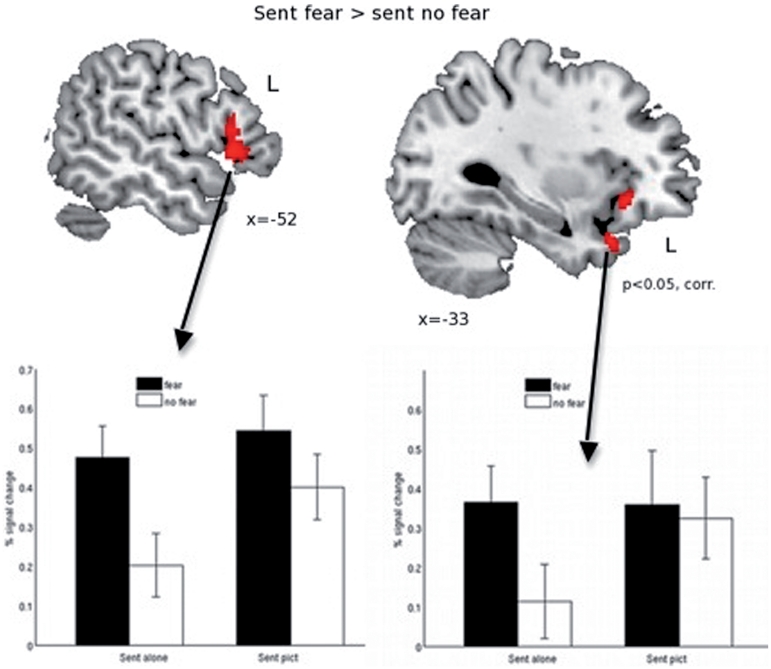

Comparing fearful sentences to non-fearful sentences (sent_fear > sent_nofear) revealed clusters of activation in the left insula, in the left temporal pole and in the anterior part of the left inferior frontal gyrus (Table 2 and Figure 4). The opposite contrast (sent_nofear > sent_fear) did not reveal any cluster of activation.

Fig. 4.

Results of whole brain analysis comparing sentences with fear-inducing content with sentences without fearful content (sent_fear > sent_nofear). Results are corrected for multiple comparisons at the P < 0.05 level. The bar graphs represent percent signal change compared to the implicit session baseline. Coordinates refer to MNI coordinates at which the image is displayed.

Next, we compared the effect of fearful content in sentences presented together with pictures. Comparing fearful sentences presented with pictures to non-fearful sentences presented with pictures (sent_fear_pict > sent_nofear_pict) revealed clusters of activation in the left temporal pole, extending into the middle temporal sulcus, and in the right cerebellum (Table 2 and Figure 5). Informal inspection at the P < 0.001 uncorrected level also revealed activations in the right temporal pole and in the left amygdala. The opposite contrast (sent_nofear_pict > sent_fear_pict) revealed no activations.

Fig. 5.

Results of whole brain analysis comparing sentences with fearful content combined with neutral pictures to sentences without fearful content combined with the same pictures (sent_fear_pict > sent_nofear_pict). Note that the pictures were always neutral visual scenes; the emotional content was solely induced by the sentences. Results are corrected for multiple comparisons at the P < 0.05 level. The bar graphs represent percent signal change compared to the implicit session baseline. Coordinates refer to MNI coordinates at which the image is displayed.

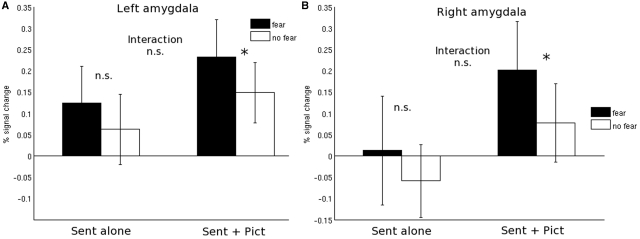

Given our a priori hypothesis for the amygdala, we tested the same contrasts on the parameter estimates taken from anatomically defined ROIs in left and right amygdala separately. Neither ROI showed the predicted interaction effect [(sent_fear_pict > sent_nofear_pict) > (sent_fear > sent_nofear)] (all t’s < 1). Planned comparisons showed a stronger response to fearful than to non-fearful sentences (sent_fear > sent_nofear), but this difference was not statistically significant in either left or right amygdala (Figure 6; one-sided t-tests: left: t(14) = 1.22, P = 0.11; right: t(14) = 1.12, P = 0.14). In both ROIs there was a stronger response to fearful sentences presented with pictures than to non-fearful sentences presented with pictures [one-sided t-tests: left: t(14) = 1.71, P = 0.045; right: t(14) = 1.62, P = 0.056]. This suggests that the increase in response to fearful sentence content was bigger when a picture was presented than when no picture was presented, but it should be noted that the interaction effect testing this additive effect was far from significant (see above).
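The planned comparisons above are one-sided paired t-tests on ROI values, with one value per participant and condition, giving t(14) for 15 participants. The Python sketch below computes the paired t statistic. It obtains the one-sided p-value by integrating the Student t density with Simpson's rule, so no statistics library is needed. The function names are hypothetical, and the sketch is an illustration rather than the analysis code used in the study.

```python
import math

# Sketch of a one-sided paired t-test on ROI values (one value per
# participant and condition), written without SciPy. The p-value integrates
# the Student t density with Simpson's rule; math.gamma overflows for very
# large df (above roughly 340), which is irrelevant for df = 14.


def paired_t(x, y):
    """t statistic and df for H1: mean(x - y) > 0 (paired samples)."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    mean = sum(d) / n
    var = sum((v - mean) ** 2 for v in d) / (n - 1)
    return mean / math.sqrt(var / n), n - 1


def t_sf(t, df, n_intervals=2000):
    """One-sided p-value P(T >= t) for a Student t with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    b = abs(t)
    h = b / n_intervals  # n_intervals must be even for Simpson's rule
    s = pdf(0.0) + pdf(b)
    s += sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, n_intervals))
    area = s * h / 3  # P(0 <= T <= |t|), by symmetry of the t density
    return 0.5 - area if t >= 0 else 0.5 + area
```

Given per-participant percent signal change values for, say, sent_fear_pict and sent_nofear_pict in one ROI, `t, df = paired_t(fear_pict, nofear_pict)` followed by `t_sf(t, df)` yields the one-sided p-value. The two-sided p-value is twice the one-sided value when the effect lies in the predicted direction.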

Fig. 6.

Results of analysis in a priori defined ROIs in left and right amygdala. Presented are the parameter estimates (expressed as percentage signal change) to presentation of fearful (black) or non-fearful (white) sentences when presented alone (A), or when presented together with a neutral picture (B). The bar graphs represent percent signal change compared to the implicit session baseline. Asterisks indicate statistical significance at the P < 0.05 level; n.s.: not significant.

Results from presentation of pictures alone (run 3)

In run 3 the same pictures were presented as in runs 1 and 2. These pictures expressed neutral scenes, which could have been paired with a fearful sentence (pict_fear), with a non-fearful sentence (pict_nofear) or without a sentence (pict_nosent) in runs 1 and 2. It is important to stress that the suffixes ‘_fear’, ‘_nofear’ and ‘_nosent’ refer to the presentation condition in runs 1 and 2; in run 3 pictures were always presented without a sentence.

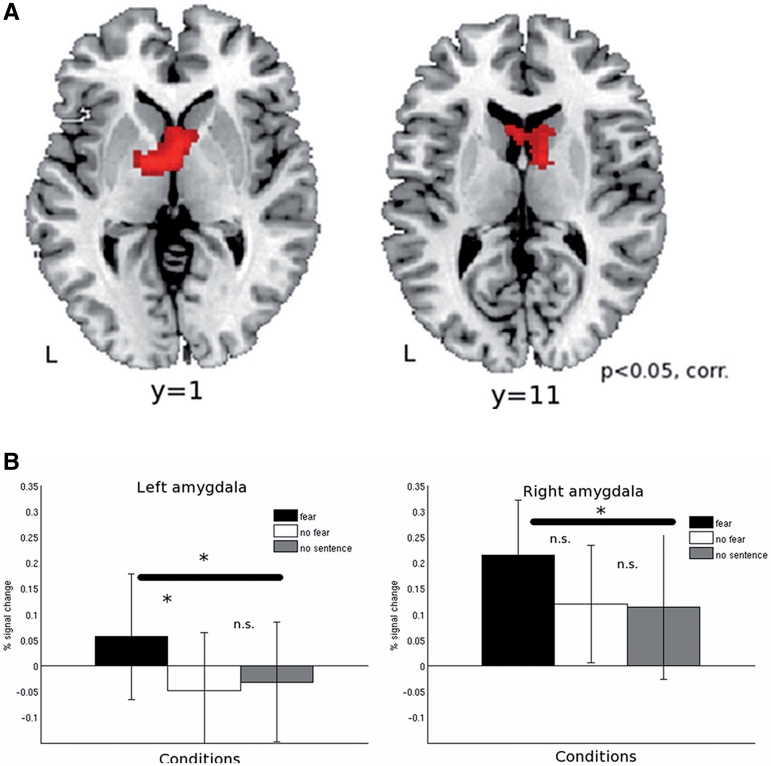

We first compared neural responses to pictures previously paired with a fearful sentence (pict_fear) with responses to pictures previously paired with a non-fearful sentence (pict_nofear). This revealed a large subcortical cluster of activation encompassing the caudate nucleus bilaterally, as well as the thalamus bilaterally (Table 3 and Figure 7A).

Table 3.

Results from whole brain analysis of run 3, in which only pictures were presented

| Comparison | MNI coordinates (x, y, z) | T(max) | Size (2 × 2 × 2 mm voxels) |
|---|---|---|---|
| Pict_fear > Pict_nofear | | | |
| Bilateral thalamus | −8, −4, 0 | 5.46 | 734 |
| | −2, 2, −4 | | |
| Left caudate nucleus | −5, 11, 10 | 3.38 | |
| Right caudate nucleus | 10, 13, 9 | 3.58 | |
| Left cerebellum | −32, −78, −32 | 5.89 | 177 |
| Pict_fear > Pict_nosent | | | |
| Bilateral thalamus | 4, −2, 2 | 6.55 | 793 |
| | −8, −6, −2 | | |
| Left caudate nucleus | −11, 10, 2 | 3.72 | |
| | −7, 12, 10 | | |
| Right cerebellum | 12, −78, −36 | 4.41 | 189 |
| | 36, −74, −36 | | |
| | 24, −78, −38 | | |

Pictures were paired in runs 1 and 2 with a fearful sentence (Pict_fear) or with a non-fear sentence (Pict_nofear), or were presented without a sentence (Pict_nosent). Note that the results displayed here reflect brain activations obtained when only the pictures were presented. The ‘fear’ and ‘nofear’ suffixes refer to the presentation of the picture with a fearful or non-fearful sentence in the previous runs. The pictures themselves were neutral. The table shows the specific comparison, a description of the activated region, the MNI coordinates, the t-value of peak activations within a cluster, and the size of each cluster in number of voxels (2 × 2 × 2 mm voxels). Results are corrected for multiple comparisons at the P < 0.05 level.

Fig. 7.

Results of whole brain analysis of the data from run 3, in which only pictures were presented. (A) Areas are shown that were more strongly activated to the presentation of the pictures that were previously paired with fearful sentences as compared to pictures that were paired with neutral sentences (Pict_fear > Pict_nofear). Results are corrected for multiple comparisons at the P < 0.05 level. (B) Responses in a priori defined ROIs in left and right amygdala to presentation of pictures that were previously paired with fearful sentences, neutral sentences, or pictures without a concomitant sentence. The bar graphs represent percent signal change compared to the implicit session baseline. Asterisks indicate statistical significance at the P < 0.05 level; n.s.: not significant.

Next, we compared responses to pictures previously paired with a fearful sentence (pict_fear) with responses to pictures previously presented without a sentence (pict_nosent). This comparison revealed essentially the same cluster as the previous comparison, that is, bilateral caudate nucleus and thalamus (Table 3).

Finally, we compared responses to pictures previously presented with a non-fear sentence (pict_nofear) with responses to pictures previously presented without a sentence (pict_nosent). No areas were activated in this contrast.

As in the analysis of the sentence-picture pairs, we assessed responses of left and right amygdala to the three picture conditions. There was no main effect of Condition in either left or right amygdala [left: F(2,42) < 1; right: F(2,42) < 1]. Follow-up planned comparisons showed that in left amygdala the response to pictures that had been paired with a fearful sentence was significantly higher than the response to pictures that had been paired with a non-fearful sentence [one-sided t-test: t(14) = 1.71, P = 0.055; Figure 7B]. Moreover, pictures previously presented with a fearful sentence elicited stronger responses than pictures presented without a sentence [pict_fear > pict_nosent: t(14) = 2.10, P = 0.027, one-sided; Figure 7B]. No such effect was observed for pictures previously presented with a non-fearful sentence [pict_nofear > pict_nosent: t(14) < 1]. A similar pattern of responses was observed in right amygdala, although the pict_fear versus pict_nofear difference was not statistically significant [pict_fear > pict_nofear: t(14) = 1.29, P = 0.108, one-sided; pict_fear > pict_nosent: t(14) = 1.79, P = 0.048, one-sided; pict_nofear > pict_nosent: t(14) < 1].

DISCUSSION

In the current study, participants read emotion-inducing ('fearful') or less emotional ('non-fearful') sentences, presented either together with a neutral visual scene or without one. We addressed three experimental questions. First, we asked whether any brain areas show an additive response to the combination of sentences and neutral pictures when the sentence content is emotional/fearful. Second, we examined whether the fearful content of sentences influences activation levels in parts of the brain involved in emotion. Finally, we examined how pairing a neutral picture with a fearful sentence influences later processing of this picture when it is presented without a sentence context.

Interaction of language and picture in emotional processing

In the Introduction, we derived a prediction from film theory: adding a (neutral) picture to an emotion-inducing sentence should have an additive effect. Specifically, the difference between fearful and non-fearful sentences combined with a picture should be larger than the difference between fearful and non-fearful sentences presented on their own. The right amygdala showed the predicted response pattern. Its response to fearful sentences presented together with pictures differed significantly from its response to non-fearful sentences presented together with pictures. In contrast, fearful and non-fearful sentences presented on their own did not differ significantly (Figure 3). However, this effect is not robust, because the interaction effect was far from statistically significant.
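The prediction can be stated as an interaction contrast. The shorthand below is ours and is introduced only for illustration. β_{F,P} and β_{N,P} denote the responses to fearful and non-fearful sentences presented with a picture. β_{F,∅} and β_{N,∅} denote the responses to the same sentence types presented without a picture.

```latex
\Delta_{\text{movie}}
  = \underbrace{\left(\beta_{F,P} - \beta_{N,P}\right)}_{\text{fear effect with picture}}
  - \underbrace{\left(\beta_{F,\varnothing} - \beta_{N,\varnothing}\right)}_{\text{fear effect without picture}}
  = \beta_{F,P} - \beta_{N,P} - \beta_{F,\varnothing} + \beta_{N,\varnothing} > 0
% Equivalent contrast weights over (\beta_{F,P}, \beta_{N,P}, \beta_{F,\varnothing}, \beta_{N,\varnothing}):
% (+1, -1, -1, +1)
```

A region can show a significant fear effect with pictures and a non-significant fear effect without pictures, as the right amygdala did. The difference of differences can nevertheless remain non-significant. Only a direct test of this interaction contrast establishes the additive effect.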

The only region to show the predicted additive effect of adding a picture to an emotional sentence was the right temporal pole. This finding is closely related to the results of Mobbs and colleagues, who showed a similar context effect on right temporal pole activation during the processing of faces (Mobbs et al., 2006). In their study, participants rated the emotional expression of faces that were preceded by movie clips with positive, negative or neutral content. The right temporal pole showed a larger activation difference between fearful and neutral faces when the faces were preceded by a negative context than when they were preceded by a neutral context. Interestingly, a similar interaction effect was observed for happy faces preceded by a positive context. This suggests that the temporal poles play a general role in binding emotional information.

Anatomically, the temporal poles are ideally situated to exhibit such a binding effect. They are strongly connected to the limbic system (including the amygdala), as well as to the insular cortex and the sensory cortices (Mesulam, 2000; Olson et al., 2007). Atrophy of the right temporal pole leads to marked personality changes, mostly involving reduced emotional expressiveness (e.g. Gorno-Tempini et al., 2004). In their comprehensive review of the literature, Olson and colleagues conclude that the main function of the temporal poles is to 'couple emotional responses to highly processed sensory stimuli' (Olson et al., 2007, p. 1727). In line with this, we interpret the additive effect in the right temporal pole as reflecting the binding of visual and linguistic information under conditions of increased emotional content. The emotional component is crucial, since no 'general' binding of linguistic and visual information was observed in this region (see also Mobbs et al., 2006).

A study by Kim and colleagues is somewhat at odds with this interpretation. They assessed the effect of positive or negative sentences on the subsequent processing of faces (Kim et al., 2004). Participants read sentences such as 'She just lost $500' or 'She just found $500' and immediately afterwards saw a picture of a face with a surprised expression. No effect of context was observed in the temporal poles. Instead, the left amygdala was more strongly activated to faces preceded by a negative sentence than to faces preceded by a positive sentence. However, it was not sensitive to the valence expressed by the sentences as such. The latter is in accordance with our present finding. Unlike Kim and colleagues, however, we did not observe a robust interaction in the amygdala between the presence of a picture and the valence expressed in the sentences. It is unclear why Kim and colleagues did not find modulation of the temporal poles. Both Mobbs et al.'s data and the present findings suggest that the temporal poles serve a binding function for emotionally salient information.

An alternative interpretation draws on the role of the temporal poles in language processing. This role is especially prominent when comprehension goes beyond single sentences, for example when participants read short stories rather than sets of thematically unlinked sentences (Ferstl et al., 2008). In our experiment, the fearful sentences may have engaged participants more. Participants may therefore have formed a richer interpretation of the fearful sentence–picture pairs than of the non-fearful sentence–picture pairs. We consider this interpretation unlikely, however. The right temporal pole was not activated above baseline in two of the four conditions, even though these conditions also involved linguistic materials (Figure 3).

Emotion-inducing language

An overall effect of reading fearful as compared to non-fearful sentences was observed in parts of the brain traditionally implicated in language understanding, such as the left inferior frontal gyrus (LIFG) and the bilateral middle temporal gyri. In particular, these areas have been implicated in semantic aspects of sentence comprehension (e.g. Hagoort et al., 2004, 2009; Hagoort, 2005; Willems et al., 2007, 2008b, 2009). Holt and colleagues showed that emotional endings of single sentences increase the amplitude of the N400, an electroencephalographic (EEG) index of semantic processing (Holt et al., 2009). Previous neuroimaging studies have also reported similar increases in left inferior frontal and/or middle temporal regions for emotional as compared to more neutral single words (Beauregard et al., 1997; Strange et al., 2000; Cato et al., 2004; Kuchinke et al., 2005; Beaucousin et al., 2007; Razafimandimby et al., 2009). Hence, reading fearful sentences may lead to stronger semantic processing, which would explain the increased activation in LIFG and middle temporal gyri that we observed. Notably, in our study this increase was not a consequence of activating lexical items with a strong emotional valence. The individual words that made up the emotion-inducing sentences were not themselves emotion words. The emotional content of the sentences must therefore have been inferred through a compositional process, one that creates an emotional interpretation from non-emotional lexical building blocks. Activation was also stronger in parts of temporal cortex presumably involved in lexical retrieval. This supports the idea that semantic composition is instantiated by a dynamic interaction between areas involved in lexical retrieval and areas crucial for unification, such as inferior frontal cortex (see G. Baggio and P. Hagoort, submitted for publication; Snijders et al., 2009).

Interestingly, we also compared sentences with fearful or suspenseful content to sentences with more neutral content, with both presented without a visual scene. This comparison revealed increased activation in the left anterior insula and in the left temporal pole. A large body of evidence implicates the insula in the awareness of emotional stimuli (Phan et al., 2002; Kober et al., 2008). It is especially the anterior portion of the insula that becomes activated when participants explicitly attend to interoceptive feelings (for reviews, see Craig, 2009; Singer et al., 2009). For instance, the anterior insula is activated when participants smell unpleasant odors, which elicit disgust. It is also activated when they watch others reacting with disgust to an unpleasant odor (Wicker et al., 2003). Similarly, reading the fearful sentences in this experiment may have led participants to 'feel along' with the implied meaning of the sentence.

It should be noted that we did not replicate previous findings of amygdala involvement in the understanding of emotional/fearful language. The amygdalae were sensitive to the emotional content of the sentences only when the sentences were paired with a visual stimulus. This contrasts with earlier studies that did find increased amygdala activation to language stimuli presented on their own. For instance, Isenberg and colleagues (1999) showed increased amygdala activation when participants read words such as 'threat' and 'hate' compared with more neutral words such as 'candle' and 'bookcase' (see also Strange et al., 2000; Herbert et al., 2009). On the other hand, several studies have failed to replicate this finding (Beauregard et al., 1997; Cato et al., 2004; Kuchinke et al., 2005). Another observed amygdala activation to emotional words only in an explicit categorization task (Tabert et al., 2001). At present, it is unclear why some studies find amygdala activation in response to reading emotional words and others do not. One reason may be reduced signal sensitivity in the amygdala, a region that is highly vulnerable to susceptibility artifacts (LaBar et al., 2001). This explanation is unlikely to apply to the present study, however. We did observe differential activation of the amygdala to the sentences when they were presented together with pictures.

In the absence of a strong associative link between the individual words and their emotional content, the amygdala might need input from the temporal pole to show an effect. The right temporal pole showed a clear increase in activation when emotion-inducing sentences were paired with the neutral pictures. As hypothesized above, the temporal pole might ground the compositional interpretation of the sentences' implied emotional meaning in a concrete visual scene referent. In this way, a stronger emotional valence may be generated, which could in turn trigger the amygdala response.

The involvement of the anterior insula in reading emotional language is in line with the general notion that language understanding engages parts of the brain related to the content of the linguistic information. These include areas outside the traditional language areas, in this case the insular cortex. A related finding is that parts of the cortical motor system become activated when participants read action-related language (e.g. Willems and Hagoort, 2007; Barsalou, 2008; Willems et al., 2010). We add to this growing literature by showing that a core region of the brain's emotional system is engaged when participants read sentences with fearful as compared to less fearful content.

The influence of pairing a picture with an emotion-inducing sentence upon later processing of the picture

Finally, we found that pairing neutral visual stimuli with a fearful sentence increases amygdala activation when the neutral pictures are later observed on their own, without a sentence. This shows that the emotional response to the picture–sentence pairs is retained and recalled at a later moment in time. Put differently, the sight of the picture alone triggers the emotional flavor of the linguistic information. The linguistic information tags the picture as emotionally salient, and subsequent perception of the neutral picture brings back the emotional component of the earlier stimulus pair. The present finding is reminiscent of the finding that labeling emotional faces with a word decreases amygdala activation upon subsequent perception of the faces (Lieberman et al., 2007; see also Tabibnia et al., 2008). The crucial difference from this earlier work is that Lieberman and colleagues used visual stimuli that were emotional/fearful on their own (fearful faces and disturbing visual scenes). Labeling such stimuli with linguistic material dampens responses in the emotional system. In our study, by contrast, the visual scenes were inherently non-emotional/neutral. Combining such stimuli with emotional language enhances responses in parts of the brain's emotional system.

The caudate nucleus was also more strongly activated to pictures previously paired with a fearful sentence than to pictures previously paired with a less fearful sentence. We had no hypothesis about the involvement of this area, and accordingly we do not offer a strong interpretation of it. In their meta-analysis, Kober and colleagues characterize the basal ganglia/striatum as 'reacting in response to salient events in the environment' (Kober et al., 2008, p. 1016). This seems an apt description of the pictures previously paired with fearful sentences, as compared to pictures paired with the other sentences.

CONCLUSION

In conclusion, we observed that the right temporal pole showed an additive effect of adding a neutral picture to a fearful sentence ('the movie effect'). This area presumably serves a binding role, combining visual and linguistic information when the content of the language is emotional. We also showed that reading sentences with fearful content increases activation of the left anterior insular cortex. We interpret this as a manifestation of the reader 'moving along' with the emotional meaning of the sentence. Future research should investigate how the neural integration of language with information from different senses leads to the experience of emotions in general, and fear in particular.

Acknowledgments

Supported by the Netherlands Organisation for Scientific Research (NWO) through a grant in the CO-OPs 'Inter-territorial explorations in art and science' program, by a 'Rubicon' grant (NWO 446-08-008), and by the Niels Stensen Foundation. We thank two anonymous reviewers for helpful comments on an earlier version of the manuscript.

REFERENCES

- Adolphs R. Fear, faces, and the human amygdala. Current Opinion in Neurobiology. 2008;18(2):166–72. doi: 10.1016/j.conb.2008.06.006.

- Baayen RH, Piepenbrock R, van Rijn H. The CELEX Lexical Database. Philadelphia, PA: Linguistic Data Consortium, University of Pennsylvania; 1993.

- Barsalou LW. Grounded cognition. Annual Review of Psychology. 2008;59:617–45. doi: 10.1146/annurev.psych.59.103006.093639.

- Beaucousin V, Lacheret A, Turbelin MR, Morel M, Mazoyer B, Tzourio-Mazoyer N. FMRI study of emotional speech comprehension. Cerebral Cortex. 2007;17(2):339–52. doi: 10.1093/cercor/bhj151.

- Beauregard M, Chertkow H, Bub D, Murtha S, Dixon R, Evans A. The neural substrate for concrete, abstract, and emotional word lexica: a positron emission tomography study. Journal of Cognitive Neuroscience. 1997;9(4):441–61. doi: 10.1162/jocn.1997.9.4.441.

- Cato MA, Crosson B, Gokcay D, et al. Processing words with emotional connotation: an fMRI study of time course and laterality in rostral frontal and retrosplenial cortices. Journal of Cognitive Neuroscience. 2004;16(2):167–77. doi: 10.1162/089892904322984481.

- Craig AD. How do you feel–now? The anterior insula and human awareness. Nature Reviews Neuroscience. 2009;10(1):59–70. doi: 10.1038/nrn2555.

- Dale AM. Optimal experimental design for event-related fMRI. Human Brain Mapping. 1999;8(2–3):109–14. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- de Gelder B. Towards the neurobiology of emotional body language. Nature Reviews Neuroscience. 2006;7(3):242–9. doi: 10.1038/nrn1872.

- Ferstl EC, Neumann J, Bogler C, von Cramon DY. The extended language network: a meta-analysis of neuroimaging studies on text comprehension. Human Brain Mapping. 2008;29(5):581–93. doi: 10.1002/hbm.20422.

- Friston KJ, Holmes A, Poline JB, Price CJ, Frith CD. Detecting activations in PET and fMRI: levels of inference and power. NeuroImage. 1996;4(3 Pt 1):223–35. doi: 10.1006/nimg.1996.0074.

- Friston KJ, Holmes A, Worsley KJ, Poline J-B, Frith CD, Frackowiak RS. Statistical parametric maps in functional imaging: a general linear approach. Human Brain Mapping. 1995;2:189–210.

- Friston KJ, Holmes AP, Price CJ, Büchel C, Worsley KJ. Multisubject fMRI studies and conjunction analyses. NeuroImage. 1999;10(4):385–96. doi: 10.1006/nimg.1999.0484.

- Gorno-Tempini ML, Rankin KP, Woolley JD, Rosen HJ, Phengrasamy L, Miller BL. Cognitive and behavioral profile in a case of right anterior temporal lobe neurodegeneration. Cortex. 2004;40(4–5):631–44. doi: 10.1016/s0010-9452(08)70159-x.

- Gottlieb S. Hitchcock on Hitchcock: Selected Writings and Interviews. Berkeley, CA: University of California Press; 1995.

- Hagoort P. On Broca, brain, and binding: a new framework. Trends in Cognitive Sciences. 2005;9(9):416–23. doi: 10.1016/j.tics.2005.07.004.

- Hagoort P, Baggio G, Willems RM. Semantic unification. In: Gazzaniga MS, editor. The Cognitive Neurosciences IV. Cambridge, MA: MIT Press; 2009.

- Hagoort P, Hald L, Bastiaansen M, Petersson KM. Integration of word meaning and world knowledge in language comprehension. Science. 2004;304(5669):438–41. doi: 10.1126/science.1095455.

- Herbert C, Ethofer T, Anders S, et al. Amygdala activation during reading of emotional adjectives: an advantage for pleasant content. Social Cognitive and Affective Neuroscience. 2009;4(1):35–49. doi: 10.1093/scan/nsn027.

- Holt DJ, Lynn SK, Kuperberg GR. Neurophysiological correlates of comprehending emotional meaning in context. Journal of Cognitive Neuroscience. 2009;21(11):2245–62. doi: 10.1162/jocn.2008.21151.

- Isenberg N, Silbersweig D, Engelien A, et al. Linguistic threat activates the human amygdala. Proceedings of the National Academy of Sciences of the United States of America. 1999;96(18):10456–9. doi: 10.1073/pnas.96.18.10456.

- Kim H, Somerville LH, Johnstone T, et al. Contextual modulation of amygdala responsivity to surprised faces. Journal of Cognitive Neuroscience. 2004;16(10):1730–45. doi: 10.1162/0898929042947865.

- Kober H, Barrett LF, Joseph J, Bliss-Moreau E, Lindquist K, Wager TD. Functional grouping and cortical-subcortical interactions in emotion: a meta-analysis of neuroimaging studies. NeuroImage. 2008;42(2):998–1031. doi: 10.1016/j.neuroimage.2008.03.059.

- Kuchinke L, Jacobs AM, Grubich C, Vo ML, Conrad M, Herrmann M. Incidental effects of emotional valence in single word processing: an fMRI study. NeuroImage. 2005;28(4):1022–32. doi: 10.1016/j.neuroimage.2005.06.050.

- LaBar KS, Gitelman DR, Mesulam MM, Parrish TB. Impact of signal-to-noise on functional MRI of the human amygdala. Neuroreport. 2001;12(16):3461–4. doi: 10.1097/00001756-200111160-00017.

- Lieberman MD, Eisenberger NI, Crockett MJ, Tom SM, Pfeifer JH, Way BM. Putting feelings into words: affect labeling disrupts amygdala activity in response to affective stimuli. Psychological Science. 2007;18(5):421–8. doi: 10.1111/j.1467-9280.2007.01916.x.

- Mesulam MM. Paralimbic (mesocortical) areas. In: Mesulam MM, editor. Principles of Behavioral and Cognitive Neurology. New York: Oxford University Press; 2000. pp. 49–54.

- Miezin FM, Maccotta L, Ollinger JM, Petersen SE, Buckner RL. Characterizing the hemodynamic response: effects of presentation rate, sampling procedure, and the possibility of ordering brain activity based on relative timing. NeuroImage. 2000;11(6 Pt 1):735–59. doi: 10.1006/nimg.2000.0568.

- Mobbs D, Weiskopf N, Lau HC, Featherstone E, Dolan RJ, Frith CD. The Kuleshov Effect: the influence of contextual framing on emotional attributions. Social Cognitive and Affective Neuroscience. 2006;1(2):95–106. doi: 10.1093/scan/nsl014.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9(1):97–113. doi: 10.1016/0028-3932(71)90067-4.

- Olson IR, Plotzker A, Ezzyat Y. The enigmatic temporal pole: a review of findings on social and emotional processing. Brain. 2007;130(Pt 7):1718–31. doi: 10.1093/brain/awm052.

- Özyürek A, Willems RM, Kita S, Hagoort P. On-line integration of semantic information from speech and gesture: insights from event-related brain potentials. Journal of Cognitive Neuroscience. 2007;19(4):605–16. doi: 10.1162/jocn.2007.19.4.605.

- Pessoa L, Ungerleider LG. Neuroimaging studies of attention and the processing of emotion-laden stimuli. Progress in Brain Research. 2004;144:171–82. doi: 10.1016/S0079-6123(03)14412-3.

- Phan KL, Wager T, Taylor SF, Liberzon I. Functional neuroanatomy of emotion: a meta-analysis of emotion activation studies in PET and fMRI. NeuroImage. 2002;16(2):331–48. doi: 10.1006/nimg.2002.1087.

- Pisters P. Lessen van Hitchcock. Amsterdam: Amsterdam University Press; 2004.

- Poline JB, Worsley KJ, Evans AC, Friston KJ. Combining spatial extent and peak intensity to test for activations in functional imaging. NeuroImage. 1997;5(2):83–96. doi: 10.1006/nimg.1996.0248.

- Pulvermüller F. Brain mechanisms linking language and action. Nature Reviews Neuroscience. 2005;6(7):576–82. doi: 10.1038/nrn1706.

- Razafimandimby A, Vigneau M, Beaucousin V, et al. Neural networks of emotional discourse comprehension. Paper presented at the Annual Meeting of the Organization for Human Brain Mapping, San Francisco; June 2009.

- Singer T, Critchley HD, Preuschoff K. A common role of insula in feelings, empathy and uncertainty. Trends in Cognitive Sciences. 2009;13(8):334–40. doi: 10.1016/j.tics.2009.05.001.

- Snijders TM, Vosse T, Kempen G, Van Berkum JJ, Petersson KM, Hagoort P. Retrieval and unification of syntactic structure in sentence comprehension: an fMRI study using word-category ambiguity. Cerebral Cortex. 2009;19(7):1493–503. doi: 10.1093/cercor/bhn187.

- Strange BA, Henson RNA, Friston KJ, Dolan RJ. Brain mechanisms for detecting perceptual, semantic, and emotional deviance. NeuroImage. 2000;12(4):425–33. doi: 10.1006/nimg.2000.0637.

- Tabert MH, Borod JC, Tang CY, et al. Differential amygdala activation during emotional decision and recognition memory tasks using unpleasant words: an fMRI study. Neuropsychologia. 2001;39(6):556–73. doi: 10.1016/s0028-3932(00)00157-3.

- Tabibnia G, Lieberman MD, Craske MG. The lasting effect of words on feelings: words may facilitate exposure effects to threatening images. Emotion. 2008;8(3):307–17. doi: 10.1037/1528-3542.8.3.307.

- Tesink CM, Petersson KM, Van Berkum JJ, van den Brink D, Buitelaar JK, Hagoort P. Unification of speaker and meaning in language comprehension: an fMRI study. Journal of Cognitive Neuroscience. 2009;21(11):2085–99. doi: 10.1162/jocn.2008.21161.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, et al. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage. 2002;15(1):273–89. doi: 10.1006/nimg.2001.0978.

- Wicker B, Keysers C, Plailly J, Royet JP, Gallese V, Rizzolatti G. Both of us disgusted in My insula: the common neural basis of seeing and feeling disgust. Neuron. 2003;40(3):655–64. doi: 10.1016/s0896-6273(03)00679-2.

- Willems RM, Hagoort P. Neural evidence for the interplay between language, gesture, and action: a review. Brain and Language. 2007;101(3):278–89. doi: 10.1016/j.bandl.2007.03.004.

- Willems RM, Hagoort P, Casasanto D. Body-specific representations of action verbs: neural evidence from right- and left-handers. Psychological Science. 2010;21(1):67–74. doi: 10.1177/0956797609354072.

- Willems RM, Oostenveld R, Hagoort P. Early decreases in alpha and gamma band power distinguish linguistic from visual information during sentence comprehension. Brain Research. 2008a;1219:78–90. doi: 10.1016/j.brainres.2008.04.065.

- Willems RM, Özyürek A, Hagoort P. When language meets action: the neural integration of gesture and speech. Cerebral Cortex. 2007;17(10):2322–33. doi: 10.1093/cercor/bhl141.

- Willems RM, Özyürek A, Hagoort P. Seeing and hearing meaning: ERP and fMRI evidence of word versus picture integration into a sentence context. Journal of Cognitive Neuroscience. 2008b;20(7):1235–49. doi: 10.1162/jocn.2008.20085.

- Willems RM, Özyürek A, Hagoort P. Differential roles for left inferior frontal and superior temporal cortex in multimodal integration of action and language. NeuroImage. 2009;47(4):1992–2004. doi: 10.1016/j.neuroimage.2009.05.066.

- Willems RM, Toni I, Hagoort P, Casasanto D. Neural dissociations between action verb understanding and motor imagery. Journal of Cognitive Neuroscience. 2010;22(10):2387–400. doi: 10.1162/jocn.2009.21386.