Abstract

Background

The US FDA has been collecting information on medical devices involved in significant adverse events since 1984. Researchers have used these reports to advise clinicians on the potential risks and complications of using these devices.

Objective

To examine adverse events related to the use of Clinical Information Systems (CIS) as reported in FDA databases.

Methods

Three large, national, adverse event medical device databases were examined for reports pertaining to CIS.

Results

One hundred and twenty unique reports (out of more than 1.4 million reports) were found, representing 32 manufacturers. The manifestations of these adverse events included missing or incorrect data, data displayed for the wrong patient, chaos during system downtime, and systems unavailable for use. Analysis of these reports identified events associated with system design, implementation, use, and support.

Conclusion

The identified causes can be used by manufacturers to improve their products and by clinical facilities and providers to adjust their workflow and implementation processes appropriately. The small number of reports found indicates a need to raise awareness regarding publicly available tools for documenting problems with CIS and for additional reporting and dialog between manufacturers, organizations, and users.

Keywords: Electronic health records, information systems, mandatory reporting, medical errors, United States Food and Drug Administration

1. Background

A popular anti-virus program update led to cancelled surgeries [1].

Routine maintenance on the Australian Medicare patient verification system caused an estimated 1,300-1,800 pathology report results to be assigned to the wrong family member [2].

A drug formulary update altered the default and alternate dosage amounts for certain medications [3].

These are just a few examples of the risks and consequences of updating Clinical Information Systems (CISs) reported recently in the mainstream press.

Because adverse events involving clinical information systems can have catastrophic consequences, much discussion has ensued over who bears responsibility for them and what level of reporting and oversight is appropriate [4-6]. Koppel and Sittig, in separate articles, have called for increased reporting of near misses and errors to improve patient safety, as well as a review of the continued permissibility of the “Hold Harmless” clauses included in many vendor licensing agreements [4, 5]. In a recent position paper, the American Medical Informatics Association (AMIA) issued a similar statement labeling “Hold Harmless” clauses “unethical” under circumstances where software defects or errors are integral to the adverse event [7].

The call for increased vendor accountability and centralized reporting is not unique to clinical information systems. The Brennan Center for Justice, a non-partisan institute focused on democratic and judicial topics, recently released a report calling for the creation of a federal clearinghouse and oversight agency for voting machine failures [8]. This situation has many similarities to CISs: high cost of entry; relatively new technology; strong vendor control; a new certification organization; and minimal required reporting. The Brennan Center has called for the creation of a centralized, publicly available database with mandatory reporting, as well as the empowerment of a federal agency to investigate alleged issues and to enforce their correction. The Center expects these recommendations to result in higher quality systems and increased public confidence.

There are three sources of information regarding adverse events related to CISs. These include U.S. Food and Drug Administration (FDA) device databases, academic research on CISs and anecdotal reports in both the mainstream and academic press.

Foremost are the FDA databases. Since 1984, the FDA has collected reports of significant adverse events associated with medical devices. The core FDA requirement for manufacturers is to report within 30 days of becoming aware of a problem with a device. Key criteria for inclusion are devices that “(1) May have caused or contributed to a death or serious injury; or (2) Has malfunctioned and this device or a similar device that you market would be likely to cause or contribute to a death or serious injury, if the malfunction were to recur.” Reports must be submitted to the FDA either on paper or, with prior approval, electronically [9]. The FDA currently considers clinical information systems to be medical devices, but to date it has refrained from enforcing its regulatory requirements for them [10].

The data sources for the aforementioned research and for the current study are three databases supported by the FDA: Medical Device Reporting (MDR) [11], Manufacturer and User Facility Device Experience (MAUDE) [3] and Medical Product Safety Network (MedSun) [12, 13]. All three systems provide mechanisms for submitting and reporting adverse events resulting from the use of medical devices. All have on-line search capabilities, and all de-identify the reporting source. Event descriptions in the systems range from 60 to 4,000 characters in length. Basic metadata, such as the date the report was received by the FDA, is associated with each report.

MDR is the oldest of the systems, covering mandatory reporting from 1984 to 1996 and voluntary reporting through June 1993 [11]. MAUDE contains voluntary reports since June 1993, user facility reports since 1991, distributor reports since 1993, and manufacturer reports since August 1996 [3].

The third database, MedSun, is used by a network focused on medical device safety. Its approximately 350 member organizations, including hospitals, nursing homes, and other healthcare organizations, receive training and regular communications regarding medical device safety [13].

The second source of information is the academic research on effects, both intentional and unintentional, of CIS deployment [14, 15], rights of users and consumers [16], and on deployment lessons learned [17].

Finally, there are reports of adverse events associated with CIS deployment. A well-known example is that of Children’s Hospital of Pittsburgh, where the rollout of a Computerized Provider Order Entry (CPOE) system was followed by a significant increase in patient mortality [18]. In this particular case, the findings have been largely attributed to the way the CPOE was rolled out and its impact on hospital workflow, since a second hospital deploying the same software a year later saw no significant change in mortality [18, 19]. Nevertheless, these publications highlight the potentially severe side effects that can result from CIS implementation, independent of system malfunctions.

These in-depth studies of CIS usage have focused on a small number of sites or a particular hospital network. This study, instead, looks across the board at adverse events reported from both manufacturers and user facilities around the world.

A related area of development is the use of automated systems to feed Spontaneous Reporting Systems (SRSs) for adverse drug events (ADEs). The ADE Spontaneous Triggered Event Reporting (ASTER) system was implemented to automatically submit ADE reports to the FDA with minimal additional physician involvement. The pilot was successful: 217 reports were submitted during a five-month period by 26 physicians who frequently discontinued medications due to ADEs (on average 1,422/year) but had not submitted any reports in the previous year. This type of semi-automated reporting can increase medical knowledge and physician involvement in ADE reporting [20]. This input was welcomed by the FDA, which provided advice during system design [21].

2. Objectives

This paper examines historical FDA data in order to categorize reports and to gain understanding of the documented issues.

3. Methods

Given the current national focus on Meaningful Use of Electronic Health Records, we focused solely on reports on general clinical information systems, excluding isolated clinical domains such as Blood Bank systems or Picture Archiving and Communication Systems (PACS). The clinical information systems we studied included Laboratory Information Systems, Perioperative Systems, and electronic health records (EHRs). It was challenging to identify these systems because, unlike PACS and Blood Bank systems, they have no product codes in the FDA databases. Another problem was deciding where to draw the line: is a charting system or image viewer considered a clinical information system? We decided to assemble a list of manufacturers and product names from the Certification Commission for Health Information Technology (CCHIT) list [22] and from the list of vendors evaluated by KLAS, a third-party reviewer of healthcare systems [23], and to use these vendors as our starting point and basic inclusion criterion.

Unique lists of manufacturer names were extracted from downloads of the MAUDE and MDR databases. These lists were manually searched for logical variations of the vendor names (e.g., “MEDITECH” and “MEDI-TECH”, or “CERNER” and “CERNER CORP.”). These vendor names were used to find the corresponding generic product names (e.g., “INFORMATION”, “S/W” and “SOFTWARE”) and product codes. The databases were then searched for these terms, using wildcards to permit as many matches as possible, and the resulting lists were scrutinized for relevant reports. The focus of this study was CISs, so other types of systems, such as Blood Bank software, patient monitoring, and treatment planning systems, were excluded. Furthermore, the list of reports is limited to commercial products. We recognize that many hospitals and physician practices use homegrown Clinical Information Systems. However, the FDA databases only include reports on commercial systems, as in-house systems developed solely for internal use are exempt from the reporting requirement [10]. Consequently, reports on in-house systems are not included in this analysis. The MedSun database does not support downloads, so all of the search terms (including common misspellings) were entered into its search engine manually.
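The name-matching and filtering step described above can be sketched as follows. This is a minimal illustration, not the procedure used in the study (which relied on manual review); it assumes that spelling variants differ only in case, punctuation, and trailing corporate suffixes. The suffix list, the exclusion keywords, the two-vendor list, and the function names `normalize_vendor`, `matches_generic_term`, and `is_candidate` are all hypothetical.

```python
import fnmatch
import re

# Hypothetical list of trailing corporate suffixes treated as noise.
CORPORATE_SUFFIXES = {"CORP", "CORPORATION", "INC", "LLC", "LTD", "CO"}

# Wildcard patterns built from the generic product-name examples in the text.
GENERIC_PATTERNS = ["*INFORMATION*", "*S/W*", "*SOFTWARE*"]

# Hypothetical keywords for the system types the study excluded.
EXCLUDED_KEYWORDS = ("BLOOD BANK", "PACS", "PATIENT MONITOR", "TREATMENT PLANNING")


def normalize_vendor(name: str) -> str:
    """Map spelling variants such as 'MEDI-TECH' and 'CERNER CORP.' to one key."""
    # Uppercase, drop punctuation, and split on whitespace.
    tokens = re.sub(r"[^A-Z0-9\s]", "", name.upper()).split()
    # Strip trailing corporate suffixes ("CERNER CORP" -> "CERNER").
    while len(tokens) > 1 and tokens[-1] in CORPORATE_SUFFIXES:
        tokens.pop()
    # Join without spaces so "MEDI TECH" and "MEDITECH" also collide.
    return "".join(tokens)


def matches_generic_term(product_name: str) -> bool:
    """True if the product name matches any wildcard pattern (case-insensitive)."""
    name = product_name.upper()
    return any(fnmatch.fnmatchcase(name, pattern) for pattern in GENERIC_PATTERNS)


def is_candidate(vendor: str, product_name: str, listed_vendors: set) -> bool:
    """Apply the vendor-list inclusion criterion and the system-type exclusions."""
    if normalize_vendor(vendor) not in listed_vendors:
        return False
    name = product_name.upper()
    if any(keyword in name for keyword in EXCLUDED_KEYWORDS):
        return False
    return matches_generic_term(name)


# Hypothetical subset of the CCHIT/KLAS vendor lists, normalized once.
LISTED_VENDORS = {normalize_vendor(v) for v in ["MEDITECH", "CERNER"]}
```

Normalizing names once into a set keeps the vendor check a constant-time lookup; a real pipeline would still need the manual review the study used, since automated rules cannot resolve every variant.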

Once a set of records was found, the generic product description terms for these records were used to conduct additional searches in all of the databases. This process was iterative, and “finds” in one database were used as search terms in the other databases. In addition, in the MAUDE database, the device type for discovered reports was used as a search term. This iterative process was repeated until no new terms or records were discovered. Next, the reports were abstracted and maintained off-line.
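The iterative search amounts to expanding a set of search terms until it reaches a fixed point. The sketch below assumes each database is available as an in-memory list of records with hypothetical `id`, `generic_name`, and (for MAUDE) `device_type` fields, and it uses simple substring matching; it does not model the actual query interfaces of the FDA databases.

```python
from typing import Dict, List, Set, Tuple

Record = Dict[str, str]


def record_terms(rec: Record, db_name: str) -> Set[str]:
    """Terms a found record contributes to later searches."""
    terms: Set[str] = set()
    if rec.get("generic_name"):
        terms.add(rec["generic_name"].upper())
    # In MAUDE, the device type of a discovered report was also reused.
    if db_name == "MAUDE" and rec.get("device_type"):
        terms.add(rec["device_type"].upper())
    return terms


def matches(rec: Record, terms: Set[str]) -> bool:
    """Case-insensitive substring match against the searchable fields."""
    text = " ".join([rec.get("generic_name", ""), rec.get("device_type", "")]).upper()
    return any(term in text for term in terms)


def snowball_search(
    databases: Dict[str, List[Record]], seed_terms: Set[str]
) -> Tuple[Set[str], Set[Tuple[str, str]]]:
    """Repeat the search in every database until no new terms or records appear."""
    terms = {t.upper() for t in seed_terms if t}
    found: Set[Tuple[str, str]] = set()  # (database name, record id)
    while True:
        new_terms: Set[str] = set()
        new_found: Set[Tuple[str, str]] = set()
        for db_name, records in databases.items():
            for rec in records:
                if matches(rec, terms):
                    new_found.add((db_name, rec["id"]))
                    # Finds in one database become search terms for all of them.
                    new_terms |= record_terms(rec, db_name)
        new_terms -= terms
        new_found -= found
        if not new_terms and not new_found:
            return terms, found
        terms |= new_terms
        found |= new_found
```

Because both sets can only grow and are bounded by the data, the loop always terminates, mirroring the stopping rule "until no new terms or records were discovered."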

Twenty-eight duplicate reports were eliminated, including multiple reports from the same user facility to a particular vendor and multiple reports on the same issue from different user facilities. In one case there were ten reports in total of a single error, and in another there were five; each of the remaining duplicated reports had only a single additional copy. Virtually identical text was considered evidence of duplicate entries. In addition, the date the report was received by the FDA, the date of the event (when reported), and the problem description, including manufacturer comments, were all used to identify and eliminate duplicate reports.
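A minimal sketch of such a duplicate screen is shown below, assuming text similarity is measured with Python's `difflib.SequenceMatcher`. The two thresholds (0.95 for virtually identical text, 0.8 when the event dates also agree) and the record fields `received`, `event_date`, and `description` are illustrative assumptions; in the study, duplicates were judged by the authors rather than by a fixed cutoff.

```python
from difflib import SequenceMatcher
from typing import Dict, List


def similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] after normalizing case and whitespace."""
    norm_a = " ".join(a.lower().split())
    norm_b = " ".join(b.lower().split())
    return SequenceMatcher(None, norm_a, norm_b).ratio()


def is_duplicate(r1: Dict, r2: Dict, text_threshold: float = 0.95,
                 dated_threshold: float = 0.8) -> bool:
    """Hypothetical rule combining text similarity with the reported event date."""
    sim = similarity(r1["description"], r2["description"])
    if sim >= text_threshold:  # virtually identical text
        return True
    # A looser text match counts only when both reports give the same event date.
    same_event = (r1.get("event_date") is not None
                  and r1.get("event_date") == r2.get("event_date"))
    return same_event and sim >= dated_threshold


def deduplicate(reports: List[Dict]) -> List[Dict]:
    """Keep the earliest-received report from each group of duplicates."""
    unique: List[Dict] = []
    # ISO-format date strings sort chronologically.
    for rep in sorted(reports, key=lambda r: r["received"]):
        if not any(is_duplicate(rep, kept) for kept in unique):
            unique.append(rep)
    return unique
```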

If another occurrence of an error was found at a later time, the second report was attributed to user error if the vendor had issued a patch and notified users. Similarly, the report was tagged as a support issue when the vendor failed to carry the fix forward to future releases.
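The attribution rule for recurring errors can be written as a small decision function. The field names are hypothetical, and the precedence (a fix that was not carried forward is treated as a support issue even if a patch had been issued) is an assumption, since the text does not say how a report meeting both conditions was handled. Reports matching neither rule fall back to the regular cause categories, shown here as "Unassigned".

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recurrence:
    """Facts about a later report of an error that had been reported before."""
    patch_issued: bool
    users_notified: bool
    # False if the fix was missing from a later release; None if unknown.
    fix_carried_forward: Optional[bool] = None


def attribute_recurrence(r: Recurrence) -> str:
    # Assumed precedence: a fix lost in a later release is a vendor support issue.
    if r.patch_issued and r.fix_carried_forward is False:
        return "Support"
    # A patch existed and users were told about it: the site had not applied it.
    if r.patch_issued and r.users_notified:
        return "User behind on patches"
    # Otherwise the report is classified by its other characteristics.
    return "Unassigned"
```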

One of the authors (RBM) initially evaluated all candidate reports and assigned cause categories. We used a grounded theory approach to establish the categories of errors contained in the three databases, rather than an existing error classification system, because we were striving to understand people’s perceptions, intentions and actions in reporting these errors, regardless of their source or the time or place from which they originated. Furthermore, inconsistencies in the reported data precluded the consistent application of any formal error classification system. The classifications and categories were then reviewed, discussed, and revised by all of the authors until full agreement was reached. The final set of categories is described in ►Table 1.

Table 1.

Identified causes in reported CIS errors

| Cause | Explanation |
|---|---|
| Functionality | A particular system feature was assumed by users but was not present, or the system behaved in an unexpected manner. This type of error includes drug or allergy rules that were not triggered as expected, in-process (versus final) notes that were available for sign out, incorrect delivery of messages within the system, and updated orders not being discontinued under certain circumstances. |
| Incorrect calculation | Incorrect values derived from available data, or missing data or values assigned to the wrong patient. Includes errors in calculations, such as date of delivery or drug dose, as well as interchanges of data between patients. |
| Incorrect content | “Rule”-based logic was incomplete or incorrect. Includes drug-allergy or drug-drug alerts, incorrect test reference ranges, the system allowing “absurd” combinations of drugs or doses that are not possible with existing pill sizes, etc. |
| Insufficient detail | Insufficient detail was available to determine the cause of the issue. These reports blamed the system for the adverse event but did not provide specifics. |
| Integration | Pertaining to data exchange between products, which may or may not belong to the same vendor. |
| Large data volume | Errors that occurred when large values or large numbers of items were present (often buffer overflows). |
| Other malfunction | Referencing an error other than those listed above. This category includes software “bugs”, such as reuse of unique identifiers. |
| Support | Statements describing issues with, or lack of, vendor support. |
| Transition of care | Errors associated with CISs that involved patients moving between levels of care. |
| Upgrade | Related to process errors or side effects from system upgrades. |
| User behind on patches | Vendor response indicated that the specified problem had been fixed and a software patch released. |
| User interface | Problem due to poor display of information or a difficult-to-use system. |

4. Results

A total of 120 unique reports were discovered. These reports identified 32 different manufacturers over a period of 18 years. Three vendors were identified in over half of all reported events. Seventy-four percent of the reports were filed by healthcare professionals. The breakdown of the report sources is shown in ►Table 2. Note that more than one category could be indicated on a report and that half of the records did not contain a reporting source, so the total does not match the number of records. The inferred causes, report counts and representative examples are tabulated in ►Table 3.

Table 2.

Reports by source

| Source | Count | Percentage |
|---|---|---|
| Health professional | 47 | 39% |
| Company representative | 15 | 13% |
| User facility | 13 | 11% |
| Foreign | 7 | 6% |
| Other | 2 | 2% |

Table 3.

Reports by discovered cause

| Cause | Count | Example of reported problem |
|---|---|---|
| User interface | 63 (52.5%) | “The sound of the beep is the same whether it is a ‘correct patient’ scan or it is an incorrect patient.” |
| Integration | 21 (17.5%) | “When an update is made to the frequency field on an existing prescription, the frequency schedule ID is not simultaneously updated on new orders sent to the pharmacy via [application].” |
| Incorrect calculation | 18 (15.0%) | “An additive value of the metric and english converted to metric by sys.” |
| Functionality | 16 (13.3%) | “The final document then displayed in the sign out option for final signature, however, the temporary document was actually signed out and available for printing as the final signed out report.” |
| Incorrect content | 13 (10.8%) | “Patient had a known allergy to Tylenol which had been entered into the system last year. We cannot show that the pharmacist entering the medication or the nurse documenting the medication got an alert to say the patient was allergic to the medication, as they should have.” |
| Support | 12 (10.0%) | “This is not the first time that a safety issue with the software has been reported to the software vendor without any further communication to all end users with the warning of the issue at hand.” |
| Upgrade | 11 (9.2%) | “There was an error in the procedure used to push the new program to the workstations that resulted in the demo driver being activated.” |
| Other malfunction | 7 (5.8%) | “In the message center inbox, a user can make changes to a new pending message and save the changes without saving the message to a patient chart. If the user then performs the same task on a second pending message, the system replaces the entire text of the second message with the entire text of the first message.” |
| Large data volume | 5 (4.2%) | “If the date range is too extensive [i.e., in the request to display results] and the volume of cases to scan is over 10,000, some reports are not printed. There is no audit trail to trace the unprinted documents.” |
| User behind on patches | 5 (4.2%) | “Product was already corrected in initial report of problem under [release code]. The client validated the correction in live in 2002.” [Report filed with FDA in 2006.] |
| Insufficient detail | 2 (1.7%) | “Hospital wide breakdown of system of electric charts and electric order gadgets resulted in confusion, neglect, failed communications and delayed treatments in the days immediately following the surgery…” |
| Transition of care | 2 (1.7%) | “Examples include orders to transfer patient from ICU to a non-ICU bed. Patient is moved to another bed but recipient care team does not receive communication and was not aware patient was under their charge.” |
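The percentages in Tables 2 and 3 can be reproduced by dividing each count by all 120 reports. The sketch below checks this, assuming the tables round halves up; Python's built-in `round()` rounds halves to even (`round(12.5)` is `12`), so integer half-up rounding is used instead to match the 13% shown for 15 of 120 reports. The dictionary labels are copied from the tables and are otherwise illustrative.

```python
# Counts copied from Tables 2 and 3; percentages use all 120 reports as the base.
TOTAL_REPORTS = 120

TABLE2 = {"Health professional": 47, "Company representative": 15,
          "User facility": 13, "Foreign": 7, "Other": 2}

TABLE3 = {"User interface": 63, "Integration": 21, "Incorrect calculation": 18,
          "Functionality": 16, "Incorrect content": 13, "Support": 12,
          "Upgrade": 11, "Other malfunction": 7, "Large data volume": 5,
          "User behind on patches": 5, "Insufficient detail": 2,
          "Transition of care": 2}


def percent_half_up(count: int, total: int, decimals: int) -> str:
    """Format count/total as a percentage, rounding halves up (12.5 -> 13)."""
    scale = 10 ** decimals
    # Integer arithmetic avoids binary floating-point surprises at the halves.
    scaled = (2 * count * 100 * scale + total) // (2 * total)
    if decimals == 0:
        return f"{scaled}%"
    whole, frac = divmod(scaled, scale)
    return f"{whole}.{frac:0{decimals}d}%"


for label, count in TABLE3.items():
    print(f"{label}: {count} ({percent_half_up(count, TOTAL_REPORTS, 1)})")
print("Sum of Table 3 counts:", sum(TABLE3.values()))
```

The Table 3 counts sum to 175, more than the 120 unique reports, which suggests that a single report could be assigned to more than one cause category; the Table 2 counts sum to 84.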

The manifestations of these adverse events included missing or incorrect data, data displayed for the wrong patient, chaos during system downtime, and hung systems (system unavailable for use). In addition, while many reports note that the problem was detected before harm could be done, adverse patient outcomes were reported, including delays in diagnosis or treatment, unnecessary or emergency procedures and/or treatments, incorrect medication administration, patient injury or disability, and death. Given the brevity of the reports, it is difficult to classify the severity of each problem. Approximately 80% of the records indicated whether or not there was an associated adverse event and the type of outcome (disability, death, etc.).

5. Discussion

5.1 Volume of Records

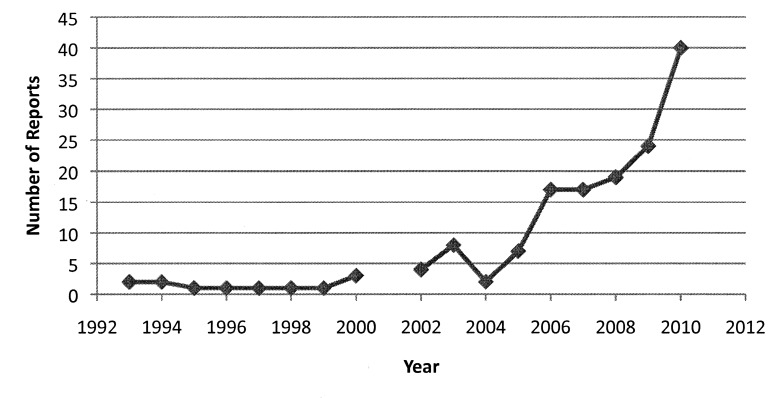

In the past few years, the number of reports attributed to CISs, including Electronic Health Records (EHRs) and Computerized Provider Order Entry (CPOE), has grown. If reports continue to accumulate in 2010 at the rate recorded to date, the annual total will be two-thirds higher than in 2009, as shown in ►Figure 1.

Fig. 1.

CIS adverse event records by year. The 2010 total is extrapolated from the rate of reported records through March 31, 2010.
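The extrapolation in Figure 1 can be sketched as a day-proportional (linear) projection. The caption does not specify the exact method, so the constant-daily-rate assumption is ours, and because the paper does not list the underlying yearly counts, the numbers in the usage line are hypothetical.

```python
from datetime import date


def extrapolate_annual(count_to_date: int, as_of: date) -> float:
    """Scale a year-to-date count to a full year, assuming a constant daily rate."""
    start = date(as_of.year, 1, 1)
    days_elapsed = (as_of - start).days + 1  # count as_of itself
    days_in_year = (date(as_of.year + 1, 1, 1) - start).days
    return count_to_date * days_in_year / days_elapsed


def percent_increase(new: float, old: float) -> float:
    """Relative change from old to new, in percent."""
    return 100.0 * (new - old) / old


# Hypothetical counts for illustration only; the paper does not list them.
projected_2010 = extrapolate_annual(18, date(2010, 3, 31))  # 18 * 365 / 90
print(projected_2010, percent_increase(projected_2010, 44))
```

Through March 31, 2010, 90 of 365 days have elapsed, so the projection multiplies the year-to-date count by about 4.06; an increase "two-thirds higher" corresponds to a `percent_increase` of roughly 66.7%.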

The volume of reports is low, but so is the current market penetration of Clinical Information Systems. According to a 2008 survey, fewer than 2% of U.S. hospitals had a comprehensive EHR system, and only 8-12% had a basic system in one or more departments [24]. EHRs have been touted as part of the solution for improving the quality of medical care and as having the potential to reduce costs in the long term. With financial incentives for providers and hospital systems to adopt an EHR, and financial penalties for failing to adopt one and use it in a “meaningful” way [25], we can expect these numbers to increase. There is already evidence that EHR adoption in the US is increasing [26]. A reasonable consequence of increased deployment is a corresponding increase in these systems’ involvement in adverse patient events, regardless of which entity is mainly responsible for a given event.

In his testimony to the Health Information Technology (HIT) Policy Committee Adoption/Certification Workgroup on February 25, 2010, Jeffrey Shuren, Director of the FDA’s Center for Devices and Radiological Health, reported that over the past 10 years, 260 voluntary reports had been filed with the FDA [27]. Shuren’s count included reports from Blood Bank and Radiology systems, which were excluded from this study. We identified and excluded 164 Blood Bank reports and over 200 other reports that were classified in the same way as our reports but did not meet our inclusion criteria of being on the list of certified and recommended vendors and being a general CIS. Our results align well with those reported by Shuren, whose key categories of concern were errors of commission, which include wrong-patient errors; errors of omission, including loss of patient data; analysis or calculation errors; and incompatibility or interface errors. We categorized these problems as Functionality, Calculation, Incorrect Content and Integration. These types of errors comprised over half of the errors we saw.

5.2 Key Lessons Identified

A number of lessons can be inferred from these results: first, very few reports are being filed; second, there are known dangerous areas in the design, implementation, use, and support of CISs; and finally, there is clearly a need for three-way communication between manufacturers, organizations, and users.

The number of unique reports found was very small: a mere 120 out of more than 1.4 million reports in the combined databases. Based on our considerable experience with CISs, it is safe to say that people are not reporting all adverse events related to CISs. Likely causes of the low number of reports include the current low market penetration of CISs and users’ lack of knowledge about what types of incidents should be reported [6]. In addition, the difficulty of assigning responsibility for an adverse event in a complex, integrated environment may contribute. The aforementioned “Hold Harmless” clause in many vendor end-user license agreements may also deter reporting. A general lack of awareness among end users of the availability and anonymity of the FDA reporting system may contribute to the low number of reports as well. Lastly, reporting of errors and adverse events involving CISs and EHRs is voluntary and, as discussed above, there are substantial barriers to knowing when and where to report an event.

The number of manufacturers represented in the list of reports is even smaller, a mere 32. It is implausible that no other vendors have ever had a software “bug” that resulted in an adverse event in a clinical setting over the past 18 years. Furthermore, over half of all reported events were associated with only 3 vendors. In addition to the reasons listed above regarding difficulties in reporting, it may be that these three vendors have a much larger market share, that these products have a large number of issues, or that these manufacturers have both a) submitted many of the events they have identified themselves, and b) encouraged, or at least not discouraged, their clients to submit reports.

There is limited awareness of these databases. Even when users know of them, submitting a report requires significant effort. When there is no requirement or expected value for the reporter (i.e., “what’s in it for me?”), the likelihood of a report being submitted is low. In addition, it is extremely difficult to extract reports from these databases, so the value of submitting a report is further diminished because access to the data is virtually non-existent. A potential solution might be to add a “File Report with FDA” feature to all certified CISs to facilitate the reporting process, akin to the approach piloted by ASTER for adverse drug events [28]. This enhancement would link users directly to the FDA web site. Such a function could auto-populate many of the necessary fields (e.g., date, vendor ID, system type, screen print), leaving only the details of the problem for the user to fill in and thus further reducing the reporting burden for end users. Copies of these reports could also be sent to vendors to strengthen their own software quality assurance processes.
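The proposed "File Report with FDA" feature can be sketched as a draft object that the CIS pre-populates from what it already knows about itself. The class, its field names, and `prefill_report` are hypothetical illustrations of the fields listed above; they are not the FDA's actual report schema or submission interface.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class DraftDeviceReport:
    """Hypothetical draft of an adverse event report; not the FDA's form schema."""
    # Fields the CIS can fill in automatically.
    report_date: date
    vendor_id: str
    system_type: str
    screen_print: Optional[bytes] = None
    # The only field left for the user.
    problem_description: str = ""

    def missing_fields(self) -> List[str]:
        """Names of required fields the user still has to complete."""
        return [] if self.problem_description.strip() else ["problem_description"]


def prefill_report(system_info: dict, screen_print: Optional[bytes],
                   today: date) -> DraftDeviceReport:
    """Build a draft from data the running system already knows about itself."""
    return DraftDeviceReport(
        report_date=today,
        vendor_id=system_info["vendor_id"],
        system_type=system_info["system_type"],
        screen_print=screen_print,
    )
```

In this design the user sees a form with everything but the problem description already completed, which is the burden reduction the paragraph describes; the same draft could be copied to the vendor.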

Finally, there are no clear-cut guidelines on what types or severity of events should be reported. Should user errors be included? Are there threshold events, akin to the Joint Commission “Never Events”, that can be identified for Clinical Information Systems [29]? These could include unplanned system downtime exceeding a predefined number of hours, or situations where a patient is harmed and a computer is involved in some manner, such as when a patient is given a wrong medication or dose, or medications or treatments are administered at the wrong time [6]. Many of the reports from the FDA databases specifically stated that the error was detected before harm could be done. Should these “near misses” continue to be reported, as with the Federal Aviation Administration, which requires reporting of all accidents and near misses [30]?
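Threshold events of the kind suggested above could be encoded as explicit reporting rules. The sketch below is hypothetical: the event fields, the 4-hour downtime threshold, and the near-miss switch are illustrative placeholders, since no such guideline currently exists.

```python
from dataclasses import dataclass


@dataclass
class CisEvent:
    """Hypothetical summary of an incident involving a clinical information system."""
    unplanned_downtime_hours: float = 0.0
    computer_involved: bool = False
    patient_harmed: bool = False
    harm_averted: bool = False  # a "near miss"


def should_report(event: CisEvent, downtime_threshold_hours: float = 4.0,
                  include_near_misses: bool = True) -> bool:
    """Apply threshold rules of the kind suggested in the text."""
    # Rule 1: unplanned downtime longer than a predefined number of hours.
    if event.unplanned_downtime_hours > downtime_threshold_hours:
        return True
    # Rule 2: a computer was involved and a patient was harmed (or nearly harmed).
    if event.computer_involved:
        return event.patient_harmed or (include_near_misses and event.harm_averted)
    return False
```

Making the near-miss rule a parameter reflects the open question in the text about whether such events should continue to be reported.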

Another lesson learned from this analysis is that certain key events are frequently associated with these CIS-related problems. These areas must be addressed collaboratively by manufacturers, organizations, and users. While it is difficult to address user interface issues overall, particular attention must be paid to system upgrades and data integration points, for example, when new hardware, software or clinical content is added to an existing system. Systems should be designed to be more transparent, so that users can more easily detect the results of errors such as wrong-patient errors and missing data.

It is important to note that adverse events are not solely due to system malfunctions. A few reports described chaotic environments when systems were unavailable. These reports illustrate the need for

1. trained clinical staff,
2. appropriate sanity checks and testing before implementing new applications or versions in the production environment,
3. checks and balances in clinical workflow,
4. increased software testing during the development process,
5. appropriate system backup plans, and
6. safe and effective downtime procedures in the event of system failure [14].

These reported events likely greatly underestimate the incidence of all adverse events attributable to CISs. The small sample size, the voluntary nature of the reporting system, the relative lack of vendor self-reporting, and the barriers to reporting discussed above all contribute to the biased nature of the available data. Further sources of bias include the possibility that some vendors may selectively report events involving errors that could easily be fixed or that had no negative impact on patient outcomes, and the restricted list of vendors used as a starting point. Finally, the data sources used are among the only publicly available longitudinal collections of data for researchers to analyze.

5.3 Recommendations to Improve the System

5.3.1 Clinical Information System Vendors

Clearly, there is a need for three-way communication between manufacturers, healthcare organizations, and users. Vendors must:

1. Listen to and solicit input from users;
2. Track and escalate identified errors in a manner visible to their customers and potential customers [7];
3. Act on submitted reports;
4. Target communication to all their clients regarding a particularly problematic feature or function in a way that reduces overall noise (i.e., separately from routine system enhancement notes); and
5. Provide a prepopulated Adverse Event form in a format acceptable to the FDA system(s), similar to the one produced by ASTER for Adverse Drug Events [20].

5.3.2 Healthcare Organizations and Users

Similarly, users and their organizations must:

1. Be encouraged to report errors to their local EHR oversight committee [31] or, if none exists, to one of the FDA databases;
2. Request enhancements and fixes from their vendors;
3. Be aware of vendor reports regarding their products; and
4. Stay up to date on system patches and updates.

5.3.3 U.S. Food and Drug Administration

Greater value for evaluating and reviewing Clinical Information Systems could be achieved by providing more consistency in data reporting. Recently, the Agency for Healthcare Research and Quality (AHRQ) released version 1.1 (beta) of its “Common Format – Device or Medical/Surgical Supply” in an attempt to provide this consistency [32]. In addition, the iHealth Alliance, which is “comprised of industry leaders from medical societies, liability carriers, patient advocacy groups and others dedicated to protecting the interest of patients and providers”, has created a website (www.ehrevent.org) to provide a safe and secure means of reporting EHR-related safety events. Briefly, this site provides a structured list of manufacturers and a predefined list of causes that submitters can use to characterize their reports. Unfortunately, to date, the data collected via this website are not available in the public domain, so they cannot be analyzed or monitored.

Finally, the Institute of Medicine has recently convened a committee to study “Patient Safety and Health Information Technology” [33]. This committee has been asked to review the evidence and experience from the field on how the use of clinical information systems affects the safety of patient care. Their report is due at the end of 2011.

6. Conclusions

The FDA databases offer a centralized location for reporting on and finding information about significant adverse events related to Clinical Information Systems. However, there is a need for increased awareness of the reporting requirements and enhanced user participation.

The discovered causes provide insight into common problems encountered with CISs and should be leveraged by manufacturers to improve their products. Users and facilities should be aware of potential issues, in particular with regard to workflow impact, integration between systems and upgrades. Everyone would benefit from forming partnerships to explore and resolve these issues.

This study demonstrates the complexity of deploying and maintaining Clinical Information Systems. These systems have the potential to add significant value in healthcare delivery, but require vigilance on the part of manufacturers, healthcare information technology facilities and providers. Steps that are not expected to be complex often are. Working together, users, facilities, CIS vendors and the FDA can build safe and effective clinical information systems that improve the efficiency and quality of healthcare in the United States.

6.1 Implications of results for practitioners and consumers

In order to improve quality of care, end users must be aware of and able to report issues with Clinical Information Systems. A federally administered centralized repository with mandatory reporting could potentially increase awareness of issues and, consequently, improve system quality.

Conflicts of Interest

The authors declare that they have no conflicts of interest in the research.

Protection of Human and Animal Subjects

Neither human nor animal subjects were included in this project.

References

- 1.NPR Staff Anti-virus program update wreaks havoc with PCs: NPR [Internet] 2010Apr 21 [cited 2010 Apr 25];Available from: http://www.npr.org/templates/story/story.php?storyId=126168997&sc=17&f=1001 [Google Scholar]

- 2. Dearne K. Medicare glitch affects records. The Australian [Internet] 2010 Apr 20 [cited 2010 Apr 25]; Available from: http://www.theaustralian.com.au/australian-it/medicare-glitch-affects-records/story-e6frgakx-1225855706275

- 3. MAUDE – Manufacturer and User Facility Device Experience [Internet] [cited 2010 Apr 15]; Available from: http://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfMAUDE/search.CFM

- 4. Koppel R, Kreda D. Health care information technology vendors' "hold harmless" clause: implications for patients and clinicians. JAMA 2009; 301(12): 1276-1278

- 5. Sittig DF, Classen DC. Safe electronic health record use requires a comprehensive monitoring and evaluation framework. JAMA 2010; 303(5): 450-451

- 6. Koppel R. Monitoring and evaluating the use of electronic health records. JAMA 2010; 303(19): 1918; author reply 1918-1919

- 7. Goodman KW, Berner ES, Dente MA, Kaplan B, Koppel R, Rucker D, et al. Challenges in ethics, safety, best practices, and oversight regarding HIT vendors, their customers, and patients: a report of an AMIA special task force. J Am Med Inform Assoc 2011; 18(1): 77-81 doi:10.1136/jamia.2010.008946

- 8. Norden L. Voting system failures: a database solution [Internet] New York, NY: Brennan Center for Justice; 2010 [cited 2010 Sep 20]; Available from: http://www.brennancenter.org/content/resource/voting_system_failures_a_database_solution/

- 9. CFR – Code of Federal Regulations Title 21 [Internet] [cited 2011 Jan 8]; Available from: http://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfcfr/CFRSearch.cfm?CFRPart=803&showFR=1&subpartNode=21:8.0.1.1.3.5

- 10. FDA policy for the regulation of computer products, 11/13/89 (Draft) [Internet] [cited 2011 Jan 28]; Available from: http://www.janosko.com/documents/FDA%20Policy%20Computer%20Products/FDAPolicyComputers1989.htm

- 11. MDR Database Search [Internet] [cited 2010 Apr 20]; Available from: http://www.accessdata.fda.gov/scripts/cdrh/cfdocs/cfmdr/search.cfm?searchoptions=1

- 12. MedSun Reports [Internet] [cited 2010 Apr 15]; Available from: http://www.accessdata.fda.gov/scripts/cdrh/cfdocs/medsun/searchReport.cfm

- 13. MedSun: Medical Product Safety Network [Internet] [cited 2010 Apr 15]; Available from: http://www.fda.gov/MedicalDevices/Safety/MedSunMedicalProductSafetyNetwork/default.htm

- 14. Campbell EM, Sittig DF, Guappone KP, Dykstra RH, Ash JS. Overdependence on technology: an unintended adverse consequence of computerized provider order entry. AMIA Annu Symp Proc 2007: 94-98

- 15. Campbell EM, Sittig DF, Ash JS, Guappone KP, Dykstra RH. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc 2006; 13(5): 547-556 doi:10.1197/jamia.M2042

- 16. Sittig DF, Singh H. Eight rights of safe electronic health record use. JAMA 2009; 302(10): 1111-1113

- 17. DeVore SD, Figlioli K. Lessons premier hospitals learned about implementing electronic health records. Health Aff (Millwood) 2010; 29(4): 664-667 doi:10.1377/hlthaff.2010.0250

- 18. Han YY, Carcillo JA, Venkataraman ST, Clark RSB, Watson RS, Nguyen TC, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics 2005; 116(6): 1506-1512 doi:10.1542/peds.2005-1287

- 19. Del Beccaro MA, Jeffries HE, Eisenberg MA, Harry ED. Computerized provider order entry implementation: no association with increased mortality rates in an intensive care unit. Pediatrics 2006; 118(1): 290-295 doi:10.1542/peds.2006-0367

- 20. Linder JA, Haas JS, Iyer A, Labuzetta MA, Ibara M, Celeste M, et al. Secondary use of electronic health record data: spontaneous triggered adverse drug event reporting. Pharmacoepidemiol Drug Saf 2010; 19(12): 1211-1215 doi:10.1002/pds.2027

- 21. Dal Pan GJ. Commentary on "Secondary use of electronic health record data: spontaneous triggered adverse drug event reporting" by Linder et al. Pharmacoepidemiol Drug Saf 2010; 19(12): 1216-1217 doi:10.1002/pds.2050

- 22. CCHIT [Internet] [cited 2010 Apr 15]; Available from: http://www.cchit.org/

- 23. Ambulatory EMR – Segment Profile – KLAS Helps Healthcare Providers by Measuring Vendor Performance [Internet] [cited 2010 Apr 15]; Available from: http://www.klasresearch.com/Research/Segments/Default.aspx?id=3&evProductID=33609&ReturnURL=%2fResearch%2fSegments%2fDefault.aspx%3fid%3d3%26evProductID%3d33609

- 24. Jha AK, DesRoches CM, Campbell EG, Donelan K, Rao SR, Ferris TG, et al. Use of electronic health records in U.S. hospitals. N Engl J Med 2009; 360(16): 1628-1638 doi:10.1056/NEJMsa0900592

- 25. H.R. 1 [111th]: American Recovery and Reinvestment Act of 2009 (GovTrack.us) [Internet] [cited 2011 Jan 14]; Available from: http://www.govtrack.us/congress/bill.xpd?bill=h111-1

- 26. Hsiao C, Beatty P, Hing E, Woodwell D, Rechtsteiner E, Sisk J. Products – Health E Stats – EMR and EHR Use by Office-based Physicians [Internet] [cited 2011 Jan 14]; Available from: http://www.cdc.gov/nchs/data/hestat/emr_ehr/emr_ehr.htm

- 27. Shuren J. Testimony of Jeffrey Shuren, Director of FDA's Center for Devices and Radiological Health [Internet] 2010; Available from: http://healthit.hhs.gov/portal/server.pt/gateway/PTARGS_0_10741_910717_0_0_18/3Shuren_Testimony 022510.pdf

- 28. ASTER Study [Internet] [cited 2010 Aug 10]; Available from: http://www.asterstudy.com/

- 29. Issue 42: Safely implementing health information and converging technologies | Joint Commission [Internet] [cited 2010 Aug 7]; Available from: http://www.jointcommission.org/SentinelEvents/SentinelEventAlert/sea_42.htm

- 30. Federal Aviation Administration. Aeronautical information manual – official guide to basic flight information and ATC procedures [Internet] 2010 Feb 11; Available from: http://www.faa.gov/air_traffic/publications/atpubs/aim/

- 31. Miller RA, Gardner RM. Recommendations for responsible monitoring and regulation of clinical software systems. American Medical Informatics Association, Computer-based Patient Record Institute, Medical Library Association, Association of Academic Health Science Libraries, American Health Information Management Association, American Nurses Association. J Am Med Inform Assoc 1997; 4(6): 442-457

- 32. PSO Privacy Protection Center – Device or Medical/Surgical Supply, including HIT Device (Beta) [Internet] [cited 2011 Jan 18]; Available from: https://www.psoppc.org/web/patientsafety/device-or-medical/surgical-supply-including-hit-device-beta

- 33. Patient Safety and Health Information Technology - Institute of Medicine [Internet] [cited 2011 Jan 18]; Available from: http://www.iom.edu/Activities/Quality/PatientSafetyHIT.aspx