1. Introduction

The anterior temporal lobes (ATL) are somewhat imprecisely defined, in part because most of what is known about this region comes from studies of patients with varying degrees of progressive deterioration of the ATL or from anatomical research with nonhuman primates, both of which will be reviewed here. However, theoretical models generally include the temporal pole and some cortex inferior to the pole, including perirhinal and anterior parahippocampal cortex, in their working definition of this region, while excluding structures such as the hippocampus itself and the amygdala. In recent literature, the ATL has been discussed as one homogeneous structure (Patterson, Nestor, & Rogers, 2007), when in actuality there is reason to believe that this region may have discrete functional subregions (Ding, Van Hoesen, Cassell, & Poremba, 2009; Martin, 2009; Moran, Mufson, & Mesulam, 1987). The goal of this study was to assess two plausible subdivisions: a sensory subdivision and a semantic category subdivision.

1.1. Sensory Organization of the ATL

Evidence for sensory parcellation of the ATL is found in anatomical studies of macaques and humans. In the macaque, the dorsolateral ATL receives projections from third-order auditory association cortex (Kondo, Saleem, & Price, 2003), and cells in this region are sensitive to complex auditory signals such as the vocalizations of conspecifics (Kondo, et al., 2003; Poremba, et al., 2003). Ventral aspects of the ATL receive projections from extrastriate visual cortex in the inferior temporal lobe, and cells in the monkey’s inferior ATL are sensitive to complex visual stimuli (Nakamura & Kubota, 1996; Nakamura, Matsumoto, Mikami, & Kubota, 1994). More medial aspects of the ATL receive projections from prepiriform olfactory cortex. Similar subdivisions have been reported in humans (Blaizot, et al., 2010; Ding, et al., 2009). Our recent meta-analytic review of brain imaging studies provides indirect support for this view by showing that the human ATL, like the monkey ATL, has an audio/visual, superior/inferior segregation of sensitivity based on input modality (Olson, Plotzker, & Ezzyat, 2007; see also Visser, Jefferies, & Lambon Ralph, 2009).

It is important to bear in mind that the boundaries between these sensory-specific regions are not sharp but gradual (Ding, et al., 2009). For instance, the boundary zone between visual and auditory regions, the upper bank of the anterior superior temporal sulcus (STS), has features of multimodal association cortex (Moran, et al., 1987). Moreover, the polar tip is distinct from the rest of the ATL in that it contains mostly multimodal cells and in that it sends most of its projections to, and receives most of its projections from, orbitofrontal and medial frontal cortices (Moran, et al., 1987). These findings indicate that the polar region of the ATL should be considered paralimbic cortex, possibly serving to integrate sensory information with affective information (Kondo, Saleem, & Price, 2005; Moran, et al., 1987; Olson, et al., 2007). In sum, anatomical findings from macaques and humans indicate that there are sensory subdivisions in the ATL.

1.2 The Hub Model

This rich pattern of sensory connectivity has led scientists to speculate that the ATL serves as a single unifying convergence zone, or hub, especially with regard to semantic memory. The formation of, and access to, conceptual knowledge relies on a complex orchestration of functions, including episodic memory; emotional valence and salience; modality-specific, polymodal, and sensory functions; and higher-order executive and regulatory functions. The ATL is well positioned to meet this requirement: it has rich connections to the amygdala, the basal forebrain, and prefrontal regions, and it lies near secondary auditory cortices and the termination of the ventral visual pathway. One prominent hypothesis of ATL function, termed the Semantic Hub Account, proposes that the ATLs serve as a hub, linking together sensory-specific and semantic associations located throughout the brain (McClelland & Rogers, 2003; Patterson, et al., 2007). An updated version of this account, termed the “hub-and-spoke” model, proposes that the ATL serves as an amodal, category-general processor that is connected to other, category-specific cortical regions (Lambon Ralph, Sage, Jones, & Mayberry, 2010; Pobric, Jefferies, & Lambon Ralph, 2010b).

A central theme of the Hub Account is that the ATL is amodal, which is necessary for accessing concepts that must be retrieved on the basis of different sensory cues, such as a sound, an image, or a word. Evidence for this view is drawn primarily from studies of patients with semantic dementia, a disease characterized by a progressive and rapid loss of semantic knowledge and by cell loss that, in its early stages, is localized to anterior aspects of the temporal lobe. Patients with this disorder have amodal receptive and expressive semantic deficits that are observed in response to pictures, words, sounds, and even olfactory information (Patterson, et al., 2007; Rogers, et al., 2006).

These findings are inconsistent with the neuroanatomical evidence reviewed earlier, which suggests that there is sensory segregation of ATL function. There are other reasons to be skeptical about claims of amodality in the ATL as well. Patients with semantic dementia have cell loss that extends into regions beyond the ATL, including prefrontal cortex and inferior temporal lobe extending into lateral temporal cortex (Hodges, 2007). Because semantic dementia progresses rapidly and diffusely, it is difficult to know whether more discrete cell loss, say to inferior aspects of the ATL, would result in semantic memory deficits limited to the visual modality, as predicted by anatomical findings (Moran, et al., 1987). Moreover, ATL resection for epilepsy rarely leads to severe, amodal semantic deficits (Drane, et al., 2008). Indeed, a recent meta-analysis reported that semantic tasks using visual stimuli tended to show greater activations in the inferior ATL, while similar tasks using verbal stimuli showed greater activations in the superior ATL (Visser, et al., 2009). It is therefore possible that there are functional subdivisions within the ATLs with regard to the sensory modality of the stimulus material.

1.3 The Social Knowledge Hypothesis

Anatomical findings indicate that other functional subdivisions may also be present within the ATL. The ATL is highly interconnected with both the amygdala and orbital/medial prefrontal cortex via a large white matter tract, the uncinate fasciculus. Like other paralimbic regions, the ATL sends projections to, and receives projections from, the basal forebrain and hypothalamus (Kondo, et al., 2003, 2005). Functionally, the ATL is frequently activated by social tasks and stimuli (Olson, et al., 2007). For instance, ATL activations are often reported in tasks that require participants to apply theory of mind, mentalize, or understand deception (Frith & Frith, 2003; Olson, et al., 2007; Ross & Olson, 2010). Some investigators, including ourselves, have attempted to reconcile these findings with research showing that portions of the ATL have a role in processing general semantic knowledge (Patterson, et al., 2007) by proposing that portions of the ATL represent a specific type of semantic knowledge: social semantic concepts (Moll, Zahn, de Oliveira-Souza, Krueger, & Grafman, 2005; Olson, et al., 2007; Ross & Olson, 2010; Simmons & Martin, 2009; Simmons, Reddish, Bellgowan, & Martin, 2010; Zahn, et al., 2007; Zahn, et al., 2009). We term this the social knowledge hypothesis. This hypothesis is based on the premise that the ATL contains a specialized subregion devoted to processing the meaning of social stimuli.

There are three lines of evidence supporting the social knowledge hypothesis. First, the ATL's anatomical location and pattern of connectivity, as reviewed earlier, are highly suggestive. Second, older ablation studies in monkeys reported gross changes in social behavior following bilateral ATL lesions that left the amygdala intact. The changes included failure to recognize and abide by the troop's social structure, maternal neglect, and failure to produce or respond to the social signals of other monkeys (Olson, et al., 2007). Third, as noted earlier, the ATL is commonly activated in fMRI studies involving various theory of mind tasks and thus is considered part of the social brain network (Frith & Frith, 2010; Moll, et al., 2005; Olson, et al., 2007; Simmons & Martin, 2009). More recently, it was shown that social concepts, such as the words “truthful”, “narcissistic”, or “helpful”, preferentially activate portions of the right superior ATL (Zahn, et al., 2007; Zahn, et al., 2009). We found that the activations associated with processing the meaning of social words closely overlapped with activations elicited by various mentalizing tasks (Ross & Olson, 2010).

We note that the social knowledge hypothesis is not necessarily incompatible with the Hub Account. Both processes could be present in the ATL region, but with somewhat different anatomical loci (see the General Discussion for further discussion of this topic).

1.4 Goals of the Present Study

The study presented here had two goals. Our first goal was to determine whether the ATL contains subdivisions that are differentially sensitive to the modality in which stimuli are presented, or whether the ATL is amodal. The hub-and-spoke model predicts that all sensory modalities should activate the temporal pole equally (Patterson, et al., 2007), or at the very least that there will be a graded response across anatomical regions. Tranel and colleagues (Tranel, Grabowski, Lyon, & Damasio, 2005) identified a sensory-amodal region in the temporal lobe by instructing participants to name animals and tools on the basis of pictures and sounds. However, this study used an ROI limited to inferotemporal cortex, excluding the ATL. As noted earlier, primate research has indicated that the ATL largely maintains a modality-specific anatomical organization, with the exception of the polar tip (Blaizot, et al., 2010; Ding, et al., 2009). On the basis of this anatomical organization, we predicted that (1) auditory semantic stimuli should preferentially activate the superior ATL; (2) visual semantic stimuli should preferentially activate more inferior aspects of the ATL; and (3) combined audiovisual stimuli should activate the polar tip.

Our second goal was to investigate several questions surrounding the social knowledge hypothesis. First, prior studies relevant to this hypothesis have used familiar stimuli, such as famous faces, and have typically assessed activations during stimulus encoding. One problem for the social knowledge hypothesis is that familiar stimuli, such as Brad Pitt’s face, are associated with a wide range of conceptual, biographical, and emotional information that differs widely between individuals (Ross & Olson, accepted - pending revisions). This makes it difficult to assess precisely the type of knowledge that is being recollected in these tasks. Second, it is difficult to know whether the observed activations are due to the perceptual encoding of social stimuli or to the retrieval of social information. If the latter, it would provide additional support for the view that this region is involved in mnemonic processing. Last, it is important to understand whether the entire ATL, or only a subregion of the ATL, is sensitive to social stimuli.

To address these questions surrounding the social knowledge hypothesis, participants were trained to associate either social or nonsocial lexical stimuli with novel objects or sounds for which they had no prior associations. In the scanner, the task was to perceive each novel item and then retrieve the associated social or nonsocial knowledge. The social knowledge hypothesis predicts that portions of the ATL should respond preferentially to the stimuli that were associated with social knowledge.

2. Materials and Methods

2.1 Participants

Eighteen neurologically normal participants (12 female, mean age 23.58 years, SD 2.57; 6 male, mean age 24.67 years, SD 1.97) volunteered for this fMRI experiment. All participants were right-handed native English speakers with normal or corrected-to-normal vision. Informed consent was obtained according to the guidelines of the Institutional Review Board of Temple University, and every participant received monetary compensation for participation in the experiment. All participants were naïve with respect to the purpose of the experiment and were debriefed afterward.
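The overall mean age and SD of the sample are not reported, but they can be derived from the two group summaries above. The sketch below assumes the reported SDs are sample SDs (n - 1 denominator); the helper `combine_groups` is a hypothetical name introduced for illustration.

```python
import math

def combine_groups(groups):
    """Combine (n, mean, sd) group summaries into an overall (n, mean, sd).

    Assumes each sd is a sample SD (n - 1 denominator).
    """
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    # Total sum of squares = within-group SS + between-group SS
    ss_total = sum((n - 1) * sd ** 2 + n * (m - grand_mean) ** 2
                   for n, m, sd in groups)
    return n_total, grand_mean, math.sqrt(ss_total / (n_total - 1))

# Group summaries reported in Section 2.1: (n, mean age, SD)
female = (12, 23.58, 2.57)
male = (6, 24.67, 1.97)
n, mean_age, sd_age = combine_groups([female, male])
print(f"N = {n}, mean age = {mean_age:.2f}, SD = {sd_age:.2f}")  # N = 18, mean age = 23.94, SD = 2.39
```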

2.2 Stimuli

Sensory stimuli fell into three categories: auditory (A), visual (V), and audiovisual (AV). Visual stimuli consisted of multicolored artificial figures created by arranging shapes in a novel way. These novel objects, called “Fribbles,” were obtained from a database provided by Michael Tarr at Carnegie Mellon University (http://www.cnbc.cmu.edu/tarrlab/stimuli.html). Fribbles were presented on a computer screen with a white background. Auditory stimuli were created from prerecorded animal and human action sounds, which were edited to 3 s with 0.5-s ramps at the beginning and end, distorted and rendered unrecognizable, and then matched for amplitude. In the audiovisual condition, Fribbles were presented simultaneously with the rendered sounds. Participants were able to control the volume of the sounds during training. During the scanning session, a sound was played for the participant, and he or she confirmed audibility before the experiment began.
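The onset/offset ramps and amplitude matching described above can be sketched with NumPy. This is an illustration under stated assumptions, not the editing pipeline actually used: the ramp shape (linear), the matching criterion (equal root-mean-square amplitude), the target level, the sampling rate, and the noise "clips" are all hypothetical.

```python
import numpy as np

def apply_ramps(signal, sample_rate, ramp_s=0.5):
    """Multiply the first and last ramp_s seconds by linear fade-in/fade-out ramps."""
    out = np.asarray(signal, dtype=float).copy()
    n_ramp = int(round(ramp_s * sample_rate))
    if n_ramp == 0:
        return out
    ramp = np.linspace(0.0, 1.0, n_ramp)
    out[:n_ramp] *= ramp          # fade in
    out[-n_ramp:] *= ramp[::-1]   # fade out
    return out

def match_rms(signals, target_rms=0.05):
    """Rescale each signal so that all share the same root-mean-square amplitude."""
    return [s * (target_rms / np.sqrt(np.mean(s ** 2))) for s in signals]

SR = 44100  # hypothetical sampling rate (Hz)
rng = np.random.default_rng(0)
# Hypothetical stand-ins for two distorted 3-s clips recorded at different levels
clips = [scale * rng.standard_normal(3 * SR) for scale in (0.2, 1.0)]
processed = match_rms([apply_ramps(c, SR) for c in clips])
print([round(float(np.sqrt(np.mean(p ** 2))), 3) for p in processed])  # [0.05, 0.05]
```

RMS matching equates average energy rather than peak amplitude; if peak matching was intended, `match_rms` would be replaced by scaling to a common maximum absolute value.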

Lexical stimuli were adjectives that participants were trained to match with a sensory stimulus. Eighteen adjectives describing social behavior were chosen from a larger stimulus set used in a previous study of social knowledge (Zahn, et al., 2007) and were provided by the authors. The social words included only human-related adjectives and were selected for familiarity. All word stimuli are listed in Table 1. Eighteen nonsocial adjectives were also selected. Psycholinguistic measures were collected from the MRC Psycholinguistic Database. If a measure was not available for an adjective used in this study, the root word (without suffix or prefix) was used to obtain the measure. Social and nonsocial words did not differ in verbal frequency, t(12)=.609, familiarity, t(12)=1.636, meaningfulness, t(12)=1.266, or number of syllables, t(12)=1.797. Social words tended to be less concrete than nonsocial words, t(12)=4.764, p<.001, and had lower imageability scores, t(12)=3.869, p<.01. We accepted these differences between the two groups because they made it easier for participants to distinguish social from nonsocial words. Concreteness and imageability were shown not to correlate with activation in the ATL in two previous studies (Ross & Olson, 2010; Zahn, et al., 2007).

Table 1.

Participants were trained to match the words listed to novel auditory, visual and audio-visual stimuli.

| Social Words | Nonsocial Words |
|---|---|
| AFFECTIONATE | AQUATIC |
| ANGRY | BROAD |
| ANXIOUS | BROKEN |
| BASHFUL | BUMPY |
| BRAVE | COLD |
| EAGER | DIRTY |
| FRIENDLY | FAT |
| GENEROUS | GLOSSY |
| HONEST | HEAVY |
| HUMBLE | ITCHY |
| UNKIND | JAGGED |
| LAZY | NOISY |
| NERVOUS | PRICKLY |
| PLAYFUL | RAGGED |
| RESPONSIBLE | ROUND |
| SELFISH | SCRAWNY |
| TRUSTING | SMALL |
| WISE | SOGGY |

There was also a no-semantic baseline condition, in which no word was associated with the sensory stimuli. Altogether there were 6 items in each of eight stimulus categories: Auditory-Social (Asocial), Auditory-Nonsocial (Anonsocial), Auditory-No-Semantic (Ano-semantic), Visual-Social (Vsocial), Visual-Nonsocial (Vnonsocial), Visual-No-Semantic (Vno-semantic), Audiovisual-Social (AVsocial), and Audiovisual-Nonsocial (AVnonsocial). All stimulus presentation was performed using E-Prime software (Psychology Software Tools Inc., Pittsburgh, PA).
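The design above can be enumerated to cross-check the stimulus counts: eight categories of 6 items give the 48 training stimuli mentioned in Section 2.3.1, and the six word-bearing categories require 36 adjectives, matching the 18 social plus 18 nonsocial words in Table 1. This is a bookkeeping sketch that assumes each adjective is paired with exactly one sensory stimulus; the constant names are illustrative.

```python
from itertools import product

SENSORY = ["A", "V", "AV"]
SEMANTIC = ["social", "nonsocial", "no-semantic"]
ITEMS_PER_CONDITION = 6

# The design has no audiovisual no-semantic category, so that cell is dropped
conditions = [f"{s}{m}" for s, m in product(SENSORY, SEMANTIC)
              if not (s == "AV" and m == "no-semantic")]

n_stimuli = ITEMS_PER_CONDITION * len(conditions)
# Only social and nonsocial categories carry an adjective (one word per item assumed)
n_words = ITEMS_PER_CONDITION * sum(1 for c in conditions
                                    if not c.endswith("no-semantic"))
print(len(conditions), n_stimuli, n_words)  # 8 48 36
```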

2.3 Procedure and Tasks

2.3.1. Pre-Scan Training

Novel stimuli were used to ensure that participants would not be influenced by uncontrolled semantic knowledge associated with familiar objects. Each participant came to the laboratory 4–5 times for a daily 30-min training session. Participants were informed that they would see a series of “Fribbles,” each accompanied by a word at the bottom of the screen describing that particular Fribble. They were told that they would see a picture of the Fribble, hear the sound that the Fribble makes, or both see and hear the Fribble. Participants were asked to remember the word assigned to each Fribble and to decide whether the word would best describe a person or an object (in other words, whether the word was social or nonsocial). Participants made this determination during training in order to practice for the scanning task, and each participant was asked to report if they were unsure to which category a word belonged. If a participant was unsure about this categorization, they were asked to use the category into which the word would fall most often in everyday speech.

A single training session involved a self-paced presentation of all 48 stimuli (auditory, visual, and audiovisual), repeated four times. This was followed by an old/new recognition test of the ability to match the word assigned to each Fribble. During the test, participants were presented with each Fribble experienced during training, together with either the correct or an incorrect word. The task was to indicate whether the word was a correct or incorrect match to the Fribble. The social/nonsocial categorization responses were made during the scanning session and were not recorded at any stage of training, beyond self-reports from participants who had questions about the categorization. Audio feedback was provided to facilitate training. Following the training sessions, participants had to meet a criterion of 85% or more correct on the final recognition test to participate in the fMRI scan session.

2.3.2 Imaging Task

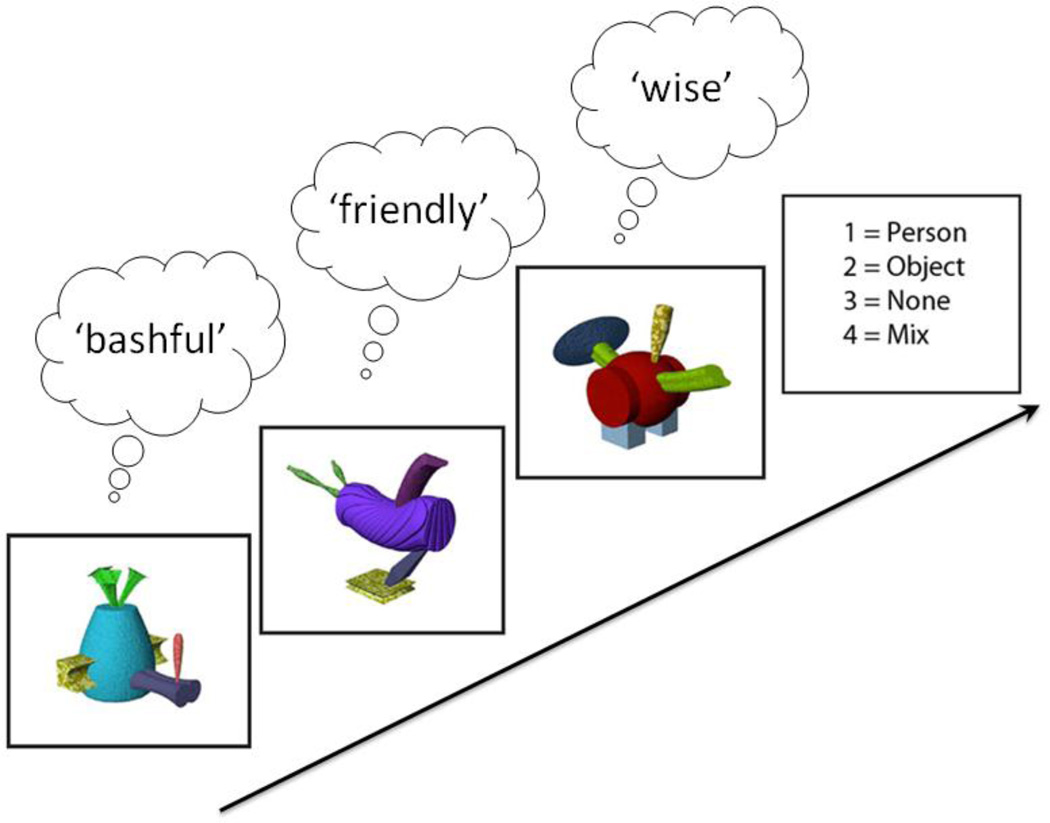

During the fMRI scan, participants saw or heard the same sensory stimuli that they had been familiarized with during the training phase, but without the matching adjectives. Each block consisted of a series of three sensory stimuli, each presented for 3 s and followed by a 1-s blank delay, and then a 4-s response screen, resulting in a total block length of 16 s. Participants were asked to recall the associated adjective when cued by each sensory stimulus. The task was to indicate, by right-hand button press, whether the words associated with the three just-presented stimuli would best describe a person, an object, nothing (because no words were associated with the stimuli in that block), or a mixture. Each block consisted of stimuli of the same sensory category (A, V, AV) and, with the exception of the “mixed” blocks, the same semantic category (social, nonsocial, no-semantic). The “mixed” blocks were included to ensure that participants attended to all three stimuli in each block, and these blocks were later excluded from all analyses. A schematic illustration of the scanner task, including an example block from the imaging session, is shown in Figure 1.

Figure 1.

Schematic illustration of the scanner task and stimuli. During the scan session, the task was to view a sequence of three stimuli and mentally retrieve the word associated with each stimulus. At the end of the series, the task was to indicate by keypress whether all three retrieved words described a person or an object, whether there was no word for any of the three, or whether there was a mixture of word types. In the illustration, the correct response is 1.
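The block timing in Section 2.3.2 can be laid out as an event schedule to show how the 16-s block length and the 12-s modeled stimulus period (Section 2.4.2) fit together. This is a minimal sketch; reading the 1-s blank as following each of the three stimuli is an assumption, chosen because it is the reading under which both reported durations hold.

```python
# Durations (s) from the block description in Section 2.3.2. Treating the
# 1-s blank as following each of the three stimuli is an assumption.
STIMULUS_S = 3
BLANK_S = 1
N_STIMULI = 3
RESPONSE_S = 4

def block_schedule():
    """Return ([(event, onset_s, duration_s), ...], total_length_s) for one block."""
    events = []
    t = 0
    for i in range(N_STIMULI):
        events.append((f"stimulus {i + 1}", t, STIMULUS_S))
        t += STIMULUS_S
        events.append(("blank", t, BLANK_S))
        t += BLANK_S
    events.append(("response", t, RESPONSE_S))
    t += RESPONSE_S
    return events, t

events, block_len = block_schedule()
# The HRF-modeled period spans everything before the response screen
modeled_s = sum(dur for name, _, dur in events if name != "response")
print(block_len, modeled_s)  # 16 12
```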

Blocks were presented in a counterbalanced order, and individual stimuli within each block were randomly assigned. Each run consisted of two blocks from each of the eight stimulus conditions: Vsocial, Vnonsocial, Vno-semantic, Asocial, Anonsocial, Ano-semantic, AVsocial, and AVnonsocial. Each run also contained two blocks with mixed semantic conditions, which were used to ensure that participants performed the task for all stimuli within the block. Each run, including the short introduction and closing slides, was 272 s long. There were 8 functional runs in the entire experiment.

2.4 fMRI Design

2.4.1. Imaging Procedure

Neuroimaging sessions were conducted at the Temple University Hospital on a 3.0 T Siemens Verio scanner (Erlangen, Germany) using a twelve-channel Siemens head coil.

Functional T2*-weighted images sensitive to blood oxygenation level-dependent (BOLD) contrast were acquired using a gradient-echo echo-planar pulse sequence (repetition time (TR), 2 s; echo time (TE), 19 msec; FOV = 240 × 240 mm; voxel size, 3 × 3 × 3 mm; matrix size, 80 × 80; flip angle = 90°) with automatic shimming. This pulse sequence was optimized for ATL coverage and sensitivity on the basis of pilot scans performed for this purpose, details of which are reported in Ross and Olson (2010). Visual inspection of the co-registered functional images confirmed excellent signal coverage in the ATLs in all participants. However, signal coverage was weaker in middle lateral aspects of the temporal lobes in Brodmann area (BA) 27. Some signal loss was observed in the orbitofrontal cortex and varied between participants. A temporal signal-to-noise ratio (tSNR) map showing signal coverage for one example participant can be found in the supplementary data section.
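A tSNR map like the one mentioned above is conventionally computed voxelwise as the temporal mean divided by the temporal standard deviation of the (typically preprocessed) time series. The sketch below assumes a 4-D NumPy array with time on the last axis; the simulated run and the `tsnr_map` helper are hypothetical, and this is not necessarily how the supplementary map was produced.

```python
import numpy as np

def tsnr_map(bold, min_sd=1e-6):
    """Voxelwise temporal SNR: mean over time divided by SD over time.

    bold: array of shape (x, y, z, t). Voxels with (near-)zero temporal SD,
    e.g. outside the brain, are set to 0 rather than dividing by zero.
    """
    mean = bold.mean(axis=-1)
    sd = bold.std(axis=-1, ddof=1)
    out = np.zeros_like(mean)
    valid = sd > min_sd
    out[valid] = mean[valid] / sd[valid]
    return out

rng = np.random.default_rng(1)
# Hypothetical run: 10 x 10 x 5 voxels, 144 volumes, baseline 1000, noise SD 10
bold = 1000 + 10 * rng.standard_normal((10, 10, 5, 144))
bold[0, 0, 0, :] = 0.0   # a constant (e.g. out-of-brain) voxel
tsnr = tsnr_map(bold)
print(tsnr.shape, float(np.median(tsnr)))
```

With a baseline of 1000 and noise SD of 10, voxels should come out near a tSNR of 100; regions with susceptibility dropout, such as parts of orbitofrontal cortex, would show up as low values.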

Thirty-eight interleaved axial slices with 3 mm thickness were acquired to cover the temporal lobes. On the basis of the anatomical information from the structural scan, the lowest slice was individually positioned to cover the most inferior aspect of the inferior temporal lobes.

The eight functional runs were preceded by an approximately 10-min high-resolution anatomical scan, which was used to fit the volume of brain tissue covered by the functional scans. Each of the 8 functional runs began with instructions presented visually for 4 s and ended with a closing screen presented for 4 s. A single block lasted 16 s, and each functional run lasted 4 min 48 s (144 TRs).
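The run duration in volumes follows from the block structure described in Section 2.3.2: two blocks for each of the eight conditions plus two mixed blocks, at 16 s each and TR = 2 s. This tally is a sketch that counts blocks only; the 4-s instruction and closing screens are deliberately left out of it.

```python
TR_S = 2.0
BLOCK_S = 16
N_CONDITIONS = 8          # Vsocial, Vnonsocial, ..., AVnonsocial
BLOCKS_PER_CONDITION = 2
N_MIXED_BLOCKS = 2

n_blocks = N_CONDITIONS * BLOCKS_PER_CONDITION + N_MIXED_BLOCKS
block_time_s = n_blocks * BLOCK_S              # blocks only, no intro/closing screens
n_volumes = int(block_time_s / TR_S)
minutes, seconds = divmod(block_time_s, 60)
print(n_blocks, block_time_s, n_volumes, f"{minutes} min {seconds} s")  # 18 288 144 4 min 48 s
```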

The T1-weighted images were acquired using a three-dimensional magnetization-prepared rapid acquisition gradient echo pulse sequence (TR, 2000 msec; TE, 3 msec; FOV = 201 × 230 mm; inversion time, 900 msec; voxel size, 1 × 0.9 × 0.9 mm; matrix size, 256 × 256 × 256; flip angle = 15°; 160 contiguous slices of 0.9 mm thickness). Visual stimuli were shown through goggles, and auditory stimuli were played through sound-resistant headphones, both purchased from Resonance Technologies, California. Responses were recorded using a four-button fiber-optic response pad system. Stimulus delivery was controlled by E-Prime software (Psychology Software Tools Inc., Pittsburgh, PA) on a Windows laptop located in the scanner control room.

2.4.2 Image Analysis

fMRI data were preprocessed and analyzed using Brain Voyager software (Goebel, Linden, Lanfermann, Zanella, & Singer, 1998). Preprocessing of the functional data included correction for head motion (trilinear/sinc interpolation), removal of linear trends, and temporal frequency filtering. The data were coregistered with each participant's anatomical data and transformed into Talairach space (Talairach & Tournoux, 1988). The resulting volumetric time-course data were then smoothed using an 8-mm Gaussian kernel. For all blocks, a canonical hemodynamic response function (HRF) was modeled over the period of each block in which the sensory stimuli were presented (12 s), excluding the response screen period.
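The block regressor described above can be sketched as a 12-s boxcar convolved with a canonical HRF. Brain Voyager's own HRF implementation is not reproduced here: the two-gamma shape and its parameters (response peak shape 6, undershoot shape 16, undershoot ratio 1/6) are SPM-style defaults assumed for illustration, the onsets are hypothetical, and treating the 8-mm kernel as a full width at half maximum (FWHM) is also an assumption.

```python
import numpy as np
from scipy.stats import gamma

def double_gamma_hrf(tr, duration_s=32.0, peak=6.0, undershoot=16.0, ratio=1 / 6):
    """Two-gamma HRF sampled every `tr` seconds (SPM-style defaults, assumed)."""
    t = np.arange(0, duration_s, tr)
    hrf = gamma.pdf(t, peak) - ratio * gamma.pdf(t, undershoot)
    return hrf / hrf.sum()

def block_regressor(onsets_s, block_dur_s, n_vols, tr):
    """Boxcar covering each modeled block period, convolved with the HRF."""
    box = np.zeros(n_vols)
    for onset in onsets_s:
        start = int(onset // tr)
        stop = int((onset + block_dur_s) // tr)
        box[start:stop] = 1.0
    return np.convolve(box, double_gamma_hrf(tr))[:n_vols]

def fwhm_to_sigma(fwhm_mm, voxel_mm):
    """Gaussian sigma (in voxels) for a smoothing kernel of the given FWHM."""
    return fwhm_mm / (2 * np.sqrt(2 * np.log(2))) / voxel_mm

# Hypothetical example: one condition's 12-s stimulus periods starting at 0 s and 48 s
reg = block_regressor([0.0, 48.0], block_dur_s=12.0, n_vols=144, tr=2.0)
print(round(float(fwhm_to_sigma(8.0, 3.0)), 2))  # 1.13
```

Modeling only the 12-s stimulus period, rather than the full 16-s block, keeps the button-press response screen out of the condition regressors.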

2.4.3 Regions of Interest

An anatomical ROI of the ATL was created, consisting of the temporal pole (BA 38) and other nearby anterior regions of the temporal lobe (such as anterior aspects of BA 20, 21, 22, 28, and 35). This ROI does not include the amygdala and extends from the Sylvian fissure to the inferior sections of the temporal lobe. Our ROI was similar to the one created by Grabowski and colleagues (Grabowski, et al., 2001). Using Brain Voyager software (Goebel, et al., 1998), we drew a line on the Brain Voyager standard brain slightly behind BA 38 and included all voxels anterior to this line.

The ATL ROI was further divided into three subregions: superior ATL, inferior ATL, and polar ATL. This was done to make our analysis more sensitive to the hypothesized regional subdivisions in the ATL. The superior temporal sulcus (STS) served as a rough boundary between the superior and inferior ROIs, given that auditory cortex lies superior to the STS (Chartrand, Peretz, & Belin, 2008) and that the high-level visual processing stream (the “what” pathway) extends through inferior temporal cortex. The polar ROI included only the outer cortex of Brodmann area 38/temporal pole and did not extend posterior to Talairach y = 13. The rationale for creating a separate polar ROI was that this region contains cells with unique structural and functional properties and has a distinct pattern of connections to limbic and prefrontal regions (Blaizot, et al., 2010; Ding, et al., 2009).

All ROIs were restricted to gray matter and drawn on a Talairach-aligned standard brain provided by Brain Voyager. Following this segmentation procedure, each ROI was optimally aligned to each subject’s cortical representation using the copy-labels approach to cortex-based alignment in the Brain Voyager software package. To use this approach, we created an anatomical gray-matter mesh for each subject. Each mesh comprised 80,000 vertices, as did the template on which the ROIs were first drawn. The ROIs were then remapped onto each subject’s mesh, which produced ROIs that were optimally matched to the gray matter of every subject.

2.4.4 Data Analysis

Incorrect trials were removed from analysis. Results are reported at p<.05 with a cluster-size threshold. The cluster threshold was selected independently for each comparison using a Monte Carlo simulation that estimated the likelihood of obtaining clusters of different sizes over 1,000 iterations. The cluster-size threshold was calculated within a whole-brain gray matter map and then applied to the relevant statistical maps within the whole ATL ROI, to ensure a global error rate of p<.05. Visual inspection of all the data collected revealed several trends that did not reach significance.
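The logic of a Monte Carlo cluster-size threshold can be sketched as follows: simulate smoothed Gaussian noise maps, threshold each at the voxelwise p, record the largest cluster, and choose the smallest cluster size that pure noise reaches in no more than 5% of simulations. This is a generic illustration in the spirit of AlphaSim-type procedures, not Brain Voyager's implementation; the mask, noise smoothness, one-sided voxelwise cutoff, and face connectivity are all assumptions.

```python
import numpy as np
from scipy import ndimage, stats

def max_cluster_size(stat_map, z_thresh, mask):
    """Size (voxels) of the largest face-connected suprathreshold cluster in mask."""
    labels, n_clusters = ndimage.label((stat_map > z_thresh) & mask)
    if n_clusters == 0:
        return 0
    return int(np.bincount(labels.ravel())[1:].max())

def monte_carlo_cluster_threshold(mask, fwhm_vox, p_voxel=0.05, alpha=0.05,
                                  n_iter=1000, seed=0):
    """Smallest cluster size k such that pure smoothed noise yields a cluster
    of >= k voxels in at most alpha of the simulated maps."""
    rng = np.random.default_rng(seed)
    sigma = fwhm_vox / (2 * np.sqrt(2 * np.log(2)))  # FWHM -> Gaussian sigma
    z_thresh = stats.norm.isf(p_voxel)               # one-sided voxelwise cutoff (assumed)
    maxima = np.empty(n_iter, dtype=int)
    for i in range(n_iter):
        noise = ndimage.gaussian_filter(rng.standard_normal(mask.shape), sigma)
        vals = noise[mask]
        noise = (noise - vals.mean()) / vals.std()   # re-standardize after smoothing
        maxima[i] = max_cluster_size(noise, z_thresh, mask)
    for k in range(1, int(maxima.max()) + 2):
        if np.mean(maxima >= k) <= alpha:
            return k

# Hypothetical 24^3-voxel mask and 8-mm smoothing on 3-mm voxels
mask = np.ones((24, 24, 24), dtype=bool)
k = monte_carlo_cluster_threshold(mask, fwhm_vox=8 / 3, n_iter=200)
print("cluster-size threshold (voxels):", k)
```

The resulting k depends strongly on the mask size and on the smoothness assumed for the noise, which is why the threshold is re-estimated for each comparison.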

The data analysis involved several steps. In the first step, we assessed whether superior and inferior aspects of the ATLs show differential sensitivity to auditory and visual stimuli, regardless of semantic category. We used two comparisons to identify these regions: (1) all auditory versus all visual conditions within the entire ATL ROI, to identify regions that preferentially activated to one unisensory stimulus type; and (2) all audiovisual conditions versus the combined unisensory conditions, to identify regions that preferentially activated to audiovisual stimuli. Beta weights were then extracted for the A, V, and AV conditions and analyzed with repeated-measures ANOVAs using sensory condition (A, V, AV) as the factor. This allowed us to experimentally assess observations from the review by Olson and colleagues (2007) discussed in the Introduction.
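A one-way repeated-measures ANOVA on ROI beta weights (subjects × sensory conditions) can be written directly from the sum-of-squares partition. The sketch below uses hypothetical betas, not the study's data, and applies no sphericity correction, which is an assumption because the paper does not say whether one was used. With 18 subjects and three conditions it gives the F(2, 34) degrees of freedom reported in the Results.

```python
import numpy as np
from scipy import stats

def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA without sphericity correction.

    data: array (n_subjects, k_conditions), e.g. ROI betas for A, V, AV.
    Returns (F, df_effect, df_error, p).
    """
    data = np.asarray(data, dtype=float)
    n, k = data.shape
    grand = data.mean()
    ss_cond = n * np.sum((data.mean(axis=0) - grand) ** 2)
    ss_subj = k * np.sum((data.mean(axis=1) - grand) ** 2)
    ss_total = np.sum((data - grand) ** 2)
    ss_error = ss_total - ss_cond - ss_subj      # condition x subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error, stats.f.sf(f, df_cond, df_error)

rng = np.random.default_rng(2)
# Hypothetical betas for 18 subjects x (A, V, AV); not the study's data
betas = rng.normal(loc=[0.4, 0.1, 0.1], scale=0.3, size=(18, 3))
f, df1, df2, p = rm_anova_oneway(betas)
print(f"F({df1}, {df2}) = {f:.2f}, p = {p:.3f}")
```

With only two conditions this F equals the square of the paired-samples t, which is a convenient check on the implementation.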

We next explored whether semantic knowledge that was learned and recalled in response to a cue of a given sensory type would activate the same ROI as the corresponding perceptually sensitive region identified in the previous analysis. To identify regions sensitive to semantic knowledge associated with different sensory modalities in the entire ATL, we first created general semantic categories for each sensory modality. For example, Asemantic included both Asocial and Anonsocial, but not Ano-semantic. We then performed a simple subtraction: Asemantic versus Vsemantic.
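In a general linear model, the Asemantic versus Vsemantic subtraction corresponds to a contrast vector over the eight condition betas. The sketch below assumes one beta per condition in the order listed, and uses balanced weights (each side sums to ±1), a common convention rather than a documented detail of this analysis; the `contrast` helper and the beta values are hypothetical.

```python
import numpy as np

CONDITIONS = ["Asocial", "Anonsocial", "Ano-semantic",
              "Vsocial", "Vnonsocial", "Vno-semantic",
              "AVsocial", "AVnonsocial"]

def contrast(plus, minus):
    """Balanced contrast: +1/len(plus) on `plus`, -1/len(minus) on `minus`."""
    w = np.zeros(len(CONDITIONS))
    for name in plus:
        w[CONDITIONS.index(name)] = 1.0 / len(plus)
    for name in minus:
        w[CONDITIONS.index(name)] = -1.0 / len(minus)
    return w

# Asemantic (Asocial + Anonsocial) versus Vsemantic (Vsocial + Vnonsocial)
c_a_vs_v = contrast(["Asocial", "Anonsocial"], ["Vsocial", "Vnonsocial"])

# Hypothetical betas for one voxel or ROI, in CONDITIONS order
beta = np.array([0.6, 0.4, 0.3, 0.2, 0.2, 0.1, 0.5, 0.5])
print(c_a_vs_v, float(c_a_vs_v @ beta))
```

Because the no-semantic conditions receive zero weight, the contrast compares only word-associated stimuli across modalities, matching the definition of Asemantic given above.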

We also searched for any areas in the ATL that could be considered “sensory amodal” by using the following conjunction analysis: (Asemantic versus Ano-semantic) ∩ (Vsemantic versus Vno-semantic).
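One common way to implement a conjunction such as the one above is the minimum-statistic approach: a voxel counts as part of the conjunction only if it passes threshold in every component contrast, which is the same as requiring the smallest of its t values to pass. The paper does not state which conjunction method Brain Voyager applied, so this is an illustrative sketch; the t values and the threshold (approximately the two-sided p < .05 critical t for df = 17) are assumptions.

```python
import numpy as np

def conjunction_min_t(t_maps, t_thresh):
    """Minimum-statistic conjunction: a voxel survives only if it exceeds
    t_thresh in every contrast (i.e. its minimum t across maps does)."""
    min_t = np.min(np.stack(t_maps), axis=0)
    return min_t, min_t > t_thresh

# Hypothetical t values for four voxels in
# (Asemantic - Ano-semantic) and (Vsemantic - Vno-semantic)
t_a = np.array([2.5, 1.0, 3.1, -0.4])
t_v = np.array([2.2, 2.9, 0.5, 2.4])
min_t, survives = conjunction_min_t([t_a, t_v], t_thresh=2.11)  # ~p < .05, df = 17
print(survives.tolist())  # [True, False, False, False]
```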

To determine whether activation in the entire ATL ROI was greater in response to the retrieval of social versus nonsocial knowledge, we collapsed across sensory categories and performed the following subtraction: all social versus all nonsocial. We then extracted beta weights for the social and nonsocial conditions, collapsing across sensory categories, within the superior, inferior, and polar ROIs. Data were analyzed with repeated-measures ANOVAs with the factors of semantic category (social, nonsocial, no-semantic) and hemisphere (L, R).
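A semantic category × hemisphere analysis like the one above is a fully within-subjects two-way ANOVA. The paper does not describe how it was computed, so the following is a sketch from the standard sum-of-squares partition (no sphericity correction), with hypothetical data arranged as subjects × semantic category × hemisphere.

```python
import numpy as np
from scipy import stats

def rm_anova_twoway(y):
    """Two-way fully within-subjects ANOVA (no sphericity correction).

    y: array (n_subjects, a_levels, b_levels), e.g. semantic category x hemisphere.
    Returns {effect: (F, df1, df2, p)} for factor A, factor B, and A x B.
    """
    y = np.asarray(y, dtype=float)
    n, a, b = y.shape
    gm = y.mean()
    m_s = y.mean(axis=(1, 2))            # subject means
    m_a = y.mean(axis=(0, 2))            # factor-A level means
    m_b = y.mean(axis=(0, 1))            # factor-B level means
    m_sa = y.mean(axis=2)                # subject x A means
    m_sb = y.mean(axis=1)                # subject x B means
    m_ab = y.mean(axis=0)                # A x B cell means

    ss_a = n * b * np.sum((m_a - gm) ** 2)
    ss_b = n * a * np.sum((m_b - gm) ** 2)
    ss_ab = n * np.sum((m_ab - m_a[:, None] - m_b[None, :] + gm) ** 2)
    # Each effect is tested against its own interaction with subjects
    ss_as = b * np.sum((m_sa - m_s[:, None] - m_a[None, :] + gm) ** 2)
    ss_bs = a * np.sum((m_sb - m_s[:, None] - m_b[None, :] + gm) ** 2)
    resid = (y - m_sa[:, :, None] - m_sb[:, None, :] - m_ab[None, :, :]
             + m_s[:, None, None] + m_a[None, :, None] + m_b[None, None, :] - gm)
    ss_abs = np.sum(resid ** 2)

    out = {}
    for name, ss_eff, ss_err, df1 in [("A", ss_a, ss_as, a - 1),
                                      ("B", ss_b, ss_bs, b - 1),
                                      ("AxB", ss_ab, ss_abs, (a - 1) * (b - 1))]:
        df2 = df1 * (n - 1)
        f = (ss_eff / df1) / (ss_err / df2)
        out[name] = (f, df1, df2, stats.f.sf(f, df1, df2))
    return out

rng = np.random.default_rng(3)
# Hypothetical betas: 18 subjects x (social, nonsocial, no-semantic) x (L, R)
y = rng.normal(size=(18, 3, 2))
for effect, (f, df1, df2, p) in rm_anova_twoway(y).items():
    print(f"{effect}: F({df1}, {df2}) = {f:.2f}, p = {p:.3f}")
```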

3. Results

3.1 Behavioral Results

Each participant underwent as many training sessions as were necessary to achieve 85% accuracy on the old/new recognition pre-scan test. Of the 18 participants, eight met criterion after three training sessions, nine met criterion after four training sessions, and one required a fifth session to meet criterion.

Using the behavioral data collected during the scan, a repeated-measures ANOVA on sensory condition (A, V, AV) revealed a main effect of sensory condition (F(2, 51) = 6.367, p<.003), due to higher accuracy on audiovisual trials than on auditory-only trials (MAV = .87; MA = .70; t(17) = 3.75, p<.001). There was no difference in the other sensory comparisons (all p’s > .07).
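The follow-up comparisons above are paired-samples t-tests across the 18 participants, hence df = 17. A minimal sketch, using hypothetical per-subject accuracies rather than the study's data, computes t from the difference scores and agrees with SciPy's `ttest_rel`.

```python
import numpy as np
from scipy import stats

def paired_t(x, y):
    """Paired-samples t-test on per-subject scores; returns (t, df, two-sided p)."""
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    n = d.size
    t = d.mean() / (d.std(ddof=1) / np.sqrt(n))
    return t, n - 1, 2 * stats.t.sf(abs(t), n - 1)

rng = np.random.default_rng(4)
# Hypothetical per-subject accuracies for 18 participants; not the study's data
acc_av = np.clip(rng.normal(0.87, 0.10, 18), 0, 1)
acc_a = np.clip(rng.normal(0.70, 0.16, 18), 0, 1)
t, df, p = paired_t(acc_av, acc_a)
print(f"t({df}) = {t:.2f}, p = {p:.4f}")
```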

A similar analysis on semantic condition (social, nonsocial, no-semantic) revealed a main effect of semantic condition (F(2, 51) = 7.45, p<.001), due to higher accuracy on social than on nonsocial trials (Msocial = .88; Mnonsocial = .68; t(17) = 3.963, p<.001), indicating that it was easier to retrieve information about social than about nonsocial stimuli. There was no difference in the other comparisons (all p’s > .06). Means are listed in Table 2.

Table 2.

Accuracy in different sensory and semantic conditions tested in the scanner.

| Factor | Condition | Mean (SD) |
|---|---|---|
| Stimulus type | Auditory | .702 (.16) |
| Stimulus type | Visual | .787 (.16) |
| Stimulus type | Audiovisual | .873 (.10) |
| Semantic category | Social | .876 (.13) |
| Semantic category | Nonsocial | .675 (.17) |
| Semantic category | No-semantic | .777 (.16) |

3.2 fMRI Results

3.2.1 Decreased Activity with Repeated Exposure to Stimuli

Our first analysis sought to identify a hallmark of mnemonic processing: decreased neural activity associated with stimulus repetition. Prior research has shown that the ATL is very sensitive to stimulus repetition, showing decreased activations with repeated presentations (Sugiura, et al., 2001; Sugiura, Mano, Sasaki, & Sadato, 2011). On the basis of this finding, repeated exposure to a stimulus should drive beta weights toward a value of 0. To test this, we examined the mean beta weight, collapsed across conditions, in our whole ATL ROI across runs. Stimulus repetition, as indexed by the number of runs experienced, was negatively correlated with the absolute value of the beta weights (r=−.213, p = .016), showing that repeated stimulus exposure decreased the predictive value of the different conditions for the BOLD response. Closer examination of the beta weights in each condition across all runs revealed that this decrease in activity was driven by the social conditions, especially the AVsocial condition. No decrease across runs was found in the nonsocial conditions. On the basis of this finding, we excluded the later runs (runs 5–8) from all further analyses.
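The repetition analysis above amounts to a Pearson correlation between run number and the absolute ATL beta, followed by restricting later analyses to runs 1 to 4. The paper does not state exactly how observations were pooled, so this sketch assumes one mean beta per subject and run; the data are simulated, with a small decline built in purely for illustration.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(5)
n_subjects, n_runs = 18, 8
# Hypothetical mean ATL beta for every subject x run (run index repeats per subject);
# the linear decline is built in for illustration only.
run_index = np.tile(np.arange(1, n_runs + 1), n_subjects)
betas = rng.normal(loc=0.5 - 0.04 * run_index, scale=0.3)

# Pearson correlation between repetition (run number) and |beta|
r, p = stats.pearsonr(run_index, np.abs(betas))
print(f"r = {r:.3f}, p = {p:.3f}")

# Restrict later analyses to the early runs (1-4)
early = run_index <= 4
early_betas = betas[early]
```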

3.2.2 Sensory Subdivisions within the ATL

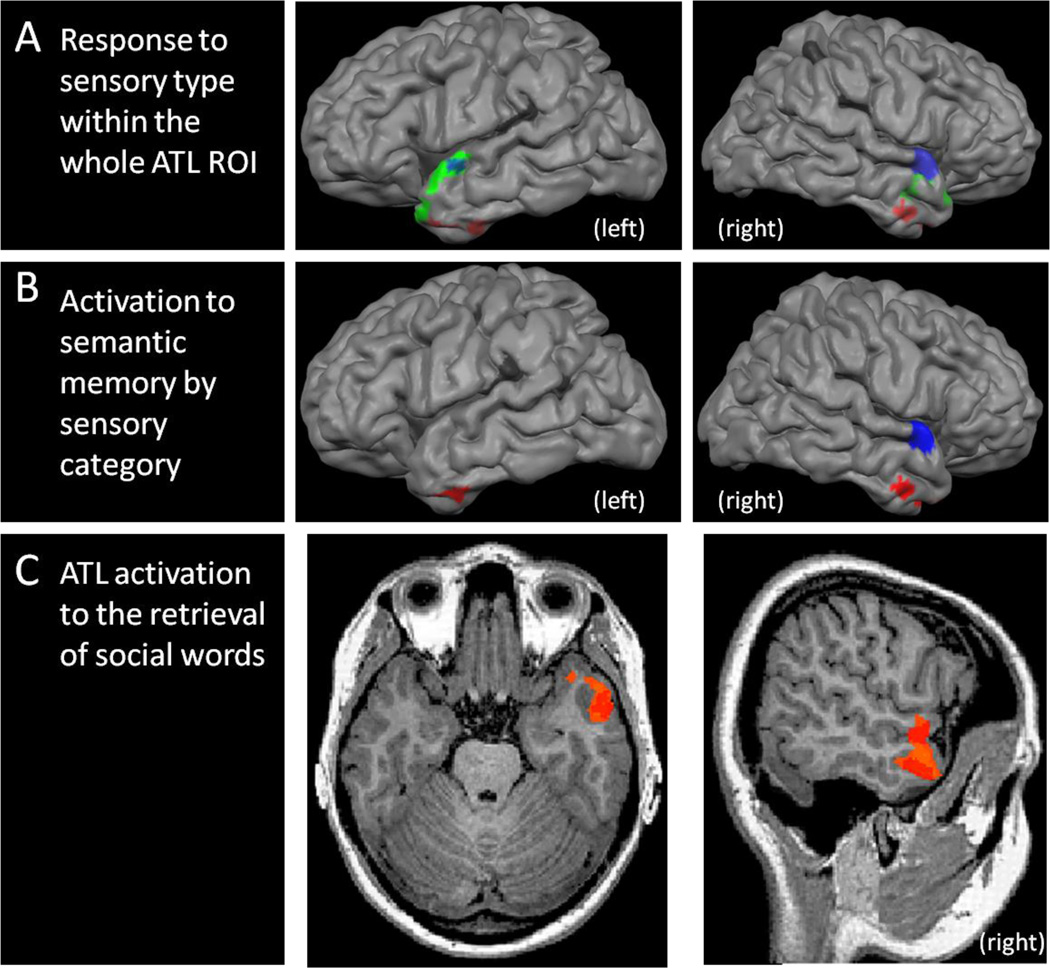

Our next analysis was performed to identify preferential activation to different sensory stimulus types in the ATL, collapsing across other factors. On the basis of a Brain Voyager Monte Carlo simulation, a conservative cluster threshold of 44 voxels was applied to this statistical map, thresholded at p<.05 within the ATL (see Figure 2A). As predicted, the perception of auditory stimuli was associated with increased activity in the superior ATL, while visual stimuli led to increased activity in the inferior ATL. Audiovisual stimuli led to increased BOLD signal in the polar tip of the ATL.

Figure 2.

(A) Areas responding to all auditory stimuli (blue), all visual stimuli (red) and all audiovisual stimuli (green), within our ATL ROI, shown at p<.05, cluster threshold of 44 voxels; (B) Activations in the left hemisphere in response to accessing semantic memory encoded through the auditory (blue) and visual (red) sensory systems, shown at p<.05, cluster threshold of 32 voxels. (C) Activations in the ATL ROI to the retrieval of social versus nonsocial knowledge, shown at p<.05, cluster threshold of 36 voxels.
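Cluster-extent thresholding, as applied to each of these maps, keeps a voxel only if it passes the voxelwise threshold and also belongs to a contiguous cluster at least as large as the Monte Carlo-derived minimum (44, 32, or 36 voxels here). The following is a minimal pure-Python sketch, not BrainVoyager's implementation: the function name `cluster_extent_threshold` is hypothetical, voxels are represented as (x, y, z) tuples, and face-adjacent (6-connected) neighbours are assumed, since the connectivity rule used in the original analysis is not stated.

```python
from collections import deque

# Face-adjacent neighbours (6-connectivity). This connectivity rule is an
# assumption; the original analysis may have used a different one.
NEIGHBOURS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def cluster_extent_threshold(suprathreshold, min_size):
    """Keep only connected clusters of suprathreshold voxels with >= min_size voxels.

    suprathreshold: set of (x, y, z) coordinates that already passed the
    voxelwise threshold (e.g., p < .05). Returns the surviving voxels as a set.
    """
    remaining = set(suprathreshold)
    survivors = set()
    while remaining:
        # Grow one cluster by breadth-first search from an arbitrary seed.
        seed = remaining.pop()
        cluster = {seed}
        queue = deque([seed])
        while queue:
            x, y, z = queue.popleft()
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                if nb in remaining:
                    remaining.remove(nb)
                    cluster.add(nb)
                    queue.append(nb)
        if len(cluster) >= min_size:
            survivors |= cluster
    return survivors


# Hypothetical toy map: a 50-voxel cluster and a separate 10-voxel cluster.
big = {(i, 0, 0) for i in range(50)}
small = {(i, 10, 10) for i in range(10)}
kept = cluster_extent_threshold(big | small, min_size=44)
print(len(kept))  # 50
```

Only the 50-voxel cluster survives the 44-voxel minimum; the isolated 10-voxel cluster is discarded even though each of its voxels passed the voxelwise threshold.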

Using a repeated measures ANOVA, our analyses showed that in the superior ROI, there was a main effect of sensory condition (F(2, 34) = 3.98, p <.05). Paired-samples t-tests confirmed that the response in the superior ROI was significantly greater for auditory stimuli alone than for visual stimuli (p<.05) or audiovisual stimuli (p <.01), but there was no difference between visual and audiovisual stimuli (p>.67). In the inferior ATL ROI, there was also a main effect of sensory condition (F(2, 34) = 3.86, p < .05). The response in the inferior ROI to visual stimuli was significantly greater than to auditory stimuli (p<.05) and marginally greater than to audiovisual stimuli (p<.07). There was no difference between A and AV activations (p >.59). In the polar ROI, there was a main effect of sensory condition (F(2, 34) = 4.58, p<.05), due to significantly greater responses to AV stimuli than to A or V stimuli (both p’s<.05). There was no difference between A and V activations (p >.78).
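The degrees of freedom reported above, F(2, 34), follow from a one-way repeated-measures design with k = 3 sensory conditions: df_condition = k − 1 = 2 and df_error = (k − 1)(n − 1) = 34, which implies n = 18 participants (consistent with the t(17) statistics reported in this section). A minimal sketch of the textbook computation is given below; the function name `rm_anova_oneway` and the toy data are hypothetical, and no sphericity correction is applied, since whether one was used is not stated here.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: list of rows, one per subject, each a list of k condition values.
    Returns (F, df_condition, df_error). No sphericity correction is applied.
    """
    n = len(data)
    k = len(data[0])
    if any(len(row) != k for row in data):
        raise ValueError("every subject needs a value for every condition")
    grand = sum(sum(row) for row in data) / (n * k)
    cond_means = [sum(row[j] for row in data) / n for j in range(k)]
    subj_means = [sum(row) / k for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    # Residual = condition-by-subject interaction, the error term for F.
    ss_error = ss_total - ss_cond - ss_subj
    df_cond = k - 1
    df_error = (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error


# Hypothetical toy data: 3 subjects x 3 conditions (e.g., A, V, AV betas).
toy = [[1, 2, 3], [2, 4, 3], [3, 3, 6]]
print(rm_anova_oneway(toy))  # (3.0, 2, 4)
```

Removing between-subject variance (ss_subj) from the error term is what distinguishes the repeated-measures F from a between-subjects one-way ANOVA on the same numbers.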

Our second question was whether the retrieval of semantic knowledge, which had been learned in association with different stimulus modalities, activated distinct regions of the ATL. Monte Carlo simulation dictated that a cluster threshold of 32 voxels be applied to this statistical map, thresholded at p<.05 within our ATL ROI (see Figure 2B). At this threshold, response to semantic knowledge cued by auditory stimuli was lateralized to the right superior ATL, including a large cluster on the superior medial surface. Response to semantic knowledge cued by visual stimuli was found bilaterally in the inferior ATL. A post-hoc repeated measures ANOVA using the factors of sensory type (Auditory, Visual) and semantic knowledge (semantic, no-semantic) was conducted. In both the superior and inferior ROIs, the main effect of sensory condition, as found in the previous analysis, was still significant (superior: F(1, 17) = 9.75, p<.01; inferior: F(1, 17) = 4.47, p<.05), but there was no significant main effect of semantic content in either ROI (both p’s>.82).

In order to identify regions in the ATL that serve a general semantic, but sensory amodal, function, we performed the following conjunction analysis: (Asemantic versus Ano-semantic) ∩ (Vsemantic versus Vno-semantic) in the full ATL ROI. No voxels surpassed the significance threshold for this test.
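A conjunction of two contrasts retains only voxels that pass the threshold in both contrasts, which is equivalent to thresholding the voxelwise minimum of the two statistics. The sketch below illustrates this logic under stated assumptions: the function name `conjunction`, the dictionary-of-t-values representation, and the toy values are hypothetical, and the critical t of 2.11 (roughly a two-tailed p < .05 at 17 degrees of freedom) is used for illustration only, not as the exact threshold applied in the study.

```python
def conjunction(stat_a, stat_b, t_crit):
    """Voxels where both contrast statistics exceed t_crit.

    stat_a, stat_b: dicts mapping a voxel coordinate to its t value for one
    contrast, e.g. (Asemantic > Ano-semantic) and (Vsemantic > Vno-semantic).
    Requiring min(t_a, t_b) > t_crit is the same as requiring both to pass.
    """
    shared = stat_a.keys() & stat_b.keys()
    return {v for v in shared if min(stat_a[v], stat_b[v]) > t_crit}


# Hypothetical toy t maps over three voxels (not the study's data).
auditory = {(0, 0, 0): 3.0, (1, 0, 0): 2.5, (2, 0, 0): 0.4}
visual = {(0, 0, 0): 2.8, (1, 0, 0): 1.2, (2, 0, 0): 3.1}
print(conjunction(auditory, visual, t_crit=2.11))  # {(0, 0, 0)}
```

Voxels (1, 0, 0) and (2, 0, 0) each pass one contrast but not the other, so they are excluded. In the study itself, the analogous set was empty: no ATL voxel passed both semantic contrasts.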

3.2.3 Social Subdivisions within the ATL

In this analysis, we asked whether different portions of the ATL are sensitive to the retrieval of memories of social words and nonsocial words. Figure 2C shows the cluster within the ATL ROI that was sensitive to retrieved memories of social words, at a statistical threshold of p<.05 and a minimum cluster threshold of 36 voxels: a right-lateralized cluster around the anterior portion of the superior temporal sulcus (STS), extending into the pole. There were no ATL subregions that were preferentially sensitive to the retrieval of nonsocial words.

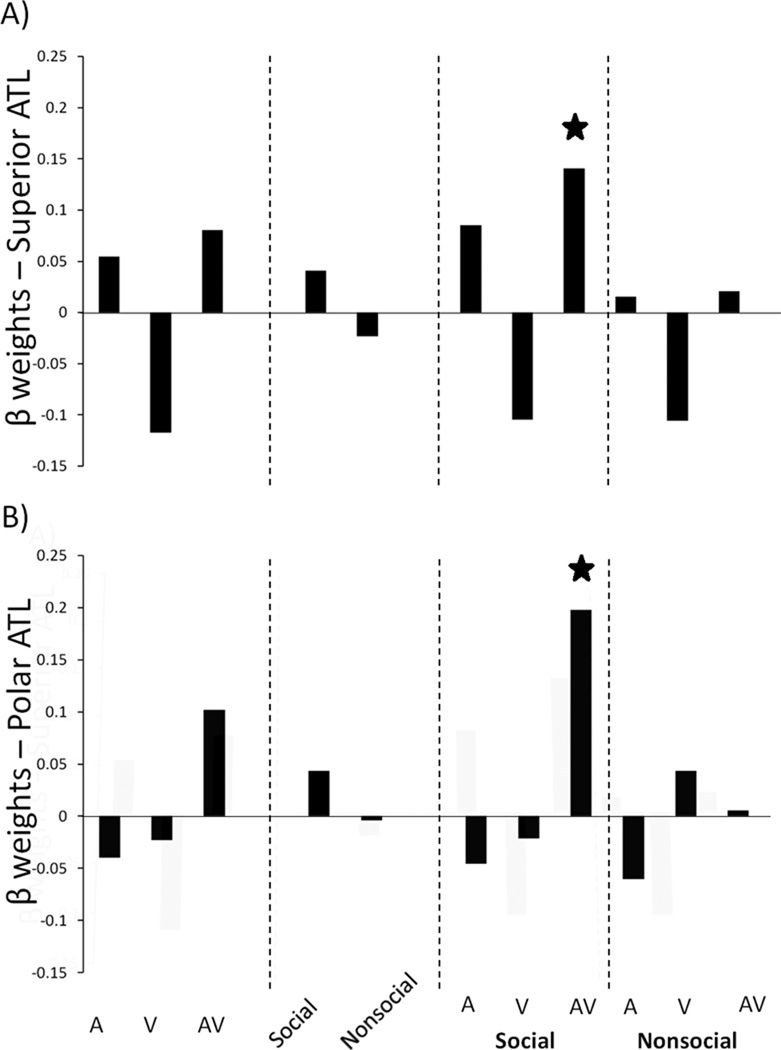

An interaction between sensory condition and social content was also identified. Figure 3 shows the beta weights for interactions between these conditions in the superior and polar ROIs. The left superior ROI was sensitive to retrieved social information that was audiovisual (p<.05) but not auditory alone (p > .15) or visual alone (p > .81). The left and right polar ROIs were also sensitive to audiovisual social stimuli (AVsocial vs. AVnonsocial in the left polar ATL: t(17) = 2.54, p <.05; right polar ATL: t(17) = 2.55, p <.05). There were no differences in the analogous unisensory comparisons (all p’s >.25).

Figure 3.

(A) Beta weights for each condition, showing the interaction effect of multisensory and social conditions in the superior ATL ROI; (B) Beta weights for each condition, showing the interaction effect of multisensory and social conditions in the polar ATL ROI. The inferior ATL ROI is not depicted because no interactions were observed. A=auditory; V = visual; AV=audiovisual.

Interestingly, the inferior ATL ROI was generally insensitive to the social vs. nonsocial comparison across all stimulus modalities (all p’s >.18).

3.2.4 Whole Volume Analysis

Although the primary interest of this study was to understand the ATLs, a whole-brain analysis was performed in order to explore other brain areas involved in semantic memory, and social semantic memory in particular. This analysis was done without masking, using a minimum cluster threshold of 122 voxels, which was also determined on an unmasked whole-volume statistical map. Significantly greater responses to the retrieval of social words were found in the anterior cingulate running into the medial prefrontal cortex, the posterior cingulate, the right amygdala, the right inferior bank of the posterior STS, and the right temporal pole (see Table 2 and the appendix). Significantly greater responses to nonsocial words were found in the bilateral parietal cortex (supramarginal gyrus) and lateral prefrontal cortex.

4. Discussion

The purpose of this study was to explore whether sensory and semantic subregions exist within the anterior temporal lobes. The first aim of this study was based on neuroanatomical findings showing that the ATL maintains a modality-specific organization, with the exception of the polar tip (Blaizot, et al., 2010; Ding, et al., 2009). Thus, we hypothesized that auditory, visual, and audiovisual stimuli would lead to greater activations in superior, inferior, and polar regions of the ATL, respectively. In addition, since the majority of the human ATL literature has associated the ATL with functions related to semantic memory (for a review, see Patterson, et al., 2007), we explored the hypothesis that the retrieval of semantic knowledge might display a topographical differentiation based on the stimulus modality with which the retrieved concept was associated.

The second aim of this study was to investigate the social knowledge hypothesis by assessing whether the ATL preferentially processes socially relevant conceptual knowledge as opposed to nonsocial semantic knowledge (Moll, et al., 2005; Olson, et al., 2007; Ross & Olson, 2010; Zahn, et al., 2007) and whether the recall of this knowledge reveals topographical differentiations.

4.1 Sensory Segregation of the Anterior Temporal Lobes During Perceptual Encoding

The results of our study confirm that sensory segregation exists in the ATL: the perceptual encoding of auditory stimuli activated superior aspects of the ATL, superior to the STS and posterior to the polar tip, while visual stimuli activated a region of the ATL that was inferior to the STS.

Multisensory audiovisual stimulation resulted in bilateral activations of the temporal pole. The temporal poles behaved like classic multisensory integration regions such as the posterior STS and the inferior parietal cortex (Calvert & Lewis, 2004) in that the activation to the audiovisual stimuli was greater than to the sum of the unisensory stimuli. This is surprising since these multisensory effects have been ascribed to sensory and perceptual rather than semantic functions and most studies of audiovisual multisensory speech perception have reported multisensory effects in more posterior regions along the STS (Calvert & Lewis, 2004; Hein & Knight, 2008; Lewis, et al., 2004). The identification of multisensory objects is known to affect early perceptual processing in lateral occipital cortices (Molholm, Ritter, Javitt, & Foxe, 2004) and these effects have been attributed to modulations and enhancement of perceptual processes.
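The superadditivity criterion described above compares the audiovisual response with the sum of the two unisensory responses, AV > A + V. A minimal sketch of one common way to test it at the group level follows, under stated assumptions: the function names `superadditivity_index` and `one_sample_t` and the toy beta values are hypothetical, and a one-sample t test on per-subject indices is shown only for illustration, since the exact test behind this comparison is not specified in this section.

```python
from math import sqrt
from statistics import mean, stdev


def superadditivity_index(av, a, v):
    """Per-subject index AV - (A + V); positive values indicate superadditivity."""
    if not (len(av) == len(a) == len(v)):
        raise ValueError("need one A, V, and AV value per subject")
    return [x_av - (x_a + x_v) for x_av, x_a, x_v in zip(av, a, v)]


def one_sample_t(values):
    """t statistic for H0: mean(values) == 0, with df = len(values) - 1."""
    return mean(values) / (stdev(values) / sqrt(len(values)))


# Hypothetical toy betas for four subjects (not the study's data).
av = [1.0, 1.2, 0.9, 1.1]
a = [0.3, 0.4, 0.2, 0.5]
v = [0.2, 0.3, 0.4, 0.1]
index = superadditivity_index(av, a, v)
t = one_sample_t(index)
print(f"t({len(index) - 1}) = {t:.2f}")  # t(3) = 9.00
```

Computing the index within each subject before testing keeps the comparison paired, so stable individual differences in overall response amplitude do not inflate the error term.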

Although the ATL is not considered to be a classic multisensory region and fMRI activations are rarely reported in this region when non-social audiovisual stimuli are used, ATL activations are commonly reported when socially relevant multisensory stimuli are tested. For instance, studies using face-voice combinations (Olson, Gatenby, & Gore, 2003; von Kriegstein & Giraud, 2006) or audiovisual emotion combinations (Kreifelts, Ethofer, Grodd, Erb, & Wildgruber, 2007; Robins, Hunyadi, & Schultz, 2009) have reported temporal pole activations. Portions of the ATL clearly play an important role in multisensory integration, as evidenced by a recent study showing that individuals with ATL lesions from stroke or herpes encephalitis had difficulties integrating audiovisual object features, while this was not true of individuals with posterior STS lesions (Taylor, Stamatakis, & Tyler, 2009). Older studies have consistently shown that ATL lesions or cell loss due to frontotemporal dementia can cause multimodal person identification deficits (Gianotti, 2007; Olson, et al., 2007).

ATL activations to multisensory stimuli are in line with the idea of a convergence zone in this region (Damasio, Tranel, Grabowski, Adolphs, & Damasio, 2004), which links information from different sensory modalities and connects it to conceptual knowledge. The integrative process in this region, which is further downstream than classic multisensory regions in the STS, may subserve conceptual-emotional integration, a prerequisite for the creation of social knowledge and social interactions (Olson et al., 2007), rather than low-level sensory integration. We hope that our findings, together with the reviewed literature, will spur investigators to examine this possibility in the future.

It is also possible that enhancements in stimulus processing at lower levels, evident in superadditive effects, led to similarly enhanced processing at higher levels, such as the retrieval of conceptual knowledge or other mnemonic functions (Olson et al., 2007). Participants performed best on multisensory trials and social trials, both of which activate the temporal pole. The input from different modality channels may result in a more efficient activation of a concept and therefore in a relatively larger BOLD response. These considerations remain highly speculative, however, since changes in BOLD response are a rather general index of changes in brain metabolic processes and do not index the precise underlying mechanism that caused them.

4.2 Sensory Segregation of the Anterior Temporal Lobes During Memory Retrieval

We further explored whether semantic memory in the ATL is organized in a sensory specific manner such that retrieval of memories would mimic the sensory modality in which they were encoded previously. Our results confirmed this hypothesis. As predicted, the retrieval of auditory-based semantic information did activate the superior ATL, and the retrieval of visual-based semantic information did preferentially activate the inferior ATL. But portions of the superior ATL were also activated. This finding provides some support for the embodied accounts of semantic processing (Prinz, 2002) because the retrieval of semantic associations was linked to the same regions of the ATL implicated in the perceptual experience of the cues.

4.3 Social Knowledge and the Anterior Temporal Lobes

The second question addressed by this study was whether some portion of the ATLs is preferentially responsive to social knowledge rather than generally sensitive to all semantic knowledge, based on a prediction of the social knowledge hypothesis of ATL function (Ross & Olson, 2010). To test this, we compared activations in response to the retrieval of social and nonsocial descriptions, which were learned outside of the scanner, and found that polar aspects of the ATL were preferentially activated by the retrieval of social words, indicating that the social sensitivity of the ATLs extends beyond processing and encoding to memory retrieval. Because we used novel stimuli, our study rules out the possibility that other uncontrolled aspects of the stimuli were driving the social effects observed in the ATL in prior neuroimaging studies. This finding is in line with other work by our laboratory and other laboratories showing that various social tasks and stimuli, such as social attribution film clips, social word comparison tasks, phrases about people, and theory of mind tasks, all activate a similar region of the ATL (Ross & Olson, 2010).

One question that is not answered by our current data is which feature(s) of social concepts (their abstractness, emotionality, or some other variable) trigger ATL activity. One explanation is that the ATLs are generally sensitive to abstract concepts, and social concepts are simply one example of this category. Lambon Ralph and colleagues recently showed that transcranial magnetic stimulation of the anterior middle temporal gyrus (MTG) impaired processing of abstract concepts more than concrete concepts (Pobric, Lambon Ralph, & Jefferies, 2009). Also, Noppeney and Price reported greater activations in the left ATL to abstract words as compared to concrete words (Noppeney & Price, 2004). However, close inspection of the word pools used in both studies reveals that the abstract words consisted primarily of words that describe people (e.g., skilled, adept, clever, smart, shrewd), while the concrete words primarily described things (e.g., buzzing, tooting, thunder, arc, curve). Abstract words tend to be more emotionally valenced than concrete words, and it has been proposed that the dichotomy between concrete and abstract concepts is driven in part by the statistical preponderance of sensorimotor information associated with concrete concepts, and of emotional information associated with abstract concepts (Kousta, Vigliocco, Vinson, Andrews, & Del Campo, 2010). Moreover, abstractness is typically defined by level of concreteness and imageability, and two studies have shown that ATL activations are largely unaffected by these variables (Ross & Olson, 2010; Zahn, et al., 2007). As such, abstractness per se does not appear to be a valid explanation for the observed effects.

A second possibility is that the ATLs are sensitive to salient knowledge, rather than social knowledge. We are sympathetic to this explanation. In earlier work we proposed that a general function of the ATL is to couple emotional responses to highly processed visual and auditory stimuli to form a sort of personal semantic memory (Olson, et al., 2007). This proposal was based on anatomical studies showing that polar aspects of the ATL, besides being multisensory, are highly interconnected with two neuromodulatory regions: the amygdala and the hypothalamus (Kondo, et al., 2003, 2005). The hypothalamus is typically considered to be a neuromodulatory region, important for autonomic regulation of emotions. Likewise, Adolphs recently proposed that the amygdala is responsive to such a wide range of social and emotional stimuli because it processes “salience” or “relevance” rather than threat (Adolphs, 2010). Three pieces of the current data support the salience explanation. First, subjects found it easier to recall social words as compared to nonsocial words, possibly because they were more salient. Second, the same temporal pole region that showed sensitivity to social words showed sensitivity to multisensory stimuli, which our behavioral data indicate may be more salient than unisensory stimuli. Third, the finding that repeated exposure to the social conditions, and not the nonsocial conditions, led to decreased activity points to the role of salience, since evocative stimuli tend to lose their affective power with repeated presentation.

Also, in a different fMRI study, we sought to understand the basis of the “unique entity” effect, in which the ATL is more sensitive to famous or personally familiar people as compared to unfamiliar people (Tranel, 2009). We found that the ATL was much more sensitive to faces of people who were associated with unique biographical information, such as having invented television, as compared to people who were associated with non-unique biographical information, such as having had three children (Ross & Olson, accepted - pending revisions). Once again, this finding points to the idea that portions of the ATL serve a mnemonic function that is defined by its sensitivity to salient knowledge. It is thus possible that social information is the canonical case of salient knowledge for social species of animals. Because some aspects of ‘salience’ are context specific and individually defined, it may be useful to examine individual differences in this variable in future studies. For instance, it is possible that individual differences in social interest may correlate with the sensitivity of the ATL to social stimuli.

4.4 The Hub Account and the Localization of Function

The Hub Account of semantic memory is a process account, which does not afford a privileged status to any stimulus category. Rather, the Hub Account proposes that enhanced activity in the ATLs reflects the specificity of the semantic operations performed there, rather than sensitivity to any particular stimulus category or dimension. Our findings do not support this view. First, using the conjunction analysis (Asemantic versus Ano-semantic) ∩ (Vsemantic versus Vno-semantic), we could not identify any region in the ATL that served a general semantic, but sensory-amodal, function. Even allowing that functionality can be graded across a generalized hub (Plaut, 2002), such a region should have appeared as an absence of differences when comparing auditory, visual, and audiovisual stimulation, because its responses to the three modalities would overlap. Instead, we found a significant segregation in the processing of different sensory modalities, indicating that the ATL is not a general amodal semantic processor. Second, our findings show that polar aspects of the ATL were more sensitive to social words than nonsocial words; no regions were identified that were more sensitive to nonsocial words. The Hub Account also specifies that this region is especially sensitive to semantic specificity (Patterson, et al., 2007). Specificity within a semantic hierarchy is defined on the basis of several psycholinguistic measures, such as meaningfulness and frequency of use or familiarity. However, our social and nonsocial words did not differ on these measures; instead, our results showed that portions of the ATLs, more specifically superior and polar regions, are sensitive to stimuli with greater social content.

Many studies of semantic memory have also failed to support the Hub Account, or its recent incarnation, the Hub-and-Spoke model (Lambon Ralph, Sage, Jones, & Mayberry, 2010), although this is usually in the form of null results. A recent meta-analysis of neuroimaging findings on semantic memory reported that the majority of studies failed to find significant activations to semantic processing in the ATL (Binder, Desai, Graves, & Conant, 2009). Although one could attribute this absence of findings to susceptibility artifacts (Devlin, et al., 2000), it is telling that fMRI studies of theory of mind and mentalizing have consistently reported activations in the ATL (Olson, et al., 2007). Binder and colleagues found that one way to achieve strong ATL activations in a semantic task is to use linguistic input with social content, such as stories about people (Binder, et al., 2011).

These findings should not be interpreted as negating the plausibility of the Hub Account; the theoretical possibility, and even the arguments supporting its necessity, still hold. What is problematic is the proposed location of the hub in the temporal pole. Simply put, the architects of the Hub Account may have erroneously placed the hub too anteriorly. Below, we review three lines of evidence that implicate the anterior fusiform gyrus, in and around perirhinal cortex, as the location of a putative semantic hub.

First, electrical stimulation of the left anterior fusiform gyrus, a region dubbed the ‘basal temporal language area’, produces speech arrest and confrontation naming errors in patients undergoing neurosurgery (Luders, et al., 1986; Luders, et al., 1991). Language disturbances can also be produced by stimulating the inferior temporal gyrus and parahippocampal gyrus (Burnstine, et al., 1990; Schaffler, Luders, & Beck, 1996), but the area in which stimulation most consistently produces speech errors is the anterior fusiform gyrus (Burnstine, et al., 1990; Schaffler, et al., 1996). This same region (but not more polar regions) produces P400s upon presentation of words or phrases with semantic content, as shown by studies using intracranial recording methods (McCarthy, Nobre, Bentin, & Spencer, 1995; Nobre, Allison, & McCarthy, 1994; Nobre & McCarthy, 1995). As predicted by these findings, epileptic seizures localized to the basal language area can cause transient aphasia (Kirshner, Hughes, Fakhoury, & Abou-Khalil, 1995).

Second, although patients with semantic dementia characteristically have atrophy in the temporal pole, anterior-lateral temporal lobe, and anterior fusiform gyrus, the semantic deficits associated with this disorder are most consistently correlated with atrophy to the anterior fusiform gyrus and underlying white matter tracts (Binney, Embleton, Jefferies, Parker, & Lambon Ralph, 2010; Mion, et al., 2010; Nestor, Fryer, & Hodges, 2006). In some studies, an area directly medial to the anterior fusiform, perirhinal cortex, is also implicated in semantic deficits (Davies, Graham, Xuereb, Williams, & Hodges, 2004).

Third, recent fMRI studies of semantic processing have shown that peak activations in semantic memory tasks are in this same anterior-medial fusiform region (Binney, et al., 2010).

There is also evidence indicating that the putative hub could be located in the anterior middle temporal gyrus (MTG), on the lower bank of the STS, posterior to the temporal pole. Recent transcranial magnetic stimulation (TMS) studies have shown that stimulation of the anterior MTG impairs semantic processing of words and pictures (Lambon Ralph, Pobric, & Jefferies, 2009; Pobric, Jefferies, & Lambon Ralph, 2010a; Pobric, Jefferies, & Lambon Ralph, 2007). The authors of a large meta-analysis of imaging studies of semantic memory reported that the anterior and middle MTG was activated in most studies of semantic memory (Binder, et al., 2009). The anterior fusiform was named as a separate region in the semantic memory network (Binder, et al., 2009). Whether the anterior MTG and anterior fusiform have similar or distinct functions in semantic memory must be determined by further experimentation.

In contrast, the ATL subregion most sensitive to social stimuli is located in the superior ATL, including the polar tip. In the current study our social activations were localized to the polar tip, while in a prior study using Heider and Simmel social attribution stimuli, the reported activations were in the pole and along the STS (Ross & Olson, 2010). We have also observed activations in the polar tip to famous faces (Ross & Olson, accepted - pending revisions), and superior ATL activations to social word stimuli have been reported by other investigators (Noppeney & Price, 2004; Zahn, et al., 2007). These findings align with prior results showing that in non-human primates, the superior ATL, but not the inferior ATL, contains cells that are responsive to social-emotional stimuli (Kondo, et al., 2003). The polar tip of the ATL has bidirectional connections with medial and orbital regions of the frontal lobe, implicating this region in high-level social processing (reviewed by Moran, et al., 1987).

In sum, social concepts and non-social concepts may rely on distinct regions of the temporal lobe: the processing of non-social concrete entities appears to rely on a region that is both more inferior and more posterior than the region activated by social concepts, which appears to rely on a superior/polar subregion of the ATL.

4.5 Limitations

The current study has two limitations: the first has to do with statistical power, the second with interpretation. It is possible that the learning task influenced the results, perhaps because the learning was too weak, thereby generating weak representations that underestimated the involvement of various ATL regions in semantic processing. Also, there was a large amount of stimulus repetition that may have caused repetition suppression, again reducing the statistical power of the results.

Second, subjects found it easier to recall social, as compared to non-social, material, and multisensory, as compared to unisensory, information. An uninteresting explanation for the greater responsiveness of the ATL during social than nonsocial judgments (and during multisensory compared to unisensory trials) is that the ATL is sensitive to the ease of memory recall and/or judgments. We feel that this explanation is unlikely for several reasons. First, although it is common to observe a greater BOLD response to more difficult tasks, it is uncommon to observe a greater BOLD response to easier tasks. This is because as a task becomes easier, it can be completed by automatic routines requiring little attention (Faro & Mohamed, 2010). For instance, it is common to observe decreased activations associated with increased motor learning, defined by faster RTs and greater ease of task execution (Olson, et al., 2006). Second, previous studies of the social knowledge hypothesis reported ATL activations to social stimuli in the absence of accuracy or RT differences. For instance, we asked participants to view images of landmarks and faces and determine whether each was famous or not famous based on information learned specifically for the study. The findings showed relatively greater activation to faces than to landmarks despite no difference in accuracy (Ross & Olson, accepted - pending revisions). In another study, participants were required to compare related words based on meaning, and the ATL was found to be more sensitive to social words, even though this task was harder than the matched non-social condition (Zahn, et al., 2007). Last, our review of the literature found no evidence that the ATL is sensitive to difficulty, but rather that it is sensitive to social knowledge (Olson, et al., 2007).

4.6 Conclusions

This study was motivated by the question of whether the ATL contains sensory and categorical subdivisions. To study this, we used a training regimen in which study participants learned associations between objects/sounds and semantic descriptors. We demonstrated that new semantic learning, once consolidated, activates the ATL. We also found that the ATL exhibits a predictable sensory specificity: the perception of auditory stimuli preferentially activated superior regions, the perception of visual stimuli preferentially activated inferior regions, and the perception of audiovisual stimuli preferentially activated polar regions. However, the retrieval of semantic knowledge does not appear to rest within the sensory streams used to encode the same information. Orthogonally, the ATL was found to be sensitive to the type of retrieved knowledge. Social knowledge preferentially activated the ATL, over and above nonsocial knowledge, in a region similar to that activated by audiovisual stimuli.

The theoretical contribution of our paper is twofold. First, our findings fail to support several contentions of the Hub Account: that the ATL is amodal, that it is similarly sensitive to all types of semantic information, and that the putative hub is located in the ATL. Indeed, our findings and literature review indicate that the temporal lobe region most sensitive to non-social, concrete semantic information is just posterior to the ATL, near perirhinal cortex. Second, our results further flesh out the social knowledge hypothesis by showing that the ATL is more sensitive to social as compared to non-social knowledge, that its social sensitivity extends beyond stimulus encoding to include memory retrieval, and that the region of the ATL most sensitive to social information processing is the superior ATL extending into the polar tip.

Research Highlights.

- We trained subjects to associate social and nonsocial adjectives with novel sensory stimuli.
- We then used fMRI to assess brain activity during retrieval.
- Findings indicate that the temporal pole shows greater activation for multisensory stimuli.
- Findings show that the temporal pole is selective for social information.

Supplementary Material

Table 3.

Talairach coordinates for regions found in a whole-brain analysis that were more sensitive to social stimuli than to nonsocial stimuli, collapsing across all sensory conditions. Each region is reported at the listed p-value, uncorrected for multiple comparisons, with a minimum cluster size of 122 voxels.

| Region | BA | Tal (x, y, z) | p value |
|---|---|---|---|
| right temporal pole | 38 | 48, 10, −31 | .05 |
| right superior temporal sulcus | 21 | 51, −9, −8 | .05 |
| right amygdala | | 110, 134, 149 | .05 |
| right middle temporal sulcus | 22 | 51, −4, −17 | .05 |
| right prefrontal cortex | 46 | 77, 96, 117 | .001 |
| right anterior hippocampus | 34 | 33, −15, −19 | .05 |
| left anterior cingulate | 33 | 6, 29, 10 | .001 |
| left medial prefrontal cortex | 32 | 6, 44, 24 | .001 |
| left superior temporal gyrus | 42 | −44, −14, 0 | .05 |
| left medial superior temporal gyrus | 22 | −34, −1, −5 | .05 |
| left anterior temporal lobe | 38 | −50, 2, −8 | .05 |

Acknowledgements

We would like to thank Dr. Feroze Mohamed and the BrainVoyager team for helpful advice. This work was supported by a National Institutes of Health grant to I. Olson [R01 MH091113], and the first author is supported by the National Science Foundation Graduate Research Fellowship Program. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institute of Mental Health, the National Institutes of Health, or the National Science Foundation.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Adolphs R. What does the amygdala contribute to social cognition? Annals of the New York Academy of Sciences. 2010;1191:42–61. doi: 10.1111/j.1749-6632.2010.05445.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. Cerebral Cortex. 2009;19:2767–2796. doi: 10.1093/cercor/bhp055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Binder JR, Gross WL, Allendorfer JB, Bonilha L, Chapin J, Edwards JC, Grabowski TJ, Langfitt JT, Loring DW, Lowe MJ, Koenig K, Morgan PS, Ojemann JG, Rorden C, Szaflarski JP, Tivarus ME, Weaver KE. Mapping anterior temporal lobe language areas with fMRI: A multicenter normative study. NeuroImage. 2011;54:1465–1475. doi: 10.1016/j.neuroimage.2010.09.048. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Binney RJ, Embleton KV, Jefferies E, Parker GJM, Lambon Ralph MA. The ventral and inferolateral aspects of the Anterior Temporal Lobe are crucial in semantic memory: Evidence from a novel direct comparison of distortion-corrected fMRI, rTMS, and Semantic Dementia. Cerebral Cortex. 2010 doi: 10.1093/cercor/bhq019. [DOI] [PubMed] [Google Scholar]

- Blaizot X, Mansilla F, Insausti AM, Constans JM, Salinas-Alamán A, Pró-Sistiaga P, Mohedano-Moriano A, Insausti R. The human parahippocampal region: I. Temporal pole cytoarchitectonic and MRI correlation. Cerebral Cortex. 2010;20:2198–2212. doi: 10.1093/cercor/bhp289.

- Burnstine TH, Lesser RP, Hart J, Uematsu S, Zinreich SJ, Krauss GL, Fisher RS, Vining EPG, Gordon B. Characterization of the basal temporal language area in patients with left temporal lobe epilepsy. Neurology. 1990;40:966–970. doi: 10.1212/wnl.40.6.966.

- Calvert GA, Lewis JW. Hemodynamic studies of audio-visual interactions. In: Calvert GA, Spence C, Stein BE, editors. Handbook of Multisensory Processing. Cambridge, MA: MIT Press; 2004.

- Chartrand JP, Peretz I, Belin P. Auditory recognition expertise and domain specificity. Brain Research. 2008;1220:191–198. doi: 10.1016/j.brainres.2008.01.014.

- Damasio H, Tranel D, Grabowski T, Adolphs R, Damasio A. Neural systems behind word and concept retrieval. Cognition. 2004;92:179–229. doi: 10.1016/j.cognition.2002.07.001.

- Davies RR, Graham KS, Xuereb JH, Williams GB, Hodges JR. The human perirhinal cortex and semantic memory. European Journal of Neuroscience. 2004;20:2441–2446. doi: 10.1111/j.1460-9568.2004.03710.x.

- Devlin JT, Russell RP, Davis MH, Price CJ, Wilson J, Moss HE, Matthews PM, Tyler LK. Susceptibility-induced loss of signal: comparing PET and fMRI on a semantic task. NeuroImage. 2000;11:589–600. doi: 10.1006/nimg.2000.0595.

- Ding SL, Van Hoesen GW, Cassell MD, Poremba A. Parcellation of human temporal polar cortex: A combined analysis of multiple cytoarchitectonic, chemoarchitectonic, and pathological markers. Journal of Comparative Neurology. 2009;514:595–623. doi: 10.1002/cne.22053.

- Drane DL, Ojemann GA, Aylward E, Ojemann JG, Johnson LC, Silbergeld DL, Miller JW, Tranel D. Category-specific naming and recognition deficits in temporal lobe epilepsy surgical patients. Neuropsychologia. 2008;46:1242–1255. doi: 10.1016/j.neuropsychologia.2007.11.034.

- Faro SH, Mohamed FB. BOLD fMRI: A guide to functional imaging for neuroscientists. New York, NY: Springer; 2010.

- Frith U, Frith C. The social brain: Allowing humans to boldly go where no other species has been. Philosophical Transactions of the Royal Society B: Biological Sciences. 2010;365:165–175. doi: 10.1098/rstb.2009.0160.

- Frith U, Frith CD. Development and neuropsychology of mentalizing. Philosophical Transactions of the Royal Society B: Biological Sciences. 2003;358:459–473. doi: 10.1098/rstb.2002.1218.

- Gainotti G. Different patterns of famous people recognition disorders in patients with right and left anterior temporal lesions: A systematic review. Neuropsychologia. 2007;45:1591–1607. doi: 10.1016/j.neuropsychologia.2006.12.013.

- Goebel R, Linden DE, Lanfermann H, Zanella FE, Singer W. Functional imaging of mirror and inverse reading reveals separate coactivated networks for oculomotion and spatial transformations. Neuroreport. 1998;9:713–719. doi: 10.1097/00001756-199803090-00028.

- Grabowski T, Damasio H, Tranel D, Ponto LL, Hichwa RD, Damasio AR. A role for the left temporal pole in retrieval of words for unique entities. Human Brain Mapping. 2001;13:199–212. doi: 10.1002/hbm.1033.

- Hein G, Knight RT. Superior temporal sulcus - It's my area: or is it? Journal of Cognitive Neuroscience. 2008;20:2125–2136. doi: 10.1162/jocn.2008.20148.

- Kirshner HS, Hughes T, Fakhoury T, Abou-Khalil B. Aphasia secondary to partial status epilepticus of the basal temporal language area. Neurology. 1995;45:1616–1618. doi: 10.1212/wnl.45.8.1616.

- Kondo H, Saleem KS, Price JL. Differential connections of the temporal pole with the orbital and medial prefrontal networks in macaque monkeys. The Journal of Comparative Neurology. 2003;465:499–523. doi: 10.1002/cne.10842.

- Kondo H, Saleem KS, Price JL. Differential connections of the perirhinal and parahippocampal cortex with the orbital and medial prefrontal networks in macaque monkey. The Journal of Comparative Neurology. 2005;493:479–509. doi: 10.1002/cne.20796.

- Kousta S, Vigliocco G, Vinson DP, Andrews M, Del Campo E. The representation of abstract words: Why emotions matter. Journal of Experimental Psychology: General. 2010. doi: 10.1037/a0021446.

- Kreifelts B, Ethofer T, Grodd W, Erb M, Wildgruber D. Audiovisual integration of emotional signals in voice and face: An event-related fMRI study. NeuroImage. 2007;37:1445–1456. doi: 10.1016/j.neuroimage.2007.06.020.

- Lambon Ralph MA, Pobric G, Jefferies E. Conceptual knowledge is underpinned by the temporal pole bilaterally: Convergent evidence from rTMS. Cerebral Cortex. 2009;19:832–838. doi: 10.1093/cercor/bhn131.

- Lambon Ralph MA, Sage K, Jones RW, Mayberry EJ. Coherent concepts are computed in the anterior temporal lobes. Proceedings of the National Academy of Sciences. 2010;107:2717–2722. doi: 10.1073/pnas.0907307107.

- Lewis JW, Wightman FL, Brefczynski JA, Phinney RE, Binder JR, DeYoe EA. Human brain regions involved in recognizing environmental sounds. Cerebral Cortex. 2004;14:1008–1021. doi: 10.1093/cercor/bhh061.

- Luders H, Lesser RP, Hahn J, Dinner DS, Morris H, Resor S, Harrison M. Basal temporal language area demonstrated by electrical stimulation. Neurology. 1986;36:505–510. doi: 10.1212/wnl.36.4.505.

- Luders H, Lesser RP, Hahn J, Dinner DS, Morris HH, Wyllie E, Godoy J. Basal temporal language area. Brain. 1991;114:743–754. doi: 10.1093/brain/114.2.743.

- Martin A. Circuits in mind: The neural foundations for object concepts. In: Gazzaniga MS, editor. The Cognitive Neurosciences. 4th ed. Cambridge, MA: MIT Press; 2009.

- McCarthy G, Nobre AC, Bentin S, Spencer DD. Language-related field potentials in the anterior-medial temporal lobe: I. Intracranial distribution and neural generators. The Journal of Neuroscience. 1995;15:1080–1089. doi: 10.1523/JNEUROSCI.15-02-01080.1995.

- McClelland JL, Rogers TT. The parallel distributed processing approach to semantic cognition. Nature Reviews: Neuroscience. 2003;4:310–322. doi: 10.1038/nrn1076.

- Mion M, Patterson K, Acosta-Cabronero J, Pengas G, Izquierdo-Garcia D, Hong YT, Fryer TD, Williams GB, Hodges JR, Nestor PJ. What the left and right anterior fusiform gyri tell us about semantic memory. Brain. 2010;133:3256–3268. doi: 10.1093/brain/awq272.

- Molholm S, Ritter W, Javitt DC, Foxe JJ. Multisensory visual-auditory object recognition in humans: a high-density electrical mapping study. Cerebral Cortex. 2004;14:452–465. doi: 10.1093/cercor/bhh007.

- Moll J, Zahn R, de Oliveira-Souza R, Krueger F, Grafman J. The neural basis of human moral cognition. Nature Reviews: Neuroscience. 2005;6:799–809. doi: 10.1038/nrn1768.

- Moran MA, Mufson EJ, Mesulam MM. Neural inputs into the temporopolar cortex of the rhesus monkey. The Journal of Comparative Neurology. 1987;256:88–103. doi: 10.1002/cne.902560108.

- Nakamura K, Kubota K. The primate temporal pole: its putative role in object recognition memory. Behavioural Brain Research. 1996;77:53–77. doi: 10.1016/0166-4328(95)00227-8.

- Nakamura K, Matsumoto K, Mikami A, Kubota K. Visual response properties of single neurons in the temporal pole of behaving monkeys. Journal of Neurophysiology. 1994;71:1206–1221. doi: 10.1152/jn.1994.71.3.1206.

- Nestor PJ, Fryer TD, Hodges JR. Declarative memory impairments in Alzheimer's disease and semantic dementia. NeuroImage. 2006;30:1010–1020. doi: 10.1016/j.neuroimage.2005.10.008.

- Nobre AC, Allison T, McCarthy G. Word recognition in the human inferior temporal lobe. Nature. 1994;372:260–263. doi: 10.1038/372260a0.

- Nobre AC, McCarthy G. Language-related field potentials in the anterior-medial temporal lobe: II. Effects of word type and semantic priming. The Journal of Neuroscience. 1995;15:1090–1098. doi: 10.1523/JNEUROSCI.15-02-01090.1995.

- Noppeney U, Price CJ. Retrieval of abstract semantics. NeuroImage. 2004;22:164–170. doi: 10.1016/j.neuroimage.2003.12.010.

- Olson IR, Gatenby JC, Gore JC. A comparison of bound and unbound audio-visual information processing in the human cerebral cortex. Cognitive Brain Research. 2003;14:129–138. doi: 10.1016/s0926-6410(02)00067-8.

- Olson IR, Plotzker A, Ezzyat Y. The enigmatic temporal pole: a review of findings on social and emotional processing. Brain. 2007;130:1718–1731. doi: 10.1093/brain/awm052.

- Olson IR, Rao H, Moore KS, Wang JJ, Detre J, Aguirre GK. Using perfusion fMRI to measure continuous changes in neural activity with learning. Brain and Cognition. 2006;60:262–271. doi: 10.1016/j.bandc.2005.11.010.

- Patterson K, Nestor PJ, Rogers TT. Where do you know what you know? The representation of semantic knowledge in the human brain. Nature Reviews: Neuroscience. 2007;8:976–988. doi: 10.1038/nrn2277.

- Plaut DC. Graded modality-specific specialisation in semantics: A computational account of optic aphasia. Cognitive Neuropsychology. 2002;19:603–639. doi: 10.1080/02643290244000112.

- Pobric G, Jefferies E, Lambon Ralph MA. Category-specific versus category-general semantic impairment induced by transcranial magnetic stimulation. Current Biology. 2010a;20:964–968. doi: 10.1016/j.cub.2010.03.070.

- Pobric G, Jefferies E, Lambon Ralph MA. Category-specific versus category-general semantic impairment induced by transcranial magnetic stimulation. Current Biology. 2010b;20:964–968. doi: 10.1016/j.cub.2010.03.070.

- Pobric G, Jefferies E, Lambon Ralph MA. Anterior temporal lobes mediate semantic representation: Mimicking semantic dementia by using rTMS in normal participants. Proceedings of the National Academy of Sciences. 2007;104:20137–20141. doi: 10.1073/pnas.0707383104.

- Pobric G, Lambon Ralph MA, Jefferies E. The role of the anterior temporal lobes in the comprehension of concrete and abstract words. Cortex. 2009;45:1104–1110. doi: 10.1016/j.cortex.2009.02.006.

- Poremba A, Saunders RC, Crane AM, Cook M, Sokoloff L, Mishkin M. Functional mapping of the primate auditory system. Science. 2003;299:568–572. doi: 10.1126/science.1078900.

- Prinz JJ. Furnishing the mind: Concepts and their perceptual basis. Cambridge, MA: MIT Press; 2002.

- Robins DL, Hunyadi E, Schultz RT. Superior temporal activation in response to dynamic audio-visual emotional cues. Brain and Cognition. 2009;69:269–278. doi: 10.1016/j.bandc.2008.08.007.

- Rogers TT, Hocking J, Noppeney U, Mechelli A, Gorno-Tempini ML, Patterson K, Price CJ. Anterior temporal cortex and semantic memory: Reconciling findings from neuropsychology and functional imaging. Cognitive, Affective, & Behavioral Neuroscience. 2006;6:201–213. doi: 10.3758/cabn.6.3.201.

- Ross LA, Olson IR. Social cognition and the anterior temporal lobes. NeuroImage. 2010;49:3452–3462. doi: 10.1016/j.neuroimage.2009.11.012.

- Ross LA, Olson IR. What’s unique about unique entities? An fMRI investigation of the semantics of famous faces and landmarks. Cerebral Cortex. doi: 10.1093/cercor/bhr274. (accepted, pending revisions).

- Schaffler L, Luders HO, Beck GJ. Quantitative comparison of language deficits produced by extraoperative electrical stimulation of Broca's, Wernicke's, and basal temporal language areas. Epilepsia. 1996;37:463–475. doi: 10.1111/j.1528-1157.1996.tb00593.x.

- Simmons WK, Martin A. The anterior temporal lobes and the functional architecture of semantic memory. Journal of the International Neuropsychological Society. 2009;15:645–649. doi: 10.1017/S1355617709990348.

- Simmons WK, Reddish M, Bellgowan PSF, Martin A. The selectivity and functional connectivity of the anterior temporal lobes. Cerebral Cortex. 2010;20:813–825. doi: 10.1093/cercor/bhp149.