Abstract

The ability to rapidly and accurately recognize visual stimuli represents a significant computational challenge. Yet, despite such complexity, the primate brain manages this task effortlessly. How it does so remains largely a mystery. The study of visual perception and object recognition was once limited to investigations of brain-damaged individuals or lesion experiments in animals. However, in the last 25 years, new methodologies, such as functional neuroimaging and advances in electrophysiological approaches, have provided scientists with the opportunity to examine this problem from new perspectives. This review highlights how some of these recent technological advances have contributed to the study of visual processing and where we now stand with respect to our understanding of neural mechanisms underlying object recognition.

INTRODUCTION

In many ways, the neural processes associated with object recognition are not unlike those associated with language. Just as words are formed from a combination of letters, visual objects are formed from a combination of individual features, such as lines or textures. By themselves, these features reveal little regarding the identity of the specific object to which they belong, much like individual letters do not convey the meaning of a word. The features must be combined in specific ways, into “syllables” and “words”, to create a unified percept of the object of interest. Moreover, this must be done irrespective of changes in stimulus orientation, illumination, and position to yield object invariance - the ability to recognize an object under changing conditions. Yet despite this complexity, the brain accomplishes the task effortlessly. How it does so remains largely a mystery.

Visual processing is thought to consist of two stages. The first stage involves transforming the visual stimulus into neural impulses that are transmitted via the retina and the lateral geniculate nucleus to the primary visual cortex (V1), where the process of analyzing the individual features begins. From V1, the visual information is distributed to a number of extrastriate visual areas, including areas V2, V3, V4, and MT, which process shape, color, motion, and other visual features. These features are then combined to create a complete representation of the image under scrutiny. The second stage involves binding this representation with its categorical identity (i.e., recognizing the collection of features as a face, a car, etc.) and ultimately its specific identity (i.e., mother, father, Ford Taurus, etc.). Historically, these two stages were believed to occur simultaneously and to depend on the same neural mechanism (i.e., seeing is recognizing). However, with the discovery of perceptual deficits in recognition in the absence of any significant loss of vision (i.e., visual agnosias; see below), it has become clear that they are separable and likely mediated by different anatomical substrates.

Lesion studies in patients have revealed a number of regions in the occipital and temporal cortices that are intimately linked to visual processing. Subsequent electrophysiological studies have uncovered individual neurons that selectively respond to a variety of visual stimuli, ranging from isolated bars of light to intact faces. Despite these advances, it is still unclear how object recognition takes place. How are visual features, such as shapes, colors, and textures, encoded by individual neurons (i.e., what is the visual “alphabet”)? How are these features combined to produce complete representations of complex objects (i.e., visual “words”)? And finally, how are these representations anatomically organized (i.e., what is the visual “dictionary”)?

Over the last 25 years, there have been a number of technological advances that have led to major strides in our understanding of the role of the inferior temporal (IT) cortex in visual processing and object recognition. This review will discuss how these technological advances have contributed to the study of visual processing and where we now stand with respect to our understanding of object recognition. We begin with a brief overview of the early studies that identified IT cortex as the center of object recognition in the brain. We next discuss how advances in optical imaging and physiological recording have revealed much about the complex properties of IT neurons. We then highlight how functional imaging revolutionized the study of cognitive neuroscience, and visual cognition in particular. Finally, we conclude by discussing how, in the last decade, efforts to relate findings from human and non-human primates have led to more integrated theories regarding the processing of faces, which are believed to represent a special category of visual stimuli.

HISTORICAL OVERVIEW

Deficits in Perception – “Seeing” is not “Recognizing”

Despite a long history of research into brain function, it is only relatively recently that different cognitive functions have been attributed to specific locations within the brain. Prior to the 19th century, it was believed that cognitive functions were equally distributed throughout the cerebral hemispheres, subcortical structures, and even the ventricles (see Gross, 1999 for review). The first widely acknowledged theory to attribute specific functions to certain brain areas was the field of phrenology, pioneered by Gall, Spurzheim, and others (Gall & Spurzheim, 1809, Gall & Spurzheim, 1810). Later, more objective examples of localized cortical function began to emerge. Paul Broca, a French pathology professor, noted that damage to the left frontal cortex was often associated with speech impairments (Broca, 1861). John Hughlings Jackson proposed the existence of a motor area based on his studies of epileptic patients (Jackson, 1870). Such demonstrations led to the establishment of cerebral localization as the standard model of brain function, and prompted the hunt for other examples of specialized regions within the brain.

One of the more active areas of research at this time was the search for visual centers of the brain. During the late 19th and early 20th century, a number of experiments were conducted that ultimately confirmed the occipital cortex as the primary visual center in primates. However, these experiments also highlighted an important dissociation in visual processing between “seeing” a visual stimulus and “recognizing” it. For example, Hermann Munk (Munk, 1881) and Brown and Schafer (1888) independently found that monkeys and dogs with temporal lobe lesions would ignore food and water, even if hungry and thirsty. However, they could still navigate rooms and avoid obstacles placed in front of them, indicating that their visual acuity was, at least partially, intact. Munk referred to this syndrome as “psychic blindness” to describe the apparent inability of the animal to understand the meaning of the visual stimuli presented. This was in contrast to “cortical blindness”, which referred to the complete loss of vision that followed extensive occipital lobe lesions.

Psychic blindness, later renamed “visual agnosia”, has since been demonstrated in humans who suffered damage to the occipital and temporal cortices (see Farah, 2004 for review). Two separate forms of visual agnosia have been characterized. Apperceptive agnosia, which occurs after diffuse damage to the occipital and temporal cortices, affects both early and late visual processing. Patients suffering from apperceptive agnosia are impaired at simple discrimination tasks, have difficulty copying pictures, and cannot name or categorize objects. Patients suffering from associative agnosia, on the other hand, can successfully identify and copy shapes. However, their ability to associate any meaning with the images is severely impaired. Associative agnosia often involves damage to the inferior portions of the posterior cortex, the ventral occipital cortex and/or the lingual and fusiform gyri in the posterior temporal lobe.

The detailed studies of perceptual deficits in patients suffering from associative agnosia foreshadowed much of what we now know about higher-order visual processing and the cortical mechanisms associated with object recognition. First, it was shown that the inability of patients to categorize or identify a given stimulus was unaffected by changes in viewpoint, size, orientation, or any other “low-level” manipulation (e.g., McCarthy & Warrington, 1986). This finding confirmed that the disorder was not associated with an impairment in visual acuity per se, but in linking the physical features of a stimulus with its meaning. In turn, this implied that the brain areas associated with object recognition should be equally insensitive to such changes (i.e. show object invariance). Second, it was shown that perceptual deficits could be restricted to specific visual categories. Joachim Bodamer (1947) reported findings from several cases of patients who lacked the ability to recognize familiar faces, which he termed prosopagnosia. In cases of pure prosopagnosia, the ability to classify and categorize non-face stimuli is unimpaired (see Damasio, Damasio & Van Hoesen, 1982 for review). In other instances, a patient may be more impaired for certain categories compared to others (e.g., a greater impairment for images of living objects such as plants and animals compared to images of inanimate objects; Warrington & Shallice, 1984). The selective nature of these deficits suggests not only that the areas responsible for “seeing” a visual stimulus are distinct from those responsible for “recognizing” it, but that the anatomical substrates for object recognition might be arranged according to categorical distinctions.

Identifying the contribution of the inferior temporal cortex to object recognition

While the involvement of the occipital cortex in vision was firmly established by the early 20th century, the role of the temporal cortex in visual perception was not fully appreciated until several decades later. In 1938, Heinrich Klüver and Paul Bucy conducted a series of lesion experiments in monkeys that produced a characteristic set of behavioral symptoms (see Klüver, 1948, Klüver & Bucy, 1938). They noted that monkeys with bilateral removal of the temporal lobe showed not only decreased emotional reactivity and increased sexual activity, but also a significant impairment in their ability to recognize and/or discriminate visual stimuli, even though these changes in visual ability were not accompanied by any appreciable loss of vision. This set of symptoms later became known as the Klüver-Bucy syndrome. It was subsequently revealed that the visual deficits of the Klüver-Bucy syndrome were due to selective damage of the inferior temporal neocortex (Blum, Chow & Pribram, 1950, Chow, 1961, Iwai & Mishkin, 1969, Mishkin, 1954, Mishkin & Pribram, 1954). Damage to medially adjacent temporal-lobe regions (i.e., the amygdala) failed to produce visual deficits, but instead led to the social and sexual abnormalities.

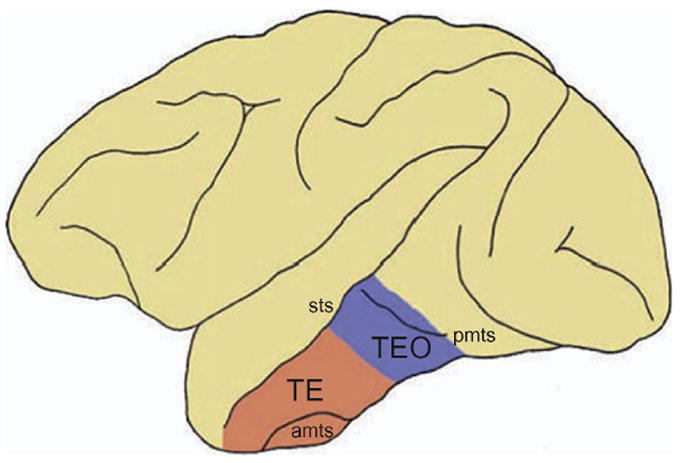

The inferior temporal (IT) cortex of monkeys was further divided into architectonically distinct regions, most notably area TE, located anteriorly along the inferior temporal gyrus, and area TEO, located posterior to area TE near the posterior middle temporal sulcus (Figure 1) (Boussaoud, Desimone & Ungerleider, 1991, Seltzer & Pandya, 1978, von Bonin & Bailey, 1947). Later work showed that damage to area TE resulted in greater deficits in visual recognition whereas damage to area TEO resulted in greater deficits in visual discrimination (Cowey & Gross, 1970, Ettlinger, Iwai, Mishkin & Rosvold, 1968, Iwai & Mishkin, 1968).

Figure 1. Architectonic subdivisions of the monkey inferior temporal cortex.

Lateral view of the monkey brain showing the two architectonic subdivisions of the inferior temporal cortex: area TE and area TEO. amts: anterior middle temporal sulcus; pmts: posterior middle temporal sulcus; sts: superior temporal sulcus (from Webster et al., 1991).

It was known at this time that more posterior visual areas, such as striate (V1) and extrastriate visual areas, received visual inputs from several subcortical structures such as the pulvinar and superior colliculus (Felleman & Van Essen, 1991). However, lesions of the pulvinar and superior colliculus repeatedly failed to produce deficits in visual discrimination (Chow, 1951, Ungerleider & Pribram, 1977), as one might expect if the region involved in visual discrimination, namely IT cortex, relied on inputs from these subcortical structures. In 1975, Rocha-Miranda and colleagues confirmed that activation of IT cortex depends exclusively on inputs received from corticocortical connections originating in V1 (Desimone, Fleming & Gross, 1980, Rockland & Pandya, 1979, Zeki, 1971). They found that bilateral removal of V1 obliterated all visual responses in IT neurons. In contrast, unilateral lesions of V1 or transection of the forebrain commissures left IT neurons that responded only to stimuli in the contralateral visual field. The behavioral significance of this corticocortical pathway could be seen in the crossed-lesion experiments conducted by Mishkin and colleagues in the 1960s (see Mishkin, 1966). They combined V1 lesions in one hemisphere with lesions of IT cortex in the opposite hemisphere and found no visual impairment. Presumably, visual inputs from V1 of the intact hemisphere could still reach the contralateral IT cortex via the forebrain commissures. However, if the forebrain commissures were cut, thus completely isolating the intact IT cortex from its V1 input, then the animals exhibited profound visual deficits. One would not expect such a deficit if IT cortex could still obtain sufficient visual information via subcortical pathways.

Neurons in IT cortex are selective for complex stimuli

In 1969, Gross and colleagues reported on the first electrophysiological recordings from individual neurons in IT cortex. They found that the majority of neurons in IT cortex (approximately 80%) are visually responsive, displaying both excitatory and suppressed responses to visual stimuli (Gross, Bender & Rocha-Miranda, 1969, Gross, Rocha-Miranda & Bender, 1972). Neurons in IT cortex were found to have very large receptive fields relative to earlier visual areas, often spanning both hemifields and almost always including the center of gaze (Gross et al., 1972). However, what was perhaps most noteworthy about neurons in IT cortex is their strong selectivity for complex shapes, including faces and objects (e.g., Figure 2) (Bruce, Desimone & Gross, 1981, Desimone, Albright, Gross & Bruce, 1984, Gross et al., 1972, Kobatake & Tanaka, 1994, Perrett, Rolls & Caan, 1982, Rolls, Judge & Sanghera, 1977, Tanaka, Saito, Fukada & Moriya, 1991). Moreover, this selectivity was shown to be insensitive to changes in size and location (Desimone et al., 1984), as predicted by the earlier neuropsychological evidence. The majority of these early electrophysiological studies were done in anesthetized animals, demonstrating that the unique sensitivity of IT neurons to complex shapes is not a product of top-down or attentional factors but is intrinsic to the neurons themselves.

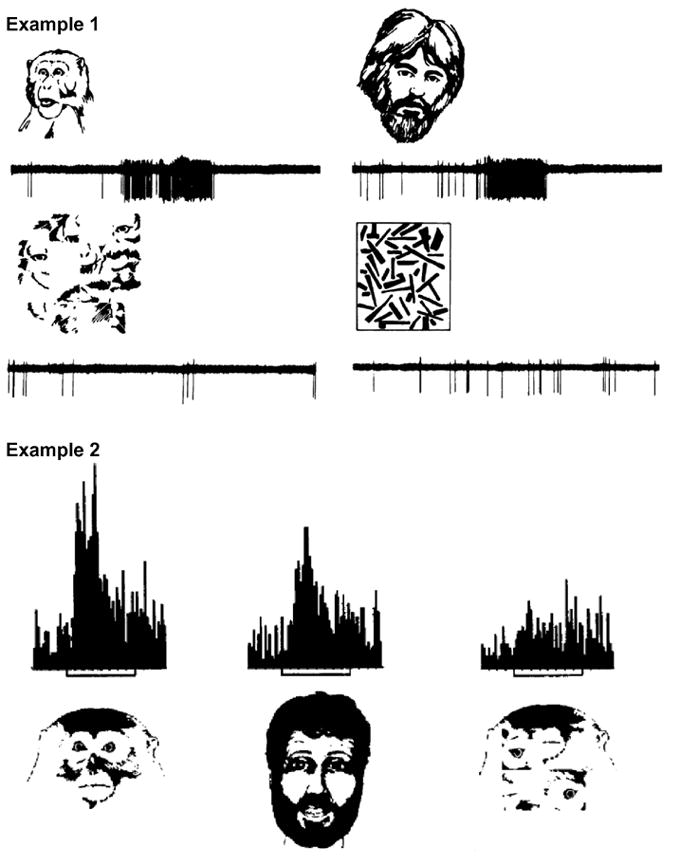

Figure 2. Face selective neurons in the monkey temporal cortex.

Two examples of face-selective neurons recorded in the temporal cortex of anesthetized monkeys. Example 1 was recorded from the superior bank of the superior temporal sulcus (from Bruce et al., 1981). Example 2 was recorded from the inferior temporal cortex (from Desimone et al., 1984). Both neurons responded vigorously to images of human and monkey faces. However, their activity decreased significantly when presented with scrambled versions of those images, or other non-face stimuli.

The discovery of neurons selective for specific objects in IT cortex had profound repercussions on theories concerning object recognition. Some years earlier, Jerzy Konorski (1967) had postulated the existence of “gnostic” units, which are, in theory, individual neurons that signal the presence of a particular object. Such a neuron would presumably code for all iterations of this object, across changes in size, viewpoint, location, etc. The concept of gnostic units was later reworked into the “Grandmother Cell Hypothesis”, a term credited to Jerry Lettvin (see Gross, 2002). If object recognition proceeds along a strictly hierarchical pathway in which selectivity among neurons gets progressively more complex, one should theoretically be able to locate a particular neuron or small population of neurons that responds selectively to a particular object or person, such as one’s grandmother. Taking this one step further, if we were to destroy this neuron (or population of neurons), we would lose the ability to recognize said grandmother.

Summary

The progressive accumulation of information regarding the properties of visual cortex ultimately led to the hypothesis of a visual recognition pathway, stretching from V1 to area TE (the ventral stream or “what” pathway; Ungerleider & Mishkin, 1982). A second visual pathway, stretching from V1 to parietal cortex was postulated to process visuospatial information (the dorsal stream or “where” pathway). As one moves along both pathways, there is a progressive loss of retinotopic information and a progressive increase in convergence among neuronal projections (Shiwa, 1987, Weller & Kaas, 1987, Weller & Steele, 1992). For the ventral visual pathway, this could account for the insensitivity of IT neurons to spatial location, size, and viewpoint: invariance necessary for object recognition.

One assumption based on this evidence is that object recognition is a serial process that proceeds through a chain of hierarchically organized areas, beginning with early visual areas responsible for analyzing simple visual features (e.g., lines, color, etc.) and ending with highly selective areas that respond to individual categorical exemplars (e.g., faces). Neurons within these end-stage areas (i.e., “gnostic” or “grandmother” neurons) would presumably encode a complete representation of a given object. It was eventually pointed out, however, that if objects were indeed encoded by individual neurons, there would be an insufficient number of neurons to encode all possible objects, and thus the concept of such a sparse-encoding scheme fell out of favor. Nonetheless, it did leave the community with two critical and as yet unanswered questions. First, how are objects represented, if not by “grandmother” or “gnostic” neurons? And second, what is the relationship of such object representations to visual agnosias? In the remainder of this review, we will highlight several research avenues followed over the last 25 years that have attempted to address these fundamental questions.

BUILDING OBJECT REPRESENTATIONS IN THE INFERIOR TEMPORAL CORTEX

“Combination Coding”: Representing complex objects through combinations of simpler elements

The 1980s and 1990s saw a number of electrophysiological studies attempting to characterize the basic response properties of IT neurons and their relationship to object recognition. What rapidly became clear was that IT neurons show a wide range of stimulus preferences that do not immediately lend themselves to an obvious organizational scheme. Unlike neurons in earlier visual areas such as V1, which are organized retinotopically, or V2, which are organized into modules according to functional properties (e.g., color), neurons in IT cortex did not, at first, seem to be organized according to any recognizable scheme.

In 1984, Desimone and colleagues performed one of the first systematic studies of the neuronal properties of IT neurons. They compared the responses of IT neurons in anesthetized monkeys to a wide array of stimuli, ranging from simple shapes, such as bars and circles, to complex stimuli, such as faces and snakes. They found that while a small subset of neurons responded selectively to faces and hands, the majority responded to a variety of different simple stimuli. In the latter case, they attempted to identify the critical features of the stimulus that were responsible for driving individual neurons by presenting cutouts or otherwise altered versions of the stimuli (see also Schwartz, Desimone, Albright & Gross, 1983). They found that the majority of visually responsive neurons required complex shapes, textures, colors or some combination thereof to be maximally activated.

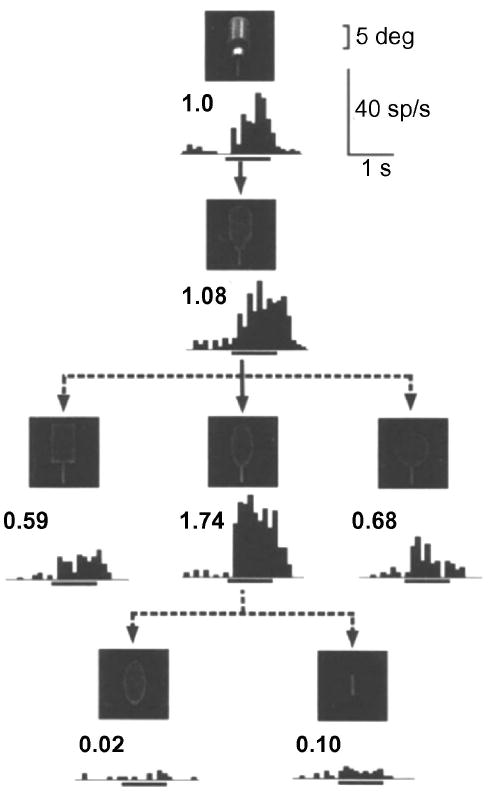

Several years later, Tanaka and colleagues developed a more sophisticated stimulus reduction algorithm that allowed them to better characterize the critical features that activated each sampled neuron (Fujita, Tanaka, Ito & Cheng, 1992, Ito, Tamura, Fujita & Tanaka, 1995, Kobatake & Tanaka, 1994, Tanaka et al., 1991). They found that neurons in the posterior one-third of IT cortex (area TEO) could be maximally activated by bars or circles, whereas those in the anterior two-thirds of IT cortex (area TE) required more complex arrangements of features to elicit a maximal response (e.g., Figure 3). These critical features were often small groups of elements derived from intact real-world objects. Tanaka and colleagues proposed that objects might be represented by a small number of neurons, each sensitive to the various features of that object (“combination coding”; Figure 4). The benefit of combination over sparse coding is that the former is much more flexible, allowing for limitless representations through the integration of the responses from different sets of feature-selective neurons.

Figure 3. Systematic reduction of real-world object reveals feature selectivity of IT neurons.

Responses of an IT neuron to intact (top panel) and systematically reduced versions of a water bottle. Inset numbers above the histograms represent the normalized response magnitudes relative to the response to the intact image. Stimulus presentation window is indicated by horizontal line below each histogram (from Tanaka, 1996).

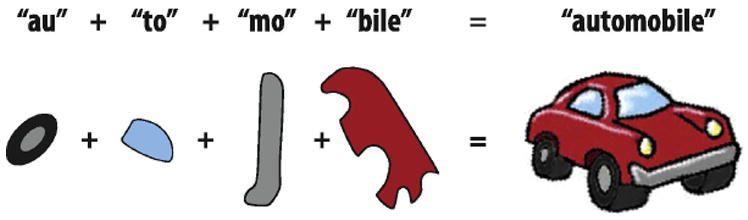

Figure 4. Real-world objects represented by combinations of different features.

Similar to how individual syllables are combined to create words, “combination coding” suggests that objects are represented by neurons (or small populations of neurons), each coding for one of the different complex features that make up the object (see Tanaka, 1996 for review).
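The flexibility argument behind combination coding can be made concrete with a simple count. The sketch below is illustrative only: it assumes a hypothetical pool of feature-selective units and assumes that each object is signalled by a fixed-size subset of active units, neither of which is a measured property of IT cortex. The function names are invented for this example.

```python
from math import comb


def grandmother_capacity(n_neurons: int) -> int:
    """One dedicated neuron per object: capacity equals the neuron count."""
    return n_neurons


def combination_capacity(n_feature_units: int, units_per_object: int) -> int:
    """Objects are distinguished by WHICH subset of feature units is active.

    Assumes every object activates exactly `units_per_object` units, so the
    capacity is the number of distinct subsets of that size.
    """
    return comb(n_feature_units, units_per_object)


if __name__ == "__main__":
    n_units = 100   # hypothetical pool of feature-selective units
    k_active = 5    # hypothetical number of units active per object
    print("one neuron per object:", grandmother_capacity(n_units))
    print("combination coding:   ", combination_capacity(n_units, k_active))
```

Under these assumptions, 100 dedicated neurons could label at most 100 objects, whereas subsets of five units drawn from the same 100 already distinguish more than 75 million patterns.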

Around the same time, psychologists were developing similar “object-based” models of object recognition, which were all, more or less, based on the principle of simpler shapes being combined to produce complex representations. Perhaps the best known of these is the theory of “Recognition-by-Components” (RBC), first proposed by Biederman (1987). Similar to combination coding, RBC proposed that objects are represented through various combinations of more primitive shapes. What was particularly intriguing about RBC is that it constrained these primitives to a set of no more than 36 geometric icons or “geons” (Figure 5A). These geons were thought to be distinguished from one another on the basis of five properties: curvature, collinearity, symmetry, parallelism, and cotermination.

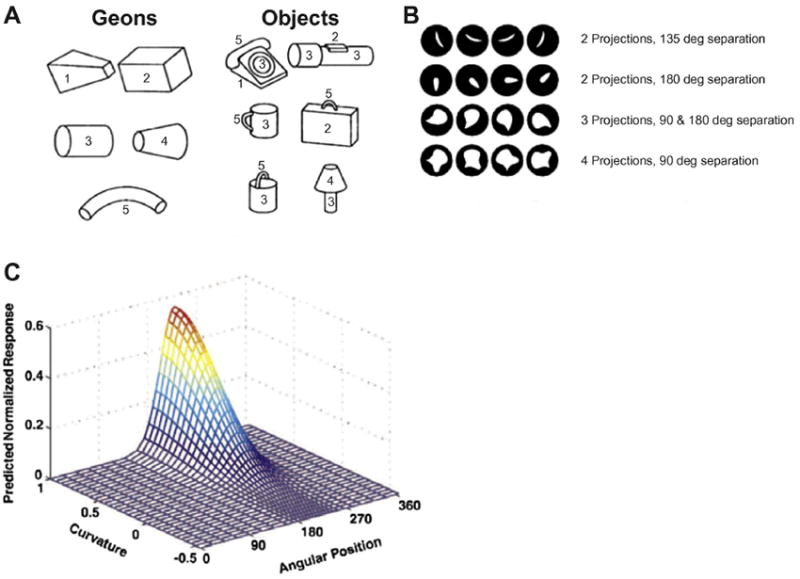

Figure 5. Object-based (view-independent) models of object recognition.

A. Examples of geometric icons (“geons”) as originally proposed by Biederman (1987), and how they could be combined to produce representations of real-world objects. B. Stimuli used by Pasupathy and Connor (2001) to investigate tuning properties of V4 neurons. Stimuli contained 2 to 4 projections, separated by 90 to 180 degrees, presented at various orientations. C. Example of a V4 neuron exhibiting tuning to a specific feature composed of a particular curvature and angular position.

Object-based models of object recognition make certain predictions about what one might expect to find at the neuronal level. For example, object-based models predict the existence of neurons sensitive to intermediate shapes (e.g., geons). Tanaka and colleagues were among the first to provide direct neurophysiological evidence for object-based models of object recognition – specifically, neurons that respond selectively and most strongly to decomposed versions of real-world objects. This was followed up by an extensive examination of an intermediate stage along the visual pathway by Connor and colleagues, which provided significant support for object-based models.

Pasupathy and Connor (1999, 2001, 2002) measured the responses of neurons in area V4 to a large set of contoured shapes, each with a different number of convex projections (2-4), different orientations, and degrees of curvature (Figure 5B). They found that neurons in V4 responded most strongly to single contours or combinations of contours, arranged in particular configurations (e.g., acute convexity located in the lower right of the stimulus, acute convexity immediately adjacent to a shallow concavity, etc.; see example in Figure 5C). Notably, this tuning remained consistent when the particular configuration was placed within a variety of more complex shapes, thus illustrating how a single neuron might participate in the coding of many different complex objects.

Later, Brincat and Connor (2004, 2006) reported similar findings in posterior IT cortex (i.e., area TEO). They further found that some neurons within this region encoded shape information at multiple levels: an initial visual response that represented the simple contour fragments and a second, later response that differed according to how the various shape fragments were combined. This biphasic property is very similar to that found among neurons in anterior IT cortex as described by Sugase and colleagues (1999) and others (e.g., Tamura & Tanaka, 2001; see below). Brincat and Connor inferred that the second phase of the response represented the outcome of a recursive network process responsible for constructing selective responses of increased complexity.

These data suggest that neurons in V4 and posterior IT cortex might serve to identify and discriminate between the primitive “geons” described in Biederman’s RBC theory. At the very least, these data show how neuronal selectivity progresses from simple line segments (in V1) to simple curves (in V2), to complex curves or combinations of curves (in V4 and posterior IT cortex), and finally to complex shapes such as faces and hands (in anterior IT cortex).

Object-based models are considered to be “view-independent” because they rely on hard-coded representations of geons (or similar primitives) encompassing all possible orientations. As such, the orientation of the object being examined is irrelevant provided the various components can be recognized and distinguished from one another. An alternative to such models is the class of so-called “view-dependent” or “image-based” models of object recognition (Logothetis & Sheinberg, 1996, Tarr & Bülthoff, 1998). These models propose that objects are represented by multiple independent views of that object. Immediate criticisms of these image-based (view-dependent) models include the unlikelihood that we could store all possible viewpoints for all objects in our environment (i.e., “combinatorial explosion”) or that we are so limited in our recognition abilities that we can only recognize a given object if we have previously seen it in that precise configuration. Therefore, to overcome these criticisms, image-based models assume that the brain is somehow able to extrapolate a more complete representation of a given object from an incomplete collection of experienced viewpoints. Despite this assumption, such models do predict that subjects should be faster at recognizing objects when presented in familiar vs. unfamiliar viewpoints – which is indeed the case (e.g., Humphrey & Khan, 1992). Bülthoff and Edelman (1992) evaluated the ability of human subjects to correctly identify previously unfamiliar objects when presented in unfamiliar orientations. Subjects were first presented a series of 2D images of an unfamiliar object from a limited number of viewpoints. Later, subjects were presented with new images of the same object from different perspectives. The ability of subjects to correctly recognize the objects systematically decreased with greater deviations from the familiar viewpoints.

Image-based models also predict that neuronal responses among IT neurons should show evidence of view-dependence, which has again been demonstrated. Using a paradigm similar to that of Bülthoff and Edelman (1992), Logothetis and colleagues demonstrated that monkeys were better at recognizing the objects when they were shown from a previously seen viewpoint (as compared to a novel viewpoint) (Logothetis, Pauls, Bülthoff & Poggio, 1994). When they sampled activity from neurons in IT cortex while monkeys performed this task, they found that neurons in IT cortex responsive to the objects were viewpoint dependent, responding most strongly to one viewpoint and decreasing activity systematically as the object was rotated away from its preferred orientation (Logothetis, Pauls & Poggio, 1995). They proposed that a view-invariant representation of a given object might be generated by a small population of view-dependent neurons that can, through changes in their individual firing patterns, signal the presence of a given object as well as extrapolate its current orientation.
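The idea that a handful of view-dependent neurons could jointly signal both the presence of an object and its orientation can be illustrated with a toy model. Everything specific in the sketch below is an assumption made for illustration and is not taken from the studies cited: the Gaussian tuning shape, the 30-degree tuning width, the eight evenly spaced preferred views, and the population-vector readout.

```python
import math

# Hypothetical preferred views (degrees) of eight view-tuned neurons.
PREFERRED_VIEWS = [0.0, 45.0, 90.0, 135.0, 180.0, 225.0, 270.0, 315.0]


def view_tuned_response(view_deg, preferred_deg, width_deg=30.0):
    """Response that peaks at the preferred view and falls off with rotation.

    Assumed Gaussian tuning over the angular distance between views.
    """
    # Wrap the difference into [-180, 180) so that 350 and 10 are 20 apart.
    diff = (view_deg - preferred_deg + 180.0) % 360.0 - 180.0
    return math.exp(-0.5 * (diff / width_deg) ** 2)


def population_response(view_deg, preferred_views):
    """Responses of all neurons to the object shown at `view_deg`."""
    return [view_tuned_response(view_deg, p) for p in preferred_views]


def presence_signal(responses):
    """Summed activity: roughly constant across views in this toy model."""
    return sum(responses)


def decode_view(responses, preferred_views):
    """Population-vector estimate of the current view, in degrees [0, 360)."""
    x = sum(r * math.cos(math.radians(p))
            for r, p in zip(responses, preferred_views))
    y = sum(r * math.sin(math.radians(p))
            for r, p in zip(responses, preferred_views))
    return math.degrees(math.atan2(y, x)) % 360.0


if __name__ == "__main__":
    for view in (0.0, 100.0, 250.0):
        r = population_response(view, PREFERRED_VIEWS)
        print(view, round(presence_signal(r), 3),
              round(decode_view(r, PREFERRED_VIEWS), 1))
```

In this sketch, each unit alone is strongly view dependent, yet the summed activity changes very little with rotation while the pattern across units recovers the viewing angle. Whether IT cortex uses a readout of this kind is not established by the data reviewed here.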

Evidence for both object-based and image-based models of object recognition continues to accumulate, leading most to believe that the underlying mechanism incorporates aspects of both. Exemplar-level recognition (e.g., discriminating between a Toyota and a Ford) is addressed particularly well by image-based models, which are essentially based on storing multiple snapshots of specific exemplars. On the other hand, how these snapshots are grouped according to semantic relationships to perform categorical distinctions (e.g., cars from chairs) is unclear. For that matter, it is equally unclear how object-based models construct categorical groupings, highlighting one of the many challenges in understanding object recognition.

IT neurons are organized into columns according to feature selectivity

The studies cited above, and others like them, detailed the functional role of individual IT neurons in object recognition. Around the same time, evidence began to emerge that described how neurons in IT cortex might be spatially organized. Early experiments showed that neuronal activity within IT cortex is more strongly correlated between pairs of neighboring neurons (within 100 μm) and that the correlation weakens significantly when the two neurons are separated by more than 250 μm (Gochin, Miller, Gross & Gerstein, 1991). Similarly, neurons along the same recording track perpendicular to the cortical surface exhibit similar preferences for visual features (Fujita et al., 1992). These findings suggested that neurons might be clustered according to their stimulus preferences.

Critical evidence for a clustered arrangement of IT neurons came from a landmark study that used optical imaging to investigate the preferences of neurons in area TE. Optical imaging measures differences in light absorption on the cortical surface based on the ratio of deoxygenated to oxygenated blood. The extent to which deoxygenated compared to oxygenated blood absorbs light varies as a function of wavelength. If a particular region of cortex is more active than another, a change will occur in the ratio of deoxygenated to oxygenated blood, thereby causing a change in light absorption that can be measured using sensitive cameras (Grinvald, Lieke, Frostig, Gilbert & Wiesel, 1986). Wang and colleagues (1996) used this technology to show that neurons in area TE are arranged into patches, spanning approximately 500 μm, which are spatially distributed according to their selectivity for complex features. By combining this approach with electrophysiological recordings, Tsunoda and colleagues (2001) demonstrated that as real-world objects are systematically reduced into their component elements, the patches (and corresponding neurons) that would normally respond to the elements removed are effectively silenced.

Studies such as these have shown us how responses from small populations of neurons, each sensitive to a particular set of features that might belong to many different objects, can be combined to produce a complete representation of a given object. Returning to the analogy of language, it is as if individual neurons in IT cortex code for individual syllables that, when combined, spell out different words – the number of neurons (or populations of neurons) involved being a function of the complexity of the word/object. Further, these neurons are organized not according to their receptive fields, as in earlier visual areas, but according to the similarity of their preferred features.

Dynamic properties of IT neurons

In the preceding section, we outlined how electrophysiological studies combined with optical imaging revealed a potential mechanism through which objects might be encoded by small populations of IT neurons. The sophisticated stimulus selectivity of IT neurons follows a logical progression of increasing complexity originating with simple neurons in V1. However, the majority of the aforementioned studies were performed in anaesthetized animals, which ignores any potential impact of behavioral factors on the neural responses. Once investigators began to conduct studies in awake, behaving animals, it was immediately clear that IT neurons were far more flexible in their response properties than had been originally shown. In the following section, we describe a few of the many properties of IT neurons that illustrate how their responses reflect far more than the visual features presented to the retina.

Presenting a novel object to a subject naturally captures attention. New stimuli in the environment could represent a potential threat, or a new potential for reward. In either case, a new stimulus warrants further investigation. One way in which IT neurons denote familiarity is through repetition suppression, a phenomenon whereby response magnitudes decrease over repeated presentations of the same stimulus. Miller and colleagues (1991) trained monkeys to perform a delayed match-to-sample task in which a sample image was first presented followed by a series of test images, one after the other. The monkey was required to release a bar if the test image matched the original sample. The sample and the match could be separated by as many as 4 intervening non-match distractor stimuli, thus requiring the monkey to hold the identity of the sample image in working memory throughout the duration of the trial. Responses to the matching test image were attenuated compared with those to the initial sample. Thus, when the same stimulus was presented more than once and became increasingly familiar to the animal, the responses to that stimulus decreased (i.e., “repetition suppression”). This same effect was later shown to persist over the course of an entire recording session (>1 hour), after hundreds of intervening stimuli (Li, Miller & Desimone, 1993). In essence, repetition suppression enables IT neurons to act as filters for novel stimuli. Repetition suppression is found throughout the brain (e.g., ventral temporal cortex, Fahy et al., 1993, Riches et al., 1991; prefrontal cortex, Dobbins et al., 2004; Mayo and Sommer, 2008; parietal cortex, Lehky and Sereno, 2007) and is believed to play a critical role in behavioral priming (McMahon & Olson, 2007, Wiggs & Martin, 1998).

Another example of the flexible nature of IT neurons is how they can adjust their tuning profiles and selectivity according to the task at hand. For example, through associative learning, it is possible to dynamically “retune” IT neurons to respond to additional stimuli. Sakai and Miyashita (1991) trained monkeys to memorize pairs of stimuli by first presenting them with a cue stimulus followed shortly by two test stimuli. The monkeys were rewarded for selecting one of the latter two stimuli that made up an arbitrary pair with the cue stimulus. Initially, neurons in IT cortex responded only to one stimulus of the pair. However, over the course of the experiment, as the monkeys learned to associate one stimulus with another, a subset of neurons responded to both elements of the pair. The IT neurons had “learned” to signal the presence of either element of the pair. Similarly, Sigala and Logothetis (2002) found that IT neurons could shift their tuning according to the demands of the current behavioral task. They trained monkeys to discriminate images of fish and faces based on a limited set of diagnostic features (e.g., fin shape, nose length). They found that IT neurons became tuned to the specific features necessary to form these discriminations (Figure 6).

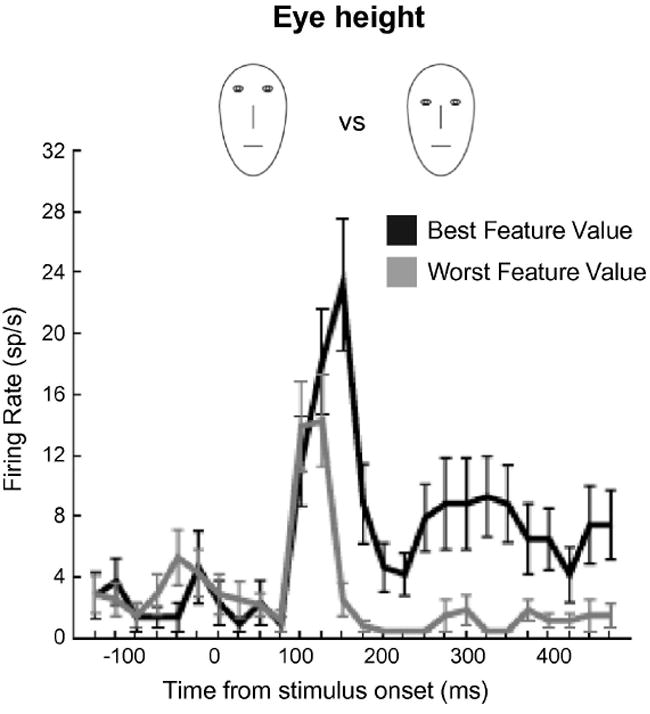

Figure 6. Selectivity of IT neurons adapts to current task demands.

Example of an individual IT neuron whose selectivity was shaped by current task demands. Monkeys were trained to discriminate between different face caricatures on the basis of four diagnostic features: eye height, eye separation, nose length, and mouth height. Black trace indicates the average response to the best feature value (i.e., the exemplar that elicited the strongest response). Gray trace indicates the average response for the worst feature value (i.e., the exemplar that elicited the weakest response). When the monkey was required to discriminate faces on the basis of eye height, for example, the selectivity of the neuron was shaped according to the appropriate feature (from Sigala and Logothetis, 2002).

These studies are a few of many showing how activity among IT neurons reflects more than the presence or absence of certain visual features in the external environment. Activity in IT cortex has been shown to be affected not only by stimulus novelty and experience, associative learning, and task demands but also by spatial attention (e.g., Buffalo, Bertini, Ungerleider & Desimone, 2005, Moran & Desimone, 1985) and stimulus value (e.g., Mogami & Tanaka, 2006). This evidence tells us that IT neurons are not hard-wired to respond to a particular set of features, but can adapt dynamically to the current behavioral objectives, making them ideally suited as the neural substrate for object recognition.

Generating invariant representations through experience

Above, we described how responses among IT neurons decrease as stimuli become more familiar to the subject (i.e., repetition suppression). It has been argued that experience might also be responsible for one of the hallmark characteristics of IT neurons, namely, position invariance. Position invariance refers to the ability of IT neurons to maintain their stimulus selectivity regardless of where the stimulus appears in the visual field. For example, if an IT neuron shows a preference for a particular stimulus (e.g., an image of a face), that preference will be maintained regardless of where the face appears in the visual field or how large the retinal image is. This would be true regardless of the absolute magnitude of the responses. That is to say, while the response to a preferred stimulus might be 50 spikes/sec when presented at one location and 30 spikes/sec when presented at another, the response to a non-preferred stimulus will always be lower at each location (e.g., 35 and 15 spikes/sec, respectively). Expanding on this point, if a given neuron prefers faces to houses, and houses to fruit, as long as this ordering of stimulus preference is maintained, the absolute magnitude of the individual responses (which might change in response to changes in size, position, etc.) to the different stimuli is irrelevant (Li, Cox, Zoccolan & DiCarlo, 2009). This property, possibly unique to IT cortex, is extremely advantageous to object recognition because it allows neurons to signal the presence of a particular object regardless of the particular viewing conditions.
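The rank-order criterion described above can be made concrete with a short sketch. It is only an illustration of the idea that selectivity is judged by the ordering of responses at each position rather than by their absolute magnitude; the function name `preserves_rank_order`, the position labels, and the second (counter-example) neuron are hypothetical, and ties between stimuli are assumed not to occur.

```python
def preserves_rank_order(responses_by_position):
    """Return True if stimuli are ranked identically at every position.

    responses_by_position maps a position label to a dict of
    stimulus name -> firing rate (spikes/sec). Ties are assumed absent.
    """
    rankings = [
        sorted(rates, key=rates.get, reverse=True)  # strongest response first
        for rates in responses_by_position.values()
    ]
    return all(ranking == rankings[0] for ranking in rankings)


# Firing rates taken from the example in the text.
neuron = {
    "position_1": {"preferred": 50, "non_preferred": 35},
    "position_2": {"preferred": 30, "non_preferred": 15},
}

# Hypothetical counter-example: the preference reverses at position_2.
reversed_neuron = {
    "position_1": {"preferred": 50, "non_preferred": 35},
    "position_2": {"preferred": 10, "non_preferred": 15},
}

print(preserves_rank_order(neuron))
print(preserves_rank_order(reversed_neuron))
```

Note that in the first neuron the non-preferred response at one position (35 spikes/sec) exceeds the preferred response at the other (30 spikes/sec), so a single firing rate read without knowledge of position would be ambiguous; only the within-position ordering carries the preference.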

Li and DiCarlo (2008) proposed that position invariant representations could be constructed based on the assumption that temporally contiguous shifts of stimuli correspond to the same object. In other words, if a given set of features is, at one moment, 10 degrees to your left and you generate a saccade 10 degrees to the right, then the set of features that are now located 20 degrees to your left likely belong to the same object. They argued that over many exposures, IT neurons could learn to associate a given set of features in one retinal location with the same set of features in another. As such, the same IT neuron would eventually respond to the same set of features, regardless of where they appear. Li and DiCarlo tested this theory by determining whether it would be possible to “fool” a neuron into responding to two different stimuli as if they were the same stimulus presented to two different locations (Figure 7A). While monkeys fixated centrally, a preferred stimulus (i.e., a stimulus that produced a robust response in the neuron currently being recorded) was presented in the periphery. When the monkeys generated a saccade to that stimulus, its identity immediately changed to a different, non-preferred, stimulus. Initially, the two stimuli evoked different response magnitudes, which would effectively allow neurons further downstream to discriminate between them. However, over repeated exposures, the difference in response magnitude between the preferred and the non-preferred stimuli (i.e., the measure of object selectivity) decreased systematically (Figure 7B). One interpretation of this result is that, based on the new similarity between the magnitudes of the neuronal responses, the two stimuli were “seen” as the same object by neurons further downstream: the ability of such downstream neurons to discriminate between the two stimuli had been abolished. 
In the case of this particular experiment, the effect was limited to one particular location but, in a natural setting over many such exposures, this mechanism could result in a single neuron responding to the same stimulus, regardless of its position in the visual field. Presumably, a similar mechanism could explain not only position invariance but also size invariance. For example, as we approach or move away from a given object, the retinal image of that object will increase or decrease in size, thus recruiting different populations of neurons in earlier areas of visual cortex. However, the visual features will remain the same and, over time, neurons in IT cortex could learn to respond to the different retinal images as the same object. To our knowledge, this adaptability is unique to neurons within IT cortex, further emphasizing its particular relevance for object recognition.

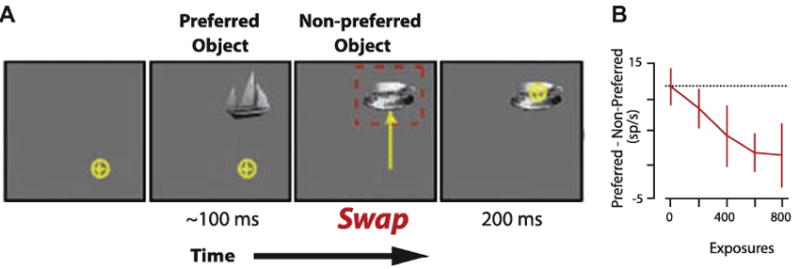

Figure 7. Experience-driven production of position invariant representations in IT cortex.

Example of how altered experience reduces stimulus selectivity of IT neurons to stimuli presented to different retinal positions. A. While their fixation was stable, monkeys were presented with a preferred stimulus (i.e., a stimulus that evoked a strong response in the neuron currently being recorded). When the monkey initiated a saccade to that stimulus, its identity changed to that of a non-preferred stimulus (i.e., one that evoked a weak response). B. Difference between the responses to preferred vs. non-preferred stimuli for 10 multiunit recording sites as a function of the number of swap exposures. Initially, the two stimuli evoked significantly different responses (i.e., strong object selectivity). However, as the number of swap exposures increased, the difference between the two stimuli decreased to the point where the neurons responded with approximately the same magnitude of response to the two different stimuli (i.e., weak object selectivity). In effect, the neurons responded as if the same stimulus was present in the two different locations. The effect was specific to the swap exposure location. This mechanism shows how experience can lead to position invariant responses within individual IT neurons (from Li and DiCarlo, 2008).
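The qualitative trend in Figure 7B, object selectivity shrinking as swap exposures accumulate, can be mimicked with a toy simulation. This is a minimal sketch under loudly stated assumptions and is not the analysis or model used by Li and DiCarlo (2008): the update rule (each exposure moves the two responses toward each other by a fixed fraction of their difference), the `learning_rate` parameter, and the starting firing rates are all hypothetical.

```python
def simulate_swap_exposure(pref_rate, nonpref_rate, learning_rate, n_exposures):
    """Toy model: track object selectivity across swap exposures.

    Selectivity is defined, as in the text, as the difference between the
    responses to the preferred and non-preferred stimuli at the swap
    location. The update rule below is an assumption made for illustration.
    """
    selectivity = [pref_rate - nonpref_rate]
    for _ in range(n_exposures):
        # Each exposure pulls the two responses toward each other.
        delta = learning_rate * (pref_rate - nonpref_rate)
        pref_rate -= delta
        nonpref_rate += delta
        selectivity.append(pref_rate - nonpref_rate)
    return selectivity


# Hypothetical starting rates (spikes/sec) and learning rate.
curve = simulate_swap_exposure(
    pref_rate=50.0, nonpref_rate=20.0, learning_rate=0.1, n_exposures=10
)
for exposure, value in enumerate(curve):
    print(exposure, round(value, 2))
```

With a learning rate between 0 and 0.5, the selectivity in this sketch declines monotonically toward zero without reversing sign, which is the pattern the figure describes; it makes no claim about the actual time course or mechanism in IT cortex.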

Summary

Therefore, in contrast to the sparse-encoding scheme originally suggested by gnostic neurons (i.e., “Grandmother Cells”), it is now hypothesized that objects are represented by a population of neurons, each sensitive to different visual features of the stimulus, moving towards a more distributed encoding perspective of object representations in IT cortex. In essence, researchers had discovered the visual alphabet, which appears to be organized into syllables – syllables that do not necessarily convey meaning, but allow for great flexibility in the number of objects they can encode. Additional evidence of the flexibility of IT neurons is their ability to maintain their high degree of stimulus selectivity over different image sizes and positions (i.e., size/position invariance), to signal familiarity vs. novelty, and to adapt to current task demands. Interestingly, these characteristics are all remarkably reminiscent of the predictions regarding the neural correlates of object recognition made on the basis of the early neuropsychological and behavioral studies (see above). However, this model is not as easily reconciled with the neuropsychological observations in human patients with agnosias: How can we have a highly specific deficit for a particular category of stimuli, which might include all manner of visual features, if the neurons encoding those features are distributed throughout IT cortex? The answer to this question arose as a result of approaching the problem from a completely different perspective using an innovative new technology.

FUNCTIONAL NEUROIMAGING INVESTIGATIONS OF VISUAL PROCESSING

The use of functional neuroimaging has revolutionized the study of cognitive neuroscience. Prior to the availability of neuroimaging techniques, the only method for researchers to examine the correlation between brain structures and behavior in humans was to study brain-damaged individuals. Functional neuroimaging techniques, and functional magnetic resonance imaging (fMRI) in particular, provided researchers with the opportunity to examine brain function in healthy subjects as well as in patient populations using a non-invasive approach. Interestingly, fMRI does not measure neuronal activity per se, but instead the hemodynamic responses that occur as a result of changes in neuronal activity. Specifically, fMRI provides an indirect measure of neuronal activity by taking advantage of differences in the magnetic susceptibility between oxygenated and deoxygenated hemoglobin. As brain regions become more active, their metabolic demands increase and local oxygen delivery is enhanced. Oxygenated hemoglobin is diamagnetic and yields a stronger MR signal than deoxygenated hemoglobin, which is paramagnetic and yields a weaker signal. Using an MRI scanner, one can therefore obtain a measure of the relative level of blood oxygenation within a given brain region (i.e., the “blood oxygen level dependent” or “BOLD” signal). Although it is generally accepted that the BOLD signal provides some indication of the neuronal activity in the underlying brain tissue, the precise physiological basis of the BOLD signal is still poorly understood.

A first glimpse at the intact human brain

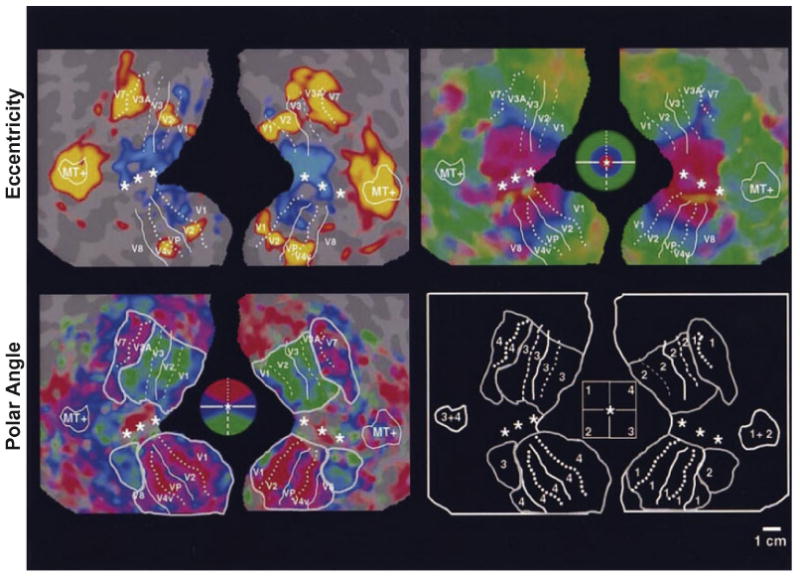

By the time functional neuroimaging methods became available, much was known about the hierarchical organization and properties of the different visual regions in the monkey brain. We knew, for example, that a series of interconnected visual areas, many with independent retinotopic maps, were organized in a pathway stretching from V1 to area TE in the anterior temporal lobe (i.e., the ventral visual pathway; see above). Many of the early neuroimaging studies attempted to determine if humans showed a similar organization. Haxby and colleagues (1994) used positron emission tomography (PET) to demonstrate that humans, like monkeys, show two visual pathways: a dorsal pathway, stretching from V1 through extrastriate to parietal cortex, which is primarily concerned with visuospatial processing, and a ventral pathway, stretching from V1 through extrastriate to temporal cortex, which is primarily concerned with object processing. Functionally distinct regions in human cortex along both the dorsal and ventral pathways were delineated on the basis of retinotopic fMRI maps (e.g., Figure 8) (DeYoe, Bandettini, Neitz, Miller & Winans, 1994, Engel, Rumelhart, Wandell, Lee, Glover, Chichilnisky & Shadlen, 1994, Huk, Dougherty & Heeger, 2002, Sereno, Dale, Reppas, Kwong, Belliveau, Brady, Rosen & Tootell, 1995, Tootell, Hadjikhani, Hall, Marrett, Vanduffel, Vaughan & Dale, 1998, Tootell, Hadjikhani, Vanduffel, Liu, Mendola, Sereno & Dale, 1998, Tootell, Mendola, Hadjikhani, Ledden, Liu, Reppas, Sereno & Dale, 1997, Tootell, Mendola, Hadjikhani, Liu & Dale, 1998, Wade, Brewer, Rieger & Wandell, 2002). In monkeys, we know these maps become increasingly less well defined as one moves anteriorly along the inferior temporal cortex (see above). Grill-Spector and colleagues (1998) used fMRI to show a similar trend in humans.

Figure 8. Retinotopic organization of visual cortex revealed by fMRI.

Inflated and flattened views of the human brain showing eccentricity and polar angle maps, stretching from V1 to V4. Maps were produced using expanding and rotating checkerboard patterns, respectively (from Sereno et al., 1995).

In addition to its use as an anatomical tool, fMRI has also allowed researchers to identify regions involved in object recognition on the basis of their functional properties. According to the monkey literature, regions involved in object recognition not only show weak retinotopic organization and become increasingly more selective for complex objects, but also are increasingly less responsive to scrambled images (see above; Bruce et al., 1981, Vogels, 1999). Furthermore, they often show position and size invariance (i.e., the representations of individual objects remain constant regardless of their retinal position or size; see above; Ito et al., 1995). When the sensitivity of the ventral visual pathway in humans to scrambled images was assessed, the first stage at which regions no longer responded strongly to scrambled images was located near the occipitotemporal junction (Grill-Spector et al., 1998, Kanwisher, Chun, McDermott & Ledden, 1996, Lerner, Hendler, Ben-Bashat, Harel & Malach, 2001, Malach, Reppas, Benson, Kwong, Jiang, Kennedy, Ledden, Brady, Rosen & Tootell, 1995). The underlying collection of areas, termed the lateral occipital complex (LOC; see Grill-Spector, Kourtzi & Kanwisher, 2001), is believed to be where regions associated with processing simple visual features (e.g., lines, simple shapes) transition to those responsible for processing more complex visual stimuli (e.g., complex shapes, faces, scenes, etc.). Shortly thereafter, it was shown that LOC is less sensitive to changes in stimulus size and position as compared to changes in viewpoint or illumination (Grill-Spector, Kushnir, Edelman, Avidan, Itzchak & Malach, 1999). This result is consistent with the properties of neurons in IT cortex, but not of those in earlier visual areas of monkeys (such as V1-V4), supporting the idea that LOC may be a functional homolog of IT cortex in monkeys (Grill-Spector et al., 1998, Malach et al., 1995).

Functional neuroimaging studies reveal neural substrates of category-selective agnosias

Although LOC exhibited many of the features one associates with a higher-order object recognition area, including weak retinotopy, sensitivity to image scrambling, and size/position invariance, it did not appear to possess the degree of selectivity necessary to identify specific objects. Namely, LOC responds strongly to any object but does not show selective responses to specific objects or features. However, anterior to LOC, a number of other brain areas were identified that were found to be very selective for specific categories of objects (Figure 9). For example, Sergent and colleagues (1992) identified regions within the right fusiform gyrus and anterior superior temporal sulcus selectively recruited during a face discrimination task. Puce and colleagues (Puce, Allison, Asgari, Gore & McCarthy, 1996, Puce, Allison, Gore & McCarthy, 1995) and Kanwisher and colleagues (1997) later replicated these findings using fMRI. The latter group called the fusiform activation the “fusiform face area” (Figure 9) (FFA; Kanwisher et al., 1997). Other face-selective regions were also identified: one in the ventral occipital cortex (the “occipital face area”, OFA) (Ishai, Ungerleider, Martin, Schouten & Haxby, 1999), another in the superior temporal sulcus (Haxby, Ungerleider, Clark, Schouten, Hoffman & Martin, 1999), and yet another in the anterior portion of the temporal lobe (Kriegeskorte, Formisano, Sorger & Goebel, 2007, Rajimehr, Young & Tootell, 2009, Tsao, Moeller & Freiwald, 2008). The significance of these regions for the processing of face stimuli is best illustrated by the observation that damage that includes the occipitotemporal cortex and/or the fusiform gyrus produces acquired prosopagnosia (e.g., Landis, Regard, Bliestle & Kleihues, 1988, Rossion, Caldara, Seghier, Schuller, Lazeyras & Mayer, 2003).

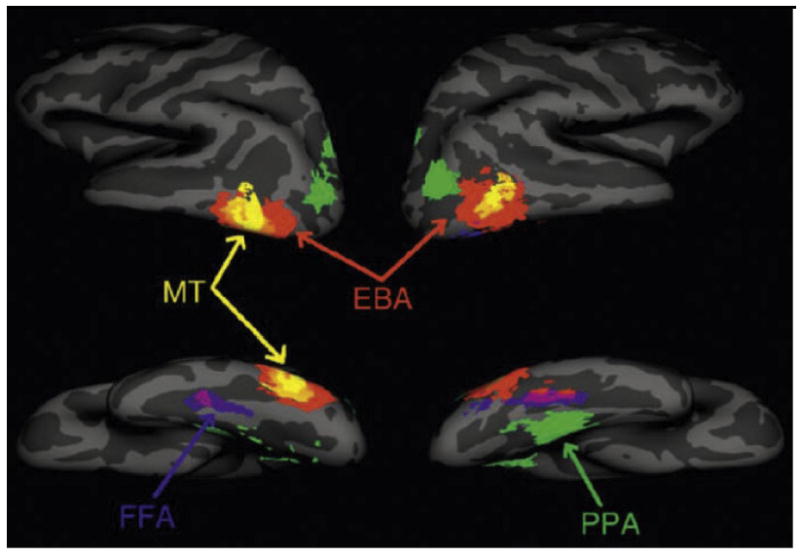

Figure 9. Category-selective regions in the human brain revealed by fMRI.

Inflated views of the human brain showing regions selective for images of faces in the fusiform gyrus (“fusiform face area, FFA”), images of places in the adjacent parahippocampal gyrus (“parahippocampal place area, PPA”), and images of human body-parts near the lateral occipitotemporal cortex (“extrastriate body-part area, EBA”). MT: motion-selective region. (from Spiridon et al., 2006).

In addition to face-selective regions, regions selective for other visual categories were identified. An area selectively activated by locations, places, and landmarks was identified in the parahippocampal gyrus, and termed the “parahippocampal place area” (PPA) (Figure 9) (Epstein & Kanwisher, 1998). Activation of the PPA requires the presentation of surfaces that convey information about the local environment; random displays of objects (e.g., furniture) produce minimal responses, but if those same objects are arranged in such a way as to give the illusion of a room, then this area becomes significantly more responsive. As with face-selective regions, damage to the parahippocampal gyrus produces difficulties in navigating familiar locations (“topographical disorientation”; Epstein, DeYoe, Press & Kanwisher, 2001).

Along the lateral extrastriate cortex, an area selectively activated by images of human body-parts was found, the so-called extrastriate body-part area (EBA) (Figure 9) (Downing, Jiang, Shuman & Kanwisher, 2001). This region was later shown to also respond to limb and goal-directed movements (Astafiev, Stanley, Shulman & Corbetta, 2004). There was even the discovery of a region selective for letters and nonsense letterstrings (Puce et al., 1996). This so-called “visual word form area” (VWFA) was localized to a region within/adjacent to the fusiform gyrus and was further shown to respond preferentially to real words compared to nonsense letterstrings (Cohen, Lehericy, Chochon, Lemer, Rivaud & Dehaene, 2002). The VWFA is found almost exclusively in the left hemisphere - the same hemisphere as other language-related areas, such as Broca’s and Wernicke’s areas (Binder, Frost, Hammeke, Cox, Rao & Prieto, 1997). Importantly, because we are not born with knowledge about written words, the existence of VWFA indicates that at least some category-specific cortex must arise as a result of experience.

Recently, neuroimaging experiments in monkeys have revealed similar category-selective regions throughout IT cortex (Figure 10). Tsao and colleagues (2003) and later others (Bell, Hadj-Bouziane, Frihauf, Tootell & Ungerleider, 2009, Pinsk, Arcaro, Weiner, Kalkus, Inati, Gross & Kastner, 2009, Pinsk, DeSimone, Moore, Gross & Kastner, 2005) identified several regions along the superior temporal sulcus (STS) selectively responsive to faces (of both monkeys and humans). Recordings from one of these face-selective regions have demonstrated high proportions of face-selective neurons (Tsao, Freiwald, Tootell & Livingstone, 2006). Located adjacent to these face-selective regions are regions selective for monkey body-parts, and regions selective for places have been found within the STS and along ventral IT cortex (Bell et al., 2009, Pinsk et al., 2005). Establishing precise homologies between the two species will require adherence to both anatomical and functional criteria (Rajimehr et al., 2009, Tsao et al., 2008) and remains a matter of debate. Nonetheless, the existence of fMRI-identified category-selective regions in the monkey brain provides a clear bridge between experiments involving fMRI and electrophysiology, greatly facilitating cross-species comparisons.

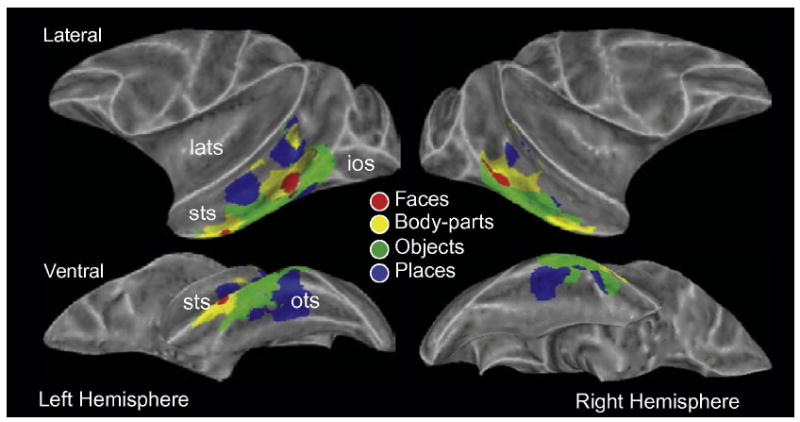

Figure 10. Category-selective regions in the monkey brain revealed by fMRI.

Inflated views of the monkey brain showing regions selective for images of faces, body-parts, objects, and places. ios: inferior occipital sulcus; lats: lateral sulcus; ots: occipitotemporal sulcus; sts: superior temporal sulcus (from Bell et al., 2009).

In humans, these category-selective regions were shown to be sensitive to the conscious percept of a stimulus. Tong and colleagues (1998) presented subjects with an image of a face to one eye and an image of a house to the other, thereby creating a bi-stable percept that shifted back and forth between faces and houses every few seconds. They found that FFA activation increased when subjects reported perceiving a face whereas PPA activation increased when subjects reported perceiving a house, despite both images being present at all times. Moreover, these regions respond to the percept of the stimulus, even in its absence. Summerfield and colleagues (2006) asked subjects to discriminate between noisy images of faces and houses. When subjects incorrectly reported seeing a face when actually presented with a house, activation in FFA increased, indicating that the activation was related more to the percept than to the physical stimulus itself.

How (and when during development) these category-selective regions originate is still unclear, although there is mounting evidence to suggest that they are not innate but arise through learning and experience. For example, a number of studies have demonstrated training and experience-related changes in activation of temporal cortex. Op de Beeck and colleagues (2006) scanned subjects before and after discrimination training on a set of novel objects and found that activation in areas responsive to the objects increased after training. This increase in signal strength due to training was not uniform across temporal cortex (i.e., was not, for example, a non-specific attentional effect) but rather was greater in some visual cortical areas (e.g., right fusiform gyrus) compared to others. Wong and colleagues (2009) offered further insights into the spatial distribution of training-related increases in activation. They found that training subjects to discriminate a set of novel objects at an exemplar (subordinate) level produced greater and more focal activation in right fusiform gyrus than in other areas of visual cortex (see also van der Linden, Murre & van Turennout, 2008). By contrast, training subjects to discriminate objects at a categorical level produced more diffuse increases in activation. Changes in activation may reflect an underlying increase in neuronal selectivity among IT neurons that is observed following discrimination learning (Baker, Behrmann & Olson, 2002). In some cases, experience-related changes in activation and selectivity may take place over several years. For example, although humans are relatively adept at face discrimination at a very early age (Fagan, 1972, Pascalis, de Schonen, Morton, Deruelle & Fabre-Grenet, 1995), face-selective regions (i.e., FFA, OFA) do not appear until early to late adolescence (Golarai, Ghahremani, Whitfield-Gabrieli, Reiss, Eberhardt, Gabrieli & Grill-Spector, 2007, Scherf, Behrmann, Humphreys & Luna, 2007).

To summarize, the existence of brain regions selectively activated by images from specific categories offered, for the first time, a clear correlate to some of the category-selective agnosias first characterized over half a century earlier (e.g., prosopagnosia). Damage to these regions produces perceptual deficits specific for these categories. What is perhaps most interesting about these regions is how they respond to all conceptually related stimuli, regardless of their individual visual features. Images of body-parts, for example, might include many different shapes and textures but activate the same small, circumscribed region. This coding mechanism, based on conceptual similarity, is in direct contrast with the combination coding mechanism, based on visual similarity, currently proposed in monkeys. The former mechanism appears well suited for making rapid category-level identifications but may not have the selectivity necessary to dissociate individual exemplars from within individual categories. On the other hand, combination coding is well suited to dissociate individual exemplars based on subtle differences in visual appearance, but has no obvious way to organize or link representations based on perceptual or semantic relationships. Reconciling these two theories will likely reveal much about how the brain recognizes both categorical distinctions and individual exemplars from within categories.

Advancements in neuroimaging methods and analyses: Category-selective regions form interconnected networks

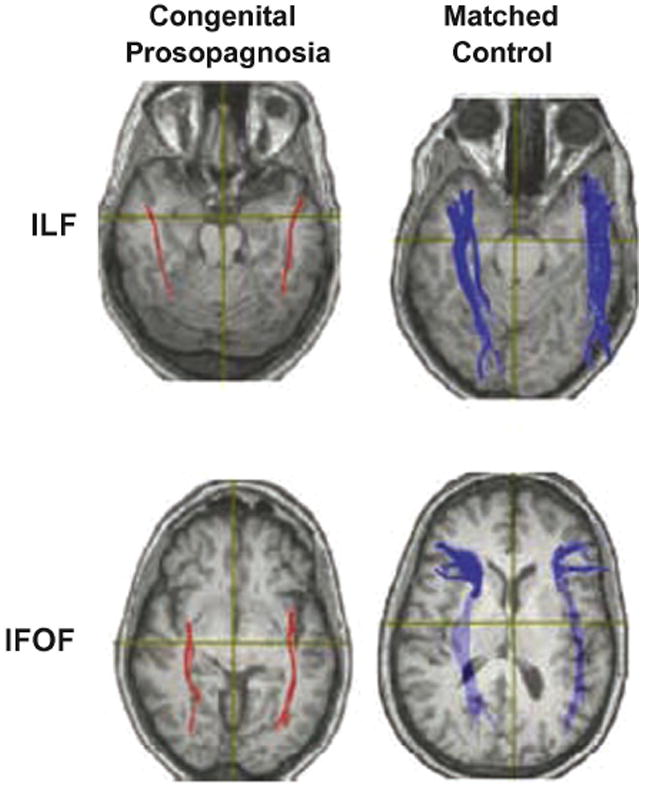

Most categories tested have been shown to activate multiple category-selective regions within the human and monkey brains: Images of faces activate four in the human occipital and temporal cortex (FFA, OFA, STS, anterior temporal cortex) and between two and six in the monkey temporal cortex (Bell et al., 2009, Moeller, Freiwald & Tsao, 2008, Pinsk et al., 2005). Places and scenes evoke activation in the human PPA as well as in a region of the retrosplenial cortex (Epstein, Parker & Feiler, 2008, Park & Chun, 2009, Walther, Caddigan, Fei-Fei & Beck, 2009). Similarly, several place-selective regions in the monkey temporal cortex have been described (Bell et al., 2009). The existence of multiple regions for each category strongly suggests the existence of cortical networks specialized for the processing of different stimulus categories. Evaluating the architecture and input-output anatomical relationships among regions in distributed networks was once only possible through terminal tracer experiments in animals. However, new analytic techniques in MRI offer the possibility of characterizing cortical networks using non-invasive approaches (see Bandettini, 2009 for review). Diffusion tensor imaging (DTI), for example, measures the diffusion of water along axonal fibers, providing both the location and relative size of white matter tracts between structures and thus allowing investigators to quantify the degree of connectivity between functionally similar regions (Basser, 1995, Basser, Mattiello & LeBihan, 1994). DTI has been used to investigate how changes in the connectivity among face-selective regions in the ventral temporal cortex of humans affect face processing. For example, patients suffering from congenital prosopagnosia show reduced volume in the inferior longitudinal fasciculus and inferior fronto-occipital fasciculus, two white matter pathways that pass through the fusiform gyrus (Figure 11) (Thomas, Avidan, Humphreys, Jung, Gao & Behrmann, 2009).
Incidentally, the latter fasciculus has been shown to degrade with age, which correlates with a reduction in face discrimination abilities among older subjects (Thomas, Moya, Avidan, Humphreys, Jung, Peterson & Behrmann, 2008).

Figure 11. Reduced connectivity in congenital prosopagnosia revealed with DTI.

Diffusion tensor images of a patient with congenital prosopagnosia (left column) and an age- and gender-matched control (right column), plotted on axial slices. Patients with congenital prosopagnosia show reduced white matter volume in the inferior longitudinal and inferior fronto-occipital fasciculi (ILF and IFOF), two white matter tracts that pass through the fusiform gyrus (from Thomas et al., 2009).

Another analytic technique, called “functional connectivity”, estimates connections between brain regions based on the relationship between activity patterns in different seed regions. These relationships can be assessed at rest (e.g., resting state connectivity; Greicius, Krasnow, Reiss & Menon, 2003, Raichle, MacLeod, Snyder, Powers, Gusnard & Shulman, 2001) or during the performance of a task (e.g., dynamic causal modeling; DCM; Friston, Harrison & Penny, 2003). Resting state connectivity has been used extensively in the study of neuropsychiatric disorders (Greicius, 2008). For example, patients suffering from Alzheimer’s disease show altered connectivity among the hippocampus, cingulate, and prefrontal cortices (Wang, Zang, He, Liang, Zhang, Tian, Wu, Jiang & Li, 2006). Assessing functional connectivity during task performance can provide insight into how connectivity is altered during different behavioral states. Using DCM, Nummenmaa and colleagues (2009) examined how face-selective regions in the ventral temporal cortex interact with attention and oculomotor structures in the frontal cortex to mediate shifts in attention induced by changes in gaze direction.
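To make the idea of relating activity patterns across regions concrete, the following is a minimal sketch of one simple form of functional connectivity: the Pearson correlation between a seed region's time series and the time series of other regions. The data are synthetic, and the function name `seed_connectivity`, the noise levels, and the number of time points are illustrative assumptions, not values taken from any of the studies cited above.

```python
import numpy as np

def seed_connectivity(seed_ts, region_ts):
    """Pearson correlation between a seed time series and each region's time series.

    seed_ts:   array of shape (n_timepoints,)
    region_ts: array of shape (n_regions, n_timepoints)
    Returns an array of shape (n_regions,) with one correlation per region.
    """
    seed = seed_ts - seed_ts.mean()
    regions = region_ts - region_ts.mean(axis=1, keepdims=True)
    numerator = regions @ seed
    denominator = np.linalg.norm(regions, axis=1) * np.linalg.norm(seed)
    return numerator / denominator

# Synthetic example (all values hypothetical): two regions share a slow
# fluctuation with the seed to different degrees.
rng = np.random.default_rng(0)
n_timepoints = 200
shared = rng.standard_normal(n_timepoints)            # common signal
seed_ts = shared + 0.5 * rng.standard_normal(n_timepoints)
coupled = shared + 0.5 * rng.standard_normal(n_timepoints)
independent = rng.standard_normal(n_timepoints)       # no shared signal

r = seed_connectivity(seed_ts, np.vstack([coupled, independent]))
print("seed vs coupled region:    ", round(float(r[0]), 2))
print("seed vs independent region:", round(float(r[1]), 2))
```

A correlation of this kind says nothing about the direction of influence between regions. Model-based approaches such as DCM address that question with explicit generative models and are not sketched here.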

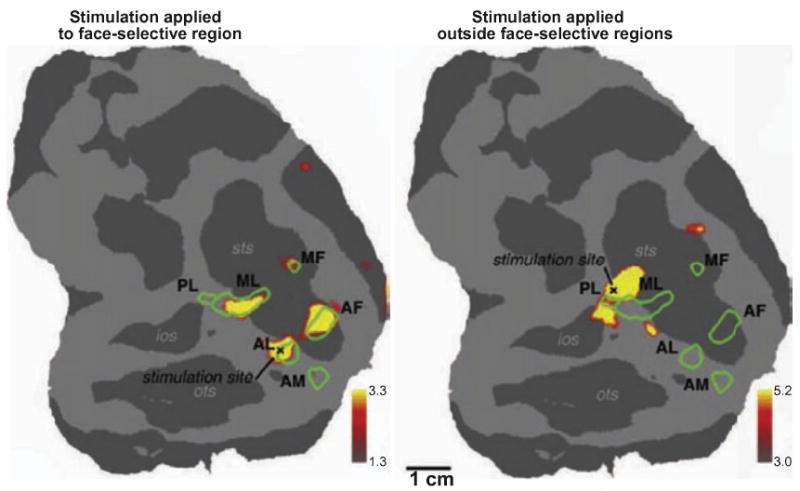

In monkeys, it is possible to use electrical microstimulation in combination with fMRI to visualize the connections between areas (Field, Johnston, Gati, Menon & Everling, 2008, Tolias, Sultan, Augath, Oeltermann, Tehovnik, Schiller & Logothetis, 2005). Moeller and colleagues (2008) used this technique to map the connections among six discrete face-selective regions in IT cortex, which were found to be strongly interconnected with one another but not with adjacent regions (Figure 12), suggesting that monkeys possess specialized networks similar to those found in humans.

Figure 12. fMRI-identified face-selective regions in monkey brain form interconnected network.

Interconnections between face-selective regions in the monkey temporal cortex revealed using electrical microstimulation and fMRI. Microstimulation applied to a face-selective region in area TE evoked activation in several nearby face-selective regions, indicating functional connections among them (left panel). Microstimulation applied outside all face-selective regions failed to evoke activation within any of the face-selective regions (right panel). Similar experiments were conducted to show that all face-selective regions within the temporal cortex of the monkey are interconnected. AF: anterior fundus; AL: anterior lateral; AM: anterior medial; MF: middle fundus; ML: middle lateral; PL: posterior lateral (from Moeller et al., 2008).

Finally, in addition to new experimental methodologies, the last several years have seen a number of advancements in how imaging data are analyzed. Traditionally, fMRI data are analyzed by first using a localizer contrast (e.g., faces vs. places) to locate a region or regions of interest (ROIs; e.g., face-selective regions), and then restricting subsequent analyses to these isolated voxels. Alternatively, ROIs might be defined anatomically (e.g., hippocampus). Examining ROIs as isolated entities is highly advantageous because it restricts the analysis to the regions most likely to show an effect. However, it also ignores any information that might be contained within the pattern of activation among voxels outside the ROIs. Recently, investigators have begun to examine these patterns of activation (e.g., Haxby et al., 2001; Kriegeskorte et al., 2006; Sapountzis et al., 2010; Oosterhof et al., 2010; Norman et al., 2006; Naselaris et al., 2010; Kamitani and Tong, 2005; see Bandettini, 2009 for review). For example, Kay and colleagues (2008) used patterns of activation within early visual areas to identify which of 120 novel natural images the subject was viewing. Subjects were first shown a set of 1750 natural images while fMRI data were collected from V1-V3. These data were used to create a receptive field model for each voxel. The model was then used to predict the pattern of activation that would result when the subjects were later shown the 120 novel images. Each measured pattern was then matched to the most similar predicted pattern to identify the image being viewed. Accuracy exceeded 80%, well above chance performance (1 in 120, or less than 1%). Using fMRI data in this way is often referred to as “brain decoding” and has been used in a number of recent fMRI studies looking at a variety of cognitive functions (e.g., Brouwer & Heeger, 2009, Esterman, Chiu, Tamber-Rosenau & Yantis, 2009, MacEvoy & Epstein, 2009, Reddy, Tsuchiya & Serre, 2010, Rodriguez, 2010).
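The logic of this kind of image identification can be illustrated with a small simulation. The sketch below is not the model used by Kay and colleagues: it substitutes a plain linear model fitted by least squares for their receptive field model, and the image features, voxel counts, training set size, and noise level are all hypothetical and synthetic. It only shows the three steps described above: fit a model per voxel on training images, predict the activation pattern for each novel image, and identify the viewed image as the one whose predicted pattern best matches the measured pattern.

```python
import numpy as np

rng = np.random.default_rng(0)
n_train, n_test, n_features, n_voxels = 300, 120, 20, 50  # hypothetical sizes

# Synthetic "ground truth": each voxel responds to a weighted sum of image features.
true_weights = rng.standard_normal((n_features, n_voxels))
train_features = rng.standard_normal((n_train, n_features))
test_features = rng.standard_normal((n_test, n_features))
noise_sd = 1.0
train_responses = train_features @ true_weights + noise_sd * rng.standard_normal((n_train, n_voxels))
test_responses = test_features @ true_weights + noise_sd * rng.standard_normal((n_test, n_voxels))

# Step 1: fit one linear model per voxel (a stand-in for a receptive field model).
fitted_weights, *_ = np.linalg.lstsq(train_features, train_responses, rcond=None)

# Step 2: predict the voxel activation pattern for every novel image.
predicted = test_features @ fitted_weights

# Step 3: correlate each measured pattern with every predicted pattern
# and pick the best-matching image.
def normalize_rows(a):
    a = a - a.mean(axis=1, keepdims=True)
    return a / np.linalg.norm(a, axis=1, keepdims=True)

correlations = normalize_rows(test_responses) @ normalize_rows(predicted).T
identified = correlations.argmax(axis=1)

accuracy = float((identified == np.arange(n_test)).mean())
chance = 1.0 / n_test
print(f"identification accuracy: {accuracy:.2f} (chance: {chance:.3f})")
```

Because the simulated voxels are generated by the same linear form that the model assumes, identification here is much easier than with real fMRI data. The sketch illustrates the procedure, not the difficulty of the problem.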

Pattern analysis and brain decoding may challenge some preconceived ideas about how representations are organized in the brain. For instance, it is easy to assume that only those regions identified using ROI-based analyses process stimuli from a specific category, and yet pattern analysis might subsequently reveal valuable information about that category outside of these ROIs. However, this raises the problem of how to interpret the results of a pattern analysis. That is to say, just because an analysis can extract accurate information from a pattern of activation, we cannot assume that this is how the brain actually accomplishes the task. This is a difficult question to address because the answer may change from one study to another, or from one brain region to another, and it calls for caution when applying pattern analysis to fMRI data. Nonetheless, the positive results obtained thus far from pattern analysis emphasize the importance of considering activations outside isolated regions of interest when examining fMRI data.

Summary

Neuroimaging has allowed researchers to learn much about the neural substrates of perceptual representations in the human brain. The demonstration of category-selective regions in ventral occipital and temporal cortex offers clear correlates to the category-selective agnosias observed in humans. Activation within these category-selective regions appears to be associated with the conscious perception of stimuli from a given category. Finally, these regions form interconnected networks specialized for processing stimuli from a given category. These data suggest that as one moves anteriorly along the occipito-temporal pathway, the neuronal representation of a given stimulus is transformed from a strict reflection of its visual appearance (e.g., a sphere, a rectangle) to one that incorporates its categorical identity (e.g., a face, a car). As new analytic techniques evolve, the potential for greater insight into the mechanisms underlying categorical and exemplar distinctions will increase.

A SPECIAL CASE OF VISUAL PERCEPTION – FACE PROCESSING

Given their unique importance to social communication, faces undoubtedly represent a special category of stimuli with respect to visual processing. We rely on our ability to distinguish individuals and read their facial expressions in order to understand our relationships with others and to interpret their intentions. Face perception is multifaceted, involving face detection, identity discrimination, and the analysis of the changeable aspects of the face relevant for social context (e.g., facial expressions). In the following sections, we will describe how studies conducted over the last two decades have greatly expanded our knowledge of how the brain codes and interprets face stimuli.

Face-selective neurons in monkey IT cortex sensitive to socially relevant aspects of facial stimuli

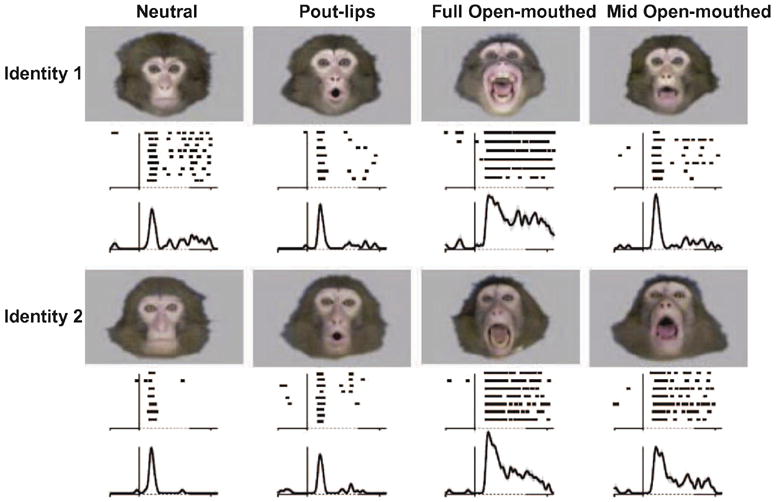

Since the earliest recording studies, researchers have found neurons in IT cortex that are highly selective for face stimuli. Moreover, unlike the majority of IT neurons in the monkey that respond systematically to reduced versions of real-world objects, face-selective neurons typically respond only to intact images of faces (see above; Desimone et al., 1984). If the individual elements of the face are rearranged or parts removed (e.g., eyes, mouth), the firing rates of these neurons drop significantly (Desimone et al., 1984, Hasselmo, Rolls & Baylis, 1989, Perrett et al., 1982). Thus, face-selective neurons in monkey IT cortex appear to be sensitive to the holistic percept of an intact face. Given this degree of specificity, face-selective neurons likely represent the primary neural substrate for face processing in the primate brain. As such, one would expect to see evidence of all aspects of face processing (i.e., face detection, discrimination, etc.) within the neuronal responses, which is indeed the case. For example, in addition to signaling the presence of a face, face-selective neurons also appear to be sensitive to a number of socially relevant cues, such as head orientation (Desimone et al., 1984, Perrett, Smith, Potter, Mistlin, Head, Milner & Jeeves, 1985) and gaze direction (Perrett et al., 1985). Neurons responsive to different facial expressions (e.g., open-mouth threat) have also been identified (Hasselmo et al., 1989) and activation throughout IT cortex has been shown, using fMRI, to be modulated in response to different facial expressions (Hadj-Bouziane, Bell, Knusten, Ungerleider & Tootell, 2008, Hoffman, Gothard, Schmid & Logothetis, 2007). Face-selective neurons can therefore encode both the presence of a face and the social content of the face image, but how might this be accomplished by a single neuron?

Sugase and colleagues (1999) offered a potential solution. In their study, monkeys were trained to maintain central fixation while visual stimuli were presented foveally. The stimuli included images of monkey and human faces, each with a different identity and facial expression. They found that responses among IT neurons often consisted of an early phase followed by a later phase (Figure 13). The early phase, which occurred approximately 100-150 ms following stimulus onset, encoded the global features of the stimulus that were relevant for face detection or simple discrimination (e.g., between a face and a non-face, or between a monkey face and a human face). The later phase, which occurred approximately 50 ms later, encoded the fine features of the stimulus, such as those necessary to discriminate between individual faces or between different facial expressions.

Figure 13. IT neurons encode global and fine features related to face images.

Example of an IT neuron encoding multiple levels of information related to face perception. Each face evokes a sharp but brief response, beginning approximately 100-150 ms following stimulus onset. The magnitude of the second phase of the response, beginning approximately 50 ms later, differs according to the facial expression and identity of the facial image (from Sugase et al., 1999).

The encoding of individual facial identities has also been hypothesized to occur at the level of individual neurons. Leopold and colleagues (2006) have suggested that facial identity is encoded by single neurons using a norm-based encoding scheme. According to this theory, neurons in IT cortex signal the distance of a given face from a prototypical or “mean” face along multiple dimensions, each representing different diagnostic features. This scheme, in contrast to the earlier “Grandmother Cell Hypothesis”, allows for infinite flexibility because it does not rely on previous viewings or many stored representations. A similar norm-based encoding scheme has been proposed in humans (Loffler, Yourganov, Wilkinson & Wilson, 2005).
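The core idea of norm-based encoding can be sketched as a toy model. In the example below, a face is a point in a small feature space, the “mean” face sits at the origin, and a model neuron's response grows with the displacement of a face from the mean along that neuron's preferred axis. The three feature dimensions, the rectified linear response, and the `baseline` and `gain` parameters are all illustrative assumptions and are not the model fitted by Leopold and colleagues.

```python
import numpy as np

def norm_based_response(face, mean_face, preferred_axis, baseline=1.0, gain=1.0):
    """Toy norm-based neuron (hypothetical form).

    The response is a baseline rate plus a term proportional to how far the
    face lies from the mean face along the neuron's preferred axis.
    Displacements in the opposite direction leave the response at baseline.
    """
    axis = preferred_axis / np.linalg.norm(preferred_axis)
    projection = float((face - mean_face) @ axis)
    return baseline + gain * max(projection, 0.0)

# Hypothetical three-dimensional face space with the mean face at the origin.
mean_face = np.zeros(3)
identity_axis = np.array([1.0, 2.0, 0.5])   # direction defining one identity

# Faces at increasing "identity strength": 0 is the mean face, 1 is the full identity.
levels = [0.0, 0.5, 1.0]
responses = [
    norm_based_response(mean_face + k * identity_axis, mean_face, identity_axis)
    for k in levels
]

# A face displaced in the opposite direction from the mean (an "anti-face").
anti_face_response = norm_based_response(mean_face - identity_axis, mean_face, identity_axis)

for k, r in zip(levels, responses):
    print(f"identity level {k:.1f}: response {r:.2f}")
print(f"anti-face: response {anti_face_response:.2f}")
```

In this sketch a novel face needs no stored template: any point in the feature space produces a response defined only by its position relative to the mean, which is the sense in which the scheme does not rely on previous viewings.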

The studies cited above have highlighted the capabilities of individual neurons to represent different facial features. Another important, and as yet unanswered, question is how these neurons contribute to behavior. Surprisingly, only a few studies have addressed this question directly. Eifuku and colleagues (2004) trained monkeys to perform a face identity discrimination task in which monkeys were first presented with a sample face stimulus followed by several potential target faces. The monkeys were required to push a lever when the target stimulus matched the identity of the sample stimulus. The two matching stimuli were pictures of the same familiar person, but not necessarily from the same viewpoint, thus requiring the monkey to make true face identifications and not simply exemplar matches. It was found that face-selective neurons in the anterior IT cortex showed correlations between the latency of the neuronal response and the latency of the behavioral response, suggesting that these neurons directly contributed to the perceptual decision. More recently, Afraz and colleagues (2006) provided direct evidence linking the responses of face neurons to the perception of a face. After locating a cluster of face-selective neurons, they applied electrical microstimulation while monkeys performed a difficult face vs. non-face discrimination task. When stimulation was applied, monkeys were significantly more likely to report a face, regardless of the identity of the visual stimulus.

Individual face-selective neurons have a remarkable capacity to encode different aspects of the facial image. A single neuron not only signals the presence of a face within the visual field, but also conveys information about the identity of the face as well as its emotional content. Furthermore, the activity of these neurons correlates with performance in face detection and face discrimination tasks, suggesting they contribute directly to perceptual decisions involving faces.

Two systems in the human brain specialized for different aspects of face processing