Abstract

Adaptive decision making in real-world contexts often relies on strategic simplifications of decision problems. Yet, the neural mechanisms that shape these strategies and their implementation remain largely unknown. Using a novel economic decision-making task, we dissociate brain regions that predict specific choices from those predicting an individual’s preferred strategy. Choices that maximized gains or minimized losses were predicted by fMRI activation in ventromedial prefrontal cortex or anterior insula, respectively. However, choices that followed a simplifying strategy (i.e., attending to overall probability of winning) were associated with activation in parietal and lateral prefrontal cortices. Dorsomedial prefrontal cortex, through differential functional connectivity with parietal and insular cortex, predicted individual variability in strategic preferences. Finally, we demonstrate that robust decision strategies may follow from neural sensitivity to rewards. We conclude that decision making reflects more than compensatory interaction of choice-related regions; in addition, specific brain systems potentiate choices depending upon strategies, traits, and context.

Introduction

The neuroscience of decision making under risk has focused on identifying brain systems that shape behavior toward or against particular choices (Hsu et al., 2005; Kuhnen and Knutson, 2005; Platt and Huettel, 2008). These studies typically involve compensatory paradigms that trade two decision variables against each other, as when individuals choose between a safer, lower-value option and a riskier, higher-value option (Coricelli et al., 2005; De Martino et al., 2006; Huettel, 2006; Tom et al., 2007). Activation in distinct regions reliably predicts the choices that are made: increased activation in the anterior insula follows risk-averse choices (Paulus et al., 2003; Preuschoff et al., 2008) and increased activation in the ventromedial PFC and striatum predicts risk-seeking choices (Kuhnen and Knutson, 2005; Tobler et al., 2007). In contrast, prefrontal and parietal regions support executive control processes associated with risky decisions, as well as the evaluation of risk and judgments about probability and value (Barraclough et al., 2004; Huettel et al., 2005; Paulus et al., 2001; Sanfey et al., 2003). These and other studies have led to a choice-centric neural conception of decision making: tradeoffs between decision variables, such as whether someone seeks to minimize potential losses or maximize potential gains, reflect similar tradeoffs between the activation of brain regions (Kuhnen and Knutson, 2005; Loewenstein et al., 2008; Sanfey et al., 2003). Accordingly, individual differences in decision making have been characterized neurometrically by estimating parameters associated with a single model of risky choice and identifying regions that correlate with individual differences in those parameters (De Martino et al., 2006; Huettel et al., 2006; Tom et al., 2007).

Yet, following a purely compensatory approach to decision making would require substantial computational resources, especially for complex decision problems that involve multiple decision variables. It has become increasingly apparent that people employ a variety of strategies to simplify the representations of decision problems and reduce computational demands (Camerer, 2003; Gigerenzer and Goldstein, 1996; Kahneman and Frederick, 2002; Payne et al., 1992; Payne et al., 1988; Tversky and Kahneman, 1974). For example, when faced with a complex decision scenario that could result in a range of positive or negative monetary outcomes, some individuals adopt a simplifying strategy that de-emphasizes the relative magnitudes of the outcomes but maximizes the overall probability of winning. Other individuals emphasize the minimization of potential losses or the maximization of potential gains in ways consistent with more compensatory models of risky choice such as expected utility maximization (Payne, 2005). Adaptive decision making in real-world settings typically involves multiple strategies that may be adopted based on the context and computational demands of the task (Gigerenzer and Goldstein, 1996; Payne et al., 1993). As noted above, there has been considerable research on identifying brain systems that shape behavior toward or against particular choices (risky or safer gambles); however, much less is known about the neural mechanisms that underlie inter- and intra-individual variability in decision strategies. We sought to address this limitation in the present study by dissociating choice-related and strategy-related neural contributors to decision making.

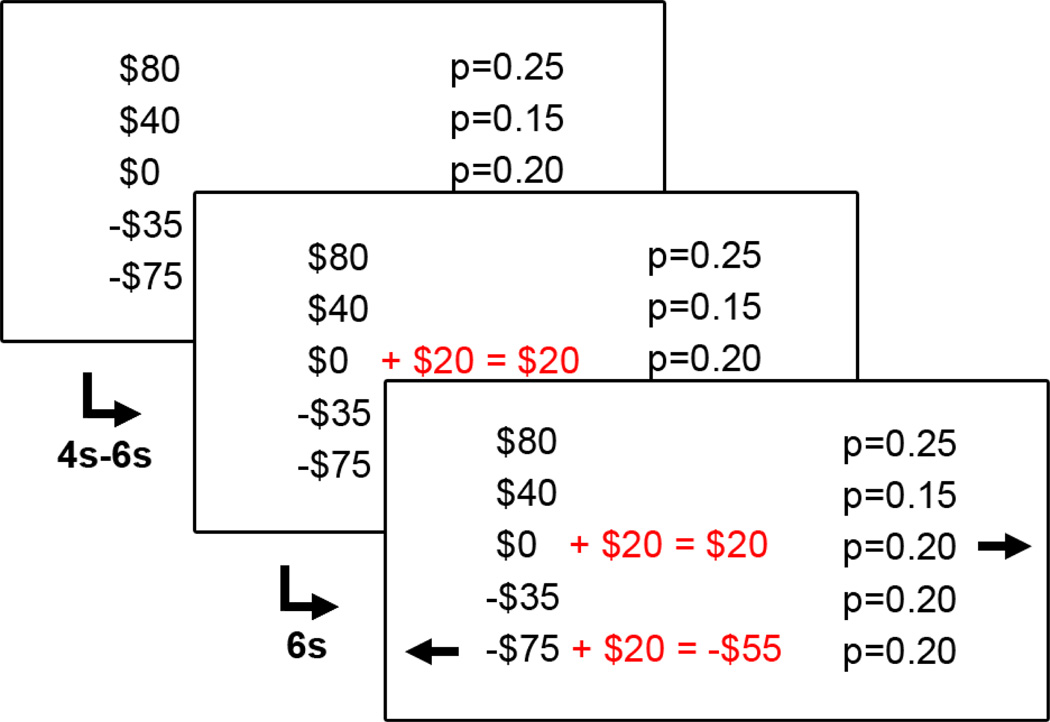

We used a novel incentive-compatible decision-making task (Payne, 2005) that contained economic gambles with five rank-ordered outcomes, ranging from large monetary losses to large monetary gains (Fig. 1). There were three types of choices: gain-maximizing, loss-minimizing, and probability-maximizing. Making a gain-maximizing (Gmax) choice increased the magnitude of the largest monetary gain (i.e., the most money that could be won), whereas making a loss-minimizing (Lmin) choice reduced the magnitude of the largest monetary loss (i.e., the most money that could be lost). The gambles were constructed so that these two choices (Gmax and Lmin) were generally consistent with a compensatory strategy (see Supplementary Material for a discussion of model predictions), such as following expected utility theory and/or rank-dependent expectation models like cumulative prospect theory (Birnbaum, 2008; Payne, 2005; Tversky and Kahneman, 1992). In contrast, making a probability-maximizing (Pmax) choice increased the overall probability of winning money compared to losing money. Such choices are therefore consistent with a simplifying strategy (e.g., “maximize the chance of winning”) that ignores reward magnitude. Finally, we characterized our subjects’ strategic preferences according to their relative proportion of simplifying (Pmax) versus compensatory (Gmax and Lmin) choices. This definition positions the two strategies as the end points of a continuum, with a high value indicating an individual’s preference for a simplifying strategy and a low value indicating a preference for a compensatory strategy. We emphasize that, as defined operationally here, strategies for decision making may be either explicit or implicit.
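The three improvement types can be made concrete with a small sketch. It assumes a hypothetical five-outcome gamble with equal outcome probabilities and a $20 improvement (illustrative values only, not the study's stimuli). Under these assumptions, adding the bonus to any single outcome raises expected value by the same amount, but only the Pmax improvement, which here turns the central small loss into a gain, changes the overall probability of winning. The strategic-preference index is computed as the proportion of Pmax choices; all function names are our own.

```python
# A minimal sketch of the three gamble "improvements" and the strategic
# preference index. The gamble below is HYPOTHETICAL (illustrative values
# only); it is not one of the stimuli used in the experiments.

# Outcomes in dollars, rank-ordered from extreme loss to extreme gain,
# each paired with its probability of occurrence.
OUTCOMES = [-100.0, -25.0, -5.0, 25.0, 100.0]
PROBS = [0.2, 0.2, 0.2, 0.2, 0.2]
BONUS = 20.0  # amount added to one outcome by an improvement (assumed)

# Which outcome (by rank position) each improvement type adds the bonus to.
IMPROVEMENT_INDEX = {"Lmin": 0, "Pmax": 2, "Gmax": 4}


def expected_value(outcomes, probs):
    """Probability-weighted sum of the outcomes."""
    return sum(o * p for o, p in zip(outcomes, probs))


def prob_win(outcomes, probs):
    """Overall probability of a strictly positive outcome."""
    return sum(p for o, p in zip(outcomes, probs) if o > 0)


def improve(outcomes, index, bonus=BONUS):
    """Return a copy of the gamble with `bonus` added to one outcome."""
    improved = list(outcomes)
    improved[index] += bonus
    return improved


def score_options(outcomes, probs):
    """Map each improvement type to (expected value, P(win)) after improvement."""
    scores = {}
    for name, index in IMPROVEMENT_INDEX.items():
        improved = improve(outcomes, index)
        scores[name] = (expected_value(improved, probs), prob_win(improved, probs))
    return scores


def simplifying_preference(choices):
    """Proportion of Pmax choices: 1 = purely simplifying, 0 = purely compensatory."""
    return sum(choice == "Pmax" for choice in choices) / len(choices)


if __name__ == "__main__":
    for name, (ev, pw) in score_options(OUTCOMES, PROBS).items():
        print(f"{name}: EV = {ev:.2f}, P(win) = {pw:.2f}")
    print(simplifying_preference(["Pmax", "Gmax", "Pmax", "Lmin"]))  # 0.5
```

With these hypothetical values, all three options have an expected value of 3.00; P(win) is 0.60 for Pmax and 0.40 for Lmin and Gmax, illustrating how options can be matched on expected value while differing in the probability of winning.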

Figure 1. Experimental task and behavioral results.

(A) Subjects were first shown, for 4–6 s, a multi-attribute mixed gamble consisting of five potential outcomes, each associated with a probability of occurrence. Two alternatives for improving the gamble were then highlighted in red, and subjects had 6 s to decide which improvement they preferred. Finally, after two arrows identified the buttons corresponding to the choices, subjects indicated their choice by pressing the corresponding button as quickly as possible. Here, adding $20 to the central, reference outcome would maximize the overall probability of winning (Pmax choice), whereas adding $20 to the extreme loss would reflect a loss-minimizing (Lmin) choice. The next trial appeared after a variable interval of 4, 6, or 8 s. On other trials, one of the alternatives added money to the extreme gain outcome, reflecting a gain-maximizing (Gmax) choice.

To distinguish neural mechanisms underlying choices from those underlying the strategies that generate those choices, we collected several forms of behavioral and functional magnetic resonance imaging (fMRI) data. Consistent with many previous studies (De Martino et al., 2006; Sanfey et al., 2003), we characterized brain regions as choice-related if the magnitude of their activation predicted a specific behavior (e.g., selecting the option providing the largest gain) across our subject sample. In contrast, we characterized brain regions as strategy-related based on their association with individual difference measures; i.e., if the magnitude of their activation depended on whether an individual was following their preferred strategy, regardless of which choice that strategy entailed. In addition, strategy-related regions should exert a modulatory influence on choice-related regions. A strong candidate for a strategy-related region is the dorsomedial prefrontal cortex, which has been shown to play an important role in tasks involving decision conflict, as well as in making decisions that run counter to general behavioral tendencies (De Martino et al., 2006; Pochon et al., 2008). Moreover, this region exhibits distinct patterns of functional connectivity to affective and cognitive networks (Meriau et al., 2006), making it a candidate for shaping activation in those networks based on context and computational demands (Behrens et al., 2007; Kennerley et al., 2006).

Using large-sample behavioral experiments, we first demonstrate systematic individual variability in decision making, with a significant bias toward choices that maximize the overall probability of winning (i.e., toward a simplifying strategy). Then, using fMRI, we show that distinct neural systems underlie the choices made on each trial and the variability in strategic preferences across individuals. Finally, we demonstrate a striking relation between neural sensitivity to monetary outcomes and individual differences in strategic preferences, indicating that robust decision strategies may follow from the neural response to rewards. These results demonstrate that decision making under uncertainty does not merely reflect competition between brain regions predicting distinct decision variables; in addition, the relation between neural activation and subsequent decisions is mediated by an underlying strategic tendency.

Results

We conducted two behavioral experiments (N1 = 128, N2 = 71) and one fMRI experiment (N = 23), all involving the basic paradigm illustrated in Fig. 1. Subjects were young adult volunteers from the Duke University community (see Supplementary Material for details on the experiments). Research was conducted under protocols approved by the Institutional Review Boards of Duke University and Duke University Medical Center.

Across both behavioral experiments (details available in Supplementary Material), we found a significant bias toward the probability-maximizing choices (Supplementary Fig. 1), extending prior findings in the behavioral literature (Payne, 2005). In addition to demonstrating the robustness of the preference for Pmax choices, the second experiment also indicated that this bias can be reversed or accentuated by experimental manipulations. The findings from these studies are also consistent with Pmax choices representing a simplifying strategy (see Supplementary Material). Finally, and importantly for the goals of our imaging study, we also found substantial inter-individual variability: some subjects nearly always preferred a simplifying strategy (choosing the Pmax option on most trials); others nearly always preferred a compensatory strategy (choosing the Gmax or Lmin options on most trials); and still others switched strategies across trials, resulting in both intra- and inter-subject variability in strategy (Supplementary Fig. 2).

Variability in Underlying Neural Mechanisms

We used high-field (4 T) fMRI to evaluate the neural systems associated with strategic decision making under uncertainty, adapting the basic design from our behavioral experiments to the fMRI setting. Subjects first made a series of choices without feedback. On each trial, subjects viewed the decision options first and only then learned the assignment of choices to responses, which eliminated potential confounding effects of response selection (Pochon et al., 2008). After all decision trials were completed, we resolved a subset of those trials for real monetary rewards. This allowed us to measure reward-related activation without altering subsequent decisions through learning.

Consistent with our two behavioral experiments, fMRI subjects made Pmax choices on approximately 70% of the trials when the choices were matched for expected value. Moreover, the proportion of Pmax choices was systematically modulated by the tradeoff in expected value between the choices, indicating that subjects were not simply insensitive to expected value (Supplementary Table 1). We evaluated intra-subject choice consistency using a split-sample analysis, dividing each subject’s choices into samples from odd-numbered and even-numbered runs. There was a strong correlation between the proportion of Pmax choices in the two samples (r = 0.61; p < 0.01), even without considering other factors such as relative expected value. For comparison, we used a similar split-sample approach to estimate subject-specific parameters for canonical expected utility and cumulative prospect theory models of decision making (see Supplementary Material). Model parameters estimated from one half of the data did not significantly classify choices in the other half (Supplementary Fig. 3). Finally, the proportion of Pmax choices decreased with increasing self-reported tendency to maximize (r = −0.67, p < 0.001; Supplementary Fig. 4).
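The split-sample consistency check can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: choices are assumed to be stored per subject as a list of runs (each run a list of choice labels), runs are numbered from 1, and the statistic is a plain Pearson r computed with the standard library. The function names are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def pmax_proportion(choices):
    """Fraction of choices labeled "Pmax"."""
    return sum(c == "Pmax" for c in choices) / len(choices)


def split_half_consistency(runs_by_subject):
    """Correlate each subject's Pmax proportion in odd- vs even-numbered runs.

    runs_by_subject: one entry per subject; each entry is a list of runs,
    and each run is a list of choice labels ("Pmax", "Gmax", "Lmin").
    Runs are numbered from 1, so the first run counts as odd.
    """
    odd_props, even_props = [], []
    for runs in runs_by_subject:
        odd = [c for i, run in enumerate(runs, start=1) if i % 2 == 1 for c in run]
        even = [c for i, run in enumerate(runs, start=1) if i % 2 == 0 for c in run]
        odd_props.append(pmax_proportion(odd))
        even_props.append(pmax_proportion(even))
    return pearson_r(odd_props, even_props)
```

Pooling choices within each half before computing the proportion (rather than averaging per-run proportions) weights every trial equally when runs contain different numbers of trials.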

Neural Predictors of Choices

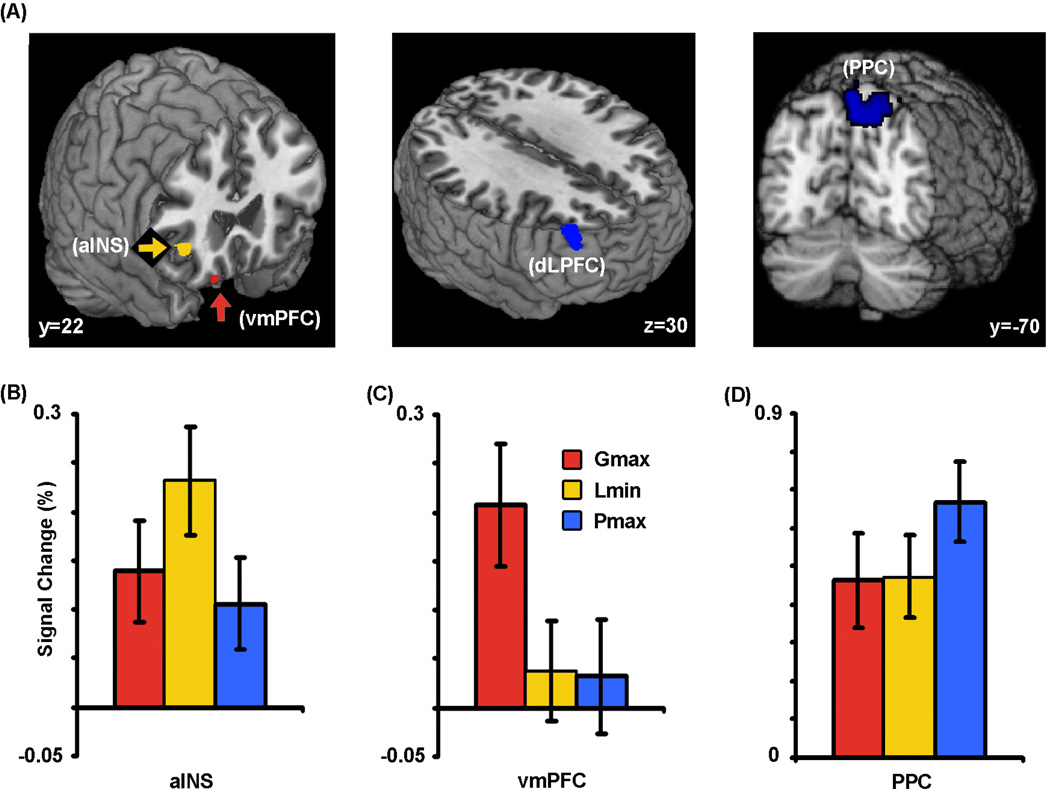

Our initial analyses identified brain regions whose activation was driven, across subjects and trials, by the selected choice. There was greater activation in anterior insular cortex (aINS) and ventromedial prefrontal cortex (vmPFC; Fig. 2A) for the compensatory magnitude-sensitive choices (combined across Gmax and Lmin). These regions are typically associated with emotional function, particularly the affective evaluation of the outcome of a choice in decision-making tasks (Bechara et al., 2000a; Dalgleish, 2004; Paulus et al., 2003; Sanfey et al., 2003). We subsequently performed a region of interest analysis to explore specifically the differences in activation between Gmax and Lmin. Note that this analysis was restricted, a priori, to a subset of fifteen subjects with a sufficient number of choices in each condition of interest. We found a clear double dissociation between aINS and vmPFC: Gmax choices were associated with greater activation within vmPFC while Lmin choices were associated with increased activation in aINS (Fig. 2B,C). Conversely, Pmax choices resulted in increased activation in the dorsolateral prefrontal cortex (dlPFC) and posterior parietal cortex (PPC; Fig. 2A, Supplementary Table 2), regions typically associated with executive function and decision making under risk and uncertainty (Bunge et al., 2002; Huettel et al., 2005; Huettel et al., 2006; Paulus et al., 2001). These regions showed greater activation for Pmax choices compared to both Gmax and Lmin, but no difference between Gmax and Lmin options (Fig. 2D).

Figure 2. Distinct sets of brain regions predict choices.

(A) Increased activation in the right anterior insula (peak MNI coordinates: x = 38, y = 28, z = 0) and in the ventromedial prefrontal cortex (x = 16, y = 21, z = −23) predicted Lmin and Gmax choices, respectively, while increased activation in the lateral prefrontal cortex (x = 44, y = 44, z = 27) and posterior parietal cortex (x = 20, y = −76, z = 57) predicted Pmax choices. Activation maps show active clusters that surpassed a threshold of z > 2.3 with cluster-based Gaussian random field correction. (B–D) Percent signal change in these three regions for each type of choice. In this and subsequent figures, error bars represent ±1 standard error of the mean for each column.
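For readers reproducing plots like panels B–D, percent signal change and ±1 SEM error bars can be computed as below. The text does not specify the baseline, so `baseline` is an assumed input (for example, a run's mean signal); the SEM uses the sample standard deviation divided by the square root of the number of subjects. Both helpers are hypothetical names.

```python
from math import sqrt


def percent_signal_change(values, baseline):
    """Express raw signal values as percent change from an (assumed) baseline."""
    return [100.0 * (v - baseline) / baseline for v in values]


def mean_and_sem(values):
    """Mean and standard error of the mean (sample SD / sqrt(n)); needs n >= 2."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, sqrt(variance / n)
```

In a typical workflow, each subject contributes one percent-signal-change value per choice type and region, and `mean_and_sem` is applied across subjects to obtain the bar height and error bar.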

Neural Predictors of Strategic Variability across Individuals

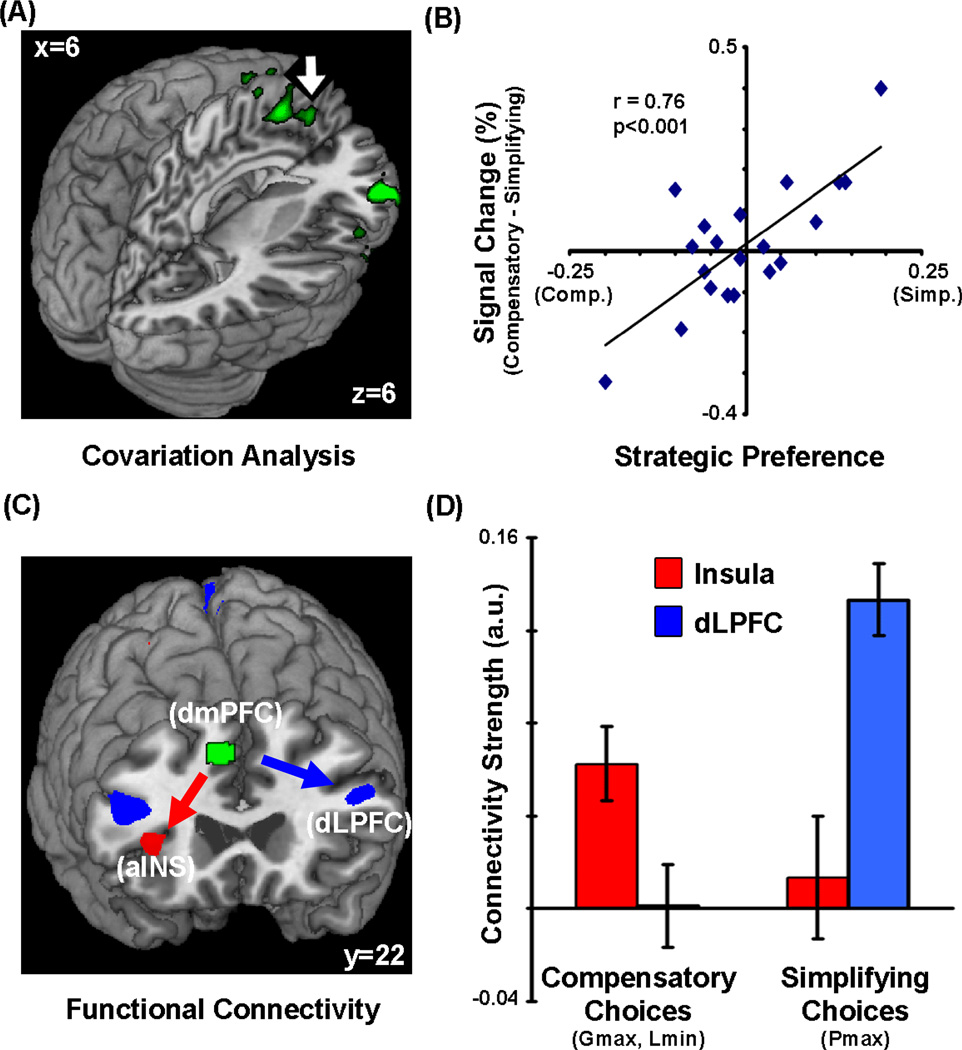

We next investigated whether there were brain regions whose activation varied systematically with individual differences in strategic preferences. To do this, we entered each subject’s strategic preference as a normalized regressor into the across-subjects fMRI analyses of the contrast between choices. Strategic variability predicted individual differences in activation in two clusters (Fig. 3A,B): the dorsomedial prefrontal cortex (dmPFC) and the right inferior frontal gyrus (rIFG). Within these regions, there was no significant difference in activation between the choices. However, there was a significant interaction: activation increased when an individual who preferred the more compensatory strategy made a simplifying Pmax choice, and vice versa. We focus on the dmPFC in the rest of this manuscript, based on our prior hypothesis about the role of this region and on the fact that only this region, once weighted by strategic preference, significantly predicted trial-by-trial choices (as discussed later).
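The decision-by-trait interaction amounts to regressing each subject's contrast (compensatory minus simplifying activation) on the mean-centered strategic preference. The sketch below shows this logic for one region's summary values rather than voxelwise, and the function names are hypothetical. With a centered predictor, the intercept estimates the average choice effect and the slope estimates the interaction.

```python
def mean_center(values):
    """Subtract the sample mean from each value."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def decision_by_trait(strategy, contrast):
    """Regress per-subject contrasts on mean-centered strategic preference.

    strategy: each subject's proportion of Pmax choices.
    contrast: each subject's activation difference in one region,
              compensatory minus simplifying choices.
    Returns (slope, intercept) of the ordinary least-squares fit. Because the
    predictor is centered, the intercept equals the mean contrast (the average
    choice effect) and the slope is the decision-by-trait interaction.
    """
    x = mean_center(strategy)
    sxx = sum(xi * xi for xi in x)
    slope = sum(xi * yi for xi, yi in zip(x, contrast)) / sxx
    intercept = sum(contrast) / len(contrast)
    return slope, intercept
```

A region with no main effect of choice but a reliable nonzero slope matches the pattern described for dmPFC and rIFG.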

Figure 3. Dorsomedial prefrontal cortex predicts strategy use during decision making.

(A,B) Activation in dorsomedial prefrontal cortex (dmPFC, x = 10, y = 22, z = 45; indicated with arrow) and the right inferior frontal gyrus (rIFG) exhibited a significant decision-by-trait interaction, such that the difference in activation between compensatory and simplifying choices was significantly correlated with preference for the simplifying strategy (mean-subtracted) across individuals. (C,D) Functional connectivity of dmPFC varied as a function of strategy: there was increased connectivity with dlPFC (and PPC) for simplifying choices and increased connectivity with aINS (and amygdala) for compensatory choices.

We next evaluated whether dmPFC activation might shape activation in those regions that predicted specific choices (i.e., Pmax: dlPFC and PPC; Lmin: aINS and Gmax: vmPFC), using seed-voxel-based whole-brain functional connectivity analyses. This would provide additional converging evidence for the role of this region in determining choice behavior, contingent on preferred strategies. We found a double dissociation in the functional connectivity of dmPFC depending upon the choice made by the subject (Fig. 3C,D). When subjects made Pmax choices, connectivity with dmPFC increased in dlPFC and PPC, whereas when subjects made more magnitude-sensitive compensatory choices, connectivity increased in the aINS (and amygdala, but not in vmPFC). Moreover, the relative strength of the connectivity between dmPFC and these regions was significantly associated with individual differences in strategy preferences across subjects (Supplementary Fig. 5). Finally, we also conducted additional analyses to rule out the possibility that dmPFC activation was related to response conflict, as has been found in several previous studies (Botvinick et al., 1999; Kerns et al., 2004); details can be found in Supplementary Material.
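The text does not detail how choice-dependent connectivity was estimated. Shown here only for illustration, one common technique, a beta-series correlation, correlates trial-wise seed and target estimates separately on simplifying (Pmax) and compensatory (Gmax or Lmin) trials and summarizes the difference with Fisher's z transform. This is a swapped-in technique, not necessarily the study's method (which may have been, e.g., a psychophysiological interaction), and the trial-wise inputs and function names are assumptions.

```python
from math import atanh, sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def condition_connectivity(seed, target, labels):
    """Beta-series style connectivity between a seed and a target region.

    seed, target: trial-wise activation estimates (one value per trial).
    labels: choice made on each trial ("Pmax", "Gmax", or "Lmin").
    Returns (r_simplifying, r_compensatory, z_difference), where z_difference
    is the Fisher-z difference, simplifying minus compensatory.
    Correlations of exactly +/-1 would make atanh fail.
    """
    simp_seed, simp_target, comp_seed, comp_target = [], [], [], []
    for s, t, label in zip(seed, target, labels):
        if label == "Pmax":
            simp_seed.append(s)
            simp_target.append(t)
        else:
            comp_seed.append(s)
            comp_target.append(t)
    r_simp = pearson_r(simp_seed, simp_target)
    r_comp = pearson_r(comp_seed, comp_target)
    return r_simp, r_comp, atanh(r_simp) - atanh(r_comp)
```

Under this sketch, a positive z difference for a dmPFC-dlPFC pair and a negative one for a dmPFC-aINS pair would correspond to the double dissociation reported above.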

Thus, we provide a broad range of converging results – drawn from overall activation, functional connectivity, factor analysis of behavioral data (see Supplementary Material), association with individual differences in strategy, and trial-by-trial analysis (below) – that together indicate that dmPFC supports strategic considerations during decision making, shaping behavior toward or against an individual’s strategic preferences.

Integrating Choices and Strategies to Predict Behavior

We used the brain regions implicated above as choice-related (aINS, vmPFC, dlPFC, PPC) or strategy-related (rIFG, dmPFC) to predict choices on individual trials. We extracted, for every trial for every subject, the activation amplitude in each of these regions of interest, along with the decision made on that trial. We used a hierarchical logistic regression approach to evaluate which of these regions were significant and independent predictors of trial-to-trial decisions (Table 1).

Table 1.

Predicting trial-by-trial choices from trait and neural data.

| Model Variables | Coefficient (S.E.) | Wald (Significance) | Model Significance (χ²) | Model Fit (Nagelkerke R²) |
|---|---|---|---|---|
| Trait | | | 76.09 | 0.069 |
| Constant | −0.14 (0.05) | 6.19 (.013) | | |
| Proportion of Pmax choices | 1.02 (0.12) | 70.79 (.000) | | |
| Brain | | | 18.05 | 0.017 |
| Constant | −0.21 (0.06) | 13.10 (.000) | | |
| Right Posterior Parietal Cortex | 32.41 (8.43) | 14.80 (.000) | | |
| Right Anterior Insula | −40.52 (13.28) | 9.31 (.002) | | |
| Trait (+ Brain) | | | 90.26 | 0.081 |
| Constant | −0.22 (0.06) | 13.38 (.000) | | |
| Proportion of Pmax choices | 1.00 (0.12) | 67.23 (.000) | | |
| Right Posterior Parietal Cortex | 30.54 (8.62) | 12.56 (.000) | | |
| Right Anterior Insula | −33.32 (13.69) | 5.93 (.012) | | |
| Trait + Brain + (Trait × Brain) | | | 97.66 | 0.088 |
| Constant | −0.23 (0.06) | 13.78 (.000) | | |
| Proportion of Pmax choices | 1.10 (0.13) | 71.75 (.000) | | |
| Right Posterior Parietal Cortex | 31.96 (8.70) | 13.49 (.000) | | |
| Right Anterior Insula | −33.00 (13.77) | 5.74 (.017) | | |
| dmPFC × Strategic Variability | −70.58 (26.28) | 7.21 (.007) | | |

All χ² values were highly significant (p < 10⁻⁴).

We used stepwise logistic regression to evaluate the contributions of trait effects (i.e., each subject’s overall proportion of Pmax choices) and brain effects (i.e., activation of a given region on a given trial) to the specific choices made by subjects (coded as a binary variable: Pmax or not). As expected, subjects’ overall preference for the simplifying strategy was a good predictor of Pmax choices on individual trials. An independent logistic regression analysis revealed that activation in two brain regions, the posterior parietal cortex and the anterior insula, was a significant positive and negative predictor of Pmax choices, respectively, and that both regions remained significant predictors even when the behavioral data were included in the model. Note that dmPFC activation, when not weighted by strategy, did not significantly improve the model fit at any stage. However, when weighted by strategy, the resulting brain × strategy variable was a highly significant predictor of choices, even when the strategy itself was already included in the model. Regions not indicated in this table were not significant predictors of choice behavior.
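The Wald statistics in Table 1 follow from the coefficients and standard errors: W = (b/SE)², referred to a χ² distribution with 1 degree of freedom. A minimal sketch using only the standard library (the function names are our own); because the tabled coefficients and standard errors are rounded, recomputed values can differ slightly from those reported.

```python
from math import erfc, sqrt


def wald_p(w):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    # For 1 df, P(chi2 > w) = P(|Z| > sqrt(w)) = erfc(sqrt(w / 2)).
    return erfc(sqrt(w / 2.0))


def wald_test(coef, se):
    """Wald chi-square (1 df) and p-value for a single logistic coefficient."""
    w = (coef / se) ** 2
    return w, wald_p(w)
```

For example, the right posterior parietal cortex coefficient in the Brain model (32.41, S.E. 8.43) gives W ≈ 14.78, and a Wald statistic of 6.19 corresponds to p ≈ .013.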

We first entered into the model subjects’ overall preference for the simplifying strategy (proportion of Pmax choices). We found, unsurprisingly, that this was a highly significant predictor of trial-to-trial choices. Next, we used activation values from our brain regions of interest, considering them both in isolation and with strategic preference already entered into the model. We found that activation in insular cortex was a significant predictor of magnitude-sensitive choices, while parietal activation was a significant predictor of Pmax choices. Critically, activation in these brain regions improved the fit of the model even when the behavioral data had already been included. None of the other regions, including dmPFC, predicted either type of choice. Yet, when we weighted dmPFC activation with each subject’s strategy preference, the resulting variable became a significant and robust predictor of behavior, and overall model error was reduced (Table 1). Thus, dmPFC activation does not predict either type of choice, but instead predicts choices that are inconsistent with one’s preferred decision strategy.
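The model-level columns of Table 1 can be related to log-likelihoods: the model χ² is the likelihood-ratio statistic 2(LL_model − LL_null), and Nagelkerke R² rescales the Cox-Snell R² by its maximum attainable value. The sketch also builds a strategy-weighted dmPFC regressor as trial-wise activation multiplied by the subject's mean-centered strategic preference. The centering step and all names are assumptions, since the text states only that dmPFC activation was weighted by each subject's strategy preference.

```python
from math import exp, log


def null_log_likelihood(y):
    """Log-likelihood of an intercept-only logistic model for binary y.

    Requires at least one 0 and one 1 in y (0 < k < n).
    """
    n, k = len(y), sum(y)
    p = k / n
    return k * log(p) + (n - k) * log(1 - p)


def likelihood_ratio_chi2(ll_model, ll_null):
    """Model chi-square: twice the log-likelihood gain over the null model."""
    return 2.0 * (ll_model - ll_null)


def nagelkerke_r2(ll_model, ll_null, n):
    """Cox-Snell R^2 divided by its maximum possible value."""
    cox_snell = 1.0 - exp(2.0 * (ll_null - ll_model) / n)
    max_cox_snell = 1.0 - exp(2.0 * ll_null / n)
    return cox_snell / max_cox_snell


def strategy_weighted(activation, strategy, center):
    """Hypothetical dmPFC x strategy regressor, one value per trial.

    activation: trial-wise dmPFC activation.
    strategy: the subject's proportion of Pmax choices, repeated per trial.
    center: value subtracted from strategy (assumed: the across-subject mean).
    """
    return [a * (s - center) for a, s in zip(activation, strategy)]
```

Centering the strategy term before forming the product keeps the weighted regressor from simply duplicating the main effect of strategy, which is one reason such products are often built from centered predictors.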

We emphasize that the brain-behavior relations reported here were highly significant even though the behavioral choice data across trials for each subject (a behavioral strategy indicator) were already included in the logistic regression model. That is, we could use the fMRI activation evoked within key brain regions to improve our predictions of subjects’ decisions on individual trials beyond what was predicted from behavioral data alone.

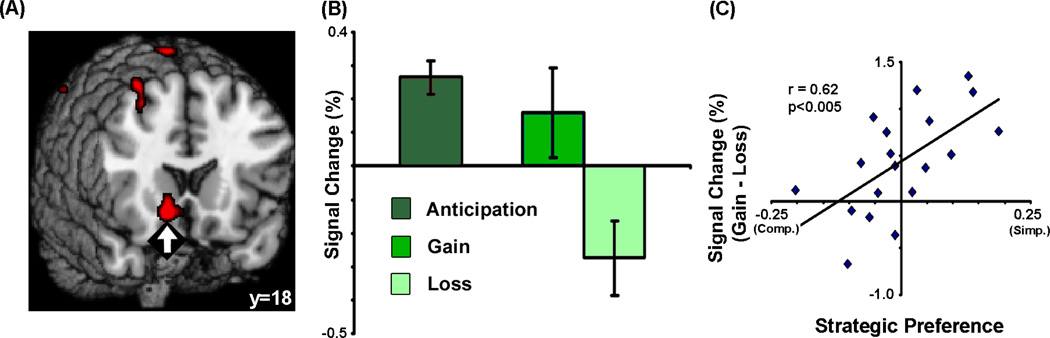

Neural Reward Sensitivity Predicts Individual Differences in Strategy

Finally, we evaluated whether an independent neural measure of reward sensitivity could predict the strategic preferences outlined in the previous sections. At the end of the scanning session, each subject passively viewed a subset of their improved gambles, which were each resolved to an actual monetary gain or loss. While subjects were anticipating the outcome of each gamble, there was increased activation in the ventral striatum (vSTR), a brain region commonly implicated in learning about positive and negative rewards (Schultz et al., 1997; Seymour et al., 2007; Yacubian et al., 2006). Then, when the gamble was resolved, vSTR activation increased to gains but decreased to losses (Fig. 4A,B). Moreover, there were striking and significant correlations between strategic variability and vSTR activation: those individuals who showed the greatest vSTR increases to gains and decreases to losses both preferred the simplifying Pmax strategy (Fig. 4C) and scored low on a behavioral measure of maximizing (Supplementary Fig. 6). These results suggest that the use of a simplifying strategy that improves one’s overall chances of winning (Pmax) may result from increased neural sensitivity to reward outcomes.

Figure 4. Ventral striatal sensitivity to rewards predicts strategic variability.

At the end of the experiment, some gambles were resolved to monetary gains or losses. (A,B) Activation in the ventral striatum (x = 14, y = 16, z = −10) increased while subjects were waiting for gambles to be resolved (anticipation) and, following resolution, increased to gains but decreased to losses. (C) Notably, the difference between gain-related and loss-related activation in the ventral striatum correlated with variability in strategic preferences across subjects: subjects who were most likely to prefer the probability-maximizing choice exhibited the greatest neural sensitivity to rewards.

Discussion

When facing complex decision situations, many individuals engage in simplifying strategies – such as choosing based on the overall probability of a positive outcome – to reduce computational demands compared to compensatory strategies. Here, we demonstrated two neural predictors of strategic variability in decision making. First, during the decision process, the dmPFC shapes choices (in a manner depending on strategic tendency) through changes in functional connectivity with insular and prefrontal cortices. Second, independent neurometric responses to rewards predicted strategic preferences: those individuals with the greatest striatal sensitivity to reward valence are most likely to use a simplifying strategy that emphasizes valence but ignores magnitude. These results provide clear and converging evidence that the neural mechanisms of choice reflect more than competition between decision variables; they additionally involve strategic influences that vary across trials and individuals.

A large literature suggests that decisions between simple gambles can be predicted by compensatory models like expected utility and Cumulative Prospect Theory (Fennema and Wakker, 1997; Huettel et al., 2006; Preuschoff et al., 2008; Wu et al., 2007). Individual differences in sensitivity to the parameters within these models lead to distinct patterns of choices, even when the same model is applied to all individuals (Huettel et al., 2006; Tom et al., 2007). As decision problems become more complex, however, the assumption of a single canonical decision strategy becomes increasingly problematic. As suggested by Tversky and Kahneman (1992) and Payne et al. (1993), people employ a variety of strategies to represent decision problems and evaluate options. Some of those strategies will be consistent with traditional models like expected utility maximization, whereas other strategies will be more heuristic or simplifying. Further, depending on the decision context, people shift among multiple strategies to balance minimizing cognitive effort against maximizing decision accuracy, among other goals (Payne et al., 1993). Finally, strategy use for the same decision problem differs across individuals, perhaps reflecting trait differences such as a tendency toward satisficing versus maximizing. Our findings, involving a complex risky choice task across both behavioral and neuroimaging experiments, provide evidence for both intra- and inter-subject variability in strategy use. Importantly, we show that the parameters estimated using traditional economic models of risky choice were poor predictors of choices in our paradigm, providing possible evidence for differences in decision strategy within and across participants.

One influential conjecture in decision making is that people frequently use a variety of simplifying heuristics that reduce effort associated with the decision process (Shah and Oppenheimer, 2008; Simon, 1957). Pmax choices in the current task are consistent with such an effort-reduction framework, given that they were associated with faster response times in the behavioral experiments (note that we do not have accurate estimates of response times in the imaging experiment as we sought to explicitly separate the decision and response phases in our design), and that the proportion of Pmax choices decreased adaptively with increasing cost in terms of expected value in all experiments. We suggest, therefore, that strategic preferences in the current task reflect tradeoffs – resolved differently by individual subjects and over trials – between one strategy that simplifies a complex decision problem by using a simple heuristic of maximizing the chances of winning (Pmax) and another, more compensatory strategy that involves consideration of additional information as well as the emotions associated with extreme gains (Gmax) or losses (Lmin).

To the extent that the Pmax choices reflect a more simplifying strategy, the pattern of activations seen in this study seems counterintuitive: the regions conventionally associated with automatic and affective processing (aINS and vmPFC) predicted magnitude-sensitive choices that were more consistent with traditional economic models such as expected utility maximization, whereas the regions conventionally associated with executive functions (dlPFC and PPC) predicted choices more consistent with a simplifying strategy. The lateral prefrontal cortex has been shown in previous studies to be active during probabilistic decision-making (Heekeren et al., 2004; Heekeren et al., 2006) as well as sensitive to individual differences in the processing of probability (Tobler et al., 2008). Neurons within this region have also been shown to track reward probabilities (Kobayashi et al., 2002) and process reward and action in stochastic situations (Barraclough et al., 2004). Similarly, the parietal cortex also plays an important role in tracking outcome probabilities (Dorris and Glimcher, 2004; Huettel et al., 2005). Given that Pmax choices are based on the overall probability of winning, activation in dlPFC and PPC could be associated with tracking subjective probabilities in these gambles.

Conversely, the Gmax and Lmin choices increase the chances of an aversive outcome, relative to a neutral aspiration level (Lopes and Oden, 1999). Supporting this interpretation, we found a clear double dissociation, with activation in vmPFC predicting Gmax choices and activation in aINS predicting Lmin choices. The contributions of vmPFC to gain-seeking behavior (at the expense of potential losses) have been documented in both patient (Bechara et al., 2000) and neuroimaging studies (Tobler et al., 2007). In contrast, there has been substantial recent work demonstrating the importance of aINS for aversion to negative consequences, even to the point of making risk-averse mistakes in economic decisions (Kuhnen and Knutson, 2005; Paulus et al., 2003; Preuschoff et al., 2008; Rolls et al., 2008). Together, these findings suggest that the conventional notion that decisions reflect compensatory balancing of decision variables is an oversimplification. In addition, different brain regions bias how people approach decision problems, which may in turn lead to one form of behavior or another depending on the task context.

Furthermore, the balance between cognitive and affective brain regions did not, by itself, explain individual differences in strategy preferences. Instead, activation in another region, dmPFC, predicted variability in strategic preferences across subjects. The role of dmPFC in complex decision making remains poorly understood. One recent experiment found increased activation in this region when subjects faced greater decision-related conflict (Pochon et al., 2008), dissociable from the more commonly reported response conflict (Botvinick et al., 2001). A similar region of dmPFC was implicated by De Martino and colleagues (De Martino et al., 2006), again when subjects made decisions counter to their general behavioral tendency (i.e., against typical framing effects). Importantly, however, all subjects in their study exhibited a bias toward using the framing heuristic, whereas in the current study subjects varied in their relative preference for two different strategies. Therefore, a parsimonious explanation is that this region of dmPFC supports aspects of decision making that are coded relative to an underlying strategic tendency, rather than effects specific to framing. Further support for this hypothesis comes from the differential functional connectivity of dmPFC with dlPFC and anterior insula for simplifying and compensatory choices, respectively. These findings are consistent with the interpretation that differences in dmPFC activation shape behavior by modulating choice-related brain regions, with the strength of this modulatory effect depending on an individual’s preferred strategy.

We additionally observed a striking relationship between neurometric sensitivity to reward and strategic biases across individuals. Our initial analyses found that vSTR activation increased when subjects anticipated the outcome of a monetary gamble, increased further if the gamble was resolved to a gain, and decreased if it was resolved to a loss. This pattern is consistent with numerous prior studies using human neuroimaging (Breiter et al., 2001; Delgado et al., 2000; Seymour et al., 2007) and primate electrophysiology (Schultz et al., 1997). Novel to this study, the magnitude of the vSTR response was a strong predictor of individual strategic preferences. Specifically, sensitivity to gains and losses in the vSTR was greatest for individuals who preferred Pmax choices, consistent with their strategy of maximizing their chances of winning. We emphasize that the gambles were not resolved until after all decisions were made, so this effect cannot be attributed to learning from outcomes. Although our design does not allow us to determine the direction of causation, these results suggest that increased sensitivity to reward valence may lead to simple decision rules that overemphasize the probability of achieving a positive outcome.

Depending upon the circumstances, organisms may adopt strategies that emphasize different forms of computation, whether to obtain additional information (Daw et al., 2006), to improve models of outcome utility (Montague and Berns, 2002), or to simplify a complex decision problem. Accordingly, the activation of a given brain system (e.g., dlPFC) may sometimes lead to behavior consistent with economic theories of rationality (Sanfey et al., 2003) and in other circumstances (such as the present experiment) predict a non-normative choice consistent with a simplifying strategy. Our results demonstrate that decision making reflects an interaction among brain systems coding for different sorts of computations, with some regions (e.g., aINS, vmPFC) coding for specific behaviors and others (e.g., dmPFC) for preferred strategies.

Methods

Subjects

We conducted two behavioral experiments (N1 = 128, N2 = 71) and one fMRI experiment (N = 23). All subjects were young adults who participated for monetary payment. All subjects gave written informed consent as part of protocols approved by the Institutional Review Boards of Duke University and Duke University Medical Center. Details of the procedures for the behavioral experiments can be found in the supplementary materials.

Twenty-three healthy, neurologically normal young-adult volunteers (13 female; age range: 18–31y; mean age: 24y) participated in the fMRI session. None had participated in the behavioral pilot session. All subjects were acclimated to the fMRI environment using a mock MRI scanner and completed two short practice runs of six trials each, one inside and one outside of the fMRI scanner. Three subjects were excluded from some analyses involving strategy effects because their responses lacked variability (two subjects always chose the Pmax option, and the third never did), leaving 20 subjects in the complete analyses of the decision-making trials. One additional subject was excluded from analyses of the outcome-delivery trials because a computer error prevented the trial timing from being saved.
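The exclusion rule for the strategy analyses amounts to requiring that a subject's proportion of Pmax choices lie strictly between 0 and 1. A minimal sketch, assuming each subject's conflict-trial choices are stored as a list of labels; the subject IDs and label strings are hypothetical:

```python
def has_strategy_variability(choices):
    """True if the subject chose Pmax on some, but not all, conflict trials.

    `choices` holds one label per conflict trial: "Pmax" or "magnitude"
    (a Gmax or Lmin choice). Both labels are hypothetical.
    """
    n_pmax = choices.count("Pmax")
    return 0 < n_pmax < len(choices)


def included_subjects(choices_by_subject):
    """Subject IDs whose proportion of Pmax choices lies strictly between 0 and 1."""
    return [s for s, ch in choices_by_subject.items() if has_strategy_variability(ch)]


example = {
    "s01": ["Pmax"] * 72,                        # always Pmax: excluded
    "s02": ["magnitude"] * 72,                   # never Pmax: excluded
    "s03": ["Pmax"] * 40 + ["magnitude"] * 32,   # mixed: kept
}
print(included_subjects(example))  # ['s03']
```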

At the outset of the experiment, subjects were given detailed instructions about the payment procedures (see Supplementary Materials for details). They then received a sealed envelope containing an endowment to offset potential losses; the envelope was sufficiently translucent that subjects could see it contained cash, although they could not determine the amount. Subjects were also told that there was no deception in the study and were given an opportunity to ask the experimenter about any procedures before entering the scanner. All subjects reported that they understood and believed the procedures.

Experimental Stimuli

In the fMRI experiment, each subject was presented with a total of 120 five-outcome mixed gambles in a completely randomized order. Each gamble comprised two positive outcomes (an extreme outcome of $65 to $80 and an intermediate outcome of $35 to $50), two negative outcomes (an extreme outcome of −$65 to −$85 and an intermediate outcome of −$35 to −$50), and a central reference outcome. The reference outcome was $0 in half of the trials and a negative value ranging from −$10 to −$25 in the remaining half. The probability of each of the five outcomes varied between 0.1 and 0.3 in steps of 0.05, and the probabilities always summed to 1. The stimuli and methods for the behavioral experiments, which were similar, are described in the Supplementary Material.
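The constraints on the stimuli can be expressed as a small generator. This is an assumption-laden illustration, not the authors' stimulus code: it draws outcome values uniformly from the stated ranges in $5 steps and draws probabilities by rejection sampling in units of 0.05, and it does not reproduce the expected-value manipulations or counterbalancing described in the Supplementary Materials.

```python
import random


def sample_probabilities(rng):
    """Five probabilities in [0.10, 0.30], in steps of 0.05, summing to 1.

    Works in integer units of 0.05 (2 to 6 units per outcome, 20 units in
    total) and uses rejection sampling; this scheme is an assumption.
    """
    while True:
        units = [rng.randint(2, 6) for _ in range(5)]
        if sum(units) == 20:
            return [round(u * 0.05, 2) for u in units]


def sample_gamble(rng, neutral_reference):
    """One gamble as (value, probability) pairs, ordered best to worst."""
    reference = 0 if neutral_reference else -rng.randrange(10, 26, 5)
    values = [
        rng.randrange(65, 81, 5),    # extreme gain: $65 to $80
        rng.randrange(35, 51, 5),    # intermediate gain: $35 to $50
        reference,                   # $0, or -$10 to -$25
        -rng.randrange(35, 51, 5),   # intermediate loss: -$35 to -$50
        -rng.randrange(65, 86, 5),   # extreme loss: -$65 to -$85
    ]
    return list(zip(values, sample_probabilities(rng)))


def stimulus_set(seed=0, n=120):
    """n gambles, half with a $0 reference, shuffled into random order."""
    rng = random.Random(seed)
    gambles = [sample_gamble(rng, neutral_reference=(i < n // 2)) for i in range(n)]
    rng.shuffle(gambles)
    return gambles


print(stimulus_set()[0])
```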

On each trial, subjects chose between two options for adding money to one of the outcomes. Adding money to the reference outcome increased the overall chance of winning money relative to losing money and hence was called the probability-maximizing (Pmax) choice. Alternatively, adding money to an extreme outcome either increased the magnitude of the best monetary outcome or decreased the magnitude of the worst monetary outcome; these options were referred to as gain-maximizing (Gmax) and loss-minimizing (Lmin) choices, respectively. The amount of money that subjects could add ranged between $10 and $25 and could differ between the two options. For trials with negative reference values, one of the options always changed the reference outcome to $0. All outcome values used in this experiment were multiples of $5.
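The tradeoff between the Pmax and magnitude-sensitive options can be made concrete with a worked example. The gamble below is hypothetical (values and probabilities chosen for illustration, not taken from the stimulus set); exact fractions are used so the arithmetic is not blurred by floating-point rounding. In this example the Gmax and Lmin options have the higher expected value, whereas the Pmax option has the higher overall probability of winning.

```python
from fractions import Fraction as F


def expected_value(gamble):
    return sum(v * p for v, p in gamble)


def p_win(gamble):
    """Overall probability of a strictly positive outcome."""
    return sum(p for v, p in gamble if v > 0)


def p_lose(gamble):
    """Overall probability of a strictly negative outcome."""
    return sum(p for v, p in gamble if v < 0)


def add_to_outcome(gamble, index, amount):
    """Copy of `gamble` with `amount` dollars added to outcome `index`."""
    return [(v + amount if i == index else v, p) for i, (v, p) in enumerate(gamble)]


# Hypothetical gamble: (dollar value, exact probability), best to worst.
gamble = [(80, F(15, 100)), (40, F(20, 100)), (0, F(30, 100)),
          (-40, F(20, 100)), (-80, F(15, 100))]

pmax = add_to_outcome(gamble, 2, 10)   # $10 added to the $0 reference
gmax = add_to_outcome(gamble, 0, 25)   # $25 added to the extreme gain
lmin = add_to_outcome(gamble, 4, 25)   # $25 added to the extreme loss

for name, g in [("Pmax", pmax), ("Gmax", gmax), ("Lmin", lmin)]:
    print(name, float(expected_value(g)), float(p_win(g)), float(p_lose(g)))
# Pmax 3.0 0.65 0.35
# Gmax 3.75 0.35 0.35
# Lmin 3.75 0.35 0.35
```

With a negative reference value, the Pmax option would instead raise the reference to $0, leaving P(win) unchanged but lowering P(lose).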

Expected value relations between the two choices were systematically manipulated by changing the amounts and/or probabilities associated with each option (see Supplementary Materials). Only trial types that placed the two choices in maximal conflict (72 gambles per subject) were included in the primary imaging analyses; other trials were included in the model as separate regressors but were not analyzed further. Note that the trials were counterbalanced for the valence of the extreme outcome (i.e., gain or loss) and for the valence of the reference outcome (i.e., neutral or loss).

Experimental Design

Each trial began with the display of a five-outcome gamble for 4 or 6s (Fig. 1). Subjects were instructed to examine each gamble as it was presented. Subjects were then given a choice between two ways of improving the gamble. For both choices, the amount that could be added and the resulting modified outcome values were displayed in red, to minimize individual differences resulting from calculation or estimation biases. The modified gamble remained on the screen for 6s, after which two arrows appeared to indicate which button corresponded to which choice. The assignment of buttons to choices was random. Subjects then pressed the button corresponding to their choice. Response times were defined as the interval between the appearance of the arrows and the button press (note that this interval may not reflect actual decision times in this task). Subjects were instructed to arrive at their decision during the 6s interval and to press the corresponding button as soon as the arrows appeared. The decision and response phases were explicitly separated to prevent contamination of decision effects by response-preparation effects. A fixation cross was displayed during the inter-trial interval of 4–8s. No feedback was provided at the end of each trial; hence, there was no opportunity for explicit learning during the decision phase of the task.
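The within-trial timing can be laid out as an event schedule, which is also the form needed for building regression onsets. In this sketch the response-phase duration (`response_window`) is a hypothetical placeholder because the text does not state it, and the uniform inter-trial-interval distribution is an assumption.

```python
import random


def decision_run_schedule(rng, n_trials=20, response_window=1.0):
    """Return (events, total_duration) for one decision run.

    Each event is (trial, phase, onset_s, duration_s). Gamble display lasts
    4 or 6 s, the decision phase 6 s, the response phase `response_window`
    s (hypothetical), and the fixation ITI is drawn uniformly from 4-8 s
    (assumed distribution).
    """
    t = 0.0
    events = []
    for trial in range(n_trials):
        gamble_dur = rng.choice([4.0, 6.0])
        events.append((trial, "gamble", t, gamble_dur))
        t += gamble_dur
        events.append((trial, "decision", t, 6.0))
        t += 6.0
        events.append((trial, "response", t, response_window))
        t += response_window
        iti = rng.uniform(4.0, 8.0)
        events.append((trial, "fixation", t, iti))
        t += iti
    return events, t


events, total = decision_run_schedule(random.Random(1))
print(len(events), round(total, 1))
```

With a 1s response window, a 20-trial run lasts between 300s and 420s, in line with the approximately 6-minute runs reported below.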

Subjects participated in six runs of this decision task, each containing 20 gambles and lasting approximately 6 minutes. Before these runs, subjects practiced the task (without reward) in two six-gamble blocks, one presented outside the MRI scanner and the other presented inside the scanner before collection of the fMRI data. All stimuli were created using the Psychophysics Toolbox (Brainard, 1997; Pelli, 1997) for MATLAB (MathWorks, Inc.) and were presented via MR-compatible LCD goggles. Subjects responded with the index finger of each hand using an MR-compatible response box.

Following completion of the decision phase, there was a final 6-minute run in which 40 of the improved gambles were resolved to an actual monetary gain or loss. These gambles were selected randomly from the gambles presented during the decision phase and were presented in modified form based on that subject’s choices. On each trial, subjects passively viewed one of these improved gambles on the screen for 2s (anticipation phase), during which time random numbers flashed rapidly at the bottom of the screen before stopping at a particular value. A text message corresponding to the amount won or lost was then displayed for 1s, followed by an inter-trial fixation period of 3–7s before the onset of the next trial.
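Resolving an improved gamble amounts to drawing one outcome with the displayed probabilities. A minimal sketch, assuming the 40 gambles are sampled without replacement and each gamble is a list of (value, probability) pairs; the function names are hypothetical:

```python
import random


def resolve(gamble, rng):
    """Draw one outcome value from (value, probability) pairs."""
    values = [v for v, _ in gamble]
    weights = [p for _, p in gamble]
    return rng.choices(values, weights=weights, k=1)[0]


def outcome_run(improved_gambles, rng, n=40):
    """Randomly select n improved gambles (without replacement) and resolve each."""
    selected = rng.sample(improved_gambles, n)
    return [(g, resolve(g, rng)) for g in selected]


rng = random.Random(0)
pool = [[(10 * i, 0.5), (-10 * i, 0.5)] for i in range(1, 121)]
results = outcome_run(pool, rng)
print(len(results))  # 40
```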

Imaging Methods

We acquired fMRI data on a 4T GE scanner using an inverse-spiral pulse sequence (Glover and Law, 2001; Guo and Song, 2003) with the following parameters: TR = 2000ms; TE = 30ms; 34 axial slices parallel to the AC-PC plane; voxel size 3.75 × 3.75 × 3.8mm. High-resolution 3D full-brain SPGR anatomical images were acquired and used for normalizing and co-registering individual subjects’ data.

Analysis was carried out using FEAT (FMRI Expert Analysis Tool) Version 5.63, part of the FSL package (FMRIB's Software Library, www.fmrib.ox.ac.uk/fsl; Smith et al., 2004). The following pre-statistics processing steps were applied: motion correction using MCFLIRT, slice-timing correction, removal of non-brain voxels using BET, spatial smoothing with a Gaussian kernel of FWHM 8mm, and high-pass temporal filtering. Registration to high-resolution and standard images was carried out using FLIRT. All statistical images presented were thresholded using a cluster-forming threshold of z > 2.3 and a whole-brain corrected cluster significance threshold of p < 0.05.
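The cluster-forming step can be illustrated with a connected-component search over suprathreshold voxels. This sketch covers only that step: it labels 26-connected clusters of voxels with z > 2.3 (26-connectivity is an assumption here) and reports their sizes. FSL assigns the whole-brain corrected cluster p-values using Gaussian random field theory, which is not attempted below.

```python
import itertools
from collections import deque

import numpy as np


def suprathreshold_clusters(zmap, z_thresh=2.3):
    """Label 26-connected clusters of voxels with z > z_thresh.

    Returns (labels, sizes): an int array (0 = below threshold) and the list
    of cluster sizes in voxels, in order of discovery.
    """
    mask = zmap > z_thresh
    labels = np.zeros(zmap.shape, dtype=int)
    offsets = [d for d in itertools.product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]
    sizes = []
    for seed in zip(*np.nonzero(mask)):
        if labels[seed]:
            continue  # already assigned to an earlier cluster
        current = len(sizes) + 1
        labels[seed] = current
        queue, size = deque([seed]), 0
        while queue:  # breadth-first flood fill
            x, y, z = queue.popleft()
            size += 1
            for dx, dy, dz in offsets:
                n = (x + dx, y + dy, z + dz)
                in_bounds = all(0 <= n[i] < zmap.shape[i] for i in range(3))
                if in_bounds and mask[n] and not labels[n]:
                    labels[n] = current
                    queue.append(n)
        sizes.append(size)
    return labels, sizes
```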

We used separate first-level regression models to analyze decision effects and outcome effects. The decision model comprised two regressors modeling the magnitude-sensitive compensatory choices (Gmax and Lmin combined for additional power) and the simplifying Pmax choices in the conflict conditions, one regressor modeling choices in the remaining conditions, one regressor for the initial presentation of the gamble, and one regressor modeling the subjects’ button-press responses. (An additional post-hoc analysis in a subset of fifteen subjects separated the magnitude-sensitive choices according to whether they were gain-maximizing or loss-minimizing.) The outcome-phase model consisted of three regressors: one for the anticipation phase (while subjects waited for the outcome to be revealed), one for positive outcomes (gains), and one for negative outcomes (losses). All regressors were generated by convolving impulses at the onsets of events of interest with a double-gamma hemodynamic response function. Second-level analyses of condition and decision effects within each subject used a fixed-effects model across runs. Random-effects across-subjects analyses were carried out using FLAME (stage 1 only). When evaluating the effects of behavioral traits (transformed into z-scores) on brain function, we included subjects’ trait measures as additional covariates in the third-level analysis.
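Each regressor is built by placing unit impulses at event onsets and convolving them with a double-gamma hemodynamic response function. The sketch below uses SPM-style canonical shape parameters (gamma shapes 6 and 16, undershoot ratio 1/6, time in seconds), which are an assumption; FEAT's own double-gamma function may differ in detail, and its temporal filtering and derivative options are not reproduced.

```python
import math

import numpy as np


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma HRF (SPM-style parameters, assumed), scaled to peak 1."""
    t = np.asarray(t, dtype=float)

    def gamma_pdf(x, k):
        out = np.zeros_like(x)
        pos = x > 0
        out[pos] = x[pos] ** (k - 1) * np.exp(-x[pos]) / math.gamma(k)
        return out

    h = gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, under_shape)
    return h / h.max()


def event_regressor(onsets, n_scans, tr=2.0, dt=0.1, hrf_length=32.0):
    """Convolve unit impulses at `onsets` (s) with the HRF; sample once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    impulses = np.zeros(n_fine)
    for onset in onsets:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            impulses[idx] += 1.0
    hrf = double_gamma_hrf(np.arange(0.0, hrf_length, dt))
    fine = np.convolve(impulses, hrf)[:n_fine]  # truncate to run length
    return fine[::int(round(tr / dt))]          # one value per volume


regressor = event_regressor([10.0, 40.0], n_scans=30)
print(regressor.round(2))
```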

Logistic Regression Models of Trial-to-Trial Choices

To obtain single-trial parameter estimates for the trial-by-trial prediction analysis, we used data that were corrected for motion and slice-timing differences but not spatially smoothed. The data were also transformed into standard space, in which the individual regions of interest (ROIs) were defined. We used seven ROIs for this analysis: the right anterior insula and ventromedial prefrontal cortex, which showed greater activation for Lmin and Gmax choices, respectively; the right posterior parietal cortex, right precuneus, and right dorsolateral prefrontal cortex, which showed greater activation for Pmax choices; and the dorsomedial prefrontal cortex and right inferior frontal gyrus, which tracked strategic variability across subjects. All ROIs were defined functionally from the third-level activation maps. Activation amplitude was defined as the mean percent signal change over the 6s interval from 4s to 10s after the onset of the decision phase (i.e., when subjects were shown the two alternative choices). This time window was chosen to encompass the peak of the fMRI hemodynamic response. A summary measure was obtained for each ROI by averaging over all constituent voxels.
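The single-trial amplitude measure can be sketched as follows. Two details are assumptions not stated in the text: percent signal change is computed relative to the run mean of the ROI time series, and the 4–10s window is sampled by linear interpolation at 0.5s steps, since decision-phase onsets need not align with volume acquisitions.

```python
import numpy as np


def roi_trial_amplitudes(voxel_ts, onsets, tr=2.0, window=(4.0, 10.0), step=0.5):
    """Single-trial ROI amplitudes in percent signal change.

    voxel_ts: array (n_voxels, n_scans) of unsmoothed, standard-space data
    for one ROI and one run. Voxels are averaged into one ROI time series,
    converted to percent change from the run mean (assumed baseline), and
    averaged over window[0]..window[1] s after each decision-phase onset.
    """
    roi = np.asarray(voxel_ts, dtype=float).mean(axis=0)
    psc = 100.0 * (roi - roi.mean()) / roi.mean()
    scan_times = np.arange(roi.size) * tr
    offsets = np.arange(window[0], window[1] + step / 2, step)
    return np.array([np.interp(onset + offsets, scan_times, psc).mean() for onset in onsets])
```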

We then performed a hierarchical logistic regression using SPSS to predict the choices made by subjects on each individual trial based on strategic preference (proportion of Pmax choices), brain activation, and interactions between trait and activation. The complete model included a total of 1440 trials (72 trials for each of 20 subjects). Parameters were entered into the model in a stepwise manner, starting with just the behavioral trait measure, then brain activations from the seven ROIs, and finally an interaction term consisting of activation in dmPFC multiplied by strategic tendency. All parameters that significantly improved the model at each stage are summarized in Table 1. The results were consistent regardless of whether forward selection or backward elimination was used in the hierarchical regression.
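The structure of the final model (strategic tendency, ROI activation, and a dmPFC × tendency interaction predicting whether each trial was a Pmax choice) can be sketched as a trial-level logistic regression. The authors used SPSS; this Python version fits the same kind of model by Newton-Raphson on simulated data, so the generating coefficients, the random seed, and the single simulated dmPFC predictor are all hypothetical. The likelihood-ratio statistic compares the trait-only model with the model that adds activation and the interaction, mirroring the stepwise entry described above.

```python
import numpy as np


def fit_logistic(X, y, n_iter=50, tol=1e-10):
    """Maximum-likelihood logistic regression by Newton-Raphson (IRLS).

    X: (n_trials, n_predictors) design matrix with an intercept column.
    y: 0/1 outcomes (here, 1 = Pmax choice). Returns the coefficients.
    """
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        grad = X.T @ (y - p)
        hess = X.T @ (X * (p * (1.0 - p))[:, None])
        delta = np.linalg.solve(hess, grad)
        beta += delta
        if np.max(np.abs(delta)) < tol:
            break
    return beta


def log_likelihood(X, y, beta):
    eta = X @ beta
    return float(np.sum(y * eta - np.logaddexp(0.0, eta)))


rng = np.random.default_rng(0)
n_sub, n_trial = 20, 72
n = n_sub * n_trial
trait = rng.normal(size=n_sub)                # simulated z-scored Pmax preference
subject = np.repeat(np.arange(n_sub), n_trial)
dmpfc = rng.normal(size=n)                    # simulated single-trial dmPFC amplitude
X_full = np.column_stack([np.ones(n), trait[subject], dmpfc, trait[subject] * dmpfc])
true_beta = np.array([0.0, 1.0, 0.5, -0.8])   # hypothetical generating coefficients
y = (rng.random(n) < 1.0 / (1.0 + np.exp(-X_full @ true_beta))).astype(float)

X_trait = X_full[:, :2]                       # step 1: intercept + trait only
beta_trait = fit_logistic(X_trait, y)
beta_full = fit_logistic(X_full, y)           # final step: + activation + interaction
lr = 2.0 * (log_likelihood(X_full, y, beta_full) - log_likelihood(X_trait, y, beta_trait))
print(np.round(beta_full, 2), round(lr, 1))
```

Like a standard logistic model, this sketch pools all trials and treats them as independent; it does not model subject-level clustering.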

Functional-Connectivity Analyses

We used a modified version of the decision model described above to perform a task-related connectivity analysis. A seed region was defined from the dmPFC activation that covaried with strategic variability across subjects (Fig. 3). For each run of each subject, we extracted the time series from this region. A box-car vector was then defined for each condition of interest, with the “on” period spanning 4s–10s after the onset of the decision phase for each trial in that condition. These box-car vectors were multiplied by the extracted time series to form the connectivity regressors, allowing us to examine connectivity as a function of strategy, specific to the decision phase. These regressors were then entered as covariates in a separate GLM analysis that also included the original variables of interest from the decision model (Cohen et al., 2005). Group activation maps were then obtained in the same way as for the standard regression analysis. A positive effect for a connectivity regressor indicates that a region correlates more positively with the seed region during the experimental condition of interest.
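The connectivity regressors described here are element-wise products of the seed time series and condition-specific box-cars. A minimal sketch, assuming volumes are "on" when their acquisition time falls 4–10s after a decision-phase onset; mean-centring the seed is an assumption of this sketch, and the condition names are hypothetical.

```python
import numpy as np


def connectivity_regressors(seed_ts, onsets_by_condition, tr=2.0, window=(4.0, 10.0)):
    """Seed-by-condition interaction regressors.

    For each condition, a box-car is 1 for volumes acquired between window[0]
    and window[1] s after any decision-phase onset in that condition, else 0;
    the regressor is the element-wise product of box-car and (centred) seed.
    """
    seed = np.asarray(seed_ts, dtype=float)
    seed = seed - seed.mean()
    times = np.arange(seed.size) * tr
    regressors = {}
    for condition, onsets in onsets_by_condition.items():
        boxcar = np.zeros(seed.size)
        for onset in onsets:
            on = (times >= onset + window[0]) & (times <= onset + window[1])
            boxcar[on] = 1.0
        regressors[condition] = boxcar * seed
    return regressors


regs = connectivity_regressors(np.arange(10, dtype=float), {"Pmax": [0.0], "compensatory": [8.0]})
print(regs["Pmax"])
```

Because the product is formed on the measured BOLD signal rather than on an estimated neural signal, this corresponds to the simpler, non-deconvolved form of an interaction regressor.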

Supplementary Material

Acknowledgments

We thank Antonio Rangel and Charles Noussair for suggestions about behavioral analyses, Michael Platt, Alison Adcock, and Enrico Diecidue for comments on the manuscript, Adrienne Taren for assistance with the connectivity analysis, and Kelsey Merison for assistance in data collection. This research was supported by the US National Institute of Mental Health (NIMH-70685) and by the US National Institute of Neurological Disorders and Stroke (NINDS-41328). Scott Huettel was supported by an Incubator Award from the Duke Institute for Brain Sciences.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Supplementary Information accompanies the online version of the paper.

The authors have no competing financial interests.

Author Contributions

All authors contributed to the design of the experiments. VV led the programming of the experiments, data collection, and data analysis, in collaboration with SAH. All authors contributed to writing and editing the final manuscript.

References

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Bechara A, Tranel D, Damasio H. Characterization of the decision-making deficit of patients with ventromedial prefrontal cortex lesions. Brain. 2000;123:2189–2202. doi: 10.1093/brain/123.11.2189.

- Behrens TE, Woolrich MW, Walton ME, Rushworth MF. Learning the value of information in an uncertain world. Nat Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

- Birnbaum MH. New paradoxes of risky decision making. Psychological Review. 2008;115:463–501. doi: 10.1037/0033-295X.115.2.463.

- Botvinick M, Nystrom LE, Fissell K, Carter CS, Cohen JD. Conflict monitoring versus selection-for-action in anterior cingulate cortex. Nature. 1999;402:179–181. doi: 10.1038/46035.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychol Rev. 2001;108:624–652. doi: 10.1037/0033-295x.108.3.624.

- Brainard DH. The Psychophysics Toolbox. Spatial Vision. 1997;10:433–436.

- Breiter HC, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–639. doi: 10.1016/s0896-6273(01)00303-8.

- Camerer CF. Behavioural studies of strategic thinking in games. Trends Cogn Sci. 2003;7:225–231. doi: 10.1016/s1364-6613(03)00094-9.

- Cohen MX, Heller AS, Ranganath C. Functional connectivity with anterior cingulate and orbitofrontal cortices during decision-making. Brain Res Cogn Brain Res. 2005;23:61–70. doi: 10.1016/j.cogbrainres.2005.01.010.

- Coricelli G, Critchley HD, Joffily M, O'Doherty JP, Sirigu A, Dolan RJ. Regret and its avoidance: a neuroimaging study of choice behavior. Nature Neuroscience. 2005;8:1255–1262. doi: 10.1038/nn1514.

- Daw ND, O'Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- De Martino B, Kumaran D, Seymour B, Dolan RJ. Frames, biases, and rational decision-making in the human brain. Science. 2006;313:684–687. doi: 10.1126/science.1128356.

- Delgado MR, Nystrom LE, Fissell C, Noll DC, Fiez JA. Tracking the hemodynamic responses to reward and punishment in the striatum. Journal of Neurophysiology. 2000;84:3072–3077. doi: 10.1152/jn.2000.84.6.3072.

- Dorris MC, Glimcher PW. Activity in posterior parietal cortex is correlated with the relative subjective desirability of action. Neuron. 2004;44:365–378. doi: 10.1016/j.neuron.2004.09.009.

- Fennema H, Wakker P. Original and cumulative prospect theory: A discussion of empirical differences. Journal of Behavioral Decision Making. 1997;10:53–64.

- Gigerenzer G, Goldstein DG. Reasoning the fast and frugal way: models of bounded rationality. Psychol Rev. 1996;103:650–669. doi: 10.1037/0033-295x.103.4.650.

- Glover GH, Law CS. Spiral-in/out BOLD fMRI for increased SNR and reduced susceptibility artifacts. Magn Reson Med. 2001;46:515–522. doi: 10.1002/mrm.1222.

- Guo H, Song AW. Spiral-in-and-out functional image acquisition with embedded z-shimming for susceptibility signal recovery. Journal of Magnetic Resonance Imaging. 2003;18:389–395. doi: 10.1002/jmri.10355.

- Heekeren HR, Marrett S, Bandettini PA, Ungerleider LG. A general mechanism for perceptual decision-making in the human brain. Nature. 2004;431:859–862. doi: 10.1038/nature02966.

- Heekeren HR, Marrett S, Ruff DA, Bandettini PA, Ungerleider LG. Involvement of human left dorsolateral prefrontal cortex in perceptual decision making is independent of response modality. Proceedings of the National Academy of Sciences of the United States of America. 2006;103:10023–10028. doi: 10.1073/pnas.0603949103.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science. 2005;310:1680–1683. doi: 10.1126/science.1115327.

- Huettel SA. Behavioral, but not reward, risk modulates activation of prefrontal, parietal, and insular cortices. Cognitive, Affective, and Behavioral Neuroscience. 2006;6:141–151. doi: 10.3758/cabn.6.2.141.

- Huettel SA, Song AW, McCarthy G. Decisions under uncertainty: Probabilistic context influences activity of prefrontal and parietal cortices. Journal of Neuroscience. 2005;25:3304–3311. doi: 10.1523/JNEUROSCI.5070-04.2005.

- Huettel SA, Stowe CJ, Gordon EM, Warner BT, Platt ML. Neural signatures of economic preferences for risk and ambiguity. Neuron. 2006;49:765–775. doi: 10.1016/j.neuron.2006.01.024.

- Kahneman D, Frederick S. Representativeness revisited: Attribute substitution in intuitive judgment. In: Gilovich T, Griffin D, Kahneman D, editors. Heuristics and biases: The psychology of intuitive judgment. New York: Cambridge University Press; 2002. pp. 49–81.

- Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision making and the anterior cingulate cortex. Nat Neurosci. 2006;9:940–947. doi: 10.1038/nn1724.

- Kerns JG, Cohen JD, MacDonald AW, 3rd, Cho RY, Stenger VA, Carter CS. Anterior cingulate conflict monitoring and adjustments in control. Science. 2004;303:1023–1026. doi: 10.1126/science.1089910.

- Kobayashi S, Lauwereyns J, Koizumi M, Sakagami M, Hikosaka O. Influence of reward expectation on visuospatial processing in macaque lateral prefrontal cortex. J Neurophysiol. 2002;87:1488–1498. doi: 10.1152/jn.00472.2001.

- Kuhnen CM, Knutson B. The neural basis of financial risk taking. Neuron. 2005;47:763–770. doi: 10.1016/j.neuron.2005.08.008.

- Lopes LL, Oden GC. The role of aspiration level in risky choice: A comparison of cumulative prospect theory and SP/A theory. Journal of Mathematical Psychology. 1999;43:286–313. doi: 10.1006/jmps.1999.1259.

- Montague PR, Berns GS. Neural economics and the biological substrates of valuation. Neuron. 2002;36:265–284. doi: 10.1016/s0896-6273(02)00974-1.

- Paulus MP, Hozack N, Zauscher B, McDowell JE, Frank L, Brown GG, Braff DL. Prefrontal, parietal, and temporal cortex networks underlie decision-making in the presence of uncertainty. NeuroImage. 2001;13:91–100. doi: 10.1006/nimg.2000.0667.

- Paulus MP, Rogalsky C, Simmons A, Feinstein JS, Stein MB. Increased activation in the right insula during risk-taking decision making is related to harm avoidance and neuroticism. NeuroImage. 2003;19:1439–1448. doi: 10.1016/s1053-8119(03)00251-9.

- Payne JW. It is whether you win or lose: The importance of the overall probabilities of winning or losing in risky choice. J Risk Uncertainty. 2005;30:5–19.

- Payne JW, Bettman JR, Coupey E, Johnson EJ. A Constructive Process View of Decision-Making - Multiple Strategies in Judgment and Choice. Acta Psychologica. 1992;80:107–141.

- Payne JW, Bettman JR, Johnson EJ. Adaptive Strategy Selection in Decision-Making. Journal of Experimental Psychology-Learning Memory and Cognition. 1988;14:534–552.

- Payne JW, Bettman JR, Johnson EJ. The Adaptive Decision Maker. Cambridge University Press; 1993.

- Pelli DG. The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision. 1997;10:437–442.

- Platt ML, Huettel SA. Risky business: the neuroeconomics of decision making under uncertainty. Nat Neurosci. 2008;11:398–403. doi: 10.1038/nn2062.

- Pochon JB, Riis J, Sanfey AG, Nystrom LE, Cohen JD. Functional imaging of decision conflict. Journal of Neuroscience. 2008;28:3468–3473. doi: 10.1523/JNEUROSCI.4195-07.2008.

- Preuschoff K, Quartz SR, Bossaerts P. Human insula activation reflects risk prediction errors as well as risk. J Neurosci. 2008;28:2745–2752. doi: 10.1523/JNEUROSCI.4286-07.2008.

- Rolls ET, McCabe C, Redoute J. Expected value, reward outcome, and temporal difference error representations in a probabilistic decision task. Cerebral Cortex. 2008;18:652–663. doi: 10.1093/cercor/bhm097.

- Sanfey AG, Rilling JK, Aronson JA, Nystrom LE, Cohen JD. The neural basis of economic decision-making in the Ultimatum Game. Science. 2003;300:1755–1758. doi: 10.1126/science.1082976.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- Seymour B, Daw N, Dayan P, Singer T, Dolan R. Differential encoding of losses and gains in the human striatum. J Neurosci. 2007;27:4826–4831. doi: 10.1523/JNEUROSCI.0400-07.2007.

- Shah AK, Oppenheimer DM. Heuristics made easy: An effort-reduction framework. Psychol Bull. 2008;134:207–222. doi: 10.1037/0033-2909.134.2.207.

- Simon HA. Models of Man: Social and Rational. New York: Wiley; 1957.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TEJ, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, et al. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23:S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Tobler PN, Christopoulos GI, O'Doherty JP, Dolan RJ, Schultz W. Neuronal distortions of reward probability without choice. J Neurosci. 2008;28:11703–11711. doi: 10.1523/JNEUROSCI.2870-08.2008.

- Tobler PN, O'Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. Journal of Neurophysiology. 2007;97:1621–1632. doi: 10.1152/jn.00745.2006.

- Tom SM, Fox CR, Trepel C, Poldrack RA. The neural basis of loss aversion in decision-making under risk. Science. 2007;315:515–518. doi: 10.1126/science.1134239.

- Tversky A, Kahneman D. Judgment under uncertainty: Heuristics and biases. Science. 1974;185:1124–1131. doi: 10.1126/science.185.4157.1124.

- Tversky A, Kahneman D. Advances in prospect theory: Cumulative representation of uncertainty. J Risk Uncertainty. 1992;5:297–323.

- Wu G, Zhang J, Gonzalez R. Decision Under Risk. In: Koehler D, Harvey N, editors. Handbook of Judgment and Decision Making. Blackwell Publishing; 2007.

- Yacubian J, Glascher J, Schroeder K, Sommer T, Braus DF, Buchel C. Dissociable systems for gain- and loss-related value predictions and errors of prediction in the human brain. J Neurosci. 2006;26:9530–9537. doi: 10.1523/JNEUROSCI.2915-06.2006.
