Abstract

Identifying emerging viral pathogens and characterizing their transmission is essential to developing effective public health measures in response to an epidemic. Phylogenetics, though currently the most popular tool used to characterize the likely host of a virus, can be ambiguous when studying species very distant to known species and when there is very little reliable sequence information available in the early stages of the outbreak of disease. Motivated by an existing framework for representing biological sequence information, we learn sparse, tree-structured models, built from decision rules based on subsequences, to predict viral hosts from protein sequence data using popular discriminative machine learning tools. Furthermore, the predictive motifs robustly selected by the learning algorithm are found to show strong host-specificity and occur in highly conserved regions of the viral proteome.

Introduction

Emerging pathogens constitute a continuous threat to our society, as it is notoriously difficult to perform a realistic assessment of optimal public health measures when little information on the pathogen is available. Recent outbreaks include the West Nile virus in New York (1999); SARS coronavirus in Hong Kong (2002–2003); LUJO virus in Lusaka (2008); H1N1 influenza pandemic virus in Mexico and the US (2009); and cholera in Haiti (2010). In all these cases, an outbreak of unusual clinical diagnoses triggered a rapid response, and an essential part of this response is the accurate identification and characterization of the pathogen.

Sequencing is becoming the most common and reliable technique to identify novel organisms. For instance, LUJO was identified as a novel, very distinct virus after the sequence of its genome was compared to other arenaviruses [1]. The genome of an organism is a unique fingerprint that reveals many of its properties and past history. For instance, arenaviruses are zoonotic agents usually transmitted from rodents.

Another promising area of research is metagenomics, in which DNA and RNA samples from different environments are sequenced using shotgun approaches. Metagenomics is providing an unbiased understanding of the different species that inhabit a particular niche. Examples include the human microbiome and virome, and the Ocean metagenomics collection [2]. It has been estimated that there are more than 600 bacterial species living in the mouth but that only 20% have been characterized.

Pathogen identification and metagenomic analysis point to an extremely rich diversity of unknown species, where partial genomic sequence is the only information available. The main aim of this work is to develop approaches that can help infer characteristics of an organism from subsequences of its genomic sequence where primary sequence information analysis does not allow us to identify its origin. In particular, our work will focus on predicting the host of a virus from the viral genome.

The most common approach to deduce a likely host of a virus from the viral genome is sequence/phylogenetic similarity (i.e., the most likely host of a particular virus is the one that is infected by related viral species). However, similarity measures based on genomic/protein sequence or protein structure could be misleading when dealing with species very distant from known, annotated species. Other approaches are based on the fact that viruses undergo mutational and evolutionary pressures from the host. For instance, viruses could adapt their codon bias for a more efficient interaction with the host translational machinery, or they could be under pressure from deaminating enzymes (e.g., APOBEC3G in HIV infection). All these factors imprint characteristic signatures in the viral genome. Several techniques have been developed to extract these patterns (e.g., nucleotide and dinucleotide compositional biases, and frequency analysis techniques [3]). Although most of these techniques could reveal an underlying biological mechanism, they lack sufficient accuracy to provide reliable assessments [4], [5]. An approach related to the one presented here is DNA barcoding. Genetic barcoding identifies conserved genomic structures that contain the necessary information for classification.

Using contemporary machine learning techniques, we present an approach to predicting the hosts of unseen viruses, based on the amino acid sequences of proteins of viruses whose hosts are well known. Given protein sequence and host information of Picornaviridae and Rhabdoviridae, two well-characterized families of RNA viruses, we learn a multi-class classifier composed of simple sequence-motif based questions (e.g., does the viral sequence contain the motif ‘DALMWLPD’?) that achieves high prediction accuracies on held-out data. Prediction accuracy of the classifier is measured by the area under the ROC curve, and is compared to a straightforward nearest-neighbor classifier. Importantly (and quite surprisingly), a post-processing study of the highly predictive sequence motifs selected by the algorithm identifies strongly conserved regions of the viral genome, facilitating biological interpretation.

Results

Data specifications

We aim to learn a predictive model to identify hosts of viruses belonging to a specific family; we show results for Picornaviridae and Rhabdoviridae. Picornaviridae is a family of viruses that contain a single-stranded, positive-sense RNA genome. The viral genome usually contains 1–2 open reading frames (ORFs), each coding for protein sequences about 2000–3000 amino acids long. Rhabdoviridae is a family of negative-sense, single-stranded RNA viruses whose genomes typically code for five different proteins: large protein (L), nucleoprotein (N), phosphoprotein (P), glycoprotein (G), and matrix protein (M). The data consist of 148 viruses in the Picornaviridae family and 50 viruses in the Rhabdoviridae family. For some choice of $k$ and $m$, we represent each virus as a vector of counts of all possible $k$-mers, up to $m$ mismatches, generated from the amino-acid alphabet. Each virus is also assigned a label depending on its host: vertebrate/invertebrate/plant in the case of Picornaviridae, and animal/plant in the case of Rhabdoviridae (see Tables S1 and S2 for the names and label assignments of viruses). Using multiclass Adaboost [6], we learn an Alternating Decision Tree (ADT) classifier [7] on training data drawn from the set of labeled viruses and test the model on the held-out viruses.

BLAST Classifier accuracy

Given whole protein sequences, a straightforward classifier is given by a nearest-neighbor approach based on the Basic Local Alignment Search Tool (BLAST) [8]. We can use the BLAST score (or its associated significance value) as a measure of the distance between the unknown virus and a set of viruses with known hosts. The nearest-neighbor approach to classification then assigns the host of the closest virus to the unknown virus. Intuitively, as this approach uses the whole protein to perform the classification, we expect the accuracy to be very high. This is indeed the case: BLAST, along with a nearest-neighbor classifier, successfully classifies all viruses in the Rhabdoviridae family, and all but 3 viruses in the Picornaviridae family. What is missing from this approach, however, is the ability to ascertain and interpret host-relevant motifs.
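The nearest-neighbor rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the pipeline used in this work: the distances are assumed to have been computed beforehand (for example from BLAST scores), and the virus names, host labels and distance values are hypothetical.

```python
from typing import Dict


def nearest_neighbor_host(distances: Dict[str, float], hosts: Dict[str, str]) -> str:
    """Return the host label of the reference virus closest to the query."""
    # Pick the reference virus with the smallest distance to the query.
    closest = min(distances, key=distances.get)
    return hosts[closest]


# Hypothetical distances from one query virus to three reference viruses.
distances = {"virus_A": 0.42, "virus_B": 0.07, "virus_C": 0.91}
# Hypothetical host labels of the reference viruses.
hosts = {"virus_A": "plant", "virus_B": "vertebrate", "virus_C": "invertebrate"}

predicted = nearest_neighbor_host(distances, hosts)
print(predicted)
```

The rule returns a label but no indication of which parts of the sequence drove the decision, which is the limitation noted above.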

ADT Classifier accuracy

The accuracy of the ADT model, at each round of boosting, is evaluated using a multi-class extension of the Area Under the Curve (AUC). Here the ‘curve’ is the Receiver Operating Characteristic (ROC) which traces a measure of the classification accuracy of the ADT for each value of a real-valued discrimination threshold. As this threshold is varied, a virus is considered a true (or false) positive if the prediction of the ADT model for the true class of that protein is greater (or less) than the threshold value. The ROC curve is then traced out in True Positive Rate – False Positive Rate space by changing the threshold value and the AUC score is defined as the area under this ROC curve.
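As a rough illustration of the AUC computation, the sketch below uses the equivalent rank formulation: the area under the ROC curve equals the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one. The multi-class extension shown here, a one-vs-rest average over classes, is an assumption made for illustration; the function names and toy data are hypothetical.

```python
from typing import List, Sequence


def roc_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Binary AUC: fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y != 1]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


def one_vs_rest_auc(score_rows: List[List[float]], true_classes: List[int]) -> float:
    """Average the binary AUC over classes (an assumed multi-class extension)."""
    num_classes = len(score_rows[0])
    aucs = []
    for c in range(num_classes):
        scores = [row[c] for row in score_rows]
        labels = [1 if t == c else 0 for t in true_classes]
        aucs.append(roc_auc(scores, labels))
    return sum(aucs) / num_classes


# Toy example: per-class scores for four viruses and their true class indices.
score_rows = [[0.9, -0.9], [0.4, -0.4], [0.6, -0.6], [0.1, -0.1]]
true_classes = [0, 0, 1, 1]
print(one_vs_rest_auc(score_rows, true_classes))
```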

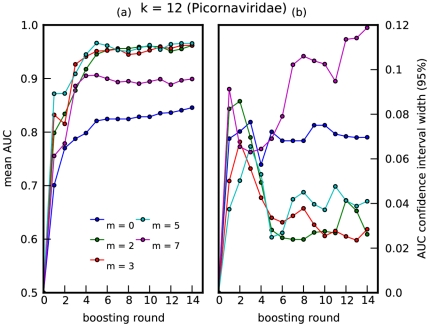

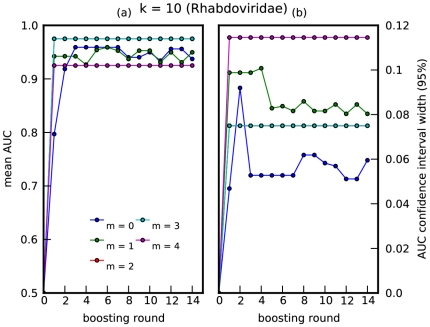

The ADT is trained using 10-fold cross validation, calculating the AUC, at each round of boosting, for each fold using the held-out data. The mean AUC and standard deviation over all folds are plotted against boosting round in Figures 1 and 2. Note that the ‘smoothing effect’ introduced by using the mismatch feature space allows for improved prediction accuracy for $m > 0$. For Picornaviridae, the best accuracy is achieved at a particular value of $m$ for a given choice of $k$; this degree of ‘smoothing’ is optimal for the algorithm to capture predictive amino-acid subsequences present, up to a certain mismatch, in rapidly mutating viral protein sequences. For Rhabdoviridae, near-perfect accuracy is achieved with merely one decision rule, i.e., Rhabdoviridae with plant or animal hosts can be distinguished based on the presence or absence of one highly conserved region in the L protein.

Figure 1. Prediction accuracy for Picornaviridae.

A plot of (a) mean AUC vs boosting round, and (b) 95% confidence interval vs boosting round. The mean and standard deviation were computed over 10 folds of held-out data, for Picornaviridae, for the chosen values of $k$ and $m$. Boosting round 0 corresponds to introducing the offset term into the model. Thus, the boosting round can also be interpreted as one-half the number of decision rules (one-half because each round introduces a decision rule and its negation into the model).

Figure 2. Prediction accuracy for Rhabdoviridae.

A plot of (a) mean AUC vs boosting round, and (b) 95% confidence interval vs boosting round. The mean and standard deviation were computed over 5 folds of held-out data, for Rhabdoviridae, for the chosen values of $k$ and $m$. The relatively higher uncertainty for this virus family was likely due to very small sample sizes. Note that the cyan curve lies on top of the red curve.

Over-representation of highly similar viruses within the data used for learning is an important source of overfitting that should be kept in mind when using this technique. Specifically, if the data largely consist of nearly identical viral sequences (e.g., different sequence reads from the same virus), the learned ADT model would overfit to insignificant variations within the data (even if 10-fold cross validation were employed), making generalization to new subfamilies of viruses extremely poor. To check for this, we hold out viruses corresponding to a particular subfamily (see Tables S1 and S2 for subfamily annotation of the viruses used), run 10-fold cross validation on the remaining data and compute the expected fraction of misclassified viruses in the held-out subfamily, averaged over the learned ADT models. Tables 1 and 2 list the mean classifier validation error and number of viruses for subfamilies in Picornaviridae and Rhabdoviridae. Note that the Picornaviridae data used consist mostly of Cripaviruses; thus, the high misclassification rate when holding out Cripaviruses could also be attributed to the significantly smaller sample available for learning. Most of the other poorly classified subfamilies contain only a small number of viruses, suggesting that the method generalizes well on average.
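The subfamily hold-out check can be sketched as follows. This is a simplified illustration under stated assumptions: it only shows how the data are split by subfamily and how the held-out error is averaged over several learned models; the training step itself is omitted, and the function names and toy labels are hypothetical.

```python
from collections import defaultdict
from typing import Iterator, List, Tuple


def leave_one_group_out(groups: List[str]) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (group, training indices, held-out indices) for each subfamily."""
    by_group = defaultdict(list)
    for i, g in enumerate(groups):
        by_group[g].append(i)
    for g, held_out in by_group.items():
        held = set(held_out)
        train = [i for i in range(len(groups)) if i not in held]
        yield g, train, held_out


def misclassification_rate(predicted: List[str], actual: List[str]) -> float:
    """Fraction of held-out viruses whose predicted host is wrong."""
    wrong = sum(p != a for p, a in zip(predicted, actual))
    return wrong / len(actual)


def mean_heldout_error(predictions_per_model: List[List[str]], actual: List[str]) -> float:
    """Average the held-out error over the models learned in cross validation."""
    errors = [misclassification_rate(p, actual) for p in predictions_per_model]
    return sum(errors) / len(errors)


# Toy subfamily annotation for five viruses.
groups = ["Enterovirus", "Enterovirus", "Kobuvirus", "Cripavirus", "Cripavirus"]
splits = list(leave_one_group_out(groups))
for name, train_idx, held_idx in splits:
    print(name, train_idx, held_idx)
```

Each split would be used to train models on `train_idx` only, so that no member of the held-out subfamily is seen during learning.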

Table 1. Validation error for virus subfamilies in Picornaviridae.

| Subfamily | Number of viruses | Validation error |
| --- | --- | --- |
| Hepatovirus | 2 | 0.40 |
| Waikivirus | 1 | 0.00 |
| Aphthovirus | 8 | 0.07 |
| Parechovirus | 3 | 0.47 |
| Tremovirus | 1 | 1.00 |
| Cardiovirus | 4 | 0.23 |
| Enterovirus | 17 | 0.12 |
| Iflavirus | 4 | 0.47 |
| Sequivirus | 1 | 0.50 |
| Senecavirus | 1 | 0.20 |
| Teschovirus | 1 | 0.30 |
| Sapelovirus | 1 | 0.00 |
| Kobuvirus | 4 | 0.00 |
| Waikavirus | 1 | 0.70 |
| Rhinovirus | 3 | 0.10 |
| Marnavirus | 1 | 0.30 |
| Cripavirus | 76 | 1.00 |
| Erbovirus | 3 | 0.30 |

Table 2. Validation error for virus subfamilies in Rhabdoviridae.

| Subfamily | Number of viruses | Validation error |
| --- | --- | --- |
| Lyssavirus | 18 | 0.00 |
| Novirhabdovirus | 8 | 0.75 |
| Dimarhabdovirus | 4 | 0.00 |
| Cytorhabdovirus | 4 | 0.77 |
| Nucleorhabdovirus | 5 | 0.52 |
| Vesiculovirus | 8 | 0.00 |
| Ephemerovirus | 1 | 0.00 |

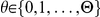

Predictive subsequences are conserved within hosts

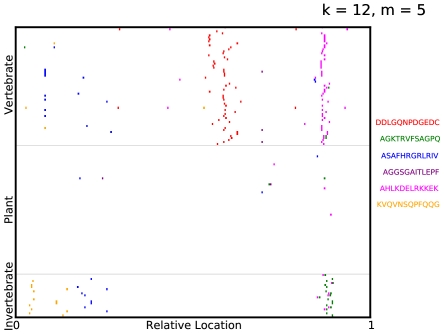

Having learned a highly predictive model, we would like to locate where the selected  -mers occur in the viral proteomes. We visualize the

-mers occur in the viral proteomes. We visualize the  -mer subsequences selected in a specific ADT by indicating elements of the mismatch neighborhood of each selected subsequence on the virus protein sequences. In Figure 3, the virus proteomes are grouped vertically by their label with their lengths scaled to

-mer subsequences selected in a specific ADT by indicating elements of the mismatch neighborhood of each selected subsequence on the virus protein sequences. In Figure 3, the virus proteomes are grouped vertically by their label with their lengths scaled to  . Quite surprisingly, the predictive

. Quite surprisingly, the predictive  -mers occur in regions that are strongly conserved among viruses sharing a specific host. Note that the representation we used for viral sequences retained no information regarding the location of each

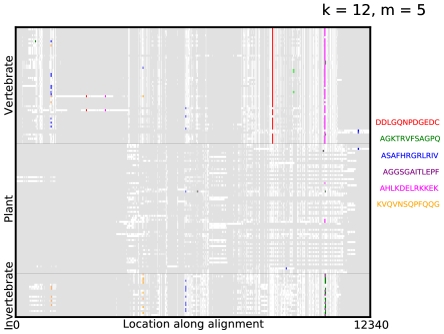

-mers occur in regions that are strongly conserved among viruses sharing a specific host. Note that the representation we used for viral sequences retained no information regarding the location of each  -mer on the virus protein. To visualize this more explicitly, we aligned the protein sequences using the multiple alignment algorithm COBALT [9] and plotted the alignments in Figure 4, with gaps in the alignment indicated in grey and the location of the selected

-mer on the virus protein. To visualize this more explicitly, we aligned the protein sequences using the multiple alignment algorithm COBALT [9] and plotted the alignments in Figure 4, with gaps in the alignment indicated in grey and the location of the selected  -mers indicated in their respective colors. Furthermore, these selected

-mers indicated in their respective colors. Furthermore, these selected  -mers are significant as they are robustly selected by Adaboost for different choices of train/test split of the data, as shown in Figure 5.

-mers are significant as they are robustly selected by Adaboost for different choices of train/test split of the data, as shown in Figure 5.
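A minimal sketch of how occurrences of a selected subsequence could be located on a proteome for this kind of plot is given below. It assumes positions are reported as a fraction of the sequence length so that proteomes of different lengths can be stacked; the function names and the toy sequence are hypothetical and not taken from the data.

```python
from typing import List


def hamming(a: str, b: str) -> int:
    """Number of positions at which two equal-length strings differ."""
    return sum(x != y for x, y in zip(a, b))


def neighborhood_hits(sequence: str, kmer: str, m: int) -> List[float]:
    """Normalized start positions of windows within m mismatches of kmer."""
    k = len(kmer)
    hits = []
    for i in range(len(sequence) - k + 1):
        if hamming(sequence[i:i + k], kmer) <= m:
            hits.append(i / len(sequence))  # scale position to [0, 1)
    return hits


# Toy protein sequence of length 20 with one exact and one inexact occurrence.
sequence = "GGDALMGGGGDAIMGGGGGG"
print(neighborhood_hits(sequence, "DALM", m=0))
print(neighborhood_hits(sequence, "DALM", m=1))
```

With `m=0` only the exact occurrence is reported; with `m=1` the window `DAIM`, which differs at one position, is reported as well.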

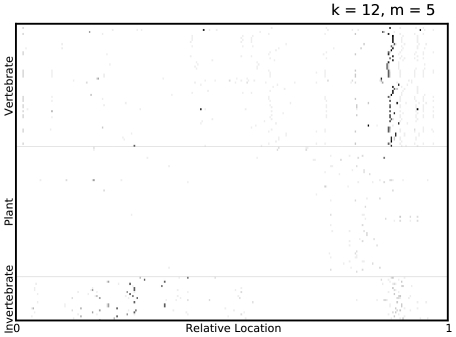

Figure 3. Visualizing predictive subsequences.

A visualization of the mismatch neighborhood of the first 6 $k$-mers selected in an ADT for Picornaviridae, for a specific choice of $k$ and $m$. The virus proteomes are grouped vertically by their label with their lengths normalized to a common scale. Regions containing elements of the mismatch neighborhood of each $k$-mer are then indicated on the virus proteome. Note that the proteomes are not aligned along the selected $k$-mers but merely stacked vertically with their lengths normalized.

Figure 4. Visualizing predictive subsequences on aligned sequences.

A visualization of the mismatch neighborhood of the first 6 $k$-mers selected in an ADT for Picornaviridae, for a specific choice of $k$ and $m$. The virus proteomes are aligned using the multiple alignment algorithm COBALT and the alignments are grouped vertically by their label with gaps in the alignment indicated in grey. Regions containing elements of the mismatch neighborhood of each $k$-mer are then indicated on the alignment.

Figure 5. Visualizing predictive regions of protein sequences.

A visualization of the mismatch neighborhood of the first 7 $k$-mers, selected in all ADTs over 10-fold cross validation, for Picornaviridae, for a specific choice of $k$ and $m$. Regions containing elements of the mismatch neighborhood of each selected $k$-mer are indicated on the virus proteome, with the grayscale intensity on the plot being inversely proportional to the number of cross-validation folds in which some $k$-mer in that region was selected by Adaboost. Thus, darker spots indicate that some $k$-mer in that part of the proteome was robustly selected by Adaboost. Furthermore, a vertical cluster of dark spots indicates that the region, selected by Adaboost to be predictive, is also strongly conserved among viruses sharing a common host type.

We can now BLAST the selected $k$-mers in Figure 3 against the GenBank database [10] of Picornaviridae. Most of these motifs are found in regions with an essential biological function. For instance, the motif ‘DDLGQNPDGEDC’ occurs in a highly conserved region in the 2C protein [11]. The 2C protein functions as an ATPase and GTPase [12], [13], and is involved in membrane-binding [14], [15] and RNA-binding activities [16]. In particular, this motif forms part of a larger NTP-binding pattern that is found not only in picornaviruses but also in DNA viruses (papova-, parvo-, geminiviruses, and P4 bacteriophage) and RNA viruses (como- and nepoviruses). While genes coding for these proteins occur in a variety of viruses, this specific motif aligned strongly with proteins from vertebrate viruses like human cosavirus, Saffold virus and Theiler's murine encephalomyelitis virus. The $k$-mer ‘AHLKDELRKKEK’ occurs in a region coding for RNA-dependent RNA polymerase, a protein found in all RNA viruses that is essential for direct replication of RNA from an RNA template. This motif strongly aligned with proteins from hepatitis A virus, Ljungan virus and rhinovirus isolated in humans and ducks, while the $k$-mer ‘AGKTRVFSAGPQ’ occurs in a functionally similar region for invertebrate viruses. Finally, the $k$-mers ‘ASAFHRGRLRIV’ and ‘KVQVNSQPFQQG’ occur in regions coding for viral capsid protein. These motifs strongly aligned with proteins from Human parechovirus, Drosophila C virus and Cricket paralysis virus. Variations in the amino acid sequence of these proteins are important both for determining viral host-specificity and contributing to antigenic diversity. For Rhabdoviridae, the motif ‘GLPLKASETW’ is found highly conserved in the RNA polymerase in the L protein of plant viruses (see Figures S1, S2).

Discussion

We have presented a supervised learning algorithm that learns a model to classify viruses according to their host and identifies a set of highly discriminative oligopeptide motifs. As expected, the $k$-mers selected in the ADT for Picornaviridae (Figures 3, 5) and Rhabdoviridae (Figures S1, S2) mostly fall in areas corresponding to the replicase motifs of the polymerase, one of the most conserved parts of the viral genome. Thus, given that partial genomic sequence is normally the only information available, we could achieve quicker bioinformatic characterization by focusing on the selection and amplification of these highly predictive regions of the genome, instead of full genomic characterization and contig assembly. Moreover, in contrast with generic approaches currently in use, such a targeted amplification approach might also speed up the process of sample preparation and improve the sensitivity for viral discovery.

Other applications for this technique include identification of novel pathogens using genomic data, analysis of the most informative fingerprints that determine host specificity, and classification of metagenomic data using genomic information. For example, an alternative application of our approach would be the automatic discovery of multi-locus barcoding genes. Multi-locus barcoding is the use of a set of genes which are discriminative between species, in order to identify known specimens and to flag possible new species [17]. While we have focused on virus host in this work, ADTs could be applied straightforwardly to the barcoding problem, replacing the host label with a species label. Additional constraints on the loss function would have to be introduced to capture the desire for suitable flanking sites of each selected $k$-mer in order to develop the universal PCR primers important for a wide application of the discovered barcode [18].

Methods

Our overall aim is to discover aspects of the relationship between a virus and its host. Our approach is to develop a model that is able to predict the host of a virus given its sequence; those features of the sequence that prove most useful are then assumed to have a special biological significance. Hence, an ideal model is one that is parsimonious and easy to interpret, whilst incorporating combinations of biologically relevant features. In addition, the interpretability of the results is improved if we have a simple learning algorithm which can be straightforwardly verified.

Formally, for a given virus family, we learn a function $g: \mathcal{S} \to \mathcal{H}$, where $\mathcal{S}$ is the space of viral sequences and $\mathcal{H}$ is the space of viral hosts. The space of viral sequences $\mathcal{S}$ is generated by an alphabet $\mathcal{A}$ where $|\mathcal{A}| = 4$ (genome sequence) or $|\mathcal{A}| = 20$ (primary protein sequence).

Defining a function on a sequence requires representation of the sequence in some feature space. Below, we specify a representation $\phi: \mathcal{S} \to \mathcal{X}$, where a sequence $s \in \mathcal{S}$ is mapped to a vector of counts of subsequences $\mathbf{x} \in \mathcal{X}$. Given this representation, we have the well-posed problem of finding a function $f: \mathcal{X} \to \mathcal{H}$ built from a space of simple binary-valued functions.

Collected Data

The collected data consist of  genome sequences or primary protein sequences, denoted

genome sequences or primary protein sequences, denoted  , of viruses whose host class, denoted

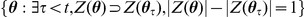

, of viruses whose host class, denoted  is known. For example, these could be ‘plant’, ‘vertebrate’ and ‘invertebrate’. The label for each virus is represented numerically as

is known. For example, these could be ‘plant’, ‘vertebrate’ and ‘invertebrate’. The label for each virus is represented numerically as  where

where  if the index of the host class of the virus is

if the index of the host class of the virus is  , and where

, and where  denotes the number of host classes. Note that this representation allows for a virus to have multiple host classes. Here and below we use boldface variables to indicate vectors and square brackets to denote the selection of a specific element in the vector, e.g.,

denotes the number of host classes. Note that this representation allows for a virus to have multiple host classes. Here and below we use boldface variables to indicate vectors and square brackets to denote the selection of a specific element in the vector, e.g.,  is the

is the  element of the

element of the  label vector.

label vector.
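The label representation can be illustrated with a short sketch. It assumes a coding of +1 for each host class a virus infects and -1 for every other class; this coding, the function name and the toy host sets are assumptions made for illustration.

```python
from typing import List, Set


def encode_hosts(host_sets: List[Set[str]], classes: List[str]) -> List[List[int]]:
    """Encode each virus's host classes as a vector with one entry per class."""
    # Entry l is +1 if the virus infects class l, and -1 otherwise (assumed coding).
    return [[1 if c in hosts else -1 for c in classes] for hosts in host_sets]


classes = ["plant", "vertebrate", "invertebrate"]
# Hypothetical viruses: the second one is given two host classes.
host_sets = [{"plant"}, {"vertebrate", "invertebrate"}]
labels = encode_hosts(host_sets, classes)
print(labels)
```

Because each class has its own entry, a virus with several host classes simply has several positive entries.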

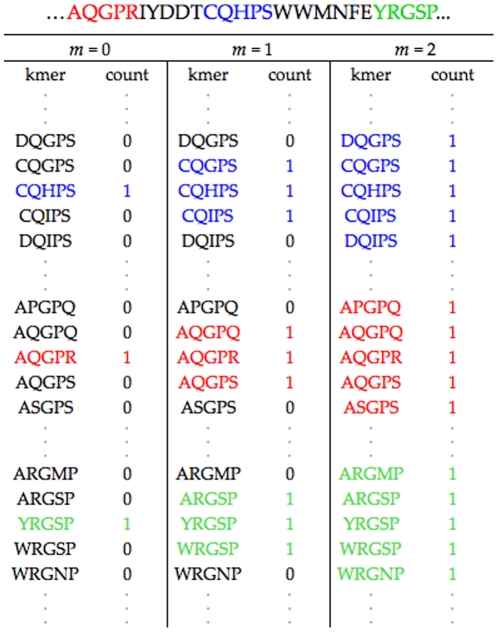

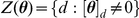

Mismatch Feature Space

A possible feature space representation of a viral sequence is the vector of counts of exact matches of all possible $k$-length subsequences ($k$-mers). However, due to the high mutation rate of viral genomes [19], [20], a predictive function learned using this simple representation of counts would fail to generalize well to new viruses. Instead, motivated by the mismatch feature space used in constructing string kernels for kernel-based classification algorithms [21], we count not just the presence of an individual $k$-mer but also the presence of subsequences within $m$ mismatches from that $k$-mer. The mismatch- or $m$-neighborhood of a $k$-mer $\alpha$, denoted $N_m(\alpha)$, is the set of all $k$-mers with a Hamming distance [22] at most $m$ from it, as shown in Figure 6. Let $\delta_{N_m(\alpha)}$ denote the indicator function of the $m$-neighborhood of $\alpha$ such that

$$\delta_{N_m(\alpha)}(\beta) = \begin{cases} 1 & \text{if } \beta \in N_m(\alpha) \\ 0 & \text{otherwise} \end{cases} \tag{1}$$

We can then define, for any possible $k$-mer $\beta$, the mapping $\phi_{k,m}$ from the sequence $s$ onto a count of the elements in $\beta$'s $m$-neighborhood as

$$\phi_{k,m}(s, \beta) = \sum_{\alpha \in s,\ |\alpha| = k} \delta_{N_m(\beta)}(\alpha) \tag{2}$$

Finally, the $d$-th element of the feature vector for a given sequence is then defined element-wise as

$$\mathbf{x}[d] = \phi_{k,m}(s, \beta_d) \tag{3}$$

for every possible $k$-mer $\beta_d \in \mathcal{A}^k$, where $d = 1, \ldots, D$ and $D = |\mathcal{A}|^k$.

Figure 6. Mismatch feature space representation.

The mismatch feature space representation of a segment of a protein sequence (shown on top of figure).

Note that when $m = 0$, $\phi_{k,0}$ exactly captures the simple count representation described earlier. This biologically realistic relaxation allows us to learn discriminative functions that better capture rapidly mutating and yet functionally conserved regions in the viral genome, facilitating generalization to new viruses.
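The mismatch count described in this section can be sketched directly: for a given $k$-mer, count the length-$k$ windows of the sequence that lie within Hamming distance $m$ of it. The sketch below computes features only for an explicit list of $k$-mers rather than for all $|\mathcal{A}|^k$ of them, a simplification chosen to keep the example small; the function names and the toy sequence are hypothetical.

```python
from typing import List


def hamming(a: str, b: str) -> int:
    """Number of positions at which two equal-length strings differ."""
    return sum(x != y for x, y in zip(a, b))


def mismatch_count(sequence: str, kmer: str, m: int) -> int:
    """Count windows of the sequence within m mismatches of the given k-mer."""
    k = len(kmer)
    return sum(
        1
        for i in range(len(sequence) - k + 1)
        if hamming(sequence[i:i + k], kmer) <= m
    )


def feature_vector(sequence: str, kmers: List[str], m: int) -> List[int]:
    """One mismatch count per k-mer, in the order the k-mers are listed."""
    return [mismatch_count(sequence, kmer, m) for kmer in kmers]


# Toy sequence whose length-3 windows are ACA, CAC, ACA.
sequence = "ACACA"
kmers = ["ACA", "CAC", "AAA"]
print(feature_vector(sequence, kmers, m=0))  # exact-match counts
print(feature_vector(sequence, kmers, m=1))  # counts with one mismatch allowed
```

In this toy case `AAA` never occurs exactly, yet with one mismatch allowed it matches both `ACA` windows, which is the smoothing effect that the relaxation is meant to provide.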

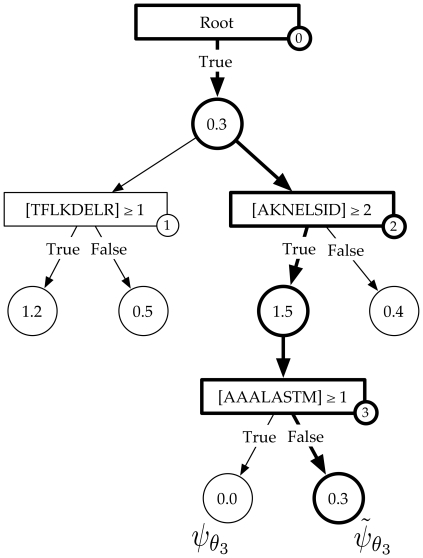

Alternating Decision Trees

Given this representation of the data, we aim to learn a discriminative function that maps features $\mathbf{x}$ onto host class labels $\mathbf{y}$, given some training data $\{(\mathbf{x}_n, \mathbf{y}_n)\}_{n=1}^{N}$. We want the discriminative function to output a measure of “confidence” [23] in addition to a predicted host class label. To this end, we learn on a class of functions $f: \mathcal{X} \to \mathbb{R}^L$, where the indices of positive elements of $f(\mathbf{x})$ can be interpreted as the predicted labels to be assigned to $\mathbf{x}$ and the magnitudes of these elements as the confidence in the predictions.

A simple class of such real-valued discriminative functions can be constructed from the linear combination of simple binary-valued functions $\psi: \mathcal{X} \to \{0, 1\}$. The functions $\psi$ can, in general, be a combination of single-feature decision rules or their negations:

$$f(x) = \sum_{p=1}^{P} a_p \psi_p(x) \tag{4}$$

$$\psi_p(x) = \prod_{d \in S} \mathbb{I}(x_d \geq \theta_d) \tag{5}$$

where $a_p \in \mathbb{R}^L$, $P$ is the number of binary-valued functions, $\mathbb{I}(\cdot)$ is 1 if its argument is true, and zero otherwise, $\theta_d \in \{0, 1, \ldots, \Theta\}$, where $\Theta = \max_{n,d} x_{nd}$, and $S$ is a subset of feature indices. This formulation allows functions to be constructed using combinations of simple rules. For example, we could define a function $\psi$ as the following

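$$\psi(x) = \mathbb{I}(x_5 \geq 2) \times \neg\mathbb{I}(x_{11} \geq 1) \times \mathbb{I}(x_1 \geq 4) \tag{6}$$

where $\neg\mathbb{I}(\cdot) = 1 - \mathbb{I}(\cdot)$.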

Alternatively, we can view each function $\psi_p$ to be parameterized by a vector of thresholds $\theta^p \in \{0, 1, \ldots, \Theta\}^D$, where $\theta^p_d = 0$ indicates $\psi_p$ is not a function of the $d$-th feature $x_d$. In addition, we can decompose the weights $a_p = \alpha_p v_p$ into a vote vector $v_p \in \{-1, +1\}^L$ and a scalar weight $\alpha_p \in \mathbb{R}_+$ [24]. The discriminative model, then, can be written as

$$f(x) = \sum_{p=1}^{P} \alpha_p v_p \psi_{\theta^p}(x) \tag{7}$$

$$\psi_{\theta^p}(x) = \prod_{d:\ \theta^p_d > 0} \mathbb{I}(x_d \geq \theta^p_d) \tag{8}$$

Every function in this class of models can be concisely represented as an Alternating Decision Tree (ADT) [7]. Similar to ordinary decision trees, ADTs have two kinds of nodes: decision nodes and output nodes. Every decision node is associated with a single-feature decision rule, the attributes of the node being the relevant feature and corresponding threshold. Each decision node is connected to two output nodes corresponding to the associated decision rule and its negation. Thus, binary-valued functions in the model come in pairs $(\psi, \tilde{\psi})$; each pair is associated with the pair of output nodes for a given decision node in the tree (see Figure 7). Note that $\psi$ and $\tilde{\psi}$ share the same threshold vector $\theta$ and only differ in whether they contain the associated decision rule or its negation. The attributes of the output node pair are the vote vectors $(v, \tilde{v})$ and the scalar weights $(\alpha, \tilde{\alpha})$ associated with the corresponding functions $(\psi, \tilde{\psi})$.

Figure 7. Alternating Decision Tree.

An example of an ADT where rectangles are decision nodes, circles are output nodes and, in each decision node, $\phi_{k,m}(s, \beta)$ is the feature associated with the $k$-mer $\beta$ in sequence $s$. The output nodes connected to each decision node are associated with a pair of binary-valued functions $(\psi, \tilde{\psi})$. The binary-valued function corresponding to the highlighted path is given as […] and the associated […].

Each function $\psi$ has a one-to-one correspondence with a path from the root node to its associated output node in the tree; the single-feature decision rules in $\psi$ being the same as those rules associated with decision nodes in the path, with negations applied appropriately. Combinatorial features can, thus, be incorporated into the model by allowing for trees of depth greater than 1. Including a new function $\psi$ in the model is, then, equivalent to either adding a new path of decision and output nodes at the root node in the tree or growing an existing path at one of the existing output nodes. This tree-structured representation of the model will play an important role in specifying how Adaboost, the learning algorithm, greedily searches over an exponentially large space of binary-valued functions. It is important to note that, unlike in ordinary decision trees where each example traverses only one path in the tree, each example runs down an ADT through every path originating from the root node.
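The way an example runs down every path of an ADT can be sketched in a few lines of Python. The representation below is an assumption made for illustration, not the authors' data structure: each path is a tuple of rules, a scalar weight and a vote vector, and each rule is a (feature index, threshold, negated) triple. The toy tree, its weights and its votes are invented.

```python
import numpy as np

# A path is (rules, alpha, vote); each rule is (feature_index, threshold, negated).


def path_fires(x, rules):
    """Return 1 if every (possibly negated) rule on the path holds for x, else 0."""
    for d, theta, negated in rules:
        holds = x[d] >= theta
        if bool(holds) == negated:
            return 0
    return 1


def adt_score(x, paths, n_labels):
    """Sum alpha * vote over every path that fires; all paths are checked."""
    f = np.zeros(n_labels)
    for rules, alpha, vote in paths:
        f += alpha * np.asarray(vote, dtype=float) * path_fires(x, rules)
    return f


toy_paths = [
    ([], 0.5, [+1, -1]),                              # root output node
    ([(0, 2, False)], 1.0, [+1, -1]),                 # x[0] >= 2
    ([(0, 2, True)], 0.8, [-1, +1]),                  # not (x[0] >= 2)
    ([(0, 2, False), (1, 1, False)], 0.3, [-1, +1]),  # x[0] >= 2 and x[1] >= 1
    ([(0, 2, False), (1, 1, True)], 0.2, [+1, -1]),   # x[0] >= 2 and not (x[1] >= 1)
]

scores = adt_score([3, 0], toy_paths, n_labels=2)
predicted = np.flatnonzero(scores > 0)  # indices of positive elements
```

Here the example `[3, 0]` contributes through three paths at once (the root, the first decision rule, and the negated second rule), whereas an ordinary decision tree would route it to a single leaf. The sign of each element of `scores` gives the predicted label and its magnitude the confidence.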

Multi-class Adaboost

Having specified a representation for the data and the model, we now briefly describe Adaboost, a large-margin supervised learning algorithm which we use to learn an ADT given a data set. Ideally, a supervised learning algorithm learns a discriminative function $f^*(x)$ that minimizes the number of mistakes on the training data, known as the Hamming loss [22]:

$$L_H(f) = \sum_{n=1}^{N} \sum_{l=1}^{L} H\left(-y_{nl} f_l(x_n)\right) \tag{9}$$

where $H(\cdot)$ denotes the Heaviside function. The Hamming loss, however, is discontinuous and non-convex, making optimization intractable for large-scale problems.

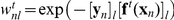

Adaboost is the unconstrained minimization, using a coordinate descent algorithm, of the exponential loss, a smooth, convex upper bound to the Hamming loss:

$$L_E(f) = \sum_{n=1}^{N} \sum_{l=1}^{L} \exp\left(-y_{nl} f_l(x_n)\right) \tag{10}$$
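The relationship between the two losses can be checked numerically. The sketch below assumes labels encoded as +1/-1 per sample and per host class, and treats a score of exactly zero as a mistake (one possible convention for the Heaviside function at zero); the function names and the toy scores are hypothetical.

```python
import numpy as np


def hamming_loss(scores, labels):
    """Count sample/label pairs whose margin labels * scores is not positive."""
    return int(np.sum(labels * scores <= 0))


def exponential_loss(scores, labels):
    """Sum of exp(-margin) over all sample/label pairs."""
    return float(np.sum(np.exp(-labels * scores)))


# Three samples, two host classes; every sample truly belongs to class 0.
toy_labels = np.array([[+1, -1], [+1, -1], [+1, -1]])
toy_scores = np.array([[1.2, -0.7], [-0.4, 0.3], [0.1, -2.0]])

mistakes = hamming_loss(toy_scores, toy_labels)
surrogate = exponential_loss(toy_scores, toy_labels)
```

Each term of the exponential loss is at least 1 whenever the corresponding margin is non-positive and is positive otherwise, so `surrogate` can never fall below `mistakes`; driving the smooth surrogate down therefore also bounds the number of training errors.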

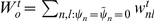

Adaboost learns a discriminative function $f(x)$ by iteratively selecting the $\psi$ that maximally decreases the exponential loss. Since each $\psi$ is parameterized by a $D$-dimensional vector of thresholds $\theta$, the space of functions $\psi$ is of size $O((\Theta + 1)^D)$, where $\Theta$ is the largest $k$-mer count observed in the data, making an exhaustive search at each iteration intractable for high-dimensional problems.

To avoid this problem, at each iteration, we only allow the ADT to grow by adding one decision node to one of the existing output nodes. To formalize this, let us define $Z(\psi_\theta) = \{d : \theta_d > 0\}$ to be the set of active features corresponding to a function $\psi_\theta$. At the $t$-th iteration of boosting, the search space of possible threshold vectors is then given as $\{\theta : \exists\, \tau < t,\ \theta_d = \theta^\tau_d\ \forall d \in Z(\psi_{\theta^\tau}),\ |Z(\psi_\theta)| - |Z(\psi_{\theta^\tau})| = 1\}$. In this case, the search space of thresholds at the $t$-th iteration is of size $O(t \Theta D)$ and grows linearly in a greedy fashion at each iteration (see Figure 7). Note, however, that this greedy search, enforced to make the algorithm tractable, is not relevant when the class of models is constrained to belong to ADTs of depth 1.

In order to pick the best function $\psi_\theta$, we need to compute the decrease in exponential loss admitted by each function in the search space, given the model at the current iteration. Formally, given the model at the $t$-th iteration, denoted $f^t(x)$, the exponential loss upon inclusion of a new decision node, and hence the creation of two new paths $(\psi_\theta, \tilde{\psi}_\theta)$, into the model can be written as

$$L_E(f^{t+1}) = \sum_{n=1}^{N} \sum_{l=1}^{L} \exp\left[-y_{nl}\left(f^t_l(x_n) + \alpha v_l \psi_\theta(x_n) + \tilde{\alpha} \tilde{v}_l \tilde{\psi}_\theta(x_n)\right)\right] \tag{11}$$

$$= \sum_{n=1}^{N} \sum_{l=1}^{L} w^t_{nl} \exp\left[-y_{nl}\left(\alpha v_l \psi_\theta(x_n) + \tilde{\alpha} \tilde{v}_l \tilde{\psi}_\theta(x_n)\right)\right] \tag{12}$$

where $w^t_{nl} = \exp\left(-y_{nl} f^t_l(x_n)\right)$. Here $w^t_{nl}$ is interpreted as the weight on each sample, for each label, at boosting round $t$. If, at boosting round $t$, the model disagrees with the true label $y_{nl}$ for sample $x_n$, then $w^t_{nl}$ is large. If the model agrees with the label then the weight is small. This ensures that the boosting algorithm chooses a decision rule at round $t+1$, preferentially discriminating those examples with a large weight, as this will lead to the largest reduction in $L_E(f^{t+1})$.

For every possible new decision node that can be introduced to the tree, Adaboost finds the $(\alpha, v)$ pair that minimizes the exponential loss on the training data. These optima can be derived as

$$\alpha = \frac{1}{2} \ln \frac{W_+}{W_-} \tag{13}$$

$$v_l = \operatorname{sign}\left(\sum_{n=1}^{N} w^t_{nl}\, \psi_\theta(x_n)\, y_{nl}\right) \tag{14}$$

where for each new path $\psi_\theta$ associated with each new decision node

$$W_+ = \sum_{n=1}^{N} \sum_{l=1}^{L} w^t_{nl}\, \psi_\theta(x_n)\, \mathbb{I}(v_l y_{nl} = +1) \tag{15}$$

$$W_- = \sum_{n=1}^{N} \sum_{l=1}^{L} w^t_{nl}\, \psi_\theta(x_n)\, \mathbb{I}(v_l y_{nl} = -1) \tag{16}$$

Corresponding equations for the $(\tilde{\alpha}, \tilde{v})$ pair can be written in terms of $\tilde{W}_+$ and $\tilde{W}_-$ obtained by replacing $\psi_\theta$ with $\tilde{\psi}_\theta$ in the equations above. The minimum loss function for the threshold $\theta$ is then given as

$$L_E(f^{t+1}) = 2\sqrt{W_+ W_-} + 2\sqrt{\tilde{W}_+ \tilde{W}_-} + W_o \tag{17}$$

where $W_o = \sum_{n=1}^{N} \sum_{l=1}^{L} w^t_{nl}\left(1 - \psi_\theta(x_n) - \tilde{\psi}_\theta(x_n)\right)$. Based on these model update equations, each iteration of the Adaboost algorithm involves building the set of possible binary-valued functions to search over, selecting the one for which the loss function given by Eq. 17 is smallest, and computing the associated $(\alpha, v)$ pair using Eq. 13 and Eq. 14. The software implementation for the methods described here can be found at http://mkboost.sourceforge.net.
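One boosting step for a single candidate decision node can be sketched as follows. The sketch assumes the standard closed-form update for confidence-rated boosting with +1/-1 votes, which is taken here to correspond to the update equations above: the vote for each label is the sign of the weighted label sum on the samples reaching the path, and the step size is half the log ratio of the agreeing to the disagreeing weight. The function names, the `eps` smoothing term (added only to avoid division by zero) and the toy data are assumptions, not part of the authors' software.

```python
import numpy as np


def branch_update(weights, labels, fires, eps=1e-12):
    """Vote, step size and loss contribution for one new path.

    weights, labels: (N, L) arrays; fires: (N,) 0/1 indicator of the path.
    """
    reach = fires[:, None]
    edge = (weights * labels * reach).sum(axis=0)   # signed weight per label
    vote = np.where(edge >= 0, 1.0, -1.0)
    agree = (vote[None, :] * labels) > 0            # vote matches the label
    w_plus = (weights * reach * agree).sum()
    w_minus = (weights * reach * ~agree).sum()
    alpha = 0.5 * np.log((w_plus + eps) / (w_minus + eps))  # eps: assumption
    return vote, alpha, 2.0 * np.sqrt(w_plus * w_minus)


def candidate_loss(weights, labels, parent, rule):
    """Exponential loss after adding a node with outcomes `rule` under `parent`."""
    _, _, z_rule = branch_update(weights, labels, parent * rule)
    _, _, z_negation = branch_update(weights, labels, parent * (1 - rule))
    w_rest = (weights * (1 - parent)[:, None]).sum()  # samples not reaching the node
    return z_rule + z_negation + w_rest


# Four samples, two classes; with an empty model every weight equals 1.
toy_labels = np.array([[+1, -1], [+1, -1], [-1, +1], [-1, +1]])
toy_weights = np.ones((4, 2))
root = np.ones(4, dtype=int)

separating = candidate_loss(toy_weights, toy_labels, root, np.array([1, 1, 0, 0]))
uninformative = candidate_loss(toy_weights, toy_labels, root, np.array([1, 0, 1, 0]))
```

A rule that splits the two classes cleanly drives the loss to zero, while a rule that is independent of the labels leaves it at the total weight. Scanning `candidate_loss` over the allowed (feature, threshold, parent) combinations and keeping the smallest value is one way to realise the greedy selection step.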

Supporting Information

Visualizing predictive subsequences for Rhabdoviridae. A visualization of the mismatch neighborhood of the $k$-mer selected in an ADT for Rhabdoviridae, where […]. The virus proteomes are grouped vertically by their label with their lengths scaled to […]. Regions containing elements of the mismatch neighborhood of each $k$-mer are then indicated on the virus proteome. Note that, for Rhabdoviridae, plant and animal viruses could be distinguished with just one $k$-mer.

(TIFF)

Visualizing predictive regions for Rhabdoviridae. A visualization of the mismatch neighborhood of the $k$-mers selected in an ADT for Rhabdoviridae, where […]. The virus proteomes are grouped vertically by their label with their lengths scaled to […]. Regions containing elements of the mismatch neighborhood of each $k$-mer are then indicated on the virus proteome.

(TIFF)

List of viruses in Picornaviridae family used in learning.

(PDF)

List of viruses in Rhabdoviridae family used in learning.

(PDF)

Acknowledgments

The authors thank Vladimir Trifonov and Joseph Chan for interesting suggestions and discussions.

Footnotes

Competing Interests: The authors have declared that no competing interests exist.

Funding: RR and CW are supported by the National Institutes of Health (U54 CA121852-05). RR and GP are supported by the Northeast Biodefence Center (U54-AI057158). RR is also supported by the National Library of Medicine (1R01LM010140-01). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

1. Briese T, Paweska JT, McMullan LK, Hutchison SK, Street C, et al. Genetic detection and characterization of Lujo virus, a new hemorrhagic fever-associated arenavirus from southern Africa. PLoS Pathogens. 2009;5:e1000455. doi: 10.1371/journal.ppat.1000455.

2. Williamson SJ, Rusch DB, Yooseph S, Halpern AL, Heidelberg KB, et al. The Sorcerer II Global Ocean Sampling Expedition: metagenomic characterization of viruses within aquatic microbial samples. PLoS ONE. 2008;3:e1456. doi: 10.1371/journal.pone.0001456.

3. Touchon M, Rocha EPC. From GC skews to wavelets: a gentle guide to the analysis of compositional asymmetries in genomic data. Biochimie. 2008;90:648–59. doi: 10.1016/j.biochi.2007.09.015.

4. Trifonov V, Rabadan R. Frequency Analysis Techniques for Identification of Viral Genetic Data. mBio. 2010;1:e00156–10. doi: 10.1128/mBio.00156-10.

5. Jimenez-Baranda S, Greenbaum B, Manches O, Handler J, Rabadan R, et al. Oligonucleotide Motifs That Disappear During the Evolution of Influenza in Humans Increase IFN-alpha secretion by Plasmacytoid Dendritic Cells. Journal of Virology. 2011;85:3893–3904. doi: 10.1128/JVI.01908-10.

6. Freund Y, Schapire RE. A decision-theoretic generalization of on-line learning and an application to boosting. Journal of Computer and System Sciences. 1997;55:119–139.

7. Freund Y, Mason L. The Alternating Decision Tree Algorithm. In: Proceedings of the 16th International Conference on Machine Learning. 1999. pp. 124–133.

8. Altschul SF, Gish W, Miller W, Myers EW, Lipman DJ. Basic Local Alignment Search Tool. Journal of Molecular Biology. 1990;215:403–410. doi: 10.1016/S0022-2836(05)80360-2.

9. Papadopoulos JS, Agarwala R. COBALT: constraint-based alignment tool for multiple protein sequences. Bioinformatics (Oxford, England). 2007;23:1073–9. doi: 10.1093/bioinformatics/btm076.

10. Benson DA, Karsch-Mizrachi I, Lipman DJ, Ostell J, Sayers EW. GenBank. Nucleic Acids Research. 2010;39:32–37. doi: 10.1093/nar/gkn723.

11. Gorbalenya AE, Blinov VM, Donchenko AP, Koonin EV. An NTP-binding motif is the most conserved sequence in a highly diverged monophyletic group of proteins involved in positive strand RNA viral replication. Journal of Molecular Evolution. 1989;28:256–268. doi: 10.1007/BF02102483.

12. Rodríguez PL, Carrasco L. Poliovirus protein 2C has ATPase and GTPase activities. The Journal of Biological Chemistry. 1993;268:8105–10.

13. Pfister T, Wimmer E. Characterization of the nucleoside triphosphatase activity of poliovirus protein 2C reveals a mechanism by which guanidine inhibits poliovirus replication. The Journal of Biological Chemistry. 1999;274:6992–7001. doi: 10.1074/jbc.274.11.6992.

14. Kusov YY, Probst C, Jecht M, Jost PD, Gauss-Müller V. Membrane association and RNA binding of recombinant hepatitis A virus protein 2C. Archives of Virology. 1998;143:931–44. doi: 10.1007/s007050050343.

15. Aldabe R, Carrasco L. Induction of Membrane Proliferation by poliovirus proteins 2C and 2BC. Biochemical and Biophysical Research Communications. 1995;206:64–76. doi: 10.1006/bbrc.1995.1010.

16. Rodríguez P, Carrasco L. Poliovirus protein 2C contains two regions involved in RNA binding activity. The Journal of Biological Chemistry. 1995;270:10105. doi: 10.1074/jbc.270.17.10105.

17. Seberg O, Petersen G. How many loci does it take to DNA barcode a crocus? PLoS ONE. 2009;4:e4598. doi: 10.1371/journal.pone.0004598.

18. Kress WJ, Erickson DL. DNA barcodes: genes, genomics, and bioinformatics. Proceedings of the National Academy of Sciences of the United States of America. 2008;105:2761–2762. doi: 10.1073/pnas.0800476105.

19. Duffy S, Shackelton LA, Holmes EC. Rates of evolutionary change in viruses: patterns and determinants. Nature Reviews Genetics. 2008;9:267–276. doi: 10.1038/nrg2323.

20. Pybus OG, Rambaut A. Evolutionary analysis of the dynamics of viral infectious disease. Nature Reviews Genetics. 2009;10:540–50. doi: 10.1038/nrg2583.

21. Leslie CS, Eskin E, Cohen A, Weston J, Noble WS. Mismatch string kernels for discriminative protein classification. Bioinformatics. 2004;20(4):467–476. doi: 10.1093/bioinformatics/btg431.

22. Hamming RW. Error detecting and error correcting codes. Bell System Technical Journal. 1950;29:147–160.

23. Schapire RE, Singer Y. Improved boosting algorithms using confidence-rated predictions. Machine Learning. 1999;37(3):297–336.

24. Busa-Fekete R, Kegl B. Accelerating AdaBoost using UCB. In: JMLR: Workshop and Conference Proceedings, KDD cup 2009. volume 7. 2009. pp. 111–122.
