Abstract

A dilemma faced by teachers, and increasingly by designers of educational software, is the trade-off between teaching new material and reviewing what has already been taught. Complicating matters, review is useful only if it is neither too soon nor too late. Moreover, different students need to review at different rates. We present a mathematical model that captures these issues in idealized form. The student’s needs are modeled as constraints on the schedule according to which educational material and review are spaced over time. Our results include algorithms to construct schedules that adhere to various spacing constraints, and bounds on the rate at which new material can be introduced under these schedules.

Keywords: asymptotic analysis, scheduling

In his 2009 speech to the National Academy of Sciences, President Barack Obama exhorted the audience to imagine some of the things that could be made possible by a commitment to scientific research, including the invention of “learning software as effective as a private tutor” (1). This paper is a modest step in that direction.

An important challenge for the design of educational software is to incorporate the results of empirical research on how people learn. Such research endeavors to provide principles for how to choose what is taught, how to present it, and how to sequence the material. Ultimately, educational software will require mechanisms for managing the constraints that arise when these principles are applied in different settings.

Here we develop and analyze an idealized mathematical model for incorporating a fundamental class of such constraints into educational software—constraints arising from the importance of timing and review in the presentation of new material. For example, software for building vocabulary must determine when to introduce new words that the student has not yet learned, and when to review words whose definitions the student has successfully recalled in the past. The issue is similar to that faced by a high school math teacher deciding how often to schedule lessons that involve trigonometry, or by a piano teacher who needs to decide how much time a student should devote to practicing scales while also learning to play new pieces.

The study of the importance of timing with respect to review dates to at least 1885 (2). The notion that it is better to spread studying over time instead of doing it all at once is called the spacing effect. In 1988, Dempster noted that “the spacing effect is one of the most studied phenomena in the 100-year history of learning research” (3). As Balota et al. point out in their review (4), “spacing effects occur across domains (e.g., learning perceptual motor tasks vs. learning lists of words), across species (e.g., rats, pigeons, and humans), across age groups and individuals with different memory impairments, and across retention intervals of seconds to months.” See refs. 5 and 6 for reviews. For further results and background, see refs. 7–9. For work on exploiting the spacing effect to build vocabulary (in humans), see refs. 10–13.

Review is an important part of the learning process, but the extent to which review is needed varies by student. Some students need to review early and often, whereas others can learn a lot without any review at all. Personalized educational software of the future could fit a model to the user and then schedule review in a way that is tailored to the model.

With such educational software in mind, we envision a system in which the software designer can specify a schedule for the introduction of new material, together with a schedule by which the review of existing material is spaced over time. What we find, however, is that the resulting scheduling problems are mathematically subtle: Existing techniques do not handle scheduling problems with spacing constraints of this type.

Our main contribution is to develop an approach for reasoning about the feasibility of schedules under spacing constraints. We begin by introducing a stylized mathematical model for the constraints that arise from the spacing effect, and then consider the design of schedules that incorporate these constraints.

Models

Roughly speaking, we model the introduction and review of material as a sequence of abstract educational units, and we model the needs of the student using two sequences, {ak} and {bk}: After an educational unit has been introduced, the “ideal” time for the student to see it for the (k + 1)st time is between ak and bk time steps after seeing it for the kth time. We also model various educational outcomes that the designer of educational software may seek to achieve. The details are given below.

The Educational Process.

We imagine the underlying educational software as implementing a process that presents a sequence of abstract educational units which could represent facts such as the definitions of vocabulary words, concepts such as trigonometric identities, or skills such as playing a scale on the piano. For example, the sequence u1, u2, u3, u1, u4,…, indicates that educational unit u1 was introduced at the first time step and reviewed at the fourth time step. This sequence defines the schedule according to which the units will be presented. We also allow our schedules to contain blanks, or time steps in which no educational unit is presented; thus, an arbitrary schedule will have each entry equal to either an educational unit or a blank.

This model is highly idealized. It ignores possible relationships between units, such as the etymological (and potentially pedagogically useful) connection between the vocabulary words “neophyte” and “neologism,” for example, or the dependence of trigonometry on more basic concepts in geometry. It also treats all units as equal. Thus it does not capture, for example, that an experienced pianist may benefit more from practicing a scale than practicing Twinkle Twinkle Little Star.

The real-life educational process is nuanced, complex, and context dependent. Future work in building models for educational software may introduce more complexity to this model, or simply reduce scope and model more specific situations. Here, in the interest of generality as well as mathematical tractability, we use this very simple model of the general educational process in order to create a formalism that captures spacing constraints and their role in reviewing material after it has been introduced.

Spacing Constraints.

A large body of empirical work in learning research has studied the expanding nature of optimal review. For example, when students first learn a new vocabulary word, they must review it soon or else they likely lose the ability to correctly recall the definition. If they do review it before forgetting it, they will then generally be able to go longer than before without needing review. By repeatedly reviewing, the student “builds up” the ability to go longer and longer without seeing the word while maintaining the ability to recall its definition. However, reviewing a word too soon after studying it can reduce the benefit of the review. These are the principles of the well-established theory of expanded retrieval; see ref. 4 for a review specifically on expanded retrieval. More generally, see refs. 3–13.

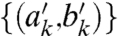

We want a simple formalism that captures the need to review an educational unit on a schedule that “expands” the spacing between successive viewings. We wish to leave the exact rate of expansion under the control of the software designer, motivated by the goal of creating different schedules for different students. We thus imagine that the designer of the software specifies a set of spacing constraints, consisting of an infinite sequence of ordered pairs (a1,b1), (a2,b2),…,(ak,bk),…, where ak ≤ bk are positive integers for all k. Intuitively, the idea is that, for each educational unit ui in the schedule, the designer wants the gap in the schedule between the kth and (k + 1)st occurrences of ui to have length in the closed interval [ak,bk]. The fact that a student can go longer between occurrences as they gain familiarity with the educational unit is represented by the assumption that the numbers ak and bk are weakly increasing: We impose the requirement that ak ≤ ak+1 and bk ≤ bk+1 for all k.

Thus, our key definition is that a schedule satisfies a set of spacing constraints if, for each ui in the schedule, the (k + 1)st occurrence of ui in the schedule comes between ak and bk positions (inclusive) after the kth occurrence. Roughly speaking, the numbers bk model how long the student can retain learned material. The numbers ak model how long a student should wait before review is beneficial, capturing the notion that there is an ideal time to review. If review is done too early, it is less beneficial. If it is done too late, the student will forget the material in the interim.

This model is, by design, a simplification of spacing constraints and their role in learning. A more nuanced model might allow for “blurry” boundaries for the intervals [ak,bk], in which there is a numerical penalty for missing the interval by a small amount. Another refinement would be to allow the model to discriminate between educational units to reflect that some things are easier to learn than others. However, our simple model captures the essence of the phenomenon we are investigating, and will allow for mathematical analysis that elucidates the mechanics of scheduling review in an optimal way.

Educational Goals.

We consider two natural goals for the designer of the educational software. The mission of the software could be to teach students in such a way that they grow their knowledge without bound, never forgetting anything along the way; a sort of “lifelong learning” approach to education. Alternatively, the mission could simply be to get students familiar with a certain set of educational units by a particular point in time, regardless of whether they are destined to forget what they learned quickly thereafter; something like the studying technique known as “cramming.” We address both goals in this paper.

We model the first goal by saying that a schedule exhibits infinite perfect learning with respect to some spacing constraints if (i) it satisfies the spacing constraints, and (ii) it contains infinitely many educational units, each of which occurs infinitely often. Thus if the constraints represented the needs of a student, then with such a schedule the student would, over the course of the infinite sequence, learn an infinite set of educational units without ever forgetting anything.

For the second goal we consider a finite sequence, representing the presentation of material up to a test or performance. For a positive integer n, we say that the sequence is a cramming sequence, and exhibits bounded learning of order n, if (i) it satisfies the spacing constraints, and (ii) it contains at least n distinct educational units such that, if a unit occurs a total of k times in the sequence, then its last occurrence is within bk positions of the end of the sequence. Condition (ii) captures the requirement that the student should still be able to recall all of the n educational units at the end of the sequence, which was the whole point of cramming. Note that, although the spacing constraints have been respected up to the end of the finite sequence, there is no guarantee that the sequence could be extended while continuing to satisfy the spacing constraints.

With respect to infinite perfect learning, we will be interested in the rate at which the student would learn if taught according to the schedule. To this end, we define the introduction time function: For a given schedule of educational units, let tn denote the position in the schedule of the first occurrence of the nth distinct educational unit. Thus the slower the growth of tn, the faster new educational units are being introduced.

We will be considering questions of the following nature. Given spacing constraints {(ak,bk)}, does there exist a schedule that exhibits infinite perfect learning, or bounded learning, with respect to the constraints? If so, how can we construct such schedules? And when such a schedule exists, what rate of learning (as measured by the sequence t1,t2,…) is achievable? These are fundamental problems that would be faced by an educational software designer seeking to incorporate spacing constraints in the design of the underlying algorithms. As we will see, despite the simply stated formulation of these questions, the combinatorial challenges that they lead to quickly become quite intricate.

Results

The spacing constraints are described by two infinite sequences of parameters, {ak} and {bk}. In this section, we describe how choices for these parameters affect the rate at which new educational units can be introduced into the schedule and how schedules can be tailored to particular parameter regimes and educational goals.

Overview of Results.

We begin by examining the first main issue of this paper: the trade-off between (i) the rate at which bk grows as a function of k and (ii) the rate at which tn grows as a function of n. Informally, if bk grows relatively slowly, then a lot of time must be spent on review rather than on introducing new units, and hence tn must grow more quickly, corresponding to slower learning. It is clear that tn≥n for any schedule, because even without review we can only introduce one educational unit per time step. With these considerations in mind, we investigate the following pair of questions that explore the two sides of the trade-off. First, is there a set of spacing constraints for which tn is close to this trivial bound, growing nearly linearly in n? Second, as we require bk to grow slower as a function of k, it becomes more difficult to achieve infinite perfect learning. Is there a set of spacing constraints for which infinite perfect learning is possible, and for which bk grows as a polynomial function of k? We answer both of these questions in the affirmative.

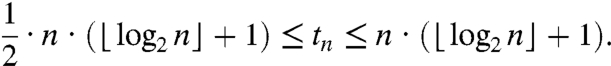

In The Recap Method, we describe a set of spacing constraints for which a schedule can be constructed that exhibits infinite perfect learning, and where the rate of learning is relatively quick. In the schedule we construct, tn grows as Θ(n log n), and in fact tn ≤ n·(⌊ log 2n⌋+1). Recalling that we must have tn≥n for all n for any schedule, we see that the recap schedule achieves infinite perfect learning with only a modest increase on this bound—that is, with a relatively small amount of review. In the SI Text, we show how to construct a schedule where tn grows at a rate that, in some sense, can be as close to linear as desired. In Superlinearity of the Introduction Time Function, we show that there can be no schedule whatsoever such that tn grows as O(n).

In The Slow Flashcard Schedule, we show a set of spacing constraints for which ak and bk grow polynomially in k and for which infinite perfect learning is possible. With these spacing constraints based on much smaller ak and bk, the schedule we construct has a slower rate of learning; tn is bounded below by Ω(n2) and bounded above by O(n3), in contrast to the schedule from The Recap Method for which the introduction time function grows as Θ(n log n). The gap between the quadratic lower bound and the cubic upper bound is an interesting open question; we give numerical evidence that in fact the lower bound may be tight, and that tn grows as O(n2). Much of this analysis is done in the SI Text.

Following these results, we turn to the second main issue in this paper, which is to identify general possibility and impossibility results for satisfying classes of spacing constraints. We first show, in Flexible Students, that the difficulty in achieving infinite perfect learning stems, in a sense, from the fact that the numbers ak are growing: Specifically, we show that for any spacing constraints in which ak = 1 and bk → ∞, it is possible to construct a schedule that exhibits infinite perfect learning. The construction introduced here demonstrates a general method that can be adapted to many sets of spacing constraints with ak > 1 as well.

Thus far, we have only considered spacing constraints that allow for the construction of schedules that exhibit infinite perfect learning. In The Finicky Slow Student, we show that there exist spacing constraints for which no schedule can exhibit infinite perfect learning. In particular, we build this impossibility result from an extreme case, where ak = bk = f(k) and f(k) is a function that grows slowly in k. These constraints represent a setting in which the student insists on reviewing material on an extremely precise and plodding schedule, with no room for error.

The difficulty in constructing a schedule for such a set of constraints is that as the knowledge base—the number of educational units introduced—grows, so does the need to review, and so the potential for scheduling conflicts increases. The slower the student [the slower the growth of f(k)], the fewer educational units can be put on the back burner, so to speak, while the student focuses on new units. The more finicky the student (the smaller the windows bk - ak), the less wiggle room there is in scheduling review.

All of these points match with intuition. Students who don’t need to review much and aren’t too picky about when the review needs to happen can be taught a lot, and fast. But students who need a lot of review and who only derive benefit from very well-timed review will be more difficult to teach. The educational mantra is “every child can learn,” but designers of personalized educational software may find that scheduling the educational process for some students is, at the least, more difficult for some than for others.

In Cramming, we show that every student can cram. More precisely, we show that, for any set of spacing constraints and any n, it is possible to construct a finite sequence that exhibits bounded learning of order n. Consistent with the discussion that accompanied the definition of bounded learning earlier, the construction assures nothing about whether the sequence can be extended beyond this moment of “expertise” at its end.

Finally, also in Cramming, we explore the question of how much can be crammed in a given amount of time. Given a set of spacing constraints and a finite number T, we derive a nontrivial upper bound on those n for which bounded learning of order n is possible using only T time steps.

The Recap Method.

Here we explore spacing constraints that allow for infinite perfect learning with a rapid learning rate—that is, where the introduction time function, tn, grows slowly.

Consider the spacing constraints ak = 2k and bk = 2k-1(k + 1). A schedule that allows for infinite perfect learning with respect to these spacing constraints can be described as follows. To find the first (k + 1)·2k entries of the schedule, consider a depth-first postorder traversal of a full binary tree of depth k with 2k leaves labeled u1, u2,…,u2k from left to right. (A depth-first postorder traversal of a tree is a particular order for visiting the nodes of a tree, defined as follows. Starting at the root v of the tree, the depth-first postorder traversal is first applied recursively to each subtree below v one at a time; after all these traversals are done, then the root v is declared to be visited.) Begin with an empty sequence. Every time a leaf is visited, append the sequence with the corresponding educational unit. Every time a nonleaf node is visited, append the sequence with the units corresponding to all of the descendant leaves, in left-to-right order. The resulting sequence gives the first (k + 1)·2k entries in the schedule.

Thus, using k = 2 we have that the first 12 entries of the schedule are u1,u2,u1,u2,u3,u4,u3,u4,u1,u2,u3,u4. We call this schedule “the recap schedule” because it incorporates periodic review of everything that has been learned so far, like a teacher saying “okay, let’s recap.”

In this schedule, the number of time steps between the kth and (k + 1)st occurrence of any particular unit is always between 2k and 2k-1(k + 1), with both bounds actually achieved for each k. This fact—along with the fact that infinitely many units occur infinitely often due to properties of depth-first traversals—establishes that the recap schedule allows for infinite perfect learning with respect to the given spacing constraints. Calculations which establish these facts are shown in the SI Text.

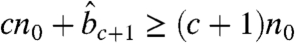

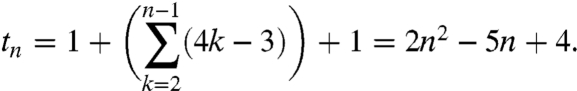

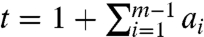

Further calculations, also shown in the SI Text, establish that tn grows as Θ(n log n) in this schedule. More precisely,

|

By generalizing the construction of the schedule, using a more general class of trees, we can show that, for a large class of functions r(n), schedules can be constructed that exhibit infinite perfect learning for which tn grows as Θ(n·r-1(n)). The class includes functions r(n) that grow arbitrarily fast, and so in that sense we can create schedules for which tn grows at a rate that is as close to linear as desired. The downside of these schedules is that they require increasingly lax spacing constraints as the growth rate of tn approaches linearity: The schedules that we construct for which tn grows as Θ(n·r-1(n)) require bk, as well as bk - ak, to grow as Θ(k·r(k)). Calculations which establish these facts, too, are shown in the SI Text.

Superlinearity of the Introduction Time Function.

Although our constructions are able to achieve introduction times tn that grow arbitrarily close to linearly in n, we can also show that an actual linear rate of growth is not achievable: For schedules that exhibit infinite perfect learning, the introduction time function tn must be superlinear. More precisely, we show that, for any schedule that exhibits infinite perfect learning with respect to any spacing constraints {(ak,bk)}, there cannot be a constant c such that tn ≤ cn for all n.

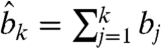

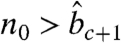

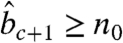

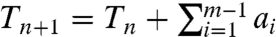

To prove this statement, we consider an arbitrary set of spacing constraints {(ak,bk)} and an arbitrary schedule that exhibits infinite perfect learning with respect to these constraints, and assume for the sake of contradiction that there is a constant c such that tn ≤ cn for all n. Let  and let n0 be any integer such that

and let n0 be any integer such that  . By our assumption, at least n0 educational units have been introduced by the time step cn0. In general, for any schedule that exhibits infinite perfect learning, any unit that has been introduced by time step t will have occurred k times by time step

. By our assumption, at least n0 educational units have been introduced by the time step cn0. In general, for any schedule that exhibits infinite perfect learning, any unit that has been introduced by time step t will have occurred k times by time step  , by the definition of

, by the definition of  . Thus at least n0 units will have occurred c + 1 times by time step

. Thus at least n0 units will have occurred c + 1 times by time step  . So

. So  because each time step corresponds to at most one educational unit. Subtracting cn0 from both sides, we have that

because each time step corresponds to at most one educational unit. Subtracting cn0 from both sides, we have that  , which contradicts our choice of n0. Thus, there cannot be a constant c such that tn ≤ cn for all n.

, which contradicts our choice of n0. Thus, there cannot be a constant c such that tn ≤ cn for all n.

The Slow Flashcard Schedule.

One can describe the construction of the recap schedule without using depth-first traversals or full binary trees, but rather using a deck of flashcards. Doing so will shed some light on the recap schedule, and will also serve as a useful jumping-off point for discussing the very different schedule which is the focus of this section. So we begin our discussion here by revisiting the recap schedule.

Imagine a deck of flashcards, with the kth card corresponding to an educational unit uk. Thus the top card corresponds to u1, the next card corresponds to u2, etc. Then we construct a schedule as follows. At every step, we present to the student the educational unit corresponding to the top card, and then reinsert the card into position 2k, where k is the number of times we have presented the educational unit corresponding to that card, up to and including this latest time step. Thus first we present u1, then we remove it from the deck and reinsert it into position 2 in the deck. Then we present u2, then remove it and reinsert it into position 2 in the deck. Then u1 is again on top and so we present it for a second time and then remove it and reinsert it into position 22 = 4. This process produces the recap schedule.

In this section, we consider a schedule that is much more difficult to describe explicitly than the recap schedule, but whose construction can similarly be described in terms of a deck of flashcards. Instead of reinserting into position 2k as above, though, we reinsert into position k + 1. Carefully applying this rule shows the first few entries of the schedule to be u1,u2,u1,u2,u3,u1,u3,u2,u4,u3. Of all schedules that can be constructed with a similar flashcard-reinsertion scheme using some strictly increasing reinsertion function r(k) with r(1) > 1, it is this schedule, constructed using r(k) = k + 1, which progresses through the deck the slowest. For this reason, we call this schedule “the slow flashcard schedule.”

In the SI Text, we show that the slow flashcard schedule exhibits infinite perfect learning with respect to the spacing constraints with (ak,bk) = (k,k2). Thus the slow flashcard schedule provides a dramatic alternative to the recap schedule. Whereas bk and bk - ak both grew exponentially in k in the recap schedule, here they grow quadratically and yet still allow for infinite perfect learning.

Numerical simulations shown in the SI Text suggest that this schedule in fact exhibits infinite perfect learning even with respect to the much tighter spacing constraints with (ak,bk) = (k,2k). If that is the case, the contrast with the recap schedule would be even more stark.

The trade-off for this slow growth in bk is the speed at which the knowledge base grows. Whereas tn, the time needed for the knowledge base to achieve size n, grew as Θ(n log n) in the recap schedule, here it is bounded below by Ω(n2). A proof of this fact can be found in the SI Text, along with numerical evidence that it in fact grows as Θ(n2).

The spacing constraints in The Recap Method and The Slow Flashcard Schedule are tailored to allow for existence proofs that certain bounds on tn, bk, and bk - ak can be achieved in the context of infinite perfect learning. The methods used to describe the schedules, though, suggest general principles for how to construct schedules with desirable properties. Moreover, we note that the schedules constructed are relevant to any set of spacing constraints that are more relaxed than the given ones: If a schedule exhibits infinite perfect learning with respect to spacing constraints {(ak,bk)}, then it also exhibits infinite perfect learning with respect to  when

when  and

and  for all k.

for all k.

In the next section, Flexible Students, we begin with a general class of students and build schedules tailored to each individual student in the class.

Flexible Students.

What if the student did not need to wait at all in order to derive benefit from studying? In other words, what if ak = 1 for all k? In this case, we can use a technique for constructing schedules that we call “hold-build”: sequencing the educational units that are known to the student in a “holding pattern” so that they meet the spacing constraints, while showing new educational units in quick repetition (thereby “building” them up). The only assumptions that are needed for the construction, besides ak ≡ 1, are that b1≥2 and that bk → ∞. (Note that bk is already required to be weakly increasing.)

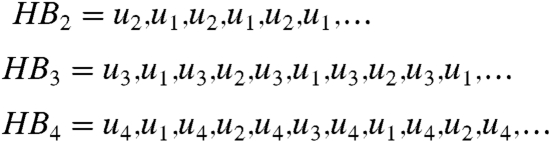

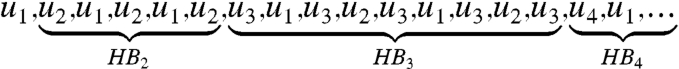

We define the sequence HBm to be the infinite sequence that starts with um, contains um in every other entry, and cycles through units u1,…,um-1 in the remaining entries. So, for example,

|

Now, consider an arbitrary set of spacing constraints such that ak ≡ 1, b1≥2, and bk → ∞. For ease of discussion, we say that an educational unit is at level k when it has been shown exactly k times. We construct the schedule that assures infinite perfect learning as follows.

First, present unit u1. Then present units according to HB2. So far so good; so long as units are presented according to HB2, we know the spacing constraints will be met, because bk≥2 for all k. Meanwhile, the levels for u1 and u2 can be built up without bound.

Continue HB2 as long as necessary until it is feasible to move on to HB3. In other words, show enough of HB2 so that if the schedule up to that point were followed by an indefinite run of HB3, then the spacing constraints would be met. This condition is guaranteed to be true after a finite number of time steps because bk → ∞ and HB2 is periodic.

Thus, once enough of HB2 has been shown, we show as much of HB3 as necessary until we can afford to move on to HB4. Then we show as much of HB4 as necessary until we can afford to move on to HB5, and we continue building the schedule like this indefinitely.

The schedule formed by concatenating hold-build patterns in this way assures infinite perfect learning, and it applies to any set of spacing constraints with ak ≡ 1, b1≥2, and bk → ∞. Thus we actually have a class of spacing constraints where infinite perfect learning is possible and yet bk can grow arbitrarily slowly. The trade-off for an exceedingly slow-growing bk will again be a fast-growing tn, corresponding to a slow rate of learning. The exact rate will depend on the exact rate of growth of bk.

To give a concrete example of this hold-build construction and an accompanying calculation of tn, we can consider the simple case where bk = k + 1. (Note that, because we only require that bk be weakly increasing, there are much slower-growing choices for bk than this one.) Then, by carrying out the construction above, we have that the sequence is

|

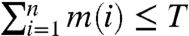

with 4k - 3 time steps spent in HBk for every k. Thus, because ui will be introduced one time step after finishing the HBi-1 part of the schedule, we have that

|

The idea behind the hold-build construction, namely the method of putting some units in a holding pattern while others are being “built up,” could readily be used to construct schedules assuring infinite perfect learning for many sets of spacing constraints with ak > 1 as well (or spacing constraints with b1 = 1, for that matter). It is a tool that can be used to tailor educational processes to model students in general.

The Finicky Slow Student.

We now give a set of spacing constraints {(ak,bk)} for which no schedule can exhibit infinite perfect learning. They are simply the constraints defined by ak = bk = k. We call this set of constraints “the finicky slow student” because bk - ak is so small, and because bk grows so slowly as a function of k. We show that no schedule can exhibit infinite perfect learning with respect to the finicky slow student.

Suppose, for the sake of contradiction, that there were a schedule that exhibited infinite perfect learning with respect to the finicky slow student. We say that a schedule incorporates educational unit ui if the unit occurs infinitely many times, and if the sequence of occurrences satisfies the spacing constraints. Thus, given the particulars of the finicky slow student, if a unit ui is incorporated, and if it first occurs at step τ, then it must also occur at steps τ + 1, τ + 3, τ + 6, τ + 10,….

By assumption, the schedule incorporates infinitely many educational units. We can assume, without loss of generality, that educational unit u1 is incorporated and that its first occurrence is at time step τ0 = 0. (Letting time start at zero here allows for cleaner calculations.) Then we know that u1 also occurs at precisely the steps τ1, τ2, τ3,…, where  . It will be sufficient to show that no other unit can be incorporated without creating a scheduling conflict—in other words, without needing to eventually be scheduled at a step of the form τi.

. It will be sufficient to show that no other unit can be incorporated without creating a scheduling conflict—in other words, without needing to eventually be scheduled at a step of the form τi.

Suppose another unit, call it u2, were incorporated, with its first occurrence at step s0. Then we know that u2 must also occur at precisely the steps s1, s2, s3,…, where  .

.

We show that there must be some step common to both sequences {si} and {τi}. Thus we will have a contradiction because, at most, one educational unit can appear in each entry of the schedule.

We begin by noting that si - τi = s0 for all i, and that si+1 - si = τi+1 - τi = ai+1 for all i. Now choose k large enough so that τk+1 - τk > s0. Then τk+1 > τk + s0 = sk. Thus for sufficiently large k, we have that τk+1 > sk. Now let m be the smallest number such that τm+1 > sm. We know m≥1, because τ0 = 0 and τ1 = 1 by construction. We claim that τm = sm-1.

If τm > sm-1, then m would not be the smallest number such that τm+1 > sm (because then m - 1 would also qualify), so τm≯sm-1.

If τm < sm-1, then we have that τm < sm-1 < sm < τm+1, which implies that sm - sm-1 ≤ τm+1 - τm - 2, because all si and τi are integer valued. Thus am ≤ am+1 - 2, so am+1 - am≥2, which is not possible because ak+1 - ak = 1 for all k. So τm≮sm-1.

Thus we have that τm = sm-1, which is a contradiction, of course, because only one educational unit can be scheduled for any given time step. Thus no schedule can exhibit infinite perfect learning with respect to the finicky slow student; in fact, the finicky slow student does not even allow for the incorporation of more than one educational unit.

This proof holds not only for the spacing constraints ak = bk = k, but for any spacing constraints such that ak = bk = f(k), where f(k) is an integer sequence such that f(1) = 1, f(k + 1) - f(k)∈{0,1}, and f(k) → ∞. The exact choice doesn’t matter; the finickiness (ak = bk) and the slowness [f(1) = 1 and f(k + 1) - f(k)∈{0,1}] are sufficient to carry out the proof as written, but with the final argument using ak+1 - ak ≤ 1 instead of ak+1 - ak = 1.

Cramming.

The focus up until now has been on infinite perfect learning, but there could be less ambitious goals for a student. We turn our attention now to cramming. At the end of this section, we address the question of how much cramming can be done in a given amount of time. We begin here with a positive result, showing that for every positive integer n and every set of spacing constraints with bk → ∞, there exists a sequence that achieves bounded learning of order n.

We consider an arbitrary set of spacing constraints with bk → ∞ and proceed by induction on n. It is clear that bounded learning is possible for n = 1; the sequence consisting simply of u1 satisfies the definition.

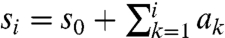

Now, let Sn be a sequence of length Tn that achieves bounded learning of order n. To complete the induction, we construct a new sequence, Sn+1 of length Tn+1, that achieves bounded learning of order n + 1.

Recall from Flexible Students that the level of an educational unit at time t in a sequence is the number of times it has appeared prior to time t. The basic idea behind the construction of Sn+1 is to start by building up the level of u1 until it is at a level m such that bm > Tn. Then we use the next Tn steps to present units u2, u3,…,un+1 according to the sequence Sn. When that is done, the time limit of bm has still not been reached for u1, and hence the sequence satisfies the definition of bounded learning of order n + 1.

Formally, let m be the smallest integer such that bm > Tn. Then present unit u1 at time t = 0 and at times  for j = 1,2,…,m - 1. Present a blank in the sequence at every other time step in between. Then, starting at time

for j = 1,2,…,m - 1. Present a blank in the sequence at every other time step in between. Then, starting at time  , present units u2,u3,…,un+1 according to the Tn elements of the sequence Sn. This sequence, through time

, present units u2,u3,…,un+1 according to the Tn elements of the sequence Sn. This sequence, through time  , is our new sequence Sn+1. By construction it satisfies the conditions of bounded learning of order n + 1. By induction, then, bounded learning of order n is possible for all positive integers n for any set of spacing constraints with bk → ∞.

, is our new sequence Sn+1. By construction it satisfies the conditions of bounded learning of order n + 1. By induction, then, bounded learning of order n is possible for all positive integers n for any set of spacing constraints with bk → ∞.

In the construction above, it is entirely possible that one or more units would begin to violate the spacing constraints even one time step later. Little is assured other than the educational units having met the scheduling constraints up to a certain time step. We call this sort of construction cramming because it presents the material with a particular target time in view and without regard to the scheduling of material after this target time, like a student cramming for a final exam who doesn’t worry about how much will be retained after the test.

Condition (ii) of our definition of bounded learning models the notion of studying up to a point in time and then being able to remember everything that was studied for at least one more time step, as if there were a quiz lasting one time step which would occur in the time step immediately following the cramming sequence. We could similarly model the notion of a quiz that lasts d time steps by requiring that if a unit’s last occurrence is s time steps from the end of the sequence, and the unit occurs a total of k times in the sequence, then s + d must be less than or equal to bk. We note that our results regarding cramming sequences could be adapted to such an alternative model.

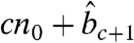

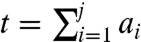

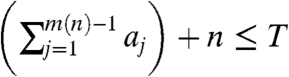

We turn now to the issue of how much can be crammed in a given amount of time. Given a set of spacing constraints {(ak,bk)}, and a positive integer T, we can put an upper bound on the numbers n for which bounded learning of order n is possible in T time steps. If we let m(i) denote the smallest integer k such that bk≥i, then it can be shown that n must satisfy  and

and  . Each inequality implicitly bounds n from above. These bounds reflect the constraints imposed by the limited number of time steps available, and the notions that {ak} and {bk} represent limitations on how fast the level of an educational unit can be built up and how long educational units can be remembered. Details can be found in the SI Text.

. Each inequality implicitly bounds n from above. These bounds reflect the constraints imposed by the limited number of time steps available, and the notions that {ak} and {bk} represent limitations on how fast the level of an educational unit can be built up and how long educational units can be remembered. Details can be found in the SI Text.

Despite the negative connotations associated with cramming, the basic idea can actually be useful in a number of settings in real life. A traveler may only care to learn a language enough to travel in a foreign country just once, for example, or a performer may only need to have a certain skill set on the day of a performance and not necessarily after that. Perhaps educational software of the future will have tunable parameters that allow the student (or teacher or parent) to set the goal of the educational process. This way the software may not only adapt to the natural abilities of the students, but also to their personal goals.

Conclusion

The possibilities for future work seem limitless. A more complete theory of infinite perfect learning could be one goal. Such a theory would include more techniques for constructing educational processes tailored to students, and a more complete theory relating ak and bk to the maximum rate at which the model student can accrue knowledge.

A major goal should be a truly adaptive educational process: one that adapts to the student in real time. For example, in this paper we model the educational process as a sequence designed to satisfy a set of constraints fixed in advance, but an alternate approach would be to test the student’s knowledge throughout the process, and for the schedule to be controlled by an online algorithm that chooses the next unit based on the answers the student has given. Such a system would model the process of a teacher observing student progress before deciding what to teach next.

Modeling this situation would be an exciting challenge. The interaction between the two online algorithms, one modeling the student and the other modeling the teacher, promises to be complex and fascinating, and hopefully enlightening and useful to future designers and engineers of educational software. There is much opportunity here for mathematical modeling, theoretical calculations, and numerical simulations that shed light on what makes an effective teacher and how educational software can adapt in real time to user behavior.

Another area for future work is the design and analysis of models that are tailored to specific subjects. Perhaps a model involving a network of educational units could be used to investigate the phenomenon that it is often easier to learn a set of facts that somehow “reinforce” each other than a set of unrelated facts. Introducing relationships between educational units calls for new models of the student’s reception of the units, which in turn call for different educational processes.

Yet another avenue of research is empirical work. The techniques and intuitions gained from theoretical work should be put to use to create actual educational software. Then data from real students can be collected and the process of using the data to validate and refine the models can begin.

Finally, the mathematics of managing spacing constraints in sequences could find additional applications beyond those considered above, for example, to task management in parallel processing or the study of multitasking in humans.

The models presented in this paper are simple and theoretical. Designers of educational software will likely need to implement models and algorithms that are more complex and tailored to the educational content being delivered. It is our hope that work on simple theoretical models will provide the foundations of intuition for designers of educational software, in much the same way that algorithmic game theory does for engineers who work in online ad auctions and other related fields.

With the current boom in educational software—not to mention the humanoid robot teacher industry (14)—it is clear that the time has come to develop a theory of algorithmic education.

Supplementary Material

Acknowledgments.

Research supported in part by an National Science Foundation (NSF) Graduate Research Fellowship, The John D. and Catherine T. MacArthur Foundation, a Google Research Grant, a Yahoo! Research Alliance Grant, and NSF Grants CCF-0325453, BCS-0537606, IIS-0705774, and CISE-0835706.

Footnotes

Conflict of interest statement: T.P.N. is the owner of a small, wholly owned company, Flash of Genius, LLC, that sells educational software.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1109863109/-/DCSupplemental.

References

- 1.Obama B. Washington, DC: Natl Acad Sci; April 27, 2009. Remarks by the President at the National Academy of Sciences Annual Meeting. http://www.whitehouse.gov/the_press_office/Remarks-by-the-President-at-the-National-Academy-of-Sciences-Annual-Meeting/ [Google Scholar]

- 2.Ebbinghaus H. Memory: A Contribution to Experimental Psychology (Translated from German) [trans Bussenius RH (1913)]. New York: Teachers College at Columbia University; 1885. [Google Scholar]

- 3.Dempster F. The spacing effect: A case study in the failure to apply the results of psychological research. Am Psychol. 1988;43:627–634. [Google Scholar]

- 4.Balota DA, Ducheck JM, Logan JM. In: Foundation of Remembering: Essays in Honor of Henry L. Roediger III. Nairne JS, editor. New York: Psychology Press; 2007. pp. 83–105. [Google Scholar]

- 5.Cepeda NJ, Pashler H, Vul E, Wixted JT, Rohrer D. Distributed practice in verbal recall tasks: A review and quantitative synthesis. Psychol Bull. 2006;132:354–380. doi: 10.1037/0033-2909.132.3.354. [DOI] [PubMed] [Google Scholar]

- 6.Crowder RG. Principles of Learning and Memory. New York: Psychology Press; 1976. [Google Scholar]

- 7.Roediger HI, III, Karpicke JD. In: Successful Remembering and Successful Forgetting: A Festschrift in Honor of Robert A. Bjork. Benjamin AS, editor. New York: Psychology Press; 2011. pp. 23–48. [Google Scholar]

- 8.Melton AW. Situation with respect to spacing of repetitions and memory. J Verb Learn Verb Behav. 1970;9:596–606. [Google Scholar]

- 9.Bahrick HP, Bahrick LE, Bahrick AS, Bahrick AP. Maintenance of foreign language vocabulary and the spacing effect. Psychol Sci. 1993;4:316–321. [Google Scholar]

- 10.Landauer T, Bjork R. Practical Aspects of Memory. New York: Academic; 1978. pp. 625–632. [Google Scholar]

- 11.Pimsleur P. A memory schedule. Mod Lang J. 1967;51:73–75. [Google Scholar]

- 12.Wozniak PA, Gorzelanczyk EJ. Optimization of repetition spacing in the practice of learning. Acta Neurobiol Exp. 1994;54:59–62. [PubMed] [Google Scholar]

- 13.Wolf G. Want to remember everything you’ll ever learn? Surrender to this algorithm. Wired. 2005.

- 14.Hyun E, Kim S, Jang S, Park S. Comparative study of effects of language instruction program using intelligence robot and multimedia on linguistic ability of young children. Robot and Human Interactive Communication. 2008:187–192. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.