Abstract

Motion capture studies show that American Sign Language (ASL) signers mark the end-points of telic verb signs with distinctive hand articulator motion, which decelerates rapidly to a stop at the end of these signs, in contrast to atelic signs (Malaia & Wilbur, in press). Non-signers are also sensitive to velocity and deceleration cues when segmenting visual scenes into events (Zacks et al., 2010; Zacks et al., 2006). This raises the question of whether the neural regions ASL signers use to process sign language verbs are similar to those non-signers use for event segmentation.

The present study investigated the neural substrate of predicate perception and linguistic processing in ASL. The observed patterns of activation indicate that Deaf signers process telic verb signs as having higher phonological complexity than atelic verb signs. Together with previous neuroimaging data on spoken and sign languages (Shetreet, Friedmann, & Hadar, 2010; Emmorey et al., 2009), these results suggest a route by which a prominent perceptual-kinematic feature used for non-linguistic event segmentation might come to be processed as an abstract linguistic feature through sign language exposure.

Keywords: sign language, ASL, fMRI, event structure, verb, neuroplasticity, motion

1. Introduction

Humans use kinematic features of motion in dynamic scenes, such as the velocity and deceleration of an actor's limb movements, to parse natural scenes into discrete events (Speer, Swallow, & Zacks, 2003; Swallow, Zacks, & Abrams, 2009; Zacks, Kumar, Abrams, & Mehta, 2009; Zacks, Swallow, Vettel, & McAvoy, 2006). Neuroimaging studies show that perceived event boundaries, whether derived from visual segmentation of a natural scene or inferred conceptually from spoken language, trigger an update in episodic memory and thus play an important role in extracting information from visual and linguistic input (Swallow et al., 2009; Yarkoni, Speer, & Zacks, 2008). Recent motion capture studies of predicates in American Sign Language (ASL) demonstrated that signers produce verb signs denoting event boundaries with higher peak velocity and significantly faster deceleration at the end of the sign (Malaia, Borneman, & Wilbur, 2008; Malaia & Wilbur, in press). The present study investigates the neural substrate underlying comprehension of verbs denoting event boundaries in a visual language, ASL.

Event boundaries, as conceptualized in verb typology, have long interested linguistic theorists as possible semantic primitives (Dowty, 1979; Jackendoff, 1991; Pustejovsky, 1991; Ramchand, 2008; van Hout, 2001; Van Valin, 2007; Vendler, 1967; Verkuyl, 1972). Predicates denoting events with an inherent end-point representing a change of state (break, appear) are considered semantically telic, as opposed to predicates describing homogeneous, atelic events, such as swim or sew. The semantic type of a predicate (telic or atelic) has been shown to affect the syntactic structure of the sentence in such typologically distinct spoken languages as Russian, Dutch, Icelandic, and Bengali (Ramchand, 1997, 2008; Svenonius, 2002; van Hout, 2001). ERP studies of sentence processing show that semantically telic verbs facilitate online syntactic processing during reading (Malaia, Wilbur, & Weber-Fox, 2009). Developmental research on language acquisition shows that children with specific language impairment are less sensitive to telicity cues, which might contribute to their difficulties in learning and using finite tense forms (Leonard & Deevy, 2010).

At least since Poizner (1981, 1983), sign language researchers have hypothesized that the kinematic properties of hand articulator movement in sign languages carry phonological information. From the production standpoint, sign languages are characterized by rhythmic (syllabic) hand motion, consistently high speed with rapid acceleration-deceleration patterns, and frequent changes in the direction of motion (Brentari, 1998; Emmorey, Xu, Gannon, Goldin-Meadow, & Braun, 2009; MacSweeney, Capek, Campbell, & Woll, 2008). Adaptations of the signers’ visual system to the processing demands of sign language have been found to correlate with informationally dense (i.e., linguistically distinctive) features of sign language, enhancing signers’ abilities in motion detection (Bosworth & Dobkins, 1999; Neville & Lawson, 1987), peripheral vision (Loke & Song, 1991; Parasnis & Samar, 1985; Reynolds, 1993), and motion similarity (Poizner, 1981, 1983). Perception of rapid spectral changes in the signal, which are characteristic of linguistic input (Poizner, 1981; Zatorre & Belin, 2001), is supported by neurons sensitive to specific ranges of spectral change over time (as characterized by their spectro-temporal receptive fields, or STRFs), which adapt to the information-carrying properties of the environment (Theunissen et al., 2001; Vinje & Gallant, 2000). Features extracted from linguistic input, whether in the auditory or the visual modality, can then be further processed as linguistic (phonological) information.

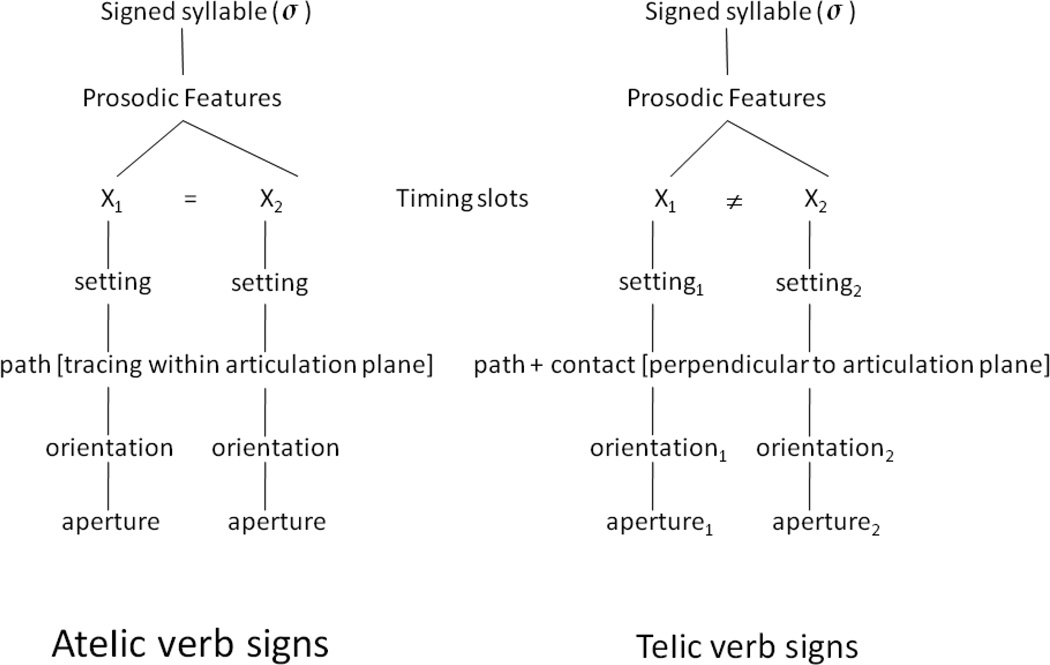

In Brentari’s (1998) Prosodic Model of sign language phonology, ASL verb signs denoting discrete events with boundaries (telic) and those denoting events without inherent boundary points (atelic) differ in their dynamic (prosodic) features, which unfold sequentially over time, and in their syllable structure. Specifically, atelic verb signs have the same handshape and orientation specifications at the initial and final positions of the sign and thus present a simple syllable structure. Telic ASL signs, in contrast, have a more complex syllable structure, as they always employ one of the following dynamic changes between the beginning and the end of the sign: (1) a change of handshape aperture (open to closed, or closed to open); (2) a change of orientation (e.g., palm up to palm down); or (3) movement in a direction orthogonal to the plane of articulation, with an abrupt stop at a location in space (Wilbur, 2008). Within the Prosodic Model, telic and atelic signs thus fall into distinct phonological classes (Figure 1).

Figure 1.

Atelic and telic verb signs in ASL differ in whether the two timing slots in sign-syllables contain the same or different setting, orientation, aperture, and directionality of the movement path.

The objective of the present study was to investigate the neural substrate of processing telic and atelic ASL verb signs using fMRI. Native Deaf¹ signers and a control group of hearing non-signers were presented with videos of telic and atelic ASL signs. The signers were asked whether the action denoted by each sign was likely to occur inside or outside a house; this task ensured that they attended to and linguistically processed the stimuli. The non-signers, who had no prior exposure to American Sign Language, were asked whether the signer’s hands were moving symmetrically with respect to the central body axis, to ensure that they attended to the stimuli. We hypothesized that, in the Deaf participants, telic and atelic verb signs might elicit differential activation in areas associated with phonological and semantic processing of sign language.

Prior sign language studies have demonstrated engagement of the left inferior frontal gyrus (IFG), planum temporale, and superior temporal gyrus (STG) in phonological processing of visual languages (MacSweeney, Waters, Brammer, Woll, & Goswami, 2008; MacSweeney et al., 2002; Petitto et al., 2000); we therefore expected focal activation in these regions when comparing ASL signs with gross hand motion. For hearing participants, we expected activation in motion-sensitive regions (temporo-occipito-parietal junction) due to the large velocity and acceleration differences between ASL signs and gross gesture.

In hearing participants, identification of event boundaries in linguistic input and visual scenes has previously elicited activation in a network of regions including motion-sensitive area MT+, fusiform gyrus, posterior cingulate cortex/precuneus (BA 7, 23, 31), right superior temporal cortex (BA 22), right anterior middle temporal cortex (BA 21), and middle frontal gyrus (BA 6/8) (Speer et al., 2003; Speer, Zacks, & Reynolds, 2007; Yarkoni et al., 2008). We hypothesized that, for Deaf participants, processing of the conceptual event boundaries posited by telic verbs would elicit higher activation in these areas during viewing of telic verb signs than of atelic ones. Based on prior literature, we also hypothesized that phonological (spectro-temporal) differences between telic and atelic signs would elicit activation in the left STG in Deaf signers.

2. Materials and methods

2.1 Participants

Two subject populations were studied: Deaf signers and hearing non-signers. Seventeen healthy deaf adults who were native ASL signers (including 8 Deaf of Deaf parents; 10 male, 7 female; 18–58 years old, mean age 35.6, SD = 14.2) and thirteen hearing non-signers (7 male, 5 female; 19–36 years old, mean age 24.1, SD = 4.5) participated for monetary compensation after giving written informed consent in accordance with the Institutional Review Board at Purdue University. Data collected from three Deaf participants were discarded, two due to equipment malfunction and one due to left-handedness (Oldfield, 1971); data from one hearing participant were also discarded due to recording issues. All included participants were right-handed; five Deaf and seven hearing participants were right-eye dominant. None of the participants had any history of head injury or other neurological problems, and all had normal or corrected-to-normal vision.

2.2 Stimuli

ASL verb signs and non-communicative gestures, produced by a native signer wearing motion capture sensors, were video recorded and used as stimuli. The gestures consisted of non-intentional (non-communicative) slow movements of the arms, raised to a T-position (straight out to the sides of the body) and lowered from it. The following signs from everyday ASL discourse were used in the study²:

Telic (21): STING, SHUT-DOWN-COMPUTER, HIT, PLUG-IN, APPEAR, CATCH-UP, OPEN-DOOR, STOP, FINISH, CHECK, TAKE-FROM, CLOSE-DOOR, DIE, SEIZE, DISAPPEAR, ARREST, UNZIP, BECOME, LOOK-AT, SEND, ARRIVE.

Atelic (21): TRAVEL, RIDE-IN, SWIM, LIVE, PROCEED, SHAVE, FOLLOW, VISIT, WRITE, KNOW, FALL-BEHIND, SMELL, TOLERATE, HATE, DRAW, SEW-BY-MACHINE, RELAX, LIKE, HAVE, MEAN, SUGGEST.

The signs and gestures were produced by a native signer wearing a Gypsy 3.0 wired motion capture suit, with marker data (XYZ positions of all markers) collected at 50 fps. A simultaneous video recording at 30 fps was made with an NTSC video camera on a tripod outside the motion capture recording field. Positional data from the marker on the right wrist, which tracked the movement of the dominant signing hand, were used for the kinematic analysis (Malaia & Wilbur, in press).

On average, the maximum velocity of the dominant hand was lower in ASL signs (M = 1.17 m/s, SE = 0.054) than in gesture (M = 1.78 m/s, SE = 0.279; t(42) = −2.338, p < 0.024), due in part to the fact that the gestures moved across a much larger spatial volume in the same duration as the signs. Average maximum deceleration, on the other hand, was significantly higher in ASL (M = 18.98 m/s², SE = 10.21) than in gesture (M = 0.096 m/s², SE = 0.08; t(42) = −2.585, p < 0.013). Maximum acceleration was also higher on average in ASL signs (M = 12.25 m/s², SE = 5.67) than in gesture (M = 8.81 m/s², SE = 0.168), but this difference was not significant (t(42) = 0.85, p > .05).
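The section does not state how peak velocity, acceleration, and deceleration were derived from the 50 fps wrist-marker positions. As a minimal sketch, assuming XYZ positions in metres and central finite differences via NumPy's `np.gradient`, the peak measures could be computed as below. The function name `kinematic_peaks` and the definition of deceleration as the most negative rate of change of speed are assumptions made for illustration, not necessarily the procedure of Malaia & Wilbur (in press).

```python
import numpy as np

FPS = 50.0  # motion-capture sampling rate reported above (50 fps)


def kinematic_peaks(xyz_m, fps=FPS):
    """Peak speed (m/s), peak acceleration and peak deceleration (m/s^2)
    for one wrist-marker trajectory of shape (n_frames, 3), in metres.

    Assumption: acceleration/deceleration are taken as the rate of change of
    tangential speed, estimated with central finite differences.
    """
    xyz = np.asarray(xyz_m, dtype=float)
    dt = 1.0 / fps
    vel = np.gradient(xyz, dt, axis=0)    # per-axis velocity, m/s
    speed = np.linalg.norm(vel, axis=1)   # tangential speed, m/s
    dspeed = np.gradient(speed, dt)       # change of speed, m/s^2
    peak_acc = max(float(dspeed.max()), 0.0)
    peak_dec = max(float(-dspeed.min()), 0.0)  # reported as a positive magnitude
    return float(speed.max()), peak_acc, peak_dec


# Synthetic example: the hand moves at 1 m/s along x for 0.5 s, then stops abruptly.
t = np.arange(0.0, 1.0, 1.0 / FPS)
xyz = np.column_stack([np.minimum(t, 0.5), np.zeros_like(t), np.zeros_like(t)])
v_max, a_max, d_max = kinematic_peaks(xyz)
print(v_max, d_max)  # approximately 1.0 m/s and 25 m/s^2 at this sampling rate
```

Note that the finite-difference estimate of an abrupt stop depends on the sampling rate, so deceleration values computed this way are only comparable across stimuli recorded at the same frame rate.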

Video clips of these ASL verb signs were used to create stimulus blocks of 7 telic or 7 atelic ASL signs in a block paradigm, with non-ASL gesture as the baseline condition. Each 28-second block of telic or atelic signs contained seven 2.5-second videos of a predicate sign, each followed by 1.5 seconds of monochrome grey background, for a total of 4 seconds per verb sign. The gestures were composed into blocks in the same way, and the entire set was presented in the following order: a 16-second block of 4 baseline gestures, a 28-second block of 7 telic ASL predicates, a 28-second block of 7 baseline gestures, a 28-second block of 7 atelic predicates, and so on, for a total of six ASL stimulus blocks (three telic, three atelic) alternating with 28-second baseline gesture blocks (B-T-B-A-B-T-B-A-B-T-B-A-B). Each run lasted 5 min 52 s and was repeated four times for each subject with the same stimuli; only two runs were recorded for one subject due to equipment malfunction.
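The timing arithmetic of this block design can be checked with a short sketch; the names `ORDER` and `build_schedule` are illustrative only. It assumes that every block after the opening 16-second baseline block holds seven 4-second items, and it borrows TR = 2 s and the 176 time points from Section 2.3 to confirm that a 5 min 52 s run matches the reported number of time points.

```python
# Hypothetical reconstruction of one run's block schedule from the description above.
# B = baseline gesture, T = telic signs, A = atelic signs.
ORDER = "B-T-B-A-B-T-B-A-B-T-B-A-B".split("-")
ITEM_S = 4.0             # 2.5 s clip + 1.5 s grey background
ITEMS_PER_BLOCK = 7      # 7 items -> 28 s blocks
FIRST_BLOCK_ITEMS = 4    # the opening baseline block has 4 gestures -> 16 s


def build_schedule(order=ORDER):
    """Return a list of (label, onset_s, duration_s) tuples for one run."""
    schedule, onset = [], 0.0
    for i, label in enumerate(order):
        n_items = FIRST_BLOCK_ITEMS if i == 0 else ITEMS_PER_BLOCK
        duration = n_items * ITEM_S
        schedule.append((label, onset, duration))
        onset += duration
    return schedule


schedule = build_schedule()
run_s = sum(duration for _, _, duration in schedule)
telic_onsets = [onset for label, onset, _ in schedule if label == "T"]

TR_S, N_VOLUMES = 2.0, 176   # acquisition parameters from Section 2.3
print(run_s)                       # 352.0 (= 5 min 52 s)
print(telic_onsets)                # [16.0, 128.0, 240.0]
print(N_VOLUMES * TR_S == run_s)   # True
```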

The stimuli were displayed to participants via Nordic NeuroLab Visual System goggles (field of view: 30° horizontal, 23° vertical). Deaf signing participants responded to the stimuli by pressing buttons on an MRI-compatible response box (Current Designs LLC HH-2x4-C) with their left hand, using their index finger to indicate that the action denoted by the predicate was likely to happen inside a house, and their middle finger to indicate that it was more likely to happen outside a house. Hearing non-signing participants used the same response box to indicate, for each ASL sign video, whether the signer’s hands were moving symmetrically with respect to the central body axis (“yes”: index finger; “no”: middle finger). Both Deaf and hearing participants completed a training session before the neuroimaging session. Task responses were collected during the fMRI sessions to ensure continuous attention to the stimuli in all participants, and semantic processing of the predicates in the Deaf signers. One Deaf subject was excluded from the final analysis for behavioral non-compliance; all other participants responded to each ASL stimulus with a button press. Responses were not analyzed by stimulus subcategory (inside/outside), as the tasks were primarily used to ensure that all participants were awake and attending to the stimuli and that the Deaf participants carried out semantic processing of the stimuli.

2.3 Data acquisition and analysis

All imaging data were collected on a 3 T GE Signa HDx scanner (Purdue University MRI Facility, West Lafayette, Indiana). High-resolution 3D FSPGR anatomical images (FOV = 24 cm, 186 sagittal slices, 1 mm × 1 mm in-plane resolution, slice thickness = 1 mm) were acquired before the functional scans. Functional scans were collected using a gradient-echo EPI sequence (TE = 22 ms, TR = 2 s, FOV = 24 cm, FA = 70°, 26 contiguous 4-mm slices, 3.8 mm × 3.8 mm in-plane resolution; 176 time points). Four runs of this sequence were collected for each subject, except for one subject, for whom only two runs were collected.

Preprocessing and preliminary fixed-effects analysis of the functional imaging data were carried out using SPM5 (http://www.fil.ion.ucl.ac.uk/spm). First, the initial 6 volumes were removed to allow for scanner stabilization, and each subject’s data were motion-corrected to the 7th acquired volume; volumes associated with excessive head movement (more than 1 mm displacement between successive acquisitions) were eliminated. The data were then normalized to standard MNI space using the T2-weighted template provided by SPM5 and resliced to 2 × 2 × 2 mm³. Registration was checked manually after normalization to verify its accuracy. Each subject's T1-weighted whole-brain anatomical image was coregistered to the T1-weighted template provided by SPM5 and segmented to extract gray matter maps. These maps were then optimally thresholded using the Masking toolbox of SPM5 to produce binary masks, which were used as explicit masks in subsequent analyses. Finally, the functional data were smoothed with an isotropic Gaussian filter (FWHM = 8 mm) to compensate for anatomical variability between subjects and to meet the statistical assumptions of the general linear model.
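Two of the preprocessing quantities above can be made concrete with a minimal sketch. The text does not specify how "displacement between successive acquisitions" was measured, so the hypothetical helper `flag_excess_motion` (not an SPM5 function) assumes Euclidean translation between consecutive volumes and ignores rotations. The helper `fwhm_to_sigma` converts the 8 mm FWHM smoothing kernel to its standard deviation using σ = FWHM / (2√(2 ln 2)).

```python
import numpy as np


def flag_excess_motion(rp, threshold_mm=1.0):
    """Flag volumes that moved more than threshold_mm since the previous volume.

    rp: (n_volumes, 6) realignment parameters. Columns 0-2 are taken to be
    x/y/z translations in mm (as in SPM's rp_*.txt files); rotations in
    columns 3-5 are ignored in this simplified sketch.
    """
    trans = np.asarray(rp, dtype=float)[:, :3]
    step_mm = np.linalg.norm(np.diff(trans, axis=0), axis=1)
    flags = np.zeros(len(trans), dtype=bool)
    flags[1:] = step_mm > threshold_mm   # the first volume has no predecessor
    return flags


def fwhm_to_sigma(fwhm_mm, voxel_mm=None):
    """Standard deviation of a Gaussian kernel with the given FWHM,
    in mm, or in voxels if voxel_mm is given."""
    sigma_mm = fwhm_mm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    return sigma_mm if voxel_mm is None else sigma_mm / voxel_mm


rp = np.zeros((5, 6))
rp[3, 0] = 1.5                  # a 1.5 mm jump in x at volume 3, back to 0 at volume 4
print(flag_excess_motion(rp))   # [False False False  True  True]
print(round(fwhm_to_sigma(8.0), 3), round(fwhm_to_sigma(8.0, voxel_mm=2.0), 3))  # 3.397 1.699
```

In this sketch both the jump away and the return are flagged, because each is a displacement of more than 1 mm from the preceding acquisition.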

Initial fMRI analyses: Individual subject analyses were first performed to identify the brain areas differentially activated by Telic and Atelic predicate signs. For each subject, t-statistic maps were computed using a general linear model in SPM5, with the six motion parameters included as additional regressors. Specifically, activation for Atelic and Telic verbs was contrasted, yielding maps of greater responses to atelic stimuli (Atelic > Telic) or to telic stimuli (Telic > Atelic); in addition, activation for the conjunction of the two ASL sign conditions was contrasted against activation for the baseline gesture.
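The first-level model described above can be illustrated with a simplified sketch. Boxcar regressors for the telic and atelic blocks are convolved with a double-gamma haemodynamic response that approximates, but is not identical to, SPM's canonical HRF; six motion regressors and a constant are appended; and a t statistic for a contrast such as Telic > Atelic is computed by ordinary least squares. Gesture is treated as the implicit baseline, and the serial-correlation modelling and high-pass filtering that SPM applies are omitted. All function names are hypothetical, and the data are synthetic.

```python
import numpy as np
from scipy.stats import gamma

TR_S = 2.0


def double_gamma_hrf(tr=TR_S, length_s=32.0):
    """Double-gamma HRF (response peaking near 5 s, undershoot near 15 s), unit sum."""
    t = np.arange(0.0, length_s, tr)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def block_regressor(blocks, n_vols, tr=TR_S):
    """Boxcar sampled at TR for (onset_s, duration_s) blocks, convolved with the HRF."""
    box = np.zeros(n_vols)
    for onset, duration in blocks:
        box[int(onset // tr):int((onset + duration) // tr)] = 1.0
    return np.convolve(box, double_gamma_hrf(tr))[:n_vols]


def contrast_t(y, X, c):
    """OLS fit of y = X @ beta + e; return the t statistic for contrast vector c."""
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = X.shape[0] - np.linalg.matrix_rank(X)
    sigma2 = resid @ resid / dof
    return float(c @ beta / np.sqrt(sigma2 * c @ np.linalg.pinv(X.T @ X) @ c))


# Block onsets follow the B-T-B-A-... order described in Section 2.2 (28 s blocks).
n_vols = 176
telic = block_regressor([(16, 28), (128, 28), (240, 28)], n_vols)
atelic = block_regressor([(72, 28), (184, 28), (296, 28)], n_vols)

rng = np.random.default_rng(0)
motion = 0.1 * rng.standard_normal((n_vols, 6))      # stand-in for realignment parameters
X = np.column_stack([telic, atelic, motion, np.ones(n_vols)])
y = 3.0 * telic + 1.0 * atelic + rng.standard_normal(n_vols)  # synthetic voxel time series

c_telic_vs_atelic = np.array([1.0, -1.0] + [0.0] * 7)
print(contrast_t(y, X, c_telic_vs_atelic))   # positive, since the telic effect was set larger
```

With gesture as the implicit baseline, a Telic > Gesture contrast in this sketch would simply be `[1, 0, 0, ...]`.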

Secondary fMRI analysis: The individual contrasts for Telic vs. Gesture and Atelic vs. Gesture in Deaf and hearing participants were entered into a repeated-measures analysis of variance (ANOVA) in SPM5 with two factors: Telicity (Telic, Atelic) and Deafness (Deaf, Hearing). Because this analysis revealed a main effect of Deafness, the individual contrasts for Telic vs. Atelic, Telic vs. Gesture, and Atelic vs. Gesture were then entered into one-sample t-tests in SPM5 to obtain group results for the Deaf and Hearing groups separately. For all contrasts, the anatomical regions, maximum t values, MNI coordinates, and cluster sizes of significant activation regions (p < 0.05, corrected for false discovery rate; cluster size ≥ 10 voxels) revealed by this random-effects analysis were identified. Additional trend-level clusters (size ≥ 10) reaching an uncorrected significance of p < 0.001 were also identified to evaluate the extent of cortical involvement.
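For readers unfamiliar with false-discovery-rate thresholding, the sketch below implements the Benjamini-Hochberg step-up procedure, the standard form of voxel-wise FDR control. It is assumed here for illustration; SPM5's exact implementation may differ in details. The helper name `bh_threshold` is hypothetical, and the example p-values are invented, not taken from the study.

```python
import numpy as np


def bh_threshold(p_values, q=0.05):
    """Benjamini-Hochberg step-up procedure.

    Returns the largest p-value p_(k) such that p_(k) <= q * k / m, i.e. the
    cutoff at or below which tests are declared significant while controlling
    the false discovery rate at level q. Returns 0.0 if no test survives.
    """
    p = np.sort(np.asarray(p_values, dtype=float).ravel())
    m = p.size
    below = p <= q * np.arange(1, m + 1) / m
    return float(p[below].max()) if below.any() else 0.0


p = [0.001, 0.008, 0.039, 0.041, 0.042, 0.06, 0.074, 0.205, 0.212, 0.216]
cutoff = bh_threshold(p)
print(cutoff)                             # 0.008
print(sum(pi <= cutoff for pi in p))      # 2 tests survive
```

In an fMRI map, `p_values` would be the voxel-wise p-values within the analysis mask, and the returned cutoff would be applied to every voxel before any cluster-size criterion.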

3. Results

3.1 Behavioral results

3.1.1. Deaf signers

Button-press responses to the question “Is this event more likely to happen inside a house, or outside?” were collected during verb sign presentation to ensure behavioral compliance and sustained attention to the semantics of the stimuli. Participants were instructed not to respond to gestures. All participants except one (who was excluded from the group analysis) performed the task correctly, responding to each ASL stimulus.

3.1.2. Hearing non-signers

Hearing non-signers were asked to determine whether the movement of the hands in verb signs was symmetrical with respect to the central axis of the body. Participants were instructed not to respond to gestures. All participants included in the analysis performed the task correctly, responding to each ASL sign.

3.2. Activation analyses

3.2.1. Main effects (ANOVA)

The significant main effect of Deafness revealed by the ANOVA is presented in Table 1. Deaf participants appear to have higher activation than hearing non-signers in the right inferior frontal and middle temporal gyri, as well as in bilateral middle occipital gyrus and premotor cortex (middle/superior frontal gyri). No other main effects or interactions were found.

Table 1.

Cortical areas activated by viewing of ASL predicates vs. gesture in Deaf signers and hearing non-signers (2-tailed t-test).

| Anatomical region | Left: cluster size | Left: BA | Left: peak t value | Left: peak voxel coordinates | Right: cluster size | Right: BA | Right: peak t value | Right: peak voxel coordinates |
|---|---|---|---|---|---|---|---|---|
| Telic > Atelic | | | | | | | | |
| Fusiform gyrus | | | | | 102 | 36/37 | 4.87 | 28 −34 −22 |
| Lingual gyrus | 35 | | 4.26 | −18 −80 −14 | 197 | | 5.53 | 22 −60 −6 |
| Parahippocampal gyrus | | | | | 129 | | 5.38 | 30 −36 −8 |
| Superior temporal gyrus | | | | | 17 | 22 | 4.09 | 54 10 0 |
| | | | | | 51 | 22 | 4.87 | 52 −20 4 |
| Heschl’s gyrus | 67 | 13 | 4.35 | −32 −26 12 | | | | |
| Inferior Parietal lobe/AG/SMG | | | | | 64 | 19/39 | 4.30 | 36 −82 36 |
| | 23 | 40 | 4.14 | −36 −42 50 | 190 | 40/39 | 5.19 | 58 −58 42 |
| Cerebellum | 17 | | 4.26 | −28 −54 −36 | 412 | | 5.55 | 18 −68 −30 |
| Precuneus/post. cingulate | | | | | 274 | 31 | 5.53 | 10 −30 36 |
| | | | | | 19 | 7 | 3.70 | 12 −74 54 |
| Inferior Frontal Gyrus | | | | | 54 | 47/13 | 4.35 | 36 16 −4 |
| ASL > Gesture | | | | | | | | |
| Occipital lobe | | | | | 122 | 18/19 | 4.40 | 42 −80 −14 |
| | 23 | 18/19 | 3.68 | −48 −76 −12 | 29 | 18 | 4.38 | 8 −104 −4 |
| Middle Temporal gyrus | 497 | 21 | 5.59 | −62 −46 −8 | 49 | | 4.64 | 48 −24 −10 |
| | 30 | 39 | 4.00 | −58 −58 6 | | | | |
| Cerebellum | 33 | | 4.43 | −4 −52 −36 | | | | |
| | 57 | | 4.25 | −42 −66 −28 | | | | |
| | 62 | | 4.55 | −38 −50 −30 | | | | |
| Middle Frontal Gyrus | 81 | 10/46 | 4.73 | −42 48 8 | 145 | 9 | 4.61 | 44 28 34 |
| Inferior Frontal Gyrus/insula | 1558 | 6/9/46, 44/45/47 | 5.71 | −38 2 34 | 331 | 13/45/47 | 5.17 | 36 18 10 |
| | 124 | 13/45/47 | 4.04 | −30 22 10 | | | | |

Clusters achieving p< 0.001, uncorrected, are reported.

For each cluster, the peak location is given in MNI coordinates, along with its Brodmann area (BA) and sulcal/gyral locus. T values represent the peak voxel activation within each cluster. Clusters have been thresholded at 17 voxels (136 mm³), as a Monte Carlo simulation with 1000 iterations (AlphaSim, AFNI package) suggests a whole-brain p < .05 at a cluster-size threshold of k = 17, with an individual voxel detection probability threshold of .005.
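The cluster-extent rule in this note (k = 17 voxels of 2 × 2 × 2 mm³, i.e., 17 × 8 = 136 mm³) can be sketched as follows. The sketch assumes face (6-)connectivity when grouping voxels into clusters, which is the default of `scipy.ndimage.label` in 3-D; SPM and AFNI allow other neighbourhood definitions, so cluster sizes may differ slightly. The helper names are hypothetical, and the AlphaSim simulation that produced k = 17 is not reproduced here.

```python
import numpy as np
from scipy import ndimage

VOXEL_MM = (2.0, 2.0, 2.0)   # resliced voxel size reported in Section 2.3


def cluster_extent_mask(stat_map, voxel_threshold, k_min=17):
    """Boolean mask of suprathreshold voxels lying in clusters of >= k_min voxels."""
    supra = np.asarray(stat_map) > voxel_threshold
    labels, _ = ndimage.label(supra)          # 6-connectivity by default in 3-D
    sizes = np.bincount(labels.ravel())       # sizes[0] counts the background
    keep = sizes >= k_min
    keep[0] = False                           # never keep the background label
    return keep[labels]


def voxels_to_mm3(k, voxel_mm=VOXEL_MM):
    """Volume in mm^3 of k voxels of the given size."""
    return k * float(np.prod(voxel_mm))


stat = np.zeros((10, 10, 10))
stat[1:4, 1:4, 1:3] = 5.0    # 18-voxel cluster: survives k = 17
stat[6:8, 6:8, 6:8] = 5.0    # 8-voxel cluster: removed
mask = cluster_extent_mask(stat, voxel_threshold=3.0, k_min=17)
print(int(mask.sum()), voxels_to_mm3(17), voxels_to_mm3(10))   # 18 136.0 80.0
```

The same conversion gives the 80 mm³ figure quoted for the 10-voxel threshold in Table 3.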

3.2.2. Hearing non-signers

The summary of neural activations elicited by ASL verb signs and gestures in hearing non-signers is presented in Table 2.

Table 2.

Cortical areas activated by viewing of ASL predicates and gesture in hearing non-signers.

| Anatomical region | Left: cluster size | Left: BA | Left: peak t value | Left: peak voxel coordinates | Right: cluster size | Right: BA | Right: peak t value | Right: peak voxel coordinates |
|---|---|---|---|---|---|---|---|---|
| Telic > Atelic | | | | | | | | |
| Fusiform gyrus | 135 | 37 | 8.95 | −34 −52 −10 | 314 | 37 | 6.92 | 34 −50 −8 |
| Superior temporal gyrus | | | | | 28 | 21/22 | 5.74 | 64 −14 0 |
| Superior parietal lobe | | | | | 37 | 7 | 4.95 | 14 −70 52 |
| ASL > Gesture | | | | | | | | |
| Occipital and temporal lobes, including cerebellum | 2638 | 19/37, 18, 39, 22, 20 | 11.18 | −46 −68 12 | 2038 | 19/37, 18, 39 | 9.44 | 40 −62 −10 |
| Inferior Parietal lobe | 569 | 40 | 9.97 | −46 −34 44 | 339 | 40 | 6.54 | 36 −40 46 |
| Superior/Middle Frontal Gyrus | 59 | 6 | 4.65 | −44 −2 58 | 89 | 6 | 5.42 | 40 6 60 |
| Cerebellum (posterior lobe) | 176 | | 5.64 | −12 −70 −22 | | | | |
| SMA (bilateral) | 213 | 6 | 6.35 | −6 18 48 | | | | |
| Inferior and Middle Frontal Gyrus | 986 | 6/9, 44/45/46 | 9.73 | −50 10 24 | 110 | 46 | 5.62 | 48 40 26 |
| Inferior Frontal Gyrus | 53 | 47 | 4.94 | −46 18 −8 | 62 | 45 | 4.98 | 40 8 28 |
| Inferior Frontal Gyrus/insula | | | | | 16 | 13/45 | 5.14 | 38 22 8 |

Clusters achieving p< 0.05 corrected for False Discovery Rate (FDR) are reported.

For each cluster, the peak location is given in MNI coordinates, along with its Brodmann area (BA) and sulcal/gyral locus. T values represent the peak voxel activation within each cluster. Clusters have been thresholded at 17 voxels (136 mm³), as a Monte Carlo simulation with 1000 iterations (AlphaSim, AFNI package) suggests a whole-brain p < .05 at a cluster-size threshold of k = 17, with an individual voxel detection probability threshold of .005.

Telic > Atelic

In hearing non-signers, telic verb signs elicited stronger activation than atelic verb signs in bilateral fusiform gyrus (BA 37), left lingual gyrus, and right superior temporal and superior parietal gyri.

Atelic > Telic

No brain regions were more active during processing of atelic than telic signs at either the significance (pFDR < 0.05) or the trend (pUncorr < 0.001) level.

ASL > Gesture

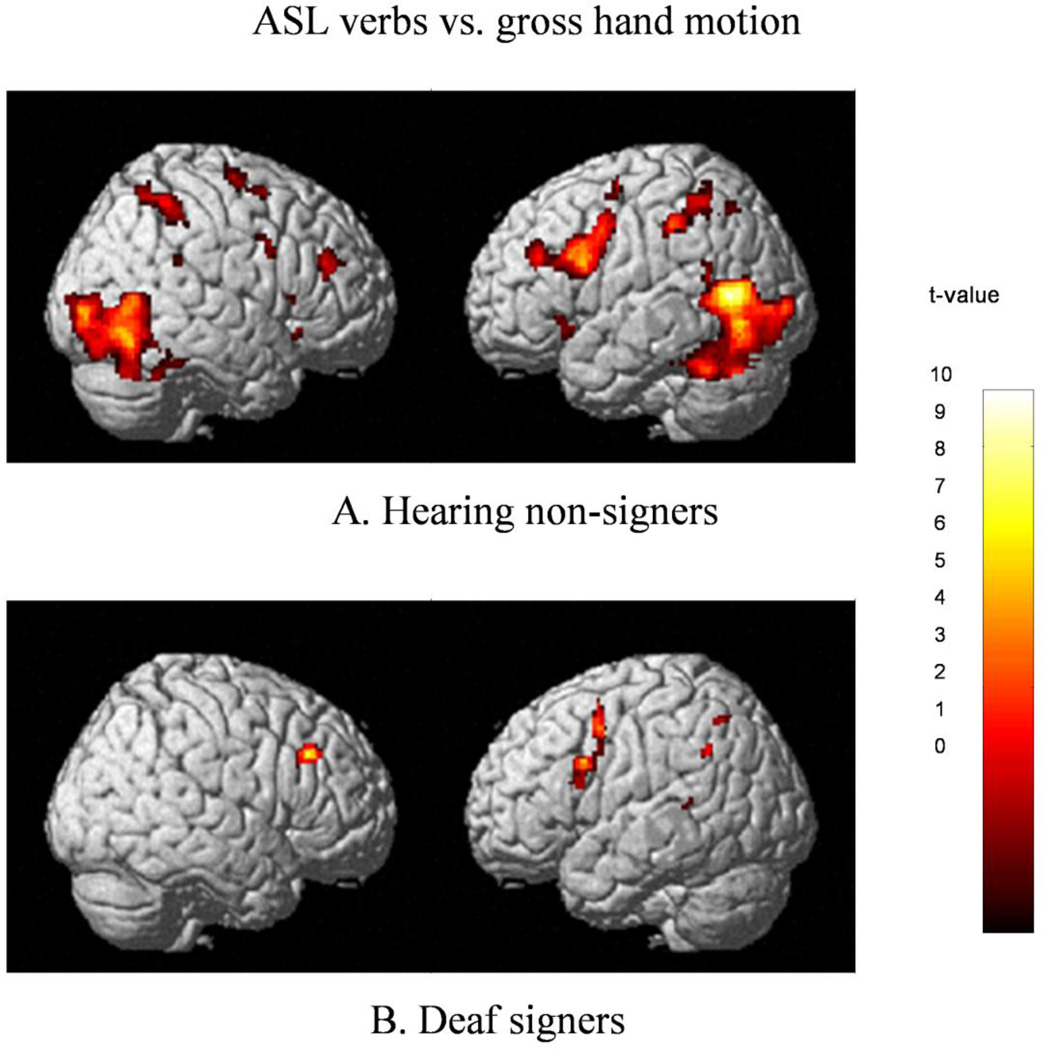

Compared with gesture, viewing ASL elicited extensive bilateral activation in hearing non-signers in the temporal and occipital lobes extending into the cerebellum (BA 19/37, 18, 39), the inferior parietal lobes (BA 40), the superior and middle frontal gyri (BA 6), and the SMA (BA 6) (see Figure 2A). Extensive activation in the left frontal lobe encompassed the inferior and middle frontal gyri (BA 6/9, 44/45/46), with a smaller discrete cluster in the pars orbitalis (BA 47); activation in homologous areas of the right hemisphere comprised four discrete clusters: pars triangularis/insula (BA 13/45), pars triangularis (BA 45), pars orbitalis (BA 47), and middle frontal gyrus (BA 46).

Figure 2.

Regions significantly more active during viewing of ASL sign vs. gross hand motion (gesture) in Hearing non-signers (figure A) and Deaf signers (figure B); thresholded at k>17, FWE-corrected, p<.05.

3.2.3. Deaf signers

The summary of neural activations for all comparisons between ASL verb signs and non-communicative gesture is presented in Table 3, for statistically significant (pFDR< 0.05) and trend-level (pUncorr< 0.001) clusters.

Table 3.

Cortical areas activated by viewing of ASL predicates in Deaf signers.

| Anatomical region | Left: cluster size | Left: BA | Left: peak t value | Left: peak voxel coordinates | Right: cluster size | Right: BA | Right: peak t value | Right: peak voxel coordinates |
|---|---|---|---|---|---|---|---|---|
| Telic > Atelic | | | | | | | | |
| *Superior temporal gyrus | | | | | 17 | 22 | 7.30 | 50 −20 4 |
| Precuneus | | | | | 14 | | 4.89 | 18 −54 10 |
| Cerebellum | | | | | 12 | | 5.54 | 8 −62 −20 |
| ASL > Gesture | | | | | | | | |
| *Inferior & middle frontal gyri | 215 | 9/46 | 5.87 | −42 4 58 | 103 | 9/46 | 6.15 | 46 28 34 |
| | | 6 | 5.06 | −38 2 32 | | | | |
| | | 44 | 4.68 | −48 12 28 | | | | |
| Inferior frontal gyrus; insula | 84 | 13/47 | 5.36 | −30 20 6 | | | | |
| Telic > Gesture | | | | | | | | |
| Occipito-temporal junction | | | | | 16 | 19 | 6.20 | 48 −76 −8 |
| Inferior operculum; insula | 149 | 13/47 | 6.57 | −28 22 6 | 232 | 13/47 | 5.68 | 32 16 −2 |
| Inferior frontal gyrus | 26 | 45 | 4.49 | −50 10 26 | | | | |
| Middle frontal gyrus | 29 | 6 | 4.91 | −46 2 54 | 59 | 9 | 6.95 | 44 28 34 |
| Inferior parietal lobe | 24 | 40 | 5.68 | −54 −52 42 | 26 | 40 | 4.68 | 48 −54 40 |
| SMA (bilateral) | 92 | 6 | 4.97 | −2 2 56 | | | | |
| Atelic > Gesture | | | | | | | | |
| Inferior & middle frontal gyrus | 95 | 46/9 | 5.73 | −42 12 28 | | | | |
| Middle frontal gyrus/precentral gyrus | 81 | 6 | 5.32 | −44 4 52 | | | | |
| Inferior frontal gyrus | 19 | 44/45 | 4.80 | −52 16 12 | | | | |

Clusters achieving p< 0.05 corrected for False Discovery Rate (FDR) are denoted by *, other clusters indicated are at the trend-level of p< 0.001, uncorrected.

For each cluster, the peak location is given in MNI coordinates, accompanied by location in terms of Brodmann’s area and sulcal/gyral locus. T values represent the peak voxel activation within each cluster. Clusters have been thresholded at 10 voxels (80 mm3).

ASL > Gesture

Compared with baseline gesture, processing of ASL signs elicited extensive left-lateralized activation in the inferior and middle frontal gyri (see Figure 2B). The three peaks within this large cluster appear to correspond to three areas of activation: one in the inferior operculum (BA 44), one in the SMA (BA 6), and one in the MFG (BA 9/46). The homologous area in the right hemisphere exhibited a trend-level cluster in the MFG (BA 9/46). The left hemisphere also exhibited a trend-level cluster at the insular/opercular junction (BA 13/47).

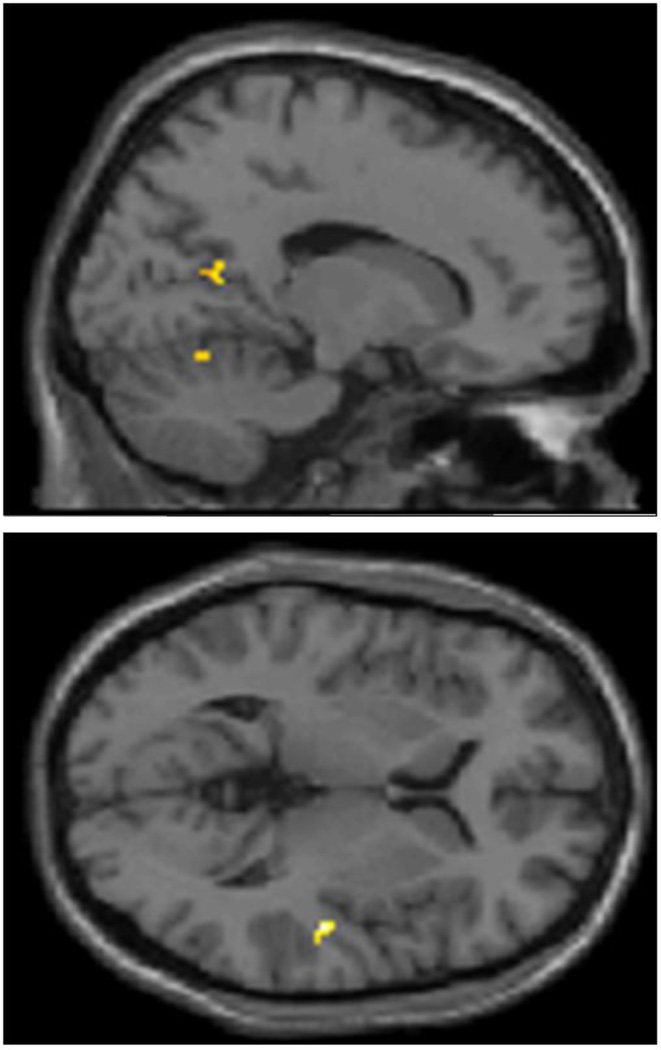

Telic > Atelic

Comparing telic with atelic predicates yielded a right-lateralized activation cluster in the STG (BA 22). Weaker, localized activity was observed at trend level in the right precuneus and cerebellum (see Figure 3).

Figure 3.

Precuneus, cerebellum, and right STG demonstrate significantly more activation during viewing of Telic vs. Atelic verbs in Deaf signers; thresholded at k>10, uncorrected p<.001.

Atelic > Telic

No brain regions were more active during processing of atelic than telic signs at either the significance (pFDR < 0.05) or the trend level.

Telic > Gesture

Deaf participants showed more extensive activation during semantic processing of telic predicates than during perception of meaningless gesture. Significant bilateral activations were present in the opercular/insular junction, SMA, middle frontal gyrus, and inferior parietal lobe (BA 40). In addition, this contrast revealed left-lateralized activation in the IFG (BA 45) and right-lateralized activation in the inferior occipital gyrus (BA 19).

Atelic > Gesture

Brain regions showing greater activation during semantic processing of atelic predicates than during perception of gesture included the left inferior frontal gyrus (including BA 44 and BA 46) and middle frontal gyrus (BA 6).

4. Discussion

In this study, participants were presented with visual stimuli consisting of telic and atelic ASL signs, with simple gestures as the baseline condition. The simple gestures consisted of non-intentional (non-communicative) slow movements of the arms, lowered from a T-position (straight out from the sides of the body) and raised back up. The participants (13 Deaf native ASL signers, 12 hearing non-signers) viewed the video stimuli in a block paradigm while monitoring either the semantics of the signs (Deaf signers) or the symmetry of hand movement (hearing non-signers). The neural activations elicited by linguistic vs. non-linguistic stimuli in Deaf signers were consistent with earlier research demonstrating that sign language activates cortical networks in the left perisylvian language areas (Corina et al., 2007; Emmorey et al., 2009; MacSweeney et al., 2004; MacSweeney, Capek et al., 2008).

Deaf participants exhibited highly focal activations in language-processing regions in response to telic vs. atelic signs, as well as to ASL vs. gesture. The higher STG activation for telic than for atelic verb signs can be interpreted as reflecting the more complex phonological structure of telic verbs. Prior sign language research has shown that the left STG is involved in creating abstract phonological representations based on the spatial properties of signs (Emmorey et al., 2003; Emmorey, Mehta, & Grabowski, 2007; MacSweeney et al., 2004; Petitto et al., 2000). While the right STG has not been identified in phonological tasks to date, its activation has been noted in the processing of sign language discourse (Neville et al., 1998). The question then arises: what property of the stimuli elicits right STG activation in the Deaf participants?

In the auditory domain, spectro-temporal differences between stimuli have been found to elicit right STG activation at MNI coordinates (54 −12 −2) when participants listened to modulated vs. static tones (Hall et al., 2000). In the present study, the right STG activation in the Deaf group lies close by, at MNI (50 −20 4), about 11 mm away, suggesting that the STG activation in the telic vs. atelic contrast might reflect processing of velocity differences between the two stimulus types³.

A recent rTMS study by Duque et al. (2010) also proposed that the right STG plays a crucial role in processing the relative speeds of motion of the two hands. The center of the rTMS stimulation site in that study was at Talairach (67 −35 16); the Talairach equivalent⁴ of the right STG activation in the hearing participants was (60 −15 4). It is thus possible that hearing participants strategically used the motion of the two hands relative to each other, rather than the speed of each hand individually, as a perceptual cue⁵.

Trend-level activations in the current data (cluster-level p < 0.05, uncorrected) also support the hypothesis that telic and atelic verb signs elicit differential phonological processing in Deaf signers. For example, the cerebellum, which was activated in the telic > atelic contrast, has previously been shown to play a role in linguistic-cognitive processing in both signed and spoken languages (Corina, Jose-Robertson, Guillemin, High, & Braun, 2003). The telic > atelic contrast also showed increased trend-level activation of the precuneus. Perceptual studies requiring segmentation of continuous video into discrete events (Zacks et al., 2001; Zacks et al., 2006), as well as studies of event segmentation in text narratives (Speer et al., 2007), show increased precuneus activation at event boundaries. The higher precuneus activation for telic than for atelic verbs in the present study may therefore indicate the indexing of event boundaries triggered by the comprehension of telic predicates, although comparisons of neural activations between Deaf signers and hearing non-signers should be made with caution (Meyer et al., 2007). Overall, the findings suggest that Deaf signers might process telic ASL signs as more phonologically complex than atelic signs, and that they appear to show event segmentation-related activation during semantic processing.
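The qualifier "uncorrected" matters because the cluster-level threshold is applied once per cluster tested. As a rough illustration, assume m independent tests each run at α = 0.05; real clusters are spatially correlated, and the number tested is not reported in this section, so the values of m below are hypothetical. Under that assumption the chance of at least one false positive is 1 − (1 − α)^m, which the sketch computes alongside the corresponding Bonferroni per-test threshold α/m:

```python
def familywise_error_rate(alpha: float, m: int) -> float:
    """P(at least one false positive) across m independent tests at level alpha."""
    return 1 - (1 - alpha) ** m


def bonferroni_threshold(alpha: float, m: int) -> float:
    """Per-test threshold that keeps the familywise error rate at or below alpha."""
    return alpha / m


ALPHA = 0.05
for m in (1, 5, 10, 20):  # hypothetical numbers of clusters tested
    fwer = familywise_error_rate(ALPHA, m)
    threshold = bonferroni_threshold(ALPHA, m)
    print(f"m={m:2d}: familywise error {fwer:.3f}, Bonferroni threshold {threshold:.4f}")
```

With ten independent tests, the probability of at least one spurious cluster is already about 0.40, which is why activations at this threshold are treated as trend-level support rather than as confirmed effects.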

The highly focal activations in Deaf participants were expected, since expertise-related neural activations have been shown to be more localized in various domains (cf. McKiernan, Kaufman, Kucera-Thompson, & Binder, 2003; Parsons, Sergent, Hodges, & Fox, 2005; Petrini et al., 2011). The localized STG activation might thus indicate that Deaf signers have high expertise in processing the spectro-temporal properties (such as velocity) of visual stimuli. It is also possible that in the present experiment the neural response of Deaf participants to ASL signs, as contrasted with low-complexity motion (gesture), was driven not by sensory processing of motion complexity but by top-down processing (feature extraction), as signers continually monitor visual input for linguistic information.

The interpretation of these results is subject to a caveat common in the cognitive science literature: the elicited activations may reflect neural processes related to differences in task complexity between the Deaf signers and the control group of hearing non-signers. Stimulus properties other than the linguistic or kinematic features of interest (such as the frequency of the stimulus signs in everyday signing discourse) might also have contributed to the results. These alternatives are, however, unlikely, as the contrasts in the present study did not correspond to networks of brain areas previously implicated in task difficulty or in stimulus frequency effects⁶.

Future studies may shed more light on the relationship between the visuo-kinematic properties of signs and form-to-meaning mapping, and help circumscribe the neurocognitive mechanisms responsible for it, both in ASL and in other sign languages. Additional cross-modal investigations of known language universals, such as the verb types investigated in the present study, might provide further information about which abstract processing tasks are carried out by individual components of the language network.

Acknowledgements

We are grateful to Aina Puce and Sharlene Newman for comments on earlier versions of this manuscript, and to Derek Evan Nee for consultations on statistical analyses. The work was partially supported by NIH grants DC00524 to R.B. Wilbur and EB003990 to T.M. Talavage. All data were acquired at the Purdue University MRI Facility at InnerVision, West Lafayette, IN, USA.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

¹ The use of capital D in Deaf indicates cultural affiliation, including the use of sign language as the primary means of communication.

² Sign names are given as uppercase English words, following the standard conventions of linguistic transcription for sign languages.

³ Hall et al. (2000) also noted a more posterior right STG activation due to task demands (MNI 52 −40 0). This activation, however, is considerably more posterior than the right STG activation in either group in the present study.

⁴ Generated by the Yale non-linear MNI-to-Talairach converter (Lacadie, Fulbright, Rajeevan, Constable, & Papademetris, 2008).

⁵ We thank an anonymous reviewer for bringing this argument to our attention.

⁶ fMRI studies of spoken language processing have previously suggested that right cerebellar activation, in conjunction with SMA and bilateral postcentral gyrus activation, can be related to decision-making in the behavioral task (Booth, Wood, Lu, Houk, & Bitan, 2007; Carreiras, Mechelli, Estevez, & Price, 2007). Increased activation of a network comprising the left IFG, right cerebellum, and cingulate has also been related to lexical decision tasks (Noppeney & Price, 2002). The frequency of stimulus occurrence in discourse is also known to affect neural activation during language processing: e.g., high-frequency linguistic stimuli (Chinese words) elicited increased activation in the bilateral fusiform gyri, cerebellum, right IPL, MFG (BA 45/46/9), and right temporal-occipital junction (BA 21/37) in native speakers of Chinese (Peng et al., 2003), while high-frequency French words activated the left angular and supramarginal gyri in native speakers of French (Joubert et al., 2004). None of these networks is observed in the current data set.

References

- Booth JR, Wood L, Lu D, Houk JC, Bitan T. The role of the basal ganglia and cerebellum in language processing. Brain Res. 2007;1133(1):136–144. doi: 10.1016/j.brainres.2006.11.074.

- Bosworth RG, Dobkins KR. Left hemisphere dominance for motion processing in deaf signers. Psychological Science. 1999;10:256–262.

- Brentari D. A prosodic model of sign language phonology. Cambridge, MA: The MIT Press; 1998.

- Carreiras M, Mechelli A, Estevez A, Price CJ. Brain activation for lexical decision and reading aloud: two sides of the same coin? J Cogn Neurosci. 2007;19(3):433–444. doi: 10.1162/jocn.2007.19.3.433.

- Corina D, Chiu YS, Knapp H, Greenwald R, San Jose-Robertson L, Braun A. Neural correlates of human action observation in hearing and deaf subjects. Brain Research. 2007;1152:111–129. doi: 10.1016/j.brainres.2007.03.054.

- Corina D, Jose-Robertson LS, Guillemin A, High J, Braun AR. Language lateralization in a bimanual language. Journal of Cognitive Neuroscience. 2003;15(5):718–730. doi: 10.1162/089892903322307438.

- Dowty D. Word Meaning and Montague Grammar. Dordrecht: D. Reidel; 1979.

- Emmorey K, Grabowski T, McCullough S, Damasio H, Ponto LL, Hichwa RD, et al. Neural systems underlying lexical retrieval for sign language. Neuropsychologia. 2003;41(1):85–95. doi: 10.1016/s0028-3932(02)00089-1.

- Emmorey K, Mehta S, Grabowski TJ. The neural correlates of sign versus word production. Neuroimage. 2007;36(1):202–208. doi: 10.1016/j.neuroimage.2007.02.040.

- Emmorey K, Xu J, Gannon P, Goldin-Meadow S, Braun A. CNS activation and regional connectivity during pantomime observation: No engagement of the mirror neuron system for deaf signers. Neuroimage. 2009. doi: 10.1016/j.neuroimage.2009.08.001.

- Hall DA, Haggard MP, Akeroyd MA, Summerfield AQ, Palmer AR, Elliott MR, et al. Modulation and task effects in auditory processing measured using fMRI. Human Brain Mapping. 2000;10(3):107–119. doi: 10.1002/1097-0193(200007)10:3<107::AID-HBM20>3.0.CO;2-8.

- Jackendoff R. Parts and boundaries. Cognition. 1991;41(1–3):9–45. doi: 10.1016/0010-0277(91)90031-x.

- Joubert S, Beauregard M, Walter N, Bourgouin P, Beaudoin G, Leroux JM, et al. Neural correlates of lexical and sublexical processes in reading. Brain Lang. 2004;89(1):9–20. doi: 10.1016/S0093-934X(03)00403-6.

- Lacadie CM, Fulbright RK, Rajeevan N, Constable RT, Papademetris X. More accurate Talairach coordinates for neuroimaging using non-linear registration. Neuroimage. 2008;42(2):717–725. doi: 10.1016/j.neuroimage.2008.04.240.

- Leonard LB, Deevy P. Tense and aspect in sentence interpretation by children with specific language impairment. J Child Lang. 2010;37(2):395–418. doi: 10.1017/S0305000909990018.

- Loke WH, Song S. Central and peripheral visual processing in hearing and nonhearing individuals. Bulletin of the Psychonomic Society. 1991;29(5):437–440.

- MacSweeney M, Campbell R, Woll B, Giampietro V, David AS, McGuire PK, et al. Dissociating linguistic and nonlinguistic gestural communication in the brain. Neuroimage. 2004;22(4):1605–1618. doi: 10.1016/j.neuroimage.2004.03.015.

- MacSweeney M, Capek CM, Campbell R, Woll B. The signing brain: the neurobiology of sign language. Trends in Cognitive Sciences. 2008;12(11):432–440. doi: 10.1016/j.tics.2008.07.010.

- MacSweeney M, Waters D, Brammer MJ, Woll B, Goswami U. Phonological processing in deaf signers and the impact of age of first language acquisition. Neuroimage. 2008;40(3):1369–1379. doi: 10.1016/j.neuroimage.2007.12.047.

- MacSweeney M, Woll B, Campbell R, McGuire PK, David AS, Williams SC, et al. Neural systems underlying British Sign Language and audio-visual English processing in native users. Brain. 2002;125(Pt 7):1583–1593. doi: 10.1093/brain/awf153.

- Malaia E, Borneman J, Wilbur RB. Analysis of ASL Motion Capture Data towards Identification of Verb Type. In: Bos J, Delmonte R, editors. Semantics in Text Processing. STEP 2008 Conference Proceedings; College Publications; 2008. pp. 155–164.

- Malaia E, Wilbur RB. Kinematic signatures of telic and atelic events in ASL predicates. Language and Speech. doi: 10.1177/0023830911422201. (in press)

- Malaia E, Wilbur RB, Weber-Fox C. ERP evidence for telicity effects on syntactic processing in garden-path sentences. Brain and Language. 2009;108(3):145–158. doi: 10.1016/j.bandl.2008.09.003.

- McKiernan KA, Kaufman JN, Kucera-Thompson J, Binder JR. A parametric manipulation of factors affecting task-induced deactivation in functional neuroimaging. Journal of Cognitive Neuroscience. 2003;15(3):394–408. doi: 10.1162/089892903321593117.

- Meyer M, Toepel U, Keller J, Nussbaumer D, Zysset S, Friederici AD. Neuroplasticity of sign language: implications from structural and functional brain imaging. Restor Neurol Neurosci. 2007;25(3–4):335–351.

- Neville HJ, Lawson D. Attention to central and peripheral visual space in a movement detection task. III. Separate effects of auditory deprivation and acquisition of a visual language. Brain Res. 1987;405(2):284–294. doi: 10.1016/0006-8993(87)90297-6.

- Noppeney U, Price CJ. A PET study of stimulus- and task-induced semantic processing. Neuroimage. 2002;15(4):927–935. doi: 10.1006/nimg.2001.1015.

- Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Parasnis I, Samar VJ. Parafoveal attention in congenitally deaf and hearing young adults. Brain and Cognition. 1985;4(3):313–327. doi: 10.1016/0278-2626(85)90024-7.

- Parsons LM, Sergent J, Hodges DA, Fox PT. The brain basis of piano performance. Neuropsychologia. 2005;43(2):199–215. doi: 10.1016/j.neuropsychologia.2004.11.007.

- Peng DL, Xu D, Jin Z, Luo Q, Ding GS, Perry C, et al. Neural basis of the non-attentional processing of briefly presented words. Hum Brain Mapp. 2003;18(3):215–221. doi: 10.1002/hbm.10096.

- Petitto LA, Zatorre RJ, Gauna K, Nikelski EJ, Dostie D, Evans AC. Speech-like cerebral activity in profoundly deaf people processing signed languages: implications for the neural basis of human language. Proc Natl Acad Sci U S A. 2000;97(25):13961–13966. doi: 10.1073/pnas.97.25.13961.

- Petrini K, Pollick FE, Dahl S, McAleer P, McKay L, Rocchesso D, et al. Action expertise reduces brain activity for audiovisual matching actions: An fMRI study with expert drummers. Neuroimage. 2011. doi: 10.1016/j.neuroimage.2011.03.009.

- Poizner H. Visual and "phonetic" coding of movement: Evidence from American Sign Language. Science. 1981;212(4495):691. doi: 10.1126/science.212.4495.691.

- Poizner H. Perception of movement in American Sign Language: Effects of linguistic structure and linguistic experience. Attention, Perception, & Psychophysics. 1983;33(3):215–231. doi: 10.3758/bf03202858.

- Pustejovsky J. The Syntax of Event Structure. Cognition. 1991;41(1–3):47–81. doi: 10.1016/0010-0277(91)90032-y.

- Ramchand G. Aspect and predication: The semantics of argument structure. USA: Oxford University Press; 1997.

- Ramchand G. Perfectivity as aspectual definiteness: Time and the event in Russian. Lingua. 2008;118:1690–1715.

- Ramchand G. Verb Meaning and the Lexicon - The First-Phase Syntax. Cambridge University Press; 2008.

- Reynolds HN. Effects of foveal stimulation on peripheral visual processing and laterality in deaf and hearing subjects. The American Journal of Psychology. 1993;106(4):523–540.

- San Jose-Robertson L, Corina DP, Ackerman D, Guillemin A, Braun AR. Neural systems for sign language production: mechanisms supporting lexical selection, phonological encoding, and articulation. Hum Brain Mapp. 2004;23(3):156–167. doi: 10.1002/hbm.20054.

- Schönwiesner M, Zatorre RJ. Spectro-temporal modulation transfer function of single voxels in the human auditory cortex measured with high-resolution fMRI. Proceedings of the National Academy of Sciences. 2009;106(34):14611. doi: 10.1073/pnas.0907682106.

- Speer NK, Swallow KM, Zacks JM. Activation of human motion processing areas during event perception. Cogn Affect Behav Neurosci. 2003;3(4):335–345. doi: 10.3758/cabn.3.4.335.

- Speer NK, Zacks JM, Reynolds JR. Human brain activity time-locked to narrative event boundaries. Psychol Sci. 2007;18(5):449–455. doi: 10.1111/j.1467-9280.2007.01920.x.

- Svenonius P. Icelandic case and the structure of events. The Journal of Comparative Germanic Linguistics. 2002;5(1):197–225.

- Swallow KM, Zacks JM, Abrams RA. Event boundaries in perception affect memory encoding and updating. Journal of Experimental Psychology: General. 2009;138(2):236. doi: 10.1037/a0015631.

- Theunissen FE, David SV, Singh NC, Hsu A, Vinje WE, Gallant JL. Estimating spatio-temporal receptive fields of auditory and visual neurons from their responses to natural stimuli. Network: Computation in Neural Systems. 2001;12(3):289–316.

- van Hout A. Event Semantics in the Lexicon-Syntax Interface. In: Tenny C, Pustejovsky J, editors. Events as Grammatical Objects. CSLI Publications; 2001. pp. 239–282.

- Van Valin R. Some universals of verb semantics. In: Mairal R, Gil J, editors. Linguistic Universals. Cambridge University Press; 2007.

- Vendler Z. Linguistics in Philosophy. Ithaca, NY: Cornell University Press; 1967.

- Verkuyl H. On the Compositional Nature of Aspects. Dordrecht: Reidel; 1972.

- Vinje WE, Gallant JL. Sparse coding and decorrelation in primary visual cortex during natural vision. Science. 2000;287(5456):1273. doi: 10.1126/science.287.5456.1273.

- Wilbur RB. Complex predicates involving events, time, and aspect: Is this why sign languages look so similar? In: Quer J, editor. Theoretical Issues in sign language research. Hamburg: Signum Press; 2008.

- Yarkoni T, Speer NK, Zacks JM. Neural substrates of narrative comprehension and memory. Neuroimage. 2008;41(4):1408–1425. doi: 10.1016/j.neuroimage.2008.03.062.

- Zacks JM, Braver TS, Sheridan MA, Donaldson DI, Snyder AZ, Ollinger JM, et al. Human brain activity time-locked to perceptual event boundaries. Nat Neurosci. 2001;4(6):651–655. doi: 10.1038/88486.

- Zacks JM, Kumar S, Abrams RA, Mehta R. Using movement and intentions to understand human activity. Cognition. 2009;112(2):201–216. doi: 10.1016/j.cognition.2009.03.007.

- Zacks JM, Swallow KM, Vettel JM, McAvoy MP. Visual motion and the neural correlates of event perception. Brain Res. 2006;1076(1):150–162. doi: 10.1016/j.brainres.2005.12.122.

- Zatorre RJ, Belin P. Spectral and temporal processing in human auditory cortex. Cerebral Cortex. 2001;11(10):946. doi: 10.1093/cercor/11.10.946.