Abstract

Traditional approaches to the problem of parameter estimation in biophysical models of neurons and neural networks usually adopt a global search algorithm (for example, an evolutionary algorithm), often in combination with a local search method (such as gradient descent) in order to minimize the value of a cost function, which measures the discrepancy between various features of the available experimental data and model output. In this study, we approach the problem of parameter estimation in conductance-based models of single neurons from a different perspective. By adopting a hidden-dynamical-systems formalism, we expressed parameter estimation as an inference problem in these systems, which can then be tackled using a range of well-established statistical inference methods. The particular method we used was Kitagawa's self-organizing state-space model, which was applied on a number of Hodgkin-Huxley-type models using simulated or actual electrophysiological data. We showed that the algorithm can be used to estimate a large number of parameters, including maximal conductances, reversal potentials, kinetics of ionic currents, measurement and intrinsic noise, based on low-dimensional experimental data and sufficiently informative priors in the form of pre-defined constraints imposed on model parameters. The algorithm remained operational even when very noisy experimental data were used. Importantly, by combining the self-organizing state-space model with an adaptive sampling algorithm akin to the Covariance Matrix Adaptation Evolution Strategy, we achieved a significant reduction in the variance of parameter estimates. The algorithm did not require the explicit formulation of a cost function and it was straightforward to apply on compartmental models and multiple data sets. Overall, the proposed methodology is particularly suitable for resolving high-dimensional inference problems based on noisy electrophysiological data and, therefore, a potentially useful tool in the construction of biophysical neuron models.

Author Summary

Parameter estimation is a problem of central importance and, perhaps, the most laborious task in biophysical modeling of neurons and neural networks. An emerging trend is to treat parameter estimation in this context as yet another statistical inference problem, which can be tackled using well-established methods from Computational Statistics. Inspired by these recent advances, we adopted a self-organizing state-space-model approach augmented with an adaptive sampling algorithm akin to the Covariance Matrix Adaptation Evolution Strategy in order to estimate a large number of parameters in a number of Hodgkin-Huxley-type models of single neurons. Parameter estimation was based on noisy electrophysiological data and involved the maximal conductances, reversal potentials, levels of noise and, unlike most mainstream work, the kinetics of ionic currents in the examined models. Our main conclusion was that parameters in complex, conductance-based neuron models can be inferred using the aforementioned methodology, if sufficiently informative priors regarding the unknown model parameters are available. Importantly, the use of an adaptive algorithm for sampling new parameter vectors significantly reduced the variance of parameter estimates. Flexibility and scalability are additional advantages of the proposed method, which is particularly suited to resolve high-dimensional inference problems.

Introduction

Among several tools at the disposal of neuroscientists today, data-driven computational models have come to hold an eminent position for studying the electrical activity of single neurons and the significance of this activity for the operation of neural circuits [1]–[4]. Typically, these models depend on a large number of parameters, such as the maximal conductances and kinetics of gated ion channels. Estimating appropriate values for these parameters based on the available experimental data is an issue of central importance and, at the same time, the most laborious task in single-neuron and circuit modeling.

Ideally, all unknown parameters in a model should be determined directly from experimental data analysis. For example, based on a set of voltage-clamp recordings, the type, kinetics and maximal conductances of the voltage-gated ionic currents flowing through the cell membrane could be determined [5] and, then, combined in a conductance-based model, which replicates the activity of the biological neuron of interest under current-clamp conditions with sufficient accuracy. Unfortunately, this is not always possible, especially for complex compartmental models, which contain a large number of ionic currents.

A first problem arises from the fact that not all parameters can be estimated within an acceptable error margin, especially for small currents and large levels of noise. A second problem arises from the practice of estimating different sets of parameters based on data collected from different neurons of a particular type, instead of estimating all unknown parameters using data collected from a single neuron. Different neurons of the same type may have quite different compositions of ionic currents [6]–[9] (but, see also [10]). This implies that combining ionic currents measured from different neurons in the same model or even using the average of several parameters calculated over a population of neurons of the same type will not necessarily result in a model that expresses the experimentally recorded patterns of electrical activity under current-clamp conditions. Usually, only some parameters are well characterized, while others are difficult or impossible to measure directly. Thus, most modeling studies rely on a mixture of experimentally determined parameters and estimates of the remaining unknown ones using automated optimization methodology (see, for example, [11]–[22]). Typically, these methods require the construction of a cost function (for measuring the discrepancy between various features of the experimental data and the output of the model) and an automated parameter selection method, which iteratively generates new sets of parameters, such that the value of the cost function progressively decreases during the course of the simulation (see [23] for a review). Popular choices of such methods are evolutionary algorithms, simulated annealing and gradient descent methods. Often, a global search method (i.e. an evolutionary algorithm) is combined with local search (gradient descent) for locating multiple minima of the cost function with high precision. Since a poorly designed cost function (for example, one that merely matches model and experimental membrane potential trajectories) can seriously impede optimization, the construction of this function often requires particular attention (see, for example, [24]). Nevertheless, these computationally intensive methodologies have gained much popularity, particularly due to the availability of powerful personal computers at consumer-level prices and the development of specialized optimization software (e.g. [25]).

Alternative approaches also exist as, for example, methods based on the concept of synchronization between model dynamics and experimental data [26]. An emerging trend in parameter estimation methodologies for models in Computational Biology is to recast parameter estimation as an inference problem in hidden dynamical systems and then adopt standard Computational Statistics techniques to resolve it [27], [28]. For example, a particular study following this approach makes use of Sequential Monte Carlo methods (particle filters) embedded in an Expectation Maximization (EM) framework [28]. Given a set of electrophysiological recordings and a set of dynamic equations that govern the evolution of the hidden states, at each iteration of the algorithm the expected joint log-likelihood of the hidden states and the data is approximated using particle filters (Expectation Step). At a second stage during each iteration (Maximization Step), the log-likelihood is locally maximized with respect to the unknown parameters. The advantage of these methods, beyond the fact that they recast the estimation problem in a well-established statistical framework, is that they can handle various types of noisy biophysical data made available by recent advances in voltage and calcium imaging techniques.

Inspired by this emerging approach, we present a method for estimating a large number of parameters in Hodgkin-Huxley-type models of single neurons. The method is a version of Kitagawa's self-organizing state-space model [29] combined with an adaptive algorithm for selecting new sets of model parameters. The adaptive algorithm we have used is akin to the Covariance Matrix Adaption (CMA) Evolution Strategy [30], but other methods (e.g. Differential Evolution as described in [31]) may be used instead. We demonstrate the applicability of the algorithm on a range of models using simulated or actual electrophysiological data. We show that the algorithm can be used successfully with very noisy data and it is straightforward to apply on compartmental models and multiple datasets. An interesting result from this study is that by using the self-organizing state-space model in combination with a CMA-like algorithm, we managed to achieve a dramatic reduction in the variance of the inferred parameter values. Our main conclusion is that a large number of parameters in a conductance-based model of a neuron (including maximal conductances, reversal potentials and kinetics of gated ionic currents) can be inferred from low-dimensional experimental data (typically, a single or a few recordings of membrane potential activity) using the algorithm, if sufficiently informative priors are available, for example in the form of well-defined ranges of valid parameter values.

Methods

Modeling Framework

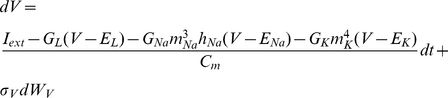

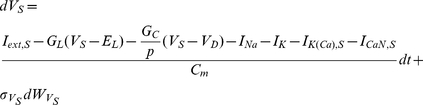

We begin by presenting the current conservation equation that describes the time evolution of the membrane potential for a single-compartment model neuron:

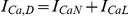

| (1) |

where  ,

,  and

and  are all functions of time. In the above equation,

are all functions of time. In the above equation,  is the membrane capacitance,

is the membrane capacitance,  is the membrane potential,

is the membrane potential,  is the externally applied (injected) current,

is the externally applied (injected) current,  and

and  are the maximal conductance and reversal potential of the leakage current, respectively, and

are the maximal conductance and reversal potential of the leakage current, respectively, and  is the

is the  transmembrane ionic current. A voltage-gated current

transmembrane ionic current. A voltage-gated current  can be modeled according to the Hodgkin-Huxley formalism, as follows:

can be modeled according to the Hodgkin-Huxley formalism, as follows:

| (2) |

where  and

and  are both functions of time. In the above expression,

are both functions of time. In the above expression,  and

and  are the maximal conductance and reversal potential of the

are the maximal conductance and reversal potential of the  ionic current,

ionic current,  and

and  are dynamic gating variables, which model the voltage-dependent activation and inactivation of the current, and

are dynamic gating variables, which model the voltage-dependent activation and inactivation of the current, and  is a small positive integer power (usually, not taking values larger than 4). The product

is a small positive integer power (usually, not taking values larger than 4). The product  is the proportion of open channels in the membrane that carry the

is the proportion of open channels in the membrane that carry the  current. The gating variables

current. The gating variables  and

and  obey first-order relaxation kinetics, as shown below:

obey first-order relaxation kinetics, as shown below:

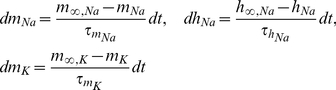

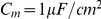

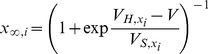

| (3) |

where the steady states ( ,

,  ) and relaxation times (

) and relaxation times ( ,

,  ) are all functions of voltage.

) are all functions of voltage.

Using vector notation, we can write the above system of Ordinary Differential Equations (ODEs) in more concise form:

| (4) |

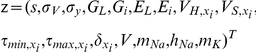

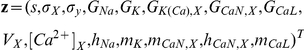

where the state vector  is composed of the time-evolving state variables

is composed of the time-evolving state variables  ,

,  and

and  and the vector-valued function

and the vector-valued function  , which describes the evolution of

, which describes the evolution of  in time, is formed by the right-hand sides of Eqs. 1 and 3. Notice that

in time, is formed by the right-hand sides of Eqs. 1 and 3. Notice that  also depends on a parameter vector

also depends on a parameter vector  , which for now is dropped from Eq. 4 for notational clarity. Components of

, which for now is dropped from Eq. 4 for notational clarity. Components of  are the maximal conductances

are the maximal conductances  , the reversal potentials

, the reversal potentials  and the various parameters that control the voltage-dependence of the steady states and relaxation times in Eq. 3.

and the various parameters that control the voltage-dependence of the steady states and relaxation times in Eq. 3.

The above deterministic model does not capture the inherent variability in the electrical activity of neurons, but rather some average behavior of intrinsically stochastic events. In general, this variability originates from various sources, such as the random opening and shutting of transmembrane ion channels or the random bombardment of the neuron with external (e.g. synaptic) stimuli [32]. Here, we model the inherent variability in single-neuron activity by augmenting Eq. 4 with a noisy term and re-writing as follows:

| (5) |

where  is a covariance matrix and

is a covariance matrix and  is a standard Wiener process over the state space of

is a standard Wiener process over the state space of  .

.  may be a diagonal matrix of variances (

may be a diagonal matrix of variances ( ,

,  and

and  ) corresponding to each component of the state vector.

) corresponding to each component of the state vector.

Typically, we assume that the above model is coupled to a measurement “device”, which permits indirect observations of the hidden state  :

:

| (6) |

where  is an observation noise vector. In the simplest case, the vector of observations

is an observation noise vector. In the simplest case, the vector of observations  is one-dimensional and it may consist of noisy measurements of the membrane potential:

is one-dimensional and it may consist of noisy measurements of the membrane potential:

| (7) |

where  is the standard deviation of the observation noise and

is the standard deviation of the observation noise and  a random number sampled from a Gaussian distribution with zero mean and standard deviation equal to unity. More complicated non-linear, non-Gaussian observation functions may be used when, for example, the measurements are recordings of the intracellular calcium concentration, simultaneous recordings of the membrane potential and the intracellular calcium concentration or simultaneous recordings of the membrane potential from multiple sites (e.g. soma and dendrites) of a neuron.

a random number sampled from a Gaussian distribution with zero mean and standard deviation equal to unity. More complicated non-linear, non-Gaussian observation functions may be used when, for example, the measurements are recordings of the intracellular calcium concentration, simultaneous recordings of the membrane potential and the intracellular calcium concentration or simultaneous recordings of the membrane potential from multiple sites (e.g. soma and dendrites) of a neuron.

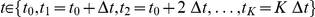

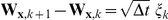

Assuming that time  is partitioned in a very large number

is partitioned in a very large number  of time steps

of time steps  , such that

, such that  and the corresponding states are

and the corresponding states are  , we can approximate the solution to Eq. 5 using the following difference equation:

, we can approximate the solution to Eq. 5 using the following difference equation:

| (8) |

where  and

and  is a random vector with components sampled from a normal distribution with zero mean and unit variance. The above expression implements a simple rule for computing the membrane potential, activation and inactivation variables at each point

is a random vector with components sampled from a normal distribution with zero mean and unit variance. The above expression implements a simple rule for computing the membrane potential, activation and inactivation variables at each point  of the discretized time based on information at the previous time point

of the discretized time based on information at the previous time point  and it can be considered as a specific instantiation of the Euler-Maruyama method for the numerical solution of Stochastic Differential Equations [33].

and it can be considered as a specific instantiation of the Euler-Maruyama method for the numerical solution of Stochastic Differential Equations [33].

Then, the observation model becomes:

| (9) |

In general, measurements do not take place at every point  of the discretized time, but rather at intervals of

of the discretized time, but rather at intervals of  time steps (depending on the resolution of the measurement device), thus generating a total of

time steps (depending on the resolution of the measurement device), thus generating a total of  measurements. For simplicity in the above description, we have assumed that

measurements. For simplicity in the above description, we have assumed that  . However, all the models we consider in the Results section assume

. However, all the models we consider in the Results section assume  .

.

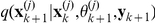

In terms of probability density functions, the non-linear state-space model defined by Eqs. 8 and 9 (known as the dynamics model) and the observation model, respectively) can be written as:

| (10) |

| (11) |

where the initial state  is distributed according to a prior density

is distributed according to a prior density  . The above formulas are known as the state transition and observation densities, respectively [34].

. The above formulas are known as the state transition and observation densities, respectively [34].

Simulation-Based Filtering and Smoothing

In many inference problems involving state-space models, a primary concern is the sequential estimation of the following two conditional probability densities [29]: (a)  and (b)

and (b)  , where

, where  , i.e. the set of observations (for example, a sequence of measurements of the membrane potential) up to the time point

, i.e. the set of observations (for example, a sequence of measurements of the membrane potential) up to the time point  . Density (a), known as the filter density, models the distribution of state

. Density (a), known as the filter density, models the distribution of state  given all observations up to and including the time point

given all observations up to and including the time point  , while density (b), known as the smoother density, models the distribution of state

, while density (b), known as the smoother density, models the distribution of state  given the whole set of observations up to the final time point

given the whole set of observations up to the final time point  .

.

In principle, the filter density can be estimated recursively at each time point  using Bayes' rule appropriately [29]:

using Bayes' rule appropriately [29]:

| (12) |

where  and

and  are the state transition and observation densities, respectively, and

are the state transition and observation densities, respectively, and  is the filter density at the previous time step

is the filter density at the previous time step  .

.

Then, the smoother density can be obtained by using the following general recursive formula:

| (13) |

which evolves backwards in time and makes use of the pre-calculated filter,  . Given either of the above posterior densities, we can compute the expectation of any useful function of the hidden model state as:

. Given either of the above posterior densities, we can compute the expectation of any useful function of the hidden model state as:

| (14) |

where  is either the filter or the smoother density. Common examples of

is either the filter or the smoother density. Common examples of  are

are  itself (giving the mean

itself (giving the mean  ) and the squared difference from the mean (giving the covariance of

) and the squared difference from the mean (giving the covariance of  ).

).

In practice, the computations defined by the above formulas can be performed analytically only for linear Gaussian models using the Kalman smoother/filter and for finite state-space hidden Markov models. For non-linear models, the extended Kalman filter is a popular approach, which however can fail when non-Gaussian or multimodal density functions are involved [34]. A more generally applicable, albeit computationally more intensive approach, approximates the filter and smoother densities using Sequential Monte Carlo (SMC) methods, also known as particle filters

[34], [35]. Within the SMC framework, the filter density at each time point is approximated by a large number  of discrete samples or particles,

of discrete samples or particles,  , and associated non-negative importance weights,

, and associated non-negative importance weights,  :

:

| (15) |

where  is the Dirac delta function centered at the

is the Dirac delta function centered at the  particle,

particle,  .

.

Given an initial set of particles sampled from a prior distribution and their associated weights, a simple update rule involves the following steps [29]:

Step 1: For  , sample a new set of particles from the proposal transition density function,

, sample a new set of particles from the proposal transition density function,  . In general, one has enormous freedom in choosing the form of this density and even condition it on future observations, if these are available (see, for example, [36]). However, the simplest (and a quite common) choice is to use the transition density as the proposal, i.e.

. In general, one has enormous freedom in choosing the form of this density and even condition it on future observations, if these are available (see, for example, [36]). However, the simplest (and a quite common) choice is to use the transition density as the proposal, i.e.  . This is the approach we follow in this paper.

. This is the approach we follow in this paper.

Step 2: For each new particle  , evaluate the importance weight:

, evaluate the importance weight:

|

(16) |

Notice that when  , then the computation of the importance weights is significantly simplified, i.e.

, then the computation of the importance weights is significantly simplified, i.e.  .

.

Step 3: Normalize the computed importance weights, by dividing each of them with their sum, i.e.

|

(17) |

The derived set of weighted samples  is considered an approximation of the filter density

is considered an approximation of the filter density  .

.

In practice, the above algorithm is augmented with a re-sampling step (preceding Step 1), during which  particles are sampled from the set of weighted particles computed at the previous iteration with probabilities proportional to their weights [34], [35]. All re-sampled particles are given weights equal to

particles are sampled from the set of weighted particles computed at the previous iteration with probabilities proportional to their weights [34], [35]. All re-sampled particles are given weights equal to  . This step results in discarding particles with small weights and multiplying particles with large weights, thus compensating for the gradual degeneration of the particle filter i.e. the situation where all particles but one have weights equal to zero. For performance reasons, the resampling step may be applied only when the effective number of particles drops below a threshold value, e.g.

. This step results in discarding particles with small weights and multiplying particles with large weights, thus compensating for the gradual degeneration of the particle filter i.e. the situation where all particles but one have weights equal to zero. For performance reasons, the resampling step may be applied only when the effective number of particles drops below a threshold value, e.g.  . An estimation of the effective number of particles is given by

. An estimation of the effective number of particles is given by

| (18) |

The above filter can be extended to a fixed-lag smoother, if instead of resampling just the particles at the current time step, we store and resample all particles up to  time steps before the current time step, i.e.

time steps before the current time step, i.e.  [29]. The resampled particles can be considered a realization from a posterior density

[29]. The resampled particles can be considered a realization from a posterior density  , which is an approximation of the smoother density

, which is an approximation of the smoother density  , for sufficiently large values of

, for sufficiently large values of  .

.

Within this Monte Carlo framework, the expectation in Eq. 14 can be approximated as:

| (19) |

for a large number  of weighted samples.

of weighted samples.

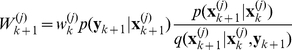

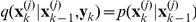

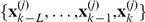

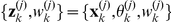

Simultaneous Estimation of Hidden States and Parameters

It is possible to apply the above standard filtering and smoothing techniques to parameter estimation problems involving state-space models. The key idea [29] is to define an extended state vector  by augmenting the state vector

by augmenting the state vector  with the model parameters, i.e.

with the model parameters, i.e.  . Then, the time evolution of the extended state-space model becomes:

. Then, the time evolution of the extended state-space model becomes:

| (20) |

while the observational model remains unaltered:

| (21) |

The marginal posterior density of the parameter vector  is given by:

is given by:

| (22) |

and, subsequently, the expectation of any function of  can be computed as in Eq. 14:

can be computed as in Eq. 14:

| (23) |

Furthermore, given a set of particles and associated weights, which approximate the smoother density  as outlined in the previous section, i.e.

as outlined in the previous section, i.e.  for

for  , the above expectation can be approximated as:

, the above expectation can be approximated as:

| (24) |

for large  .

.

Under this formulation, parameter estimation, which is traditionally treated as an optimization problem, is reduced to an integration problem, which can be tackled using filtering and smoothing methodologies for state-space models, a well-studied subject in the field of Computational Statistics.

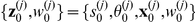

Connection to Evolutionary Algorithms

It should be emphasized that although in Eq. 20 the parameter vector  was assumed constant, i.e.

was assumed constant, i.e.  , the same methodology applies in the case of parameters that are naturally evolving in time, such as a time-varying externally injected current

, the same methodology applies in the case of parameters that are naturally evolving in time, such as a time-varying externally injected current  . A particularly interesting case arises when an artificial evolution rule is imposed on a parameter vector, which is otherwise constant by definition. Such a rule allows sampling new parameter vectors based on samples at the previous time step, i.e.

. A particularly interesting case arises when an artificial evolution rule is imposed on a parameter vector, which is otherwise constant by definition. Such a rule allows sampling new parameter vectors based on samples at the previous time step, i.e.  , and generating a sequence

, and generating a sequence  , which explores the parameter space and, ideally converges in a small optimal subset of it, after a sufficiently large number of iterations. It is at this point that the opportunity to use techniques borrowed from the domain of Evolutionary Algorithms arises. Here, we assume that the artificial evolution of the parameter vector

, which explores the parameter space and, ideally converges in a small optimal subset of it, after a sufficiently large number of iterations. It is at this point that the opportunity to use techniques borrowed from the domain of Evolutionary Algorithms arises. Here, we assume that the artificial evolution of the parameter vector  is governed by a version of the Covariance Matrix Adaptation algorithm [30], a well-known Evolution Strategy, although the modeler is free to make other choices (e.g. Differential Evolution [31]). For the

is governed by a version of the Covariance Matrix Adaptation algorithm [30], a well-known Evolution Strategy, although the modeler is free to make other choices (e.g. Differential Evolution [31]). For the  particle, we write:

particle, we write:

| (25) |

where  is a random vector with elements sampled from a normal distribution with zero mean and unit variance.

is a random vector with elements sampled from a normal distribution with zero mean and unit variance.  and

and  are a mean vector and covariance matrix respectively, which are computed as follows:

are a mean vector and covariance matrix respectively, which are computed as follows:

| (26) |

| (27) |

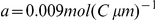

In the above expressions, a and  are small adaptation constants and

are small adaptation constants and  and

and  are the expectation and covariance of the weighted sample of

are the expectation and covariance of the weighted sample of  , respectively.

, respectively.  is a scale parameter that evolves according to a log-normal update rule:

is a scale parameter that evolves according to a log-normal update rule:

| (28) |

where  is a small adaptation constant and

is a small adaptation constant and  is a normally distributed random number with zero mean and unit variance.

is a normally distributed random number with zero mean and unit variance.

According to Eq. 25, the parameter vector  is sampled at each iteration of the algorithm from a multivariate normal distribution, which is centered at

is sampled at each iteration of the algorithm from a multivariate normal distribution, which is centered at  and has a covariance matrix equal to

and has a covariance matrix equal to  :

:

| (29) |

Both  and

and  are slowly adapting to the sample mean

are slowly adapting to the sample mean  and covariance

and covariance  , with an adaptation rate determined by the constants

, with an adaptation rate determined by the constants  and

and  . Notice that by switching off the adaptation process (i.e. by setting

. Notice that by switching off the adaptation process (i.e. by setting  ),

),  evolves according to a multivariate Gaussian distribution, which is centered at the previous parameter vector and has a covariance matrix equal to

evolves according to a multivariate Gaussian distribution, which is centered at the previous parameter vector and has a covariance matrix equal to  :

:

| (30) |

Therefore, given an initial set of weighted particles  sampled from some prior density function and an initial covariance matrix

sampled from some prior density function and an initial covariance matrix  , which may be set equal to the identity matrix, the smoothing algorithm presented earlier becomes:

, which may be set equal to the identity matrix, the smoothing algorithm presented earlier becomes:

Step 1a: Compute the expectation  and covariance

and covariance  of the weighted sample of

of the weighted sample of

Step 1b: For  , compute the scale factor

, compute the scale factor  according to Eq. 28. Notice that this scale factor is now part of the extended state

according to Eq. 28. Notice that this scale factor is now part of the extended state  for each particle

for each particle

Step 1c: For  , compute the mean vector

, compute the mean vector  , as shown in Eq. 26

, as shown in Eq. 26

Step 1d: Compute the covariance matrix  , as shown in Eq. 27

, as shown in Eq. 27

Step 1e: For  , sample

, sample  , as shown in Eq. 25

, as shown in Eq. 25

Step 1f: For  , sample a new set of state vectors from the proposal density

, sample a new set of state vectors from the proposal density  , thus completing sampling the extended vectors

, thus completing sampling the extended vectors  . Notice that the proposal density

. Notice that the proposal density  is conditioned on the updated parameter vector

is conditioned on the updated parameter vector  .

.

Step 2–3: Execute steps 2 and 3 as described previously

Notice that in the algorithm outlined above, the order in which the components of  are sampled is important. First, we sample the scaling factor

are sampled is important. First, we sample the scaling factor  . Then, we sample the parameter vector

. Then, we sample the parameter vector  given the updated

given the updated  . Finally, we sample the state vector

. Finally, we sample the state vector  from a proposal, which is conditioned on the updated parameter vector

from a proposal, which is conditioned on the updated parameter vector  . When resampling occurs, the state vectors

. When resampling occurs, the state vectors  with large importance weights are selected and multiplied with high probability along with their associated parameter vectors and scaling factors, thus resulting in a gradual self-adaptation process. This self-adaptation mechanism is very common in the Evolution Strategies literature.

with large importance weights are selected and multiplied with high probability along with their associated parameter vectors and scaling factors, thus resulting in a gradual self-adaptation process. This self-adaptation mechanism is very common in the Evolution Strategies literature.

Implementation

The algorithm described in the previous section was implemented in MATLAB and C (source code available as Supplementary Material; unmaintained FORTRAN code is also available upon request from the first author) and tested on parameter inference problems using simulated or actual electrophysiological data and a number of Hodgkin-Huxley-type models: (a) a single-compartment model (derived from the classic Hodgkin-Huxley model of neural excitability) containing a leakage, transient sodium and delayed rectifier potassium current, (b) a two-compartment model of a cat spinal motoneuron [37] and (c) a model of a B4 motoneuron in the Central Nervous System of the pond snail Lymnaea stagnalis

[38], which was developed as part of this study. Each of these models is described in detail in the Results section. Models (a) and (b) were used for generating noisy voltage traces at a sampling rate of  (one sample every

(one sample every  ). The simulated data was subsequently used as input to the algorithm in order to estimate a large number of parameters; typically, maximal conductances of ionic currents, reversal potentials, the parameters governing the activation and inactivation kinetics of ionic currents, as well as the levels of intrinsic and observation noise. Estimated parameter values were subsequently compared against the true parameter values in the model. The MATLAB environment was used for visualization and analysis of simulation results. For the estimation of the unknown parameters in model (c), actual electrophysiological data were used, as described in the next section.

). The simulated data was subsequently used as input to the algorithm in order to estimate a large number of parameters; typically, maximal conductances of ionic currents, reversal potentials, the parameters governing the activation and inactivation kinetics of ionic currents, as well as the levels of intrinsic and observation noise. Estimated parameter values were subsequently compared against the true parameter values in the model. The MATLAB environment was used for visualization and analysis of simulation results. For the estimation of the unknown parameters in model (c), actual electrophysiological data were used, as described in the next section.

Prior information was incorporated in the smoother by assuming that parameter values were not allowed to exceed well-defined upper or lower limits (see Tables 1, 2 and 3). For example, maximal conductances never received negative values, while time constants were always larger than zero. At the beginning of each simulation, the initial population of particles was uniformly sampled from within the acceptable range of parameter values and, during each simulation, parameters were forced to remain within their pre-defined limits.

Table 1. True and estimated values and prior intervals used during smoothing for all parameters in the single-compartment conductance-based model.

Table 2. True and estimated values and prior intervals used during smoothing for all parameters in the two-compartment conductance-based model.

| # | Parameter | Unit | True Value | Estimated Value1 | Lower Bound | Upper Bound |

| 1 |

|

|

|

|

|

|

| 2 |

|

|

|

|

|

|

| 3 |

|

|

|

|

|

|

| 4 |

|

|

|

|

|

|

| 5 |

|

|

|

|

|

|

| 6 |

|

|

|

|

|

|

| 7 |

|

|

|

|

|

|

| 8 |

|

|

|

|

|

|

| 9 |

|

|

|

|

|

|

| 10 |

|

|

|

|

|

|

| 11 |

|

|

−35.0 |

|

−60.0 (−45.0) 2 |

(−25.0)

(−25.0)

|

| 12 |

|

|

|

|

−60.0 (−65.0) |

(−45.0)

(−45.0)

|

| 13 |

|

|

|

|

−60.0 (−40.0) |

(−20.0)

(−20.0)

|

| 14 |

|

|

|

|

−60.0 (−40.0) |

(−20.0)

(−20.0)

|

| 15 |

|

|

|

|

−60.0 (−55.0) |

(−35.0)

(−35.0)

|

| 16 |

|

|

|

|

−60.0 (−50.0) |

(−30.0)

(−30.0)

|

| 17 |

|

|

|

|

(5.0)

(5.0)

|

(10.0)

(10.0)

|

| 18 |

|

|

|

|

(−10.0)

(−10.0)

|

(−5.0)

(−5.0)

|

| 19 |

|

|

|

|

(10.0)

(10.0)

|

(20.0)

(20.0)

|

| 20 |

|

|

|

|

(3.0)

(3.0)

|

(8.0)

(8.0)

|

| 21 |

|

|

0 0 |

|

(−8.0)

(−8.0)

|

(−3.0)

(−3.0)

|

| 22 |

|

|

|

|

(5.0)

(5.0)

|

(10.0)

(10.0)

|

| 23 |

|

|

|

|

|

|

| 24 |

|

|

|

|

|

|

| 25 |

|

|

|

|

|

|

| 26 |

|

- |

|

|

(0.5)

(0.5)

|

(1.0)

(1.0)

|

| 27 |

|

- |

|

|

(0.5)

(0.5)

|

(1.0)

(1.0)

|

| 28 |

|

|

|

|

|

|

| 29 |

|

|

|

|

|

|

| 30 |

|

|

|

|

|

|

These parameter values were estimated when we used the broad prior intervals (see Fig. 11Ai).

Values in bold indicate the narrow prior intervals we used for generating Figs. 11Aii, 11B, 11C (and Supplementary Figs. S4 and S5).

Table 3. Estimated mean values and prior limits used during smoothing for all parameters in the B4 model.

| # | Parameter | Unit | Estimated Mean Value1 , 2 | Lower Bound | Upper Bound |

| 1 |

|

|

|

|

|

| 2 |

|

|

|

|

|

| 3 |

|

|

|

|

|

| 4 |

|

|

|

(−40.0)

(−40.0)

|

(−20.0)

(−20.0)

|

| 5 |

|

|

|

(−40.0)

(−40.0)

|

(−20.0)

(−20.0)

|

| 6 |

|

|

|

(−40.0)

(−40.0)

|

(−20.0)

(−20.0)

|

| 7 |

|

|

|

(−20.0)

(−20.0)

|

(0.0)

(0.0)

|

| 8 |

|

|

|

(−70.0)

(−70.0)

|

(−40.0)

(−40.0)

|

| 9 |

|

|

|

(5.0)

(5.0)

|

(10.0)

(10.0)

|

| 10 |

|

|

|

(−10.0)

(−10.0)

|

(−5.0)

(−5.0)

|

| 11 |

|

|

|

(10.0)

(10.0)

|

(15.0)

(15.0)

|

| 12 |

|

|

|

(5.0)

(5.0)

|

(10.0)

(10.0)

|

| 13 |

|

|

|

(−25.0)

(−25.0)

|

(−15.0)

(−15.0)

|

| 14 |

|

|

|

(15.0)

(15.0)

|

(25.0)

(25.0)

|

| 15 |

|

|

|

(25.0)

(25.0)

|

(35.0)

(35.0)

|

| 16 |

|

|

|

(25.0)

(25.0)

|

(35.0)

(35.0)

|

| 17 |

|

|

|

(35.0)

(35.0)

|

(60.0)

(60.0)

|

All simulations were performed on an Intel dual-core i5 processor with 4 GB of memory running Ubuntu Linux. The number of particles used in each simulation was typically  , where

, where  was the dimensionality of the extended state

was the dimensionality of the extended state  (equal to the number of free parameters and dynamic states in the model). The time step

(equal to the number of free parameters and dynamic states in the model). The time step  in the Euler-Maruyama method was set equal to

in the Euler-Maruyama method was set equal to  . The parameter

. The parameter  of the fixed-lag smoother was set equal to

of the fixed-lag smoother was set equal to  (unless stated otherwise), which is equivalent to a time window

(unless stated otherwise), which is equivalent to a time window  wide (since data were sampled every

wide (since data were sampled every  ). The adaptation constants

). The adaptation constants  ,

,  and

and  in Eqs. 26, 27 and 28 were all set equal to

in Eqs. 26, 27 and 28 were all set equal to  , unless stated otherwise. Depending on the size of

, unless stated otherwise. Depending on the size of  , the complexity of the model and the length of the (actual or simulated) electrophysiological recordings, simulation times ranged from a few minutes up to more than

, the complexity of the model and the length of the (actual or simulated) electrophysiological recordings, simulation times ranged from a few minutes up to more than  hours.

hours.

Electrophysiology

As part of this study, we developed a single-compartment Hodgkin-Huxley-type model of a B4 neuron in the pond-snail Lymnaea stagnalis

[38]. B4 neurons are part of the neural circuit that controls the rhythmic movements of the feeding muscles via which the animal captures and ingests its food. The Lymnaea central nervous system was dissected from adult animals (shell length  ) that were bred at the University of Leicester as described previously [39]. All dissections were carried out in

) that were bred at the University of Leicester as described previously [39]. All dissections were carried out in  -buffered saline containing (in

-buffered saline containing (in  )

)  ,

,  ,

,  ,

,  , and

, and  ,

,  , in distilled water. All chemicals were purchased from Sigma. The buccal ganglia containing the B4 neurons were separated from the rest of the nervous system by cutting the cerebral buccal connectives and the buccal-buccal connective was crushed to eliminate electrical coupling between B4 neurons in the left and right buccal ganglion. Prior to recording, excess saline was removed from the dish and small crystals of protease type XIV were placed directly on top of the buccal ganglia to soften the connective tissue and aid the impalement of individual neurons. The protease crystals were washed of after about

, in distilled water. All chemicals were purchased from Sigma. The buccal ganglia containing the B4 neurons were separated from the rest of the nervous system by cutting the cerebral buccal connectives and the buccal-buccal connective was crushed to eliminate electrical coupling between B4 neurons in the left and right buccal ganglion. Prior to recording, excess saline was removed from the dish and small crystals of protease type XIV were placed directly on top of the buccal ganglia to soften the connective tissue and aid the impalement of individual neurons. The protease crystals were washed of after about  with multiple changes of

with multiple changes of  -buffered saline. The B4 neuron was visually identified based on its size and position and impaled with two sharp intracellular electrodes filled with a mixture of

-buffered saline. The B4 neuron was visually identified based on its size and position and impaled with two sharp intracellular electrodes filled with a mixture of  potassium acetate and

potassium acetate and  potassium chloride (resistance

potassium chloride (resistance  ). During the recording, the preparation was bathed in

). During the recording, the preparation was bathed in  -buffered saline plus

-buffered saline plus  hexamethonium chloride to block cholinergic synaptic inputs and suppress spontaneous fictive feeding activity.

hexamethonium chloride to block cholinergic synaptic inputs and suppress spontaneous fictive feeding activity.

The signals from the two intracellular electrodes were amplified using a Multiclamp 900A amplifier (Molecular Devices), digitized at a sampling frequency of  using a CED1401plus A/D converter (Cambridge Electronic Devices) and recorded on a PC using Spike2 version 6 software (Cambridge Electronic Devices). A custom set of instructions using the Spike2 scripting language was used to generate sequences of current pulses consisting of individual random steps ranging in amplitude from

using a CED1401plus A/D converter (Cambridge Electronic Devices) and recorded on a PC using Spike2 version 6 software (Cambridge Electronic Devices). A custom set of instructions using the Spike2 scripting language was used to generate sequences of current pulses consisting of individual random steps ranging in amplitude from  to

to  and a duration from

and a duration from  to

to  . The current signal was injected through one of the recording electrodes whilst the second electrode was used to measure the resulting changes in membrane potential.

. The current signal was injected through one of the recording electrodes whilst the second electrode was used to measure the resulting changes in membrane potential.

Results

Hidden States, Intrinsic and Observational Noise are Simultaneously Estimated Using the Fixed-Lag Smoother

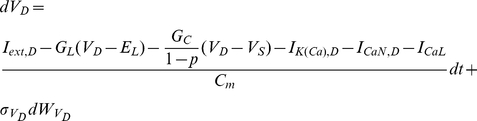

The applicability of the fixed-lag smoother presented above was demonstrated on a range of Hodgkin-Huxley-type models using simulated or actual electrophysiological data. The first model we examined consisted of a single compartment containing leakage, sodium and potassium currents, as shown below:

|

|

(32) |

where  . Notice the absence of noise in the dynamics of

. Notice the absence of noise in the dynamics of  ,

,  and

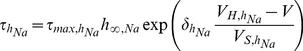

and  , which is valid if we assume a very large number of channels (see Supplementary Material and Supplementary Figures S1 and S2 for the case were noise is present in the dynamics of these variables). The steady states and relaxation times of the activation and inactivation gating variables were voltage-dependent, as shown below (e.g. [5]):

, which is valid if we assume a very large number of channels (see Supplementary Material and Supplementary Figures S1 and S2 for the case were noise is present in the dynamics of these variables). The steady states and relaxation times of the activation and inactivation gating variables were voltage-dependent, as shown below (e.g. [5]):

|

(33) |

and

|

(34) |

where  and

and  . The parameters

. The parameters  ,

,  ,

,  ,

,  and

and  in Eqs. 33 and 34 were chosen such that

in Eqs. 33 and 34 were chosen such that  and

and  fit closely the corresponding steady-states and relaxation times of the classic Hodgkin-Huxley model of neural excitability in the giant squid axon [40]. Observations consisted of noisy measurements of the membrane potential, as shown in Eq. 7. The full set of parameter values in the above model is given in Table 1.

fit closely the corresponding steady-states and relaxation times of the classic Hodgkin-Huxley model of neural excitability in the giant squid axon [40]. Observations consisted of noisy measurements of the membrane potential, as shown in Eq. 7. The full set of parameter values in the above model is given in Table 1.

First, we used the fixed-lag smoother to simultaneously infer the hidden states ( ,

,  ,

,  ,

,  ) and standard deviations of the intrinsic (

) and standard deviations of the intrinsic ( ) and observation (

) and observation ( ) noise based on

) noise based on  -long simulated recordings of the membrane potential

-long simulated recordings of the membrane potential  . These recordings were generated by assuming a time-dependent

. These recordings were generated by assuming a time-dependent  in Eq. 31, which consisted of a sequence of current steps with amplitude randomly distributed between

in Eq. 31, which consisted of a sequence of current steps with amplitude randomly distributed between  and

and  and random duration up to a maximum of

and random duration up to a maximum of  . Two simulated voltage recordings were generated corresponding to two different levels of observation noise,

. Two simulated voltage recordings were generated corresponding to two different levels of observation noise,  and

and  , respectively. The second value (

, respectively. The second value ( ) was rather extreme and it was chosen in order to illustrate the applicability of the method even at very high levels of observation noise. Simulated data points were sampled every

) was rather extreme and it was chosen in order to illustrate the applicability of the method even at very high levels of observation noise. Simulated data points were sampled every  (

( ). The standard deviation of the intrinsic noise was set at

). The standard deviation of the intrinsic noise was set at  . The injected current

. The injected current  and the induced voltage trace (for either value of

and the induced voltage trace (for either value of  ) were then used as input to the smoother, during the inference phase. At this stage, all other parameters in the model (conductances, reversal potentials, and ionic current kinetics) were assumed known, thus the extended state vector took the form

) were then used as input to the smoother, during the inference phase. At this stage, all other parameters in the model (conductances, reversal potentials, and ionic current kinetics) were assumed known, thus the extended state vector took the form  , where

, where  was a scale factor as in Eq. 25. New samples for

was a scale factor as in Eq. 25. New samples for  were taken from a log-normal distribution (Eq. 28), while new samples for

were taken from a log-normal distribution (Eq. 28), while new samples for  and

and  were drawn from an adaptive bivariate Gaussian distribution at each iteration of the algorithm (Eq. 25). For each data set, smoothing was repeated for two different values of the smoothing lag, i.e.

were drawn from an adaptive bivariate Gaussian distribution at each iteration of the algorithm (Eq. 25). For each data set, smoothing was repeated for two different values of the smoothing lag, i.e.  and

and  .

.  corresponds to filtering, while

corresponds to filtering, while  corresponds to smoothing with a fixed lag equal to

corresponds to smoothing with a fixed lag equal to  . Our results from this set of simulations are summarized in Fig. 1.

. Our results from this set of simulations are summarized in Fig. 1.

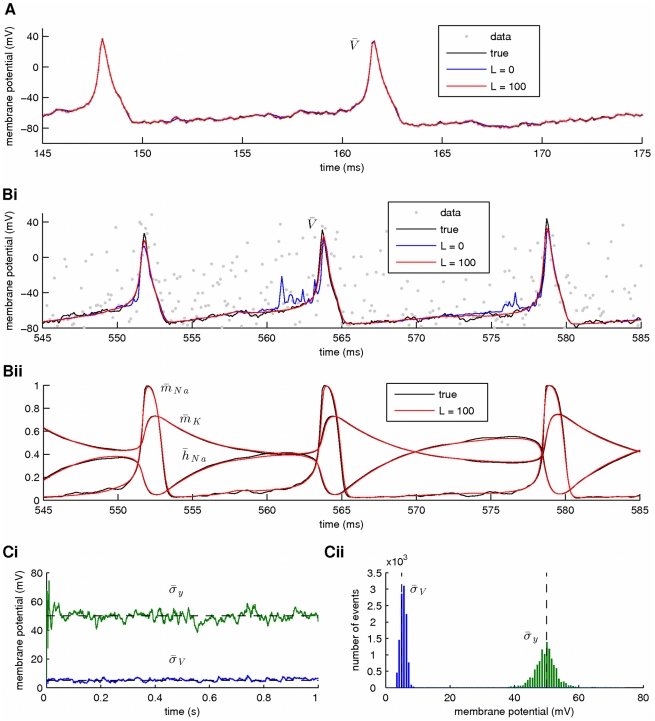

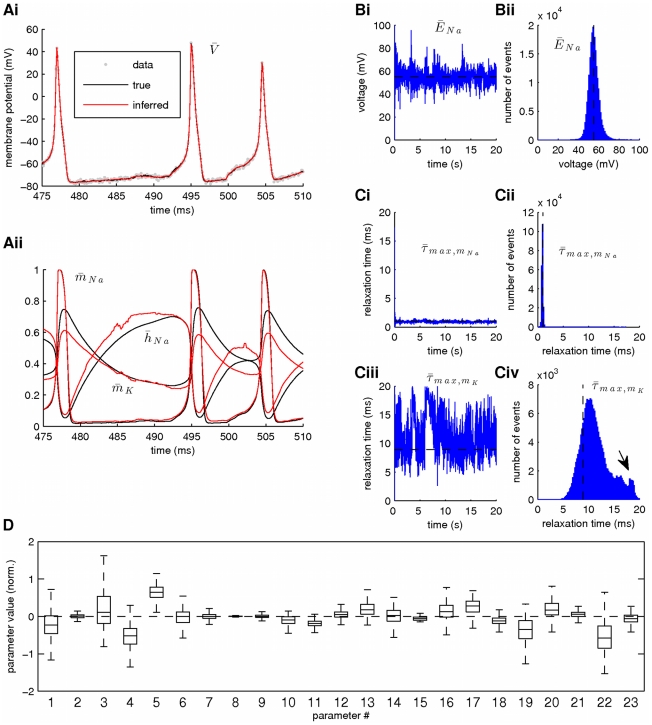

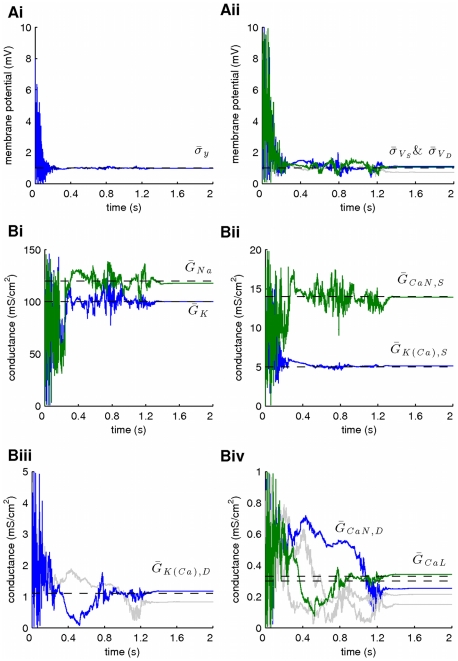

Figure 1. Simultaneous estimation of hidden states, intrinsic and observation noise.

Estimation was based on a simulated recording of membrane potential with duration  . For clarity, only

. For clarity, only  of activity are shown in A and Bi,ii. (A) Smoothing of the membrane potential (the observed variable), when observation noise was low (

of activity are shown in A and Bi,ii. (A) Smoothing of the membrane potential (the observed variable), when observation noise was low ( ). High-fidelity smoothing was achieved for either small (

). High-fidelity smoothing was achieved for either small ( ) or large (

) or large ( ) values of the fixed smoothing lag

) values of the fixed smoothing lag  . Simulated and smoothed data are difficult to distinguish due to their overlap. (Bi) Smoothing of the membrane potential at high levels of observation noise (

. Simulated and smoothed data are difficult to distinguish due to their overlap. (Bi) Smoothing of the membrane potential at high levels of observation noise ( ). A large value of the smoothing lag (

). A large value of the smoothing lag ( ) was required for high-fidelity smoothing. (Bii) Inference of the unobserved activation (

) was required for high-fidelity smoothing. (Bii) Inference of the unobserved activation ( ,

,  ) and inactivation (

) and inactivation ( ) variables for sodium and potassium currents as functions of time, during smoothing of the data shown in Bi for

) variables for sodium and potassium currents as functions of time, during smoothing of the data shown in Bi for  . (Ci) Inference of the standard deviations for the intrinsic and observation noise (

. (Ci) Inference of the standard deviations for the intrinsic and observation noise ( and

and  , respectively) during smoothing of the data shown in Bi for

, respectively) during smoothing of the data shown in Bi for  . Dashed lines indicate the true values of

. Dashed lines indicate the true values of  and

and  . (Cii) Histograms of the time series for

. (Cii) Histograms of the time series for  and

and  in Ci. Again, dashed lines indicate the true values of the corresponding parameters. At this stage, maximal conductances, reversal potentials and kinetic parameters in the model were assumed known. The number of particles was

in Ci. Again, dashed lines indicate the true values of the corresponding parameters. At this stage, maximal conductances, reversal potentials and kinetic parameters in the model were assumed known. The number of particles was  . Also,

. Also,  . The scaling factors in Eq. 25 were all considered equal to

. The scaling factors in Eq. 25 were all considered equal to  .

.

We observed that at low levels of observation noise (Fig. 1A), the inferred expectation of the voltage (solid blue and red lines) closely matched the underlying (true) signal (solid black line). This was true for both values of the fixed lag  used for smoothing. However, at high levels of observation noise (Fig. 1Bi), the true voltage was inferred with high fidelity when a large value of the fixed lag (

used for smoothing. However, at high levels of observation noise (Fig. 1Bi), the true voltage was inferred with high fidelity when a large value of the fixed lag ( ) was used (solid red line), but not when

) was used (solid red line), but not when  (solid blue line). Furthermore, the inferred expectations of the unobserved dynamic variables

(solid blue line). Furthermore, the inferred expectations of the unobserved dynamic variables  ,

,  and

and  (solid red lines in Fig. 1Bii) also matched the true hidden time series (solid black lines in the same figure) remarkably well, when

(solid red lines in Fig. 1Bii) also matched the true hidden time series (solid black lines in the same figure) remarkably well, when  .

.

We repeat that during these simulations an artificial update rule was imposed on the two free standard deviations  and

and  , as shown in Eq. 25. The artificial evolution of these parameters is illustrated in Fig. 1Ci, where the inferred expectations of

, as shown in Eq. 25. The artificial evolution of these parameters is illustrated in Fig. 1Ci, where the inferred expectations of  and

and  are presented as functions of time. These expectations converged immediately, fluctuating around the true values of

are presented as functions of time. These expectations converged immediately, fluctuating around the true values of  and

and  (dashed lines in Fig. 1Ci). This is also illustrated by the histograms in Fig. 1Cii, which were constructed from the data points in Fig. 1Ci. We observed that the peaks of these histograms were located quite closely to the true values of

(dashed lines in Fig. 1Ci). This is also illustrated by the histograms in Fig. 1Cii, which were constructed from the data points in Fig. 1Ci. We observed that the peaks of these histograms were located quite closely to the true values of  and

and  (dashed lines in Fig. 1Cii).

(dashed lines in Fig. 1Cii).

In summary, the fixed-lag smoother was able to recover the hidden states and standard deviations of the intrinsic and observation noise in the model based on noisy observations of the membrane potential. This was true even at high levels of observation noise, subject to the condition that a sufficiently large smoothing lag  was adopted during the simulation.

was adopted during the simulation.

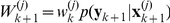

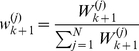

Adaptive Sampling Reduces the Variance of Inferred Parameter Distributions and Accelerates Convergence of the Algorithm

Next, we treated two more parameters in the model as unknown, i.e. the maximal conductances of the transient sodium ( ) and delayed rectifier potassium (

) and delayed rectifier potassium ( ) currents. The extended state vector, thus, took the form

) currents. The extended state vector, thus, took the form  . As in the previous section, new samples for

. As in the previous section, new samples for  were drawn from a log-normal distribution (Eq. 28), while

were drawn from a log-normal distribution (Eq. 28), while  ,

,  ,

,  and

and  were sampled by default from an adaptive multivariate Gaussian distribution at each iteration of the algorithm (Eq. 25).

were sampled by default from an adaptive multivariate Gaussian distribution at each iteration of the algorithm (Eq. 25).

In order to examine the effect of this adaptive sampling approach on the variance of the inferred parameter distributions, we repeated fixed-lag smoothing on  -long simulated recordings of the membrane potential assuming each time that different aspects of the adaptive sampling process were switched off, as illustrated in Fig. 2. First, we assumed that no adaptation was imposed on

-long simulated recordings of the membrane potential assuming each time that different aspects of the adaptive sampling process were switched off, as illustrated in Fig. 2. First, we assumed that no adaptation was imposed on  or the “unknown” noise parameters and maximal conductances, i.e. the constants

or the “unknown” noise parameters and maximal conductances, i.e. the constants  ,

,  and

and  in Eqs. 26–28 were all set equal to zero. In this case, the multivariate Gaussian distribution from which new samples of

in Eqs. 26–28 were all set equal to zero. In this case, the multivariate Gaussian distribution from which new samples of  ,

,  ,

,  and

and  were drawn from reduced to Eq. 30. In addition, we assumed that

were drawn from reduced to Eq. 30. In addition, we assumed that  in the same equation was equal to

in the same equation was equal to  , for all samples

, for all samples  . Under these conditions, the true values of the free parameters were correctly estimated through application of the fixed-lag smoother, as illustrated for the case of

. Under these conditions, the true values of the free parameters were correctly estimated through application of the fixed-lag smoother, as illustrated for the case of  and

and  in Figs. 2Ai and 2Aii.

in Figs. 2Ai and 2Aii.

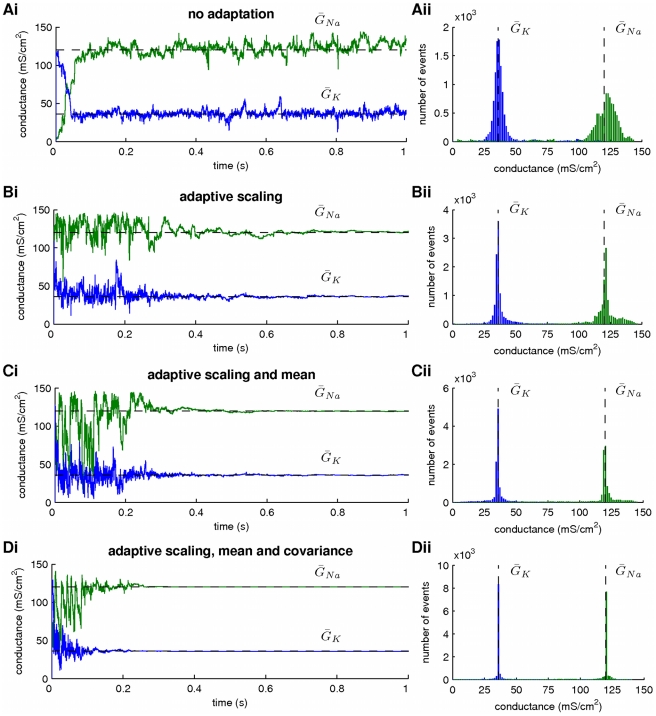

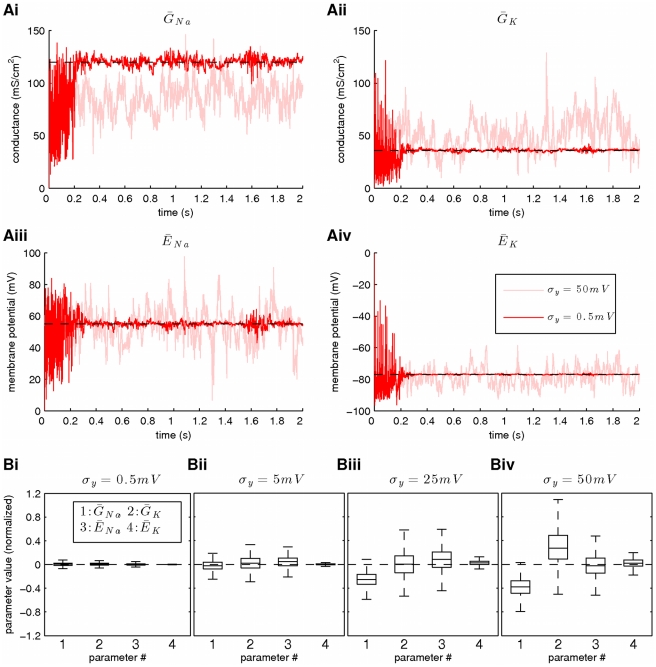

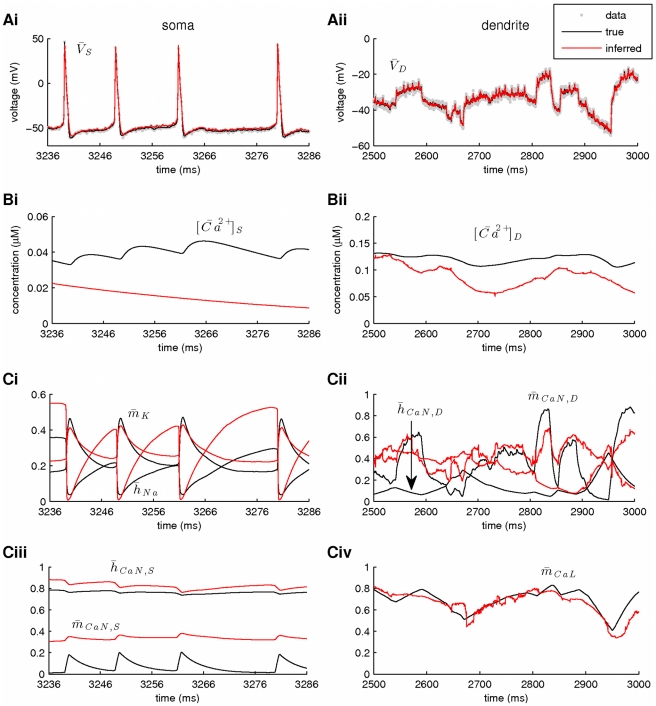

Figure 2. The effect of adaptive parameter sampling on the variance of parameter estimates.

Merging the fixed-lag smoother with an adaptive sampling algorithm akin to the Covariance Matrix Adaptation Evolution Strategy reduced significantly the variance of parameter estimates. At this stage, the maximal conductances for the sodium ( ) and potassium (

) and potassium ( ) currents were assumed unknown. Estimation was based on a simulated recording of membrane potential with duration

) currents were assumed unknown. Estimation was based on a simulated recording of membrane potential with duration  and

and  . (A) Inference of

. (A) Inference of  and

and  during smoothing, when new parameter samples were drawn from a non-adaptive multi-variate normal distribution (Eq. 30). Dashed lines indicate the true parameter values. (B) Inference of

during smoothing, when new parameter samples were drawn from a non-adaptive multi-variate normal distribution (Eq. 30). Dashed lines indicate the true parameter values. (B) Inference of  and

and  during smoothing, when new samples were drawn from a multi-variate normal distribution (Eq. 25) with an adaptive scaling factor

during smoothing, when new samples were drawn from a multi-variate normal distribution (Eq. 25) with an adaptive scaling factor  (

( in Eq. 28). (C) Inference of

in Eq. 28). (C) Inference of  and

and  during smoothing, when new samples were drawn from a multi-variate normal distribution (Eq. 25) with adaptive scaling (as in B) and mean (

during smoothing, when new samples were drawn from a multi-variate normal distribution (Eq. 25) with adaptive scaling (as in B) and mean ( in Eq. 26). (D) Inference of

in Eq. 26). (D) Inference of  and

and  during smoothing, when new samples were drawn from a multi-variate normal distribution with adaptive scaling (as in B), mean (as in C) and covariance (

during smoothing, when new samples were drawn from a multi-variate normal distribution with adaptive scaling (as in B), mean (as in C) and covariance ( in Eq. 27). The histograms in the right plots were constructed from the time series in the left plots. Membrane potential, activation and inactivation variables, intrinsic and observation noise were also subject to estimation, as in Fig. 1. Smoothing lag and number of particles were

in Eq. 27). The histograms in the right plots were constructed from the time series in the left plots. Membrane potential, activation and inactivation variables, intrinsic and observation noise were also subject to estimation, as in Fig. 1. Smoothing lag and number of particles were  and

and  , respectively. The prior interval of the scaling factors

, respectively. The prior interval of the scaling factors  was

was  .

.

Subsequently, we repeated smoothing assuming that the scale factor  evolved according to the log-normal update rule given by Eq. 28 with

evolved according to the log-normal update rule given by Eq. 28 with  , while

, while  and

and  were again set equal to

were again set equal to  . As illustrated in Figs. 2Bi and 2Bii for parameters

. As illustrated in Figs. 2Bi and 2Bii for parameters  and

and  , by imposing this simple adaptation rule on the multivariate Gaussian distribution from which the free parameters in the model were sampled, we managed again to estimate correctly their values, but this time the variance of the inferred parameter distributions (the width of the histograms in Fig. 2Bii) was drastically reduced.

, by imposing this simple adaptation rule on the multivariate Gaussian distribution from which the free parameters in the model were sampled, we managed again to estimate correctly their values, but this time the variance of the inferred parameter distributions (the width of the histograms in Fig. 2Bii) was drastically reduced.

By further letting the mean and covariance of the proposal Gaussian distribution in Eq. 25 adapt (by setting  in Eqs. 26 and 27), we achieved a further decrease in the spread of the inferred parameter distributions (Figs. 2C and 2D). Parameters

in Eqs. 26 and 27), we achieved a further decrease in the spread of the inferred parameter distributions (Figs. 2C and 2D). Parameters  and

and  and the hidden states

and the hidden states  ,

,  ,

,  and

and  were also inferred with very high fidelity in all cases (as in Fig. 1), but the variance of the estimated posteriors for

were also inferred with very high fidelity in all cases (as in Fig. 1), but the variance of the estimated posteriors for  and

and  followed the same pattern as the variance of

followed the same pattern as the variance of  and

and  .

.

It is worth observing that when all three adaptation processes were switched on (i.e.  ), the algorithm converged to a single point in parameter space within the first

), the algorithm converged to a single point in parameter space within the first  of simulation, which coincided with the true parameter values in the model (see Fig. 2D for the case of

of simulation, which coincided with the true parameter values in the model (see Fig. 2D for the case of  and

and  ). At this point, the covariance matrix

). At this point, the covariance matrix  became very small (i.e. all its elements were less than

became very small (i.e. all its elements were less than  , although the matrix itself remained non-singular) and the mean

, although the matrix itself remained non-singular) and the mean  was very close to the true parameter vector

was very close to the true parameter vector  . We note that

. We note that  and

and  , where

, where  stands for the expectation computed over the population of particles. In this case, it is not strictly correct to claim that the chains in Fig. 2Di approximate the posteriors of the unknown parameters

stands for the expectation computed over the population of particles. In this case, it is not strictly correct to claim that the chains in Fig. 2Di approximate the posteriors of the unknown parameters  and

and  ; since repeating the simulation many times would result in convergence at slightly different points clustered tightly around the true parameter values, it would be more reasonable to claim that these optimal points are random samples from the posterior parameter distribution and they can be treated as estimates of its mode.

; since repeating the simulation many times would result in convergence at slightly different points clustered tightly around the true parameter values, it would be more reasonable to claim that these optimal points are random samples from the posterior parameter distribution and they can be treated as estimates of its mode.

Depending on the situation, one may wish to estimate the full posteriors of the unknown parameters or just an optimal set of parameter values, which can be used in a subsequent predictive simulation. In Fig. 3A, we examined in more detail how the scale factor  affects the variance of the final estimates, assuming that

affects the variance of the final estimates, assuming that  . We repeat that each particle

. We repeat that each particle  contains

contains  as a component of its extended state. Each scaling factor

as a component of its extended state. Each scaling factor  is updated at each iteration of the algorithm following a lognormal rule (Eq. 28, Step 1b of the algorithm in the Methods section). Sampling new parameter vectors is conditioned on these updated scaling factors (Eq. 25, Step 1e of the algorithm). When at a later stage weighting (and resampling) of the particles occurs, the scaling factors that are associated with high-weight parameters and hidden states are likely to survive into subsequent iterations (or “generations”) of the algorithm. During the course of this adaptive process, the scaling factors

is updated at each iteration of the algorithm following a lognormal rule (Eq. 28, Step 1b of the algorithm in the Methods section). Sampling new parameter vectors is conditioned on these updated scaling factors (Eq. 25, Step 1e of the algorithm). When at a later stage weighting (and resampling) of the particles occurs, the scaling factors that are associated with high-weight parameters and hidden states are likely to survive into subsequent iterations (or “generations”) of the algorithm. During the course of this adaptive process, the scaling factors  are allowed to fluctuate only within predefines limits, similarly to the other components of the extended state vector.

are allowed to fluctuate only within predefines limits, similarly to the other components of the extended state vector.

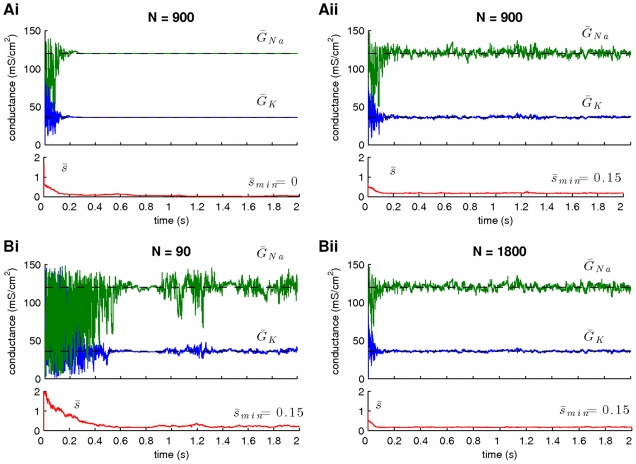

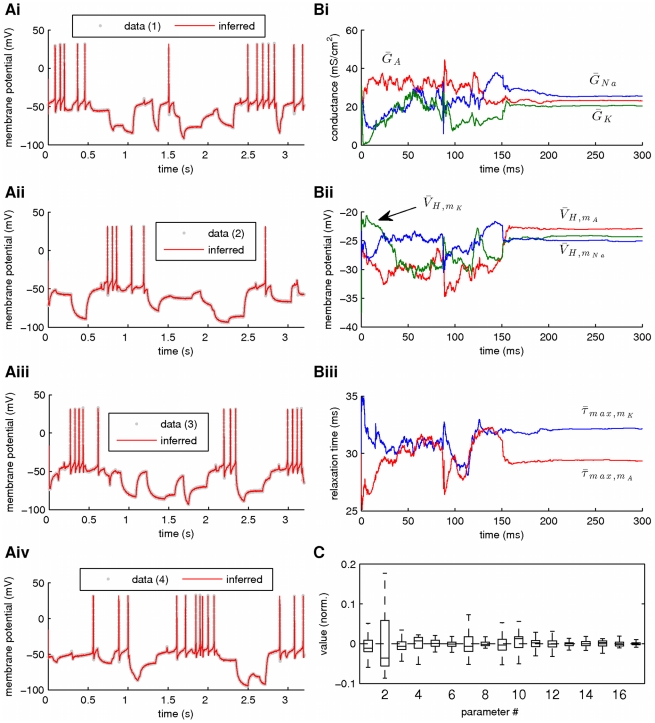

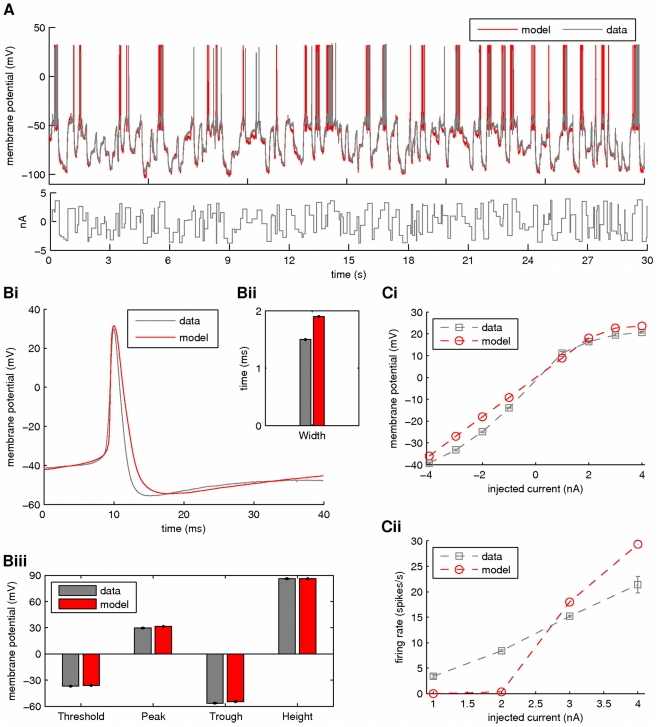

Figure 3. The effect of the size of the scaling factor .

and the number of particles

and the number of particles

on the variance of the estimates. Large minimal values of

on the variance of the estimates. Large minimal values of  and small values of

and small values of  imply large variance of the estimates. (A) Resampling of particles (see Methods) implies adaptation of (among others) the scaling factors

imply large variance of the estimates. (A) Resampling of particles (see Methods) implies adaptation of (among others) the scaling factors  , which gradually approach the lower bound of their prior interval (red lines in Ai,ii). A prior interval with zero lower bound (i.e.

, which gradually approach the lower bound of their prior interval (red lines in Ai,ii). A prior interval with zero lower bound (i.e.  ) leads to estimates with negligible variance (Ai). A prior interval with relatively large lower bound (e.g.

) leads to estimates with negligible variance (Ai). A prior interval with relatively large lower bound (e.g.  ) leads to estimates with non-zero variance (Aii). Notice that the expectation

) leads to estimates with non-zero variance (Aii). Notice that the expectation  in Ai does not actually take the value

in Ai does not actually take the value  (instead it becomes approximately equal to

(instead it becomes approximately equal to  ). (B) A small number of particles (Bi,

). (B) A small number of particles (Bi,  ) implies estimates with large variance (compare to Bii,

) implies estimates with large variance (compare to Bii,  ). Notice that the difference between Aii (

). Notice that the difference between Aii ( ) and Bii (

) and Bii ( ) is negligible, implying the presence of a ceiling effect, when the number of particles becomes very large. In these simulations,

) is negligible, implying the presence of a ceiling effect, when the number of particles becomes very large. In these simulations,  and

and  .

.

In Fig. 3Ai, we demonstrate the case where the scaling factors  were allowed to take values from the prior interval

were allowed to take values from the prior interval  . We observed that during the course of the simulation (which utilized

. We observed that during the course of the simulation (which utilized  -long simulated membrane potential recordings), the average value of the scaling factor,

-long simulated membrane potential recordings), the average value of the scaling factor,  , decreased gradually towards

, decreased gradually towards  and this was accompanied by a dramatic decrease in the variance of the inferred parameters

and this was accompanied by a dramatic decrease in the variance of the inferred parameters  and

and  , which eventually “collapsed” to a point in parameter space located very close to their true values. This situation was the same as the one illustrated in Fig. 2D. Notice that although

, which eventually “collapsed” to a point in parameter space located very close to their true values. This situation was the same as the one illustrated in Fig. 2D. Notice that although  decreased towards zero, it never actually took this value; it merely became very small (

decreased towards zero, it never actually took this value; it merely became very small ( ). When we used a prior interval for

). When we used a prior interval for  with non-zero lower bound (i.e.

with non-zero lower bound (i.e.  ]; see Fig. 3Aii), the final estimates had a larger variance, providing an approximation of the full posteriors of the “unknown” parameters

]; see Fig. 3Aii), the final estimates had a larger variance, providing an approximation of the full posteriors of the “unknown” parameters  and

and  . Thus, controlling the lower bound of the prior interval for the scaling factors

. Thus, controlling the lower bound of the prior interval for the scaling factors  provides a simple method for controlling the variance of the final estimates. Notice that the variance of the final estimates also depends on the number of particles (Fig. 3B). A smaller number of particles resulted in a larger variance of the estimates (compare Fig. 3Bi to Fig. 3Bii). However, when a large number of particles was already in use, further increasing their number did not significantly affect the variance of the estimates or the rate of convergence (compare Fig. 3Bii to Fig. 3Aii), indicating the presence of a ceiling effect.

provides a simple method for controlling the variance of the final estimates. Notice that the variance of the final estimates also depends on the number of particles (Fig. 3B). A smaller number of particles resulted in a larger variance of the estimates (compare Fig. 3Bi to Fig. 3Bii). However, when a large number of particles was already in use, further increasing their number did not significantly affect the variance of the estimates or the rate of convergence (compare Fig. 3Bii to Fig. 3Aii), indicating the presence of a ceiling effect.

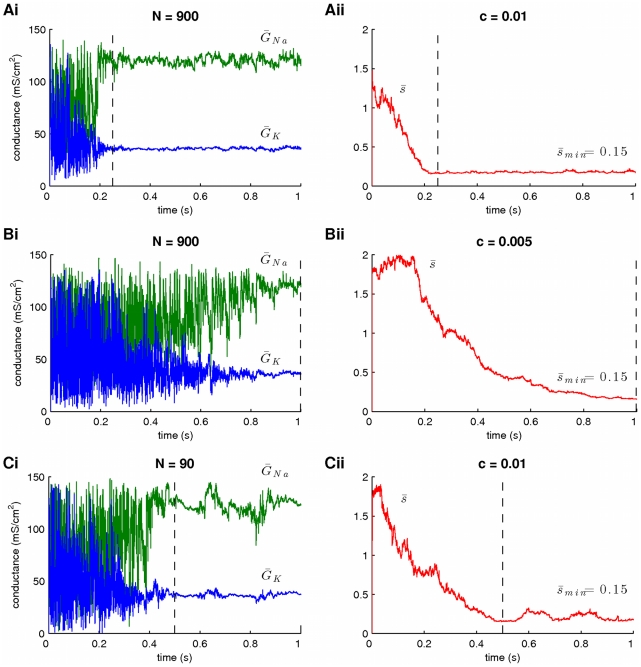

The adaptive sampling of the scaling factors  further depends on parameter

further depends on parameter  in Eq. 28, which determines the width of the lognormal distribution from which new samples are drawn. The value of this parameter provides a simple way to control the rate of convergence of the algorithm; larger values of

in Eq. 28, which determines the width of the lognormal distribution from which new samples are drawn. The value of this parameter provides a simple way to control the rate of convergence of the algorithm; larger values of  resulted in faster convergence, when processing

resulted in faster convergence, when processing  -long simulated recordings (compare Fig. 4A to Fig. 4B). The rate of convergence also depends on the number of particles in use (compare Fig. 4A to Fig. 4C), although it is more sensitive to changes in parameter

-long simulated recordings (compare Fig. 4A to Fig. 4B). The rate of convergence also depends on the number of particles in use (compare Fig. 4A to Fig. 4C), although it is more sensitive to changes in parameter  ; dividing the value of

; dividing the value of  by

by  (Fig. 4B) had a larger effect on the rate of convergence than dividing the number of particles by

(Fig. 4B) had a larger effect on the rate of convergence than dividing the number of particles by  (Fig. 4C).

(Fig. 4C).

Figure 4. The effect of adaptation of the scaling factor .

and the number of particles

and the number of particles

on the speed of convergence. A slow rate of adaptation for

on the speed of convergence. A slow rate of adaptation for  and a small number of particles

and a small number of particles  imply slow convergence of the algorithm. The rate at which

imply slow convergence of the algorithm. The rate at which  adapts depends on the parameter

adapts depends on the parameter  in Eq. 28. Reducing

in Eq. 28. Reducing  in half results in a significant decrease in the rate of convergence (compare A to B). Also, reducing the number of particles by a factor of

in half results in a significant decrease in the rate of convergence (compare A to B). Also, reducing the number of particles by a factor of  slows down the speed of convergence (compare A to C), but not as much as when parameter

slows down the speed of convergence (compare A to C), but not as much as when parameter  was adjusted. The plots on the right illustrate the profile of

was adjusted. The plots on the right illustrate the profile of  associated with the estimation of the parameters on the left plots. In these simulations,

associated with the estimation of the parameters on the left plots. In these simulations,  ,

,  and the prior interval for the scaling factors

and the prior interval for the scaling factors  was

was  .

.

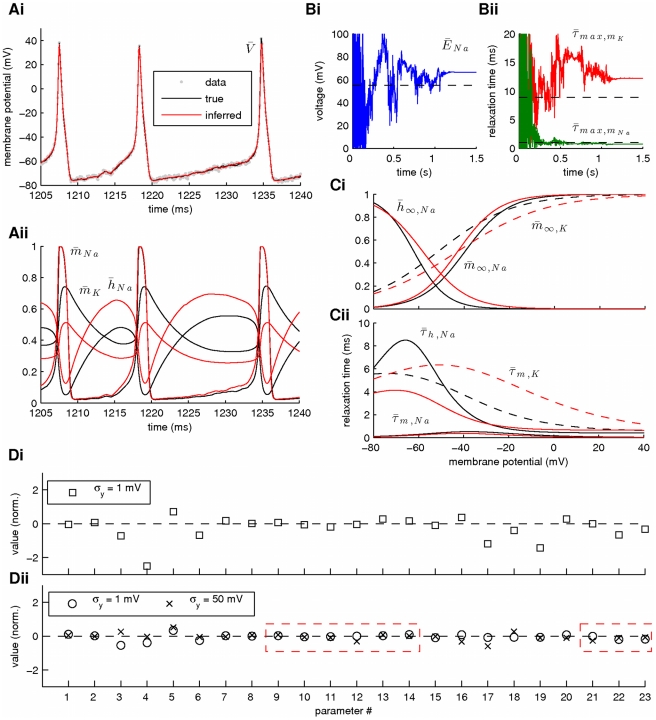

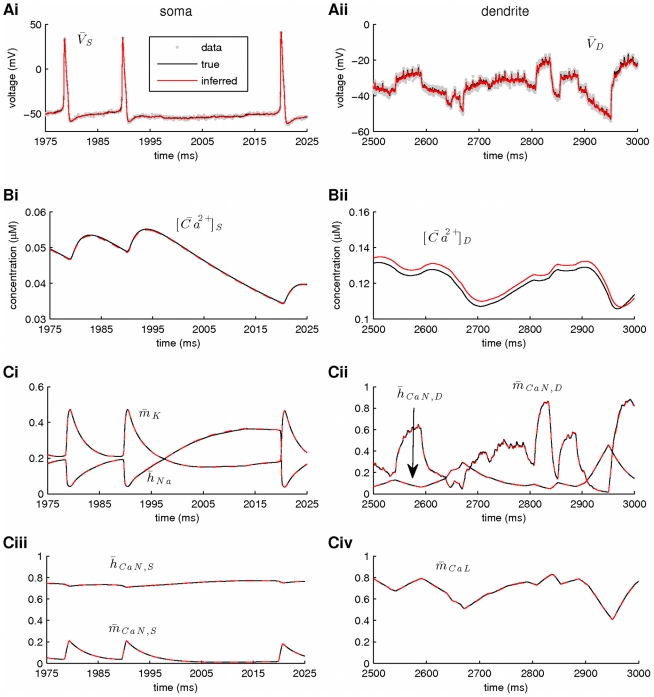

In summary, by assuming an adaptive sampling process for the unknown parameters in the model, we managed to achieve a significant reduction in the spread of the inferred posterior distributions of these parameters. Furthermore, adjusting the prior interval and adaptation rate  of the scaling factors