Abstract

Biological systems often consist of multiple interacting subsystems, the brain being a prominent example. To understand the functions of such systems it is important to analyze whether and how the subsystems interact and to describe the effect of these interactions. In this work we investigate the extent to which the cause-and-effect framework is applicable to such interacting subsystems. We base our work on a standard notion of causal effects and define a new concept called natural causal effect. This new concept takes into account that when studying interactions in biological systems, one is often not interested in the effect of perturbations that alter the dynamics. The interest is instead in how the causal connections participate in the generation of the observed natural dynamics. We identify the constraints on the structure of the causal connections that determine the existence of natural causal effects. In particular, we show that the influence of the causal connections on the natural dynamics of the system often cannot be analyzed in terms of the causal effect of one subsystem on another. Only when the causing subsystem is autonomous with respect to the rest can this interpretation be made. We note that subsystems in the brain are often bidirectionally connected, which means that their interactions should rarely be quantified in terms of cause-and-effect. We furthermore introduce a framework for how natural causal effects can be characterized when they exist. Our work also has important consequences for the interpretation of other approaches commonly applied to study causality in the brain. Specifically, we discuss how the notion of natural causal effects can be combined with Granger causality and Dynamic Causal Modeling (DCM). Our results are generic, and the concept of natural causal effects is relevant in all areas where the effects of interactions between subsystems are of interest.

Introduction

Biological systems often consist of multiple interacting subsystems. An important step in the analysis of such systems is to uncover how the subsystems are functionally related and to study the effects of functional interactions in the system. A prominent example of a biological system with interacting subsystems is the brain, which has interconnected ‘units’ at many different levels of description: e.g. neurons, microcircuits, and brain regions. It is currently believed that much of what we associate with brain function comes about through interactions between these different subsystems, and characterizing these interactions and their effects is one of the greatest challenges in neuroscience. Note that this is not only an experimental challenge but also a conceptual one. Indeed, even given access to all the relevant variables in the nervous system, it is far from obvious how to analyze the way brain activity relates to function.

In neurophysiology, the traditional approach to linking brain activity to function is to perturb the nervous system and observe which variables change as a function of the perturbation. For example, many cells in primary sensory cortices fire spikes at a rate that depends on a particular property of the stimulus (e.g. [1], [2]), and cells in the primary motor cortex tend to fire spikes in relation to sensory-conditioned movements (e.g. [3]). This approach is based on a conceptual cause-and-effect model: the perturbation (e.g. a sensory stimulus) is the cause and the response of the nervous system (e.g. an increase in firing rate) is the effect. There are two aspects of this experimental situation that allow a cause-and-effect interpretation: the perturbation is exogenous, or external, to the system; and the effect follows the cause only after some temporal delay. That the perturbation is exogenous makes it possible to disentangle spurious dependencies from those due to a mechanistic (causal) coupling. The temporal delay between cause and effect is in line with the intuitive notion that a cause must precede its effect in time, and with our current understanding of the underlying mechanisms (e.g. how light is transformed into membrane currents and propagated through the visual system of the brain). This cause-and-effect model has been successfully applied to studies of response properties of single cells and brain regions, as well as to the relation between isolated limb movements and corresponding neuronal activity.

Perhaps inspired by the success of cause-and-effect models in sensory and motor neurophysiology, workers have more recently started to look at interactions between different brain regions using the same framework. That is, researchers try to characterize the activity of one subsystem in terms of how it is caused by the activities of other subsystems. Indeed, a lot of theoretical and experimental work has been directed at investigating what has been called ‘direction of information flow’ (e.g. [4]), ‘causal relations’ (e.g. [5]), ‘causal influences’ (e.g. [6]), or ‘effective connectivity’ (e.g. [7], [8]), to mention just a few. A major difference with respect to the types of studies mentioned above is that the ‘cause’ is typically not a perturbation introduced by the experimenter. Rather, the joint natural activity of the subsystems is decomposed into causes and effects by statistical techniques. One crucial implication of this difference is that the direction of the ‘causal flow’ is not restricted a priori: contrary to the case of an externally applied stimulus, where the causality (if any) must ‘flow’ from the stimulus to the brain, in the case of two interacting brain systems it is quite possible that there is ‘causal flow’ in both directions. We will see that this bidirectionality has serious consequences for a cause-and-effect interpretation of interactions in the natural brain dynamics.

In this work we take a critical look at the cause-and-effect framework and demonstrate some fundamental shortcomings when this framework is used to study the natural dynamics of a system. We base our work on a standard model of causality that has emerged in the fields of statistics and artificial intelligence over the last decades (e.g. [9], [10]), synthesized in the framework of interventional causality proposed by Pearl [10]. In this framework external interventions (i.e. perturbations) of the system play a major role, both in defining the existence of causal connections between variables and in quantifying their effects. In our work we focus on situations where the interest is in characterizing the effects of natural interactions going on in a system. In such situations external interventions cannot be used to quantify causal effects, as they would typically disrupt the natural dynamics (cf. [11]). We will analyze the conditions under which the natural interactions between subsystems can be interpreted according to a cause-and-effect framework. That is, our work is not about determining whether a causal connection exists, but rather about how to interpret the effects of existing causal connections. We derive conditions for when the interactions between two subsystems can be interpreted in terms of one system causing the other. The main requirement is that the causing subsystem acts as an exogenous source of activity. In particular, we show that the effects of interactions between mutually connected subsystems typically cannot be interpreted in terms of the effects that the individual subsystems exert on the remaining ones. A conclusion of our work is therefore that the cause-and-effect framework is of limited use when characterizing the internal dynamics of the brain.
The analysis is general and our conclusions therefore have important consequences for the interpretation of previous studies using different measures of ‘causality’, including Granger causality and Dynamic Causal Modeling.

Results

In this section we first argue that it is important to distinguish between three different types of questions asked about causality, and we introduce some basic notions about causal graphs. We then, in a number of subsections, develop what is needed to reach the main goal of the paper: to state conditions for when the effects of natural interactions between variables can be given a cause-and-effect interpretation. To reach this goal, we first give a brief overview of the interventional framework of causality. We then introduce an important distinction between situations where the main interest is the effect of external interventions and situations where the main interest is the impact of the causal connections on the dynamics happening naturally in the system. Next, the conditions for when the natural interactions can be given a cause-and-effect interpretation are stated. Subsequently, we derive the consequences of these conditions for the special case of bivariate time series. We further suggest some novel approaches to the analysis of causal effects. Then we show how our work complements and extends two common approaches to ‘causality’ analysis: Granger causality and Dynamic Causal Modeling (DCM). Finally we apply the analysis of causal effects to a simple model system to illustrate some of the theoretical points made.

In this work we are concerned with sets of variables and their interactions. We assume that the state of the variables is uncertain and that we have access to the (possibly time-dependent) joint probability over the variables. This is to avoid issues related to estimation from data. Our results are generic, but it might be instructive to think of the variables as corresponding to the states of a set of neurons or other ‘units’ of the brain. We will further assume that the variables interact directly with each other, that is, that the variables are, or might be, causally connected. Note that experimental data sometimes reflect non-causal variables such as the blood-oxygen-level-dependent (BOLD) signal and local field potentials (LFPs), in which case some additional level of modeling might be needed in order to make inferences about causality (cf. [12]).

Three questions about causality

To put our work in proper context and to facilitate a comparison with existing approaches to causality it is helpful to separate questions about causality into the following three types:

Q1: Is there a direct causal connection from $X$ to $Y$? (existence)

Q2: How is the causal connection from $X$ to $Y$ implemented? (mechanism)

Q3: What is the causal effect of $X$ on $Y$? (quantification)

(here $X$ and $Y$ are two generic, and possibly high-dimensional, variables). We will show that it is important to keep these questions separate, and that different approaches are typically required to answer them. This might seem obvious, but in fact these three questions are often mixed into a ‘causality analysis’, and tools appropriate for the first two questions are often erroneously used to address the third as well.

The first question (Q1) addresses the existence of a direct causal connection between two variables. A causal connection is a directed binary relation that carries only qualitative information. If the distribution of  is invariant to perturbations in

is invariant to perturbations in  there is no causal connection from

there is no causal connection from  to

to  . The total set of causal connections in a system is referred to as the causal structure. For a system containing a set

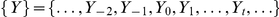

. The total set of causal connections in a system is referred to as the causal structure. For a system containing a set  of variables

of variables  ,

,  , the causal structure can very conveniently be represented as a causal graph in which the nodes correspond to the variables and directed edges point from

, the causal structure can very conveniently be represented as a causal graph in which the nodes correspond to the variables and directed edges point from  to

to  if there is a direct causal connection from

if there is a direct causal connection from  to

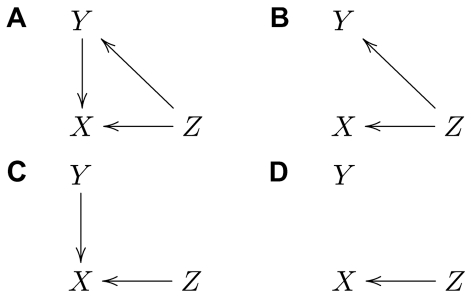

to  . For example, Figure 1A, shows a causal graph where there are direct causal connections from

. For example, Figure 1A, shows a causal graph where there are direct causal connections from  to

to  , from

, from  to

to  , and from

, and from  to

to  . If the causal graph is without cycles (i.e. forming a directed acyclic graph, DAG) then the joint probability over the variables can be factorized according to the causal structure e.g. [10]. This is an important and useful result that we will use below and that is used extensively in the interventional framework of causality (see below). In fact this factorization following the causal structure is fundamental to relate the interventions to the joint probability (see Supporting Information S1). The causal graph contains all information needed to answer Q1. Unfortunately inferring the causal graph from observed data (here, the joint distribution) is in general not possible. A given joint distribution might be compatible with different causal structures [10], in which cases these graphs are said to be observationally equivalent

[13]. Furthermore, the difficulty to infer the causal graph increases if there are hidden variables, i.e. variables that are not observed. The traditional approach to Q1 is therefore to experimentally modify (perturb) one variable and study the impact on the remaining ones. For example, the graphs in Figure 1A and 1B are both consistent with a statistical dependence between

. If the causal graph is without cycles (i.e. forming a directed acyclic graph, DAG) then the joint probability over the variables can be factorized according to the causal structure e.g. [10]. This is an important and useful result that we will use below and that is used extensively in the interventional framework of causality (see below). In fact this factorization following the causal structure is fundamental to relate the interventions to the joint probability (see Supporting Information S1). The causal graph contains all information needed to answer Q1. Unfortunately inferring the causal graph from observed data (here, the joint distribution) is in general not possible. A given joint distribution might be compatible with different causal structures [10], in which cases these graphs are said to be observationally equivalent

[13]. Furthermore, the difficulty to infer the causal graph increases if there are hidden variables, i.e. variables that are not observed. The traditional approach to Q1 is therefore to experimentally modify (perturb) one variable and study the impact on the remaining ones. For example, the graphs in Figure 1A and 1B are both consistent with a statistical dependence between  and

and  . If

. If  is not observed, it is not possible to distinguish between ‘

is not observed, it is not possible to distinguish between ‘ is causing

is causing  ’ and ‘

’ and ‘ is not causing

is not causing  ’ without intervening (i.e. perturbing) the system (see below). On the other hand, if

’ without intervening (i.e. perturbing) the system (see below). On the other hand, if  is observed we see that for the graph in Figure 1B,

is observed we see that for the graph in Figure 1B,  and

and  are conditionally independent given

are conditionally independent given  , while for that in Figure 1A conditioning on

, while for that in Figure 1A conditioning on  does not render

does not render  and

and  independent. In these types of causal structures

independent. In these types of causal structures  is considered a confounder because, being a common driver, it produces a statistical dependence between

is considered a confounder because, being a common driver, it produces a statistical dependence between  and

and  even without the existence of any direct causal connection between them.

even without the existence of any direct causal connection between them.
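To make the conditional-independence argument concrete, the following Python sketch simulates linear-Gaussian versions of the graphs in Figure 1A and 1B. The coefficients (0.8 and 0.5) and the helper names `pearson`, `partial_corr` and `sample` are illustrative assumptions, not part of the original analysis. Partial correlation is used here as a stand-in for a conditional-independence test; it is only appropriate under the assumed linear-Gaussian model.

```python
import math
import random

def pearson(a, b):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)

def partial_corr(x, y, z):
    """Correlation of x and y after linearly controlling for z."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))

def sample(n, x_to_y, seed=0):
    """Hypothetical linear-Gaussian model: Figure 1A if x_to_y != 0, Figure 1B if 0."""
    rng = random.Random(seed)
    xs, ys, zs = [], [], []
    for _ in range(n):
        z = rng.gauss(0, 1)                          # confounder Z
        x = 0.8 * z + rng.gauss(0, 1)                # Z -> X
        y = 0.8 * z + x_to_y * x + rng.gauss(0, 1)   # Z -> Y (and X -> Y in 1A)
        xs.append(x)
        ys.append(y)
        zs.append(z)
    return xs, ys, zs

for label, w in [("Fig. 1A", 0.5), ("Fig. 1B", 0.0)]:
    x, y, z = sample(20000, w)
    print(label, "corr(X,Y) =", round(pearson(x, y), 2),
          "partial corr(X,Y | Z) =", round(partial_corr(x, y, z), 2))
```

In the Figure 1B model the raw correlation between $X$ and $Y$ is clearly nonzero while the partial correlation given $Z$ is close to zero; in the Figure 1A model the partial correlation stays clearly nonzero.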

Figure 1. Causal graphs illustrating the effect of interventions.

A: Graph showing a case where the statistical dependence between $X$ and $Y$ is (partly) due to a causal interaction from $X$ to $Y$. B: Graph showing a case where the statistical dependence between $X$ and $Y$ is induced solely by the confounding variable $Z$. C: Graph corresponding to the intervention $\mathrm{do}(X = x)$ in the causal graph shown in A. D: Graph corresponding to the intervention $\mathrm{do}(X = x)$ in the causal graph shown in B.

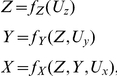

Consider now the second question (Q2) about the mechanisms implementing the causal connections. In the ideal case the answer to this question would be given in terms of a biophysically realistic model of the system under study. However, often one has to make do with a phenomenological model that captures enough features of both structure and dynamics to give an adequate description of the system. That is, a model that is at some level functionally equivalent to the real physical system. Such a functional model would contain a formal description of how the variables in the system are generated, and how each variable depends on the other ones. For the causal graph of Figure 1A, the following formal equations define a functional model:

$$
\begin{aligned}
Z &= f_Z(\varepsilon_Z),\\
X &= f_X(Z, \varepsilon_X),\\
Y &= f_Y(X, Z, \varepsilon_Y),
\end{aligned}
$$

where the $\varepsilon$-terms stand for random (non-observable) disturbances. We note that deriving a functional model from a detailed biophysical model, without modifying the impact of the causal interactions, might not be trivial. Nor is it trivial to go from experimental observations to a functional model that allows causal inference about the real physical system.
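A functional model can be read as a program: each equation is a mechanism that is evaluated once its parents are available. The sketch below instantiates the three equations for Figure 1A with hypothetical choices of $f_Z$, $f_X$ and $f_Y$ (a threshold mechanism for $X$, a linear one for $Y$) and Gaussian disturbances; these choices are assumptions made only for illustration.

```python
import random

# Hypothetical mechanisms for the functional model of Figure 1A.
def f_Z(eps_z):
    return eps_z                          # Z is driven only by its disturbance

def f_X(z, eps_x):
    return 1 if z + eps_x > 0 else 0      # assumed threshold mechanism: Z -> X

def f_Y(x, z, eps_y):
    return 0.7 * x + 0.5 * z + eps_y      # assumed linear mechanism: X -> Y, Z -> Y

def sample_functional_model(rng):
    """Draw one joint sample by evaluating the equations in causal order."""
    eps_z, eps_x, eps_y = rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 0.5)
    z = f_Z(eps_z)
    x = f_X(z, eps_x)
    y = f_Y(x, z, eps_y)
    return {"Z": z, "X": x, "Y": y}

rng = random.Random(1)
samples = [sample_functional_model(rng) for _ in range(5)]
for s in samples:
    print(s)
```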

Since the model built to answer Q2 must already contain all the information needed to draw the corresponding causal graph, answering Q2 also implies answering Q1. In particular, any variable inside the function $f_{V_i}$ is considered a parent of $V_i$ (the set of all parents is denoted $\mathrm{pa}(V_i)$) and an arrow from it to $V_i$ is included in the graph. Since the functional model is supposed to reflect the underlying mechanisms generating the variables, the parents $\mathrm{pa}(V_i)$ are the minimal set of variables with a direct causal connection to $V_i$. In such modeling approaches to causality it is important to emphasize that all notions of causality refer to the model and not to the system that is being modeled. In other words, only if the model is a faithful description of the process generating the variables can it be used as a model of real causal interactions.
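The rule that every variable appearing inside $f_{V_i}$ is a parent of $V_i$ can be sketched in a few lines of Python. Here the functional model of Figure 1A is summarized by a hypothetical dictionary mapping each variable to the observed arguments of its mechanism (noise terms omitted, since they are not graph nodes); the edges of the causal graph and a causal ordering follow directly. The standard-library `graphlib.TopologicalSorter` (Python 3.9+) is used to check that the resulting graph is acyclic.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical summary of the functional model of Figure 1A:
# variable -> observed arguments of its mechanism f_V.
functional_model = {
    "Z": [],
    "X": ["Z"],
    "Y": ["X", "Z"],
}

def parents(model, v):
    """pa(V): every observed variable appearing inside f_V."""
    return set(model[v])

def causal_edges(model):
    """One directed edge (parent, child) per argument of each mechanism."""
    return sorted((p, v) for v, ps in model.items() for p in ps)

def causal_order(model):
    """A topological order of the causal graph (parents before children)."""
    graph = {v: set(ps) for v, ps in model.items()}
    return list(TopologicalSorter(graph).static_order())

def is_dag(model):
    """True if the graph implied by the model has no directed cycles."""
    try:
        causal_order(model)
        return True
    except CycleError:
        return False

print(causal_edges(functional_model))  # [('X', 'Y'), ('Z', 'X'), ('Z', 'Y')]
print(causal_order(functional_model))
```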

In biology in general and in neuroscience in particular, the variables of interest are often functions of time. In such cases the functional model must be formulated in terms of dynamic equations (typically as difference or differential equations) and the variables represented in the causal graph will correspond to particular discrete times. In fact, for differential equations (i.e. representing a time-continuous dynamics), the causal graph corresponds only to a discrete representation of the equations (see [14] for a discussion of the correspondence between discrete and continuous models).
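As an illustration of how a dynamic model maps onto a causal graph over time-indexed variables, the sketch below unrolls a hypothetical first-order difference-equation model in which $X_t$ depends on $X_{t-1}$ and $Y_{t-1}$, and $Y_t$ depends on $Y_{t-1}$ and $X_{t-1}$. The function name `unroll` and the model itself are assumptions for illustration. Although $X$ and $Y$ influence each other in both directions, every edge points forward in time, so the unrolled graph is acyclic.

```python
def unroll(lag_parents, T):
    """Time-indexed causal graph of a first-order difference-equation model.

    lag_parents maps each variable to the variables at time t-1 that enter
    its update equation (a hypothetical model). Nodes are (name, t) pairs.
    """
    edges = []
    for t in range(1, T):
        for child, pars in lag_parents.items():
            for p in pars:
                edges.append(((p, t - 1), (child, t)))
    return edges

# Hypothetical bidirectionally coupled pair:
# X_t = f(X_{t-1}, Y_{t-1}, e_X),  Y_t = g(Y_{t-1}, X_{t-1}, e_Y)
model = {"X": ["X", "Y"], "Y": ["Y", "X"]}
for edge in unroll(model, 3):
    print(edge)
```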

Since the answer to Q2 contains a model consistent with the mechanisms implementing the causal connections, one may think that this would also be enough to answer Q3, i.e. to determine the causal effect of $X$ on $Y$. However, answering Q3 is not so straightforward. It is clear that the causal graph (Q1) does not contain information about the impact of the causal connections but only about their existence. Similarly, in a set of dynamic equations the resulting dynamics are only implicitly represented. This means that the effects of the causal connections modeled by the equations typically cannot be read off directly from the equations. Rather, the causal effect has to be quantified by analyzing the dynamics, either using observed data or, given the ‘correct’ model that generated the data, using simulated data from the model or analytical techniques. However, even if the required data are available, one further needs to define clearly what is meant by the causal effect that results from the causal connections. Therefore, question Q3 has to be addressed separately and is typically not reducible to answering Q2 or Q1.
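To illustrate why effects have to be obtained from the dynamics rather than read off the equations, consider a hypothetical linear bivariate model $\mathbf{v}_t = A\mathbf{v}_{t-1} + \boldsymbol{\varepsilon}_t$ with noise covariance $Q$ (an assumption; the text does not commit to any particular model). Its stationary covariance $\Sigma$ solves the discrete Lyapunov equation $\Sigma = A\Sigma A^\top + Q$, so even the variance of a single variable depends jointly on all coupling coefficients. The sketch below solves this equation by fixed-point iteration, which converges when the spectral radius of $A$ is below one; the coefficient values are invented for illustration.

```python
def matmul(A, B):
    """Product of two 2x2 matrices given as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

def transpose(A):
    return [[A[j][i] for j in range(2)] for i in range(2)]

def stationary_cov(A, Q, iters=2000):
    """Iterate Sigma <- A Sigma A^T + Q (assumes spectral radius of A < 1)."""
    S = [row[:] for row in Q]
    for _ in range(iters):
        ASA = matmul(matmul(A, S), transpose(A))
        S = [[ASA[i][j] + Q[i][j] for j in range(2)] for i in range(2)]
    return S

# Hypothetical coefficients; rows give the update equations of X and Y.
A_coupled = [[0.5, 0.2], [0.3, 0.5]]    # X <- Y and Y <- X
A_uncoupled = [[0.5, 0.0], [0.0, 0.5]]  # no cross-coupling
Q = [[1.0, 0.0], [0.0, 1.0]]            # independent unit-variance noise

for name, A in [("coupled", A_coupled), ("uncoupled", A_uncoupled)]:
    S = stationary_cov(A, Q)
    print(name, "var(X) =", round(S[0][0], 3), "var(Y) =", round(S[1][1], 3))
```

With the coupled matrix the variance of $X$ exceeds the uncoupled value $4/3$ because of the input $X$ receives from $Y$, an effect that only becomes visible after solving for the stationary dynamics.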

In the rest of this work we will focus on this last question, related to the analysis and quantification of causal effects (Q3). Following the distinction between the three questions about causality discussed above, we will be very precise with our terminology. First, when talking about causal connections we will refer only to the causal structure in the graph. That is, a causal connection from variable $X$ to variable $Y$ exists if and only if it is possible to go from $X$ to $Y$ following a path composed of arrows whose direction is respected. In particular, a direct causal connection from $X$ to $Y$ is equivalent to the existence of an arrow from $X$ to $Y$. For two sets of variables, the causal connection between the sets exists if it exists between at least one pair of variables. Second, when talking about the causal effect from $X$ to $Y$ we will refer to the impact or influence of the causal connections from $X$ to $Y$. This impact depends on the causal structure and on the actual mechanisms that implement it, and is, as we will see, only appreciable in the dynamics.
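The path-based definition of a causal connection can be checked mechanically. The following sketch (all function names are hypothetical) tests for a directed path by breadth-first search, distinguishes direct from indirect connections, and applies the ‘at least one pair’ rule for sets, using the graphs of Figure 1A and 1B written as edge lists.

```python
from collections import deque

def has_causal_connection(edges, source, target):
    """True iff a directed path source -> ... -> target exists in the graph."""
    children = {}
    for a, b in edges:
        children.setdefault(a, []).append(b)
    seen, queue = {source}, deque([source])
    while queue:
        node = queue.popleft()
        for nxt in children.get(node, []):
            if nxt == target:
                return True
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False

def has_direct_connection(edges, source, target):
    """A direct causal connection is a single arrow source -> target."""
    return (source, target) in set(edges)

def has_set_connection(edges, sources, targets):
    """Sets are causally connected if at least one pair of members is."""
    return any(has_causal_connection(edges, s, t) for s in sources for t in targets)

fig1a = [("Z", "X"), ("Z", "Y"), ("X", "Y")]
fig1b = [("Z", "X"), ("Z", "Y")]
print(has_causal_connection(fig1a, "X", "Y"), has_causal_connection(fig1b, "X", "Y"))
```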

Causal effects in the framework of Pearl's interventions

In this section we will define causal effects using the framework of interventional causality developed by Judea Pearl and coworkers (e.g. [10]). We note that this framework is closely related to the potential outcomes approach to causality developed by Donald Rubin and coworkers (e.g. [9]) and that the causal effect used in that approach is fully compatible with the corresponding entity in the interventional framework. The definition of causal effects stated below can therefore be considered a ‘standard’ definition.

The starting point of the interventional framework is the realization that a causal connection between two variables can typically only be identified by intervening, that is, by actively perturbing one variable and studying the effect on the other. This is of course what experimentalists typically would do to study cause-and-effect relations. As a motivating example, consider paired recordings of membrane potentials of two, possibly interconnected, excitatory neurons ($X$ and $Y$, say). If we observe that the membrane potential of neuron $Y$ tends to depolarize briefly after neuron $X$ fired an action potential, we might be tempted to conclude that $X$ has a causal influence over $Y$. However, the same phenomenon could easily be accounted for by $X$ and $Y$ receiving common (or at least highly correlated) inputs. The obvious thing to do in order to distinguish these two scenarios is to intervene, i.e. to force $X$ to emit an action potential (e.g. by injecting current into the cell). If depolarizations of the membrane potential of $Y$ are consistently found after such interventions, we are clearly much more entitled to conclude that $X$ has a causal influence over $Y$. Note that the crucial aspect of the intervention is that it ‘forces’ one variable to take a particular value (e.g. fire an action potential) independently of the values of other variables in the system. It therefore ‘breaks’ interdependencies that are otherwise part of the system and hence can be used to distinguish between causal and spurious associations. The recent study by Ko et al. [15] is an excellent example of how interventions can be used to determine the causal structure between single neurons, and how this structure can account for observed statistical dependencies.

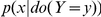

One of the contributions of the interventional framework of causality is that it formalizes the notion of an intervention and develops rules for how interventions can be incorporated into probability theory [10]. Symbolically, an intervention is represented by the ‘$\mathrm{do}$’ operator. For example, setting one variable, $X$, in the system to a particular value, $x$, is denoted $\mathrm{do}(X = x)$. Such interventions to a fixed value are commonly used in the framework of Pearl's causality. Experimental interventions can rarely be exactly controlled, but the important point is not that a variable can be fixed to a given value but that the mechanisms generating this variable are perturbed. We will see below that the variability in the intervention can be captured by introducing a probability distribution of interventions. The effect of an intervention on the joint distribution is most easily seen when the joint distribution is factorized according to a causal graph. In this case the intervention $\mathrm{do}(X = x)$ corresponds to deleting the term corresponding to $p(x \mid \mathrm{pa}(X))$ in the factorization and setting $X = x$ in all other terms depending on $X$. This truncated factorization of the joint distribution is referred to as the postinterventional distribution, that is, the distribution resulting from an intervention. In Supporting Information S1 we give a formal definition of the effect of an intervention and some examples. Graphically, the effect of an intervention is particularly illuminating: intervening in one variable corresponds to the removal of all the arrows pointing to that variable in the causal graph. This represents the crucial aspect of interventions mentioned above: intervention ‘disconnects’ the intervened variable from the rest of the system.

Figure 1A and B illustrate two different scenarios for how a statistical dependence between $X$ and $Y$ could come about. If only $X$ and $Y$ are observed, these scenarios are indistinguishable without intervening. The causal graphs corresponding to the intervention $\mathrm{do}(X = x)$ are shown in Figure 1C and D. It is clear that only the graph shown in Figure 1C implies a statistical relation between $X$ and $Y$, illustrating how the intervention helps to distinguish between causal and spurious (non-causal) associations. Indeed, interventions can generally be used to infer the existence of causal connections. Conditions and measures to infer causal connectivity from interventions have been studied, for example, in [16], [17].

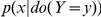

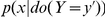

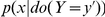

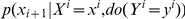

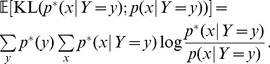

Given this calculus of interventions, the causal effect of the intervention of a variable $X$ on a variable $Y$ is defined as the postinterventional probability distribution $p(y \mid \hat{x})$ (see Definition 3.2.1 in [10] and Supporting Information S1). This definition understands the causal effect as a function from the space of $X$ to the space of probability distributions of $Y$. In particular, for each intervention $\hat{x}$, $p(y \mid \hat{x})$ denotes the probability distribution of $Y$ given this intervention. In Supporting Information S1 we show how $p(y \mid \hat{x})$ can be computed from a given factorization of the joint distribution of $X$ and $Y$. Note that this definition of causal effect is also valid if $X$ and $Y$ are multivariate.
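The truncated factorization and the causal effect $p(y \mid \hat{x})$ can be made concrete with a small discrete model. The Python sketch below is only an illustration under stated assumptions: all variables are binary, the probabilities are invented, the helper names (`prob`, `intervene`) are hypothetical, and we assume that Figure 1A shows a direct connection $X \rightarrow Y$ while Figure 1B shows a common driver $Z$ of $X$ and $Y$. An intervention is implemented by replacing the factor of the intervened variable with a point mass, which is the same as deleting every arrow pointing into it.

```python
from itertools import product

# A discrete causal model maps each binary variable to (parents, p1), where
# p1[parent_values] = p(variable = 1 | parents = parent_values).

def factor(model, var, value, assignment):
    """One factor p(var = value | pa(var)) of the causal factorization."""
    parents, p1 = model[var]
    p = p1[tuple(assignment[q] for q in parents)]
    return p if value == 1 else 1.0 - p

def prob(model, query, evidence=None):
    """p(query | evidence), by brute-force enumeration of all assignments."""
    evidence = evidence or {}
    names = list(model)
    num = den = 0.0
    for values in product((0, 1), repeat=len(names)):
        a = dict(zip(names, values))
        if any(a[k] != v for k, v in evidence.items()):
            continue
        joint = 1.0
        for var in names:
            joint *= factor(model, var, a[var], a)
        den += joint
        if all(a[k] == v for k, v in query.items()):
            num += joint
    return num / den

def intervene(model, var, value):
    """Truncated factorization: the intervened variable loses its parents
    (its incoming arrows) and becomes a point mass at `value`."""
    new_model = dict(model)
    new_model[var] = ((), {(): float(value)})
    return new_model

# Invented numbers; assumed structures for Figure 1A and Figure 1B.
direct = {
    "X": ((), {(): 0.4}),
    "Y": (("X",), {(0,): 0.3, (1,): 0.8}),
}
common_driver = {
    "Z": ((), {(): 0.5}),
    "X": (("Z",), {(0,): 0.2, (1,): 0.7}),
    "Y": (("Z",), {(0,): 0.1, (1,): 0.8}),
}

for name, model in [("X -> Y", direct), ("X <- Z -> Y", common_driver)]:
    conditional = [prob(model, {"Y": 1}, {"X": x}) for x in (0, 1)]
    causal = [prob(intervene(model, "X", x), {"Y": 1}) for x in (0, 1)]
    print(name, "p(Y=1|x):", conditional, "p(Y=1|x-hat):", causal)
```

In the direct model the conditional and interventional distributions coincide, whereas with a common driver $p(Y{=}1 \mid \hat{x})$ does not depend on $x$ even though $p(Y{=}1 \mid x)$ does.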

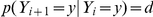

This definition of causal effects is very general, and in practice it is often desirable to condense this family of probability distributions (i.e. one distribution per intervention) into something lower-dimensional. Often the field of study will suggest a suitable measure of the causal effect. Consider the example of the two neurons introduced above, and let $\hat{x}$ denote the intervention corresponding to making neuron $X$ emit an action potential. To avoid introducing new notation, we let $X$ and $Y$ stand both for the identity of the neurons and for their membrane potentials. Then a reasonable measure of the causal effect of $X$ on $Y$ could be

$$E[Y \mid \hat{x}] - E[Y]. \tag{1}$$

That is, the difference between the expected values of the postinterventional distribution $p(y \mid \hat{x})$ and the marginal distribution $p(y)$ (cf. [18]). In other words, the causal effect would be quantified as the mean depolarization induced in $Y$ by an action potential in $X$. Clearly this measure does not capture all possible causal effects; for example, the variability of the membrane potential could certainly be affected by the intervention.
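A minimal numerical sketch of the measure in Eq. 1, under an assumed toy model that is not part of the original text: neuron $X$ fires with probability $q$, and each spike adds a fixed depolarization $w$ (in mV) to the membrane potential of $Y$, plus Gaussian noise. Under this assumption $E[Y \mid \hat{x}] = V_{\text{rest}} + w$ and $E[Y] = V_{\text{rest}} + wq$, so Eq. 1 equals $w(1 - q)$, which the simulation should approximately recover. All parameter names and values are hypothetical.

```python
import numpy as np

# Hypothetical toy model (not from the text): X = 1 if neuron X spikes,
# Y = V_REST + W * X + noise is the membrane potential of neuron Y (mV).
Q, V_REST, W, SIGMA = 0.1, -65.0, 2.0, 1.0
rng = np.random.default_rng(seed=0)

def sample_y(n, do_spike=False):
    """Sample Y from the natural system, or under the intervention that
    forces neuron X to spike (do_spike=True)."""
    if do_spike:
        x = np.ones(n)
    else:
        x = (rng.random(n) < Q).astype(float)
    return V_REST + W * x + SIGMA * rng.standard_normal(n)

n = 200_000
effect = sample_y(n, do_spike=True).mean() - sample_y(n).mean()  # Eq. 1
print(f"estimated {effect:.3f} mV, expected {W * (1 - Q):.3f} mV")
```

Note that the marginal term $E[Y]$ is computed from the unperturbed system, so the measure depends on how often $X$ fires naturally, not only on the synaptic weight $w$.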

Intervening on one variable is similar to conditioning on it; this is reflected both in the notation and in the effect of an intervention on the joint distribution. However, there is a very important difference: an intervention actually changes the causal structure, whereas conditioning does not. As mentioned above, it is this aspect of the intervention that makes it a key tool in causal analysis. Formally, this difference is expressed by the fact that $p(y \mid \hat{x})$ in general differs from $p(y \mid x)$. Consider for example the case when $X$ is causing $Y$ but not the other way around, i.e. $X \rightarrow Y$. Then $p(x \mid \hat{y}) = p(x)$, whereas, in general, $p(x \mid y) \neq p(x)$.
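The asymmetry between $p(x \mid \hat{y})$ and $p(x \mid y)$ can be checked numerically. The sketch below uses made-up numbers and stores $p(x)$ and $p(y \mid x)$ for a model $X \rightarrow Y$ as NumPy arrays. Intervening on $Y$ deletes the arrow $X \rightarrow Y$, so the factor $p(y \mid x)$ is replaced by a point mass while $p(x)$ is left untouched.

```python
import numpy as np

p_x = np.array([0.6, 0.4])              # p(x), hypothetical values
p_y_given_x = np.array([[0.7, 0.3],     # row x, column y: p(y | x)
                        [0.2, 0.8]])

# Observational joint p(x, y) = p(x) p(y | x).
joint = p_x[:, None] * p_y_given_x

# Conditioning on Y = 1: p(x | y = 1) = p(x, y = 1) / p(y = 1).
p_x_given_y1 = joint[:, 1] / joint[:, 1].sum()

# Intervening to set Y = 1: truncated factorization p(x) * [y == 1].
point_mass = np.array([0.0, 1.0])
post_joint = p_x[:, None] * point_mass[None, :]
p_x_given_do_y1 = post_joint[:, 1] / post_joint[:, 1].sum()

print("p(x | y=1)     =", p_x_given_y1)     # differs from p(x)
print("p(x | y-hat=1) =", p_x_given_do_y1)  # equals p(x)
```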

A very important and useful aspect of this definition of causal effect is that, if all the variables in the system are observed, the causal effect can be computed from the joint distribution over the variables in the observed, non-intervened system. That is, even if the causal effect is formulated in terms of interventions, we might not need to actually intervene in order to compute it. See Supporting Information S1 for details of this procedure and S2 for the calculation of causal effects in the graphs of Figure 1. On the other hand, if there are hidden (non-observed) variables, physical intervention is typically required to estimate the causal effect.

Requirements for a definition of causal effect between neural systems

The definition of causal effects stated above is most useful when studying the effect of one or a few singular events in a system, that is, events isolated in time that can be thought of in terms of interventions. However, in neuroscience the interest is often in functional relations between different subsystems over an extended period of time (say, during one trial of some task). Furthermore, the main interest is not in the effect of perturbations, but in the interactions that are part of the brain's natural dynamics. Consider for example the operant conditioning experiment, a very common paradigm in systems neuroscience. Here a subject is conditioned to express a particular behavior contingent upon the sensory stimuli received. Assume we record the simultaneous activity of many different functional ‘units’. Then a satisfactory notion of the causal effect of one unit on another should quantify how much of the task-related activity in one unit can be accounted for by the impact of the causal connections from the other, and not the extent to which it would be changed by an externally imposed intervention. Of course, there are other cases where the effect of an intervention is the main interest, such as studies of deep brain stimulation (e.g. [19]). In these cases the interventional framework is readily applicable, and we will consequently not consider them further. We will instead focus on the analysis of natural brain dynamics, which is also where DCM and Granger causality have typically been applied.

These considerations indicate some requirements for a definition of causal effects in the context of natural brain dynamics. First, causal effects should be assessed in relation to the dynamics of the neuronal activity. From a modeling point of view, this implies that the causal effect typically cannot be identified with parameters of the model. Second, the causal effects should characterize the natural dynamics, and not the dynamics that would result from an external intervention. This is because we want to learn the impact of the causal connections on the unaltered brain activity.

We will refer to causal effects that fulfill these requirements as natural causal effects between dynamics. We will now see that it is possible to derive a definition of natural causal effects between dynamics from the interventional definition of causal effects. We start by examining when natural causal effects between variables exist and in the following section we consider natural causal effects between dynamics.

Natural causal effects

As explained above, a standard way to define causal effects is in terms of interventions. Yet, many of the most pertinent questions in neuroscience cannot be formulated in terms of interventions in a straightforward way. Indeed, researchers are often interested in ‘the influence one neural system exerts over another’ in the unperturbed (natural) state [8]. In this section we will state the conditions under which the impact of causal connections from one subsystem to another (as quantified by the conditional probability distribution) can be given such a cause-and-effect interpretation.

We first consider the causal effect of one isolated intervention. For a given value  of the random variable

of the random variable  , we define the natural causal effect of

, we define the natural causal effect of  on the random variable

on the random variable

to be

to be  if and only if

if and only if

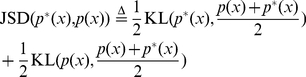

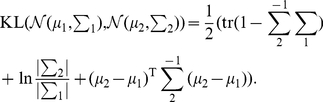

| (2) |

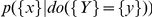

In words, if and only if conditioning on  is identical to intervening to

is identical to intervening to  , the influence of

, the influence of  on

on  is a causal effect that we call a natural causal effect. Since the observed conditional distribution is equal to the postinterventional distribution given

is a causal effect that we call a natural causal effect. Since the observed conditional distribution is equal to the postinterventional distribution given  , we interpret this as the intervention naturally occurring in the system. Note that this definition implies that if Eq. 2 does not hold, then the natural causal effect of

, we interpret this as the intervention naturally occurring in the system. Note that this definition implies that if Eq. 2 does not hold, then the natural causal effect of  on

on  does not exist.

does not exist.

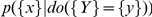

Next we formulate the natural causal effect between two (sets of) random variables. The natural causal effect of $X$ on $Y$ is given by

$$p(y \mid \hat{x})\, p(x) \tag{3}$$

if and only if Eq. 2 holds for each value of $x$. Note that Eq. 3 is a factorization of the joint distribution of $X$ and $Y$. Indeed, we have

$$p(y \mid \hat{x})\, p(x) = p(y \mid x)\, p(x) = p(x, y). \tag{4}$$

This means that if Eq. 2 holds for each value of $x$, then the natural causal effect of $X$ on $Y$ is given by the joint distribution of $X$ and $Y$. At first glance it might seem strange that the factor $p(x)$ appears in the definition of the natural causal effect (Eq. 3). After all, the effects of the causal interactions are ‘felt’ only by $Y$, and we think of $X$ as being the cause. However, the conditional distributions $p(y \mid \hat{x})$ will in general depend on $x$, which means that to account for the causal effects of $X$ on $Y$ we need to consider all the different values of $x$ according to the distribution with which they are observed. In other words, we must consider how often the different single natural interventions $\hat{x}$ happen; that is, we need to include $p(x)$ in the definition.
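Eqs. 2–4 can be verified on a small unconfounded model. The sketch below assumes, for illustration only, a structure $X \rightarrow M \rightarrow Y$ plus $X \rightarrow Y$ (a partly indirect effect, in the spirit of Figure 2A) with randomly drawn binary conditional tables; the helper `random_cpt` is hypothetical. It computes $p(y \mid \hat{x})$ with the truncated factorization, checks Eq. 2 for every $x$, and confirms that Eq. 3 reproduces the observed joint distribution as in Eq. 4.

```python
import numpy as np

rng = np.random.default_rng(1)

def random_cpt(*shape):
    """Random conditional table; the last axis is normalized to sum to one."""
    t = rng.random(shape)
    return t / t.sum(axis=-1, keepdims=True)

# Hypothetical model X -> M -> Y plus X -> Y; all variables binary.
p_x = random_cpt(2)              # p(x)
p_m_x = random_cpt(2, 2)         # p(m | x), indexed [x, m]
p_y_xm = random_cpt(2, 2, 2)     # p(y | x, m), indexed [x, m, y]

# Observational joint p(x, m, y) and the conditional p(y | x).
joint = p_x[:, None, None] * p_m_x[:, :, None] * p_y_xm
p_xy = joint.sum(axis=1)
p_y_given_x = p_xy / p_xy.sum(axis=1, keepdims=True)

# Truncated factorization for x-hat: drop p(x), keep p(m | x) p(y | x, m).
p_y_do_x = (p_m_x[:, :, None] * p_y_xm).sum(axis=1)   # indexed [x, y]

eq2_holds = np.allclose(p_y_do_x, p_y_given_x)         # Eq. 2 for every x
natural_effect = p_y_do_x * p_x[:, None]               # Eq. 3
print(eq2_holds, np.allclose(natural_effect, p_xy))    # Eq. 4
```

Because $X$ has no parents here, removing its incoming arrows changes nothing, which is why conditioning and intervening agree.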

An important characteristic of natural causal effects is that the interventions they represent are not chosen by an experimenter (or policy maker) but are ‘chosen’ by the dynamics of the system itself. This means that we can think of the natural causal effect of $X$ on $Y$ as the joint effect of all possible interventions $\hat{x}$, with the additional constraint that the distribution of the interventions is given by

$$p(\hat{x}) = p(x). \tag{5}$$

We can thus separate the definition of the natural causal effect from variable $X$ to $Y$ into two different criteria. The first one is a criterion of existence of natural causal effects of $X$ on $Y$ (Eq. 2), which determines when interventions occur naturally in the system. The second one is a criterion of maintenance of the natural joint distribution (Eq. 5), which interprets the observed marginal distribution of the intervened variable as a distribution of interventions, so that the natural joint distribution is preserved (Eq. 4).

We now turn to the conditions on the causal structure under which natural causal effects exist. This means that we need to identify the conditions for which

| (6) |

In the interventional framework, this condition on the causal effect on  of intervening

of intervening  is called not confounded (Ch. 6 in[10]). Importantly, the fulfillment or not of this condition is determined only by the causal structure. In particular, in Supporting Information S1 we demonstrate that Eq. 6 holds if the following two conditions are fulfilled: First, that there are no causal connections in the opposite direction (i.e. from

is called not confounded (Ch. 6 in[10]). Importantly, the fulfillment or not of this condition is determined only by the causal structure. In particular, in Supporting Information S1 we demonstrate that Eq. 6 holds if the following two conditions are fulfilled: First, that there are no causal connections in the opposite direction (i.e. from  to

to  ). Second, that there is no common driver of

). Second, that there is no common driver of  and

and  . These two conditions assure that the dependence reflected in the conditional probability

. These two conditions assure that the dependence reflected in the conditional probability  is specific for the causal flow from

is specific for the causal flow from  to

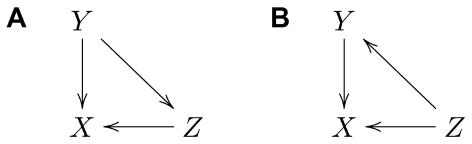

to  . Notice though, that the presence of mediating variables is allowed, that is, a natural causal effect can be due to indirect causal connections. See Figure 2A for an illustration of a causal graph supporting natural causal effects that are partly indirect.

. Notice though, that the presence of mediating variables is allowed, that is, a natural causal effect can be due to indirect causal connections. See Figure 2A for an illustration of a causal graph supporting natural causal effects that are partly indirect.
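The two structural conditions can be checked mechanically on a directed acyclic graph. The sketch below encodes our reading of them, which is an assumption rather than the procedure of Supporting Information S1: (1) there is no directed path from $Y$ to $X$, and (2) no other node has a directed path to $X$ and also a directed path to $Y$ that avoids $X$. Mediators are allowed. The function names and the example graphs (only loosely modeled on Figure 2) are hypothetical.

```python
from collections import defaultdict

def descendants(edges, start):
    """All nodes reachable from `start` along directed edges."""
    children = defaultdict(set)
    for a, b in edges:
        children[a].add(b)
    seen, stack = set(), [start]
    while stack:
        for c in children[stack.pop()]:
            if c not in seen:
                seen.add(c)
                stack.append(c)
    return seen

def natural_effect_conditions(edges, x, y):
    """True if (1) no directed path leads from y to x and (2) no common
    driver w reaches x and also reaches y along a path avoiding x."""
    if x in descendants(edges, y):
        return False
    # Dropping x's outgoing edges forces paths from w to y to avoid x.
    edges_avoiding_x = [(a, b) for a, b in edges if a != x]
    others = {n for e in edges for n in e} - {x, y}
    for w in others:
        if x in descendants(edges, w) and y in descendants(edges_avoiding_x, w):
            return False
    return True

mediated = [("X", "M"), ("M", "Y"), ("X", "Y")]   # partly indirect, like 2A
confounded = [("Z", "X"), ("Z", "Y"), ("X", "Y")] # common driver, like 2B
print(natural_effect_conditions(mediated, "X", "Y"),
      natural_effect_conditions(confounded, "X", "Y"))
```

An ancestor of $X$ that reaches $Y$ only through $X$ (for example $W \rightarrow X \rightarrow Y$) is not a common driver in this sense, so it does not violate the conditions.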

Figure 2. Natural causal effects.

Examples of causal graphs illustrating when the influence of $X$ on $Y$ can be interpreted as a natural causal effect (A) and when it cannot (B).

We emphasize that if Eq. 6 does not hold, then the impact on $Y$ of the causal connections from $X$ to $Y$ cannot be given a cause-and-effect interpretation. An example of this is given in Figure 2B, where $Z$ is a common driver of both $X$ and $Y$, which therefore precludes a cause-and-effect interpretation of the joint distribution of $X$ and $Y$. In this case we can still calculate the causal effect of an intervention, $p(y \mid \hat{x})$, according to

$$p(y \mid \hat{x}) = \sum_{z} p(y \mid x, z)\, p(z); \tag{7}$$

see Supporting Information S1 for a detailed calculation. It might seem contradictory that, on the one hand, the impact of the causal connections from $X$ to $Y$ does not result in a natural causal effect and, on the other hand, we can still compute the causal effect of $X$ on $Y$ using the above formula. The key here is what we add by the modifier ‘natural’. To illustrate this, consider what would happen if we were to reconstruct the joint distribution of $X$ and $Y$ from the marginal distribution of $X$ and the postinterventional distribution given in Eq. 7. That is, consider the joint distribution $p(y \mid \hat{x})\, p(\hat{x})$ with the additional constraint that we choose the distribution of interventions according to Eq. 5. Given the formula in Eq. 7, we see that $p(y \mid \hat{x})\, p(\hat{x}) \neq p(x, y)$ (unless, of course, $p(y \mid \hat{x}) = p(y \mid x)$, but this condition is not compatible with Figure 2B, that is, with $Z$ being a common driver). This means that even if we make sure that the marginal distribution of $X$ is the correct one, we cannot reconstruct the observed natural joint distribution and hence the natural ‘dynamics’ of the variables in the system. In other words, the interventions change the system, and the causal effect is with respect to this changed system. As mentioned above, sometimes this is indeed what is desired, but in most cases where causality analysis is applied in neuroscience the aim is to characterize what we have called the natural causal effect.
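The failed reconstruction can be reproduced numerically. The sketch below assumes a binary model with $Z \rightarrow X$, $Z \rightarrow Y$ and $X \rightarrow Y$ as a stand-in for Figure 2B (the exact graph and all numbers are assumptions). It evaluates Eq. 7 by summing over $z$, then draws the interventions from $p(\hat{x}) = p(x)$ as in Eq. 5 and compares the result with the observed joint distribution.

```python
import numpy as np

# Hypothetical binary model in the spirit of Figure 2B: Z -> X, Z -> Y, X -> Y.
p_z = np.array([0.5, 0.5])                       # p(z)
p_x_z = np.array([[0.8, 0.2], [0.3, 0.7]])       # p(x | z), indexed [z, x]
p_y_xz = np.array([[[0.9, 0.1], [0.6, 0.4]],     # p(y | x, z), indexed [z, x, y]
                   [[0.5, 0.5], [0.2, 0.8]]])

joint = p_z[:, None, None] * p_x_z[:, :, None] * p_y_xz   # p(z, x, y)
p_xy = joint.sum(axis=0)                                   # p(x, y)
p_x = p_xy.sum(axis=1)                                     # p(x)
p_y_given_x = p_xy / p_x[:, None]

# Eq. 7: p(y | x-hat) = sum_z p(y | x, z) p(z), indexed [x, y].
p_y_do_x = np.einsum("zxy,z->xy", p_y_xz, p_z)

# Interventions drawn with p(x-hat) = p(x), as required by Eq. 5.
reconstructed = p_y_do_x * p_x[:, None]
print("Eq. 2 holds:", np.allclose(p_y_do_x, p_y_given_x))
print("natural joint reconstructed:", np.allclose(reconstructed, p_xy))
```

The product is still a normalized joint distribution, but it is the joint distribution of a different, intervened system rather than the one that is actually observed.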

Apart from causal effects of the form $p(y \mid \hat{x})$, one could argue that for cases like the one in Figure 2B it would be relevant to consider conditional causal effects $p(y \mid \hat{x}, z)$. That is, given that $Z$ is a confounder, a way to get rid of its influence is to examine the causal effect for each observed value of $Z$ separately. In analogy with the definition of natural causal effect above, we define the conditional natural causal effect of $X$ on $Y$ given $Z$ to be

$$p(y \mid \hat{x}, z)\, p(x \mid z) \tag{8}$$

if and only if

$$p(y \mid \hat{x}, z) = p(y \mid x, z) \quad \text{for all } x, z. \tag{9}$$

Eq. 9 is analogous to Eq. 2 and constitutes a criterion for the existence of the conditional natural causal effects. Furthermore, in Eq. 8 the factor $p(x \mid z)$ plays the role of $p(\hat{x})$ in Eq. 5, that is, it is the distribution of the interventions related to the criterion of maintenance of the natural joint distribution. It is important to note the different nature of this causal effect with respect to the unconditional one. In effect, for each value of the conditioned variable $Z$ there is a (potentially) different natural causal effect of $X$ on $Y$. That is, in this case it does not make sense to talk about the causal effect of $X$ on $Y$; instead, the causal effect is of $X$ on $Y$ for $Z = z$. In contrast to the criterion of Eq. 2, this criterion (i.e. Eq. 9) is fulfilled in the causal graph of Figure 2B (see Supporting Information S1 for the details). We will further address the interpretation of unconditional and conditional natural causal effects in a subsequent section. In Supporting Information S1 we show the conditions under which Eq. 9 holds. As in the unconditional case, one of the requirements is that there are no causal connections in the opposite direction (i.e. from $Y$ to $X$). The other condition is analogous to the lack of common drivers in the unconditional case. In particular, the influence of any possible common driver should be blocked by conditioning on the variables in $Z$, or in technical language, $Z$ satisfies the back-door criterion relative to $X$ and $Y$ (see Definition 3.3.1 in [10]).
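Continuing with an assumed binary stand-in for Figure 2B ($Z \rightarrow X$, $Z \rightarrow Y$, $X \rightarrow Y$, invented numbers), the sketch below checks Eq. 9 by comparing the observational conditional $p(y \mid x, z)$ with the postinterventional $p(y \mid \hat{x}, z)$ obtained from the truncated factorization. It also checks that Eq. 8, weighted by $p(z)$, gives back the natural joint distribution, in contrast to the unconditional reconstruction.

```python
import numpy as np

# Hypothetical binary model with the structure Z -> X, Z -> Y, X -> Y.
p_z = np.array([0.5, 0.5])
p_x_z = np.array([[0.8, 0.2], [0.3, 0.7]])          # p(x | z), indexed [z, x]
p_y_xz = np.array([[[0.9, 0.1], [0.6, 0.4]],        # p(y | x, z), indexed [z, x, y]
                   [[0.5, 0.5], [0.2, 0.8]]])
joint = p_z[:, None, None] * p_x_z[:, :, None] * p_y_xz      # p(z, x, y)

# Observational conditional p(y | x, z), computed from the joint alone.
p_y_given_xz = joint / joint.sum(axis=2, keepdims=True)

# Postinterventional joint for each x-hat: p(z) p(y | x, z); X's factor dropped.
post = p_z[:, None, None] * p_y_xz                            # [z, x, y]
p_y_do_x_given_z = post / post.sum(axis=2, keepdims=True)

eq9_holds = np.allclose(p_y_do_x_given_z, p_y_given_xz)       # Eq. 9
# Eq. 8 with p(x | z) as the distribution of interventions, times p(z).
reconstructed = p_y_do_x_given_z * p_x_z[:, :, None] * p_z[:, None, None]
print(eq9_holds, np.allclose(reconstructed, joint))
```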

We have defined the natural causal effect from one (set of) variables to another as their joint distribution. In practice it will often be more convenient to characterize the natural causal effect with a lower-dimensional measure. Below we will indicate some possible such measures. However, note that the emphasis in this work is not so much on applying this framework to data as on showing under which conditions the interactions between subsystems can be given a cause-and-effect interpretation.

Natural causal effects between brain dynamics

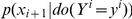

Introducing natural causal effects above, we considered $X$ and $Y$ to be univariate or multivariate random variables. When studying the interactions between different subsystems of the brain, we are led to consider natural causal effects between time series. We assume that the variables in the time series are causal, that is, the variables in one time series can potentially have direct causal influence over variables in the other. Since we are modeling a system (e.g. the brain) without instantaneous causality, we will not include instantaneous causality in the models below. (Note that in applications it might be important to have a high enough sampling rate to avoid ‘instantaneous causality’.) Given two subsystems $X$ and $Y$ with time-changing activities, we let $X^N = (X_1, \dots, X_N)$ and $Y^N = (Y_1, \dots, Y_N)$ denote two time series corresponding to the activities of $X$ and $Y$. That is, relative to some temporal reference frame, $X_t$ is the random variable that models the activity in $X$ at time $t$.

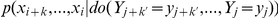

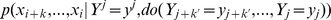

When asking ‘causality questions’ about time series, it is important to be specific about exactly which type of causal effect is of interest. In particular, one could be interested in causal effects at different scales. For example, the interest could be in the causal effect of $X^N$ on $Y^N$, which would then be viewed as the impact of the totality of the causal connections from $X$ to $Y$. Indeed, this seems to be the causal effect that has received the most interest in neuroscience (e.g. [8]). Alternatively, the interest could be in the causal effect at a particular point in time; e.g. we could ask about the causal effect of $X_t$ on $Y_{t+1}$. These two types of causal effects at different levels of description are related, but they are not equivalent and reflect different aspects of the impact of the causal connections between the subsystems.

We will use the framework of causal graphs to represent the causal structure of the dynamics of the subsystems. Since we assume that there is no instantaneous causality, $X_t$ cannot interact directly with $Y_t$ (and vice versa). We use $X^{t-1} = (X_1, \dots, X_{t-1})$ to denote the past of $X$ relative to time $t$. Furthermore, for simplicity we only represent direct causal connections of order 1 in the causal graph (i.e. from $X_{t-1}$ to $Y_t$), but our results are generic.

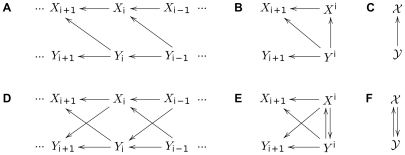

The subsystems can be represented at different scales, according to the type of causal effect one is interested in. We will consider the case of two subsystems with unidirectional causal connections from $X$ to $Y$ (Figure 3A–C), or alternatively with bidirectional causal connections (Figure 3D–F).

Figure 3. Graphical representation of causal connections for subsystems changing in time.

Causal graphs represent two subsystems with unidirectional causal connections from $X$ to $Y$ (A–C), or bidirectional causal connections (D–F). From left to right the scale of the graphs changes from a microscopic level, representing the dynamics, to a macroscopic one, in which each subsystem is represented by a single node.

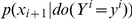

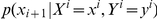

The microscopic representation of the causal structure displays explicitly all the variables and their causal connections (Figure 3A,D). At this microscopic level the graph is always a directed acyclic graph (DAG), given the above assumption of no instantaneous interactions. The microscopic level is required to examine types of causal effects that consider some particular intervals of the time series, e.g. $p(y_{t+1} \mid \hat{x}_t)$. If instead we consider the time series $X^N$ and $Y^N$ in their totality, we get the macroscopic causal graph shown in Figure 3C,F. The macroscopic representation is useful because of its simplicity, with only one node per system, and it has often been used in the literature, e.g. [20]–[22]. Note that at the macroscopic level a microscopic DAG can become cyclic (Figure 3F). At the macroscopic level the only causal effect to consider is $p(y^N \mid \hat{x}^N)$. As one intermediate possibility, we could consider a mesoscopic representation (Figure 3B,E). Here the ‘past’ of the two time series at some point in time (referred to by $X^t$ and $Y^t$, respectively) is only implicitly represented, whereas the ‘future’ ($X_{t+1}$ and $Y_{t+1}$) is explicit. The causal effects related to this representation are of the type $p(y_{t+1} \mid \hat{x}^t)$, and the conditional causal effects are of the type $p(y_{t+1} \mid \hat{x}^t, y^t)$. As we will see below, this is the representation that best accommodates Granger causality.
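The mesoscopic comparison between $p(y_{t+1} \mid x^t, y^t)$ and $p(y_{t+1} \mid y^t)$ is what Granger causality quantifies. As a hedged illustration (not the authors' procedure), the sketch below simulates an assumed linear VAR(1) with unidirectional coupling from $X$ to $Y$ and estimates linear Granger causality as the log ratio of residual variances of lag-1 autoregressions fitted by ordinary least squares. The coefficient values and the names `simulate_var1` and `granger_lag1` are hypothetical.

```python
import numpy as np

def simulate_var1(n, a_xx=0.5, a_yy=0.5, a_yx=0.4, a_xy=0.0, seed=0):
    """Linear VAR(1): x[t+1] = a_xx x[t] + a_xy y[t] + noise and
    y[t+1] = a_yy y[t] + a_yx x[t] + noise (a_xy = 0: only X -> Y)."""
    rng = np.random.default_rng(seed)
    x, y = np.zeros(n), np.zeros(n)
    for t in range(n - 1):
        x[t + 1] = a_xx * x[t] + a_xy * y[t] + rng.standard_normal()
        y[t + 1] = a_yy * y[t] + a_yx * x[t] + rng.standard_normal()
    return x, y

def residual_variance(target, *regressors):
    """Variance of the least-squares residual of target on the regressors."""
    design = np.column_stack([np.ones_like(target), *regressors])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return np.var(target - design @ coef)

def granger_lag1(source, target):
    """log of residual variance using the target's own past, divided by the
    residual variance using both pasts (lag 1 only)."""
    future, own_past, other_past = target[1:], target[:-1], source[:-1]
    restricted = residual_variance(future, own_past)
    full = residual_variance(future, own_past, other_past)
    return np.log(restricted / full)

x, y = simulate_var1(20_000)
print("GC X->Y:", granger_lag1(x, y), "GC Y->X:", granger_lag1(y, x))
```

With these assumed coefficients the estimate for $X \rightarrow Y$ is clearly positive while the one for $Y \rightarrow X$ is close to zero, mirroring the unidirectional coupling.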

Different levels of representation may be used depending on the type of causal effects to be studied. However, it is important to emphasize that the conditions for the existence of natural causal effects give consistent results independently of the scale of the representation. For example, whether the natural causal effects  exist or not can be checked using the microscopic causal graph. This is because the representations at the different levels are consistent, so that an arrow in the macroscopic graph from  to  exists only if some directed causal connection from  to  exists. This consistency reflects that at the macroscopic level the time series are conceived not just as a set of random variables but as representative of the dynamics of the subsystems. At the microscopic level, the status of the relation of a variable  with another variable  may seem equivalent to that of its relation with  , since both relations are determined by the causal connections. Considering the time series as an entity breaks this equivalence, because the variables  and  can be merged as part of the time series  , while  and  are not considered together at the macroscopic level.
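The consistency between levels can be made concrete with a small graph computation. The sketch below assumes a hypothetical bivariate model with lag-1 connections only and no instantaneous arrows; the node labels and the helpers `microscopic_graph` and `macroscopic_graph` are illustrative and not taken from the original analysis. Collapsing each time series into one node keeps an arrow between the two series exactly when at least one microscopic arrow links a variable of the first series to a variable of the second.

```python
def microscopic_graph(n_steps, x_to_y, y_to_x):
    """Arrows of a hypothetical lag-1 bivariate model unrolled in time.

    Nodes are (series, time) pairs such as ("X", 3). Each series drives its
    own next value; cross-series arrows follow the two flags. No
    instantaneous (same-time) arrows are included.
    """
    edges = set()
    for t in range(n_steps - 1):
        edges.add((("X", t), ("X", t + 1)))
        edges.add((("Y", t), ("Y", t + 1)))
        if x_to_y:
            edges.add((("X", t), ("Y", t + 1)))
        if y_to_x:
            edges.add((("Y", t), ("X", t + 1)))
    return edges


def macroscopic_graph(micro_edges):
    """Collapse each time series into a single node.

    An arrow between two different series is kept if at least one
    microscopic arrow goes from a variable of the first series to a
    variable of the second; within-series arrows are dropped.
    """
    return {(src[0], dst[0]) for src, dst in micro_edges if src[0] != dst[0]}


uni = macroscopic_graph(microscopic_graph(5, x_to_y=True, y_to_x=False))
bi = macroscopic_graph(microscopic_graph(5, x_to_y=True, y_to_x=True))
print(sorted(uni))  # [('X', 'Y')]
print(sorted(bi))   # [('X', 'Y'), ('Y', 'X')]
```

Since any directed path from one series to the other must contain at least one direct cross-series arrow, checking direct arrows is enough for this toy case.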

At the macroscopic level, the natural causal effect from time series  to  is, in analogy with Eq. 3, given by

| (10) |

and can be seen as reflecting the total influence of the dynamics of the subsystem  on the dynamics of  . However, as we mentioned above, this high-dimensional causal effect is not the only type of causal effect that can result from the causal connections from  to  . Other types of causal effects related to distributions of lower dimension, like the ones mentioned above, also reflect some aspects of the impact of the causal connections. This diversity of types of causal effects indicates that the causal structure should be seen as a medium that channels different types of natural causal effects. The idea of quantifying causal effects with a single measure of strength is an oversimplification: although in some circumstances focusing on one of these types of natural causal effects may suffice to characterize the dynamics, in general they provide complementary information.

The causal graphs at different scales can be related to different types of models. Macroscopic causal graphs have been used to represent structural equation models (SEM), where time is ignored. This type of model has been described in detail in the interventional framework of causality (see Chapter 5 in [10]), but the framework is not fundamentally limited to functional models that do not take time into account. In fact, sequential interventions over time have also been studied (e.g. [23]), and the relation between interventions and Granger causality in time series was recently considered [24]. See also the so-called Dynamic structural causal modeling in [14]. Once time is explicitly represented, as at the microscopic scale, the acyclic structure of the graph does not preclude the representation of feedback loops between the subsystems.

The constraints of the causal structure on the existence and characterization of natural causal effects between dynamics

Here we will consider in more detail when natural causal effects exist and can be characterized, and thus when the question "What is the causal effect of the subsystem  on the subsystem  ?" is meaningful. For simplicity we will restrict ourselves to the bivariate case illustrated in Figure 3.

Consider first the case of unidirectional causal connections from  to

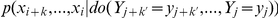

to  in Figure 3A–C. For any type of causal effect that involves an intervention of some variables of the time series

in Figure 3A–C. For any type of causal effect that involves an intervention of some variables of the time series  , generally

, generally  , we need to examine if they are common drivers between

, we need to examine if they are common drivers between  and

and  . Due to the unidirectional causal connections all the common drivers are contained in

. Due to the unidirectional causal connections all the common drivers are contained in  . This means that any conditional causal effect

. This means that any conditional causal effect  is a natural causal effect. Furthermore, the criterion of existence is also fulfilled for

is a natural causal effect. Furthermore, the criterion of existence is also fulfilled for  and

and  (See Supporting Information S1). In all these cases one can select the marginal distribution of interventions in agreement with the natural distribution according to Eq. 5 and thus preserve the natural joint distribution (Eq. 4). This implies that in the case of unidirectional causality it is possible to quantify the impact of the casual connections in terms of the causal effect of

(See Supporting Information S1). In all these cases one can select the marginal distribution of interventions in agreement with the natural distribution according to Eq. 5 and thus preserve the natural joint distribution (Eq. 4). This implies that in the case of unidirectional causality it is possible to quantify the impact of the casual connections in terms of the causal effect of  on

on  . In the next section we will indicate how this can be done.

. In the next section we will indicate how this can be done.
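The unidirectional argument can be checked on the same kind of toy graph. This is a simplified sketch, not the full existence criterion of Supporting Information S1: it assumes lag-1 connections only, and it treats every common ancestor of the intervened variables and the target that is not itself intervened as a candidate common driver. The helpers `microscopic_graph`, `ancestors`, and `common_drivers` are hypothetical names introduced for illustration.

```python
def microscopic_graph(n_steps, x_to_y, y_to_x):
    """Arrows of a hypothetical lag-1 bivariate model unrolled in time."""
    edges = set()
    for t in range(n_steps - 1):
        edges.add((("X", t), ("X", t + 1)))
        edges.add((("Y", t), ("Y", t + 1)))
        if x_to_y:
            edges.add((("X", t), ("Y", t + 1)))
        if y_to_x:
            edges.add((("Y", t), ("X", t + 1)))
    return edges


def ancestors(edges, node):
    """Return every node that has a directed path into `node`."""
    parents = {}
    for src, dst in edges:
        parents.setdefault(dst, set()).add(src)
    found, stack = set(), [node]
    while stack:
        for parent in parents.get(stack.pop(), ()):
            if parent not in found:
                found.add(parent)
                stack.append(parent)
    return found


def common_drivers(edges, intervened, target):
    """Simplified check: common ancestors of the intervened variables and
    the target that are not themselves intervened."""
    upstream = set()
    for v in intervened:
        upstream |= ancestors(edges, v)
    return (upstream & ancestors(edges, target)) - set(intervened)


# Unidirectional coupling X -> Y: intervene on X_0 ... X_3, look at Y_4.
edges = microscopic_graph(5, x_to_y=True, y_to_x=False)
past_of_x = [("X", t) for t in range(4)]
print(common_drivers(edges, past_of_x, ("Y", 4)))  # set()
```

The empty result mirrors the statement above: with unidirectional coupling, every common ancestor already lies inside the intervened past of the driving series.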

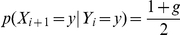

Consider now the case of bidirectional causal connections in Figure 3D–F. At the microscopic level we see that for  and each variable  ,  , the variables  ,  constitute common drivers (direct or indirect). This is also reflected at the mesoscopic scale, where  is a common driver of  and  and there is a loop between  and  . Therefore, the criterion of existence of the natural causal effects is not fulfilled in the case of bidirectional causality.
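Running the same simplified check on a bidirectionally coupled toy graph shows the common drivers appearing. As before, this is a sketch under stated assumptions (lag-1 connections, no instantaneous arrows, hypothetical helper names), not the formal criterion.

```python
def microscopic_graph(n_steps, x_to_y, y_to_x):
    """Arrows of a hypothetical lag-1 bivariate model unrolled in time."""
    edges = set()
    for t in range(n_steps - 1):
        edges.add((("X", t), ("X", t + 1)))
        edges.add((("Y", t), ("Y", t + 1)))
        if x_to_y:
            edges.add((("X", t), ("Y", t + 1)))
        if y_to_x:
            edges.add((("Y", t), ("X", t + 1)))
    return edges


def ancestors(edges, node):
    """Return every node that has a directed path into `node`."""
    parents = {}
    for src, dst in edges:
        parents.setdefault(dst, set()).add(src)
    found, stack = set(), [node]
    while stack:
        for parent in parents.get(stack.pop(), ()):
            if parent not in found:
                found.add(parent)
                stack.append(parent)
    return found


def common_drivers(edges, intervened, target):
    """Simplified check: common ancestors of the intervened variables and
    the target that are not themselves intervened."""
    upstream = set()
    for v in intervened:
        upstream |= ancestors(edges, v)
    return (upstream & ancestors(edges, target)) - set(intervened)


# Bidirectional coupling: intervene on X_0 ... X_3, look at Y_4.
edges = microscopic_graph(5, x_to_y=True, y_to_x=True)
past_of_x = [("X", t) for t in range(4)]
drivers = common_drivers(edges, past_of_x, ("Y", 4))
print(sorted(drivers))  # [('Y', 0), ('Y', 1), ('Y', 2)]
```

Every common driver found here belongs to the past of the driven series, which is why conditioning on that past is the only way to satisfy the existence criterion in this setting.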

By contrast, the criterion of existence is fulfilled for the conditional natural causal effects, for example  , which we mentioned when we introduced the mesoscopic level. In this case all the possible common drivers, contained in  , are conditioned on. Therefore we can say that there are conditional natural causal effects from  to  given  even in the case of bidirectional causality. However, to determine whether this type of conditional causal effect can be used to characterize the impact of the causal connections from  to  , we still have to consider the preservation of the joint conditional distribution (Eq. 8). In analogy to Eq. 5, to preserve the natural dynamics we need to select the interventions according to

| (11) |

That is, we have to choose the interventions conditionally upon  , but this clearly contradicts the idea of defining a causal effect from  to  . Since  conditions the interventions, we cannot interpret the causal effect as representative of the impact of the causal connections from one subsystem to the other. This is because we are not simply considering the conditional effect of a set of variables  on a variable  given another set of variables  . The variables  and  are related, since we consider them as part of a single entity, namely the time series  . The conditional natural causal effects  with a distribution according to Eq. 11 occur given how the causal connections generate the observed dynamics, but these conditional causal effects cannot be understood as being from one subsystem to the other. In particular, the distribution of the natural interventions is also determined by the causal connections from  to  .
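Whether interventions on the driving series can be drawn without reference to the other series depends on whether that series is autonomous. One hypothetical way to probe this on simulated data is a Granger-style predictability check: does the past of Y improve a linear prediction of the next value of X beyond X's own past? The sketch below assumes a bivariate linear VAR(1) with unit-variance Gaussian noise and made-up coupling values; it illustrates the contrast between the two cases and is not the criterion used in this work. The function names `simulate_var1` and `y_past_gain` are illustrative.

```python
import random


def simulate_var1(n, c_xy, c_yx, a=0.5, seed=0):
    """Simulate a hypothetical bivariate linear VAR(1):
        x[t+1] = a*x[t] + c_yx*y[t] + noise   (c_yx: coupling Y -> X)
        y[t+1] = a*y[t] + c_xy*x[t] + noise   (c_xy: coupling X -> Y)
    """
    rng = random.Random(seed)
    x, y = [0.0], [0.0]
    for _ in range(n - 1):
        xt, yt = x[-1], y[-1]
        x.append(a * xt + c_yx * yt + rng.gauss(0.0, 1.0))
        y.append(a * yt + c_xy * xt + rng.gauss(0.0, 1.0))
    return x, y


def _cov(u, v):
    """Sample covariance (divided by n) of two equal-length sequences."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((ui - mu) * (vi - mv) for ui, vi in zip(u, v)) / len(u)


def y_past_gain(x, y):
    """Fractional drop in the residual variance of x[t+1] when y[t] is added
    to a least-squares predictor that already uses x[t]."""
    target, xp, yp = x[1:], x[:-1], y[:-1]
    vt = _cov(target, target)
    cxx, cyy, cxy = _cov(xp, xp), _cov(yp, yp), _cov(xp, yp)
    ctx, cty = _cov(target, xp), _cov(target, yp)
    # Restricted model: x[t+1] regressed on x[t] only.
    res_restricted = vt - ctx ** 2 / cxx
    # Full model: x[t+1] regressed on x[t] and y[t] (2x2 normal equations).
    det = cxx * cyy - cxy ** 2
    bx = (ctx * cyy - cty * cxy) / det
    by = (cty * cxx - ctx * cxy) / det
    res_full = vt - (bx * ctx + by * cty)
    return 1.0 - res_full / res_restricted


uni_gain = y_past_gain(*simulate_var1(20000, c_xy=0.4, c_yx=0.0))
bi_gain = y_past_gain(*simulate_var1(20000, c_xy=0.4, c_yx=0.4))
print(uni_gain)  # close to 0: X is autonomous with respect to Y
print(bi_gain)   # clearly positive: X's dynamics depend on Y's past
```

For these particular parameters the bidirectional gain is about 0.21 in theory. A positive gain means the natural distribution of X cannot be reproduced without conditioning on Y, which is the situation described by Eq. 11.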

Altogether, in the case of bidirectional causality, none of the candidate causal effects considered fulfills both the criterion of existence of natural causal effects and the preservation of the joint distribution. For bidirectional causality, causal effects like  and  do not exist as natural causal effects, while conditional causal effects like  take place in the system but cannot be understood as causal effects between the subsystems.

We emphasize that what prevents the conditional natural causal effects discussed above from being interpreted as a causal effect between the subsystems is, to some extent, the point of view of the interpreter. What our analysis shows is that problems of interpretation arise when grouping variables together, as when considering  as standing for the activity of a particular subsystem. At the microscopic level, keeping time locality, one can analyze these conditional natural causal effects. For example,  can be viewed as the natural causal effect of  on  given  . That is, relative to a particular time instant, we can meaningfully talk about conditional natural causal effects. However, note that since  does not represent the dynamics of  , this natural causal effect cannot be considered the effect of  on  .

That we cannot, in general, answer what the causal effect of one brain subsystem on another is while processing some stimulus or performing some neural computation may seem surprising. However, the definition of the natural causal effects (Eq. 3) was derived precisely to be consistent with what should be expected from a definition of causal effect used to analyze natural brain dynamics. We will now give some arguments indicating that the restrictions imposed by the causal structure are in fact intuitive.

In Figure 3 we showed that, while at the microscopic scale a DAG is obtained as long as instantaneous interactions are excluded, at the mesoscopic and macroscopic scales the existence of bidirectional causality leads to a cyclic graph (Figure 3E–F). This means that  and  are mutually determined. This mutual determination can be understood as the impossibility of writing a set of equations such that the dynamics of  can be determined first, without simultaneously determining the dynamics of  . At the microscopic scale, for bidirectional causality (Figure 3D), one can consider a path from some node  ,  , to  which is directed, and thus follows the causal flow, but contains both arrows  and  . In this case it is not possible to disentangle in the natural dynamics the causal effects in the two opposite directions: when considering the causal effect from  to  , assuming  , it is not clear to what degree the influence of  on  is intrinsic to  or due to the previous influence of  on  .
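The contrast between an acyclic microscopic graph and a cyclic macroscopic graph can be verified with a standard topological-sort test (Kahn's algorithm). The sketch assumes the same hypothetical lag-1 toy model with no instantaneous arrows; the helper names are illustrative.

```python
def microscopic_graph(n_steps, x_to_y, y_to_x):
    """Arrows of a hypothetical lag-1 bivariate model unrolled in time."""
    edges = set()
    for t in range(n_steps - 1):
        edges.add((("X", t), ("X", t + 1)))
        edges.add((("Y", t), ("Y", t + 1)))
        if x_to_y:
            edges.add((("X", t), ("Y", t + 1)))
        if y_to_x:
            edges.add((("Y", t), ("X", t + 1)))
    return edges


def macroscopic_graph(micro_edges):
    """Collapse each series into one node, keeping cross-series arrows."""
    return {(src[0], dst[0]) for src, dst in micro_edges if src[0] != dst[0]}


def is_acyclic(edges):
    """Kahn's algorithm: a directed graph is acyclic iff every node can be
    removed in an order where it has no remaining incoming arrows."""
    nodes = {n for edge in edges for n in edge}
    indegree = {n: 0 for n in nodes}
    children = {n: [] for n in nodes}
    for src, dst in edges:
        indegree[dst] += 1
        children[src].append(dst)
    ready = [n for n in nodes if indegree[n] == 0]
    removed = 0
    while ready:
        node = ready.pop()
        removed += 1
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)
    return removed == len(nodes)


micro_bi = microscopic_graph(5, x_to_y=True, y_to_x=True)
print(is_acyclic(micro_bi))                     # True: time orders every arrow
print(is_acyclic(macroscopic_graph(micro_bi)))  # False: X -> Y and Y -> X
```

With unidirectional coupling both representations stay acyclic, so the cycle at the coarser levels is a direct signature of bidirectional coupling.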

That is, in the case of a bidirectional coupling,  and

and  form a unique bivariate system in which the contribution of one system to the dynamics of the other cannot be meaningfully quantified. Therefore it does not make sense to ask for the causal effect from one subsystem to the other, but to examine how the causal connections participate in the generation of the joint dynamics. A simple neural example illustrating this view would be the processing of a visual stimulus in the primary visual cortex (

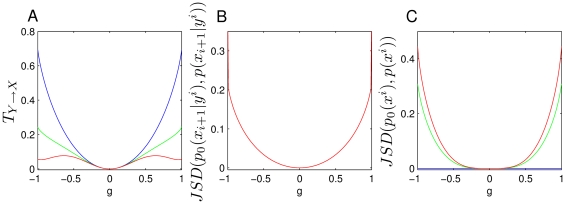

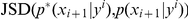

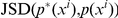

form a unique bivariate system in which the contribution of one system to the dynamics of the other cannot be meaningfully quantified. Therefore it does not make sense to ask for the causal effect from one subsystem to the other, but to examine how the causal connections participate in the generation of the joint dynamics. A simple neural example illustrating this view would be the processing of a visual stimulus in the primary visual cortex ( ). For example, responses of