Abstract

Spike-timing-dependent plasticity (STDP) has been observed in many brain areas such as sensory cortices, where it is hypothesized to structure synaptic connections between neurons. Previous studies have demonstrated how STDP can capture spiking information at short timescales using specific input configurations, such as coincident spiking, spike patterns and oscillatory spike trains. However, the corresponding computation in the case of arbitrary input signals is still unclear. This paper provides an overarching picture of the algorithm inherent to STDP, tying together many previous results for commonly used models of pairwise STDP. For a single neuron with plastic excitatory synapses, we show how STDP performs a spectral analysis on the temporal cross-correlograms between its afferent spike trains. The postsynaptic responses and STDP learning window determine kernel functions that specify how the neuron “sees” the input correlations. We thus denote this unsupervised learning scheme as ‘kernel spectral component analysis’ (kSCA). In particular, the whole input correlation structure must be considered since all plastic synapses compete with each other. We find that kSCA is enhanced when weight-dependent STDP induces gradual synaptic competition. For a spiking neuron with a “linear” response and pairwise STDP alone, we find that kSCA resembles principal component analysis (PCA). However, plain STDP does not isolate correlation sources in general, e.g., when they are mixed among the input spike trains. In other words, it does not perform independent component analysis (ICA). Tuning the neuron to a single correlation source can be achieved when STDP is paired with a homeostatic mechanism that reinforces the competition between synaptic inputs. Our results suggest that neuronal networks equipped with STDP can process signals encoded in the transient spiking activity at the timescales of tens of milliseconds for usual STDP.

Author Summary

Tuning feature extraction of sensory stimuli is an important function for synaptic plasticity models. A widely studied example is the development of orientation preference in the primary visual cortex, which can emerge using moving bars in the visual field. A crucial point is the decomposition of stimuli into basic information tokens, e.g., selecting individual bars even though they are presented in overlapping pairs (vertical and horizontal). Among classical unsupervised learning models, independent component analysis (ICA) is capable of isolating basic tokens, whereas principal component analysis (PCA) cannot. This paper focuses on spike-timing-dependent plasticity (STDP), whose functional implications for neural information processing have been intensively studied both theoretically and experimentally in the last decade. Following recent studies demonstrating that STDP can perform ICA for specific cases, we show how STDP relates to PCA or ICA, and in particular explain the conditions under which it switches between them. Here information at the neuronal level is assumed to be encoded in temporal cross-correlograms of spike trains. We find that a linear spiking neuron equipped with pairwise STDP requires additional mechanisms, such as a homeostatic regulation of its output firing, in order to separate mixed correlation sources and thus perform ICA.

Introduction

Organization in neuronal networks is hypothesized to rely to a large extent on synaptic plasticity based on their spiking activity. The importance of spike timing for synaptic plasticity has been observed in many brain areas for many types of neurons [1], [2], a phenomenon termed spike-timing-dependent plasticity (STDP). On the modeling side, STDP was initially proposed to capture information within spike trains at short timescales, as can be found in the auditory pathway of barn owls [3]. For more than a decade, STDP has been the subject of many theoretical studies to understand how it can select synapses based on the properties of pre- and postsynaptic spike trains. A number of studies have focused on how STDP can perform input selectivity by favoring input pools with higher firing rates [4], [5], with synchronously firing inputs [6], or both [7], detect spike patterns [8] and rate-modulated patterns [9], and interact with oscillatory signals [10], [11]. The STDP dynamics can simultaneously generate stability of the output firing rate and competition between individual synaptic weights [6], [12]–[15], so that the selected inputs strongly drive the postsynaptic neuron, which we refer to as robust neuronal specialization [16]. When considering recurrently connected neurons, the weight dynamics can lead to emerging functional pathways [17]–[19] and specific spiking activity [20], [21]. Recent reviews provide an overview of the richness of STDP-based learning dynamics [22], [23].

The present paper aims to provide a general interpretation of the synaptic dynamics at a functional level. In this way, we want to characterize how spiking information is relevant to plasticity. Previous publications [24], [25] mentioned the possible relation between STDP and Oja's rate-based plasticity rule [26], which performs principal component analysis (PCA). Previous work [27] showed how STDP can capture slow time-varying information within spike trains in a PCA-like manner, but this approach does not actually make use of the temporal (approximate) antisymmetry of the typical STDP learning window for excitatory synapses; see also earlier work about storing correlations of neuronal firing rates [28]. Along similar lines, STDP was used to perform independent component analysis (ICA) for specific input signals typically used to discriminate between PCA and ICA [7], [29]. In particular, STDP alone did not seem capable of performing ICA in those numerical studies: additional mechanisms such as synaptic scaling were necessary. On the other hand, additive-like STDP has been shown to be capable of selecting only one among two identical input pools with independent correlations from each other, also referred to as ‘symmetry breaking’ [13], [17]. In addition to studies of the synaptic dynamics, considerations on memory and synaptic management (e.g., how potentiated weights are maintained) have been used to relate STDP and optimality in unsupervised learning [30], [31]. To complement these efforts, the present paper proposes an in-depth study of the learning dynamics and examines under which conditions pairwise STDP can perform ICA. For this purpose, we consider input spiking activity that mixes correlation sources. We draw on our previously developed framework that describes the weight dynamics [15], [23] and extend the analysis to the case of an arbitrary input correlation structure. 
This theory is based on the Poisson neuron model [6] and focuses on pairwise weight-dependent STDP for excitatory synapses. Mutual information is used to evaluate how STDP modifies the neuronal response to correlated inputs [32]. This allows us to relate the outcome of STDP to either PCA or ICA [33]. Finally, we examine the influence of the STDP and neuronal parameters on the learning process. Our model captures fundamental properties shared by more elaborate neuronal and STDP models. In this way, it provides a minimal and tractable configuration to study the computational power of STDP, bridging the gap between physiological modeling and machine learning.

Results

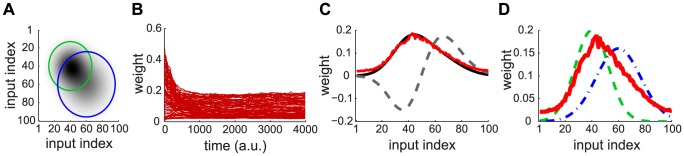

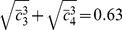

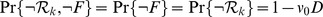

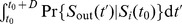

Spectral decomposition is typically used to find the meaningful components or main trends in a collection of input signals (or data). In this way, one can represent or describe the inputs in a summarized manner, i.e., in a space of lower dimension. This paper focuses on the information conveyed by spike trains, which will be formalized later. The function of neuronal processing is to extract the dominant component(s) of the information that it receives, and disregard the rest, such as noise. In the context of learning, synaptic competition favors some weights at the expense of others, which tunes the neuronal selectivity. As a first step to introduce spectral decomposition, we consider Oja's rule [26] that enables a linear non-spiking neuron to learn the correlations between its input firing rates. At each time step, the 100 input firing rates are determined by two Gaussian profiles with distinct means, variances and amplitudes (green and blue curves in Fig. 1D), in addition to noise. The area under the curve indicates the strength of input correlations; here the green dashed curve “dominates” the blue dashed-dotted curve. This results in correlation among the input rates, as represented by the matrix in Fig. 1A. The vector of weights  is modified by Oja's rule:

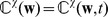

$$\dot{\mathbf{w}} \propto y \left( \mathbf{x} - y\,\mathbf{w} \right) \qquad (1)$$

where $\mathbf{w}$ is the vector of weights, $\mathbf{x}$ is the vector of input rates and $y = \mathbf{w}^\top \mathbf{x}$ is the neuron output ($^\top$ indicates the scalar product of the two vectors). The weight evolution is represented in Fig. 1B. The final weight distribution reflects the principal component of the correlation matrix (red solid curve in Fig. 1C). As shown in Fig. 1D, this does not represent only the stronger correlation source (green dashed curve), but also the weaker one (blue dashed-dotted curve). This follows because the principal component mixes the two sources, which overlap in Fig. 1A. In other words, Oja's rule cannot isolate the strongest source and thus cannot perform ICA, but only PCA. We will examine later whether the same phenomenon occurs for STDP. Note that the rate correlation matrix is always symmetric. This differs from using PCA in the context of data analysis, such as finding the direction that provides the dependence of highest magnitude in a cloud of data points.
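The behavior described for Oja's rule can be reproduced with a small simulation. The following is a minimal sketch in plain Python, not the code used for Fig. 1: the number of inputs, the two Gaussian profiles, the noise level and the learning rate `eta` are all illustrative assumptions, and the update is the standard discrete-time form of Oja's rule.

```python
import math
import random


def train_oja(n_inputs=20, steps=20000, eta=0.002, seed=0):
    """Train a linear rate neuron with Oja's rule on two overlapping rate profiles."""
    rng = random.Random(seed)

    def gaussian_profile(center, width, amplitude):
        return [amplitude * math.exp(-0.5 * ((i - center) / width) ** 2)
                for i in range(n_inputs)]

    # Hypothetical sources: a stronger narrow profile and a weaker broad one.
    strong = gaussian_profile(6.0, 2.0, 1.0)
    weak = gaussian_profile(12.0, 3.0, 0.6)

    w = [rng.uniform(0.4, 0.6) for _ in range(n_inputs)]
    for _ in range(steps):
        # At each step the input rates follow one of the two profiles, plus white noise.
        source = strong if rng.random() < 0.5 else weak
        x = [s + 0.05 * rng.gauss(0.0, 1.0) for s in source]
        y = sum(wi * xi for wi, xi in zip(w, x))                    # linear output
        w = [wi + eta * y * (xi - y * wi) for wi, xi in zip(w, x)]  # Oja update
    return w


weights = train_oja()
norm = math.sqrt(sum(wi * wi for wi in weights))
print(f"norm = {norm:.3f}, w[6] = {weights[6]:.3f}, w[12] = {weights[12]:.3f}")
```

Under these assumptions the weight vector settles close to unit norm and keeps a clearly nonzero weight at the center of the weaker profile, which illustrates why the principal component mixes the two sources rather than isolating the stronger one.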

Figure 1. Example of PCA performed by Oja's rate-based plasticity rule.

(A) Theoretical cross-correlation matrix of 100 inputs induced by two Gaussian distributions of rates. Darker pixels indicate higher correlations. The circles indicate where the correlation for each source drops below 10% of its maximum (see the Gaussian profiles in D). (B) Traces of the weights modified by Oja's rule [26] in (1). At random times, the input rates follow either Gaussian rate profile corresponding to the green and blue curves in D; white noise is added at each timestep. (C) The asymptotic distribution of the weights (red) is close to the principal component of the matrix in A (black solid curve), but distinct from the second component (black dashed curve). (D) The final weight distribution (red) actually overlaps both Gaussian rate profiles in green dashed and blue dashed-dotted lines that induce correlation in the inputs. The green and blue curves correspond to the small and large circles in A, respectively.

Spiking neuron configuration

In order to examine the computational capabilities of STDP, we consider a single neuron whose excitatory synapses are modified by STDP, as shown in Fig. 2A. Our theory relies on the Poisson neuron model, which fires spikes depending on a stochastic rate intensity that relates to the soma potential. Each presynaptic spike induces a variation of the soma potential, or postsynaptic potential (PSP), described by a normalized kernel function that is shifted by the axonal and dendritic delays (Fig. 2B). The size of the PSP is scaled by the synaptic weight.
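As an illustration of how presynaptic spikes shape the rate intensity of such a neuron, the sketch below sums weighted, delayed PSPs. It assumes a double-exponential PSP kernel with unit integral; the time constants (in ms), the `spontaneous_rate` parameter and the function names are hypothetical choices made for this example, not values taken from the text.

```python
import math


def psp_kernel(t, tau_rise=1.0, tau_decay=5.0):
    """Double-exponential PSP kernel with unit integral (times in ms, assumed values)."""
    if t < 0.0:
        return 0.0  # causal: no response before the spike arrives
    return (math.exp(-t / tau_decay) - math.exp(-t / tau_rise)) / (tau_decay - tau_rise)


def rate_intensity(t, spike_trains, weights, axonal_delay=0.0, dendritic_delay=0.0,
                   spontaneous_rate=0.0):
    """Instantaneous firing intensity: spontaneous rate plus weighted, delayed PSPs."""
    total = spontaneous_rate
    for w, spikes in zip(weights, spike_trains):
        for spike_time in spikes:
            total += w * psp_kernel(t - spike_time - axonal_delay - dendritic_delay)
    return total


# One input spike at t = 0 ms, weight 0.5, axonal delay 3 ms.
print(rate_intensity(2.0, [[0.0]], [0.5], axonal_delay=3.0))  # before the PSP starts
print(rate_intensity(5.0, [[0.0]], [0.5], axonal_delay=3.0))  # during the PSP
```

A Poisson neuron would then emit spikes stochastically with this intensity; that sampling step is left out to keep the sketch short.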

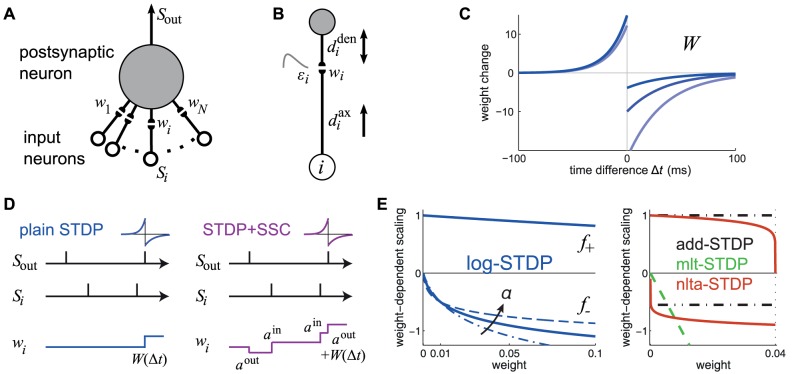

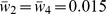

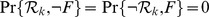

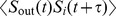

Figure 2. Single neuron with STDP-plastic excitatory synapses.

(A) Schematic representation of the neuron (top gray-filled circle) and the synapses (pairs of black-filled semicircles) that are stimulated by the input spike trains (bottom arrows). (B) Detail of a synapse, with its weight, postsynaptic response kernel, and axonal and dendritic delays. The arrows indicate that the axonal delay describes the propagation along the axon to the synapse, while the dendritic delay relates to both conduction of postsynaptic potential (PSP) toward soma and back-propagation of action potential toward the synaptic site. (C) Example of temporally Hebbian weight-dependent learning window that determines the STDP contribution of pairs of pre- and postsynaptic spikes. The curve corresponds to (22). Darker blue indicates a stronger value for the weight, which leads to less potentiation and more depression. (D) Schematic evolution of the weight for given pre- and postsynaptic spike trains. The size of each jump is indicated by the nearby expression. Comparison between plain STDP for which only pairs contribute and STDP+SCC where single spikes also modify the weight via the single-spike terms. Here only the pair of latest spikes falls into the temporal range of STDP and thus significantly contributes to STDP. (E) Scaling functions of the weight that determine the weight dependence for LTP and LTD. In the left panel, the blue solid curve corresponds to log-STDP [16] in (23). One parameter controls the saturation of the LTD curve: the dashed and dashed-dotted curves correspond to two alternative values of it. In the right panel, the red solid curves represent the scaling functions for nlta-STDP [13] in (24); the black dashed-dotted horizontal lines indicate the add-STDP that is weight independent; the green dashed line corresponds to a linearly dependent LTD for mlt-STDP [38].

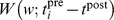

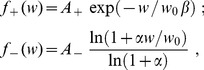

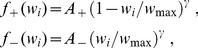

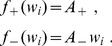

Pairwise weight-dependent STDP model

We use a phenomenological STDP model described by a learning window as in Fig. 2C. Importantly, LTP/LTD is not determined by the relative timing of firing at the neuron somas, but by the time difference at the synaptic site, meaning that the learning window incorporates the axonal and dendritic delays. This choice can be related to more elaborate plasticity models based on the local postsynaptic voltage on the dendrite [7], [34].

We will examine common trends and particularities of the weight specialization for several models of STDP.

A “plain” STDP model postulates that all pairs of pre- and postsynaptic spikes, and only them, contribute to the weight modification, provided the time difference is in the range of the learning window, as illustrated in Fig. 2D.

A second scheme assumes that, in addition to STDP-specific weight updates, each pre- or postsynaptic spike also induces a weight update via the corresponding single-spike contribution, as illustrated in Fig. 2D. This will be referred to as ‘STDP+SCC’, as opposed to ‘plain STDP’ (or ‘STDP’ alone when no precision is needed). Although sometimes regarded as less plausible from a biological point of view, single-spike contributions can regulate the neuronal output firing in a homeostatic fashion [35], [36]. In particular, we will examine the role of the single-spike term that has been used to enhance the competition between synaptic inputs [6].

We will consider weight dependence for STDP, namely how the learning window function depends on the weight as in Fig. 2C, following experimental observations [37]. Figure 2E represents four examples of weight dependence: our ‘log-STDP’ in blue [16], the weight-independent ‘add-STDP’ for additive STDP [6], [12], ‘nlta-STDP’ proposed by Gütig et al. [13], and ‘mlt-STDP’ for the multiplicative STDP by van Rossum et al. [38] in which LTD scales linearly with the weight. For log-STDP and nlta-STDP, the weight dependence can be adjusted via a parameter. For log-STDP (left panel), the LTD curve scales almost linearly with respect to the weight for small values of this parameter, in a similar manner to mlt-STDP, whereas it is additive-like (weight-independent) STDP for large values. Likewise, nlta-STDP scales between the other ‘multiplicative’ STDP proposed by Rubin et al. [39] and add-STDP, depending on its parameter.
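The four kinds of weight dependence can be compared side by side with simple scaling functions. The sketch below is an assumption-laden illustration rather than a transcription of (23) to (25): the nlta-STDP form follows the power-law scaling commonly attributed to Gütig et al., the log-STDP form is one expression consistent with the description above (exponentially decaying LTP, LTD that is linear below a reference weight and saturates logarithmically above it), and all parameter names and values are hypothetical. Each function returns the pair (LTP scaling, LTD scaling).

```python
import math


def add_stdp(w, c_plus=1.0, c_minus=0.5):
    """Additive STDP: both scalings are independent of the weight."""
    return c_plus, c_minus


def mlt_stdp(w, c_plus=1.0, c_minus=0.5):
    """Multiplicative STDP: constant LTP, LTD proportional to the weight."""
    return c_plus, c_minus * w


def nlta_stdp(w, mu=0.1, w_max=1.0, c_plus=1.0, c_minus=0.5):
    """Power-law weight dependence; mu = 0 is additive, mu = 1 is linear in the weight."""
    x = min(max(w / w_max, 0.0), 1.0)
    return c_plus * (1.0 - x) ** mu, c_minus * x ** mu


def log_stdp(w, w_ref=1.0, ltp_decay=50.0, ltd_curvature=5.0, c_plus=1.0, c_minus=0.5):
    """Assumed log-STDP form: linear LTD below w_ref, logarithmic saturation above."""
    ltp = c_plus * math.exp(-w / (w_ref * ltp_decay))
    if w <= w_ref:
        ltd = c_minus * w / w_ref
    else:
        ltd = c_minus * (1.0 + math.log(1.0 + ltd_curvature * (w / w_ref - 1.0)) / ltd_curvature)
    return ltp, ltd


for w in (0.2, 1.0, 3.0):
    print(w, add_stdp(w), mlt_stdp(w), log_stdp(w))
```

With these definitions, `nlta_stdp` reduces to `add_stdp` for `mu=0`, and the LTD of `log_stdp` stays below that of `mlt_stdp` for weights above the reference weight, which is the sublinear saturation described in the text.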

Variability is also incorporated in the weight updates through white noise, although its effect will not be examined specifically in the present work. Typical parameters used in simulations are given in Table 1 and detailed expressions for the STDP models are provided in Methods.

Table 1. Neuronal and learning parameters.

| Quantity | Variable name and value |
| --- | --- |
| time step | |
| simulation duration | |
| Input parameters | |
| input firing rate | |
| input correlation strength | |
| PSP parameters | |
| synaptic rise time constant | |
| synaptic decay time constant | |
| axonal delays | |
| dendritic delays | |
| STDP model | |
| learning speed | |
| LTP time constant | |
| LTD time constant | |
| white noise standard deviation | |
| log-STDP in (23) | |
| LTP scaling coefficient | |
| LTP decay factor | |
| LTD scaling coefficient | |
| LTD curvature factor | |
| reference weight | |
| nlta-STDP in (24) | |
| LTP scaling coefficient | |
| LTD scaling coefficient | |
| weight-dependence exponent | |
| weight upper bound | |
| add-STDP | |
| LTP scaling coefficient | |
| LTD scaling coefficient | |
| weight upper bound | |
| mlt-STDP in (25) | |
| LTP scaling coefficient | |
| LTD scaling coefficient | |
| single-spike plasticity terms (SCC) | |
| presynaptic contribution | |
| postsynaptic contribution | |

Unless specified, the above parameters are used in numerical simulation.

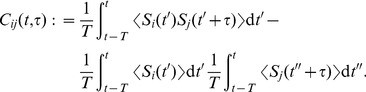

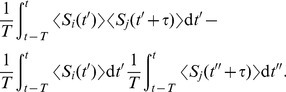

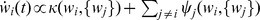

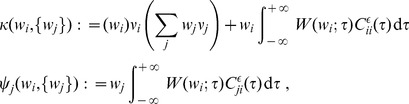

Learning dynamics

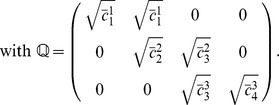

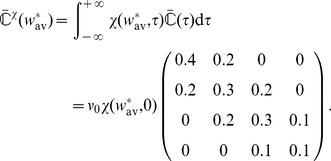

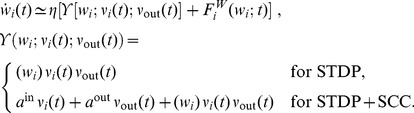

The present analysis is valid for any pairwise STDP model that is sensitive to spike-time correlations up to second order. In its present form, it cannot deal with, for example, the ‘triplet’ STDP model [40] and the stochastic model proposed by Appleby and Elliott [41]. The neuronal spiking activity is described by the corresponding firing rates and spike-time correlations. See Table 2 for an overview of the variables in our system. The input rates and correlations are assumed to be consistent over the learning epoch. Details of the analytical calculations are provided in Methods. The evolution of the vector of plastic weights is then governed by the following differential equation:

| (2) |

where the dependence over time is omitted. The first term is a function that lumps rate contributions to plasticity (including STDP) and depends on the vector of input firing rates and the neuronal output firing rate, as well as the weights. The second term describes STDP-specific spike-based effects. These STDP effects are described by a matrix, which is assumed to be independent of  and whose elements are:

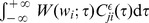

| (3) |

namely the (anti)convolution of the input spike-time cross-correlograms  with the kernel functions

with the kernel functions  , for each pair of inputs

, for each pair of inputs  and

and  . A schematic example is illustrated in Fig. 3A. For clarity purpose, we rewrite the time difference

. A schematic example is illustrated in Fig. 3A. For clarity purpose, we rewrite the time difference  as

as  hereafter. In (3), each kernel

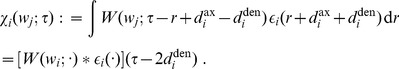

hereafter. In (3), each kernel  combines the STDP learning window at synapse

combines the STDP learning window at synapse  and the postsynaptic response kernels

and the postsynaptic response kernels  :

:

| (4) |

where the convolution concerns the time-difference variable, as illustrated in Fig. 3B. For weight-dependent STDP, the kernel is modified via the scaling of both potentiation and depression of the learning window in terms of the weight (Fig. 2C). In addition, the postsynaptic response crucially shapes the kernel [19], [27], as shown in Fig. 3C. In the case of a single neuron (as opposed to a recurrent network), the dendritic delay plays a distinct role compared to the axonal delay in that it shifts the kernel to the right as a function of the time difference, which implies more potentiation.
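To make the role of the kernel concrete, the following sketch builds a kernel numerically by filtering an STDP window with a PSP, then integrates it against a sampled correlogram. It is a simplified illustration under stated assumptions, not the expressions (3) and (4): delays are ignored, the window is a pair of exponentials with the convention that the time difference is postsynaptic minus presynaptic spike time, the lag of the correlogram is measured as the spike time of input j minus that of input i, and all parameter values are hypothetical. The paper's own sign conventions may differ.

```python
import math

DT = 0.1  # integration step in ms (illustrative)


def stdp_window(u, c_plus=1.0, c_minus=0.5, tau_plus=17.0, tau_minus=34.0):
    """Hebbian window with u = t_post - t_pre (assumed sign convention)."""
    if u > 0.0:
        return c_plus * math.exp(-u / tau_plus)    # pre before post: potentiation
    if u < 0.0:
        return -c_minus * math.exp(u / tau_minus)  # post before pre: depression
    return 0.0


def psp_kernel(t, tau_rise=1.0, tau_decay=5.0):
    """Double-exponential PSP with unit integral (assumed shape)."""
    if t < 0.0:
        return 0.0
    return (math.exp(-t / tau_decay) - math.exp(-t / tau_rise)) / (tau_decay - tau_rise)


def kernel(lag, t_max=100.0):
    """Expected STDP effect at synapse i when input j fires `lag` ms after input i.

    The input-j spike raises the output rate by psp_kernel(r) at time lag + r,
    so synapse i sees a post-minus-pre difference of lag + r.
    """
    steps = int(t_max / DT)
    return sum(stdp_window(lag + k * DT) * psp_kernel(k * DT) for k in range(steps)) * DT


def correlation_drift(correlogram, lags):
    """Integrate kernel(lag) against a correlogram sampled at uniformly spaced lags."""
    spacing = lags[1] - lags[0]
    return sum(kernel(lag) * c for lag, c in zip(lags, correlogram)) * spacing


lags = [float(k) for k in range(-5, 6)]
peak = [math.exp(-0.5 * (lag / 2.0) ** 2) for lag in lags]  # narrow peak at zero lag
print(kernel(0.0), kernel(-50.0), correlation_drift(peak, lags))
```

In this sketch a narrow correlogram peak around zero lag yields a positive drift, i.e., net potentiation of synapses whose inputs fire nearly synchronously, while an input-j spike that precedes the input-i spike by 50 ms contributes depression.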

Table 2. Variables and parameters that describe the neuronal learning system.

| Description | Symbol | Vector/matrix notation |
| --- | --- | --- |
| input firing rates | | |
| input spike-time cross-covariances | | |
| neuronal firing rate | | |
| input-output spike-time covariances | | |
| synaptic weights | | |
| PSP function | | |
| axonal delays | | |
| dendritic delays | | |
| kernel functions for a synapse | | |
| lumped plasticity rate-based effects | | |
| STDP-specific plasticity spike effects | | |
| integral value of STDP | | |

One variable denotes the time, whereas a separate variable indicates the spike-time difference (or time lag) used in correlations and covariances.

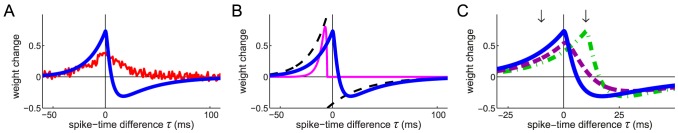

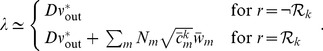

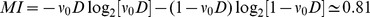

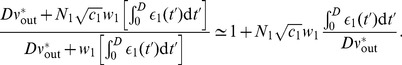

Figure 3. Kernel function.

(A) Anticonvolution of a fictive correlogram (red curve) and a typical kernel function (blue curve). The amount of LTP/LTD corresponds to the area under the curve of the product of the two functions. (B) Plot of a typical kernel as a function of the time difference (blue curve). It corresponds to (4) for log-STDP with the baseline parameters in Table 1, namely the rise and decay time constants in (29) and a purely axonal delay. The related STDP learning window is plotted in black dashed line and the mirrored PSP response in pink solid line. The axonal delay shifts both the learning window and the PSP in the same direction, so that its effect cancels out. (C) Variants of the kernel for longer PSP time constants (purple curve) and for a dendritic delay (green dashed-dotted curve). In contrast to the axonal delay, which does not play a role in (4), the dendritic delay shifts the kernel to the right.

Encoding the input correlation structure into the weight structure

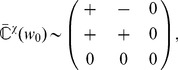

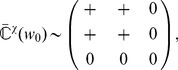

We stress that the novel contribution of the present work lies in considering general input structures, i.e., when the matrix of cross-correlograms is arbitrary. This extends our previous study [15] of the case of homogeneous within-pool correlations and no between-pool correlations, for which the matrix in (3) is diagonal (by block). We focus on the situation where the average firing rates across inputs do not vary significantly. This means that rate-based plasticity rules cannot extract the spiking information conveyed by these spike trains. In this case, pairwise spike-time correlations mainly determine the weight specialization induced by STDP via the matrix in (3), dominating the rate effects lumped in the first term of (2). The key is the spectral properties of this matrix, which will be analyzed as follows:

evaluation of the equilibrium value for the mean weight in the uncorrelated case;

calculation of the matrix that combines the input correlation structure, the PSP and STDP parameters, evaluated at the homogeneous weight vector (all weights equal to the same value);

analysis of the spectrum of this matrix to find the dominant eigenvalue(s) and the corresponding left-eigenvector(s);

decomposition of the initial weight structure (e.g., homogeneous distribution) in the eigenspace to predict the specialization.
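The last two steps of this procedure can be sketched numerically. The example below uses power iteration on the transpose to obtain the dominant eigenvalue and the corresponding left-eigenvector, then projects a homogeneous weight vector onto it. The 4-input matrix is a made-up example with a strongly correlated pair, a weakly correlated pair and some mixing between them; it is not derived from any STDP model, and power iteration assumes that a single eigenvalue dominates in magnitude.

```python
import math


def dominant_left_eigenpair(matrix, iterations=1000):
    """Power iteration on the transpose: returns (eigenvalue, unit left-eigenvector)."""
    n = len(matrix)
    v = [1.0 / math.sqrt(n)] * n
    for _ in range(iterations):
        vm = [sum(v[i] * matrix[i][j] for i in range(n)) for j in range(n)]  # v^T M
        norm = math.sqrt(sum(x * x for x in vm))
        v = [x / norm for x in vm]
    vm = [sum(v[i] * matrix[i][j] for i in range(n)) for j in range(n)]
    eigenvalue = sum(a * b for a, b in zip(vm, v))  # Rayleigh quotient for unit v
    return eigenvalue, v


# Hypothetical matrix: inputs 0-1 strongly correlated, inputs 2-3 weakly, with mixing.
coupling = [
    [1.0, 0.8, 0.2, 0.2],
    [0.8, 1.0, 0.2, 0.2],
    [0.2, 0.2, 0.5, 0.3],
    [0.2, 0.2, 0.3, 0.5],
]
eigenvalue, mode = dominant_left_eigenpair(coupling)

# Component of a homogeneous initial weight vector along the dominant mode.
# (For a non-symmetric matrix the full decomposition would need the dual basis.)
homogeneous = [0.5] * 4
projection = sum(h * m for h, m in zip(homogeneous, mode))
print(eigenvalue, mode, projection)
```

In this toy example the dominant mode weights the strongly correlated pair most but keeps nonzero components on the other pair, which is the PCA-like mixing of sources discussed for Oja's rule.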

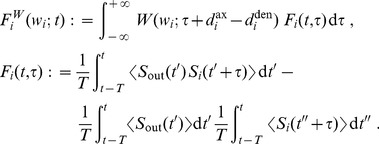

Equilibrium for the mean weight

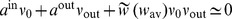

Partial stability of the synaptic dynamics is necessary in order that not all weights cluster at zero or tend to continuously grow. This also implies the stabilization of the output firing rate. Here we require that the STDP dynamics itself provides a stable fixed point for the mean weight. In particular, this must be true for uncorrelated inputs, which relates to equating to zero the rate-based term in (2).

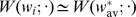

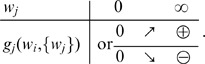

For plain STDP with weight dependence, the corresponding fixed point is determined by the STDP learning window alone, which is the same for all weights here and is related to the integral value

| (5) |

Here the weight dependence alone can stabilize the mean synaptic weights, which requires that  decreases when  increases [13], [38], [42].

For STDP+SCC, a mean-field approximation of  over the pool of incoming synapses is often used to evaluate the stability of the mean weight  , which gives  , where  is the mean input firing rate. The equilibrium values for the mean weight  and the neuronal firing rate  are then related by

| (6) |

A stable fixed point for arbitrary input configuration is ensured by  ,  and a negative derivative  as a function of  as well as  at the weight equilibrium value [19]. This means that the right-hand side is a negative function of  . Note that the additional condition  is required for networks with plastic recurrent synapses [43]. The plasticity terms  can lead to a homeostatic constraint on the output firing rate  [35]. In the case of stability, the equilibrium values  and  depend on the respective input firing rate  . For weight-dependent STDP+SCC, fixed points  also exist for individual weights  and correspond to (6) when replacing  by  and  by  . The implications of these two different ways of stabilizing  will be discussed via numerical results later.

Spectrum of  and initial weight specialization

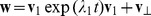

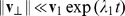

Following our previous study [16], we consider that rate-based effects vanish and focus on the initial stage when weights specialize due to the spike-time correlation term involving  in (2). This means that we approximate

| (7) |

The weight evolution can be evaluated using (7) provided spike-time correlations are sufficiently strong compared to the “noise” in the learning dynamics. The rate terms in  are proportional to the  whereas the spike-based term grows with  only. This implies stronger noise and more difficulty in potentiating weights when  is high at the baseline state, e.g., for large input firing rates. Assuming homogeneous weights  as initial condition, the weight dynamics is determined by the learning window  . As a first step, we consider the case where the matrix  is diagonalizable as a real matrix, namely  with  a diagonal matrix and  the matrix for the change of basis (all with real elements). The rows of  are the orthogonal left-eigenvectors  corresponding to the eigenvalues  ,  that are the diagonal elements of  . The weight vector  can be decomposed in the basis of eigenvectors (or spectral components)

| (8) |

where  are the coordinates of  in the new basis. By convention, we require all  to be normalized and that  at time  for  . Transposing (7) in the new basis, the evolution of  can be approximated by  , which gives

| (9) |

The initial weight specialization is thus dominated by the  related to the largest positive eigenvalues  and can be predicted by the corresponding eigenvectors  [19], [25].
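The dominance of the leading spectral component can be illustrated with a minimal sketch. It assumes a linear growth equation dw/dt = M w with a small symmetric matrix `M` whose entries are purely hypothetical (they are not taken from the paper), so that left- and right-eigenvectors coincide. The function names are ours. Starting from homogeneous weights, Euler integration drives the weight vector toward the direction of the dominant eigenvector, which is found here by power iteration.

```python
import math

def matvec(m, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]

def normalize(v):
    """Rescale a vector to unit Euclidean norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def dominant_eigvec(m, n_iter=500):
    """Power iteration; assumes the largest-modulus eigenvalue is real and isolated."""
    v = normalize([1.0 + 0.1 * i for i in range(len(m))])
    for _ in range(n_iter):
        v = normalize(matvec(m, v))
    return v

def euler_growth(m, w0, dt=0.01, n_steps=2000):
    """Integrate dw/dt = M w (initial, unbounded stage only; no weight bounds)."""
    w = list(w0)
    for _ in range(n_steps):
        dw = matvec(m, w)
        w = [w_i + dt * dw_i for w_i, dw_i in zip(w, dw)]
    return w

# Hypothetical symmetric matrix standing in for the correlation-driven term.
M = [[0.5, 0.3, 0.0],
     [0.3, 0.5, 0.1],
     [0.0, 0.1, 0.2]]

w_final = euler_growth(M, [1.0, 1.0, 1.0])   # homogeneous initial weights
v1 = dominant_eigvec(M)
# Cosine of the angle between the evolved weights and the dominant eigenvector.
alignment = abs(sum(a * b for a, b in zip(normalize(w_final), v1)))
print(round(alignment, 6))
```

In this toy setting the components along the other eigenvectors grow more slowly and become negligible relative to the dominant one, which is the mechanism behind the prediction above.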

In general, we can use the property that the set of diagonalizable matrices with complex elements is dense in the vector space of square matrices [44, p 87]. This means that it is possible to approximate  , in which case  and  may have non-real elements. If the eigenvalue with the largest real part is a real number, the same conclusion as above is expected to hold, even though the eigenvectors may not be orthogonal. When a pair of eigenvalues dominates the spectrum,  and its conjugate  , the decomposition of the homogeneous vector  on the plane of the corresponding eigenvectors, which gives  , leads to the dominant term  in the equivalent to (9);  denotes the real part here. The initial growth or decay of  is given by the derivative:

| (10) |

Note that this expression applies to the real case too, where  and the convention  simply means that  reflects the signs of the elements of the derivative vector.
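As a worked reading of this derivative, consider the following sketch. The notation is ours and may differ from the paper's: $\lambda = a + ib$ is the dominant eigenvalue, $v$ a corresponding eigenvector and $\alpha$ the complex coordinate of the initial weight vector along $v$. The factor 2 can be absorbed into the normalization convention.

```latex
\begin{align}
w(t) &\approx \alpha e^{\lambda t} v + \bar{\alpha} e^{\bar{\lambda} t} \bar{v}
      = 2\,\Re\!\left[\alpha e^{\lambda t} v\right], \\
\left.\frac{dw}{dt}\right|_{t=0} &\approx 2\,\Re\!\left[\alpha \lambda v\right], \\
\Re\!\left[\alpha \lambda v_j\right] &= a\,\Re\!\left[\alpha v_j\right] - b\,\Im\!\left[\alpha v_j\right].
\end{align}
```

The last line shows that a large imaginary part $b$ mixes the real and imaginary parts of the eigenvector in the initial drift. When $b = 0$ and $\alpha$, $v$ are real, a single term $\alpha e^{\lambda t} v$ remains and the derivative reduces to $\alpha \lambda v$.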

In most cases, the spectrum is dominated as described above and we can use the expression (10), which will be referred to as the ‘strongest’ spectral component of  . Note that, in the case of a non-diagonalizable matrix, the Jordan form of  could be used to describe the weight evolution more precisely, for example. We have also neglected the case  for  , for which the decomposition of the before-learning weight specialization  may also play a role. Nevertheless, noise in the weight dynamics will lead the system away from such unstable fixed points.

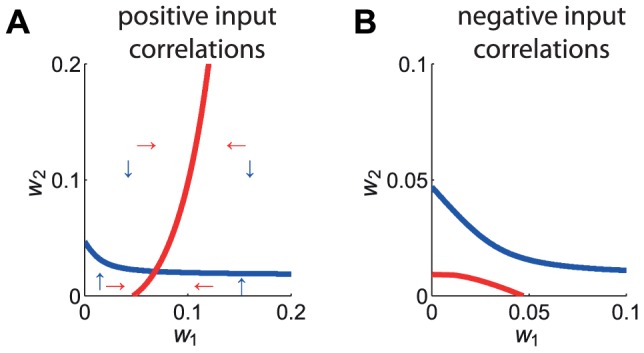

Asymptotic weight structure and stability

Now we focus on the final weight distribution that emerges, following the initial splitting. In particular, a stable asymptotic structure can be obtained when the learning equation (2) has a (stable) fixed point, as illustrated in Fig. 4A for the simple case of two weights  and  . Weight dependence can lead to the existence of at least one realizable and stable fixed point. Two conditions ensure the existence of a solution to the learning equation. First, the weight dependence should be such that LTD vanishes for small weights while LTP vanishes for large weights, as is the case for both log-STDP and nlta-STDP for  . Second, the inputs should be positively correlated. If this second assumption is lifted, the fixed point may become unrealizable (e.g.,  ) or simply not exist as in Fig. 4B. Nevertheless, we can also conclude the existence of a stable fixed point in the range of small negative correlations. This follows from the continuity of the matrix coefficients in (2), which determine the fixed points  , with respect to the matrix elements of  .

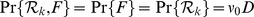

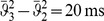

Figure 4. Existence of a fixed point for the weight dynamics.

(A) Curves of the zeros of (39) for  weights in the case of positively correlated inputs. The two curves have an intersection point, as the equilibrium curve for  in red spans all  , while that for  in blue spans all  . The arrows indicate the signs of the derivatives  and  in each quadrant (red and blue, resp.). (B) Similar to A with negative input correlations, for which the curves do not intersect.

Obtaining a general result about the relationship between the fixed point(s) and  is difficult because  changes together with  for a weight-dependent learning window  . This implies that the eigenvector basis  is modified together with  . With the further assumption of a weak weight dependence and for a single dominant eigenvector  , the term  , which determines the weight specialization, remains similar to  . By this, we mean that the elements of both vectors are sorted in the same order. At the equilibrium, rate-based effects lumped in  balance the spike-based effects that are qualitatively described by  . Under our assumptions, the vector elements of  are decreasing functions of the weights  . It follows that inputs corresponding to larger elements of (10) end up at a higher level of potentiation. However, when  has a strong antisymmetric component due to negative matrix elements, it can exhibit complex conjugate dominant eigenvalues with large imaginary parts. The weight vector  may experience a rotation-like evolution, in which case the final distribution differs qualitatively from the initial splitting. Nevertheless, the weights with strongest initial LTP are expected to be mostly potentiated eventually. Further details are provided in Methods. Deviation from the predictions can also occur when several eigenvalues with similar real parts dominate the spectrum.

In the particular case of additive STDP, a specific issue arises since the existence of a fixed point is not guaranteed. When  has purely complex eigenvalues, the weight dynamics are expected to exhibit an oscillatory behavior that may impair the emergence of an asymptotic weight structure, as was pointed out by Sprekeler et al. [27]. An example with add-STDP+SCC and eigenvalues that have large imaginary parts is provided in Text S1.

As some weights grow larger, they compete to drive the neuronal output firing [12]. This phenomenon is relatively weak for Poisson neurons compared to integrate-and-fire neurons [16]. For STDP+SCC, synaptic competition is enhanced when using  . Following (6), the more negative  is, the lower the output firing rate  that is maintained by STDP at the equilibrium. This also holds when inputs are correlated. These rate effects lead to a form of sparse coding in which fewer weights are significantly potentiated, while the remaining weights are kept small. Another interpretation of the effect of  relies on the fact that all weights are homogeneously depressed after postsynaptic spiking. Then, only the weights of inputs involved in triggering firing may escape depression, provided STDP sufficiently potentiates them. This concerns inputs related to a common correlation source and may result in a winner-take-all situation. Moreover, this effect increases with the output firing rate and may become dominant when STDP generates strong LTP, leading to large weights.

In summary, for plain STDP, the final weight structure is expected to reflect the initial splitting, which is determined by the strongest spectral component of  in (10), at least for the most potentiated weights that win the competition. The assumption of “sufficiently weak” weight dependence holds for log-STDP with  (sublinear saturation for  ) and for nlta-STDP with small values of  (for  away from the bounds). STDP+SCC may modify the final weight distribution when the single-spike contributions have effects of comparable strength to STDP. In particular, competition between correlation sources is expected to be enhanced when  is sufficiently large and negative. In the following sections, we verify these predictions using numerical simulation for various input configurations.

Input spike-time correlation structure

In order to illustrate the above analysis, we consider input configurations that give rise to “rich” matrices of pairwise correlations  . Model input spike trains commonly combine stereotypical activity and random “background” spikes. Namely, to predict the evolution of plastic synaptic weights, it is convenient that the statistical properties of the inputs are invariant throughout the learning epoch (e.g., the presentation of a single stimulus). Mathematically, we require the input spike trains to be second-order stationary. In this way, the input firing rates  and the spike-time correlograms  in (2) are well-defined and practically independent of time  , even though the spike trains themselves may depend on time. The formal definitions of  and  in Methods combine a stochastic ensemble average and a temporal average. This allows us to deal with a broad class of inputs that have been used to investigate the effect of STDP, such as spike coordination [6], [13], [14], [36] and time-varying input signals that exhibit rate covariation [12], [27], [45], as well as elaborate configurations proposed recently [46]–[48]. Most numerical results in the present paper use the spike coordination that mixes input correlation sources. In the last section of Results, rate covariation will also be examined for the sake of generality.

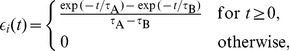

Pools with mixed spike-time correlation

Inputs thus generated model signals that convey information via precise timing embedded in noisy spike trains. A simple example consists of instantaneously correlated spike trains that correspond to input neurons belonging to the same afferent pathway, which have been widely used to study STDP dynamics [13], [14], [36]. Here we also consider the situation where synapses can take part in conveying distinct independent signals, as well as time lags between the relative firing of inputs. To do so, spike trains are generated using a thinning of homogeneous Poisson processes. Namely, independent homogeneous Poisson processes are used as references  to determine correlated events at a given rate  that trigger spikes for some designated inputs. For input  , we denote by  the number of spikes associated with each correlated event from  . The probability of firing after a given latency  is  with  . Outside correlated events, inputs randomly fire spikes such that they all have the same time-averaged firing rate  . This corresponds to an additional Poisson process with rate  , summing over all independent references indexed by  . As a result, for two inputs  and  related to a single common reference  , the between-pool cross-covariance is given by

| (11) |

where  is the Dirac delta function. The correlogram comprises delta peaks at the time difference between all pairs of spikes (indexed by  and  , respectively) coming from inputs  and  . The covariance contributions in (11) from distinct references sum. This method of generating input pattern activity is an alternative to that used in previous studies [8], [9], but it produces similar correlograms.
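The thinning procedure described above can be sketched as follows, under simplifying assumptions that are ours: a single reference, zero latency (coincident firing), and at most one spike per input per correlated event, copied with probability `c`. The function and parameter names are hypothetical. The background rate is chosen so that every input keeps the same time-averaged rate `rate_in`.

```python
import random

def poisson_times(rate, duration, rng):
    """Event times of a homogeneous Poisson process on [0, duration)."""
    times = []
    t = rng.expovariate(rate)
    while t < duration:
        times.append(t)
        t += rng.expovariate(rate)
    return times

def correlated_inputs(n_inputs, rate_in, rate_ref, c, duration, seed=0):
    """Spike trains sharing one reference process (simplified sketch).

    Each reference event is copied into each input with probability c;
    independent background spikes bring the total rate up to rate_in.
    """
    rng = random.Random(seed)
    reference = poisson_times(rate_ref, duration, rng)
    background_rate = rate_in - c * rate_ref
    if background_rate <= 0:
        raise ValueError("rate_in must exceed c * rate_ref")
    trains = []
    for _ in range(n_inputs):
        copied = [t for t in reference if rng.random() < c]
        background = poisson_times(background_rate, duration, rng)
        trains.append(sorted(copied + background))
    return reference, trains

# Hypothetical parameters: 4 inputs at 10 sp/s, reference at 10 events/s.
reference, trains = correlated_inputs(4, 10.0, 10.0, 0.5, 200.0)
mean_rates = [len(train) / 200.0 for train in trains]
# Coincident spikes between two inputs: expected rate is c**2 * rate_ref.
shared = len(set(trains[0]) & set(trains[1]))
print(mean_rates, shared)
```

With zero latency, the cross-correlogram of two such inputs has a single delta peak at zero lag whose mass is the rate of shared spikes. Latencies and multiple references would add further peaks, as described in the text.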

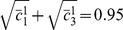

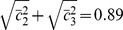

Extraction of the principal spectral component

This first application shows how STDP can perform PCA, which is the classical spectral analysis for symmetric matrices. To do so, we consider input pools that have multiple sources of correlated activity, which gives within-pool and between-pool correlations. In the example in Fig. 5A, inputs are partitioned into  pools of 50 inputs each that have the same firing rate  . Some pools share common references that trigger coincident firing as described in (11): pools  and  (from left to right) share a correlation reference  with respective correlation strengths  and  for the concerned inputs; pools  and  share  with  ; and pools  and  share  with  . The overline indicates pool variables. All references  correspond to coincident firing (  ) and the rate of correlated events is  . The matrix  is composed of blocks and given by

| (12) |

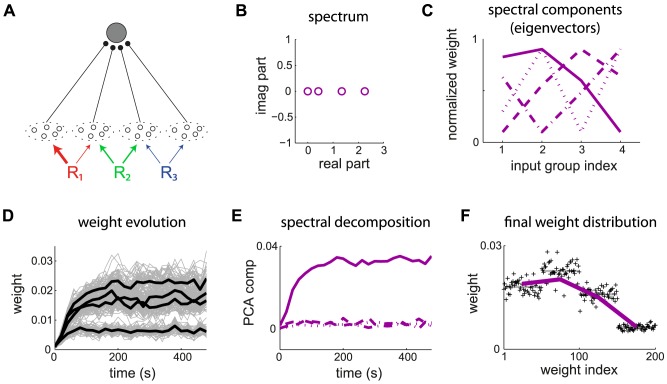

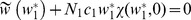

Figure 5. Principal component analysis for mixed correlation sources.

(A) The postsynaptic neuron is excited by  pools of 50 inputs each with the global input correlation matrix  in (13). The thickness of the colored arrows represents the correlation strengths from each reference to each input pool. The input synapses are modified by log-STDP with  . The simulation parameters are given in Table 1. (B) Spectrum and (C) eigenvectors of  . The eigenvalues sorted from the largest to the smallest one correspond to the solid, dashed, dashed-dotted and dotted curves, respectively. (D) Evolution of the weights (gray traces) and the means over each pool (thick black curves) over 500 s. (E) Evolution of the weights  in the basis of spectral components (eigenvectors in C). (F) Weight structure at the end of the learning epoch. Each weight is averaged over the last 100 s. The purple curve represents the dominant spectral component (solid line in C).

Each row of  corresponds to a single correlation source here. We further assume that all synapses have identical kernels  . Combining (11) and (3), their covariance matrix  reads

| (13) |

The covariance matrix  in (13) is symmetric and thus diagonalizable, so it has real eigenvalues and admits a basis of real orthogonal eigenvectors. Here the largest real eigenvalue is isolated in Fig. 5B. The theory thus predicts that the corresponding spectral component (solid line in Fig. 5C) dominates the dynamics and is potentiated, whereas the remaining ones are depressed. Numerical simulation using log-STDP agrees with this prediction, as illustrated in Fig. 5E. By gradually potentiating the correlated inputs, weight-dependent STDP results in a multimodal weight distribution that can better separate the mean weights of the pools. The final weight structure in Fig. 5F reflects the dominant eigenvector. Despite the variability of individual weight traces in Fig. 5D due to the noise in the weight update and the rather fast learning rate used here, the emerging weight structure remains stable in the long run.
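A pool-level sketch can make the PCA-like behavior concrete. It assumes that the pool covariance is proportional to the product of the transposed mixing matrix with itself, since contributions from distinct references add up and each contribution scales with the product of the two correlation strengths. The mixing strengths in `C` below are hypothetical and are not the values used in Fig. 5. The names `gram` and `power_iteration` are ours.

```python
import math

def gram(c):
    """Return C^T C for a sources-by-pools mixing matrix C (list of rows)."""
    n_pools = len(c[0])
    return [[sum(row[i] * row[j] for row in c) for j in range(n_pools)]
            for i in range(n_pools)]

def power_iteration(m, n_iter=200):
    """Dominant eigenpair of a symmetric matrix with an isolated top eigenvalue."""
    v = [1.0] * len(m)
    for _ in range(n_iter):
        w = [sum(a * b for a, b in zip(row, v)) for row in m]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    mv = [sum(a * b for a, b in zip(row, v)) for row in m]
    lam = sum(a * b for a, b in zip(v, mv))   # Rayleigh quotient
    return lam, v

# Hypothetical mixing: each row is one correlation source, each column a pool.
C = [[0.1, 0.1, 0.0, 0.0],
     [0.0, 0.2, 0.2, 0.0],
     [0.0, 0.0, 0.1, 0.1]]

G = gram(C)
lam, v = power_iteration(G)
print(round(lam, 6), [round(x, 3) for x in v])
```

In this toy configuration the dominant eigenvector weights the two middle pools most strongly, although these pools receive input from all three sources. The principal component therefore mixes sources instead of isolating one of them.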

Spike transmission after learning

Following the specialization induced by STDP, the modified weight distribution tunes the transient response to the input spikes. To illustrate this, we examine how STDP modifies the neuronal response to the three correlation sources  in the previous configuration in Fig. 5. Practically, we evaluate the firing probability during a given time interval of  following a spike from input  , similar to a peristimulus time histogram (PSTH). Before learning, the PSTHs for  (red),  (green) and  (blue) are comparable in Fig. 6A, which follows because  ,  and  . After learning, pools  and  that relate to  and  are much more potentiated than pool  by STDP in Fig. 5F. Consequently, even though pool  is potentiated and transmits correlated activity from  , the spike transmission after learning is clearly stronger for  and  than  in Fig. 6B. The respective increases of the areas under the PSTHs are summarized in Fig. 6C. The overall increase in firing rate (from about 10 to 30 sp/s) is not supported equally by all  .
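A PSTH of the kind used here can be computed with a short routine. The sketch below is generic rather than the authors' analysis code. The function name, the bin width and the toy spike times are illustrative assumptions.

```python
from bisect import bisect_left

def psth(event_times, spike_times, window, bin_width):
    """Mean firing rate (spikes/s) in bins following each event.

    Counts output spikes in [t0, t0 + window) after every event time t0
    and normalizes by the number of events and the bin width.
    """
    n_bins = int(round(window / bin_width))
    counts = [0] * n_bins
    spikes = sorted(spike_times)
    for t0 in event_times:
        start = bisect_left(spikes, t0)
        stop = bisect_left(spikes, t0 + window)
        for s in spikes[start:stop]:
            # Clamp guards against rounding at the upper edge of the window.
            k = min(int((s - t0) / bin_width), n_bins - 1)
            counts[k] += 1
    norm = len(event_times) * bin_width
    return [count / norm for count in counts]

# Toy data: two correlated events and four output spikes (times in seconds).
rates = psth([0.0, 1.0], [0.002, 0.012, 1.003, 1.5], window=0.02, bin_width=0.01)
print(rates)
```

Subtracting the baseline firing rate from each bin and summing the positive remainder gives the area above baseline.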

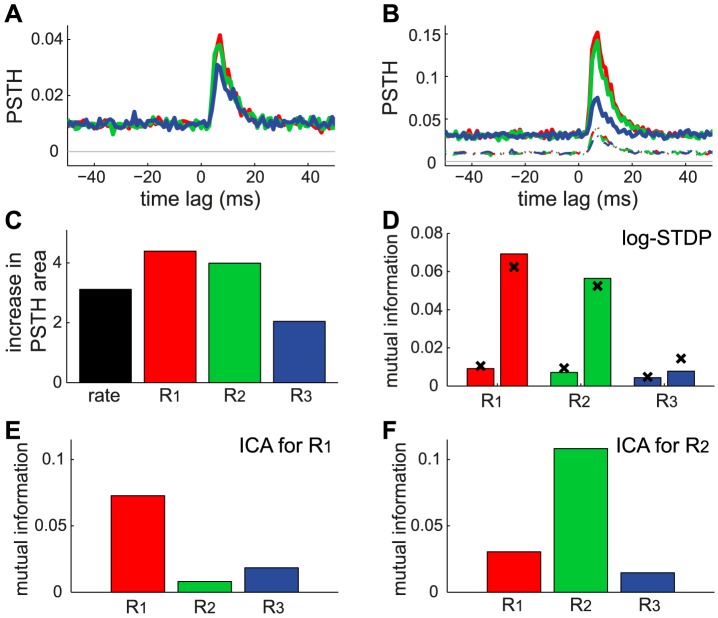

Figure 6. Transmission of the correlated activity after learning by STDP.

The results are averaged over 10 neurons and 100 s with the same configuration as in Fig. 5. Comparison of the PSTHs of the response to each correlated event of  (A) before and (B) after learning for  (red),  (green) and  (blue). Note the change of scale for the y-axis; the curves in A are reproduced in B as dashed lines. (C) Ratio of the learning-related increase of mean firing rate (black) and PSTHs in B with respect to A (same colors). For each PSTH, only the area above its baseline is taken into account. (D) Mutual information  between a correlated event and the firing of two spikes, as defined in (14). For each reference, the left (right) bar indicates  before (after) learning. The crosses correspond to the theoretical prediction using (16) as explained in the text. (E) Example of neuron selective to  with weight means for each pool set by hand to  ;  and  . The bars correspond to the simulated  similar to D. (F) Same as E with a neuron selective to  and  ;  and  .

To further quantify the change in spiking transmission, we evaluate the mutual information  based on the neuronal firing probability, considering correlated events as the basis of information. In this way, the increases in PSTHs are compared to the background firing of the neuron, considered to be noise. In contrast to previous studies that examined optimality with respect to limited synaptic resources [30], [31], we only examine how STDP tunes the transmission of synchronous spike volleys. We define  with respect to the event ‘the neuron fires two spikes or more within the period  ’, denoted by  ;  is its complement. Hereafter, we denote by  and  the occurrence of a correlated event and its complement, respectively. The mutual information is defined as

| (14) |

with  and  . The probabilities are defined for the events occurring during a time interval  . We have  and the realization of  can be evaluated using a Poisson random variable with intensity

| (15) |

In the above expression,  can be evaluated via the baseline firing rate for  and the PSTHs in Fig. 6B for  . Namely, we adapt (48) and (49) in Methods to obtain

| (16) |

Using the simulation results for the mean  and  we obtain the predicted values (crosses) for  in (14) in Fig. 6D. They are in reasonable agreement with  evaluated from the simulated spike trains after dividing the 100 s duration in bins of lengths  . In a clearer manner than with the ratio in Fig. 6C,  shows that the strong potentiation induced by STDP leads to the reliable transmission (considering that Poisson neurons are noisy) of the correlated events involved in the strong spectral component of  , namely  and  , while that for  remains poor.
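The evaluation described above can be sketched numerically. The following is a minimal illustration, not the computation used for Fig. 6D: the function names, the base-2 logarithm and all numerical values are assumptions introduced here. It combines the two ingredients named in the text, namely the probability that a Poisson count reaches two or more spikes and the mutual information between two binary events (correlated event or not, two-or-more spikes or not).

```python
import math


def prob_two_or_more(intensity):
    """P(N >= 2) for a Poisson count N whose mean is `intensity`
    (expected number of spikes in the observation window)."""
    return 1.0 - math.exp(-intensity) * (1.0 + intensity)


def mutual_information(p_event, p_fire_given_event, p_fire_given_no_event):
    """Mutual information in bits (base 2 is an assumption) between a binary
    input event and a binary output event, from the event probability and
    the two conditional firing probabilities."""
    p_s = {True: p_event, False: 1.0 - p_event}
    p_r_given_s = {True: p_fire_given_event, False: p_fire_given_no_event}

    def cond(r, s):
        # P(output = r | input = s)
        return p_r_given_s[s] if r else 1.0 - p_r_given_s[s]

    mi = 0.0
    for r in (True, False):
        p_r = sum(p_s[s] * cond(r, s) for s in (True, False))
        for s in (True, False):
            joint = p_s[s] * cond(r, s)
            if joint > 0.0:  # terms with zero joint probability contribute 0
                mi += joint * math.log2(joint / (p_s[s] * p_r))
    return mi


# Hypothetical numbers, chosen only for illustration.
p_event = 0.2                          # probability of a correlated event per bin
p_base = prob_two_or_more(0.3)         # background firing only
p_resp = prob_two_or_more(2.0)         # firing raised by the correlated event
print(mutual_information(p_event, p_resp, p_base))
```

In this sketch, raising the background intensity while keeping the response intensity fixed moves the two conditional probabilities closer together and lowers the mutual information, which is the sense in which background firing acts as noise.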

For the input firing rate  used here, STDP potentiates the weights such that the postsynaptic neuron fires at  after learning. Because the frequency of correlated events for each source  is also 10 times per second,  is not so high in our model. Perfect detection for  corresponds to firing three spikes for each corresponding correlated event and none otherwise. In this case,  ,  and  , yielding the maximum  . In comparison, for  and the baseline log-STDP with  (results not shown), the firing rate after training is roughly eightfold that before learning. Then,  for  instead of about  in Fig. 6. For the Poisson neuron especially, high firing rates lead to poor  because of the noisy output firing rate. Performance can be much enhanced by using inhibition [9], but we will not pursue optimal detection in the present paper.
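The perfect-detection bound can be made explicit with a short derivation. The symbols below are assumptions introduced for illustration, since the original notation is not reproduced here: $S$ denotes the occurrence of a correlated event in a bin, $R$ the event that the neuron fires two spikes or more in that bin, and $p = P(S)$. Under perfect detection, $R$ occurs exactly when $S$ does, so the mutual information reduces to the entropy of the correlated event:

```latex
P(R \mid S) = 1, \qquad P(R \mid \bar{S}) = 0, \qquad P(R) = P(S) = p
\;\Longrightarrow\;
\mathrm{MI}_{\max} = -p \log_2 p - (1 - p) \log_2 (1 - p).
```

The base-2 logarithm is also an assumption; another base only rescales the value. The bound depends on the bin length only through $p$.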

From PCA to ICA: influence of STDP properties on input selectivity

The high neuronal response to both correlation sources in Fig. 6C arises because pools  ,  and  in Fig. 5F exhibit strong weights, in a similar manner to the example with Oja's rule in Fig. 1D. However, it is possible to obtain a much better neuronal selectivity to either  or  , as illustrated in Fig. 6E–F for two distributions set by hand. The corresponding mean weights were chosen such that  favors the desired correlation source compared to others under the constraint of positive weights; cf.  in (12), where  indicates the matrix transposition. We use mutual information as a criterion to evaluate whether kSCA resembles PCA or ICA [33]. Here the analysis of independent spectral components for the postsynaptic neuron means a strong response to only one correlation source in terms of  .

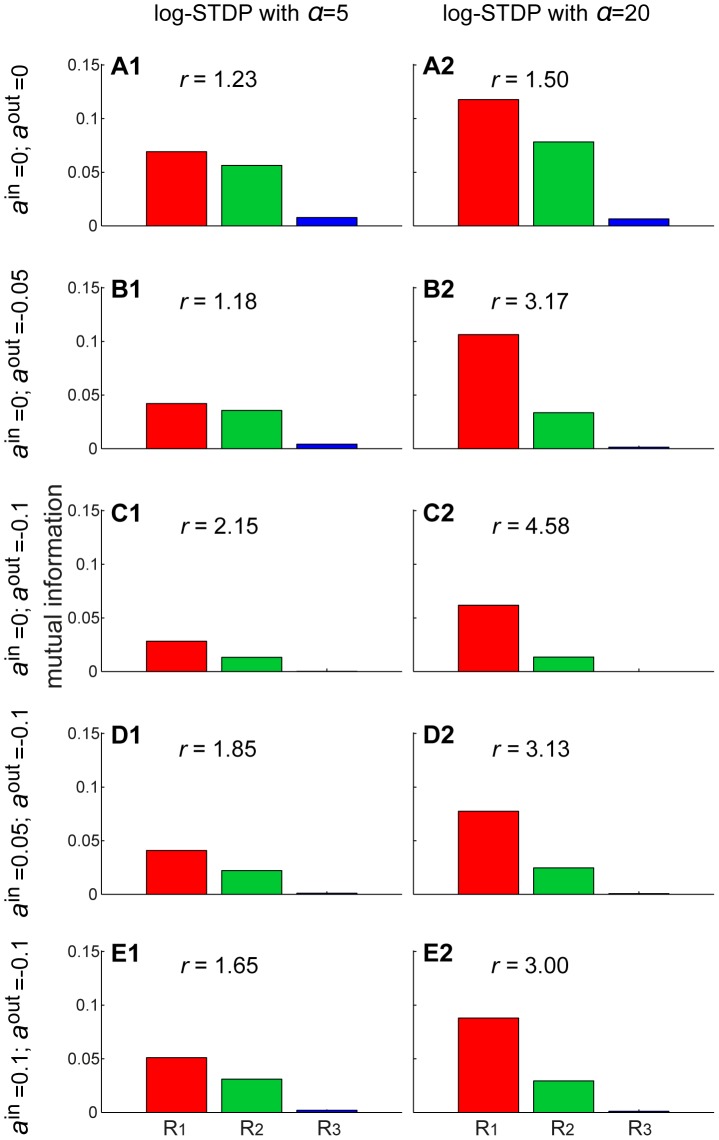

To separate correlation sources as in Fig. 6E–F, stronger competition between the synaptic inputs is necessary. When tuning the weight dependence of log-STDP toward an additive-like regime, input weights corresponding to the dominant spectral component are more strongly potentiated. This increase results in higher  with  in Fig. 7A2 for both  and  , as compared to  in Fig. 7A1. However, the neuron still responds strongly to  in addition to  , as indicated by the ratio  between the respective  . So long as STDP causes the weights to specialize in the direction of the dominant spectral component, pool  is the most potentiated and the neuron does not isolate  . Even for log-STDP with  or add-STDP (not shown), we obtain  . This follows from the positive input correlations used here. We need a mechanism that causes inputs excited by distinct correlation sources to compete more strongly to drive the neuron. The synaptic competition induced by a negative postsynaptic single-spike contribution  satisfactorily increases the ratio  in Fig. 7B–C compared to A (except for B1). One drawback is that the more negative  is, the smaller the mean equilibrium weight becomes, cf. (6). Consequently, even though the ratio  increases,  decreases and the transmission of correlations is weakened. To compensate and obtain sufficiently large weights after learning, one can use a positive presynaptic single-spike contribution  . This gives both  for  and large ratios  in Fig. 7D2–E2, but not in Fig. 7D1–E1. We conclude that, for the neuron to perform ICA and robustly select  , STDP itself should also be sufficiently competitive; see Fig. 7B–E with  compared to  . By homogeneously weakening all weights after each output spike in addition to strong STDP-based LTP, only the inputs that most strongly drive the output firing remain significantly potentiated. In other words,  introduces a threshold-like effect on the correlation to determine which inputs experience LTP and LTD. In agreement with our prediction, this “dynamic” threshold becomes more effective for large output firing rates, which only occur when STDP leads to strong LTP ( ). This is reminiscent of BCM-like plasticity for firing rates [49]. Note that we found in simulation (not shown) that using  alone did not lead to ICA; this only increases the mean input weights.
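The threshold-like effect described above can be illustrated with a deliberately simplified toy model. This is not the learning equation of the paper: the names `pairing_ltp`, `w_out` and `nu_out`, the additive form of the drift and all numerical values are assumptions made here for illustration. In particular, the pairing-induced LTP of each pool is held fixed, whereas in the full model it would itself depend on the weights and firing rates.

```python
def potentiated_pools(pairing_ltp, w_out, nu_out):
    """Toy net drift per input pool: pairing-induced LTP plus a homogeneous
    change of w_out per output spike at output rate nu_out.
    Returns the indices of pools whose net drift is positive."""
    drift = [ltp + w_out * nu_out for ltp in pairing_ltp]
    return [i for i, d in enumerate(drift) if d > 0.0]


# Hypothetical pairing-induced LTP for three pools (arbitrary units);
# the last pool is the one that drives the output most strongly.
pairing_ltp = [0.3, 1.0, 3.0]
w_out = -0.1  # hypothetical negative postsynaptic single-spike contribution

for nu_out in (5.0, 20.0):  # hypothetical low and high output firing rates
    print(nu_out, potentiated_pools(pairing_ltp, w_out, nu_out))
```

With a negative `w_out`, the product `-w_out * nu_out` acts as a threshold on the pairing-induced LTP. In this toy setting, raising the output rate raises the threshold, so fewer pools keep a positive net drift.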

Figure 7. From PCA to ICA.

The plots show the mutual information between each correlation source  and the neuronal output firing after learning as in Fig. 6D–F. The neuron is stimulated by  pools that mix three correlation sources as in Fig. 5. The two columns compare log-STDP with different degrees of weight dependence: (1)  and (2)  that induces stronger competition via weaker LTD. Each row corresponds to a different combination of single-spike contributions: (A) plain log-STDP meaning  and log-STDP+SCC with (B)  and  ; (C)  and  ; (D)  and  ; (E)  and  . The scale on the y-axis is identical for all plots. The ratio of  between  and  is indicated by  .

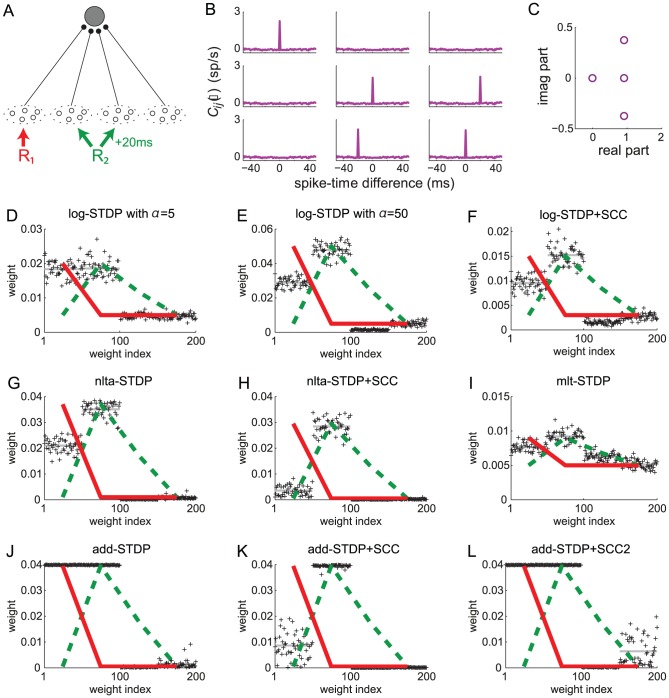

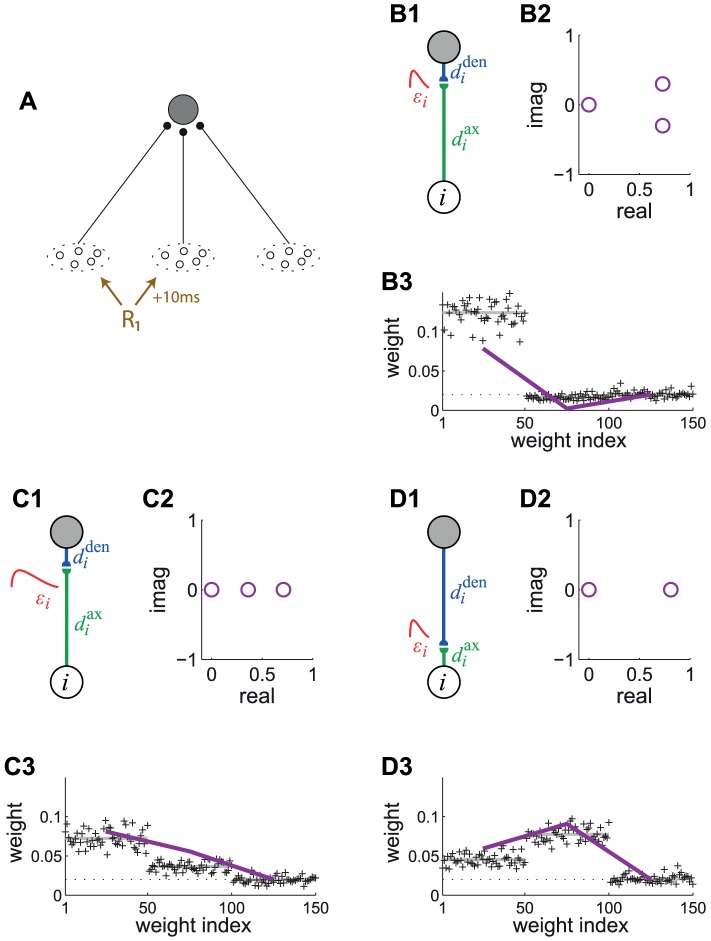

To further examine the effect of STDP parametrization and assess the generality of our analysis, we examine common trends and discrepancies in the weight specialization for different schemes for weight dependence for plain STDP: log-STDP [16], nlta-STDP [13], mlt-STDP [38] and add-STDP [12]; as well as the influence of single-spike contributions with log-STDP+SCC, nlta-STDP+SCC and add-STDP+SCC [6]. We consider the configuration represented in Fig. 8A where two sources of correlation excite three pools among four. The third pool from the left is stimulated by the same source as the second pool after a time lag of 20 ms. The corresponding spectrum of  is given in Fig. 8C, leading to two dominant spectral components with equal real part, one for each correlation source. Due to the large imaginary parts of the complex conjugate eigenvalues related to  , the final distribution in Fig. 8D does not reflect the green component in the sense that pool  is not potentiated, but depressed. This follows because its correlated stimulation comes late compared to pool  . Therefore, the weights from pool  become depressed when the weights from pool