Abstract

Bilinguals have been shown to activate their two languages in parallel, and this process can often be attributed to overlap in input between the two languages. The present study examines whether two languages that do not overlap in input structure and that have distinct phonological systems, such as American Sign Language (ASL) and English, are also activated in parallel. Eye-movements of hearing ASL-English bimodal bilinguals and English monolinguals were recorded during a visual world paradigm in which participants were instructed, in English, to select objects from a display. In critical trials, the target item appeared with a competing item that overlapped with the target in ASL phonology. Bimodal bilinguals looked more at competing items than at phonologically unrelated items, and looked more at competing items than monolinguals did, indicating activation of the signed language during spoken English comprehension. The findings suggest that language co-activation is not modality-specific, and provide insight into the mechanisms that may underlie cross-modal language co-activation in bimodal bilinguals, including the role that top-down and lateral connections between levels of processing may play in language comprehension.

Keywords: Bilingualism, Language Processing, American Sign Language

Successful understanding of spoken language involves the activation and retrieval of lexical items that correspond to incoming featural information. The nature of spoken language invites a degree of ambiguity into this process: until a disambiguating phoneme arrives, the syllable “can-” may activate lexical entries for both “candle” and “candy” (Marslen-Wilson, 1987; Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy, 1995). For bilinguals who use two spoken languages (commonly referred to as unimodal bilinguals), this ambiguity is not limited to a single language; auditory input non-selectively activates corresponding lexical items regardless of the language to which they belong (Dijkstra & Van Heuven, 2002; Marian & Spivey, 2003a,b; Spivey & Marian, 1999; but see Weber & Cutler, 2004), which suggests that unimodal bilinguals co-activate both languages during spoken language comprehension. However, unimodal bilingual research typically relies on some degree of structural similarity between languages. The present study investigated whether language co-activation occurs during spoken comprehension between languages that do not share modality, by examining parallel processing in bimodal bilinguals, users of a spoken and a signed language.

Evidence from research exploring language co-activation in unimodal bilinguals indicates that a bilingual’s two spoken languages can interact at various levels of processing. For instance, cross-linguistic priming effects have been found at both the lexical (Finkbeiner, Forster, Nicol, & Nakamura, 2004; Schoonbaert, Duyck, Brysbaert, & Hartsuiker, 2009) and the syntactic (Hartsuiker, Pickering, & Veltkamp, 2004; Loebell & Bock, 2003) levels, and there appears to be a close relationship between orthography and phonology across languages in bilinguals (Kaushanskaya & Marian, 2007; Thierry & Wu, 2007). Furthermore, unimodal bilinguals have been shown to co-activate lexical items in their two languages across highly diverse language pairs (Blumenfeld & Marian, 2007; Canseco-Gonzalez et al., 2010; Cutler, Weber, & Otake, 2006; Thierry & Wu, 2007; Weber & Cutler, 2004). Some of the most compelling evidence for language co-activation comes from eye-tracking studies, which often rely on phonological overlap between cross-language word pairs (e.g., English “marker” and Russian “marka”/stamp). Phonology is important in cross-linguistic interaction: the speed and accuracy of unimodal bilinguals in lexical decision tasks are affected by the degree of phonological overlap (e.g., Marian, Blumenfeld, & Boukrina, 2008), and the extent to which unimodal bilinguals entertain cross-linguistic competitors is affected by fine-grained phonological details, such as voice-onset time (e.g., Ju & Luce, 2004). It is possible that the early cross-linguistic activation found in eye-tracking studies is modality-specific, in that it occurs only when the input overlaps in structure across languages. If that is the case, the pattern of parallel activation seen in unimodal bilinguals would not be expected in bimodal bilinguals, whose languages involve distinct, non-overlapping phonological and lexical systems.

This interaction of sign and speech has been investigated in several recent studies with bimodal bilinguals. Parallel activation has been found in production studies, where hearing bimodal bilinguals commonly produce code-blends, which are simultaneous productions of spoken and signed lexical items. For example, when asked to tell a story to an interlocutor in English, bimodal bilinguals produced semantically related ASL lexical items that were strongly time-locked to the English utterances (Emmorey, Borinstein, Thompson, & Gollan, 2008). This pattern was seen even when the bilinguals had no knowledge of whether their interlocutor was also bilingual (Casey & Emmorey, 2008). These findings suggest that bimodal bilinguals were able to access lexical items from both ASL and English simultaneously during language production. Additionally, a bimodal bilingual’s two languages have been shown to compete during comprehension. Van Hell, Ormel, van der Loop, and Hermans (2009) tested hearing Dutch-Sign Language of the Netherlands (SLN) bilinguals using a word-verification task, in which a sign and a picture were presented and participants judged whether they matched in meaning. Dutch-SLN bilinguals were slower to reject mismatched pairs when the Dutch translation of the sign overlapped in phonology with the Dutch picture label, suggesting that the bilinguals activated Dutch when processing SLN. While the Van Hell et al. study indicated that spoken-language knowledge is active during sign-language processing, recent evidence suggests that sign-language information is also activated during written-language comprehension. In a study by Morford, Wilkinson, Villwock, Piñar, and Kroll (2011), deaf American Sign Language-English bilingual participants judged printed word pairs as semantically related (BIRD/DUCK) or semantically unrelated (MOVIE/PAPER). Some of the word pairs consisted of words whose translation equivalents overlapped in ASL phonology. 
Deaf bimodal bilinguals were slower to reject semantically unrelated word pairs, but were faster to accept semantically related pairs, when the translations overlapped in ASL phonology. In contrast, hearing unimodal bilinguals did not show the same effects, suggesting that the reaction-time differences were driven by the co-activation of ASL and written English in the bimodal bilinguals.

Previous research on parallel processing in bimodal bilinguals suggests that language co-activation may involve the integration of linguistic information across modalities during production (Casey & Emmorey, 2008; Emmorey et al., 2008) and during the comprehension of written (Morford et al., 2011) and signed (Van Hell et al., 2009) language. However, to our knowledge, no study of hearing bimodal bilinguals has examined online spoken-language processing. Critically, co-activation via phonological overlap is chiefly driven by linguistic input: lexical items that share featural (i.e., phonological) similarity with the input are activated, regardless of language membership. Since spoken and signed languages do not share modality, the connection between two languages that is most commonly emphasized in unimodal bilingual speech-comprehension research (overlap at the phonological level) is not present in bimodal bilinguals. Investigating language co-activation in bimodal bilinguals therefore offers a way to examine whether mechanisms other than bottom-up, input-driven activation, namely top-down and lateral connections between languages, may play a role in language comprehension.

One method for examining language co-activation during bimodal bilingual language comprehension is the visual world paradigm, wherein participants’ eye-movements are recorded while they are instructed to manipulate objects in a visual scene (Tanenhaus et al., 1995). Eye-movements have been shown to index the activation of lexical items that results from the match between representations derived from the visual input and from the spoken input. Furthermore, the visual world paradigm has been successfully implemented with bilingual participants. Marian and Spivey (2003a,b) performed a bilingual version of the visual world task, in which one of the objects in the display represented the target word (e.g., marker), while another represented a competitor word that overlapped phonologically with the target word in the unused language (e.g., stamp, Russian marka). The authors found that unimodal bilinguals fixated cross-language competitors significantly more than monolinguals did, and more than unrelated filler items, reflecting activation of the non-target language.

The present study adapted the bilingual visual world paradigm to examine the pattern of parallel language activation in hearing bimodal bilinguals. English monolinguals and highly proficient American Sign Language (ASL)-English bilinguals viewed computer displays containing four pictures (a target, a competitor, and two distractors) in a three-by-three grid, and were instructed to click on one of the images. Target-competitor pairs consisted of signs matched on three of the four phonological parameters of ASL: handshape, movement, location of the sign in space, and orientation of the palm/hand. For example, the signs for cheese and paper match in handshape, location, and orientation, but differ in the movement of the sign. Moreover, the target-competitor pairs did not overlap in English phonology. We hypothesized that bilinguals would look more at competitor items than at items that were phonologically unrelated in both ASL and English, and that bilinguals would look more at competitor items than monolinguals would, reflecting parallel activation of ASL and English. If bimodal bilinguals do show parallel activation, it would suggest that language co-activation can occur (1) across modalities and (2) under circumstances where there is limited overlap in input structure.

Methods

Participants

Twenty-six participants (13 bilingual users of ASL and English and 13 English monolinguals) were tested. Five additional participants were tested but not included in the analysis (three due to low ASL proficiency and two due to technical issues with the eye-tracker). Participants were recruited from the greater Chicago area and Northwestern University. Table 1 contains summary information about the participants. Self-rated language proficiency and exposure ratings for both English and ASL were obtained from the Language Experience and Proficiency Questionnaire (LEAP-Q; Marian, Blumenfeld, & Kaushanskaya, 2007). English vocabulary skill was measured with the Peabody Picture Vocabulary Test (PPVT-III; Dunn & Dunn, 1997). All participants reported normal hearing and vision and no history of neurological dysfunction¹.

Table 1.

Language background information for bilingual and monolingual participants

| Measure | Bilinguals, Mean (SD) | Monolinguals, Mean (SD) | t(24) | p |
|---|---|---|---|---|
| Age (in years) | 33.2 (11.8) | 23.9 (9.8) | −2.18 | .04 |
| English Proficiency-Understanding | 8.8 (1.0) | 9.0 (1.1) | 0.37 | ns |
| English Proficiency-Producing | 8.7 (1.2) | 9.1 (0.9) | 1.12 | ns |
| PPVT Standard Score (English) | 108.2 (9.9) | 111.8 (9.4) | 0.96 | ns |
| PPVT Percentile (English) | 67.8 (21.0) | 75.3 (18.9) | 0.96 | ns |
| Age of ASL acquisition | 7.5 (8.3) | — | — | — |
| ASL Proficiency-Understanding | 8.8 (0.7) | — | — | — |
| ASL Proficiency-Producing | 8.5 (0.7) | — | — | — |
| Current ASL Exposure (%) | 34.4 (13.8) | — | — | — |

Note. Values obtained from the Language Experience and Proficiency Questionnaire (LEAP-Q), and Peabody Picture Vocabulary Test (PPVT-III).

Materials

Sign-Pairs

Twenty minimal sign pairs, which served as the target-competitor pairs, were developed from forty signs. As in spoken languages, the ASL phonological system consists of a finite number of segments that are combined systematically to create lexical items. At the phonological level, ASL signs consist of four parameters: (a) handshape, (b) movement, (c) location of the sign on the body or in space, and (d) orientation of the palm/hand (Brentari, 1998). Each target-competitor pair consisted of two non-fingerspelled signs that were highly similar on three of the four major parameters of sign-language phonology and differed on the fourth. For example, the signs for cheese and paper overlap in handshape, location, and palm orientation, but differ in movement². During the experiment, all objects (target, competitor, and distractors) were represented by black-and-white line drawings obtained from Blumenfeld and Marian (2007) and from the International Picture Naming Database.
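To make the minimal-pair criterion concrete, the sketch below codes each sign as a record of the four parameters and checks that a pair differs on exactly one of them. It is a hypothetical illustration, not the procedure used to build the stimuli: the `Sign` class, the helper names, and the parameter codes (`HS-1`, `MOV-A`, and so on) are invented placeholders that encode only the overlap pattern reported for cheese and paper, not actual ASL transcriptions.

```python
from dataclasses import dataclass

PARAMETERS = ("handshape", "movement", "location", "orientation")


@dataclass(frozen=True)
class Sign:
    """A sign coded on the four major phonological parameters."""
    gloss: str
    handshape: str
    movement: str
    location: str
    orientation: str


def differing_parameters(a, b):
    """Names of the parameters on which two signs differ."""
    return [p for p in PARAMETERS if getattr(a, p) != getattr(b, p)]


def is_minimal_pair(a, b):
    """True if the signs match on three parameters and differ on exactly one."""
    return len(differing_parameters(a, b)) == 1


# Hypothetical placeholder codes, NOT real ASL transcriptions: they encode only
# the reported overlap pattern (same handshape, location, and orientation;
# different movement).
cheese = Sign("CHEESE", handshape="HS-1", movement="MOV-A", location="LOC-1", orientation="ORI-1")
paper = Sign("PAPER", handshape="HS-1", movement="MOV-B", location="LOC-1", orientation="ORI-1")

print(differing_parameters(cheese, paper))  # ['movement']
print(is_minimal_pair(cheese, paper))       # True
```

Once every sign is coded this way, the same check could be run over all twenty target-competitor pairs.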

Target and competitor items did not differ significantly in English word frequency [t(38) = 0.97, p > 0.1], nor did competitor and distractor items [t(58) = 0.59, p > 0.1] (frequencies obtained from the SUBTLEXus database; Brysbaert & New, 2009). All distractor items were semantically unrelated to targets and competitors, and did not overlap in ASL or English phonology with target or competitor items. Because no ASL frequency database was available, we were unable to control for ASL frequency; however, controlling for English frequency may help mitigate ASL frequency effects. A list of all stimuli can be found in Appendix 1.

Stimuli were also controlled for semantic relatedness³ and for phonological overlap in English. To control for semantic relatedness, target-competitor pairs were rated by 21 monolingual English speakers and 20 ASL-English bilinguals on a 1–10 scale, with 1 being extremely dissimilar and 10 being extremely similar. The groups did not differ in their average ratings for the target-competitor pairs (monolingual: M = 3.07, SD = 1.24; bilingual: M = 2.66, SD = 2.00; t(39) = 0.79, p > 0.1). To control for phonological overlap, we selected target, competitor, and distractor items that were not phonologically similar in English.
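As a rough arithmetic check on the norming comparison, a pooled-variance (Student) independent-samples t can be recomputed from the reported means and standard deviations; with 21 + 20 raters it has 39 degrees of freedom, matching t(39). This sketch assumes the pooled-variance variant (the text does not name it), the function name `pooled_t_from_summary` is our own, and because the reported summaries are rounded the result only approximates the published value.

```python
from math import sqrt


def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t from group summaries (pooled variance).

    Returns (t, degrees of freedom)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Reported similarity ratings: monolingual raters vs. bilingual raters.
t, df = pooled_t_from_summary(3.07, 1.24, 21, 2.66, 2.00, 20)
print(f"t({df}) = {t:.2f}")  # t(39) = 0.79
```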

Auditory Stimuli

The English label for each target item was recorded at 44.1 kHz, 32-bit, by a female monolingual speaker of English. Target items were recorded in sentence context, as the final word in the phrase “click on the _____.” All recordings were normalized such that the carrier phrase was of equal length for all target sentences and the onset of the target word always occurred 600 ms after the onset of the carrier phrase. Recordings were also amplitude-normalized.

Design

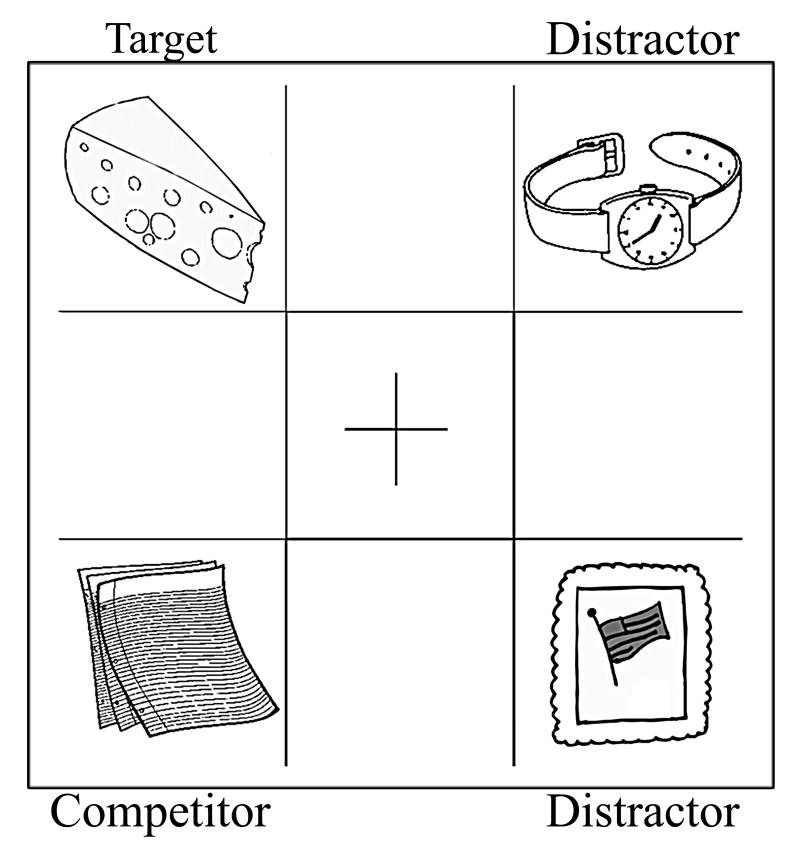

In twenty critical trials, participants were presented with a display that contained an image of the target item, a competitor item whose ASL label overlapped in phonology with the target, and two distractor items whose labels did not overlap with the target or competitor in English or ASL phonology. Forty-four filler trials were also presented, each containing a target and three phonologically unrelated distractors. Filler trials were included so that participants would not notice the overlap between competitor and target items, and to keep the objective of the study concealed. None of the participants reported noticing the overlap between targets and competitors, or identified the objective of the study, during post-testing interviews. In each trial, participants were instructed to click on a central cross, which was followed by a 1500 ms inter-stimulus interval and then the experimental display. Stimulus displays were created by embedding four black-and-white line drawings into the corners of a 3×3 grid measuring 1280 × 1024 pixels, with a fixation cross in the center (see Figure 1). The locations of target, competitor, and distractor items were counterbalanced across trials. Trial presentation was pseudo-randomized such that experimental trials were separated by filler trials, and trial order was counterbalanced across participants.

Figure 1.

Example of an experimental trial in the visual world paradigm. The target item is cheese, the competitor item is paper, and the distractor items are stamp and watch. The target item cheese and the competitor item paper overlap in handshape, location, and orientation (but not movement), while no overlap is present between the target item cheese and either unrelated distractor.
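The ordering constraint above (critical trials always separated by fillers) and the rotation of picture locations can be sketched as follows. This is one simple scheme assumed for illustration; the text does not say how the pseudo-randomization or counterbalancing was implemented, and the corner names, role labels, trial IDs, and seed are hypothetical.

```python
import random

CORNERS = ("top-left", "top-right", "bottom-left", "bottom-right")
ROLES = ("target", "competitor", "distractor_1", "distractor_2")


def assign_corners(trial_index):
    """Map each picture role to a corner, rotating the roles by one corner per
    trial so that every run of four trials puts each role in each corner once."""
    shift = trial_index % len(CORNERS)
    return {role: CORNERS[(i + shift) % len(CORNERS)]
            for i, role in enumerate(ROLES)}


def order_trials(critical, fillers, rng):
    """Shuffle trials so that no two critical trials are adjacent.

    The fillers define len(fillers) + 1 gaps (before, between, and after them);
    putting each critical trial in a different gap guarantees at least one
    filler between any two critical trials."""
    if len(critical) > len(fillers) + 1:
        raise ValueError("not enough filler trials to separate critical trials")
    critical, fillers = list(critical), list(fillers)
    rng.shuffle(critical)
    rng.shuffle(fillers)
    chosen_gaps = set(rng.sample(range(len(fillers) + 1), len(critical)))
    remaining_critical = iter(critical)
    order = []
    for gap in range(len(fillers) + 1):
        if gap in chosen_gaps:
            order.append(next(remaining_critical))
        if gap < len(fillers):
            order.append(fillers[gap])
    return order


# Hypothetical usage: one seed per participant gives each participant a different order.
rng = random.Random(7)
critical = [f"critical_{i:02d}" for i in range(20)]
fillers = [f"filler_{i:02d}" for i in range(44)]
sequence = order_trials(critical, fillers, rng)
layouts = [assign_corners(i) for i in range(len(sequence))]
```

Choosing distinct gaps, rather than shuffling and rejecting orders with adjacent critical trials, guarantees the constraint in a single pass.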

Procedure

After informed consent was obtained, monolingual participants received a description of the experiment in English, while bilingual participants received a description in both English and ASL⁴. After receiving instructions, participants were fitted with a head-mounted ISCAN eye-tracker, which recorded the location of their gaze thirty times per second (once every 33.3 ms) during the experiment. Participants were seated approximately twenty inches from the computer screen and were instructed to place their chin in a chinrest to limit head movement and maintain calibration of the eye-tracking apparatus. During the experiment, the eye-tracker was calibrated four times: once at the onset of the experiment and again after every twenty-one trials until the experiment was complete. Participants were told that they would hear instructions to choose a specific object in a visual display, and that they should click on the object that best represented the target word. Five practice trials were provided to familiarize participants with the task. Auditory stimuli were presented over headphones, synchronously with the experimental displays, such that the onset of the display coincided with the onset of the carrier phrase “click on the ____.” Participants received only English instructions during the experimental task and at no point received input in American Sign Language. A trial ended when the participant selected an item from the display. After the eye-tracking portion of the experiment, participants’ language proficiency and language history were assessed using the PPVT and the LEAP-Q. In addition, bilingual participants were presented with a list of words used earlier in the experiment and asked to provide the American Sign Language translations. Bilinguals provided correct translations for 95.2% of the words (M = 62.8/66, SD = 2.5).

Data Analysis

Two dependent measures were collected: (1) the overall proportion of looks and (2) the duration of looks. A look was defined as beginning at the onset of the saccade that entered a region (i.e., a grid cell) containing a picture stimulus and terminating at the onset of the saccade that exited the region. In addition, only eye-movements that resulted in fixation of a picture stimulus for three or more consecutive frames (i.e., 100 ms or longer) were counted as looks. For the analyses, twenty distractor items were randomly selected (one from each experimental trial; see Appendix 1), and looks to the competitors were directly compared to looks to these distractor items⁵. First, the overall proportion of looks to competitor and distractor items was calculated by assigning a value of 1 to every trial that contained a look to the item and a value of 0 to every trial that did not. Second, the time spent looking at competitor and distractor items was calculated by dividing a participant’s number of looks to a particular item by that participant’s total number of looks. The proportion and duration of looks to competitor and distractor items were analyzed from 200 ms to 1400 ms after word onset. The onset of this window was selected based on research suggesting a 200–300 ms delay for planning an eye-movement (Viviani, 1990), and the offset was determined by the average click-response time for the critical trials across groups (M = 1412 ms, SE = 49 ms), rounded to the nearest frame. We also examined the time-course of activation by comparing eye-movements to competitor items and to target items, each relative to unrelated distractor items, in 100 ms bins beginning at the onset of the display and ending 2000 ms after the onset of the target word. These analyses were performed separately for the monolingual and bilingual groups. 
Twenty percent of all data were also coded by a second independent coder who was blind to the purpose of the study, and the inter-coder reliability was 93.4%. Disagreements were discussed until consensus was reached.
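The look definition and the analysis window can be illustrated with frame-coded gaze data. The sketch below is a minimal version under stated assumptions: each trial is a list of region labels sampled at 30 Hz from display onset (so word onset falls 600 ms in), a look is approximated as a run of at least three consecutive frames on one picture rather than detected from saccade onsets, and the per-trial "share" of looking frames is our own stand-in for the duration measure, not necessarily the exact normalization used in the study. All names are hypothetical.

```python
from itertools import groupby

FPS = 30                  # ISCAN sampling rate: 30 frames per second (~33.3 ms each)
MIN_FRAMES = 3            # a look must last at least 3 frames (~100 ms)
WORD_ONSET_MS = 600       # target word begins 600 ms after display onset
WINDOW_MS = (200, 1400)   # analysis window relative to target-word onset


def frames_in_window(frames):
    """Keep frames whose onset, relative to word onset, falls in WINDOW_MS.

    Frame i starts i * 1000 / FPS ms after display onset; the comparison is
    done in integers to avoid floating-point edge effects."""
    lo, hi = WINDOW_MS
    return [region for i, region in enumerate(frames)
            if FPS * (lo + WORD_ONSET_MS) <= 1000 * i < FPS * (hi + WORD_ONSET_MS)]


def looks(frames):
    """Return (region, n_frames) for every run of at least MIN_FRAMES
    consecutive frames on one picture; None marks frames on no picture."""
    runs = [(region, len(list(run))) for region, run in groupby(frames)]
    return [(region, n) for region, n in runs
            if region is not None and n >= MIN_FRAMES]


def trial_measures(frames, item_region):
    """Return (looked, share) for one item in one trial:
    looked = 1 if the windowed trial contains a look to the item, else 0;
    share  = the item's looking frames / all looking frames (an assumption)."""
    trial_looks = looks(frames_in_window(frames))
    total = sum(n for _, n in trial_looks)
    on_item = sum(n for region, n in trial_looks if region == item_region)
    return int(on_item > 0), (on_item / total if total else 0.0)


# Window offset: mean click time of 1412 ms rounded to the nearest frame.
offset_ms = round(1412 * FPS / 1000) * 1000 / FPS   # 42 frames -> 1400.0 ms

# Invented toy trial: 24 pre-window frames, a 3-frame competitor look, one frame
# on no picture, a 2-frame distractor glance (too short to count), 30 target frames.
frames = [None] * 24 + ["competitor"] * 3 + [None] + ["distractor"] * 2 + ["target"] * 30
print(trial_measures(frames, "competitor"))  # (1, 0.09090909090909091)
print(trial_measures(frames, "distractor"))  # (0, 0.0)
```

Cropping to the window before finding runs means a look that straddles a window edge is counted only by its in-window frames; a fuller implementation might handle that differently.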

Results

Overall Looks Analysis

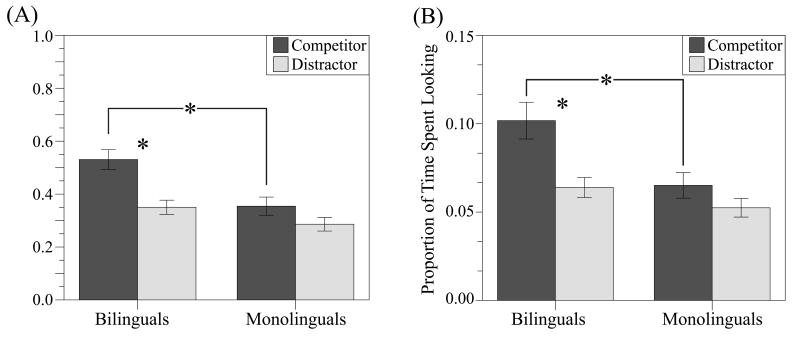

Overall accuracy was very high (95.4%); the remaining 4.6% of trials were removed because of incorrect or early (accidental mouse-click) responses. The results revealed that bilinguals activated ASL lexical items when receiving input in English alone⁶. A repeated-measures analysis of variance (ANOVA) comparing looks to competitors with looks to unrelated distractor items revealed a significant Group (bilingual, monolingual) × Item (competitor, distractor) interaction for both the proportion [F1(1,24) = 6.16, p < 0.05, ηp² = 0.204; F2(1,38) = 5.86, p < 0.05, ηp² = 0.134] and the duration [F1(1,24) = 5.50, p < 0.05, ηp² = 0.186; F2(1,38) = 5.88, p < 0.05, ηp² = 0.134] of eye-movements (Figure 2). Bilinguals looked at competitor items more than at distractor items [Competitor: M = 53.1%, SE = 3.7%; Distractor: M = 35.8%, SE = 3.4%; t(12) = 4.22, p < 0.001] and for a longer period of time [Competitor: M = 10.2%, SE = 1.0%; Distractor: M = 6.2%, SE = 0.7%; t(12) = 4.36, p < 0.001], suggesting that bilinguals activated phonologically related competitors more than phonologically unrelated distractor items. No differences were found in the monolingual group for either the proportion [t(12) = 0.61, p > 0.1] or the duration [t(12) = 0.71, p > 0.1] of eye-movements. Bilinguals also looked at competitor items more than monolinguals did [Bilingual: M = 53.1%, SE = 3.7%; Monolingual: M = 35.4%, SE = 3.4%; t(24) = 3.48, p < 0.01] and for a longer period of time [Bilingual: M = 10.2%, SE = 1.0%; Monolingual: M = 6.5%, SE = 0.7%; t(24) = 2.90, p < 0.01], while both groups looked at distractor items equally [Proportion: t(24) = 0.63, p > 0.1; Duration: t(24) = 0.42, p > 0.1], suggesting that bilinguals activated phonologically related items more than monolinguals did.

Figure 2.

Results from the eye-tracking analyses. (A) Proportion of overall looks to competitor and distractor items in the bilingual and monolingual groups. (B) Time spent looking at competitor and distractor items in the bilingual and monolingual groups. Error bars represent ±1 standard error, and asterisks indicate significance at p < 0.05.
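The by-subjects (F1) and by-items (F2) statistics reported above come from the same trial-level data aggregated in two ways. The sketch below shows only that aggregation step and the paired follow-up t statistic within one group; it does not implement the Group × Item ANOVA. The record fields (`subject`, `item`, `competitor`, `distractor`) and the toy data are invented for illustration. For p-values, SciPy's `scipy.stats.ttest_rel`, which accepts `alternative="greater"` for one-tailed tests, could replace the hand-written statistic.

```python
from collections import defaultdict
from math import sqrt
from statistics import mean, stdev


def aggregate(trials, key):
    """Average competitor and distractor scores within each level of `key`
    ('subject' for by-subjects/F1-style, 'item' for by-items/F2-style)."""
    cells = defaultdict(lambda: ([], []))
    for trial in trials:
        competitor_scores, distractor_scores = cells[trial[key]]
        competitor_scores.append(trial["competitor"])
        distractor_scores.append(trial["distractor"])
    return {k: (mean(c), mean(d)) for k, (c, d) in cells.items()}


def paired_t(pairs):
    """Paired-samples t statistic and degrees of freedom for (x, y) pairs."""
    diffs = [x - y for x, y in pairs]
    n = len(diffs)
    return mean(diffs) / (stdev(diffs) / sqrt(n)), n - 1


# Invented toy data: 1 = the trial contained a look to that picture.
trials = [
    {"subject": "s1", "item": "item_1", "competitor": 1, "distractor": 0},
    {"subject": "s1", "item": "item_2", "competitor": 1, "distractor": 1},
    {"subject": "s2", "item": "item_1", "competitor": 1, "distractor": 0},
    {"subject": "s2", "item": "item_2", "competitor": 0, "distractor": 0},
    {"subject": "s3", "item": "item_1", "competitor": 1, "distractor": 0},
    {"subject": "s3", "item": "item_2", "competitor": 1, "distractor": 0},
]

by_subject = aggregate(trials, "subject")   # one (competitor, distractor) mean pair per subject
by_item = aggregate(trials, "item")         # one mean pair per item
t1, df1 = paired_t(by_subject.values())
t2, df2 = paired_t(by_item.values())
print(f"by-subjects t({df1}) = {t1:.2f}; by-items t({df2}) = {t2:.2f}")
# by-subjects t(2) = 4.00; by-items t(1) = 2.00
```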

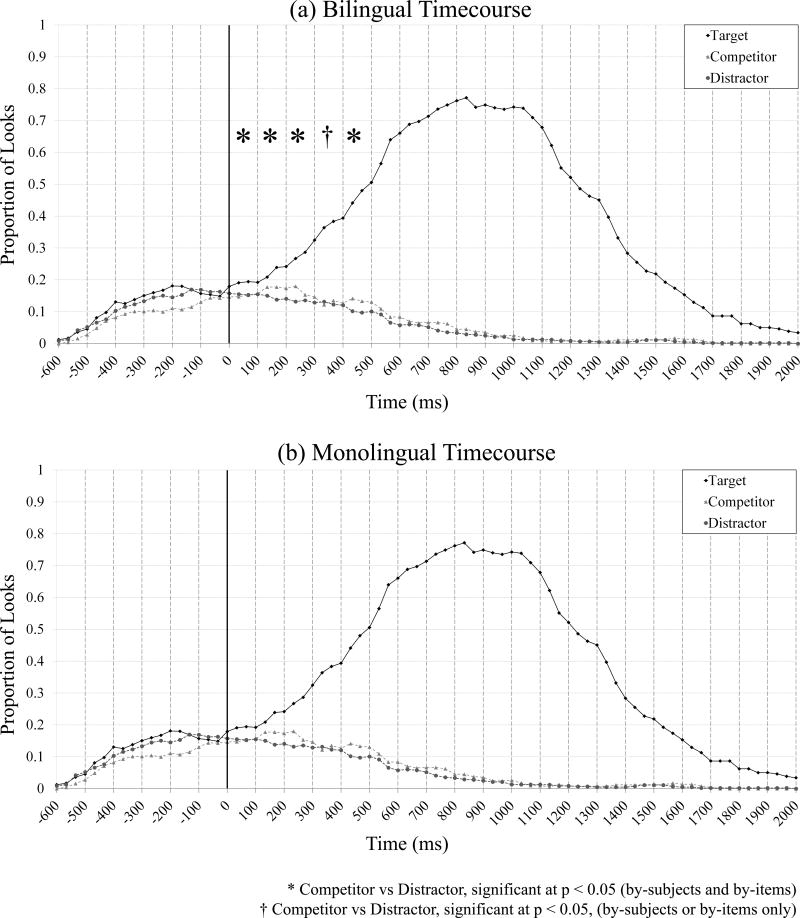

Time-Course of Activation

Analysis of the bilingual time-course of activation was consistent with the analysis of overall looks: bilinguals looked at competitors more than at unrelated distractor items, whereas monolinguals showed no difference. Data were analyzed both by-subjects and by-items for each 100 ms window, beginning at −600 ms (onset of the picture stimulus) and ending with the window between 1900 and 2000 ms. Significant differences were found both by-subjects and by-items in the consecutive time windows between 0 ms (word onset) and 300 ms, and between 400 and 500 ms, in which bilinguals showed significantly more looks to competitor items than to unrelated distractor items (Figure 3a). In addition, the difference in looks to competitor items relative to distractors in the 300–400 ms window was significant by-items (p < 0.05) and marginally significant by-subjects (p = 0.054), again showing more looks to competitors than to distractors (see Table 2). Analyses were also performed to examine looks to target items relative to distractors. In the bilingual group, significant differences were found, both by-subjects and by-items, in the consecutive time windows from −200 ms to 0 ms and from 200 ms to 2000 ms (all ps < 0.05). Although these analyses indicate that the target diverges significantly from the distractor earlier (in the −200 to 0 ms windows) than does the competitor (in the 0–300 ms windows), a direct comparison of looks to the target versus the competitor revealed no significant differences in these windows (all ps > 0.05).

Figure 3.

Time-course of activation for (a) Bilingual and (b) Monolingual participants. The solid line represents onset of the target word.

* Competitor vs. distractor, significant at p < 0.05 (by-subjects and by-items)

† Competitor vs. distractor, significant at p < 0.05 (by-subjects or by-items only)

Table 2.

Bilingual Time-Course Comparisons for Competitors vs. Distractors

| t-tests | 0–100 ms | 100–200 ms | 200–300 ms | 300–400 ms | 400–500 ms |
|---|---|---|---|---|---|
| By-subjects, t(12) | 3.49 | 3.01 | 2.60 | 1.73 | 1.93 |
| By-subjects, p | < 0.05 | < 0.05 | < 0.05 | = 0.054 | < 0.05 |
| By-items, t(38) | 2.54 | 2.12 | 2.14 | 2.02 | 2.11 |
| By-items, p | < 0.05 | < 0.05 | < 0.05 | < 0.05 | < 0.05 |
| Significance | ** | ** | ** | * | ** |

Note: All ps are one-tailed. * indicates significance at alpha = 0.05 either by-subjects or by-items only; ** indicates significance at alpha = 0.05 in both the by-subjects and by-items analyses.

Similar analyses performed on the monolingual activation curves revealed no significant differences between looks to competitor and distractor items in any time window, either by-subjects or by-items (all ps > 0.1), suggesting that monolinguals did not look more at competitor items than at distractor items (Figure 3b). Analyses of looks to targets and distractors revealed that monolinguals looked at target items significantly more than at distractor items from 200 ms after word onset until the end of the trial (all ps < 0.05, one-tailed). In addition, the time point at which the target and competitor items diverged and remained significantly different did not differ between bilinguals (M = 451 ms, SD = 116 ms) and monolinguals (M = 458 ms, SD = 126 ms), t(24) = 0.15, p > 0.1. This suggests that the time-course of target activation was not significantly different across the two groups.
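The 100 ms time-course analysis can be sketched as a binning step. Because the display appeared 600 ms before word onset and the tracker sampled at 30 Hz, each 100 ms bin from −600 to 2000 ms holds exactly three frames, which gives the 26 bins described above. This minimal sketch assumes frame-coded trials sampled from display onset and pools frames across trials for simplicity; a by-subjects analysis would instead compute one curve per participant (and a by-items analysis one per item) and then compare competitor and distractor curves bin by bin. The function names are hypothetical.

```python
import math

FPS = 30                       # frames per second; 3 frames per 100 ms bin
BIN_MS = 100
START_MS, END_MS = -600, 2000  # relative to target-word onset (display onset = -600)


def bin_edges():
    """Left edges of the 100 ms bins, in ms relative to word onset."""
    return list(range(START_MS, END_MS, BIN_MS))


def proportion_by_bin(trials, region):
    """Share of frames on `region` in each bin, pooled over trials.

    Each trial is a list of region labels sampled at FPS from display onset.
    Frame i starts i * 1000 / FPS ms after display onset, so its bin index is
    (i * 1000) // (FPS * BIN_MS); bins that received no frames are NaN."""
    n_bins = (END_MS - START_MS) // BIN_MS
    hits, counts = [0] * n_bins, [0] * n_bins
    for frames in trials:
        for i, label in enumerate(frames):
            b = (i * 1000) // (FPS * BIN_MS)
            if b >= n_bins:
                break  # frames after 2000 ms post word onset are ignored
            counts[b] += 1
            hits[b] += int(label == region)
    return [h / c if c else math.nan for h, c in zip(hits, counts)]


# Invented toy trial: 3 frames on no picture, then 3 frames on the competitor.
trial = [None] * 3 + ["competitor"] * 3
curve = proportion_by_bin([trial], "competitor")
print(bin_edges()[:2], curve[:2])  # [-600, -500] [0.0, 1.0]
```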

Controlling for Pre-onset Looks

To determine whether the results of the overall-looks analyses were independent of pre-onset looks, and not a by-product of pre-onset activation, the overall-looks analyses (2 × 2 repeated-measures ANOVA using the 200–1400 ms activation window) were repeated using (a) the average proportion of looks and (b) the average looking time to items in the display in the time window from −200 to 200 ms as covariates for the proportion and duration analyses, respectively. Results indicate that parallel activation of ASL during English processing persists when these early looks are controlled for. Significant Group (bilingual, monolingual) × Item (competitor, distractor) interactions were found for the proportion of looks (F1(1,23) = 7.09, p < 0.05, ηp² = .235; F2(1,37) = 7.32, p < 0.05, ηp² = .165). The Group × Item interaction for the duration of looks was significant by-items (F2(1,37) = 6.27, p < 0.05, ηp² = .145) and marginally significant by-subjects (F1(1,23) = 4.19, p = 0.052, ηp² = .154). Follow-up t-tests using the adjusted means yielded the same pattern of results as the initial analyses: bilinguals looked at competitor items more than at distractor items [Competitor: M = 51.9%, SE = 3.6%; Distractor: M = 33.6%, SE = 2.9%; t(38) = 3.95, p < 0.01] and for a longer period of time [Competitor: M = 9.8%, SE = 0.9%; Distractor: M = 5.9%, SE = 0.7%; t(38) = 3.42, p < 0.01]. The monolinguals did not differ in their looks to competitors and distractors (all ps > 0.1). In addition, bilinguals looked more at competitors than monolinguals did [Monolingual: M = 36.6%, SE = 3.6%; t(24) = 3.01, p < 0.01], and for a longer period of time [Monolingual: M = 6.9%, SE = 0.9%; t(24) = 2.28, p < 0.05]. No difference was found between bilinguals’ and monolinguals’ looks to distractor items (all ps > 0.1).

Discussion

The present study indicates that bimodal bilinguals activate their two languages in parallel during spoken language comprehension. When hearing input in English, bilinguals looked more and longer at competing items whose American Sign Language translations overlapped in phonology with the ASL translations of the target items than at items that did not overlap in either English or ASL. Bilinguals also looked more and longer at phonologically overlapping items than monolinguals did. While successful task performance required participants to activate only English (the sole language of presentation), the bimodal bilinguals also activated the underlying ASL translations of the items in the display. These results extend previous research on both deaf and hearing bimodal bilinguals by providing evidence for cross-linguistic, cross-modal language activation during unimodal spoken comprehension. The phonological and lexical systems of languages that do not share modality appear to be activated in parallel, even when featural information from one of the two languages is largely absent from the input.

Bilinguals readily integrate information from auditory and visual modalities when processing language (for a review, see Marian, 2009), similar to monolinguals (e.g., Shahin & Miller, 2009; Sumby & Pollack, 1954; Summerfield, 1987). For example, the addition of visual information can help second-language learners identify unfamiliar phonemes (Navarra & Soto-Faraco, 2005), and bimodal bilinguals show benefits in language comprehension when linguistic information is presented simultaneously in ASL and English, relative to either language alone (Emmorey, Petrich, & Gollan, 2009). Research on the relationship between orthography and phonology has also provided evidence for cross-modal interaction in bilinguals. Unimodal bilinguals have been shown to activate the orthographic and phonological forms of words simultaneously during language processing (Dijkstra, Frauenfelder, & Schreuder, 1993; Kaushanskaya & Marian, 2007; Van Wijnendaele & Brysbaert, 2002). Our findings indicate that this cross-modal interaction can also be found between two distinct and highly unrelated phonological systems.

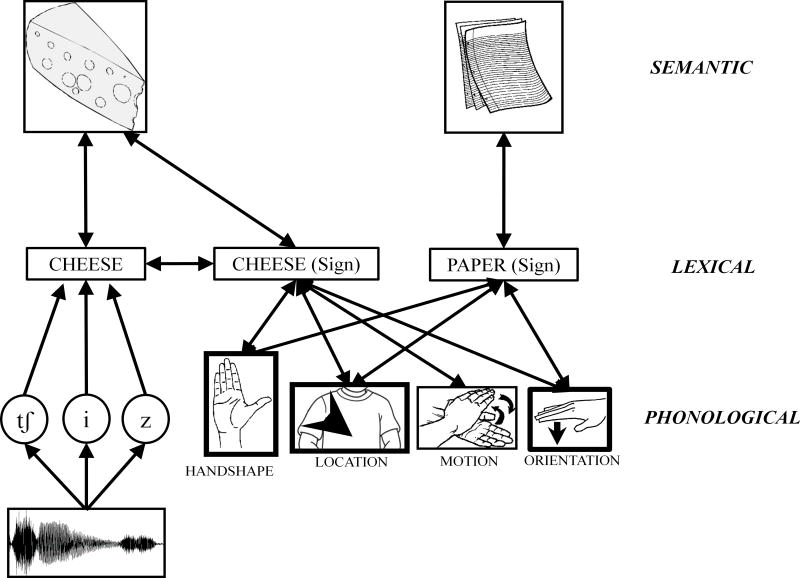

We believe that our results support the notion that cross-modal language co-activation is the result of a language system that can be governed by top-down and/or lateral connections (Figure 4). A top-down explanation of bimodal co-activation would stem from feedback connections between the semantic and lexical levels, consistent with the finding that translation priming effects appear to be mediated by conceptual representations (Schoonbaert et al., 2009). As auditory information enters the language system, it may selectively activate the spoken English lexical representation, and subsequently its conceptual representation. Since the semantic/conceptual system in bilinguals appears to be shared across languages (Kroll & De Groot, 1997; Salamoura & Williams, 2007; Schoonbaert, Hartsuiker, & Pickering, 2007), the conceptual representation could then feed activation back down through the processing system, thereby activating the corresponding ASL lexical representation and, in turn, phonologically similar ASL lexical items. A mechanism of this nature would suggest that the bilingual language processing system employs top-down feedback during processing, at least when bottom-up input is unavailable.

Figure 4.

Proposed co-activation pathways in bimodal bilinguals during speech comprehension

A second possibility is that cross-modal parallel activation in bimodal bilinguals is a product of lateral, lexical-level connections between ASL and English translation equivalents. Hearing children born to deaf families (CODAs) often become natural interpreters, acting as the linguistic bridge between their deaf parents or siblings and the hearing world. As our participants were either CODAs or working ASL interpreters, it is likely that they have experience processing and translating English and ASL together, and this experience may result in direct associative links between lexical items. As a listener hears spoken English input, such translational links could drive cross-modal parallel activation by directly exciting ASL translation equivalents at the lexical level, which would in turn activate phonologically similar ASL items. However, there is evidence to suggest that bilinguals may not form strong associative links between cross-language lexical items; for example, cross-linguistic translation effects in unimodal bilinguals appear to be largely conceptually mediated rather than driven by direct lexical connections (Finkbeiner et al., 2004; Grainger & Frenck-Mestre, 1998; La Heij, Hooglander, Kerling, & van der Velden, 1996; Schoonbaert, Duyck, Brysbaert, & Hartsuiker, 2009), except in cases of large asymmetry in L1–L2 proficiency (Sunderman & Kroll, 2006). Semantic mediation of translation priming could reflect a system that does not rely heavily on direct lexical translation links. The present study cannot completely rule out lateral connections between languages as an explanation of the observed pattern of co-activation. However, such an account would still provide compelling evidence for rapid interaction between languages that do not share modality, even when the input is in only one language. Furthermore, lateral connections between translation equivalents may influence language co-activation alongside top-down feedback connections between semantic and lexical representations.
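In toy terms, a lateral account adds a direct link between translation equivalents at the lexical level. The sketch below, again with hypothetical nodes, weights, and decay value, compares competitor activation when only the top-down route, only the lateral route, or both routes are present. Each route on its own activates the ASL competitor, which illustrates why a measure of competitor activation alone cannot separate the two accounts.

```python
from collections import defaultdict

# Hypothetical links common to both accounts: ASL sign -> shared form -> competitor.
ASL_FORM = {
    "asl:CANDY": [("asl-phon:shared", 1.0)],
    "asl-phon:shared": [("asl:APPLE", 1.0)],
}
# Top-down route: English word -> shared concept -> ASL translation equivalent.
TOP_DOWN = {"eng:candy": [("concept:CANDY", 1.0)], "concept:CANDY": [("asl:CANDY", 1.0)]}
# Lateral route: direct lexical-level link between translation equivalents.
LATERAL = {"eng:candy": [("asl:CANDY", 1.0)]}


def combine(*edge_sets):
    """Merge several adjacency dicts, keeping every outgoing link."""
    merged = defaultdict(list)
    for edges in edge_sets:
        for node, links in edges.items():
            merged[node].extend(links)
    return dict(merged)


def spread(edges, source, steps=5, decay=0.5):
    """Accumulate activation spreading from `source` over `steps` steps."""
    total = defaultdict(float)
    total[source] = 1.0
    frontier = {source: 1.0}
    for _ in range(steps):
        nxt = defaultdict(float)
        for node, act in frontier.items():
            for target, weight in edges.get(node, []):
                nxt[target] += decay * weight * act
        for node, act in nxt.items():
            total[node] += act
        frontier = nxt
    return dict(total)


for label, routes in [("top-down only", (TOP_DOWN,)),
                      ("lateral only", (LATERAL,)),
                      ("both", (TOP_DOWN, LATERAL))]:
    act = spread(combine(ASL_FORM, *routes), "eng:candy")
    print(f"{label:14} competitor = {act.get('asl:APPLE', 0.0):.4f}")
```

The larger value when both routes are present reflects only the additive assumptions of this sketch; it is not an estimate of how much each route contributes in bimodal bilinguals.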

Our findings also suggest a possible interaction between visual and linguistic information during language co-activation in the visual world paradigm. In the time-course analyses, bimodal bilinguals showed significant target and competitor activation early in the trial. Specifically, targets were preferred over distractors starting 200 ms before target onset, and competitors were preferred over distractors starting at target onset. However, the proportions of looks to targets and competitors in this time window did not differ, which suggests that neither was preferentially activated relative to the other. A plausible account of these results is that the bilinguals co-activated target and competitor items based purely on visual input. Participants may have been able to access the labels of the display objects before the onset of the target word and subsequently activate the corresponding phonological representations. Because target and competitor items overlap in three of four phonological parameters in ASL, the phonological representations they share would receive activation from both pictures, and thus more activation than the representations of the distractor items. When these phonological representations transfer their activation back to the lexical level, the target and competitor items would therefore be activated more strongly than the distractors. This cascading activation, coupled with the activation gained from seeing both the target and competitor pictures in the display, could result in more overall activation for the target and competitor items.
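The cascade just described can be sketched numerically. The example assumes the four parameters conventionally used to describe ASL signs (handshape, location, movement, and orientation), uses abstract placeholder values rather than the actual forms of the stimulus signs, and uses a hypothetical feedback weight. Each displayed picture sends one unit of activation to the parameter values of its sign, and each sign then receives back a fraction of the activation on its own parameter values.

```python
from collections import Counter

PARAMETERS = ("handshape", "location", "movement", "orientation")

# Placeholder parameter values (hypothetical, not the real stimulus signs).
DISPLAY = {
    "target":      ("h1", "loc1", "mov1", "ori1"),
    "competitor":  ("h1", "loc1", "mov1", "ori2"),  # shares 3 of 4 with target
    "distractor1": ("h2", "loc2", "mov2", "ori3"),
    "distractor2": ("h3", "loc3", "mov3", "ori4"),
}


def overlap(a, b):
    """Number of parameters on which two signs have the same value."""
    return sum(x == y for x, y in zip(a, b))


def prelabel_activation(display, visual=1.0, feedback=0.1):
    """Lexical activation after one round of bottom-up and feedback flow.

    Every pictured object is assumed to be implicitly labeled, sending `visual`
    units to each of its sign's parameter values; each sign then receives
    `feedback` times the summed activation on its own parameter values.
    """
    phon = Counter()
    for params in display.values():
        for slot, value in zip(PARAMETERS, params):
            phon[(slot, value)] += visual
    return {
        name: visual + feedback * sum(phon[(slot, v)] for slot, v in zip(PARAMETERS, params))
        for name, params in display.items()
    }


print(overlap(DISPLAY["target"], DISPLAY["competitor"]))  # 3
for name, value in prelabel_activation(DISPLAY).items():
    print(f"{name:12} {value:.2f}")
```

Under these assumptions, target and competitor end up equally active and both above the distractors before any speech is heard, mirroring the pattern of early looks described above; the specific numbers depend entirely on the assumed weights.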

This account relies on the activation of object labels before auditory input in the visual world paradigm. Evidence for such pre-labeling comes from a study by Meyer, Belke, Telling and Humphreys (2007), who showed monolinguals a picture of an object and asked them to search for that item in a four-picture array that also contained a homonym of the target. For example, participants might be asked to find a picture of a baseball bat while the display also contained an image of a bat (animal). Participants looked more at the competitor than at unrelated items, suggesting that they were implicitly activating the objects’ names. Furthermore, Huettig and McQueen (2007) found that when listeners were shown a four-object display for approximately 3000 ms before the onset of a target word (e.g., beaker), they looked more at phonological competitors (e.g., beaver) than at distractors after target presentation. Huettig and McQueen did not find the same effect when the displays appeared for only 200 ms, suggesting that 200 ms did not provide enough time to access the labels of the items. Since the current study allowed participants 600 ms to label pictures before target onset, there may have been sufficient time for lexical encoding to occur.

While Meyer et al. (2007) and Huettig and McQueen (2007) indicate that participants may label objects in a display given sufficient time, their findings do not explicitly show that visual input can activate multiple objects that share underlying linguistic features. However, previous eye-tracking studies have shown stronger activation of target and competitor items relative to distractors at the time of target onset (e.g., Bartolotti & Marian, 2012; Blumenfeld & Marian, 2007; Magnuson, Tanenhaus, & Aslin, 2008; Weber, Braun, & Crocker, 2006; Weber & Cutler, 2004). While these studies do not directly address early differences between looks to targets/competitors and looks to distractors, the differences appear similar in magnitude to those observed in the current study. Furthermore, although several other visual world studies do not report differences in early fixations, such differences may have been masked by experimental design, for example by constraining a participant’s gaze to a specific location at the time of target onset (e.g., Allopenna, Magnuson, & Tanenhaus, 1998) or by limiting analyses to eye movements that occur after target onset (e.g., Yee & Sedivy, 2006; Huettig & Altmann, 2005). Future research is necessary to understand the role that visual input alone may play in language co-activation.

Regardless of the exact mechanisms underlying the effects of visual input on language co-activation, our findings suggest that the linguistic input also influences bimodal bilingual language processing. When we controlled for pre-onset activation, the significant competitor activation for bimodal bilinguals (relative both to unrelated items and to monolinguals’ processing of competitors) persisted, suggesting that even if the pre-onset looks are partially due to activation of the displayed objects by visual information alone, this effect cannot fully account for the results seen in the 200 ms – 1400 ms post-onset window. It is likely that the effects seen in the present study are a product of both visual and linguistic processing; however, further research is necessary to determine the relative contributions of visual and spoken input to language co-activation.
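One simple way to express the idea of controlling for pre-onset looks is a difference score: the proportion of eye-tracking samples on the competitor in the 200 to 1400 ms post-onset window minus the proportion in a pre-onset baseline window. The sketch below assumes this difference-score approach, a hypothetical sample format, and a baseline spanning the 600 ms preview before target onset; it is an illustration, not necessarily the correction used in the analyses reported here, and the trial data are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sample:
    time_ms: int  # time relative to spoken target-word onset (hypothetical format)
    region: str   # fixated object: "target", "competitor", "distractor", or "none"


def proportion(samples, region, start, end):
    """Share of samples in [start, end) that fall on `region`."""
    window = [s for s in samples if start <= s.time_ms < end]
    if not window:
        return 0.0
    return sum(s.region == region for s in window) / len(window)


def baseline_corrected(samples, region, pre=(-600, 0), post=(200, 1400)):
    """Post-onset looks to `region` minus looks during the pre-onset preview."""
    return proportion(samples, region, *post) - proportion(samples, region, *pre)


# Made-up trial sampled every 100 ms (not data from the study).
trial = (
    [Sample(t, "competitor" if t in (-300, -200) else "target") for t in range(-600, 0, 100)]
    + [Sample(t, "none") for t in (0, 100)]
    + [Sample(t, "competitor" if t < 800 else "target") for t in range(200, 1400, 100)]
)
print(round(baseline_corrected(trial, "competitor"), 3))  # 0.167 (= 6/12 - 2/6)
```

Scores of this kind would then be averaged over trials and participants before comparing groups; the choice of baseline window is a design decision that this sketch does not settle.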

The pattern of interaction found in our bimodal bilinguals (i.e., activation of lexical items with limited bottom-up input, through top-down or lateral mechanisms) may be universal to language processing rather than unique to bimodal bilinguals. This suggestion is consistent with previously reported similarities in the behavioral (see Shook & Marian, 2009, for a review) and neurological (e.g., Neville et al., 1997) processing of spoken and signed languages. These cognitive and neurological similarities suggest that the effects observed in the current study are not likely to be driven by properties unique to ASL. Furthermore, information from multiple levels of linguistic processing is available during, and guides, spoken comprehension in both unimodal bilinguals (Dussias & Sagarra, 2007; Schwartz & Kroll, 2006; Thierry & Wu, 2007; van Heuven, Dijkstra, & Grainger, 1998) and monolinguals (Huettig & Altmann, 2005; Yee & Sedivy, 2006; Yee & Thompson-Schill, 2007; Ziegler, Muneaux, & Grainger, 2003). However, studies examining interactivity in speech comprehension in monolinguals or unimodal bilinguals face a common problem: determining how linguistic entities are activated. When the language (or languages) of a listener match the modality of the input, it may be difficult to separate the effect of the input itself on the linguistic system from the potential impact of top-down or lateral information (McClelland, Mirman, & Holt, 2006; McQueen, Jesse, & Norris, 2009). Investigating language processing in bimodal bilinguals provides a way to limit the impact of bottom-up featural information on the non-target language and thereby highlight other mechanisms that may play a role during language processing.
Thus, while the present study cannot distinguish between top-down and lateral activation, future research on cross-modal, cross-language activation may be capable of separating the individual effects that each has on language processing. Our findings extend previous research suggesting highly interactive language systems in unimodal bilinguals and monolinguals to bimodal bilinguals, and suggest that linguistic information can be readily transferred across modalities (even in a unimodal context), a process that reflects substantial cross-linguistic connectivity during language processing.

We examine language processing in hearing English-American Sign Language bilinguals.

We use eye-tracking to measure ASL activation during spoken English comprehension.

English-ASL bilinguals activated both of their languages when listening to English.

Bilinguals show high interactivity across languages, modalities, and types of processing.

Top-down and lateral information influence language activation.

Acknowledgments

This research was funded by grant NICHD R01 HD059858 to the second author. The authors would like to acknowledge Dr. Margarita Kaushanskaya, Dr. Henrike Blumenfeld, Scott Schroeder, James Bartolotti, Caroline Engstler, Michelle Masbaum, and Amanda Kellogg for their contributions to this project. We thank Dr. Jesse Snedeker and five anonymous reviewers for helpful comments on the manuscript.

Appendix 1

Table 3.

Stimulus Pairs and Distractor Items

| Targets | Freq. | Competitors | Freq. | Distractor 1 | Freq. | Distractor 2 | Freq. |
|---|---|---|---|---|---|---|---|
| Candy | 3.21 | Apple | 3.04 | Stapler | 2.03 | Watch | 4.17 |
| Pig | 3.13 | Frog | 2.56 | Mitten | 1.59 | Pipe | 3.05 |
| Poison | 2.92 | Bone | 3.04 | Fish | 3.36 | Tree | 3.61 |
| Glasses | 3.15 | Camera | 3.36 | Wrench | 2.38 | Rock | 3.52 |
| Chocolate | 3.11 | Island | 2.89 | Leaf | 2.39 | Window | 3.59 |
| Clown | 2.72 | Wolf | 2.66 | Match | 3.37 | Gun | 3.76 |
| Knife | 3.33 | Egg | 3.08 | Cake | 3.35 | Pencil | 2.77 |
| Subway | 2.80 | Iron | 2.93 | Banana | 2.58 | Flower | 2.89 |
| Cheese | 3.33 | Paper | 3.88 | Stamp | 4.08 | Cat | 3.35 |
| Movie | 3.61 | School | 4.01 | Car | 4.09 | Duck | 2.97 |
| Potato | 2.68 | Church | 3.28 | Door | 2.92 | Lighter | 2.75 |
| Screwdriver | 1.98 | Key | 3.54 | Orange | 3.48 | Doll | 2.99 |
| Chair | 3.46 | Train | 3.62 | Ball | 2.23 | Goat | 2.60 |
| Alligator | 2.21 | Hippo | 1.63 | Dresser | 2.40 | Tape | 3.27 |
| Bread | 3.26 | Wood | 3.04 | Flashlight | 3.01 | Piano | 2.99 |
| Butter | 3.05 | Soap | 3.01 | Hammer | 2.39 | Computer | 3.17 |
| Umbrella | 2.62 | Coffee | 3.68 | Peach | 2.83 | Belt | 2.99 |
| Thermometer | 2.13 | Carrot | 2.18 | Gum | 2.20 | Perfume | 2.78 |
| Skunk | 2.19 | Lion | 2.56 | Dinosaur | 2.81 | Grass | 2.91 |
| Napkin | 2.49 | Lipstick | 2.62 | Battery | 2.42 | Zipper | 2.28 |
| Mean (SD) | 2.87 (.47) | | 3.03 (.57) | | 2.80 (.66) | | 3.10 (.44) |

Note. Target, competitor, and distractor stimuli with spoken word frequency (Log-10) obtained from SUBTLEXus (Brysbaert & New, 2009).

Footnotes

While the bilinguals and monolinguals significantly differed in age, research indicates that word processing may not differ between adults in their twenties and thirties (Geal-Dor, Goldstein, Kamenir, & Babkoff, 2006; Giaquinto, Ranghi, & Butler, 2007; Spironelli & Angrilli, 2009), and that listening comprehension remains relatively stable until approximately age 65 (Sommers, Hale, Myerson, Rose, Tye-Murray, and Spehar, 2011).

While three signs used initialized forms (i.e., “Chocolate” and “Church” use the “C” handshape; “Island” uses the “I” handshape), to ensure that orthographic overlap (activated by the close print-to-sign relationship held by fingerspelling and initialization) would not drive any possible effects, no target-competitor pair overlapped in initialized handshape.

The semantic similarity ratings were collected to ensure that the results of the present study could not be explained by a Whorfian mechanism in which conceptual representations are mediated by phonological structure. Some target and competitor items contain phonological features that may invoke salient conceptual properties of their meaning (e.g., the turning motion shared by “key” and “screwdriver”). Structural similarity between word pairs, combined with this link between phonological features and conceptual properties, could produce a semantic system in which phonologically overlapping items are treated as more closely related. Furthermore, the results of Morford et al. (2011) could be construed as evidence that phonological similarity in ASL results in stronger relationships between the semantic representations of those words. Since semantic relatedness can drive lexical activation in eye-tracking tasks (Yee & Sedivy, 2006; Yee & Thompson-Schill, 2007), our findings could potentially be due to links between targets and competitors at the semantic level, rather than to parallel lexical activation. However, because bilinguals and monolinguals rated the target-competitor pairs as equally related, our results are not likely to be due to semantic relatedness effects.

When a bilingual’s two languages differ in their rates of current exposure, there can be an asymmetry in the direction of competition (e.g., if a bilingual’s L1 is used less frequently than their L2, then L2-to-L1 competition is larger, relative to L1-to-L2 competition; Spivey & Marian, 1999). Marian and Spivey (2003b) found that this asymmetry could be reduced by providing pre-experimental exposure to the less-used language. Therefore, instructions were provided in ASL to minimize the impact of differences in exposure rates on language processing.

In addition to the randomly selected set of twenty distractors (D1), the analyses were also performed (a) across trials, using a control item that appeared in the same location as the competitor and alongside the same target and distractor items, (b) within trials, using the averaged set of all distractors (40 items), and (c) within trials, using the set of twenty distractor items (D2) that were not in the randomly selected set. The finding that bilinguals activate their ASL representations during spoken English comprehension was robust across comparisons (i.e., for each comparison, bilinguals looked significantly more and longer at competitors than at control/distractor items, and significantly more and longer at competitors than monolinguals did). In addition, split-half analyses were performed to ensure that the effects were not a product of learning or strategy development over time. The results seen in the collapsed analyses were also found in the split-half analyses, both for the overall proportion and duration comparisons and for the time-course (all ps < 0.05).

Within the bilingual group, eight participants were either Children of Deaf Adults (CODAs) or immediate family of Deaf Adults and considered ASL their first language (L1), while five participants learned ASL later in life (average AoA = 16;6). At the time of the study, 11 of the 13 bilingual participants worked in an environment where ASL was used, either as professional interpreters or working with deaf children in a school in the greater Chicago area. Although the bilingual group contained both early (N = 8) and late (N = 5) bimodal bilinguals, comparison of the proportion of looks showed that early and late bilinguals looked at competitor (54.1% vs. 51.4%) and distractor items (34.5% vs. 35.8%) to a similar degree. Likewise, early and late bilinguals looked at competitor (9.7% vs. 11.0%) and distractor items (6.4% vs. 6.3%) for a similar duration (all non-parametric ps > 0.05). When contrasted with monolingual performance, early and late bilinguals showed the same pattern of results: both groups looked more and longer at competitor items than monolinguals did. Although the small sample sizes make the p-values difficult to interpret, effect sizes were similar for the Early [Proportion: t(19) = 2.35, p < 0.05, d = 1.07; Duration: t(19) = 3.085, p < 0.01, d = 1.42] and Late [Proportion: t(16) = 2.74, p < 0.05, d = 1.37; Duration: t(16) = 2.50, p < 0.05, d = 1.25] bilinguals.
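The effect sizes reported in this footnote appear consistent with a common conversion from an independent-samples t statistic to Cohen's d, d = 2t/√df. Because the formula used is not stated, the sketch below treats this conversion as an assumption; under it, the four reported values are reproduced to within about 0.01.

```python
import math


def cohens_d_from_t(t, df):
    """Approximate Cohen's d from an independent-samples t: d = 2t / sqrt(df)."""
    return 2 * t / math.sqrt(df)


# (group, measure): (t, df, reported d), as reported in this footnote.
REPORTED = {
    ("Early", "Proportion"): (2.35, 19, 1.07),
    ("Early", "Duration"): (3.085, 19, 1.42),
    ("Late", "Proportion"): (2.74, 16, 1.37),
    ("Late", "Duration"): (2.50, 16, 1.25),
}

for (group, measure), (t, df, d_reported) in REPORTED.items():
    d = cohens_d_from_t(t, df)
    print(f"{group:5} {measure:10} d = {d:.2f} (reported {d_reported:.2f})")
```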

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Allopenna PD, Magnuson JS, Tanenhaus MK. Tracking the time course of spoken word recognition using eye movements: Evidence for continuous mapping models. Journal of Memory and Language. 1998;38:419–439.

- Bartolotti J, Marian V. Language learning and control in monolinguals and bilinguals. Cognitive Science. 2012. doi: 10.1111/j.1551-6709.2012.01243.x.

- Blumenfeld H, Marian V. Constraints on parallel activation in bilingual spoken language processing: Examining proficiency and lexical status using eye-tracking. Language and Cognitive Processes. 2007;22(5):633–660.

- Brentari D. A Prosodic Model of Sign Language Phonology. MIT Press; 1998.

- Brysbaert M, New B. Moving beyond Kucera and Francis: A critical evaluation of current word frequency norms and the introduction of a new and improved word frequency measure for American English. Behavior Research Methods. 2009;41(4):977–990. doi: 10.3758/BRM.41.4.977.

- Canseco-Gonzalez E, Brehm L, Brick CA, Brown-Schmidt S, Fischer K, Wagner K. Carpet or cárcel: The effect of age of acquisition and language mode on bilingual lexical access. Language and Cognitive Processes. 2010;25(5):669–705.

- Casey S, Emmorey K. Co-speech gesture in bimodal bilinguals. Language and Cognitive Processes. 2008:1–23. doi: 10.1080/01690960801916188.

- Cutler A, Weber A, Otake T. Asymmetric mapping from phonetic to lexical representations in second-language listening. Journal of Phonetics. 2006;34:269–284.

- Dijkstra T, Frauenfelder UH, Schreuder R. Bidirectional grapheme-phoneme activation in a bimodal detection task. Journal of Experimental Psychology: Human Perception and Performance. 1993;19(5):931–950. doi: 10.1037//0096-1523.19.5.931.

- Dijkstra T, Van Heuven WJB. The architecture of the bilingual word recognition system: From identification to decision. Bilingualism: Language and Cognition. 2002;5:175–197.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. Circle Pines, MN: American Guidance Service; 1997.

- Dussias PE, Sagarra N. The effect of exposure on syntactic parsing in Spanish-English bilinguals. Bilingualism: Language and Cognition. 2007;10:101–116.

- Emmorey K, Borinstein HB, Thompson R, Gollan TH. Bimodal bilingualism. Bilingualism: Language and Cognition. 2008;11(1):43–61. doi: 10.1017/S1366728907003203.

- Emmorey K, Petrich J, Gollan T. Simultaneous production of ASL and English costs the speaker, but benefits the perceiver. Presented at the 7th International Symposium on Bilingualism; Utrecht, Netherlands. 2009.

- Finkbeiner M, Forster K, Nicol J, Nakamura K. The role of polysemy in masked semantic and translation priming. Journal of Memory and Language. 2004;51(1):1–22.

- Geal-Dor M, Goldstein A, Kamenir Y, Babkoff H. The effect of aging on event-related potentials and behavioral responses: Comparison of tonal, phonologic, and semantic targets. Clinical Neurophysiology. 2006;117(9):1974–1989. doi: 10.1016/j.clinph.2006.05.024.

- Giaquinto S, Ranghi F, Butler S. Stability of word comprehension with age. An electrophysiological study. Mechanisms of Ageing and Development. 2007;128(11–12):628–636. doi: 10.1016/j.mad.2007.09.003.

- Grainger J, Frenck-Mestre C. Masked translation priming in bilinguals. Language and Cognitive Processes. 1998;13:601–623.

- Hartsuiker RJ, Pickering MJ, Veltkamp E. Is syntax separate or shared between languages? Cross-linguistic syntactic priming in Spanish-English bilinguals. Psychological Science. 2004;15(6):409–414. doi: 10.1111/j.0956-7976.2004.00693.x.

- Huettig F, Altmann GTM. Word meaning and the control of eye fixation: semantic competitor effects and the visual world paradigm. Cognition. 2005;96:B23–B32. doi: 10.1016/j.cognition.2004.10.003.

- Huettig F, McQueen JM. The tug of war between phonological, semantic and shape information in language-mediated visual search. Journal of Memory and Language. 2007;57:460–482.

- Ju M, Luce PA. Falling on sensitive ears. Psychological Science. 2004;15:314–318. doi: 10.1111/j.0956-7976.2004.00675.x.

- Kaushanskaya M, Marian V. Non-target language recognition and interference: Evidence from eye-tracking and picture naming. Language Learning. 2007;57(1):119–163.

- Kroll J, De Groot AMB. Lexical and conceptual memory in the bilingual: Mapping form to meaning in two languages. In: Kroll J, De Groot AMB, editors. Tutorials in Bilingualism: Psycholinguistic Perspectives. Mahwah, NJ: Lawrence Erlbaum; 1997.

- La Heij W, Hooglander A, Kerling R, van der Velden E. Nonverbal context effects in forward and backward word translation: Evidence for concept mediation. Journal of Memory and Language. 1996;35(5):648–665.

- Loebell H, Bock K. Structural priming across languages. Linguistics. 2003;41(5):791–824.

- Magnuson JS, Tanenhaus MK, Aslin RN. Immediate effects of form-class constraints on spoken word recognition. Cognition. 2008;108(3):866–873. doi: 10.1016/j.cognition.2008.06.005.

- Marian V. Audio-visual integration during bilingual language processing. In: Pavlenko A, editor. The Bilingual Mental Lexicon. Clevedon, UK: Multilingual Matters; 2009.

- Marian V, Blumenfeld H, Boukrina O. Sensitivity to phonological similarity within and across languages: A native/non-native asymmetry in bilinguals. Journal of Psycholinguistic Research. 2008;37:141–170. doi: 10.1007/s10936-007-9064-9.

- Marian V, Blumenfeld H, Kaushanskaya M. The language experience and proficiency questionnaire (LEAP-Q): Assessing language profiles in bilinguals and multilinguals. Journal of Speech, Language, and Hearing Research. 2007;50(4):940–967. doi: 10.1044/1092-4388(2007/067).

- Marian V, Spivey M. Bilingual and monolingual processing of competing lexical items. Applied Psycholinguistics. 2003a;24:173–193.

- Marian V, Spivey M. Competing activation in bilingual language processing: Within- and between-language competition. Bilingualism: Language and Cognition. 2003b;6(2):97–115.

- Marslen-Wilson WD. Functional parallelism in spoken word-recognition. Cognition. 1987;25:71–102. doi: 10.1016/0010-0277(87)90005-9.

- McClelland JL, Mirman D, Holt LL. Are there interactive processes in speech perception? Trends in Cognitive Sciences. 2006;10(8):363–369. doi: 10.1016/j.tics.2006.06.007.

- McQueen JM, Jesse A, Norris D. No lexical-prelexical feedback during speech perception, or: Is it time to stop playing those Christmas tapes? Journal of Memory and Language. 2009;61(1):1–18.

- Meyer AS, Belke E, Telling AL, Humphreys GW. Early activation of object names in visual search. Psychonomic Bulletin & Review. 2007;14(4):710–716. doi: 10.3758/bf03196826.

- Morford J, Wilkinson E, Villwock A, Piñar P, Kroll J. When deaf signers read English: Do written words activate their sign translations? Cognition. 2011;118(2):286–292. doi: 10.1016/j.cognition.2010.11.006.

- Navarra J, Soto-Faraco S. Hearing lips in a second language: Visual articulatory information enables the perception of second language sounds. Psychological Research. 2005;71:4–12. doi: 10.1007/s00426-005-0031-5.

- Neville HJ, Coffey SA, Lawson DS, Fischer A, Emmorey K, Bellugi U. Neural systems mediating American Sign Language: Effects of sensory experience and age of acquisition. Brain and Language. 1997;57:285–308. doi: 10.1006/brln.1997.1739.

- Salamoura A, Williams JN. Processing verb argument structure across languages: Evidence for shared representations in the bilingual lexicon. Applied Psycholinguistics. 2007;28(4):627–660.

- Schoonbaert S, Duyck W, Brysbaert M, Hartsuiker RJ. Semantic and translation priming from a first language to a second and back: Making sense of the findings. Memory and Cognition. 2009;37:569–586. doi: 10.3758/MC.37.5.569.

- Schoonbaert S, Hartsuiker RJ, Pickering MJ. The representation of lexical and syntactic information in bilinguals: Evidence from syntactic priming. Journal of Memory and Language. 2007;56(2):153–171.

- Schwartz AI, Kroll JF. Bilingual lexical activation in sentence context. Journal of Memory and Language. 2006;55:197–212.

- Shahin AJ, Miller LM. Multisensory integration enhances phonemic restoration. Journal of the Acoustical Society of America. 2009;125(3):1744–1750. doi: 10.1121/1.3075576.

- Shook A, Marian V. Language processing in bimodal bilinguals. In: Caldwell E, editor. Bilinguals: Cognition, Education and Language Processing. Hauppauge, NY: Nova Science Publishers; 2009.

- Sommers MS, Hale S, Myerson J, Rose N, Tye-Murray N, Spehar B. Listening comprehension: Approach, design, procedure. Ear and Hearing. 2011;32(6):219–240. doi: 10.1097/AUD.0b013e3182234cf6.

- Spironelli C, Angrilli A. Developmental aspects of automatic word processing: Language lateralization of early ERP components in children, young adults and middle-aged subjects. Biological Psychology. 2009;80(1):35–45. doi: 10.1016/j.biopsycho.2008.01.012.

- Spivey MJ, Marian V. Cross talk between native and second languages: Partial activation of an irrelevant lexicon. Psychological Science. 1999;10(3):281–284.

- Sumby WH, Pollack I. Visual contributions to speech intelligibility in noise. Journal of the Acoustical Society of America. 1954;26:212–215.

- Summerfield AQ. Some preliminaries to a comprehensive account of audio-visual speech perception. In: Dodd B, Campbell R, editors. Hearing by eye: The psychology of lip-reading. Hillsdale, NJ: Erlbaum; 1987. pp. 3–51.

- Sunderman G, Kroll J. First language activation during second language lexical processing: An investigation of lexical form, meaning, and grammatical class. Studies in Second Language Acquisition. 2006;28(3):387–422.

- Tanenhaus MK, Spivey-Knowlton M, Eberhard K, Sedivy J. Integration of visual and linguistic information during spoken language comprehension. Science. 1995;268:1632–1634. doi: 10.1126/science.7777863.

- Thierry G, Wu YJ. Brain potentials reveal unconscious translation during foreign-language comprehension. Proceedings of the National Academy of Sciences. 2007;104:12530–12535. doi: 10.1073/pnas.0609927104.

- Van Hell JG, Ormel E, van der Loop J, Hermans D. Cross-language interaction in unimodal and bimodal bilinguals. Paper presented at the 16th Conference of the European Society for Cognitive Psychology; Cracow, Poland. September 2–5, 2009.

- Van Heuven WJB, Dijkstra T, Grainger J. Orthographic neighborhood effects in bilingual word recognition. Journal of Memory and Language. 1998;39:458–483.

- Van Wijnendaele I, Brysbaert M. Visual word recognition in bilinguals: Phonological priming from the second to the first language. Journal of Experimental Psychology: Human Perception and Performance. 2002;28(3):616–627.

- Viviani P. Eye movements in visual search: Cognitive, perceptual and motor control aspects. Reviews of Oculomotor Research. 1990;4:353–393.

- Weber A, Cutler A. Lexical competition in non-native spoken-word recognition. Journal of Memory and Language. 2004;50:1–25.

- Weber A, Braun B, Crocker MW. Finding referents in time: Eye-tracking evidence for the role of contrastive accents. Language and Speech. 2006;49(3):367–392. doi: 10.1177/00238309060490030301.

- Yee E, Sedivy J. Eye movements reveal transient semantic activation during spoken word recognition. Journal of Experimental Psychology: Learning, Memory and Cognition. 2006;32:1–14. doi: 10.1037/0278-7393.32.1.1.

- Yee E, Thompson-Schill S. Does lexical activation flow from word meanings to word sounds during spoken word recognition? Poster presented at the 48th annual meeting of the Psychonomic Society; Long Beach, CA. 2007.

- Ziegler JC, Muneaux M, Grainger J. Neighborhood effects in auditory word recognition: Phonological competition and orthographic facilitation. Journal of Memory and Language. 2003;48:779–793.