Abstract

Background

Clinical trials of medications to alleviate the cognitive and behavioural symptoms of individuals with fragile X syndrome (FXS) are now underway. However, few reliable, valid and/or sensitive outcome measures are available that can be administered directly to individuals with FXS. The majority of assessments employed in clinical trials may be suboptimal for individuals with intellectual disability (ID) because they require face-to-face interaction with an examiner, involve taxing administration periods, and do not provide reinforcement and/or feedback during the test. We therefore examined the psychometric properties of a new computerised ‘learning platform’ approach to outcome measurement in FXS.

Method

A brief computerised test, incorporated into the Discrete Trial Trainer© – a commercially available software program designed for children with ID – was administered to 13 girls with FXS, 12 boys with FXS and 15 matched ID controls aged 10 to 23 years (mental age = 4 to 12 years). The software delivered automated contingent access to reinforcement, feedback, token delivery and prompting procedures (if necessary) on each trial to facilitate responding. The primary outcome measure was the participant’s learning rate, derived from the participant’s cumulative record of correct responses.

Results

All participants were able to complete the test and floor effects appeared to be minimal. Learning rates averaged approximately five correct responses per minute, ranging from one to eight correct responses per minute in each group. Test–retest reliability of the learning rates was 0.77 for girls with FXS, 0.90 for boys with FXS and 0.90 for matched ID controls. Concurrent validity with raw scores obtained on the Arithmetic subtest of the Wechsler Intelligence Scale for Children-III was 0.35 for girls with FXS, 0.80 for boys with FXS and 0.56 for matched ID controls. The learning rates were also highly sensitive to change, with effect sizes of 1.21, 0.89 and 1.47 in each group respectively following 15 to 20 sessions (each lasting 15 min) of intensive discrete trial training conducted over 1.5 days.

Conclusions

These results suggest that a learning platform approach to outcome measurement could provide investigators with a reliable, valid and highly sensitive measure to evaluate treatment efficacy, not only for individuals with FXS but also for individuals with other forms of ID.

Keywords: behavioural measurement methods, cognitive behaviour, fragile X, intellectual disability

Introduction

Individuals diagnosed with fragile X syndrome (FXS), the most common known form of inherited intellectual disability (ID), exhibit a range of cognitive, behavioural and medical symptoms that can significantly interfere with educational progress and daily functioning (Reiss & Hall 2007; Reiss 2009). These symptoms include mild to severe ID (Skinner et al. 2005), social anxiety (Hall et al. 2009), hyperactivity (Sullivan et al. 2006), inattention (Cornish et al. 2004), autistic-like behaviours (Hall et al. 2010) and increased risk of seizures (Berry-Kravis et al. 2010). Over the past decade, a number of clinical trials have been conducted in an attempt to ameliorate these symptoms, and further clinical trials are planned or are now underway (Berry-Kravis et al. 2011).

Medications evaluated to date in individuals with FXS include the ampakine compound CX516 (Berry-Kravis et al. 2006), lithium (Berry-Kravis et al. 2008a), the mGluR5 inhibitors fenobam (Berry-Kravis et al. 2009) and AFQ056 (Jacquemont et al. 2011), minocycline (Paribello et al. 2010; Utari et al. 2010), acamprosate (Erickson et al. 2010), riluzole (Erickson et al. 2011c), the acetylcholinesterase inhibitor donepezil (Kesler et al. 2009), aripiprazole (Erickson et al. 2011a, 2011b), memantine (Erickson et al. 2009) and oxytocin (Hall et al. 2012). These medication trials could be considered ‘disease-specific’ in that they are intended specifically to target the potential downstream systems involved in the pathogenesis of FXS (Reiss 2009).

Fragile X syndrome is a genetic disorder caused by mutations to a single gene (FMR1) located on the long arm of the X chromosome (Verkerk et al. 1991). Expansions of the trinucleotide CGG-repeat sequence in the promoter region of the gene cause the gene to become hypermethylated, resulting in reduced or absent levels of its protein product, FMRP – a key protein involved in synaptic plasticity and dendritic maturation in the brain (Greenough et al. 2001). Recent studies have shown that FMRP may be involved in regulating translation and/or signalling pathways associated with a number of key brain systems such as those involving glutamate (Antar et al. 2004), acetylcholine (Chang et al. 2008), dopamine (Wang et al. 2008) and gamma-aminobutyric acid (Olmos-Serrano et al. 2010). Thus, manipulation of these systems to ‘correct’ for downstream effects associated with reduced FMRP may help to improve learning and memory in FXS.

Despite increased knowledge concerning these potential downstream targets, only small improvements in cognitive and behavioural functioning have actually been observed in the many clinical trials conducted to date (see Hall 2009). Indeed, given that individuals with FXS exhibit a broad range of cognitive and behavioural symptoms, the evaluation of clinical change using existing standardised measures of cognitive and behavioural functioning would appear to be particularly challenging in this population. Until a sensitive, valid and reliable outcome measure is available for use in clinical trials for individuals with FXS, it is unclear whether the small effect sizes obtained in studies conducted to date are due to the poor efficacy of the medications themselves, or to the poor psychometric properties and clinical utility of the outcome measures employed in the studies.

For example, Berry-Kravis and colleagues (Berry-Kravis et al. 2006) administered a large battery of standardised cognitive tests to 49 participants with FXS, aged 18 to 49 years, in an attempt to measure treatment response to the ampakine CX516. Measures administered directly to each participant included subtests of the Test of Visual-Perceptual Skills (TVPS) (Gardner 1996), the Woodcock-Johnson Tests of Cognitive Ability-Revised (W-J R) (Woodcock & Johnson 1990), the Repeatable Battery for the Assessment of Neuropsychological Status (RBANS) (Randolph 1998), the Integrated Visual & Auditory Continuous Performance Test (IVA) (Sandford & Turner 1995), the Peabody Picture Vocabulary Test-III (PPVT-III) (Dunn & Dunn 1997), the Preschool Language Scale-4 (PLS-4) (Zimmermann et al. 2002), the Clinical Evaluation of Language Fundamentals-3 (CELF-3) (Semel et al. 1995) and the Autism Diagnostic Observation Schedule (ADOS) (Lord et al. 1999). Unfortunately, measurement issues associated with these tests seriously compromised the investigators' ability to appraise the efficacy of the treatment. For example, very few patients could complete the entire test battery; some tests exhibited poor reliability (e.g. the ADOS and TVPS), and others (e.g. the CELF-3 and RBANS subtests) could not be completed by lower-functioning individuals. Although the IVA was one of the few measures that could be completed by all participants, the authors stated that ‘The IVA was later found to be too difficult or confusing for some patients and the data quality could not be assured’ (p. 529). Floor effects were also apparent for many of the assessment instruments and, in some cases, different versions of the language assessments had to be administered simply to cover the wide mental age range of the study participants.

These issues highlight some of the many difficulties associated with administering standardised cognitive and behavioural tests to individuals with ID. First, the time required to administer standardised test batteries can be considerable, which may decrease the motivation of an individual with ID to complete the test (Koegel et al. 1997). Second, most standardised tests involve direct face-to-face interaction with a trained examiner, potentially increasing social anxiety that may have detrimental effects on performance (Hall et al. 2006). Third, the normative samples used to derive standard scores for many standardised tests do not include large numbers of individuals with ID; thus, individuals with ID may often obtain scores at the floor of the test (Hessl et al. 2009). Fourth, and perhaps most significantly, standardised tests are usually administered under conditions in which reinforcement and/or feedback is not available to the subject, even though reinforcement and feedback are crucial to promote and maintain successful responding in individuals with ID (Lovaas 2003).

To examine the completion rates and psychometric properties of measures commonly employed to assess individuals with FXS, Berry-Kravis and colleagues (Berry-Kravis et al. 2008b) administered a large battery of tests to 31 participants with FXS, aged 5 to 47 years (mental age range = 2.1 to 10.7 years). The tests included the Carolina Fragile X Project Continuous Performance Test (CPT) (Sullivan et al. 2007), the Card Task (Johnson-Glenberg 2008), the NEPSY tower subtest (Korkman et al. 1998), the Spatial Relations subtest from the W-J R (Woodcock & Johnson 1990), the Symbol Search subtest from the Wechsler Intelligence Scale for Children-IV (WISC-IV) (Wechsler 2003), the List and Story Memory subtests from the RBANS (Randolph 1998) and the Non-Verbal Associative Learning Task (NVALT) (Boutet et al. 2005). Results showed that none of the tests could be completed by all individuals in the study, with the Symbol Search subtest from the WISC-IV having the lowest completion rate (52% of participants) and the NVALT having the highest completion rate (97%). Refusal rates were also highest for the Symbol Search subtest (30% of participants) and lowest for the NVALT (11%). Interestingly, the NVALT was the only test in the assessment battery that actually provided reinforcement to the subject during test trials: subjects received a nickel after each correct response. Indeed, Berry-Kravis et al. (2008b) stated that the NVALT ‘is particularly engaging for subjects with FXS because of the frequent reinforcement the subject receives when he/she gets to keep the nickels found, and because the test produces less anxiety than most tests as the subject does not have to make eye contact or engage socially’ (p. 1753). However, the test–retest reliability of this outcome measure was not reported.

Although not designed as outcome measures for clinical trials, several studies have employed computerised paradigms to measure aspects of behaviour in very young (and less able) participants with FXS. For example, Scerif and colleagues (Scerif et al. 2005) measured oculomotor control in young boys with FXS aged 14 to 55 months by presenting visual stimuli on a computer screen and using reinforcement (in the form of an animated cartoon animal) to maintain accurate looking. In two other studies, Scerif and colleagues (Scerif et al. 2004, 2007) measured visual search and attentional control in young boys with FXS (aged 2 to 5 years) by limiting verbal instructions, providing demonstrations of the procedures, and avoiding face-to-face contact. Finally, Farzin and colleagues (Farzin et al. 2008; Farzin & Rivera 2010) measured dynamic object processing in boys and girls with FXS aged 14 to 45 months using an eye tracking system. These findings suggest that contingent reinforcement, sensitivity to the difficulties experienced by participants with FXS, and computerised presentation may be highly successful procedures to use with younger and less able individuals with FXS. Further, it seems plausible that any test that increases a participant's motivation to respond, provides frequent reinforcement for correct responding, and does not present social demands during the test may provide a sensitive, valid and reliable outcome measure for individuals with FXS (and perhaps for individuals with other forms of ID).

Discrete trial training (DTT), a procedure commonly employed to promote skill acquisition in children with autism (Smith 2001), may be one such approach. Based on the principles of applied behavior analysis (ABA), DTT involves successively presenting learning trials to a participant in a consistent and highly structured manner, combined with ample reinforcement and feedback. Briefly, in a match-to-sample format, DTT may involve the presentation of a sample stimulus, followed by the presentation of two or more comparison stimuli, one of which is the correct matching stimulus. If the student selects the correct comparison stimulus, reinforcement is delivered (e.g. verbal praise, tokens, edibles and/or coins); if the student selects an incorrect comparison stimulus, the student receives feedback that the response was incorrect. Finally, if the student produces a series of incorrect responses to the same sample stimulus, a prompting procedure that increases the salience of the correct comparison stimulus (e.g. moving the incorrect comparison stimuli further away from the student) can be implemented. DTT is thus a simple yet well-researched and established teaching technique that includes many of the features that may be necessary to maintain responding in individuals with ID during test situations. To our knowledge, however, DTT has not been adapted for use as an outcome measure for individuals with ID. Because DTT can now be presented on a computer in an automated fashion (Butter & Mulick 2001), computerised DTT may be particularly appealing for evaluating clinical change in FXS, not least because social anxiety during testing may be minimised.

The purpose of the present study, therefore, was to examine the feasibility of using computerised DTT as an assessment tool to evaluate clinical change in individuals with FXS. Our primary dependent measure of performance was the subject’s learning rate, derived from each participant’s cumulative record of correct responding during the session. Cumulative records have been employed in the experimental analysis of behaviour for well over 60 years (Lattal 2004) to measure behaviours in non-human subjects (Ferster & Skinner 1957) as well as human subjects (Rodgers & Iwata 1991). The slope of the cumulative record, equivalent to the subject’s overall rate of responding, has been shown to be superior to other dependent measures of behaviour such as probability, running rate and response latency (Killeen & Hall 2001). We examined the psychometric properties of the learning rate measure by evaluating its test–retest reliability, concurrent validity and sensitivity to change. Finally, to determine whether this new learning platform approach may be useful for individuals with ID in general, we also included a control group of individuals with ID of unknown aetiology and compared the resultant learning rates.

Method

Participants and setting

Participants were 13 girls with FXS, 12 boys with FXS and 15 matched ID controls (3 females, 12 males). Participants with FXS were recruited via an email sent to members of the National Fragile X Foundation or from an ongoing longitudinal study of children and adolescents with FXS. [Note that five girls, six boys and 11 matched ID controls participated in a concurrent investigation (Hammond et al. 2012).] All participants with FXS had a confirmed genetic diagnosis of FXS (i.e. more than 200 CGG repeats on the FMR1 gene and evidence of aberrant methylation). Two boys with FXS were mosaic. Participants with ID were recruited via local agencies serving individuals with special needs; all tested negative for FXS and none had a diagnosis of a genetic disorder. Participants were included in the study if they were aged between 10 and 23 years, obtained standardised IQs between 50 and 90 points on the Wechsler Abbreviated Scale of Intelligence (WASI) (Wechsler 1999) (note that 50 is the floor of the test), could sit in front of a computer for more than 5 min, and could use a computer mouse without assistance. Individual participant characteristics are listed in Table 1. The mean age (in years) of the participants was 14.4 (SD = 3.6) for girls with FXS, 17.4 (SD = 4.8) for boys with FXS and 16.1 (SD = 4.4) for matched ID controls, a non-significant difference between the groups (F(2,37) = 1.60, P = 0.22). The mean mental age (in years) of the participants (estimated by converting raw scores obtained on the WASI subtests into age-equivalent scores and computing the average) was 8.3 (SD = 1.8) for girls with FXS, 7.3 (SD = 1.8) for boys with FXS and 7.1 (SD = 2.4) for matched ID controls, a non-significant difference between the groups (F(2,37) = 1.37, P = 0.27). The groups were therefore well matched in terms of age and mental age.
As expected, mean IQs were significantly higher for girls with FXS (mean = 72.8, SD = 8.3) than for boys with FXS (mean = 62.3, SD = 8.7) [t(23) = 3.06, P = 0.006, d = 1.24]. Five (38.5%) girls with FXS, four (33.3%) boys with FXS and five (33.3%) matched ID controls obtained scores in the autism spectrum disorder (ASD) range on the Social Communication Questionnaire (i.e. 15 points or above). Within each group, individuals who scored in the ASD range did not differ from those who did not in terms of age, IQ or mental age. Six (46.2%) girls with FXS, eight (66.7%) boys with FXS and five (33.3%) matched ID controls were taking psychoactive medications. Within each group, there were no differences between individuals who were and were not taking psychoactive medications.

Table 1.

Participant characteristics

| Participant | Age (years) | IQ | Mental age (years) | ASD status | Psychoactive medications | ABC-C total score | Learning rate (correct responses/min) |
|---|---|---|---|---|---|---|---|
| **Girls with FXS** | | | | | | | |
| 1 | 21.6 | 78 | 11.00 | Yes | No | 5 | 7.59 |
| 2 | 16.8 | 86 | 11.75 | No | No | 21 | 7.44 |
| 3 | 16.3 | 78 | 7.92 | Yes | Yes | 79 | 7.07 |
| 4 | 15.1 | 63 | 10.17 | Yes | Yes | 65 | 6.00 |
| 5 | 12.3 | 61 | 5.61 | Yes | No | 11 | 5.49 |
| 6 | 10.3 | 84 | 7.90 | No | Yes | 8 | 5.19 |
| 7 | 13.4 | 69 | 7.52 | No | No | 5 | 5.00 |
| 8 | 18.7 | 73 | 9.67 | No | Yes | 11 | 4.98 |
| 9 | 10.2 | 76 | 7.05 | No | Yes | 24 | 4.36 |
| 10 | 10.7 | 77 | 7.75 | No | No | 14 | 3.87 |
| 11 | 14.6 | 65 | 7.09 | No | No | 12 | 3.71 |
| 12 | 10.5 | 75 | 7.17 | Yes | Yes | 59 | 2.46 |
| 13 | 16.4 | 61 | 7.67 | No | No | 9 | 2.43 |
| **Boys with FXS** | | | | | | | |
| 1 | 15.8 | 54 | 6.23 | No | No | 6 | 7.19 |
| 2 | 18.7 | 63 | 7.47 | No | Yes | 7 | 6.76 |
| 3 | 22.8 | 56 | 6.97 | No | No | 33 | 6.35 |
| 4 | 23.9 | 54 | 7.13 | No | Yes | 29 | 5.24 |
| 5 | 21.6 | 62 | 8.12 | Yes | Yes | 44 | 4.73 |
| 6* | 11.2 | 78 | 7.63 | No | Yes | 8 | 4.39 |
| 7* | 17.1 | 79 | 12.00 | Yes | Yes | 7 | 4.22 |
| 8 | 10.3 | 59 | 4.39 | No | Yes | 35 | 4.22 |
| 9 | 14.9 | 65 | 8.25 | Yes | Yes | 54 | 4.07 |
| 10 | 19.6 | 55 | 6.81 | No | No | 5 | 3.02 |
| 11 | 21.8 | 56 | 6.23 | No | No | 0 | 2.11 |
| 12 | 11.1 | 67 | 5.83 | Yes | Yes | 55 | 1.65 |
| **Matched ID controls** | | | | | | | |
| 1 | 14.8 | 90 | 12.75 | No | Yes | 8 | 7.95 |
| 2 | 20.4 | 56 | 6.11 | Yes | Yes | 30 | 7.37 |
| 3 | 22.5 | 68 | 9.33 | No | No | 13 | 6.84 |
| 4 | 18.2 | 79 | 11.17 | No | Yes | 27 | 6.67 |
| 5 | 17.2 | 55 | 6.80 | No | No | 0 | 6.24 |
| 6 | 10.4 | 82 | 8.22 | No | No | 38 | 6.18 |
| 7 | 18.8 | 56 | 7.42 | No | No | 2 | 6.13 |
| 8 | 10.0 | 69 | 5.11 | Yes | No | 17 | 6.08 |
| 9 | 20.4 | 53 | 6.38 | Yes | No | 64 | 5.49 |
| 10 | 14.9 | 61 | 6.98 | No | No | 1 | 5.29 |
| 11 | 12.8 | 66 | 6.61 | No | Yes | 15 | 2.79 |
| 12 | 23.0 | 59 | 6.30 | Yes | Yes | 31 | 2.11 |
| 13 | 14.2 | 54 | 4.04 | Yes | No | 33 | 1.83 |
| 14 | 10.0 | 65 | 5.01 | No | No | 14 | 1.22 |
| 15 | 14.2 | 54 | 4.81 | Yes | No | 41 | 0.80 |

*Mosaic for FXS.

ABC-C, Aberrant Behavior Checklist-Community; ASD, autism spectrum disorder; FXS, fragile X syndrome; ID, intellectual disability.

Sessions were conducted in one of two rooms located within the Department of Psychiatry and Behavioral Sciences at Stanford University. Session rooms contained a table or desk, chairs, a laptop computer and a computer mouse. All procedures were approved by the institutional review board at Stanford University; parental consent and subject assent were obtained in all cases.

Procedures

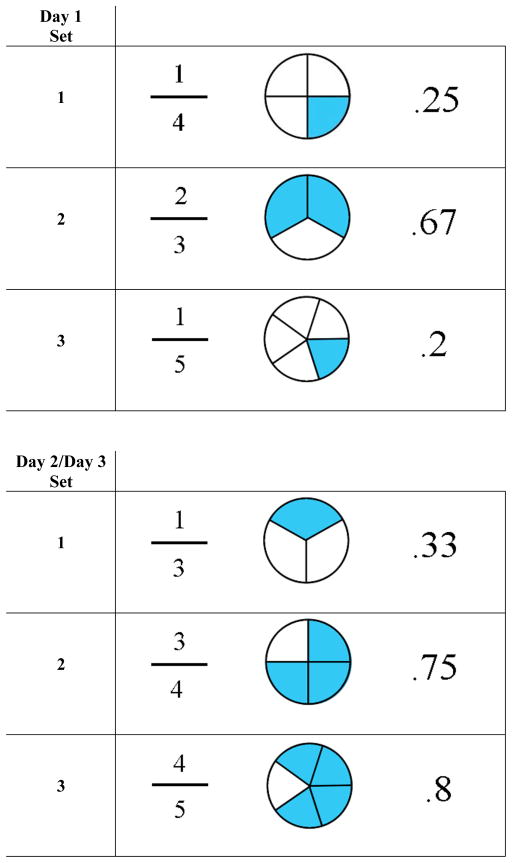

All participants received a single 15-min test session using the Discrete Trial Trainer© (Accelerations Educational Software, 2007) – a commercially available software program that was specifically designed for use with children with ID. The software contains over 200 DTT programs organised into more than 10 key learning domains, including classification, counting, identification, math, money, sequencing, spatial relations, time, word analysis, written words and ‘wh-’ questions (who, what, when, etc.). For the purposes of the present study, we selected two relatively advanced programs from the math domain in the Discrete Trial Trainer©: ‘fractions to pie charts’ and ‘pie charts to decimals’. We selected these stimuli to ensure that participants would be relatively unfamiliar with the stimuli at baseline, and because children with FXS are reported to have difficulties mastering mathematical concepts (Murphy & Mazzocco 2008). Each program contained six pairs of matching stimuli (Fig. 1). In the fractions to pie charts program, fractions were always the sample stimuli and pie charts were the comparison stimuli. In the pie charts to decimals program, pie charts were always the sample stimuli and decimals were the comparison stimuli. Stimulus sets were randomised across participants so that three of the six sets from each program were presented to the participant during the test. (The remaining three sets from each program were employed during a test–retest reliability session conducted on the following day; see below.)

Figure 1.

Stimuli employed in the assessment. Three sets of stimuli were randomly chosen from six available sets on day 1. The remaining three sets of stimuli were presented for the reliability and sensitivity analyses.

Each participant received the following verbal instructions before a session began: ‘Today you are going to work on some math tasks on the computer. First, you will see a fraction or a pie chart appear at the top of the computer screen. You will also hear a question spoken in a female voice. Three boxes then will appear below the top box, one of which will be the correct answer to the question. Your job will be to select the box that best matches the top box using the computer mouse. Another math problem then will appear, and so on. You will also see four green circular tokens displayed at the bottom right corner of the screen. If you choose the correct answer you will receive another token; if you choose the incorrect answer, a token will be removed. Once you have five tokens, you will be allowed to play on a computer game for a short time. You will have about 10 seconds to answer each question, so you should have plenty of time. Please try to do the best you can. And if you’re not sure of an answer, it’s okay to guess. Any questions?’ For individuals who did not appear to understand the instructions, the procedures were demonstrated on the computer using non-test items.

The default options in the Discrete Trial Trainer© were selected for each session, and the two programs as well as the computer games ‘Whack an alien’, ‘Whack a spider’, ‘Super-pong’ and ‘Blinky’ (available within the Discrete Trial Trainer©) were selected for presentation. Stimuli were programmed to be presented in the ‘Random mode’ at the beginning of each session. Each trial was scheduled to last 10 s. At the beginning of a trial, the computer selected one of the programs at random and then randomly selected a sample stimulus from the set of three available items within that program (e.g. ¼). A pre-programmed female vocal prompt then said ‘Match the correct fraction’. After 2 s, three comparison stimuli (the correct matching stimulus and two ‘distracter’ stimuli selected from the remaining comparison stimuli in the program) were presented equidistant underneath the sample stimulus for 8 s. Correct responses resulted in the delivery of reinforcement, i.e. pre-programmed vocal feedback (e.g. ‘good job, you showed me one quarter!’) and a token. All comparison stimuli were then removed and the correct matching stimulus was displayed in the middle of the screen for 2 s. Following a 2-s inter-trial interval, the next trial began.

If the participant responded incorrectly on a trial, the two incorrect distracter stimuli were removed, and the correct matching stimulus remained on the screen for 2 s, accompanied by a pre-programmed vocal ‘reminder’ of the correct matching stimulus (e.g. ‘one-quarter’). A token was also removed from the column of tokens. Following a 2-s inter-trial interval, the same sample stimulus was presented again on the next trial with the comparison stimuli being presented in a different randomised order.
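The trial sequence described in the two paragraphs above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Discrete Trial Trainer©'s actual implementation: the stimulus labels in `PROGRAMS` and the names `Trial`, `make_trial` and `next_trial` are hypothetical, and presentation timing (2 s for the sample alone, 8 s with comparisons, a 2-s inter-trial interval) is omitted. With three sets per program, the two distracters are simply the remaining comparison stimuli in that program.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Hypothetical stimulus labels standing in for the images in Fig. 1;
# each sample stimulus maps to its correct (matching) comparison stimulus.
PROGRAMS = {
    "fractions_to_pie_charts": {"1/4": "pie 1/4", "1/2": "pie 1/2", "3/4": "pie 3/4"},
    "pie_charts_to_decimals": {"pie 1/4": "0.25", "pie 1/2": "0.5", "pie 3/4": "0.75"},
}


@dataclass
class Trial:
    program: str
    sample: str
    comparisons: list[str]  # correct match plus two distracters, shuffled


def make_trial(program: str, sample: str, rng: random.Random) -> Trial:
    """Present the correct match with two distracters from the same program."""
    matches = PROGRAMS[program]
    correct = matches[sample]
    others = [c for c in matches.values() if c != correct]
    comparisons = [correct] + rng.sample(others, 2)
    rng.shuffle(comparisons)
    return Trial(program, sample, comparisons)


def next_trial(previous: Optional[Trial], was_correct: bool,
               rng: random.Random) -> Trial:
    """After a correct response (or at session start), choose a program and a
    sample at random; after an incorrect response, repeat the same sample with
    the comparison stimuli in a different random order."""
    if previous is not None and not was_correct:
        retry = make_trial(previous.program, previous.sample, rng)
        while retry.comparisons == previous.comparisons:  # force a new order
            rng.shuffle(retry.comparisons)
        return retry
    program = rng.choice(list(PROGRAMS))
    sample = rng.choice(list(PROGRAMS[program]))
    return make_trial(program, sample, rng)
```

Passing a seeded `random.Random` keeps a simulated session reproducible, which is convenient when checking downstream scoring code.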

If the participant responded incorrectly on two successive trials with the same sample stimulus, the computer initiated a prompting procedure on the next trial to assist the participant in making a correct response. On prompted trials, the computer decreased the size of the incorrect comparison stimuli and moved them away from the correct (matching) comparison stimulus. If the participant continued to select the incorrect comparison stimuli on prompted trials, the distracter stimuli were removed altogether and the participant had no option but to select the correct matching stimulus. Once a correct response occurred, the computer systematically increased the size of the distracter stimuli and moved them closer to the correct (matching) stimulus on the bottom row of the screen on subsequent trials, first with only one distracter stimulus presented and then with two, until stimulus presentation was again identical to that before the prompting procedure was implemented.
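The prompting and fading sequence can be modelled as a small state machine. The sketch below is hypothetical: the state names are invented, fading is collapsed into two steps (one distracter, then two), and the handling of errors made during fading (a return to shrunken distracters) is an assumption because the text does not describe it. Because an incorrect response causes the same sample to be repeated, a single counter of consecutive errors is sufficient to detect two successive errors with the same sample.

```python
from dataclasses import dataclass

# Hypothetical labels for the presentation conditions described above.
UNPROMPTED = "unprompted"          # two full-size distracters beside the match
SHRUNK = "distracters_shrunk"      # distracters smaller and moved away
NO_DISTRACTERS = "no_distracters"  # only the correct match remains
FADE_ONE = "fade_one_distracter"   # one distracter reintroduced
FADE_TWO = "fade_two_distracters"  # both distracters back, not yet full size


@dataclass
class PromptState:
    """Tracks prompting for the sample stimulus currently being presented."""
    level: str = UNPROMPTED
    consecutive_errors: int = 0

    @property
    def prompted(self) -> bool:
        return self.level != UNPROMPTED

    def update(self, correct: bool) -> str:
        """Record a response and return the condition for the next trial."""
        if self.level == UNPROMPTED:
            self.consecutive_errors = 0 if correct else self.consecutive_errors + 1
            if self.consecutive_errors >= 2:
                self.level = SHRUNK
        elif self.level == SHRUNK:
            self.level = FADE_ONE if correct else NO_DISTRACTERS
        elif self.level == NO_DISTRACTERS:
            self.level = FADE_ONE  # only the match can be selected here
        elif self.level == FADE_ONE:
            self.level = FADE_TWO if correct else SHRUNK  # assumption on errors
        elif self.level == FADE_TWO:
            if correct:
                self.level, self.consecutive_errors = UNPROMPTED, 0
            else:
                self.level = SHRUNK  # assumption on errors
        return self.level
```

The `prompted` flag matters downstream: only unprompted trials count towards the learning rate.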

Once the participant had acquired five tokens, the computer randomly selected one of the computer games and presented it to the participant for approximately 20 s. When the game had finished, the computer presented the next trial with no tokens displayed.
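A minimal sketch of the token economy, assuming (as the instructions to participants state) that a session starts with four tokens, that a correct response adds one token and an incorrect response removes one, and that the board is cleared after each game. Whether the token count could fall below zero is not stated; the sketch assumes it cannot. `TokenBoard` and `record` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class TokenBoard:
    tokens: int = 4       # four tokens are displayed when the session starts
    exchange_at: int = 5  # a game is delivered once five tokens are held

    def record(self, correct: bool) -> bool:
        """Update the board after a trial; return True if a game is due."""
        if correct:
            self.tokens += 1
        else:
            self.tokens = max(0, self.tokens - 1)  # assumption: never below zero
        if self.tokens >= self.exchange_at:
            self.tokens = 0  # the next trial starts with no tokens displayed
            return True
        return False
```

Under these assumptions the first correct response of a session earns a game immediately, after which five consecutive correct responses are needed for the next one.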

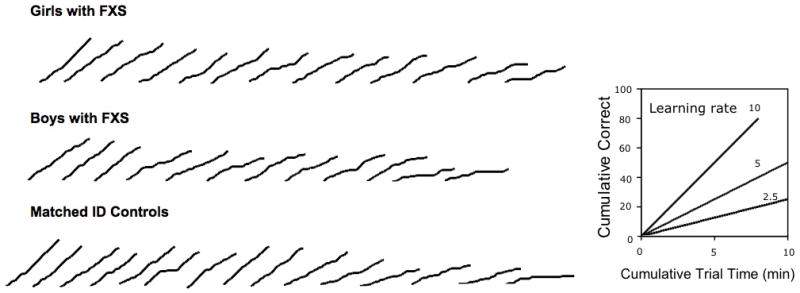

Data collection was automated on a trial-by-trial basis, such that the computer automatically recorded the trial type (i.e. whether the trial was prompted or unprompted), the duration of each trial (in seconds), and whether the participant had responded correctly on a trial. From these data, a cumulative record of correct responses to unprompted trials was plotted as a function of the cumulative trial duration (Fig. 2). The participant’s learning rate (which corresponds to the slope of the participant’s cumulative record) was then calculated as the cumulative number of correct responses obtained on unprompted trials divided by the cumulative trial duration (in minutes).

Figure 2.

Cumulative records plotted over the course of the test for each participant from the session on day 1. Note that each participant’s learning rate corresponds to the slope of the participant’s cumulative record. FXS, fragile X syndrome; ID, intellectual disability.
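The learning-rate calculation described above can be sketched as follows, assuming a hypothetical per-trial log with the three fields the software recorded (prompted or not, duration in seconds, correct or not). One point is an assumption: the text does not say whether prompted trials contribute to the cumulative duration; here they do, so the rate is correct unprompted responses per minute of total trial time. The "slope" is the end point of the cumulative record divided by its total duration, not a regression fit.

```python
from dataclasses import dataclass
from itertools import accumulate


@dataclass
class TrialRecord:  # hypothetical log format
    prompted: bool
    duration_s: float
    correct: bool


def cumulative_record(trials):
    """Points (cumulative minutes, cumulative correct unprompted responses)."""
    times = list(accumulate(t.duration_s / 60 for t in trials))
    counts = list(accumulate(int(t.correct and not t.prompted) for t in trials))
    return list(zip(times, counts))


def learning_rate(trials):
    """Correct unprompted responses per minute of cumulative trial time."""
    total_min = sum(t.duration_s for t in trials) / 60
    if total_min == 0:
        raise ValueError("no trial time recorded")
    n_correct = sum(1 for t in trials if t.correct and not t.prompted)
    return n_correct / total_min
```

For example, two correct unprompted responses in 30 s of total trial time give a learning rate of 4 correct responses per minute.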

To evaluate test–retest reliability of the learning rates, eight girls with FXS, eight boys with FXS and all 15 participants with ID received a second 15-min test session on the following day (day 2). These participants then received 15 to 20 sessions of intensive computerised DTT on the Discrete Trial Trainer© using all 12 pairs of stimuli shown in Fig. 1; these sessions were conducted over 4–6 h on the second day and on the morning of the third day. (These data are reported in a separate paper.) To evaluate the sensitivity to change of the learning rates, these participants then received a third test session on the third day using the day 2 stimuli.

Results

Figure 2 shows each participant’s cumulative record of correct responses from the first session plotted for girls with FXS (upper panel), boys with FXS (middle panel) and matched ID controls (lower panel). As can be seen from the figure, the majority of the cumulative records were linear, suggesting that most participants responded at a steady rate throughout the session. However, for some individuals, different patterns of responding can be seen. For example, for participant number 6 in the matched control group, the cumulative record was steep, then flat, then steep again. It appears that this individual was learning successfully, then lapsed in attention (or experienced difficulty learning a particular stimulus relation), and then began learning successfully again (see the sixth cumulative record from the left in the bottom row of Fig. 2). For participant 15 in the matched control group, the slope of the cumulative record is shallow throughout the session suggesting that this individual experienced greater difficulties in learning throughout, and therefore required prompts consistently (see the first cumulative record from the right in the bottom row of Fig. 2).

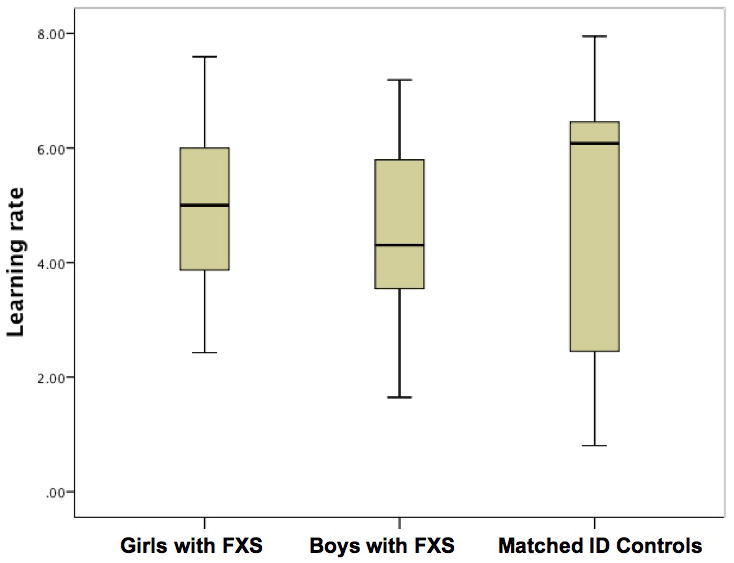

The learning rates, calculated from the cumulative records, are shown in Table 1. Box plots of the learning rates for each group are shown in Fig. 3. The learning rates were well distributed and had a good range (approx. one to eight correct responses per minute), and no group contained significant outliers. Mean learning rates were 5.05 (SD = 1.70) for girls with FXS, 4.50 (SD = 1.73) for boys with FXS and 4.87 (SD = 2.41) for matched ID controls, a non-significant difference between the groups (F(2,37) = 0.24, P = 0.79).

Figure 3.

Box plots of the learning rates from the session on day 1 plotted for each group. FXS, fragile X syndrome; ID, intellectual disability.
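As a check on the group summaries, the day-1 learning rates in Table 1 can be re-analysed with the Python standard library. The sketch computes each group's mean and sample SD and a one-way between-groups ANOVA (the function name `one_way_anova` is ours); with 40 participants in three groups, the within-groups degrees of freedom are 40 - 3 = 37. Run on the tabled values, it prints means of 5.05, 4.50 and 4.87, SDs of 1.70, 1.73 and 2.41, and F(2,37) = 0.24, reproducing the reported summaries. A P value would additionally require the F distribution (e.g. from SciPy), which is omitted here.

```python
from statistics import mean, stdev

# Day-1 learning rates (correct responses/min) transcribed from Table 1.
RATES = {
    "Girls with FXS": [7.59, 7.44, 7.07, 6.00, 5.49, 5.19, 5.00,
                       4.98, 4.36, 3.87, 3.71, 2.46, 2.43],
    "Boys with FXS": [7.19, 6.76, 6.35, 5.24, 4.73, 4.39,
                      4.22, 4.22, 4.07, 3.02, 2.11, 1.65],
    "Matched ID controls": [7.95, 7.37, 6.84, 6.67, 6.24, 6.18, 6.13, 6.08,
                            5.49, 5.29, 2.79, 2.11, 1.83, 1.22, 0.80],
}


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA."""
    all_values = [x for g in groups for x in g]
    grand = mean(all_values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = 0.0
    for g in groups:
        m = mean(g)
        ss_within += sum((x - m) ** 2 for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within


for name, rates in RATES.items():
    print(f"{name}: mean = {mean(rates):.2f}, SD = {stdev(rates):.2f}")
f, df1, df2 = one_way_anova(list(RATES.values()))
print(f"F({df1},{df2}) = {f:.2f}")
```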

Correlations between participant background characteristics and subsequent learning rates indicated that there was a significant association between the learning rates and mental age for girls with FXS [r(13) = 0.623, P = 0.023] and for matched ID controls [r(15) = 0.678, P = 0.005], but not for boys with FXS [r(12) = 0.100, P = 0.758]. There were no other associations between learning rates and participant background characteristics.

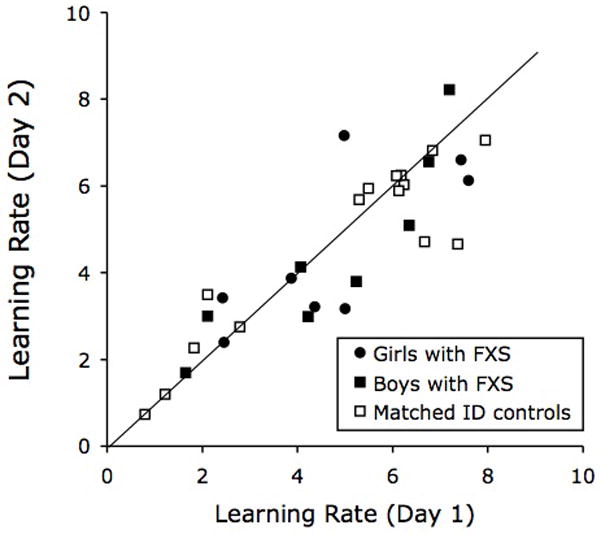

Figure 4 shows test–retest reliability data obtained for those participants who received the second test session on the following day. The figure shows plots of the learning rates obtained on the second day as a function of learning rates obtained on the first day. The intra-class correlations (ICC) were 0.768 for girls with FXS, 0.900 for boys with FXS and 0.903 for matched ID controls, indicating that the test–retest reliability of the learning rates was good.

Figure 4.

Test–retest reliability data of the learning rates for each group. FXS, fragile X syndrome; ID, intellectual disability.
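The text does not state which form of ICC was used. A common choice for test–retest data is one of the Shrout–Fleiss forms computed from a two-way ANOVA on the subjects × sessions table; the sketch below returns both ICC(2,1) (absolute agreement, sessions treated as random) and ICC(3,1) (consistency, sessions treated as fixed). The function name `icc_two_way` is hypothetical. ICC(3,1) ignores a uniform day-to-day shift in learning rates, whereas ICC(2,1) penalises it.

```python
from statistics import mean


def icc_two_way(day1, day2):
    """Return (ICC(2,1), ICC(3,1)) for n subjects measured on k = 2 occasions."""
    rows = list(zip(day1, day2))
    n, k = len(rows), 2
    grand = mean(list(day1) + list(day2))
    ss_rows = k * sum((mean(r) - grand) ** 2 for r in rows)          # subjects
    ss_cols = n * sum((mean(c) - grand) ** 2 for c in (day1, day2))  # sessions
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    icc_2_1 = (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n)
    icc_3_1 = (ms_rows - ms_error) / (ms_rows + (k - 1) * ms_error)
    return icc_2_1, icc_3_1
```

If every participant improved by exactly one correct response per minute from day 1 to day 2, ICC(3,1) would equal 1 while ICC(2,1) would fall below 1.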

Concurrent validity of the learning rates was evaluated by computing the correlation between the learning rates and raw scores obtained on the Arithmetic subtest of the Wechsler Intelligence Scale for Children-III (WISC-III) (Wechsler 1991). We used raw scores for the Arithmetic subtest because, as expected, the majority of the standardised scores obtained for this subtest were at the floor of the test. To control for ability level, we included mental age as a covariate in the analysis. The partial correlation was highly significant in boys with FXS [r(9) = 0.80, P = 0.003] and significant in matched ID controls [r(12) = 0.563, P = 0.036], but not significant in girls with FXS [r(10) = 0.35, P > 0.05]. We also computed correlations between the learning rates and raw scores obtained on the (non-arithmetical) subtests of the WASI (i.e. the Vocabulary, Similarities, Block Design and Matrix Reasoning subtests), controlling for mental age. These partial correlations were all non-significant.
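A partial correlation controlling for a single covariate (here, mental age) can be computed with the standard first-order formula, r_xy·z = (r_xy - r_xz r_yz) / sqrt((1 - r_xz²)(1 - r_yz²)), and tested with n - 3 degrees of freedom, which matches the degrees of freedom reported above (e.g. 12 boys give r(9)). The sketch implements this with hypothetical function names; for the boys' reported value, `t_statistic(0.80, 9)` gives t = 4.0.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson product-moment correlation."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def partial_r(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y controlling for z."""
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


def partial_r_from_data(x, y, z):
    """Return (partial r, df), with df = n - 3 for one covariate."""
    r = partial_r(pearson(x, y), pearson(x, z), pearson(y, z))
    return r, len(x) - 3


def t_statistic(r, df):
    """t value used to test a (partial) correlation against zero."""
    return r * sqrt(df) / sqrt(1 - r ** 2)
```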

To examine the sensitivity to change of the learning rates, we compared the learning rates obtained in the first session with those obtained in the third session, after participants had received 15 to 20 DTT training sessions of 15 min each with the six sets of stimuli over 1.5 days. We used Cohen's effect size statistic, correcting for the dependence among means (Morris & DeShon 2002). Following this intensive DTT training, learning rates improved from an average of 4.89 (SD = 2.09) to 7.42 (SD = 3.26) in girls with FXS, from 4.72 (SD = 2.07) to 6.40 (SD = 2.39) in boys with FXS and from 4.65 (SD = 2.33) to 7.06 (SD = 2.67) in matched ID controls. The corresponding effect sizes were 1.21, 0.89 and 1.42, respectively; all exceed Cohen's conventional threshold of 0.8 for a large effect, indicating that the learning rates were highly sensitive to change in each group.

Discussion

Over the past few years, an increasing number of clinical trials have been conducted to evaluate the effects of pharmacological agents on the cognitive and behavioural symptoms of individuals with FXS, despite few reliable, valid and sensitive outcome measures being available to evaluate these effects. Currently available tests for individuals with FXS have been shown to have poor psychometric properties or floor effects, or cannot be administered to all individuals with FXS. In this preliminary study, we examined the psychometric properties of a new 'learning platform' approach to outcome measurement in FXS. Because most standardised tests require individuals with ID to perform under conditions that may be suboptimal (e.g. face-to-face interaction with an examiner, taxing administration periods, and an absence of reinforcement and/or feedback), we examined whether a test that was computerised, could be administered over a short period (i.e. 15 min), and included reinforcement and feedback to motivate the participant during the test could provide investigators with a better outcome measure. Specifically, we adapted DTT, a well-known teaching procedure commonly used for individuals with autism, and derived a measure of test performance during the training. By plotting the participant's cumulative record of correct responses to unprompted trials as a function of cumulative trial time, we evaluated the psychometric properties of the participant's learning rate, which corresponds to the slope of the cumulative record. Because the learning rate is obtained under optimal learning conditions in which individuals with ID can be successful (i.e. a 'learning platform'), it may be a more useful and valid measure of change.

We found that in all three groups, the cumulative records obtained over a single 15-min session of DTT were highly linear and had excellent internal consistency. The learning rates were also well distributed, with no apparent floor or ceiling effects. Only one participant, in the ID group, scored close to the floor of the test, obtaining a learning rate of less than 1.0 correct response per minute. His cumulative record showed that he received trials predominantly under the prompting procedure throughout the session; in effect, this participant was 'prompt dependent'. Importantly, however, he was still able to complete the test, and no participant refused to respond in the sessions.

For an outcome measure to be informative and clinically useful in the field of ID, it must have a broad dynamic range, be sensitive to different levels of ability, and have established test–retest reliability and validity. In addition, we believe that an outcome measure should be obtained directly from the participant with ID rather than from third parties (e.g. parents and/or teachers), because third-party questionnaires may be subject to rater bias and subjective recall effects. Further, we believe that the test should be relatively easy to administer, involve automated data collection and scoring procedures, and yield a single index that professionals can readily interpret. The learning rate index proposed here appears to have many of these properties. Learning rates obtained in the initial test session ranged from approximately one to eight correct responses per minute, averaging approximately five responses per minute in each group, which suggests a broad dynamic range. Across the broad mental age range of our sample (4 to 12 years), learning rates were significantly correlated with mental age in girls with FXS and in the matched controls, though not in boys with FXS; in these two groups, therefore, the learning rates were sensitive to different levels of ability. Test–retest reliability was good (ICCs of 0.77 to 0.90), concurrent validity was strong in boys with FXS and moderate in matched controls (but not significant in girls with FXS), and sensitivity to change was large in every group. Taken together, these preliminary data suggest that the proposed learning rate measure may provide investigators with a reliable, valid and sensitive outcome measure for use in clinical trials in individuals with FXS.

There are, however, a number of potential limitations associated with the study. First, we presented only a small number of stimuli to the participants during the test (six sets of fractions, pie charts and decimals), so the content validity of the test could be questioned. We employed relatively difficult math stimuli in the test (fraction, pie chart and decimal conversions) so that language confounds could be minimised and because participants would have limited familiarity with these stimuli. Further studies could, however, administer different versions of the test, with different sets of stimuli from different domains of functioning, to examine the generalisability of the learning rates obtained. Employing abstract stimulus pairs, for example, would avoid potential familiarity and language confounds altogether. A second limitation concerns the relatively short interval over which the test–retest reliability data were collected (i.e. 1 day). In a previous study evaluating potential outcome measures for individuals with FXS, the test–retest interval was 1 week, presumably to correspond more closely to the intervals commonly employed in clinical trials (Berry-Kravis et al. 2008b). However, we have no reason to suspect that the test–retest reliability of our learning rate measure would necessarily decrease over a longer interval. A final limitation concerns whether our sample was representative of individuals with FXS in the general population. We included individuals with FXS who were aged between 10 and 23 years and had IQs between 50 and 90 (which translated to a mental age range of 4 to 12 years). These individuals were able to sit in front of a computer for at least 5 min and were able to use a computer mouse. In the study by Berry-Kravis et al. (2008b), the age range of the participants was 5 to 47 years, while participant IQs ranged from 30 to 89 (which translated to a mental age range of 2 to 10 years). Future studies will therefore be needed to examine whether our test can be completed by individuals with mental ages below 4 years. However, the mental age range of our participants (4 to 12 years) is comparable to that of participants with FXS who have been included in clinical trials to date.

One way to evaluate the benefit of a particular medication in clinical trials is to obtain measures of task performance at two or more time points under test or 'extinction' conditions (i.e. withholding feedback or reinforcement during the test). It is well known, however, that extinction conditions decrease the frequency of responding. By providing feedback and reinforcement during the evaluation, we instead provide a platform upon which individuals with ID can perform successfully; hence our name for this approach, the 'learning platform' approach. Currently, pharmacological treatments are evaluated in isolation from learning factors. We believe this may be a mistake that can increase the likelihood of false negative results. By attempting to measure what an individual can or cannot do while he/she is taking a particular medication, investigators in the field of ID are failing to consider a more important question: does the medication actually facilitate the participant's learning? Given that learning is such a fundamental component of functioning and development in individuals with ID, the measurement of learning effects is an important issue in the evaluation of medication effects.

How will the test be used by professionals conducting clinical trials in the field? The Discrete Trial Trainer© is a commercially available software product with an established website (http://www.dttrainer.com), so professionals conducting clinical trials will be able to download the software directly from the website for use as part of their test battery. Trial-by-trial data are automatically saved to the computer during administration, so learning rates can be computed before and after an intervention to evaluate treatment efficacy.

The identification of a sensitive, specific, reliable and valid outcome measure with a low floor, which aims to improve testing consistency as well as enhance participant motivation, will allow pharmacological interventions to be evaluated more objectively in the future. Currently, pharmacological interventions for individuals with ID are conducted in isolation from behavioural interventions. We hope that our outcome measure will encourage clinicians to conduct pharmacological and behavioural interventions synergistically.

Acknowledgments

This research was supported by Award Number K08MH081998 from the National Institute of Mental Health (PI Scott Hall) and by a clinical grant from the National Fragile X Foundation (PI Scott Hall). Jennifer Hammond is funded by a T32 training grant (T32MH019908) from the National Institute of Mental Health (PI Allan Reiss).

References

- Antar LN, Afroz R, Dictenberg JB, Carroll RC, Bassell GJ. Metabotropic glutamate receptor activation regulates fragile X mental retardation protein and FMR1 mRNA localization differentially in dendrites and at synapses. Journal of Neuroscience. 2004;24:2648–55. doi: 10.1523/JNEUROSCI.0099-04.2004.

- Berry-Kravis E, Krause SE, Block SS, Guter S, Wuu J, Leurgans S, et al. Effect of CX516, an AMPA-modulating compound, on cognition and behavior in fragile X syndrome: a controlled trial. Journal of Child and Adolescent Psychopharmacology. 2006;16:525–40. doi: 10.1089/cap.2006.16.525.

- Berry-Kravis E, Sumis A, Hervey C, Nelson M, Porges SW, Weng N, et al. Open-label treatment trial of lithium to target the underlying defect in fragile X syndrome. Journal of Developmental and Behavioral Pediatrics. 2008a;29:293–302. doi: 10.1097/DBP.0b013e31817dc447.

- Berry-Kravis E, Sumis A, Kim OK, Lara R, Wuu J. Characterization of potential outcome measures for future clinical trials in fragile X syndrome. Journal of Autism and Developmental Disorders. 2008b;38:1751–7. doi: 10.1007/s10803-008-0564-8.

- Berry-Kravis E, Hessl D, Coffey S, Hervey C, Schneider A, Yuhas J, et al. A pilot open label, single dose trial of fenobam in adults with fragile X syndrome. Journal of Medical Genetics. 2009;46:266–71. doi: 10.1136/jmg.2008.063701.

- Berry-Kravis E, Raspa M, Loggin-Hester L, Bishop E, Holiday D, Bailey DB. Seizures in fragile X syndrome: characteristics and comorbid diagnoses. American Journal on Intellectual and Developmental Disabilities. 2010;115:461–72. doi: 10.1352/1944-7558-115.6.461.

- Berry-Kravis E, Knox A, Hervey C. Targeted treatments for fragile X syndrome. Journal of Neurodevelopmental Disorders. 2011;3:193–210. doi: 10.1007/s11689-011-9074-7.

- Boutet I, Ryan M, Kulaga V, McShane C, Christie LA, Freedman M, et al. Age-associated cognitive deficits in humans and dogs: a comparative neuropsychological approach. Progress in Neuro-Psychopharmacology and Biological Psychiatry. 2005;29:433–41. doi: 10.1016/j.pnpbp.2004.12.015.

- Butter EM, Mulick JA. ABA and the computer: a review of the Discrete Trial Trainer. Behavioral Interventions. 2001;16:287–91.

- Chang S, Bray SM, Li Z, Zarnescu DC, He C, Jin P, et al. Identification of small molecules rescuing fragile X syndrome phenotypes in Drosophila. Nature Chemical Biology. 2008;4:256–63. doi: 10.1038/nchembio.78.

- Cornish KM, Turk J, Wilding J, Sudhalter V, Munir F, Kooy F, et al. Annotation: deconstructing the attention deficit in fragile X syndrome: a developmental neuropsychological approach. Journal of Child Psychology and Psychiatry. 2004;45:1042–53. doi: 10.1111/j.1469-7610.2004.t01-1-00297.x.

- Dunn LM, Dunn LM. Peabody Picture Vocabulary Test. 3rd ed. American Guidance Service; Circle Pines, MN: 1997.

- Erickson CA, Mullett JE, McDougle CJ. Open-label memantine in fragile X syndrome. Journal of Autism and Developmental Disorders. 2009;39:1629–35. doi: 10.1007/s10803-009-0807-3.

- Erickson CA, Mullett JE, McDougle CJ. Brief report: acamprosate in fragile X syndrome. Journal of Autism and Developmental Disorders. 2010;40:1412–16. doi: 10.1007/s10803-010-0988-9.

- Erickson CA, Stigler KA, Posey DJ, McDougle CJ. Aripiprazole in autism spectrum disorders and fragile X syndrome. Neurotherapeutics. 2011a;7:258–63. doi: 10.1016/j.nurt.2010.04.001.

- Erickson CA, Stigler KA, Wink LK, Mullett JE, Kohn A, Posey DJ, et al. A prospective open-label study of aripiprazole in fragile X syndrome. Psychopharmacology. 2011b;216:85–90. doi: 10.1007/s00213-011-2194-7.

- Erickson CA, Weng N, Weiler IJ, Greenough WT, Stigler KA, Wink LK, et al. Open-label riluzole in fragile X syndrome. Brain Research. 2011c;1380:264–70. doi: 10.1016/j.brainres.2010.10.108.

- Farzin F, Rivera SM. Dynamic object representations in infants with and without fragile X syndrome. Frontiers in Human Neuroscience. 2010;4:12. doi: 10.3389/neuro.09.012.2010.

- Farzin F, Whitney D, Hagerman RJ, Rivera SM. Contrast detection in infants with fragile X syndrome. Vision Research. 2008;48:1471–8. doi: 10.1016/j.visres.2008.03.019.

- Ferster CB, Skinner BF. Schedules of Reinforcement. Appleton-Century-Crofts, Inc; New York: 1957.

- Gardner MF. Test of Visual-Perceptual Skills – Revised. Psychological and Educational Publications, Inc; Hydesville, CA: 1996.

- Greenough WT, Klintsova AY, Irwin SA, Galvez R, Bates KE, Weiler IJ. Synaptic regulation of protein synthesis and the fragile X protein. Proceedings of the National Academy of Sciences of the United States of America. 2001;98:7101–6. doi: 10.1073/pnas.141145998.

- Hall SS. Treatments for fragile X syndrome: a closer look at the data. Developmental Disabilities Research Reviews. 2009;15:353–60. doi: 10.1002/ddrr.78.

- Hall SS, Debernardis GM, Reiss AL. Social escape behaviors in children with fragile X syndrome. Journal of Autism and Developmental Disorders. 2006;36:935–47. doi: 10.1007/s10803-006-0132-z.

- Hall SS, Lightbody AA, Huffman LC, Lazzeroni LC, Reiss AL. Physiological correlates of social avoidance behavior in children and adolescents with fragile X syndrome. Journal of the American Academy of Child and Adolescent Psychiatry. 2009;48:320–9. doi: 10.1097/CHI.0b013e318195bd15.

- Hall SS, Lightbody AA, Hirt M, Rezvani A, Reiss AL. Autism in fragile X syndrome: a category mistake? Journal of the American Academy of Child and Adolescent Psychiatry. 2010;49:921–33. doi: 10.1016/j.jaac.2010.07.001.

- Hall SS, Lightbody AA, McCarthy BE, Parker KJ, Reiss AL. Effects of intranasal oxytocin on social anxiety in males with fragile X syndrome. Psychoneuroendocrinology. 2012;37:509–18. doi: 10.1016/j.psyneuen.2011.07.020.

- Hammond JL, Hirt M, Hall SS. Effects of computerized match-to-sample training on emergent fraction-decimal relations in individuals with fragile X syndrome. Research in Developmental Disabilities. 2012;33:1–11. doi: 10.1016/j.ridd.2011.08.021.

- Hessl D, Nguyen DV, Green C, Chavez A, Tassone F, Hagerman RJ, et al. A solution to limitations of cognitive testing in children with intellectual disabilities: the case of fragile X syndrome. Journal of Neurodevelopmental Disorders. 2009;1:33–45. doi: 10.1007/s11689-008-9001-8.

- Jacquemont S, Curie A, Des Portes V, Torrioli MG, Berry-Kravis E, Hagerman RJ, et al. Epigenetic modification of the FMR1 gene in fragile X syndrome is associated with differential response to the mGluR5 antagonist AFQ056. Science Translational Medicine. 2011;3:64ra1. doi: 10.1126/scitranslmed.3001708.

- Johnson-Glenberg MC. Fragile X syndrome: neural network models of sequencing and memory. Cognitive Systems Research. 2008;9:274–92. doi: 10.1016/j.cogsys.2008.02.002.

- Kesler SR, Lightbody AA, Reiss AL. Cholinergic dysfunction in fragile X syndrome and potential intervention: a preliminary 1H MRS study. American Journal of Medical Genetics Part A. 2009;149A:403–7. doi: 10.1002/ajmg.a.32697.

- Killeen PR, Hall SS. The principal components of response strength. Journal of the Experimental Analysis of Behavior. 2001;75:111–34. doi: 10.1901/jeab.2001.75-111.

- Koegel LK, Koegel RL, Smith A. Variables related to differences in standardized test outcomes for children with autism. Journal of Autism and Developmental Disorders. 1997;27:233–43. doi: 10.1023/a:1025894213424.

- Korkman M, Kirk U, Kemp S. NEPSY: A Developmental Neuropsychological Assessment. The Psychological Corporation; San Antonio, TX: 1998.

- Lattal KA. Steps and pips in the history of the cumulative recorder. Journal of the Experimental Analysis of Behavior. 2004;82:329–55. doi: 10.1901/jeab.2004.82-329.

- Lord C, Rutter M, Dilavore P, Risi S. The Autism Diagnostic Observation Schedule. Western Psychological Services; Los Angeles, CA: 1999.

- Lovaas OI. Teaching Individuals with Developmental Delays: Basic Intervention Techniques. Pro-Ed Inc; Austin, TX: 2003.

- Morris SB, DeShon RP. Combining effect size estimates in meta-analysis with repeated measures and independent-groups designs. Psychological Methods. 2002;7:105–25. doi: 10.1037/1082-989x.7.1.105.

- Murphy MM, Mazzocco MMM. Rote numerical skills may mask underlying mathematical disabilities in girls with fragile X syndrome. Developmental Neuropsychology. 2008;33:345–64. doi: 10.1080/87565640801982429.

- Olmos-Serrano JL, Paluszkiewicz SM, Martin BS, Kaufmann WE, Corbin JG, Huntsman MM. Defective GABAergic neurotransmission and pharmacological rescue of neuronal hyperexcitability in the amygdala in a mouse model of fragile X syndrome. Journal of Neuroscience. 2010;30:9929–38. doi: 10.1523/JNEUROSCI.1714-10.2010.

- Paribello C, Tao L, Folino A, Berry-Kravis E, Tranfaglia M, Ethell IM, et al. Open-label add-on treatment trial of minocycline in fragile X syndrome. BMC Neurology. 2010;10:91. doi: 10.1186/1471-2377-10-91.

- Randolph C. Repeatable Battery for the Assessment of Neuropsychological Status (RBANS). The Psychological Corporation; San Antonio, TX: 1998.

- Reiss AL. Childhood developmental disorders: an academic and clinical convergence point for psychiatry, neurology, psychology and pediatrics. Journal of Child Psychology and Psychiatry, and Allied Disciplines. 2009;50:87–98. doi: 10.1111/j.1469-7610.2008.02046.x.

- Reiss AL, Hall SS. Fragile X syndrome: assessment and treatment implications. Child and Adolescent Psychiatric Clinics of North America. 2007;16:663–75. doi: 10.1016/j.chc.2007.03.001.

- Rodgers TA, Iwata BA. An analysis of error-correction procedures during discrimination training. Journal of Applied Behavior Analysis. 1991;24:775–81. doi: 10.1901/jaba.1991.24-775.

- Sandford JA, Turner A. Manual for the Integrated Visual and Auditory Continuous Performance Test. Braintrain; Richmond, VA: 1995.

- Scerif G, Cornish K, Wilding J, Driver J, Karmiloff-Smith A. Visual search in typically developing toddlers and toddlers with Fragile X or Williams syndrome. Developmental Science. 2004;7:116–30. doi: 10.1111/j.1467-7687.2004.00327.x.

- Scerif G, Karmiloff-Smith A, Campos R, Elsabbagh M, Driver J, Cornish K. To look or not to look? Typical and atypical development of oculomotor control. Journal of Cognitive Neuroscience. 2005;17:591–604. doi: 10.1162/0898929053467523.

- Scerif G, Cornish K, Wilding J, Driver J, Karmiloff-Smith A. Delineation of early attentional control difficulties in fragile X syndrome: focus on neurocomputational changes. Neuropsychologia. 2007;45:1889–98. doi: 10.1016/j.neuropsychologia.2006.12.005.

- Semel E, Wiig EH, Secord WA. Clinical Evaluation of Language Fundamentals. 3rd ed. The Psychological Corporation; San Antonio, TX: 1995.

- Skinner M, Hooper S, Hatton DD, Roberts J, Mirrett P, Schaaf J, et al. Mapping nonverbal IQ in young boys with fragile X syndrome. American Journal of Medical Genetics Part A. 2005;132A:25–32. doi: 10.1002/ajmg.a.30353.

- Smith T. Discrete trial training in the treatment of autism. Focus on Autism and Other Developmental Disabilities. 2001;16:86–92.

- Sullivan K, Hatton D, Hammer J, Sideris J, Hooper S, Ornstein P, et al. ADHD symptoms in children with FXS. American Journal of Medical Genetics Part A. 2006;140A:2275–88. doi: 10.1002/ajmg.a.31388.

- Sullivan K, Hatton DD, Hammer J, Sideris J, Hooper S, Ornstein PA, et al. Sustained attention and response inhibition in boys with fragile X syndrome: measures of continuous performance. American Journal of Medical Genetics Part B, Neuropsychiatric Genetics. 2007;144B:517–32. doi: 10.1002/ajmg.b.30504.

- Utari A, Chonchaiya W, Rivera SM, Schneider A, Hagerman RJ, Faradz SM, et al. Side effects of minocycline treatment in patients with fragile X syndrome and exploration of outcome measures. American Journal on Intellectual and Developmental Disabilities. 2010;115:433–43. doi: 10.1352/1944-7558-115.5.433.

- Verkerk AJ, Pieretti M, Sutcliffe JS, Fu YH, Kuhl DP, Pizzuti A, et al. Identification of a gene (FMR-1) containing a CGG repeat coincident with a breakpoint cluster region exhibiting length variation in fragile X syndrome. Cell. 1991;65:905–14. doi: 10.1016/0092-8674(91)90397-h.

- Wang H, Wu LJ, Kim SS, Lee FJ, Gong B, Toyoda H, et al. FMRP acts as a key messenger for dopamine modulation in the forebrain. Neuron. 2008;59:634–47. doi: 10.1016/j.neuron.2008.06.027.

- Wechsler D. Wechsler Intelligence Scale for Children. 3rd ed. The Psychological Corporation; San Antonio, TX: 1991.

- Wechsler D. Wechsler Abbreviated Scale of Intelligence. The Psychological Corporation; San Antonio, TX: 1999.

- Wechsler D. Wechsler Intelligence Scale for Children. 4th ed. The Psychological Corporation; San Antonio, TX: 2003.

- Woodcock RW, Johnson MB. Woodcock-Johnson Psycho-Educational Battery – Revised. DLM Teaching Resources; Allen, TX: 1990.

- Zimmermann IL, Steiner VG, Pond RE. Preschool Language Scale. 4th ed. The Psychological Corporation; San Antonio, TX: 2002.