A Web-based application that uses the Annotation and Image Markup standard was developed to provide an automated means for radiologists to review the results of prior imaging studies, analyze quantitative imaging results over time, and evaluate alternative quantitative disease response metrics.

Abstract

Quantitative assessments on images are crucial to clinical decision making, especially in cancer patients, in whom measurements of lesions are tracked over time. However, the potential value of quantitative approaches to imaging is impeded by the difficulty and time-intensive nature of compiling this information from prior studies and reporting corresponding information on current studies. The authors believe that the quantitative imaging work flow can be automated by making temporal data computationally accessible. In this article, they demonstrate the utility of the Annotation and Image Markup standard in a World Wide Web–based application that was developed to automatically summarize prior and current quantitative imaging measurements. The system calculates the Response Evaluation Criteria in Solid Tumors metric, along with several alternative indicators of cancer treatment response, by using the data stored in the annotation files. The application also allows the user to overlay the recorded metrics on the original images for visual inspection. Clinical evaluation of the system demonstrates its potential utility in accelerating the standard radiology work flow and in providing a means to evaluate alternative response metrics that are difficult to compute by hand. The system, which illustrates the utility of capturing quantitative information in a standard format and linking it to the image from which it was derived, could enhance quantitative imaging in clinical practice without adversely affecting the current work flow.

© RSNA, 2012

Introduction

The interpretation and reporting of quantitative radiologic imaging information is challenging because it requires the various measurements of disease obtained at prior studies to be amassed and reviewed, and the corresponding information about disease provided by the current study to be measured and reported. Such assessment of quantitative information is a crucial task in cancer patients for whom the radiologist’s reported information is the basis for applying treatment response criteria (1,2). However, this assessment is a time-consuming task for the radiologist, since it involves reviewing the reported abnormalities and their measurements from studies performed on multiple imaging dates. In the current high-volume practice of radiology, it is difficult for the radiologist to perform this assessment thoroughly and completely.

The measurements made on images during quantitative assessment by the radiologist are referred to as “image metadata” and are recorded by the picture archiving and communication system (PACS) as annotations on the image. It would be helpful if an application could access metadata electronically to automatically summarize the quantitative information obtained at prior imaging studies, thereby eliminating the need for the radiologist to carry out this time-consuming task. Creating such an application would require standards for image metadata, since there are many different PACS vendors. Digital Imaging and Communications in Medicine (DICOM) provides a standard for the representation and communication of image datasets. Recently, the DICOM committee developed a standard for image metadata, and a set of objects has been created under the DICOM Structured Reporting (DICOM SR) specification (3,4). At present, however, most commercial PACS do not capture or store image annotations in DICOM SR (5).

The Annotation and Image Markup (AIM) project was recently undertaken by the Cancer Biomedical Imaging Workspace of the National Cancer Institute to streamline the capture and use of image metadata in applications (6,7). The purpose of the AIM project was to address the challenge of representing and communicating quantitative imaging metadata in a manner that can be integrated into the radiology work flow. AIM specifies a common structure for the storage of metadata in Extensible Markup Language (XML) format (8) and allows translation into the DICOM SR standard. It includes support for a wide spectrum of annotations and measurements and maintains a record of the original image file’s unique DICOM identifiers, thereby ensuring that the metadata can be connected to their source image. Furthermore, AIM’s XML format makes it amenable to conversion into the Clinical Document Architecture, another type of XML document that is defined in the Health Level Seven standard for medical information exchange (9). Previous work has demonstrated that AIM functions well for the automated generation of reports, including those generated for temporal tracking (10).

Despite the availability of supporting standards and infrastructure such as AIM and DICOM SR, and despite previous studies showing the value of these standards, these technologies are not routinely used to capture quantitative imaging metadata or annotations. Instead, written reports remain the dominant vehicle for representing and communicating quantitative imaging results. However, a text-based reporting format thwarts automated summarization or reporting of the results of quantitative imaging studies. In addition, retrospective computation of alternative metrics of disease response (eg, based on area or volume of lesions) would be a difficult task requiring reassessment of all images. Consequently, quantitative imaging measurements and the treatment criteria for disease response are presently collected and computed by hand. Not only is this time consuming, it is also error prone; omitted or incorrect metadata are a known source of error in reporting the results of quantitative imaging studies (11). Interpretation and reporting of quantitative imaging measurements is unpopular among radiologists (12), even though it is understood to be a crucial activity among practitioners (13). Finally, efforts to define alternative disease response criteria have been unsuccessful due to the difficulty of collecting the necessary quantitative imaging metadata, which are not recorded in machine-accessible format during routine radiology interpretation work flow.

In this article, we describe our work, whose goal is to provide an automated means for radiologists to (a) review the results of prior imaging studies, (b) analyze quantitative imaging results over time (helping them to evaluate disease response in particular), and (c) evaluate alternative quantitative disease response metrics. Our approach leverages the emerging AIM standard and an infrastructure for the management and analysis of image metadata. As we will demonstrate, incorporating the management of image metadata into the image interpretation work flow facilitates and streamlines the analysis of quantitative imaging data. These methods could lead to a new paradigm in radiology wherein the PACS helps radiologists not only to access and view the images themselves but also to access and use the quantitative imaging information for better interpretations.

Methods

The context of our work is the evaluation of disease response (specifically, that of cancer) with quantitative imaging criteria. To assess cancer treatment response in individual patients, the following five tasks are carried out by the radiologist or oncologist: (a) review of images and labeling of a subset of the lesions to be measured (ie, target lesions); (b) measurement of the target lesions according to a metric to evaluate response (eg, diameter, area, or volume of lesions); (c) calculation of a “disease burden metric” based on the target lesion measurements; (d) summary of the disease burden metric over time (usually expressed as a graph); and (e) application of criteria to assess treatment response (generally as a category label, such as “stable disease” or “partial response”). The work flow for evaluating disease response with quantitative imaging criteria requires that these steps be carried out by hand because current PACS are not designed to store and compare quantitative imaging findings from serial imaging studies. Our system provides a solution that removes a significant calculation burden from the radiologist and oncologist, streamlining this work flow by automating all five of the aforementioned tasks.
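Tasks (c) through (e) can be illustrated with a minimal Python sketch. It is not the LTA's code: it assumes the disease burden metric is the sum of target lesion diameters and applies only the quantitative RECIST 1.1 thresholds for target lesions (a decrease of at least 30% from baseline for partial response; an increase of at least 20% and at least 5 mm over the smallest prior sum for progressive disease). The `Study` class and the function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Study:
    """Target lesion diameters (mm) recorded on one imaging date (hypothetical type)."""
    when: date
    diameters_mm: dict  # lesion name -> longest diameter in mm


def disease_burden(study):
    """Task (c): sum of target lesion diameters for one study."""
    return sum(study.diameters_mm.values())


def classify(baseline_sum, nadir_sum, current_sum):
    """Task (e): quantitative RECIST 1.1 category for target lesions only."""
    if current_sum == 0:
        return "complete response"
    # Progression: at least 5 mm and at least 20% above the smallest prior sum.
    if current_sum - nadir_sum >= 5 and current_sum >= 1.2 * nadir_sum:
        return "progressive disease"
    # Partial response: at least a 30% decrease relative to baseline.
    if current_sum <= 0.7 * baseline_sum:
        return "partial response"
    return "stable disease"


def summarize(studies):
    """Tasks (d) and (e): (date, burden, category) for each follow-up study."""
    ordered = sorted(studies, key=lambda s: s.when)
    sums = [disease_burden(s) for s in ordered]
    rows = []
    for i in range(1, len(ordered)):
        nadir = min(sums[:i])  # smallest sum seen so far, baseline included
        rows.append((ordered[i].when, sums[i], classify(sums[0], nadir, sums[i])))
    return rows
```

The qualitative parts of RECIST (nontarget and new lesions) are deliberately left out of this sketch, so its output is not a complete response assessment.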

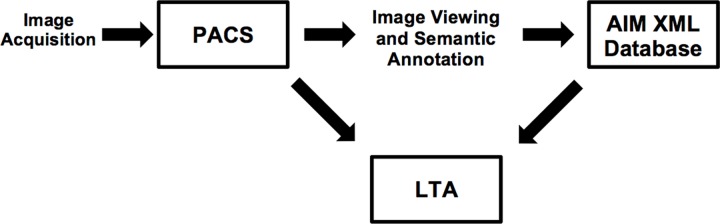

Our system consists of a PACS workstation for managing the images, an AIM database for managing the image metadata, and the Lesion Tracking Application (LTA), an image metadata analysis application that is specialized for the task of lesion tracking (Fig 1). The World Wide Web–based LTA accesses both the AIM database and the PACS. It uses the AIM database to perform automated assessment of disease response, summarizing the disease burden according to various quantitative imaging biomarkers (eg, diameter, circumference, and area). The LTA uses the PACS to provide visual summaries of current and prior measurements of pathologic lesions as overlays on the original images.

Figure 1.

Diagram illustrates the architecture of the Lesion Tracking Application (LTA) and its supporting components. A PACS stores and displays the acquired images. A radiologist uses semantic annotation software on the workstation to generate an AIM XML file containing the image annotation metadata. The AIM files are stored in an XML database and made available for query and analysis by the LTA. The LTA retrieves information about lesions (eg, lesion name, location, and measurements) from the AIM XML database to produce lesion tracking summaries. In addition, the LTA accesses the corresponding annotated images in the PACS to display to the user, thereby streamlining the review of prior lesions and measurements.

Picture Archiving and Communication System

Our system assumes that the images are viewed and annotated on a workstation that supports AIM. At present, open-source implementations are available on the Osirix (14) and ClearCanvas (15) platforms; workstations from a number of vendors (eg, GE Medical Systems [16] and Siemens [17]) are also beginning to support AIM. For this work, we used the electronic physician annotation device (ePAD, previously named iPAD) plug-in (18) with the Osirix platform to view and annotate images.

The AIM files produced during image annotation are stored in the AIM database; the images themselves are stored in the PACS. Osirix includes a PACS; however, we opted to store the images in a DCM4CHE database (19) so that our LTA could query the images using the Web Access to DICOM Objects (WADO) protocol (20). DCM4CHE is an open-source clinical image and object management PACS implementation in Java that supports several healthcare communication standards (eg, Health Level Seven, WADO). If the commercial PACS supports WADO and there are no network firewall issues, it could be used to store and access the images, with no need to set up a separate PACS such as DCM4CHE.
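As an illustration of the kind of request involved, the following Python sketch builds a WADO (URI-style) query for a single image. The query parameters `requestType`, `studyUID`, `seriesUID`, `objectUID`, and `contentType` come from the WADO standard; the function name, the base URL, and the UIDs in the usage lines are placeholders assumed for this example, not values from our installation.

```python
from urllib.parse import urlencode


def wado_url(base_url, study_uid, series_uid, object_uid, content_type="image/jpeg"):
    """Build a WADO request URL for one DICOM object (hypothetical helper)."""
    params = {
        "requestType": "WADO",      # fixed value required by the standard
        "studyUID": study_uid,      # Study Instance UID
        "seriesUID": series_uid,    # Series Instance UID
        "objectUID": object_uid,    # SOP Instance UID of the image
        "contentType": content_type,
    }
    return base_url + "?" + urlencode(params)


# Placeholder endpoint and UIDs, for illustration only.
url = wado_url("http://localhost:8080/wado", "1.2.3", "1.2.3.4", "1.2.3.4.5")
```

Because AIM keeps the DICOM identifiers of the annotated image, an annotation carries enough information to fill in these parameters and fetch the matching image from any PACS that exposes WADO.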

Image Data, Viewing, and Annotation

We used a set of computed tomographic (CT) examinations performed in a cancer patient with multiple pancreatic target lesions to develop and evaluate the system. The patient underwent CT of the abdomen and pelvis on three different dates during cancer treatment (baseline examination prior to treatment and two follow-up examinations after initiation of treatment). Protected health information was removed from the image dataset, and the work was approved by our institutional review board under an exemption for research involving human subjects.

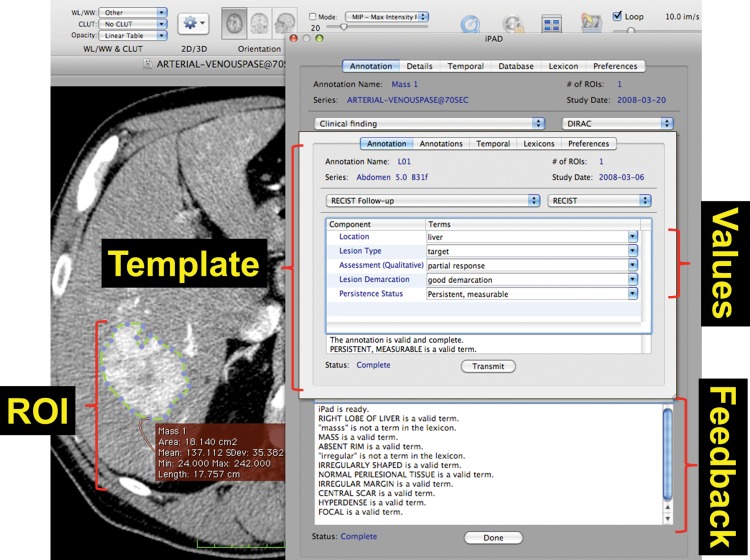

The ePAD was used by a radiologist to annotate the measurable lesions on the images obtained at each study and to measure the diameter of each target lesion (Fig 2). The tool prompted the radiologist to provide a name (eg, “L01”), anatomic location, and type (target, nontarget, new, or resolved) for each lesion. This annotation information, which was collected as part of the routine lesion viewing and measurement work flow, provided all of the information required by the LTA tool to access the target lesion measurements, compute the disease burden metrics, and summarize the response assessment (see “Lesion Tracking Application”). Note that in AIM, the anatomic location is specified using a controlled term that is defined in a controlled terminology such as RadLex or SNOMED (Systematized Nomenclature of Medicine). The ePAD constrains the specification of anatomic locations entered by the user to a controlled terminology. The LTA does not modify AIM documents created by the ePAD; thus, controlled terms from the annotation generation software (eg, ePAD) are maintained throughout the use of the LTA. The ePAD provided functionality to facilitate the annotation process, showing the radiologist the annotations on prior examinations so as to streamline annotation and help make lesion reporting more complete.

Figure 2.

Screen shot of the ePAD software used to generate image annotations that describe identified lesions. The radiologist outlines a lesion on the image, and the software computes relevant metrics, including diameter and area. The software also records geometric coordinates for the lesion and prompts the radiologist to provide an identifier, anatomic location, and type (eg, target, nontarget, new) for each lesion. The program then saves this information in the AIM format. Our software operates on AIM and demonstrates the usefulness of storing these annotations in a database. ROI = region of interest.

The radiologist annotated lesions using the Response Evaluation Criteria in Solid Tumors (RECIST) 1.1 criteria (21,22), measuring all lesions ≥1 cm and reporting up to two lesions per organ. A total of three annotations were made in the patient (three pancreatic lesions). Thus, a total of nine AIM annotation files were produced: one for each of the target lesions imaged on each of three study dates. The annotation data that were output in AIM format by the ePAD were imported into the AIM XML database.

AIM XML Database

The LTA is capable of loading AIM XML files directly from the file system or from an XML database. The open-source BerkeleyDB XML database was used to test the latter functionality. A custom library was written to allow queries for specific values pertinent to the system, including retrieval of the list of available studies and the following study-specific values: (a) imaging dates, (b) available metrics, (c) metric values, and (d) metric SI (Système International) units. The LTA stores the AIM data in a custom library that makes the original source (file system or database) transparent to the user. This library provides access to retrieved values using a Java class inheritance hierarchy that mirrors that of the original AIM file. This inheritance makes the system extensible to other work flows outside of lesion tracking by providing a standard way to access arbitrary XML attributes through Java methods.
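The kind of lookup this library performs can be sketched in Python as follows. The XML shown is a deliberately simplified, hypothetical stand-in and does not follow the actual AIM schema; the element and attribute names (`annotation`, `measurement`, `lesionName`, and so on) are assumptions made only for this example, and the LTA itself is written in Java.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical annotation document (NOT the real AIM schema).
SAMPLE = """
<annotation lesionName="L01" studyDate="2011-01-15">
  <imageReference sopInstanceUID="1.2.3.4"/>
  <measurement metric="diameter" value="21.5" unit="mm"/>
  <measurement metric="area" value="363.1" unit="mm2"/>
</annotation>
"""


def read_annotation(xml_text):
    """Extract lesion name, imaging date, source image UID, and metrics."""
    root = ET.fromstring(xml_text.strip())
    metrics = {
        m.get("metric"): (float(m.get("value")), m.get("unit"))
        for m in root.findall("measurement")
    }
    return {
        "lesion": root.get("lesionName"),
        "date": root.get("studyDate"),
        "image_uid": root.find("imageReference").get("sopInstanceUID"),
        "metrics": metrics,  # metric name -> (value, unit)
    }


record = read_annotation(SAMPLE)
```

Returning the same dictionary shape regardless of whether the text came from a file or a database query is one simple way to keep the data source transparent to the caller.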

Lesion Tracking Application

We built the LTA to summarize the measurements of lesions being tracked in quantitative imaging and to calculate the response criteria and temporal changes in disease response according to RECIST. The LTA was built using the Google Web Toolkit (23) and deployed as a Java servlet using Apache Tomcat (24). The LTA consists of a Web-based graphical user interface and server-side functions. The server-side functions execute operations requested by the graphical user interface, such as listing patients in the database, retrieving image metadata for a selected patient from the AIM database, querying the AIM files for metadata from a specific study, and retrieving the image files corresponding to image metadata from the PACS database. A custom library containing functions for extracting specific data from AIM files was also created.

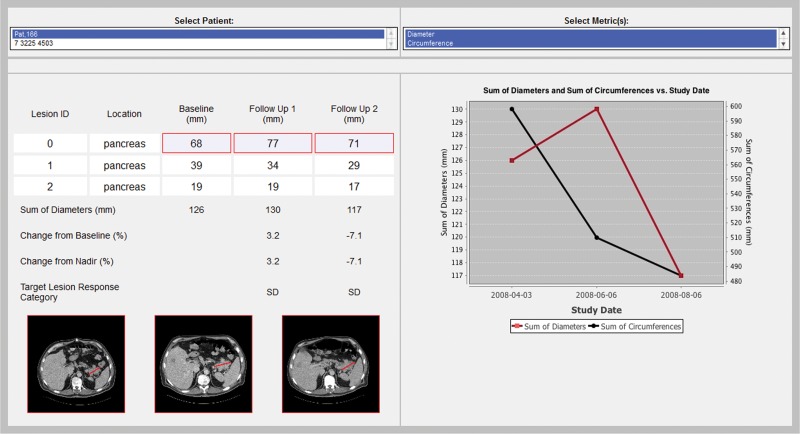

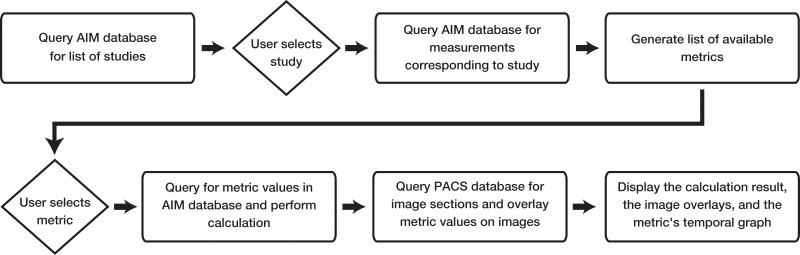

The graphical user interface contains four sections: study selection, metric selection, response metric calculation linked to source images and annotations, and graphical display of the response metrics over time (Fig 3). The user first selects a patient and the response metric desired for use in evaluating disease response. The system then calculates the disease burden metrics, summarizes the target lesion measurements, and infers the response criteria. The tool also shows a response graph summarizing the disease burden over time, and allows the user to review the images with an overlay of the target lesion measurements for data audit and consistency checking (Fig 4).

Figure 3a.

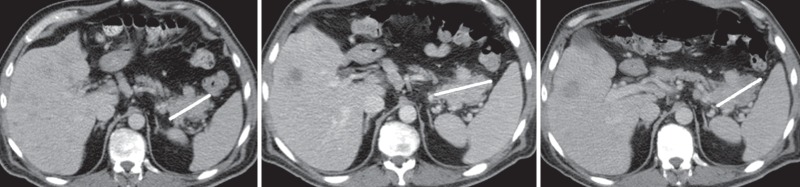

(a) Screen shot of the LTA user interface, which includes a list of patients in the database (deidentified for privacy) whose studies are available for review (top left), a list of disease burden metrics that are available for assessment of disease response for the selected study (top right), and a table (middle left) that summarizes the target lesion measurements and calculation of the selected disease burden metrics. In addition, the image from which each measurement was derived with an overlay of the actual measurement is available by clicking on either an individual measurement or the lesion identifier (which displays all the lesion measurements) (bottom left). The table also shows the category of response inferred by applying RECIST response criteria to the data. A graphical display of the selected metrics over time (middle right) facilitates assessment of disease response and comparison of alternative disease burden metrics of response. (b) Magnified views of the three images shown in a illustrate the progression of a pancreatic lesion (ID 0) (white line) from baseline (left) to first follow-up (middle) to second follow-up (right). Annotations made during the measurement of a target lesion are stored in the AIM XML database. The LTA overlays these annotations on the original images. The images are retrieved from the DICOM PACS and rendered in the LTA; the annotations are rendered by the LTA on the basis of information in the AIM metadata file.


Figure 4.

Diagram illustrates user interaction for the LTA. The system relies on an AIM XML database and a WADO-enabled DICOM PACS database.

In addition to displaying the originally assessed diameter of the target lesion, the LTA calculated alternative quantitative imaging biomarkers, including circumference, cross-sectional area, and mean density. The first two metrics were derived by assuming a circular shape for the lesion and deducing the parameters from the diameter. The mean density of the target lesion was derived by using the coordinates of the circular region of interest derived from the diameter to localize the pixel values within this region of interest.
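Under the circular-lesion assumption, these derivations reduce to circumference $= \pi d$ and area $= \pi (d/2)^2$ for a diameter $d$. The Python sketch below shows one way to compute them, together with a mean pixel value over the circle whose diameter is the measured line. The function names and the representation of the image as a list of pixel rows are assumptions for illustration; the LTA's own implementation may differ.

```python
import math


def circumference(diameter):
    """Circumference of a circle with the given diameter."""
    return math.pi * diameter


def circle_area(diameter):
    """Cross-sectional area of a circle with the given diameter."""
    return math.pi * (diameter / 2) ** 2


def mean_density(pixels, p1, p2):
    """Mean pixel value inside the circle whose diameter is the segment p1-p2.

    pixels is a list of rows; p1 and p2 are (column, row) pixel coordinates
    of the two end points of the measured diameter.
    """
    cx, cy = (p1[0] + p2[0]) / 2, (p1[1] + p2[1]) / 2
    radius = math.dist(p1, p2) / 2
    inside = [
        value
        for y, row in enumerate(pixels)
        for x, value in enumerate(row)
        if math.hypot(x - cx, y - cy) <= radius
    ]
    return sum(inside) / len(inside) if inside else float("nan")
```

Note that circumference and area computed this way are functions of the diameter alone, so they add no independent measurement; only the density metric draws on additional image data.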

Results

As with the PACS, the user selects, from a menu, the patient whose images are to be viewed. Once a patient is selected, the user can choose one or more metrics that were used to measure the target lesion (Fig 3). As in most studies, only one type of target lesion measurement was made in our study (ie, diameter); however, as mentioned earlier, our LTA can generate additional quantitative imaging measurements, and these are also listed as alternative measurements that can be assessed. In fact, the user can select more than one type of measurement for comparison (eg, comparative assessment of maximum diameter and circumference [Fig 3a]). Selection of these metrics resulted in the generation of a response table and response graph.

The response table in the LTA is designed to look like the flow sheets oncologists use to summarize and analyze lesion data in their patients. The table shows the name, anatomic location, and measurement values for each target lesion seen on each study date. In addition, the LTA calculates the metrics of total disease burden on each study date and the resulting response category. Note that, although the system is capable of displaying a table for each metric selected, for the sake of clarity we display the measurement values for only the first metric when multiple metrics are selected. On the other hand, response categories are displayed for all selected metrics.
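The layout of such a table can be sketched in Python as a pivot of individual measurements into a lesion-by-date grid with a total for each date. The record format `(lesion_name, study_date, value)` and the function name are assumptions made for this example; a lesion with no measurement on a given date is shown as `None` and is excluded from that date's total.

```python
def flow_sheet(records):
    """Pivot a list of (lesion_name, study_date, value) tuples.

    Returns (dates, rows, totals): the sorted study dates, a dict mapping each
    lesion name to its values in date order, and the per-date sums.
    """
    dates = sorted({d for _, d, _ in records})
    lesions = sorted({name for name, _, _ in records})
    lookup = {(name, d): v for name, d, v in records}
    rows = {name: [lookup.get((name, d)) for d in dates] for name in lesions}
    totals = [
        sum(v for v in (lookup.get((name, d)) for name in lesions) if v is not None)
        for d in dates
    ]
    return dates, rows, totals
```

ISO-formatted date strings (eg, "2011-01-10") sort chronologically, which keeps the columns in study order without date parsing.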

In our example, the user compared the target lesions in terms of diameter and circumference. Although both diameter and circumference indicated whether disease was stable, only diameter showed an increase between the baseline examination and the first follow-up examination. This information is readily available from the response graph (Fig 3a).

When reviewing the measurements reported in the response table, the user may question the accuracy or appropriateness of specific measurements. The LTA enables the user to quickly audit any measurement by simply clicking on that measurement. The LTA queries the PACS for the original image and renders the measurement as an overlay on the image (Fig 3b).

Discussion

Tumor lesion tracking and assessment of tumor response to treatment are essential for cancer treatment decision making. The application of standardized cancer treatment response criteria such as RECIST can improve the consistency and reproducibility of assessments of treatment response and decrease practice variance. However, application of formal response criteria in everyday clinical practice is time consuming for both the radiologist and the oncologist. Lesion tracking and calculation of quantitative estimates of tumor burden and tumor response are especially time-intensive and potentially error-prone manual processes. Automated methods of calculating and classifying response directly from imaging findings are needed to improve the speed and accuracy of generating these quantitative calculations. Methods are also needed to assist the radiologist and oncologist in navigating to those images that determine the response classification for both efficient review of prior imaging studies and annotation of the current study.

We have developed an application for automating the process of tracking cancer lesions that has several advantages. First, our approach is implemented in a manner that is expected to minimally disrupt the current radiology work flow: The radiologist continues using the image viewing workstation in the same manner to which he or she is accustomed. The only change is that when radiologists take a measurement of a lesion by drawing an image annotation, they are prompted to give the lesion a name so as to identify it similarly to how it was identified on previous studies. Because the actual image annotations are the source of information for the LTA-generated lesion summaries and graphs, the lesions need to be named. In addition, the radiologist indicates the lesion location and type (eg, target lesion). Although these are additional steps that are not a part of current work flow, they do not require much time; thus, we believe they will have minimal impact on work flow, especially since the ability to review prior lesions more quickly with the LTA-generated data will likely save time.

A second advantage of our approach is that the radiologist can rapidly navigate to the images on which a given lesion was previously measured. Clicking on the lesion name brings up all of the relevant images. The radiologist can then quickly navigate to the corresponding images from the current examination to make the required measurements of the same lesions. In addition, because the measurements are also displayed as overlays on the images, the radiologist is able to draw annotations similar to those on previous images (eg, by selecting the same long axis), thereby ensuring better consistency in measurements, the lack of which is a known problem in quantitative imaging (25).

A third advantage is that the oncologist is provided with a flow sheet and graphical summary of patient response, the generation of which entails no extra work for the radiologist or oncologist. At present, oncologists generate these documents by hand to extract the information they require from the radiology reports and annotated images. Using a tool such as the LTA could provide the oncologist with a report that is much more meaningful than the current radiology text report.

Our work is greatly enhanced by the AIM metadata standard. AIM encodes the “semantic” information about images: for example, the lesions they contain; coordinates of regions of interest (eg, lines that indicate lesion outlines or sizes); and measurements, pixel values, or other quantitative data related to the region of interest (7,26). These image metadata in AIM are encoded using an XML syntax that facilitates the development of applications such as our LTA. At the same time, the AIM toolkit provides software to translate the AIM information into DICOM SR to enable interoperability. Compliance with such standards will allow sharing of image metadata without interconverting between proprietary formats. At present, however, because only a few commercial systems support DICOM SR, it is convenient to work directly with the AIM XML, whose file contents are self-describing.

A notable feature of our system architecture is the separation and linkage of images, image metadata, and the software applications that use these resources (Fig 1). The image metadata are collected in a file separate from the image itself and stored in the XML database. Applications such as the LTA query both the PACS and the XML database to implement their functionality. The advantages of this approach are that (a) the LTA (or some other future application functionality) can be built atop existing image viewing software; (b) the image metadata query is efficient, since all information is stored in a dedicated database containing only image metadata; and (c) applications such as the LTA are not tightly linked to the imaging workstation environment; they can be Web based and can be accessed in many different environments, such as the oncology clinic. In addition, although our application domain is tracking cancer lesions and assessing cancer treatment response, our architecture (Fig 1) and LTA are generalizable and could be extended to aid in the assessment of treatment response of other diseases in the future. A quantitative imaging metric similar to that for assessing cancer would need to be defined for tracking other types of disease.

Related work has been done in creating structured summaries of radiology reports or imaging information. Zimmerman et al (10) incorporated support for the AIM standard into a structured reporting work flow that allows quantitative data collected at an imaging workstation to be incorporated into the radiology report with minimal radiologist involvement. Their study focused on the incorporation of raw measurements into the radiology report (10), whereas our work focuses on the synthesis of the quantitative imaging information, in terms of both (a) abstraction of target lesion measurements into a metric of disease burden, and (b) summarization of quantitative metrics from prior serial imaging studies with subsequent inference of disease response. In a related study, Iv et al (27) made direct transfer of raw data into a structured radiology report, although their focus was on raw data from the imaging device, rather than image annotation data, and no additional processing (eg, computerized inference) was done.

It has been established that both referring physicians and radiologists view structured reports as having better content and greater clarity than conventional narrative reports (28), although it has been contended that report clarity is not substantially affected (29). However, both referring physicians and radiologists prefer structured reporting over the unstructured narrative (30), despite the fact that structured reporting may be more time intensive. Furthermore, there are significant limitations to current approaches for summarizing imaging findings in radiology reports (31). However, applications such as the LTA could streamline the generation of radiology reports for tracking disease by creating the summaries that at present must be dictated by hand, and could directly address the challenges of radiologist-oncologist communication and of using quantitative imaging results to guide patient care (31).

Our work has several limitations. First, we are assuming that the radiologist uses an AIM-compliant workstation for image interpretation. In our study, we used Osirix. However, no Food and Drug Administration–approved workstations currently support AIM, although experimental implementations have been produced by several commercial vendors. The adoption of methods such as ours that leverage AIM will need to await the release of commercial workstations that support this image metadata standard. Commercial support of DICOM SR (which continues to be harmonized with AIM) will greatly accelerate the rate at which applications such as the LTA are put into practice.

A second limitation is that some alteration in the image annotation work flow is required to adopt our methods—or any method that leverages AIM, for that matter. Radiologists need to label the lesions that are measured and do so in a manner consistent with previous studies. They also need to record lesion location and type. Labeling a lesion consistently between studies is a tractable requirement because annotation software such as the ePAD records lesion location automatically when a measurement is made and associates it with the lesion’s label. The radiologist may then recall a lesion’s label between studies by using the geometric coordinates stored in AIM.
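One simple way such a recall could work is a nearest-neighbor lookup, sketched below in Python. This is an assumption for illustration rather than a description of the ePAD: it presumes that lesion positions from the prior and current studies are expressed in comparable coordinates (eg, millimeters in a shared frame of reference), and the function name and the distance tolerance are hypothetical.

```python
import math


def suggest_label(prior_lesions, new_point, max_distance_mm=20.0):
    """Suggest the label of the closest prior lesion, or None if none is near.

    prior_lesions maps a lesion label to its (x, y, z) position in mm;
    new_point is the position of the measurement just made.
    """
    best_label, best_dist = None, max_distance_mm
    for label, point in prior_lesions.items():
        d = math.dist(point, new_point)
        if d <= best_dist:
            best_label, best_dist = label, d
    return best_label
```

A suggestion of this kind would still need confirmation by the radiologist, since patient positioning and anatomy can shift between examinations.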

These capabilities—annotation measurement and review—require an annotations interface such as the ePAD. Radiologists currently measure lesions at the workstation but do not provide the other information (name, location, and type) in the process of creating an annotation. Tools such as the ePAD facilitate this process by providing a simple on-screen form that pops up when an image annotation (such as a linear measurement) is made. Adoption of our methods will require commercial workstations to provide a similar simple interface to collect the basic information required by the LTA. In addition, radiologists may find it cumbersome to record this additional information as part of the work flow of measuring lesions. This process may be made less onerous if the information from the annotation is used to populate the reports automatically, saving the radiologist time in generating the reports (10).

Finally, we have implemented only the quantitative aspects of the RECIST response criteria in this pilot application. The RECIST criteria contain both quantitative and qualitative assessments of changes in cancer lesions and tumor burden to infer a final classification of disease response to treatment. AIM is capable of capturing and reasoning through both quantitative and qualitative features of annotated lesions. We are currently conducting a comparative evaluation of RECIST cancer assessment with and without the ePAD. We intend to conduct an expanded formal analysis of the LTA following further development and integration with the ePAD software.
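The quantitative part of RECIST referred to here can be illustrated with a minimal sketch. It assumes the target lesion thresholds of RECIST version 1.1 (21): partial response at a decrease of at least 30% in the sum of diameters relative to baseline, and progressive disease at an increase of at least 20% over the smallest prior sum (the nadir) together with an absolute increase of at least 5 mm. The `Study` class and the `classify_target_response` function are hypothetical names chosen for illustration; they are not part of the LTA or of AIM, and in practice the measurements would be read from AIM annotation files rather than typed in. Qualitative criteria (new lesions, nontarget lesions) and the lymph node short-axis rule are deliberately left out.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Study:
    """One imaging time point: target lesion label -> longest diameter (mm)."""
    date: str
    diameters_mm: Dict[str, float]

    def sum_of_diameters(self) -> float:
        return sum(self.diameters_mm.values())


def classify_target_response(studies: List[Study]) -> str:
    """Classify the most recent study against baseline and nadir.

    Covers only the quantitative target lesion rules (an assumption of
    this sketch); studies must be ordered from baseline to most recent.
    """
    if len(studies) < 2:
        raise ValueError("need a baseline and at least one follow-up study")
    baseline = studies[0].sum_of_diameters()
    current = studies[-1].sum_of_diameters()
    # Nadir: smallest sum recorded before the current study (baseline included).
    nadir = min(s.sum_of_diameters() for s in studies[:-1])

    if current == 0:
        return "CR"  # all target lesions have disappeared
    # Progression: >= 20% increase over nadir and >= 5 mm absolute increase.
    if current - nadir >= 5 and current >= 1.2 * nadir:
        return "PD"
    # Partial response: >= 30% decrease relative to baseline.
    if current <= 0.7 * baseline:
        return "PR"
    return "SD"


# Hypothetical measurements for two consistently labeled lesions.
studies = [
    Study("2011-01-10", {"liver 1": 30.0, "lung 1": 20.0}),  # sum 50 mm
    Study("2011-03-14", {"liver 1": 20.0, "lung 1": 12.0}),  # sum 32 mm
]
print(classify_target_response(studies))  # PR

studies.append(Study("2011-05-16", {"liver 1": 26.0, "lung 1": 14.0}))  # sum 40 mm
print(classify_target_response(studies))  # PD
```

In this example the third study is classified as progressive disease even though its sum is still below baseline, because progression is judged against the nadir. This comparison depends on consistent lesion labels across studies.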

Conclusions

We have developed a software architecture that provides an automated means for radiologists and referring physicians to analyze quantitative imaging results over time, helping them to evaluate cancer treatment response in particular and enabling them to evaluate alternative quantitative disease response metrics. Our approach leverages the AIM standard and an infrastructure for the management and analysis of image metadata. Our model couples a PACS image database with an AIM annotations database, a combination that provides automated analysis calculations such as RECIST. We believe our model is extensible to other oncologic biomarkers (eg, standardized uptake value at positron emission tomography, dynamic contrast-enhanced magnetic resonance imaging findings) and to applications other than tracking cancer.

Disclosures of Potential Conflicts of Interest.—D.L.R.: Related financial activities: none. Other financial activities: grant from GE Medical Systems. M.L.: Related financial activities: none. Other financial activities: speakers bureau for the National Comprehensive Cancer Network.

Acknowledgments

We thank Yuzhe (Brian) Liu for the ongoing development of an AIM XML database that supports an increasing number of clinically pertinent metadata queries.

Presented as an education exhibit at the 2011 RSNA Annual Meeting.

M.L. and D.L.R. have disclosed various financial relationships (see “Disclosures of Potential Conflicts of Interest”); the other author has no financial relationships to disclose.

Funding: The research was supported by the National Institutes of Health [grant number U01CA142555-01].

Abbreviations:

- AIM = Annotation and Image Markup

- DICOM = Digital Imaging and Communications in Medicine

- DICOM SR = DICOM Structured Reporting

- ePAD = electronic physician annotation device

- LTA = Lesion Tracking Application

- PACS = picture archiving and communication system

- RECIST = Response Evaluation Criteria in Solid Tumors

- WADO = Web Access to DICOM Objects

- XML = Extensible Markup Language

References

- 1. van Persijn van Meerten EL, Gelderblom H, Bloem JL. RECIST revised: implications for the radiologist. A review article on the modified RECIST guideline. Eur Radiol 2010;20(6):1456–1467

- 2. Therasse P, Eisenhauer EA, Verweij J. RECIST revisited: a review of validation studies on tumour assessment. Eur J Cancer 2006;42(8):1031–1039

- 3. Hussein R, Engelmann U, Schroeter A, Meinzer HP. DICOM structured reporting. I. Overview and characteristics. RadioGraphics 2004;24(3):891–896

- 4. DICOM reference guide. Health Devices 2001;30(1-2):5–30

- 5. Hussein R, Engelmann U, Schroeter A, Meinzer HP. DICOM structured reporting. II. Problems and challenges in implementation for PACS workstations. RadioGraphics 2004;24(3):897–909

- 6. Channin DS, Mongkolwat P, Kleper V, Sepukar K, Rubin DL. The caBIG Annotation and Image Markup project. J Digit Imaging 2010;23(2):217–225

- 7. Rubin DL, Mongkolwat P, Channin DS. A semantic image annotation model to enable integrative translational research. Summit on Translat Bioinforma 2009;2009:106–110

- 8. Bosak J, Bray T. XML and the Second-Generation Web. Sci Am 1999;280(5):89–93

- 9. Han SH, Lee MH, Kim SG, et al. Implementation of Medical Information Exchange System based on EHR standard. Healthc Inform Res 2010;16(4):281–289

- 10. Zimmerman SL, Kim W, Boonn WW. Informatics in radiology: automated structured reporting of imaging findings using the AIM standard and XML. RadioGraphics 2011;31(3):881–887

- 11. Levy MA, Rubin DL. Tool support to enable evaluation of the clinical response to treatment. AMIA Annu Symp Proc 2008 Nov. 6:399–403

- 12. Jaffe TA, Wickersham NW, Sullivan DC. Quantitative imaging in oncology patients. I. Radiology practice patterns at major U.S. cancer centers. AJR Am J Roentgenol 2010;195(1):101–106

- 13. Jaffe TA, Wickersham NW, Sullivan DC. Quantitative imaging in oncology patients. II. Oncologists’ opinions and expectations at major U.S. cancer centers. AJR Am J Roentgenol 2010;195(1):W19–W30

- 14. Rosset A, Spadola L, Ratib O. OsiriX: an open-source software for navigating in multidimensional DICOM images. J Digit Imaging 2004;17(3):205–216

- 15. ClearCanvas. http://www.clearcanvas.ca/dnn. Accessed August 10, 2011

- 16. Rubin DL, Korenblum D, Yeluri V, Frederick P, Herfkens R. Semantic annotation and image markup in a commercial PACS workstation (abstr). In: Radiological Society of North America scientific assembly and annual meeting program. Oak Brook, Ill: Radiological Society of North America, 2010; 333

- 17. Rubin DL. Open-source tools to analyze, report, and communicate radiology results in the quantitative imaging era: quantitative imaging reading room of the future. Presented at the 96th Scientific Assembly and Annual Meeting of the Radiological Society of North America, Chicago, Ill, November 28–December 3, 2010

- 18. Rubin DL, Rodriguez C, Shah P, Beaulieu C. iPad: semantic annotation and markup of radiological images. AMIA Annu Symp Proc 2008 Nov. 6:626–630

- 19. Warnock MJ, Toland C, Evans D, Wallace B, Nagy P. Benefits of using the DCM4CHE DICOM archive. J Digit Imaging 2007;20(suppl 1):125–129

- 20. Digital Imaging and Communications in Medicine (DICOM) Part 18: Web Access to DICOM Persistent Objects (WADO). ftp://medical.nema.org/medical/dicom/2009/09_18pu.pdf. Accessed August 14, 2011

- 21. Eisenhauer EA, Therasse P, Bogaerts J, et al. New response evaluation criteria in solid tumours: revised RECIST guideline (version 1.1). Eur J Cancer 2009;45(2):228–247

- 22. Nishino M, Jagannathan JP, Ramaiya NH, Van den Abbeele AD. Revised RECIST guideline version 1.1: what oncologists want to know and what radiologists need to know. AJR Am J Roentgenol 2010;195(2):281–289

- 23. Google Web Toolkit. http://code.google.com/webtoolkit. Accessed August 10, 2011

- 24. Apache Tomcat. http://tomcat.apache.org. Accessed August 10, 2011

- 25. Erasmus JJ, Gladish GW, Broemeling L, et al. Interobserver and intraobserver variability in measurement of non-small-cell carcinoma lung lesions: implications for assessment of tumor response. J Clin Oncol 2003;21(13):2574–2582

- 26. Channin DS, Mongkolwat P, Kleper V, Rubin DL. The Annotation and Image Mark-up project. Radiology 2009;253(3):590–592

- 27. Iv M, Patel MR, Santos A, Kang YS. Use of a macro scripting editor to facilitate transfer of dual-energy X-ray absorptiometry reports into an existing departmental voice recognition dictation system. RadioGraphics 2011;31(4):1181–1189

- 28. Schwartz LH, Panicek DM, Berk AR, Li Y, Hricak H. Improving communication of diagnostic radiology findings through structured reporting. Radiology 2011;260(1):174–181

- 29. Johnson AJ, Chen MY, Zapadka ME, Lyders EM, Littenberg B. Radiology report clarity: a cohort study of structured reporting compared with conventional dictation. J Am Coll Radiol 2010;7(7):501–506

- 30. Bosmans JM, Weyler JJ, De Schepper AM, Parizel PM. The radiology report as seen by radiologists and referring clinicians: results of the COVER and ROVER surveys. Radiology 2011;259(1):184–195

- 31. Levy MA, Rubin DL. Current and future trends in imaging informatics for oncology. Cancer J 2011;17(4):203–210